Abstract

The development of high magnification retinal imaging has brought with it the ability to track eye motion with a precision of less than an arc minute. Previously these systems have provided only monocular records. Here we describe a modification to the Tracking Scanning Laser Ophthalmoscope (Sheehy et al. 2012) that splits the optical path in a way that allows the left and right retinas to be scanned almost simultaneously by a single system. A mirror placed at a retinal conjugate point redirects half of each horizontal scan line to the fellow eye. The collected video is a split image with left and right retinas appearing side by side in each frame. Analysis of the retinal motion in the recorded video provides an eye movement trace with very high temporal and spatial resolution.

Results are presented from scans of subjects with normal ocular motility who fixated steadily on a green laser dot. The retinas were scanned at 4 degrees eccentricity with a 2 degree square field. Eye position was extracted offline from recorded videos with an FFT based image analysis program written in Matlab. The noise level of the tracking was estimated to range from 0.25 to 0.5 arc minutes SD for three subjects. In the binocular recordings, the left eye / right eye difference was 1 to 2 arc minutes SD for vertical motion and 10 to 15 arc minutes SD for horizontal motion, in agreement with published values from other tracking techniques.

Keywords: Fixational eye movements, Retinal imaging, Binocular coordination

Introduction

Recent advances in retinal imaging with the scanning laser ophthalmoscope have led to retina based eye trackers that rival high end systems like the dual Purkinje image tracker and the magnetic induction search coil. Eye motion during retinal scans produces artifacts in the image that distort each recorded frame with shear, compression, stretch, or twist, depending on the eye motions. Removal of these distortions allows averaging of multiple frames for improved signal to noise ratios. This process of removal also yields a record of the eye motion that occurred during the recording (Mulligan, 1997; Stevenson & Roorda, 2005; Stevenson, Roorda, & Kumar, 2010). This analysis was initially conducted off line with recorded video. More recently a robust real time tracking system has been developed that allows stabilization of targets on chosen retinal locations with precision on the order of an arc minute so that individual cones can be targeted and stimulated repeatedly (Arathorn, Yang, Vogel, et al. 2007).

Although adaptive optics (AO) provides the best possible images in retinal scanners, scanning through the natural optics of the eye can usually provide images of sufficient quality for tracking. The Tracking Scanning Laser Ophthalmoscope (Sheehy, Yang, Arathorn, et al. 2012) or TSLO is a non-AO system that scans the retina over a 1 to 5 degree field with sufficient contrast and resolution to see individual cones over most of the retina. Real time image analysis associated with the TSLO provides an on line estimate of horizontal and vertical eye position with an accuracy of better than an arc minute, and it produces analog output signals for use outside the TSLO. This system has recently been combined with an OCT scanner to improve the quality of volumetric images (Vienola, Braaf, Sheehy, et al. 2012).

Fixational eye movements recorded with retina scanners have to date been limited to monocular eye movements. Here we describe a modification to the TSLO that allows for recording of both eyes simultaneously with a single scanner. Real time tracking of the two eyes has not yet been implemented, so we report on results from off line analysis of the videos that show eye motion features comparable to the best high precision eye tracking systems. Briefly, we split the optical path with a knife edge mirror so that half the horizontal scan goes to one eye and half to the other, resulting in a split field image containing simultaneous records of the two eyes. The noise level is well under an arc minute, and the statistics of binocular fixation match those reported previously with the optical lever and search coil techniques.

For binocular tracking, one could employ two TSLOs to get full field tracking of each eye independently, and obtain real time eye motion for each eye. However, it is also possible to modify a single TSLO to image both eyes simultaneously for binocular tracking. Here we describe a method for dividing the optical path so that half the recorded field is from the right eye and the other half is from the left eye. Analysis of the motion was conducted off line after splitting the video in half. For comparison, a full field video from one eye was split in half and analyzed the same way, to obtain an estimate of the noise level of eye motion extraction. Results show that this system has a noise level of below one arc minute and thus can resolve the microsaccades and drifts of fixation.

Methods

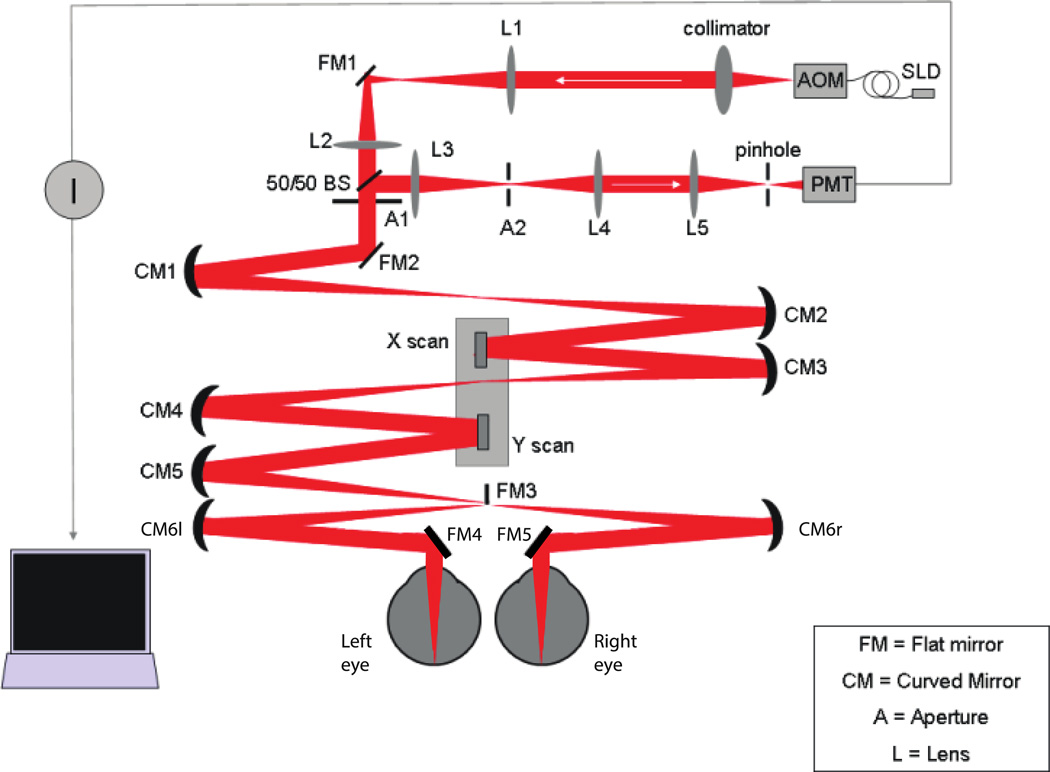

The layout of our system is shown in schematic form in Figure 1. For a complete description of the TSLO design and performance the reader is referred to Sheehy et al. (2012). Briefly, an 840 nm diode source is collimated to form a beam that is deflected horizontally at 15 kHz by a resonant scanner and vertically at 30 Hz by a mirror galvanometer scanner. The scanned beam is relayed by concave mirrors so that the pivot point of the scanners is conjugate to the subject’s pupil. The eye’s own optics focus the scanned beam to a point on the retina. Reflected light from this point travels back along the same optical path, is descanned by the same deflectors, and is then focused on a pinhole to reject scattered light from outside of the plane of focus. A photomultiplier tube detects the light that passes through the pinhole. The signal from the PMT is recorded by a special purpose video capture card that is synchronized with the scanners. The result is an image of the retina over the area scanned by the point of light (Figure 2).

Figure 1.

Schematic layout of the binocular modification to the tracking Scanning Laser Ophthalmoscope (modified from Sheehy et al., 2012). Mirror FM3 is placed at a retinal conjugate point, splitting the field into left eye and right eye halves. Each half of the scan is reflected by a concave mirror and a flat mirror into the respective eyes. The resulting scan produces a split field image with left and right retinal images side by side in each frame. The horizontal scanner is a polished bar resonating at 15.4 kHz and images are collected during a 26 microsecond time window during one direction of scan, so left and right eye samples are collected about 13 microseconds apart. Eyes are not shown to scale.

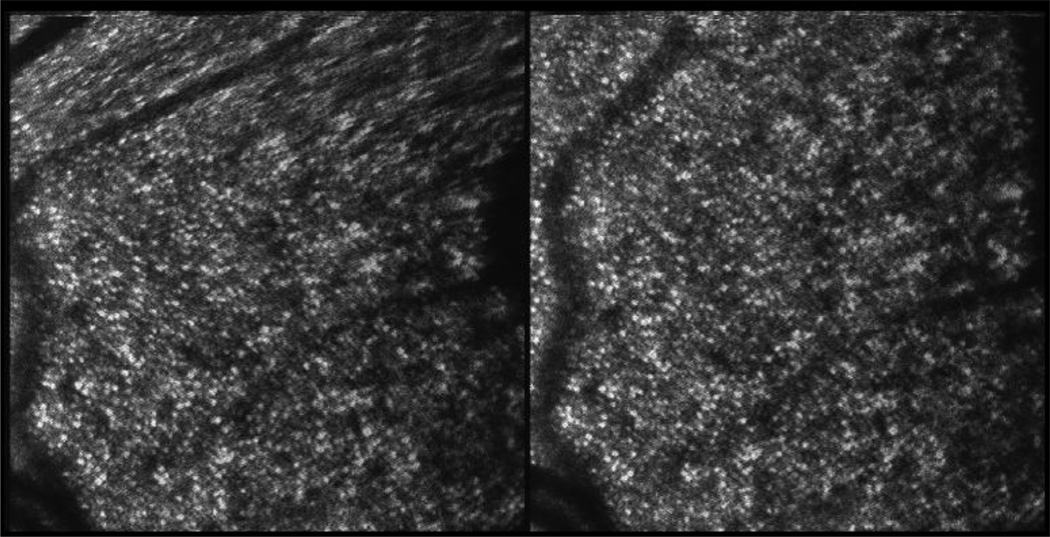

Figure 2.

Distortion in SLO images due to eye motion is illustrated in this pair of successive frames of a monocular (right eye) movie from subject 3. Images are 2 degree square fields at about 4 degrees eccentricity. Note the shear distortion in the upper part of the image on the left due to a horizontal saccade. Image analysis software (Stevenson & Roorda 2005; Stevenson, Roorda & Kumar, 2010) was used to recover the eye motion from recorded videos. Free fusion of these frames produces a dramatic stereoscopic view of the relative distortion.

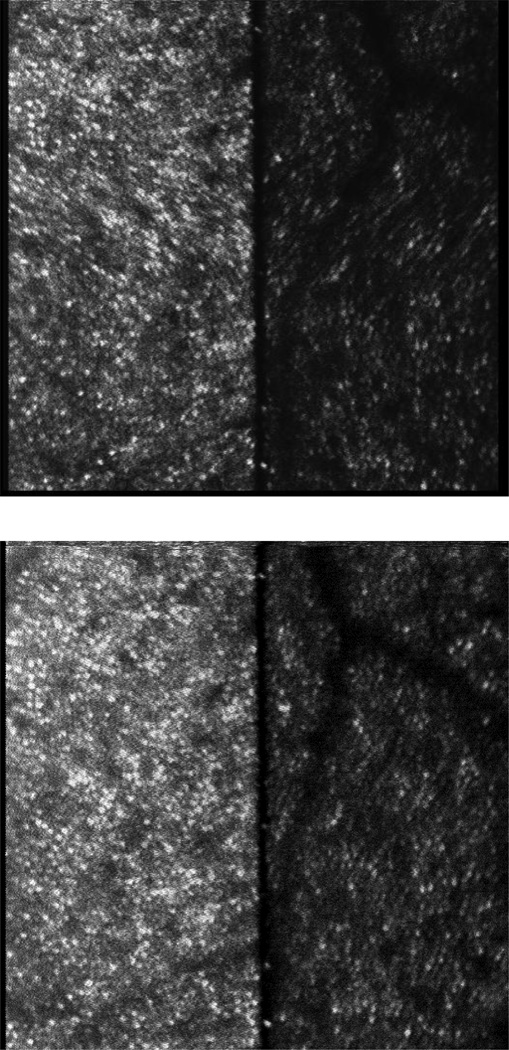

For the binocular modification, a knife edge mirror (FM3 in Figure 1) was placed at a retinal conjugate point between the scanners and the eye. The mirror was positioned so that it deflected half of the horizontal scan into a second path and thereby into the left eye of the subject. The result is a split field view, with the subject’s right retina on the left half of the video frame and the left retina on the right half (Figure 3). The knife edge of the mirror scatters a small fraction of the light, resulting in a black line down the middle of the frame.

Figure 3.

Video frames captured during binocular recording from subjects 2 (top) and 3 (bottom). The right eye is on the left side of the image, left eye is on the right side. The black line down the center is the edge of the knife edge mirror used to redirect light to the left eye. The left eye image is mirror reversed and appears slightly darker, due to light losses from suboptimal alignment and focus, and the additional mirror in the left eye path. Halfway down the frame in the top image a horizontal saccade occurred, producing a shear distortion that is symmetric due to the mirror reversal of the left eye image. The field size was 2 degrees and the imaging was centered at 4 degrees in the upper visual field. Cone photoreceptors are clearly visible at this eccentricity even without wavefront correction by adaptive optics.

Subjects were first aligned with the right eye path by fine adjustment of the chin rest/forehead support. The left eye was then aligned with the system by adjustment of the last two mirrors in the optical path. This process was somewhat tedious, and we did not attempt to also precisely align the scans to corresponding retinal areas, as this requires adjusting five degrees of freedom (x, y for pupil alignment, and x, y, t for retinal alignment).

Subjects monocularly fixated a 530 nm green laser dot projected on the wall about two meters away, seen by the right eye through a beam splitter. The retina(s) were imaged at about 4 degrees eccentricity (superior field) with a two degree square raster. Subjects had natural pupils and were emmetropes needing no optical correction. Subjects gave informed consent and all procedures were approved by the University of California Berkeley IRB in accordance with The Code of Ethics of the World Medical Association (Declaration of Helsinki) for experiments involving humans.

Five subjects (4 Male, 1 Female, age range 28 to 54, with normal ocular motility and no retinal pathology) in all were tested with this system, but here we report results from three of them. The other two are not reported because we were unable to obtain sufficient quality images from the left eye to recover the eye movement traces. Two of the five had a very small, subclinical (< .5 degree) upbeating vertical nystagmus (see Figure 6).

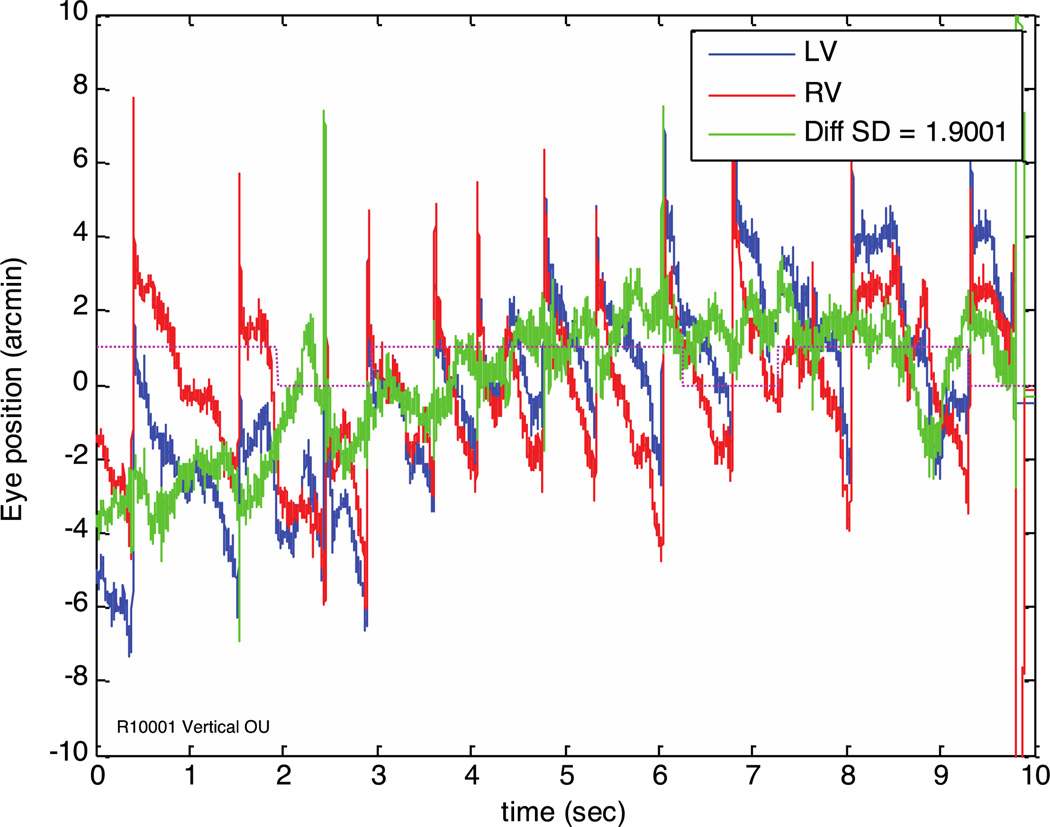

Figure 6.

Vertical eye traces from subject 1. This subject exhibits an upbeating vertical nystagmus with amplitude of about 5 arc minutes and frequency of about 1 beat per second. Two of the five individuals we imaged in the development of this instrument have a small vertical nystagmus, and neither knew it was there until eye tracking revealed it. In our experience around 1 of 10 individuals shows this pattern without noticeable visual consequence. The difference trace (green) shows very little of the nystagmus, indicating that it is almost completely conjugate. Each vertical saccade shows a distinct overshoot that is itself about 4 arc minutes. These are most likely due to image shifts caused by crystalline lens wobble associated with the saccades (He, et al. 2010).

The effect of poor quality images is to reduce the peak value of the cross-correlation between the reference image and the strip of video being analyzed. When this peak gets closer to the background noise, false matches are occasionally higher and the resulting eye trace shows artifactual jumps in position. In order to avoid these artifacts, our algorithm applies a set of tests to the data and rejects video that fails to pass the tests. For the current analysis, blinks and low light level images were rejected when the average pixel value in a frame was less than 15 (out of 255) or changed by more than 25 from frame to frame. Correlation peaks were rejected if the next highest peak was within 15% of the highest peak value. All subjects showed occasional tracking loss based on these criteria, and these sections were not included in our statistical analysis. Regions of tracking loss are indicated by the fine magenta line in Figures 4–6.
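The rejection tests described above can be sketched in a few lines. This is an illustration in Python rather than the Matlab program used for the analysis. The thresholds come from the text, but the function names and the `exclude_radius` neighbourhood used to separate the main peak from the runner-up are assumptions of the sketch, since the text does not state how the next highest peak was located.

```python
import numpy as np

MIN_MEAN_LEVEL = 15    # frames darker than this (0-255 scale) are rejected
MAX_MEAN_CHANGE = 25   # largest allowed frame-to-frame change in mean level
PEAK_MARGIN = 0.15     # runner-up must be more than 15% below the best peak


def frame_is_usable(frame, previous_mean=None):
    """Return (usable, mean_level) for one video frame."""
    mean_level = float(np.mean(frame))
    if mean_level < MIN_MEAN_LEVEL:
        return False, mean_level
    if previous_mean is not None and abs(mean_level - previous_mean) > MAX_MEAN_CHANGE:
        return False, mean_level
    return True, mean_level


def peak_is_unambiguous(corr, exclude_radius=2):
    """Accept a correlation surface only if its second peak is clearly lower.

    exclude_radius is a hypothetical parameter: pixels this close to the
    main peak are treated as part of it, not as a competing peak.
    """
    corr = np.asarray(corr, dtype=float)
    row, col = np.unravel_index(np.argmax(corr), corr.shape)
    best = corr[row, col]
    if best <= 0:
        return False
    masked = corr.copy()
    masked[max(row - exclude_radius, 0):row + exclude_radius + 1,
           max(col - exclude_radius, 0):col + exclude_radius + 1] = -np.inf
    runner_up = masked.max()
    return bool(runner_up < (1.0 - PEAK_MARGIN) * best)
```

Samples failing either test would be flagged as tracking loss and excluded from the statistics.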

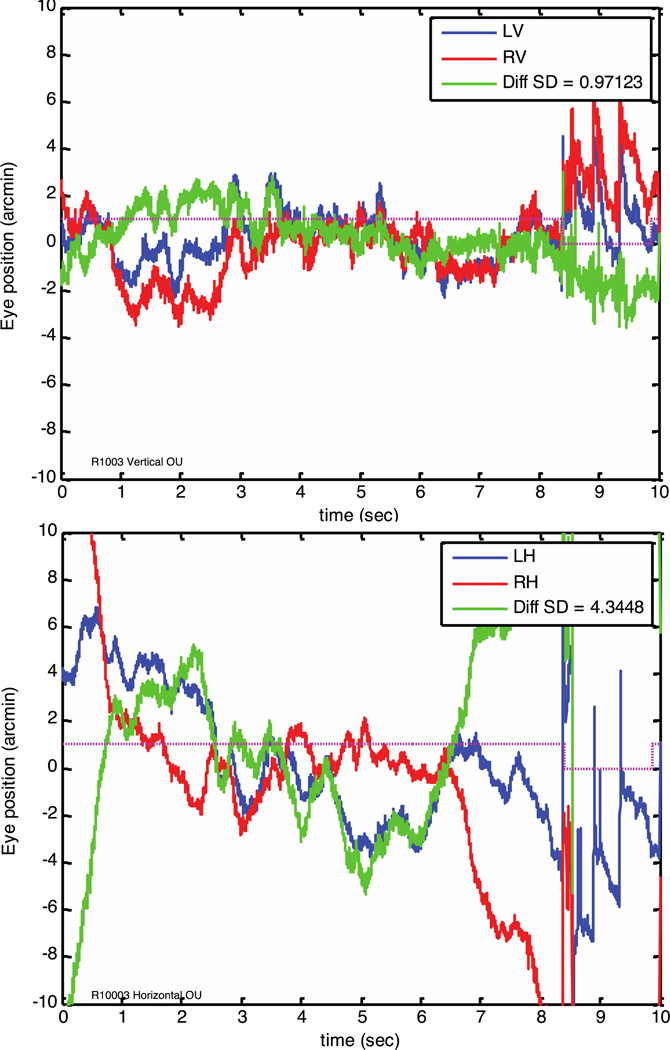

Figure 4.

Binocular eye traces from subject 3, plotted separately for vertical (top) and horizontal (bottom) eye motion. Left eye motion is plotted in blue, right eye in red, and the difference (vergence movement) is plotted in green. The inset shows the standard deviation of the difference signal in arc minutes. The fine magenta line indicates the section of the records used to calculate the SD, with the last 1.5 seconds omitted due to tracking loss of the right eye. The difference between the left and right eye vertical traces had an SD of just under 1 arc minute.

Ten seconds of video were recorded and saved for off line analysis. Monocular (right eye) videos were taken with the splitting mirror removed. The best quality video of several from each subject was analyzed and is presented here. Analysis proceeded according to the method of Stevenson and Roorda (2005), in which strips of each video frame were cross correlated to an average frame constructed from the best frames of the video. Sections of video with blinks or poor quality images were ignored for the purposes of the statistical analysis. For these measures we broke each video frame into 64 strips, resulting in an effective eye tracking sample rate of 1920 Hz.
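The strip analysis can be illustrated with a minimal FFT based cross-correlation. This Python sketch is not the analysis program itself. It assumes a full width strip, circular (wrap around) correlation, and integer pixel shifts, and the function name `strip_shift` and its arguments are hypothetical.

```python
import numpy as np


def strip_shift(reference, strip, strip_row):
    """Estimate the (dy, dx) image shift of one strip relative to a reference.

    reference : 2D reference frame (height x width)
    strip     : 2D strip spanning the full frame width
    strip_row : row at which the strip starts in its own frame
    """
    reference = np.asarray(reference, dtype=float)
    strip = np.asarray(strip, dtype=float)
    height, width = reference.shape

    # Zero-pad the mean-subtracted strip to the size of the reference.
    padded = np.zeros((height, width))
    padded[:strip.shape[0], :] = strip - strip.mean()
    ref0 = reference - reference.mean()

    # Circular cross-correlation via the correlation theorem.
    corr = np.fft.ifft2(np.fft.fft2(ref0) * np.conj(np.fft.fft2(padded))).real
    peak_row, peak_col = np.unravel_index(np.argmax(corr), corr.shape)

    # The peak marks where the strip content sits in the reference;
    # wrap the offsets into a signed range centred on zero.
    dy = (strip_row - peak_row + height // 2) % height - height // 2
    dx = (-peak_col + width // 2) % width - width // 2
    return int(dy), int(dx)
```

Applying such a function to each of the 64 strips of a 30 Hz frame gives 64 × 30 = 1920 position samples per second, which is the effective sample rate quoted above.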

Conventional calibration of eye movement amplitude with the TSLO is not required. The only requirement is that the angular subtense of the scanned field is known. Eye motion is then simply calculated from the pixel shifts in the retinal image. The exact field size measurement in pixels per degree was made with a calibrated model eye.
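As a small worked example of this conversion, the following Python sketch scales a pixel shift by the known field size. The 512 pixel frame width in the usage line is a hypothetical value chosen for illustration; the actual pixels per degree figure was measured with the model eye.

```python
def pixels_to_arcmin(shift_px, field_deg, field_px):
    """Convert an image shift in pixels to arc minutes of eye rotation.

    field_deg : angular subtense of the scanned field, in degrees
    field_px  : number of pixels spanning that field (assumed known)
    """
    arcmin_per_pixel = field_deg * 60.0 / field_px
    return shift_px * arcmin_per_pixel


# Hypothetical example: a 2 degree field sampled with 512 pixels.
one_pixel = pixels_to_arcmin(1, field_deg=2.0, field_px=512)
```

Under these assumed numbers one pixel corresponds to roughly a quarter of an arc minute.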

The accuracy of the resulting eye traces depends principally on the quality of the image and on the selection of a reference frame against which all others are compared. Artifacts due to the reference frame show up as 30 Hz periodic motions and are removed by subtracting the average motion across frames (Stevenson et al. 2010). This has the drawback that any actual 30 Hz eye movements are removed, but these have very small amplitude in normal fixation.
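One way to picture this correction is shown below in Python. The sketch assumes the trace has been arranged as one row per frame and one column per strip, with rejected samples stored as NaN. Removing only the zero mean part of the per strip average, so that the overall mean position is preserved, is a choice made for this sketch and is not specified in the text.

```python
import numpy as np


def remove_frame_rate_artifact(trace):
    """Subtract the motion pattern that repeats identically in every frame.

    trace : array of shape (n_frames, n_strips), one eye position per strip.
    """
    trace = np.asarray(trace, dtype=float)
    # Average position at each strip index, taken across all frames.
    per_strip_mean = np.nanmean(trace, axis=0)
    # Keep the overall mean position; remove only the within-frame pattern.
    pattern = per_strip_mean - per_strip_mean.mean()
    return trace - pattern
```

Any genuine eye movement that repeats at exactly the frame rate is removed along with the artifact, which is the drawback noted above.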

In order to estimate the noise level of the binocular analysis, we also recorded monocular images of the right eye only by removing the knife edge mirror. These videos were split in half and analyzed with the same procedure as for binocular recordings. Our assumption is that the motion in the left and right halves of a single eye image is essentially identical and so any difference found can be attributed to noise. This assumption is violated if the eye makes significant torsional movements, because the rotation about the line of sight produces vertical motion that is in opposite directions on the left and right sides of the image. Torsion also produces an apparent shear of the image relative to the reference frame, and we analyzed the videos for this combined signature of torsion. No significant torsion artifacts were found in the videos used for this analysis.
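The size of the torsion effect can be estimated with a small angle rotation about the line of sight. The Python sketch below is only an order of magnitude illustration. It assumes that the two half images are centred 0.5 degrees either side of the middle of a 2 degree field imaged at 4 degrees eccentricity, and the 10 arc minute torsion value is an arbitrary example, not a measurement.

```python
import math


def torsion_displacement_arcmin(x_deg, y_deg, torsion_arcmin):
    """Image displacement at (x_deg, y_deg) from the line of sight
    for a small rotation about the line of sight.

    Uses the small-angle rotation (dx, dy) = theta * (-y, x); the sign
    convention is arbitrary. Returns (dx, dy) in arc minutes.
    """
    theta = math.radians(torsion_arcmin / 60.0)
    dx = -theta * y_deg * 60.0
    dy = theta * x_deg * 60.0
    return dx, dy


# Assumed geometry: half-image centres at x = -0.5 and +0.5 degrees, y = 4 degrees.
left_half = torsion_displacement_arcmin(-0.5, 4.0, torsion_arcmin=10.0)
right_half = torsion_displacement_arcmin(0.5, 4.0, torsion_arcmin=10.0)
vertical_difference = right_half[1] - left_half[1]
```

With these assumed values the two halves move vertically in opposite directions and differ by about 0.17 arc minutes, while the horizontal displacement is common to both halves.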

The recorded movies were split in half and analyzed separately for eye motion. For comparison, monocular movies were also split in half and analyzed independently. Assuming no significant torsion, the comparison of left and right halves of a monocular video provides an estimate of the noise level of tracking for this method.

Results

Images recorded from the binocular SLO are shown in figure 3 for two subjects. A single frame from each subject’s video is shown, and the frame for subject 2 was chosen to illustrate the distortion produced by a horizontal saccade. Because the left eye is imaged through an additional mirror, the direction of shear in the left eye image is reversed relative to the right eye.

Eye traces extracted from 10 second videos are shown in figures 4, 5, and 6. The traces shown are raw data, with each sample representing the extracted eye position for a single strip of a video frame, with 64 strips per frame. Binocular traces in figure 4 show the characteristics of fixational eye movements described previously with other methods, with occasional micro-saccades added to a random walk motion usually described as a combination of drift and tremor. Saccades in figures 5 and 6 show a distinct, very brief overshoot that is likely due to motion of the eye’s crystalline lens at the end of the saccade. For these microsaccades, the amplitude of the lens wobble induced retinal motion is sometimes larger than the saccade itself.

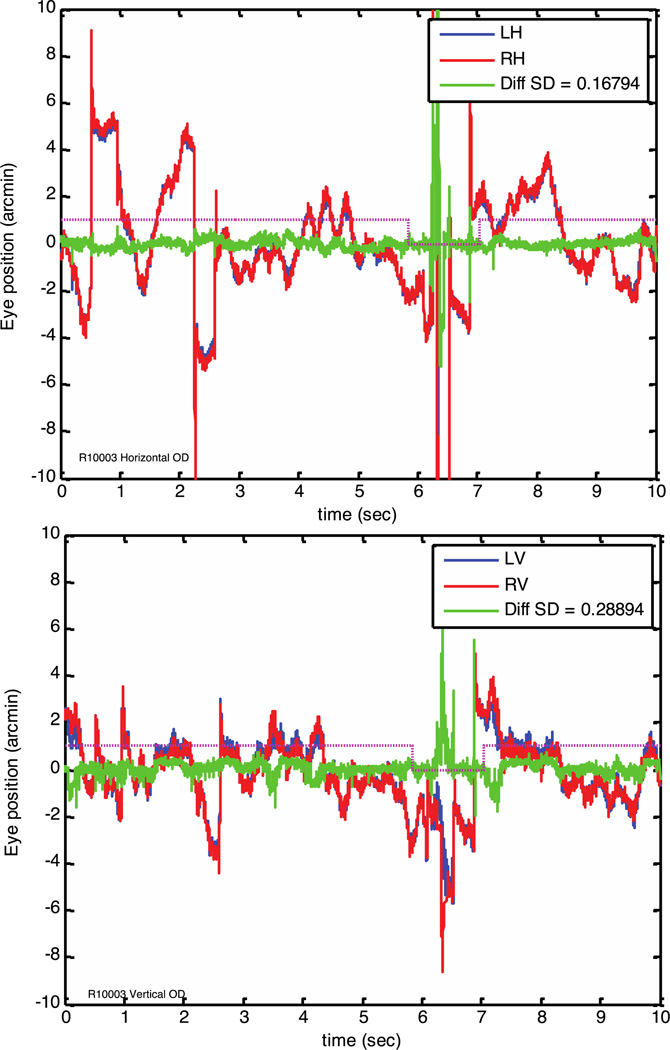

Figure 5.

Eye traces from subject 3 extracted from a monocular (right eye only) video that was split in half, for comparison to the binocular video. Neglecting possible torsion artifacts, we expect the left and right halves of a monocular video to have the same motion signal, and so this extraction provides an estimate of the noise level of the method. Colors are as for figure 4, except left and right now refer just to the left and right halves of the image of one eye. The SD for the difference is given in the inset, and was around 0.2 arc minutes. This eye tracker is thus 3 to 6 times more sensitive than required to see these small differences in binocular fixation for this subject.

Records from one subject with a sustained vertical nystagmus are shown in Figure 6. The overshoot associated with saccades is particularly evident here, and the conjugacy of the nystagmus is clear. A small vertical vergence change in sync with the drift component of nystagmus is evident, but the saccadic component appears to be fully conjugate, resulting in an almost sinusoidal vertical vergence oscillation.

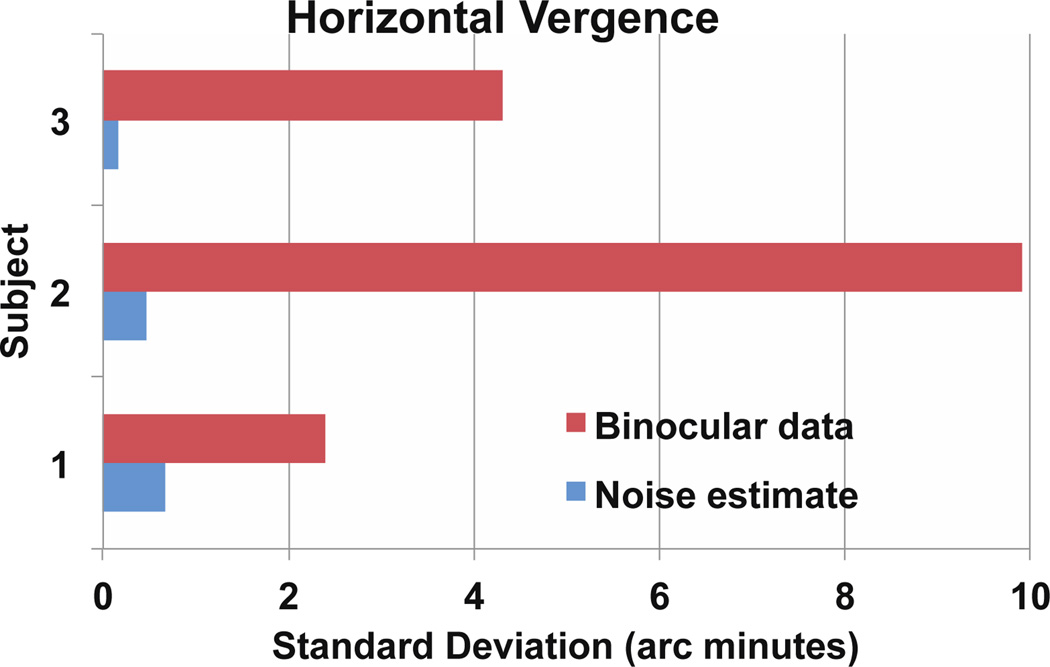

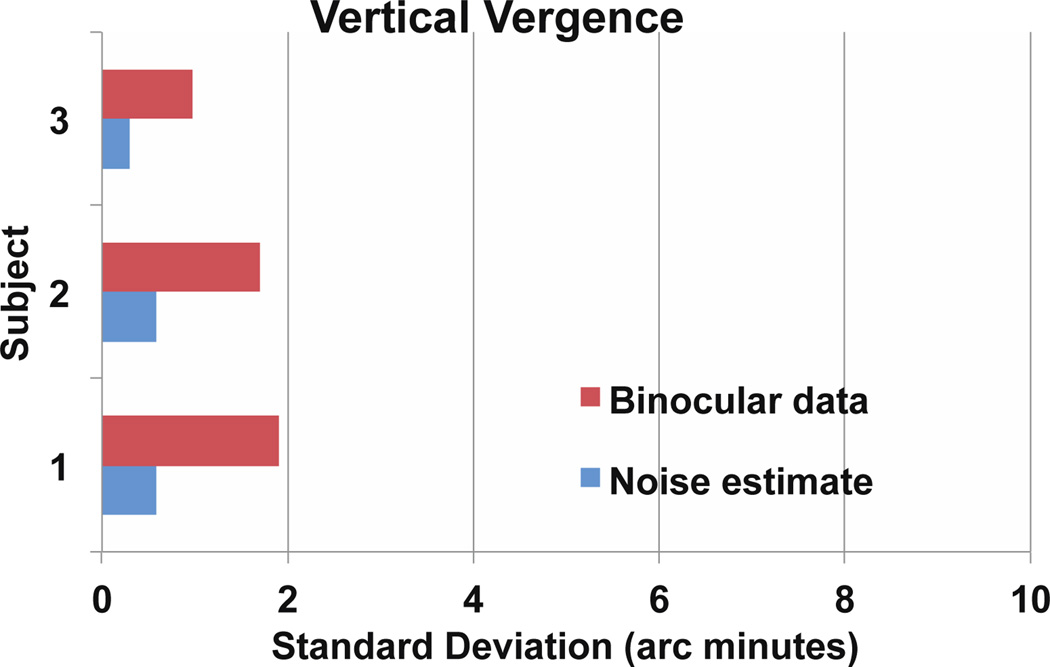

Subtraction of motions in the left (blue) and right (red) eye traces yields the vergence traces shown in green in figures 4, 5, and 6. We calculated the standard deviation of these movements over blink and artifact free regions of the signal and found that our recordings agree very well with previously published statistics on vergence positional variability in fixation eye movements (Krauskopf et al., 1960; van Rijn, van der Steen, & Collewijn, 1994). For the statistics presented in figure 7, we chose representative 10 second videos from each subject to calculate the standard deviation of binocular eye position, along with noise estimates from monocular videos. Vertical vergence during steady fixation had a standard deviation of 1 to 2 arc minutes in three subjects for whom we had good quality monocular and binocular video. Horizontal vergence had more variability, ranging from 2 to 10 arc minutes.
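The difference statistic is simple to state in code. The following Python sketch assumes two equal length position traces in arc minutes and a boolean mask marking the blink and artifact free samples. The function name and the use of the sample standard deviation (`ddof=1`) are assumptions of the sketch. The same computation applied to the two halves of a split monocular video gives the noise estimate.

```python
import numpy as np


def difference_sd(left, right, valid):
    """SD of the left minus right position difference over valid samples.

    left, right : position traces in arc minutes, same length
    valid       : boolean mask, True where tracking was accepted
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    valid = np.asarray(valid, dtype=bool)
    difference = left - right
    return float(np.std(difference[valid], ddof=1))
```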

Figure 7.

Summary of binocular difference SD values for three subjects. Horizontal eye motion analysis is in the top bar graph, vertical motion is in the bottom. For each subject, the blue bar is the noise estimate for tracking based on analysis of a monocular video. The red bar is the SD of the difference between right and left eyes. As expected, the difference between the left and right eye vertical positions has a standard deviation of 1 to 2 arc minutes while horizontal position shows more variability (van Rijn, et al. 1994). The noise level for both axes is between .2 and .6 arc minutes. Image quality in the video is probably the primary factor in determining this noise level. A torsion analysis (not shown) indicated that torsion was not contributing significantly to these measurements.

Estimates of the noise level from analysis of a split field monocular video on each subject showed differences of less than an arc minute in all cases (Fig 5 and 7). The movements we record are thus several times larger than the noise level of this tracking method.

Discussion

Development of precise eye tracking

Precise measurement of binocular eye position during fixation was first achieved in the middle of the last century using a tight fitting contact lens with attached mirror (Riggs & Ratliff, 1951). These systems are able to record eye movements smaller than one arc minute. They have the disadvantage that their range of tracking is also generally small and the contact lens is uncomfortable for subjects. Subsequently, magnetic induction search coils were developed with much better range, marginally better comfort, and the ability to measure horizontal, vertical, and torsional components at the same time with precision at or below one arc minute. The dual Purkinje image tracker (Cornsweet & Crane, 1973; Crane & Steele, 1985) uses a less invasive optical method for tracking and similarly has a precision of around 1 arc minute (Stevenson & Roorda, 2005).

The development of high magnification retinal imaging has added another important tool in this area, with precision well under an arc minute and the added benefit that targets can be placed and then stabilized on precise retinal locations to an accuracy of one cone photoreceptor. The modification we have described further extends this to binocular recording, and future development will allow studies of binocular correspondence at the same level of accuracy.

The Components of Fixation

In one of the earliest precise measurements of fixation eye movements, Adler and Fliegelman (1934) used a mirror placed directly on the eye at the limbus to record fixation movements while subjects fixated on a cross hair target. They described three components to the recorded horizontal motion of the eye: “rapid shifts,” “waves,” and “fine vibratory movements”. These are now commonly called microsaccades, drifts, and tremors, respectively. Adler and Fliegelman described the tremor component as having a frequency of 50 to 100 Hz and amplitude of a little more than 2 arc minutes. They suggested the fine vibrations “probably represent the vibration frequency of the extraocular muscles.”

Riggs and Ratliff (1951) refer to fixational movements generally as “normal tremor of the eye,” but further describe these as comprising “relatively large involuntary drifts and jerky motions” and “relatively small involuntary tremor movements.” They describe the fine tremor as having a frequency of 90 Hz and amplitude of 15 arc seconds. In a follow-up paper, Krauskopf, Cornsweet, & Riggs (1960) reported that drifts and tremors were uncorrelated in the two eyes, but that “Saccades in one eye seem to be always accompanied by simultaneous saccades in the other eye which are almost always in the same direction and about the same in size. Examination of records obtained during 80 min of binocular fixation failed to reveal one unequivocal case of a saccade in one eye unaccompanied by a saccade in the other.” Thus, with their resolution of a few seconds of arc, they found no evidence for monocular saccades in their two subjects.

The existence of tremor as a distinct component has been called into question, however. Recent measurements with the AOSLO at high frequencies (Stevenson, Roorda & Kumar, 2010; Vienola, Sheehy, Yang, et al. 2012) indicate that fixation movements are best characterized as having amplitude that is the inverse of frequency (1/f). Some records show a small relative increase at around 50 Hz, but not a distinct peak as might be expected from earlier reports. This confirmed earlier measurements from other methods that showed an overall 1/f amplitude spectrum (Findlay, 1971; Eizenman, Hallett, & Frecker, 1985).
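A 1/f amplitude spectrum appears as a slope of about -1 when amplitude is plotted against frequency on log-log axes. The Python sketch below shows one way such a slope could be estimated from a position trace. It is illustrative only and does not reproduce the analyses in the cited studies. The function names are hypothetical, and no windowing or averaging is applied.

```python
import numpy as np


def amplitude_spectrum(trace, sample_rate_hz):
    """Return (frequencies, amplitudes) of a trace, excluding the DC term."""
    trace = np.asarray(trace, dtype=float)
    trace = trace - trace.mean()
    amplitudes = np.abs(np.fft.rfft(trace)) / trace.size
    frequencies = np.fft.rfftfreq(trace.size, d=1.0 / sample_rate_hz)
    return frequencies[1:], amplitudes[1:]


def loglog_slope(frequencies, amplitudes):
    """Least-squares slope of log10(amplitude) against log10(frequency)."""
    slope, _intercept = np.polyfit(np.log10(frequencies), np.log10(amplitudes), 1)
    return float(slope)
```

A tremor component would show up as a distinct peak above the fitted line, rather than the small relative increase described above.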

Binocular fixation statistics

Krauskopf et al. (1960) reported on the standard deviation of horizontal eye position for fixations lasting 1 minute and also for briefer, 2 second fixations. For the longer durations, the two eyes differed with a standard deviation of about 2 arc minutes, while for the shorter 2 second records this value was 1.3 arc minutes. This demonstrated how very precise the alignment of the eyes can be under optimal conditions of a highly trained subject fixating a sharp, well focused target.

In a more recent study, van Rijn, van der Steen, & Collewijn (1994) used a scleral magnetic search coil system to measure all three axes of eye rotation in both eyes of four subjects who fixated a dot with or without a larger background. Expressed as the standard deviation of eye position over 32 seconds of fixation, they found that torsional version (cycloversion) had the greatest variability at about 12 to 18 arc minutes, while vertical vergence had the smallest, ranging from .33 to 1.9 arc minutes. Variability was found to increase with sample duration up to about 30 seconds, a fact that must be considered when comparing their results to others.

The precision of vertical eye alignment is not limited to steady fixation. Using scleral search coils, Schor, Maxwell, & Stevenson (1994) measured vertical eye alignment in an open loop condition in which the eyes had no vertical disparity cues to maintain alignment. Vertical eye alignment varied by less than 0.25 degrees for three subjects whether fixating straight ahead, 15 degrees up, or 15 degrees down. The binocular coordination of vertical eye alignment is thus very precise even without vision to provide feedback.

It should be noted that in all the foregoing papers, subjects used a bite bar to maintain stable head posture. If the head is free to move during fixation, causing a vestibular component to be added to the eye motion, binocular alignment can be significantly worse (Steinman & Collewijn, 1980).

Vertical vergence has the lowest variation of the six directions of binocular eye motion, making it a good test case for eye tracker performance. It also has the advantage that it is controlled by reflexes, with no voluntary component to add variability from changes in effort, attention, or motivation (Stevenson, Lott, & Yang, 1997; Stevenson, Reed & Yang, 1999). Subjects fixating on a target with no vertical disparity change to drive the eyes will invariably show a vertical eye position difference of no more than 2 to 3 arc minutes. This fact is useful in evaluating a binocular eye tracker, because it provides a convenient benchmark for evaluating tracker noise. If a binocular eye tracker shows a standard deviation for the difference in vertical position of more than a few arc minutes, it is almost certainly due to system noise (Bedell & Stevenson, 2013). Common video eye trackers show noise levels of around 15 arc minutes by this test, despite the fact that benchmarks with artificial eyes may show much better precision.

Advantages and Limitations of the binocular tracking SLO

Our primary objective in the development of this system was to achieve binocular imaging and tracking from a single SLO system. Although one might design a truly binocular system with independent stimulating and detecting channels for each eye, the modification we describe to a single SLO greatly reduces the cost and complexity over a two system design. The modification we describe does have some drawbacks: splitting the scan reduces the field size for each eye; the added left eye channel has one extra mirror in the path, which slightly reduces light levels; and alignment of both eyes to one system is a challenge. These factors can impact image quality, and thus increase noise. However, with high quality optics and careful alignment, the system has the same performance as the monocular system described by Sheehy et al. (2012). Our noise level estimates here are in agreement with the previous report.

Compared to other systems for precise eye tracking, such as search coils, contact lens mounted mirrors, or dual Purkinje image trackers, the tracking SLO has the advantage that the retina itself is being imaged and tracked. The position of a target on the retina can thus be visualized, lending high confidence to the accuracy of stabilization. The system we describe does not yet stabilize targets on both retinas in real time tracking, but this requires only a relatively straightforward software modification to implement the split field independent tracking.

The alignment of the system to both eyes is a significant challenge. The standard practice with monocular imaging is to position the subject’s head to align the eye with the table mounted optics. The retinal location of interest is then controlled by having the subject fixate points either inside or outside the imaging raster. We follow this head positioning procedure for aligning one eye of our subjects, but aligning the second eye requires adjustment of the mirrors in the system to accommodate variable pupil separation and head angle. In this first implementation we concentrated our efforts on aligning the beam with the pupil to optimize the image quality by adjusting the position and angle of mirrors CM6l and FM4 (see Figure 1). Aligning the rasters to also fall on corresponding retinal loci proved difficult due to the interaction between beam angle and pupil entry location as mirrors are adjusted. For the measurements we describe here, the rasters always appeared overlapped in the peripheral visual field but were not precisely aligned to binocular correspondence. Future designs will incorporate better controls on the mirror components to facilitate orthogonal control of beam position (alignment in the pupil) and beam direction (alignment on the retina) for both eyes, for real time stabilization of targets with well-controlled binocular disparity.

The tracking SLO design allows for stimuli to be presented in the scanning raster, but also provides real time eye position output signals. The implementation of real time tracking of both eyes will allow stabilization of targets in secondary displays as one might do with a search coil or Purkinje tracking system. In that case strict alignment of the rasters to corresponding retinal locations is not required.

In summary, we describe a binocular eye tracking system that uses a single retina scanner to collect images of both eyes simultaneously. The system has exquisite sensitivity and allows visualization of the retinal locations being stimulated. Measurements with this system produce records consistent with previous studies of binocular fixation movements with high precision eye trackers.

Highlights.

Modification of the tracking SLO allows simultaneous recording of both eyes.

Sensitivity of tracking was found to range between 0.2 and 0.6 arc minutes.

Vertical vergence had an SD of 1 to 2 arc minutes.

Acknowledgments

The authors wish to thank their subjects for participation. SBS was supported by a sabbatical leave from the University of Houston. Funding was also provided by support from the Macula Vision Research Foundation (CKS and AJR) and NIH/NEI R01EY014375, NIH/NEI R01EY023591 (AJR).

Footnotes

Disclosures:

Sheehy: Coinventor on a patent that has been filed on technology related to the tracking scanning laser ophthalmoscope. Financial interest in C.Light Technologies.

Roorda: Coinventor on a patent that has been filed on technology related to the tracking scanning laser ophthalmoscope. Financial interest in C.Light Technologies.

References

- Adler FH, Fliegelman M. Influence of fixation on the visual acuity. Archives of Ophthalmology. 1934;11:475–483. [Google Scholar]

- Arathorn DW, Yang Q, Vogel CR, Zhang Y, Tiruveedhula P, Roorda A. Retinally stabilized cone targeted stimulus delivery. Opt. Express. 2007;15(21):13731–13744. doi: 10.1364/oe.15.013731. (2007) [DOI] [PubMed] [Google Scholar]

- Bedell HE, Stevenson SB. Eye movement testing in clinical examination. Vision Research. 2013;90:32–37. doi: 10.1016/j.visres.2013.02.001. (2013) [DOI] [PubMed] [Google Scholar]

- Cornsweet TN, Crane HD. Accurate two-dimensional eye tracker using first and fourth Purkinje images. JOSA. 1973;63:921–928. doi: 10.1364/josa.63.000921. [DOI] [PubMed] [Google Scholar]

- Crane HD, Steele CM. Generation-V dual-Purkinje-image eyetracker. Applied Optics. 1985;24:527–537. doi: 10.1364/ao.24.000527.

- Eizenman M, Hallett PE, Frecker RC. Power spectra for ocular drift and tremor. Vision Res. 1985;25:1635–1640. doi: 10.1016/0042-6989(85)90134-8.

- Findlay JM. Frequency analysis of human involuntary eye movement. Kybernetik. 1971;8:207–214. doi: 10.1007/BF00288749.

- He L, Donnelly WJ III, Stevenson SB, Glasser A. Saccadic lens instability increases with accommodative stimulus in presbyopes. Journal of Vision. 2010;10(4):14, 1–16. http://journalofvision.org/10/4/14/. doi: 10.1167/10.4.14.

- Mulligan JB. Recovery of motion parameters from distortions in scanned images. Proceedings of the NASA Image Registration Workshop (IRW97) (NASA Goddard Space Flight Center, MD, 1997), no. 19980236600. 1997.

- Riggs LA, Armington JC, Ratliff F. Motions of the retinal image during fixation. J. Opt. Soc. Am. 1954;44(4):315–321. doi: 10.1364/josa.44.000315.

- Riggs LA, Ratliff F. Visual Acuity and the Normal Tremor of the Eyes. Science. 1951 Jul 6;114(2949):17–18. doi: 10.1126/science.114.2949.17.

- Riggs LA, Schick AM. Accuracy of retinal image stabilization achieved with a plane mirror on a tightly fitting contact lens. Vision Res. 1968;8(2):159–169. doi: 10.1016/0042-6989(68)90004-7.

- Schor CM, Maxwell JS, Stevenson SB. Isovergence surfaces: the conjugacy of vertical eye movements in tertiary positions of gaze. Ophthalmic and Physiological Optics. 1994;14:279–286. doi: 10.1111/j.1475-1313.1994.tb00008.x.

- Sheehy CK, Yang Q, Arathorn DW, Tiruveedhula P, de Boer JF, Roorda A. High-speed, image-based eye tracking with a scanning laser ophthalmoscope. Biomedical Optics Express. 2012;3(10):2611–2622. doi: 10.1364/BOE.3.002611.

- Steinman RM, Collewijn H. Binocular retinal image motion during active head rotation. Vision Research. 1980;20:415–429. doi: 10.1016/0042-6989(80)90032-2.

- Stevenson SB, Roorda A. Correcting for miniature eye movements in high resolution scanning laser ophthalmoscopy. In: Manns F, Soderberg P, Ho A, editors. Ophthalmic technologies XI. Bellingham, WA: SPIE; 2005. pp. 145–151.

- Stevenson SB, Roorda A, Kumar G. Eye tracking with the adaptive optics scanning laser ophthalmoscope. In: Spencer SN, editor. Proceedings of the 2010 Symposium on Eye-Tracking Research and Applications. New York: Association for Computing Machinery; 2010. pp. 195–198.

- van Rijn LJ, van der Steen J, Collewijn H. Instability of ocular torsion during fixation: Cyclovergence is more stable than cycloversion. Vision Research. 1994;34:1077–1087. doi: 10.1016/0042-6989(94)90011-6.

- Vienola KV, Braaf B, Sheehy CK, Yang Q, Tiruveedhula P, Arathorn DW, de Boer J, Roorda A. Real-time eye motion compensation for OCT imaging with tracking SLO. Biomedical Optics Express. 2012;3(11):2950–2963. doi: 10.1364/BOE.3.002950.

- Vogel CR, Arathorn DW, Roorda A, Parker A. Retinal motion estimation in adaptive optics scanning laser ophthalmoscopy. Opt. Express. 2006;14(2):487–497. doi: 10.1364/opex.14.000487.

- Yang Q, Arathorn DW, Tiruveedhula P, Vogel CR, Roorda A. Design of an integrated hardware interface for AOSLO image capture and cone-targeted stimulus delivery. Opt. Express. 2010;18(17):17841–17858. doi: 10.1364/OE.18.017841.