Abstract

Lung cancer has a poor prognosis when not diagnosed early and unresectable lesions are present. The management of small lung nodules noted on computed tomography scan is controversial due to uncertain tumor characteristics. A conventional computer-aided diagnosis (CAD) scheme requires several image processing and pattern recognition steps to accomplish a quantitative tumor differentiation result. In such an ad hoc image analysis pipeline, every step depends heavily on the performance of the previous step. Accordingly, tuning of classification performance in a conventional CAD scheme is very complicated and arduous. Deep learning techniques, on the other hand, have the intrinsic advantage of automatic feature exploitation and tuning of performance in a seamless fashion. In this study, we attempted to simplify the image analysis pipeline of conventional CAD with deep learning techniques. Specifically, we introduced models of a deep belief network and a convolutional neural network in the context of nodule classification in computed tomography images. Two baseline methods with feature computing steps were implemented for comparison. The experimental results suggest that deep learning methods could achieve better discriminative results and hold promise in the CAD application domain.

Keywords: nodule classification, deep learning, deep belief network, convolutional neural network

Introduction

Lung cancer is a malignant disease carrying a poor prognosis, with sufferers having an average 5-year survival rate of less than 20%.1 Patients with locally advanced, unresectable, or medically inoperable disease are usually treated with concurrent radiotherapy and chemotherapy. Although targeted therapeutics and various chemotherapy regimens are available, locally advanced lung cancer carries a very poor prognosis, with a mean survival time of less than 12 months. Thus, early detection of a lung lesion to improve the complete resection rate (R0 resection) and increase the likelihood of survival is important. Chest computed tomography (CT) scan, especially high resolution CT, has been widely accepted for detection of lung tumors. Intriguingly, small lung nodules noted on CT images make the differential diagnosis clinically difficult and may confuse clinical decision-making. Small lung nodules are regarded as infrequently malignant, difficult to biopsy or excise, and not reliably characterized by positron emission tomography scan.2 In clinical practice, the American College of Chest Physicians has published a guideline for the diagnosis and management of pulmonary nodules.3 In this expert consensus-based recommendation, small nodules less than 8 mm could be further surveyed, characterized, or kept under observation according to evidence-based risk estimation. However, the current guideline relies only on the size of lung nodules, rendering clinical decision-making difficult and controversial. Clearly, the development of a more informative tool for clinicians to differentiate the nature of pulmonary nodules noted on CT scan remains an urgent task.

Computer-aided diagnosis (CAD) is a research field concerned with offering a quantitative opinion to improve the clinical diagnostic process.4,5 One of the major purposes of CAD is to automatically differentiate the malignant/benign nature of tumors/lesions6–9 based on numeric image features to assist decision-making whenever there is diagnostic uncertainty and disagreement.5,10 A traditional CAD scheme commonly involves several image processing tasks and then performs a classification task for differentiation of a tumor/lesion. The performance of a conventional CAD scheme relies heavily on the intermediate results of the image processing tasks6,8,11,12 for reliable features. Meanwhile, integration and selection of computed features are other important issues in many CAD problems.

The recent advent of deep learning techniques has highlighted the possibility of automatically uncovering features from the training images13–15 and exploiting the interaction,16 even hierarchy,17 among features within the deep structure of a neural network. Accordingly, the issues of feature computing, selection, and integration can potentially be addressed by this new learning framework without a complicated pipeline of image processing and pattern recognition steps. Further, the annotation costs with deep learning may not be as expensive as those in the conventional CAD frameworks. The annotator can simply specify the malignant/benign nature of the training images without the need for meticulous drawing of the tumor boundaries on the training data.

Deep learning has been less explored in the context of CAD. The first study was by Suk and Shen,16 who introduced the technique of a stacked autoencoder for the problem of CAD in Alzheimer’s disease, with promising accuracy. However, there is no related previous work on tumor differentiation with deep learning techniques. In this study, we exploit a deep learning framework for the problem of tumor differentiation to address feature-related and annotation cost issues.

The specific CAD problem targeted in this paper is differentiation of a pulmonary nodule on CT images. The deep belief network (DBN)14,15 and convolutional neural network (CNN) models18 have been tested using the public Lung Image Database Consortium dataset19,20 for classification of malignancy of lung nodules without computing the morphology and texture features. In this paper, the effectiveness of a deep learning CAD framework in lung cancer is demonstrated, with comparison to explicit feature computing CAD frameworks.

As discussed earlier, computerized classification of a lung nodule needs to characterize the nodules with several quantitative features.21 Lately, geometric feature descriptors, like scale invariant feature transform (SIFT)7,21 and local binary pattern (LBP),7 have been shown to be successful in modeling pulmonary nodules seen on CT in the context of detection and classification. The fractal analysis technique9 has also recently been revisited to characterize the textural features in multiple spatial scales to determine if solitary nodules are malignant. These computed features require a subsequent classifier, eg, K-nearest neighbor7,21 or support vector machine,9 to conduct the differentiation task. In this study, geometric descriptors (SIFT + LBP) and fractal features with related classifiers7,9,21 were used as baselines against which to compare the efficacy of the DBN and CNN learning frameworks. The experimental results confirm that the deep learning framework can outperform the conventional feature computing CAD frameworks.

The major contribution of this paper lies in the exploitation of deep learning techniques in the application of tumor classification. The deep learning framework is free of the explicit feature computing, selection, and integration steps. To the best of our knowledge, this is the first work introducing deep learning techniques for the problem of pulmonary nodule classification, and it could serve as a basis for addressing the advanced nodule detection problem.

Materials and methods

Pulmonary nodules can be diagnosed as cancer based on the characteristics of shape, eg, sphericity and spiculation, and composition of interior structures, like fluid, calcification, and fat. A pulmonary nodule could occur anywhere within the lung, including in the chest wall, airway, pulmonary fissure, or vessel, thus making clinical diagnosis a complicated task. Figure 1 lists several types of pulmonary nodules that can be seen on CT scans.

Figure 1.

Various types of pulmonary nodules seen on computed tomography scan images. Lung nodules are highlighted in yellow.

Elaboration of quantification regarding the semantic nodule characteristics of sphericity, spiculation, and calcification remains an open issue,7,9,21 and again commonly requires the image segmentation step to obtain quantitative features. In this study, we utilized the deep learning framework to circumvent the need to elaborate semantic features, but still achieved satisfactory classification performance.

Deep learning is the current state-of-the-art machine learning technique, where a number of layers of data computational stages in a hierarchical structure are exploited for feature learning and pattern classification. The basic idea of deep learning is similar to how the human brain works, although in greatly simplified form. Every layer of the deep learning “brain” creates abstractions and then selects among them. The more layers, the more abstractions. In this paper, we apply two deep learning architectures, the DBN and the CNN, to classification of the malignant or benign nature of lung nodules without explicitly computing the morphology and texture features.

Deep belief network

The idea of a deep multilayer neural network was proposed more than a decade ago. In general, it is a more complex approach than a single perceptron. Although a multilayer perceptron possesses more freedom, until recently it was too difficult to train deep multilayer neural networks, since gradient-based optimization starting from random initialization often became trapped in poor solutions. Empirically, deep networks were found to be no better, and often worse, than neural networks with a single perceptron. Hinton et al14 presented a greedy layer-wise unsupervised learning algorithm for the DBN, ie, a probabilistic generative model made up of a multilayer perceptron. The training strategy used by Hinton et al14 shows excellent results and hence builds a good foundation for handling the problem of training deep networks. This greedy layer-by-layer approach constructs deep architectures that exploit hierarchical explanatory factors. Different concepts are learned from other concepts, with the more abstract, higher level concepts being learned from the lower level ones. Deep learning helps to disentangle these abstractions and pick out which features are useful for learning.

Given the observation x and the l hidden layers hk of neurons, 1≤ k ≤ l, the DBN framework is a generative graphical model that models the joint distribution of the observation and the hidden layers as:

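$$P(x, h^{1}, \ldots, h^{l}) = \left( \prod_{k=1}^{l-1} P(h^{k-1} \mid h^{k}) \right) P(h^{l-1}, h^{l}) \tag{1}$$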

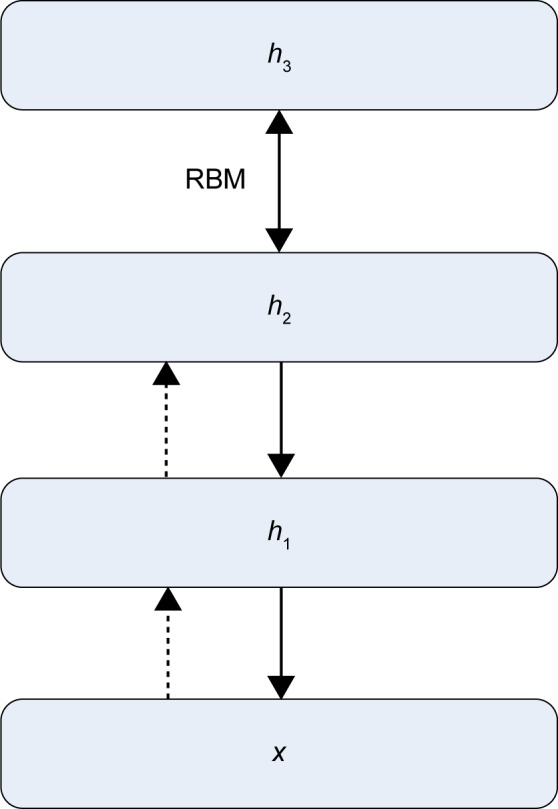

where h0 layer is the input observation x. With such factorization, the learning process can be realized in a greedy layer-wise14,15 fashion. In equation (1), the term P(hk−1|hk) is the conditional distribution of the visible unit layer conditioned on the hidden layer of the restricted Boltzmann machine (RBM) at the level k.

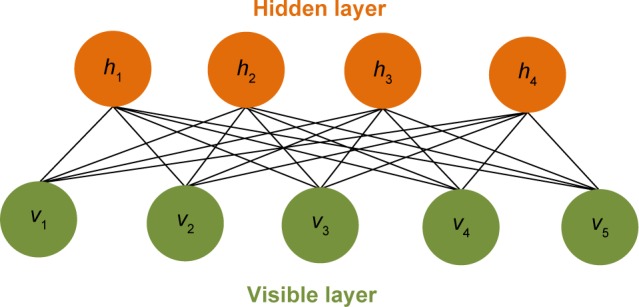

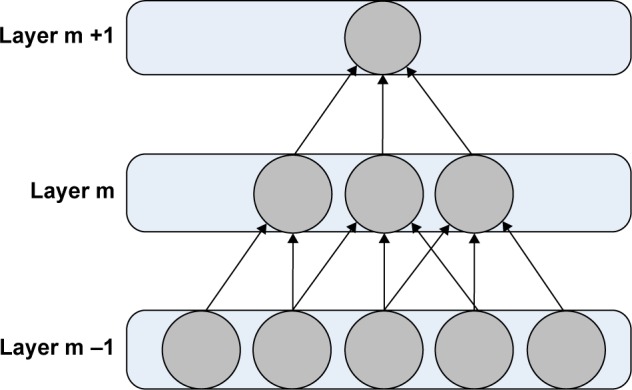

An RBM is a fully connected bipartite graph as shown in Figure 2. The energy function of an RBM can be defined as:

$$E(v, h) = -b'v - c'h - h'Wv \tag{2}$$

where v and h are the visible and hidden layers of the RBM, respectively. In equation (2), c′ is the bias vector for the hidden layer, whereas b′ is the bias vector for the visible layer. W is the weight matrix defining the interaction between units of the visible and hidden layers. Since the RBM has the shape of a bipartite graph, with no intra-layer connections, the hidden unit activations are mutually independent given the visible unit activations and, conversely, the visible unit activations are mutually independent given the hidden unit activations. The conditional independence of units in the two layers can then be expressed as:

$$P(h \mid v) = \prod_{m} P(h_{m} \mid v) \tag{3}$$

$$P(v \mid h) = \prod_{n} P(v_{n} \mid h) \tag{4}$$

where m and n are the indices of hidden and visible units, respectively. Since in most cases the units of an RBM are binary variables, the activation probability of each unit can be described with a sigmoid function, σ, as follows:

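$$P(h_{m} = 1 \mid v) = \sigma(c_{m} + W_{m} v) \tag{5}$$

$$P(v_{n} = 1 \mid h) = \sigma(b_{n} + W'_{n} h) \tag{6}$$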

Figure 2.

Scheme for restricted Boltzmann machine. The restricted Boltzmann machine is a fully connected bipartite graph.

With the above mathematical definition and modeling, the RBM can be constructed from the input data using the Markov chain Monte Carlo and Gibbs sampling technique.
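As a minimal sketch of the Gibbs sampling mentioned above, the following NumPy code alternates between the hidden and visible layers of a binary RBM using the standard sigmoid conditionals. The function names, array shapes, and toy layer sizes are assumptions made for illustration and are not taken from the authors' implementation.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def sample_hidden(v, W, c, rng):
    """Sample binary hidden units given visible units.

    W is assumed to have shape (n_hidden, n_visible); c is the hidden bias.
    """
    p_h = sigmoid(c + v @ W.T)
    return p_h, (rng.random(p_h.shape) < p_h).astype(float)

def sample_visible(h, W, b, rng):
    """Sample binary visible units given hidden units; b is the visible bias."""
    p_v = sigmoid(b + h @ W)
    return p_v, (rng.random(p_v.shape) < p_v).astype(float)

def gibbs_step(v, W, b, c, rng):
    """One full step of block Gibbs sampling: v -> h -> v."""
    _, h = sample_hidden(v, W, c, rng)
    p_v, v_new = sample_visible(h, W, b, rng)
    return v_new, h, p_v

# Toy example with hypothetical sizes: 3 samples, 6 visible and 4 hidden units.
rng = np.random.default_rng(0)
n_visible, n_hidden = 6, 4
W = 0.1 * rng.standard_normal((n_hidden, n_visible))
b = np.zeros(n_visible)
c = np.zeros(n_hidden)
v0 = rng.integers(0, 2, size=(3, n_visible)).astype(float)
v1, h0, p_v1 = gibbs_step(v0, W, b, c, rng)
```

Because the graph is bipartite, each layer can be sampled in a single vectorized operation, which is what makes block Gibbs sampling practical for RBMs.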

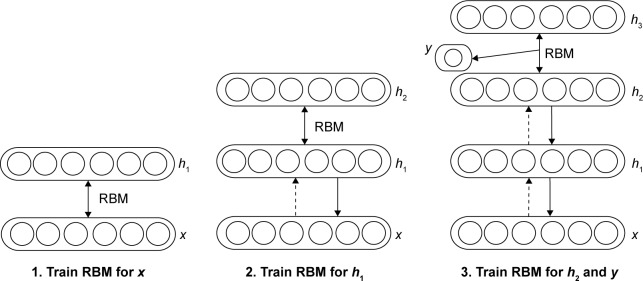

Training an effective deep generative graphical model like the DBN could not be easily achieved with the traditional back-propagation method. Hinton et al14 proposed a fast and greedy learning scheme to establish the DBN by iteratively constructing stacked RBMs. The DBN is first trained on the training data x in an unsupervised manner to obtain the preliminary deep network. The pretrained network is then refined in a supervised manner for the specific classification or recognition purpose. The training process for the DBN is summarized below. More technical details on DBN can be found in Hinton et al14 and Bengio et al.15

Step 1

Let k = 1 and construct an RBM by taking the layer hk as the hidden layer of the current RBM and the observation layer hk−1, ie, x, as the visible layer of the RBM.

Step 2

Draw samples of the layer k according to equation (4).

Step 3

Construct an upper layer of RBM at level k+1 by taking samples from step 2 as the training samples for the visible layer of this new upper layer RBM.

Step 4

Iterate steps 2 and 3 until k = l − 1, propagating the drawn samples upward.

Step 5

Add an extra neuron on top of the deep network pretrained without supervision in steps 1–4, and train the modified neural network in a supervised manner with the specific labels, here malignant or benign, of the training data x to achieve the classification goal.
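Steps 1–4 above can be sketched as follows. This is an illustrative approximation only: it trains each RBM with one-step contrastive divergence (CD-1) on the full batch rather than on mini-batches, the function names and hyperparameters are assumptions, and the supervised fine-tuning of step 5 is omitted.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_rbm_cd1(data, n_hidden, rng, epochs=5, lr=0.1):
    """Train one binary RBM with one-step contrastive divergence."""
    n_samples, n_visible = data.shape
    W = 0.01 * rng.standard_normal((n_hidden, n_visible))
    b = np.zeros(n_visible)  # visible bias
    c = np.zeros(n_hidden)   # hidden bias
    for _ in range(epochs):
        # Positive phase: hidden probabilities and samples given the data.
        p_h0 = sigmoid(c + data @ W.T)
        h0 = (rng.random(p_h0.shape) < p_h0).astype(float)
        # Negative phase: one Gibbs step down to the visible layer and up again.
        p_v1 = sigmoid(b + h0 @ W)
        p_h1 = sigmoid(c + p_v1 @ W.T)
        # CD-1 estimate of the log-likelihood gradient.
        W += lr * (p_h0.T @ data - p_h1.T @ p_v1) / n_samples
        b += lr * (data - p_v1).mean(axis=0)
        c += lr * (p_h0 - p_h1).mean(axis=0)
    return W, b, c

def pretrain_dbn(x, layer_sizes, rng):
    """Greedy layer-wise pretraining of stacked RBMs (steps 1-4)."""
    layers = []
    visible = x  # step 1: the observation is the first visible layer
    for n_hidden in layer_sizes:
        W, b, c = train_rbm_cd1(visible, n_hidden, rng)
        layers.append((W, b, c))
        # Step 2: draw samples of the hidden layer just trained.
        p_h = sigmoid(c + visible @ W.T)
        # Step 3: those samples become the visible data of the next RBM.
        visible = (rng.random(p_h.shape) < p_h).astype(float)
    return layers

# Toy data: 20 binary vectors of length 16, two hidden layers (hypothetical sizes).
rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=(20, 16)).astype(float)
layers = pretrain_dbn(x, layer_sizes=[8, 4], rng=rng)
```

Each RBM is trained in isolation, so no gradient has to flow through the whole stack during pretraining; only the later supervised refinement treats the network as a single model.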

Convolutional neural network

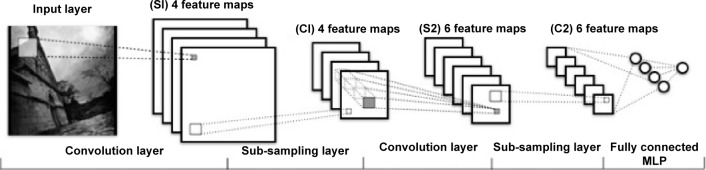

A CNN18 is a biologically inspired deep architecture. This framework was devised to mimic the mechanism of visual perception with overlapping neurons to exploit the spatial and local pattern of the objects of interest. The typical CNN framework is comprised of several convolutional and subsampling layers, followed by a fully connected traditional multiple layer perceptron.

For two-dimensional image analysis, the dimensionality of a convolutional layer is usually set as 2 to capture local spatial patterns of the object of interest. The size of the convolutional layer is smaller than its input layer, and the layer can comprise multiple parallel feature maps. In each feature map, the neighboring hidden units are replicated units that share the same parameterization (weight vector and bias) to reduce the number of free parameters to be learnt. The feature map can be interpreted as the input image/map being convolved with a linear filter, parameterized by synaptic weights and a bias, with the result passed through a nonlinear function such as a sigmoid.

The subsampling layer performs non-linear downsampling of the input image or feature map. The non-linear downsampling is mostly realized by means of maximum pooling, which selects the maximum value of every non-overlapping subregion of the input map. The major functions of the subsampling layer are to reduce the learning complexity for the upper layer and to provide a degree of invariance to translation of the input image.
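To make the two layer types concrete, the sketch below computes a single feature map (a linear filter followed by a sigmoid) and then applies non-overlapping maximum pooling. The 5 by 5 kernel, the 2 by 2 pooling size, and the random input are assumptions chosen for illustration; as is common in CNN implementations, the filter is applied as a cross-correlation without flipping the kernel.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding ("valid" mode)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def feature_map(image, kernel, bias):
    """Linear filtering with shared weights and bias, followed by a sigmoid."""
    return 1.0 / (1.0 + np.exp(-(conv2d_valid(image, kernel) + bias)))

def max_pool(fmap, size=2):
    """Maximum over every non-overlapping size x size subregion."""
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

rng = np.random.default_rng(0)
roi = rng.random((32, 32))                  # stand-in for a 32 x 32 nodule ROI
kernel = 0.1 * rng.standard_normal((5, 5))  # hypothetical 5 x 5 filter
fmap = feature_map(roi, kernel, bias=0.0)   # shape (28, 28)
pooled = max_pool(fmap, size=2)             # shape (14, 14)
```

Because every position in the feature map reuses the same 25 weights and one bias, the layer has far fewer free parameters than a fully connected layer on the same input.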

The CNN model can be constructed from training data with the gradient back-propagation method from the top of the fully connected multiple layer perceptron downward to each convolution layer to adjust the parameters of each hidden unit with respect to each feature map. The architecture of a CNN is illustrated in Figure 3. Note that since feature map size decreases with depth, layers near the input layer will tend to have fewer filters, while layers higher up can have many more filters. In this case, we have four and six feature maps for the lower and higher layers, respectively.

Figure 3.

Architecture of a convolutional neural network. This model was constructed from training data using the gradient back-propagation method.

Abbreviation: MLP, multiple layer perceptron.

Nodule classification with DBN

Because CT scans are three-dimensional images with lower resolution along the z axis, ie, across slices, the two-dimensional region of interest (ROI) of a pulmonary nodule depicted in a single CT slice serves as an individual training sample. Since the physical resolution of CT images is higher on the x and y axes, three-dimensional features may sometimes be inaccurate because of the low resolution on the z axis. The two-dimensional ROIs of a nodule are therefore treated equally and independently as training samples for the DBN. Because the image patches in some ROIs may depict only partial information about the nodule, training with such two-dimensional input samples makes the DBN model more robust. As illustrated in Figure 4, the nodule classifier is trained by constructing multiple bipartite undirected graphical models (RBMs), as defined in the earlier subsection on DBN. Note that each RBM consists of a layer of visible units that represent the data and a layer of hidden units that learn to represent features capturing higher-order correlations in the data. The two layers are connected by a weight matrix, but there are no connections between units within a layer. When two RBMs are stacked to construct a DBN, the hidden layer of the first RBM is linked to the visible layer of the second RBM. The DBN is trained one layer at a time, starting from the bottom layer. The values of the hidden units in one layer, inferred from the data, are treated as the data for training the next layer. Training is based on the stochastic gradient descent method, with the contrastive divergence algorithm used to approximate the gradient of the log-likelihood. The classification task is performed via a combination of unsupervised pretraining and subsequent supervised fine-tuning.

Figure 4.

Deep belief network learning framework to circumvent elaboration of semantic features. The concept of a training nodule classifier is illustrated.

Abbreviation: RBM, restricted Boltzmann machine.

Results and discussion

Classification of pulmonary nodules with deep learning techniques was tested on the Lung Image Database Consortium dataset, which includes 1,010 patients collected from Weill Cornell Medical College, University of California at Los Angeles, University of Chicago, University of Iowa, and University of Michigan. Each patient record contains at least one lung CT scan with four sets of annotations from four radiologists. Nodules with a diameter larger than 3 mm were further annotated with diagnostic information, including identification of malignancy, by the radiologists.

In this study, we selected all nodules with diameters larger than 3 mm from the Lung Image Database Consortium dataset. The total number of nodules selected under this criterion was 2,545. To alleviate the impact of data bias, we performed leave-one-out cross-validation in the training phase.
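The leave-one-out protocol can be sketched as below: each sample is held out once while the model is fitted on all remaining samples. The text does not state whether samples are held out per nodule or per slice, so the unit of a "sample" here is an assumption, and the nearest-centroid classifier and toy data are hypothetical stand-ins for the actual models and ROIs.

```python
def leave_one_out_splits(n_samples):
    """Yield (training indices, held-out index) for every sample."""
    for held_out in range(n_samples):
        train = [i for i in range(n_samples) if i != held_out]
        yield train, held_out

def loo_accuracy(samples, labels, fit, predict):
    """Fraction of held-out samples classified correctly."""
    correct = 0
    for train_idx, test_idx in leave_one_out_splits(len(samples)):
        model = fit([samples[i] for i in train_idx],
                    [labels[i] for i in train_idx])
        correct += int(predict(model, samples[test_idx]) == labels[test_idx])
    return correct / len(samples)

# Hypothetical stand-in classifier: nearest class centroid on a scalar feature.
def fit_centroids(xs, ys):
    centroids = {}
    for label in set(ys):
        members = [x for x, y in zip(xs, ys) if y == label]
        centroids[label] = sum(members) / len(members)
    return centroids

def predict_centroid(centroids, x):
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Toy data, not drawn from the dataset: 0 = benign, 1 = malignant.
samples = [0.0, 0.1, 0.2, 1.0, 1.1, 1.2]
labels = [0, 0, 0, 1, 1, 1]
accuracy = loo_accuracy(samples, labels, fit_centroids, predict_centroid)
```

With n samples this requires n separate training runs, which is the price paid for using almost all of the data for training in every fold.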

The DBN and CNN deep learning frameworks were adopted to achieve nodule differentiation without the elaboration of feature computing. The training samples were resized to 32 by 32 ROIs to facilitate the training procedure. In the experiment, all DBNs were pretrained in an unsupervised manner using RBMs. The RBMs were trained using stochastic gradient descent. Figures 3 and 4 illustrate the architecture and concept for the models of CNN and DBN, respectively. In addition, Figures 5 and 6 illustrate the structures of the CNN and DBN, respectively, and show that while the weights between the visible layer and the hidden layer of the CNN are directed, the weights between two layers of the DBN are undirected. Hence, the DBN has more freedom and can achieve better performance than the CNN in terms of computational efficiency of convergence or differentiation accuracy.
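The resizing of ROIs to 32 by 32 described above could be done as in the following sketch. The interpolation method is not stated in the text, so nearest-neighbor sampling is an assumption here, as are the function names and the min-max scaling used to turn an ROI into a visible-layer vector.

```python
import numpy as np

def resize_nearest(roi, out_h=32, out_w=32):
    """Resize a 2D ROI by nearest-neighbor sampling (assumed interpolation)."""
    roi = np.asarray(roi)
    rows = np.arange(out_h) * roi.shape[0] // out_h
    cols = np.arange(out_w) * roi.shape[1] // out_w
    return roi[np.ix_(rows, cols)]

def to_visible_vector(roi):
    """Scale intensities to [0, 1] and flatten to a 1,024-element vector."""
    patch = resize_nearest(roi).astype(float)
    span = patch.max() - patch.min()
    if span > 0:
        patch = (patch - patch.min()) / span
    else:
        patch = np.zeros_like(patch)
    return patch.ravel()

# Hypothetical 64 x 64 ROI filled with increasing values.
roi = np.arange(64 * 64).reshape(64, 64)
small = resize_nearest(roi)
vector = to_visible_vector(roi)
```

Note that mapping every ROI to the same grid removes information about the physical size of the nodule, whatever interpolation is used.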

Figure 5.

Convolutional neural network structure: connectivity pattern between layers.

Figure 6.

Deep belief network structure.

Abbreviation: RBM, restricted Boltzmann machine.

Two feature-based methods7,9 were implemented as baselines for illustration of the effectiveness of the deep learning techniques with regard to the nodule classification problem in this study. The first method7 adopted the descriptors of SIFT and LBP to quantitatively profile a two-dimensional ROI in a nodule. Dimension reduction techniques were further applied to shrink the feature size but preserve the discriminative power. The K-nearest neighbor method was then utilized for the classification task. The second feature-based method9 attempted to fulfill the nodule classification task with the technique of fractal analysis. This fractal analysis method was also applied on two-dimensional images for the purpose of nodule differentiation. Specifically, the popular fractional Brownian motion model was adopted to estimate the Hurst coefficient, which was shown to be linearly correlated with the fractal dimension, at a defined neighborhood. Five coefficients were computed with respect to the neighborhood radius of 3, 5, 7, 9, and 11, respectively, as the feature vector. Support vector machine was utilized to identify the malignant or benign nature of the nodule based on the computed feature vector.
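The fractal feature vector of the second baseline can be illustrated with the sketch below. It uses one common estimator for the fractional Brownian motion model, in which the mean absolute intensity difference at pixel distance d scales as d raised to the Hurst coefficient H, so H is the slope of a log-log regression. The exact estimator in the baseline method may differ; the function names, the use of horizontal and vertical lags only, and the choice of the ROI center as the reference pixel are assumptions.

```python
import numpy as np

def hurst_coefficient(window, max_lag):
    """Slope of log mean|I(p + d) - I(p)| against log d over lags 1..max_lag."""
    window = np.asarray(window, dtype=float)
    lags = np.arange(1, max_lag + 1)
    mean_abs_diff = []
    for d in lags:
        horizontal = np.abs(window[:, d:] - window[:, :-d])
        vertical = np.abs(window[d:, :] - window[:-d, :])
        both = np.concatenate([horizontal.ravel(), vertical.ravel()])
        mean_abs_diff.append(both.mean())
    # The small constant guards against log(0) on perfectly flat windows.
    log_diff = np.log(np.asarray(mean_abs_diff) + 1e-12)
    slope, _ = np.polyfit(np.log(lags), log_diff, 1)
    return slope

def fractal_feature_vector(roi, center, radii=(3, 5, 7, 9, 11)):
    """One Hurst coefficient per neighborhood radius around the center pixel."""
    row, col = center
    features = []
    for r in radii:
        window = roi[row - r:row + r + 1, col - r:col + r + 1]
        features.append(hurst_coefficient(window, max_lag=r))
    return np.array(features)

# Sanity check on a linear ramp, whose differences grow linearly with distance.
yy, xx = np.mgrid[0:32, 0:32]
ramp = (xx + yy).astype(float)
features = fractal_feature_vector(ramp, center=(16, 16))
```

On the ramp image every coefficient is close to 1, the smooth extreme; rougher textures give smaller values, and the resulting five-element vector is what a classifier such as a support vector machine would receive.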

Table 1 summarizes the sensitivity and specificity of the DBN, CNN, SIFT + LBP, and fractal methods. It can be observed that both DBN and CNN outperform the feature-computing methods, confirming the efficacy of deep learning techniques with regard to the CAD problem of classification of pulmonary nodules seen on CT images.
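For reference, sensitivity and specificity are computed from the confusion counts as in the following sketch, where 1 denotes malignant and 0 denotes benign. The label vectors are made-up toy values for illustration and are not results from this study.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, 1 = malignant."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # malignant, detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malignant, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, flagged
    return tp / (tp + fn), tn / (tn + fp)

# Toy labels: 4 malignant and 6 benign nodules.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
sensitivity, specificity = sensitivity_specificity(y_true, y_pred)
```

Reporting both values matters when the classes are imbalanced, since a classifier can score a high overall accuracy while missing most malignant nodules.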

Table 1.

Comparison of performance of various models

Abbreviations: DBN, deep belief network; CNN, convolutional neural network; SIFT, scale invariant feature transform.

CAD is a quantitative diagnostic tool to provide an objective opinion for the reference of radiologists. Most CAD frameworks used for tumor classification need to address several issues, including feature extraction, selection, and integration, to achieve the best performance. Also, the choice of classification model is another important factor affecting the differentiation result.

Several deep learning schemes, on the other hand, can potentially avoid the need to address the above-mentioned issues in conventional CAD frameworks by a seamless feature exploration and classification scheme. In this study, we exploited two specific models of deep learning, ie, DBN and CNN, for the purpose of classification of lung nodules seen on CT images. Two feature computing methods, ie, SIFT + LBP7 and fractal analysis,9 were implemented for comparison. The experimental results suggest that the feature computing methods have less discriminative power than DBN and CNN models, and hence encourage the introduction of deep learning techniques into the CAD application domain.

The major drawback of the deep learning techniques used in this study lies in the resizing of the input images, which means that the size cue is discarded in the nodule classification. Although the size cue is an important diagnostic indicator for identification of malignancy, the adopted DBN and CNN can still achieve satisfactory performance without it. Our current results suggest that a better nodule classification result could be achieved if the size cue were incorporated into the deep learning framework. This study could serve as a basis for further exploration of the nodule detection problem that considers the whole CT volume with the corresponding physical dimensions.

Conclusion

Inspired by recent successes with deep learning techniques, we attempted to address the longstanding fundamental feature extraction problem for classification of the malignant or benign nature of lung nodules without actually computing the morphology and texture features. The encouraging results of our empirical studies indicate that the proposed deep learning framework outperforms conventional hand-crafted feature computing CAD frameworks. To the best of our knowledge, this is the first research to apply deep learning techniques to the problem of pulmonary nodule classification. This study may serve as a basis to address the intelligent nodule detection problem. We believe this is just the beginning for deep learning with application to such tasks, and there are still many open challenges. In future work, we will investigate more advanced deep learning techniques and evaluate further diverse datasets for more in-depth empirical studies.

Acknowledgments

This work was supported in part by the NTUST-MMH Joint Research Program (NTUST-MMH-No 102-03) and the Ministry of Science and Technology (103-2221-E-011-105).

Footnotes

Disclosure

The authors do not have a relationship with the manufacturers of the materials involved either in the past or present and did not receive funding from the manufacturers to carry out their research.

References

1. Siegel R, Naishadham D, Jemal A. Cancer statistics, 2013. CA Cancer J Clin. 2013;63(1):11–30. doi: 10.3322/caac.21166.

2. Gomez Leon N, Escalona S, Bandres B, et al. F-fluorodeoxyglucose positron emission tomography/computed tomography accuracy in the staging of non-small cell lung cancer: review and cost-effectiveness. Radiol Res Pract. 2014;2014:135934. doi: 10.1155/2014/135934.

3. Gould MK, Donington J, Lynch WR, et al. Evaluation of individuals with pulmonary nodules: when is it lung cancer? Diagnosis and management of lung cancer, 3rd ed: American College of Chest Physicians evidence-based clinical practice guidelines. Chest. 2013;143(5 Suppl):e93S–e120S. doi: 10.1378/chest.12-2351.

4. Doi K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput Med Imaging Graph. 2007;31(4–5):198–211. doi: 10.1016/j.compmedimag.2007.02.002.

5. van Ginneken B, Schaefer-Prokop CM, Prokop M. Computer-aided diagnosis: how to move from the laboratory to the clinic. Radiology. 2011;261(3):719–732. doi: 10.1148/radiol.11091710.

6. Cheng J-Z, Chou Y-H, Huang C-S, et al. Computer-aided US diagnosis of breast lesions by using cell-based contour grouping. Radiology. 2010;255(3):746–754. doi: 10.1148/radiol.09090001.

7. Farag A, Ali A, Graham J, Elshazly S, Falk R. Evaluation of geometric feature descriptors for detection and classification of lung nodules in low dose CT scans of the chest; Paper presented at the Biomedical Imaging: From Nano to Macro, 2011 IEEE International Symposium; Chicago, IL, USA. March 30 to April 2, 2011.

8. Farag A, Graham J, Elshazly S. Statistical modeling of the lung nodules in low dose computed tomography scans of the chest; Paper presented at Image Processing (ICIP), 17th IEEE International Conference; Hong Kong, People’s Republic of China. September 26–29, 2010.

9. Lin P-L, Huang P-W, Lee C-H, Wu M-T. Automatic classification for solitary pulmonary nodule in CT image by fractal analysis based on fractional Brownian motion model. Pattern Recognit. 2013;46(12):3279–3287.

10. Singh S, Maxwell J, Baker JA, Nicholas JL, Lo JY. Computer-aided classification of breast masses: Performance and interobserver variability of expert radiologists versus residents. Radiology. 2011;258(1):73–80. doi: 10.1148/radiol.10081308.

11. Way TW, Sahiner B, Chan H-P, et al. Computer-aided diagnosis of pulmonary nodules on CT scans: improvement of classification performance with nodule surface features. Med Phys. 2009;36:3086. doi: 10.1118/1.3140589.

12. Raykar VC, Yu S, Zhao LH, et al. Supervised learning from multiple experts: whom to trust when everyone lies a bit; Paper presented at the 26th Annual International Conference on Machine Learning; Montreal, QC, Canada. June 14–18, 2009.

13. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE Inst Electr Electron Eng. 1998;86(11):2278–2324.

14. Hinton GE, Osindero S, Teh Y-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18(7):1527–1554. doi: 10.1162/neco.2006.18.7.1527.

15. Bengio Y, Lamblin P, Popovici D, Larochelle H. Greedy layer-wise training of deep networks. Adv Neural Inf Process Syst. 2007;19:153.

16. Suk H-I, Shen D. Deep learning-based feature representation for AD/MCI classification. In: Mori K, Sakuma I, Sato Y, Barillot C, Navab N, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2013. Berlin, Germany: Springer; 2013.

17. Lee H, Grosse R, Ranganath R, Ng AY. Unsupervised learning of hierarchical representations with convolutional deep belief networks. Commun ACM. 2011;54(10):95–103.

18. Krizhevsky A, Sutskever I, Hinton G. Imagenet classification with deep convolutional neural networks. Paper presented at Advances in Neural Information Processing Systems. 2012. [Accessed May 20, 2015]. Available from: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.

19. Armato SG, McLennan G, Bidaut L, et al. The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI): a completed reference database of lung nodules on CT scans. Med Phys. 2011;38:915. doi: 10.1118/1.3528204.

20. Armato SG, III, McLennan G, McNitt-Gray MF, et al. Lung image database consortium: developing a resource for the medical imaging research community. Radiology. 2004;232(3):739–748. doi: 10.1148/radiol.2323032035.

21. Farag A, Elhabian S, Graham J, Farag A, Falk R. Toward precise pulmonary nodule descriptors for nodule type classification. In: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2010. Berlin, Germany: Springer; 2010.