Abstract

The current study employed a meta-analytic approach to investigate the relative importance of component reading skills to reading comprehension in struggling adult readers. A total of 10 component skills were consistently identified across 16 independent studies and 2,707 participants. Random effects models generated 76 predictor-reading comprehension effect sizes among the 10 constructs. The results indicated that six of the component skills exhibited strong relationships with reading comprehension (average rs ≥ .50): morphological awareness, language comprehension, fluency, oral vocabulary knowledge, real word decoding, and working memory. Three of the component skills yielded moderate relationships with reading comprehension (average rs ≥ .30 and < .50): pseudoword decoding, orthographic knowledge, and phonological awareness. Rapid automatized naming (RAN) was the only component skill that was weakly related to reading comprehension (r = .15). Morphological awareness was a significantly stronger correlate of reading comprehension than phonological awareness and RAN. This study provides the first attempt at a systematic synthesis of the recent research investigating the reading skills of adults with low literacy skills, a historically under-studied population. Directions for future research, the relation of our results to the children’s literature, and the implications for researchers and Adult Basic Education (ABE) programs are discussed.

Reading comprehension is a complex skill that draws on a multitude of higher and lower order cognitive processes (for a review see Cain & Oakhill, 2007). Most of the research on the cognitive processes related to reading comprehension has been based upon the study of school-aged children. However, there has been a sharp increase in the number of larger-scale investigations of reading-related predictors of the reading comprehension skills of adult literacy students over the past five years. These studies have included heterogeneous samples of adult learners and have administered large batteries of reading component skill measures: decoding (Mellard & Fall, 2012; Nanda, Greenberg, & Morris, 2010), metalinguistic skills (Binder, Snyder, Ardoin, & Morris, 2011; Tighe & Binder, 2014), fluency (MacArthur, Konold, Glutting, & Alamprese, 2010; Mellard, Fall, & Woods, 2010), language comprehension (Mellard & Fall, 2012; Sabatini, Sawaki, Shore, & Scarborough, 2010), and vocabulary knowledge (Hall, Greenberg, Laures-Gore, & Pae, 2014; Tighe, 2012). Moreover, these studies have employed several statistical techniques (e.g., regression analyses, path analyses, and exploratory and confirmatory factor analyses) to best understand the factor structure of component skills and what constitutes important predictors of reading comprehension in this population. However, there is no consensus as to the most important predictors of reading comprehension or the magnitudes of the various component skill-reading comprehension relationships in adult literacy students. The purpose of the present study was to conduct a meta-analysis to provide a systematic synthesis of the current research on the component reading skills of struggling adult readers. We wanted to understand important predictors of adults’ reading comprehension by assessing the direction and strength of the relationships between component skills and reading comprehension across multiple studies.

The Simple View of Reading

There has been substantial empirical evidence that word reading and language skills represent core component processes that influence reading comprehension in school-aged children (Goff, Pratt, & Ong, 2005; Tilstra, McMaster, Van den Broek, Kendeou, & Rapp, 2009; Vellutino, Tunmer, Jaccard, & Chen, 2007; Verhoeven & van Leeuwe, 2008). The Simple View of Reading (SVR), a prominent model of reading comprehension in the children’s literature, postulates that reading comprehension is equal to the product of an individual’s decoding and linguistic comprehension skills (Gough & Tunmer, 1986; Hoover & Gough, 1990). The SVR framework has successfully been applied with two samples of low literate adults: Adult Basic Education (ABE) students, who had sight word recognition skills below a seventh grade level equivalency (GLE) (Sabatini et al., 2010); and young adult struggling readers (Braze, Tabor, Shankweiler, & Mencl, 2007). Sabatini et al. (2010) determined that an SVR confirmatory factor analysis (CFA) with latent constructs of decoding (real word and pseudoword reading) and language comprehension captured 64% of the variance in reading comprehension. Similarly, Braze et al. (2007) found that decoding (pseudoword reading) and listening comprehension accounted for a preponderance of the reading comprehension variance (76%).

Although these are the only studies to explicitly test the predictive utility of the SVR model, several studies have reported that decoding (Fracasso, Bangs, & Binder, in press; Mellard & Fall, 2012; Mellard et al., 2010; To, Tighe, & Binder, in press) and language comprehension (Mellard & Fall, 2012; Mellard et al., 2010) are important predictors of ABE students’ reading comprehension abilities. In addition, some studies have administered a variety of norm-referenced decoding assessments and distinguished between types of decoding measures (i.e., real word decoding and pseudoword decoding) when investigating the factor structure of adults’ component reading skills (MacArthur et al., 2010; Mellard & Fall, 2012; Nanda et al., 2010). Thus, we hypothesized that decoding and language comprehension skills would emerge as common predictors across studies included in our meta-analysis. We wanted to synthesize the results across multiple types of measures and to tease apart the construct of decoding (real word and pseudoword reading) to investigate the magnitude of the relationship of each decoding type with reading comprehension.

Both Sabatini et al. (2010) and Braze et al. (2007) extended their SVR models to examine the addition of an oral vocabulary knowledge predictor. Sabatini et al. (2010) reported that the latent vocabulary variable did not account for significant unique variance in reading comprehension after controlling for the SVR decoding and language comprehension components. In contrast, Braze et al. (2007) found that a composite oral vocabulary knowledge variable accounted for an additional 6% of the reading comprehension variance beyond decoding and listening comprehension. The Braze et al. (2007) finding supports the growing body of research indicating the importance of vocabulary knowledge (Fracasso et al., in press; Hall et al., 2014; Mellard et al., 2010; Taylor, Greenberg, Laures-Gore, & Wise, 2012; Tighe, 2012) to reading comprehension in ABE students. Some of these studies have found that vocabulary knowledge remained a significant predictor after controlling for other component skills: decoding (Hall et al., 2014; Taylor et al., 2012), fluency (Taylor et al., 2012), and morphological awareness (Fracasso et al., in press; Tighe, 2012). Similar to the research on decoding skills, the vocabulary studies have included an array of assessments: oral expressive vocabulary measures, oral receptive vocabulary measures, and reading vocabulary measures. Based on the past research, we hypothesized that vocabulary knowledge would emerge as a consistently investigated predictor across our meta-analysis studies.

Finally, Sabatini et al. (2010) expanded on their SVR CFA model to include a speeded word reading and text fluency latent variable. The addition of fluency as a separate component of the SVR model has been repeatedly explored in the children’s literature and has yielded mixed results (Aaron, Joshi, & Williams, 1999; Adlof, Catts, & Little, 2006; Cutting & Scarborough, 2006; Tilstra et al., 2009). Sabatini et al. (2010) found that fluency did not contribute additional unique variance independent of decoding and language comprehension in the ABE sample. Fluency (oral reading fluency and text fluency) has recently been explored in studies addressing the factor structure of component skills with ABE students (MacArthur et al., 2010; Mellard & Fall, 2012; Nanda et al., 2010); however, only a single study has reported that fluency is an important contributor to adults’ reading comprehension skills (Mellard et al., 2010). We hypothesized that fluency would be another predictor included in our meta-analysis; and we wanted to quantify the strength of this relationship with reading comprehension.

Metalinguistic Skills

Metalinguistic skills, including morphological awareness, phonological awareness, and orthographic knowledge, are another set of predictors that have been investigated recently in the adult literacy field. None of the research has investigated the unique and shared contributions of all three metalinguistic skills to reading skills; however, these constructs have been considered separately (or included two of the constructs) across several studies. For example, three recent studies have reported that morphological awareness is an important predictor of ABE students’ reading comprehension skills after controlling for phonological awareness (Tighe & Binder, 2014), decoding (To et al., in press), and oral vocabulary knowledge (Tighe, 2012). Phonological awareness has been reported as an important predictor of reading comprehension (Thompkins & Binder, 2003; Tighe & Binder, 2014), word reading skills (Greenberg, Ehri, & Perin, 1997) and a composite score of word reading, fluency, and comprehension skills (Binder et al., 2011). Similarly, orthographic knowledge has emerged as an important contributor to adults’ reading comprehension (Thompkins & Binder, 2003), word reading skills (Greenberg et al., 1997) and a composite score of reading abilities (word reading, fluency and comprehension) (Binder et al., 2011).

Beyond serving as predictors of reading skills, recent larger-scale investigations have included measures of phonological awareness (Mellard & Fall, 2012; Nanda et al., 2010) and orthographic knowledge (MacArthur et al., 2010) to examine the factor structure of adults’ component reading skills. For example, MacArthur et al. (2010) reported strong correlations (ranging from .60 to .86) between a latent orthographic knowledge variable and latent variables of fluency, decoding, word recognition, and reading comprehension. Utilizing a principal components analysis (PCA), Mellard and Fall (2012) determined that phonological awareness measures loaded highly on a word skills factor with measures of decoding and fluency. Although these studies did not explicitly investigate the independent contributions of orthographic knowledge and phonological awareness to reading comprehension, the studies included correlations among all individual measures. Therefore, the current study utilized the correlational data between component skill measures and reading comprehension measures to estimate the importance of these predictors across studies. We wanted to investigate which of these three metalinguistic skills had a stronger relationship with reading comprehension in this population.

Rationale for Meta-Analysis and Current Study

Meta-analysis is a quantitative statistical procedure that allows researchers to summarize the consistency of previously reported results and to investigate moderators that may account for the variability across studies (Borenstein, Hedges, Higgins, & Rothstein, 2009). We chose a meta-analytic approach because of the current lack of consensus as to the important reading-related contributors to adult literacy students’ reading comprehension skills. More specifically, we wanted to provide estimates of the direction and the magnitude of the identified predictor-reading comprehension relationships. Although historically the field of adult literacy has been under-funded and suffered from a paucity of rigorous research, there has been a recent upsurge in the number of larger-scale investigations of the component reading and reading comprehension skills of struggling adult readers (MacArthur et al., 2010; Mellard & Fall, 2012; Mellard et al., 2010; Nanda et al., 2010; Sabatini et al., 2010; Tighe, 2012). These studies have varied on a number of dimensions: the number and types of included component skills, the assessments administered, the statistical analyses employed, and the size of the included samples. Thus, we wanted to provide an initial attempt at summarizing and evaluating the correlational evidence of the predictor-reading comprehension relationships across these diverse investigations.

In accordance with meta-analytic procedures, we developed extensive article search criteria and a coding scheme to pinpoint studies that provided correlations between component skills and reading comprehension. Identifying previously measured component reading skills served two purposes: 1. it allowed us to use these skills in our meta-analysis models to quantify the relative importance of these predictors to reading comprehension; and 2. it enhanced our knowledge of which component skills have been consistently measured and highlighted which skills have not been considered in this population. Based on past research, we anticipated that the predictors included in the SVR framework (decoding, language comprehension, vocabulary knowledge, and fluency) and the metalinguistic skill predictors (morphological awareness, phonological awareness, and orthographic knowledge) would all emerge as constructs measured across multiple studies.

We employed random effects models to evaluate the relative importance of our identified predictor-reading comprehension correlations. These models allowed us to quantify which predictors exhibited the strongest relationships with reading comprehension (e.g., does morphological awareness demonstrate a stronger relationship with reading comprehension than orthographic knowledge?). Thus, the following research question was the impetus for our meta-analysis: What component reading skills emerge as the most important predictors of adult literacy students’ reading comprehension abilities?

Methods

Inclusion Criteria

The limited research base focusing on the reading skills of adult literacy students coupled with the inherent heterogeneity of the demographic and reading characteristics of the adult learners warranted less stringent inclusion criteria. For an article to be included in the current meta-analysis, we adopted the following inclusion criteria:

1. The study must be published in English because we did not have the resources to translate and evaluate articles published in other languages.

2. The study must include adults with low literacy skills who are enrolled in literacy or vocational programs that include an explicit reading component. We defined our target adult population in accordance with the National Research Council (NRC) standards as “individuals ages 16 and older” who are not concurrently enrolled in K-12 education (Lesgold & Welch-Ross, 2012, p. 70). However, the NRC standards also extended their definition to encompass adults enrolled in remedial college courses. We did not include adults enrolled in remedial college courses because these students have already received high school diplomas or high school equivalency degrees (General Educational Development [GED] certificates). The majority of adult education students are enrolled in literacy classes to complete their GED requirements. Thus, we only considered adult literacy courses in which the majority of students had not previously earned their high school diplomas or GED certificates (i.e., Adult Basic Education, Adult Secondary Education, Adult English Second Language courses, and Job Corps). We also excluded studies focusing on prison populations, learning-disabled high school students, or high school dropouts who are not concurrently enrolled in a literacy program.

3. The study must include at least one reading comprehension measure and report correlations between reading comprehension and other reading-related predictors. For studies that did not directly report correlations, we attempted to contact the author(s) to obtain this information.

Beyond these three initial screening criteria, we did not limit our searches by publication year, ages or GLEs of the participants, use of experimental versus norm-referenced assessments, or language status of the participants (native and non-native speakers of English). Moreover, to reduce publication bias and to increase the number of potentially relevant articles, we considered published, peer-reviewed articles as well as unpublished reports, theses, dissertations, and manuscripts under review or in preparation.

Search Terms and Search Procedures

To conduct an extensive literature search and locate applicable articles, we utilized multiple approaches: 1. computerized searches of two primary databases (Education Resources Information Center [ERIC] and PsycINFO); 2. perusal of early view, online first, and recently published articles from relevant journals; 3. cross-checking of references from previously published studies; and 4. contacting four experts in the field of adult literacy research. First, we utilized several combinations of key terms in our computerized database searches, all of which included a variant of our outcome variable (reading comprehension) and a variant of our population of interest (adult literacy students). Our search terms included: reading comprehension, reading, OR reading skills AND adults, struggling adult readers, adults with low literacy, low literate adults, functionally illiterate adults, illiterate adults, adult education, adult basic education, adult low literacy, OR adult illiteracy. The different combinations of search terms yielded a total of 3,438 studies from the ERIC and PsycINFO databases. Removal of the duplicate articles between the two databases left us with a total of 1,955 articles (as of July 3, 2013). By scanning the abstracts and, where necessary, the studies themselves, we reduced the 1,955 records to 16 articles. Articles were eliminated for four primary reasons: 1. non-empirical reports and single person descriptive case studies; 2. not including any measures of reading comprehension; 3. not including our target population of adult literacy students; and 4. intervention studies that did not include correlational (pre-test) data.

Second, we scanned relevant journals for early view/online first and recently published articles that may not have been identified in our electronic database searches. These journals were selected based on their commitment to publishing articles related to reading and/or Adult Basic Education. The following journals were searched: Reading and Writing: An Interdisciplinary Journal, Scientific Studies of Reading, Journal of Learning Disabilities, Reading Research Quarterly, Reading Psychology, Journal of Research in Reading, Journal of Educational Psychology, Journal of Research and Practice for Adult Literacy, Secondary, and Basic Education, Adult Education Quarterly, Learning Disability Quarterly, and Learning Disabilities Research and Practice. These searches resulted in an additional three articles that met our search inclusion criteria (Binder et al., 2011; Greenberg et al., 2010; Mellard et al., 2012).

Third, we cross-checked references from the studies we identified in the electronic database searches and journal scans. We identified six articles that potentially met our inclusion criteria. However, upon retrieving and reviewing these articles, none of these studies were included in the analyses because they were either non-empirical or did not include any measures of reading comprehension.

Finally, we contacted four experts in the field of adult literacy to ensure the comprehensiveness of our search. As previously mentioned, we decided to include both published, peer-reviewed articles and unpublished (under review, theses/dissertations, reports, and in prep) articles. These experts did not nominate any additional readily available published articles that we had not already retrieved from our searches; however, they sent us five additional studies on adult literacy students. From these five studies, three met our inclusion criteria: Sabatini (1997) (a dissertation), Fracasso et al. (in press), and Mellard (unpublished dataset). These experts also provided supplementary correlations between predictors and reading comprehension for several of our previously identified articles (Binder et al., 2011; Mellard et al., 2010; Mellard et al., 2012; To et al., in press). The experts were also helpful in elucidating a common issue we noticed in our selected articles: non-independent samples. Prior to coding our 21 identified articles, we eliminated three articles because the same samples were included across other identified studies and the articles did not report additional predictor correlations with reading comprehension (Greenberg, Pae, Morris, Calhoon, & Nanda, 2009; Herman, Gilbert-Cote, Reilly, & Binder, 2013; Mellard & Fall, 2012). Thus, our multiple approaches to identifying relevant articles left us with 18 remaining articles to code.

Coding Scheme

Studies were coded based on multiple study characteristics and reader demographics. For the first wave of coding, the following study characteristics were identified and coded: article identification number, full citation, published/unpublished, type of publication (e.g., thesis, dissertation, peer-reviewed article), norm-referenced/experimental predictor measure, name of predictor measure, type of predictor, total sample size, sample size included per correlation coefficient, norm-referenced/experimental reading comprehension measure, name of reading comprehension measure, Pearson correlation coefficient between each predictor measure and reading comprehension measure, and comments. Comments allowed us to note any additional information specific to the study. For example, we noted when a particular correlation was missing and, in the case of Greenberg et al. (2010), that the article was an intervention study and the correlations we included came only from the pre-test data. Authors were contacted for missing correlational data, and in all but one case we were able to fill in the appropriate correlation coefficients. We also coded the following reader demographics: age range, GLEs, and language status (inclusion of native English speakers, non-native English speakers, or both native and non-native English speakers). If correlations were provided for the entire sample and separately by language status, we coded the correlations for the entire sample as well as by language group. Because a substantial amount of reader demographic information was missing, we attempted to obtain as much information as possible from the authors. To check for accuracy of the coding scheme, a second researcher re-coded all of the articles. Inter-rater reliability was high across the study characteristics (97.9%) and reader demographic variables (96.3%).

For the second wave of coding, we categorized the predictor measures into corresponding constructs and aggregated similar measures within studies by applying a Fisher’s Zr transformation (described in detail in the Results section). Inter-rater reliability was acceptable for categorizing predictor measures into the appropriate predictor constructs (97.9%). Because we decided that each study would only contribute a single predictor-reading comprehension correlation per construct, we excluded predictor variables that were represented only in a single study: syntactic skills (Taylor et al., 2012), prosody (Binder et al., 2013), nonverbal reasoning (Braze et al., 2007), visual memory (Braze et al., 2007), short-term memory (Thompkins & Binder, 2003), and print experience (Braze et al., 2007). This eliminated two studies (Taylor et al., 2012; Binder et al., 2013) because these studies had overlapping samples with other included studies and did not report any further unique predictors. Thus, we included 16 articles and a total of 2,707 participants (ranging from 41 to 486 across studies, M = 169) in our final analyses. Table 1 presents a detailed summary of the study characteristics and reader demographics for the included studies.

Table 1.

Summary of Articles Included in the Meta-Analysis

| Author(s) | N | Age | GLEs (M) | Language | Predictor(s) | RC Measure(s) |
|---|---|---|---|---|---|---|
| Barnes et al. (unpublished) | 41 | 16–64 | 0– above 12th (6.7) | N | LC, MA, OK, F, RD | WJIII PC, TABE-R |
| Binder et al. (2011) | 90 | 18–64 | NR | B | F, OK, PA, PD, RD | WJIII PC |
| Braze et al. (2007) | 44 | 16–24 | NR | NR | OV, F, LC, OK, PD, WM, RD | PIAT-R RC*, GORT-4 C |
| Fracasso et al. (in press) | 63 | 18–83 | All literacy levels | B | LC, MA, OK, OV, PD | WRMT-R PC |
| Greenberg et al. (2010) | 98 | NR | Intermediate levels | B | OV, F, PD, RD | TABE-R |
| MacArthur et al. (2010) | 486 | 16–71 | 4th–7th | B | F, OK, PD, RD | NRC |
| Mellard et al. (2010) | 174 | NR | All NRS levels (5.0) | B | OV, F, LC, PD, RAN, RD, WM | WRMT-R PC |
| Mellard et al. (2012) | 296 | 16–25 | NRS levels 2–6 | NR | F, PA, PD, RAN, WM, OV, RD, OK | CASAS-R |
| Mellard (unpublished) | 202 | 16–26 | All NRS levels (4.7) | NR | OV, RD, PD | CASAS-R, TABE-R |
| Nanda et al. (2010) | 371 | 16–72 | 3rd–5th** | B | OV, F, PA, PD, RAN, RD | GORT-4 C, WJIII PC |
| Sabatini (1997) | 52 | 16–53 | NR | B | PD, RAN, RD | WIAT-RC |
| Sabatini et al. (2010) | 476 | 16–76 | Below 7th** | B | OV, F, LC, PD, RD | WJIII PC |
| Thompkins & Binder (2003) | 60 | 17–55 | Mean of 5th | B | OK, PA | TABE-R |
| Tighe (2012) | 136 | 16–73 | 3rd– above 12th (7.7) | N | OV, MA | TABE-R, TOSREC-9 |
| Tighe & Binder (2014) | 57 | 18–57 | 1st– above 12th (4.4) | B | MA, PA, PD, RD | WRMT-R PC |
| To et al. (in press) | 61 | 17–60 | 2nd– above 12th (7.1)** | B | F, MA, PD, RD | |

Note: GLEs = grade level equivalencies. RC = Reading Comprehension. N = Native English Speakers. B = Both (Native and Non-Native English Speakers). NR = Not Reported. LC = Language Comprehension. MA = Morphological Awareness. OK = Orthographic Knowledge. F = Fluency. RD = Real Word Decoding. OV = Oral Vocabulary. PD = Pseudoword Decoding. RAN = Rapid Automatized Naming. WM = Working Memory. PA = Phonological Awareness. NRS = National Reporting System. WJIII PC = Woodcock Johnson Third Edition, Passage Comprehension subtest. TABE-R = Test of Adult Basic Education – Reading subtest. PIAT-R RC = Peabody Individual Achievement Test–Revised, Reading Comprehension subtest. GORT-4 C = Gray Oral Reading Test-Fourth Edition, Comprehension score. WRMT-R PC = Woodcock Reading Mastery Test-Revised, Passage Comprehension subtest. NRC = Nelson Reading Comprehension subtest. CASAS-R = Comprehensive Adult Student Assessment System-Reading scores. WIAT-RC = Wechsler Individual Achievement Test, Reading Comprehension subtest. TOSREC-9 = Test of Silent Reading Efficiency and Comprehension, Grade 9.

\* An abridged version of the measure was utilized.

\*\* GLEs are based on measures of word and pseudoword reading/word recognition skills (instead of reading comprehension skills).
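The sample-size summary reported for the final set of studies can be reproduced from the N column of Table 1. The short sketch below does only that arithmetic; the variable names are illustrative and are not taken from the authors' materials.

```python
from statistics import mean

# N column of Table 1, in row order (Barnes et al. through To et al.)
sample_sizes = [41, 90, 44, 63, 98, 486, 174, 296,
                202, 371, 52, 476, 60, 136, 57, 61]

n_studies = len(sample_sizes)        # number of included studies
total_n = sum(sample_sizes)          # total participants across studies
smallest = min(sample_sizes)         # smallest study sample
largest = max(sample_sizes)          # largest study sample
average_n = mean(sample_sizes)       # mean sample size per study

print(n_studies, total_n, smallest, largest, round(average_n))
```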

We consistently identified 10 constructs across the 16 studies: real word decoding, pseudoword decoding, phonological awareness, morphological awareness, orthographic knowledge, RAN, working memory, language comprehension, reading fluency, and oral vocabulary knowledge. The majority of these studies utilized norm-referenced assessments of the component skills and therefore, we labeled the constructs according to the test manuals. If norm-referenced assessments were not administered (i.e., typically observed with morphological awareness and orthographic knowledge measures), we labeled the constructs according to the researcher-defined measure description in the methods section. Due to the small number of included studies, we collapsed across some component skill measures to form broader, overarching constructs. For example, our oral vocabulary knowledge predictor encompassed both receptive and expressive oral vocabulary knowledge.

Results

Effect Size Calculations

An effect size for a meta-analysis of correlational data represents the strength of the relationship between two variables (i.e., reading comprehension and a predictor variable) (Borenstein et al., 2009). We used the Pearson product-moment correlation coefficient (r) as our effect size index (Rosenthal, 1991). Many of our studies reported r coefficients between multiple measures of a predictor (i.e., oral vocabulary knowledge) and multiple measures of reading comprehension. We only allowed each study to contribute a single effect size (predictor-outcome relationship) per included predictor. For example, Tighe (2012) contained multiple measures of morphological awareness and oral vocabulary knowledge as well as multiple measures of our outcome variable, reading comprehension. However, this study would only contribute two effect sizes (one per predictor): 1. an aggregated morphological awareness and reading comprehension effect size; and 2. an aggregated oral vocabulary knowledge and reading comprehension effect size. Thus, we averaged the multiple predictor measures and/or multiple reading comprehension measures within each study by applying a Fisher’s Zr transformation to the r coefficients. The conversion of r coefficients into Fisher’s Zr coefficients was computed in Excel utilizing the following formula:
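In its standard form, Fisher's transformation of a Pearson correlation is written as follows. The symbols $r$ and $Z_r$ follow the text above; the equation is the conventional definition, given here on the assumption that it is the one the authors entered in Excel.

$$Z_r = \frac{1}{2}\,\ln\!\left(\frac{1 + r}{1 - r}\right)$$

For example, $r = .50$ gives $Z_r = 0.5 \times \ln(3) \approx 0.549$. The transformed values are what is averaged within a study.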

We calculated a total of 76 Fisher’s Z transformed effect sizes between component reading skills and reading comprehension across the 10 constructs and 16 studies. We also computed the corresponding weighted variance for each effect size in Excel utilizing the following formula:
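The conventional sampling variance of a Fisher-transformed correlation depends only on the sample size. Assuming this is the variance the authors computed, with $n$ denoting the number of participants contributing to a given correlation (a symbol introduced here), it is:

$$V_{Z_r} = \frac{1}{n - 3}$$

For example, a correlation based on $n = 103$ participants has $V_{Z_r} = 0.01$, which corresponds to a standard error of $0.10$ in the $Z_r$ metric.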

Data Analyses

Our effect sizes and corresponding weighted variance estimates in the Fisher’s Z metric were entered into 10 random effects models (one model per predictor). These models were run utilizing the metafor package in R statistical software (R Development Core Team, 2011; Viechtbauer, 2010). Random effects models were preferred because these models allowed for variation in the true effect size among studies (or differences in effect sizes due to variability in sampling characteristics) (Borenstein et al., 2009).
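The pooling step can be sketched in a few lines of code. The example below is an illustration rather than the authors' script: it uses the DerSimonian-Laird estimator of between-study variance because that estimator can be written without any external library, whereas metafor offers several estimators and the text does not say which was used. The function names and the input correlations are hypothetical.

```python
import math


def fisher_z(r):
    """Convert a Pearson correlation r into Fisher's Z."""
    return 0.5 * math.log((1.0 + r) / (1.0 - r))


def z_to_r(z):
    """Convert a Fisher's Z value back into a Pearson correlation."""
    return math.tanh(z)


def random_effects(rs, ns):
    """Pool correlations with a DerSimonian-Laird random effects model.

    rs: one correlation per study; ns: the matching sample sizes.
    Returns the summary r, its 95% CI, tau^2, Q, and I^2 (in percent).
    """
    zs = [fisher_z(r) for r in rs]
    vs = [1.0 / (n - 3) for n in ns]   # sampling variance of each Z
    w = [1.0 / v for v in vs]          # inverse-variance (fixed effect) weights
    sum_w = sum(w)
    z_fixed = sum(wi * zi for wi, zi in zip(w, zs)) / sum_w

    # Cochran's Q and the method-of-moments between-study variance (tau^2)
    q = sum(wi * (zi - z_fixed) ** 2 for wi, zi in zip(w, zs))
    df = len(zs) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random effects weights add tau^2 to every study's sampling variance
    w_re = [1.0 / (v + tau2) for v in vs]
    z_mean = sum(wi * zi for wi, zi in zip(w_re, zs)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {
        "r": z_to_r(z_mean),
        "ci": (z_to_r(z_mean - 1.96 * se), z_to_r(z_mean + 1.96 * se)),
        "tau2": tau2,
        "q": q,
        "i2": i2,
    }


# Made-up correlations and sample sizes, for illustration only
summary = random_effects(rs=[0.45, 0.60, 0.52], ns=[120, 60, 80])
print(summary)
```

All computation happens in the Fisher's Z metric, and only the summary effect and its confidence limits are converted back to r at the end.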

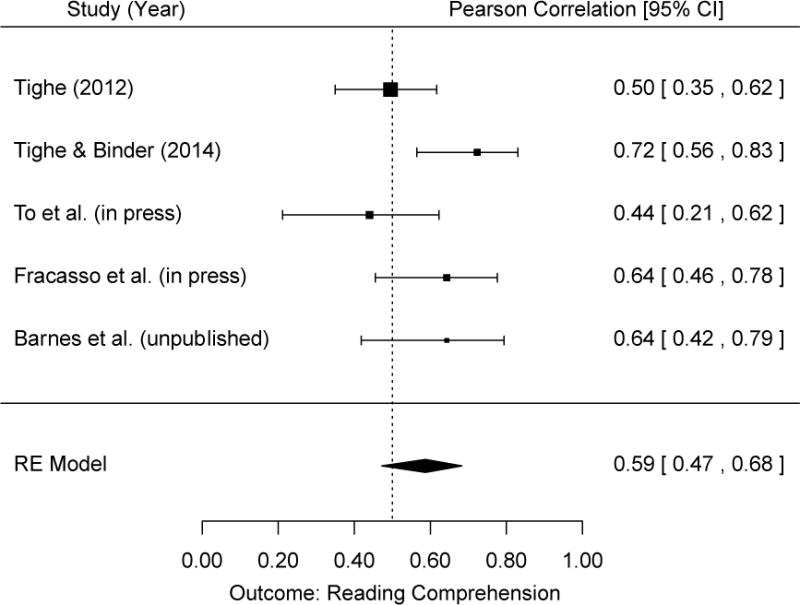

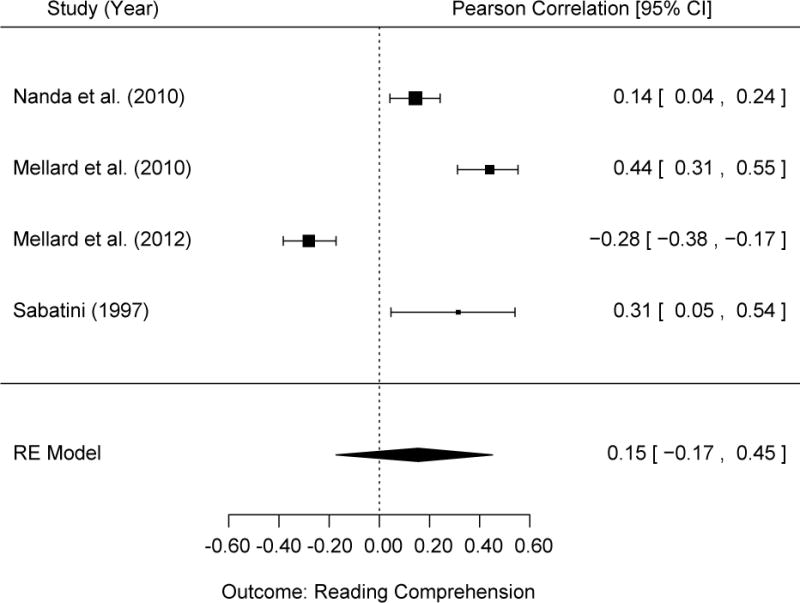

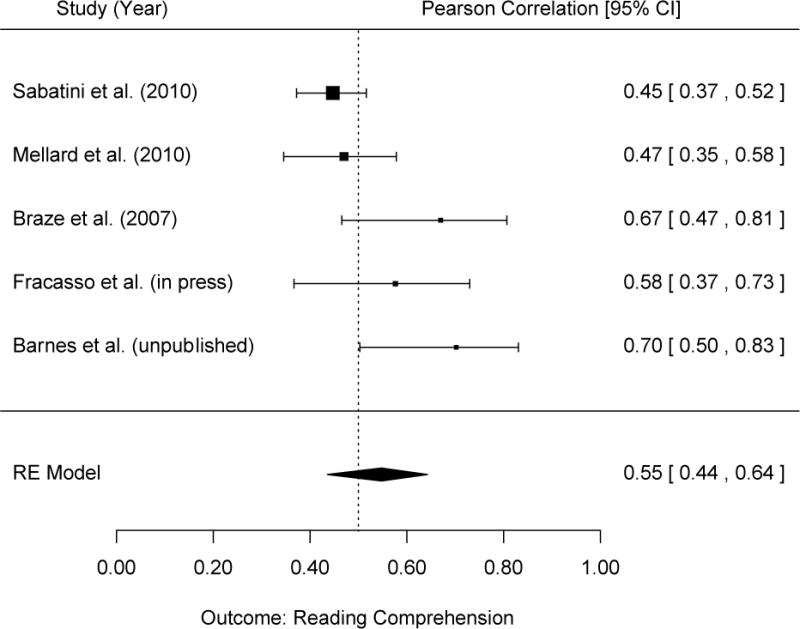

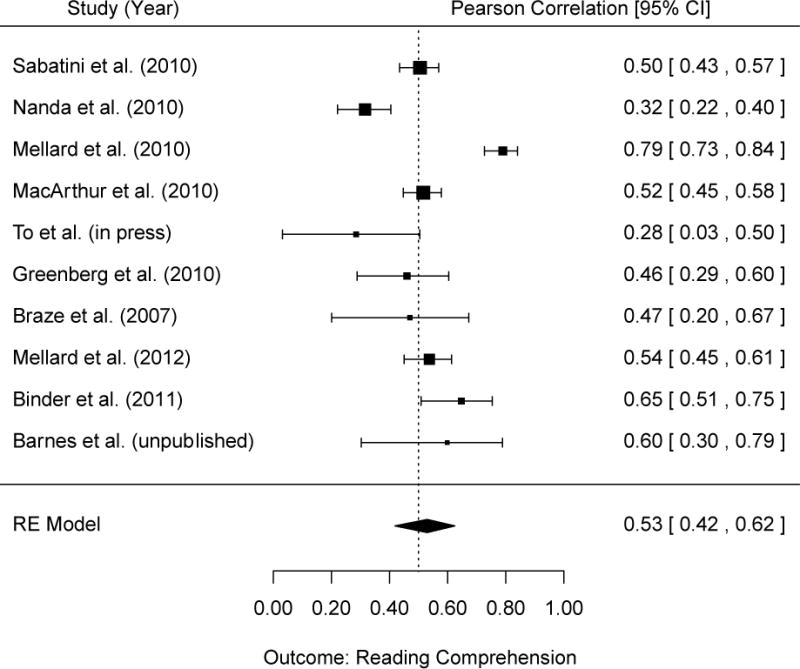

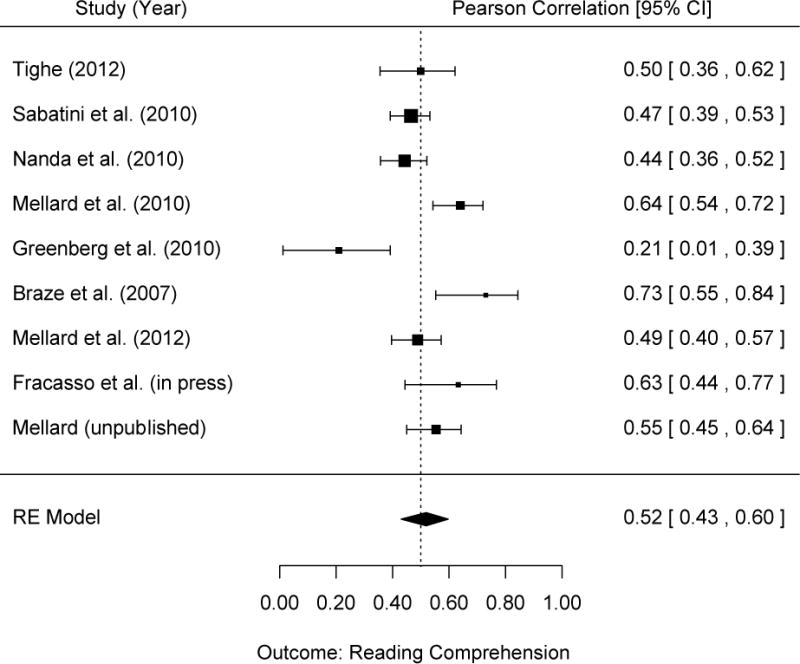

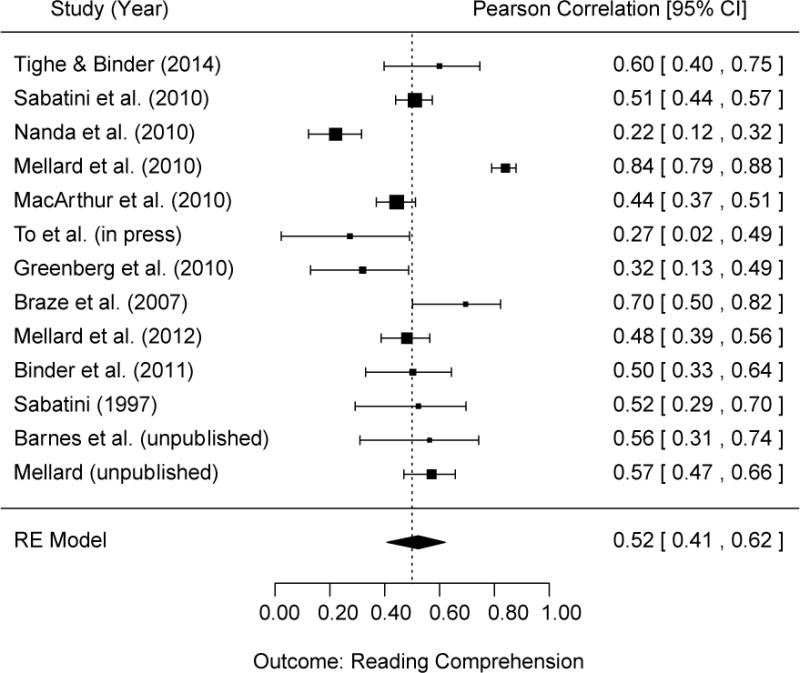

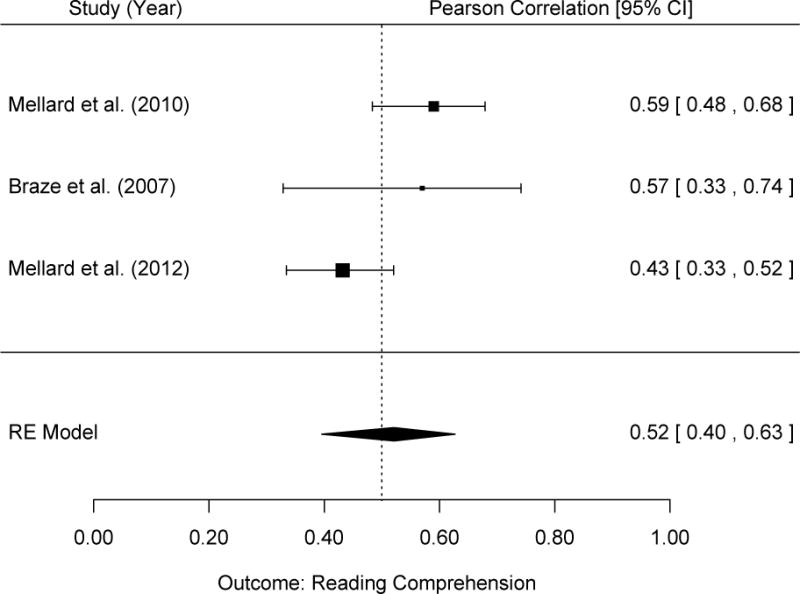

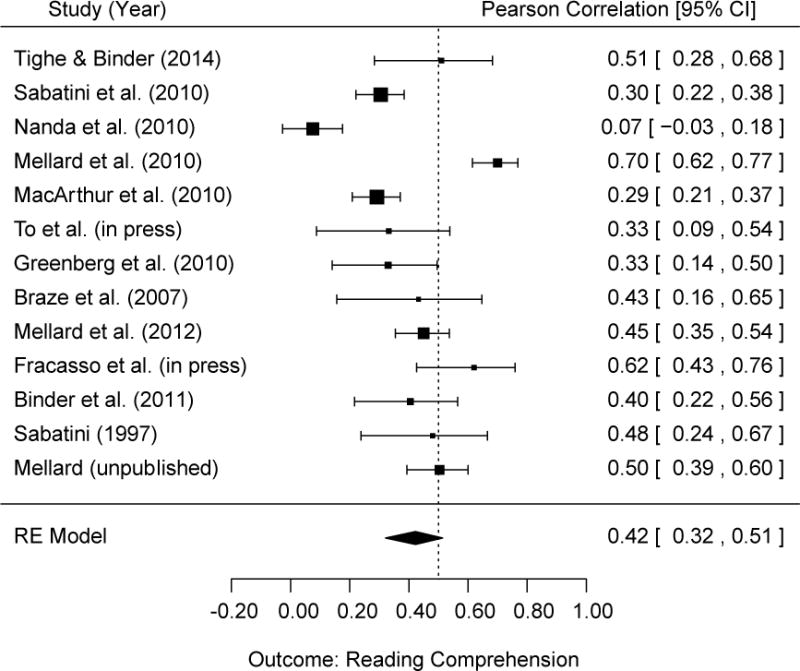

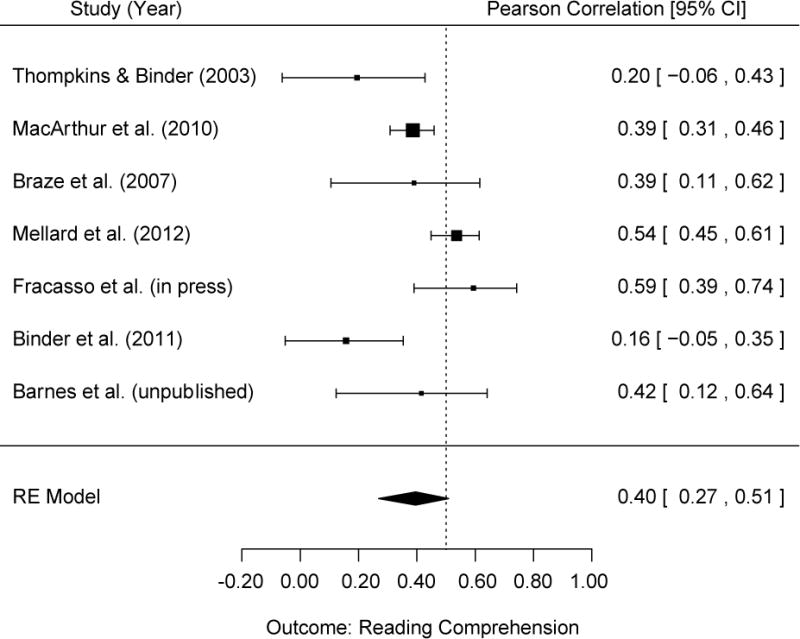

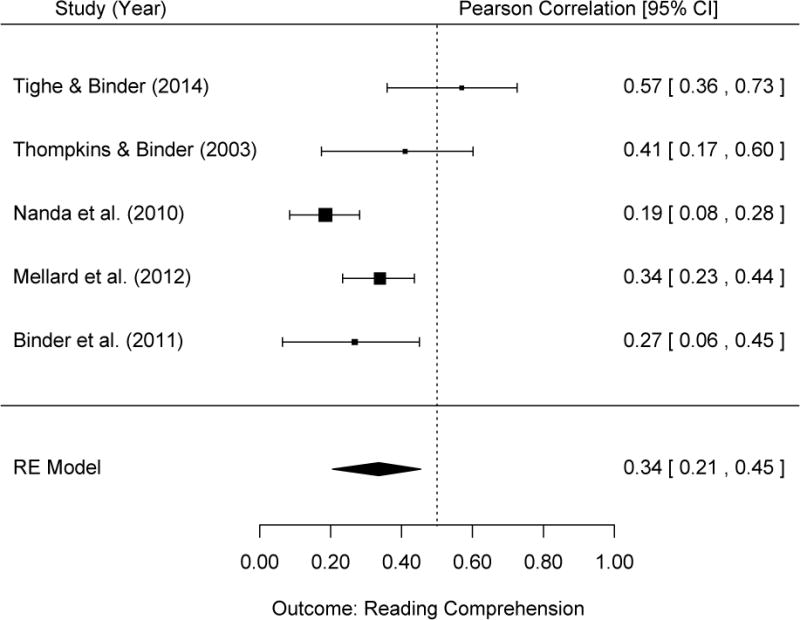

We present our results by predictor-reading comprehension relationship. For each predictor, we report a forest plot of the individual study effect sizes, 95% confidence intervals (CIs), and an overall summary effect (the mean of the effect sizes across studies). It is important to note that although we conducted the analyses using the Fisher’s Z transformed effect sizes and variance estimates, we converted the effect sizes back into Pearson’s r coefficients for easier interpretability in the presentation of our results and forest plots (Borenstein et al., 2009). We relied on the Cohen (1988) standards to determine the strength of our predictor-reading comprehension effect sizes: .50 or greater indicates a strong relationship, .30 to .49 indicates a moderate relationship, and below .30 indicates a weak relationship. Squaring these coefficients gives the percentage of the reading comprehension variance accounted for by each predictor: at least 25% for a strong relationship, between 9% and 25% for a moderate relationship, and less than 9% for a weak relationship. We conclude by providing a table to summarize the 10 average predictor effect sizes, the number of participants per effect size, the number of studies per effect size, and the heterogeneity estimates (Q and I² statistics) per effect size.
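The classification rule and the variance-explained figures above can be expressed as a small helper. This is a sketch with hypothetical function names; taking the absolute value of r, so that negative correlations are classified by magnitude, is an assumption made here and is not stated in the text.

```python
def classify_strength(r):
    """Label a correlation using the cutoffs described above."""
    magnitude = abs(r)  # assumption: the sign is ignored when judging strength
    if magnitude >= 0.50:
        return "strong"
    if magnitude >= 0.30:
        return "moderate"
    return "weak"


def variance_explained(r):
    """Percentage of outcome variance accounted for, i.e. 100 * r squared."""
    return 100.0 * r ** 2


# Cutoff values from the text, plus the RAN summary effect from the abstract
for r in (0.15, 0.30, 0.50):
    print(f"r = {r:.2f}: {classify_strength(r)}, "
          f"{variance_explained(r):.2f}% of variance")
```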

Predictors of Reading Comprehension

The primary research aim addressed in our analyses was to identify important reading-related predictors of the reading comprehension skills of struggling adult readers. To this end, we conducted a meta-analysis to quantify the magnitude of the 10 predictor-reading comprehension relationships across multiple studies. The results are summarized in forest plots by predictor (see Figures 1–10). Forest plots provide an effective visual representation of the consistency and/or variation of the predictor-reading comprehension correlations across the included studies. Each predictor forest plot lists the citations of the included studies, a numerical and graphical representation of the predictor-reading comprehension correlation and 95% CI by study, and the overall summary effect and 95% CI. Individual study correlations are depicted with black boxes, which vary in size depending upon the sample size of the study (i.e., a bigger box represents a larger sample size). Each forest plot also contains a dotted vertical line, referred to as a reference line, against which the strength of the individual study correlations and overall summary effect can be judged. We set the reference line at .50 for all of our predictors (with the exception of RAN). Because reading component skills should be positively correlated with reading comprehension, our x-axes range from 0.00 to 1.00 and .50 serves as the midway point. Additionally, .50 was chosen because this point distinguishes strong relationships from moderate and weak relationships (Cohen, 1988). For RAN, we chose to place the reference line at 0.00 because this was the only predictor that included positive and negative correlation values. We set the x-axis on the RAN plot to range from −.60 to .60. Therefore, 0.00 represents the midway point and distinguishes between positive and negative relationships.

Figure 1.

Forest Plot of the Correlations Between Morphological Awareness and Reading Comprehension

Figure 10.

Forest Plot of the Correlations Between RAN and Reading Comprehension

As can be seen from the forest plots, the overall summary effects ranged from .15 to .59 across the 10 predictors. These results are summarized in descending order of the strength of the predictor-reading comprehension effect size in Table 2. The component skills of morphological awareness, language comprehension, fluency, oral vocabulary knowledge, real word decoding, and working memory exhibited strong relationships with reading comprehension (rs between .52 and .59). Pseudoword decoding, orthographic knowledge, and phonological awareness yielded moderate relationships with reading comprehension (rs between .34 and .42). RAN was the only predictor that exhibited a weak relationship with reading comprehension (r = .15). We examined the effect size 95% CIs to determine significant differences among the strengths of the predictor-reading comprehension relationships. We considered two predictor-reading comprehension effect sizes significantly different (at the p < .05 level) if their 95% CIs did not overlap. Morphological awareness was a significantly stronger predictor of reading comprehension than phonological awareness and RAN (see Table 2).
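The non-overlap criterion described above can be checked mechanically. The Python sketch below applies it to the 95% CI bounds listed in Table 2; the helper name `cis_overlap` is hypothetical, and the check is only an illustration of the stated rule, not a reproduction of the original analysis.

```python
from itertools import combinations

# (lower, upper) 95% CI bounds for the average correlations, taken from Table 2.
table2_cis = {
    "Morphological Awareness": (0.47, 0.68),
    "Language Comprehension": (0.44, 0.64),
    "Fluency": (0.42, 0.62),
    "Oral Vocabulary Knowledge": (0.43, 0.60),
    "Real Word Decoding": (0.41, 0.62),
    "Working Memory": (0.40, 0.63),
    "Pseudoword Decoding": (0.32, 0.51),
    "Orthographic Knowledge": (0.27, 0.51),
    "Phonological Awareness": (0.21, 0.45),
    "RAN": (-0.17, 0.45),
}


def cis_overlap(a, b):
    """True if two (lower, upper) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Pairs of predictors whose intervals do not overlap are flagged as
# significantly different under the rule described in the text.
non_overlapping = [
    (first, second)
    for first, second in combinations(table2_cis, 2)
    if not cis_overlap(table2_cis[first], table2_cis[second])
]
for pair in non_overlapping:
    print(pair)
```

Only the morphological awareness interval (.47 to .68) lies entirely above the upper bounds for phonological awareness and RAN (both .45), which is why those are the only two pairs flagged.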

Table 2.

Average Correlations for Predictor-Reading Comprehension Relationships in Struggling Adult Readers

| Predictor | Avg. r | 95% CI Upper | 95% CI Lower | N Studies | N Participants | Q | I² |
|---|---|---|---|---|---|---|---|
| Morphological Awareness | .59 | .68 | .47 | 5 | 336 | 7.95 | 50.26% |
| Language Comprehension | .55 | .64 | .44 | 5 | 790 | 9.67* | 64.20% |
| Fluency | .53 | .62 | .42 | 10 | 2,124 | 73.85** | 89.42% |
| Oral Vocabulary Knowledge | .52 | .60 | .43 | 9 | 1,836 | 30.26*** | 81.11% |
| Real Word Decoding | .52 | .62 | .41 | 13 | 2,440 | 134.33*** | 91.37% |
| Working Memory | .52 | .63 | .40 | 3 | 514 | 5.43 | 61.32% |
| Pseudoword Decoding | .42 | .51 | .32 | 13 | 2,461 | 98.86*** | 86.66% |
| Orthographic Knowledge | .40 | .51 | .27 | 7 | 1,066 | 21.11** | 74.65% |
| Phonological Awareness | .34 | .45 | .21 | 5 | 872 | 12.89* | 70.80% |
| RAN | .15 | .45 | −.17 | 4 | 893 | 70.65*** | 95.45% |

Note: *p < .05. **p < .01. ***p < .001. Avg. r = Average Pearson Correlation Coefficient. CI = Confidence Interval.

It is important to note that there was considerable variability in the number of included studies (Ns = 3 to 13) and the number of adult participants (Ns = 336 to 2,461) across the predictor-reading comprehension effect sizes (see Table 2). For example, morphological awareness exhibited the strongest relationship with reading comprehension (r = .59); however, this predictor-comprehension effect size included the fewest participants (N = 336) and was averaged across only five studies. Conversely, other strong predictor effect sizes, such as fluency and oral vocabulary knowledge (rs = .53 and .52, respectively), contained more participants (Ns = 2,124 and 1,836, respectively) and were averaged across more studies (Ns = 11 and 9, respectively). Thus, the fluency and oral vocabulary effect size estimates may be more stable and more representative of the actual relationship of these variables to reading comprehension in adult literacy students.

Finally, we calculated Q statistics and corresponding p-values as well as I² statistics as our indexes of heterogeneity in the predictor effect sizes. Borenstein et al. (2009) recommend that researchers compute multiple measures of the heterogeneity of effect sizes. In particular, researchers should consider one statistic for the presence or absence of heterogeneity (i.e., Q statistic and p-value) and one statistic for the magnitude of the heterogeneity (i.e., I² statistic). Our Q statistic allowed us to statistically determine the presence or absence of heterogeneity among our predictor-reading comprehension effect sizes. A statistically significant Q statistic signifies the presence of heterogeneity among the effect sizes. We found statistically significant Q statistics for all of our predictors (ps < .05) with the exception of morphological awareness and working memory (see Table 2). However, Q statistics are particularly underpowered when the number of included studies is small (Borenstein et al., 2009; Higgins, Thompson, Deeks, & Altman, 2003). Therefore, based solely on the Q statistic, we cannot conclusively determine that the morphological awareness and working memory effect sizes are homogeneous because the number of included studies for these predictors was smaller than for the majority of our other predictors.

To address the issue of a minimal number of included studies and to quantify the amount of heterogeneity present in our effect sizes, we also report I² statistics (see Table 2). I² is an index of the degree of effect size inconsistency and provides a proportion of observed effect size variation due to heterogeneity versus observed effect size variation due to random error and/or chance (Borenstein et al., 2009; Higgins et al., 2003). The I² scale ranges from 0% (little observed heterogeneity, primarily chance variation) to 100% (primarily observed heterogeneity, little chance variation). Higgins et al. (2003) proposed the following guidelines to interpret the I² values based on the amount of observed variation due to heterogeneity: 25% is low, 50% is moderate, and 75% is high. Based on these guidelines, morphological awareness (I² = 50.26%), working memory (I² = 61.32%), and language comprehension (I² = 64.20%) were the only predictors that fell in the moderate range. This indicates that roughly half to two-thirds of the observed variation in these predictor-reading comprehension effect sizes was due to actual heterogeneity and the rest was based on chance. The remainder of our predictor-comprehension effect sizes exhibited high degrees of variation due to heterogeneity (I² statistics ranged from 70.80% to 95.45%). These results demonstrate that when utilizing both indices of heterogeneity, the morphological awareness and working memory effect sizes emerge as less heterogeneous than the other predictor effect sizes. However, it is important to note that these heterogeneity estimates do not provide the cause of the heterogeneity. Thus, we still have a large degree of observed variation across all of our predictor-reading comprehension effect sizes that could be accounted for by prospective moderators. Given the limited number of total included studies and inherent heterogeneity of the ABE population, we were unable to conduct follow-up analyses to test for the presence of moderators. In the limitations section of the discussion, we propose potential moderators to consider when more studies on the component skills of adult literacy students are conducted and published.
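For readers who want to see how the two heterogeneity indices relate, the Python sketch below computes Cochran's Q and the Q-based form of I² given by Higgins et al. (2003), I² = 100% × (Q − df) / Q. The function name and the example effect sizes are hypothetical. Meta-analysis software may compute I² differently (for example, from the estimated between-study variance), so this sketch will not necessarily reproduce the values in Table 2.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, its degrees of freedom, and I^2 as a percentage.

    effects: study effect sizes (e.g., Fisher's Z values).
    variances: their sampling variances (e.g., 1 / (n - 3) for Fisher's Z).
    """
    weights = [1.0 / v for v in variances]     # inverse-variance weights
    mean = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2 is floored at 0 when Q is smaller than its degrees of freedom.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return q, df, i2


# Hypothetical Fisher's Z effect sizes and sample sizes (not study data).
example_effects = [0.73, 0.52, 0.62, 0.87, 0.45]
example_ns = [60, 120, 85, 45, 200]
example_variances = [1.0 / (n - 3) for n in example_ns]
q_stat, q_df, i2_pct = heterogeneity(example_effects, example_variances)
print(f"Q = {q_stat:.2f} on {q_df} df, I^2 = {i2_pct:.1f}%")
```

Q grows with the number of studies while I² is scaled by Q itself, which is why the two indices answer different questions: whether heterogeneity is detectable, and how much of the observed variation it represents.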

Discussion

The purpose of the current meta-analysis was to assess the direction and magnitude of the relationships of 10 component skills to reading comprehension across 16 studies of struggling adult readers. The results indicated that morphological awareness, language comprehension, fluency, oral vocabulary knowledge, real word decoding, and working memory yielded strong relationships with reading comprehension (average rs ≥ .50). The component skills of pseudoword decoding, orthographic knowledge, and phonological awareness yielded moderate relationships with reading comprehension (average rs ≥ .30 and < .50). RAN was the only component skill that was weakly related to reading comprehension (r = .15). Morphological awareness emerged as a significantly stronger predictor of reading comprehension than phonological awareness and RAN. We conclude by: 1. relating the findings to past adult literacy research on the SVR and metalinguistic skills; 2. comparing the findings with meta-analyses on component reading skills in children; 3. discussing the implications of these findings for ABE researchers and educators; and 4. addressing the limitations and future directions.

The Simple View of Reading

Consistent with the SVR model and past research that employed an SVR framework with adult literacy students (Braze et al., 2007; Sabatini et al., 2010), we found that decoding (real word and pseudoword) and language comprehension emerged as important component reading skills. Real word decoding and pseudoword decoding were the most consistently measured skills across our included studies (Ns = 13). Interestingly, we found a difference in the magnitude of the real word decoding-reading comprehension relationship (r = .52) compared to the magnitude of the pseudoword decoding-reading comprehension relationship (r = .42). Although this was not a statistically significant difference, Cohen’s (1988) standards suggest that real word decoding displays a strong relationship with reading comprehension whereas pseudoword decoding displays a moderate relationship with reading comprehension. Thus, there may exist a difference in the importance of the types of decoding to reading comprehension in this population.

The other two predictors examined in the past SVR research (fluency and oral vocabulary knowledge) yielded strong relationships with reading comprehension (rs = .53 and .52, respectively). Fluency and oral vocabulary knowledge were also among the most frequently included component reading skills across our studies (Ns = 11 and 9, respectively). Unfortunately, we did not have enough power to differentiate among types of oral vocabulary knowledge (i.e., receptive oral vocabulary versus expressive oral vocabulary). We were also unable to include a separate reading vocabulary knowledge-reading comprehension effect size estimate because this skill was only measured in two studies (MacArthur et al., 2010; Mellard et al., 2012). Similarly, we did not have enough power to differentiate among measures of fluency (i.e., oral reading fluency versus silent reading fluency). As more research on the component reading skills of adult literacy students becomes available, future syntheses should attempt to separate these constructs to provide a finer-grained analysis of the relationships of these skills to reading comprehension.

Metalinguistic Skills

The metalinguistic skills of morphological awareness, phonological awareness, and orthographic knowledge were another set of predictors that were commonly estimated in adult literacy students. Morphological awareness had the strongest relationship with reading comprehension (r = .59) and was significantly more related to reading comprehension than phonological awareness (r = .34) and RAN (r = .15). This finding is consistent with Tighe and Binder (2014), who reported that morphological awareness accounted for an additional 37% of the reading comprehension variance after controlling for phonological awareness. However, we did not find significant differences between morphological awareness and orthographic knowledge or between phonological awareness and orthographic knowledge. Although these results begin to identify important predictors, the results should be interpreted cautiously because of the small number of included studies (Ns = 5 to 7) and participants (Ns = 336 to 1,066) across the three metalinguistic skill effect sizes.

Meta-Analyses of Component Skills in the Children’s Literature

Our findings both corroborate and deviate from past results of meta-analyses on component reading skills in the children’s literature. The National Early Literacy Panel (NELP) conducted a large-scale meta-analysis, which included a correlational piece on identifying important predictors of reading comprehension in early childhood and kindergarten (NELP, 2008). This report included 18 predictor-reading comprehension summary effects (the majority of which were emergent literacy skills); however, there were several overlapping constructs with those included in the current meta-analysis. First, the panel reported that phonological awareness exhibited a moderate relationship with reading comprehension (r = .44) (NELP, 2008). This is consistent with the current meta-analysis that also reported a moderate relationship of phonological awareness to reading comprehension (r = .34). The participants included in our phonological awareness effect size hovered around a fourth to fifth GLE. This suggests that the importance of phonological awareness to reading comprehension is consistently moderate across preschool to fifth grade levels.

Second, the NELP panel also differentiated between non-word decoding and real word decoding; however, they reported that both constructs displayed moderate relationships with reading comprehension (rs = .41, .40, respectively) (NELP, 2008). We found a distinction between the two constructs such that real word decoding exhibited a strong relationship with reading comprehension (r = .52) and pseudoword decoding exhibited a moderate relationship with reading comprehension (r = .42). This distinction has also been examined in two recent meta-analyses (Florit & Cain, 2011; García & Cain, 2014). Similar to the NELP data, Florit and Cain (2011) reported that for English-speaking preschoolers and kindergartners there was no differentiation in the magnitude of the relationships of real word and non-word decoding to reading comprehension (rs = .80, .83, respectively). However, in English-speaking first through fourth graders, real word decoding exhibited a stronger relationship with reading comprehension (r = .78) than non-word decoding did (r = .61). García and Cain (2014) reported that the type of decoding assessment administered significantly moderated the decoding-reading comprehension relationship, irrespective of participant age. These researchers reported that real word decoding accuracy yielded a significantly stronger relationship with reading comprehension than pseudoword decoding accuracy and lexical decision measures across the lifespan (ages five to 53). These findings suggest the need to include multiple decoding measures (real word and pseudoword decoding) to fully assess the construct of decoding in predicting reading comprehension. Further, the relative importance of pseudoword decoding to reading comprehension may decrease as grade level increases.

Third, the NELP panel examined RAN (separated by letter and digit naming as well as object and color naming) and reported positive, moderate relationships with reading comprehension (rs = .43, .42) (NELP, 2008). However, the current meta-analysis found a relatively weak relationship between RAN and reading comprehension (r = .15). It is hard to conclusively interpret the findings from the NELP report and our meta-analysis because both studies had limited RAN data (NELP had three and six studies; ours had four studies). Thus, future research needs to explore the relationship between RAN and reading comprehension in struggling adult readers to assess if the magnitude of the relationship changes as a function of grade level.

Finally, the NELP panel included supplementary analyses, which subdivided an oral language predictor into further component skills (NELP, 2008). Relevant to the current meta-analysis, the additional NELP analyses differentiated among three types of vocabulary knowledge-reading comprehension relationships (definitional [r = .45], receptive [r = .25], and expressive [r = .34]). Our oral vocabulary knowledge-reading comprehension effect size was considerably larger (r = .52); however, our vocabulary predictor encompassed both receptive and expressive vocabulary knowledge. The findings from the NELP report suggest a differential relationship among types of vocabulary knowledge to reading comprehension in beginning readers. This finding should be explored as more research becomes available on the different types of vocabulary knowledge in adult literacy students.

Overall, it is not surprising that some of our results diverge from the findings of meta-analyses conducted with children. Previous research has reported differences in some component reading skills between adult literacy students and children matched on reading-achievement levels. In particular, adult literacy students tend to have weaker phonological and decoding skills and more advanced orthographic knowledge relative to children (Greenberg et al., 1997; 2002; Thompkins & Binder, 2003). Additionally, Nanda et al. (2010) were unable to successfully fit CFAs of three theoretical child-based reading models to an ABE sample. These child-based reading models encompassed a large battery of constructs (phonological awareness, decoding, RAN, oral vocabulary knowledge, fluency, and comprehension), and the researchers speculated that the low inter-correlations observed among the measures caused the poor model fit. Thus, our findings are consistent with previous research indicating that adults and children may differ on component reading skills. However, future research needs to pinpoint which specific component reading skills diverge across the adult and child samples.

Implications for ABE Researchers and Educators

Our findings have important implications for ABE researchers and educators. This meta-analysis provides the first systematic synthesis of the currently expanding literature on the reading-related skills of adult literacy students. We identified six component skills that had strong relationships with reading comprehension (morphological awareness, language comprehension, fluency, oral vocabulary knowledge, real word decoding, and working memory). We also identified three component skills that had moderate relationships with reading comprehension (pseudoword decoding, orthographic knowledge, and phonological awareness). This suggests that these are all important skills underlying the reading comprehension abilities of struggling adult readers. Thus, these skills should be considered during instruction and targeted in intervention and assessment research. Currently, ABE programs rely on two global measures of reading (Test of Adult Basic Education [TABE] and Comprehensive Adult Student Assessment System [CASAS]) to assess GLEs and to assign students to appropriate classrooms. These reading measures do not assess component skills (Greenberg, 2007) and therefore provide very limited reading skill profiles of adult learners. Our results highlight important reading component skills that should be considered when evaluating and instructing adult learners to gain a fuller representation of the reading skills of this population.

For researchers, our findings also highlight the shortcomings in the current adult component skill research. Many of the higher-order reading skills that are consistently found in the children’s literature are either minimally studied (i.e., working memory) or entirely absent from the adult literacy research. For example, comprehension monitoring (Cain, Oakhill, & Bryant, 2004; Oakhill & Cain, 2012), verbal reasoning (NELP, 2008; Schatschneider, Harrell, & Buck, 2007; Tighe & Schatschneider, 2014), nonverbal reasoning (Schatschneider et al., 2007; Tighe & Schatschneider, 2014), and working memory (Cain et al., 2004; Seigneuric & Ehrlich, 2005) have all been identified as important predictors of reading comprehension across multiple grade levels in the children’s literature. Thus, future research needs to examine the relationships of these component skills to reading comprehension in an adult literacy student sample.

Limitations and Future Directions

There are a few limitations that are worth noting. First, the relative dearth of rigorous research, particularly peer-reviewed articles, limited the scope of our meta-analysis. We were unable to conduct moderator analyses to account for the large degree of heterogeneity present in our predictor-reading comprehension effect sizes. ABE learners constitute a heterogeneous population on multiple facets: age, previous educational background, incidence of learning disabilities, language status (native versus non-native English speakers), and race and ethnic background (Lesgold & Welch-Ross, 2012). Moreover, the studies in our analyses administered various predictor assessments (experimental and norm-referenced) and included participants at multiple GLEs (spanning from kindergarten through above a twelfth grade, nine month level). All of these reader and study characteristics could account for the substantial variability in our predictor-reading comprehension effect sizes. As the research on the reading skills of adult literacy students continues to evolve, future research should investigate these potential moderators of the predictor-reading comprehension relationships.

Second, we were only able to identify 10 predictors that were consistently measured across our 16 studies. Many of these predictors could be subdivided into additional constructs (i.e., fluency, language comprehension). Subdividing these constructs and estimating the magnitude of the relationships with reading comprehension would provide a finer-grained analysis. Furthermore, as previously mentioned, many higher-order cognitive predictors of reading comprehension were not considered in struggling adult readers. Thus, future research needs to consider assessing additional reading subskills in this population.

Third, our predictor-reading comprehension effect sizes were not corrected for measure reliability estimates. Measure reliability could have affected the strength of our predictor-reading comprehension effect sizes; in particular, unreliable measures can lower effect sizes. Many of our included studies did not report reliability estimates on experimental measures. In addition, most of the studies included participants from a range of ages and grade levels, which makes it difficult to determine appropriate reliability estimates on norm-referenced assessments. Finally, some of the included measures that are commonly administered to children (e.g., CTOPP and TOWRE) are only normed on individuals up to age 24. It is not clear that measures designed for children are reliable and valid for use with struggling adult readers (see Greenberg et al., 2009; Nanda, Greenberg, & Morris, in press; Pae, Greenberg, & Williams, 2012). More research is needed to understand appropriate, reliable measures to utilize in this population.
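To make concrete how unreliable measures can lower effect sizes, the Python sketch below applies the classical correction for attenuation, in which an observed correlation is divided by the square root of the product of the two measures' reliabilities. This correction was not applied in the present meta-analysis; the function name and the reliability values are hypothetical and serve only as an illustration.

```python
import math


def correct_for_attenuation(r_observed, reliability_x, reliability_y):
    """Classical disattenuation: r_observed / sqrt(rel_x * rel_y).

    Reliabilities must lie in (0, 1]. With noisy inputs the corrected
    value can exceed 1, so it should be interpreted with caution.
    """
    for reliability in (reliability_x, reliability_y):
        if not 0.0 < reliability <= 1.0:
            raise ValueError("reliabilities must be in the interval (0, 1]")
    return r_observed / math.sqrt(reliability_x * reliability_y)


observed_r = 0.42                  # e.g., the pseudoword decoding average from Table 2
predictor_reliability = 0.80       # hypothetical value, not reported in the source
comprehension_reliability = 0.80   # hypothetical value, not reported in the source
corrected_r = correct_for_attenuation(
    observed_r, predictor_reliability, comprehension_reliability
)
print(f"observed r = {observed_r:.2f}, corrected r = {corrected_r:.3f}")
```

Under these assumed reliabilities the corrected correlation is larger than the observed one, which illustrates why uncorrected effect sizes can be read as conservative estimates when measurement error is present.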

Finally, the included studies administered a multitude of different reading comprehension measures. Given the relatively small number of included studies, it was not possible to investigate predictor-reading comprehension effect sizes by different reading comprehension measures. However, it is important to note that these included measures varied along several dimensions. For example, there were sentence-level comprehension tasks (e.g., TOSREC), passage-level comprehension tasks (e.g., GORT) and tasks that included a functional literacy component (e.g., TABE). In addition, it is important to consider that some of the predictor skill measures may overlap with content covered on some of the reading comprehension measures. For example, the TOSREC is a timed measure that assesses silent reading fluency as well as reading comprehension skills. As more research on struggling adult readers becomes available, future research should explore the possibility of differential relationships of component skills with varying types of reading comprehension assessments (see Cutting & Scarborough, 2006).

Conclusion

This meta-analysis provided the first systematic review of the direction and strength of 10 predictor-reading comprehension relationships across 16 studies and 2,707 struggling adult readers. Six of the component skills exhibited strong relationships with reading comprehension and an additional three component skills exhibited moderate relationships with reading comprehension. RAN emerged as the only predictor that was weakly related to reading comprehension. These findings help to pinpoint important component skills in this population; however, the results should be interpreted cautiously because of the limited number of included studies and the large degree of heterogeneity in the predictor-reading comprehension effect sizes. Future research should explore additional component skills and consider reader demographics and study characteristics as moderators of the strength of the various predictor-reading comprehension effect sizes.

Figure 2.

Forest Plot of the Correlations Between Language Comprehension and Reading Comprehension

Figure 3.

Forest Plot of the Correlations Between Fluency and Reading Comprehension

Figure 4.

Forest Plot of the Correlations Between Oral Vocabulary Knowledge and Reading Comprehension

Figure 5.

Forest Plot of the Correlations Between Real Word Decoding and Reading Comprehension

Figure 6.

Forest Plot of the Correlations Between Working Memory and Reading Comprehension

Figure 7.

Forest Plot of the Correlations Between Pseudoword Decoding and Reading Comprehension

Figure 8.

Forest Plot of the Correlations Between Orthographic Knowledge and Reading Comprehension

Figure 9.

Forest Plot of the Correlations Between Phonological Awareness and Reading Comprehension

Acknowledgments

We would like to extend our sincere gratitude and appreciation to Dr. Katherine Binder, Dr. Daphne Greenberg, Dr. Daryl Mellard, and Dr. John Sabatini for providing additional data for our analyses and lending their expertise in the field of adult literacy. We would also like to acknowledge and thank Emily Diehm for her help coding articles.

Footnotes

Job Corps participants were included because these programs have an explicit educational component in addition to vocational training. These programs serve adults, defined as ages 16 to 24, who need supplementary instruction to earn a GED certificate (Mellard, Woods, & Md Desa, 2012). In addition, the two included studies (Mellard, unpublished; Mellard et al., 2012) provided data on the National Reporting System (NRS) educational functional levels, which are based on adult literacy students.

Although it has been documented that adult prison inmates in the United States exhibit an increased likelihood of dropping out of school and not acquiring basic literacy skills (Greenberg, Dunleavy, & Kutner, 2007; Shippen, Houchins, Crites, Derzis, & Patterson, 2010), we chose to exclude this population for a number of reasons. First, most of the research on the reading skills of prison inmates is reported as a homogeneous sample (and not all inmates have low literacy skills). Second, unlike our struggling adult readers, most prison inmates are not receiving literacy instruction and/or there is no way to evaluate how prison literacy instruction compares to formal instruction in adult education programs. Finally, prison inmates are disproportionately male (estimates of up to 93%; Shippen et al., 2010), which is inconsistent with the demographics of struggling adult readers enrolled in national adult education programs (approximately equal numbers of males and females; Lesgold & Welch-Ross, 2012).

Greenberg et al. (2010) was the only included intervention article because it contained correlational pre-test data.

The Sabatini (1997) dissertation reported the correlations between reading comprehension and predictor measures for the adult literacy sample. Thus, we included the dissertation and eliminated the corresponding Sabatini (2002) article because the article combined adult literacy students and college students in the reported correlations.

Nanda et al. (2010) was included instead of Greenberg et al. (2009) because the Nanda et al. (2010) article had a larger sample size and reported on a larger battery of predictor measures.

Although Herman et al. (2013) included a larger sample size (N = 293) than the five separate studies that the article reported on, this article had fewer predictors, no unique predictors, and aggregated the data across several years (2009–2013). Thus, we decided to include the four separate studies from Herman et al. (2013) that met our inclusion criteria: Binder et al. (2011), Fracasso et al. (in press), Tighe and Binder (2014), and To et al. (in press).

Although Mellard and Fall (2012) had a larger sample size (N = 312) than Mellard et al. (2010) (N = 174), the Mellard and Fall (2012) article reported correlations between reading comprehension and a smaller set of predictor composite variables. Thus, we included Mellard et al. (2010) because this gave us individual correlations between predictor and reading comprehension measures and additional constructs.

Contributor Information

Elizabeth L. Tighe, Florida State University

Christopher Schatschneider, Florida State University

References

*Studies included in meta-analysis.

- Aaron PG, Joshi M, Williams KA. Not all reading disabilities are alike. Journal of Learning Disabilities. 1999;32(2):120–137. doi: 10.1177/002221949903200203. [DOI] [PubMed] [Google Scholar]

- Adlof SM, Catts HW, Little TD. Should the simple view of reading include a fluency component? Reading and Writing: An Interdisciplinary Journal. 2006;19:933–958. [Google Scholar]

- *.Barnes AE, Kim Y-S, Tighe EL. Reading characteristics of Adult Basic Education participants. Unpublished raw data 2013 [Google Scholar]

- *.Binder KS, Snyder MA, Ardoin SP, Morris RK. Dynamic indicators of basic early literacy skills: An effective tool to assess adult literacy students? Adult Basic Education and Literacy Journal. 2011;5(3):150–160. [Google Scholar]

- Binder KS, Tighe E, Jiang Y, Kaftanski K, Qi C, Ardoin SP. Reading expressively and understanding thoroughly: An examination of prosody in adults with low literacy skills. Reading and Writing: An Interdisciplinary Journal. 2013;26(5):665–680. doi: 10.1007/s11145-012-9382-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Introduction to meta-analysis. West Sussex, UK: John Wiley & Sons, Ltd; 2009. [Google Scholar]

- *Braze D, Tabor W, Shankweiler DP, Mencl WE. Speaking up for vocabulary: Reading skill differences in young adults. Journal of Learning Disabilities. 2007;40(3):226–243. doi: 10.1177/00222194070400030401.

- Cain K, Oakhill J, editors. Children’s comprehension problems in oral and written language: A cognitive perspective. New York, NY: The Guilford Press; 2007.

- Cain K, Oakhill J, Bryant P. Children’s reading comprehension ability: Concurrent prediction by working memory, verbal ability, and component skills. Journal of Educational Psychology. 2004;96(1):31–42.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 1988.

- Cutting LE, Scarborough HS. Prediction of reading comprehension: Relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading. 2006;10(3):277–299.

- Florit E, Cain K. The simple view of reading: Is it valid for different types of alphabetic orthographies? Educational Psychology Review. 2011;23:553–576.

- *Fracasso LE, Bangs K, Binder KS. The contributions of phonological and morphological awareness to literacy skills in the Adult Basic Education population. Journal of Learning Disabilities. In press. doi: 10.1177/0022219414538513.

- García JR, Cain K. Decoding and reading comprehension: A meta-analysis to identify which reader and assessment characteristics influence the strength of the relationship in English. Review of Educational Research. 2014;84(1):74–111.

- Goff D, Pratt C, Ong B. The relations between children’s reading comprehension, working memory, language skills and components of reading decoding in a normal sample. Reading and Writing: An Interdisciplinary Journal. 2005;18(7–9):583–616.

- Gough PB, Tunmer WE. Decoding, reading, and reading disability. Remedial and Special Education (RASE). 1986;7(1):6–10.

- Greenberg D. Tales from the field: The struggles and challenges of conducting ethical and quality research in the field of adult literacy. In: Belzer A, editor. Toward defining and improving quality in adult basic education. Mahwah, NJ: Erlbaum; 2007. pp. 53–67.

- Greenberg D, Ehri LC, Perin D. Are word-reading processes the same or different in adult literacy students and third-fifth graders matched for reading level? Journal of Educational Psychology. 1997;89:262–275.

- Greenberg D, Ehri LC, Perin D. Do adult literacy students make the same word-reading and spelling errors as children matched for word-reading age? Scientific Studies of Reading. 2002;6:221–243.

- *Greenberg D, Levy SR, Rasher S, Kim Y, Carter SD, Berbaum ML. Testing Adult Basic Education students for reading ability and progress: How many tests to administer? Adult Basic Education and Literacy Journal. 2010;4(2):96–103.

- Greenberg D, Pae HK, Morris RD, Calhoon MB, Nanda AO. Measuring adult literacy students’ reading skills using the Gray Oral Reading Test. Annals of Dyslexia. 2009;59:133–149. doi: 10.1007/s11881-009-0027-8.

- Greenberg E, Dunleavy E, Kutner M. Literacy behind bars: Results from the 2003 National Assessment of Adult Literacy Prison Survey (NCES 2007–473). Washington, DC: National Center for Education Statistics, U.S. Department of Education; 2007. Retrieved August 1, 2014, from http://nces.ed.gov/pubs2007/2007473.pdf

- Hall R, Greenberg D, Laures-Gore J, Pae HK. The relationship between expressive vocabulary knowledge and reading skills for adult struggling readers. Journal of Research in Reading. 2014;37(1):87–100. doi: 10.1111/j.1467-9817.2012.01537.x.

- Herman J, Gilbert-Cote N, Reilly L, Binder KS. Literacy skill differences between adult native English and native Spanish speakers. Journal of Research and Practice for Adult Literacy, Secondary, and Basic Education. 2013;2(3):142–155.

- Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. British Medical Journal. 2003;327(7414):557–560. doi: 10.1136/bmj.327.7414.557.

- Hoover WA, Gough PB. The simple view of reading. Reading and Writing: An Interdisciplinary Journal. 1990;2:127–160.

- Lesgold AM, Welch-Ross M, editors. Improving adult literacy instruction: Options for practice and research. Washington, DC: National Academies Press; 2012.

- *MacArthur CA, Konold TR, Glutting JJ, Alamprese JA. Reading component skills of learners in adult basic education. Journal of Learning Disabilities. 2010;43(2):108–121. doi: 10.1177/0022219409359342.

- *Mellard. Reading skills of Job Corps participants. Unpublished raw data; 2013.

- Mellard DF, Fall E. Component model of reading comprehension for Adult Education participants. Journal of Learning Disabilities. 2012;45(1):10–23.

- *Mellard DF, Fall E, Woods KL. A path analysis of reading comprehension for adults with low literacy. Journal of Learning Disabilities. 2010;43(2):154–165. doi: 10.1177/0022219409359345.

- *Mellard D, Woods K, Md Desa Z. An oral reading fluency assessment for young adult career and technical education students. Learning Disabilities Research & Practice. 2012;27(3):125–135.

- *Nanda A, Greenberg D, Morris R. Modeling child-based theoretical reading constructs with struggling adult readers. Journal of Learning Disabilities. 2010;43(2):139–153. doi: 10.1177/0022219409359344.

- Nanda AO, Greenberg D, Morris R. Reliability and validity of the CTOPP Elision and Blending Words subtests for struggling adult readers. Reading and Writing: An Interdisciplinary Journal. In press.

- National Early Literacy Panel. Developing early literacy: A scientific synthesis of early literacy development and implications for intervention. Washington, DC: National Institute for Literacy; 2008.

- Oakhill JV, Cain K. The precursors of reading ability in young readers: Evidence from a four-year longitudinal study. Scientific Studies of Reading. 2012;16(2):91–121.

- Pae HK, Greenberg D, Williams RS. An analysis of differential response patterns on the Peabody Picture Vocabulary Test-IIIB in struggling adult readers and third-grade children. Reading and Writing: An Interdisciplinary Journal. 2012;25(6):1239–1258.

- R Development Core Team. R: A language and environment for statistical computing [Computer software]. Vienna, Austria: R Foundation for Statistical Computing; 2011. Retrieved from http://www.R-project.org

- Rosenthal R. Meta-analytic procedures for social research. 2nd ed. Beverly Hills, CA: Sage Publications Inc; 1991.

- *Sabatini JP. Is accuracy enough? The cognitive implications of speed of response in adult reading ability. Unpublished dissertation. University of Delaware; 1997.

- Sabatini JP. Efficiency in word reading of adults: Ability groups compared. Scientific Studies of Reading. 2002;6(3):267–298.

- *Sabatini JP, Sawaki Y, Shore JR, Scarborough HS. Relationships among reading skills of adults with low literacy. Journal of Learning Disabilities. 2010;43(2):122–138. doi: 10.1177/0022219409359343.

- Schatschneider C, Harrell E, Buck J. An individual differences approach to the study of reading comprehension. In: Wagner RK, Muse AE, Tannenbaum KR, editors. Vocabulary acquisition: Implications for reading comprehension. New York: The Guilford Press; 2007. pp. 249–275.

- Seigneuric A, Ehrlich MF. Contribution of working memory capacity to children’s reading comprehension: A longitudinal investigation. Reading and Writing: An Interdisciplinary Journal. 2005;18(7–9):617–656.

- Shippen ME, Houchins DE, Crites SA, Derzis NC, Patterson D. An examination of the basic reading skills of incarcerated males. Adult Learning. 2010;21(3/4):4–12.

- Taylor NA, Greenberg D, Laures-Gore J, Wise JC. Exploring the syntactic skills of struggling adult readers. Reading and Writing: An Interdisciplinary Journal. 2012;25:1385–1402.

- *Thompkins AC, Binder KS. A comparison of the factors affecting reading performance of functionally illiterate adults and children matched by reading level. Reading Research Quarterly. 2003;38:236–255.

- *Tighe EL. An investigation of the dimensionality of morphological and vocabulary knowledge in Adult Basic Education students. Unpublished master's thesis. Florida State University; 2012.

- *Tighe EL, Binder KS. An investigation of morphological awareness and processing in adults with low literacy. Applied Psycholinguistics. 2014. doi: 10.1017/S0142716413000222.

- Tighe EL, Schatschneider C. A dominance analysis approach to determining predictor importance in third, seventh, and tenth grade reading comprehension skills. Reading and Writing: An Interdisciplinary Journal. 2014;27(1):101–127. doi: 10.1007/s11145-013-9435-6.

- Tilstra J, McMaster K, Van den Broek P, Rapp D. Simple but complex: Components of the simple view of reading across grade levels. Journal of Research in Reading. 2009;32(4):383–401.

- *To NL, Tighe EL, Binder KS. Investigating morphological awareness and the processing of transparent and opaque words in adults with low literacy skills and in skilled readers. Journal of Research in Reading. In press. doi: 10.1111/1467-9817.12036.

- Vellutino FR, Tunmer WE, Jaccard JJ, Chen R. Components of reading ability: Multivariate evidence for a convergent skills model of reading development. Scientific Studies of Reading. 2007;11(1):3–32.

- Verhoeven L, van Leeuwe J. Prediction of the development of reading comprehension: A longitudinal study. Applied Cognitive Psychology. 2008;22:407–423.

- Viechtbauer W. Conducting meta-analyses in R with the metafor package. Journal of Statistical Software. 2010;36(3):1–48.