Abstract

Heading estimation involves both inertial and visual cues. Inertial motion is sensed by the labyrinth, somatic sensation by the body, and optic flow by the retina. Because the eye and head are mobile, these stimuli are sensed relative to different reference frames, and it remains unclear whether perception occurs in a common reference frame. Recent neurophysiologic evidence has suggested that the reference frames remain separate even at higher levels of processing, but it has not addressed the resulting perception. Seven human subjects experienced a 2 s translation (16 cm displacement, 16 cm/s peak velocity) and/or a visual stimulus corresponding with this translation. For each condition, 72 stimuli (360° in 5° increments) were delivered in random order. After each stimulus, the subject identified the perceived heading using a mechanical dial. Some trial blocks included interleaved conditions in which the influence of ±28° of gaze and/or head position was examined. The observations were fit using a two-degree-of-freedom population vector decoder (PVD) model, which considered the relative sensitivity to lateral motion and the coordinate system offset. For visual stimuli, gaze shifts caused shifts in perceived heading estimates in the direction opposite the gaze shift in all subjects. These perceptual shifts averaged 13 ± 2° for eye-only gaze shifts and 17 ± 2° for eye-head gaze shifts. This finding indicates that visual headings are biased towards retina coordinates. Similar gaze and head direction shifts prior to inertial headings had no significant influence on perceived heading direction. Thus, inertial headings are perceived in body-centered coordinates. Combined visual and inertial stimuli yielded intermediate results.

Introduction

Human heading estimation is multisensory, involving visual and inertial cues[1–3]. However, these sensory modalities use different reference frames: vision is represented relative to the retina[4–6], vestibular signals relative to the head[6–9], and somatosensation relative to the body[10, 11]. It has been proposed that multisensory integration should occur in a common reference frame[12–15]. However, a single reference frame may be implausible based on recent findings for visual-vestibular integration[6, 16], as well as for visual-proprioceptive[17] and auditory[18] integration, which suggest multiple reference frames.

Although the coordinates of perceived heading estimates have not previously been measured directly, several studies have examined the neurophysiology underlying this perception. The ventral intraparietal area (VIP) is likely to be important for visual-vestibular integration[1, 19–22]. In VIP, visual stimuli are represented in eye-centered coordinates[23, 24], while vestibular headings are represented in body coordinates that do not vary with changes in eye or head position[16]. In contrast, in both the dorsal medial superior temporal area (MSTd) and the parietoinsular vestibular cortex, vestibular headings are more head-centered[16]. Although the neurophysiology has not excluded the possibility of a common coordinate system for the perception of visual and vestibular headings, no such common coordinate system has been found: visual headings have only been found to be represented in retinal coordinates, and vestibular headings only in head and body coordinates.

It has recently been shown that human heading estimates are systematically biased so that the lateral component is overestimated with both visual and vestibular stimuli[25, 26]. This behavior can be predicted by a population vector decoder (PVD) model based on a relatively larger number of MSTd units with sensitivity to lateral motion[27]. However, previous studies did not attempt to measure the effect of eye and head position on these biases.

In the current experiment, human visual and inertial heading estimates were measured while eye and head position were systematically varied. This experiment was designed to address two current controversies: first, the coordinate systems in which visual and vestibular stimuli are perceived; second, whether multisensory visual-vestibular integration occurs in a common coordinate system. Perception of visual headings shifted with gaze position, demonstrating that visual headings were perceived in retina-centered coordinates. Inertial heading estimates were not influenced by either head or eye position, indicating body-centered coordinates. When both visual and vestibular stimuli were present, an intermediate coordinate system was used.

Methods

Ethics Statement

The research was conducted according to the principles expressed in the Declaration of Helsinki. Written informed consent was obtained from all participants. The protocol and written consent form were approved by the University of Rochester Research Science Review Board (RSRB).

Equipment

Motion stimuli were delivered using a 6-degree-of-freedom motion platform (Moog, East Aurora, NY, model 6DOF2000E) similar to that used in other laboratories for human motion perception studies [26, 28–30] and previously described in the current laboratory for heading estimation studies[25, 31].

Head and platform movements were monitored in all six degrees of freedom using a flux-gate magnetometer (trakSTAR, Ascension Technologies, Burlington, VT) with two model 800 position sensors, one on the subject’s head and the other on the chair, as previously described[32].

Monocular eye position was monitored and recorded at 60 Hz using an infrared video eye tracking system (LiveTrack, Cambridge Research Systems, Rochester, England). Prior to each experiment, the position was calibrated using a series of fixation points between ±30° in the horizontal plane and ±15° in the vertical plane. This system was used predominantly as a test of fixation, although failures of fixation were found to be extremely rare.

During both visual and vestibular stimuli, an audible white noise was reproduced from two platform-mounted speakers on either side of the subject, as previously described[33]. The noise from the platform was similar regardless of motion direction, and tests in the current laboratory previously demonstrated that subjects could not predict the platform motion direction based on sound alone[33].

This white noise stimulus served to further mask sounds from the platform. The intensity of the masking noise varied with time as a half-sine wave so that the peak masking noise occurred at the same time as the peak velocity. This created a masking noise similar to the noise made by the platform. Sound levels at the location of the subject were measured using a Quest Technologies model 1900 sound level meter (Quest Technologies, Oconomowoc, WI). The average sound pressure level (SPL) of the ambient sound was 58 dB, with a peak level of 68 dB when no motion was delivered. The masking noise had a peak of 92 dB. The motion platform had a peak noise level of 84 dB for velocities of 30 deg/s or 30 cm/s for movements in the horizontal plane (yaw, surge, and sway) and 88 dB for heave. The peak noise of the platform was 74 dB. The masking sound intensity was the same for every stimulus, independent of stimulus direction, and masking was also used for visual stimuli for consistency even though the visual stimulus made no sound. No masking noise was used between stimuli. We found this type of masking much more effective than a continuous masking noise of constant intensity.
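The time-varying masker described above can be sketched as amplitude-modulated white noise. This is an illustration under stated assumptions, not the laboratory's audio code: the 44.1 kHz sample rate is assumed, the half-sine is applied to the noise amplitude (if "intensity" refers to power, the amplitude envelope would instead be its square root), and the final normalisation stands in for calibration to the 92 dB peak. The check at the end confirms that the envelope peaks at t = 1 s, the same moment at which the velocity of a 2 s single-cycle sine-wave acceleration peaks.

```python
import numpy as np

FS = 44_100   # audio sample rate, Hz (assumed for illustration)
T = 2.0       # stimulus duration, s

t = np.arange(int(FS * T)) / FS

# Half-sine envelope: rises from 0 to 1 at t = T/2 and returns to 0 at t = T.
envelope = np.sin(np.pi * t / T)

# Velocity of a single-cycle sine-wave acceleration is proportional to
# 1 - cos(2*pi*t/T), which is also maximal at t = T/2.
velocity_shape = 1.0 - np.cos(2.0 * np.pi * t / T)

rng = np.random.default_rng(0)
masker = envelope * rng.standard_normal(t.size)   # amplitude-modulated white noise
masker /= np.max(np.abs(masker))                  # scale to +/-1 before level calibration

t_env_peak = t[np.argmax(envelope)]
t_vel_peak = t[np.argmax(velocity_shape)]
print(t_env_peak, t_vel_peak)  # 1.0 1.0
```

Scaling the envelope rather than switching a constant noise on and off mirrors the text's observation that a masker shaped like the platform's own noise was more effective than continuous constant-intensity noise.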

Responses were collected using a two-button control box with a dial in the middle that could be freely rotated in the horizontal plane without any discontinuity points as previously described in the current laboratory[25].

Stimulus

There were three types of stimuli: visual only, inertial only, and combined visual-inertial. During the combined stimulus condition, the visual and inertial motion were synchronous and represented the same direction and magnitude of motion. The visual and inertial stimuli consisted of a single-cycle 2 s (0.5 Hz) sine wave in acceleration. This motion profile has previously been used for threshold determination[29, 33, 34] and for heading estimation[25, 31]. Directions tested included the 360° range of headings in 5° increments (72 total), delivered in random order. The displacement of the stimulus was 16 cm, with a peak velocity of 16 cm/s and a peak acceleration of 25 cm/s².
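The stated peak values follow from the motion profile. Assuming the acceleration is a(t) = A·sin(2πt/T) over one cycle of T = 2 s, integrating twice gives a peak velocity of AT/π at t = T/2 and a displacement of AT²/(2π); solving for a 16 cm displacement gives A ≈ 25.1 cm/s² and a peak velocity of 16 cm/s. The sketch below checks this numerically and draws a random order for the 72 headings; it is an illustration, not the platform control code.

```python
import numpy as np

T = 2.0    # duration of the single-cycle stimulus, s (0.5 Hz)
D = 16.0   # displacement, cm

# a(t) = A*sin(2*pi*t/T)  ->  v(t) = (A*T/(2*pi))*(1 - cos(2*pi*t/T))
#                          ->  x(T) = A*T**2/(2*pi)
A = 2.0 * np.pi * D / T**2      # peak acceleration, cm/s^2
v_peak = A * T / np.pi          # peak velocity, reached at t = T/2, cm/s

# Numerical cross-check with cumulative trapezoidal integration
t = np.linspace(0.0, T, 20_001)
dt = t[1] - t[0]
a = A * np.sin(2.0 * np.pi * t / T)
v = np.concatenate(([0.0], np.cumsum(0.5 * (a[1:] + a[:-1]) * dt)))
x = np.concatenate(([0.0], np.cumsum(0.5 * (v[1:] + v[:-1]) * dt)))

print(f"peak acceleration {A:.1f} cm/s^2, peak velocity {v_peak:.1f} cm/s, "
      f"displacement {x[-1]:.1f} cm")
# peak acceleration 25.1 cm/s^2, peak velocity 16.0 cm/s, displacement 16.0 cm

# The 72 headings (0-355 deg in 5 deg steps) in a random presentation order
headings = np.random.default_rng().permutation(np.arange(0, 360, 5))
```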

Visual stimuli were presented on a color LCD screen (Samsung model LN52B75OU1FXZA) with a resolution of 1920 × 1080 pixels, located 50 cm from the subject and filling a 98° horizontal field of view. A fixation point, consisting of a 2 × 2 cm cross at eye level, could be presented centrally or at ±28° and was visible throughout every trial. The visual stimulus consisted of a star field that simulated movement of the observer through a random-dot cloud with binocular disparity, as previously described[25]. Each star consisted of a triangle 0.5 cm in height and width at the plane of the screen, adjusted appropriately for distance. The star density was 0.01 per cubic cm. The depth of the field was 130 cm (the nearest stars were 20 cm and the furthest 150 cm away). Visual coherence was fixed at 100%. Disparity was provided using red-green anaglyph glasses made with Kodak (Rochester, NY) Wratten filters #29 (dark red) and #61 (deep green). The colors were adjusted such that the intensities of the two were similar when viewed through the respective filters, and the rejection ratio was better than tenfold.
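As a consistency check on the display geometry, the sketch below computes the screen width implied by a 98° field of view at 50 cm, the lateral screen position of the ±28° fixation crosses, and the on-screen size of stars at the nearest and furthest depths. The linear perspective scaling of star size (0.5 cm at the screen plane, shrinking in proportion to viewing distance over depth) is an assumption about what "adjusted appropriately for distance" means; the function names are illustrative.

```python
import numpy as np

VIEW_DIST_CM = 50.0   # eye-to-screen distance
HFOV_DEG = 98.0       # horizontal field of view

# Screen width implied by the stated distance and field of view
screen_width_cm = 2.0 * VIEW_DIST_CM * np.tan(np.radians(HFOV_DEG / 2.0))

def screen_offset_cm(angle_deg, dist_cm=VIEW_DIST_CM):
    """Horizontal distance from screen centre of a point at a given visual angle."""
    return dist_cm * np.tan(np.radians(angle_deg))

def star_size_cm(depth_cm, size_at_screen_cm=0.5, dist_cm=VIEW_DIST_CM):
    """On-screen star size under pinhole perspective (assumed scaling):
    0.5 cm when the star lies at the screen plane, scaled by dist/depth."""
    return size_at_screen_cm * dist_cm / depth_cm

print(f"implied screen width: {screen_width_cm:.0f} cm")          # 115 cm
print(f"+/-28 deg fixation cross: +/-{screen_offset_cm(28):.1f} cm")  # 26.6 cm
print(f"star at 20 cm: {star_size_cm(20):.2f} cm; at 150 cm: {star_size_cm(150):.2f} cm")
```

The implied width of about 115 cm matches the width of a 52-inch 16:9 panel, which supports reading the 98° figure as the full horizontal extent of the screen.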

Experimental Procedure

Three stimulus conditions were used: Inertial motion in which the platform moved, visual motion in which the platform remained stationary but star field motion was present, and combined visual and inertial motion.

Subjects were instructed that each stimulus would be a linear motion in the horizontal plane. Prior to testing, subjects were shown how to orient a mechanical dial as previously described[25]. A few practice trials were conducted in the light prior to the experiment. Although no feedback was given during these trials, systematic errors, such as identifying the direction of the star field motion rather than the direction of self-motion through the star field, were corrected.

Four types of head/gaze variations were used, each in a separate block of trials. In the head centered gaze centered (HCGC) condition, the head and gaze remained fixed at the midline. This condition was essentially the same as in a previously published study[25] but was repeated using the current subjects. In the remaining three types of trial blocks, there were interleaved conditions in which head or gaze position was randomly varied between trials. In the head centered gaze varied (HCGV) condition, the head remained fixed while gaze was varied between fixation points 28° to the right or left prior to each stimulus presentation. In the head varied gaze centered (HVGC) condition, the visual fixation point remained centered and the head was rotated 28° to the right or left prior to each stimulus presentation. In the head varied gaze varied (HVGV) condition, both the head and gaze were rotated 28° to the right or left prior to the stimulus presentation, such that the eye remained near straight ahead relative to the head.
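The four block types can be summarised as a small table of head and gaze directions. The sketch below is hypothetical: it assumes each of the 72 headings is presented once at every head/gaze position within a block, with positions interleaved in random order, and uses a rightward-positive sign convention; `make_block` and `BLOCK_TYPES` are illustrative names, not the laboratory's software. Eye-in-head position is gaze minus head, so it equals the gaze direction in HCGV, is opposite to the head rotation in HVGC, and is 0° in HVGV.

```python
import itertools
import random

HEADINGS = list(range(0, 360, 5))  # 72 headings, deg

# (head direction, gaze direction) in deg relative to the body; rightward positive.
BLOCK_TYPES = {
    "HCGC": [(0, 0)],
    "HCGV": [(0, -28), (0, 28)],
    "HVGC": [(-28, 0), (28, 0)],
    "HVGV": [(-28, -28), (28, 28)],
}

def make_block(block_type, seed=None):
    """Return a randomly ordered list of (heading, head_deg, gaze_deg, eye_in_head_deg)."""
    trials = [
        (heading, head, gaze, gaze - head)
        for heading, (head, gaze) in itertools.product(HEADINGS, BLOCK_TYPES[block_type])
    ]
    random.Random(seed).shuffle(trials)
    return trials

block = make_block("HVGC", seed=1)
print(len(block), block[0])
```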

In the HVGC and HVGV conditions, prior to each trial (i.e. each stimulus presentation), a cross marking the ideal head position (28° right or left) was shown on the video display. A box ±1° on a side represented the current head position in real time, and the subject was instructed to move their head so that the cross was in the center of the box. Afterwards, the head was stabilized against a rubber headrest. A fixation point was displayed to indicate gaze position, which in the HVGV condition was the center of the box and in the HVGC condition was the center of the screen. Once the head and gaze were stabilized at the desired position, the subject pressed the start button. After the start button was pressed, the box and current head location were no longer displayed, but head position continued to be recorded throughout the trial. Immediately after the stimulus was delivered, subjects heard two 0.125 s tones in rapid succession indicating that their response could be reported using the dial. When finished, they pushed a button to indicate they were done.

Subjects

A total of 7 subjects (5 female) completed the 11-block trial protocol. Ages ranged from 20 to 67 years (39 ± 22, mean ± SD). None of the subjects were familiar with the design of the experiment. The order of trial blocks was randomized between subjects, and no feedback was given. To minimize fatigue, the trial blocks were completed in multiple sessions on different days, with 2–3 blocks completed in each session. All subjects were screened prior to participation and had normal or corrected-to-normal vision, normal hearing, normal vestibular function on caloric testing, and no dizziness or balance symptoms.

Analysis

Each dial setting was compared with the actual heading for each trial to calculate a response error. A simple population vector decoder (PVD) model was fit to each participant’s responses for each test condition. The general form of a PVD model is given in Eq (1).

$$\vec{P} = \sum_{i=1}^{N} w_i \vec{V}_i \tag{1}$$

Because little is known about the actual distribution of human sensitivities, the model was simplified to include only two orthogonal vectors, representing surge and sway, with independent weights. Although including additional vectors in the population would allow more degrees of freedom and a better fit to the data, this was not done due to the risk of overfitting:

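$$\vec{P} = w_{\mathrm{surge}}\vec{V}_{\mathrm{surge}} + w_{\mathrm{sway}}\vec{V}_{\mathrm{sway}} \tag{2}$$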

Only the relative sizes of the weights matter, not their absolute values, so they can be replaced with a ratio:

$$r = \frac{w_{\mathrm{sway}}}{w_{\mathrm{surge}}} \tag{3}$$

This gives us:

$$\vec{P} = w_{\mathrm{surge}}\left(\vec{V}_{\mathrm{surge}} + r\,\vec{V}_{\mathrm{sway}}\right) \tag{4}$$

Because we are only interested in the direction of the vector and its length is not important, the absolute weight of the surge component is not needed. This simplifies the PVD to (Fig 1A):

$$\vec{P} = \vec{V}_{\mathrm{surge}} + r\,\vec{V}_{\mathrm{sway}} \tag{5}$$

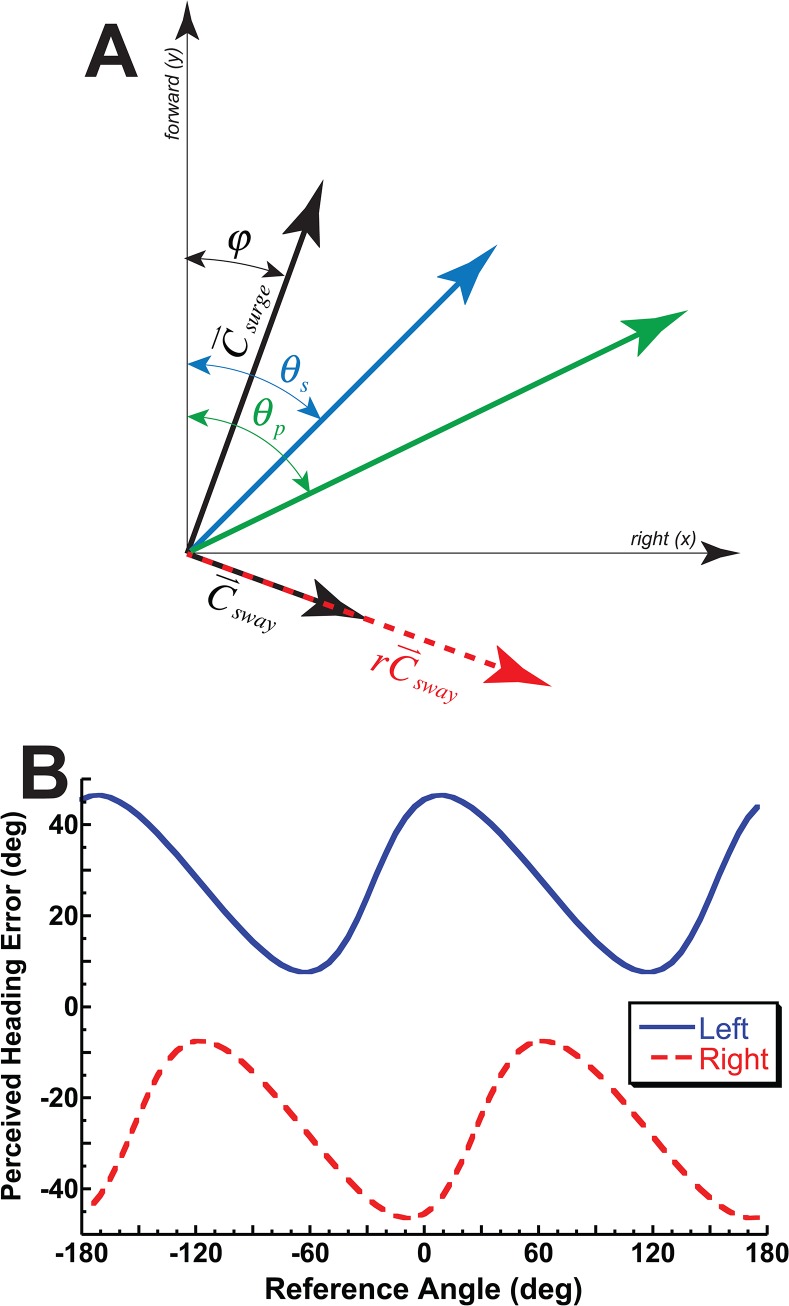

Fig 1. The population vector decoder (PVD) model used and its predictions.

(A) The model in graphical form for a stimulus heading, θs, of 45° to the right. This 45° vector can be represented by orthogonal surge and sway vectors offset by φ. The perceived heading, θp, is the sum of the surge and sway vectors after the sway component is multiplied by r. (B) The quantitative predictions of the model when r = 2 (sway component weighted twice that of surge). The solid blue tracing represents a 27° leftward gaze and the dashed red tracing represents a 27° rightward gaze. Ideal performance would be a perceived heading error of 0° for all reference headings. The model predicts that a gaze shift changes the coordinate system and influences both the phase and offset of the perceived heading error.

The orientation of the stimulus in space is given by θs. The surge and sway vectors relative to space were allowed to vary by an offset angle or phase (φ). The perceived direction vector is then related to the unit forward (surge) vector and the unit sway vector as follows:

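$$\vec{P} = \cos(\theta_s + \phi)\,\hat{u}_{\mathrm{surge}} + r\sin(\theta_s + \phi)\,\hat{u}_{\mathrm{sway}} \tag{6}$$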

Thus, when the perceived vector points forward (i.e. cos(θs + φ) > 0), the perceived heading angle, θp, is given by:

$$\theta_p = \tan^{-1}\left[r\tan(\theta_s + \phi)\right] \tag{7}$$

When the perceived vector points backward and to the right (i.e. cos(θs + φ) < 0 and sin(θs + φ) > 0), a correction of +180° is applied; when it points backward and to the left (i.e. sin(θs + φ) < 0), a correction of -180° is needed.

It is hypothesized that this PVD model can explain the perceived heading bias. This hypothesis is based on prior human behavioral experiments[25, 26] as well as primate neurophysiology[27], which suggest that greater numbers of units are sensitive to lateral (sway) heading changes for both visual and inertial headings, or, in terms of the current model, r > 1. If visual headings are perceived in retina coordinates, as suggested by recordings from VIP[23, 24], then the offset angle (φ) will be similar to the eye position (Fig 1B). This model was fit to the responses for each condition using the fminsearch function in Matlab (Mathworks, Natick, Massachusetts) to minimize the mean squared error (MSE) of the model predictions relative to the observed responses.
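A Python analogue of the fitting step is sketched below, using SciPy's Nelder-Mead simplex search (`scipy.optimize.minimize` with `method="Nelder-Mead"`), the same algorithm family as Matlab's `fminsearch`. Several choices are assumptions rather than details from the text: errors are wrapped to ±180° before squaring, r is fitted on a log scale to keep it positive, the starting simplex is chosen by hand, and the demonstration data are simulated from the model itself (r = 2.5, φ = 10°, 2° Gaussian noise), not taken from this study.

```python
import numpy as np
from scipy.optimize import minimize

def pvd_heading(theta_s_deg, r, phi_deg):
    """Two-vector PVD prediction; arctan2 applies the +/-180 deg quadrant correction."""
    a = np.radians(np.asarray(theta_s_deg, dtype=float) + phi_deg)
    return np.degrees(np.arctan2(r * np.sin(a), np.cos(a)))

def wrap_deg(x):
    """Wrap angles to [-180, 180)."""
    return (np.asarray(x, dtype=float) + 180.0) % 360.0 - 180.0

def fit_pvd(stim_deg, resp_deg):
    """Return (r, phi_deg, mse) minimising the mean squared angular error."""
    def mse(params):
        log_r, phi = params
        err = wrap_deg(pvd_heading(stim_deg, np.exp(log_r), phi) - resp_deg)
        return np.mean(err ** 2)

    x0 = np.array([0.0, 0.0])                       # start at r = 1, phi = 0
    simplex = x0 + np.array([[0.0, 0.0], [0.5, 0.0], [0.0, 10.0]])
    res = minimize(mse, x0, method="Nelder-Mead",
                   options={"initial_simplex": simplex, "xatol": 1e-6,
                            "fatol": 1e-9, "maxiter": 2000})
    log_r, phi = res.x
    return np.exp(log_r), phi, res.fun

# Demonstration on simulated responses (not study data)
rng = np.random.default_rng(0)
stim = np.arange(0, 360, 5)
resp = pvd_heading(stim, 2.5, 10.0) + rng.normal(0.0, 2.0, stim.size)
r_hat, phi_hat, mse_hat = fit_pvd(stim, resp)
print(f"r = {r_hat:.2f}, phi = {phi_hat:.1f} deg, MSE = {mse_hat:.1f} deg^2")
```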

Statistics were performed using JMP Pro version 11 for the Macintosh (SAS, Cary, North Carolina). A paired Student’s t-test was used to determine the significance of differences in model fit parameters across the population tested. One-way analysis of variance (ANOVA) was used to determine whether there were significant effects of gaze/head position, stimulus type, and subject.
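For readers reproducing the analysis outside JMP, the two tests map onto standard SciPy functions. The values below are randomly generated placeholders, not the study's data, and pairing the left-gaze and right-gaze offsets within each subject is an assumption about how the paired comparison was set up.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
n_subjects = 7

# Placeholder per-subject offsets (deg) under left and right gaze (NOT study data)
phi_left = rng.normal(10.0, 5.0, n_subjects)
phi_right = rng.normal(-10.0, 5.0, n_subjects)

# Paired Student's t-test: equivalent to a one-sample t-test on the differences
paired = stats.ttest_rel(phi_left, phi_right)

# One-way ANOVA: does the ratio r differ between stimulus types? (placeholders)
r_visual = rng.normal(2.0, 0.5, n_subjects)
r_inertial = rng.normal(1.0, 0.3, n_subjects)
r_combined = rng.normal(1.5, 0.4, n_subjects)
anova = stats.f_oneway(r_visual, r_inertial, r_combined)

print(f"paired t = {paired.statistic:.2f}, p = {paired.pvalue:.3g}")
print(f"ANOVA F = {anova.statistic:.2f}, p = {anova.pvalue:.3g}")
```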

Results

Eye position at the start of each trial was within 1° of the intended target, and eye fixation remained at the intended point during motion. In conditions in which head position was varied over ±28°, subjects were able to position their heads accurately: the average error was 0.2° at the start of the trial, with a maximum of <1°. The head also remained in position during the inertial movement. The average peak-to-peak variation in yaw head position during the inertial movement in head-varied conditions was 1.9°, with the standard deviation of head position averaging 0.6°.

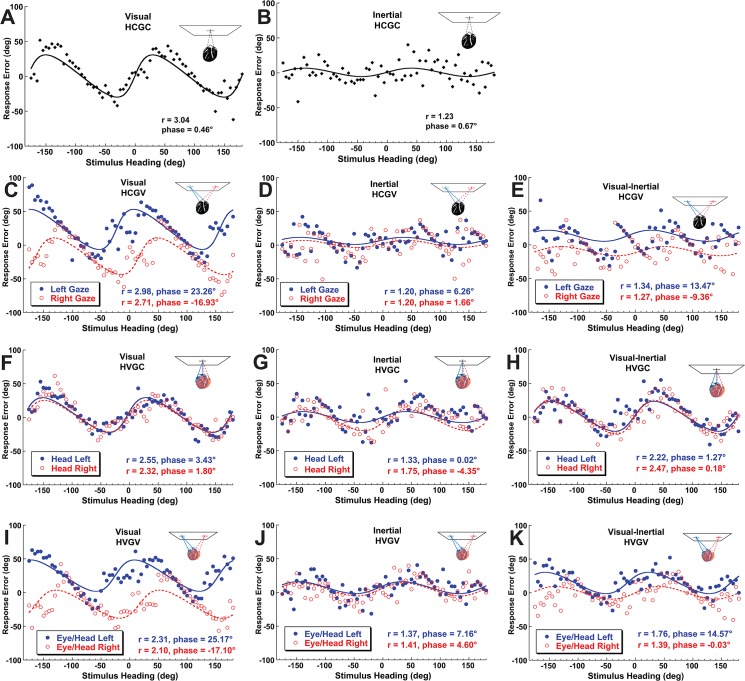

Performance of a typical subject (#4) is shown in Fig 2 with the results of the model fit to the data. The visual headings (Fig 2A, 2C, 2F, & 2I) had heading-specific biases that could be predicted by a relatively greater sensitivity to sway motion, as the best-fit ratio (r) ranged from 2.1 to 3. The heading offsets were a function of gaze position (Fig 2C & 2I). Inertial headings were predicted using a sensitivity to sway closer to that of surge, with the best-fit r ranging from 1.2 to 1.8 (Fig 2B, 2D, 2G, & 2J). Inertial heading perception was independent of gaze or head position. When visual and inertial headings were combined (Fig 2E, 2H, & 2K), the perceived headings and model fits were intermediate between those of the visual-only and inertial-only conditions.

Fig 2. Sample data and model fits for a typical individual subject (#4).

The abscissa represents the stimulus heading. The ordinate represents the response error (perceived minus stimulus heading). Curves represent the best fit of the PVD model. Top row (A&B): The head centered gaze centered (HCGC) condition. Second row (panels C-E): The head centered gaze varied (HCGV) condition with gaze varied by ±28°. The gaze direction strongly influenced visual heading perception as indicated by the PVD model phase/offset parameter (φ). Third row (F-H): Head position varied over ±28° with gaze centered (HVGC). Inertial headings were independent of head position. Bottom row (I-K): Varying gaze and head yielded results similar to varying gaze alone (C-E).

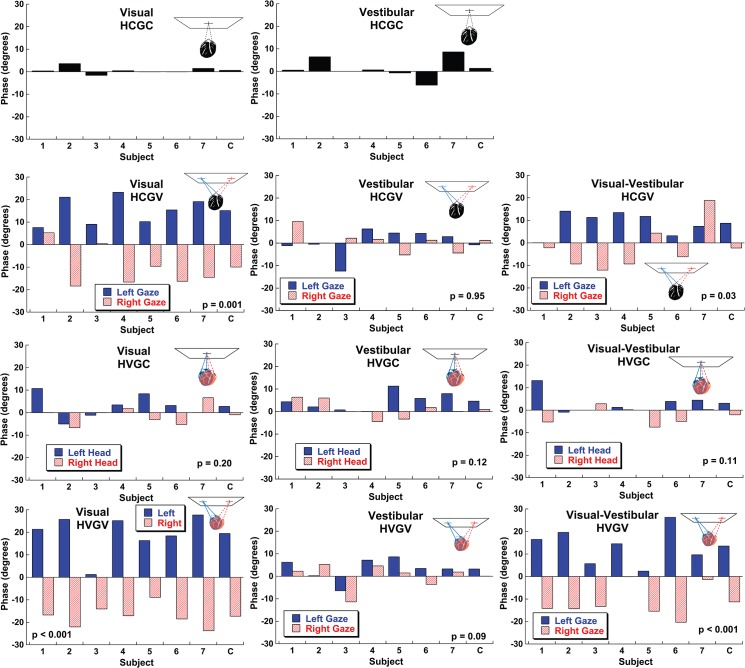

The data were summarized using the ratio (r) and phase or offset (φ) parameters of the PVD model fit. The offset parameters that best fit each subject’s responses are shown for each subject and trial block type (Fig 3). For the HCGC conditions, the offset averaged near zero (Fig 3A & 3B). In visual heading conditions where gaze was varied, there was a large and significant effect of gaze direction (Fig 3C & 3I). The average offset was 13 ± 2° (mean ± SEM) in the direction of gaze, or 46% of the gaze shift, with the head centered, and 17 ± 2°, or 61% of the gaze shift, in the HVGV condition. With combined visual and inertial headings, the mean effect was smaller, at 7.2 ± 1.4° (HCGV) and 12.8 ± 1.8° (HVGV), but remained highly significant (Fig 3E & 3K). In every subject, when a visual stimulus was present, gaze shifted the perceived heading estimate so that left gaze produced a positive (rightward) offset and right gaze produced a relatively negative (leftward) offset. This was consistent with retina-based coordinates, since a leftward gaze would cause stationary objects to appear shifted to the right and vice versa. Shifts in gaze did not cause a significant shift in inertial heading perception, with an average shift of -0.3 ± 1.4° (HCGV). Similarly, head shifts (HVGC) produced a non-significant offset of 2.0 ± 1.2°.

Fig 3. The phase/offset parameter (φ) of the PVD model for each condition across subjects.

The average (combined) value is shown in the rightmost column, marked C. For the HCGC conditions, the average phase offset was near zero. In conditions where the trial blocks included multiple head and/or gaze positions (panels C–K), a t-test was used to determine whether the values were significantly different across subjects, and p-values are printed for each condition.
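The percentages reported for the visual offsets follow from dividing each mean offset by the 28° gaze shift. The same arithmetic applied to the combined-stimulus offsets, which the text reports only in degrees, is included for comparison.

```python
GAZE_SHIFT_DEG = 28.0

# Mean offsets (deg) reported in the Results
mean_offsets = {
    "visual, HCGV": 13.0,
    "visual, HVGV": 17.0,
    "combined, HCGV": 7.2,
    "combined, HVGV": 12.8,
}

percent_of_gaze = {k: 100.0 * v / GAZE_SHIFT_DEG for k, v in mean_offsets.items()}
for condition, pct in percent_of_gaze.items():
    print(f"{condition}: {pct:.0f}% of the gaze shift")
# visual, HCGV: 46%; visual, HVGV: 61%; combined, HCGV: 26%; combined, HVGV: 46%
```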

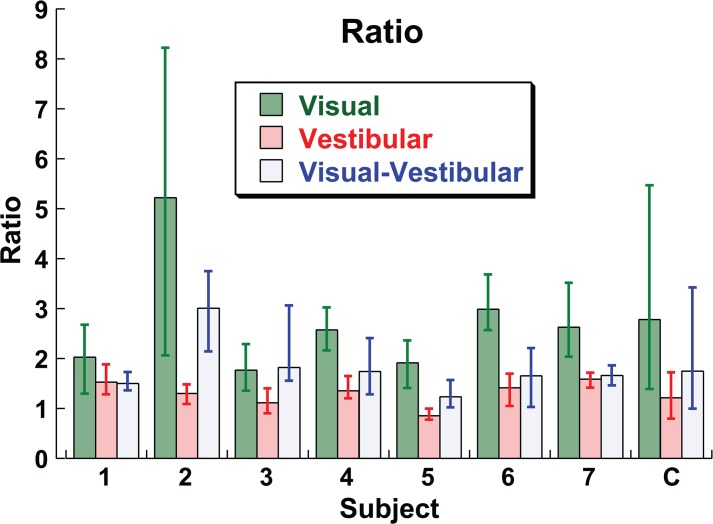

Unlike the offset, the ratio (r) parameter of the model that best fit the data did not depend on the gaze/head condition (i.e. HCGC, HCGV, HVGC, or HVGV; ANOVA, p = 0.98, F = 0.06). The ratio depended on the stimulus type (visual, inertial, or combined; ANOVA, p < 0.0001, F = 32.37). The ratio also did not depend on the direction of gaze or head position (ANOVA, p = 0.63, F = 0.23). The mean r was 2.78 (95% CI: 1.39–5.47) for a visual stimulus, 1.21 (95% CI: 0.79–1.72) for an inertial stimulus, and 1.75 (95% CI: 0.99–3.42) for the combined visual-inertial stimulus. There was significant variation in r across subjects (ANOVA, p < 0.0001, F = 6.45). The ratio is shown by subject and stimulus type in Fig 4. In every subject, the ratio was higher for the visual condition than for the inertial condition. The relationship of the combined-stimulus ratio to the visual and inertial ratios varied by subject: it could be closer to inertial (subjects 1, 6, 7), similar to visual (subject 3), or intermediate between the two (subjects 2, 4, 5).

Fig 4. The ratio parameter (r) of the PVD model.

Unlike the offset parameter, the ratio was independent of gaze/head condition, so these conditions were combined. The values combined across subjects are shown in the rightmost column, marked C. Error bars represent the 95% CI.
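To give a sense of the heading bias implied by the mean ratios reported above, note that with φ = 0 the model error e(θ) = tan⁻¹(r·tanθ) − θ has zero derivative where r(1 + tan²θ) = 1 + r²tan²θ, i.e. where tanθ = 1/√r, so the peak bias is tan⁻¹(√r) − tan⁻¹(1/√r). The sketch below evaluates this closed form and checks it against a brute-force search; it is a property of the model, not an additional result.

```python
import numpy as np

def peak_bias_deg(r):
    """Largest model error for 0 < theta < 90 deg with phi = 0 (closed form)."""
    return np.degrees(np.arctan(np.sqrt(r)) - np.arctan(1.0 / np.sqrt(r)))

def peak_bias_numeric(r, n=200_001):
    """Brute-force maximum of atan(r*tan(theta)) - theta on a fine grid."""
    theta = np.linspace(0.0, np.pi / 2, n)[1:-1]   # drop endpoints where tan is 0 or infinite
    return np.degrees(np.max(np.arctan(r * np.tan(theta)) - theta))

for label, r in (("visual", 2.78), ("inertial", 1.21), ("combined", 1.75)):
    print(f"{label}: r = {r}, peak bias about {peak_bias_deg(r):.0f} deg")
# about 28, 5 and 16 deg respectively
```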

Once fit with the best-fit ratio and offset parameters, the MSE of the model fit did not depend on gaze/head condition (ANOVA, p = 0.72) or stimulus type (ANOVA, p = 0.52).

Discussion

The method used here to measure heading estimates is a relatively simple task that has the potential to be influenced by confounding factors, including cognitive influences. Yet the large offsets in visual heading estimates with changes in gaze position were consistently observed across subjects and were predicted from the known neurophysiology. In VIP, visual motion stimuli have been shown to be represented in eye-centered coordinates[23, 24]. The current data demonstrate that although visual headings are biased towards eye position, the bias in the heading was only about half of the eye position offset. This is likely because eye position is also taken into account in heading estimation[35–38], although the current data demonstrate that the effect of eye position is not completely corrected. Although visual headings could be represented in body or world coordinates elsewhere in the central nervous system, no such area has yet been identified. The current data suggest that no such representations of visual headings exist, as subjects’ reported perception of visual headings was strongly biased towards retina coordinates.

The other major finding was that inertial heading was represented in a body-fixed coordinate system. Even large variations in head and gaze position had no influence on the heading estimates. The current experiments did not vary body orientation relative to the earth, so an earth-fixed coordinate system is also possible. However, it is clear that inertial headings and visual headings are perceived relative to different coordinate systems. This is consistent with the neurophysiology, which demonstrates that in VIP the vestibular heading representation does not change with changes in either eye or head position[16]. In contrast, it has been shown that in MSTd and the parietoinsular vestibular cortex, vestibular headings appear to be in head-centered coordinates[6, 16]. It is not surprising that there are areas of the brain where inertial signals are represented in head coordinates, as the vestibular organs are fixed in the head, but the current data demonstrate that inertial heading perception follows the neurophysiology of VIP most closely.

Previous studies have examined multisensory integration using visual and inertial cues[3, 39–41]. A common model of multisensory integration is that cues are integrated in a statistically optimal way, also known as an ideal observer model. This model predicts that each sensory cue will be weighted according to its relative reliability, which is the weighting strategy predicted by Bayesian probability theory[42]. For visual-vestibular heading integration, prior work has focused on multisensory integration using a discrimination task (e.g. subjects report whether a test stimulus is to the right or left of a reference heading). The current experiments involved an estimation task, which also allows the bias and reference frame to be determined. The relative reliability of visual and inertial cues is more complex for heading estimation because it varies not only with the stimulus but also with heading direction. The current experiments did not vary the relative reliabilities of the stimuli or offset the visual and inertial stimuli relative to each other, which limits the conclusions that can be drawn regarding multisensory integration. However, the phase offsets (Fig 3) and ratios (Fig 4) demonstrated that the combined condition was usually intermediate between the visual and inertial conditions. In some subjects the combined stimulus was closer to inertial (e.g. subject 1), while in others it was similar to visual (e.g. subject 3). It seems clear that there is no uniform common reference frame for heading estimation, but how the intermediate reference frame is developed remains unclear.
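The reliability-weighted (ideal observer) rule referred to above is usually written with weights proportional to inverse variance. A minimal sketch, assuming independent Gaussian noise on each cue and headings close enough together that circular wrap-around can be ignored; this is the standard model from the cited literature, not a model fit in the current study, and the numbers in the example are illustrative.

```python
def combine_cues(theta_vis, sigma_vis, theta_inert, sigma_inert):
    """Maximum-likelihood combination of two heading estimates (deg).

    Each cue is weighted by its reliability (1/variance); the combined
    estimate has a lower variance than either cue alone.
    """
    rel_vis = 1.0 / sigma_vis ** 2
    rel_inert = 1.0 / sigma_inert ** 2
    w_vis = rel_vis / (rel_vis + rel_inert)
    theta_comb = w_vis * theta_vis + (1.0 - w_vis) * theta_inert
    sigma_comb = (1.0 / (rel_vis + rel_inert)) ** 0.5
    return theta_comb, sigma_comb

# Visual cue twice as precise as inertial: weight 0.8 on vision
theta_c, sigma_c = combine_cues(10.0, 2.0, 0.0, 4.0)
print(f"{theta_c:.1f} +/- {sigma_c:.2f} deg")   # 8.0 +/- 1.79 deg
```

Because the current experiments used matched visual and inertial headings, this rule cannot be tested directly on the present data, which is the limitation noted above.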

An obvious question raised by these results is this: if eye position causes large biases in heading perception, what are the implications for day-to-day activities such as ambulation and driving? It is possible that feedback could minimize the biases observed here. The effect of feedback was not studied in the current experiments, and it is likely that subjects were not aware that their responses were biased. However, these subjects also had feedback during daily activities such as driving and ambulation that did not eliminate these biases. During ambulation, people tend to direct gaze in the direction of intended motion[43, 44], which makes it easier to maintain an accurate heading[36]; this also occurs with driving[45–47]. Thus, under natural conditions, control of gaze direction may be the mechanism by which heading errors that could arise with eccentric gaze positions are minimized. When gaze is eccentric from the intended course by as little as 5° while driving, subjects shift their position on the road significantly toward the direction of gaze[47]. With vestibular headings in body coordinates, it is not surprising that head orientation changes do not influence ambulation direction[48]. The current data suggest that fixed eccentric gaze while driving could lead to deviation towards the direction of gaze. To the author’s knowledge, such an experiment has not been done, but fixing gaze at a central position causes steering errors and decreased performance in driving simulation[49, 50]. Thus, prior behavioral data are consistent with the current findings.

The current data strongly suggest that visual and vestibular headings are estimated in different reference frames.

Data Availability

All relevant data are within the paper.

Funding Statement

This work was funded by National Institute on Deafness and Other Communication Disorders (NIDCD) K23 DC011298 and R01 DC0135580. It was also supported by a Triological Society Career Scientist Award. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Chen A, DeAngelis GC, Angelaki DE. Convergence of vestibular and visual self-motion signals in an area of the posterior sylvian fissure. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2011;31(32):11617–27. Epub 2011/08/13. 10.1523/JNEUROSCI.1266-11.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.DeAngelis GC, Angelaki DE. Visual-Vestibular Integration for Self-Motion Perception. In: Murray MM, Wallace MT, editors. The Neural Bases of Multisensory Processes. Frontiers in Neuroscience. Boca Raton (FL)2012.

- 3. Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nature reviews Neuroscience. 2013;14(6):429–42. 10.1038/nrn3503 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Larish JF, Flach JM. Sources of optical information useful for perception of speed of rectilinear self-motion. J Exp Psychol Hum Percept Perform. 1990;16(2):295–302. . [DOI] [PubMed] [Google Scholar]

- 5. Palmisano S, Allison RS, Pekin F. Accelerating self-motion displays produce more compelling vection in depth. Perception. 2008;37(1):22–33. Epub 2008/04/11. . [DOI] [PubMed] [Google Scholar]

- 6. Fetsch CR, Wang S, Gu Y, Deangelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 2007;27(3):700–12. . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Roditi RE, Crane BT. Asymmetries in human vestibular perception thresholds. Association for Research in Otolarngology, 34th Annual Meeting; Baltimore, MD2011. p. 1006.

- 8. Soyka F, Giordano PR, Barnett-Cowan M, Bulthoff HH. Modeling direction discrimination thresholds for yaw rotations around an earth-vertical axis for arbitrary motion profiles. Exp Brain Res. 2012;220(1):89–99. Epub 2012/05/25. 10.1007/s00221-012-3120-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Merfeld DM, Park S, Gianna-Poulin C, Black FO, Wood S. Vestibular perception and action employ qualitatively different mechanisms. I. Frequency response of VOR and perceptual responses during Translation and Tilt. Journal of neurophysiology. 2005;94(1):186–98. . [DOI] [PubMed] [Google Scholar]

- 10. McIntyre S, Seizova-Cajic T. Neck muscle vibration in full cues affects pointing. Journal of vision. 2007;7(5):9 1–8. Epub 2008/01/26. 10.1167/7.5.9 . [DOI] [PubMed] [Google Scholar]

- 11. Ceyte H, Cian C, Nougier V, Olivier I, Roux A. Effects of neck muscles vibration on the perception of the head and trunk midline position. Exp Brain Res. 2006;170(1):136–40. Epub 2006/02/28. 10.1007/s00221-006-0389-7 . [DOI] [PubMed] [Google Scholar]

- 12. Cohen YE, Andersen RA. A common reference frame for movement plans in the posterior parietal cortex. Nature reviews Neuroscience. 2002;3(7):553–62. 10.1038/nrn873 . [DOI] [PubMed] [Google Scholar]

- 13. Stein BE, Meredith MA, Wallace MT. The visually responsive neuron and beyond: multisensory integration in cat and monkey. Prog Brain Res. 1993;95:79–90. . [DOI] [PubMed] [Google Scholar]

- 14. Schlack A, Sterbing-D'Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25(18):4616–25. 10.1523/JNEUROSCI.0455-05.2005 . [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Karnath HO, Sievering D, Fetter M. The interactive contribution of neck muscle proprioception and vestibular stimulation to subjective "straight ahead" orientation in man. Exp Brain Res. 1994;101(1):140–6. Epub 1994/01/01. . [DOI] [PubMed] [Google Scholar]

- 16. Chen X, Deangelis GC, Angelaki DE. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron. 2013;80(5):1310–21. 10.1016/j.neuron.2013.09.006 PubMed Central PMCID: PMCPMC3858444. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nature neuroscience. 2005;8(7):941–9. 10.1038/nn1480 . [DOI] [PubMed] [Google Scholar]

- 18. Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94(4):2331–52. doi: 10.1152/jn.00021.2005.

- 19. Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011;31(33):12036–52. Epub 2011/08/19. doi: 10.1523/JNEUROSCI.0395-11.2011.

- 20. Colby CL, Duhamel JR, Goldberg ME. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 1993;69(3):902–14. Epub 1993/03/01.

- 21. Maciokas JB, Britten KH. Extrastriate area MST and parietal area VIP similarly represent forward headings. J Neurophysiol. 2010;104(1):239–47. Epub 2010/04/30. doi: 10.1152/jn.01083.2009.

- 22. Guipponi O, Wardak C, Ibarrola D, Comte JC, Sappey-Marinier D, Pinede S, et al. Multimodal convergence within the intraparietal sulcus of the macaque monkey. J Neurosci. 2013;33(9):4128–39. doi: 10.1523/JNEUROSCI.1421-12.2013.

- 23. Chen X, DeAngelis GC, Angelaki DE. Eye-centered visual receptive fields in the ventral intraparietal area. J Neurophysiol. 2014;112(2):353–61. doi: 10.1152/jn.00057.2014.

- 24. Chen X, DeAngelis GC, Angelaki DE. Eye-centered representation of optic flow tuning in the ventral intraparietal area. J Neurosci. 2013;33(47):18574–82. doi: 10.1523/JNEUROSCI.2837-13.2013.

- 25. Crane BT. Direction specific biases in human visual and vestibular heading perception. PLoS One. 2012;7(12):e51383. Epub 2012/12/07. doi: 10.1371/journal.pone.0051383.

- 26. Cuturi LF, MacNeilage PR. Systematic biases in human heading estimation. PLoS One. 2013;8(2):e56862. doi: 10.1371/journal.pone.0056862.

- 27. Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron. 2010;66(4):596–609. Epub 2010/06/01. doi: 10.1016/j.neuron.2010.04.026.

- 28. MacNeilage PR, Banks MS, DeAngelis GC, Angelaki DE. Vestibular heading discrimination and sensitivity to linear acceleration in head and world coordinates. J Neurosci. 2010;30(27):9084–94. Epub 2010/07/09. doi: 10.1523/JNEUROSCI.1304-10.2010.

- 29. Grabherr L, Nicoucar K, Mast FW, Merfeld DM. Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp Brain Res. 2008;186(4):677–81. doi: 10.1007/s00221-008-1350-8.

- 30. Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic re-weighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29(49):15601–12. doi: 10.1523/JNEUROSCI.2574-09.2009.

- 31. Crane BT. Human visual and vestibular heading perception in the vertical planes. J Assoc Res Otolaryngol. 2014;15(1):87–102. Epub 2013/11/20. doi: 10.1007/s10162-013-0423-y.

- 32. Crane BT. The influence of head and body tilt on human fore-aft translation perception. Exp Brain Res. 2014;232:3897–905. doi: 10.1007/s00221-014-4060-4.

- 33. Roditi RE, Crane BT. Directional asymmetries and age effects in human self-motion perception. J Assoc Res Otolaryngol. 2012;13(3):381–401. doi: 10.1007/s10162-012-0318-3.

- 34. Benson AJ, Hutt EC, Brown SF. Thresholds for the perception of whole body angular movement about a vertical axis. Aviat Space Environ Med. 1989;60(3):205–13.

- 35. Royden CS, Banks MS, Crowell JA. The perception of heading during eye movements. Nature. 1992;360(6404):583–5.

- 36. Wann JP, Swapp DK. Why you should look where you are going. Nat Neurosci. 2000;3(7):647–8. Epub 2000/06/22. doi: 10.1038/76602.

- 37. Warren WH Jr, Hannon DJ. Direction of self-motion perceived from optical flow. Nature. 1988;336:162–3.

- 38. Lappe M, Bremmer F, van den Berg AV. Perception of self-motion from visual flow. Trends Cogn Sci. 1999;3(9):329–36.

- 39. Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11(10):1201–10. doi: 10.1038/nn.2191.

- 40. de Winkel KN, Weesie J, Werkhoven PJ, Groen EL. Integration of visual and inertial cues in perceived heading of self-motion. J Vis. 2010;10(12):1. Epub 2010/11/05. doi: 10.1167/10.12.1.

- 41. Edwards M, O'Mahony S, Ibbotson MR, Kohlhagen S. Vestibular stimulation affects optic-flow sensitivity. Perception. 2010;39(10):1303–10. Epub 2010/12/25.

- 42. Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27(12):712–9. doi: 10.1016/j.tins.2004.10.007.

- 43. Hollands MA, Marple-Horvat DE, Henkes S, Rowan AK. Human eye movements during visually guided stepping. J Mot Behav. 1995;27(2):155–63. doi: 10.1080/00222895.1995.9941707.

- 44. Patla AE, Vickers JN. Where and when do we look as we approach and step over an obstacle in the travel path? Neuroreport. 1997;8(17):3661–5.

- 45. Land MF, Lee DN. Where we look when we steer. Nature. 1994;369(6483):742–4. doi: 10.1038/369742a0.

- 46. Wilkie RM, Wann JP. Driving as night falls: the contribution of retinal flow and visual direction to the control of steering. Curr Biol. 2002;12(23):2014–7.

- 47. Readinger WO, Chatziastros A, Cunningham DW, Bülthoff HH. Driving effects of retinal-flow properties associated with eccentric gaze. Perception. 2001;30(ECVP Abstract Supplement).

- 48. Cinelli M, Warren WH. Do walkers follow their heads? Investigating the role of head rotation in locomotor control. Exp Brain Res. 2012;219(2):175–90. doi: 10.1007/s00221-012-3077-9.

- 49. Wilkie RM, Wann JP. Eye-movements aid the control of locomotion. J Vis. 2003;3(11):677–84. Epub 2004/02/10. doi: 10.1167/3.11.3.

- 50. Marple-Horvat DE, Chattington M, Anglesea M, Ashford DG, Wilson M, Keil D. Prevention of coordinated eye movements and steering impairs driving performance. Exp Brain Res. 2005;163(4):411–20. doi: 10.1007/s00221-004-2192-7.

Data Availability Statement

All relevant data are within the paper.