Abstract

Background

Development of cognitive skills for competent medical practice is a goal of residency education. Cognitive skills must be developed for many different clinical situations.

Innovation

We developed the Resident Cognitive Skills Documentation (CogDoc) as a method for capturing faculty members' real-time assessment of residents' cognitive performance while precepting them in a family medicine office. The tool captures 3 dimensions of cognitive skills: medical knowledge, understanding, and application. This article describes CogDoc development, our experience with its use, and its reliability and feasibility.

Methods

After development and pilot-testing, we introduced the CogDoc at a single training site, collecting all completed forms for 14 months to determine completion rate, competence development over time, consistency among preceptors, and resident use of the data.

Results

Thirty-eight faculty members completed 5021 CogDoc forms, documenting 29% of all patient visits by 33 residents. Competency was documented in all entrustable professional activities. Competence was statistically different among residents of different years of training for all 3 dimensions and progressively increased within all residency classes over time. Reliability scores were high: 0.9204 for the medical knowledge domain, 0.9405 for understanding, and 0.9414 for application. Almost every resident reported accessing the individual forms or summaries documenting their performance.

Conclusions

The CogDoc approach allows for ongoing assessment and documentation of resident competence, and, when compiled over time, depicts a comprehensive assessment of residents' cognitive development and ability to make decisions in ambulatory medicine. This approach meets criteria for an acceptable tool for assessing cognitive skills.

What was known

Developing residents' cognitive skills is an important goal of residency education.

What is new

The Resident Cognitive Skills Documentation (CogDoc) tool captures 3 dimensions of cognitive skills: medical knowledge, understanding, and application.

Limitations

The tool was implemented in a single population of faculty preceptors and residents, limiting generalizability.

Bottom line

The CogDoc approach allows for ongoing assessment and documentation of resident competence.

Introduction

Developing cognitive skills is a major focus of residency. While medical students graduate with much knowledge, their critical thinking and decision-making skills are underdeveloped.1 Thus, it is important to assess these developing skills over time in all areas of practice to ensure that graduates are competent.

Faculty can make these assessments during precepting. As a resident describes the data collected from patients and the thought process for arriving at a diagnosis and management plan, preceptors assess the resident's cognitive processing and decision-making in situ.

Usually, this assessment of residents' critical thinking and decision-making skills is not documented (other than by cosignature of the patient record). Instead, preceptors' general impressions are captured only in end-of-rotation summations, which are typically not accurate.2,3 By the end of a rotation, raters have difficulty recalling details, leading to simplistic conceptions of performance. Positive feedback is also reported more often, leading to overly generous assessments.2 Moreover, this summative approach may not identify specific weaknesses in resident performance. Decision-making skills are not generic, homogeneous, stable, inborn traits.2–4 Excellent diagnostic and management skills are context-specific, and expertise in 1 area of practice is not easily transferred, especially by novices, to other areas of practice.5–9

Therefore, it is important to document cognitive performance in practice over time to assure that graduates are competent. What is needed is a method of capturing supervisors' expert assessments of resident capability in actual performance.10 These assessments will also document resident achievement of the Accreditation Council for Graduate Medical Education's educational Milestones.11

To capture preceptors' assessments of residents' cognitive performance, we developed the Resident Cognitive Skills Documentation (CogDoc) tool. It consists of 3 dimensions of performance (medical knowledge, understanding, and application), each with 3 levels of performance. A preceptor familiar with the scoring rubric can complete the form during or at the end of each patient presentation in about 10 seconds. In this article, we describe the development of this form, our experience with its use, its reliability, and residents' reactions to its use in resident assessment.

Methods

Assessment Form Development

The CogDoc approach reflects the concepts embodied in the revised Bloom's Taxonomy of Cognitive Domains.12 The 3 dimensions (medical knowledge, understanding, and application) do not map one-to-one onto the domains of Bloom's taxonomy; we chose these labels because users found them intuitive in our pretesting. The medical knowledge dimension encompasses Bloom's “remembering” and “understanding,” and documents a resident's determination of what information needs to be considered when making decisions for the specific patient. The understanding dimension (Bloom's “applying” and “analyzing”) documents how well residents solicit and synthesize information from their patients. The information gathering is based on medical knowledge, and this dimension represents the residents' ability to create a coherent narrative from both medical knowledge and gathered patient information. The application dimension (Bloom's “evaluating” and “creating”) assesses how the resident builds on his or her understanding of the patient and knowledge of medicine to create an appropriate, individualized course of action.

Residents are assessed by using criteria describing the expected competent performance for 1 of 60 outpatient entrustable professional activities (EPAs).13 The activities encompassing each EPA are outlined on a separate linked form available to preceptors and residents (box).

Box. Example of an Entrustable Professional Activity Description

EPA: Managing the Patient With Symptoms of Dyspepsia

Diagnosis/diagnostic activities

Demonstrate knowledge of alarm signs (red flags) and indications for endoscopy

Contrast presenting symptoms of gastroesophageal reflux disease (GERD), peptic ulcer disease, and gastritis

Describe when Helicobacter pylori testing is necessary and describe the advantages and disadvantages of testing methods

Know when screening for Barrett esophagus is required

Treatment activities

Show or know a rational treatment algorithm

Show or know counseling about lifestyle changes to decrease dyspepsia and GERD symptoms

Know the relative costs of treatment

In addition to these 2 categories of activities, EPA descriptions may also include primary prevention activities, secondary prevention and monitoring activities, knowledge and prevention of secondary morbidities, and quality improvement and systems-related activities.

For each dimension, faculty can rate cognitive performance as below, at, or above competence. The form provides criteria for each level of competence, which are standardized (criterion-referenced) and not based on a “typical” resident at the same level of training (norm-referenced). A free-text box on each form labeled “identified learning needs” allows faculty members to provide residents with further feedback.

Study Design

We conducted this study over a 14-month period in a single family medicine office in which 24 to 25 residents per year see their own outpatients. Data were collected for 33 residents.

We introduced a paper version of the CogDoc form in October 2008 after initial pilot testing. In April 2010, we switched to a computerized version. At a faculty development retreat, we explained the background and purpose of the form to our core faculty members and showed them how to complete it, using role-plays of precepting sessions to illustrate the scoring.

We introduced residents to CogDoc in a series of meetings, emphasizing that it is designed to provide ongoing documentation of their performance and is not a series of “tests.” The chief residents championed the acceptance of CogDoc among the residents.

Preceptors complete a CogDoc form for every patient encounter discussed by a resident, either during or after the precepting encounter. The preceptors select a level of demonstrated competence on each of the 3 dimensions (with the option of not completing a dimension). Each encounter is linked to the 1 of 60 outpatient EPAs13 that best reflects the nature of that patient visit. In this way, completed CogDoc scores can be organized by EPA.
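As a rough illustration of the record this workflow produces, a single completed form could be represented as shown below. This is only a sketch: the class and field names (`CogDocForm`, `Level`, `epa`, `learning_needs`) and the example values are hypothetical and are not taken from the actual CogDoc software. Only the 3 dimensions, the 3 levels, the option of leaving a dimension blank, the EPA link, and the free-text learning-needs box come from the description in this article.

```python
from dataclasses import dataclass
from datetime import date
from enum import IntEnum
from typing import Dict, Optional


class Level(IntEnum):
    """The 3 levels on each dimension. Codes 2 and 3 match the figure
    legends; using 1 for "below competent" is an assumption."""
    BELOW_COMPETENT = 1
    COMPETENT = 2
    ABOVE_COMPETENT = 3


@dataclass
class CogDocForm:
    """One completed form for one precepted encounter (hypothetical layout)."""
    resident_id: str
    preceptor_id: str
    encounter_date: date
    epa: str                                    # the 1 of 60 EPAs that best fits the visit
    medical_knowledge: Optional[Level] = None   # None = dimension not completed
    understanding: Optional[Level] = None
    application: Optional[Level] = None
    learning_needs: str = ""                    # free-text "identified learning needs"

    def rated_dimensions(self) -> Dict[str, Level]:
        """Return only the dimensions the preceptor actually scored."""
        scores = {
            "medical_knowledge": self.medical_knowledge,
            "understanding": self.understanding,
            "application": self.application,
        }
        return {name: level for name, level in scores.items() if level is not None}


# Made-up example: a preceptor scores 2 of the 3 dimensions.
form = CogDocForm(
    resident_id="R01",
    preceptor_id="P07",
    encounter_date=date(2010, 5, 3),
    epa="Managing the Patient With Symptoms of Dyspepsia",
    medical_knowledge=Level.COMPETENT,
    application=Level.BELOW_COMPETENT,
)
print(form.rated_dimensions())
```

Keeping each dimension optional mirrors the option of not completing a dimension, and storing the EPA on every record is what would allow scores to be grouped by EPA later.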

We surveyed residents to determine their use of CogDoc reports. We conducted the anonymous survey by using an online survey tool (SurveyMonkey).

The study was approved by the Institutional Review Board of the Cambridge Health Alliance.

Analysis

Data from every CogDoc form completed from the introduction of the computerized form on April 28, 2010, through June 30, 2011, were downloaded to a spreadsheet for analysis. Results were coded by resident being assessed, faculty member assessor, and date.

We used internal consistency for each dimension on the form for individual residents to evaluate the reliability of CogDoc. We selected completed CogDoc forms for third-year residents for their 5 most common diagnoses. We assumed that, for these diagnoses and at this stage of training, each resident would demonstrate consistent performance most of the time and that most of the difference in CogDoc scores would therefore be due to differences among raters. Cronbach α was used to compare CogDoc scores for each resident.
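For readers unfamiliar with the statistic, Cronbach α for k rating columns is k/(k − 1) × (1 − sum of the column variances / variance of the row totals). The sketch below implements that standard formula. How the CogDoc ratings were arranged into rows and columns for this analysis is not specified in this article, so the layout used here (one row per rated subject, one column per rating "item"), the function name `cronbach_alpha`, and the example numbers are assumptions for illustration only.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a complete matrix (rows = subjects, columns = items).

    Uses the sample variance for both the item variances and the
    variance of the row totals.
    """
    if len(rows) < 2:
        raise ValueError("need at least 2 rows")
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least 2 columns")
    if any(len(row) != k for row in rows):
        raise ValueError("all rows must have the same number of columns")

    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("row totals have zero variance; alpha is undefined")

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Made-up ratings on the 1-3 scale (not study data).
example = [
    [2, 2, 3],
    [1, 2, 2],
    [3, 3, 3],
    [2, 3, 3],
]
print(round(cronbach_alpha(example), 2))
```

Values closer to 1 indicate that the columns rank the rows more consistently; the function requires a complete matrix, so forms with a blank dimension would need to be handled before calling it.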

Results

A total of 5021 CogDoc forms were completed by 38 faculty members for 33 residents (during 1.3 academic calendar years). Competency was documented in all 60 EPAs (results reported separately13), though no individual resident had completed competency categories documented for all 60 EPAs during the 14-month period (range for second- and third-year residents, 68%–95%).

A total of 29% (5021 of 17 304) of all patient visits conducted by residents were documented via CogDoc (range per month, 16%–55%). Documentation of visits varied by postgraduate year (PGY) of training: 27% (2419 of 9058) of PGY-3 visits, 30% (2000 of 6639) of PGY-2 visits, and 38% (602 of 1607) of PGY-1 visits.
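The documentation rates can be recomputed directly from the counts reported above. The short check below does so to 1 decimal place; the variable and function names are illustrative only.

```python
# (forms completed, resident visits) by postgraduate year, as reported above.
counts = {
    "PGY-1": (602, 1607),
    "PGY-2": (2000, 6639),
    "PGY-3": (2419, 9058),
}


def percent(part, whole):
    """Percentage rounded to 1 decimal place."""
    return round(100 * part / whole, 1)


rates = {year: percent(forms, visits) for year, (forms, visits) in counts.items()}

# The class-year counts should add up to the overall totals.
total_forms = sum(forms for forms, _ in counts.values())
total_visits = sum(visits for _, visits in counts.values())
overall = percent(total_forms, total_visits)

print(rates)
print(total_forms, total_visits, overall)
```

The class-year counts sum to the overall totals (5021 forms and 17 304 visits), so the overall rate and the per-year rates are drawn from the same set of visits.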

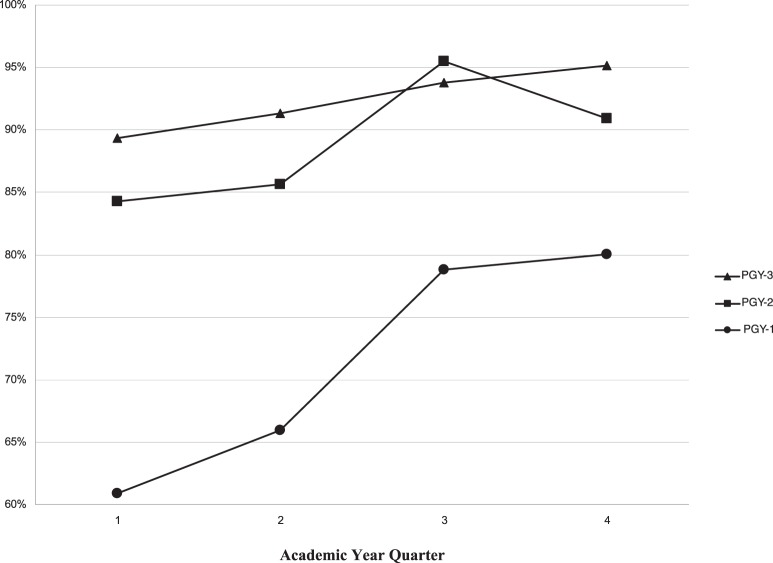

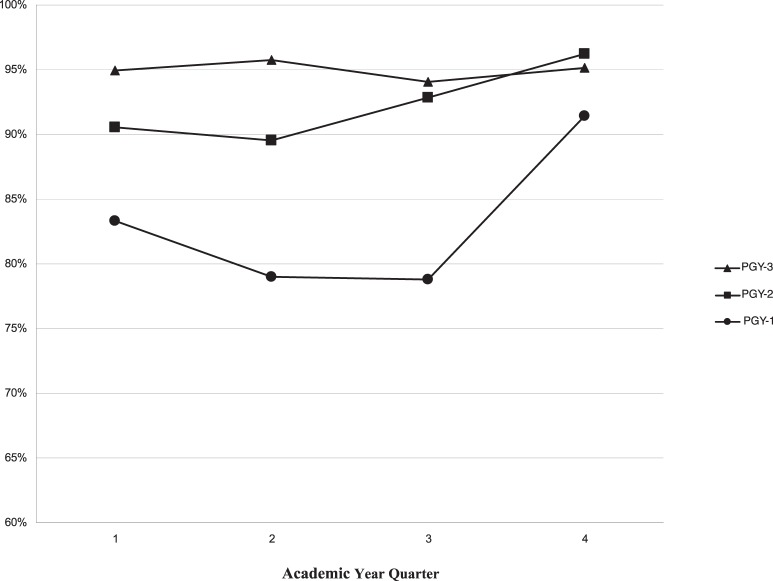

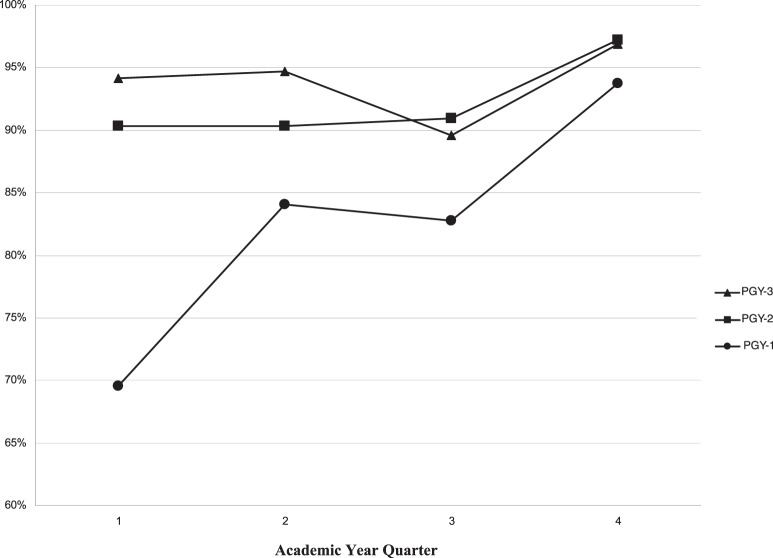

For academic year 2010–2011, we compared results on each dimension by class year and by academic quarter to determine whether documented competence differed among the class years and whether it changed over time (figures 1 through 3). Residents were counted as “competent” on a dimension if their performance on that dimension was documented as “competent” or “above competent.”
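The grouping behind this comparison can be sketched as follows: each rated visit is reduced to competent (rating of 2 or 3) or not, and the percentage is then taken within each class year and quarter. The codes 2 and 3 follow the figure legends; the code 1 for "below competent", the function name `percent_competent`, and the sample ratings are assumptions made up for illustration and are not study data.

```python
from collections import defaultdict


def percent_competent(records):
    """Return {(pgy, quarter): percent of rated visits scored 2 or 3}.

    records: iterable of (pgy, quarter, rating) tuples for one dimension,
    where rating is 1, 2, 3, or None if the dimension was left blank.
    """
    rated = defaultdict(int)
    competent = defaultdict(int)
    for pgy, quarter, rating in records:
        if rating is None:          # dimension not completed on this form
            continue
        rated[(pgy, quarter)] += 1
        if rating >= 2:             # "competent" or "above competent"
            competent[(pgy, quarter)] += 1
    return {key: 100 * competent[key] / rated[key] for key in rated}


# Made-up ratings for one dimension (not study data).
sample = [
    ("PGY-1", "Q1", 1), ("PGY-1", "Q1", 2), ("PGY-1", "Q1", None),
    ("PGY-3", "Q1", 2), ("PGY-3", "Q1", 3),
]
result = percent_competent(sample)
print(result)
```

Blank dimensions are excluded from the denominator in this sketch; whether the published figures handled unscored dimensions the same way is not stated here.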

FIGURE 1. Percentage of Visits Rated Competent (2) or Above Competent (3) in “Medical Knowledge,” by Year of Training

FIGURE 2. Percentage of Visits Rated Competent (2) or Above Competent (3) in “Understanding,” by Year of Training

FIGURE 3. Percentage of Visits Rated Competent (2) or Above Competent (3) in “Application to Patient,” by Year of Training

To assess reliability, we used completed forms for all third-year residents for the 5 most common EPAs for these residents (n = 675): a patient with joint pain; a patient with, or at risk for, cardiovascular disease; a patient with a lesion or a rash; a pregnant patient; and a patient with diabetes. These EPAs accounted for 28% of CogDoc forms completed for these residents. Internal consistency (Cronbach α) was 0.92 for the medical knowledge domain and 0.94 for both understanding and application.

Almost all residents (97%, 32 of 33) responded to the survey asking about their response to and use of the feedback. Most residents (91%, 29 of 32) reported looking at completed CogDoc forms, and 97% (31 of 32) reported looking at the graphic summary. Over half of respondents (59%, 19 of 32) reported reading most of the forms completed for them, while 35% (11 of 32) reported that they usually looked only at forms documenting below competence on 1 or more of the scales. A large majority of respondents (82%, 26 of 32) agreed that the CogDoc assessments usually reflected their performance.

Discussion

Using a computer-based approach to collecting and summarizing the documentation, we developed CogDoc, a tool that was easily implemented and provided reliable formative assessment used by most residents.

Two other approaches to assessing clinical decision making have also been developed. Precept-Assist is a computer evaluation system that documents residents' performance, based on preceptors' summative assessment, for the complete patient session.14 The “Real-Time Evaluation of Doctors' Independence” assessment method tracks resident performance for individual patients during precepting.15 It does not identify specific strengths and weaknesses.

Our assessment approach has limitations. Assessments represent expert judgment based on what a resident reported about the patient visit, not direct observation of the resident with the patient. In addition, since the primary reason for precepting is to assure good patient care and to improve resident knowledge, not to provide an ideal assessment, preceptors may not ask enough questions to completely assess a resident's cognitive skill.

In addition, only 29% of patient visits (fewer than 1 in 3) were documented by using the forms. This rate is about the same as the 30% reported by Reichard.15 Based on informal discussions with preceptors, it is possible that the cases documented are bimodal, representing the best and worst performance observed. Scores on the 3 scales also were often similar on a specific form, which might reflect a “halo” effect, the tendency to rate a person high on all scales because of a global impression. Finally, the CogDoc tool has been studied in only a single population of faculty preceptors and residents. Further research on its implementation and use in other family medicine residency settings is necessary for confidence in its reliability.

Determining the reliability of this method is difficult using standard education metrics.16,17 The ratings are in part dependent on the interaction between the resident and the preceptor; the questions asked by the preceptor will allow greater or lesser demonstration of knowledge, understanding, and application by the resident. As a result, it is not reasonable to assess interrater reliability by using video recording of resident-preceptor discussions or even comparing ratings of 2 preceptors assessing a single resident at the same time.

Our attempt to assess interrater reliability is further confounded by the issue of intercase reliability, a problem that plagues competence assessment.16 The value of this method of assessment is that it is inexpensive, is fast, and captures existing data (eg, the preceptors' assessments of resident decision-making performance). A strength of our approach is that 29% of clinical encounters were assessed, as a major contributor to reliability is the frequency of assessments.18 Finally, assessment focused on EPAs is 1 of several assessment methods that can be combined to support the Milestone determinations required by the Next Accreditation System.19

Footnotes

Allen F. Shaughnessy, PharmD, MMedEd, is Professor of Family Medicine, Tufts University School of Medicine; Katherine T. Chang, BS, is a Medical Student, Tufts University School of Medicine; Jennifer Sparks, MD, is Faculty, Lawrence Family Medicine Residency; Molly Cohen-Osher, MD, is Assistant Professor, Boston University School of Medicine; and Joseph Gravel Jr, MD, is Program Director, Lawrence Family Medicine Residency.

Funding: The authors report no external funding source for this study.

Conflict of Interest: The authors declare they have no competing interests.

The authors would like to thank Niels K. Kjaer, MD, MHPE, for his inspiration to begin this project and for his help translating the Danish competency areas into English.

References

- 1. McGregor CA, Paton C, Thomson C, Chandratilake M, Scott H. Preparing medical students for clinical decision making: a pilot study exploring how students make decisions and the perceived impact of a clinical decision making teaching intervention. Med Teach. 2012;34(7):e508–e517. doi: 10.3109/0142159X.2012.670323.

- 2. Williams RG, Klamen DA, McGaghie WC. Cognitive, social and environmental sources of bias in clinical performance ratings. Teach Learn Med. 2003;15(4):270–292. doi: 10.1207/S15328015TLM1504_11.

- 3. Maxim BR, Dielman TE. Dimensionality, internal consistency and interrater reliability of clinical performance ratings. Med Educ. 1987;21(2):130–137. doi: 10.1111/j.1365-2923.1987.tb00679.x.

- 4. Schuwirth LW, Van Der Vleuten CP. A plea for new psychometric models in educational assessment. Med Educ. 2006;40(4):296–300. doi: 10.1111/j.1365-2929.2006.02405.x.

- 5. van der Vleuten CP, Newble DI. How can we test clinical reasoning? Lancet. 1995;345(8956):1032–1034. doi: 10.1016/s0140-6736(95)90763-7.

- 6. Stillman PL, Swanson DB, Smee S, Stillman AE, Ebert TH, Emmel VS, et al. Assessing clinical skills of residents with standardized patients. Ann Intern Med. 1986;105(5):762–771. doi: 10.7326/0003-4819-105-5-762.

- 7. Stillman P, Swanson D, Regan MB, Philbin MM, Nelson V, Ebert T, et al. Assessment of clinical skills of residents utilizing standardized patients: a follow-up study and recommendations for application. Ann Intern Med. 1991;114(5):393–401. doi: 10.7326/0003-4819-114-5-393.

- 8. Harper AC, Roy WB, Norman GR, Rand CA, Feightner JW. Difficulties in clinical skills evaluation. Med Educ. 1983;17(1):24–27. doi: 10.1111/j.1365-2923.1983.tb01088.x.

- 9. Eva KW, Neville AJ, Norman GR. Exploring the etiology of content specificity: factors influencing analogic transfer and problem solving. Acad Med. 1998;73(suppl 10):1–5. doi: 10.1097/00001888-199810000-00028.

- 10. ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005;39(12):1176–1177. doi: 10.1111/j.1365-2929.2005.02341.x.

- 11. The Family Medicine Milestones Project. 2013. http://acgme.org/acgmeweb/Portals/0/PDFs/Milestones/FamilyMedicineMilestones.pdf. Accessed July 8, 2014.

- 12. Anderson LW, Krathwohl DR, Airasian PW, Cruikshank KA, Mayer RE, Pintrich PR, et al. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives. Abridged ed. New York, NY: Longman; 2001.

- 13. Shaughnessy AF, Sparks J, Cohen-Osher M, Goodell KH, Sawin GL, Gravel J. Entrustable professional activities in family medicine. J Grad Med Educ. 2013;5(1):112–118. doi: 10.4300/JGME-D-12-00034.1.

- 14. DiTomasso RA, Gamble JD, Willard MA. Precept-Assist, a computerized, data-based evaluation system. Fam Med. 1999;31(5):346–352.

- 15. Reichard G. Real-time evaluation of doctors' independence (REDI). 2009. http://www.fmdrl.org/index.cfm?event=c.beginBrowseD&clearSelections=1&criteria=readiness#584. Accessed May 29, 2014.

- 16. Wass V, Van der Vleuten CPM, Shatzer J, Jones R. Assessment of clinical competence. Lancet. 2001;357(9260):945–949. doi: 10.1016/S0140-6736(00)04221-5.

- 17. Scott IA, Phelps G, Brand C. Assessing individual clinical performance: a primer for physicians. Int Med J. 2011;41(2):144–155. doi: 10.1111/j.1445-5994.2010.02225.x.

- 18. Williams RG, Verhulst S, Colliver JA, Dunnington GL. Assuring the reliability of resident performance appraisals: more items or more observations? Surgery. 2005;137(2):141–147. doi: 10.1016/j.surg.2004.06.011.

- 19. Nasca TJ, Philibert I, Brigham T, Flynn TC. The Next GME Accreditation System: rationale and benefits. New Engl J Med. 2012;366(11):1051–1056. doi: 10.1056/NEJMsr1200117.