Abstract

Background

In July 2013, emergency medicine residency programs implemented the Milestone assessment as part of the Next Accreditation System.

Objective

We hypothesized that applying the Milestone framework to real-time feedback in the emergency department (ED) could affect current feedback processes and culture. We describe the development and implementation of a Milestone-based, learner-centered intervention designed to prompt real-time feedback in the ED.

Methods

We developed and implemented the Milestones Passport, a feedback intervention incorporating subcompetencies, in our residency program in July 2013. Our primary outcomes were feasibility, including faculty and staff time and costs, number of documented feedback encounters in the first 2 months of implementation, and user-reported time required to complete the intervention. We also assessed learner and faculty acceptability.

Results

Development and implementation of the Milestones Passport required 10 hours of program coordinator time, 120 hours of software developer time, and 20 hours of faculty time. Twenty-eight residents and 34 faculty members generated 257 Milestones Passport feedback encounters. Most residents and faculty reported that the encounters required fewer than 5 minutes to complete, and 48% (12 of 25) of the residents and 68% (19 of 28) of faculty reported satisfaction with the Milestones Passport intervention. Faculty satisfaction with overall feedback in the ED improved after the intervention (93% versus 54%, P = .003), whereas resident satisfaction with feedback did not change significantly.

Conclusions

The Milestones Passport feedback intervention was feasible and acceptable to users; however, learner satisfaction with the Milestone assessment in the ED was modest.

What was known

Despite the importance of feedback in improving learner performance, learners and educators report dissatisfaction with the quality of feedback they receive and deliver.

What is new

Development and implementation of a Milestone-based, learner-centered intervention designed to prompt real-time feedback in the emergency department.

Limitations

Single-program study limits generalizability.

Bottom line

The Milestones Passport feedback intervention was feasible and acceptable to users.

Editor's Note: The online version of this article contains the survey instrument used in this study.

Introduction

The implementation of the Next Accreditation System (NAS) poses a challenge to graduate medical educators: How should the educational Milestones be incorporated into resident assessment and the feedback process? Milestones, observable developmental steps that describe resident progress from novice to expert, are the framework for assessment in the NAS.1,2 The development of the Emergency Medicine (EM) Milestones has been previously described, and EM residencies submitted the first wave of Milestone data in December 2013.1–8 Although the intent of the Accreditation Council for Graduate Medical Education was that Clinical Competency Committees would use a variety of existing assessment methods to inform consensus decisions regarding learner performance, presentations at the 2013 Council of Emergency Medicine Residency Directors Academic Assembly suggested that many programs had incorporated the Milestone framework into existing shift and rotation assessment instruments.

As residency programs prioritize collecting data to inform Milestone assessment, little is known about how the focus on Milestones will affect another crucial educational process: feedback. Despite the importance of feedback in improving learner performance, learners and educators report dissatisfaction with the quality of feedback they receive and deliver.9–12 It seems intuitive that improved assessment processes will benefit feedback, yet there may be unintended consequences that could threaten effective feedback practices and culture. For example, as programs devote faculty and staff resources to collecting assessment data, preparing for and administering Clinical Competency Committee meetings, and uploading data, an unintended reduction in the emphasis on direct feedback as part of the assessment process might occur. Furthermore, as clinical faculty spend time during shifts or rotations completing assessment forms that attempt to gather Milestone data, they may have less time to spend in direct observation or face-to-face feedback.

To maintain a deliberate focus on improving educational feedback as we implemented the NAS, our residency program explored how the subcompetencies, with their explicit description of developmental steps and common language for performance expectations, might be applied to enhance feedback. The literature suggests that effective feedback is specific, appropriately timed, frequent, based on direct observation, and learner-centered.13–16 Recent research suggests that factors affecting feedback-seeking behavior, learner responsiveness to feedback, and the culture of feedback may be more important to feedback effectiveness than factors related to its delivery.17–20 With those concepts in mind, we developed the Milestones Passport (MP), a feedback intervention that incorporates the educational Milestones into real-time feedback processes and prioritizes improving performance over documenting assessment. The intervention also aimed to change the culture of feedback, ensuring that feedback becomes an expected component of every clinical shift.

This article describes the development and testing of the MP in our EM residency program and reports on initial feasibility and user acceptability. We hypothesized the MP would be feasible to implement and would be accepted by residents and attending physicians.

Methods

Study and Participants

The study site is a 3-year EM residency in an urban, tertiary-care, academic institution that has 33 residents and 34 clinical faculty. The intervention was developed in preparation for the July 2013 implementation of the NAS. Before the intervention, feedback was encouraged but not tracked, and there was no formal feedback system in place.

Intervention

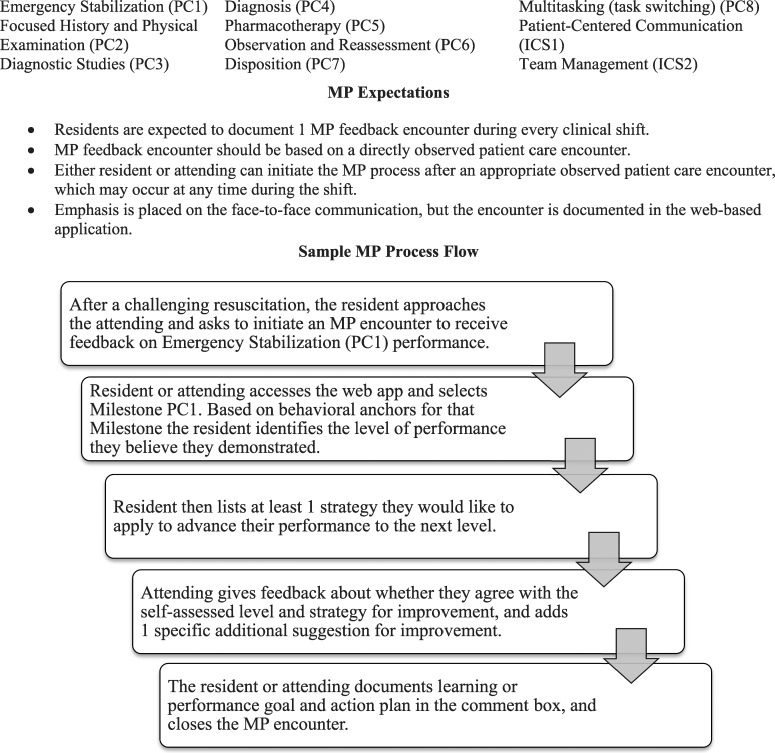

The MP is a web-based “passport” that prompts residents to choose from selected subcompetencies during a clinical shift to initiate feedback related to their own learning goals and their directly observed patient encounters during that shift. The MP is resident-initiated (residents are encouraged to complete an average of 1 MP encounter per shift) and resident-centered (residents have ownership of the MP, select subcompetencies to assess, and self-assess before seeking feedback). The encounter is intended to occur as close as possible to the observed clinical experience and to be completed in 5 or fewer minutes. The figure describes the subcompetencies included, the MP expectations, and the encounter flow. The system was developed using theories of curriculum design and assessment in medical education through the following steps21,22:

FIGURE.

Subcompetencies Included in Milestones Passport (MP)

Abbreviations: PC, patient care; ICS, interpersonal and communication skills.

Experts in EM education participated in a consensus-building session to identify a subset of subcompetencies well-suited to real-time observation in the emergency department (ED). For brevity, the 3 most relevant levels for each subcompetency were included on the MP. Although the anchors varied by subcompetency, in most cases level 1 (novice) Milestones were eliminated because incoming interns had already demonstrated that level of competency, and level 5 (expert) Milestones were eliminated because many were not observable in the ED, and most physicians do not achieve that level of performance until years into independent practice.2

Our department's information technology team developed a virtual passport application in which each documented MP encounter serves as a “stamp” in the passport. This application is housed on a password-protected departmental website and is accessible on any web-connected computer, smartphone, or tablet.

The MP application and process were pilot tested and revised based on resident and faculty input. Faculty and residents were oriented to the MP system by e-mails, discussions at each resident's annual review, and presentations at resident and faculty meetings. The MP was introduced in July and formally implemented during August and September. All 34 faculty and 28 of 33 residents (85%) worked at least 1 shift in the study site ED during the 2-month pilot block.

Acceptability was assessed by a web-based survey of residents and attendings. Survey items were adapted from a previously published survey23 addressing satisfaction with feedback in the ED, and identical items were included on the residency's March 2013 Annual Program Review. Satisfaction was measured on a 5-point scale (very satisfied, somewhat satisfied, neither satisfied nor dissatisfied, somewhat dissatisfied, and very dissatisfied). The top 2 responses were considered “satisfied,” and the top 3 were considered “acceptable.” Resident and faculty satisfaction with feedback in the ED was compared preintervention and postintervention using the χ2 test.

The study was submitted to our Institutional Review Board and deemed exempt from review because it was determined to be program evaluation rather than human subject research.

Results

Feasibility

Staff time requirements included 10 hours of program coordinator time and 120 hours of web developer time to develop, pilot, and implement the web-based MP application. Estimated costs include $4,200 in upfront developer salary support, and $75 per month in ongoing web-based hosting and support. Program leaders spent a total of 20 person-hours developing the MP and educating residents and faculty before implementation. After implementation, ongoing time requirements for data processing and analysis and application maintenance included 4 hours a month each for the program coordinator, web developer, and program director.
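The figures above can be combined into a rough running total. The following is a minimal sketch, not part of the study: the function names are hypothetical, and it assumes that the $75 monthly hosting cost and the 4 hours per month for each of the 3 roles stay flat over time, which the study does not claim.

```python
# Figures reported in the Feasibility paragraph.
UPFRONT_DEVELOPER_COST = 4200.0   # dollars, upfront developer salary support
MONTHLY_HOSTING_COST = 75.0       # dollars per month, hosting and support
DEVELOPMENT_HOURS = 10 + 120 + 20 # coordinator + web developer + program leaders
ONGOING_ROLES = 3                 # coordinator, web developer, program director
ONGOING_HOURS_PER_ROLE = 4        # hours per month, per role


def cumulative_cost(months):
    """Dollars spent after `months` of operation, assuming flat hosting costs."""
    return UPFRONT_DEVELOPER_COST + MONTHLY_HOSTING_COST * months


def ongoing_hours(months):
    """Total maintenance hours across all roles, assuming a flat monthly load."""
    return months * ONGOING_ROLES * ONGOING_HOURS_PER_ROLE


if __name__ == "__main__":
    print("Development hours:", DEVELOPMENT_HOURS)
    print("Cost after 12 months:", cumulative_cost(12))
    print("Ongoing hours over 12 months:", ongoing_hours(12))
```

Under those assumptions, a program considering a similar tool could adjust the constants to its own salary and hosting figures.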

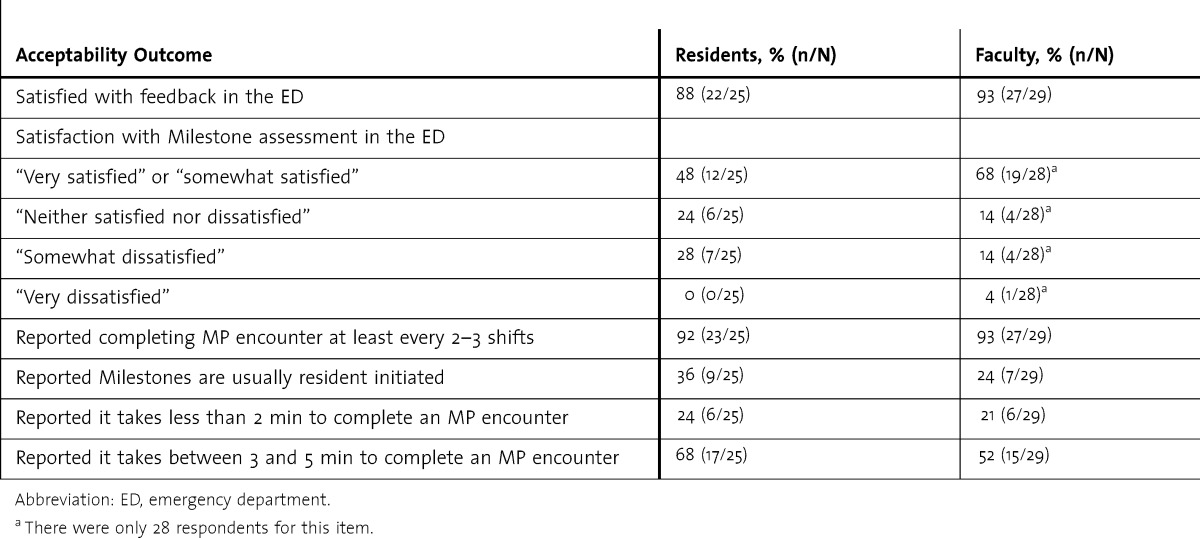

During the 2-month pilot phase, 257 MP encounters were recorded out of an estimated 480 faculty-resident encounters for a participation rate of 54%. All 28 residents who had at least 1 shift scheduled during the pilot period completed at least 1 MP encounter, and 92% (23 of 25) of resident respondents and 72% (21 of 29) of faculty respondents reported it took less than 5 minutes to complete an MP feedback encounter (table).
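The percentages in this paragraph follow directly from the reported counts. As a minimal sketch (the helper name `percent` is hypothetical, and the per-resident average is a derived figure that the study does not report), the arithmetic can be checked as follows:

```python
def percent(numerator, denominator):
    """Return numerator/denominator as a whole-number percentage."""
    return round(100 * numerator / denominator)


# Counts taken from the Feasibility paragraph.
participation_rate = percent(257, 480)   # documented MP encounters / estimated encounters
residents_under_5_min = percent(23, 25)  # resident respondents reporting < 5 minutes
faculty_under_5_min = percent(21, 29)    # faculty respondents reporting < 5 minutes

# Derived, not reported in the study: mean documented encounters per
# participating resident over the 2-month pilot.
encounters_per_resident = round(257 / 28, 1)

if __name__ == "__main__":
    print(participation_rate, residents_under_5_min, faculty_under_5_min)
    print(encounters_per_resident)
```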

TABLE.

Resident and Attending Acceptability Responses for the Milestones Passport (MP)

Acceptability

The response rates to the postintervention survey were 89% (25 of 28) for residents and 85% (29 of 34) for faculty. For the item on satisfaction with Milestone assessments in the ED, 48% (12 of 25) of the residents and 68% (19 of 28) of the faculty reported satisfaction with the intervention, and 24% (6 of 25) of the residents and 14% (4 of 28) of the attendings were neutral. Eighty-eight percent (22 of 25) of the residents reported overall satisfaction with feedback received in the ED, compared with 64% (9 of 14) on the 2013 Annual Program Review survey completed before the intervention, a difference that was not statistically significant (P = .08). Of the attendings, 93% (27 of 29) reported satisfaction with feedback in the ED, compared with 54% (7 of 13) before the intervention (P = .003). Resident and faculty responses to the postintervention survey are shown in the table.
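The preintervention and postintervention comparisons can be illustrated with a short calculation. This is a sketch under stated assumptions, not the authors' analysis code: the Methods specify only a χ2 test, so the choice of an uncorrected Pearson statistic (no continuity correction) is an assumption, and the function name is hypothetical. With the counts reported above, this version yields P values that round to the reported .003 and .08.

```python
import math


def pearson_chi2_2x2(a, b, c, d):
    """Uncorrected Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the chi-square upper-tail probability
    # equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: postintervention, preintervention. Columns: satisfied, not satisfied.
faculty_chi2, faculty_p = pearson_chi2_2x2(27, 2, 7, 6)    # 27 of 29 vs 7 of 13
resident_chi2, resident_p = pearson_chi2_2x2(22, 3, 9, 5)  # 22 of 25 vs 9 of 14

if __name__ == "__main__":
    print(f"Faculty:   chi2 = {faculty_chi2:.2f}, P = {faculty_p:.3f}")
    print(f"Residents: chi2 = {resident_chi2:.2f}, P = {resident_p:.2f}")
```

Several cells in these tables are small, so the result is sensitive to the choice of test.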

Discussion

We found the MP intervention feasible to develop and implement, and it was acceptable to learners and educators. Participants reported that the MP encounter could be completed quickly. Although learners reported only modest satisfaction with Milestone assessments, we found a significant improvement in satisfaction with feedback among the faculty after the intervention.

Approximately half of the residents reported satisfaction with the MP, compared with 88% of residents reporting satisfaction with overall feedback in the ED. Possible explanations for the low resident satisfaction with the MP itself include that residents may still be adapting to a new system, may feel it adds to their workload during busy ED shifts, or may believe the MP forces them to choose from a limited number of assessment options for Milestone encounters. The lower resident satisfaction with the MP may explain why MPs were completed for only half of resident shifts, despite our goal of 1 MP per shift. We were surprised that only 36% of residents and 24% of faculty reported that the MP is usually learner-initiated, suggesting that the tool is not being implemented in the manner we envisioned.

The MP represents an explicit effort to prioritize feedback as part of the NAS assessment process. Our model assumes that pairing the assessment encounters with associated feedback conversations will allow learners and educators to place observed performance within Milestone anchors and, in doing so, facilitate a conversation about both how the learner performed and how he or she can advance to the next level of performance. Encouraging residents to identify subcompetencies needing improvement and to initiate the encounter promotes goal-setting, feedback-seeking behavior, and learner-centered feedback. Focusing on 1 subcompetency per shift encourages frequent, specific conversations. Although our daily feedback results were modest, we did not previously have a mechanism for documenting feedback encounters. The 257 encounters documented during our study are useful data points for our residency and the Clinical Competency Committee, and they suggest that using the MP to promote and document feedback encounters is feasible and has utility. In addition, we expect that as the MP becomes part of our educational culture, the volume of MP encounters collected will increase.

Although the MP application serves to document the encounter, the emphasis of the encounter is on the face-to-face conversation, which, ideally, is bidirectional and focused on performance improvement. Our results suggest that, after the intervention, faculty were significantly more satisfied with the feedback they provided than they had been before. We hypothesize that the increase in faculty satisfaction may relate to increased resident engagement in feedback and improved faculty comfort with providing feedback as a result of the shift from a faculty-driven, unstructured feedback system to a process that provides common expectations and uses anchors to drive the generation of suggestions for improvement.

Perhaps most important, a system that expects a subcompetency-based feedback encounter during every clinical shift can change the feedback culture by (1) defining a feedback encounter as a collaborative effort between the resident and attending to facilitate learner progression to the next level for a specific subcompetency, and (2) making feedback a habit so learners feel safe initiating it, and faculty remember to engage in it.

Limitations of our study include that it focused on intervention feasibility and acceptability and did not assess higher-level outcomes, such as improvement in feedback quality or learner performance. The postintervention survey may have been affected by recall bias or other sources of error inherent in surveys. Feedback encounters were self-reported and may have been underreported or overreported. Preintervention data were obtained through the Annual Program Review survey, which had a low response rate, and our study was not powered to determine whether there was a statistically significant difference in metrics preintervention and postintervention. Our intervention was implemented in a single residency program, which may limit the generalizability of our findings.

Conclusion

The MP is 1 method of incorporating select Accreditation Council for Graduate Medical Education Milestones into real-time feedback systems. The system is feasible to develop and implement and is acceptable to learners and faculty, although only approximately one-half (48%) of the residents reported satisfaction with Milestone assessment in the ED. Future study is needed to explore how real-time Milestone assessment affects feedback practices, efficacy, and learner and faculty acceptance.

Footnotes

All authors are at the Oregon Health & Science University. Lalena M. Yarris, MD, MCR, is Associate Professor and Emergency Medicine Residency Director; David Jones, MD, is an Education Research Fellow; Joshua G. Kornegay, MD, is an Education Research Fellow; and Matthew Hansen, MD, MCR, is Assistant Professor and Assistant Residency Director.

Funding: The authors report no external funding source for this study.

Conflict of Interest: The authors declare they have no competing interests.

The authors would like to thank Cindy Koonz for administrative support in implementing the Milestones Passport, Joel Schramm and Cody Weathers for assistance in developing the web-based application, and the Oregon Health & Science University Department of Emergency Medicine residents and faculty for supporting this educational intervention.

References

- 1. Accreditation Council for Graduate Medical Education. Frequently asked questions about the Next Accreditation System. http://www.acgme.org/acgmeweb/Portals/0/PDFs/NAS/NASFAQs.pdf. Updated July 2013. Accessed January 23, 2014.

- 2. The Emergency Medicine Milestone Project: A joint initiative of the Accreditation Council for Graduate Medical Education and the American Board of Emergency Medicine. http://www.acgme.org/acgmeweb/Portals/0/PDFs/Milestones/EmergencyMedicineMilestones.pdf. Copyright 2012. Accessed April 9, 2014.

- 3. Santen SA, Rademacher N, Heron SL, Khandelwal S, Hauff S, Hopson L. How competent are emergency medicine interns for level 1 milestones: who is responsible. Acad Emerg Med. 2013;20(7):736–739. doi: 10.1111/acem.12162.

- 4. Korte RC, Beeson MS, Russ CM, Carter WA, Reisdorff EJ; Emergency Medicine Milestones Working Group. The emergency medicine milestones: a validation study. Acad Emerg Med. 2013;20(7):730–735. doi: 10.1111/acem.12166.

- 5. Lewiss RE, Pearl M, Nomura JT, Baty G, Bengiamin R, Duprey K, et al. CORD-AEUS: Consensus document for the emergency ultrasound milestone project. Acad Emerg Med. 2013;20(7):740–745. doi: 10.1111/acem.12164.

- 6. Beeson MS, Carter WA, Christopher TA, Heidt JW, Jones JH, Meyer LE, et al. The development of the emergency medicine milestones. Acad Emerg Med. 2013;20(7):724–729. doi: 10.1111/acem.12157.

- 7. Beeson MS, Carter WA, Christopher TA, Heidt JW, Jones JH, Meyer LE, et al. Emergency medicine milestones. J Grad Med Educ. 2013;5(1, suppl 1):5–13. doi: 10.4300/JGME-05-01s1-02.

- 8. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051–1056. doi: 10.1056/NEJMsr1200117.

- 9. Ericsson KA. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med. 2008;15(11):988–994. doi: 10.1111/j.1553-2712.2008.00227.x.

- 10. Yarris LM, Linden JA, Gene Hern H, Lefebvre CL, Nestler DM, Fu R, et al. Attending and resident satisfaction with feedback in the emergency department. Acad Emerg Med. 2009;16(suppl 2):76–81. doi: 10.1111/j.1553-2712.2009.00592.x.

- 11. Jensen AR, Wright AS, Kim S, Horvath KD, Calhoun KE. Educational feedback in the operating room: a gap between resident and faculty perceptions. Am J Surg. 2012;204(2):248–255. doi: 10.1016/j.amjsurg.2011.08.019.

- 12. Rose JS, Waibel BH, Schenarts PJ. Disparity between resident and faculty surgeons' perceptions of preoperative preparation, intraoperative teaching, and postoperative feedback. J Surg Educ. 2011;68(6):459–464. doi: 10.1016/j.jsurg.2011.04.003.

- 13. Ende J. Feedback in clinical medical education. JAMA. 1983;250(6):777–781.

- 14. Shute V. Focus on formative feedback. Rev Educ Res. 2008;78(1):153–189.

- 15. Archer JC. State of the science in health professional education: effective feedback. Med Educ. 2010;44(1):101–108. doi: 10.1111/j.1365-2923.2009.03546.x.

- 16. Bangert-Drowns RL, Kulik CC, Kulik JA, Morgan MT. The instructional effect of feedback in test-like events. Rev Educ Res. 1991;61(2):213–238.

- 17. Sargeant J, Armson H, Chesluk B, Dornan T, Eva K, Holmboe E, et al. The processes and dimensions of informed self-assessment: a conceptual model. Acad Med. 2010;85(7):1212–1220. doi: 10.1097/ACM.0b013e3181d85a4e.

- 18. Crommelinck M, Anseel F. Understanding and encouraging feedback-seeking behaviour: a literature review. Med Educ. 2013;47(3):232–241. doi: 10.1111/medu.12075.

- 19. Eva K, Armson H, Holmboe E, Lockyer J, Loney E, Mann K, et al. Factors influencing responsiveness to feedback: on the interplay between fear, confidence, and reasoning processes. Adv Health Sci Educ Theory Pract. 2012;17(1):15–26. doi: 10.1007/s10459-011-9290-7.

- 20. Bok HG, Teunissen PW, Spruijt A, Fokkema JP, van Beukelen P, Jaarsma DA, et al. Clarifying students' feedback-seeking behavior in clinical clerkships. Med Educ. 2013;47(3):282–291. doi: 10.1111/medu.12054.

- 21. Kern DE, Thomas PA, Howard DM, Bass EB. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: Johns Hopkins University Press; 1998.

- 22. Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003;37(9):830–837. doi: 10.1046/j.1365-2923.2003.01594.x.

- 23. Yarris LM, Fu R, LaMantia J, Linden JA, Hern HG, Lefebvre C, et al. Effect of an educational intervention on faculty and resident satisfaction with real-time feedback in the emergency department. Acad Emerg Med. 2011;18(5):504–512. doi: 10.1111/j.1553-2712.2011.01055.x.