Abstract

Health information technologies have become a central fixture in the mental healthcare landscape, but few frameworks exist to guide their adaptation to novel settings. This paper introduces the Contextualized Technology Adaptation Process (CTAP) and presents data collected during Phase 1 of its application to measurement feedback system development in school mental health. The CTAP is built on models of human-centered design and implementation science and incorporates repeated mixed methods assessments to guide the design of technologies to ensure high compatibility with a destination setting. CTAP phases include: (1) Contextual evaluation, (2) Evaluation of the unadapted technology, (3) Trialing and evaluation of the adapted technology, (4) Refinement and larger-scale implementation, and (5) Sustainment through ongoing evaluation and system revision. Qualitative findings from school-based practitioner focus groups are presented, which provided information for CTAP Phase 1, contextual evaluation, surrounding education sector clinicians’ workflows, types of technologies currently available, and influences on technology use. Discussion focuses on how findings will inform subsequent CTAP phases, as well as their implications for future technology adaptation across content domains and service sectors.

Keywords: health information technology, assessment, implementation, adaptation, school mental health

Over the past two decades, innovative technologies designed to support and improve the delivery of a wide range of healthcare services have rapidly expanded. Many of these innovations can be classified under the umbrella term of health information technology (HIT), which involves the storage, retrieval, sharing, and use of healthcare information for communication and decision-making (U.S. Department of Health and Human Services, Office of the National Coordinator, 2014a). Although undoubtedly influenced by the general growth of digital technologies, HIT has also been specifically facilitated by recent policies that actively promote or mandate their use as well as those that dictate key aspects of their functioning (e.g., Patient Protection and Affordable Care Act of 2010; Health Information Technology for Economic and Clinical Health Act of 2009). These developments have driven a series of new initiatives that support increased information accessibility and sharing capabilities. For instance, the federal “Blue Button” initiative (U.S. Department of Health and Human Services, Office of the National Coordinator, 2014b) allows individuals to download and share their health information to facilitate care across multiple sectors, and the “meaningful use” incentive program for electronic health records (EHRs) certifies EHRs with regard to their ability to support data sharing, coordination of care, transparency, evaluation, and improved outcomes. As healthcare moves toward an era of nearly ubiquitous HIT, an increasing number of technologies are being developed to address a wide range of organizational, service provider, and service recipient needs.

Despite the popularity of these technologies, the recent explosion of HIT tools, initiatives, and policies has not been accompanied by a corresponding increase in widespread HIT adoption in typical service settings (Furukawa et al., 2014; Heeks, 2006), and this is particularly true in mental health (e.g., Kokkonen et al., 2013). Consequently, authors have called for increased research on adherence, barriers, costs, and other factors that affect uptake of HIT (Mohr, Burns, Schueller, Clarke, & Klinkman, 2013; Pringle, Chambers, & Wang, 2010). To help address HIT implementation problems, various models have been proposed, all of which identify key factors that influence the adoption of new technologies with the goal of facilitating uptake and sustained use. The Technology Acceptance Model (TAM) (Davis, 1989; Davis, Bagozzi, & Warshaw, 1989), for instance, has been applied to HIT with studies demonstrating that its components (e.g., attitudes, behavioral intentions, perceived ease of use, perceived usefulness, subjective norms) account for substantial variance in the acceptance and use of HIT across contexts (Holden & Karsh, 2010). Similarly, the USE-IT adoption model (Michel-Verkerke & Spil, 2013) evaluates the likelihood of successful introduction of healthcare information systems. USE-IT articulates four central components (relevance, requirements, resources, and resistance), which are collectively related to the extent to which a technology is adopted.

Although models such as TAM and USE-IT are quite helpful in specifying the processes through which HIT innovations are implemented, as well as barriers to their implementation, these models often assume a static technology. In contrast, most HIT implementation requires some degree of tailoring to account for local priorities, preferences, and workflows. Although technology selection frameworks also exist to assist organizational decision-makers with the identification of appropriate, existing technologies for adoption (Shehabuddeen & Probert, 2004), no clear processes have been proposed to assist in the adaptation of existing HIT to novel settings. Given the rate at which technological innovation is accelerating, new methods for adapting and evaluating new products are necessary if these technologies are to be efficiently implemented (Mohr et al., 2013). There exists a need for structured approaches to technology development that allow functioning technologies to be adapted to address emerging needs or new contexts instead of requiring the creation of a new technology from the “ground up.”

Measurement Feedback Systems

Measurement feedback systems (MFS) are a specific type of clinical decision-support HIT that provide: (1) the ability to manage quantitative data from measures that are administered regularly throughout treatment to collect ongoing information about the process and progress of the intervention; and (2) automated presentation of information to support timely and clinically-useful feedback to mental health providers about their cases (Bickman, 2008). There is growing evidence that MFS, through their abilities to support clinical progress monitoring, can improve service outcomes for youth and adults (Bickman, Kelley, Breda, de Andrade, & Riemer, 2011; Lambert et al., 2003). For these reasons, MFS are increasingly popular and applied across a wide range of clinical service settings. Indeed, an in-progress review of all MFS in mental and behavioral health has identified over 40 such systems (Lyon & Lewis, this issue). Although it is difficult to calculate the full costs involved when developing a new HIT system, such development efforts are typically lengthy and resource-intensive (Shekelle, Morton, & Keeler, 2006). Due to their rapid proliferation and the current existence of multiple quality examples of MFS products, devoting the considerable resources required to develop novel MFS “from the ground up” is likely to be less cost-effective or useful to the field than the selection and adaptation of existing, high-quality systems for new contexts (Lyon, Borntrager, Nakamura, & Higa-McMillan, 2013).

Adapting HIT to User Needs

In adapting existing technologies – such as MFS – to new settings, careful attention should be paid to the degree of fit between the selected HIT and the destination context. The field of implementation science has identified that innovation-setting appropriateness is a key predictor of uptake and sustained use across contexts and innovations (Aarons, Hurlburt, & Horwitz, 2011; Proctor et al., 2011; Rogers, 2003). Appropriateness is defined as “the perceived fit, relevance, or compatibility of the innovation or evidence-based practice for a given practice setting, provider, or consumer; and/or perceived fit of the innovation to address a particular issue or problem” (Proctor et al., 2011, p. 69). Unfortunately, HIT research has recently been identified as inadequately contextual (Glasgow, Phillips, & Sanchez, 2013). In pursuit of appropriate technologies that satisfy the needs of local users, multiple researchers have suggested that principles and practices drawn from the growing field of user-centered design (UCD) may be particularly relevant to HIT development and adaptation, given its emphasis on incorporating the perspectives of end users into all phases of the product development sequence (Bickman, Kelley, & Athay, 2012; Mohr et al., 2013). UCD is an approach to product development that grounds the process in information about the people who will ultimately use the product (Courage & Baxter, 2005; Norman & Draper, 1986). UCD is deeply ingrained in the contemporary discipline of human-computer interaction and the concepts of human centered design, user experience, experience design, and meta-design, among others. In particular, UCD utilizes methods of contextual inquiry, which include documentation of user workflows and behaviors for the purposes of formative evaluation to guide technology design or redesign, thus enhancing appropriateness.

The Contextualized Technology Adaptation Process (CTAP)

In light of accelerating technology development, inadequate HIT implementation in typical service contexts, and variable HIT appropriateness across settings, new approaches are necessary to ensure that existing, high-quality healthcare technologies can be efficiently adapted for use in new environments. We therefore propose a preliminary Contextualized Technology Adaptation Process (CTAP), which integrates existing UCD processes for interactive system design (e.g., International Standards Organization, 2010) and components of leading implementation science models for innovation adoption and sustainment (e.g., Aarons et al., 2012; Aarons et al., 2011; Chambers et al., 2013) with the goal of producing locally relevant adaptations of existing HIT products. The CTAP was originally developed to inform the in-process adaptation of a MFS to a novel context (see Current Aims below) and may be refined over time as that initiative progresses or as it is used to guide HIT redesign across other projects and settings. Although the CTAP is expected to facilitate the successful implementation of HIT, it is not intended to be an implementation framework, more than 60 of which currently exist (Tabak, Khoong, Chambers, & Brownson, 2013). Instead, CTAP is conceptualized as a technology adaptation framework, given that its primary emphasis is on producing a revised, contextually appropriate technology rather than ensuring the continued use of that technology. Nevertheless, CTAP is heavily influenced by, and designed to be compatible with, existing implementation frameworks.

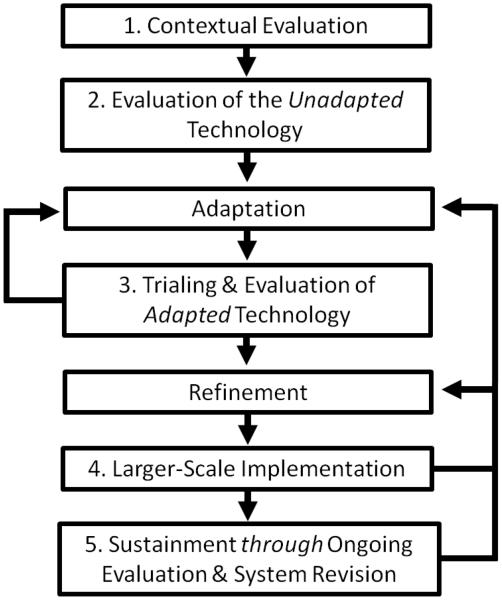

Consistent with both implementation science and UCD recommendations (e.g., Vredenburg, Isensee, & Righi, 2001; Palinkas et al., 2011), the CTAP incorporates repeated, mixed qualitative and quantitative assessments to guide the redesign of HIT, ensure high compatibility with a destination setting, and contribute to systematic, “evidence-based IT design” (Butler, Haselkorn, Bahrami, & Schroder, 2011). When considering the sustainment of innovations in new contexts, Chambers, Glasgow, and Stange (2013) articulated the importance of continually refining and improving interventions to optimize intervention-setting fit. Recent work in HIT has also argued for an iterative approach (Glasgow et al., 2014). The CTAP is similarly iterative and, drawing from contemporary approaches within UCD, emphasizes direct user input to guide its ongoing HIT adaptation and improvement across five phases, each of which carries its own information needs. CTAP phases include: (1) Evaluation of the destination context, including existing technologies, workflows, and relevant clinical practices or content areas; (2) Evaluation of the unadapted technology through user interactions and expert input; (3) Trialing and usability evaluation of the adapted technology; (4) Larger-scale implementation and refinement of the adapted technology; and (5) Sustainment through ongoing evaluation and system revision. Figure 1 displays these phases as well as recursive arrows to represent key decision points and opportunities to return to previous phases when indicated.

Figure 1.

Contextualized Technology Adaptation Process (CTAP)
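The phase sequence and its decision points can also be expressed as a small state machine. The Python sketch below is illustrative only. The phase names follow the text, but the `next_phase` function and the rule that an unmet decision point returns the team exactly one phase back are assumptions; the framework states only that earlier phases may be revisited when indicated.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The five CTAP phases, numbered as in the text."""
    CONTEXTUAL_EVALUATION = 1
    UNADAPTED_TECHNOLOGY_EVALUATION = 2
    TRIALING_AND_USABILITY_EVALUATION = 3
    LARGER_SCALE_IMPLEMENTATION = 4
    SUSTAINMENT = 5


def next_phase(current: Phase, ready_to_advance: bool) -> Phase:
    """Return the phase that follows a decision point.

    Assumption (not from the source): when the team is not ready to
    advance, it steps back exactly one phase. Sustainment is treated as
    open-ended, so advancing from Phase 5 stays in Phase 5.
    """
    if ready_to_advance:
        return Phase(min(current + 1, Phase.SUSTAINMENT))
    return Phase(max(current - 1, Phase.CONTEXTUAL_EVALUATION))
```

A project-specific version could replace the single Boolean with whatever evidence the adaptation team uses at each decision point.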

Based on natural differences among technologies (e.g., complexity, intended frequency of use), contexts (e.g., user types and experiences, organizational policies), and resources (e.g., time, money to devote to programming), we contend that there is no single correct way to satisfy the information needs detailed for each CTAP phase. Indeed, product design or redesign timelines are often quite short, necessitating flexibility and various tradeoffs at each phase. Although extensive information gathering and system revision across all CTAP phases could easily take five or more years and millions of dollars to complete, more rapid or streamlined approaches may be sufficient to effectively inform system revision. For instance, a considerable amount has been written in the UCD literature surrounding cost-effective or “good enough” methods for completing user testing (e.g., Krug, 2014) and the utility of small user samples to effectively identify and correct design problems (e.g., Lewis, 1994; Turner et al., 2006). While there are likely to be scenarios where extensive resources are necessary, the anticipated impact of many of the methods discussed below comes from deliberate consideration of important inputs from the very early stages of the technology adaptation process, thus allowing for changes to be made at a time when correcting design problems is substantially more cost-effective. 
Although projects will invariably differ based on immediate needs and resources, information gathering across all phases should generally be scoped in accordance with the intended breadth of the specific technology roll-out (e.g., single clinic, larger organization, entire service system) and clearly articulated research questions about users (e.g., What information sources do users rely on for decision making?; Where do users get “stuck,” or spend unnecessary time, when completing tasks?), settings (e.g., What organizational policy or resource constraints must be accommodated?), or the technology itself (e.g., Is the HIT solution differentially effective for different user types?; Among multiple design options, which is most intuitive, appealing, and consistent with user expectations?; Are users able to locate and use key features efficiently?). Below, we briefly describe each CTAP phase with explicit attention to the range of methods through which investigators can satisfy its phase-specific information needs, after which we present example data derived from the first phase (contextual evaluation) of a CTAP-informed MFS adaptation in a local school mental health initiative.

Contextual evaluation (Phase 1)

As indicated earlier, the fields of UCD and implementation science both place high importance on the context into which innovations will be placed (Aarons et al., 2011; Damschroder et al., 2009; Glasgow et al., 2014; Holtzblatt, Wendell, & Wood, 2004). Although destination contexts represent complex social systems with a myriad of factors that may influence the appropriateness of HIT, CTAP articulates three key contextual components believed to be most relevant to the adaptation of an existing technology for mental health service delivery: (1) clinical tasks and workflows, (2) existing technological resources, and (3) the specific clinical content domain supported by the technology.

Understanding users’ clinical workflows and translating them into a well-designed HIT is vital to the success of the technology and the adequacy of clinical service delivery (Rausch & Jackson, 2007). We define clinical workflows as the sequential events that occur as clinical service providers perform any aspect of their jobs including, but not limited to, clinician-client interactions. Phase 1 of the CTAP seeks to align technology system redesign with these workflows by first documenting them and then considering their fit with different system capabilities. In addition, it evaluates existing technologies currently in use in the destination context (e.g., EHRs, other data systems) with particular attention to why clinicians have chosen to use those tools and whether there may be opportunities for interference or redundancies across systems. Phase 1 information gathering also includes an extensive evaluation of the types of technologies users currently employ when completing job tasks (e.g., text messaging or social media for communications related to service delivery), as well as their perceptions about the positive and negative attributes of those technologies. Evaluation of this “technology landscape” within the destination context serves to ensure that any overlap or redundancy between existing technologies and the HIT selected for redesign is identified and can be dealt with directly. Finally, the current state of practices that relate to the content area that the technology will ultimately support should be evaluated carefully (e.g., identifying current psychiatric prescribing practices prior to redesigning a prescription decision support tool). Such evaluation provides essential information about the ways and extent to which providers already engage in specific target behaviors (e.g., clinical practices) and identifies barriers that can be fed into the redesign process to ensure that the adapted technology mitigates them to the extent possible.

Evaluations of all three types of Phase 1 CTAP content are most likely to be qualitative in nature, but can also incorporate targeted quantitative data collection (e.g., the frequency with which clinicians engage in particular content practices). Qualitative methods may include focus groups, individual interviews, card-sort tasks, participant observation, or self-report surveys among other approaches. The use of formal task analysis (Diaper & Stanton, 2003; Hackos & Redish, 1998) to break down workflows and job responsibilities into discrete tasks and subtasks may also be useful at this stage. For instance, clinical charting can be subdivided into accessing the computer/EHR (e.g., logging in), consulting other information sources (e.g., personal notes, previous EHR notes), recalling session events, writing the note, reviewing the note, and then signing to indicate its readiness for a supervisor’s review. In a particularly sophisticated example of workflow modeling that makes use of task analysis, Butler and colleagues (2011) presented the Modeling & Analysis Toolsuite for Healthcare (MATH), which consists of a methodology and software tools for modeling clinical workflows and integrating them with system information flows. The tools allow each task within a workflow to be captured with regard to its information needs, information sources, and information outputs. Although the assessment of mental health service delivery workflows is inherently difficult given the complexities of human interactions and the nature of psychosocial interventions, key workflow information may also be derived from querying staff about their activities and behaviors, reasons for those behaviors, and concerns about current procedures (Rausch & Jackson, 2007). Such methods may also be used effectively to gather information about current technologies and the specific clinical content domain, as indicated above.
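To make the task analysis example concrete, the clinical charting workflow described above can be recorded as a simple hierarchy of tasks and subtasks. The following Python sketch is a minimal illustration. The `Task` class and its fields are hypothetical and do not represent the MATH toolsuite's data model; only the subtask names and information sources are taken from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One node in a hierarchical task analysis (hypothetical structure)."""
    name: str
    information_sources: list[str] = field(default_factory=list)
    subtasks: list["Task"] = field(default_factory=list)

    def leaves(self) -> list["Task"]:
        """Return the lowest-level tasks in workflow order."""
        if not self.subtasks:
            return [self]
        result = []
        for sub in self.subtasks:
            result.extend(sub.leaves())
        return result


# Clinical charting, subdivided as in the example in the text.
charting = Task(
    name="Clinical charting",
    subtasks=[
        Task("Access the computer/EHR (e.g., log in)"),
        Task("Consult other information sources",
             information_sources=["personal notes", "previous EHR notes"]),
        Task("Recall session events"),
        Task("Write the note"),
        Task("Review the note"),
        Task("Sign the note for supervisor review"),
    ],
)

for step, task in enumerate(charting.leaves(), start=1):
    sources = ", ".join(task.information_sources) or "none recorded"
    print(f"{step}. {task.name} [sources: {sources}]")
```

Because subtasks are themselves `Task` objects, any step can be broken down further as workflow documentation becomes more detailed.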

Quantitative data collected during Phase 1 may include structured tools to assess practitioner attitudes or behaviors as they relate to specific clinical practices or digital technologies. For instance, a project designed to adapt a technology to support clinician assessment practices may administer the Current Assessment Practice Evaluation (Lyon, Dorsey, Pullmann, Silbaugh-Cowdin, & Berliner, 2015) to determine the current state of standardized assessment use in the destination context. If the emphasis is on the adaptation of a computer-assisted therapy product, quantitative assessment tools may include the Computer-Assisted Therapy Attitudes Scale (Becker & Jensen-Doss, 2013) to evaluate therapist comfort with and perceptions about computer-assisted therapies.

Evaluation of the unadapted technology (Phase 2)

Because the goal of the CTAP is technology adaptation, rather than the costly, protracted, and potentially redundant development of a novel HIT system, close evaluation of the original technology is essential. The central objectives of the second CTAP phase are to obtain information about the appropriateness of the unadapted technology for the destination context and identify key aspects of the system that are most in need of redesign. Systems can be defined by both their functionality, the operations they are able to complete (e.g., tracking client outcomes), as well as their presentation, the way in which they communicate to users (e.g., navigation, use of color to communicate meaning) (Rosenbaum, 1989), both of which should be considered at this stage.

The field of UCD emphasizes the incorporation of information sources that represent user needs and, whenever possible, are derived from direct interactions between users and various features of the technology (Tullis & Albert, 2013). Although heuristic analysis – in which experts review a product and identify problems based on a set of established principles (De Jong & Van Der Geest, 2000) – may also be appropriate in Phase 2, methods will ideally involve structured or unstructured opportunities for users to explore the technology in order to identify useful and irrelevant features. Data collection activities in this phase may involve a period of trialing the technology within users’ standard clinical service environments, followed by structured qualitative (e.g., focus groups, interviews) and quantitative feedback. Quantitative feedback may include ratings of system usability (see Phase 3) for benchmarking, or evaluation of implementation constructs related to the characteristics of the technology. Drawing from Diffusion of Innovations Theory (Rogers, 2003), relevant constructs may include the relative advantage, compatibility, complexity, trialability, or observability of the original system. Atkinson (2007) used Diffusion of Innovations to develop a standardized tool for evaluating the characteristics of eHealth technologies as they relate to adoption intentions which, if adapted, could be used with a broader range of technologies as part of a Phase 2 assessment. These kinds of qualitative and quantitative problem discovery studies (Tullis & Albert, 2013), in which users help to evaluate technologies by performing tasks that are relevant to them, rather than being provided with a structured set of pre-defined tasks (as they would be in a traditional usability study; see below), are particularly useful to the CTAP process because they are more likely to maximize appropriateness.

Trialing and usability evaluation of the adapted technology (Phase 3)

Following a synthesis of the information gathered in Phases 1 and 2, the process of adapting the selected HIT can begin. Phase 3 involves both the development of the initial adapted technology and ongoing small-scale user testing throughout that process. Usability, defined as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use” (International Standards Organization, 1998), is a central focus of this phase. If new features are identified for development (e.g., an alternative navigation interface that better suits clinician workflows), formative usability testing may be used to simultaneously test multiple, low-fidelity prototypes of the updated design with small numbers of users to assess their value.

Usability testing typically involves presenting a new system (or system components) to representative users through a series of scenarios and tasks, and gathering detailed information about user perceptions and their ability to interact successfully with the product. For instance, depending on the specific technology and the objectives of testing, users may be asked to perform actions such as entering patient data, retrieving key clinical information (e.g., diagnoses, most recent encounter), or engaging in system-facilitated clinical decision making. A wide variety of usability metrics in the areas of effectiveness (e.g., errors), efficiency (e.g., time), and satisfaction (e.g., preferences) have been identified (Hornbæk, 2006), which can be used in the context of testing. These metrics generally demonstrate low inter-correlations (Hornbæk & Law, 2007), suggesting the need to account for a range of outcomes in a usability study measurement model. Furthermore, although laboratory-based testing may be applicable to the CTAP, the adapted technology should be placed into trialing scenarios that approximate the intended context of use as quickly as possible (e.g., the in vivo settings used to complete Phase 2 data collection). Regardless of the setting(s) in which testing occurs during Phase 3, researchers should also consider making use of the wide variety of usability scales developed over the last few decades. These include the Post-Study System Usability Questionnaire (Lewis, 2002), the Computer System Usability Questionnaire (Lewis, 1995), and the System Usability Scale (SUS; Brooke, 1996), among others. The 10-item SUS is generally considered to be among the most sensitive, robust, and widely used usability scales (Bangor, Kortum, & Miller, 2008; Sauro, 2011; Tullis & Stetson, 2004). The SUS yields a total score ranging from 0-100, with scores >70 indicating an acceptable level of usability.
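The SUS total follows a fixed scoring rule (Brooke, 1996) that is not spelled out above: each odd-numbered item contributes its rating minus 1, each even-numbered item contributes 5 minus its rating, and the sum is multiplied by 2.5. A minimal Python sketch of that calculation follows. The function names are hypothetical, and treating a score of exactly 70 as falling below the acceptability threshold is an assumption based on the ">70" wording.

```python
def sus_score(responses: list[int]) -> float:
    """Compute a SUS total (0-100) from ten ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("The SUS has exactly 10 items.")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("Each rating must be between 1 and 5.")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded.
            total += rating - 1
        else:
            # Even-numbered items are negatively worded, so they are reversed.
            total += 5 - rating
    return total * 2.5


def is_acceptable(score: float, cutoff: float = 70.0) -> bool:
    """Assumption: 'acceptable' means strictly above the cutoff."""
    return score > cutoff


ratings = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(ratings), is_acceptable(sus_score(ratings)))  # 75.0 True
```

In practice, scores from several users would be averaged before comparison with the threshold, since a single respondent's total is a noisy indicator.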

Depending on the scale of problems identified, the technology adaptation team should determine whether the adapted technology is ready for larger-scale implementation (Phase 4; see Figure 1). Multiple sources of information should be used to make this decision, but rules of thumb may be derived from existing data compiled from decades of usability testing. Standardized tools such as the SUS may provide some of the most straightforward inputs into the decision making process about whether to advance to the next CTAP phase. For instance, products that do not meet the cutoff of 70 or higher may require a more substantial redesign prior to transitioning to Phase 4.

Larger-scale implementation and refinement (Phase 4)

Ongoing, everyday use in the destination context allows for evaluation of how system capabilities unfold over time and how they interact with different components of that setting, some of which may not be apparent during more condensed trialing (e.g., how users relate to the system as they become increasingly expert in its use). Phase 4 is therefore focused on larger-scale implementation and summative usability testing, which evaluates how well a full product meets its specified objectives (Rubin & Chisnell, 2008; Zhou, 2007). Information gathering at this stage includes evaluation of user awareness of key features (e.g., summary patient reports in an EHR, patient messaging systems in a mobile health app) as opportunities to make use of these features arise. Interestingly, our anecdotal experience suggests that, even when features have been highlighted during initial system training, user awareness and use of these features are often inconsistent.

Ongoing evaluation in the context of larger-scale implementation allows for more complete evaluation of extended system learnability, or the degree to which an individual is able to achieve a level of proficiency and efficiency with a system over time (Grossman et al., 2009). Learnability may be manifested in a variety of ways including increased speed of task completion (e.g., clicking through a documentation menu) and decreased uncertainty or frustration experienced when navigating a system. During this period, users may also develop workarounds to account for previously undetected design issues (e.g., reverting to paper charts to compensate for problems in EHR documentation capabilities; Flanagan, Saleem, Millitello, Russ, & Doebbeling, 2013). Careful documentation of these workarounds may identify usability issues which can be corrected prior to Phase 5. Furthermore, Phase 4 provides opportunities to track which HIT features are used most and least frequently. If any core components are not being used, these system components can be explored via periodic check-ins and qualitative queries to users and identified as targets for modification or for simplifying the HIT by excluding features that can ‘bloat’ the technology and reduce its ease of use. Ongoing quantitative data collection may also be indicated to determine changes in different usability metrics since initial introduction. Tools such as the SUS may be employed to determine whether perceptions of usability have remained stable; however, it should be noted that SUS scores have been documented to grow more favorable across systems as users grow more familiar with a technology (McLellan, Muddimer, & Peres, 2012).
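Tracking which features are used most and least frequently can be as simple as tallying entries in a system usage log. The Python sketch below is one possible approach. The feature names, the log format (one feature identifier per recorded use), and the `feature_usage` function are all hypothetical and would depend on what a given HIT product actually records.

```python
from collections import Counter


def feature_usage(events: list[str], core_features: set[str]) -> list[tuple[str, int]]:
    """Count uses of each core feature, listed from least to most used.

    Core features that never appear in the log are reported with a count
    of zero so that unused components are not silently omitted.
    """
    counts = Counter(e for e in events if e in core_features)
    return sorted(((f, counts[f]) for f in core_features),
                  key=lambda pair: (pair[1], pair[0]))


# Hypothetical usage log and core feature set for a measurement feedback system.
log = ["measure_entry", "progress_graph", "measure_entry",
       "measure_entry", "progress_graph", "alert_review"]
core = {"measure_entry", "progress_graph", "alert_review", "summary_report"}

report = feature_usage(log, core)
unused = [name for name, n in report if n == 0]
print(report)
print("Unused core features:", unused)
```

Features that surface as unused would then be candidates for the check-ins and qualitative queries described above, rather than being removed on the basis of counts alone.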

Sustainment through continued adaptation and evaluation (Phase 5)

Consistent with recent frameworks for innovation sustainment in healthcare (e.g., Aarons et al., 2011; Aarons et al., 2012; Chambers et al., 2013), the CTAP recognizes that “the only constant is change” in HIT redesign. In their recent presentation of the Dynamic Sustainability Framework, Chambers and colleagues (2013) described the importance of continued learning and problem solving, continued adaptation of innovations with a primary focus on fit between interventions and multi-level contexts, and expectations for ongoing improvement as opposed to diminishing outcomes over time. Among contemporary software packages (e.g., operating systems), frequent (and often automatic) updates are common and essential given the continued evolution of user expectations for faster products, system integration, and better interfaces; advances in underlying technological capabilities (e.g., processing speed and interoperability); and ongoing opportunities for the identification and remediation of system bugs or design flaws. Within the CTAP, updates – whether subtle or substantial – are conducted in the context of continued evaluation.

Multiple methods exist to evaluate continued changes over time, some of which overlap with previous CTAP phases. Subtle changes (e.g., different navigation or user interface options) may be evaluated via live-site metrics (e.g., page views, time spent in different system task pathways) and “A/B” tests in which a control or original design is compared to a novel, alternative design (Tullis & Albert, 2013). If changes are small, users may be selected randomly to see one system or another, allowing for a true experimental design. Even in the absence of multi-group approaches, quantitative and qualitative data collection from users in the form of surveys or interviews can help to evaluate the impact of changes. Additionally, quantitative data-mining techniques may be used during this stage to augment qualitative approaches and determine the severity of system problems. Relevant data-mining techniques may include using large usability datasets to identify association rules, a descriptive technique for finding interesting or useful relationships in usability data (e.g., determining if patients with particular characteristics are likely to be missing key data in an EHR, suggesting problematic usability for those cases), and decision trees, flow-chart systems used to classify tests of specific instances at higher nodes and predict usability problems at descending nodes (e.g., comparing different versions of a mobile mood monitoring app and predicting task non-completion or long task completion times based on its user interface characteristics) (González, Lorés, & Granollers, 2008). Finally, a key assumption of the CTAP is that Phase 5 has no true end point. Although resources devoted to updates may be reduced over time, ongoing involvement by the adaptation team (or their designees) will be necessary to make continued improvements until such point as a HIT product is de-adopted or replaced.
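The A/B comparison described above can be sketched in two steps: random assignment of users to the original or alternative design, followed by a comparison of a usability metric between groups. The Python example below uses a permutation test on task completion times. This is one reasonable analytic choice rather than a method prescribed by the CTAP, and the function names, the seed parameter, and the timing data are all hypothetical.

```python
import random
from statistics import mean


def assign_variant(user_ids, seed=0):
    """Randomly assign each user to design 'A' (original) or 'B' (alternative)."""
    rng = random.Random(seed)
    return {uid: rng.choice("AB") for uid in user_ids}


def permutation_p_value(a, b, n_permutations=2000, seed=0):
    """Two-sided permutation test on the difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffle group labels and recompute the difference in means.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # The add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical task completion times in seconds for each design.
times_a = [52, 48, 55, 60, 47, 58, 51, 49]
times_b = [40, 38, 42, 36, 41, 39, 37, 43]

groups = assign_variant(range(16))
p = permutation_p_value(times_a, times_b)
print(f"Mean A = {mean(times_a)}, mean B = {mean(times_b)}, p = {p:.4f}")
```

A permutation test makes few distributional assumptions, which suits the small samples common in usability work, although any single metric such as time should be interpreted alongside effectiveness and satisfaction measures.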

Current Project Aims

In addition to describing the CTAP framework (above), the primary aim of the current paper is to present data from the application of CTAP Phase 1 to the redesign of a MFS for use by school-based mental health (SBMH) providers. Phase 1 was selected because a description of the activities and results contained within all CTAP phases is beyond the scope of a single paper, because data collection from subsequent phases is still ongoing, and because Phase 1 best exemplifies the contextually grounded approach that is central to the CTAP framework. Furthermore, schools represent the most common service delivery sector for youth (Farmer et al., 2003), but one in which evidence-based practices are used inconsistently (Owens et al., 2014). Recent research suggests that school-based clinicians encounter a number of barriers to the incorporation of routine assessment and monitoring into their practice, which MFS are designed to support (Connors, Arora, Curtis, & Stephan, 2015). In the setting for the current project, Lyon and colleagues (in press) recently explored SBMH practitioners’ use of standardized assessment and progress monitoring (clinical content domain) using qualitative focus groups and found that assessment tool use appeared to be relatively uncommon. When they were used, assessments were applied for the purposes of eliciting information from students, giving feedback, providing validation, triage, informing session/treatment structure, and progress monitoring over time. Various factors were also identified as affecting assessment tool use, including those at the client (e.g., presenting problem, reading level, time required), provider (e.g., knowledge/training in assessment use), and system levels (e.g., culture of use).

Although the studies described above provide a reasonable amount of design-relevant information about the clinical content domain in the destination setting (e.g., how assessments are used, what information sources are most relevant), there is presently very little information available about the remaining two CTAP Phase 1 information categories as they relate to MFS: SBMH provider workflows and the extent to which they utilize digital technologies as a component of their professional roles. To address this gap and inform the redesign of a MFS for use in schools, the current project was intended to answer the following qualitative research questions: (1) How do school-based mental health providers describe their clinical workflows? (2) To what types of technological infrastructure do providers report having access and what reasons do they give for using / not using those technologies?

Method

The current study evaluated the clinical workflows and existing technologies used by school-based providers using qualitative data analysis. All data were collected via focus groups (discussed below). Study procedures were conducted with approval from the local institutional review board and all participants completed standard consent forms.

Participants and Setting

The current project was part of a larger initiative focused on enhancing the services provided by mental health clinicians working in school-based health centers (SBHCs) in middle schools and high schools in a large urban school district in the Pacific Northwest. SBHCs are a well-established model for education sector healthcare delivery with a proven track record for reducing disparities in service accessibility (Gance-Cleveland & Yousey, 2005; Walker, Kerns, Lyon, Bruns, & Cosgrove, 2010). Of 17 invited mental health clinicians working in the district, 15 SBHC providers participated in the study. Most participants had a master’s degree in social work, education, or counseling (one had a PsyD). Participants were 87% female and 87% Caucasian. Most functioned as the sole dedicated mental health provider at their respective schools. Three participants also worked as supervisors within their agencies.

Measurement Feedback System

An existing MFS, the Mental Health Integrated Tracking System (MHITS), was selected for the current project via a collaborative process that involved the local department of public health, community organizations that staffed the SBHCs, and the investigators. MHITS was originally developed to support the delivery of an integrated care model for adults in which depression intervention was delivered in primary care settings. The system was selected on the basis of empirical support for its use in improving mental health services provided in primary care settings similar to SBHCs and proven ability for broad system-wide implementation (Unützer et al., 2012; Unützer et al., 2002; Williams et al., 2004). MHITS is a web-based, HIPAA-compliant caseload management system that contains a number of key capabilities, including the ability to administer standardized instruments for the purposes of screening and routine outcome monitoring; cues to providers based on severity or inadequate progress; structured clinical encounter templates; and permission-based login accessibility for different types of providers, supervisors, or consultants. The MHITS software was developed using WebObjects (Apple Inc.), a Java-based application server framework, with a MySQL relational database (Oracle Corp.) and an Apache web server. The MHITS was originally developed to support a multi-site trial of depression quality improvement in primary care (Unützer et al., 2001), but at the time of the project’s initiation, the technology had already been adapted to support a range of different patient populations, which had produced an infrastructure to facilitate additional adaptations.

Procedures

To collect information about the destination context (CTAP Phase 1) and guide system redesign, clinicians participated in semi-structured focus groups intended to gather data about their workflows/tasks, access to and use of technology in their jobs, as well as perceptions about and use of standardized assessment tools. Information drawn from these focus groups related to their use of standardized tools is summarized elsewhere (Lyon et al., in press). In order to achieve manageable groups of approximately equal size, two focus groups occurred simultaneously. One researcher facilitated each group with a third floating between the two. Three practitioners were unavailable to participate in the in-person focus groups and, instead, responded to the focus group questions (see below) over the phone.

Semi-structured qualitative focus group protocol

Focus groups and make-up interviews followed a standard outline. Questions evaluated in the current study centered on provider workflow (e.g., “Knowing there is no such thing as a typical day, think back over the past month and pick a day that is most representative and walk us through that work day,” “How often do you consult and interface with teachers and other school personnel?”) and their use of technology in their clinical roles (e.g., “Describe any technical infrastructure [e.g., computers, electronic health record] currently involved in your job,” “What worries do you have about incorporating additional technologies into your work?”).

Data Analysis

All focus groups and make-up interviews were digitally audio recorded, transcribed, and coded using qualitative coding software (ATLAS.ti; Friese, 2012). Coding used a conventional content analysis approach, which is designed to identify the contextual meaning of communications (Hsieh & Shannon, 2005). Four trained coders reviewed the transcripts initially and then met to identify potential codes, producing an initial codebook. This codebook was then trialed and revised over subsequent transcript reviews. After a stable set of codes was developed, a consensus process was used in which all four reviewers independently coded (or re-coded) all of the transcripts and met to compare their coding to arrive at consensus judgments through open dialogue (DeSantis & Ugarriza, 2000; Hill, Knox, Thompson, Nutt Williams, & Hess, 2005; Hill, Thompson, & Nutt Williams, 1997). Consensus coding is designed to capture data complexity, avoid errors, reduce groupthink, and circumvent some researcher biases. In the results below, specific code names are indicated in italics.

Results

RQ1: How do School-based Mental Health Providers Describe their Clinical Workflows?

RQ1 investigated the clinical workflows of school-based providers. Participant descriptions during the focus groups yielded codes related to the activities and tasks in which providers engage in their professional roles. In general, participants described a wide range of activities (see Table 1), which needed to be prioritized for consideration during MFS redesign. For instance, workflow activities described included clinician accounts of their engagement in communications with different types of individuals. These included internal communications, which occurred among professionals within the school setting (e.g., teachers, other health providers), and external communications (i.e., professionals or family members outside the school walls). Activities determined to be most relevant to the redesign of the MHITS included various types of clinical service encounters (i.e., individual sessions, leading groups, conflict mediation, crisis management, and check-ins/follow-up meetings) to which the adapted system would need to be directly applicable. Other activities that were determined to be clinically relevant, but which did not involve direct contact with identified students included classroom observations and student outreach. Additionally, clinicians described a variety of non-clinical administrative tasks such as scheduling/pass arrangement (some clinicians scheduled their own meetings while others had their schedules managed by office staff), paperwork and charting, as well as session prep time, many of which required a considerable amount of their time. To a lesser extent, participants also indicated spending time working with other colleagues in the context of educational or clinical team meetings (e.g., individualized education program meetings, clinical supervision) and work on professional committees. 
Relatedly, some providers reported that, despite not being school employees, they were sometimes given school-related duties (such as monitoring the hallways during class transitions), which they generally considered to be outside of their professional roles.

Table 1.

SBHC Clinician Workflow Codes

| Code | Brief Description |
|---|---|
| Activity | Descriptions of the common activities/responsibilities of SBHC counselors. |
| Clinical Service Encounters | |
| Individual Sessions | Working with youth individually, usually in their office. |
| Leading Groups | Leading or organizing groups for students that relate to certain mental health issues with a prevention or intervention focus. |
| Conflict Mediation | Providing mediation surrounding student interpersonal peer conflicts. |
| Crisis Management | Responding to student or school-level crises. |
| Check-in/Follow-up | Interactions with students that fall outside of regular service provision. |
| Communications | |
| Internal Communication | Communications with other school or SBHC employees/staff (e.g., phone calls, consultation, internal referrals). |
| External Communication | Communications between school-based providers and external individuals (e.g., providers from other agencies, parents of clients). |
| Setting Engagement | |
| Classroom Observation | Conducting classroom observations of identified students. |
| Student Outreach | Participating in outreach efforts to make students aware of the services available in SBHCs (e.g., visits to classrooms). |
| Administrative Tasks | |
| Scheduling/Passes | Spending time scheduling students themselves and/or writing, delivering, coordinating, or following up on passes. |
| Paperwork | Time spent writing notes, “charting,” or completing paperwork. |
| Prep Time | Time spent preparing for group or individual sessions or other responsibilities. |
| Group Meetings | |
| Team Meetings | Attending / participating in team meetings (e.g., IEP meetings, supervision). |
| Committee | Spends time working on professional committees. |
| Other | |
| School-Related Duties | Performing, or feeling pressure to perform, duties related to running the school, which fall outside of their duties as a mental health professional. |
| Activity Location | Describes the location of any of their activities or, more generally, where clinicians tend to perform most of their duties. |
| Time | Description of the time involved in an activity, the time of day something happens, how things change over the course of the year, etc. |
| Session Length | Lengths of sessions with youth. |
| Between Sessions | Length of time between sessions/meetings with students. |
| After School | Work activities (e.g., seeing kids) that occur after the end of the school day. |

To facilitate an in-depth understanding of how practitioners’ duties are distributed in space and time, a code for activity locations was used as well as a series of codes related to the placement of activities/tasks in time (i.e., the time of day they occurred or the amount of time required to complete them). With the exception of activities such as classroom observations or student outreach activities, coding generally indicated that clinicians completed the overwhelming majority of their work tasks in their dedicated offices. Time codes indicated that individual sessions were the most common clinical service format and that session length varied widely, from 10-20 minutes to 50-60 minutes (“Sometimes I have 45 minutes for the kid, but by the time they actually show up in my office I get 15 minutes left”), with only short breaks between sessions. Clinicians also reported having between 10 and 25 minutes between sessions to chart, but noted that this time was often usurped by other responsibilities (e.g., “I always have the intention of charting, but it usually gets taken up by either security or teacher calling or some other issue or trying to hook up outside things for kids”). Although some clinicians reported occasionally scheduling clients after school, this practice appeared to occur relatively infrequently.

RQ2: What Types of Technologies do Providers Report are Useful to Them in Their Professional Roles and What Reasons do They Give for Using or Not Using Those Technologies?

Clinicians described a wide range of existing technology infrastructure that they considered useful and relevant to their professional roles, although their access to these technologies was variable. Infrastructure discussed included tools for internal or external communications (e.g., email, texting, social media), basic computing infrastructure (e.g., personal computers [PCs] – although some had only had them for one year, all providers reported having PCs in their offices; Microsoft Office), existing clinical tools (e.g., EHRs), and educational information systems (e.g., used by district personnel to track student attendance, academic performance, etc.). Although no clinicians reported using technologies that fulfilled the assessment and progress monitoring functions supported by a MFS, providers who had EHRs discussed the potential for duplicative data entry if they are required to document components of their clinical encounters in those systems and in a MFS.

Reported use of the types of technologies indicated above was also variable, with SBMH clinicians indicating a wide range of influences on use at the client, provider, and system levels (Table 2). At the client level, all discussion was focused on provider perceptions of the role of client responses to their use of technology in session. Comments in this category suggested that clinicians perceived client response to be both a potential barrier and facilitator to their own technology use. Although some comments were based on direct experience (e.g., one clinician had used biofeedback software with clients and found that it was met with a high degree of enthusiasm), others were more hypothetical and rooted in clinicians’ beliefs that incorporating technology could negatively impact rapport (“in some ways it kind of introduces another barrier when you’re [on] a machine instead of trying to establish engagement and relationship”).

Table 2.

Technology Codes

| Code | Brief Description |
|---|---|
| Infrastructure | Existing technology infrastructure found to be useful to school mental health providers. |
| Tech Use | Reported influences on whether or not providers use technologies in their jobs or the extent to which they are able to use them successfully. |
| Client | |
| Response | Client response to the use of technology in some component of their care. |
| Provider | |
| Time | Limited time or energy to incorporate new technologies or attend trainings for new technologies. |
| Prescriptive | Clinicians referencing situations in which technology mandates a certain course of action. |
| Effective | Technology needs to “work” or support providers in the way intended. |
| Personal | Personal orientation toward (or against) technology. |
| Practice | Use of technology is consistent or inconsistent with the provider’s practice and training. |
| Enhance | Expressed belief that using technologies helps to enhance practice. |
| System | |
| Capacity | Current technology resources facilitate or inhibit clinicians’ abilities to run new software effectively (e.g., network speed). |
| Changes | Changes in the system are frequent, inhibiting providers’ abilities to develop and maintain proficiency. |
| Policy | Organizational policies prohibiting or mandating the use of a technology or pieces of a technology. |
| Cost | Cost of acquiring technologies. |

Beyond the impact of technology on clients, most discussion was focused on factors at the provider level, with respondents revealing that they often lacked adequate time to learn to use a new technology appropriately. Clinicians also expressed concern that some HIT would be overly prescriptive surrounding their clinical practice, thus decreasing their autonomy, and noted that technologies need to execute their functions effectively if clinicians are to integrate them into their practice. Less immediately relevant to MFS redesign, but nonetheless important, many clinicians expressed personal orientations toward or against technologies in general (e.g., “I'm not so computer literate”), or indicated that they perceived their individual approach to clinical practice to be compatible (or incompatible) with the incorporation of HIT.

At the larger system level, four codes were identified which influenced technology use. These included comments about whether existing technology resources provided sufficient capacity to run modern software (e.g., concerns about being repeatedly “kicked out” of a central server), irritation with frequent changes or updates to technology products that decreased their ability to maintain mastery, policies that interfered with clinician use of technologies that they believed would be valuable (e.g., educational technologies), and the costs associated with the purchase of new tools. Policies, in particular, were emphasized in the discussion because clinicians were not agents of the district and, as such, were not allowed access to the existing district educational data system under FERPA. That system contained various pieces of information (e.g., attendance, homework completion) that clinicians believed to be highly relevant to youth functioning and the SBMH services they provide.

Discussion

As described previously, Phase 1 of the CTAP is principally focused on completing a detailed assessment of a destination context with the goal of generating actionable information about workflows, technology infrastructure, and the specific clinical content domain(s) most relevant to the identified HIT. Although actual redesign and testing of the technology does not begin until Phase 3 (trialing and usability evaluation), Phase 1 provides a series of key inputs that guide information gathering in all subsequent phases and help to form the initial technology adaptation agenda. As an example of the type of information gathering that might occur during Phase 1, the current study considered two domains of these inputs (workflows and technology infrastructures) to inform the eventual redesign of a MFS for use in SBMH, the MHITS. This assessment documented a collection of frequently occurring clinical work tasks that a MFS seems best able to support, including a variety of different types of service encounters (e.g., individual, group), situated in time and space. Furthermore, existing technology resources in the context were detailed and a number of factors were identified at the level of the client, provider, and system that providers described as related to the likelihood that they would use a technology.

Importantly, some workflow- and technology-related findings from the current project were deemphasized for the redesign process. For instance, at the provider level, personal opinions or orientations held about technology in general (e.g., personal and practice codes) were determined to be less fruitful as a primary focus for the redesign effort, given that changing practitioner attitudes directly is likely to require a more comprehensive effort than was possible within the scope of the current project. Nevertheless, based on existing research (Holden & Karsh, 2010), it is anticipated that interactions with a well-designed product that addresses the more specific technological difficulties listed may ultimately improve general user attitudes about the utility of HIT. Instead, efforts were made to address more concrete concerns about ways that technologies were compelling to users or failed to meet their expectations. Below, we provide a synthesis of the resulting codes determined to be most relevant to MFS redesign in the context of their implications for Phase 2 data collection and the subsequent, initial adaptation completed in Phase 3.

Implications for Phase 2 Data Collection

The CTAP is intended to be iterative and, as such, formative information gathered during Phase 1 should directly inform information gathering during Phase 2 (evaluation of the unadapted technology). In general, over the course of the five CTAP phases, information gathering should become increasingly specific and targeted to the needs of the intended users. Given comments about limited practitioner time and energy to master a new technology, Phase 2 in the current project can pay careful attention to the learnability of the unadapted MHITS system and look for ways to reduce complexity and cognitive load (e.g., removing unnecessary screens or tabs; ensuring that the navigation is sufficiently intuitive). For example, MHITS includes a central dashboard view that displays key information for each client (e.g., name, enrollment date, date of initial clinical assessment, number of sessions attended, weeks in treatment, last assessment, most recent standardized assessment tool scores, reminder or warning flags, etc.). Given that best practices in dashboard design suggest that (1) dashboard information should be limited to only the most essential data elements needed to accomplish its purpose (due to limited on-screen real estate) and (2) dashboards must be customized to the requirements of a particular person, group, or function (Few, 2006), Phase 2 may identify dashboard data elements that are less relevant to school-based service delivery and may be removed to reduce complexity. In this way, Phase 2 will also provide an indication of the level of resources (e.g., programming hours) that might be required to enhance the extent to which the interface and navigation of the revised system are engaging and easy to use. Similarly, Phase 2 can help to determine how many of the general concerns that were identified in Phase 1 are likely to become actual concrete concerns in the context of the MHITS redesign. 
For instance, this includes careful evaluation of how well the unadapted system can run on the existing SBHC computer networks and workstations across sites. Because each SBHC is staffed by one of a number of distinct community-based organizations – each of which has its own infrastructure and resources – different capacity constraints may emerge across clinics. If so, the final adapted system could either be designed with explicit consideration of the needs of the lower capacity sites by limiting the system requirements (e.g., web browser or operating system versions, available RAM) wherever possible (more likely) or a plan can be made to improve the capacity or infrastructure of the sites with lower levels of resources (e.g., purchasing new workstations) (less likely).

The current results also suggest a need to explicitly evaluate client responses to the use of MFS technologies in session. Although increasing research has targeted client perspectives on routine assessment and progress monitoring in youth mental health (e.g., Wolpert, Curtis-Tyler, & Edbrooke-Childs, in press), no research has evaluated how specific technologies designed to support those processes are viewed by service recipients. Since no providers had previously used a system that had the type of assessment and monitoring capabilities contained in the selected MFS (e.g., supporting administration of multiple standardized assessment tools over time to be tracked, flagged, and displayed on a dashboard; facilitating communications with additional internal and external service providers), the relevance to the current project of their comments describing client responses to the use of technology in session is unknown. Many providers reported concerns about using technology in session in the abstract, having never provided technology-supported services themselves. Given that clinicians frequently list potential negative client reactions as a key barrier to the use of new clinical innovations in practice (Cook, Biyanova, & Coyne, 2009), directly evaluating this assumption via data collection in Phase 2 and beyond (e.g., by explicitly asking providers about their clients’ reactions to their use of technology following the trial, or collecting data directly from clients about their perceptions) is likely to provide important insights to the field in general and to the current MFS redesign process specifically.

Lastly, the focus group transcripts suggested that EHRs, which were in use by a subset of the participants at the time data collection occurred, may demonstrate some degree of overlap with the unadapted version of the selected MFS technology given their focus on capturing information about service recipients (e.g., demographics, alcohol/drug history, mental health history, family/social background, medications, clinical formulation, diagnoses) and behavioral health encounters. Nevertheless, following Phase 1, the degree of overlap remained largely unknown since the providers did not have direct experience using both systems. In Phase 2, providers acquire this experience and will be able to more directly evaluate which specific features of the unadapted system may be redundant with providers’ EHRs in order to drive decisions about possible elimination, revision, or integration. For example, although MHITS contains a variety of functions unlikely to appear in contemporary EHRs – such as dynamic caseload lists (i.e., sortable lists displaying key aspects of treatment progress for all patients on a caseload), graphing multiple standardized measures over time, and the ability to track the success of referrals to additional providers – other functions (e.g., capturing different types of protected health information, detailed information about clinical encounters) may be more or less relevant to providers depending on their organization’s EHR status. Feedback during Phase 2 may therefore differ depending on whether or not users have also implemented an EHR. Practitioners from agencies that have not yet adopted an EHR may request greater functionality (e.g., articulating detailed treatment plans or capturing specific information about the services delivered during session), which could create additional opportunities for redundancies for practitioners from other agencies. 
If such conflicts are identified, these competing needs may be balanced by allowing some users to toggle certain capabilities on or off so that they can continue to use their existing EHR infrastructure, or by developing data or text export capabilities to support system interoperability.

Implications for System Redesign in Phase 3 and Beyond

Phase 3 of the CTAP involves the development and trialing of the first working version of the adapted technology. Although any design directions identified based on Phase 1 or 2 data collection should be formally tested and reevaluated in Phase 3, findings from the current study did provide preliminary insights into some of the characteristics of the MFS adaptation. First, although the unadapted MHITS was optimized for use with a web browser running on a standard computer operating system platform, the adaptation team originally considered offering an adjunctive mobile technology solution as well (e.g., practitioner access on a tablet computer) to make the system more accessible/portable and facilitate the sharing of assessment results with youth. However, the Phase 1 findings that providers all had PCs in their offices and completed the vast majority of their professional tasks in that environment suggest that prioritizing the creation of a version of the MFS that is optimized to run on mobile platforms may be unnecessary. Second, our current coding was able to identify multiple types of clinical service encounters in which providers commonly engaged. These included standard individual sessions with students on their caseloads, group sessions, short-term crisis management or conflict mediation, and follow-up over time with students who are not receiving routine services. To demonstrate a good fit with this component of the SBMH providers’ clinical workflows, the adapted MFS will need to accommodate these activities. For instance, an encounter template could be created for the system that allows for this full range of clinical services. 
In addition, given the tremendous variability among the number and timing of mental health sessions received by youth in schools (Walker et al., 2010), the system may also require the ability to track students over time in a way that allows them to “flow” in and out of a practitioner’s active caseload without being completely discharged. This could be addressed by a function that allows for some ongoing monitoring of youth who are not actively attending sessions and re-initiation of services when indicated.

Third, to demonstrate a high level of contextual fit and relevance, a revised version of the selected MFS may explicitly incorporate educational data. In the current initiative, the content area originally began focused on the use of standardized assessment tools measuring youth mental health symptoms. Nevertheless, data gathered from SBHC clinicians have identified the importance of educationally relevant idiographic monitoring targets to this process (Lyon et al., in press). This is consistent with research reviewed earlier, which documented that school-based clinicians value educational indicators as much as or more than traditional measures of mental health services (Connors et al., 2015). As a result, these data may be appropriate to incorporate into the ultimate system revision plan. Indeed, if SBMH is to embrace calls to “re-imagine” health information exchange and interoperability (Walker et al., 2005), it will be essential to expand assessment domains beyond traditional health and mental health information to relevant educational data. Recent models for incorporating educational data into SBMH clinical decision-making have been presented (Borntrager & Lyon, 2015; Lyon et al., 2013), which could be effectively leveraged via a well-designed MFS.

Finally, although it will not be immediately relevant to Phase 3, the identified concern that frequent updates to technology systems may interfere with practitioners’ abilities to achieve and maintain a high level of proficiency in the use of those systems should be considered throughout. Phase 5 assumes that continuous updates will be necessary to sustain a technology, but the intervals between and significance of those updates should be carefully considered with regard to user burden.

Limitations and Additional Considerations

The findings from the current study carry a number of important limitations. First, this paper represents only one example of formative data collection conducted under the first CTAP phase (contextual evaluation) using focus group methodology. As detailed in the introduction, there are many different data-collection approaches available to fulfill the Phase 1 information needs. It is possible that some of these (e.g., direct observation to gather workflow information) may lead to additional or different conclusions. In this vein, although our data collection surrounding clinician workflows incorporated elements of time, it did not include explicit attention to task sequencing over multiple sessions, which may have important design implications. Further, although efforts were made by the focus group facilitators to ensure equal participation, the focus group format may make some participants unwilling to voice their opinions, yielding biased results. Finally, this project was conducted in SBHCs, which, while common, represent just one type of school-based service delivery model in the United States. Provider workflows in particular may not generalize across service delivery models.

Summary and Conclusion

In sum, HIT has become a permanent fixture in the healthcare delivery landscape, the relevance of which is only likely to increase over time. Methods of adapting HIT for use in new contexts may further accelerate the quality improvement mission of many HIT systems. The CTAP framework represents one potential model for how data-driven adaptation may occur in a manner that integrates the UCD and implementation science literatures and, in doing so, is concerned principally with evaluating and responding to the context of use. Although previous research has examined the types of mental health services most likely to be provided in the education sector (e.g., Foster et al., 2005), no previous studies have detailed SBMH provider tasks and clinical workflows with adequate specificity to inform the redesign of digital clinical support tools. When applied to MFS development in schools, the first CTAP phase yielded actionable information to drive key decisions during subsequent data collection and redesign of the MHITS technology. The goal of delivering high-quality and contextually appropriate HIT-supported services routinely bridges the fields of implementation science and human-centered technology design. Applying knowledge from both fields has the potential to support more widespread and effective HIT use as well as other innovations to improve health services across domains.

Acknowledgments

This publication was made possible in part by funding from grant number K08 MH095939, awarded to the first author from the National Institute of Mental Health (NIMH). The authors would also like to thank the school-based mental health provider participants, Seattle Children’s Hospital, and Public Health – Seattle and King County for their support of this project. Dr. Lyon is an investigator with the Implementation Research Institute (IRI), at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25 MH080916) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI).

References

- Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, Chaffin MJ. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implementation Science. 2012;7(32):1–9. doi: 10.1186/1748-5908-7-32.

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- Atkinson NL. Developing a questionnaire to measure perceived attributes of eHealth innovations. American Journal of Health Behavior. 2007;31(6):612–621. doi: 10.5555/ajhb.2007.31.6.612.

- Bangor A, Kortum PT, Miller JT. An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction. 2008;24(6):574–594. doi: 10.1080/10447310802205776.

- Becker EM, Jensen-Doss A. Computer-assisted therapies: Examination of therapist-level barriers to their use. Behavior Therapy. 2013;44(4):614–624. doi: 10.1016/j.beth.2013.05.002.

- Bickman L. A measurement feedback system (MFS) is necessary to improve mental health outcomes. Journal of the American Academy of Child and Adolescent Psychiatry. 2008;47(10):1114. doi: 10.1097/CHI.0b013e3181825af8.

- Bickman L, Kelley SD, Athay M. The technology of measurement feedback systems. Couple and Family Psychology: Review and Practice. 2012;1:274–284. doi: 10.1037/a0031022.

- Bickman L, Kelley SD, Breda C, de Andrade AR, Riemer M. Effects of routine feedback to clinicians on mental health outcomes of youths: Results of a randomized trial. Psychiatric Services. 2011;62:1423–1429. doi: 10.1176/appi.ps.002052011.

- Borntrager C, Lyon AR. Client progress monitoring and feedback in school-based mental health. Cognitive & Behavioral Practice. 2015;22:74–86. doi: 10.1016/j.cbpra.2014.03.007.

- Brooke J. SUS-A quick and dirty usability scale. In: Jordan PW, Thomas B, McClelland IL, Weerdmeester B, editors. Usability Evaluation in Industry. Taylor & Francis Inc.; Bristol, PA: 1996. pp. 189–194.

- Butler KA, Haselkorn M, Bahrami A, Schroder K. Introducing the MATH Method and Toolsuite for Evidence-based HIT. Paper presented at the 2nd Annual AMA/IEEE EMBS Medical Technology Conference; Boston, MA. Oct, 2011. Published online at https://www.uthouston.edu/dotAsset/fcc91d1b-3a16-495b-9809-37e2108ed5e2.pdf.

- Chambers D, Glasgow R, Stange K. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implementation Science. 2013;8(1):117. doi: 10.1186/1748-5908-8-117.

- Connors EH, Arora P, Curtis L, Stephan SH. Evidence-Based Assessment in School Mental Health. Cognitive and Behavioral Practice. 2015;22:60–73.

- Cook JM, Biyanova T, Coyne JC. Barriers to adoption of new treatments: an internet study of practicing community psychotherapists. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36(2):83–90. doi: 10.1007/s10488-008-0198-3.

- Courage C, Baxter K. Understanding your users: A practical guide to user requirements methods, tools, and techniques. Morgan Kaufmann; San Francisco, CA: 2005.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50. doi: 10.1186/1748-5908-4-50.

- Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989:319–340.

- Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: a comparison of two theoretical models. Management Science. 1989;35(8):982–1003.

- De Jong M, Van Der Geest T. Characterizing web heuristics. Technical Communication. 2000;47(3):311–326.

- DeSantis L, Ugarriza DN. The concept of theme as used in qualitative nursing research. Western Journal of Nursing Research. 2000;22(3):351–372. doi: 10.1177/019394590002200308.

- Diaper D, Stanton N. The handbook of task analysis for human-computer interaction. CRC Press; 2003.

- Farmer EM, Burns BJ, Phillips SD, Angold A, Costello EJ. Pathways into and through mental health services for children and adolescents. Psychiatric Services. 2003;54(1):60–66. doi: 10.1176/appi.ps.54.1.60.

- Few S. Information dashboard design: The effective visual communication of data. O'Reilly; 2006.

- Flanagan ME, Saleem JJ, Millitello LG, Russ AL, Doebbeling BN. Paper- and computer-based workarounds to electronic health record use at three benchmark institutions. Journal of the American Medical Informatics Association. 2013;20(e1):e59–e66. doi: 10.1136/amiajnl-2012-000982.

- Foster S, Rollefson M, Doksum T, Noonan D, Robinson G, Teich J. School Mental Health Services in the United States, 2002–2003 (DHHS Pub. No. (SMA) 05–4068). Center for Mental Health Services, Substance Abuse and Mental Health Services Administration; Rockville, MD: 2005.

- Friese S. ATLAS.ti 7 User Manual. ATLAS.ti Scientific Software Development GmbH; Berlin: 2012.

- Furukawa MF, King J, Patel V, Hsiao C-J, Adler-Milstein J, Jha AK. Despite Substantial Progress In EHR Adoption, Health Information Exchange And Patient Engagement Remain Low In Office Settings. Health Affairs. 2014;33(9):1672–1679. doi: 10.1377/hlthaff.2014.0445.

- Gance-Cleveland B, Yousey Y. Benefits of a school-based health center in a preschool. Clinical Nursing Research. 2005;14(4):327–342. doi: 10.1177/1054773805278188.

- Glasgow RE, Kessler RS, Ory MG, Roby D, Gorin SS, Krist A. Conducting rapid, relevant research: lessons learned from the my own health report project. American Journal of Preventive Medicine. 2014;47(2):212–219. doi: 10.1016/j.amepre.2014.03.007.

- Glasgow RE, Phillips SM, Sanchez MA. Implementation science approaches for integrating eHealth research into practice and policy. International Journal of Medical Informatics. 2013;83:e1–e11. doi: 10.1016/j.ijmedinf.2013.07.002.

- González MP, Lorés J, Granollers A. Enhancing usability testing through datamining techniques: A novel approach to detecting usability problem patterns for a context of use. Information and Software Technology. 2008;50(6):547–568.

- Grossman T, Fitzmaurice G, Attar R. A survey of software learnability: metrics, methodologies and guidelines. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2009 Apr:649–658.

- Hackos JT, Redish J. User and task analysis for interface design. Wiley; New York, NY: 1998.

- Health Information Technology for Economic and Clinical Health Act of 2009. Title XIII of Division A and Title IV of Division B of the American Recovery and Reinvestment Act of 2009 (ARRA), Pub. L. No. 111-5, 123 Stat. 226 (Feb. 17, 2009), codified at 42 U.S.C. §§300jj et seq.; §§17901 et seq.

- Heeks R. Health information systems: Failure, success and improvisation. International Journal of Medical Informatics. 2006;75(2):125–137. doi: 10.1016/j.ijmedinf.2005.07.024.

- Hill CE, Knox S, Thompson BJ, Nutt Williams E, Hess SA. Consensual qualitative research: An update. Journal of Counseling Psychology. 2005;52:196–205.

- Hill CE, Thompson BJ, Williams EN. A guide to conducting consensual qualitative research. The Counseling Psychologist. 1997;25(4):517–572.

- Holden RJ, Karsh BT. The technology acceptance model: its past and its future in health care. Journal of Biomedical Informatics. 2010;43(1):159–172. doi: 10.1016/j.jbi.2009.07.002.

- Holtzblatt K, Wendell JB, Wood S. Rapid contextual design: a how-to guide to key techniques for user-centered design. Elsevier; 2004.

- Hornbæk K. Current practice in measuring usability: Challenges to usability studies and research. International Journal of Human-Computer Studies. 2006;64(2):79–102. doi: 10.1016/j.ijhcs.2005.06.002.

- Hornbæk K, Law ELC. Meta-analysis of correlations among usability measures. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2007 Apr.

- Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qualitative Health Research. 2005;15(9):1277–1288. doi: 10.1177/1049732305276687.

- International Standards Organization. Ergonomic requirements for office work with visual display terminals (VDTs) – Part 11: Guidance on usability. International Organization for Standardization. 1998:9241–11.

- International Standards Organization. Ergonomics of human-system interaction – Part 210: Human centered design for interactive systems. International Organization for Standardization. 2010.

- Kokkonen EW, Davis SA, Lin HC, Dabade TS, Feldman SR, Fleischer AB Jr. Use of electronic medical records differs by specialty and office settings. Journal of the American Medical Informatics Association. 2013;20(1):33–8. doi: 10.1136/amiajnl-2012-001609.