Abstract

In reinforcement learning, a decision maker searching for the most rewarding option is often faced with the question: what is the value of an option that has never been tried before? One way to frame this question is as an inductive problem: how can I generalize my previous experience with one set of options to a novel option? We show how hierarchical Bayesian inference can be used to solve this problem, and describe an equivalence between the Bayesian model and temporal difference learning algorithms that have been proposed as models of reinforcement learning in humans and animals. According to our view, the search for the best option is guided by abstract knowledge about the relationships between different options in an environment, resulting in greater search efficiency compared to traditional reinforcement learning algorithms previously applied to human cognition. In two behavioral experiments, we test several predictions of our model, providing evidence that humans learn and exploit structured inductive knowledge to make predictions about novel options. In light of this model, we suggest a new interpretation of dopaminergic responses to novelty.

Keywords: reinforcement learning, Bayesian inference, exploration-exploitation dilemma, neophobia, neophilia

1 Introduction

Novelty is puzzling because it appears to evoke drastically different responses depending on a variety of still poorly understood factors. A century of research has erected a formidable canon of behavioral evidence for neophobia (the fear or avoidance of novelty) in humans and animals, as well as an equally formidable canon of evidence for its converse, neophilia, without any widely accepted framework for understanding and reconciling these data. We approach the puzzle of novelty through the theoretical lens of reinforcement learning (RL; Sutton and Barto, 1998), a computational framework that is concerned with how we estimate values (expected future rewards) based on experience. Viewed through this lens, novelty responses can be understood in terms of how values learned for one set of options can be generalized to a novel (unexperienced) option, thereby guiding the decision maker’s search for the option that will yield the most reward.

The starting point of our investigation is the idea that value generalization is influenced by the decision maker’s inductive bias (Mitchell, 1997): prior beliefs about the reward properties of unchosen options. An inductive bias is distinguished from non-inductive biases in that an inductive bias involves an inference from observations to unknowns. For example, if you’ve eaten many excellent dishes at a particular restaurant, it is reasonable to infer that a dish that you haven’t tried yet is likely to be excellent as well. In contrast, a non-inductive bias reflects a prepotent response tendency not derived from an inferential process. From a psychological perspective, it seems plausible that humans possess a rich repertoire of inductive biases that influence their decisions in the absence of experience (Griffiths et al., 2010). Here we ask: Does human RL involve inductive biases, and if so, how are the biases acquired and used?

We hypothesize that humans and animals learn at multiple levels of abstraction, such that higher-level knowledge constrains learning at lower levels (Kemp et al., 2007; Friston, 2008; Lucas and Griffiths, 2010). Learning the specific properties of a novel option is guided by knowledge about the class of options to which the novel option belongs; this high-level knowledge plays the role of inductive bias. In the restaurant example above, the high-level knowledge consists of your evaluation of the restaurant, an inductive generalization made on the basis of previous experience at that restaurant as a whole, which enables predictions about new dishes and future experiences. This form of inductive generalization has the potential to accelerate the search for valuable options by effectively structuring the search space.

The inductive nature of responses to novelty is intimately related to the exploration-exploitation dilemma (Cohen et al., 2007), which refers to the problem of choosing whether to continue harvesting a reasonably profitable option (exploitation) or to search for a possibly more profitable one (exploration). Choosing a novel option corresponds to an exploratory strategy. Traditional theoretical treatments approach the problem of determining the optimal balance between exploration and exploitation in terms of the value of information (Howard, 1966): reducing uncertainty by observing the consequences of novel actions is inherently valuable because this can lead to better actions in the future. This principle is formalized in the Gittins Index (Gittins, 1989), which dictates the optimal exploration policy in multi-armed bandits (choice tasks with a single state and multiple actions). The Gittins Index can be interpreted as adding to the predicted reward payoff for each option an “exploration bonus” that takes into account the uncertainty about these predictions. The influence of this factor on human behavior and brain activity has been explored in several recent studies (Daw et al., 2006b; Steyvers et al., 2009; Acuña and Schrater, 2010). We shall come back to the exploration-exploitation dilemma when we discuss the results of Experiment 2.

The rest of the paper is organized as follows. We first review the rather puzzling and contradictory literature on responses to novelty in humans and animals, and relate novelty responses to the neuromodulator dopamine, thought to play an important role in RL. Then in Section 2 we lay out a Bayesian statistical framework for incorporating inductive biases into RL, and show how this framework is related to the temporal difference algorithm (Sutton and Barto, 1998) that has been widely implicated in neurophysiological and behavioral studies of RL (Schultz et al., 1997; Niv, 2009). In Sections 3–4 we present the results of two experiments designed to test the model’s predictions, and compare these predictions to those of alternative models. Finally, in Section 5 we discuss these results in light of contemporary theories of RL in the brain.

1.1 The puzzle of novelty

In this section, we briefly survey some representative findings from prior studies of neophobia and neophilia (see Corey, 1978; Hughes, 2007, for more extensive reviews). We define neophobia operationally as the preference for familiar over novel stimuli (and the reverse for neophilia). This encompasses not only approach/avoidance responses (the typical behavioral index of novelty preference), but also instrumental or Pavlovian responses to novel stimuli. For example, in the experiments we report below, we use prediction and choice as measures of novelty preferences, under the assumption that both choice and approach/avoidance result from predictions about future reward (see Dayan et al., 2006).

Evidence for neophilia comes from a variety of preparations. Rats will learn to press a bar for the sake of poking their heads into a new compartment (Myers and Miller, 1954), will forgo food rewards in order to press a lever that periodically delivers a visual stimulus (Reed et al., 1996), will display a preference for environments in which novel objects have appeared (Bardo and Bevins, 2000) and will interact more with novel objects placed in a familiar environment (Sheldon, 1969; Ennaceur and Delacour, 1988). Remarkably, access to novelty can compete with conditioned cocaine reward (Reichel and Bevins, 2008), and will motivate rats to cross an electrified grid (Nissen, 1930). The intrinsically reinforcing nature of novelty suggested by these studies is further indicated by the similarity between behavioral and neural responses to novelty and to drug rewards (Bevins, 2001). Finally, it has been argued that neophilia should not be considered derivative of basic drives like hunger, thirst, sexual appetite, pain and fear, since it is still observed when these drives have ostensibly been satisfied (Berlyne, 1966).

Despite the extensive evidence for affinity to novelty in animals, many researchers have observed that rats will avoid or withdraw from novel stimuli if given the opportunity (Blanchard et al., 1974; King and Appelbaum, 1973), a pattern also found in adult humans (Berlyne, 1960), infants (Weizmann et al., 1971) and non-human primates (Weiskrantz and Cowey, 1963). Flavor neophobia, in which animals hesitate to consume a novel food (even if it is highly palatable), has been observed in a number of species, including humans (Corey, 1978). Suppressed consummatory behavior is also observed when a familiar food is offered in a novel container (Barnett, 1958); animals may go two or three days without eating under these circumstances (Cowan, 1976). Another well-studied form of neophobia is known as the mere exposure effect: simply presenting an object repeatedly to an individual is sufficient to enhance their preference for that object relative to a novel object (Zajonc, 2001). As an extreme example of the mere exposure effect, Rajecki (1974) reported that playing tones of different frequencies to different sets of fertile eggs resulted in the newly-hatched chicks preferring the tone to which they were prenatally exposed.

A number of factors have been identified that modulate the balance between neophilia and neophobia. Not surprisingly, hunger and thirst motivate animals to explore and enhance their preference for novelty (Fehrer, 1956; File and Day, 1972). Responses to novelty also depend on “background” factors such as the level of ambient sensory stimulation (Berlyne et al., 1966) and the familiarity of the environment (Hennessy et al., 1977). For our purposes, the most relevant modulatory factor is prior reinforcing experience with other cues. Numerous studies have shown that approach to a novel stimulus is reduced following exposure to electric shock (Corey, 1978). One interpretation of this finding is that animals have made an inductive inference that the environment contains aversive stimuli, and hence new stimuli should be avoided. In this connection, it is interesting to note that laboratory rats tend to be more neophilic than feral rats (Sheldon, 1969); given that wild environments tend to contain more aversive objects than laboratories, this finding is consistent with the idea that rats make different inductive generalizations based on their differing experiences.

1.2 Dopamine and shaping bonuses

RL theory has provided a powerful set of mathematical concepts for understanding the neurophysiological basis of learning. In particular, theorists have proposed that humans and animals employ a form of the temporal difference (TD) learning algorithm, which uses prediction errors (the discrepancy between received and expected reward) to update reward predictions (Barto, 1995; Houk et al., 1995; Montague et al., 1996; Schultz et al., 1997); for a recent review, see Niv (2009). The firing of midbrain dopamine neurons appears to correspond closely to a reward prediction error signal (Schultz et al., 1997; Hollerman and Schultz, 1998; Bayer and Glimcher, 2005). Despite this remarkable correspondence, the prediction error interpretation of dopamine has been challenged by the observation that dopamine neurons also respond to the appearance of novel stimuli (Horvitz et al., 1997; Schultz, 1998), a finding not predicted by classical RL theories.

Kakade and Dayan (2002b) suggested that dopaminergic novelty responses can be incorporated into RL theory by postulating shaping bonuses—optimistic initialization of reward predictions (Ng et al., 1999). These high initial values have the effect of causing a positive prediction error when a novel stimulus is presented (see also Suri et al., 1999). Wittmann et al. (2008) have shown that this model can explain both brain activity and choice behavior in an experiment that manipulated the novelty of cues. Optimistic initialization is theoretically well-motivated (Brafman and Tennenholtz, 2003), based on the idea that optimism increases initial exploration. However, the contribution of inductive biases to the dopaminergic novelty response has not been systematically investigated, although there is evidence that dopamine neurons will sometimes “generalize” their responses from reward-predictive to reward-unpredictive cues (Day et al., 2007; Kakade and Dayan, 2002a; Schultz, 1998).

It is important to distinguish between multiple forms of generalization that can occur when a cue is presented. For example, Kakade and Dayan (2002b) examined generalization arising from partial observability: uncertainty about the identity of an ambiguous cue. This can result in neural responses to different cues being blurred together (see also Daw et al., 2006a; Rao, 2010), effectively leading to generalization. Similarly, uncertainty about when an outcome will occur can also lead to the blending together of neural responses across multiple points in time (Daw et al., 2006a). Our focus, in contrast, is on generalization induced by uncertainty about the reward value of a cue, particularly in situations where multiple cues occur in the same context. Our conjecture is that contextual associations bind together cues such that experience with one cue influences reward predictions for all cues in that context. In the next section, we present a theoretical framework that formalizes this idea.

2 Theoretical framework

To formally incorporate inductive generalization into the machinery of RL, we appeal to the theory of Bayesian statistics, which has received considerable support as the basis of human inductive inferences (Griffiths et al., 2010), and has been applied to RL in a number of previous investigations (Kakade and Dayan, 2002a; Courville et al., 2006; Behrens et al., 2007; Gershman et al., 2010; Payzan-LeNestour and Bossaerts, 2011). Our contribution is to formalize the influence of abstract knowledge in RL through a hierarchical Bayesian model (Kemp et al., 2007; Lucas and Griffiths, 2010). In such a model, the reward properties of different options are coupled together by virtue of being drawn from a common distribution. As a consequence, an agent’s belief about one option is (and should be) influenced by the agent’s experience with other options.

We derive our Bayesian RL model from first principles, starting with a generative model of rewards that expresses assumptions, which we ascribe to the agent, about the probabilistic relationships between cues and rewards in its environment.1 The agent then uses Bayes’ rule to “invert” this probabilistic model and predict the underlying reward probabilities. Finally, we show that there is a close formal connection between application of Bayes’ rule and TD learning (see Dearden et al., 1998; Engel et al., 2003, for other relationships between Bayes’ rule and TD learning).

2.1 Hierarchical Bayesian inference

For concreteness, consider the problem of choosing whom to ask on a date. Each potential date has some probability of saying “yes” (a rewarding outcome) or “no” (an unrewarding outcome). These probabilities may not be independent from each other; for example, there may be an overall bias towards saying “no” if people tend to already have dates. In the Bayesian framework, the goal is to learn each person’s probability of saying “yes”, potentially informed by the higher-level bias shared across people.

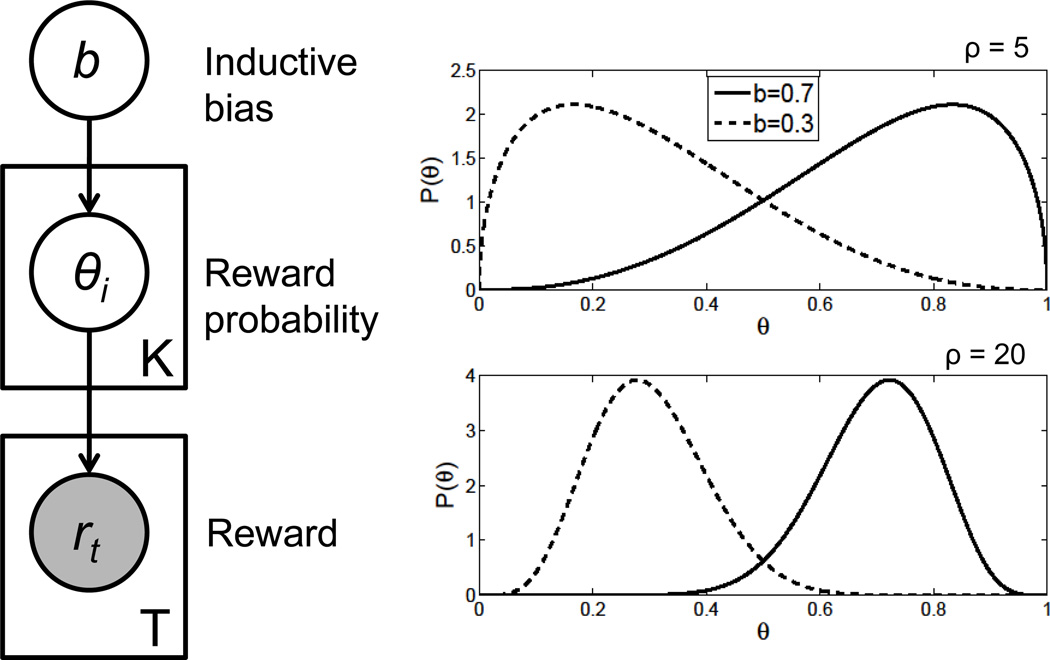

Formally, we specify the following generative model (see Figure 1) for reward rt on trial t in a K-armed bandit (a choice problem in which there are K options on every trial, each with a separate probability of delivering reward):

- In the first step, a bias parameter b, which determines the central tendency of the reward probabilities across arms, is drawn from a Beta distribution:

  $$ b \sim \mathrm{Beta}\big(\rho_0 b_0,\ \rho_0 (1 - b_0)\big) \tag{1} $$

  where b0 is the mean and ρ0 is a concentration parameter: the variance, b0(1 − b0)/(ρ0 + 1), decreases as ρ0 grows.2 In the dating example, b represents the overall propensity across people for agreeing to go on a date.

- Given the bias parameter, the next step is to draw a reward probability θi for each arm. In the dating example, θi represents a particular individual’s propensity for agreeing to go on a date. These are drawn independently from a Beta distribution with mean b:

  $$ \theta_i \sim \mathrm{Beta}\big(\rho b,\ \rho (1 - b)\big) \tag{2} $$

  The parameter ρ controls the degree of coupling between arms: high ρ means that reward probabilities will tend to be tightly clustered around b (see Figure 1).

- The last step is to draw a binary reward rt for each trial t, conditional on the chosen arm ct and the reward probability of that arm θct:

  $$ r_t \sim \mathrm{Bernoulli}(\theta_{c_t}) \tag{3} $$

  where i ∈ {1, …, K} indexes arms (options) and ct ∈ {1, …, K} denotes the choice made on trial t. In the dating example, rt represents whether or not the person you chose to ask out on a particular night (ct) agreed to go on a date.
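To make the three sampling steps concrete, here is a minimal Python sketch of the generative process in Eqs. 1–3. It uses the standard library's `random.betavariate`. The helper name `sample_bandit`, its arguments, and the 0-based arm indices are illustrative assumptions, not part of the original model description.

```python
import random


def sample_bandit(K, b0, rho0, rho, choices, seed=None):
    """Sample one K-armed environment and its rewards from the hierarchical model.

    Hypothetical helper for illustration; arms are indexed 0..K-1 here,
    whereas the text indexes them 1..K.
    """
    rng = random.Random(seed)
    # Eq. 1: shared bias b ~ Beta(rho0*b0, rho0*(1 - b0))
    b = rng.betavariate(rho0 * b0, rho0 * (1 - b0))
    # Eq. 2: arm-specific reward probabilities theta_i ~ Beta(rho*b, rho*(1 - b))
    theta = [rng.betavariate(rho * b, rho * (1 - b)) for _ in range(K)]
    # Eq. 3: binary reward r_t ~ Bernoulli(theta_{c_t}) for each chosen arm c_t
    rewards = [1 if rng.random() < theta[c] else 0 for c in choices]
    return b, theta, rewards


# Usage: with strong coupling (large rho) the arms cluster tightly around b.
b, theta, rewards = sample_bandit(K=4, b0=0.5, rho0=2.0, rho=200.0,
                                  choices=[0, 1, 2, 3] * 5, seed=1)
print(round(b, 3), [round(x, 3) for x in theta])
```

Lowering `rho` toward 1 in the usage line spreads the arms out, which corresponds to the weakly coupled distributions in the right panel of Figure 1.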

Figure 1. Hierarchical Bayesian model.

(Left) Graphical representation of the model as a Bayesian network. Unshaded nodes represent unknown (latent) variables, shaded nodes represent observed variables, plates represent replications, and arrows represent probabilistic dependencies. See Pearl (1988) for an introduction to Bayesian networks. (Right) Probability distributions over the reward parameter θ induced by different settings of b and ρ.

Given a sequence of choices c = {c1, …, cT} and rewards r = {r1, …, rT}, the agent’s goal is to estimate the reward probabilities θ = {θ1, …, θK}, so as to choose the most rewarding arm. We now describe the Bayesian approach to this problem, and then relate it to TD reinforcement learning.

Letting Ci denote the number of times arm i was chosen and Ri denote the number of times reward was delivered after choosing arm i, we can exploit the conditional independence assumptions of the model to express the posterior over reward probabilities as:

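$$ P(\boldsymbol{\theta} \mid \mathbf{r}, \mathbf{c}) = \int_0^1 P(b \mid \mathbf{r}, \mathbf{c}) \prod_{i=1}^{K} \mathrm{Beta}\big(\theta_i;\ R_i + \rho b,\ C_i - R_i + \rho (1 - b)\big)\, db \tag{4} $$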

where Beta(θi; ·, ·) is the probability density function of the Beta distribution evaluated at θi. We have suppressed explicit dependence on ρ, ρ0 and b0 (which we earlier assumed to be known by the agent) to keep the notation uncluttered. The conditional distribution over b is given by:

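$$ P(b \mid \mathbf{r}, \mathbf{c}) \propto \mathrm{Beta}\big(b;\ \rho_0 b_0,\ \rho_0 (1 - b_0)\big) \prod_{i=1}^{K} \frac{\mathcal{B}\big(R_i + \rho b,\ C_i - R_i + \rho (1 - b)\big)}{\mathcal{B}\big(\rho b,\ \rho (1 - b)\big)} \tag{5} $$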

where ℬ(·, ·) is the beta function. The posterior mean estimator for θi is thus given by

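$$ \hat{\theta}_i = \mathbb{E}[\theta_i \mid \mathbf{r}, \mathbf{c}] = \int_0^1 \frac{R_i + \rho b}{C_i + \rho}\, P(b \mid \mathbf{r}, \mathbf{c})\, db \tag{6} $$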

This estimate represents the posterior belief that arm i will yield a reward, conditional upon observing r and c. Although there is no closed-form solution to the integral in Eq. 6, it is bounded and one-dimensional, so we can easily approximate it numerically.
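The numerical approximation can be sketched as follows. This sketch assumes a midpoint rule on an evenly spaced grid over b; the grid size, the helper names, and the parameter values in the usage lines are illustrative choices, not the authors' settings. The usage lines mirror the Figure 2 setting (R2 = 5, C1 = C2 = 10) with two values of R1. They show the coupling: raising R1 raises the estimate for arm 2, and each arm's estimate is pulled away from its own empirical proportion toward the other arm.

```python
import math


def log_beta_fn(x, y):
    # log of the beta function B(x, y) via log-gamma
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)


def posterior_mean(R, C, b0, rho0, rho, n_grid=2000):
    """Approximate Eq. 6 with a midpoint rule over b on (0, 1).

    R[i], C[i]: number of rewards and choices for arm i.
    Returns the list of posterior means theta_hat_i.
    """
    bs = [(n + 0.5) / n_grid for n in range(n_grid)]
    log_w = []
    for b in bs:
        # log Beta(b; rho0*b0, rho0*(1 - b0)) up to a constant (prior on b)
        lw = (rho0 * b0 - 1) * math.log(b) + (rho0 * (1 - b0) - 1) * math.log(1 - b)
        # Eq. 5: marginal likelihood of each arm's counts given b
        for r, c in zip(R, C):
            lw += (log_beta_fn(r + rho * b, c - r + rho * (1 - b))
                   - log_beta_fn(rho * b, rho * (1 - b)))
        log_w.append(lw)
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]  # unnormalized P(b | r, c) on the grid
    Z = sum(w)
    # Eq. 6: average the conditional mean (R_i + rho*b) / (C_i + rho) over b
    return [sum(wn * (r + rho * b) / (c + rho) for wn, b in zip(w, bs)) / Z
            for r, c in zip(R, C)]


# Usage (illustrative parameters): arm 2 is identical in both cases, only R1 changes.
low = posterior_mean([2, 5], [10, 10], b0=0.5, rho0=2.0, rho=5.0)
high = posterior_mean([8, 5], [10, 10], b0=0.5, rho0=2.0, rho=5.0)
print(low, high)
```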

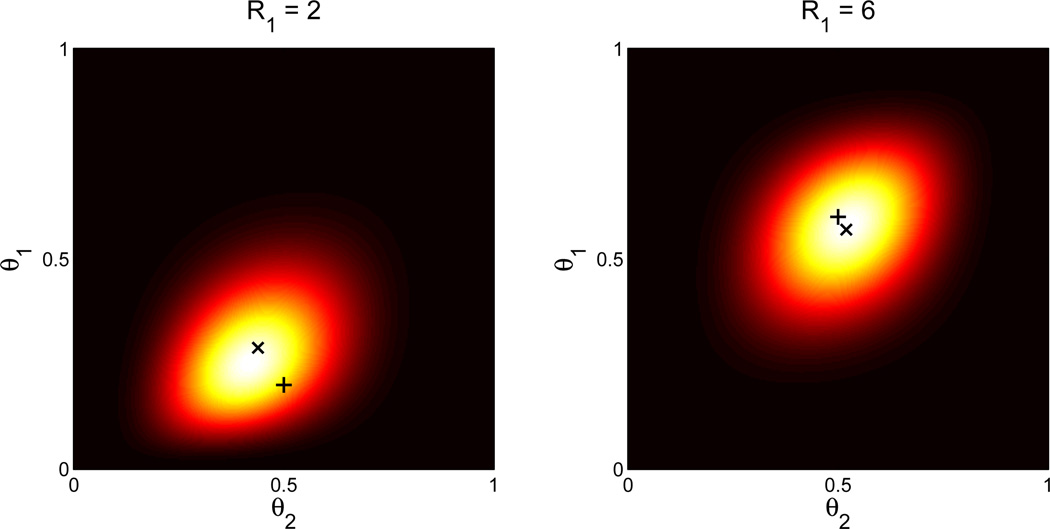

As an illustration of how the estimated reward probabilities are determined by observed rewards, Figure 2 shows examples of the joint posterior distribution for two arms under different settings of R1. Notice that the estimate for θ1 is regularized toward the empirical mean of the other arm, R2/C2. Similarly, the estimate of θ2 is regularized towards the empirical mean of the first arm. The regularization occurs because the hierarchical model couples the reward probabilities across arms. Experience with one arm influences the estimate for the other arm by shifting the conditional distribution over the bias parameter (Eq. 5), which is shared by both arms.

Figure 2. Posterior distribution over reward probabilities.

Heatmap displays P(θ|r, c) for a two-armed bandit under different settings of R1 (lighter colors denote higher probability). The axes represent different hypothetical settings of the reward probabilities (θ1 and θ2). The cross denotes the empirical proportions Ri/Ci, with R2 = 5 and C1 = C2 = 10. The “x” denotes the posterior mean.

2.2 Relationship to temporal difference reinforcement learning

Although our primary interest is in testing whether the Bayesian framework describes human behavior in relation to novelty, we now relate this abstract statistical framework to commonly used mechanistic models of learning in the brain by showing how a learner can estimate θ̂i online using a variant of TD learning. First, we establish that, for known b, TD learning with a time-varying learning rate directly computes θ̂i. We then extend this to the case of unknown b. After choosing option ct, TD updates its estimate of the expected reward (the value function, V) according to:

$$ V_{t+1}(c_t) = V_t(c_t) + \eta_t \delta_t \tag{7} $$

where ηt is a learning rate and δt = rt − Vt(ct) is the prediction error.3 This delta-rule update (cf. Widrow and Hoff, 1960) is identical to the influential Rescorla-Wagner model used in animal learning theory (Rescorla and Wagner, 1972). The same model has been applied by Gluck and Bower (1988b) to human category learning (see also Gluck and Bower, 1988a).
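In code, the delta-rule update is a single line. The following is a minimal sketch; the function name `delta_rule` and the list-based value table are illustrative assumptions. The usage lines run the "naïve" variant, with values starting at 0 and a fixed learning rate.

```python
def delta_rule(V, c, r, eta):
    """Apply Eq. 7 to the chosen option c in place and return the prediction error."""
    delta = r - V[c]       # delta_t = r_t - V_t(c_t)
    V[c] += eta * delta    # V_{t+1}(c_t) = V_t(c_t) + eta_t * delta_t
    return delta


# Usage: naive TD, values start at 0 with a fixed learning rate of 0.1.
V = [0.0, 0.0]
for c, r in [(0, 1), (0, 1), (1, 0)]:
    delta_rule(V, c, r, eta=0.1)
print(V)
```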

We now establish that Eq. 7 directly computes the posterior mean θ̂i. We proceed by setting the learning rate ηt such that Vt(i) represents the posterior mean estimate of θi after observations 1 to t − 1. Let us define the auxiliary variables s = Ci + ρ and a = Ri + ρb, where the counts reflect observations 1, …, t − 1. For known b, we can re-express Eq. 6 in the following “delta rule” form:

$$ \frac{a + r_t}{s + 1} = \frac{a}{s} + \eta_t \left( r_t - \frac{a}{s} \right) \tag{8} $$

Note that the integral in Eq. 6 has disappeared here because we are conditioning on b. The left-hand side of Eq. 8 is the posterior mean θ̂i after observations 1, …, t, and a/s = Vt(i) is the posterior mean after observations 1, …, t − 1. After some algebraic manipulation, we can solve for ηt:

$$ \eta_t = \frac{1}{s + 1} = \frac{1}{C_i + \rho + 1} \tag{9} $$
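The algebra behind the learning rate takes one step. Use the definitions s = Ci + ρ and a = Ri + ρb, subtract the old posterior mean from the new one in Eq. 8, and factor out the prediction error:

$$
\frac{a + r_t}{s + 1} - \frac{a}{s}
= \frac{s(a + r_t) - a(s + 1)}{s(s + 1)}
= \frac{s\, r_t - a}{s(s + 1)}
= \frac{1}{s + 1}\left( r_t - \frac{a}{s} \right)
$$

Matching this with ηt(rt − a/s) gives ηt = 1/(s + 1) = 1/(Ci + ρ + 1).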

Notice that when t = 1 (i.e., before any observations, when Ri = Ci = 0), V1(i) = a/s = ρb/ρ = b. In other words, the above equations imply that the initial value for all options is equal to the prior mean, b. This means that TD learning using ηt = 1/(Ci + ρ + 1) and initializing all the values to b yields a correct posterior estimation scheme, conditional on b.
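The equivalence can be checked numerically. The sketch below runs the TD update with values initialized to b and learning rate 1/(Ci + ρ + 1), then compares the result with the closed-form conditional posterior mean (Ri + ρb)/(Ci + ρ). The function name `bayes_td` and the choice/reward sequence are hypothetical, and b and ρ are assumed known.

```python
def bayes_td(choices, rewards, K, b, rho):
    """TD learning with V_1(i) = b and eta_t = 1 / (C_i + rho + 1) (Eqs. 7 and 9).

    Assumes b and rho are known; arms are indexed 0..K-1.
    """
    V = [b] * K    # initial value equals the prior mean b
    C = [0] * K    # choice counts before the current trial
    for c, r in zip(choices, rewards):
        eta = 1.0 / (C[c] + rho + 1)
        V[c] += eta * (r - V[c])
        C[c] += 1
    return V


choices = [0, 1, 0, 0, 1]
rewards = [1, 0, 1, 0, 1]
V = bayes_td(choices, rewards, K=2, b=0.3, rho=4.0)
# Closed-form posterior mean (R_i + rho*b) / (C_i + rho): arm 0 has R=2, C=3; arm 1 has R=1, C=2
exact = [(2 + 4.0 * 0.3) / (3 + 4.0), (1 + 4.0 * 0.3) / (2 + 4.0)]
print(V, exact)
```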

There is a close connection between this posterior estimation scheme and the shaping bonus considered by Kakade and Dayan (2002a) in their model of dopamine responses. Recall that a shaping bonus corresponds simply to setting the initial value to a positive constant in order to encourage exploration. This can be contrasted with a “naïve” TD model in which the initial value is set to 0. The analysis described above shows that, according to the hierarchical Bayesian interpretation of TD, the initial value should be precisely the prior mean. Thus, our theory provides a normative motivation for shaping bonuses grounded in inductive inference. Different initial values represent different assumptions (inductive biases) about the data-generating process. Another interesting aspect of this formulation is that larger values of the coupling parameter ρ lead to smaller learning rates: because ηt = 1/(Ci + ρ + 1), each observation moves an option’s value less when ρ is large. This happens because larger ρ implies more sharing between options, and hence effectively more prior information (ρ acts like a count of pseudo-observations) about the value of each individual option.

When b is unknown, we must average over its possible values. This can be done approximately by positing a collection of value functions {Ṽt(i; b1), …, Ṽt(i; bN)}, each with a different initial value bn, such that they tile the [0, 1] interval. These can be learned in parallel, and their estimates can then be combined to form the marginalized hierarchical estimate:

$$ \bar{V}_t(i) = \sum_{n=1}^{N} \tilde{V}_t(i; b_n)\, P(b_n \mid \mathbf{r}, \mathbf{c}) \tag{10} $$

where

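$$ P(b_n \mid \mathbf{r}, \mathbf{c}) = \frac{L(b_n)}{\sum_{m=1}^{N} L(b_m)}, \qquad L(b) = \mathrm{Beta}\big(b;\ \rho_0 b_0,\ \rho_0 (1 - b_0)\big) \prod_{j=1}^{K} \frac{\mathcal{B}\big(R_j + \rho b,\ C_j - R_j + \rho (1 - b)\big)}{\mathcal{B}\big(\rho b,\ \rho (1 - b)\big)} \tag{11} $$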

The intuition here is that the distribution over b represents uncertainty about initial values (i.e., about the prior probability of reward); by averaging over b the agent effectively smoothes the values to reflect this uncertainty.
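The parallel-learner scheme can be sketched as follows, under stated assumptions. N TD learners each start at one grid value bn and share the learning rate 1/(Ci + ρ + 1). Their values are then averaged with weights proportional to the Beta prior on bn times the per-arm marginal likelihoods, normalized over the grid. The grid size N = 200, the midpoint grid, and the helper names are our illustrative choices. The usage lines show the basic prediction stated below: an option that has never been chosen is valued higher when the other option has been rewarding.

```python
import math


def log_beta_fn(x, y):
    # log of the beta function B(x, y)
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)


def marginal_td(choices, rewards, K, b0, rho0, rho, N=200):
    """Approximate Eqs. 10-11 with N parallel TD learners, one per grid value b_n."""
    bs = [(n + 0.5) / N for n in range(N)]   # grid tiling (0, 1)
    V = [[b] * K for b in bs]                 # learner n starts every value at b_n
    C, R = [0] * K, [0] * K
    for c, r in zip(choices, rewards):
        eta = 1.0 / (C[c] + rho + 1)          # Eq. 9; identical for every learner
        for Vn in V:
            Vn[c] += eta * (r - Vn[c])        # Eq. 7
        C[c] += 1
        R[c] += r
    # Eq. 11: posterior weight of each b_n (prior times marginal likelihood, normalized)
    log_w = []
    for b in bs:
        lw = (rho0 * b0 - 1) * math.log(b) + (rho0 * (1 - b0) - 1) * math.log(1 - b)
        for i in range(K):
            lw += (log_beta_fn(R[i] + rho * b, C[i] - R[i] + rho * (1 - b))
                   - log_beta_fn(rho * b, rho * (1 - b)))
        log_w.append(lw)
    m = max(log_w)
    w = [math.exp(lw - m) for lw in log_w]
    Z = sum(w)
    # Eq. 10: weighted average of the learners' values
    return [sum(wn * Vn[i] for wn, Vn in zip(w, V)) / Z for i in range(K)]


# Usage: arm 1 is never chosen; its value tracks how rewarding arm 0 has been.
hi = marginal_td([0] * 10, [1] * 10, K=2, b0=0.5, rho0=2.0, rho=5.0)
lo = marginal_td([0] * 10, [0] * 10, K=2, b0=0.5, rho0=2.0, rho=5.0)
print(hi, lo)
```

In this sketch, the value of the untried arm equals the posterior mean of b, so it rises above the prior mean of 0.5 after a run of rewards on the other arm and falls below it after a run of non-rewards.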

To summarize, we have derived a formal relationship between hierarchical Bayesian inference and TD learning, and used this to show how shaping bonuses can be interpreted as beliefs about the prior probability of reward, a form of inductive bias. We have also shown how this inductive bias can itself be learned. The basic prediction of our theory is that preferences for novel options should increase monotonically with the value of other options experienced in the same context. In the following, we describe two experiments designed to test implications of this prediction.

3 Experiment 1: manipulating inductive biases in a reward prediction task

The purpose of Experiment 1 was to show that inductive biases influence predictions of reward for novel options. Our general approach was to create environments in which options tend to have similar reward probabilities, leading participants to form the expectation that new options in the same environment will also yield similar rewards. Participants played an “interplanetary farmer” game in which they were asked to predict how well crops would grow on different planets, obtaining reward if the crop indeed grew. In this setting, crops represent options and planets represent environments. “Fertile” planets tended to be rewarding across many crops, whereas “infertile” planets tended to be unrewarding. The Bayesian RL model predicts that participants will learn to bias their predictions for new crops based on a planet’s fertility. Specifically, participants should show higher reward predictions for novel crops on planets in which other crops have been frequently rewarded, compared to predictions for novel crops on planets in which other crops have been infrequently rewarded. Thus, the model predicts both ‘neophilia’ and ‘neophobia’ (in the generalized sense of a behavioral bias for or against novelty) depending on the participant’s previous experience in the task.

3.1 Methods

3.1.1 Participants

Fourteen Princeton University undergraduate students were compensated $10 for 45 minutes, in addition to a bonus based on performance. All participants gave informed consent and the study was approved by the Princeton University Institutional Review Board.

3.1.2 Materials and procedure

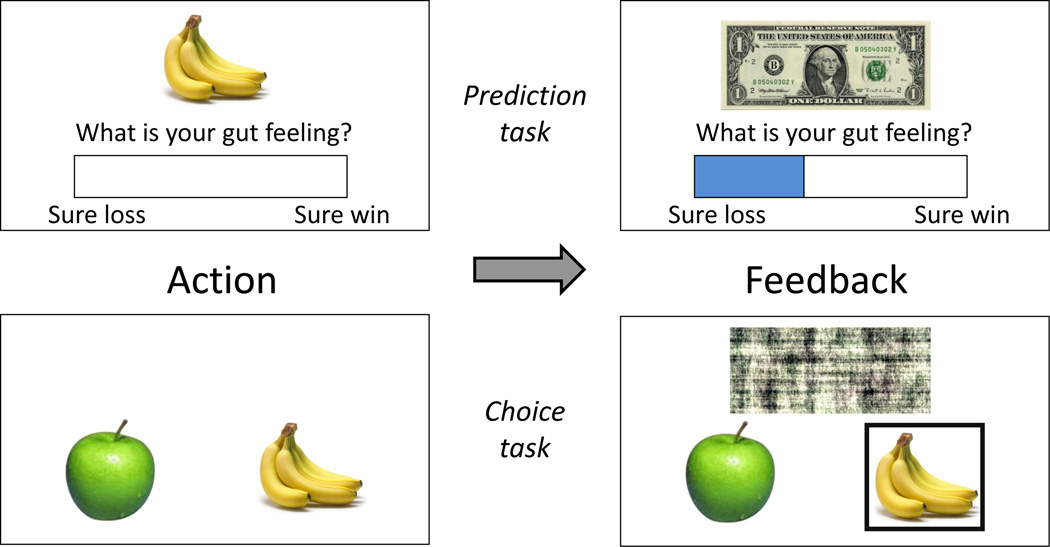

Figure 3 shows a schematic of the task. Participants were told that they would play the role of “interplanetary farmers” tasked with planting various types of crops on different planets, with each crop’s properties specific to a planet (i.e., apples might grow on one planet but not on another). Participants were informed each time they began farming a new planet.4 On prediction trials (Figure 3, top), participants were shown a single crop and asked to rate their “gut feeling” that the crop will yield a profit (i.e., a binary reward). Responses were registered using a mouse-controlled slider-bar. Crops were indicated by color images of produce (fruits and vegetables). The experiment was presented using Psychtoolbox (Brainard, 1997).

Figure 3. Task design.

(Top row) A prediction trial, in which subjects rated their “gut feeling” (using a slider-bar) that a crop will yield a reward. (Bottom row) A choice trial, in which subjects chose between two crops. In both cases participants received (probabilistic) reward feedback (right panels). Receipt of reward is represented by a dollar bill; no reward obtained is represented by a scrambled image of a dollar bill.

After making a response, the participant was presented with probabilistic reward feedback lasting 1000 ms while the response remained on the screen. Reward feedback was signalled by a dollar bill for rewarded outcomes, and by a phase-scrambled dollar bill for unrewarded outcomes. Rewards were generated according to the following process: For each planet, a variable b was drawn from a Beta(1.5,1.5) distribution,5 and then a crop-specific reward probability was drawn from a Beta(ρb, ρ(1 − b)) distribution, with ρ = 5. Participants were told that planets varied in their “fertility”: On some planets, many crops would tend to be profitable (i.e., frequently yield rewards), whereas on other planets few crops would tend to be profitable.
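As a worked check, the planet-level distribution can be read in terms of the model's parameterization of the bias. This assumes the mean/concentration form Beta(ρ0 b0, ρ0(1 − b0)); the mapping below is our reading, not a statement from the original design:

$$
\rho_0 b_0 = 1.5,\quad \rho_0 (1 - b_0) = 1.5 \;\Rightarrow\; \rho_0 = 3,\quad b_0 = 0.5,\quad \operatorname{Var}(b) = \frac{b_0 (1 - b_0)}{\rho_0 + 1} = \frac{0.25}{4} = 0.0625
$$

The crop-level Beta(ρb, ρ(1 − b)) with ρ = 5 has the same form as the arm-level distribution of the model, so each planet is an instance of the hierarchical generative process with crops in the role of arms.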

We used a prediction task (rather than a choice task) in order to disentangle inductive bias from the value of gathering information (Howard, 1966). Because rewards in our task do not depend on behavioral responses, participants cannot take actions to gain information. However, to confirm that participants were able to distinguish the reward probabilities of different crops and were assessing each crop separately, we also included periodic choice trials in which participants chose between two different crops. On these trials (Figure 3, bottom), participants were shown two crops from the current planet and were asked to choose the crop they would prefer to plant. Feedback was then delivered according to the same generative process used in the prediction trials. A cash bonus of 1–3 dollars was awarded based on performance on the choice trials by calculating 10 percent of the participant’s earnings on these trials, thus participants were encouraged to maximize the success of their crops on these trials.

Each planet corresponded to 60 prediction trials (6 planets total), with each crop appearing 4–12 times. The crops were cycled, such that three crops were randomly interleaved at each point in time, and every four trials one crop would be removed and replaced by a new crop. Thus, except for the first and last two crops, each crop appeared in 3 consecutive cycles. Choice trials were presented after every 10 prediction trials, for a total of 6 choice trials per planet.

3.2 Results and discussion

To analyze the “gut feeling” prediction data, we fit several computational learning models to participants’ predictions. These models formalize different assumptions about inductive reward biases. In particular, we compared the Bayesian RL model to simple variations on the basic TD algorithm. The “naïve” TD model initialized values to V1 = 0, and then updated them according to the TD rule (Eq. 7), with a stationary learning rate η that we treated as a free parameter. The “shaping” model incorporated shaping bonuses by initializing V1 > 0. For the shaping model, we treated V1 as a free parameter (thus the naïve model is nested in the shaping model). The Bayesian RL model, as described above, had two free parameters, ρ and b0.

We used participants’ responses on prediction trials in order to fit the free parameters of the models. For this, it was necessary to specify a mapping from learned values to behavioral responses. Letting x denote the set of parameters on which the value function depends in each model, we assumed that the behavioral response on prediction trial t, yt, is drawn from a Gaussian with mean Vt(ct; x) and variance σ² (a free parameter fit to data). Because there is only one crop on each prediction trial, ct refers to the presented crop on trial t. Note also that Vt is implicitly dependent on the reward and choice history.
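Under this response model, the log-likelihood of a participant's slider responses is a sum of Gaussian log-densities. The sketch below computes it. The function name and the numbers in the usage lines are made up for illustration, and the model values V would come from whichever learning model is being fit.

```python
import math


def response_loglik(y, V, sigma2):
    """Sum over prediction trials of log N(y_t; V_t(c_t; x), sigma^2)."""
    return sum(-0.5 * math.log(2 * math.pi * sigma2) - (yt - vt) ** 2 / (2 * sigma2)
               for yt, vt in zip(y, V))


# Usage with made-up responses y and model values V for the presented crops
ll_close = response_loglik([0.6, 0.4], [0.55, 0.45], sigma2=0.05)
ll_far = response_loglik([0.6, 0.4], [0.1, 0.9], sigma2=0.05)
print(ll_close, ll_far)
```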

The free parameters of the models were fit for each participant separately, using Markov chain Monte Carlo (MCMC) methods (Robert and Casella, 2004). A detailed description of our procedure is provided in the Appendix. Briefly, we drew samples from the posterior over parameters and used these to generate model predictions as well as the predictive probability of held-out data using a cross-validation procedure, where we held out one planet while fitting all the others. Cross-validation evaluates the ability of the model to generalize to new data, and is able to identify “over-fitting” of the training data by complex models. We reserved the choice trials for independent validation that participants were discriminating between the reward probabilities for different crops on a planet, and did not use them for model fitting.
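The following sketch shows the two ingredients of this procedure, under assumptions: leave-one-planet-out splits, and a Monte Carlo estimate of the held-out predictive log-likelihood obtained by averaging the likelihood over posterior samples. The MCMC sampler itself is omitted, and the Appendix procedure may differ in detail. Function names and planet labels are hypothetical.

```python
import math


def log_mean_exp(xs):
    """Numerically stable log((1/S) * sum_s exp(x_s))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs) / len(xs))


def leave_one_planet_out(planets):
    """Yield (training planets, held-out planet) pairs, one per planet."""
    for k in range(len(planets)):
        yield planets[:k] + planets[k + 1:], planets[k]


# For each split, heldout_ll[s] would be log p(y_test | x_s) for a posterior sample
# x_s drawn from p(x | y_train); the predictive log-likelihood is log_mean_exp(heldout_ll).
splits = list(leave_one_planet_out(["P1", "P2", "P3", "P4", "P5", "P6"]))
print(len(splits))
```

Averaging likelihoods (not log-likelihoods) over samples is what makes the estimate a predictive probability. The log-mean-exp form avoids underflow when summing many trials.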

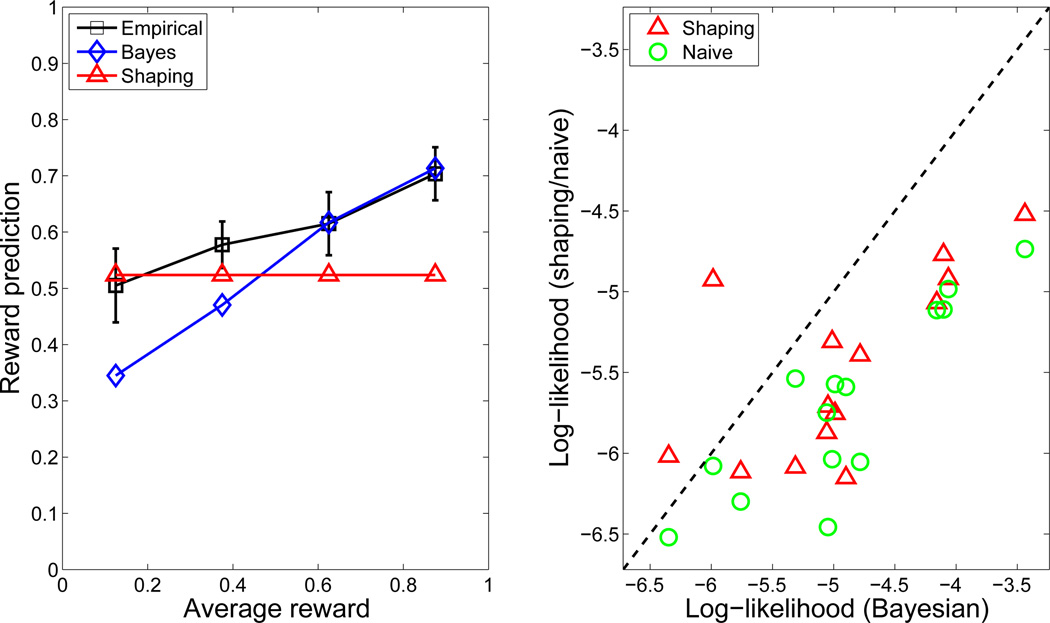

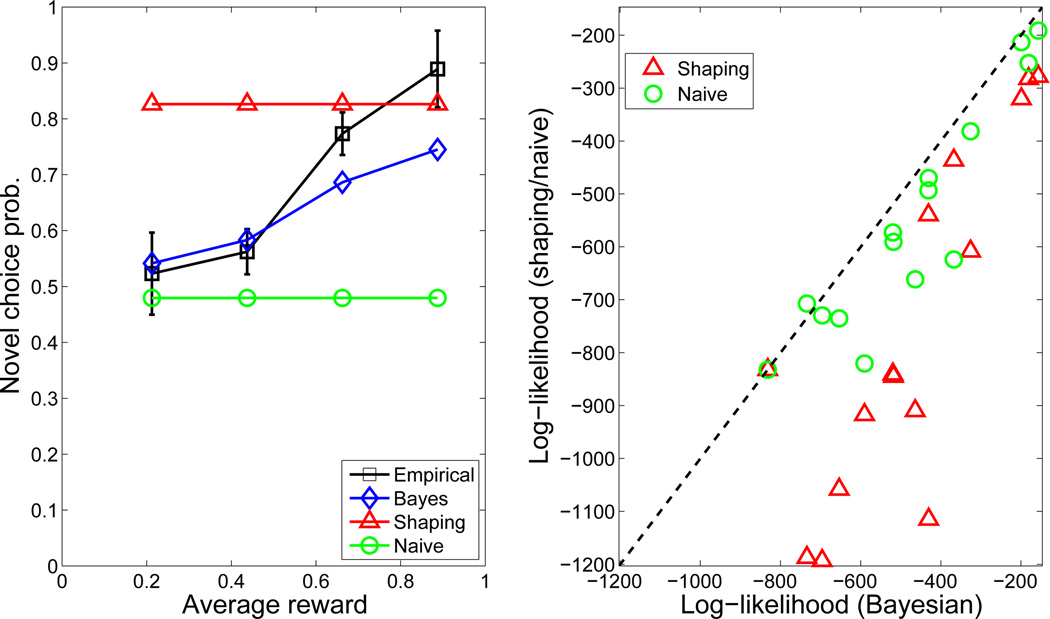

Since we were primarily interested in behavior on trials in which a novel crop was presented, we first analyzed these separately. Figure 4 (left) shows reward predictions for novel crops as a function of average previous reward on a planet (across all crops). Participants exhibited a monotonic increase in reward predictions for a novel crop as a function of average reward, despite having no experience with the crop. This monotonic increase is anticipated by the Bayesian RL model, but not by the shaping model. Participants also appeared to display an a priori bias towards high initial reward predictions (i.e., optimism), based on the fact that initial reward predictions were always greater than 0. The Bayesian RL model was able to capture this bias with the higher-level bias parameter, b0.

Figure 4. Human inductive biases in Experiment 1.

(Left) Empirical and model-based reward predictions for novel crops as a function of average past reward on a planet (across all crops). The average reward only incorporates rewards prior to each response. The naïve RL predictions correspond to a straight line at 0. Predictions were averaged within 4 bins equally spaced across the average reward axis. Error bars denote standard error. (Right) Cross-validated predictive log likelihood of shaping and naïve models relative to the Bayesian model. Points below the diagonal (higher log likelihood) indicate a better fit of the Bayesian model.

Figure 4 (right) shows the cross-validation results for the three models, which favor the Bayesian model. To quantify these results statistically, we computed relative cross-validation scores by subtracting, for each subject, the predictive log-likelihood of the held-out prediction trials under the Bayesian model from the corresponding log-likelihood under the shaping and naïve models. Thus, scores below 0 represent inferior predictive performance relative to the Bayesian model. We performed paired-sample t-tests on the cross-validation scores across participants. The predictive log-likelihoods under the Bayesian model were significantly higher than under the shaping model (t(13) = 3.42, p < 0.005) and the naïve model (t(13) = 6.87, p < 0.00002). The predictive log-likelihood under the shaping model was also significantly higher than under the naïve model (t(13) = 4.44, p < 0.0007).
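The relative scores and the paired comparison can be computed as follows. This sketch uses toy log-likelihood values (not data from the experiment) and computes only the t statistic; obtaining a p-value would additionally require the Student t distribution, for example from a statistics library. Because each score is defined as comparison model minus Bayesian model, a reliably negative mean favors the Bayesian model, and the sign of t simply follows that convention.

```python
import math
from typing import List, Sequence, Tuple


def relative_cv_scores(ll_other: Sequence[float],
                       ll_bayes: Sequence[float]) -> List[float]:
    """Per-participant held-out log-likelihood of a comparison model minus
    that of the Bayesian model; negative values favor the Bayesian model."""
    return [o - b for o, b in zip(ll_other, ll_bayes)]


def paired_t_statistic(scores: Sequence[float]) -> Tuple[float, int]:
    """t statistic and degrees of freedom for testing mean(scores) = 0.

    A paired-sample t-test on two log-likelihood lists is equivalent to a
    one-sample t-test on their per-participant differences.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two participants")
    mean = sum(scores) / n
    var = sum((s - mean) ** 2 for s in scores) / (n - 1)  # unbiased variance
    return mean / math.sqrt(var / n), n - 1


# Toy values for three hypothetical participants
scores = relative_cv_scores([-10.0, -12.0, -9.0], [-8.0, -9.0, -8.0])
t, df = paired_t_statistic(scores)
```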

These results rule out another alternative model that we have not yet discussed. In this model, participants represent the planet context as an additional feature of each trial, which can itself accrue value. When presented with a novel crop, this contextual feature (and its corresponding value) can then guide valuation according to the reinforcement history of other crops on the same planet. The simplest instantiation of such a model would calculate the aggregate value of a crop as the sum of its context-specific and crop-specific values, as in the Rescorla-Wagner model (Rescorla and Wagner, 1972). This type of feature combination is well established in the RL literature, where it is known as a linear function approximation architecture (Sutton and Barto, 1998). However, the precise quantitative predictions of such a model disagree with our findings. To predict the reward correctly, the feature-specific reward predictions must sum to the reward being predicted, so rewards are essentially divided among the features. A novel crop has not yet acquired any crop-specific value, so its value under this context-feature model is carried by the context feature alone; as long as crop-specific values are non-negative, this value will of necessity be less than or equal to the average reward previously experienced on that planet, in contradiction to the results shown in Figure 4. These findings are also consistent with the observation in the animal learning literature that contexts do not acquire value in the same way as punctate cues do (Bouton and King, 1983).
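The argument about the context-feature model can be checked with a small simulation. The sketch below assumes a Rescorla-Wagner learner with one context weight and one weight per crop, equal learning rates for both, and all weights starting at zero; these are assumptions made for illustration, since the text does not fix such details. Under these assumptions every update to a crop weight is mirrored in the context weight, so the context weight equals the sum of the crop weights, and a novel crop, which is valued by the context weight alone, receives exactly $K/(K+1)$ of the average learned value of the $K$ experienced crops.

```python
import random
from typing import List, Tuple


def rw_context_model(trials: List[Tuple[int, int]], n_crops: int,
                     alpha: float = 0.1) -> Tuple[float, List[float]]:
    """Rescorla-Wagner learning with one context weight plus one weight per crop.

    trials: sequence of (crop index, reward in {0, 1}) on a single planet.
    The prediction for crop k is w_context + w_crop[k]; both weights are
    updated with the same prediction error and the same learning rate.
    """
    w_context = 0.0
    w_crop = [0.0] * n_crops
    for k, r in trials:
        delta = r - (w_context + w_crop[k])  # shared prediction error
        w_context += alpha * delta
        w_crop[k] += alpha * delta
    return w_context, w_crop


rng = random.Random(0)
K = 4  # number of crops experienced on the planet
# Hypothetical "fertile" planet: each crop rewarded with probability 0.75
trials = [(t % K, int(rng.random() < 0.75)) for t in range(400)]
w_context, w_crop = rw_context_model(trials, K)
experienced = [w_context + w for w in w_crop]  # learned value of each tried crop
novel_value = w_context                        # untried crop: no crop weight yet
mean_experienced = sum(experienced) / K
print(round(novel_value, 3), round(mean_experienced, 3))
```

The learned values of the experienced crops approximate their reward probabilities, so the novel crop's value falls below the average reward on the planet rather than tracking it.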

An important question concerns whether participants truly learned separate values for each crop or simply collapsed together all the crops on a planet. To address this, we performed a logistic regression analysis on the choice trials to see whether the difference in average reward between two crops is predictive of choice behavior (an intercept term was also included). The regression analysis was performed for each subject separately, and then the regression coefficients were tested for significance using a one-sample t-test. This test showed that the coefficients were significantly greater than zero (t(13) = 5.09, p < 0.0005), indicating that participants were able to discriminate between crops on the basis of their reward history. On average, participants chose the better crop 68% of the time (significantly greater than chance according to a binomial test, p < 0.01).
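A per-participant version of this regression can be fit with a few Newton-Raphson iterations. This is a minimal sketch with toy data: `fit_choice_logistic` is a hypothetical helper, and the group-level one-sample t-test on the fitted slopes is not shown.

```python
import math
from typing import Sequence, Tuple


def fit_choice_logistic(reward_diff: Sequence[float],
                        chose_first: Sequence[int],
                        n_iter: int = 25) -> Tuple[float, float]:
    """Fit P(choose crop 1) = sigmoid(b0 + b1 * reward_diff) by Newton-Raphson.

    reward_diff: average past reward of crop 1 minus that of crop 2, per trial
    chose_first: 1 if crop 1 was chosen on that trial, else 0
    Returns (intercept, slope).
    """
    b0, b1 = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = 0.0            # gradient of the log-likelihood
        h00 = h01 = h11 = 0.0    # entries of the negative Hessian
        for x, y in zip(reward_diff, chose_first):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        if det <= 1e-12:
            break  # degenerate or (near-)separable data: stop updating
        # Newton step: beta += H^{-1} g, where H is the negative Hessian
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Toy choices: crop 1 chosen on 1/4, 2/4 and 3/4 of trials at each difference
diffs = [-0.5, -0.5, -0.5, -0.5, 0.0, 0.0, 0.5, 0.5, 0.5, 0.5]
choices = [0, 0, 0, 1, 0, 1, 1, 1, 1, 0]
intercept, slope = fit_choice_logistic(diffs, choices)
```

For these toy data the maximum-likelihood fit is an intercept of 0 and a slope of 2 ln 3 (about 2.197), because that curve reproduces the observed choice proportions of 0.25, 0.5 and 0.75 exactly.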

In summary, this experiment provides evidence that humans learn at multiple levels of abstraction, such that higher-level knowledge (here, about a planet) is both informed by and constrains learning at lower levels (here, about crops).

4 Experiment 2: manipulating inductive biases in a decision making task

Our previous experiment used a reward prediction task as a way of directly querying participants’ values. However, this design sacrifices the decision-making aspect of RL, the source of rich computational issues such as the exploration-exploitation trade-off. It also makes it difficult to distinguish our experiments from formally similar causal learning experiments; indeed, our computational formalism is closely related to Bayesian theories of causal learning (Glymour, 2003; Kemp et al., 2010). Experiment 2 was therefore designed to study inductive biases in a choice setting, where participants are asked to choose crops to maximize their cumulative rewards.

One problem with translating our paradigm into the choice setting is that choices are primarily driven by relative value, and hence when all the crops are of high or low value, any inductive biases related to the context will be obscured by the relative value of the crops. To address this issue, we asked participants to choose between a continually changing crop and a reference crop that was presented on every trial and always delivered rewards with probability 1/2. Inductive biases can then be revealed by examining the probability that a novel crop will be chosen over the reference crop.

4.1 Methods

4.1.1 Participants

Fifteen Princeton University undergraduate students were compensated $10 for 45 minutes, in addition to a bonus based on performance. All participants gave informed consent and the study was approved by the Princeton University Institutional Review Board.

4.1.2 Materials and procedure

The procedure in Experiment 2 was similar to the choice trials in Experiment 1. Participants were shown two crops from the same planet and were asked to choose the crop with the greater probability of reward. One of the two crops was a reference crop that delivered reward with probability 1/2 across all planets (participants were told this reward probability). Feedback was then delivered probabilistically according to the chosen crop, as in Experiment 1. A cash bonus of $1–$3, calculated as 10 percent of the participant’s earnings, was awarded based on performance on the choice trials.

Each planet involved 100 trials (12 planets total), with a new crop appearing every 9 to 19 trials (chosen from a uniform distribution). Unlike in Experiment 1, the crops were not cycled; instead, a single crop appeared on consecutive trials until it was replaced by a new one. This allowed us to examine learning curves for a single crop. On each planet, participants were presented with a total of 7 to 8 unique crops (not including the reference crop). Planets were divided into equal numbers of “fertile” planets, on which all non-reference crops delivered a reward with probability 0.75, and “infertile” planets, on which all non-reference crops delivered a reward with probability 0.25.

To model the transformation of values into choice probabilities, we used the softmax equation (Sutton and Barto, 1998):

$$P(c_t = i) = \frac{\exp\{\beta V_t(i)\}}{\sum_j \exp\{\beta V_t(j)\}} \tag{12}$$

where β is an inverse temperature parameter that governs the stochasticity of choice behavior and j indexes crops available on the current trial. In all other aspects our fitting and evaluation procedures were identical to those described for Experiment 1.
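Equation 12 can be sketched in a few lines. In this illustration the value assumed for the novel crop (0.7) and the inverse temperature are arbitrary, while the reference crop's value is set to 1/2 to match its announced reward probability.

```python
import math
from typing import List, Sequence


def softmax_choice_probs(values: Sequence[float], beta: float) -> List[float]:
    """P(choose crop i) = exp(beta * V_i) / sum_j exp(beta * V_j)."""
    scaled = [beta * v for v in values]
    m = max(scaled)  # subtract the largest exponent for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]


# Hypothetical values: novel crop 0.7, reference crop 0.5
probs = softmax_choice_probs([0.7, 0.5], beta=5.0)
```

As β grows, choices concentrate on the higher-valued crop; at β = 0 both crops are chosen equally often regardless of value.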

4.2 Results and discussion

Figure 5 summarizes the results of this experiment. The probability of choosing a novel crop on its first presentation increased monotonically as a function of average reward (Figure 5, left), despite no prior experience with the crop, consistent with the Bayesian RL model. As in Experiment 1, the shaping and naïve models were unable to capture this pattern: The cross-validation results (Figure 5, right) confirmed that the Bayesian RL model was quantitatively better at predicting behavior than either alternative. The cross-validation scores for the Bayesian model were significantly higher compared to the shaping model (t(14) = 5.69, p < 0.00005) and the naïve model (t(14) = 3.65, p < 0.005).

Figure 5. Human inductive biases in Experiment 2.

(Left) Empirical and model-based probability of choosing a novel crop as a function of average past reward on a planet (across all crops). Predictions were averaged within 4 bins equally spaced across the average reward axis. Error bars denote standard error. (Right) Cross-validated predictive log likelihood of shaping and naïve models relative to the Bayesian model. Points below the diagonal (higher log likelihood) indicate a better fit of the Bayesian model.

Consistent with the results of Experiment 1, we again found that participants had an a priori bias towards novel crops superimposed on their inductive bias, as evidenced by the fact that the novel crops were chosen over 40% of the time even when the previous crops were rewarded only on 20 percent of the trials (compared to 50 percent for the reference crop). This bias was captured by fitting the top-level bias parameter ρ0.

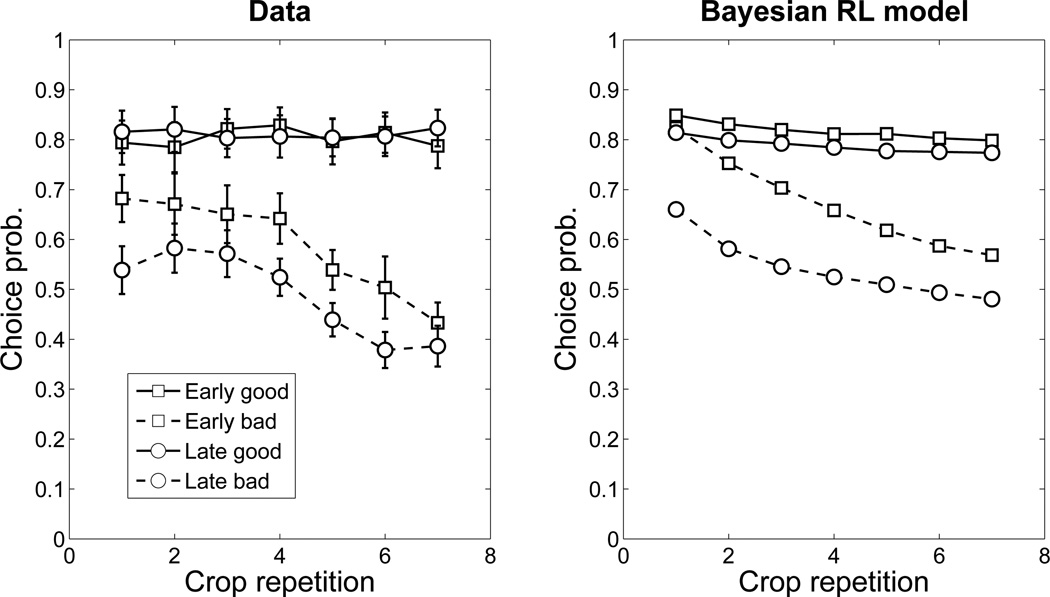

Our experimental design provided us with an opportunity to study how participants use inductive biases to balance the exploration-exploitation trade-off. In particular, a stronger inductive bias should lead to less exploration and more exploitation, since the participant is more confident in his/her reward predictions. According to the Bayesian RL model, the inductive bias for novel crops will be stronger at the end of a planet than at the beginning, since more evidence will have accumulated about the average value of crops on a planet. Accordingly, we divided crops according to whether they appeared early in the planet (within the first 25 trials) or late (after the first 25 trials).⁶ We further distinguished between “good” crops (with reward probability 0.75) and “bad” crops (with reward probability 0.25). We then examined the learning curves for each of these categories of crops (Figure 6, left).

Figure 6. Learning curves in Experiment 2.

(Left) Empirical learning curves. (Right) Predicted learning curves. Each curve shows the probability of choosing a non-reference crop as a function of the number of times that crop has appeared, broken down by whether that crop is on a “good” or “bad” planet, and whether the trial occurs late or early in the planet.

For bad crops, the Bayesian RL model predicts a stronger inductive bias late in the block compared to early in the block (Figure 6, right), a pattern that is exhibited by participants’ choice behavior (Figure 6, left). Thus, participants appear to explore less as their inductive biases become stronger. The main discrepancy between the model and data is the slightly lower novel choice probability on the first repetition of a bad crop late in the block. In addition, the model appears to predict an overall higher novel choice probability for bad crops than observed empirically.

The Bayesian RL model also predicts a smaller difference in early/late performance for bad crops compared to good crops. Due to the baseline optimistic novelty bias described above, participants will (according to the model) initially over-sample a rewarding novel option and then decrease (or at least not increase) their choice of this option so as to calibrate their choice probability with the reward probability. This pattern is manifested by the fitted Bayesian RL model (Figure 6, right) for the good crops. Empirically, however, this predicted decline was too small to detect (Figure 6, left), probably because of a ceiling effect.

5 General discussion

We hypothesized, on the basis of a hierarchical Bayesian RL model, that preferences for novel options are affected by the value of other options experienced in the same context. The results of two experiments provide support for this hypothesis, suggesting that inductive biases play a role in human RL by influencing reward predictions for novel options. Experiment 1 showed that these predictions corresponded well with those of a Bayesian RL model that learned inductive biases from feedback. The essential idea underlying this model is that reward predictions for different options within a single context influence each other, such that the reward prediction for a new option in the same context will reflect the central tendency of rewards for previously experienced options. Experiment 2 replicated the results of Experiment 1 in a choice task, showing that participants are more likely to choose novel options in a reward-rich (compared to a reward-poor) context. In addition, Experiment 2 showed that participants’ inductive biases influenced how they balanced the exploration-exploitation trade-off: participants spent less time exploring when they had stronger inductive biases, suggesting that inductive biases accelerate the search for valuable options.

These findings contribute to a more complex picture of the brain’s RL faculty than previously portrayed (e.g., Houk et al., 1995; Montague et al., 1996; Schultz et al., 1997). In this new picture, structured statistical knowledge shapes reward predictions and guides option search (Acuña and Schrater, 2010; Gershman and Niv, 2010). It has been proposed that humans exploit structured knowledge to decompose their action space into a set of sub-problems that can be solved in parallel (Gershman et al., 2009; Botvinick et al., 2009; Ribas-Fernandes et al., 2011). The current work suggests that humans will also use structured knowledge to couple together separate options and learn about them jointly, a form of generalization. An important question for future research is how this coupling is learned. One possibility is that humans adaptively partition their action space; related ideas have been applied to clustering of states for RL (Redish et al., 2007; Gershman et al., 2010) and category learning (Anderson, 1991; Love et al., 2004).

The animal learning literature is rich with examples of “generalization decrement,” the observation that a change in conditioned stimulus properties results in reduced responding (Domjan, 2003). Our results suggest that the effects of stimulus change on responding may be more adaptive: If the animal has acquired a high-level belief that stimuli in an environment tend to be rewarding (or punishing), one would expect stimulus change to maintain a high level of responding. In other words, generalization (according to our account) should depend crucially on the abstract knowledge acquired by the animal from its experience, resulting in either decrement or increment in responding. Urcelay and Miller (2010) have reviewed a number of studies showing evidence of such abstraction in rats.

A conceptually related set of ideas has been investigated in the causal learning literature. For example, Waldmann and Hagmayer (2006) showed that people will generalize causal predictions from one set of exemplars to another if the exemplars belong to the same category (see also Lien and Cheng, 2000; Kemp et al., 2010). In a similar vein, Gopnik and Sobel (2000) showed that young children use object categories to predict the causal powers of a novel object. Our work, in particular the choice task explored in Experiment 2, distinguishes itself from studies of causal learning in that participants are asked to make decisions that optimize rewards. The incentive structure of RL introduces computational problems that are irrelevant to traditional studies of causal learning, such as how to balance exploration and exploitation, and it may also implicate different underlying neural structures. Still, our results are consistent with what has been shown for causal learning.

The causal and category learning literatures offer alternative models that may be able to explain our results, such as exemplar models (Nosofsky, 1986) that are yet another mechanism for carrying out Bayesian inference (Shi et al., 2010). Exemplar models require a similarity function between exemplars; with complete freedom to choose this function, it can be specified to produce the same predictions as the Bayesian model. The choice of similarity function can also be seen as implicitly embodying assumptions about the generative process that we are trying to explicitly capture in our Bayesian analysis.

Both exemplar models and TD models are specified at the algorithmic level (Marr, 1982). The primary goal of this paper was to develop a computational-level theory of novelty. As such, we are not committed to any particular mechanistic implementation of the theory. The reason for introducing TD models was to show how a particular set of mechanistic ideas could be connected explicitly to this computational-level theory. This specific implementation was motivated by previous work on RL in humans and animals, which supports an error-driven learning rule that incrementally estimates reward predictions (Rescorla and Wagner, 1972; Schultz et al., 1997; Niv, 2009).

The category literature has also emphasized the question of how people generalize properties to novel objects. Shepard (1987) famously proposed his “universal law of generalization,” according to which generalization gradients decay approximately exponentially as a function of the psychological distance between novel and previously experienced objects. Shepard derived his universal law from assumptions about the geometric structure of natural kinds in psychological space (the consequential region) and the probability distribution over consequential regions. Subsequently, Gluck (1991) showed how, given an appropriate choice of stimulus representation, exponential-like generalization gradients could be derived from precisely the sort of associative model that we have investigated in this paper.

Dopamine has long played a central role in the neurophysiology of novelty (Hughes, 2007). The “shaping bonus” theory of Kakade and Dayan (2002b), which posits that reward predictions are initialized optimistically, has proven useful in rationalizing the relationship between dopamine and novelty in RL tasks (Wittmann et al., 2008). Our model predicts aspects of novelty responses that go beyond shaping bonuses. In particular, the dopamine signal should be systematically enhanced for novel cues when other cues in the same context are persistently rewarded, relative to a context in which cues are persistently unrewarded. In essence, we explain how the shaping bonus should be dynamically set.

In conclusion, we believe that novelty is not as simple as previously assumed. We have proposed, from a statistical point of view, that responses to novelty are inductive in nature, guiding how decision makers evaluate and search through the set of options. Our modifications of a classic RL model allowed it to accommodate these statistical considerations, providing a better fit to behavior. The inductive interpretation offers, we believe, a new path towards unraveling the puzzle of novelty.

Acknowledgments

We thank Nathaniel Daw for many fruitful discussions and Quentin Huys for comments on an earlier version of the manuscript. This work was funded by a Quantitative Computational Neuroscience training grant to S.J.G. from the National Institute of Mental Health and by a Sloan Research Fellowship to Y.N.

Appendix

Model fitting and evaluation

Free parameters were fit to behavior, for each participant separately, using MCMC: samples were drawn from a Markov chain whose stationary distribution corresponds to the posterior distribution over model parameters conditional on the observed behavioral data. In particular, we applied the Metropolis algorithm (see Robert and Casella, 2004, for more information) using a Gaussian proposal distribution. Letting $x_m$ denote the parameter vector at iteration $m$, the Metropolis algorithm proceeds by proposing a new parameter vector $x'$ and accepting it with probability

$$\min\left\{1,\ \frac{P(y \mid x', r, c)\,P(x')}{P(y \mid x_m, r, c)\,P(x_m)}\right\} \tag{13}$$

If the proposal is rejected, $x_{m+1}$ is set to $x_m$.
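The Metropolis procedure described above can be sketched as a random-walk sampler. The step size, the seed, and the `log_post` callable (the unnormalized log posterior evaluated on real-line parameters) are assumptions for illustration; because the Gaussian proposal is symmetric, the acceptance test needs only the ratio of posterior densities in Equation 13.

```python
import math
import random
from typing import Callable, List, Sequence


def metropolis(log_post: Callable[[List[float]], float], x0: Sequence[float],
               n_iter: int, step: float, seed: int = 0) -> List[List[float]]:
    """Random-walk Metropolis with an isotropic Gaussian proposal.

    log_post: unnormalized log posterior, log P(y | x, r, c) + log P(x),
              evaluated on unconstrained (real-line) parameters.
    Returns all n_iter samples; burn-in is discarded by the caller.
    """
    rng = random.Random(seed)
    x = list(x0)
    lp = log_post(x)
    samples = []
    for _ in range(n_iter):
        proposal = [xi + rng.gauss(0.0, step) for xi in x]
        lp_prop = log_post(proposal)
        # Accept with probability min(1, posterior ratio); 1 - U lies in (0, 1]
        if math.log(1.0 - rng.random()) < lp_prop - lp:
            x, lp = proposal, lp_prop
        samples.append(list(x))  # on rejection, x_{m+1} = x_m
    return samples


# Sanity check on a standard normal target (illustrative only)
samples = metropolis(lambda x: -0.5 * x[0] ** 2, [0.0], n_iter=20000, step=1.0, seed=1)
kept = [s[0] for s in samples[2000:]]
```

With the settings reported below, one would call the sampler with `n_iter=3000` and drop the first 500 samples.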

We placed the following priors on the parameters, with the goal of making relatively weak assumptions:

| (14) |

| (15) |

| (16) |

| (17) |

| (18) |

| (19) |

| (20) |

Note that ρ, ρ0 and b0 are specific to the Bayesian RL model, η is specific to the naïve and shaping models, and V1 is specific to the shaping model. All models have a noise parameter σ. For each model, to ensure that the Metropolis proposals were in the correct range, we transformed the parameters to the real line (using exponential or logistic transformations) during sampling, inverting these transformations when calculating the likelihood and prior. Note that in producing behavioral predictions, the bias parameter b was integrated out numerically.
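The transformations to the real line can be sketched as follows. Which parameters are treated as positive (e.g. σ, ρ) and which as lying in (0, 1) (e.g. η) is our assumption. The log-Jacobian helper is the standard correction required when a prior defined on the original scale is sampled in transformed coordinates; it is included for completeness and is not described in the text above.

```python
import math


def to_unconstrained(value: float, kind: str) -> float:
    """Map a constrained parameter to the real line."""
    if kind == "positive":   # e.g. a noise s.d. or concentration: log
        return math.log(value)
    if kind == "unit":       # e.g. a learning rate in (0, 1): logit
        return math.log(value) - math.log1p(-value)
    raise ValueError(f"unknown kind: {kind}")


def to_constrained(z: float, kind: str) -> float:
    """Inverse map, applied before evaluating the likelihood and prior."""
    if kind == "positive":
        return math.exp(z)
    if kind == "unit":       # numerically stable logistic function
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)
    raise ValueError(f"unknown kind: {kind}")


def log_jacobian(z: float, kind: str) -> float:
    """log |d value / d z| for the inverse map above."""
    if kind == "positive":   # d exp(z)/dz = exp(z)
        return z
    if kind == "unit":       # d sigmoid(z)/dz = sigmoid(z) * sigmoid(-z)
        return -abs(z) - 2.0 * math.log1p(math.exp(-abs(z)))
    raise ValueError(f"unknown kind: {kind}")
```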

After M iterations of the Metropolis algorithm, we had M samples approximately distributed according to the posterior $P(x \mid y, r, c)$. We set M = 3000 and discarded the first 500 as “burn-in” (Robert and Casella, 2004). For cross-validation, we repeated this procedure for each cross-validation fold, holding out one planet while estimating parameters for the remaining planets. Model-based reward predictions $\hat{y}_t$ were obtained by averaging the reward predictions under the posterior distribution:

$$\hat{y}_t = \mathbb{E}\left[V_t(c_t; x) \mid y, r, c\right] \approx \frac{1}{M}\sum_{m=1}^{M} V_t(c_t; x_m) \tag{21}$$

As M → ∞, this approximation approaches the exact posterior expectation.
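Equation 21 amounts to averaging model values over the retained samples. In this sketch, `value_fn` is a hypothetical helper that re-runs a model with parameter vector $x$ and returns its value for the presented crop on every trial; the default burn-in of 500 matches the setting reported above.

```python
from typing import Callable, List, Sequence


def posterior_predictions(samples: Sequence[Sequence[float]],
                          value_fn: Callable[[Sequence[float]], List[float]],
                          burn_in: int = 500) -> List[float]:
    """Monte Carlo estimate of y_hat_t = E[V_t(c_t; x) | data] for every trial.

    samples:  parameter vectors x_m from the sampler, in order
    value_fn: hypothetical helper returning [V_1(c_1; x), ..., V_T(c_T; x)]
    burn_in:  number of initial samples to discard
    """
    kept = list(samples[burn_in:])
    if not kept:
        raise ValueError("no samples left after burn-in")
    totals = None
    for x in kept:
        v = value_fn(x)
        totals = list(v) if totals is None else [a + b for a, b in zip(totals, v)]
    return [t / len(kept) for t in totals]
```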

Footnotes

It is important to keep in mind that the generative model represents the agent’s putative internal model of the environment, as distinct from our model of the agent.

We have used a non-standard parameterization of the beta distribution because it allows us to more clearly separate out mean and variance components.

We use a simplified version of TD learning that estimates rewards rather than returns (cumulative future rewards) as is more common in RL theory (Sutton and Barto, 1998). The latter would significantly complicate formal analysis, whereas the former has the advantage of being appropriate for the bandit problems we investigate. Furthermore, the simplified model has been used extensively to model human choice behavior and brain activity in bandit tasks (e.g., Daw et al., 2006b; Schönberg et al., 2007).

Although time and planetary context are confounded in this experiment (i.e., crops experienced on a planet are also presented nearby in time), our model is neutral with respect to what defines context. As long as the crops on a planet are grouped together, this confound does not affect our model predictions.

We chose to use a Beta(1.5,1.5) distribution instead of a uniform distribution to avoid near-deterministic reward probabilities.

Qualitatively similar results were obtained with a symmetric (pre-50/post-50) split, but we found that results with the asymmetric split were less noisy.

References

- Acuña D, Schrater P. Structure learning in human sequential decision-making. PLoS Computational Biology. 2010;6(12):221–229. doi: 10.1371/journal.pcbi.1001003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson J. The adaptive nature of human categorization. Psychological Review. 1991;98(3):409–429. [Google Scholar]

- Bardo M, Bevins R. Conditioned place preference: what does it add to our preclinical understanding of drug reward? Psychopharmacology. 2000;153(1):31–43. doi: 10.1007/s002130000569. [DOI] [PubMed] [Google Scholar]

- Barnett S. Experiments on “neophobia” in wild and laboratory rats. British Journal of Psychology. 1958;49:195–201. doi: 10.1111/j.2044-8295.1958.tb00657.x. [DOI] [PubMed] [Google Scholar]

- Barto A. Adaptive Critics and the Basal Ganglia. In: Houk J, Davis J, Beiser D, editors. Models of Information Processing in the Basal Ganglia. MIT Press; 1995. pp. 215–232. [Google Scholar]

- Bayer H, Glimcher P. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47(1):129–141. doi: 10.1016/j.neuron.2005.05.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behrens T, Woolrich M, Walton M, Rushworth M. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10(9):1214–1221. doi: 10.1038/nn1954. [DOI] [PubMed] [Google Scholar]

- Berlyne D. Conict, Arousal, and Curiosity. New York: McGraw-Hill; 1960. [Google Scholar]

- Berlyne D. Curiosity and exploration. Science. 1966;153(3731):25–33. doi: 10.1126/science.153.3731.25. [DOI] [PubMed] [Google Scholar]

- Berlyne D, Koenig I, Hirota T. Novelty, arousal, and the reinforcement of diversive exploration in the rat. Journal of Comparative and Physiological Psychology. 1966;62(2):222–226. doi: 10.1037/h0023681. [DOI] [PubMed] [Google Scholar]

- Bevins R. Novelty seeking and reward: Implications for the study of high-risk behaviors. Current Directions in Psychological Science. 2001;10(6):189–193. [Google Scholar]

- Blanchard R, Kelley M, Blanchard D. Defensive reactions and exploratory behavior in rats. Journal of Comparative and Physiological Psychology. 1974;87(6):1129–1133. [Google Scholar]

- Botvinick M, Niv Y, Barto A. Hierarchically organized behavior and its neural foundations: A reinforcement learning perspective. Cognition. 2009;113(3):262–280. doi: 10.1016/j.cognition.2008.08.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton M, King D. Contextual control of the extinction of conditioned fear: Tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes. 1983;9(3):248–265. [PubMed] [Google Scholar]

- Brafman R, Tennenholtz M. R-max-a general polynomial time algorithm for nearoptimal reinforcement learning. The Journal of Machine Learning Research. 2003;3:213–231. [Google Scholar]

- Brainard D. The psychophysics toolbox. Spatial Vision. 1997;10(4):433–436. [PubMed] [Google Scholar]

- Cohen J, McClure S, Yu A. Should I stay or should I go? How the human brain manages the trade-off between exploitation and exploration. Philosophical Transactions of the Royal Society B: Biological Sciences. 2007;362(1481):933–942. doi: 10.1098/rstb.2007.2098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corey D. The determinants of exploration and neophobia. Neuroscience & Biobehavioral Reviews. 1978;2(4):235–253. [Google Scholar]

- Courville A, Daw N, Touretzky D. Bayesian theories of conditioning in a changing world. Trends in Cognitive Sciences. 2006;10(7):294–300. doi: 10.1016/j.tics.2006.05.004. [DOI] [PubMed] [Google Scholar]

- Cowan P. The new object reaction of Rattus rattus L.: the relative importance of various cues. Behavioral Biology. 1976;16(1):31–44. doi: 10.1016/s0091-6773(76)91095-6. [DOI] [PubMed] [Google Scholar]

- Daw N, Courville A, Touretzky D. Representation and timing in theories of the dopamine system. Neural Computation. 2006a;18(7):1637–1677. doi: 10.1162/neco.2006.18.7.1637. [DOI] [PubMed] [Google Scholar]

- Daw N, O'Doherty J, Dayan P, Seymour B, Dolan R. Cortical substrates for exploratory decisions in humans. Nature. 2006b;441(7095):876–879. doi: 10.1038/nature04766. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Day J, Roitman M, Wightman R, Carelli R. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nature Neuroscience. 2007;10(8):1020–1028. doi: 10.1038/nn1923. [DOI] [PubMed] [Google Scholar]

- Dayan P, Niv Y, Seymour B, D Daw N. The misbehavior of value and the discipline of the will. Neural Networks. 2006;19(8):1153–1160. doi: 10.1016/j.neunet.2006.03.002. [DOI] [PubMed] [Google Scholar]

- Dearden R, Friedman N, Russell S. Bayesian q-learning. Proceedings of the National Conference on Artificial Intelligence. 1998:761–768. [Google Scholar]

- Domjan M. The Principles of Learning and Behavior. Thomson/Wadsworth; 2003. [Google Scholar]

- Engel Y, Mannor S, Meir R. Bayes meets bellman: The gaussian process approach to temporal difference learning. International Conference on Machine Learning. 2003;20:154–162. [Google Scholar]

- Ennaceur A, Delacour J. A new one-trial test for neurobiological studies of memory in rats. 1: Behavioral data. Behavioural Brain Research. 1988;31(1):47–59. doi: 10.1016/0166-4328(88)90157-x. [DOI] [PubMed] [Google Scholar]

- Fehrer E. The effects of hunger and familiarity of locale on exploration. Journal of Comparative and Physiological Psychology. 1956;49(6):549–552. doi: 10.1037/h0047540. [DOI] [PubMed] [Google Scholar]

- File S, Day S. Effects of time of day and food deprivation on exploratory activity in the rat. Animal Behaviour. 1972;20(4):758–762. doi: 10.1016/s0003-3472(72)80148-9. [DOI] [PubMed] [Google Scholar]

- Friston K. Hierarchical models in the brain. PLoS Computational Biology. 2008;4(11):e1000211. doi: 10.1371/journal.pcbi.1000211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gershman S, Blei D, Niv Y. Context, learning, and extinction. Psychological Review. 2010;117(1):197–209. doi: 10.1037/a0017808. [DOI] [PubMed] [Google Scholar]

- Gershman S, Niv Y. Learning latent structure: carving nature at its joints. Current Opinion in Neurobiology. 2010;20(2):251–256. doi: 10.1016/j.conb.2010.02.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gershman S, Pesaran B, Daw N. Human reinforcement learning subdivides structured action spaces by learning effector-specific values. Journal of Neuroscience. 2009;29(43):13524–13531. doi: 10.1523/JNEUROSCI.2469-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gittins J. Multi-armed Bandit Allocation Indices. John Wiley & Sons Inc.; 1989. [Google Scholar]

- Gluck M. Stimulus generalization and representation in adaptive network models of category learning. Psychological Science. 1991;2(1):50–55. [Google Scholar]

- Gluck M, Bower G. Evaluating an adaptive network model of human learning. Journal of Memory and Language. 1988a;27(2):166–195. [Google Scholar]

- Gluck M, Bower G. From conditioning to category learning: An adaptive network model. Journal of Experimental Psychology: General. 1988b;117(3):227–247. doi: 10.1037//0096-3445.117.3.227. [DOI] [PubMed] [Google Scholar]

- Glymour C. Learning, prediction and causal bayes nets. Trends in Cognitive Sciences. 2003;7(1):43–48. doi: 10.1016/s1364-6613(02)00009-8. [DOI] [PubMed] [Google Scholar]

- Gopnik A, Sobel D. Detecting blickets: How young children use information about novel causal powers in categorization and induction. Child Development. 2000:1205–1222. doi: 10.1111/1467-8624.00224. [DOI] [PubMed] [Google Scholar]

- Griffiths T, Chater N, Kemp C, Perfors A, Tenenbaum J. Probabilistic models of cognition: exploring representations and inductive biases. Trends in Cognitive Sciences. 2010;14(8):357–364. doi: 10.1016/j.tics.2010.05.004. [DOI] [PubMed] [Google Scholar]

- Hennessy J, Levin R, Levine S. Influence of experiential factors and gonadal hormones on pituitary-adrenal response of the mouse to novelty and electric shock. Journal of Comparative and Physiological Psychology. 1977;91(4):770–777. doi: 10.1037/h0077368. [DOI] [PubMed] [Google Scholar]

- Hollerman J, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nature Neuroscience. 1998;1(4):304–309. doi: 10.1038/1124. [DOI] [PubMed] [Google Scholar]

- Horvitz J, Stewart T, Jacobs B. Burst activity of ventral tegmental dopamine neurons is elicited by sensory stimuli in the awake cat. Brain Research. 1997;759(2):251–258. doi: 10.1016/s0006-8993(97)00265-5. [DOI] [PubMed] [Google Scholar]

- Houk J, Adams J, Barto A. A model of how the basal ganglia generate and use neural signals that predict reinforcement. In: Houk J, Davis J, Beiser D, editors. Models of Information Processing in the Basal Ganglia. MIT Press; 1995. pp. 249–270. [Google Scholar]

- Howard R. Information value theory. IEEE Transactions on Systems Science and Cybernetics. 1966;2(1):22–26. [Google Scholar]

- Hughes R. Neotic preferences in laboratory rodents: Issues, assessment and substrates. Neuroscience & Biobehavioral Reviews. 2007;31(3):441–464. doi: 10.1016/j.neubiorev.2006.11.004. [DOI] [PubMed] [Google Scholar]

- Kakade S, Dayan P. Acquisition and extinction in autoshaping. Psychological Review. 2002a;109(3):533–544. doi: 10.1037/0033-295x.109.3.533. [DOI] [PubMed] [Google Scholar]

- Kakade S, Dayan P. Dopamine: Generalization and bonuses. Neural Networks. 2002b;15(4–6):549–559. doi: 10.1016/s0893-6080(02)00048-5.

- Kemp C, Goodman N, Tenenbaum J. Learning to learn causal models. Cognitive Science. 2010;34(7):1185–1243. doi: 10.1111/j.1551-6709.2010.01128.x.

- Kemp C, Perfors A, Tenenbaum J. Learning overhypotheses with hierarchical Bayesian models. Developmental Science. 2007;10(3):307–321. doi: 10.1111/j.1467-7687.2007.00585.x.

- King D, Appelbaum J. Effect of trials on “emotionality” behavior of the rat and mouse. Journal of Comparative and Physiological Psychology. 1973;85(1):186–194. doi: 10.1037/h0034887.

- Lien Y, Cheng P. Distinguishing genuine from spurious causes: A coherence hypothesis. Cognitive Psychology. 2000;40(2):87–137. doi: 10.1006/cogp.1999.0724.

- Love B, Medin D, Gureckis T. SUSTAIN: A network model of category learning. Psychological Review. 2004;111(2):309. doi: 10.1037/0033-295X.111.2.309.

- Lucas C, Griffiths T. Learning the form of causal relationships using hierarchical Bayesian models. Cognitive Science. 2010;34(1):113–147. doi: 10.1111/j.1551-6709.2009.01058.x.

- Marr D. Vision. Freeman; 1982.

- Mitchell T. Machine Learning. McGraw-Hill; 1997.

- Montague P, Dayan P, Sejnowski T. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. The Journal of Neuroscience. 1996;16(5):1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- Myers A, Miller N. Failure to find a learned drive based on hunger; evidence for learning motivated by exploration. Journal of Comparative and Physiological Psychology. 1954;47(6):428–436. doi: 10.1037/h0062664.

- Ng A, Harada D, Russell S. Policy invariance under reward transformations: Theory and application to reward shaping. In: Proceedings of the Sixteenth International Conference on Machine Learning; 1999.

- Nissen H. A study of exploratory behavior in the white rat by means of the obstruction method. Journal of Genetic Psychology. 1930;37:361–376.

- Niv Y. Reinforcement learning in the brain. Journal of Mathematical Psychology. 2009;53(3):139–154.

- Nosofsky R. Attention, similarity, and the identification–categorization relationship. Journal of Experimental Psychology: General. 1986;115(1):39–57. doi: 10.1037//0096-3445.115.1.39.

- Payzan-LeNestour E, Bossaerts P. Risk, unexpected uncertainty, and estimation uncertainty: Bayesian learning in unstable settings. PLoS Computational Biology. 2011;7(1):e1001048. doi: 10.1371/journal.pcbi.1001048.

- Pearl J. Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann; 1988.

- Rajecki D. Effects of prenatal exposure to auditory or visual stimulation on postnatal distress vocalizations in chicks. Behavioral Biology. 1974;11(4):525–536. doi: 10.1016/s0091-6773(74)90845-1.

- Rao R. Decision Making Under Uncertainty: A Neural Model Based on Partially Observable Markov Decision Processes. Frontiers in Computational Neuroscience. 2010;4:146. doi: 10.3389/fncom.2010.00146.

- Redish A, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: implications for addiction, relapse, and problem gambling. Psychological Review. 2007;114(3):784–805. doi: 10.1037/0033-295X.114.3.784.

- Reed P, Mitchell C, Nokes T. Intrinsic reinforcing properties of putatively neutral stimuli in an instrumental two-lever discrimination task. Animal Learning and Behavior. 1996;24(1):38–45.

- Reichel C, Bevins R. Competition between the conditioned rewarding effects of cocaine and novelty. Behavioral Neuroscience. 2008;122(1):140–150. doi: 10.1037/0735-7044.122.1.140.

- Rescorla R, Wagner A. Variations in the effectiveness of reinforcement and nonreinforcement. In: Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts; 1972.

- Ribas-Fernandes J, Solway A, Diuk C, McGuire J, Barto A, Niv Y, Botvinick M. A neural signature of hierarchical reinforcement learning. Neuron. 2011;71(2):370–379. doi: 10.1016/j.neuron.2011.05.042.

- Robert C, Casella G. Monte Carlo Statistical Methods. Springer Verlag; 2004.

- Schönberg T, Daw N, Joel D, O'Doherty J. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. The Journal of Neuroscience. 2007;27(47):12860–12867. doi: 10.1523/JNEUROSCI.2496-07.2007.

- Schultz W. Predictive reward signal of dopamine neurons. Journal of Neurophysiology. 1998;80(1):1–27. doi: 10.1152/jn.1998.80.1.1.

- Schultz W, Dayan P, Montague P. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

- Sheldon A. Preference for familiar versus novel stimuli as a function of the familiarity of the environment. Journal of Comparative and Physiological Psychology. 1969;67(4):516–521.

- Shepard R. Toward a universal law of generalization for psychological science. Science. 1987;237(4820):1317–1323. doi: 10.1126/science.3629243.

- Shi L, Griffiths T, Feldman N, Sanborn A. Exemplar models as a mechanism for performing Bayesian inference. Psychonomic Bulletin & Review. 2010;17(4):443–464. doi: 10.3758/PBR.17.4.443.

- Steyvers M, Lee M, Wagenmakers E. A Bayesian analysis of human decision-making on bandit problems. Journal of Mathematical Psychology. 2009;53(3):168–179.

- Suri R, Schultz W. A neural network model with dopamine-like reinforcement signal that learns a spatial delayed response task. Neuroscience. 1999;91(3):871–890. doi: 10.1016/s0306-4522(98)00697-6.

- Sutton R, Barto A. Reinforcement Learning: An Introduction. MIT Press; 1998.

- Urcelay G, Miller R. On the generality and limits of abstraction in rats and humans. Animal Cognition. 2010;13(1):21–32. doi: 10.1007/s10071-009-0295-z.

- Waldmann M, Hagmayer Y. Categories and causality: The neglected direction. Cognitive Psychology. 2006;53(1):27–58. doi: 10.1016/j.cogpsych.2006.01.001.

- Weiskrantz L, Cowey A. The aetiology of food reward in monkeys. Animal Behaviour. 1963;11(2–3):225–234.

- Weizmann F, Cohen L, Pratt R. Novelty, familiarity, and the development of infant attention. Developmental Psychology. 1971;4(2):149–154.

- Widrow B, Hoff M. Adaptive switching circuits. In: IRE WESCON Convention Record. Vol. 4. New York: IRE; 1960. pp. 96–104.

- Wittmann B, Daw N, Seymour B, Dolan R. Striatal activity underlies novelty-based choice in humans. Neuron. 2008;58(6):967–973. doi: 10.1016/j.neuron.2008.04.027.

- Zajonc R. Mere exposure: A gateway to the subliminal. Current Directions in Psychological Science. 2001;10(6):224–228.