Abstract

Background

Functional MRI (fMRI) based on language tasks has been used in pre-surgical language mapping in patients with lesions in or near putative language areas. However, patients who have difficulty performing the tasks because of neurological deficits may produce unreliable or uninterpretable results. In this study, we investigate the feasibility of using movie-watching fMRI for language mapping.

Methods

A 7-min movie clip with contrasting speech and non-speech segments was shown to 22 right-handed healthy subjects. Based on all subjects' language functional regions-of-interest, six language response regions were defined, within which a language response model (LRM) was derived by extracting the main temporal activation profile. Using a leave-one-out procedure, each individual's language areas were identified as the areas whose temporal responses were highly correlated with the LRM derived from an independent group of subjects.

Results

Compared with antonym generation task-based fMRI, movie-watching fMRI generated language maps with more localized activations in the left frontal language area, larger activations in the left temporoparietal language area, and significant activations in their right-hemisphere homologues. In two brain tumor patients, movie-watching fMRI analyzed with the LRM derived from the healthy subjects mapped putative language areas, whereas their task-based fMRI maps were less robust and noisier.

Conclusions

These results suggest that it is feasible to use this novel “task-free” paradigm as a complementary tool for fMRI language mapping when patients cannot perform the tasks. Its deployment in more neurosurgical patients and validation against gold-standard techniques require further investigation.

Keywords: movie-watching fMRI, pre-surgical planning, language mapping, naturalistic language stimuli, task-based fMRI

Introduction

Over the past two decades, functional magnetic resonance imaging (fMRI) for in vivo mapping of language function has revolutionized the study of language1,2. Its clinical application has focused on mapping individual patients' language areas for neurosurgical planning, owing to its non-invasiveness and pre-surgical availability3,4. Conventional language fMRI identifies individual patients' language areas by comparing the blood-oxygen-level dependent (BOLD) signal change while patients perform language tasks vs. a control condition. This task-based fMRI has been used to identify the language-dominant hemisphere and to localize critical language areas, and has generally been shown to be concordant with the gold-standard language mapping techniques, i.e., intracarotid amytal testing (Wada test) and electro-cortical stimulation (ECS)5-8.

Language tasks for fMRI pre-surgical mapping include object naming, word generation/categorization, and sentence completion; these are typically implemented as blocked-design paradigms. These tasks are relatively demanding for neurosurgical patients to understand and perform3. Because the reliability of fMRI results depends heavily on task performance, patients who perform poorly because of aphasia or other neurological deficits may fail the task entirely or yield inaccurate findings, and thus do not benefit from this useful surgical planning technique3,9. Furthermore, administering strictly timed tests to patients requires expertise beyond that available at many MRI centers10. To overcome the limitations of task-based language fMRI, we have previously investigated resting-state fMRI as a “task-free” paradigm that does not require patients to perform any task11. In the current study, we propose another form of “task-free” fMRI paradigm using naturalistic language stimuli, i.e., a movie-watching (or natural-viewing) condition, during which patients only need to watch a movie clip in the scanner.

Recently, naturalistic stimuli (passive viewing of movie clips) have been used in fMRI and intracranial electroencephalography (EEG) experiments to study how the brain operates in the real world12-16. Based on a model-free inter-subject correlation (Inter-SC) analysis, the primary sensory-motor areas, association regions, and prefrontal cortex have been shown to express reliable and selective responses that are highly similar across individuals viewing the same movie clip. An Inter-SC analysis in a study using audiovisual narratives as stimuli revealed extensive bilateral inferior frontal and premotor regions, in addition to the superior temporal cortex traditionally associated with speech comprehension17. Another study, using an intra-subject correlation (Intra-SC) analysis in which a clip of an action movie was shown repeatedly to the same subject, found highly similar time course responses in the language-related regions of the anterior and posterior superior temporal sulcus18. These results suggest that language regions may respond to the linguistic material of a movie in a temporally similar way both within and across subjects. In addition, Bartels and Zeki correlated human perception ratings of four features of an action movie clip (color, face, human body, and language) with the BOLD signal and found temporally distinct, feature-specific activity in spatially and functionally segregated brain regions; specifically, activity in bilateral Wernicke's area was correlated with the perception of comprehensible language19. These studies raise the intriguing possibility that language regions could show distinct activations when subjects watch a movie clip containing linguistic material, but to our knowledge no attempt has been made to use this condition for individual subject language mapping.
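The model-free Inter-SC analysis mentioned above can be illustrated with a minimal sketch of its common leave-one-out form: each subject's time course is correlated with the mean time course of all other subjects who viewed the same clip. The function name and the synthetic data are hypothetical, and the cited studies may compute Inter-SC differently (for example, averaging all pairwise correlations instead).

```python
import numpy as np

def leave_one_out_isc(data: np.ndarray) -> np.ndarray:
    """Leave-one-out inter-subject correlation for one voxel or region.

    data: (n_subjects, n_timepoints) array, one time course per subject,
    all recorded while viewing the same movie clip.
    Returns one Pearson r per subject: that subject's time course versus the
    mean time course of all other subjects.
    """
    n_subjects = data.shape[0]
    isc = np.empty(n_subjects)
    for s in range(n_subjects):
        others_mean = np.delete(data, s, axis=0).mean(axis=0)
        isc[s] = np.corrcoef(data[s], others_mean)[0, 1]
    return isc

# Synthetic example (hypothetical): a shared stimulus-driven signal plus
# subject-specific noise, for 22 subjects and 210 volumes.
rng = np.random.default_rng(0)
shared = np.sin(np.linspace(0, 12 * np.pi, 210))
subjects = shared + 0.5 * rng.standard_normal((22, 210))
isc = leave_one_out_isc(subjects)
print(round(isc.mean(), 2))
```

Regions driven by the shared stimulus yield high Inter-SC values, whereas regions dominated by subject-specific noise yield values near zero; no explicit stimulus model is needed.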

Building on these findings, we propose a new fMRI paradigm for pre-surgical mapping of language function in individuals using a movie-watching condition. Our hypothesis is that a new subject's language areas could be identified by finding the brain regions whose hemodynamic responses are similar to a “language response model” (LRM) of a movie stimulus. The LRM is an experimentally derived time course model based on the language area activity of a group of training subjects while they watch the same movie clip. Our goal is to develop a language mapping paradigm that is less demanding than task-based language fMRI and requires no explicit responses from patients. In addition, naturalistic language stimuli may be more effective at activating the extensive neural substrates of natural language processing20,21, which may not be fully revealed by traditional language tasks with isolated and controlled conditions. In this study, we acquired movie-watching fMRI from 22 right-handed healthy subjects who also performed conventional task-based language fMRI. We assessed a strategy for mapping an individual subject's language areas based on the LRM derived from an independent set of subjects using a leave-one-out procedure. Results in healthy subjects and two brain tumor patients indicated that this novel movie-watching paradigm could identify individual subjects' language areas, and thus could be used as a complementary approach for pre-surgical language mapping.

Materials and Methods

Subjects

Twenty-two healthy subjects (11 males, mean age = 26.3 years, range: 19-39 years) with no history of neurological, cognitive, or psychiatric disorders, nor any speech, hearing, or vision deficits, were enrolled in this study. All subjects were right-handed based on the Edinburgh Handedness Inventory (EHI; score 80 ± 20, mean ± SD)22. All subjects were native English speakers. The Partners Institutional Review Board approved the study protocol, and all subjects provided written informed consent.

Image Acquisition

MR images were obtained using a 3.0 Tesla GE Signa system (General Electric, Milwaukee, WI, USA). BOLD functional images were acquired using single-shot gradient-echo echo-planar imaging (EPI) and a standard quadrature head coil (TR/TE = 2000/40 ms, flip angle = 90°, slice gap = 0 mm, FOV = 25.6 cm, matrix = 128 × 128, 27 axial slices, ascending interleaved slice order, voxel size = 2 × 2 × 4 mm3). Whole-brain T1-weighted axial 3D spoiled gradient recalled (SPGR) structural images were acquired using array spatial sensitivity encoding technique (ASSET, i.e., parallel imaging) and an 8-channel head coil (TR/TE = 7.8/3.0 ms, flip angle = 20°, matrix = 512 × 512, 176 slices, voxel size = 0.5 × 0.5 × 1 mm3).
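As a quick consistency check on the acquisition parameters above, the EPI in-plane voxel size follows from the FOV divided by the 128 × 128 in-plane grid, and the slab coverage follows from slice count times slice thickness plus gap. The SPGR FOV is not stated in the text, so the value below is only inferred from matrix size times voxel size.

```python
# Geometry implied by the EPI and SPGR parameters listed above.
epi_fov_mm = 256.0        # FOV = 25.6 cm
epi_matrix = 128          # 128 x 128 in-plane grid
slice_thickness_mm = 4.0
slice_gap_mm = 0.0
n_slices = 27

in_plane_mm = epi_fov_mm / epi_matrix                          # in-plane voxel size
coverage_mm = n_slices * (slice_thickness_mm + slice_gap_mm)   # coverage along the slice axis

# Structural SPGR: FOV is not given, so it is inferred from matrix x voxel size.
spgr_fov_mm = 512 * 0.5
spgr_coverage_mm = 176 * 1.0

print(in_plane_mm, coverage_mm, spgr_fov_mm, spgr_coverage_mm)  # 2.0 108.0 256.0 176.0
```

The 2 mm result matches the stated 2 × 2 × 4 mm3 EPI voxel size, and 27 contiguous 4 mm slices cover 108 mm.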

Behavioral Paradigm

All subjects underwent movie-watching and task-based language fMRI during one scanning session, with the order of the two functional runs counterbalanced across subjects. The movie stimulus was a 7-min clip from the family film “The Parent Trap” (directed by Nancy Meyers, produced by The Meyers/Shyer Company and Walt Disney Pictures, 1998). The movie is about identical twins, separated at birth and each raised by one of their biological parents, who decide to switch places after discovering each other for the first time at a summer camp. In the clip, one of the twins, Annie, meets her father for the first time; he is unaware of the switch. The clip consisted of seven speech segments of dialogue between Annie, her father, and the butler (durations 5-119 sec; total 5 min 54 sec), interleaved with six non-speech segments of indoor and outdoor scenes (durations 4-25 sec; total 1 min 6 sec). Screenshots of three main speech segments and two non-speech segments are shown in Figure 3A.
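The speech/non-speech structure of the clip can be turned into a predicted BOLD time course like the dotted line in Figure 3A by convolving a speech boxcar with a canonical HRF. This is a minimal sketch under stated assumptions: the individual segment durations and their order are hypothetical (the text gives only counts, ranges, and totals), and the HRF is an SPM-style double-gamma approximation evaluated on a 0.1 s grid, not SPM's own `spm_hrf` routine.

```python
import numpy as np
from scipy.stats import gamma

TR = 2.0   # seconds, from the EPI protocol
DT = 0.1   # fine time grid used for convolution (s)

def canonical_hrf(dt: float = DT, length: float = 32.0) -> np.ndarray:
    """SPM-style double-gamma HRF (peak near 5 s, undershoot near 15 s), unit sum."""
    t = np.arange(0.0, length, dt)
    hrf = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return hrf / hrf.sum()

# HYPOTHETICAL durations (s): they match the stated counts, ranges and totals
# (7 speech segments, 5-119 s, 354 s; 6 non-speech, 4-25 s, 66 s), but the
# real lengths and ordering of the segments are not given in the text.
speech = [119, 80, 60, 45, 30, 15, 5]
non_speech = [25, 15, 10, 8, 4, 4]

# Interleave speech, non-speech, speech, ..., speech (7 + 6 segments).
samples = []
for i, dur in enumerate(speech):
    samples += [1.0] * int(round(dur / DT))
    if i < len(non_speech):
        samples += [0.0] * int(round(non_speech[i] / DT))
boxcar = np.array(samples)

# Convolve on the fine grid, truncate to the run length, then sample once per TR.
predicted = np.convolve(boxcar, canonical_hrf())[: boxcar.size]
speech_regressor = predicted[:: int(round(TR / DT))]
print(boxcar.size, speech_regressor.size)  # 4200 210
```

Correlating such a regressor with a data-derived time course (as done for the LRM) indicates how much of the response is explained by speech on/off alone.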

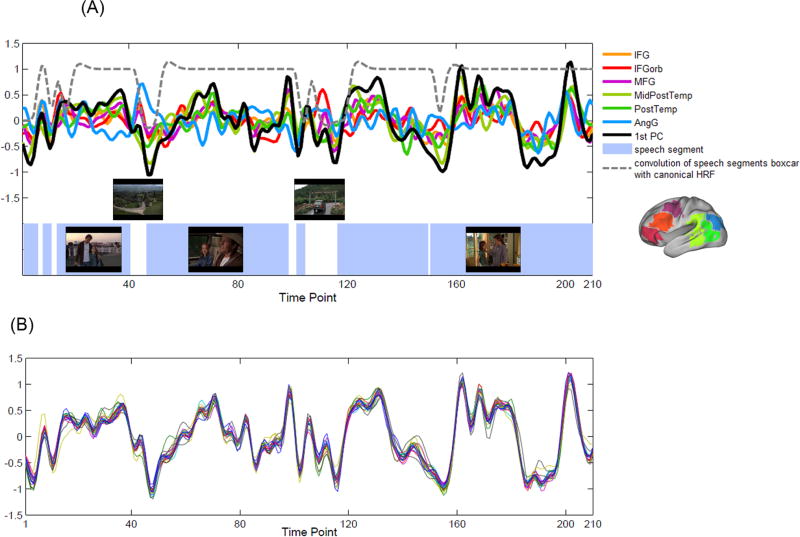

Figure 3.

Language response model (LRM) of the movie stimulus.

(A) Overlay of the six LRRs' grand mean time courses and the first PC from all subjects' data. The first PC represented the main temporal profile of the language area response, and thus was defined as the LRM of the movie stimulus. Blue rectangles indicate the speech segments of the movie clip. Screenshots of three main speech segments and two non-speech segments are also shown. The dotted line plots the convolution of the speech segment boxcar with the canonical HRF.

(B) Overlay of the 22 LRMs resulting from the leave-one-out procedure. For each test subject, an LRM was defined as the first PC resulting from the PCA of the LRRs' time course responses of the remaining 21 subjects. The LRM was then used as a linear regressor for the first-level GLM analysis of the test subject's movie-watching fMRI.

Subjects were instructed to remain still and alert while watching and listening to the movie clip. These instructions were provided prior to the scanning session, and again through the MRI headphones right before the movie-watching scan was acquired. A researcher also spoke to the subject immediately after the scan to confirm that the subject could both see and hear the movie and had remained alert during scanning.

The task-based language fMRI used a 7-min blocked-design paradigm11,23. It consisted of seven 20-sec antonym generation (AG) task blocks (5 words per block, each word presented for 2 sec with an inter-stimulus interval of 2 sec), during which subjects were asked to verbalize the antonym of the presented word with minimal movement of the head, jaw, or lips. It also consisted of seven 20-sec case categorization (CC) high-level control blocks (5 orthographically irregular letter strings per block), during which subjects were asked to indicate whether each letter string was composed of upper- or lower-case letters. The AG and CC blocks were interleaved with fourteen 10-sec fixation blocks.
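The block timing above can be checked and turned into per-volume condition indicators with a short sketch. The block sequence used here (AG, fixation, CC, fixation, repeated seven times) is an assumption: the text states the number and duration of each block type but not their exact order.

```python
import numpy as np

TR = 2.0  # s

# Each AG block: 5 words x (2 s presentation + 2 s inter-stimulus interval) = 20 s.
assert 5 * (2 + 2) == 20

# ASSUMED block order; only counts and durations are given in the text.
blocks = []
for _ in range(7):
    blocks += [("AG", 20), ("fix", 10), ("CC", 20), ("fix", 10)]

total_s = sum(dur for _, dur in blocks)
assert total_s == 420  # 7 x 20 + 7 x 20 + 14 x 10 = 420 s = 7 min

def boxcar(condition: str) -> np.ndarray:
    """One 0/1 value per TR marking volumes acquired during `condition`."""
    out = []
    for name, dur in blocks:
        out += [1.0 if name == condition else 0.0] * int(dur / TR)
    return np.array(out)

ag, cc = boxcar("AG"), boxcar("CC")
print(total_s, ag.size, int(ag.sum()), int(cc.sum()))  # 420 210 70 70
```

In a GLM, these boxcars would be convolved with the canonical HRF before fitting, as described for the fROI analysis.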

A 10-sec dummy scan (black screen) was acquired at the beginning of each functional run and excluded from the analysis to allow the BOLD signal to stabilize. The stimulus paradigm was implemented using E-Prime software (Psychology Software Tools, Inc., Sharpsburg, PA, USA, http://www.pstnet.com/eprime.cfm, version 1.1.4.1). The visual and auditory stimuli were presented through MR-compatible video goggles and headphones, respectively (Nordic NeuroLab Inc., Milwaukee, WI, USA).

Data Pre-processing

The functional data were pre-processed using Statistical Parametric Mapping software (SPM8, Wellcome Department of Cognitive Neurology, London, UK, http://www.fil.ion.ucl.ac.uk/spm). Pre-processing included rigid-body motion correction by realigning the images to the first image of the functional run, spatial normalization to Montreal Neurological Institute (MNI) space based on the EPI template (re-sampled to 3 × 3 × 3 mm3 voxel size), and spatial smoothing with a 6 mm full-width half-maximum (FWHM) Gaussian kernel. For the movie-watching data used to calculate regional homogeneity (ReHo) and to build the time course model, band-pass filtering (0.01-0.1 Hz) was applied to the unsmoothed data to remove slow drift and reduce physiological and physical noise, while preserving spatial resolution.
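A minimal sketch of 0.01-0.1 Hz band-pass filtering at TR = 2 s (Nyquist frequency 0.25 Hz) is shown below. It uses an ideal FFT-based filter, which is one common choice; the toolbox used in the study may implement the filter differently, and the synthetic signals are hypothetical.

```python
import numpy as np

def ideal_bandpass(ts: np.ndarray, tr: float, low: float = 0.01,
                   high: float = 0.1) -> np.ndarray:
    """Zero out Fourier components outside [low, high] Hz along the last axis."""
    n = ts.shape[-1]
    freqs = np.fft.rfftfreq(n, d=tr)         # frequency of each rfft bin (Hz)
    spectrum = np.fft.rfft(ts, axis=-1)
    keep = (freqs >= low) & (freqs <= high)  # pass band, also drops the 0 Hz mean
    spectrum[..., ~keep] = 0.0
    return np.fft.irfft(spectrum, n=n, axis=-1)

TR = 2.0
t = np.arange(210) * TR                      # 210 volumes = 7 min
slow_drift = 0.002 * t                       # linear scanner drift
in_band = np.sin(2 * np.pi * 0.05 * t)       # 0.05 Hz, inside the pass band
fast = np.sin(2 * np.pi * 0.2 * t)           # 0.2 Hz, above the pass band
filtered = ideal_bandpass(slow_drift + in_band + fast, TR)
print(round(np.corrcoef(filtered, in_band)[0, 1], 3))
```

The filtered series closely tracks the in-band component; a small residual remains because a linear drift is not periodic and leaks some power into the pass band.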

Head motion was within acceptable limits for all subjects (translations less than 2.5 mm and rotations less than 2.5 degrees in every direction). Head motion was also estimated using framewise displacement (FD), a scalar quantity that combines all six realignment parameters to represent instantaneous head motion24. The mean FD of the time series was 0.18 ± 0.08 mm across subjects for the movie-watching fMRI and 0.21 ± 0.08 mm for the task-based fMRI, indicating that head motion was within the acceptable range (i.e., less than 0.5 mm)24.
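A minimal sketch of framewise displacement computed from six realignment parameters is shown below, following the widely used definition in which rotations are converted to arc length on a 50 mm sphere. The assumed column order (three translations in mm, then three rotations in radians) matches SPM's realignment parameter files; the example parameters are hypothetical.

```python
import numpy as np

def framewise_displacement(params: np.ndarray, radius_mm: float = 50.0) -> np.ndarray:
    """Framewise displacement (FD) per volume from rigid-body parameters.

    params: (n_volumes, 6); columns 0-2 are translations (mm), columns 3-5
    are rotations (radians). FD of the first volume is defined as 0.
    """
    p = params.astype(float).copy()
    p[:, 3:] *= radius_mm                           # radians -> mm of arc
    fd = np.abs(np.diff(p, axis=0)).sum(axis=1)     # sum of absolute changes
    return np.concatenate([[0.0], fd])

# Hypothetical motion: a 0.1 mm x-shift at volume 2, then a 0.002 rad pitch
# (0.1 mm at 50 mm) from volume 3 on, while the x-shift reverses.
rp = np.zeros((4, 6))
rp[1, 0] = 0.1
rp[2:, 3] = 0.002
fd = framewise_displacement(rp)
print(fd)
```

A run's mean FD, as reported above, is then the average of these per-volume values; whether the first volume's zero is included is a convention choice that differs across tools.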

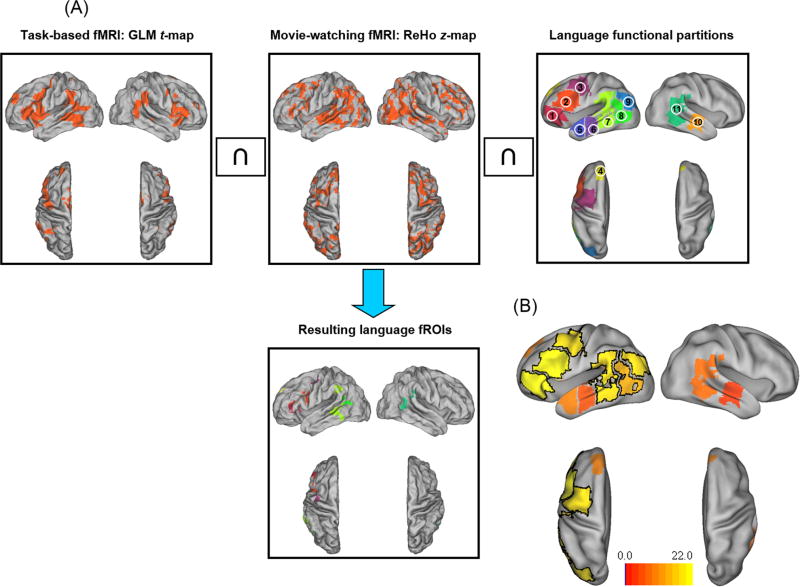

Defining Language Response Regions (LRRs) for Extraction of Language Area Time Course Response to the Movie Stimulus

To extract the language area time course response to the movie stimulus, language functional regions-of-interest (fROIs) were defined for each subject. Figure 1A shows the procedure of generating the fROIs for an example subject, visualized using Caret software (http://www.nitrc.org/projects/caret/)25 and the human population-average, landmark- and surface-based (PALS)-B12 atlas (http://sumsdb.wustl.edu/sums/humanpalsmore.do)26. First, a statistical t-map was derived from the task-based language fMRI using a first-level single-subject general linear model (GLM) analysis27 in SPM8. Boxcar reference functions of the “AG”, “CC” and fixation conditions were convolved with the canonical hemodynamic response function (HRF) to construct the GLM regressors. High-pass filtering with a cutoff of 128 sec and a first-order autoregressive model (AR(1)) were applied during parameter estimation. The t-map of the contrast “AG” vs. “CC” was thresholded at an individually determined value (half of the mean of the top 5% of positive t values in the map, modified from28). Second, a regional homogeneity (ReHo) map, which reflects local signal similarity29, was derived from the movie-watching fMRI using the advanced edition of Data Processing Assistant for Resting-State fMRI software (DPARSFA, http://rfmri.org/DPARSF,30). ReHo was calculated using Kendall's coefficient of concordance (KCC,31), which measures the similarity of the ranked time courses of a given voxel and its 26 nearest neighboring voxels. The ReHo map was converted to a z-score map and thresholded at z ≥ 1. Third, the intersection of each subject's task-based GLM t-map and movie-watching ReHo z-map was generated, yielding brain regions that were both activated during the language task and coherent with their neighboring voxels during the movie-watching condition.
Finally, eleven language functional partitions defined in MNI space (http://web.mit.edu/evelina9/www/funcloc/funcloc_parcels.html,32) were used as masks on these intersection images to constrain the resulting fROIs to high-level linguistic regions. These partitions were derived from a probabilistic overlap map of individual activations for the sentences vs. non-words contrast in an independent group of subjects32. The eleven partitions comprised nine left-hemispheric masks and two right-hemispheric masks (see Figure 1A caption). For the temporal cortex masks, the primary and secondary auditory cortices (i.e., Heschl's gyrus, Brodmann areas 41, 42, and 22) were excluded to avoid including auditory responses from these adjacent areas.
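The three fROI steps above (individual t threshold, ReHo via Kendall's W, and intersection with a partition mask) can be sketched as follows. This is an illustrative re-implementation, not the SPM8 or DPARSFA code: the rounding of the "top 5%" count, the omission of KCC tie correction, skipping boundary voxels, and z-scoring ReHo within a brain mask are all assumptions, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata

def individual_threshold(tvals: np.ndarray, top_fraction: float = 0.05) -> float:
    """Half of the mean of the top 5% of positive t values."""
    pos = np.sort(tvals[tvals > 0])
    k = max(1, int(np.ceil(top_fraction * pos.size)))
    return 0.5 * pos[-k:].mean()

def kendall_w(ts: np.ndarray) -> float:
    """Kendall's W for K time series of length n (rows); no tie correction."""
    k, n = ts.shape
    ranks = np.vstack([rankdata(row) for row in ts])  # rank time points per series
    r = ranks.sum(axis=0)                              # summed rank per time point
    s = ((r - r.mean()) ** 2).sum()
    return 12.0 * s / (k ** 2 * (n ** 3 - n))

def reho_map(data: np.ndarray) -> np.ndarray:
    """ReHo for interior voxels of an (X, Y, Z, T) array, using 27-voxel cubes."""
    nx, ny, nz, nt = data.shape
    out = np.zeros((nx, ny, nz))
    for i in range(1, nx - 1):
        for j in range(1, ny - 1):
            for k in range(1, nz - 1):
                cube = data[i - 1:i + 2, j - 1:j + 2, k - 1:k + 2].reshape(27, nt)
                out[i, j, k] = kendall_w(cube)
    return out

def language_froi(tmap, reho, brain_mask, partition_mask):
    """Voxels with t above the individual threshold AND ReHo z >= 1, within a partition."""
    thr = individual_threshold(tmap[brain_mask])
    z = np.zeros_like(reho)
    vals = reho[brain_mask]
    z[brain_mask] = (vals - vals.mean()) / vals.std()
    return (tmap > thr) & (z >= 1.0) & partition_mask & brain_mask

# Hypothetical toy volume: 6 x 6 x 6 voxels, 40 time points.
rng = np.random.default_rng(0)
data = rng.standard_normal((6, 6, 6, 40))
reho = reho_map(data)
brain_mask = np.zeros((6, 6, 6), dtype=bool)
brain_mask[1:-1, 1:-1, 1:-1] = True
tmap = rng.standard_normal((6, 6, 6)) * 3
froi = language_froi(tmap, reho, brain_mask, np.ones((6, 6, 6), dtype=bool))
print(int(froi.sum()))
```

Kendall's W equals 1 when all 27 time courses rise and fall in the same rank order, and approaches 0 when they are unrelated, which is why high ReHo marks locally coherent activity.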

Figure 1.

Procedure of generating individual subjects' language functional ROIs (fROIs), and defining the language response regions (LRRs).

(A) Generation of an example subject's language fROIs. The intersection of the subject's thresholded GLM t-map from the task-based fMRI and ReHo z-map from the movie-watching fMRI is masked by eleven language functional partitions to generate the language fROIs. The partitions included nine left-hemispheric masks: (1) IFGorb: orbital inferior frontal gyrus, (2) IFG: inferior frontal gyrus, (3) MFG: middle frontal gyrus, (4) SFG: superior frontal gyrus, (5) AntTemp: anterior temporal gyrus, (6) MidAntTemp: middle anterior temporal gyrus, (7) MidPostTemp: middle posterior temporal gyrus, (8) PostTemp: posterior temporal gyrus, and (9) AngG: angular gyrus; and two right-hemispheric masks: (10) right MidAntTemp and (11) right MidPostTemp. For this example subject, the language fROIs were located within seven partitions (left IFGorb, IFG, MFG, SFG, MidPostTemp, PostTemp, and right MidPostTemp).

(B) Brain renderings showing the number of subjects with language fROIs within each of the eleven language functional partitions (range: 4-22 subjects). The six partitions within which at least half (≥ 11) of the subjects showed language fROIs (three in the left frontal lobe: IFGorb, IFG, MFG; and three in the left temporoparietal cortex: MidPostTemp, PostTemp, AngG) are defined as the “language response regions” (LRRs, highlighted with black borders).

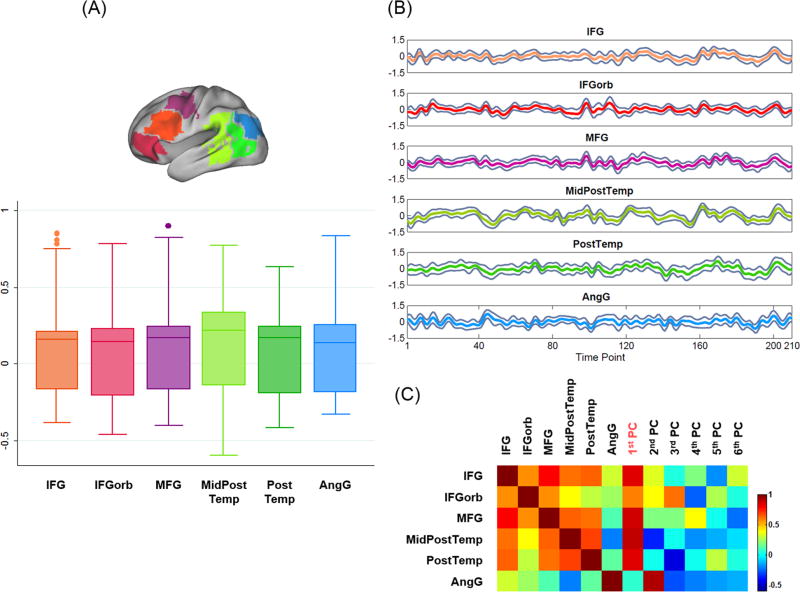

Figure 1B shows the number of subjects with language fROIs within each of the eleven language functional partitions. We defined six “language response regions” (LRRs, highlighted with black borders) as the partitions within which at least half (≥ 11) of the subjects showed language fROIs. All LRRs were in the left hemisphere: orbital inferior frontal gyrus (IFGorb), inferior frontal gyrus (IFG), middle frontal gyrus (MFG), middle posterior temporal gyrus (MidPostTemp), posterior temporal gyrus (PostTemp), and angular gyrus (AngG). Figure 2A shows the pair-wise correlation coefficients among the mean time courses of the fROIs' activation clusters across all subjects in the LRRs.
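The LRR selection rule above amounts to counting, per partition, how many subjects have an fROI there and keeping partitions reached by at least 11 of 22 subjects. In this sketch the per-partition counts are hypothetical (the text reports only their 4-22 range and which six partitions pass), and the partition labels are shorthand for the masks listed in the Figure 1A caption.

```python
import numpy as np

PARTITIONS = ["L IFGorb", "L IFG", "L MFG", "L SFG", "L AntTemp", "L MidAntTemp",
              "L MidPostTemp", "L PostTemp", "L AngG", "R MidAntTemp", "R MidPostTemp"]

def select_lrrs(has_froi: np.ndarray, min_subjects: int = 11) -> list[str]:
    """has_froi: (n_subjects, 11) bool, True if a subject has an fROI in that partition."""
    counts = has_froi.sum(axis=0)
    return [name for name, c in zip(PARTITIONS, counts) if c >= min_subjects]

# HYPOTHETICAL subject counts per partition, for illustration only.
counts = [20, 22, 15, 9, 4, 8, 18, 17, 12, 10, 7]
has_froi = np.zeros((22, 11), dtype=bool)
for col, n in enumerate(counts):
    has_froi[:n, col] = True

lrrs = select_lrrs(has_froi)
print(lrrs)
```

With 22 subjects, the threshold of 11 corresponds to exactly half of the group.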

Figure 2.

Time course analysis of the six LRRs.

(A) Pair-wise correlation coefficients among the mean time courses of the fROIs' activation clusters (consisting of more than 10 connected voxels) across all subjects within each LRR.

(B) Grand mean time course and 95% confidence interval across all subjects' activation clusters in each LRR (shown as gray traces).

(C) Correlation matrix between the six LRRs' grand mean time courses and the principal components (PCs). The first PC was highly correlated with the LRRs' grand mean time courses (except for AngG).

Building Language Response Model (LRM) for the Movie-watching fMRI

The hemodynamic language response model (LRM) was built from a group of subjects' movie-watching data, based on the time course responses of individuals' fROIs in the six LRRs. First, for each LRR, a grand mean time course was calculated by averaging the mean time courses of all subjects' fROIs in that LRR. Figure 2B shows the grand mean time course and 95% confidence interval of all subjects' activation clusters in each LRR. Then, principal component analysis (PCA) was applied to all LRRs' grand mean time courses to extract the overall temporal profile of the language area response to the movie stimulus. The correlation matrix of the grand mean time courses and the principal components (PCs) is shown in Figure 2C. The first PC explained 60.11% of the signal variance and was highly correlated with the grand mean time courses of five LRRs (r = 0.801 ± 0.125, mean ± SD), but not with that of AngG (r = 0.065). Because the first PC represented the main temporal profile of the language area response, it was defined as the LRM for the movie-watching fMRI (Figure 3A overlays the LRRs' grand mean time courses and the first PC). Figure 3A also plots the convolution of the speech segment boxcar with the canonical HRF (dotted line), which was moderately correlated with the LRM (r = 0.426). This suggests that the main temporal profile of the language area response was driven, at least in part, by the linguistic material of the movie clip.
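The PCA step can be sketched as follows: the six grand mean time courses form the columns of a time-by-region matrix, and the first PC score at each time point serves as the LRM. This is a minimal sketch under assumptions: the text does not say whether each region's time course was standardized before PCA (here they are only mean-centered), and since a PC's sign is arbitrary, the sketch flips it to correlate positively with the regional average. The synthetic data, with five coupled regions and one independent region standing in for AngG, are hypothetical.

```python
import numpy as np

def first_pc_time_course(grand_means: np.ndarray) -> tuple[np.ndarray, float]:
    """grand_means: (n_regions, n_timepoints).

    Returns the first-PC score at each time point and the fraction of
    variance it explains.
    """
    x = grand_means.T                        # rows = time points, columns = regions
    x = x - x.mean(axis=0)                   # center each region's time course
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    pc1 = u[:, 0] * s[0]
    if np.corrcoef(pc1, x.mean(axis=1))[0, 1] < 0:   # resolve sign ambiguity
        pc1 = -pc1
    return pc1, float(s[0] ** 2 / np.sum(s ** 2))

# Hypothetical data: 5 regions share a signal; region 6 is independent noise.
rng = np.random.default_rng(1)
T = 210
shared = np.sin(np.linspace(0, 10 * np.pi, T))
regions = np.vstack([shared + 0.3 * rng.standard_normal(T) for _ in range(5)]
                    + [rng.standard_normal(T)])
lrm, explained = first_pc_time_course(regions)
r_per_region = [np.corrcoef(lrm, reg)[0, 1] for reg in regions]
print(round(explained, 2), np.round(r_per_region, 2))
```

As in Figure 2C, the correlation of the first PC with each region shows which regions share the dominant temporal profile; the independent region correlates weakly, analogous to AngG.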

We then assessed this model-building strategy using all 22 subjects' data in a leave-one-out fashion. For each test subject, an LRM was derived from the remaining 21 subjects using the procedure described above and then used as a linear regressor in a first-level GLM analysis of the test subject's movie-watching fMRI. A t-test on the resulting regression coefficients identified the areas whose time course responses were significantly correlated with the LRM. The resulting language map was evaluated using the subject's task-based fMRI map as a reference. This procedure was repeated 22 times, using each subject once as the test subject.
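The leave-one-out procedure can be sketched with ordinary least squares: for each held-out subject, an LRM is built from the other subjects' regional time courses and fitted voxel-wise to the held-out data, and voxels are kept where the one-sided t statistic exceeds the p < 0.001 threshold. This is a simplified sketch, not the SPM8 pipeline: it omits high-pass filtering and the AR(1) model, assumes every subject contributes a time course to every LRR, and uses hypothetical names and synthetic data.

```python
import numpy as np
from scipy import stats

def lrm_from(region_ts: np.ndarray) -> np.ndarray:
    """region_ts: (n_subjects, n_regions, T). First PC of the grand mean time courses."""
    gm = region_ts.mean(axis=0).T                 # (T, n_regions) grand means
    gm = gm - gm.mean(axis=0)
    u, s, _ = np.linalg.svd(gm, full_matrices=False)
    pc1 = u[:, 0] * s[0]
    return pc1 if np.corrcoef(pc1, gm.mean(axis=1))[0, 1] >= 0 else -pc1

def glm_t_map(y: np.ndarray, regressor: np.ndarray) -> np.ndarray:
    """OLS fit of y = b0 + b1 * regressor for each voxel column of y (T, n_vox); t for b1."""
    n = y.shape[0]
    X = np.column_stack([np.ones(n), regressor])
    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = (resid ** 2).sum(axis=0) / (n - X.shape[1])
    c = np.array([0.0, 1.0])                      # contrast selecting the LRM beta
    var_c = c @ np.linalg.inv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(sigma2 * var_c)

def leave_one_out_maps(region_ts, voxel_ts, p=0.001):
    """voxel_ts: (n_subjects, T, n_vox). Returns a boolean map per held-out subject."""
    t_crit = stats.t.ppf(1 - p, voxel_ts.shape[1] - 2)   # one-sided threshold
    maps = []
    for s in range(region_ts.shape[0]):
        lrm = lrm_from(np.delete(region_ts, s, axis=0))  # model from the other subjects
        maps.append(glm_t_map(voxel_ts[s], lrm) > t_crit)
    return np.array(maps)

# Hypothetical data: 22 subjects, 6 regions, 210 volumes; voxels 0-4 follow the
# shared signal, voxels 5-9 are pure noise.
rng = np.random.default_rng(1)
T = 210
shared = np.sin(np.linspace(0, 10 * np.pi, T))
region_ts = shared + 0.5 * rng.standard_normal((22, 6, T))
voxel_ts = 0.8 * rng.standard_normal((22, T, 10))
voxel_ts[:, :, :5] += shared[None, :, None]
maps = leave_one_out_maps(region_ts, voxel_ts)
print(maps.sum(axis=0))
```

Because each test subject's LRM never includes that subject's own data, the resulting maps estimate how well a model built from other people generalizes to a new individual.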

Applying the Movie-watching fMRI in Brain Tumor Patients using LRM Derived from Healthy Subjects

Two right-handed female brain tumor patients also participated in this study and provided written informed consent. Patient #1 (P1) was 54 years old with a recurrent metastatic adenocarcinoma in the left temporal lobe (tumor volume = 10.17 cm3). Patient #2 (P2) was 37 years old with a recurrent astrocytoma (grade II) in the left frontal lobe (tumor volume = 6.30 cm3). Multiple modalities of structural images were acquired for both patients. For P1, the gadolinium-enhanced T1-weighted fluid attenuated inversion recovery (FLAIR) image was used for overlaying the resulting functional maps (TR/TE = 14000/86 ms, flip angle = 90°, matrix = 448 × 512, voxel size = 0.5 × 0.5 × 1 mm3). For P2, the gadolinium-enhanced T1-weighted axial 3D spoiled gradient recalled (SPGR) images were used (TR/TE = 25/6 ms, flip angle = 40°, matrix = 256 × 256, voxel size = 1 × 1 × 1.4 mm3).

The LRM of the movie stimulus derived from all 22 healthy subjects was tested in the two patients by applying it as a linear regressor in the first-level GLM analysis of each patient's movie-watching fMRI data.

Results

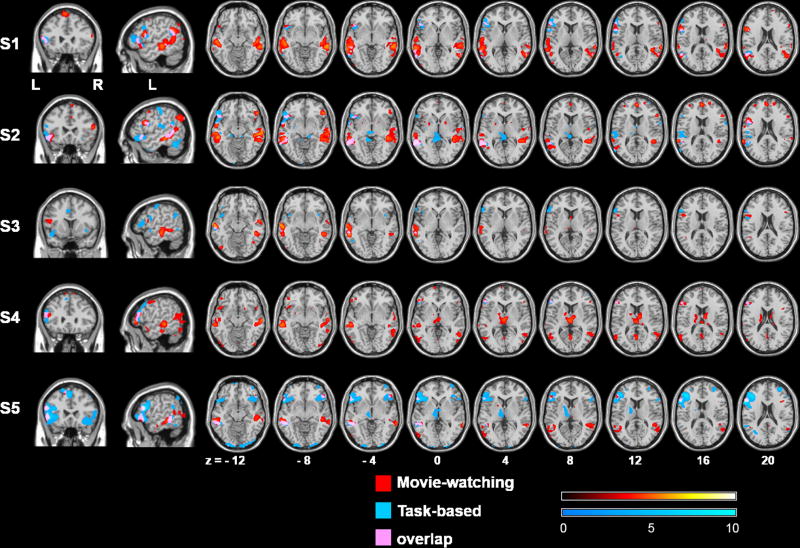

The 22 LRMs resulting from the leave-one-out procedure are plotted in Figure 3B; their pair-wise correlation coefficient was 0.979 ± 0.014 (mean ± SD). Figure 4 shows five example subjects' first-level GLM results for the movie-watching (red) and task-based (blue) fMRI, and the overlapping areas (pink). All maps are thresholded at p < 0.001 (uncorrected). The movie-watching maps showed predominant activations in the putative language areas in the left frontal and temporoparietal cortices, as well as some activations in their right-hemispheric homologues. In comparison, the task-based maps were concentrated primarily in the left frontal regions, with only small activations in the temporoparietal regions. Among the 22 subjects, 16 (73%) showed activations in all eleven language functional partitions during the movie-watching condition. In the remaining 6 subjects, activations were found in both the combined left frontal region (IFGorb, IFG, and MFG) and the combined left temporoparietal region (MidPostTemp, PostTemp, and AngG). These results suggest that movie-watching fMRI was able to identify language areas in every subject, although the locations of the language network varied considerably across individuals.

Figure 4.

Comparison of the movie-watching and task-based fMRI results in individual subjects.

Results of five example subjects' first-level GLM analyses of the movie-watching (red) and task-based (blue) fMRI, and the overlapping areas (pink) were superimposed on the structural images of a template brain. All maps are in neurological convention (the left side of the image is the left side of the brain), and thresholded at p < 0.001 (uncorrected) level.

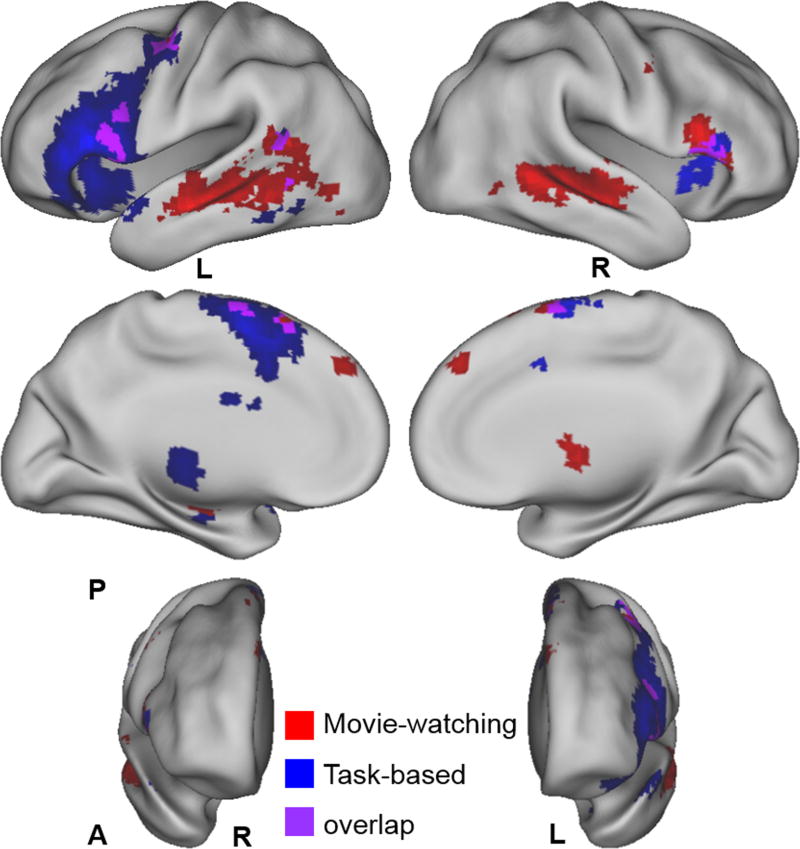

Figure 5 shows the results of second-level group random-effects analyses (one-sample t-test) performed separately for the movie-watching (red) and task-based (blue) fMRI (thresholded at p < 0.001, uncorrected), and the overlapping areas (purple). Table I lists the activation clusters of the group t-maps, including cluster size, brain region, Brodmann area, and the MNI coordinates and t-value of the peak voxel. Judging by cluster size, the movie-watching fMRI showed robust and localized activations in the left frontal and temporoparietal language areas and the supplementary motor area (SMA). The right-hemispheric homologues of the traditional language regions and the right sub-cortical regions (thalamus and putamen) were also identified. Only 4.8% of all activated voxels were within the primary or secondary auditory cortices, indicating that most temporal cortex activations were not attributable to auditory processing. The task-based language fMRI showed more extensive activations in the left frontal language area and bilateral SMA, but only modest activations in the left temporoparietal language regions. It also showed activations in the left sub-cortical regions (thalamus and putamen) and the right IFG.

Figure 5.

Comparison of the second-level group random-effects analysis results of the movie-watching and task-based fMRI.

Brain renderings showing the group analysis results of the movie-watching (red) and task-based (blue) fMRI, and the overlapping areas (purple) (thresholded at t = 3.53 (p < 0.001, uncorrected) level). A: anterior, P: posterior.

Table I.

Group GLM analysis results of the movie-watching and task-based language fMRI.

| Hemisphere | Cluster size (voxels) | Brain region | Brodmann area | Peak voxel MNI x y z (mm) | Peak voxel t |
|---|---|---|---|---|---|
| Movie-watching fMRI | | | | | |
| L | 802 | Middle/Superior/Inferior Temporal Gyrus, Sub-gyral, Middle/Inferior Occipital Gyrus, Supramarginal Gyrus | 21, 22, 37, 40, 39 | -54 -27 -15 | 7.92 |
| L | 96 | Inferior Frontal Gyrus (Opercularis, Triangularis) | 44, 45 | -57 15 12 | 5.69 |
| L | 65 | Precentral Gyrus, Middle Frontal Gyrus, Postcentral Gyrus | 6 | -42 -3 57 | 5.09 |
| L | 32 | Supplementary Motor Area, Superior Frontal Gyrus | 6 | -3 21 63 | 4.60 |
| R | 732 | Middle/Superior/Inferior Temporal Gyrus, Sub-gyral, Fusiform Gyrus | 21, 22, 37 | 57 -30 -9 | 7.16 |
| R | 147 | Inferior Frontal Gyrus (Triangularis, Opercularis, Orbitalis) | 45, 44 | 54 18 12 | 6.02 |
| R | 57 | Putamen (Lentiform Nucleus), Thalamus (Ventral Anterior Nucleus), Pallidum | N/A | 12 0 6 | 5.22 |
| R | 31 | Middle Frontal Gyrus, Precentral Gyrus | 6 | 57 -3 51 | 4.29 |
| B | 102 | Medial/Superior Frontal Gyrus | 9, 6 | 3 48 42 | 4.80 |
| B | 69 | Superior Frontal Gyrus, Supplementary Motor Area | 6 | 3 3 75 | 5.51 |
| Task-based language fMRI | | | | | |
| L | 1776 | Inferior Frontal Gyrus (Triangularis, Orbitalis, Opercularis), Precentral Gyrus, Middle Frontal Gyrus, Insula, Superior Temporal Gyrus, Sub-gyral, Superior Temporal Pole, Rolandic Operculum, Postcentral Gyrus, Claustrum | 6, 47, 9, 45, 13, 44, 46, 38, 22, 4 | -54 24 9 | 11.47 |
| L | 238 | Thalamus (Ventral Lateral Nucleus, Medial Dorsal Nucleus), Putamen (Lentiform Nucleus), Caudate Nucleus | N/A | -12 -18 3 | 5.04 |
| L | 115 | Middle/Inferior Temporal Gyrus, Sub-gyral | N/A | -51 -36 -12 | 4.94 |
| L | 23 | Parahippocampal Gyrus, Hippocampus | N/A | -30 -18 -24 | 4.59 |
| R | 238 | Inferior Frontal Gyrus (Triangularis, Orbitalis), Insula, Superior Temporal Gyrus, Superior Temporal Pole | 47, 38, 13, 45 | 48 27 0 | 5.68 |
| R | 21 | Sub-gyral | N/A | 51 -39 -9 | 4.61 |
| B | 530 | Supplementary Motor Area, Superior/Medial Frontal Gyrus, Cingulate Gyrus | 6, 32, 8 | -3 0 60 | 9.80 |

The group GLM t-maps are thresholded at p < 0.001 (uncorrected, df = 21) level. Clusters consisting of more than 20 voxels are reported. L: left hemisphere; R: right hemisphere; B: bilateral.
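The t threshold of 3.53 quoted in the Figure 5 caption follows from the one-tailed p < 0.001 criterion with df = 21 (22 subjects in a one-sample t-test). A minimal check with SciPy's Student t distribution:

```python
from scipy import stats

n_subjects = 22
df = n_subjects - 1                    # one-sample t-test degrees of freedom
t_crit = stats.t.ppf(1 - 0.001, df)    # one-tailed, p < 0.001
print(round(t_crit, 2))                # 3.53
```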

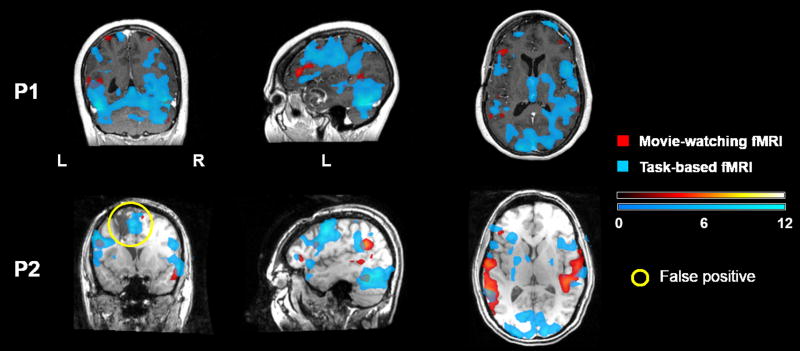

Figure 6 shows the results of the two brain tumor patients' fMRI analyses. In general, the task-based fMRI maps showed more supra-threshold voxels than the movie-watching fMRI maps; however, some of these activations were located in non-language cortical areas (such as visual cortex) or reflected noise signals inside the ventricles. Patient P1 had mild word-finding difficulty pre- and post-operatively; the movie-watching fMRI identified activations in putative language areas in the left frontal and temporal cortices, as well as in the right temporal homologues. Compared with the task-based fMRI, the movie-watching fMRI identified activations in Broca's area more robustly, and the activation locations of the two paradigms overlapped only partially. Similar to P1, patient P2's movie-watching fMRI identified activations in the left frontal and temporal language areas and the right temporal homologues. Because P2 had normal language function pre- and post-operatively, the activations within the cavity of the previous surgery in the task-based fMRI map (highlighted with yellow circles) were most likely false positives.

Figure 6.

Comparison of the movie-watching and task-based fMRI results in two brain tumor patients.

Results of two brain tumor patients' first-level GLM analyses of the movie-watching (red) and task-based (blue) fMRI were superimposed on their structural images. All maps are in neurological convention (the left side of the image is the left side of the brain), and thresholded at p < 0.001 (uncorrected) level.

Discussion

In current clinical settings, a major obstacle to the broad application of fMRI for language mapping is that task-based fMRI depends on strictly timed administration of language tasks and requires adequate performance from patients; the results are therefore unreliable or unobtainable when patients have language or other neurological deficits that prevent them from performing the tasks satisfactorily. To overcome this obstacle, we proposed a new paradigm using a movie-watching condition, during which subjects passively watched a movie clip consisting of contrasting speech and non-speech segments. We developed and assessed an analytical strategy to map individual subjects' language areas using movie-watching fMRI, based on a time course model derived from an independent set of subjects. Results in a group of healthy subjects and two brain tumor patients indicated that the movie-watching paradigm was able to activate and identify individual subjects' language areas, supporting the feasibility of using this novel paradigm as a complementary approach in language mapping when patients have difficulties in performing language tasks.

Although other passive comprehension language tasks, such as story reading or listening, have been used for language mapping, such tasks are usually implemented as blocked designs requiring precise timing and strictly complementary control conditions (such as dot patterns or non-word strings for reading tasks, and beeps or reversed speech for listening tasks)32,33. Like conventional active language tasks, these passive tasks can also be challenging for neurosurgical patients. In contrast, a movie-watching condition is less demanding than blocked-design paradigms, and the naturalistic language stimuli embedded in movie clips are more engaging than simple visual or auditory stimuli. Therefore, this novel movie-watching fMRI is potentially suitable for a wider range of clinical populations.

Analytical Strategy for the Movie-watching fMRI

Unlike conventional task-based fMRI, where the task boxcar can be used to build the regression model for a GLM analysis, modeling the language area response is not straightforward for movie-watching fMRI. We developed a data-driven approach for deriving a time course model of the hemodynamic response from the activation profiles of the language fROIs in a group of subjects. A regression analysis was then performed to identify a new subject's language areas as the brain areas whose temporal profiles were highly similar to the model. The rationale for this strategy is based on the findings that (1) the activity time courses of putative language areas are highly similar across subjects watching the same movie clip containing linguistic material17, and (2) functionally specialized brain areas exhibit unique activity time courses and can be segregated from each other under natural-viewing conditions19. Specifically, using a hypothesis-driven approach based on human perception of a movie's language feature, Bartels and Zeki were able to reveal bilateral Wernicke's areas, and the results did not depend on the precise nature of the language stimuli used19. Furthermore, we hypothesize that even in patients with lesions in or near putative language areas, where normal anatomy may be distorted, the large-scale temporal profile of the language area response will be preserved. Therefore, a strategy based on temporal similarity is potentially more suitable than one based on spatial similarity for patients with structural brain lesions.
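
The regression step described above can be sketched as a voxel-wise GLM with the LRM as the single regressor of interest. This is a minimal illustration, not the authors' pipeline: it assumes preprocessed data arranged as a (time × voxel) array, an LRM already sampled at the scan TR (so no further HRF convolution is applied), and a design containing only the LRM and an intercept; a real analysis would typically add nuisance regressors such as motion parameters. The function name `lrm_glm_tmap` is hypothetical.

```python
import numpy as np

def lrm_glm_tmap(Y, lrm):
    """Hypothetical helper: voxel-wise GLM t-statistics for one LRM regressor.

    Y   : (T, V) array of preprocessed BOLD time series (T volumes, V voxels).
    lrm : (T,) language response model time course, assumed to be already in
          BOLD space, so no HRF convolution is applied here.
    Returns (t, dof): a (V,) array of t-statistics and the residual dof.
    """
    T = Y.shape[0]
    # Design matrix: the LRM regressor plus a constant (intercept) column.
    X = np.column_stack([lrm, np.ones(T)])
    beta, _, rank, _ = np.linalg.lstsq(X, Y, rcond=None)   # beta: (2, V)
    resid = Y - X @ beta
    dof = T - rank
    sigma2 = (resid ** 2).sum(axis=0) / dof                # residual variance per voxel
    c = np.array([1.0, 0.0])                               # contrast selecting the LRM effect
    var_c = c @ np.linalg.pinv(X.T @ X) @ c                # c (X'X)^-1 c'
    t = (c @ beta) / np.sqrt(sigma2 * var_c)
    return t, dof
```

To apply an uncorrected threshold such as the p < 0.001 used in the figures, `t` could be compared against `scipy.stats.t.isf(0.001, dof)`; treating the test as one-sided is an assumption here.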

In this study, the time course analysis indicated that putative language areas were functionally connected under the movie-watching condition. Although signal variability was large among the activation clusters within each language response region (LRR; Figure 2A), the grand mean time courses of five of the six LRRs were highly correlated with one another (pair-wise r, mean ± SD = 0.579 ± 0.144); the exception was AngG, whose mean correlation with the other five LRRs was 0.117 (Figure 2C). The lower correlation of AngG with the other LRRs is consistent with the findings of Blank et al.34. These results supported the feasibility of deriving a language time course model by extracting the main temporal profile of the activation clusters within these functionally connected LRRs.
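
The two computations in this paragraph, summarizing pair-wise correlations among LRR grand mean time courses and extracting a "main temporal profile" from many cluster time courses, can be sketched as below. The section does not state the exact extraction method, so using the first principal component of the z-scored cluster time courses is an assumption, as is reporting the sample (ddof = 1) standard deviation of the pair-wise r values. Both function names are hypothetical.

```python
import numpy as np

def pairwise_r(ts):
    """Hypothetical helper: pair-wise Pearson r among LRR grand mean time courses.

    ts : (T, R) array, one grand mean time course per LRR (columns).
    Returns the (R, R) correlation matrix and the mean and sample SD of its
    off-diagonal (upper-triangle) entries.
    """
    r = np.corrcoef(ts, rowvar=False)       # columns are the variables
    iu = np.triu_indices_from(r, k=1)       # upper triangle, diagonal excluded
    return r, r[iu].mean(), r[iu].std(ddof=1)

def main_profile(clusters):
    """Hypothetical helper: first principal component of cluster time courses.

    clusters : (T, K) array of activation-cluster time courses pooled over subjects.
    Returns a z-scored (T,) time course usable as a response model.
    """
    z = (clusters - clusters.mean(axis=0)) / clusters.std(axis=0)
    u, sv, _ = np.linalg.svd(z, full_matrices=False)
    pc1 = u[:, 0] * sv[0]
    # The SVD sign is arbitrary: orient PC1 to correlate positively with the mean.
    if np.corrcoef(pc1, z.mean(axis=1))[0, 1] < 0:
        pc1 = -pc1
    return (pc1 - pc1.mean()) / pc1.std()
```

A low-correlation region such as AngG could be left out of `clusters` before calling `main_profile`; whether the authors did so is not stated in this section.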

Defining Individual Subjects' Language fROIs and Group-level Language Response Regions (LRRs)

The language network is far more complex than the traditional large-module models composed primarily of Broca's and Wernicke's areas in the left frontal and temporoparietal regions, and it is highly variable across subjects1. Therefore, we generated language fROIs for each subject by finding the voxels that (1) were significantly activated during the task-based language fMRI, (2) responded similarly to their neighboring voxels during the movie-watching condition, and (3) were within a set of language functional partitions32 defined in standard space, which served as structural ROIs in this study. For the movie-watching fMRI, we adopted the regional homogeneity (ReHo) technique, which measures the functional coherence of a given voxel with its nearest neighbors in 3D (26 voxels)29. In a voxel-wise ReHo map, each voxel's Kendall's coefficient of concordance (KCC) quantifies the similarity between the time course of that voxel and those of its nearest neighbors. ReHo has been applied to investigate alterations of resting-state functional connectivity patterns in brain diseases such as Alzheimer's disease35 and Parkinson's disease36. In this study, we extended the use of ReHo to aid the identification of locally functionally connected regions, based on the hypothesis that neighboring voxels of a functional brain area synchronize their activities under the movie-watching condition. Building upon the individual subjects' language fROIs, we defined six group-level “language response regions” (LRRs) by choosing the functional partitions in which more than half of the subjects showed fROIs, so that only brain regions where most subjects showed language-related activations were included when deriving the time course model.
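
The ReHo measure can be sketched directly from its definition in Zang et al.29: for K time series of length n, each ranked over time, Kendall's W = (Σ R_i² − n·R̄²) / (K²(n³ − n)/12), where R_i is the summed rank at time point i and R̄ = K(n + 1)/2. The sketch below is an illustration, not the DPARSF implementation: it assumes no tied values within a time series (so no tie correction), uses the full 3×3×3 cube of 27 voxels regardless of whether neighbors fall inside the mask, and leaves boundary voxels at zero. The function names are hypothetical.

```python
import numpy as np

def kcc(ts):
    """Kendall's coefficient of concordance W for K time series of length n.

    ts : (n, K) array; each column is one voxel's time course.
    Ranks are taken over time within each column; ties are assumed absent
    (typical for continuous BOLD data), so no tie correction is applied.
    """
    n, K = ts.shape
    ranks = ts.argsort(axis=0).argsort(axis=0) + 1.0   # ranks 1..n per column
    R = ranks.sum(axis=1)                              # summed rank per time point
    R_bar = K * (n + 1) / 2.0
    return ((R ** 2).sum() - n * R_bar ** 2) / (K ** 2 * (n ** 3 - n) / 12.0)

def reho_map(bold, mask):
    """Hypothetical helper: ReHo (KCC over a voxel and its 26 neighbors).

    bold : (X, Y, Z, n) array of time series; mask : (X, Y, Z) boolean mask.
    Only interior voxels inside the mask are computed; others stay 0.
    """
    X, Y, Z, n = bold.shape
    out = np.zeros((X, Y, Z))
    for x in range(1, X - 1):
        for y in range(1, Y - 1):
            for z in range(1, Z - 1):
                if not mask[x, y, z]:
                    continue
                cube = bold[x - 1:x + 2, y - 1:y + 2, z - 1:z + 2, :]  # 3x3x3 block
                out[x, y, z] = kcc(cube.reshape(27, n).T)
    return out
```

W equals 1 when all 27 time courses rise and fall in identical rank order and approaches 1/K for unrelated signals, which is why high-ReHo voxels were taken as candidates for locally synchronized, functionally coherent regions.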

It should be emphasized that the task-based language fMRI was used as a localizer for functionally defining language ROIs. In the leave-one-out procedure, the test subject's data were analyzed with the time course model derived independently from the remaining subjects, without using that subject's task-based fMRI. Therefore, when applied to clinical language mapping, this movie-watching paradigm will not depend on the patient's task-based language fMRI.
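
The independence guaranteed by the leave-one-out procedure can be made concrete with a short sketch. For simplicity it builds a hypothetical LRM as the grand mean of the other subjects' z-scored fROI time courses (the actual model-extraction step may differ) and maps the held-out subject by voxel-wise Pearson correlation with that model. The function names and the averaging rule are assumptions for illustration only.

```python
import numpy as np

def zscore(a, axis=0):
    """Standardize along `axis` (population SD, ddof = 0)."""
    return (a - a.mean(axis, keepdims=True)) / a.std(axis, keepdims=True)

def leave_one_out_maps(froi_ts, subject_bold):
    """Hypothetical helper: leave-one-out language maps from movie-watching data.

    froi_ts      : list of (T, K_s) arrays, fROI time courses of subject s.
    subject_bold : list of (T, V_s) arrays, whole-brain time series of subject s.
    Returns one (V_s,) Pearson-correlation map per subject.
    """
    n_sub = len(froi_ts)
    maps = []
    for test in range(n_sub):
        # Model built only from the *other* subjects; the test subject's own
        # fROIs (and hence its task-based fMRI) are never used.
        pooled = np.hstack([zscore(froi_ts[s]) for s in range(n_sub) if s != test])
        lrm = zscore(pooled.mean(axis=1))
        # With ddof = 0 z-scores, Pearson r is the mean product of z-scores.
        Y = zscore(subject_bold[test])
        maps.append(Y.T @ lrm / len(lrm))
    return maps
```

Using correlation rather than a GLM t-map here loses nothing for ranking voxels: with one regressor plus an intercept, t = r·√(T − 2)/√(1 − r²), a monotone function of r.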

Language Areas Identified from the Movie-watching fMRI

Results of the group GLM analysis of the movie-watching fMRI revealed activations localized in the putative Broca's area in the left IFG as well as its right-hemisphere homologue. In the temporal cortex, in addition to the putative Wernicke's area in the left posterior superior temporal gyrus, bilateral activations were found in the middle temporal gyrus, extending dorso-anteriorly to the superior temporal gyrus. This bilateral activation pattern in the temporal cortex is consistent with the frameworks that have been proposed for speech comprehension37,38. In contrast, the task-based language fMRI revealed extensive activations spread throughout the left IFG, with only small activations in the left middle and inferior temporal gyri. This difference in activation pattern likely reflects the fundamental difference between the two paradigms. The antonym generation task with vocalized responses involves word decoding, selection, and overt articulation, and has been shown to activate Broca's area39 and the supplementary motor area (SMA)40. Compared with this word-level task, comprehending the dialogue of the movie clip involves processing many aspects of natural language relevant to novel, contextual, and emotional meaning, and thus engages more extensive brain regions than the well-known left frontal and temporoparietal language areas41-43. Studies have found bilateral activation patterns and emphasized the substantial and unique contribution of the right hemisphere to natural language processing1,20. According to the framework of natural language comprehension proposed by Jung-Beeman20, the left hemisphere performs rapid interpretation and forms tight semantic links, while the right hemisphere serves the relatively coarser function of maintaining broader meaning and recognizing distant relations across a conversation. For example, processing spoken sentences as a whole drove right hemisphere activations located predominantly in the temporal lobe44. It has also been suggested that decoding a conversation involves the left hemisphere language areas that process semantic associations and serial information, as well as the right hemisphere homologues that integrate semantic information into the context to monitor and maintain the conversation topic21,45.

Subcortical activations in the movie-watching fMRI were located in the right thalamus and basal ganglia (putamen and pallidum), whereas those in the task-based fMRI were in the left thalamus and basal ganglia (putamen and caudate nucleus). The thalamus and basal ganglia have been hypothesized to control and adapt cortico-cortical connectivity and to support the composition of cortically provided information, respectively46. A left-hemisphere cortico-thalamic language processing network47,48 has recently been supported by structural connectivity analyses of Broca's area, the basal ganglia, and the thalamus using diffusion-weighted imaging (DWI) fiber tracking49,50. To date, few neuroimaging studies have focused on subcortical involvement during natural language processing. Based on the results of the movie-watching fMRI, we hypothesize that the right thalamus and basal ganglia are involved in monitoring and supporting cortico-cortical activities of the right hemisphere language regions during natural language processing. This hypothesis, and the absence of left subcortical involvement during natural language processing, need further investigation.

Clinical Relevance

The patients' results further supported the feasibility of using this novel movie-watching paradigm for language mapping in patients with space-occupying lesions. In clinical practice, neurosurgical patients with language deficits such as aphasia would simply be asked to watch the movie clip without performing any task. We suggest that patients complete a survey after the scan to confirm that they could follow the movie content and understand the conversations. As in this study, the language response model derived from healthy subjects would then be used as a regressor in a conventional GLM analysis of the patient's data to map the language areas.

Another non-invasive functional mapping technique, magnetoencephalography (MEG), has also been used to map language areas51. Unlike fMRI, which reflects neural activity indirectly through changes in blood flow, MEG measures the magnetic fields produced by stimulus-evoked post-synaptic activity of the cerebral cortex. Both techniques have advantages and limitations; multi-modal analysis combining fMRI and MEG has therefore been reported to enhance the reliability of non-invasive mapping of eloquent cortex51. This movie-watching paradigm could also be adopted in MEG acquisitions for patients who have difficulty performing conventional tasks, taking advantage of its easy, task-free, and less timing-intensive design. An optimized analysis approach would be needed to analyze the movie-evoked MEG responses.

Limitations

One limitation of this study was the lack of behavioral response data during the movie-watching condition, which made it difficult to evaluate subjects' attentional level and to determine which cognitive operations were engaged by the rich stimuli of the movie clip. This may explain some of the variability in the activation time courses across subjects. Previous studies have used questionnaires or surveys to evaluate attentional level and comprehension of movie content14,17. One dilemma is that the absence of overt responses during scanning is intrinsic to our approach for patient populations, because the goal is to make fMRI language mapping easier for neurosurgical patients, particularly those who cannot make overt responses due to language deficits such as aphasia. In the clinical implementation of this movie-watching paradigm, patients' engagement may not be evaluated; the LRM used for data analysis should therefore be derived from an independent group of healthy, attentive subjects.

In this study, our clinical antonym generation task-based fMRI served as a reference for assessing the performance of the movie-watching fMRI in mapping individuals' language areas. Although the language maps from the two paradigms only partially overlapped, the movie-watching fMRI was able to identify putative language areas in the frontal and temporoparietal cortices at the individual-subject level. Both paradigms, however, share an inherent limitation of fMRI in pre-surgical brain mapping. Because fMRI is an activation technique, the movie-watching paradigm with naturalistic language stimuli may activate many participating components of the language network (such as the “domain-general regions” defined by Fedorenko et al.52), so the resulting language map may not specifically show the critical language areas that neurosurgeons want to preserve during surgery. Future work is therefore needed to assess and compare the two paradigms by validating the identified areas against the clinical gold-standard ECS technique. From the data analysis perspective, the temporal profile of the critical language areas was characterized in this study by extracting the overall time course response from individuals' language fROIs. Further refinement of this time course model, or application of multivariate approaches such as independent component analysis (ICA)53 and multi-voxel pattern analysis (MVPA)54, may improve the specificity of the language maps obtained from the movie-watching fMRI.

Conclusions

We report the development of a new fMRI paradigm for mapping individual subjects' language areas using a movie-watching condition and a corresponding language response model. Our results in healthy subjects and two brain tumor patients suggest that this easy-to-perform, “task-free” paradigm was able to identify putative language areas in individual subjects. This novel paradigm can serve as a complementary approach for neurosurgical patients who cannot perform conventional task-based language fMRI, thus expanding the clinical populations eligible for fMRI language mapping. In addition, the rich naturalistic language stimuli provide an ecologically valid condition for activating the complex language network. Future studies of this paradigm should focus on optimizing the analytical approach, deploying it in more neurosurgical patients who may have language deficits and space-occupying lesions, and validating it against clinical gold-standard language mapping techniques.

Acknowledgments

This work is supported by the National Institute of Neurological Disorders and Stroke (NINDS), the National Center for Research Resources (NCRR), and the National Institute of Biomedical Imaging and Bioengineering (NIBIB) of the National Institutes of Health (NIH) through Grant Numbers R21NS075728 (AJG), P41EB015898 (AJG), P41RR019703 (AJG), and P01CA067165 (AJG), and The Brain Science Foundation (YT). The authors are grateful to two anonymous reviewers for insightful comments on an earlier version of the manuscript.

References

1. Bookheimer S. Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu Rev Neurosci. 2002;25:151–88. doi: 10.1146/annurev.neuro.25.112701.142946.

2. Price CJ. A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. Neuroimage. 2012;62:816–47. doi: 10.1016/j.neuroimage.2012.04.062.

3. Bookheimer S. Pre-surgical language mapping with functional magnetic resonance imaging. Neuropsychol Rev. 2007;17:145–55. doi: 10.1007/s11065-007-9026-x.

4. Orringer DA, Vago DR, Golby AJ. Clinical applications and future directions of functional MRI. Semin Neurol. 2012;32:466–75. doi: 10.1055/s-0032-1331816.

5. Binder JR, Swanson SJ, Hammeke TA, et al. Determination of language dominance using functional MRI: a comparison with the Wada test. Neurology. 1996;46:978–84. doi: 10.1212/wnl.46.4.978.

6. Bizzi A, Blasi V, Falini A, et al. Presurgical functional MR imaging of language and motor functions: validation with intraoperative electrocortical mapping. Radiology. 2008;248:579–89. doi: 10.1148/radiol.2482071214.

7. Roux FE, Boulanouar K, Lotterie JA, Mejdoubi M, LeSage JP, Berry I. Language functional magnetic resonance imaging in preoperative assessment of language areas: correlation with direct cortical stimulation. Neurosurgery. 2003;52:1335–45. doi: 10.1227/01.neu.0000064803.05077.40. discussion 45-47.

8. Stippich C, Rapps N, Dreyhaupt J, et al. Localizing and lateralizing language in patients with brain tumors: feasibility of routine preoperative functional MR imaging in 81 consecutive patients. Radiology. 2007;243:828–36. doi: 10.1148/radiol.2433060068.

9. Tharin S, Golby A. Functional brain mapping and its applications to neurosurgery. Neurosurgery. 2007;60:185–201. doi: 10.1227/01.NEU.0000255386.95464.52. discussion -2.

10. Rigolo L, Stern E, Deaver P, Golby AJ, Mukundan S., Jr Development of a clinical functional magnetic resonance imaging service. Neurosurg Clin N Am. 2011;22:307–14. doi: 10.1016/j.nec.2011.01.001.

11. Tie Y, Rigolo L, Norton IH, et al. Defining language networks from resting-state fMRI for surgical planning--a feasibility study. Hum Brain Mapp. 2014;35:1018–30. doi: 10.1002/hbm.22231.

12. Hasson U, Malach R, Heeger DJ. Reliability of cortical activity during natural stimulation. Trends Cogn Sci. 2010;14:40–8. doi: 10.1016/j.tics.2009.10.011.

13. Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–40. doi: 10.1126/science.1089506.

14. Jaaskelainen IP, Koskentalo K, Balk MH, et al. Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. Open Neuroimag J. 2008;2:14–9. doi: 10.2174/1874440000802010014.

15. Kauppi JP, Jaaskelainen IP, Sams M, Tohka J. Inter-subject correlation of brain hemodynamic responses during watching a movie: localization in space and frequency. Front Neuroinform. 2010;4:5. doi: 10.3389/fninf.2010.00005.

16. Spiers HJ, Maguire EA. Decoding human brain activity during real-world experiences. Trends Cogn Sci. 2007;11:356–65. doi: 10.1016/j.tics.2007.06.002.

17. Wilson SM, Molnar-Szakacs I, Iacoboni M. Beyond superior temporal cortex: intersubject correlations in narrative speech comprehension. Cereb Cortex. 2008;18:230–42. doi: 10.1093/cercor/bhm049.

18. Golland Y, Bentin S, Gelbard H, et al. Extrinsic and intrinsic systems in the posterior cortex of the human brain revealed during natural sensory stimulation. Cereb Cortex. 2007;17:766–77. doi: 10.1093/cercor/bhk030.

19. Bartels A, Zeki S. Functional brain mapping during free viewing of natural scenes. Hum Brain Mapp. 2004;21:75–85. doi: 10.1002/hbm.10153.

20. Jung-Beeman M. Bilateral brain processes for comprehending natural language. Trends Cogn Sci. 2005;9:512–8. doi: 10.1016/j.tics.2005.09.009.

21. Xu J, Kemeny S, Park G, Frattali C, Braun A. Language in context: emergent features of word, sentence, and narrative comprehension. Neuroimage. 2005;25:1002–15. doi: 10.1016/j.neuroimage.2004.12.013.

22. Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

23. Tie Y, Suarez RO, Whalen S, Radmanesh A, Norton IH, Golby AJ. Comparison of blocked and event-related fMRI designs for pre-surgical language mapping. Neuroimage. 2009;47(Suppl 2):T107–15. doi: 10.1016/j.neuroimage.2008.11.020.

24. Power JD, Barnes KA, Snyder AZ, Schlaggar BL, Petersen SE. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage. 2012;59:2142–54. doi: 10.1016/j.neuroimage.2011.10.018.

25. Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An integrated software suite for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–59. doi: 10.1136/jamia.2001.0080443.

26. Van Essen DC. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–62. doi: 10.1016/j.neuroimage.2005.06.058.

27. Friston KJ, Holmes AP, Worsley KJ, Poline JP, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995;2:189–210.

28. Voyvodic JT. Activation mapping as a percentage of local excitation: fMRI stability within scans, between scans and across field strengths. Magn Reson Imaging. 2006;24:1249–61. doi: 10.1016/j.mri.2006.04.020.

29. Zang Y, Jiang T, Lu Y, He Y, Tian L. Regional homogeneity approach to fMRI data analysis. Neuroimage. 2004;22:394–400. doi: 10.1016/j.neuroimage.2003.12.030.

30. Yan C, Zang Y. DPARSF: A MATLAB Toolbox for “Pipeline” Data Analysis of Resting-State fMRI. Front Syst Neurosci. 2010;4:13. doi: 10.3389/fnsys.2010.00013.

31. Kendall M, Gibbons JDR. Correlation Methods. Oxford University Press; 1990.

32. Fedorenko E, Hsieh PJ, Nieto-Castanon A, Whitfield-Gabrieli S, Kanwisher N. New method for fMRI investigations of language: defining ROIs functionally in individual subjects. J Neurophysiol. 2010;104:1177–94. doi: 10.1152/jn.00032.2010.

33. Binder JR, Swanson SJ, Hammeke TA, Sabsevitz DS. A comparison of five fMRI protocols for mapping speech comprehension systems. Epilepsia. 2008;49:1980–97. doi: 10.1111/j.1528-1167.2008.01683.x.

34. Blank I, Kanwisher N, Fedorenko E. A functional dissociation between language and multiple-demand systems revealed in patterns of BOLD signal fluctuations. J Neurophysiol. 2014;112:1105–18. doi: 10.1152/jn.00884.2013.

35. He Y, Wang L, Zang Y, et al. Regional coherence changes in the early stages of Alzheimer's disease: a combined structural and resting-state functional MRI study. Neuroimage. 2007;35:488–500. doi: 10.1016/j.neuroimage.2006.11.042.

36. Wu T, Long X, Zang Y, et al. Regional homogeneity changes in patients with Parkinson's disease. Hum Brain Mapp. 2009;30:1502–10. doi: 10.1002/hbm.20622.

37. Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

38. Scott SK, Blank CC, Rosen S, Wise RJ. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123(Pt 12):2400–6. doi: 10.1093/brain/123.12.2400.

39. Brannen JH, Badie B, Moritz CH, Quigley M, Meyerand ME, Haughton VM. Reliability of functional MR imaging with word-generation tasks for mapping Broca's area. AJNR Am J Neuroradiol. 2001;22:1711–8.

40. Alario FX, Chainay H, Lehericy S, Cohen L. The role of the supplementary motor area (SMA) in word production. Brain Res. 2006;1076:129–43. doi: 10.1016/j.brainres.2005.11.104.

41. Buchanan TW, Lutz K, Mirzazade S, et al. Recognition of emotional prosody and verbal components of spoken language: an fMRI study. Brain Res Cogn Brain Res. 2000;9:227–38. doi: 10.1016/s0926-6410(99)00060-9.

42. Ferstl EC, Neumann J, Bogler C, von Cramon DY. The extended language network: a meta-analysis of neuroimaging studies on text comprehension. Hum Brain Mapp. 2008;29:581–93. doi: 10.1002/hbm.20422.

43. Yarkoni T, Speer NK, Zacks JM. Neural substrates of narrative comprehension and memory. Neuroimage. 2008;41:1408–25. doi: 10.1016/j.neuroimage.2008.03.062.

44. Kircher TT, Brammer M, Tous Andreu N, Williams SC, McGuire PK. Engagement of right temporal cortex during processing of linguistic context. Neuropsychologia. 2001;39:798–809. doi: 10.1016/s0028-3932(01)00014-8.

45. Caplan R, Dapretto M. Making sense during conversation: an fMRI study. Neuroreport. 2001;12:3625–32. doi: 10.1097/00001756-200111160-00050.

46. Klostermann F, Krugel LK, Ehlen F. Functional roles of the thalamus for language capacities. Front Syst Neurosci. 2013;7:32. doi: 10.3389/fnsys.2013.00032.

47. Alain C, Reinke K, McDonald KL, et al. Left thalamo-cortical network implicated in successful speech separation and identification. Neuroimage. 2005;26:592–9. doi: 10.1016/j.neuroimage.2005.02.006.

48. Crosson B, Benefield H, Cato MA, et al. Left and right basal ganglia and frontal activity during language generation: contributions to lexical, semantic, and phonological processes. J Int Neuropsychol Soc. 2003;9:1061–77. doi: 10.1017/S135561770397010X.

49. Ford AA, Triplett W, Sudhyadhom A, et al. Broca's area and its striatal and thalamic connections: a diffusion-MRI tractography study. Front Neuroanat. 2013;7:8. doi: 10.3389/fnana.2013.00008.

50. Lemaire JJ, Golby A, Wells WM, 3rd, et al. Extended Broca's area in the functional connectome of language in adults: combined cortical and subcortical single-subject analysis using fMRI and DTI tractography. Brain Topogr. 2013;26:428–41. doi: 10.1007/s10548-012-0257-7.

51. Stufflebeam SM. Clinical magnetoencephalography for neurosurgery. Neurosurg Clin N Am. 2011;22:153–67. vii–viii. doi: 10.1016/j.nec.2010.11.006.

52. Fedorenko E, Duncan J, Kanwisher N. Broad domain generality in focal regions of frontal and parietal cortex. Proc Natl Acad Sci U S A. 2013;110:16616–21. doi: 10.1073/pnas.1315235110.

53. Bartels A, Zeki S. The chronoarchitecture of the human brain--natural viewing conditions reveal a time-based anatomy of the brain. Neuroimage. 2004;22:419–33. doi: 10.1016/j.neuroimage.2004.01.007.

54. Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10:424–30. doi: 10.1016/j.tics.2006.07.005.