Abstract

There are many inertial sensor-based foot pose estimation algorithms. In this paper, we present a methodology to improve the accuracy of foot pose estimation using two low-cost distance sensors (VL6180) in addition to an inertial sensor unit. The distance sensor is a time-of-flight range finder that can measure distances up to 20 cm. A Kalman filter with 21 states is proposed to estimate both the calibration parameters (relative pose of the distance sensors with respect to the inertial sensor unit) and the foot pose. Once the calibration parameters are obtained, a Kalman filter with nine states can be used to estimate the foot pose. Through four activities (walking, dancing steps, ball kicking, jumping), it is shown that the proposed algorithm significantly improves the vertical position estimation.

Keywords: inertial sensor, distance sensor, foot pose estimation, Kalman filters

1. Introduction

Foot pose (position and orientation) estimation is used in many areas, such as gait analysis [1–3], exergaming [4,5] and pedestrian navigation systems [6]. The most accurate method to estimate foot pose is using an optical motion tracker. However, optical motion tracking is rather expensive, and it can only capture the motion in a limited space, which is determined by the number of cameras.

Recently, inertial sensor-based foot pose estimation methods have been increasingly used, since the sensor can be attached to a shoe, which does not require any infrastructure in the environment and, thus, removes the space constraint imposed by optical motion trackers. As inertial sensors are becoming less expensive and smaller, inertial sensor-based motion tracking (ISBMT) is expected to become more popular.

The basic principle of ISBMT is integration of the gyroscope output (which gives the orientation) and double integration of the accelerometer output (which gives the position). In the process, the sensor noise is also integrated. For example, if there is a nonzero accelerometer bias term, it produces a position estimation error that grows in proportion to the square of the elapsed time [7]. Furthermore, an initial orientation error also significantly affects the position estimation error, since the gravitational acceleration is not correctly removed during the position estimation [7].
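As a rough illustration of this error growth, the following sketch double-integrates a constant accelerometer bias at a 100 Hz sampling rate. The bias value of 0.05 m/s² and the function name are arbitrary assumptions chosen for illustration, not properties of any particular sensor.

```python
def position_error_from_bias(bias, duration, rate_hz=100.0):
    """Double-integrate a constant accelerometer bias (m/s^2) over `duration` seconds.

    Returns the accumulated position error in metres.
    """
    dt = 1.0 / rate_hz
    velocity_error = 0.0
    position_error = 0.0
    for _ in range(int(round(duration * rate_hz))):
        # Position update uses the velocity at the start of the step plus the
        # contribution of the constant bias acting during the step.
        position_error += velocity_error * dt + 0.5 * bias * dt * dt
        velocity_error += bias * dt
    return position_error


if __name__ == "__main__":
    for seconds in (1.0, 2.0, 4.0):
        print(seconds, position_error_from_bias(0.05, seconds))
```

Doubling the elapsed time quadruples the position error, which is the quadratic growth described above.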

In foot pose tracking, the error increase can be mitigated using zero velocity updating [8,9]. Whenever a foot touches the ground, we know the velocity of the foot is zero. Using this zero velocity information, the estimation error can be significantly reduced [6].

Another approach to reduce the estimation error is to use a smoother [10]. The smoother uses both prior and posterior data to estimate the current state. This combination reduces the estimation error. One drawback of a smoother is that its computation cannot be done online and, thus, is not suitable for applications, such as gaming.

The pose estimation error can also be reduced by using additional sensors. In [11], a pressure sensor is attached to a shoe to accurately detect the zero velocity intervals, which increases the estimation accuracy. In [12], an ultrasound range sensor is used to measure the distance to walls. The ultrasound sensor does not improve foot pose estimation, but improves the location accuracy for indoor pedestrian navigation. In [13], a camera is used to read markers on the floor, which gives the absolute position and orientation of a foot. One disadvantage of this approach is that the markers must be installed on the floor, so the experiment space is limited by the number of markers. In [14], a camera and infrared LEDs are used to measure the relative pose between two feet.

In this paper, we present a methodology to improve the accuracy of foot pose estimation by attaching low-cost distance sensors on a shoe in addition to an inertial sensor unit. Since the distance sensor gives height information, it can help to improve height estimation accuracy. The improved accuracy could be helpful in gait analysis and exergaming applications. The foot pose estimation algorithm is implemented using a Kalman filter. To combine inertial sensors and distance sensors, the relative pose between two sensors should be known. This pose parameter is included in the proposed Kalman filter.

The paper is organized as follows. In Section 2, the system hardware and coordinate systems are introduced. In Section 3, dynamic equations of the Kalman filter for ISBMT are given. In Section 4, measurement equations of the Kalman filter are provided. In Section 5, the proposed algorithm is tested, and the estimated positions are compared with optical tracker measurement values. The discussion is given in Section 6.

2. System Overview

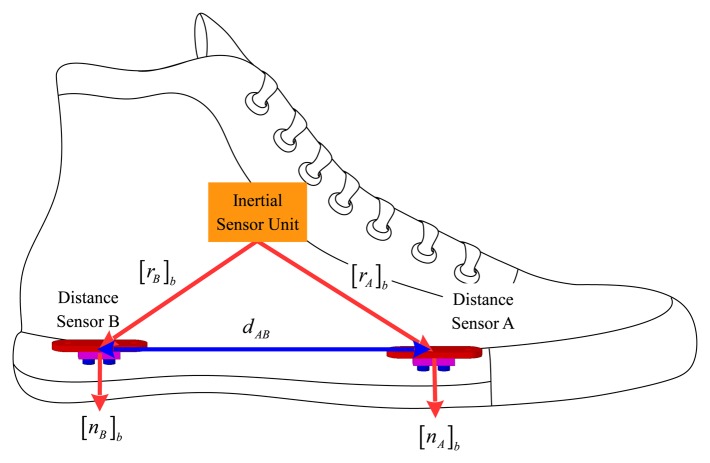

An inertial measurement unit (three-axis accelerometers and three-axis gyroscopes, Xsens MTi) is attached on a shoe as in Figure 1. The sampling frequency of the inertial sensors is 100 Hz. Two distance sensors (VL6180 [15]) are also attached on a shoe, where symbols A and B are used to distinguish the two sensors. The distance sensor VL6180 measures the distance by measuring the time-of-flight of infrared light, and the measurement range is up to 20 cm. This sensor is most often used as a proximity sensor in smartphones. The sampling frequency of the distance sensors is 33.33 Hz.

Figure 1. Inertial and distance sensors on a shoe.

Two coordinate systems are used in this paper: the body and world coordinate systems. The three axes of the body coordinate system coincide with the three axes of the inertial sensor unit. The z axis of the world coordinate system is in the direction of the local gravitational field: the z axis is pointing upward. The x and y axes are chosen arbitrarily. The origin of the world coordinate system is assumed to be on the floor.

The relative position and orientation of each distance sensor with respect to the inertial sensor unit are required in the foot pose estimation algorithm. As shown in Figure 1, the positions of the two distance sensors are denoted by [rA]b ∈ R3 and [rB]b ∈ R3, which are the position coordinates in the body coordinate system. The notation [p]b ([p]w) for a vector p ∈ R3 is used to emphasize that a vector is represented in the body (world) coordinate system. When there is no confusion, [p]b (or [p]w) is just expressed by p. The pointing directions of the distance sensors are denoted by unit vectors nA ∈ R3 and nB ∈ R3.

The distance sensor parameters (rA, rB, nA, nB in Figure 1) could be determined using a ruler and a protractor. It would be, however, not easy to determine the parameters with high accuracy. Thus, the small errors in the parameters are estimated in the Kalman filter. We model the parameters as follows:

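$$ r_A = \hat{r}_A + \bar{r}_A, \quad r_B = \hat{r}_B + \bar{r}_B, \quad n_A = \hat{n}_A + \bar{n}_A, \quad n_B = \hat{n}_B + \bar{n}_B \tag{1} $$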

where r̂A ∼ n̂B are initial estimated parameter values (most likely measured values using a ruler and a protractor) and r̄A ∼ n̄B are errors in the parameter estimation.

The distance between the two distance sensors is denoted by dAB = ‖rA − rB‖, which is measured with a ruler. This scalar quantity can be measured more accurately than the vector parameters, which are rather difficult to measure and sometimes require guesswork. Thus, the error of dAB is not estimated. The measured value of dAB is denoted by zAB:

$$ z_{AB} = d_{AB} + v_{AB} \tag{2} $$

where vAB is the measurement noise.

3. Kalman Filter for ISBMT

In this section, the foot pose tracking algorithm is given.

Let r ∈ R3 and v ∈ R3 denote the position and velocity of the inertial sensor unit in the world coordinate system. Let q ∈ R4 be the quaternion [16] representing the rotation relationship between the body and world coordinate systems. Let C(q) ∈ SO(3) be the rotation matrix corresponding to the quaternion q.

3.1. Basic Pose Equations

The basic equations for r, v and q are given by [17]:

$$ \dot{q} = \tfrac{1}{2}\,\Omega(\omega)\,q, \quad \dot{v} = C(q)'\,[a]_b, \quad \dot{r} = v \tag{3} $$

where [a]b ∈ R3 is the acceleration expressed in the body coordinate system and ω = [ωx ωy ωz]′ is the angular velocity of the body coordinate system with respect to the world coordinate system. The symbol Ω(ω) is defined by:

$$ \Omega(\omega) = \begin{bmatrix} 0 & -\omega_x & -\omega_y & -\omega_z \\ \omega_x & 0 & \omega_z & -\omega_y \\ \omega_y & -\omega_z & 0 & \omega_x \\ \omega_z & \omega_y & -\omega_x & 0 \end{bmatrix} $$

3.2. Numerical Integration

The basic principle of ISBMT is that q, v and r can be computed by numerically integrating Equation (3) [7]. To do that, we need to know ω and [a]b, which can be measured using gyroscopes and accelerometers.

The gyroscope output (yg ∈ R3) and accelerometer output (ya ∈ R3) can be modeled as follows:

$$ y_g = \omega + v_g, \quad y_a = [a]_b + C(q)\,[\tilde{g}]_w + v_a \tag{4} $$

where [g̃]w = [ 0 0 g ]′ ∈ R3 is the local gravitational vector in the world coordinate system and g is the magnitude of the gravitational acceleration. The measurement noises vg and va are assumed to be uncorrelated zero mean white Gaussian.

We can integrate Equation (3) by replacing ω by yg and replacing [a]b by ya − C(q)g̃. The numerical integration algorithm is given in [18]. Let the integrated values be denoted by q̂, r̂ and v̂.
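One integration step can be sketched as follows. This is a minimal first-order Euler scheme written for illustration, not the algorithm of [18]. It assumes a scalar-first unit quaternion, takes C(q) as the rotation from the world to the body coordinate system (so that C(q)′ maps body to world), and uses an assumed value g = 9.8 m/s²; the function names are hypothetical.

```python
import math

GRAVITY = 9.8  # assumed magnitude of the gravitational acceleration (m/s^2)


def rotation_matrix(q):
    """C(q): world-to-body rotation matrix of a scalar-first unit quaternion."""
    q0, q1, q2, q3 = q
    return [
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 + q0*q3), 2*(q1*q3 - q0*q2)],
        [2*(q1*q2 - q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 + q0*q1)],
        [2*(q1*q3 + q0*q2), 2*(q2*q3 - q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ]


def strapdown_step(q, v, r, y_g, y_a, dt):
    """Propagate quaternion q, velocity v and position r by one sample period dt."""
    wx, wy, wz = y_g
    q0, q1, q2, q3 = q
    # Quaternion kinematics: q_dot = 0.5 * Omega(y_g) * q
    dq = [
        0.5 * (-q1*wx - q2*wy - q3*wz),
        0.5 * (q0*wx + q2*wz - q3*wy),
        0.5 * (q0*wy - q1*wz + q3*wx),
        0.5 * (q0*wz + q1*wy - q2*wx),
    ]
    q_new = [qi + dqi * dt for qi, dqi in zip(q, dq)]
    norm = math.sqrt(sum(c * c for c in q_new))
    q_new = [c / norm for c in q_new]  # keep the quaternion at unit length

    # World-frame acceleration: C(q)' * y_a minus gravity along the world z axis
    C = rotation_matrix(q)
    a_w = [sum(C[j][i] * y_a[j] for j in range(3)) for i in range(3)]
    a_w[2] -= GRAVITY

    r_new = [r[i] + v[i] * dt + 0.5 * a_w[i] * dt * dt for i in range(3)]
    v_new = [v[i] + a_w[i] * dt for i in range(3)]
    return q_new, v_new, r_new
```

For a level, stationary sensor (zero gyroscope output and accelerometer output [0 0 g]′), the position and velocity stay at their initial values, as expected.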

Since ω ≠ yg and [a]b ≠ ya − C(q̂)g̃, there are errors in q̂, r̂ and v̂, which are denoted by q̄ ∈ R3, r̄ ∈ R3 and v̄ ∈ R3:

| (5) |

where ⊗ denotes the quaternion multiplication and q* is the conjugate quaternion of q ∈ R4. The definition of q̄ ∈ R3×1 is from the assumption that the estimation error of q̂ is small, and thus, the following is satisfied [19]:

| (6) |

The errors q̄, r̄ and v̄ along with the parameter errors r̄A ∼ n̄B in Equation (1) are estimated in the Kalman filter. Combining nine states in Equation (5) and 12 states in Equation (1), we have the following state for a Kalman filter:

| (7) |

Once the calibration parameters r̂A ∼ n̂B are estimated, the parameter error terms r̄A ∼ n̄B in Equation (7) can be removed for fast computation. The dynamic equation of x is given by:

| (8) |

where:

The dynamic equations for q̄, r̄ and v̄ are from the result in [20]. The derivatives of r̄A ∼ n̄B are zero, since rA ∼ nB are constant parameters.

4. Measurement Equation of the Kalman Filter

Two measurement equations are used in the Kalman filter. One is the measurement from distance sensors (Section 4.1), and the other is the measurement equation using the zero velocity intervals (Section 4.2).

4.1. Distance Sensor Output and Parameters

The distance sensor outputs zA ∈ R and zB ∈ R can be modeled as follows:

$$ z_A = d_A + v_A, \quad z_B = d_B + v_B \tag{9} $$

where dA and dB are true distance values of sensors A and B, respectively. Symbols vA and vB denote measurement noises of A and B, respectively. We note that dA and dB are not the same as the heights of distance sensors A and B if they are not perpendicular to the floor. The heights can be obtained from dA and dB if we know the orientation of distance sensors.

Assuming that the floor is flat and the origin of the world coordinate system lies on the floor, the following is satisfied:

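$$ [\,0\ \ 0\ \ 1\,]\left( r + C(q)'(r_A + n_A d_A) \right) = 0, \quad [\,0\ \ 0\ \ 1\,]\left( r + C(q)'(r_B + n_B d_B) \right) = 0 \tag{10} $$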

In Equation (10), −(rA + nA dA) is a vector (in the body coordinate system) from a point on the floor (the intersection of the line along nA with the floor plane) to the inertial sensor. After pre-multiplying by C(q)′ (the rotation matrix from the body coordinate system to the world coordinate system), the third component of −C(q)′(rA + nA dA) is the height of the inertial sensor.
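The geometric relation described above can be sketched numerically: the height of the inertial sensor is the negated third component of C(q)′(rA + nA dA). The snippet assumes a scalar-first unit quaternion with C(q) rotating world coordinates into body coordinates; the mounting values in the example are hypothetical and are not the values used in the experiments.

```python
import math


def rotation_matrix(q):
    """C(q): world-to-body rotation matrix of a scalar-first unit quaternion."""
    q0, q1, q2, q3 = q
    return [
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 + q0*q3), 2*(q1*q3 - q0*q2)],
        [2*(q1*q2 - q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 + q0*q1)],
        [2*(q1*q3 + q0*q2), 2*(q2*q3 - q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ]


def imu_height(q, r_sensor, n_sensor, distance):
    """Height of the inertial sensor above a flat floor at z = 0.

    r_sensor, n_sensor: position and unit pointing direction of the distance
    sensor in the body frame; distance: range reading along n_sensor.
    """
    C = rotation_matrix(q)
    p_body = [r_sensor[i] + n_sensor[i] * distance for i in range(3)]
    # The third row of C(q)' is the third column of C(q).
    return -sum(C[j][2] * p_body[j] for j in range(3))


if __name__ == "__main__":
    level = [1.0, 0.0, 0.0, 0.0]
    tilted = [math.cos(math.radians(30)), math.sin(math.radians(30)), 0.0, 0.0]
    print(imu_height(level, [0.05, 0.0, -0.02], [0.0, 0.0, -1.0], 0.10))
    print(imu_height(tilted, [0.0, 0.0, 0.0], [0.0, 0.0, -1.0], 0.10))
```

In the tilted case the body is rotated by 60° about the x axis, so a 10 cm reading corresponds to a height of only 5 cm, which illustrates why dA is generally not the same as the sensor height.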

Now, we relate Equation (10) to the state Equation (7). Note that [21]:

| (11) |

Inserting Equations (1), (5), (9) and (11), into the first equation of Equation (10), we have the following:

| (12) |

Assuming that the error terms (q̄, r̄A, n̄A, r̄) and the noise term (vA) are small, we can ignore the product terms: for example, [q̄×]r̄A is ignored. By ignoring the product terms, we obtain:

| (13) |

where:

Thus, the measurement z̃A is related to the state x as follows:

| (14) |

where:

Similarly, we can derive a measurement equation for the distance sensor B:

| (15) |

where:

In addition to Equations (13) and (15), some constraints are added to the measurement equations. The first constraint is that nA and nB should be unit vectors, since they represent direction vectors. The second constraint is that ‖rA − rB‖ = dAB.

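The constraint that nA and nB are unit vectors is expressed as:

$$ n_A' n_A = 1, \quad n_B' n_B = 1 \tag{16} $$

Substituting nA = n̂A + n̄A from Equation (1) and neglecting the second-order term n̄A′n̄A (assuming that n̄A is small), we have the following approximation (and similarly for nB):

$$ 1 - \hat{n}_A' \hat{n}_A \approx 2\,\hat{n}_A' \bar{n}_A, \quad 1 - \hat{n}_B' \hat{n}_B \approx 2\,\hat{n}_B' \bar{n}_B \tag{17} $$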

Thus, constraints on nA and nB are imposed through the following measurement equations:

| (18) |

where:

The noise term vconstraint ∈ R2×1 is an artificial noise reflecting the fact that the constraint Equation (17) is an approximation.
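To see how good the first-order treatment of the unit-norm constraint is, the following sketch compares the exact residual 1 − n̂′n̂ with its linearization 2n̂′n̄ for a direction estimate that is slightly off. The numerical values and function names are hypothetical; the difference between the two quantities is exactly the neglected second-order term n̄′n̄.

```python
def unit_norm_residual(n_hat):
    """Pseudo-measurement 1 - n_hat' n_hat for the unit-norm constraint."""
    return 1.0 - sum(c * c for c in n_hat)


def unit_norm_row(n_hat):
    """Row vector 2 * n_hat' that multiplies the direction error n_bar."""
    return [2.0 * c for c in n_hat]


if __name__ == "__main__":
    n_true = [1.0, 0.0, 0.0]        # hypothetical true direction
    n_hat = [0.999, 0.02, -0.01]    # hypothetical initial estimate
    n_bar = [t - h for t, h in zip(n_true, n_hat)]
    exact = unit_norm_residual(n_hat)
    linear = sum(h * e for h, e in zip(unit_norm_row(n_hat), n_bar))
    print(exact, linear, exact - linear)
```

The gap between the exact and linearized values is what the artificial noise term is meant to absorb.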

Inserting Equation (2) into the constraint ‖rA − rB‖ = dAB, we have:

| (19) |

Since Equation (19) is nonlinear in the parameter errors, an extended Kalman filtering technique (linearization around the current parameter estimates) is used for this measurement.
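The linearization of the distance constraint can be sketched as follows: the partial derivative of ‖rA − rB‖ with respect to rA is the unit vector along r̂A − r̂B, and the derivative with respect to rB is its negative. The function name is hypothetical, and how these rows are placed in the full measurement matrix depends on the state ordering, which is not assumed here.

```python
import math


def distance_and_jacobian(r_a_hat, r_b_hat):
    """Return ||r_a_hat - r_b_hat|| and its gradients w.r.t. r_A and r_B."""
    diff = [a - b for a, b in zip(r_a_hat, r_b_hat)]
    dist = math.sqrt(sum(c * c for c in diff))
    unit = [c / dist for c in diff]
    # Gradient w.r.t. r_A is the unit vector; w.r.t. r_B it is the negative.
    return dist, unit, [-c for c in unit]


if __name__ == "__main__":
    # Initial position values listed in Table 1
    dist, h_a, h_b = distance_and_jacobian([-0.008, 0.080, 0.0], [-0.006, -0.062, 0.0])
    print(dist, h_a, h_b)
```

With the initial values from Table 1, the predicted distance is about 0.1420 m, which agrees with the ruler measurement zAB = 0.142.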

| (20) |

where:

In summary, Equations (14), (15), (18) and (20) are used as measurement equations for the Kalman filter if distance sensor data zA and zB are available. In this case, the measurement equation is given by:

4.2. Zero Velocity Updating

In addition to the distance sensor measurements, zero velocity updating is used as a measurement equation. If we know when a foot is not moving (for example, when a foot is on the ground), the velocity error in the ISBMT algorithm can be reset, which significantly reduces foot pose estimation errors. Since velocity sensors (such as the Doppler velocity sensor in [22]) are not used, the zero velocity intervals must be detected indirectly from the inertial sensor data; many zero velocity detection algorithms have been proposed for this purpose [8,23].

In this paper, we use a simple zero velocity detection algorithm. Let Zm be a set of all discrete time indices belonging to the zero velocity intervals. The discrete time k belongs to Zm if the following conditions are satisfied:

| (21) |

where Ng and Na are even integers.
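A window-based detector of this kind can be sketched as follows. The exact conditions of Equation (21) are not reproduced here; the sketch assumes a common form in which the gyroscope norm stays below a threshold over a window of Ng + 1 samples centered at k, and the change between consecutive accelerometer samples stays below a threshold over a window of Na + 1 samples. The threshold values and default window sizes are hypothetical.

```python
import math


def _norm(vec):
    return math.sqrt(sum(c * c for c in vec))


def zero_velocity_indices(gyro, accel, n_g=10, n_a=10, b_g=0.8, b_a=1.5):
    """Return the discrete time indices k judged to be in zero velocity intervals.

    gyro, accel: lists of 3-element samples (rad/s and m/s^2).
    n_g, n_a: even window sizes; b_g, b_a: assumed thresholds.
    """
    n = len(gyro)
    gyro_ok = [_norm(w) <= b_g for w in gyro]
    # Change between consecutive accelerometer samples (first sample has no predecessor).
    accel_ok = [True] + [
        _norm([a - b for a, b in zip(accel[i], accel[i - 1])]) <= b_a
        for i in range(1, n)
    ]
    half = max(n_g, n_a) // 2
    indices = []
    for k in range(half, n - half):
        gyro_window = gyro_ok[k - n_g // 2: k + n_g // 2 + 1]
        accel_window = accel_ok[k - n_a // 2: k + n_a // 2 + 1]
        if all(gyro_window) and all(accel_window):
            indices.append(k)
    return indices
```

Requiring the conditions to hold over a whole window, rather than at a single sample, avoids declaring zero velocity at isolated instants where the sensor outputs happen to be small during a swing.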

During zero velocity intervals, we assume the following:

| (22) |

Inserting Equation (5) into Equation (22), we have the following measurement equation in the Kalman filter.

| (23) |

where:

The noise term vzero ∈ R3×1 is an artificial noise reflecting the fact that the true velocity may not be zero (the velocity may be almost zero, but not exactly zero).
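The effect of a zero velocity update can be illustrated with a deliberately simplified one-axis example with two error states (position error and velocity error) instead of the full state. The pseudo-measurement is 0 − v̂ and the measurement row selects the velocity error. The covariance values below are hypothetical except for the noise variance 0.0001, which is the per-axis value listed in Table 1 for Equation (23).

```python
def zupt_update(r_hat, v_hat, P, r_zero):
    """One-axis zero velocity update.

    r_hat, v_hat: integrated position and velocity along one axis.
    P: 2x2 covariance of [position error, velocity error].
    r_zero: variance of the artificial zero velocity noise.
    """
    # Measurement row H = [0 1]; innovation covariance S = H P H' + R
    s = P[1][1] + r_zero
    gain = [P[0][1] / s, P[1][1] / s]
    innovation = 0.0 - v_hat  # the predicted error state is zero before the update
    r_new = r_hat + gain[0] * innovation
    v_new = v_hat + gain[1] * innovation
    # P_new = (I - K H) P
    P_new = [
        [P[0][0] - gain[0] * P[1][0], P[0][1] - gain[0] * P[1][1]],
        [P[1][0] - gain[1] * P[1][0], P[1][1] - gain[1] * P[1][1]],
    ]
    return r_new, v_new, P_new


if __name__ == "__main__":
    P = [[0.04, 0.02], [0.02, 0.02]]  # hypothetical prior covariance
    print(zupt_update(1.0, 0.2, P, 0.0001))
```

Because the position and velocity errors are correlated through the integration, the update corrects not only the velocity but also the position, which is why zero velocity updating limits the position drift.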

5. Experiments and Results

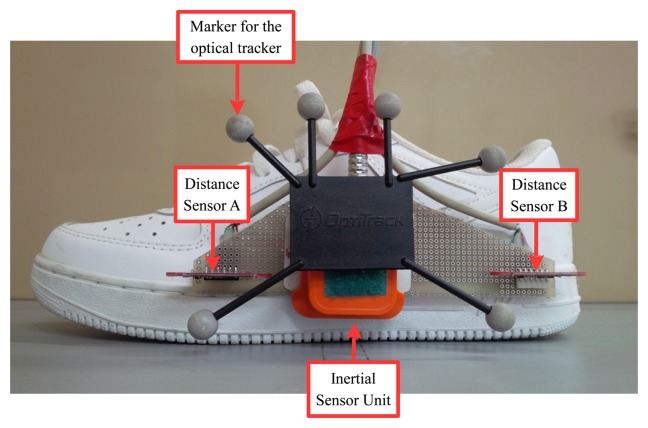

The proposed algorithm is tested with several foot movement experiments. The foot position is estimated using an inertial sensor unit (Xsens MTi) and two distance sensors (VL6180), as in Figure 2. The estimated positions are compared with the positions obtained using an optical motion tracker (Optitrack six Flex 13 camera system), which is considered the ground truth.

Figure 2. A shoe for the experiment.

The parameters used in the proposed algorithm are summarized in Table 1. All noises are assumed to be uncorrelated white Gaussian noises.

Table 1. Parameters in the proposed algorithm.

| Parameter | Value | Related Equations |
|---|---|---|
| initial value of r̂A | [ −0.008 0.080 0 ]′ | (1) |
| initial value of r̂B | [ −0.006 −0.062 0 ]′ | (1) |
| initial value of n̂A | [ 1 0 0 ]′ | (1) |
| initial value of n̂B | [ 1 0 0 ]′ | (1) |
| zAB | 0.142 | (2) |
|  | 0.00017 I3 | (5) |
|  | 0.0083 I3 | (5) |
| Pinit = initial value of E{xx′} | Pinit(2 : 3, 2 : 3) = 0.001 I2 (*), Pinit(6, 6) = 0.0001, Pinit(10 : 21, 10 : 21) = 0.000001 I12 (all unspecified elements are zero) | (7) |
|  | 0.0004 | (9) |
|  | 0.0004 | (9) |
|  | 0.00000001 I2 | (18) |
|  | 0.000001 | (20) |
|  | 0.0001 I3 | (23) |

(*) Pinit(2 : 3, 2 : 3) denotes the 2 × 2 submatrix consisting of the elements in the second and third rows and columns.

Four motions (walking, dancing steps, ball kicking, jumping) are tested. The estimated position is compared with two other inertial sensor-only pose estimations. In the first inertial sensor-only pose estimation, the same algorithm is used without the distance sensors: that is, Equations (14) and (15) are not used in the Kalman filter. We call this method “K.F. (zero velocity updating)”. In the second inertial sensor-only pose estimation, height updating is added to the first inertial sensor-only pose estimation. If we assume that the floor is flat, the foot height during the zero velocity intervals (that is, when a foot is on the ground) should be the same. Thus, in addition to zero velocity updating, the z axis value of r is updated to the initial z axis value during each zero velocity interval. We call this method “K.F. (zero velocity + height updating)”.

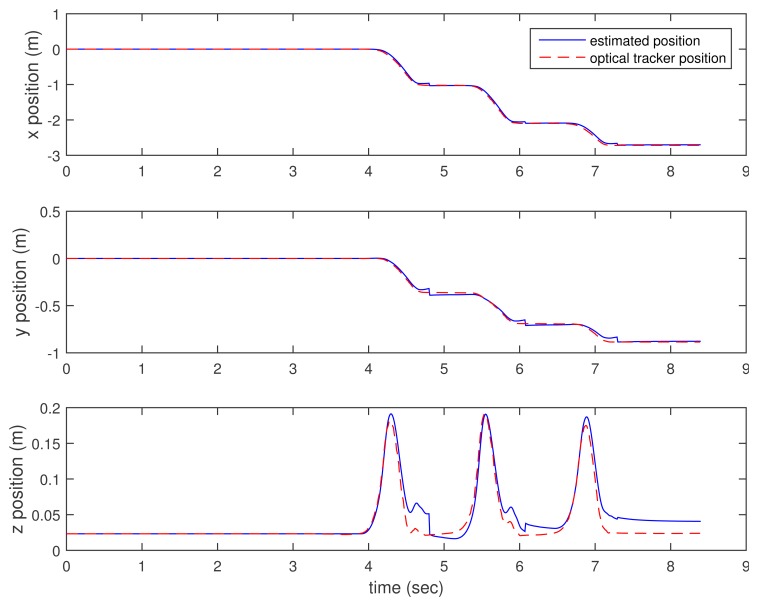

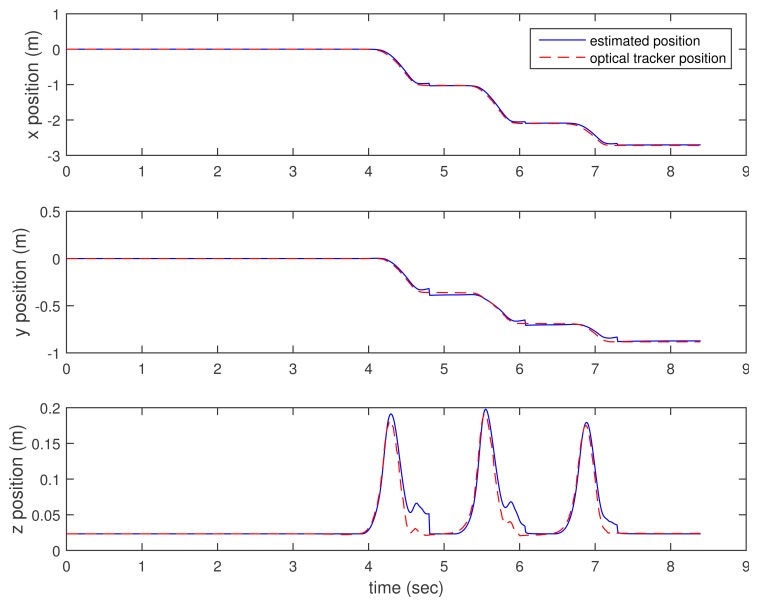

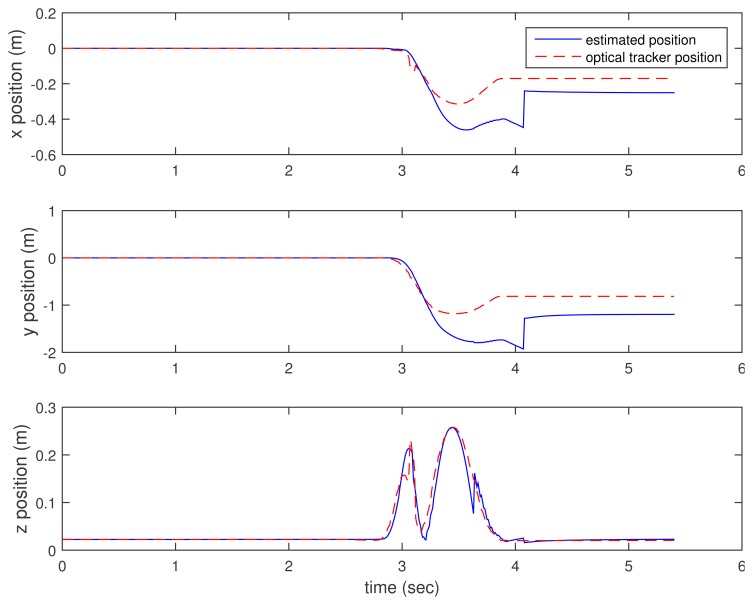

In Figures 3–5, the estimated positions (walking case) by three methods (the proposed method and the two inertial sensor-only methods) are given along with the position measured by an optical tracker. Since the optical tracker coordinate system is different from the world coordinate system, the position data are translated and rotated. Furthermore, the inertial sensor data and optical tracker data are not synchronized at the hardware level. The data are synchronized by maximizing the cross-correlation.
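Synchronization by cross-correlation can be sketched as a search for the sample lag that maximizes the cross-correlation between two equally sampled signals. The sketch below is a plain illustration under that assumption; which signals were correlated in the experiments is not specified here, and the function name is hypothetical. In practice, both signals would be resampled to a common rate and have their means removed first.

```python
def best_lag(reference, signal, max_lag):
    """Return the lag (in samples) maximizing sum_k reference[k] * signal[k + lag]."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for k, ref_value in enumerate(reference):
            j = k + lag
            if 0 <= j < len(signal):
                score += ref_value * signal[j]
        if score > best_score:
            best, best_score = lag, score
    return best


if __name__ == "__main__":
    reference = [0.0] * 30
    reference[10:15] = [1.0, 2.0, 3.0, 2.0, 1.0]
    delayed = [0.0] * 3 + reference[:-3]   # the same pulse, three samples later
    print(best_lag(reference, delayed, 5))
```

The returned lag is then used to shift one data stream relative to the other before the positions are compared.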

Figure 3. Walking position estimation: proposed method.

Figure 4. Walking position estimation: K.F. (zero velocity updating). K.F., Kalman filter.

Figure 5. Walking position estimation: K.F. (zero velocity + height updating).

We can see that the x and y axis position estimations are similar in all three methods. This is not surprising, since the distance sensor only gives the z axis position (height) information. In the z axis position estimation, the proposed method gives better results (see the third graphs of Figures 3–5 around 4.6, 6 and 7 s). Among the inertial sensor-only estimations, the Kalman filter (zero velocity + height updating) is better, since its height is compensated during the zero velocity intervals.

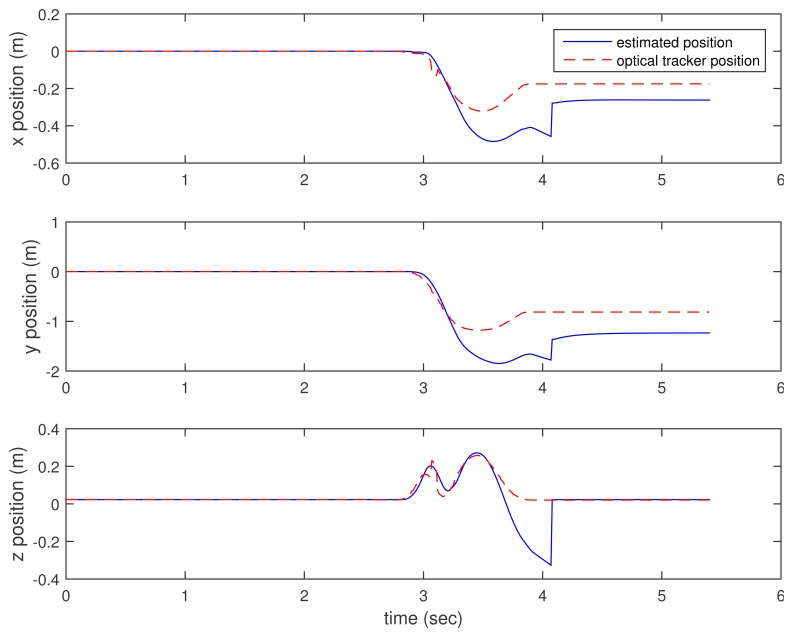

Another example is given in Figures 6 and 7, where a ball kicking action is performed. The x and y position estimation results of the proposed method and K.F. (zero velocity + height updating) are similar. In this case, the position errors are quite large, presumably because there is a rather long moving interval (from 2.8 to 4 s) and a quick movement (large sensor values). In the z axis position estimation, we can see that the proposed method gives a significantly better result.

Figure 6. Ball kicking estimation: proposed method.

Figure 7. Ball kicking estimation: K.F. (zero velocity + height updating).

To compare the results quantitatively, RMS (root mean square) position errors are computed. The x axis RMS position error is defined by:

$$ E_{RMS,x} = \sqrt{\frac{1}{N} \sum_{k=1}^{N} \left( \hat{r}_{x,k} - \hat{r}_{optical,x,k} \right)^2 } $$

where r̂x,k is the x axis estimated position at the discrete time k, r̂optical,x,k is the x axis position measured by the optical tracker and N is the total number of data. Similarly, we can define ERMS,y and ERMS,z.
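The RMS error of one axis can be computed as in the following sketch, which assumes the estimated and optical tracker positions have already been aligned and synchronized sample by sample. The function name and the sample values are hypothetical.

```python
import math


def rms_error(estimated, reference):
    """Root mean square of the differences between two equally long sequences."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = sum((e - r) ** 2 for e, r in zip(estimated, reference))
    return math.sqrt(squared / len(estimated))


if __name__ == "__main__":
    estimated_z = [0.00, 0.10, 0.20]   # hypothetical estimated heights (m)
    optical_z = [0.00, 0.10, 0.10]     # hypothetical optical tracker heights (m)
    print(rms_error(estimated_z, optical_z))
```

Applying the same function to the x, y and z axis data gives the three per-axis values.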

The RMS error results for four different activities are given in Table 2. In general, there is no significant improvement in the x and y axis position estimation.

Table 2. RMS position error comparison of different methods (unit: m).

| Motion Type | Estimation Method | ERMS,x | ERMS,y | ERMS,z | Sum |
|---|---|---|---|---|---|
| walking | proposed method | 0.0208 | 0.0118 | 0.0030 | 0.0356 |
| walking | K.F. (zero velocity) | 0.0243 | 0.0131 | 0.0121 | 0.0495 |
| walking | K.F. (zero velocity + height updating) | 0.0247 | 0.0130 | 0.0095 | 0.0472 |
| dancing steps | proposed method | 0.0231 | 0.0798 | 0.0084 | 0.1113 |
| dancing steps | K.F. (zero velocity) | 0.0265 | 0.0805 | 0.0397 | 0.1466 |
| dancing steps | K.F. (zero velocity + height updating) | 0.0269 | 0.0810 | 0.0266 | 0.1345 |
| ball kicking | proposed method | 0.0816 | 0.3513 | 0.0120 | 0.4449 |
| ball kicking | K.F. (zero velocity) | 0.0871 | 0.3551 | 0.0714 | 0.5136 |
| ball kicking | K.F. (zero velocity + height updating) | 0.0877 | 0.3583 | 0.0685 | 0.5145 |
| jumping | proposed method | 0.0529 | 0.0705 | 0.0188 | 0.1423 |
| jumping | K.F. (zero velocity) | 0.0630 | 0.0807 | 0.0313 | 0.1750 |
| jumping | K.F. (zero velocity + height updating) | 0.0637 | 0.0808 | 0.0244 | 0.1689 |

Once the calibration parameters are computed, the dimension of the Kalman filter state reduces from 21 to nine, since r̄A ∼ n̄B can be removed from the state. The proposed algorithm is used with this nine-state Kalman filter. In one Kalman filter, the initial calibration parameters in Table 1 are used. In another Kalman filter, the estimated calibration parameters in Equation (24) (which are supposed to be more accurate) are used. The position estimation results of the two nine-state Kalman filters are given in Table 3, where the result of the 21-state Kalman filter is repeated from Table 2 for easy comparison. The nine-state Kalman filter with calibrated parameters gives slightly better results, except for the kicking case. Since the difference between the initial and calibrated parameters is small, the RMS error difference is also small. The calibration of parameters is a one-time process, and after the calibration, we can estimate the position using the nine-state Kalman filter.

Table 3. RMS position error comparison of different calibration parameters (unit: m).

| Motion Type | Estimation Method | ERMS,x | ERMS,y | ERMS,z | Sum |
|---|---|---|---|---|---|
| walking | proposed method | 0.0208 | 0.0118 | 0.0030 | 0.0356 |
| walking | with fixed initial parameter | 0.0244 | 0.0119 | 0.0034 | 0.0397 |
| walking | with fixed estimated parameter | 0.0201 | 0.0119 | 0.0029 | 0.0350 |
| dancing steps | proposed method | 0.0231 | 0.0798 | 0.0084 | 0.1113 |
| dancing steps | with fixed initial parameter | 0.0362 | 0.0852 | 0.0089 | 0.1303 |
| dancing steps | with fixed estimated parameter | 0.0196 | 0.0745 | 0.0083 | 0.1024 |
| ball kicking | proposed method | 0.0816 | 0.3513 | 0.0120 | 0.4449 |
| ball kicking | with fixed initial parameter | 0.0764 | 0.3495 | 0.0120 | 0.4380 |
| ball kicking | with fixed estimated parameter | 0.0853 | 0.3506 | 0.0120 | 0.4479 |
| jumping | proposed method | 0.0529 | 0.0705 | 0.0188 | 0.1423 |
| jumping | with fixed initial parameter | 0.0630 | 0.0807 | 0.0313 | 0.1750 |
| jumping | with fixed estimated parameter | 0.0486 | 0.0655 | 0.0188 | 0.1329 |

In the walking data, the calibration parameters are estimated in the Kalman filter. The calibration parameters from walking data are given by:

| (24) |

6. Conclusions

There are many inertial sensor-based foot pose estimation algorithms. In this paper, the position estimation is further improved by adding distance sensors. The distance sensor has a 20-cm range limitation. However, this limitation does not degrade the estimation accuracy much, since a foot is above the 20-cm range only for a short time, unless the foot is held in the air intentionally. Longer-range sensors exist, but they are either less accurate at short ranges or more expensive.

The inertial motion estimation algorithm is proposed using a 21-state Kalman filter (nine states for pose tracking and 12 states for calibration parameters). After the parameters are calibrated, the nine-state Kalman filter can be used to estimate the foot pose.

The proposed algorithm is tested for four activities: walking, dancing steps, ball kicking and jumping. It is shown in Table 2 that there is significant improvement in the z axis position estimation compared with inertial sensor-only foot pose estimation. On the other hand, there is no improvement in the x and y axis, since the distance sensor only gives the height information.

The proposed algorithm can be used in gait analysis (which requires a foot pose estimation), motion-based gaming (such as a soccer game) and exergaming (game-based exercises).

Acknowledgements

This work was supported by the 2015 Research Fund of University of Ulsan.

Author Contributions

Young Soo Suh contributed to main algorithm design. Pham Duy Duong implemented the algorithm in Matlab and did all the experiments. Both authors wrote the paper together.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sabatini A.M., Martelloni C., Scapellato S., Cavallo F. Assessment of walking features from foot inertial sensing. IEEE Trans. Biomed. Eng. 2005;52:486–494. doi: 10.1109/TBME.2004.840727.

2. Tao W., Liu T., Zheng R., Feng H. Gait analysis using wearable sensors. Sensors. 2012;12:2255–2283. doi: 10.3390/s120202255.

3. Suh Y.S. Inertial sensor-based smoother for gait analysis. Sensors. 2014;14:24338–24357. doi: 10.3390/s141224338.

4. Maillot P., Perrot A., Hartley A., Do M.C. The braking force in walking: Age-related differences and improvement in older adults with exergame training. J. Aging Phys. Act. 2014;22:518–526. doi: 10.1123/japa.2013-0001.

5. Alshurafa N., Xu W., Liu J.J., Huang M.C., Mortazavi B., Roberts C.K., Sarrafzadeh M. Designing a robust activity recognition framework for health and exergaming using wearable sensors. IEEE J. Biomed. Health Inform. 2014;18:1636–1646. doi: 10.1109/JBHI.2013.2287504.

6. Foxlin E. Pedestrian tracking with shoe-mounted inertial sensors. IEEE Comput. Gr. Appl. 2005;25:38–46. doi: 10.1109/mcg.2005.140.

7. Titterton D.H., Weston J.L. Strapdown Inertial Navigation Technology. Peter Peregrinus Ltd.; London, UK: 1997.

8. Park S.K., Suh Y.S. A zero velocity detection algorithm using inertial sensors for pedestrian navigation systems. Sensors. 2010;10:9163–9178. doi: 10.3390/s101009163.

9. Xu Z., Wei J., Zhang B., Yang W. A robust method to detect zero velocity for improved 3D personal navigation using inertial sensors. Sensors. 2015;15:7708–7727. doi: 10.3390/s150407708.

10. Colomar D., Nilsson J., Handel P. Smoothing for ZUPT-aided INSs. Proceedings of 2012 International Conference on Indoor Positioning and Indoor Navigation; Sydney, Australia. 13–15 November 2012; pp. 1–5.

11. Bebek O., Suster M.A., Rajgopal S., Fu M.J., Xuemei H., Cauvusoglu M.C., Young D.J., Mehregany M., van den Bogert A.J., Mastrangelo C.H. Personal navigation via high-resolution gait-corrected inertial measurement units. IEEE Trans. Instrum. Meas. 2010;59:3018–3027.

12. Girard G., Cote S., Zlatanova S., Barette Y., St-Pierre J., Van Oosterom P. Indoor pedestrian navigation using foot-mounted IMU and portable ultrasound range sensors. Sensors. 2011;11:7606–7624. doi: 10.3390/s110807606.

13. Do T.N., Suh Y.S. Gait analysis using floor markers and inertial sensors. Sensors. 2012;12:1594–1611. doi: 10.3390/s120201594.

14. Hung T.N., Suh Y.S. Inertial sensor-based two feet motion tracking for gait analysis. Sensors. 2013;13:5614–5629. doi: 10.3390/s130505614.

15. STMicroelectronics. VL6180x (Proximity and Ambient Light Sensing (ALS) Module) Datasheet. [accessed on 6 June 2015]. Available online: http://www.st.com/web/en/resource/technical/document/datasheet/DM00112632.pdf.

16. Kuipers J.B. Quaternions and Rotation Sequences: A Primer with Applications to Orbits, Aerospace, and Virtual Reality. Princeton University Press; Princeton, NJ, USA: 1999.

17. Nam C.N.K., Kang H.J., Suh Y.S. Golf swing motion tracking using inertial sensors and a stereo camera. IEEE Trans. Instrum. Meas. 2014;63:943–952.

18. Savage P.G. Strapdown inertial navigation integration algorithm design Part 1: Attitude algorithms. J. Guid. Control Dyn. 1998;21:19–28.

19. Markley F.L. Multiplicative vs. additive filtering for spacecraft attitude determination. Proceedings of 6th Cranfield Conference on Dynamics and Control of Systems and Structures in Space; Baltimore, MD, USA. 8 September 2004; pp. 467–474.

20. Suh Y.S., Park S. Pedestrian inertial navigation with gait phase detection assisted zero velocity updating. Proceedings of the 4th International Conference on Autonomous Robots and Agents; Wellington, New Zealand. 2009. pp. 336–341.

21. Maley J.M. Multiplicative Quaternion Extended Kalman Filtering for Nonspinning Guided Projectiles. Army Research Laboratory, Adelphi; MD, USA: 2013.

22. Hawkinson W., Samanant P., McCroskey R., Ingvalson R., Kulkarni A., Haas L., English B. GLANSER: Geospatial location, accountability, and navigation system for emergency responders. Proceedings of Position Location and Navigation Symposium (PLANS); Myrtle Beach, SC, USA. 23–26 April 2012; pp. 98–105.

23. Skog I., Nilsson J.O., Handel P. Evaluation of zero-velocity detectors for foot-mounted inertial navigation systems. Proceedings of International Conference on Indoor Positioning and Indoor Navigation; Zurich, Switzerland. 15–17 September 2010; pp. 1–6.