Abstract

Objectives

In oral and other health research, participant literacy levels may impact the quality of data obtained through self-report (e.g., degree of data missingness). This study addressed whether computerized administration of a battery of psychosocial instruments used in an oral health disparities research protocol yielded more complete data than paper-and-pencil administration and aimed to determine the role of general literacy in differences in data missingness between administration types.

Design

Oral health data were obtained from 1,652 adolescent and adult participants who were administered a large questionnaire battery via either paper-and-pencil or tablet personal computer. Number of unanswered items for each participant was compared across administration mode. For a subset of 171 participants who were randomized to one of the administration modes, general literacy and satisfaction with the questionnaire experience also were assessed.

Results

Participants assigned to complete the oral health questionnaire battery via tablet PC were significantly more likely than those assigned to the paper-and-pencil condition to have missing data for at least one item (p < .001); however, for participants who had at least one missing item, paper-and-pencil administration was associated with a greater number of items missed than was tablet PC administration (p < .001). Across administration modes, participants with higher literacy level completed the questionnaire battery more rapidly than their lower literacy counterparts (p < .001). Participant satisfaction was similar for both modes of questionnaire administration (p ≥ .29).

Conclusions

These results suggest that a certain type of data missingness may be decreased through the use of a tablet computer for questionnaire administration.

Keywords: literacy, missing data, questionnaires, surveys, self-reports

INTRODUCTION

In oral health and other research that involves patient response, participant literacy influences data quality. Participant literacy may determine the degree to which questions are understood and answered in an accurate and valid fashion, if answered at all. Whether a person understands and responds to a query affects completeness and accuracy of data sets, regardless of mode of administration. Ultimately, oral health and other investigations that rely on participant oral or written responses are affected by literacy level.

General literacy is the ability to read, write, and comprehend (Hillerich, 1976) and is related to intelligence and also to level and quality of education, as formal and informal schooling directly influence capacity to read and write and the degree to which one is able to process and understand written language (Stanovich, 1993; Neisser et al., 1996). A respondent’s general literacy likely has a bearing on level of health literacy, a construct defined in Healthy People 2010 as “the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions,” (US Department of Health and Human Services, 2000) with similar definitions offered by others (World Health Organization, 1998; Peersen & Saunders, 2009; Lanning & Doyle, 2010). Lower health literacy is associated with negative health outcomes and also with reduced use of health care services (Berkman et al., 2001) and, in the case of oral health specifically (Horowitz & Kleinman, 2008), has been shown to be quite prevalent (Jones et al., 2007). The capacity to obtain, process, and understand health information frequently requires the ability to read written language, signs, or symbols and to comprehend health-related literature in various forms. Health literacy, then, is a prerequisite for accessing and utilizing health information and is grounded at least somewhat in general literacy.

In populations for whom access to standard and health education is limited or for whom the quality of such education is suboptimal, general literacy as well as oral and general health literacy may play an important role in the quality of collected research data. For example, the most current available data suggest that, in the Appalachian region of the United States of America, high school dropout rates are higher, and the percentage of individuals completing college is lower, relative to the national average (Appalachian Regional Commission, 2000); functional illiteracy also is more prevalent in Appalachia than in other regions (Crew, 1985). Education and literacy are, of course, critical in the improvement of public health (Woolf et al., 2007). The Appalachian sample utilized in the current study is an important one for studying oral health research data quality in the context of literacy for two reasons: there are oral health disparities, with oral diseases disproportionately affecting the population of this region (McNeil et al., 2012), and educational experiences vary widely, providing a broad range of general literacy across participants.

General literacy has important implications for dental research utilizing oral or written responses from participants. Low general literacy, for instance, may impair the ability of a research participant to read and comprehend questionnaire items, leading to inaccurate, invalid, or missing responses. Participants with lower degrees of general literacy may respond to researchers’ queries with reduced accuracy. Moreover, lower literacy may affect whether there is a response at all; with lower literacy, there may be increased occurrence of non-response, or conversely, greater “yea-saying” (i.e., acquiescence response set) on the part of the participant (Winkler et al., 1982; Foreit & Foreit, 2003).

An important aspect of sound data collection is garnering complete but accurate data for each participant. To obtain such data, participants ideally are able to respond, in some way, to all items of an instrument. A lack of response yields data missingness, which ultimately affects overall data quality and the conclusions that can be drawn from statistical analyses (Allison, 2009; Schafer & Graham, 2002). While there are statistical methods to deal with missing data, such as imputing derived scores, these procedures generally are inferior to actually obtaining the data first-hand (Little & Rubin, 2002). It has been suggested that the best way to deal with missing data is to avoid it altogether by preventing situations that may lead to missingness (Fleming, 2011), although allowing non-response as a choice is important for ethical reasons.

One way to circumvent the potential problems that low literacy levels may pose for psychosocial data collection in oral health and other research is to present questions at a reading level and/or in a format that is more accessible and easily understood by all participants, regardless of general literacy level. Presenting items in such a way can be done using live interviewer administration of items instead of paper-and-pencil administration, though it should be noted that interviewing presents its own challenges as a data collection modality. Technological adjuncts, such as computerized modes of administration, may take the place of a live interviewer. For example, a computer program that includes delivery of items in both visual and audio formats may serve as a substitute for a live interviewer. The barrier of low literacy to quality data collection potentially may be reduced with the use of such technology (Bowling, 2005).

The current study includes data that are part of a larger project involving interviews, questionnaires, oral health assessment, microbiological assessment, and collection of DNA (Polk et al., 2008). In comprehensive research protocols such as this one, the battery of self-report instruments often is onerous and a burden to participants, given the number of different instruments and number of items per scale. Participant literacy, energy/fatigue, and satisfaction/dissatisfaction with the protocol can influence accuracy of responding, willingness to participate with future assessments in longitudinal designs, and perception of the project in the larger social community. Understanding data quality as a function of factors such as literacy and satisfaction with study administration is important for any research involving self-report instruments or interviews.

The purpose of this study was to determine whether computerized administration of a battery of psychosocial instruments used in an oral health research protocol had an impact on completeness of data (or conversely, amount of missing data) compared to paper-and-pencil administration of the same battery of instruments. Further, the study was designed to elucidate the role of general literacy in differences in data missingness between questionnaire administration modes.

It was expected that a technological tool, a tablet-based personal computer (PC) with oral presentation of the items, would yield significant differences in data missingness, compared to paper-and-pencil administration of self-report questionnaires related to factors affecting oral health (i.e., fear and anxiety related to dental treatment; Hypothesis 1). Also anticipated were differences between administration formats and literacy level in response time and satisfaction with the delivery of questionnaires. It was hypothesized that time required for questionnaire battery completion would be lower for high-literacy participants and for participants assigned to the computerized administration mode (Hypothesis 2). Additionally, it was hypothesized that satisfaction ratings would be greater for high-literacy participants, compared to low-literacy participants, and for participants assigned to the computerized administration mode, compared to the paper-and-pencil administration mode (Hypothesis 3).

MATERIALS AND METHODS

Participants

Participants in this project were members of families in West Virginia and Pennsylvania who were enrolled in the Center for Oral Health Research in Appalachia (COHRA) study on determinants of individual, family, and community factors affecting oral diseases in Appalachia (Marazita et al., 2005). Questionnaire data were obtained from 1,652 participants (60% female; ages 11–94 [M = 28.7, SD = 12.5]); the questionnaire data from this total sample, as well as additional data from a subset of this total sample, are analyzed for this study. No study participants were excluded from analyses due to missing questionnaire data, given the experimental questions. Consistent with the demographics of the study sites (Polk et al., 2008), 88% of the sample identified themselves as Caucasian/White, 10% as African American, and 2% as other ethnic/racial groups. Participants’ average number of years of education was 12.5 (SD = 2.6). Data were collected with the understanding and written consent of each participant (or assent for children and adolescents under age 18, with parental consent) in accordance with the Declaration of Helsinki (version 2008), and with approval from the West Virginia University and University of Pittsburgh Institutional Review Boards.

Design and Apparatus

Participants were involved in one of three periods of data collection across a five-year time-span. Initially, and for a 12-month period, all questionnaires were administered in a paper-and-pencil format to participants age 11 and above (i.e., Period 1; n = 798). In response to participant and community feedback about fatigue from the time required for completion of questionnaires, a tablet PC format was developed and introduced. At the time that the computerized questionnaire administration was introduced, a study was initiated to assess the utility of paper-and-pencil versus tablet PC administration. For that 32-month period of time (i.e., Period 2), participants (n = 171) at one of the data collection sites were randomly assigned to either the paper-and-pencil or PC administration formats. Thereafter, in the third period of data collection for the COHRA project, all questionnaire administration was completed via tablet PC (n = 683). Each participant completed study questionnaires only once during the five-year study period; administration mode for individual participants therefore was dependent upon when the participant was enrolled in the study (during Period 1, Period 2, or Period 3). Data from across all three periods of data collection are analyzed for the current study.

Questionnaire Administration

Participants completed the 20-item Dental Fear Survey (Kleinknecht et al., 1973) and the 9-item Short Form – Fear of Pain Questionnaire (McNeil & Rainwater, 1998), along with a battery of 13 other health-related, self-report instruments with a combined total of over 300 items. The current study was completed using only these two (of the 15 total) self-report instruments because they were specifically related to factors affecting oral health (i.e., dental care-related fear and fear of pain); the other instruments in the battery measured demographic or general health information. The number of missing responses across these two instruments was calculated for each participant.
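The per-participant missingness count described above can be sketched in a few lines. This is a minimal illustration only, assuming that an unanswered or skipped item is stored as `None`; the function name `count_missing` and the data layout are hypothetical and are not taken from the study's data management procedures.

```python
from typing import Optional, Sequence

DFS_ITEMS = 20  # Dental Fear Survey items
FPQ_ITEMS = 9   # Short Form - Fear of Pain Questionnaire items


def count_missing(dfs: Sequence[Optional[int]],
                  fpq: Sequence[Optional[int]]) -> int:
    """Count unanswered items across the two instruments.

    Assumption: a missing or skipped response is represented as None.
    """
    if len(dfs) != DFS_ITEMS or len(fpq) != FPQ_ITEMS:
        raise ValueError("unexpected number of items for an instrument")
    return sum(response is None for response in list(dfs) + list(fpq))


# Hypothetical participant who skipped one DFS item and two FPQ items.
dfs_responses = [3] * DFS_ITEMS
dfs_responses[4] = None
fpq_responses = [2] * FPQ_ITEMS
fpq_responses[0] = None
fpq_responses[8] = None

n_missing = count_missing(dfs_responses, fpq_responses)
has_any_missing = n_missing > 0
```

The two derived values correspond to the two outcomes examined in the study: whether a participant had at least one missing item, and how many items were missing.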

Participants were assigned to one mode of questionnaire administration (paper-and-pencil n = 884, tablet PC n = 768). The paper-and-pencil and tablet PC administration groups did not differ significantly with regard to any demographic variables (e.g., gender, age, race, number of years of education, income), and the questionnaire battery was not different for the two administration modes. Participants assigned to the paper-and-pencil condition completed the questionnaire battery by filling in “bubbles” or by circling a number on the questionnaire form to indicate their response for each item. Research staff interacting with participants were instructed to review completed paper-and-pencil questionnaire packets, and to inquire of participants whether blank items were intentionally skipped. If an omission was accidental, the participant was invited to complete the missing item(s). If intentional, there was no further prompt to complete the item(s), consistent with Institutional Review Board guidelines.

The tablet PCs (Gateway Motion Computing Tablet M1300, Model: T002; 18.4 cm × 24.8 cm screen) were equipped with specialized visual and audio software, created for the project, that mimicked questions being posed by a live interviewer; participants, wearing headphones, watched and listened to a video of a research assistant reading questionnaire items and responded by touching the icon on the screen that best described their answer. For each of the items, an “opt-out” response was allowed, as directed by Institutional Review Board policy. That is, participants could choose to move to the next item of the battery by purposefully choosing an available “skip” response for a particular item. Specifically, if a participant selected the “next” button on the screen without specifying an answer, there would be an audio prompt and an accompanying written statement: “You have not selected an answer. If you would like to skip this question, tap on the ‘next’ button again.”

Assessment of General Literacy and Satisfaction with Questionnaire Battery

For those participants who completed the questionnaire battery during the second period of data collection when administration mode was randomly assigned via a sealed envelope system at one data collection site (paper-and-pencil condition n = 86, tablet PC condition n = 85), general literacy and satisfaction with the questionnaire battery were assessed. General literacy and satisfaction data were available only for these 171 participants. Power analysis suggests that, for all statistical tests reported below, the size of this subsample was appropriate, as observed power is adequate (i.e., greater than .80). This subsample was not significantly different from the remainder of the total study sample on any demographic or study outcome variables.

Literacy Assessment

General literacy was assessed with the Wide Range Achievement Test-4 (WRAT4) administered by trained research assistants (Wilkinson & Robertson, 2006). The WRAT4 is a norm-referenced assessment instrument that includes three subscales pertinent to general literacy: Word Reading, Sentence Comprehension, and Spelling. A Reading Composite score can be calculated (possible range: 55–145; mean: 100; standard deviation: 15) using the Word Reading and Sentence Comprehension subscale standard scores, with higher scores indicating greater reading ability (Wilkinson & Robertson, 2006). For this study, participants were divided into two groups based on Reading Composite scores. A “high-literacy” group (n = 86) included participants with a Reading Composite score greater than or equal to 90, the cut-off score that indicates at least average reading ability, and a “low-literacy” group (n = 85) included participants with a Reading Composite score less than or equal to 89, which indicates reading ability in the below average, low, or lower extreme ranges.
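The grouping rule above amounts to a single threshold on the Reading Composite score. The sketch below illustrates it under the stated cut-off of 90 and score range of 55–145; the function name `literacy_group` is hypothetical.

```python
LITERACY_CUTOFF = 90          # at least average reading ability
SCORE_MIN, SCORE_MAX = 55, 145  # possible Reading Composite range


def literacy_group(reading_composite: int) -> str:
    """Classify a WRAT4 Reading Composite score as 'high' or 'low' literacy."""
    if not SCORE_MIN <= reading_composite <= SCORE_MAX:
        raise ValueError("Reading Composite score outside the possible range")
    return "high" if reading_composite >= LITERACY_CUTOFF else "low"


groups = [literacy_group(score) for score in (89, 90, 100)]
```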

Assessment of Satisfaction

After finishing the questionnaire battery, participants completing the WRAT4 also responded to two measures of satisfaction with the questionnaire battery. The first measure, using nine 7-point Semantic Differential (Heise, 1970; Osgood et al., 1957) items, assessed satisfaction in three specific domains, with three items devoted to each: evaluative (e.g., positive versus negative), potency (e.g., interesting versus boring), and activity (e.g., fast versus slow). For each domain, item scores were totaled to produce a subscale score. The range of 3–21 was possible for each of the evaluative, potency, and activity subscale scores. In the second measure, overall satisfaction was measured using ten 5-point Likert-type items (e.g., “The questionnaires asked for important information,” “The questionnaires were too long”), five of which were reverse-scored, which were summed to yield a total score. A range of 10–50 was possible for overall satisfaction total scores. For all these measures, higher scores are indicative of a greater degree of satisfaction with the questionnaire battery.
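The scoring rules for the two satisfaction measures can be illustrated as follows. This is a sketch under assumptions: items are taken to be scored 1–7 (Semantic Differential) and 1–5 (Likert-type), which is what the stated ranges of 3–21 and 10–50 imply, and reverse-scoring is taken to mean replacing a rating x with 6 − x. Which five Likert-type items were reverse-scored is not stated in the text, so the positions in `HYPOTHETICAL_REVERSED` are placeholders.

```python
from typing import Sequence, Set

# Placeholder item positions (0-based); the actual reverse-scored items
# are not identified in the text.
HYPOTHETICAL_REVERSED: Set[int] = {1, 3, 5, 7, 9}


def subscale_score(ratings: Sequence[int]) -> int:
    """Sum three 7-point Semantic Differential items (possible range 3-21)."""
    if len(ratings) != 3 or any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("expected three ratings between 1 and 7")
    return sum(ratings)


def overall_satisfaction(ratings: Sequence[int],
                         reversed_items: Set[int] = HYPOTHETICAL_REVERSED) -> int:
    """Sum ten 5-point Likert-type items after reverse-scoring (range 10-50)."""
    if len(ratings) != 10 or any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("expected ten ratings between 1 and 5")
    return sum(6 - r if i in reversed_items else r
               for i, r in enumerate(ratings))


evaluative = subscale_score([5, 4, 6])
total = overall_satisfaction([5] * 10)
```

With every item rated 5, the five reverse-scored items each contribute 1 and the remaining five each contribute 5, so higher totals continue to indicate greater satisfaction.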

Statistical Analyses

In order to test the hypothesis that administration mode would be associated with data missingness (Hypothesis 1), chi-square and t-test analyses were utilized, examining data from across the entire sample. Follow-up regression analysis was utilized to determine predictors of data missingness, analyzing data from the entire sample as well as data from only the subsample for whom the general literacy assessment was completed. Analysis of variance (ANOVA) was employed to determine whether there were differences in completion time between administration modes, by literacy level (Hypothesis 2). Finally, differences between questionnaire administration modes, by literacy level, in overall satisfaction with the assessment battery were assessed using ANOVA (Hypothesis 3).

RESULTS

Data Missingness

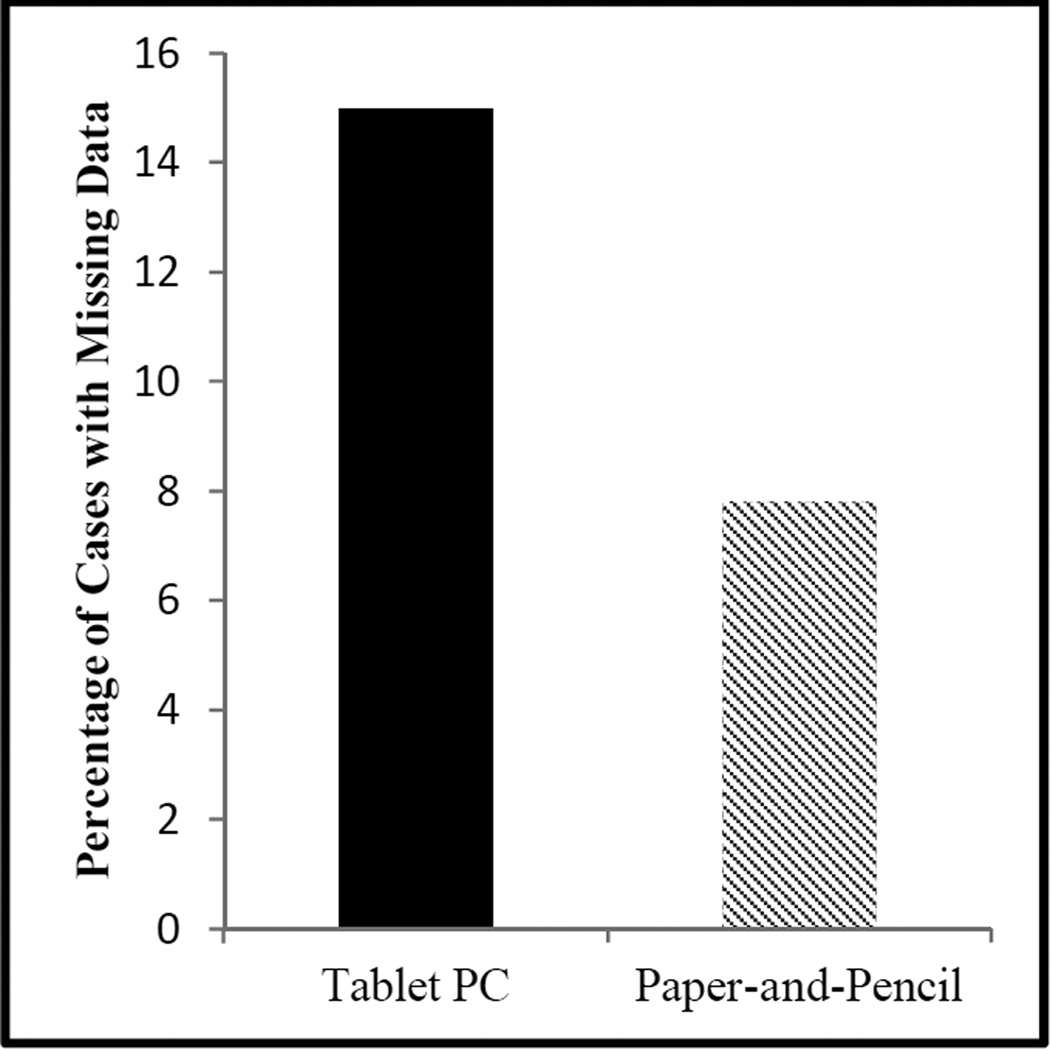

A technological tool did, in fact, yield differences in data missingness for self-report instruments, compared to paper-and-pencil administration, supporting Hypothesis 1. Across all three periods of the study (i.e., paper-and-pencil administration only, paper-and-pencil and tablet PC administration, and tablet PC administration only), participants assigned to complete the questionnaire battery via tablet PC were significantly more likely to have missing data for at least one item than were participants assigned to complete the questionnaire battery via paper-and-pencil, χ²(1) = 21.34, p < .001. Of the participants who completed the questionnaire battery via tablet PC, 15% had at least one item missing (i.e., 115 of 768 participants). Only 7.8% of participants who completed the questionnaire battery via paper-and-pencil had at least one item missing (i.e., 69 of 884 participants). Follow-up logistic regression analyses indicated that tablet PC administration mode was a significant predictor of at least one item being missing, Wald test = 17.84, p < .001; OR = 1.56–3.38, 95% CI. Increasing age also was a significant predictor of at least one missing item, Wald test = 4.70, p = .03; OR = 1.002–1.04, 95% CI, as was fewer years of education, Wald test = 6.55, p = .01; OR = .83–.98, 95% CI. Gender was not a significant predictor of at least one missing item.
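As a rough check on the comparison above, the chi-square statistic can be recomputed from the reported counts: 115 of 768 tablet PC participants and 69 paper-and-pencil participants with at least one missing item, using the paper-and-pencil group size of 884 given under Questionnaire Administration. The sketch below uses the standard Pearson formula for a 2 × 2 table without continuity correction; whether the original analysis applied a correction is not stated, so this is an assumption. The odds ratio shown is unadjusted, whereas the reported interval comes from a logistic regression with covariates, so the two are not directly equivalent.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p value for 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 df, the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Unadjusted odds ratio for the table [[a, b], [c, d]]."""
    return (a * d) / (b * c)


tablet_missing, tablet_n = 115, 768
paper_missing, paper_n = 69, 884

table = (tablet_missing, tablet_n - tablet_missing,
         paper_missing, paper_n - paper_missing)

chi2, p_value = chi_square_2x2(*table)
unadjusted_or = odds_ratio(*table)
print(f"chi-square(1) = {chi2:.2f}, p = {p_value:.2g}, OR = {unadjusted_or:.2f}")
```

Under these assumptions the statistic agrees with the reported value to two decimal places, and the unadjusted odds ratio falls inside the reported 95% confidence interval.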

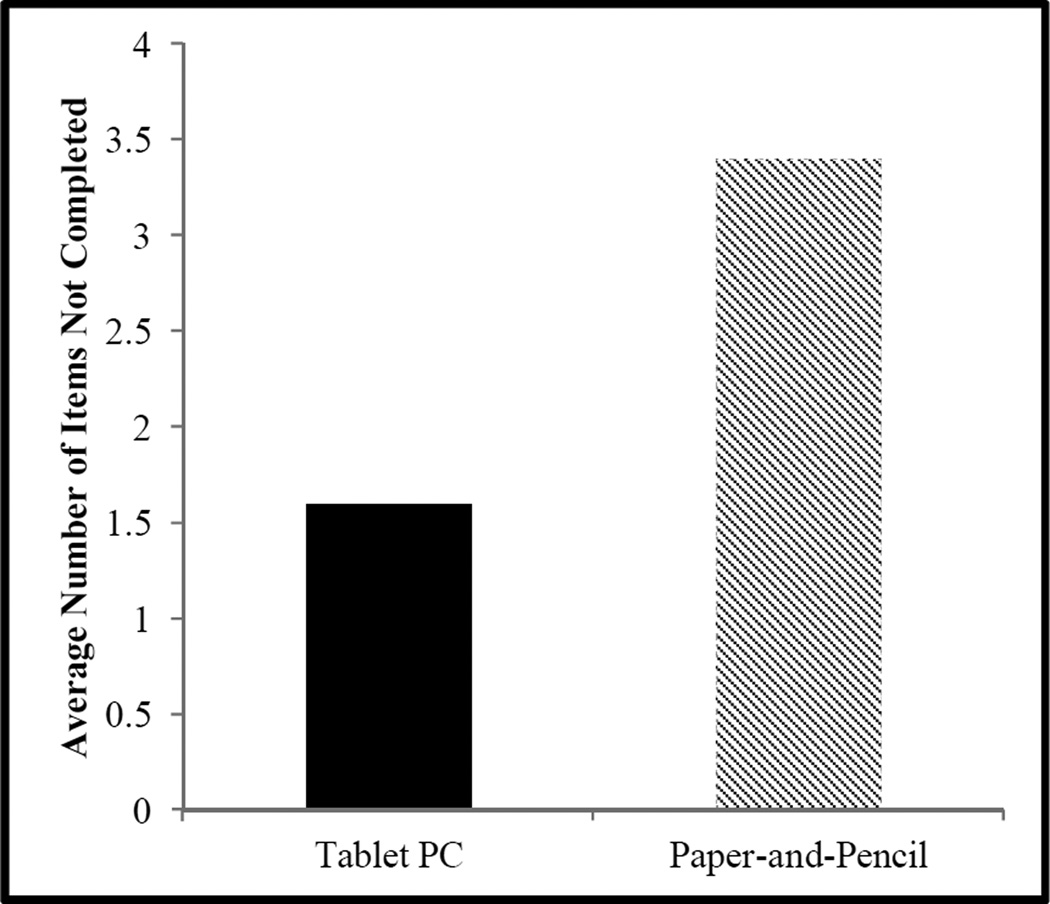

For participants who missed at least one questionnaire item, administration type was associated with degree of data “missingness.” In contrast to the finding for effect of administration mode on whether any items were missed, participants who completed the questionnaire battery via paper-and-pencil left significantly more items unanswered (M = 3.4, SD = 3.6) than those who completed the battery via tablet PC (M = 1.6, SD = 1.0), t(182) = 5.02, p < .001, even after controlling for age. That is, across the entire sample, those participants with any missing data were likely to have a greater number of missing items if questionnaire administration mode was paper-and-pencil. Follow-up hierarchical linear regression analysis indicated that even after controlling for age, gender, and number of years of education, paper-and-pencil questionnaire administration mode predicted a greater degree of missingness, β = .41, p < .001, OR = 1.45–3.41, 95% CI. Considering the subsample for whom general literacy data were available, follow-up hierarchical linear regression analysis indicated that paper-and-pencil questionnaire administration mode, but not age, gender, number of years of education, or literacy level, predicted a greater degree of missingness, β = .16, p = .045, OR = 1.002–1.34, 95% CI.
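The group comparison above can be approximated from the reported summary statistics alone. The sketch below computes a pooled-variance independent-samples t statistic, which is an assumption inferred from the reported 182 degrees of freedom (69 + 115 − 2); it does not include the adjustment for age mentioned in the text, and the group sizes are taken from the counts of participants with at least one missing item. Because the means and standard deviations are rounded, the result only approximates the reported value.

```python
import math


def pooled_t(mean1: float, sd1: float, n1: int,
             mean2: float, sd2: float, n2: int) -> tuple:
    """Pooled-variance t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / std_err, df


# Paper-and-pencil: M = 3.4, SD = 3.6, n = 69; tablet PC: M = 1.6, SD = 1.0, n = 115.
t_stat, df = pooled_t(3.4, 3.6, 69, 1.6, 1.0, 115)
print(f"t({df}) = {t_stat:.2f}")
```

Given the marked difference between the two standard deviations, an unequal-variance (Welch) test would be a reasonable sensitivity check, although it would yield different degrees of freedom than those reported.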

For paper-and-pencil questionnaire administration, participants classified as “high literacy” did not differ from participants classified as “low literacy” with regard to not responding to items, χ²(1) = 1.36, p = .24. This same finding was observed for tablet PC questionnaire administration, χ²(1) = 0.05, p = .82.

Completion Time and Participant Satisfaction

Hypothesis 2 was supported in part; high-literacy participants (M = 66.8 min, SD = 16.7) completed the battery more rapidly than low-literacy participants (M = 85.0 min, SD = 26.6), F(1, 170) = 25.9, p < .001, π = 0.99, even after controlling for age. Time for questionnaire battery completion, however, did not differ between the two modes of administration (tablet PC M = 77.2 min, SD = 22.0, paper-and-pencil M = 74.5 min, SD = 25.6), F(1, 170) = 0.25, p = .62. There was not a significant literacy level by questionnaire administration mode interaction effect on time for completion, F(1, 170) = .61, p = .44.

Hypothesis 3 also was supported in part; controlling for age, there was a significant effect of literacy level on degree of satisfaction, as measured by the overall satisfaction instrument, F(1, 170) = 9.5, p = .002, π = 0.87, but not a significant effect of administration mode, F(1, 170) = 1.3, p = .26, nor an interaction effect on overall satisfaction, F(1, 170) = .09, p = .76. High-literacy participants reported a greater degree of overall satisfaction (M = 34.4, SD = 5.6) than did low-literacy participants (M = 31.9, SD = 5.6). On the other hand, ratings of satisfaction for the two questionnaire administration types, as measured by the Semantic Differential instrument, were remarkably similar, with no differences detected between the tablet PC and paper-and-pencil formats in evaluation (tablet PC M = 10.4, SD = 3.6; paper-and-pencil M = 10.4, SD = 3.2), t(169) = .01, p = .99, potency (tablet PC M = 11.9, SD = 3.3; paper-and-pencil M = 11.7, SD = 2.9), t(169) = .29, p = .77, or activity (tablet PC M = 13.2, SD = 3.1; paper-and-pencil M = 13.3, SD = 2.7), t(169) = .37, p = .72.

DISCUSSION AND CONCLUSIONS

Incorporation of a technological tool (i.e., tablet PC) yields differences in data missingness compared to standard paper-and-pencil administration of questionnaires that are part of a large battery. Participants in this oral health study who completed self-report instruments using a tablet PC were more likely to not respond to at least one questionnaire item than were participants who completed the same instruments via standard paper-and-pencil format. Interestingly, though, for participants who did not respond to at least one item, administration of the questionnaires using paper-and-pencil was associated with a greater number of unanswered items than administration using the tablet PC. The finding that mode of battery administration impacts patterns of data missingness has implications for oral health research design, particularly when projects involve administering large questionnaire batteries. Researchers aiming to avoid missing data would be well served by utilizing technological aids for delivery of self-report instruments.

A bit surprising is the finding that participants who completed the questionnaires via tablet PC were more likely than those completing paper-and-pencil questionnaires to not answer at least one item. One might expect that, since the tablet PC is equipped with software that prompts participants for a response to each item, individuals in the tablet PC condition would have been less likely to “miss” any items. This observation can be explained by the clear written and auditory opt-out response choice made available to participants for each item. Participants who do not understand the item or who do not identify with any of the response options for a given item, for example, may choose the conscious opt-out alternative. Though not answering an item on the paper-and-pencil form is allowed, the option to do so is not conspicuously present. In general, some of the findings may be due to a difference in a conscious choice to skip an item (in the case of the tablet PC) versus inadvertently missing an item or a group of items (with the paper-and-pencil administration).

The data missingness associated with the tablet PC’s opt-out option may not be a problem from the researcher’s perspective. Providing participants with the alternative to responding to the item may improve the overall quality of the data that are collected. One can be more certain that collected data represent the responses of participants who understood items and believed that one of the response options was consistent with their experience. “Missing” data in this case may become a rich source of information about the participants and their behaviors or the self-report instrument that was administered.

Not surprising is the finding that, for those who did not answer at least one item, participants completing the questionnaire using the paper-and-pencil format missed or skipped more items than participants completing the questionnaire via tablet PC. Participants who were assigned to the standard paper-and-pencil condition were not monitored during questionnaire administration in a way comparable to the prompting that is possible with the tablet PC software. Therefore, not answering items, or even sections of items, is more likely.

There were no significant differences between administration types in time for survey battery completion or satisfaction with survey battery delivery. Literacy level, however, was an important factor impacting time for questionnaire completion and satisfaction with the survey. As one might expect, being of a higher literacy level allows a participant to more quickly read, comprehend, and respond to questionnaire items, ultimately making time for questionnaire battery completion shorter. It may be the case that these same individuals report a greater degree of satisfaction with the assessment protocol simply because it is easier to comprehend, think about, and answer the questionnaire items, or that they have greater fluency in working with written materials. The findings from this study highlight the importance of constructing self-report instruments and items at a level that can be understood at varying literacy levels.

It is surprising that participants did not report a greater degree of satisfaction with tablet PC administration of the questionnaire battery than with paper-and-pencil administration. One might assume that an interactive technological adjunct that encouraged involvement might be more likely to hold the interest of the participant. Perhaps this novelty factor was diminished as a result of the length of the questionnaire battery. Additionally, posing each question individually, as is done with the tablet PC mode of administration, might slow down some participants in their responding. This aspect of the technological tool may be perceived by participants as an obstruction, as it prevents rapid responding to items, although it may serve to enhance data quality by helping to reduce answering with a global response set.

These analyses are limited in that they rely on data gleaned from a larger project primarily designed to answer other research questions. That is, time of study enrollment/visit in part determined administration mode for some participants, rather than true random assignment (e.g., participants who were enrolled in the study and completed questionnaires during Period 1 automatically were “assigned” to the paper-and-pencil administration format and participants who were enrolled in the study and completed questionnaires during Period 3 automatically were “assigned” to the tablet PC administration mode). This aspect of study design introduces the potential confound of time. Secondly, the subset of participants who completed both the WRAT4, as a measure of literacy, and the satisfaction questionnaire, was small, and was assessed at only one data collection site. A larger subset of the study group likely would have provided a greater degree of variability in both data missingness and questionnaire administration satisfaction, possibly exposing differences between administration modes.

A third limitation may impact the generalizability of the current study. For this study protocol, research assistants were instructed to briefly glance over completed paper-and-pencil questionnaires and to ask if any missed items were intentionally skipped, offering an opportunity for participants to provide a response to those items. This procedure was utilized in order to make the two administration modes as comparable as possible, as the tablet PC administration mode prompted participants to provide a response to all items, even if the response was “skip.” In many research protocols, research assistants may explicitly be told to refrain from looking at participant responses on paper-and-pencil questionnaires in order to protect anonymity or confidentiality, or questionnaires may be mailed out and sent back, with no opportunity for review by a research assistant. In such protocols, the degree of data missingness for paper-and-pencil questionnaires thus may be greater than was observed in the present study. Completing missingness research similar to the current study with protocols that do not include the review of participants’ questionnaire packets represents a logical future direction.

A fourth important limitation is related to the way that data quality was measured. For this study, we measured only number of “missed” questionnaire items. In essence, the completeness of a data record was measured and used as an outcome variable. To improve this aspect of study design, a future study could address whether the quality of provided responses is affected by administration mode. That is, a future study might use measures of reliability and validity to assess whether one administration mode is more likely than the other to produce consistent and accurate responses to items, within participants. Additional studies also might seek to determine whether a similar pattern of missingness is observed across administration modes when surveys related to demographic or other information are utilized, as the current study focused only on questionnaires that tapped correlates of oral health care utilization (i.e., dental care-related fear and fear of pain).

The results of this study have important implications for researchers who use large batteries of written, self-report instruments as part of a study protocol. While the management of missing data is beyond the scope of this article, a literature exists on techniques for appropriately handling the unavoidable problem of data missingness (Schafer & Graham, 2002; Little & Rubin, 2002). Reducing this problem is an important goal for any research, and it is clear that mode of self-report instrument administration is a factor worthy of consideration during study design. Oral health and other researchers should consider whether a technological adjunct, like the tablet PC, might benefit the quality of data collected and, ultimately, the inferences that can be drawn from such data.

Figure 1. Comparison of overall data missingness across administration modes.

Figure 2. Average number of items not completed across administration modes, for participants with any missing data.

ACKNOWLEDGEMENTS

The authors express appreciation to the participating families in the COHRA project, as well as to Robert Blake, Linda Brown, Stella Chapman, the late Kim Clayton, Rebecca DeSensi, Hilda Heady, Bill Moughamer, Dr. Kathy Neiswanger, Dr. Leroy Utt, the collaborating health centers and hospitals, the GORGE Connection Rural Health Board, and the Rural Health Education Partnerships Program in West Virginia. This research was supported by grant R01 DE014899 from the National Institutes of Health/National Institute of Dental and Craniofacial Research (PI: Marazita) and by a Fulbright Senior Scholar fellowship to the second author.

REFERENCES

- Allison PD. The Sage handbook of quantitative methods in psychology. Thousand Oaks, CA: Sage Publications, Ltd.; 2009. Missing data; pp. 72–89.

- Appalachian Regional Commission. Education - High School and college completion rates. 2000 Available from: http://www.arc.gov/reports/custom_report.asp?REPORT_ID=18.

- Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Crotty K. Low health literacy and health outcomes: An updated systematic review. Ann Intern Med. 2011;155:97–107. doi: 10.7326/0003-4819-155-2-201107190-00005.

- Crew BK. Dropout and functional illiteracy rates in central Appalachia. Lexington, KY: Appalachian Center, University of Kentucky; 1985.

- Fleming TR. Addressing missing data in clinical trials. Ann Intern Med. 2011;154:113–117. doi: 10.7326/0003-4819-154-2-201101180-00010.

- Bowling A. Mode of questionnaire administration can have serious effects on data quality. J Public Health. 2005;27:281–291. doi: 10.1093/pubmed/fdi031.

- Foreit JR, Foreit KGF. The reliability and validity of willingness to pay surveys for reproductive health pricing decisions in developing countries. Health Policy. 2003;63:37–47. doi: 10.1016/s0168-8510(02)00039-8.

- Heise DR. Attitude measurement. Chicago: Rand McNally; 1970. The semantic differential and attitude research.

- Hillerich RL. Toward an assessable definition of literacy. Engl J. 1976;65:50–55.

- Horowitz AM, Kleinman DV. Oral health literacy: The new imperative to better oral health. Dent Clin N A. 2008;52:333–344. doi: 10.1016/j.cden.2007.12.001.

- Jones M, Lee JY, Rozier RG. Oral health literacy among patients seeking dental care. J Am Dent Assoc. 2007;138:1199–1208. doi: 10.14219/jada.archive.2007.0344.

- Kleinknecht R, Klepac R, Alexander LD. Origins and characteristics of fear of dentistry. J Am Dent Assoc. 1973;86:842–848. doi: 10.14219/jada.archive.1973.0165.

- Lanning BA, Doyle EI. Health literacy: Developing a practical framework for effective health communication. Am Med Writ Assoc J. 2010;25:155–161.

- Little RJA, Rubin DB. Statistical analysis with missing data. 2nd ed. New York: Wiley; 2002.

- Marazita M, Weyant R, Tarter R, et al. Family-based paradigm for investigators of oral health disparities. J Dent Res. 2005;85

- McNeil DW, Crout RJ, Marazita ML. Appalachian health and well-being. Lexington, KY: University Press of Kentucky; 2012. Oral health in Appalachia; pp. 275–294.

- McNeil DW, Rainwater AJ. Development of the Fear of Pain Questionnaire - III. J Behav Med. 1998;21:389–410. doi: 10.1023/a:1018782831217.

- Neisser U, Boodoo G, Bouchard TJ, et al. Intelligence: Knowns and unknowns. Am Psychol. 1996;51:77–101.

- Osgood CE, Suci G, Tannenbaum P. The measurement of meaning. Urbana, IL: University of Illinois Press; 1957.

- Peerson A, Saunders M. Health literacy revisited: What do we mean and why does it matter? Health Promot Int. 2009;24:285–296. doi: 10.1093/heapro/dap014.

- Polk DE, Weyant RJ, Crout RJ, et al. Study protocol of the Center for Oral Health Research in Appalachia (COHRA) etiology study. BMC Oral Health. 2008;8 doi: 10.1186/1472-6831-8-18.

- Schafer JL, Graham JW. Missing data: Our view of the state of the art. Psychol Methods. 2002;7:147–177.

- Stanovich KE. Does reading make you smarter? Literacy and the development of verbal intelligence. Adv Child Dev Behav. 1993;24:133–180. doi: 10.1016/s0065-2407(08)60302-x.

- US Department of Health and Human Services. Healthy People 2010: Understanding and Improving Health. 2nd ed. Washington, DC: U.S. Government Printing Office; 2000.

- Wilkinson GS, Robertson GJ. WRAT4: Wide Range Achievement Test Professional Manual. Lutz, FL: Psychological Assessment Resources; 2006.

- Winkler JD, Kanouse DE, Ware JE. Controlling for acquiescence response set in scale development. J Appl Psychol. 1982;67:555–561.

- Woolf SH, Johnson RE, Phillips RL, Philipsen M. Giving everyone the health of the educated: An examination of whether social change would save more lives than medical advances. Am J Public Health. 2007;97:679–683. doi: 10.2105/AJPH.2005.084848.

- World Health Organization. Health promotion glossary. 1998 Available from: http://whqlibdoc.who.int/hq/1998/WHO_HPR_HEP_98.1.pdf.