Abstract

Background

Capturing public opinion toward public health topics is important to ensure that services, policy, and research are aligned with the beliefs and priorities of the general public. A number of approaches can be used to capture public opinion.

Methods

We are conducting a program of work on the effectiveness and acceptability of health promoting financial incentive interventions. We have captured public opinion on financial incentive interventions using three methods: a systematic review, focus group study, and analysis of online user-generated comments to news media reports. In this short editorial-style piece, we compare and contrast our experiences with these three methods.

Results

Each of these methods had its advantages and disadvantages. Advantages included tailoring of the research question in the systematic review, probing of answers in the focus groups, and the ability to aggregate a large data set using online user-generated content. Disadvantages included the need to update systematic reviews, participants conforming to a dominant perspective in focus groups, and the inability to collect respondent characteristics when analyzing user-generated online content. That said, analysis of user-generated online content offered additional time and resource advantages, and we found that it elicited findings similar to those obtained via more traditional methods, such as systematic reviews and focus groups.

Conclusion

A number of methods for capturing public opinions on public health topics are available. Public health researchers, policy makers, and practitioners should choose methods appropriate to their aims. Analysis of user-generated online content, especially in the context of news media reports, may be a quicker and cheaper alternative to more traditional methods, without compromising on the breadth of opinions captured.

Keywords: incentives, health behavior, research methods, attitudes, thematic analysis, qualitative, quantitative

Background

Capturing public opinion on public health topics is important to gauge buy-in to political agendas (1), and to ensure that services meet the requirements of the public (2). By gauging public opinion, it is possible to identify wider community issues that might be missed if researchers or policy makers are the only constituencies involved in priority setting, intervention design, and delivery (3). Understanding public opinion allows research, policy, and practice to contribute to an informed evidence base that accounts for multiple stakeholder views (3). It has also been argued that the public have a moral right to be involved in health research that they have helped to fund and that may affect the health care and public health interventions they receive (4, 5).

We are conducting a program of research on the effectiveness and acceptability of health promoting financial incentives (HPFIs) (6–9). These are cash, or cash-like, rewards or penalties provided contingent on behavior change, or non-change. Although a body of work, including ours, has confirmed that HPFIs are effective in a range of contexts (9), less evidence is available on their acceptability, including to the public (9, 10).

A number of methodological options are available for capturing public opinion on public health topics such as HPFIs. We conducted a systematic review, a focus group study, and an analysis of user-generated online content. Here, we compare and contrast our experiences with, and results from, these three approaches to help inform those researching public health issues about how each method can help capture public opinion. All three studies have been reported in detail elsewhere (10–12); our intention here is not to describe their methods and results in detail, but to compare the pros and cons of the three methods.

Methods

Determining public opinion toward health promoting financial incentives using three different methods

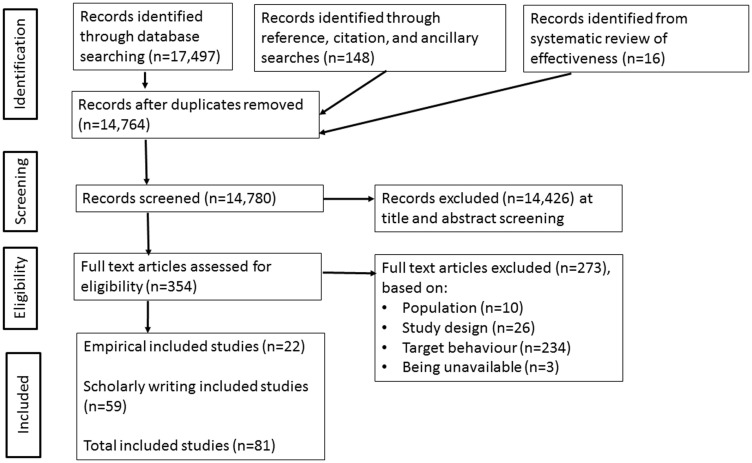

We conducted a systematic review of the acceptability of HPFIs (10). Unusually, we included both empirical studies and opinion pieces in this review, as we believed both provided useful information on the acceptability of HPFIs from a range of viewpoints (see Figure 1). We searched a range of databases, and the final review included 81 papers in total: 22 empirical studies and 59 opinion pieces. Methods and results are summarized in Table 1.

Figure 1. PRISMA flow diagram.

Table 1. Methods and results of three methodological approaches.

| | Focus groups | Systematic review | Online user-generated content |
|---|---|---|---|
| METHODS | | | |
| Main approach | Eight focus groups (n = 74) | Searching of databases from the earliest date to October 2014 | Analysis of 3,373 reader comments posted online in response to a news article on incentives |
| | Inclusion criteria: UK adults, aged 18+ years | Databases searched: Medline; Embase; Web of Knowledge; CINAHL; PsycINFO; ASSIA; Sociological Abstracts; Scopus; The Philosopher’s Index; Cochrane Library; SSCI; IBSS | News article topic: feasibility study of financial incentives for breastfeeding |
| | Method: face-to-face focus groups; audio-recorded; lasting on average 60 min | Inclusion criteria: English-language title; published in a peer-reviewed journal; explored acceptability of financial incentives for healthy behaviors; acceptability explored in the public, policy makers, potential recipients, and practitioners | Online news sites searched: BBC; Guardian; Daily Mail; Telegraph; Independent; The Sun |
| | Analytical approach: thematic analysis using NVivo 10 | Analytical approach: thematic analysis using NVivo 10 | Inclusion criteria: popular websites, defined as those achieving an average audience of at least five million unique viewers per month across laptop computers, desktop computers, and mobile devices in April and May 2013 |
| | | | Analytical approach: thematic analysis using NVivo 10 |
| RESULTS | | | |
| Main themes | The nature of fair exchange | Fair exchange | Children are a lifestyle choice |
| | Design and delivery of incentive schemes | Design and delivery | Financial incentives for breastfeeding are discriminatory and divisive |
| | Effectiveness and cost-effectiveness | Effectiveness and cost-effectiveness | Creating a culture of entitlement |
| | Recipients | Recipients | Financial incentives for breastfeeding are potentially insulting |
| | Impact on individuals and wider society | Impact on individuals and wider society | Psychological impacts on recipients |
| | “Other” issues | | Effectiveness and cost-effectiveness |
| | | | Generating initial motivation |
| | | | Design and delivery |
| | | | Informed choice |

Our focus group study (11) included 74 participants in eight groups, stratified by age and socio-economic group. The groups explored participants’ views on financial incentives for a range of different health behaviors. Data were analyzed using thematic analysis, and results are summarized in Table 1.

Finally, we conducted a thematic analysis of online user-generated content (12). This was inspired by “netnography” (13–15), a technique originally developed in marketing to capture consumer opinions toward products and services. The approach has been relatively underused in public health (16, 17), although there is growing recognition of the value of online media for exploring public health issues (17–21). This was an opportunistic piece of work arising from extensive UK coverage of a pilot study offering financial incentives for breastfeeding to mothers living in a deprived area (22). Online news coverage of the pilot generated a substantial number of reader comments. In total, 10 articles were identified from popular UK news websites, with over 3,000 reader comments posted. We uploaded these comments into NVivo software for thematic analysis (23). Results are summarized in Table 1.

Across all three methods, many of the main themes are comparable, suggesting that financial incentives, if they are to be accepted, need to be fair to all individuals, be effective and cost-effective, and be carefully designed so as not to increase inequalities or have a negative impact on recipients. The analysis of online user-generated content revealed some additional themes, such as children being a lifestyle choice and the importance of understanding the impact incentives have on intrinsic motivation for behaviors. This may be because this analysis focused on one behavior only, breastfeeding, whereas the focus groups and systematic review covered a range of healthy behaviors (e.g., smoking cessation and physical activity).

Comparing Experiences of Three Methods for Capturing Public Opinion

Table 2 summarizes what we felt were the pros and cons of the three methods discussed.

Table 2. A comparison of methodological approaches.

| Method | Advantages | Disadvantages |
|---|---|---|
| Systematic review | Allowed searching of an extensive range of data sources using an exhaustive search strategy (24) | Required multiple skill sets, e.g., an information scientist and a researcher (33) |
| | Allowed tailoring of the research question (25) | Limited guidance was available on how to critically appraise non-standard included papers (i.e., scholarly critique) (32, 34) |
| | Allowed aggregation of data (26–29) | May have excluded papers that were not indexed in the main databases (31, 35, 36) |
| | Allowed identification of what is and is not known on the topic | Requires updating to remain current (37) |
| | Allowed us to answer a question on acceptability where quantitative data may have been insufficient (30–32) | Narrative reviews are sometimes viewed as holding less weight (36, 38) |
| Focus groups | Allowed consensus to be achieved and outlying opinions to be identified (39) | Participants may have conformed to the majority view (41) |
| | Allowed probing of “new” issues | Costly in terms of human resources (42) |
| | Allowed adherence to best practice, given the wealth of guidance available (40) | Results may not be generalizable (although they could be transferable) (41, 43) |
| Thematic analysis of online content | Was a timely and inexpensive approach to data collection (17) | Raised ethical issues, such as privacy concerns (44) |
| | Established a wide range of opinions using a large sample size | Being removed from the commenting process meant that we could not probe individuals to elaborate on their comments |
| | Allowed for objectivity | The sample may not be representative of the general population (45), as user characteristics are often unavailable |

We found the thematic analysis of user-generated online content to be a timely and inexpensive method that allowed us to explore a wide range of public opinions in response to incentives for breastfeeding (17, 46). It was a quick process: comments were downloaded, “cleaned,” and made ready for analysis in a few hours, and the full analytical process took a matter of weeks. This compares with the much longer time needed to search and screen records for the systematic review, and to recruit participants and arrange and conduct the focus groups. The inclusion of more than 3,000 reader comments in the analysis of online content also provided a much larger sample than could reasonably have been achieved using traditional qualitative methods, or even a quantitative survey, in the same timescale (47). Whilst a larger sample is not necessarily an advantage in its own right in qualitative research, this volume of data gave us much greater confidence than in the focus group study that thematic saturation had been achieved.
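The download-and-clean step is straightforward to script. The Python sketch below is a minimal, hypothetical illustration, not the procedure we used: it assumes comments have already been exported as records with a site, an article identifier, a username, and the comment text (the `Comment` layout and the functions `clean_comments` and `pseudonymise` are our own illustrative names). It collapses whitespace, drops empty comments and verbatim re-posts, and replaces usernames with salted-hash pseudonyms. Pseudonymisation is only one step toward protecting commenters; verbatim quotes may still be traceable through a web search.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    """One downloaded reader comment (hypothetical record layout)."""
    site: str
    article_id: str
    username: str
    text: str


def pseudonymise(username: str, salt: str) -> str:
    """Map a username to a stable code using a salted SHA-256 hash (an assumption, not a standard)."""
    digest = hashlib.sha256((salt + username).encode("utf-8")).hexdigest()
    return "user_" + digest[:10]


def clean_comments(raw: list[Comment], salt: str) -> list[Comment]:
    """Normalise whitespace, drop empty and duplicate comments, and pseudonymise authors."""
    seen: set[tuple[str, str, str]] = set()
    cleaned: list[Comment] = []
    for c in raw:
        # Collapse runs of spaces, tabs, and newlines into single spaces.
        text = re.sub(r"\s+", " ", c.text).strip()
        if not text:
            continue  # skip deleted or empty comments
        key = (c.article_id, c.username, text)
        if key in seen:
            continue  # skip verbatim re-posts by the same user on the same article
        seen.add(key)
        cleaned.append(Comment(c.site, c.article_id, pseudonymise(c.username, salt), text))
    return cleaned
```

Because the same salt is used throughout, one commenter keeps the same pseudonym across articles, which preserves the ability to see whether a few individuals dominate a discussion. Keeping the salt out of any shared files means the pseudonyms cannot be reversed simply by hashing known usernames.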

However, the analysis of user-generated online content was not without its limitations. Using comments posted to online discussions for research purposes raises a number of ethical considerations (44). Because the comments were publicly available, and because the websites state in their privacy policies that site content can be used in other ways, we deemed it ethical to use the comments despite the lack of explicit informed consent from commenters. We sought permission from the news sites to use these comments for research purposes and abided by copyright guidelines (48). We also gained ethical approval from Newcastle University and upheld ethical best practice throughout, including adhering to data protection requirements, treating data confidentially, and ensuring participant anonymity. We spent much more time considering the ethical implications of the analysis of online content than for the focus group study, partly because standard guidance for this type of work is not available. Whilst far from commonplace, using online data for research is no longer particularly novel, and the research community needs to move faster in developing guidance and norms of ethical practice for these newer research contexts.

Unlike in the focus groups, we chose not to take part in or steer the online discussions, or to identify ourselves as non-participating observers to others commenting on the included articles. Instead, as others have done (49), we decided to remain anonymous and simply download posted comments. This ensured that we did not influence the discussion and provided an additional degree of objectivity to data collection compared with the focus groups (44, 50). However, it also meant that, unlike in the focus groups, we were unable to ask respondents to clarify what particular comments meant or why they had written them (44, 46).

Whilst systematic reviews aim to capture all extant research meeting particular criteria, and hence to be “representative” of the available research, individuals who comment on websites or take part in focus groups may not be representative of the wider population (47). They may, for instance, be socially similar to each other, or hold particularly strong views on a given topic (45). However, both of these are limitations of any opinion-based research (qualitative or quantitative) that participants must opt in to. Furthermore, individuals may be more truthful in the partially anonymous space of the internet than in a face-to-face setting (51, 52). One advantage of research methods in which individuals come into direct contact with researchers is that demographic questions can be asked, allowing conclusions about representativeness to be drawn. This was not possible in our analysis of online content.

In both the systematic review and the online study, we had to sift through data that were not directly relevant to the research. In both contexts, a balance between sensitivity and specificity was needed in identifying data relevant to our research aims, whilst interpreting the comments in a way that was true to the meaning intended by the commenters (53, 54). Arguably, the online study achieved this balance more effectively, because we could focus it from the outset by restricting inclusion to comments on articles of direct relevance to the research.

A final consideration relates to debriefing participants. Arguably, a systematic review publication is itself a summary of findings for “participating” authors. In focus group research, it is normal to explain the reason for the research to participants during recruitment, and to summarize discussions at the end of group interviews for immediate feedback. Participants may also be offered a fuller summary of all results at a later date. We considered something similar in our online study, perhaps by posting a summary of our results on the websites from which comments were downloaded. This would allow readers to remark on the findings and commenters to see our interpretation of their comments. We decided not to do this because we felt such a summary would be seen by only a very small minority of those who originally contributed to the research. Others have noted that debriefing participants is more complicated in internet-based fora than in traditional research (44), and further consideration of whether and how this should be done is required.

Originally, we expected that the analysis of user-generated online content would generate a different set of opinions from those captured in our systematic review and focus groups, because the online comments came from a different, perhaps more vocal, population discussing the particularly emotive topic of breastfeeding. However, in general, the results from our analysis of online reader comments reflected the findings of both the systematic review and the focus groups (see Table 1). Thus, we are confident that using online data and tools to determine public attitudes toward HPFIs (and perhaps other topics) accurately captures the range of extant public opinion.

Other researchers have reported similar findings. For example, in their thematic analysis of online reader comments on Canadian news coverage of the H1N1 vaccine, Henrich and Holmes (2011) found opinions similar to those previously identified through focus groups and surveys (18). This suggests that thematic analysis of reader comments on online news coverage may be an appropriate method for a variety of public health topics and contexts (19, 43, 55). However, it is worth remembering that sampling and response bias will occur when using an online sample (45, 56). To explore this bias, we looked for differences in comments according to the stance and publication site of different articles, but did not find any particular patterns. Such biases are hard to detect or eliminate but, as discussed above, these problems are not unique to analysis of online user-generated content.
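One simple way to look for such patterns, once comments have been coded during thematic analysis, is to compare the share of each theme across publication sites (or across article stances). The Python sketch below is illustrative only and is not the analysis reported in our study; it assumes each coded comment is available as a hypothetical `(group, theme)` pair, where the group is a site or stance label, and the function name `theme_shares_by_group` is our own.

```python
from collections import Counter, defaultdict


def theme_shares_by_group(coded: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Return, for each group (e.g., news site), the proportion of its coded comments per theme."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for group, theme in coded:
        counts[group][theme] += 1
    shares: dict[str, dict[str, float]] = {}
    for group, theme_counts in counts.items():
        total = sum(theme_counts.values())
        # Proportions rather than raw counts, because sites may attract very different volumes.
        shares[group] = {theme: n / total for theme, n in theme_counts.items()}
    return shares
```

Comparing proportions rather than raw counts matters here because a site with many more comments would otherwise appear to dominate every theme. Any apparent difference would still need to be interpreted qualitatively, since coded comments are not a random sample.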

Using analysis of user-generated online content more widely

Given the similarity of findings across the three methods, and the relative novelty of analyzing user-generated online content, we focus the remainder of our discussion on this method. Despite the benefits we feel it offers, analysis of user-generated online content is only a viable option if the topic of interest is covered online in a context that allows users to respond and discuss. In our example, we used reader responses to online news reports, so the method is restricted to issues covered in online news stories by outlets that allow reader comments. The approach could also be extended to user-generated content in other fora, although some more closed contexts (e.g., member-only discussion boards) may raise additional ethical issues. Where researchers wish to be proactive and gain public feedback on a particular issue, a press release may help stimulate news coverage and hence reader comments. In our case, the topic of financial incentives for breastfeeding was well covered by UK news sources.

Where a limited evidence base exists, analysis of user-generated online content could give researchers a preliminary grounding in a topic before they conduct further, more resource-intensive work. It has also been suggested that the number of comments posted in response to a news article indicates strength of feeling toward an issue (18). This could be useful to researchers, and also to policymakers who wish to gauge the strength of public opinion and reaction to a new policy or a change in policy, although, again, sampling bias must be borne in mind. This approach could help identify areas of divergence between the views of the public and policymakers, helping policymakers to design policy that is more likely to be accepted by the public (18). Indeed, it has been argued that “good public discourse is maximally polyvocal, and good public policy must incorporate, hence accommodate all agents, rather than representing a single interest” (52).

Analysis of user-generated online content could also indicate to policymakers where further public health information or education is required. This was an important finding from our work: we identified, for example, a public perception that insufficient information was available on the benefits of breastfeeding.

Furthermore, where issues are particularly sensitive, an internet-based (and anonymous) forum may help individuals feel more comfortable expressing their opinions. This could elicit more truthful opinions than would be gained in a face-to-face setting (52). It has also been argued that posting comments online can help build a sense of community amongst those participating, helping individuals to feel more confident when posting their comments (20, 57). Additionally, even when posted comments are potentially offensive, shocking, or left-field, they still enable researchers to capture a representation of the full range of “social and cultural phenomena” (52). Such breadth of opinion may be less likely to be captured in face-to-face settings, where social processes inhibiting unusual opinions may be more overt. The media can play a large role in the public’s responses to health issues (21), and so using responses to the media as a resource for gauging public opinion seems expedient.

Where resources are restricted, analysis of user-generated online content provides an opportunity for in-depth qualitative analysis, using accepted and rigorous analytical methods, at a fraction of the time and cost of more traditional approaches. Such analysis of online news comments may therefore be particularly useful to policymakers with limited resources who are exploring public attitudes to new and controversial interventions that have attracted media attention. Timely examples include minimum unit pricing of alcohol, e-cigarettes, and standardized cigarette packaging.

Finally, it is probably important that the online news sources from which reader comments are taken are viewed as credible, although credibility may be perceived very differently by different audiences. Credibility could be enhanced by balanced coverage and appropriate citation of evidence within news reports. Exploring, as we did, whether there are obvious differences in user responses according to the content of coverage can also make conclusions more trustworthy (18). Should researchers and policymakers wish to extend this method beyond topics covered in news stories to, say, public opinion on health advice, information, or content on other websites, they would have to consider the trustworthiness, design, and perceived credibility of those websites (19). These factors could all affect how many people view a website, whether they consider its information reputable, and whether they take the time to comment (19).

Conclusion

Whilst researchers should choose methodologies appropriate to their research questions, if a large sample, up-to-date public opinions (47), and a relatively robust data source are required, then analysis of online news content confers benefits similar to, and even additional to, those of other methodological approaches. Further empirical work is required to confirm the validity of this method, and its representativeness in terms of the range of opinions garnered, in a wider range of contexts (47). Greater debate on the ethical issues raised by this approach, and standard guidance for research in this context, are also warranted (58).

Author Contributions

EG conceived of, and drafted, the manuscript. JA revised the manuscript. All authors read and approved the final manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work is produced under the terms of a Career Development Fellowship research training fellowship issued by the NIHR to JA, grant number: CDF-2011-04-001. The views expressed are those of the authors and not necessarily those of the NHS, The National Institute for Health Research or the Department of Health. EG is funded in part, and FFS is funded in full by Fuse: the Centre for Translational Research in Public Health and JA is funded in part by The Centre for Diet and Activity Research (CEDAR). Fuse and CEDAR are UKCRC Public Health Research Centres of Excellence. Funding for Fuse and CEDAR from the British Heart Foundation, Cancer Research UK, Economic and Social Research Council, Medical Research Council, the National Institute for Health Research, under the auspices of the UK Clinical Research Collaboration, is gratefully acknowledged. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Hanson M, Gluckman P. Developmental origins of noncommunicable disease: population and public health implications. Am J Clin Nutr (2011) 94:1754S–8S. 10.3945/ajcn.110.001206 [DOI] [PubMed] [Google Scholar]

- 2.Mockford C, Staniszewska S, Griffiths F, Herron-Marx S. The impact of patient and public involvement on UK NHS health care: a systematic review. Int J Qual Health Care (2012) 24(1):28–38. 10.1093/intqhc/mzr066 [DOI] [PubMed] [Google Scholar]

- 3.Barber R, Boote JD, Parry GD, Cooper CL, Yeeles P, Cook S. Can the impact of public involvement on research be evaluated? A mixed methods study. Health Expect (2012) 15(3):229–41. 10.1111/j.1369-7625.2010.00660 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Boote J, Wong R, Booth A. ‘Talking the talk or walking the walk?’ A bibliometric review of the literature on public involvement in health research published between 1995 and 2009. Health Expect (2012) 18(1):44–57. 10.1111/hex.12007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Brett J, Staniszewska S, Mockford C, Herron-Marx S, Hughes J, Tysall C, et al. Mapping the impact of patient and public involvement on health and social care research: a systematic review. Health Expect (2012) 17(5):637–50. 10.1111/j.1369-7625.2012.00795 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Marteau TM, Ogilvie D, Roland M, Suhrcke M, Kelly MP. Judging nudging: can nudging improve population health? BMJ (2011) 342:d228. 10.1136/bmj.d228 [DOI] [PubMed] [Google Scholar]

- 7.Michie S, van Stralen M, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci (2011) 6(1):42. 10.1186/1748-5908-6-42 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Lynagh M, Sanson-Fisher R, Bonevski B. What’s good for the goose is good for the gander. Guiding principles for the use of financial incentives in health behaviour change. Int J Behav Med (2013) 20(1):114–20. 10.1007/s12529-011-9202-5 [DOI] [PubMed] [Google Scholar]

- 9.Giles EL, Robalino S, McColl E, Sniehotta FF, Adams J. The effectiveness of financial incentives for health behaviour change: systematic review and meta-analysis. PLoS One (2014) 9(3):e90347. 10.1371/journal.pone.0090347 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Giles EL, Robalino S, Sniehotta FF, Adams J, McColl E. Acceptability for financial incentives for encouraging uptake of healthy behaviours: a critical review using systematic methods. Prev Med (2015) 73:145–58. 10.1016/j.ypmed.2014.12.029 [DOI] [PubMed] [Google Scholar]

- 11.Giles EL, Sniehotta FF, McColl E, Adams J. Acceptability of financial incentives and penalties for encouraging uptake of healthy behaviours: focus groups. BMC Public Health (2015) 15:58. 10.1186/s12889-015-1409-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Giles EL, Holmes M, McColl E, Sniehotta FF, Adams J. Acceptability of financial incentives for breastfeeding: thematic analysis of reader’s comments to UK online news reports. BMC Pregnancy Childbirth (2015) 14:116. 10.1186/s12884-015-0549-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Kozinets RV. The field behind the screen: using netnography for marketing research in online communities. J Mark Res (2002) 39(1):61–72. 10.1509/jmkr.39.1.61.18935 [DOI] [Google Scholar]

- 14.Kozinets RV. Netnography: The Marketer’s Secret Weapon. How Social Media Understanding Drives Innovation. California: Netbase Solutions Inc. (2010). [Google Scholar]

- 15.Kozinets RV. Marketing netnography: prom/ot(ulgat)ing a new research method. Methodol Innovat Online (2012) 7(1):37–45. 10.4256/mio.2012.004 [DOI] [Google Scholar]

- 16.De Brún A, McCarthy M, McKenzie K, McGloin A. Weight stigma and narrative resistance evident in online discussions of obesity. Appetite (2014) 72(0):73–81. 10.1016/j.appet.2013.09.022 [DOI] [PubMed] [Google Scholar]

- 17.Kesten J, Cohn S, Ogilvie D. The contribution of media analysis to the evaluation of environmental interventions: the commuting and health in Cambridge study. BMC Public Health (2014) 14(1):482. 10.1186/1471-2458-14-482 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Henrich N, Holmes B. What the public was saying about the H1N1 vaccine: perceptions and issues discussed in on-line comments during the 2009 H1N1 pandemic. PLoS One (2011) 6(4):e18479. 10.1371/journal.pone.0018479 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Sillence E, Briggs P, Harris PR, Fishwick L. How do patients evaluate and make use of online health information? Soc Sci Med (2007) 64(9):1853–62. 10.1016/j.socscimed.2007.01.012 [DOI] [PubMed] [Google Scholar]

- 20.Freeman B. Tobacco plain packaging legislation: a content analysis of commentary posted on Australian online news. Tob Control (2011) 20(5):361–6. 10.1136/tc.2011.042986 [DOI] [PubMed] [Google Scholar]

- 21.Leask J, Hooker C, King C. Media coverage of health issues and how to work more effectively with journalists: a qualitative study. BMC Public Health (2010) 10(1):535. 10.1186/1471-2458-10-535 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Whelan B, Van Cleemput P, Strong M, Relton C. Views on the acceptability of financial incentives for breastfeeding: a qualitative study. Lancet (2013) 382:S103. 10.1016/S0140-6736(13)62528-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Langer R, Beckman SC. Sensitive research topics: netnography revisited. Qual Mark Res (2005) 8(2):189–203. 10.1108/13522750510592454 [DOI] [Google Scholar]

- 24.Lyman G, Kuderer N. The strengths and limitations of meta-analyses based on aggregate data. BMC Med Res Methodol (2005) 5(1):14. 10.1186/1471-2288-5-14 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Mulrow CD. Systematic reviews: rationale for systematic reviews. BMJ (1994) 3(309):597–9. 10.1136/bmj.309.6954.597 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Cook DJ, Mulrow CD, Haynes RB. Systematic reviews: synthesis of best evidence for clinical decisions. Ann Intern Med (1997) 126(5):376–80. 10.7326/0003-4819-126-5-199703010-00006 [DOI] [PubMed] [Google Scholar]

- 27.Smith V, Devane D, Begley C, Clarke M. Methodology in conducting a systematic review of systematic reviews of healthcare interventions. BMC Med Res Methodol (2011) 11(1):15. 10.1186/1471-2288-11-15 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Tugwell P, Petticrew M, Kristjansson E, Welch V, Ueffing E, Waters E, et al. Assessing equity in systematic reviews: realising the recommendations of the commission on social determinants of health. BMJ (2010) 341:c4739. 10.1136/bmj.c4739 [DOI] [PubMed] [Google Scholar]

- 29.Turner L, Boutron I, Hrobjartsson A, Altman D, Moher D. The evolution of assessing bias in cochrane systematic reviews of interventions: celebrating methodological contributions of the cochrane collaboration. Syst Rev (2013) 2(1):79. 10.1186/2046-4053-2-79 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Rycroft-Malone J, McCormack B, Hutchinson A, DeCorby K, Bucknall T, Kent B, et al. Realist synthesis: illustrating the method for implementation research. Implement Sci (2012) 7(1):33. 10.1186/1748-5908-7-33

- 31.Mays N, Pope C, Popay J. Systematically reviewing qualitative and quantitative evidence to inform management and policy-making in the health field. J Health Serv Res Policy (2005) 10(Suppl 1):6–20. 10.1258/1355819054308576

- 32.Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review – a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy (2005) 10(Suppl 1):21–34. 10.1258/1355819054308530

- 33.Li T, Vedula SS, Scherer R, Dickersin K. What comparative effectiveness research is needed? A framework for using guidelines and systematic reviews to identify evidence gaps and research priorities. Ann Intern Med (2012) 156(5):367–77. 10.7326/0003-4819-156-5-201203060-00009

- 34.Pawson R. Evidence-based policy: the promise of ‘realist synthesis’. Evaluation (2002) 8(3):340–58. 10.1177/135638902401462448

- 35.Dickersin K, Scherer R, Lefebvre C. Identifying relevant studies for systematic reviews. BMJ (1994) 309(6964):1286–91. 10.1136/bmj.309.6964.1286

- 36.Yuan Y, Hunt RH. Systematic reviews: the good, the bad, and the ugly. Am J Gastroenterol (2009) 104(5):1086–92. 10.1038/ajg.2009.118

- 37.Chalmers I. The Cochrane Collaboration: preparing, maintaining, and disseminating systematic reviews of the effects of health care. Ann N Y Acad Sci (1993) 703(1):156–65. 10.1111/j.1749-6632.1993.tb26345.x

- 38.Shojania KG, Sampson M, Ansari MT, Ji J, Doucette S, Moher D. How quickly do systematic reviews go out of date? A survival analysis. Ann Intern Med (2007) 147(4):224–33. 10.7326/0003-4819-147-4-200708210-00179

- 39.Bryman A. Social Research Methods. Oxford: Oxford University Press; (2012).

- 40.Braun V, Clarke V. Successful Qualitative Research: A Practical Guide for Beginners. London: SAGE Publications; (2013).

- 41.Thomas E, Magilvy JK. Qualitative rigor or research validity in qualitative research. J Spec Pediatr Nurs (2011) 16(2):151–5. 10.1111/j.1744-6155.2011.00283.x

- 42.Ritchie J, Lewis J, Nicholls CMN, Ormston R. Qualitative Research Practice: A Guide for Social Science Students and Researchers. London: Sage Publications; (2013).

- 43.Tracy SJ. Qualitative quality: eight “big-tent” criteria for excellent qualitative research. Qual Inquiry (2010) 16(10):837–51. 10.1177/1077800410383121

- 44.Kraut R, Olson J, Banaji M, Bruckman A, Cohen J, Couper M. Psychological research online. Report of Board of Scientific Affairs’ Advisory Group on the conduct of research on the internet. Am Psychol (2004) 59(2):105–17. 10.1037/0003-066X.59.2.105

- 45.Hermida A, Thurman N. A clash of cultures. J Pract (2008) 2(3):343–56. 10.1080/17512780802054538

- 46.Poria Y, Oppewal H. A new medium for data collection: online news discussions. Int J Contemp Hosp Manag (2003) 15(4):232–6. 10.1108/09596110310475694

- 47.Rowe G, Hawkes G, Houghton J. Initial UK public reaction to avian influenza: analysis of opinions posted on the BBC website. Health Risk Soc (2008) 10(4):361–84. 10.1080/13698570802166456

- 48.Intellectual Property Office. Exceptions to copyright: research. Newport: (2014). Available from: https://www.gov.uk/exceptions-to-copyright

- 49.De Brun A, McCarthy M, McKenzie K, McGloin A. “Fat is your fault”. Gatekeepers to health, attributions of responsibility and the portrayal of gender in the Irish media representation of obesity. Appetite (2013) 62:17–26. 10.1016/j.appet.2012.11.005

- 50.Nosek BA, Banaji MR, Greenwald AG. E-Research: ethics, security, design, and control in psychological research on the internet. J Soc Issues (2002) 58(1):161–76. 10.1111/1540-4560.00254

- 51.Mathieu E, Barratt A, Carter S, Jamtvedt G. Internet trials: participant experiences and perspectives. BMC Med Res Methodol (2012) 12(1):162. 10.1186/1471-2288-12-162

- 52.Reader B. Free press vs. free speech? The rhetoric of “civility” in regard to anonymous online comments. J Mass Commun Q (2012) 89(3):495–513. 10.1177/1077699012447923

- 53.Merriam SB. Qualitative Research: A Guide to Design and Implementation. San Francisco: Wiley; (2014).

- 54.Willig C. Introducing Qualitative Research in Psychology. Maidenhead: McGraw-Hill Education; (2013).

- 55.Lugosi P, Janta H, Watson P. Investigative management and consumer research on the internet. Int J Contemp Hosp Manag (2011) 24(6):838–54. 10.1097/NCM.0b013e3181badd9f

- 56.Kozinets RV. On netnography: initial reflections on consumer research investigations of cyberculture. Adv Consum Res (1998) 25:366–71.

- 57.Hlavach L, Freivogel WH. Ethical implications of anonymous comments posted to online news stories. J Mass Media Ethics (2011) 26(1):21–37. 10.1080/08900523.2011.525190

- 58.McKee R. Ethical issues in using social media for health and health care research. Health Policy (2013) 110(2):298–301. 10.1016/j.healthpol.2013.02.006