Abstract

The planted (l, d) motif search (PMS) is a fundamental problem in bioinformatics that plays an important role in locating transcription factor binding sites (TFBSs) in DNA sequences. Identifying weak motifs and reducing the effect of local optima remain important but challenging tasks in motif discovery. To address them, we propose a new algorithm, APMotif, which first applies Affinity Propagation (AP) clustering to DNA sequences to produce informative candidate motifs and then employs Expectation Maximization (EM) refinement to obtain the optimal motifs from the candidates. Experimental results on both simulated and real biological data sets show that APMotif usually outperforms four other widely used algorithms in prediction accuracy.

1. Introduction

Transcription factor binding sites (TFBSs) are short, conserved nucleotide fragments (usually ≤ 30 bps) in the cis-regulatory regions of genes in DNA sequences. They interact with transcription factors (TFs) and affect gene expression. Identification of TFBSs, that is, motif discovery [1], is a fundamental problem because of its importance for understanding the structure and function of gene expression.

In this paper, we focus on the planted (l, d) motif search (PMS) problem [2], a widely accepted formulation of the motif discovery problem. Given a set of t input DNA sequences X = {X 1, X 2,…, X t}, each of length n, and two nonnegative integers l and d, the aim of PMS is to find an l-mer M (a string of length l) that occurs in each of the t sequences with up to d mutations. The l-mer M is called an (l, d) motif, and each occurrence of M with up to d mutations is called a motif instance.
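The PMS definition can be illustrated with a brute-force check. The following is a minimal sketch, not part of APMotif; the function names `hamming`, `lmers`, and `is_planted_motif` and the toy sequences are hypothetical.

```python
def hamming(a, b):
    """Number of positions at which two equal-length strings differ."""
    if len(a) != len(b):
        raise ValueError("l-mers must have the same length")
    return sum(ca != cb for ca, cb in zip(a, b))


def lmers(seq, l):
    """Yield every l-mer of seq, from left to right."""
    for j in range(len(seq) - l + 1):
        yield seq[j:j + l]


def is_planted_motif(m, seqs, d):
    """True if m occurs in every sequence with at most d mismatches."""
    l = len(m)
    return all(any(hamming(m, x) <= d for x in lmers(s, l)) for s in seqs)


# Toy input: "ACGT" occurs exactly in the first sequence and with one
# mismatch in the other two ("ACCT" and "ACGA").
toy_seqs = ["TTACGTAA", "GGACCTCC", "ACGAGGGG"]
print(is_planted_motif("ACGT", toy_seqs, d=1))
print(is_planted_motif("ACGT", toy_seqs, d=0))
```

With d = 1 the check succeeds, while with d = 0 it fails because the second and third sequences contain no exact copy of the motif.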

Existing algorithms for the PMS problem fall into two main categories. The first is exact algorithms, most of which use consensus sequences [3] to represent motifs and are guaranteed to find the optimal motif. Recent research on exact algorithms concentrates mainly on pattern-driven algorithms, which take all strings of length l as candidate motifs and report as motifs those that occur in all input sequences with up to d mutations. Typical pattern-driven algorithms use various means to reduce time complexity [4–10]. PairMotif [4] selects multiple pairs of l-mers with relatively large distance from the input sequences to restrict the search space. Compared with recently proposed algorithms, PairMotif requires less storage space and runs faster on most PMS problems. PMS5 [7] computes the common d-neighbors of three l-mers using an integer programming formulation and is efficient on the difficult PMS instances (21, 8) and (23, 9). Some other pattern-driven algorithms index the input sequences with a suffix tree to speed up the search for candidate motifs [11–14]. RISOTTO [11] is the fastest of the suffix tree algorithms for the PMS problem and can solve the instance (15, 5) in 100 minutes. The initial search space of pattern-driven algorithms is O(4^l). Pattern-driven algorithms are therefore feasible for small motif lengths (l ≤ 20), but their running time and space requirements become high as the motif length increases.

The other category is approximate algorithms, which commonly use position weight matrices (PWMs) [15] to represent motifs. They can report results in a short time but often get trapped in locally optimal solutions. Most approximate algorithms use statistical analysis to maximize a score function that measures how likely a subsequence of an input sequence is to be a motif instance [16–23]. MEME [18] and Gibbs sampling [20] are well-known approximate algorithms. MEME finds motifs by optimizing the PWMs with Expectation Maximization (EM). Extensions of MEME include Projection [21] and MCEMDA [22]. Projection hashes all l-mers from the input sequences into many buckets and then derives consensus sequences to select valid buckets; after this initialization step, the EM algorithm is used for refinement. MCEMDA modifies the EM algorithm so that the expectation in the E-step is computed numerically through Monte Carlo simulation. Gibbs sampling is a Markov Chain Monte Carlo (MCMC) approach, and several modifications of it have been described [24, 25]. One that stands out is AlignACE [25], a Gibbs sampling algorithm for identifying overrepresented motifs in a set of DNA sequences. Furthermore, some graph-theoretic methods based on either clustering or heuristic search have been introduced in the field of motif discovery [26–28]. CRMD [26] uses entropy-based clustering to find good starting candidate motifs from the input sequences and then employs a greedy refinement to search for optimal motifs among the candidates. VINE [28] is a graph clustering algorithm that discovers motifs by finding t-cliques in a t-graph in polynomial time. In general, approximate algorithms run quickly and consume little memory, but they sometimes fail to converge to the global optimum.

In this paper, we propose a new algorithm, APMotif, to solve the motif discovery problem. APMotif first applies Affinity Propagation (AP) [29] clustering to DNA sequences to find highly conserved candidate motifs. It then employs an effective EM refinement to search for optimal motifs among the candidates. Experimental results show that APMotif has competitive prediction accuracy compared with previously developed algorithms.

2. Materials and Method

Here, we first briefly describe the original Affinity Propagation clustering and Expectation Maximization algorithms used in the remainder of the paper. We then construct the similarity matrix for motif discovery. Finally, we describe the APMotif algorithm.

2.1. Affinity Propagation (AP)

Compared with other clustering approaches, AP clustering is an effective and fast clustering algorithm, especially for large data sets. Given a set of data points X = {X 1, X 2,…, X t}, AP clustering takes as input a collection of real valued similarities s(i, k) between the pairs X i and X k, i, k ∈ {1,2,…, t}. According to the similarities between data points, AP clustering recursively calculates two types of messages: the responsibility r(i, k), reflecting the suitability of point X k as the exemplar for point X i, and the availability a(i, k), indicating how appropriate it would be for point X i to choose point X k as its exemplar:

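$$r(i,k) \leftarrow s(i,k) - \max_{k' \neq k} \{ a(i,k') + s(i,k') \}, \qquad a(i,k) \leftarrow \min\Bigl\{ 0,\; r(k,k) + \sum_{i' \notin \{i,k\}} \max\{0, r(i',k)\} \Bigr\} \tag{1}$$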

Upon convergence, AP clustering selects a subset of the data points as exemplars and assigns every nonexemplar point to exactly one exemplar. The exemplar e(i) = X k associated with point X i is finally defined as follows:

$$k = \arg\max_{k'} \{ a(i,k') + r(i,k') \} \tag{2}$$

AP clustering terminates when the exemplars remain unchanged for a user-set number of iterations.
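To make the message passing concrete, the sketch below runs a single round of responsibility and availability updates on a tiny similarity matrix. It assumes the standard update rules of Frey and Dueck [29], including the self-availability update a(k, k), and omits damping and the convergence test; the function names and the 2 × 2 matrix are hypothetical.

```python
NEG_INF = float("-inf")


def update_responsibility(s, a):
    """r(i,k) = s(i,k) - max over k' != k of [a(i,k') + s(i,k')]."""
    n = len(s)
    r = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for k in range(n):
            rival = max((a[i][kk] + s[i][kk] for kk in range(n) if kk != k),
                        default=NEG_INF)
            r[i][k] = s[i][k] - rival
    return r


def update_availability(r):
    """a(i,k) = min(0, r(k,k) + sum of positive r(i',k) over i' not in {i,k});
    the self-availability a(k,k) is the sum of positive r(i',k) over i' != k."""
    n = len(r)
    a = [[0.0] * n for _ in range(n)]
    for k in range(n):
        pos = [max(0.0, r[i][k]) for i in range(n)]
        for i in range(n):
            if i == k:
                a[k][k] = sum(pos[ii] for ii in range(n) if ii != k)
            else:
                others = sum(pos[ii] for ii in range(n) if ii not in (i, k))
                a[i][k] = min(0.0, r[k][k] + others)
    return a


def exemplars(r, a):
    """For each point i, the index k maximizing a(i,k) + r(i,k)."""
    n = len(r)
    return [max(range(n), key=lambda k: a[i][k] + r[i][k]) for i in range(n)]


# Two points; the diagonal entries s(k,k) act as preferences.
s = [[-1.0, -2.0],
     [-3.0, -1.0]]
a0 = [[0.0, 0.0], [0.0, 0.0]]
r1 = update_responsibility(s, a0)
a1 = update_availability(r1)
print(r1, a1, exemplars(r1, a1))
```

In practice the two updates are repeated, usually with damping, until the exemplar assignment stops changing.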

2.2. Expectation Maximization (EM)

For EM algorithm, given the DNA sequences X = {X 1, X 2,…, X t}, each sequence consists of two components which model the motif and nonmotif (“background”) positions in the sequence. The starting positions of the motif in each sequence are unknown and represented by the variables (“missing data”) Z = {Z i,j∣1 ≤ i ≤ t, 1 ≤ j ≤ n − l + 1}, where Z i,j = 1 if a motif starts at position j in the sequence X i, and Z i,j = 0 otherwise.

EM algorithm attempts to maximize the expectation of the logarithm of the joint likelihood of the model.

The main procedure of EM algorithm repeats iteratively the following two steps:

| (3) |

| (4) |

In (4), the logarithm of the joint likelihood of the model is defined as follows:

| (5) |

where

| (6) |

is the vector containing all the parameters of the model and P w,m is the probability of the character w ∈ {A, T, C, G} occurring at either a background position (m = 0) or a motif position (1 ≤ m ≤ l).

In (5), the conditional probability for a sequence containing a motif is defined as follows:

| (7) |

where I(i, j) indicates a vector whose entries are all zeros except the one corresponding to the character at position j in the sequence X i. Δi,j is the set of positions of the background in the sequence X i.

2.3. Construction of Similarity Matrix for Motif Discovery

In the original AP clustering, given two random l-mers x i and x k from t DNA sequences X = {X 1, X 2,…, X t}, the similarity is set as the negative Hamming distance between l-mers x i and x k; that is, s(i, k) = −d H(x i, x k) [29], which cannot describe the property of DNA sequences clustering effectively. According to the feature of PMS that two motif instances of the same motif cannot differ by more than 2d positions, and the maximum similarity principle, we employ pairwise constraints and variable-similarity measure [30] to modify the similarity as follows:

| (8) |

where

| (9) |

R 1∈ (1, +∞), R 2∈ (0, 1], and x i ∈l X p denotes x i is an l-mer of the sequence X p.

Based on the similarity in (8), the similarity between data points is more accurate and only tiny subsets of the data points are required to exchange messages, so AP clustering can not only increase clustering accuracy but also decrease runtime. Its theoretical analyses are shown in Section 3.1.

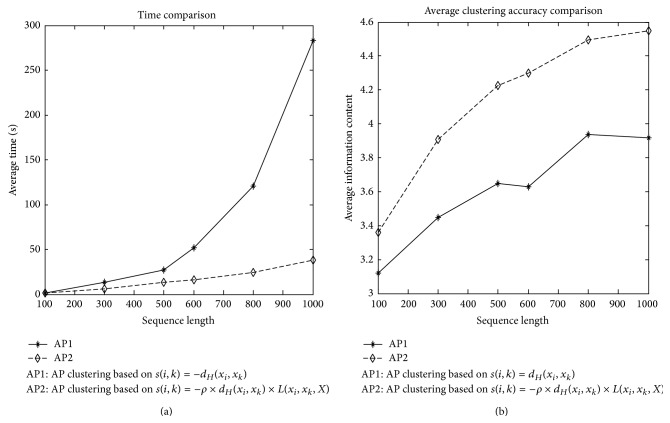

Figure 1 compares the runtime and clustering accuracy of AP clustering under the two similarities, s(i, k) = −d H(x i, x k) and s(i, k) = −ρ × d H(x i, x k) × L(x i, x k, X), on the PMS instance (15, 4) with 20 sequences whose length varies between 100 and 1000.

Figure 1.

AP clustering results.

2.4. APMotif Algorithm

Under the assumption of exactly one occurrence of motif instance per sequence (OOPS) [1], to find the motif instances from the input DNA sequences X = {X 1, X 2,…, X t}, APMotif algorithm consists of the following stages:

(1) Constructing Clusters. Select the sequence X 1 as the reference sequence. For each l-mer x k (k = 1,2,…, n − l + 1) in X 1 (a reference subsequence), construct the cluster C(x k, X), the set consisting of x k and all l-mers x′ in X − {X 1} such that d H(x k, x′) ≤ 2d.

(2) Extracting Clusters. For each cluster C(x k, X), use AP clustering and a filtering rule to generate a highly conserved cluster C′(x k1, X).

(3) Refining Clusters. For each filtered cluster C′(x k1, X), use EM refinement to obtain the distribution θ k1 and the objective function Q k1 of the cluster.

(4) Verifying Motif Instances. With the maximum distribution θ max and the maximum objective function Q max, the l-mer y having the maximum log-likelihood log p(y∣θ max) in each sequence is verified as a motif instance.

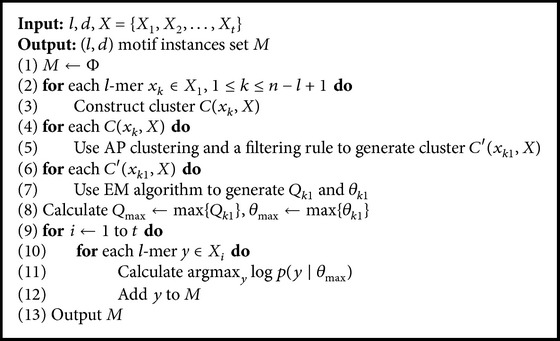

Based on the four stages, the APMotif algorithm is presented as in Algorithm 1.

Algorithm 1.

APMotif.

In line (1), the set M of (l, d) motif instances is initialized to an empty set. Lines (2)-(3) show the stage of constructing clusters. Lines (4)-(5) show the stage of extracting clusters. Lines (6)-(7) show the stage of refining clusters. Lines (8)–(12) show the stage of verifying motif instances. APMotif discovers the (l, d) motif instances with high prediction accuracy and outputs them in line (13).

Next, we explain each stage in detail.

Stage 1 (construct clusters). —

The construction of clusters rests on the simple observation that the Hamming distance between two motif instances of the same motif is at most 2d. We choose the first sequence X 1 as the reference sequence. As we do not know in advance which l-mer x k in X 1 is the motif instance, all the l-mers x k (k = 1,2,…, n − l + 1) in X 1 are regarded as reference subsequences. Given an l-mer x k in X 1, the l-mers x′ selected from another sequence X i (i = 2,…, t) must satisfy d H(x k, x′) ≤ 2d; they form the set B(x k, X i) = {x′ : x′ ∈l X i, d H(x k, x′) ≤ 2d}, where x′ ∈l X i denotes that x′ is an l-mer of X i. The cluster corresponding to the reference subsequence x k is denoted as

$$C(x_k, X) = \{x_k\} \cup \bigcup_{i=2}^{t} B(x_k, X_i). \tag{10}$$

The average number of l-mers in the cluster C(x k, X) is p 2d × t × (n − l + 1), where

$$p_{2d} = \sum_{i=0}^{2d} \binom{l}{i} \left(\frac{3}{4}\right)^{i} \left(\frac{1}{4}\right)^{l-i} \tag{11}$$

is the probability that the Hamming distance between two random l-mers is at most 2d.
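Stage 1 can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: `build_cluster` and `p_within` are hypothetical names, positions are 0-based, and the binomial expression for p 2d assumes that nucleotides are independent and uniformly distributed.

```python
from math import comb


def hamming(a, b):
    """Number of mismatching positions between two equal-length strings."""
    return sum(ca != cb for ca, cb in zip(a, b))


def build_cluster(seqs, k, l, d):
    """Cluster for the reference l-mer starting at position k of seqs[0].

    Returns (sequence index, start position, l-mer) triples: the reference
    l-mer itself plus every l-mer of the other sequences within Hamming
    distance 2d of it.
    """
    x_k = seqs[0][k:k + l]
    cluster = [(0, k, x_k)]
    for i, seq in enumerate(seqs[1:], start=1):
        for j in range(len(seq) - l + 1):
            x = seq[j:j + l]
            if hamming(x_k, x) <= 2 * d:
                cluster.append((i, j, x))
    return cluster


def p_within(l, r):
    """Probability that two uniform random l-mers differ in at most r positions."""
    return sum(comb(l, i) * 0.75 ** i * 0.25 ** (l - i)
               for i in range(min(r, l) + 1))


toy = ["ACGTAC", "ACGAAT", "TTTTTT"]
print(build_cluster(toy, k=0, l=4, d=1))

# Expected cluster size for the (15, 4) instance with t = 20 and n = 600,
# using the expression p_2d * t * (n - l + 1) given in the text.
p_2d = p_within(15, 2 * 4)
print(p_2d * 20 * (600 - 15 + 1))
```

In the toy input only "ACGA" from the second sequence lies within distance 2d = 2 of the reference l-mer "ACGT", so the cluster contains two l-mers.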

Stage 2 (extract clusters). —

For each cluster C(x k, X), we use AP clustering to produce the highly conserved cluster C′(x k, X) that contains the reference subsequence x k. If one of the reference subsequences x k (k = 1,2,…, n − l + 1) is a motif instance, the corresponding cluster C′(x k, X) may be the true motif model.

For each cluster C′(x k, X), two types of metrics, information content (IC) and complexity scores [31], are employed to assess the quality of the cluster. The information content of the cluster C′(x k, X) is defined as

$$IC(C'(x_k, X)) = \sum_{m=1}^{l} \sum_{w \in \{A,T,C,G\}} p_{w,m} \log \frac{p_{w,m}}{p_{w,0}}, \tag{12}$$

where p w,m represents the probability of each character w ∈ {A, T, C, G} appearing at position m of the l-mer and p w,0 is the background probability of character w. A higher IC value indicates a stronger potential of a cluster to be the true motif model.

The complexity score of the cluster C′(x k, X) is defined as

(13)

Note that the IC value cannot completely reflect the conservation of the motif model. The reason is that many noninformative repeated l-mers may lead to a higher IC value. Fortunately, these false positive clusters have lower complexity scores and they can be effectively filtered out.

Taking these into account, we propose the following rule to filter out some unqualified clusters.

Rule. If $J(C'(x_{k1}, X)) > \frac{1}{n-l+1}\sum_{k=1}^{n-l+1} J(C'(x_k, X))$ and $IC(C'(x_{k1}, X)) > \frac{1}{n-l+1}\sum_{k=1}^{n-l+1} IC(C'(x_k, X))$, the cluster C′(x k1, X) will be stored as a candidate motif model.

According to the rule, a few clusters C′(x k1, X) with high IC value and high complexity scores are stored for EM refinement in Stage 3.
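The filtering rule can be sketched as below. The sketch assumes that the information content is the relative entropy of the column frequencies against the background, with a base-2 logarithm; the base is an assumption. The complexity scores J are taken as precomputed inputs, and the names `information_content` and `filter_clusters` are hypothetical.

```python
from math import log2

ALPHABET = "ACGT"


def information_content(cluster, background):
    """Sum over positions m and characters w of p[w,m] * log2(p[w,m] / p[w,0])."""
    l = len(cluster[0])
    ic = 0.0
    for m in range(l):
        column = [x[m] for x in cluster]
        for w in ALPHABET:
            p = column.count(w) / len(column)
            if p > 0:  # a zero frequency contributes nothing to the sum
                ic += p * log2(p / background[w])
    return ic


def filter_clusters(ic_scores, complexity_scores):
    """Indices of clusters whose IC and complexity both exceed the respective means."""
    n = len(ic_scores)
    mean_ic = sum(ic_scores) / n
    mean_j = sum(complexity_scores) / n
    return [k for k in range(n)
            if ic_scores[k] > mean_ic and complexity_scores[k] > mean_j]


uniform = {w: 0.25 for w in ALPHABET}
# A perfectly conserved cluster of length 4 scores 2 bits per position.
print(information_content(["ACGT", "ACGT"], uniform))
# Only the first cluster is above average on both metrics.
print(filter_clusters([8.0, 2.0, 5.0], [0.9, 0.8, 0.1]))
```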

Stage 3 (refine clusters). —

It is important to note that AP clustering is primarily an initialization strategy that produces starting points for EM refinement. Taking each cluster C′(x k1, X) as a starting point, we use the modified EM refinement to search for a motif model.

The E-step of EM calculates the expected value of the missing information Z i,j, which is the probability that a motif starts in position j of sequence X i.

E-Step. Consider

| (14) |

The M-step of EM reestimates the distribution θ by maximizing the expected log-likelihood.

M-Step. Consider

| (15) |

where ξ m is the pseudocount used to deal with zero frequencies and c w is the total number of occurrences of the character w in all sequences X.

The EM algorithm is terminated when the objective function Q, that is, the information content, remains unchanged. After EM refinement, we obtain the distribution θ k1 and the objective function Q k1 of each cluster C′(x k1, X).
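The refinement loop can be sketched with a textbook EM under the OOPS assumption. This is not the modified EM used by APMotif: the sketch keeps the background distribution fixed, applies a single constant pseudocount, and runs a fixed number of iterations instead of monitoring the objective function. All names and the toy input are hypothetical.

```python
ALPHABET = "ACGT"


def e_step(seqs, pwm, background):
    """Z[i][j]: posterior probability that the motif starts at position j of sequence i."""
    l = len(pwm)
    z = []
    for seq in seqs:
        weights = []
        for j in range(len(seq) - l + 1):
            ratio = 1.0
            for m in range(l):
                w = seq[j + m]
                ratio *= pwm[m][w] / background[w]  # motif vs. background odds
            weights.append(ratio)
        total = sum(weights)
        z.append([wt / total for wt in weights])
    return z


def m_step(seqs, z, l, pseudocount=0.1):
    """Re-estimate the PWM from expected character counts plus a pseudocount."""
    counts = [{w: pseudocount for w in ALPHABET} for _ in range(l)]
    for seq, z_i in zip(seqs, z):
        for j, z_ij in enumerate(z_i):
            for m in range(l):
                counts[m][seq[j + m]] += z_ij
    pwm = []
    for col in counts:
        total = sum(col.values())
        pwm.append({w: col[w] / total for w in ALPHABET})
    return pwm


def em_refine(seqs, pwm, background, iterations=20):
    """Alternate E- and M-steps; returns the refined PWM and the final Z."""
    z = e_step(seqs, pwm, background)
    for _ in range(iterations):
        pwm = m_step(seqs, z, len(pwm))
        z = e_step(seqs, pwm, background)
    return pwm, z


toy_seqs = ["TTACGTTT", "ACGTCCCC", "GGGGACGT"]
uniform = {w: 0.25 for w in ALPHABET}
# Starting point that weakly favors "ACGT", standing in for a filtered cluster.
start = [{w: (0.7 if w == c else 0.1) for w in ALPHABET} for c in "ACGT"]
pwm, z = em_refine(toy_seqs, start, uniform)
print([max(range(len(row)), key=row.__getitem__) for row in z])
```

The printed list gives the most probable start position in each sequence, which for this toy input coincides with the planted occurrences of "ACGT".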

Stage 4 (select motif instances). —

Comparing the distributions θ k1, we find the maximum one, θ max. Under the distribution θ max, the l-mer y with the maximum log-likelihood in each sequence is considered a candidate motif instance:

| (16) |

Meanwhile, a candidate motif instance y should satisfy d H(y, x motif) ≤ d, where x motif is the motif obtained by using θ max as the consensus.

Thus, the l-mer y that has the maximum log-likelihood under the distribution θ max and satisfies d H(y, x motif) ≤ d is stored in the set of motif instances M.
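The selection step can be sketched as follows, assuming that θ max is available as a position weight matrix with strictly positive entries (a list of per-position probability dictionaries). The names `consensus`, `best_lmer`, and `select_instances` are hypothetical.

```python
from math import log


def hamming(a, b):
    """Number of mismatching positions between two equal-length strings."""
    return sum(ca != cb for ca, cb in zip(a, b))


def consensus(pwm):
    """Most probable character at each motif position."""
    return "".join(max(col, key=col.get) for col in pwm)


def best_lmer(seq, pwm):
    """Start position and l-mer with the maximum log-likelihood under the PWM."""
    l = len(pwm)

    def loglik(j):
        return sum(log(pwm[m][seq[j + m]]) for m in range(l))

    j = max(range(len(seq) - l + 1), key=loglik)
    return j, seq[j:j + l]


def select_instances(seqs, pwm, d):
    """Keep, per sequence, the best-scoring l-mer if it is within d of the consensus."""
    motif = consensus(pwm)
    instances = []
    for i, seq in enumerate(seqs):
        j, y = best_lmer(seq, pwm)
        if hamming(y, motif) <= d:
            instances.append((i, j, y))
    return motif, instances


theta_max = [{w: (0.7 if w == c else 0.1) for w in "ACGT"} for c in "ACGT"]
motif, found = select_instances(["TTACGTTT", "ACCTCCCC", "GGGGGGGG"], theta_max, d=1)
print(motif, found)
```

In this toy input the third sequence contributes no instance, because its best-scoring l-mer "GGGG" differs from the consensus in three positions.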

3. Results and Discussion

Here, we first theoretically analyze the probability of s(i, k) = −∞ and give its formula. We then show the experimental results of APMotif both on simulated data sets and real biological data sets.

3.1. Analysis of Similarity Matrix

It has been pointed out in [29] that sparsity of the similarity matrix leads to fast calculation, since information propagation need not be performed if s(i, k) = −∞.

Given two random l-mers x i and x k, coming from different sequences, which differ from the same l-mer x 0 with up to 2d positions, the distance relationships between x i, x k, and x 0 satisfy 0 ≤ d H(x 0, x i) ≤ 2d and 0 ≤ d H(x 0, x k) ≤ 2d. Let p(α, β) represent the probability of d H(x 0, x i) = α and d H(x 0, x k) = β corresponding to a sample space Ω = {〈α, β〉 : 0 ≤ α ≤ 2d, 0 ≤ β ≤ 2d}. Because d H(x 0, x i) = α and d H(x 0, x k) = β are independent of each other, p(α, β) can be calculated as follows:

| (17) |

| (18) |

Let p(d H(x i, x k) > 2d) represent the probability that the Hamming distance between two random l-mers x i and x k is more than 2d.

Using Theorem of Total Probability, we have

| (19) |

where p(d H(x i, x k) > 2d∣d H(x 0, x i) = α, d H(x 0, x k) = β) represents the conditional probability of d H(x i, x k) > 2d given d H(x 0, x i) = α and d H(x 0, x k) = β.

Next, we discuss how to calculate the conditional probability p(d H(x i, x k) > 2d∣d H(x 0, x i) = α, d H(x 0, x k) = β).

According to d H(x i, x k) ≤ d H(x 0, x i) + d H(x 0, x k) and d H(x i, x k) > 2d, we can obtain

| (20) |

For 0 ≤ d H(x 0, x i) ≤ 2d and 0 ≤ d H(x 0, x k) ≤ 2d, (20) can be written as

| (21) |

Given d, for each c, we can find all the 2-tuple 〈d H(x 0, x i), d H(x 0, x k)〉 = 〈α, β〉 that satisfy (21).

Given c, for each 2-tuple 〈α 0, β 0〉, the conditional probability of d H(x i, x k) > 2d can be calculated as follows:

| (22) |

Considering all the values c = 0,1,…, 2d − 1 and all the 2-tuple 〈α, β〉, we calculate the conditional probability of d H(x i, x k) > 2d as follows:

| (23) |

According to (18) and (23), we can obtain

| (24) |

The probability p(d H(x i, x k) > 2d) is also the probability of s(i, k) = −∞ corresponding to the condition that d H(x i, x k)∈(2d, 4d].

Meanwhile, when the two l-mers x i and x k are in the same sequence, the probability of s(i, k) = −∞ in the similarity matrix is 1/(t − 1), where t is the number of sequences.

For the (15, 4) problem instance with t = 20 sequences, the probability of s(i, k) = −∞ obtained from (24) and t is 0.8405 for sequence lengths n from 100 to 1000.

In Table 1, enumerating all entries with s(i, k) = −∞ in the similarity matrix shows empirically that they account for approximately 84% of the matrix (between 78.75% and 85.82%), which is consistent with the theoretical analysis.

Table 1.

Number of −∞ related to different sequence length n on (15, 4) instance.

| n | Data size (a) | Number of −∞ entries | Percentage |
|---|---|---|---|
| 100 | 93 | 6.81e+03 | 78.75% |
| 300 | 308 | 8.01e+04 | 84.43% |
| 500 | 523 | 2.29e+05 | 83.89% |
| 600 | 630 | 3.41e+05 | 85.82% |
| 800 | 846 | 5.84e+05 | 81.55% |
| 1000 | 1061 | 9.53e+05 | 84.67% |

(a) Data size: the number of all l-mers in one cluster.
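The kind of enumeration reported in Table 1 can be sketched as below. The sketch sets s(i, k) = −∞ when the two l-mers come from the same sequence or differ in more than 2d positions, which is our reading of the conditions discussed in this section. Finite entries use the plain negative Hamming distance as a stand-in for the modified similarity, and the names `similarity` and `neg_inf_fraction` are hypothetical.

```python
NEG_INF = float("-inf")


def hamming(a, b):
    """Number of mismatching positions between two equal-length strings."""
    return sum(ca != cb for ca, cb in zip(a, b))


def similarity(p, q, d):
    """Similarity of two cluster members, each given as (sequence index, l-mer)."""
    (seq_p, x_p), (seq_q, x_q) = p, q
    dist = hamming(x_p, x_q)
    if seq_p == seq_q or dist > 2 * d:
        return NEG_INF  # no message needs to be exchanged for this pair
    return -dist


def neg_inf_fraction(points, d):
    """Fraction of off-diagonal similarity entries equal to -infinity."""
    n = len(points)
    pairs = [(i, k) for i in range(n) for k in range(n) if i != k]
    blocked = sum(similarity(points[i], points[k], d) == NEG_INF for i, k in pairs)
    return blocked / len(pairs)


members = [(0, "ACGT"), (1, "ACGA"), (1, "TTTT"), (2, "ACCT")]
print(neg_inf_fraction(members, d=1))
```

For the four toy members, half of the off-diagonal entries are −∞: one pair shares a sequence and two pairs differ in three positions.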

3.2. Results on Synthetic Data Sets

We generate the synthetic data sets as follows: first, we generate a motif M of length l and t independent and identically distributed (i.i.d.) sequences X of length n. Then, we implant an (l, d) instance, which differs from the motif M in up to d positions, at a random position in each sequence.
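A generator of this kind can be sketched as follows. Several details are assumptions rather than the procedure used in the experiments: nucleotides are drawn uniformly, the number of substitutions is drawn uniformly from 0 to d, and the instance overwrites l background characters so that each sequence keeps length n. The names `mutate` and `planted_dataset` are hypothetical.

```python
import random

ALPHABET = "ACGT"


def mutate(motif, d, rng):
    """Copy of motif with k substitutions, k drawn uniformly from 0..d."""
    k = rng.randint(0, d)
    chars = list(motif)
    for p in rng.sample(range(len(motif)), k):
        chars[p] = rng.choice([c for c in ALPHABET if c != chars[p]])
    return "".join(chars)


def planted_dataset(t, n, l, d, seed=0):
    """Return (motif, sequences, implant positions) for a planted (l, d) instance."""
    rng = random.Random(seed)
    motif = "".join(rng.choice(ALPHABET) for _ in range(l))
    seqs, positions = [], []
    for _ in range(t):
        background = [rng.choice(ALPHABET) for _ in range(n)]
        j = rng.randrange(n - l + 1)
        background[j:j + l] = mutate(motif, d, rng)  # overwrite l characters
        seqs.append("".join(background))
        positions.append(j)
    return motif, seqs, positions


motif, seqs, positions = planted_dataset(t=20, n=600, l=15, d=4, seed=1)
print(motif, positions[:3])
```

Recording the implant positions alongside the sequences provides the known sites needed to score predictions afterwards.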

The nucleotide level performance coefficient (nPC) defined by Pevzner and Sze [2] is used to evaluate the motif prediction accuracy:

$$nPC = \frac{|K \cap P|}{|K \cup P|} \tag{25}$$

K is the set of l × t base positions in the t known motif instances, and P is the corresponding set of l × t base positions in the t predicted motif instances. The value of nPC is between 0 and 1; the larger the value of nPC, the higher the accuracy of the predicted motif.
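The coefficient can be computed from start positions as in the sketch below, which assumes one instance per sequence and uses the ratio of the overlap of K and P to their union, as defined by Pevzner and Sze [2]. The names `site_positions` and `npc` are hypothetical.

```python
def site_positions(starts, l):
    """Set of (sequence index, base position) pairs covered by the l-mers."""
    return {(i, j + m) for i, j in enumerate(starts) for m in range(l)}


def npc(known_starts, predicted_starts, l):
    """Nucleotide level performance coefficient |K & P| / |K | P|."""
    known = site_positions(known_starts, l)
    predicted = site_positions(predicted_starts, l)
    return len(known & predicted) / len(known | predicted)


# Two sequences, l = 4: the first prediction is shifted by two bases and
# the second is exact, so 6 of the 10 covered positions are shared.
print(npc([0, 5], [2, 5], l=4))
```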

Table 2 compares the mean nPC obtained by APMotif and four other representative algorithms: MEME [16–18], Gibbs sampling [20], Projection [21], and VINE [28]. For each of the (l, d) combinations, all five algorithms are run once on each of 10 randomly generated sets of input sequences (t = 20, n = 600). APMotif is a simple and effective method that groups the significant l-mers into clusters so that the motif instances can be predicted with high accuracy. APMotif has the highest mean nPC on the instances (11, 3), (12, 3), (18, 6), and (19, 7) and the second highest mean nPC on the instances (15, 4) and (16, 5), which suggests that APMotif is relatively robust across problem instances.

Table 2.

Prediction accuracy on different (l, d) instances.

| (l, d) | Projection (nPC) | MEME (nPC) | VINE (nPC) | Gibbs sampling (nPC) | APMotif (nPC) |
|---|---|---|---|---|---|
| (11, 3) | 92% | 65% | 95% | 56% | 96% |
| (12, 3) | 77% | 84% | 92% | 3% | 93% |
| (15, 4) | 93% | 86% | 98% | 19% | 96% |
| (16, 5) | 64% | 71% | 95% | 2% | 94% |
| (18, 6) | 75% | 79% | 93% | 3% | 98% |
| (19, 7) | 84% | 77% | 92% | 4% | 97% |

In Table 3, we compare the nPC of APMotif and the other algorithms on problem instances with longer background sequences. The longer a sequence is, the more noisy l-mers it yields, which makes it more difficult to discover the true motifs. We fix the (l, d) instance as (15, 4), one of the most popular benchmarks for the motif discovery problem, and vary the sequence length n from 100 to 1000. For each setting, 10 i.i.d. data sets are generated, each containing 20 sequences. The nPC of APMotif is at least 95% for all sequence lengths between 100 and 1000. It matches or exceeds that of Projection, MEME, Gibbs sampling, and VINE in every setting except n = 800, where VINE reaches 98% against 97% for APMotif. The performance of APMotif is stable over the sequence length because APMotif has a strong ability to filter noisy l-mers. For long sequences (n ≥ 600), APMotif still maintains high prediction accuracy. For example, when the sequence length is 1000 bps, the nPC of Projection, MEME, VINE, and Gibbs sampling is 88%, 76%, 91%, and 8%, respectively, while APMotif achieves an nPC of 95%.

Table 3.

Prediction accuracy of different sequence length n on (15, 4) instance.

| n | Projection (nPC) | MEME (nPC) | VINE (nPC) | Gibbs sampling (nPC) | APMotif (nPC) |
|---|---|---|---|---|---|
| 100 | 96% | 99% | 99% | 92% | 100% |
| 300 | 94% | 98% | 99% | 58% | 99% |
| 600 | 89% | 91% | 97% | 19% | 98% |
| 800 | 87% | 90% | 98% | 14% | 97% |
| 1000 | 88% | 76% | 91% | 8% | 95% |

3.3. Results on Real Biological Data Sets

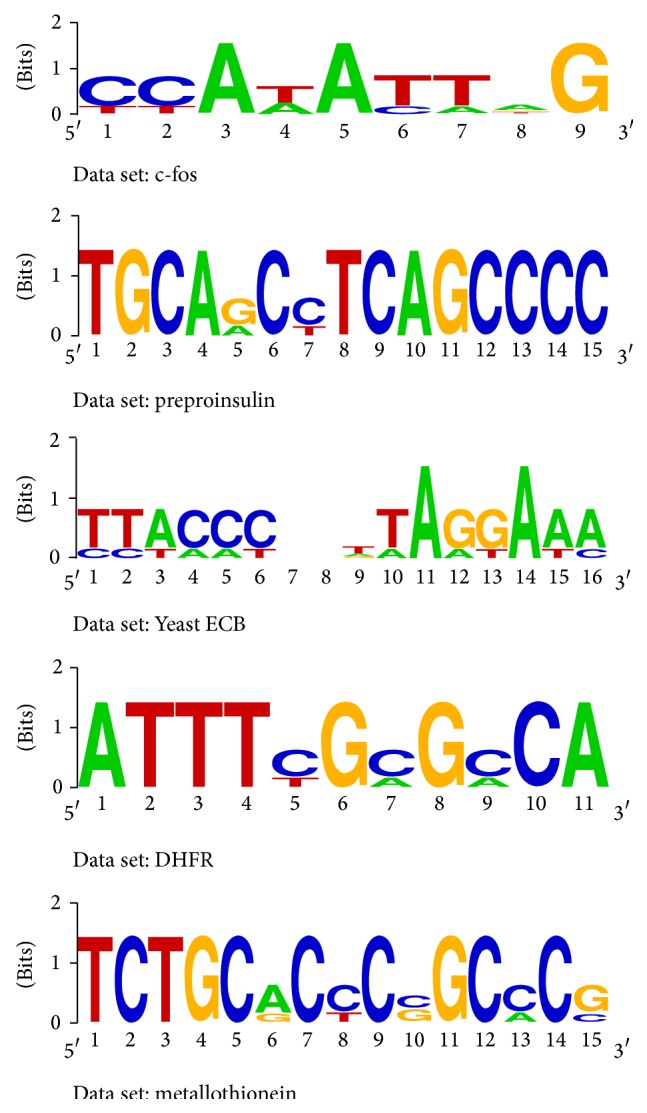

First, the performance of APMotif is evaluated on five widely used real data sets discussed in [21]: preproinsulin, dihydrofolate reductase (DHFR), c-fos, metallothionein, and Yeast ECB. Because the l and d of the true motif are not known in advance, we select the (l, d) used for each real data set as follows: the value of l is fixed as the length of the reference motif, and the value of d is the minimum that ensures that the predicted (l, d) instance contains the reference motif. Table 4 shows that the predicted motifs returned by the APMotif algorithm are largely consistent with the reference motifs. In Figure 2, the software WebLogo [32] is used to show the sequence logos of the predicted motifs, which graphically display the degree of motif conservation measured by relative entropy.

Table 4.

Results of APMotif on real biological data.

| Data set | Predicted motif | Reference motif | (l, d) |
|---|---|---|---|
| c-fos | CCATATTAG | CCANATTNG | (9, 2) |
| Preproinsulin | TGCAGCCTCAGCCCC | CAGCCTCAGCCCCAT | (15, 2) |
| Yeast ECB | TTACCCNNTTAGGAAA | TTTCCCNNTNAGGAAA | (16, 3) |
| DHFR | ATTTCGCGCCA | ATTTCGCGCCA | (11, 2) |
| Metallothionein | TCTGCACCCGGCCCG | CTCTGACNCCGCCC | (15, 2) |

Figure 2.

Sequence logos of the predicted motifs.

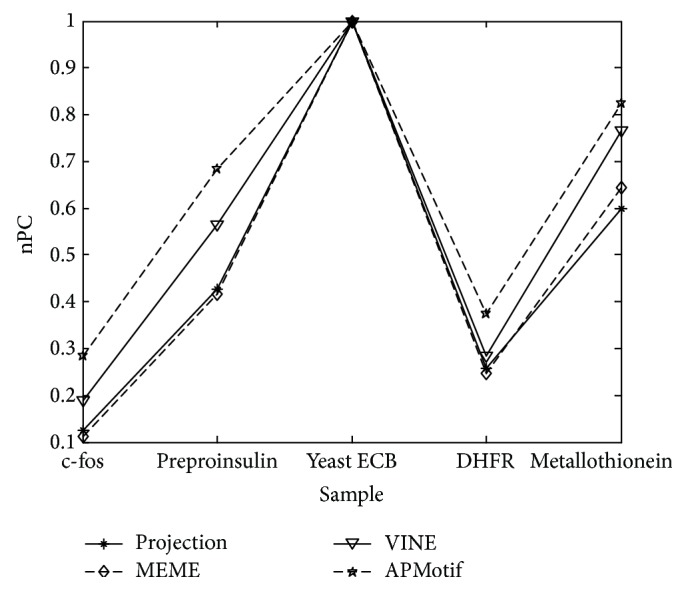

For the five real data sets, Figure 3 compares the nucleotide level performance coefficient of APMotif with that of other popular algorithms. For Yeast ECB, the nPC of APMotif, MEME, Projection, and VINE is 1, which indicates that the prediction is completely correct. For c-fos, preproinsulin, DHFR, and metallothionein, the nPC of APMotif is 0.28, 0.68, 0.37, and 0.82, respectively, greater than that of the three other widely used motif finding algorithms.

Figure 3.

Prediction accuracy on real biological data.

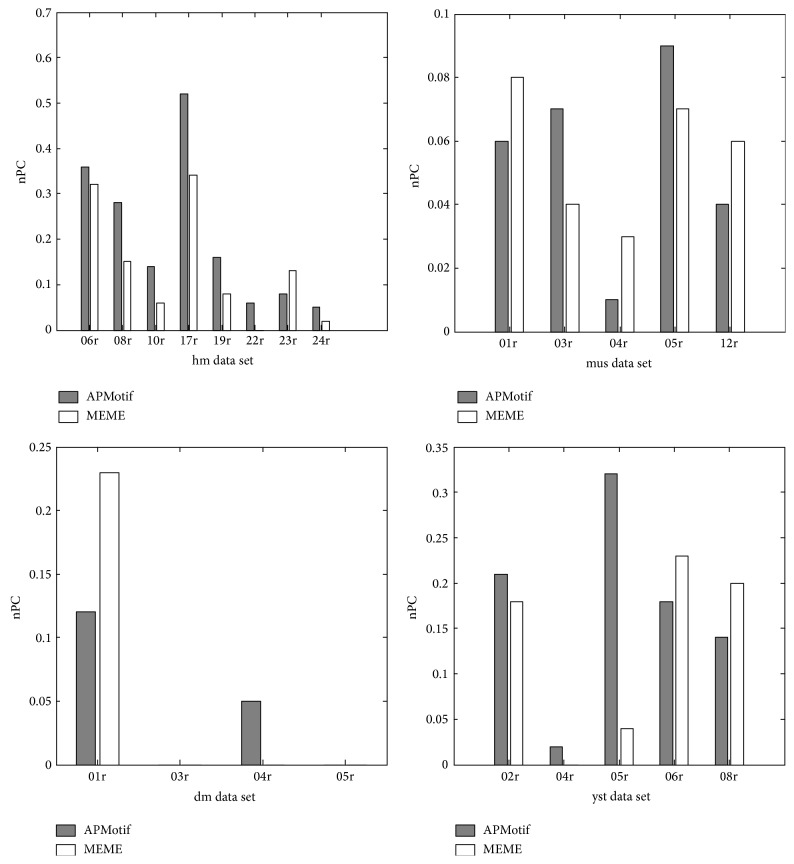

In addition, we show the prediction performance of APMotif on the Tompa data [33], which were set up as a benchmark for testing motif discovery algorithms. For most Tompa data sets, the distribution of motifs across the sequences makes it difficult to report the motif occurrence positions: when a sequence contains more than one motif, it is difficult to discover all of them, and when some sequences do not contain any motif, it is difficult to discover the motifs in the other sequences. We select a subset of the Tompa data sets for evaluation. Overall, most algorithms have very low prediction accuracy on the Tompa data. To improve the prediction accuracy, different algorithms should be executed together to complement each other.

Figure 4 shows the prediction accuracy (nPC) of APMotif and MEME on each selected Tompa data set. For some data sets, such as hm08r, hm19r, mus03r, dm04r, and yst02r, the nPC of APMotif is better than that of MEME, whereas for others, such as hm23r, mus04r, dm01r, and yst06r, the nPC of MEME is better than that of APMotif. This illustrates the practical value of combining the results of APMotif and MEME to improve the identification of TFBSs in higher eukaryotes [33].

Figure 4.

Prediction accuracy on Tompa data.

4. Conclusions

The planted (l, d) motif search (PMS) problem arises from the need to find transcription factor binding sites (TFBSs) in DNA sequences. In this paper, we propose a new approximate algorithm, APMotif, which overcomes to some extent the local maximum drawback inherent in EM motif-finding algorithms and discovers most motifs for specific (l, d) settings. APMotif first constructs clusters by computing the Hamming distance between each l-mer in the reference sequence and all the l-mers in the other sequences, and then it uses AP clustering combined with two metrics to select the highly conserved clusters for the EM refinement process. After the EM refinement, the cluster with maximum information content is verified as the set of motif instances. The experimental results on the synthetic data sets show that APMotif removes most useless background information and obtains motifs with high accuracy. The experimental results on the real biological data show that APMotif can discover all or a large part of the motif instances. In summary, APMotif usually outperforms the compared algorithms in prediction accuracy.

In recent years, the introduction of ChIP-Seq data has raised new challenges for the motif discovery problem in terms of data scale. Most existing motif discovery algorithms, which were proposed for small data sets, are inefficient in dealing with ChIP-Seq data. Since APMotif performs AP clustering multiple times, with each clustering independent of the others, it is well suited to parallel computing. In future work, we plan to parallelize the APMotif algorithm to speed it up, so that the improved algorithm can deal with large data sets efficiently.

Acknowledgment

This research was supported in part by the National Natural Science Foundation of China (61173205 and 61373044).

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Stormo G. D. DNA binding sites: representation and discovery. Bioinformatics. 2000;16(1):16–23. doi: 10.1093/bioinformatics/16.1.16.

- 2. Pevzner P. A., Sze S.-H. Combinatorial approaches to finding subtle signals in DNA sequences. Proceedings of the 8th International Conference on Intelligent Systems for Molecular Biology; August 2000; San Diego, Calif, USA. pp. 269–278.

- 3. Schneider T. D. Consensus sequence zen. Applied Bioinformatics. 2002;1(3):111–119.

- 4. Yu Q., Huo H., Zhang Y., Guo H. PairMotif: a new pattern-driven algorithm for planted (l, d) DNA motif search. PLoS ONE. 2012;7(10):e48442. doi: 10.1371/journal.pone.0048442.

- 5. Chin F. Y. L., Leung H. C. M. Voting algorithms for discovering long motifs. Proceedings of the 3rd Asia-Pacific Bioinformatics Conference (APBC '05); January 2005; pp. 261–271.

- 6. Davila J., Balla S., Rajasekaran S. Fast and practical algorithms for planted (l, d) motif search. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2007;4(4):544–552. doi: 10.1109/tcbb.2007.70241.

- 7. Dinh H., Rajasekaran S., Kundeti V. K. PMS5: an efficient exact algorithm for the (ℓ, d)-motif finding problem. BMC Bioinformatics. 2011;12, article 410. doi: 10.1186/1471-2105-12-410.

- 8. Ho E. S., Jakubowski C. D., Gunderson S. I. iTriplet, a rule-based nucleic acid sequence motif finder. Algorithms for Molecular Biology. 2009;4(1), article 14. doi: 10.1186/1748-7188-4-14.

- 9. Dinh H., Rajasekaran S., Davila J. qPMS7: a fast algorithm for finding (l, d)-motifs in DNA and protein sequences. PLoS ONE. 2012;7(7):e41425. doi: 10.1371/journal.pone.0041425.

- 10. Nicolae M., Rajasekaran S. Efficient sequential and parallel algorithms for planted motif search. BMC Bioinformatics. 2014;15(1), article 34. doi: 10.1186/1471-2105-15-34.

- 11. Pisanti N., Carvalho A. M., Marsan L., Sagot M.-F. LATIN 2006: Theoretical Informatics. Vol. 7. Springer; 2006. RISOTTO: fast extraction of motifs with mismatches; pp. 757–768.

- 12. Floratou A., Tata S., Patel J. M. Efficient and accurate discovery of patterns in sequence data sets. IEEE Transactions on Knowledge and Data Engineering. 2011;23(8):1154–1168. doi: 10.1109/TKDE.2011.69.

- 13. Sagot M. F. Spelling approximate repeated or common motifs using a suffix tree. Proceedings of the 3rd Latin American Symposium on Theoretical Informatics (LATIN '98); 1998; pp. 374–390.

- 14. Pavesi G., Mauri G., Pesole G. An algorithm for finding signals of unknown length in DNA sequences. Bioinformatics. 2001;17(supplement 1):S207–S214. doi: 10.1093/bioinformatics/17.suppl_1.s207. Proceedings of the 9th International Conference on Intelligent Systems for Molecular Biology (ISMB '01).

- 15. Thompson J. D., Higgins D. G., Gibson T. J. CLUSTAL W: improving the sensitivity of progressive multiple sequence alignment through sequence weighting, position-specific gap penalties and weight matrix choice. Nucleic Acids Research. 1994;22(22):4673–4680. doi: 10.1093/nar/22.22.4673.

- 16. Bailey T. L., Elkan C. Fitting a mixture model by expectation maximization to discover motifs in biopolymers. Proceedings of the 2nd International Conference on Intelligent Systems for Molecular Biology; 1994; pp. 28–36.

- 17. Quang D., Xie X. EXTREME: an online EM algorithm for motif discovery. Bioinformatics. 2014;30(12):1667–1673. doi: 10.1093/bioinformatics/btu093.

- 18. Bailey T. L., Williams N., Misleh C., Li W. W. MEME: discovering and analyzing DNA and protein sequence motifs. Nucleic Acids Research. 2006;34(supplement 2):W369–W373. doi: 10.1093/nar/gkl198.

- 19. Hertz G. Z., Stormo G. D. Identifying DNA and protein patterns with statistically significant alignments of multiple sequences. Bioinformatics. 1999;15(7-8):563–577. doi: 10.1093/bioinformatics/15.7.563.

- 20. Lawrence C. E., Altschul S. F., Boguski M. S., Liu J. S., Neuwald A. F., Wootton J. C. Detecting subtle sequence signals: a Gibbs sampling strategy for multiple alignment. Science. 1993;262(5131):208–214. doi: 10.1126/science.8211139.

- 21. Buhler J., Tompa M. Finding motifs using random projections. Journal of Computational Biology. 2002;9(2):225–242. doi: 10.1089/10665270252935430.

- 22. Bi C. A Monte Carlo EM algorithm for de novo motif discovery in biomolecular sequences. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2009;6(3):370–386. doi: 10.1109/TCBB.2008.103.

- 23. Yu Q., Huo H., Zhang Y., Guo H., Guo H. PairMotif+: a fast and effective algorithm for de novo motif discovery in DNA sequences. International Journal of Biological Sciences. 2013;9(4):412–424. doi: 10.7150/ijbs.5786.

- 24. Thompson W., Rouchka E. C., Lawrence C. E. Gibbs recursive sampler: finding transcription factor binding sites. Nucleic Acids Research. 2003;31(13):3580–3585. doi: 10.1093/nar/gkg608.

- 25. Roth F. P., Hughes J. D., Estep P. W., Church G. M. Finding DNA regulatory motifs within unaligned noncoding sequences clustered by whole-genome mRNA quantitation. Nature Biotechnology. 1998;16(10):939–945. doi: 10.1038/nbt1098-939.

- 26. Li G., Chan T.-M., Leung K.-S., Lee K.-H. A cluster refinement algorithm for motif discovery. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2010;7(4):654–668. doi: 10.1109/TCBB.2009.25.

- 27. van Dongen S. Graph clustering by flow simulation [Ph.D. thesis]. University of Utrecht; 2000.

- 28. Huang C.-W., Lee W.-S., Hsieh S.-Y. An improved heuristic algorithm for finding motif signals in DNA sequences. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2011;8(4):959–975. doi: 10.1109/TCBB.2010.92.

- 29. Frey B. J., Dueck D. Clustering by passing messages between data points. Science. 2007;315(5814):972–976. doi: 10.1126/science.1136800.

- 30. Leone M., Sumedha, Weigt M. Clustering by soft-constraint affinity propagation: applications to gene-expression data. Bioinformatics. 2007;23(20):2708–2715. doi: 10.1093/bioinformatics/btm414.

- 31. Wang D., Lee N. K. Computational discovery of motifs using hierarchical clustering techniques. Proceedings of the 8th IEEE International Conference on Data Mining (ICDM '08); December 2008; pp. 1073–1078.

- 32. Crooks G. E., Hon G., Chandonia J.-M., Brenner S. E. WebLogo: a sequence logo generator. Genome Research. 2004;14(6):1188–1190. doi: 10.1101/gr.849004.

- 33. Tompa M., Li N., Bailey T. L., et al. Assessing computational tools for the discovery of transcription factor binding sites. Nature Biotechnology. 2005;23(1):137–144. doi: 10.1038/nbt1053.