Significance

We present evidence from five experiments in two countries suggesting the power and robustness of the search engine manipulation effect (SEME). Specifically, we show that (i) biased search rankings can shift the voting preferences of undecided voters by 20% or more, (ii) the shift can be much higher in some demographic groups, and (iii) such rankings can be masked so that people show no awareness of the manipulation. Knowing the proportion of undecided voters in a population who have Internet access, along with the proportion of those voters who can be influenced using SEME, allows one to calculate the win margin below which SEME might be able to determine an election outcome.

Keywords: search engine manipulation effect, search rankings, Internet influence, voter manipulation, digital bandwagon effect

Abstract

Internet search rankings have a significant impact on consumer choices, mainly because users trust and choose higher-ranked results more than lower-ranked results. Given the apparent power of search rankings, we asked whether they could be manipulated to alter the preferences of undecided voters in democratic elections. Here we report the results of five relevant double-blind, randomized controlled experiments, using a total of 4,556 undecided voters representing diverse demographic characteristics of the voting populations of the United States and India. The fifth experiment is especially notable in that it was conducted with eligible voters throughout India in the midst of India’s 2014 Lok Sabha elections just before the final votes were cast. The results of these experiments demonstrate that (i) biased search rankings can shift the voting preferences of undecided voters by 20% or more, (ii) the shift can be much higher in some demographic groups, and (iii) search ranking bias can be masked so that people show no awareness of the manipulation. We call this type of influence, which might be applicable to a variety of attitudes and beliefs, the search engine manipulation effect. Given that many elections are won by small margins, our results suggest that a search engine company has the power to influence the results of a substantial number of elections with impunity. The impact of such manipulations would be especially large in countries dominated by a single search engine company.

Recent research has demonstrated that the rankings of search results provided by search engine companies have a dramatic impact on consumer attitudes, preferences, and behavior (1–12); this is presumably why North American companies now spend more than 20 billion US dollars annually on efforts to place results at the top of rankings (13, 14). Studies using eye-tracking technology have shown that people generally scan search engine results in the order in which the results appear and then fixate on the results that rank highest, even when lower-ranked results are more relevant to their search (1–5). Higher-ranked links also draw more clicks, and consequently people spend more time on Web pages associated with higher-ranked search results (1–9). A recent analysis of ∼300 million clicks on one search engine found that 91.5% of those clicks were on the first page of search results, with 32.5% on the first result and 17.6% on the second (7). The study also reported that the bottom item on the first page of results drew 140% more clicks than the first item on the second page (7). These phenomena occur apparently because people trust search engine companies to assign higher ranks to the results best suited to their needs (1–4, 11), even though users generally have no idea how results get ranked (15).

Why do search rankings elicit such consistent browsing behavior? Part of the answer lies in the basic design of a search engine results page: the list. For more than a century, research has shown that an item’s position on a list has a powerful and persuasive impact on subjects’ recollection and evaluation of that item (16–18). Specific order effects, such as primacy and recency, show that the first and last items presented on a list, respectively, are more likely to be recalled than items in the middle (16, 17).

Primacy effects in particular have been shown to have a favorable influence on the formation of attitudes and beliefs (18–20), enhance perceptions of corporate performance (21), improve ratings of items on a survey (22–24), and increase purchasing behavior (25). More troubling, however, is the finding that primacy effects have a significant impact on voting behavior, resulting in more votes for the candidate whose name is listed first on a ballot (26–32). In one recent experimental study, primacy accounted for a 15% gain in votes for the candidate listed first (30). Although primacy effects have been shown to extend to hyperlink clicking behavior in online environments (33–35), no study that we are aware of has yet examined whether the deliberate manipulation of search engine rankings can be leveraged as a form of persuasive technology in elections. Given the power of order effects and the impact that search rankings have on consumer attitudes and behavior, we asked whether the deliberate manipulation of search rankings pertinent to candidates in political elections could alter the attitudes, beliefs, and behavior of undecided voters.

It is already well established that biased media sources such as newspapers (36–38), political polls (39), and television (40) sway voters (41, 42). A 2007 study by DellaVigna and Kaplan found, for example, that whenever the conservative-leaning Fox television network moved into a new market in the United States, conservative votes increased, a phenomenon they labeled the Fox News Effect (40). These researchers estimated that biased coverage by Fox News was sufficient to shift 10,757 votes in Florida during the 2000 US Presidential election: more than enough to flip the deciding state in the election, which was carried by the Republican presidential candidate by only 537 votes. The Fox News Effect was also found to be smaller in television markets that were more competitive.

We believe, however, that the impact of biased search rankings on voter preferences is potentially much greater than the influence of traditional media sources (43), where parties compete in an open marketplace for voter allegiance. Search rankings are controlled in most countries today by a single company. If, with or without intervention by company employees, the algorithm that ranked election-related information favored one candidate over another, competing candidates would have no way of compensating for the bias. It would be as if Fox News were the only television network in the country. Biased search rankings would, in effect, be an entirely new type of social influence, and it would be occurring on an unprecedented scale. Massive experiments conducted recently by social media giant Facebook have already introduced other unprecedented types of influence made possible by the Internet. Notably, an experiment reported recently suggested that flashing “VOTE” ads to 61 million Facebook users caused more than 340,000 people to vote that day who otherwise would not have done so (44). Zittrain has pointed out that if Facebook executives chose to prompt only those people who favored a particular candidate or party, they could easily flip an election in favor of that candidate, performing a kind of “digital gerrymandering” (45).

We evaluated the potential impact of biased search rankings on voter preferences in a series of experiments with the same general design. Subjects were asked for their opinions and voting preferences both before and after they were allowed to conduct research on candidates using a mock search engine we had created for this purpose. Subjects were randomly assigned to groups in which the search results they were shown were biased in favor of one candidate or another, or, in a control condition, in favor of neither candidate. Would biased search results change the opinions and voting preferences of undecided voters, and, if so, by how much? Would some demographic groups be more vulnerable to such a manipulation? Would people be aware that they were viewing biased rankings? Finally, what impact would familiarity with the candidates have on the manipulation?

Study 1: Three Experiments in San Diego, CA

To determine the potential for voter manipulation using biased search rankings, we initially conducted three laboratory-based experiments in the United States, each using a double-blind control group design with random assignment. For each of the experiments, we recruited 102 eligible voters through newspaper and online advertisements, as well as through notices in senior recreation centers, in the San Diego, CA, area.* The advertisements offered USD$25 for each subject’s participation, and subjects were prescreened in an attempt to match diverse demographic characteristics of the US voting population (46).

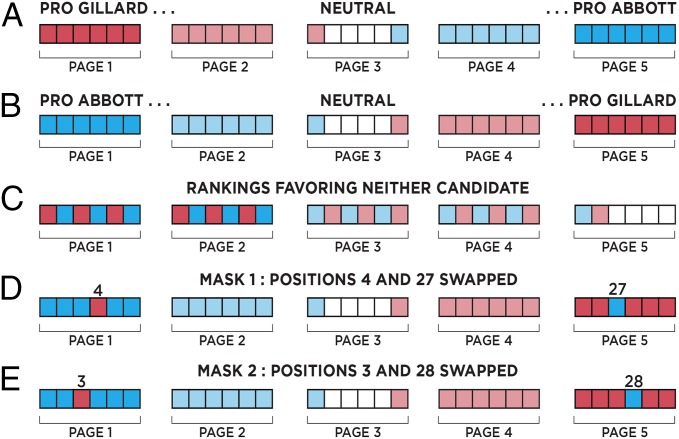

Each of the three experiments used 30 actual search results and corresponding Web pages relating to the 2010 election to determine the prime minister of Australia. The candidates were Tony Abbott and Julia Gillard, and the order in which their names were presented was counterbalanced in all conditions. This election was used to minimize possible preexisting biases by US study participants and thus to try to guarantee that our subjects would be truly “undecided.” In each experiment, subjects were randomly assigned to one of three groups: (i) rankings favoring Gillard (which means that higher-ranked search results linked to Web pages that portrayed Gillard as the better candidate), (ii) rankings favoring Abbott, or (iii) rankings favoring neither (Fig. 1 A–C). The order of these rankings was determined based on ratings of Web pages provided by three independent observers. Neither the subjects nor the research assistants who supervised them knew either the hypothesis of the experiment or the groups to which subjects were assigned.

Fig. 1.

Search rankings for the three experiments in study 1. (A) For subjects in group 1 of experiment 1, 30 search results that linked to 30 corresponding Web pages were ranked in a fixed order that favored candidate Julia Gillard, as follows: those favoring Gillard (from highest to lowest rated pages), then those favoring neither candidate, then those favoring Abbott (from lowest to highest rated pages). (B) For subjects in group 2 of experiment 1, the search results were displayed in precisely the opposite order so that they favored the opposing candidate, Tony Abbott. (C) For subjects in group 3 of experiment 1 (the control group), the ranking favored neither candidate. (D) For subjects in groups 1 and 2 of experiment 2, the rankings bias was masked slightly by swapping results that had originally appeared in positions 4 and 27. Thus, on the first page of search results, five of the six results—all but the one in the fourth position—favored one candidate. (E) For subjects in groups 1 and 2 of experiment 3, a more aggressive mask was used by swapping results that had originally appeared in positions 3 and 28.
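The ordering and masking rules in the caption above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the page identifiers, the numeric ratings, the 10/10/10 split among the 30 pages, and the helper names `biased_ranking`, `mask`, and `paginate` are all hypothetical, and the order of the neutral pages is left as given because the text does not specify it.

```python
from typing import List, Tuple

# Hypothetical representation: (page id, observer rating of how strongly
# the page favors its candidate). Real ratings are not given in the text.
Page = Tuple[str, float]


def biased_ranking(favored: List[Page], neutral: List[Page],
                   opposed: List[Page]) -> List[str]:
    """Favored pages from highest to lowest rated, then neutral pages,
    then opposing pages from lowest to highest rated."""
    top = sorted(favored, key=lambda p: p[1], reverse=True)
    bottom = sorted(opposed, key=lambda p: p[1])
    return [p[0] for p in top] + [p[0] for p in neutral] + [p[0] for p in bottom]


def mask(ranking: List[str], i: int, j: int) -> List[str]:
    """Swap the results at 1-indexed positions i and j."""
    masked = list(ranking)
    masked[i - 1], masked[j - 1] = masked[j - 1], masked[i - 1]
    return masked


def paginate(ranking: List[str], per_page: int = 6) -> List[List[str]]:
    """Split a ranking into results pages (six results per page)."""
    return [ranking[k:k + per_page] for k in range(0, len(ranking), per_page)]


# Assumed data: 10 pages per category, rated 1 (weakest) to 10 (strongest).
gillard = [(f"G{k}", float(k)) for k in range(1, 11)]
neutral = [(f"N{k}", 0.0) for k in range(1, 11)]
abbott = [(f"A{k}", float(k)) for k in range(1, 11)]

group1 = biased_ranking(gillard, neutral, abbott)  # favors Gillard
group2 = group1[::-1]                              # precisely the opposite order
exp2_group1 = mask(group1, 4, 27)                  # mask used in experiment 2
exp3_group1 = mask(group1, 3, 28)                  # more aggressive mask, experiment 3

first_page = paginate(exp2_group1)[0]
print(first_page)
```

With these assumed inputs, the first results page of the experiment 2 ranking contains five pages favoring Gillard and one page favoring Abbott in the fourth position, which matches the description in panel D.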

Initially, subjects read brief biographies of the candidates and rated them on 10-point Likert scales with respect to their overall impression of each candidate, how much they trusted each candidate, and how much they liked each candidate. They were also asked how likely they would be to vote for one candidate or the other on an 11-point scale ranging from −5 to +5, as well as to indicate which of the two candidates they would vote for if the election were held that day.

The subjects then spent up to 15 min gathering more information about the candidates using a mock search engine we had created (called Kadoodle), which gave subjects access to five pages of search results with six results per page. As is usual with search engines, subjects could click on any search result to view the corresponding Web page, or they could click on numbers at the bottom of each results page to view other results pages. The same search results and Web pages were used for all subjects in each experiment; only the order of the search results was varied (Fig. 1). Subjects had the option to end the search whenever they felt they had acquired sufficient information to make a sound decision. At the conclusion of the search, subjects rated the candidates again. When their ratings were complete, subjects were asked (on their computer screens) whether anything about the search rankings they had viewed “bothered” them; they were then given an opportunity to write at length about what, if anything, had bothered them. We did not ask specifically whether the search rankings appeared to be “biased” to avoid false positives typically generated by leading or suggestive questions (47).

Regarding the ethics of our study, our manipulation could have no impact on a past election, and we were also not concerned that it could affect the outcome of future elections, because the number of subjects we recruited was small and, to our knowledge, included no Australian voters. Moreover, our study was designed so that it did not favor any one candidate, so there was no overall bias. The study presented no more than minimal risk to subjects and was approved by the Institutional Review Board (IRB) of the American Institute for Behavioral Research and Technology (AIBRT). Informed consent was obtained from all subjects.

In aggregate for the first three experiments in San Diego, CA, the demographic characteristics of our subjects (mean age, 42.5 y; SD = 18.1 y; range, 18–95 y) did not differ from characteristics of the US voting population by more than the following margins: 6.4% within any category of the age or sex measures; 14.1% within any category of the race measure; 18.7% within any category of the income or education measures; and 21.1% within any category of the employment status measure (Table S1). Subjects’ political inclinations were fairly balanced, with 20.3% identifying themselves as conservative, 28.8% as moderate, 22.5% as liberal, and 28.4% as indifferent. Political party affiliation, however, was less balanced, with 21.6% identifying as Republican, 19.6% as Independent, 44.8% as Democrat, 6.2% as Libertarian, and 7.8% as other. In aggregate, subjects reported conducting an average of 7.9 searches (SD = 17.5) per day using search engines, and 52.3% reported having conducted searches to learn about political candidates. They also reported having little or no familiarity with the candidates (mean familiarity on a scale of 1–10, 1.4; SD = 0.99). On average, subjects in the first three experiments spent 635.9 s (SD = 307.0) using our mock search engine.

Table S1.

Demographics for studies 1 and 2

| Category | Value | Census 2010† n | Census 2010† % | Study 1 n | Study 1 % | Census vs. study 1, Z | Study 2 n | Study 2 % |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Age | 18–24 | 26,718 | 12.7% | 51 | 16.7% | 2.097* | 446 | 21.2% |
| | 25–44 | 70,472 | 33.4% | 122 | 39.9% | 2.385* | 1,274 | 60.7% |
| | 45–64 | 75,865 | 36.0% | 95 | 31.0% | 1.800 | 342 | 16.3% |
| | 65–74 | 20,605 | 9.8% | 20 | 6.5% | 1.906 | 33 | 1.6% |
| | 75+ | 17,140 | 8.1% | 18 | 5.9% | 1.438 | 5 | 0.2% |
| Race | White | 152,929 | 72.5% | 179 | 58.5% | 5.502*** | 1,645 | 78.3% |
| | Black | 25,632 | 11.8% | 38 | 12.4% | 0.349 | 126 | 6.0% |
| | Hispanic | 21,285 | 9.8% | 52 | 17.0% | 4.169*** | 121 | 5.8% |
| | Asian | 7,638 | 3.9% | 7 | 2.3% | 1.528 | 123 | 5.9% |
| | Other | 3,316 | 2.0% | 30 | 9.8% | 10.977*** | 85 | 4.0% |
| Sex | Male | 101,279 | 48.0% | 162 | 52.9% | 1.715 | 1,148 | 54.7% |
| | Female | 109,521 | 52.0% | 144 | 47.1% | 1.715 | 947 | 45.1% |
| | Other | n/a | n/a | 0 | 0.0% | n/a | 5 | 0.2% |
| Education | Less than ninth grade | 6,655 | 3.2% | 2 | 0.7% | 2.504* | 0 | 0.0% |
| | Ninth to 12th grade | 15,931 | 7.6% | 45 | 14.7% | 4.724*** | 22 | 1.0% |
| | High school graduate | 65,951 | 31.3% | 68 | 22.2% | 3.417*** | 231 | 11.0% |
| | Some college or associate degree | 62,655 | 29.7% | 145 | 47.4% | 6.753*** | 820 | 39.0% |
| | Bachelors | 39,272 | 18.6% | 30 | 9.8% | 3.963*** | 752 | 35.8% |
| | Advanced | 20,336 | 9.6% | 16 | 5.2% | 2.616** | 275 | 13.1% |
| Employed‡ | Yes | 126,477 | 60.0% | 119 | 38.9% | 7.531*** | 1,509 | 71.9% |
| | No | 84,323 | 40.0% | 187 | 61.1% | 7.531*** | 591 | 28.1% |
| Income | Under $10,000 | 5,496 | 3.6% | 67 | 21.9% | 20.009*** | 137 | 6.5% |
| | $10,000 to $14,999 | 5,069 | 3.3% | 33 | 10.8% | 8.538*** | 131 | 6.2% |
| | $15,000 to $19,999 | 4,549 | 2.9% | 28 | 9.2% | 7.446*** | 124 | 5.9% |
| | $20,000 to $29,999 | 12,632 | 8.2% | 45 | 14.7% | 4.800*** | 282 | 13.4% |
| | $30,000 to $39,999 | 13,182 | 8.5% | 34 | 11.1% | 1.857 | 288 | 13.7% |
| | $40,000 to $49,999 | 10,807 | 7.0% | 17 | 5.6% | 1.143 | 239 | 11.4% |
| | $50,000 to $74,999 | 25,516 | 16.5% | 30 | 9.8% | 3.602*** | 405 | 19.3% |
| | $75,000 to $99,999 | 17,597 | 11.4% | 11 | 3.6% | 4.932*** | 235 | 11.2% |
| | $100,000 to $149,999 | 16,586 | 10.7% | 5 | 1.6% | 5.916*** | 148 | 7.0% |
| | $150,000 and over | 12,102 | 7.8% | 0 | 0.0% | 5.893*** | 46 | 2.2% |
| | Prefer not to say | 30,875 | 20.0% | 36 | 11.8% | 4.069*** | 65 | 3.1% |
| Marital status | Married | 113,421 | 53.8% | 48 | 15.7% | 13.364*** | 751 | 35.8% |
| | Widowed | 13,612 | 6.5% | 27 | 8.8% | 1.682 | 15 | 0.7% |
| | Divorced | 23,035 | 10.9% | 68 | 22.2% | 6.324*** | 141 | 6.7% |
| | Separated | 4,528 | 2.1% | 15 | 4.9% | 3.317*** | 33 | 1.6% |
| | Never married | 56,203 | 26.7% | 148 | 48.4% | 8.576*** | 1,160 | 55.2% |

*P < 0.05; **P < 0.01; and ***P < 0.001.

†Census numbers are in thousands.

‡For census data, “No” includes “unemployed” and “not in labor force.”

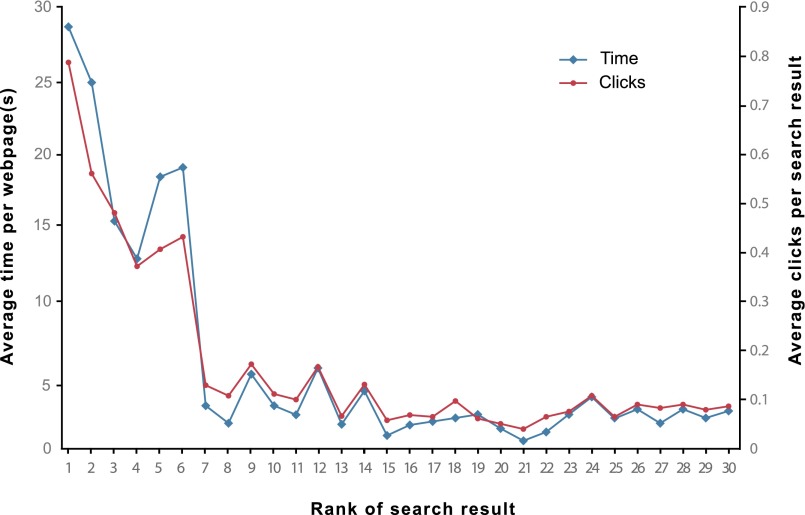

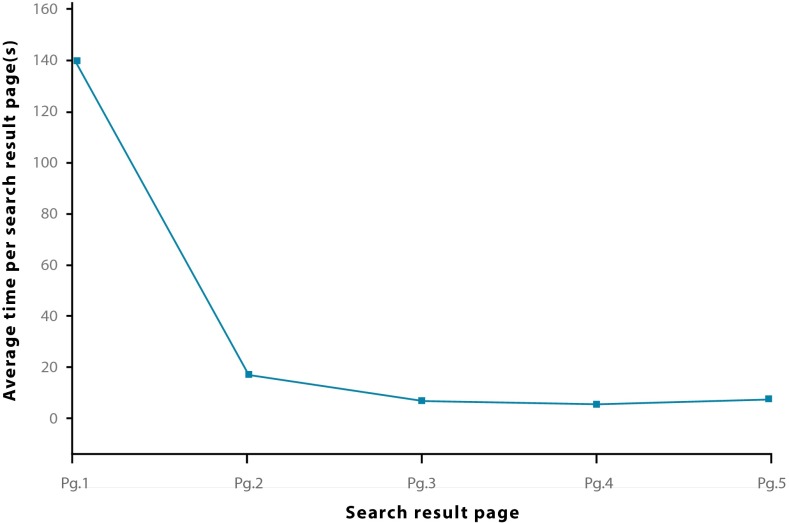

As expected, higher search rankings drew more clicks, and the pattern of clicks for the first three experiments correlated strongly with the pattern found in a recent analysis of ∼300 million clicks [r(13) = 0.90, P < 0.001; Kolmogorov–Smirnov test of differences in distributions: D = 0.033, P = 0.31; Fig. 2] (7). In addition, subjects spent more time on Web pages associated with higher-ranked results (Fig. 2), as well as substantially more time on earlier search pages (Fig. 3).

Fig. 2.

Clicks on search results and time allocated to Web pages as a function of search result rank, aggregated across the three experiments in study 1. Subjects spent less time on Web pages corresponding to lower-ranked search results (blue curve) and were less likely to click on lower-ranked results (red curve). This pattern is found routinely in studies of Internet search engine use (1–12).

Fig. 3.

Amount of time, aggregated across the three experiments in study 1, that subjects spent on each of the five search pages. Subjects spent most of their time on the first search page, a common finding in Internet search engine research (1–12).

In experiment 1, we found no significant differences among the three groups with respect to subjects’ ratings of the candidates before Web research (Table S2). Following the Web research, all candidate ratings in the bias groups shifted in the predicted directions compared with candidate ratings in the control group (Table 1).

Table S2.

Voting preferences by group for study 1

| Experiment | Voting preference | Group 1 (Gillard bias), mean (SE) | Group 2 (Abbott bias), mean (SE) | Group 3 (control), mean (SE) | Kruskal–Wallis χ2 | Mann–Whitney u |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | PreImpressionAbbott | 8.09 (0.34) | 7.74 (0.40) | 7.41 (0.26) | 3.979 | 525.0 |
| | PreImpressionGillard | 7.06 (0.42) | 7.47 (0.35) | 6.88 (0.32) | 1.395 | 529.5 |
| | PreTrustAbbott | 7.82 (0.31) | 7.85 (0.39) | 7.35 (0.28) | 3.275 | 538.5 |
| | PreTrustGillard | 6.38 (0.40) | 7.56 (0.30) | 6.88 (0.32) | 5.213 | 407.0 |
| | PreLikeAbbott | 6.06 (0.52) | 5.68 (0.47) | 5.79 (0.38) | 0.296 | 538.5 |
| | PreLikeGillard | 5.29 (0.48) | 5.76 (0.41) | 5.29 (0.37) | 1.335 | 500.0 |
| | PostImpressionAbbott | 4.24 (0.49) | 7.29 (0.51) | 5.85 (0.38) | 19.029*** | 252.0*** |
| | PostImpressionGillard | 7.26 (0.45) | 4.71 (0.47) | 5.65 (0.46) | 14.667** | 286.0** |
| | PostTrustAbbott | 4.59 (0.43) | 7.32 (0.51) | 6.15 (0.38) | 18.385*** | 260.5*** |
| | PostTrustGillard | 6.91 (0.42) | 4.97 (0.43) | 6.15 (0.40) | 10.809** | 326.5** |
| | PostLikeAbbott | 3.88 (0.43) | 6.24 (0.58) | 5.18 (0.42) | 11.026** | 341.5** |
| | PostLikeGillard | 5.68 (0.49) | 4.15 (0.45) | 5.41 (0.42) | 5.836 | 403.0* |
| 2 | PreImpressionAbbott | 6.76 (0.43) | 7.50 (0.34) | 6.76 (0.44) | 1.761 | 477.0 |
| | PreImpressionGillard | 6.50 (0.36) | 7.29 (0.43) | 6.12 (0.45) | 4.369 | 449.5 |
| | PreTrustAbbott | 6.41 (0.44) | 7.12 (0.30) | 7.32 (0.44) | 2.700 | 499.0 |
| | PreTrustGillard | 6.56 (0.41) | 7.32 (0.36) | 6.35 (0.43) | 3.094 | 465.0 |
| | PreLikeAbbott | 5.56 (0.46) | 5.65 (0.43) | 5.76 (0.49) | 0.170 | 575.0 |
| | PreLikeGillard | 5.79 (0.44) | 5.79 (0.48) | 5.47 (0.45) | 0.306 | 568.0 |
| | PostImpressionAbbott | 3.79 (0.41) | 7.15 (0.49) | 5.24 (0.48) | 20.878*** | 226.5*** |
| | PostImpressionGillard | 7.35 (0.39) | 4.79 (0.47) | 6.00 (0.38) | 15.270*** | 279.5*** |
| | PostTrustAbbott | 3.82 (0.40) | 7.18 (0.47) | 5.53 (0.51) | 21.917*** | 207.5*** |
| | PostTrustGillard | 7.32 (0.41) | 4.97 (0.46) | 6.18 (0.36) | 13.410** | 302.0** |
| | PostLikeAbbott | 3.91 (0.42) | 6.09 (0.53) | 5.56 (0.48) | 9.822** | 353.0** |
| | PostLikeGillard | 6.68 (0.45) | 4.29 (0.48) | 5.79 (0.40) | 12.905** | 311.5** |
| 3 | PreImpressionAbbott | 7.24 (0.39) | 7.18 (0.39) | 7.88 (0.27) | 1.346 | 568.5 |
| | PreImpressionGillard | 6.12 (0.43) | 7.09 (0.39) | 7.26 (0.34) | 4.134 | 452.0 |
| | PreTrustAbbott | 7.18 (0.35) | 6.41 (0.41) | 7.53 (0.32) | 3.837 | 478.0 |
| | PreTrustGillard | 6.65 (0.38) | 6.68 (0.40) | 6.97 (0.33) | 0.259 | 568.5 |
| | PreLikeAbbott | 6.59 (0.42) | 5.94 (0.39) | 6.59 (0.43) | 2.301 | 491.0 |
| | PreLikeGillard | 5.85 (0.46) | 5.85 (0.43) | 6.26 (0.41) | 1.065 | 576.5 |
| | PostImpressionAbbott | 5.29 (0.48) | 6.82 (0.41) | 6.26 (0.48) | 5.512 | 384.0* |
| | PostImpressionGillard | 6.50 (0.45) | 5.47 (0.43) | 6.21 (0.48) | 3.027 | 445.5 |
| | PostTrustAbbott | 5.38 (0.49) | 6.85 (0.45) | 6.47 (0.47) | 5.091 | 399.0* |
| | PostTrustGillard | 6.44 (0.45) | 5.76 (0.47) | 6.29 (0.44) | 1.365 | 493.0 |
| | PostLikeAbbott | 5.29 (0.48) | 6.03 (0.48) | 5.79 (0.53) | 1.129 | 487.0 |
| | PostLikeGillard | 6.12 (0.47) | 5.26 (0.54) | 6.09 (0.51) | 1.475 | 491.5 |

*P < 0.05; **P < 0.01; and ***P < 0.001: Kruskal–Wallis tests were conducted between all three groups, and Mann–Whitney u tests were conducted between groups 1 and 2. Preferences were measured for each candidate separately on 10-point Likert scales.

Table 1.

Postsearch shifts in voting preferences for study 1

| Experiment | Candidate | Rating | Gillard bias, mean deviation from control (SE) | Gillard bias, u | Abbott bias, mean deviation from control (SE) | Abbott bias, u |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Gillard | Impression | 1.44 (0.56)* | 761.0 | −1.52 (0.56)** | 380.5 |
| | | Trust | 1.26 (0.53)** | 779.0 | −1.85 (0.48)** | 330.5 |
| | | Like | 0.26 (0.54) | 615.5 | −1.73 (0.65)** | 387.0 |
| | Abbott | Impression | −2.29 (0.73)** | 373.0 | 1.11 (0.72)** | 766.5 |
| | | Trust | −2.02 (0.63)** | 384.0 | 0.67 (0.76) | 679.0 |
| | | Like | −1.55 (0.71) | 460.5 | 1.17 (0.64)* | 733.0 |
| 2 | Gillard | Impression | 0.97 (0.65) | 704.0 | −2.38 (0.79)*** | 325.0 |
| | | Trust | 0.94 (0.72) | 691.5 | −2.17 (0.74)** | 332.5 |
| | | Like | 0.55 (0.76) | 639.5 | −1.82 (0.66)** | 378.0 |
| | Abbott | Impression | −1.44 (0.81)* | 395.5 | 1.17 (0.75)* | 742.0 |
| | | Trust | −0.79 (0.81) | 453.5 | 1.85 (0.72)** | 774.5 |
| | | Like | −1.44 (0.70)* | 429.0 | 0.64 (0.71) | 690.0 |
| 3 | Gillard | Impression | 1.44 (0.73)* | 717.5 | −0.55 (0.69) | 507.5 |
| | | Trust | 0.47 (0.70) | 620.0 | −0.23 (0.56) | 466.5 |
| | | Like | 0.44 (0.65) | 623.5 | −0.41 (0.70) | 528.5 |
| | Abbott | Impression | −0.32 (0.70) | 534.0 | 1.26 (0.60)* | 750.5 |
| | | Trust | −0.73 (0.65) | 498.5 | 1.50 (0.58)** | 795.0 |
| | | Like | −0.50 (0.61) | 496.0 | 0.88 (0.62) | 681.5 |

*P < 0.05, **P < 0.01, and ***P < 0.001: Mann–Whitney u tests were conducted between the control group and each of the bias groups.

Before Web research, we found no significant differences among the three groups with respect to the proportions of people who said that they would vote for one candidate or the other if the election were held today (Table 2). Following Web research, significant differences emerged among the three groups for this measure (Table 2), and the number of subjects who said they would vote for the favored candidate in the two bias groups combined increased by 48.4% (95% CI, 30.8–66.0%; McNemar’s test, P < 0.01).

Table 2.

Comparison of voting proportions before and after Web research by group for studies 1 and 2

| Study | Experiment | Group | Simulated vote before Web research: Gillard | Simulated vote before Web research: Abbott | χ2 (before) | Simulated vote after Web research: Gillard | Simulated vote after Web research: Abbott | χ2 (after) | VMP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 1 | 1 | 8 | 26 | 5.409 | 22 | 12 | 8.870* | 48.4%** |
| | | 2 | 11 | 23 | | 10 | 24 | | |
| | | 3 | 17 | 17 | | 14 | 20 | | |
| | 2 | 1 | 16 | 18 | 2.197 | 27 | 7 | 14.274*** | 63.3%*** |
| | | 2 | 20 | 14 | | 12 | 22 | | |
| | | 3 | 14 | 20 | | 22 | 12 | | |
| | 3 | 1 | 17 | 17 | 2.199 | 22 | 12 | 3.845 | 36.7%* |
| | | 2 | 21 | 13 | | 15 | 19 | | |
| | | 3 | 15 | 19 | | 15 | 19 | | |
| 2 | 4 | 1 | 317 | 383 | 1.047 | 489 | 211 | 196.280*** | 37.1%*** |
| | | 2 | 316 | 384 | | 228 | 472 | | |
| | | 3 | 333 | 367 | | 377 | 323 | | |

McNemar's test was conducted to assess VMP significance. VMP, percent increase in subjects in the bias groups combined who said that they would vote for the favored candidate.

*P < 0.05; **P < 0.01; and ***P < 0.001: Pearson χ2 tests were conducted among all three groups.
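As a check on how the χ2 columns of Table 2 relate to the vote counts, the following sketch computes a Pearson χ2 statistic for a 3 × 2 table of groups by candidates. It is a minimal illustration, not the authors' analysis code: the function names are hypothetical, no continuity correction is applied, and the closed-form P value used here holds only for 2 degrees of freedom, which is the case for a 3 × 2 table.

```python
import math
from typing import List


def pearson_chi2(table: List[List[int]]) -> float:
    """Pearson chi-square statistic for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


def p_value_df2(stat: float) -> float:
    """Upper-tail P value of a chi-square statistic with exactly 2 degrees
    of freedom, where the survival function reduces to exp(-stat / 2)."""
    return math.exp(-stat / 2)


# Rows are groups 1-3; columns are (Gillard, Abbott) votes from Table 2.
exp1_before = [[8, 26], [11, 23], [17, 17]]
exp1_after = [[22, 12], [10, 24], [14, 20]]
exp3_after = [[22, 12], [15, 19], [15, 19]]

for label, table in [("experiment 1, before", exp1_before),
                     ("experiment 1, after", exp1_after),
                     ("experiment 3, after", exp3_after)]:
    stat = pearson_chi2(table)
    print(f"{label}: chi2 = {stat:.3f}, P = {p_value_df2(stat):.4f}")
```

Under these assumptions the statistics agree with the tabled values to three decimal places (5.409, 8.870, and 3.845), and only the postsearch result for experiment 1 falls below P = 0.05 among the three tables shown.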

We define the latter percentage as vote manipulation power (VMP). Thus, before the Web search, if a total of $x$ subjects in the bias groups said they would vote for the target candidate, and if, following the Web search, a total of $x'$ subjects in the bias groups said they would vote for the target candidate, $\mathrm{VMP} = (x' - x)/x$. The VMP is, we believe, the key measure that an administrator would want to know if he or she were trying to manipulate an election using SEME.
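The VMP values in Table 2 can be recomputed from the vote counts, taking the favored candidate to be Gillard in group 1 and Abbott in group 2. The sketch below does this in Python; the function and variable names are illustrative. The interval helper is an assumption, not a method stated in the text: it applies a normal-approximation (Wald) interval with the presearch count as the sample size, which happens to reproduce the 95% CIs reported for experiments 1 to 3.

```python
import math
from typing import Dict, Tuple


def vmp(x_before: int, x_after: int) -> float:
    """Proportional increase in bias-group subjects voting for the favored
    candidate: (x_after - x_before) / x_before."""
    return (x_after - x_before) / x_before


def wald_interval(p: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation interval p +/- z * sqrt(p * (1 - p) / n).
    Assumed here for illustration; the source does not name its CI method."""
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


# Favored-candidate votes from Table 2 as (group 1 Gillard, group 2 Abbott),
# listed before and after Web research.
TABLE_2: Dict[str, Tuple[Tuple[int, int], Tuple[int, int]]] = {
    "experiment 1": ((8, 23), (22, 24)),
    "experiment 2": ((16, 14), (27, 22)),
    "experiment 3": ((17, 13), (22, 19)),
    "experiment 4 (study 2)": ((317, 384), (489, 472)),
}

results = {}
for name, (before, after) in TABLE_2.items():
    x, x_prime = sum(before), sum(after)
    value = vmp(x, x_prime)
    low, high = wald_interval(value, x)
    results[name] = (value, low, high)
    print(f"{name}: VMP = {100 * value:.1f}% "
          f"(approx. 95% CI, {100 * low:.1f}-{100 * high:.1f}%)")
```

For experiment 1, for example, 31 subjects in the bias groups initially chose the favored candidate and 46 did so after the search, so VMP = (46 − 31)/31 ≈ 48.4%.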

Using a more sensitive measure than forced binary choice, we also asked subjects to estimate the likelihood, on an 11-point scale from −5 to +5, that they would vote for one candidate or the other if the election were held today. Before Web research, we found no significant differences among the three groups with respect to the likelihood of voting for one candidate or the other [Kruskal–Wallis (K–W) test: χ2(2) = 1.384, P = 0.501]. Following Web research, the likelihood of voting for either candidate in the bias groups diverged from their initial scale values by 3.71 points in the predicted directions [Mann–Whitney (M–W) test: u = 300.5, P < 0.01]. Notably, 75% of subjects in the bias groups showed no awareness of the manipulation. We counted subjects as showing awareness of the manipulation if (i) they had clicked on the box indicating that something bothered them about the rankings and (ii) we found specific terms or phrases in their open-ended comments suggesting that they were aware of bias in the rankings (SI Text).

In experiment 2, we sought to determine whether the proportion of subjects who were unaware of the manipulation could be increased with voter preferences still shifting in the predicted directions. We accomplished this by masking our manipulation to some extent. Specifically, the search result that had appeared in the fourth position on the first page of the search results favoring Abbott in experiment 1 was swapped with the corresponding search result favoring Gillard (Fig. 1D). Before Web research, we found no significant differences among the three groups with respect to subjects’ ratings of the candidates (Table S2). Following the Web research, all candidate ratings in the bias groups shifted in the predicted directions compared with candidate ratings in the control group (Table 1).

Before Web research, we found no significant differences among the three groups with respect to voting proportions (Table 2). Following Web research, significant differences emerged among the three groups for this measure (Table 2), and the VMP was 63.3% (95% CI, 46.1–80.6%; McNemar’s test, P < 0.001).

For the more sensitive measure (the 11-point scale), we found no significant differences among the three groups with respect to the likelihood of voting for one candidate or the other before Web research [K-W test: χ2(2) = 0.888, P = 0.642]. Following Web research, the likelihood of voting for either candidate in the bias groups diverged from their initial scale values by 4.44 points in the predicted directions (M-W test: u = 237.5, P < 0.001). In addition, the proportion of people who showed no awareness of the manipulation increased from 75% in experiment 1 to 85% in experiment 2, although the difference between these percentages was not significant (χ2 = 2.264, P = 0.07).

In experiment 3, we sought to further increase the proportion of subjects who were unaware of the manipulation by using a more aggressive mask. Specifically, the search result that had appeared in the third position on the first page of the search results favoring Abbott in experiment 1 was swapped with the corresponding search result favoring Gillard (Fig. 1E). This mask is a more aggressive one because higher ranked results are viewed more and taken more seriously by people conducting searches (1–12).

Before Web research, we found no significant differences among the three groups with respect to subjects’ ratings of candidates (Table S2). Following the Web research, all candidate ratings in the bias groups shifted in the predicted directions compared with candidate ratings in the control group (Table 1).

Before Web research, we found no significant differences among the three groups with respect to voting proportions (Table 2). Following Web research, significant differences did not emerge among the three groups for this measure (Table 2); the VMP, however, was 36.7% (95% CI, 19.4–53.9%; McNemar’s test, P < 0.05).

For the more sensitive measure (the 11-point scale), we found no significant differences among the three groups with respect to the likelihood of voting for one candidate or the other before Web research [K-W test: χ2(2) = 0.624, P = 0.732]. Following Web research, the likelihood of voting for either candidate in the bias groups diverged from their initial scale values by 2.62 points in the predicted directions (M-W test: u = 297.0, P < 0.001). Notably, in experiment 3, no subjects showed awareness of the rankings bias, and the difference between the proportions of subjects who appeared to be unaware of the manipulations in experiments 1 and 3 was significant (χ2 = 19.429, P < 0.001).

Although the findings from these first three experiments were robust, the use of small samples from one US city limited their generalizability and might even have exaggerated the effect size (48).

Study 2: Large-Scale National Online Replication of Experiment 3

To better assess the generalizability of SEME to the US population at large, we used a diverse national sample of 2,100 individuals† from all 50 US states (Table S1), recruited using Amazon’s Mechanical Turk (mturk.com), an online subject pool that is now commonly used by behavioral researchers (49, 50). Subjects (mean age, 33.9 y; SD = 11.9 y; range, 18–81 y) were exposed to the same aggressive masking procedure we used in experiment 3 (Fig. 1E). Each subject was paid USD$1 for his or her participation.

Regarding ethical concerns, as in study 1, our manipulation could have no impact on a past election, and we were not concerned that it could affect the outcome of future elections. Moreover, our study was designed so that it did not favor any one candidate, so there was no overall bias. The study presented no more than minimal risk to subjects and was approved by AIBRT’s IRB. Informed consent was obtained from all subjects.

Subjects’ political inclinations were less balanced than those in study 1, with 19.5% of subjects identifying themselves as conservative, 24.2% as moderate, 50.2% as liberal, and 6.3% as indifferent; 16.1% of subjects identified themselves as Republican, 29.9% as Independent, 43.2% as Democrat, 8.0% as Libertarian, and 2.9% as other. Subjects reported having little or no familiarity with the candidates (mean, 1.9; SD = 1.7). As one might expect in a study using only Internet-based subjects, self-reported search engine use was higher in study 2 than in study 1 [mean searches per day, 15.3; SD = 26.3; t(529.5)‡ = 6.9, P < 0.001], and more subjects reported having previously used a search engine to learn about political candidates (86.0%, χ2 = 204.1, P < 0.001). Subjects in study 2 also spent less time using our mock search engine [mean total time, 309.2 s; SD = 278.7; t(381.9)‡ = −17.6, P < 0.001], but patterns of search result clicks and time spent on Web pages were similar to those we found in study 1 [clicks: r(28) = 0.98, P < 0.001; Web page time: r(28) = 0.98, P < 0.001] and to those routinely found in other studies (1–12).

Before Web research, we found no significant differences among the three groups with respect to subjects’ ratings of the candidates (Table S3). Following the Web research, all candidate ratings in the bias groups shifted in the predicted directions compared with candidate ratings in the control group (Table 3).

Table S3.

Voting preferences by group for study 2

| Voting preferences | Group 1 (Gillard bias), mean (SE) | Group 2 (Abbott bias), mean (SE) | Group 3 (control), mean (SE) | Kruskal–Wallis χ2 | Mann–Whitney u |

| PreImpressionAbbott | 7.40 (0.07) | 7.36 (0.08) | 7.37 (0.07) | 0.458 | 241,861.5 |

| PreImpressionGillard | 7.13 (0.07) | 7.12 (0.08) | 7.13 (0.07) | 0.081 | 243,115.0 |

| PreTrustAbbott | 7.26 (0.07) | 7.22 (0.08) | 7.18 (0.07) | 0.954 | 241,924.5 |

| PreTrustGillard | 6.95 (0.07) | 6.89 (0.08) | 6.92 (0.07) | 0.222 | 241,779.0 |

| PreLikeAbbott | 6.42 (0.08) | 6.39 (0.08) | 6.23 (0.08) | 2.987 | 243,677.5 |

| PreLikeGillard | 6.24 (0.08) | 6.30 (0.08) | 6.11 (0.08) | 3.178 | 239,556.0 |

| PostImpressionAbbott | 4.61 (0.09) | 6.88 (0.09) | 5.53 (0.09) | 289.065*** | 120,660.0*** |

| PostImpressionGillard | 6.87 (0.08) | 4.95 (0.09) | 6.21 (0.09) | 237.034*** | 133,106.5*** |

| PostTrustAbbott | 4.56 (0.10) | 6.94 (0.09) | 5.57 (0.10) | 281.560*** | 121,786.5*** |

| PostTrustGillard | 6.84 (0.09) | 4.95 (0.09) | 6.19 (0.09) | 221.709*** | 136,689.0*** |

| PostLikeAbbott | 4.55 (0.09) | 6.31 (0.09) | 5.21 (0.09) | 177.225*** | 146,957.0*** |

| PostLikeGillard | 6.34 (0.09) | 4.64 (0.09) | 5.71 (0.09) | 176.066*** | 147,372.5*** |

***P < 0.001: Kruskal–Wallis tests were conducted between all three groups, and Mann–Whitney u tests were conducted between groups 1 and 2. Preferences were measured for each candidate separately on 10-point Likert scales.

Table 3.

Postsearch shifts in voting preferences for study 2

| Candidate | Rating | Gillard bias: mean deviation from control (SE) | Gillard bias: u | Abbott bias: mean deviation from control (SE) | Abbott bias: u |

| Gillard | Impression | 0.65 (0.10)*** | 288,299.5 | −1.25 (0.12)*** | 168,203.5 |

| Gillard | Trust | 0.61 (0.10)*** | 283,491.0 | −1.21 (0.11)*** | 167,658.5 |

| Gillard | Like | 0.50 (0.10)*** | 279,967.0 | −1.25 (0.11)*** | 166,544.0 |

| Abbott | Impression | −0.96 (0.13)*** | 189,290.5 | 1.35 (0.12)*** | 326,067.0 |

| Abbott | Trust | −1.09 (0.14)*** | 183,993.0 | 1.31 (0.12)*** | 318,740.5 |

| Abbott | Like | −0.85 (0.13)*** | 195,088.5 | 0.94 (0.11)*** | 302,318.0 |

***P < 0.001: Mann–Whitney u tests were conducted between the control group and each of the bias groups.

Before Web research, we found no significant differences among the three groups with respect to voting proportions (Table 2). Following Web research, significant differences emerged among the three groups for this measure (Table 2), and the VMP was 37.1% (95% CI, 33.5–40.7%; McNemar’s test, P < 0.001). Using poststratification and weights obtained from the 2010 US Census (46) and a 2011 study from Gallup (51), which were scaled to size for age, sex, race, and education, the VMP was 36.7% (95% CI, 33.2–40.3%; McNemar’s test, P < 0.001). When weighted using the same demographics via classical regression poststratification (52) (Table S4), the VMP was 33.5% (95% CI, 30.1–37.0%, McNemar’s test, P < 0.001).

Table S4.

Treatment effect estimates for study 2 voting preferences

| Predictor variable | Presearch vote: coefficient | Presearch vote: SE | Postsearch vote: coefficient | Postsearch vote: SE |

| Intercept | −0.073 | 0.540 | 0.062 | 0.543 |

| Sex | ||||

| Female | 0 | Referent | 0 | Referent |

| Male | 0.039 | 0.110 | −0.135 | 0.119 |

| Other | −0.430 | 0.922 | −0.568 | 0.924 |

| Race/ethnicity | ||||

| White | 0 | Referent | 0 | Referent |

| Black | 0.115 | 0.224 | 0.090 | 0.245 |

| Hispanic | −0.435 | 0.235 | −0.280 | 0.237 |

| Asian | 0.366 | 0.238 | 0.668 | 0.291* |

| Other | 0.133 | 0.274 | −0.072 | 0.291 |

| Age group | ||||

| 18–24 | 0 | Referent | 0 | Referent |

| 25–44 | −0.024 | 0.144 | −0.083 | 0.157 |

| 45–64 | 0.241 | 0.184 | 0.029 | 0.200 |

| 65+ | 0.258 | 0.411 | 0.685 | 0.519 |

| Education level | ||||

| Less than ninth grade | 0 | Referent | 0 | Referent |

| Ninth to 12th grade | 0.024 | 0.548 | 0.732 | 0.550 |

| High school graduate | 0.074 | 0.528 | 0.927 | 0.528 |

| Bachelors | 0.094 | 0.529 | 0.842 | 0.530 |

| Advanced | −0.050 | 0.543 | 0.549 | 0.544 |

The presearch and postsearch columns report the estimate and variance for both treatment groups using classical regression poststratification. Data for sex, race/ethnicity, age group, and education level came from the 2010 US Census. Data on the number of people who identify their sex as “other” came from a 2011 Gallup study.

*P < 0.05.

For the more sensitive measure (the 11-point scale), we found no significant differences among the three groups with respect to the likelihood of voting for one candidate or the other before Web research [K-W test: χ2(2) = 2.790, P = 0.248]. Following Web research, the likelihood of voting for either candidate in the bias groups diverged from their initial scale values by 3.03 points in the predicted directions (M-W test: u = 1.29 × 10⁵, P < 0.001). As one might expect of a more Internet-fluent sample, the proportion of subjects showing no awareness of the manipulation dropped to 91.4%.

The number of subjects in study 1 was too small to look at demographic differences. In study 2, we found substantial differences in how vulnerable different demographic groups were to SEME. Consistent with previous findings on the moderators of order effects (30–32), for example, we found that subjects reporting a low familiarity with the candidates (familiarity less than 5 on a scale from 1 to 10) were more vulnerable to SEME (VMP = 38.7%; 95% CI, 34.9–42.4%; McNemar’s test, P < 0.001) than were subjects who reported high familiarity with the candidates (VMP = 19.3%; 95% CI, 9.1–29.5%; McNemar’s test, P < 0.05), and this difference was significant (χ2 = 8.417, P < 0.01).

We found substantial differences in vulnerability to SEME among a number of different demographic groups (SI Text). Although the groups we examined were overlapping and somewhat arbitrary, if one were manipulating an election, information about such differences would have enormous practical value. For example, we found that self-labeled Republicans were more vulnerable to SEME (VMP = 54.4%; 95% CI, 45.2–63.5%; McNemar’s test, P < 0.001) than were self-labeled Democrats (VMP = 37.7%; 95% CI, 32.3–43.1%; McNemar’s test, P < 0.001) and that self-labeled divorcees were more vulnerable (VMP = 46.7%; 95% CI, 32.1–61.2%; McNemar’s test, P < 0.001) than were self-labeled married subjects (VMP = 32.4%; 95% CI, 26.8–38.1%; McNemar’s test, P < 0.001). Among the most vulnerable groups we identified were Moderate Republicans (VMP = 80.0%; 95% CI, 62.5–97.5%; McNemar’s test, P < 0.001), whereas among the least vulnerable groups were people who reported a household income of $40,000 to $49,999 (VMP = 22.5%; 95% CI, 13.8–31.1%; McNemar’s test, P < 0.001).

Notably, awareness of the manipulation not only did not nullify the effect, it seemed to enhance it, perhaps because people trust search order so much that awareness of the bias serves to confirm the superiority of the favored candidate. The VMP for people who showed no awareness of the biased search rankings (n = 1,280) was 36.3% (95% CI, 32.6–40.1%; McNemar's test, P < 0.001), whereas the VMP for people who showed awareness of the bias (n = 120) was 45.0% (95% CI, 32.4–57.6%; McNemar’s test, P < 0.001).

Having now replicated the effect with a large and diverse sample of US subjects, we were concerned about the weaknesses associated with testing subjects on a somewhat abstract election (the election in Australia) that had taken place years before and in which subjects were unfamiliar with the candidates. In real elections, people are familiar with the candidates and are influenced, sometimes on a daily basis, by aggressive campaigning. Presumably, either of these two factors, familiarity and outside influence, could minimize or negate the influence of biased search rankings on voter preferences. We therefore asked if SEME could be replicated with a large and diverse sample of real voters in the midst of a real election campaign.

Study 3: SEME Evaluated During the 2014 Lok Sabha Elections in India

In our fifth experiment, we sought to manipulate the voting preferences of undecided eligible voters in India during the 2014 national Lok Sabha elections there. This election was the largest democratic election in history, with more than 800 million eligible voters and more than 430 million votes ultimately cast. We accomplished this by randomly assigning undecided English-speaking voters throughout India who had not yet voted (recruited through print advertisements, online advertisements, and online subject pools) to one of three groups in which search rankings favored either Rahul Gandhi, Arvind Kejriwal, or Narendra Modi, the three major candidates in the election.§

Subjects were incentivized to participate in the study either with payments between USD$1 and USD$4 or with the promise that a donation of approximately USD$1.50 would be made to a prominent Indian charity that provides free lunches for Indian children. (At the close of the study, a donation of USD$1,457 was made to the Akshaya Patra Foundation.)

Regarding ethical concerns, because we recruited only a small number of subjects relative to the size of the Indian voting population, we were not concerned that our manipulation could affect the election’s outcome. Moreover, our study was designed so that it did not favor any one candidate, so there was no overall bias. The study presented no more than minimal risk to subjects and was approved by AIBRT’s IRB. Informed consent was obtained from all subjects.

The subjects (n = 2,150) were demographically diverse (Table S5), residing in 27 of 35 Indian states and union territories, and political leanings varied as follows: 13.3% identified themselves as politically right (conservative), 43.8% as center (moderate), 26.0% as left (liberal), and 16.9% as indifferent. In contrast to studies 1 and 2, subjects reported high familiarity with the political candidates (mean familiarity Gandhi, 7.9; SD = 2.5; mean familiarity Kejriwal, 7.7; SD = 2.5; mean familiarity Modi, 8.5; SD = 2.1). The full dataset for all five experiments is accessible at Dataset S1.

Table S5.

Demographics for study 3

| Category | Value | Study 3: n | Study 3: % | Indian Census 2011 (literates): n | Indian Census 2011 (literates): % |

| Age | 18–24 | 602 | 28.0% | 160,241,457 | 21.0% |

| Age | 25–44 | 1,410 | 65.6% | 347,587,712 | 45.6% |

| Age | 45–64 | 124 | 5.8% | 188,197,343 | 24.7% |

| Age | 65+ | 14 | 0.7% | 66,185,333 | 8.7% |

| Religion | Buddhism | 14 | 0.7% | — | — |

| Religion | Christianity | 262 | 12.2% | — | — |

| Religion | Hinduism | 1,512 | 70.3% | — | — |

| Religion | Islam | 314 | 14.6% | — | — |

| Religion | Jainism | 21 | 1.0% | — | — |

| Religion | Other | 15 | 0.7% | — | — |

| Religion | Sikhism | 12 | 0.6% | — | — |

| Sex | Male | 1,518 | 70.6% | 388,428,872 | 51.0% |

| Sex | Female | 632 | 29.4% | 373,782,973 | 49.0% |

| Education | None | 0 | 0.0% | — | — |

| Education | Primary school | 4 | 0.2% | — | — |

| Education | Higher secondary | 71 | 3.3% | — | — |

| Education | Pre-university | 136 | 6.3% | — | — |

| Education | Bachelors | 1,225 | 57.0% | — | — |

| Education | Masters | 699 | 32.5% | — | — |

| Education | Doctorate | 15 | 0.7% | — | — |

| Used | Yes | 1,635 | 76.0% | — | — |

| Used | No | 515 | 24.0% | — | — |

| Income | Under Rs 10,000 | 121 | 5.6% | — | — |

| Income | Rs 10,000 to Rs 29,999 | 206 | 9.6% | — | — |

| Income | Rs 30,000 to Rs 49,999 | 131 | 6.1% | — | — |

| Income | Rs 50,000 to Rs 69,999 | 106 | 4.9% | — | — |

| Income | Rs 70,000 to Rs 89,999 | 146 | 6.8% | — | — |

| Income | Rs 90,000 to Rs 109,999 | 181 | 8.4% | — | — |

| Income | Rs 110,000 to Rs 129,999 | 172 | 8.0% | — | — |

| Income | Rs 130,000 to Rs 149,999 | 132 | 6.1% | — | — |

| Income | Rs 150,000 to Rs 169,999 | 124 | 5.8% | — | — |

| Income | Rs 170,000 to Rs 189,999 | 118 | 5.5% | — | — |

| Income | Rs 190,000 and over | 486 | 22.6% | — | — |

| Income | I prefer not to say | 227 | 10.6% | — | — |

| Marital status | Married | 1,144 | 53.2% | — | — |

| Marital status | Widowed | 5 | 0.2% | — | — |

| Marital status | Divorced | 4 | 0.2% | — | — |

| Marital status | Separated | 78 | 3.6% | — | — |

| Marital status | Never married | 919 | 42.7% | — | — |

| Location | State | 1,144 | 53.2% | 749,758,470 | 98.4% |

| Location | Union Territory | 5 | 0.2% | 12,453,375 | 1.6% |

Subjects reported more frequent search engine use compared with subjects in studies 1 or 2 (mean searches per day, 15.7; SD = 30.1), and 71.7% of subjects reported that they had previously used a search engine to learn about political candidates. Subjects also spent less time using our mock search engine (mean total time, 277.4 s; SD = 368.3) than did subjects in studies 1 or 2. The patterns of search result clicks and time spent on Web pages in our mock search engine were similar to the patterns we found in study 1 [clicks, r(28) = 0.96; P < 0.001; Web page time, r(28) = 0.91; P < 0.001] and study 2 [clicks, r(28) = 0.96; P < 0.001; Web page time, r(28) = 0.92; P < 0.001].

Before Web research, we found one significant difference among the three groups for a rating pertaining to Kejriwal, but none for Gandhi or Modi (Table S6). Following the Web research, most of the subjects’ ratings of the candidates shifted in the predicted directions (Table 4).

Table S6.

Voting Preferences by Group for Study 3

| Voting preferences | Group 1 (Gandhi bias), mean (SE) | Group 2 (Kejriwal bias), mean (SE) | Group 3 (Modi bias), mean (SE) | Kruskal–Wallis χ2 |

| PreImpressionGandhi | 5.94 (0.10) | 5.73 (0.10) | 5.65 (0.10) | 4.782 |

| PreImpressionKejriwal | 6.80 (0.09) | 7.07 (0.09) | 7.09 (0.08) | 6.230* |

| PreImpressionModi | 7.49 (0.10) | 7.46 (0.10) | 7.48 (0.09) | 0.188 |

| PreLikableGandhi | 5.71 (0.10) | 5.64 (0.10) | 5.61 (0.10) | 0.722 |

| PreLikableKejriwal | 6.68 (0.09) | 6.78 (0.09) | 6.87 (0.09) | 2.030 |

| PreLikableModi | 7.40 (0.10) | 7.29 (0.10) | 7.29 (0.10) | 1.483 |

| PreTrustGandhi | 5.57 (0.11) | 5.52 (0.11) | 5.42 (0.10) | 0.955 |

| PreTrustKejriwal | 6.54 (0.10) | 6.74 (0.10) | 6.85 (0.09) | 4.546 |

| PreTrustModi | 7.22 (0.11) | 7.31 (0.11) | 7.27 (0.10) | 0.159 |

| PreLikelyToVoteGandhi | 0.10 (0.12) | 0.08 (0.12) | 0.08 (0.12) | 1.587 |

| PreLikelyToVoteKejriwal | 1.19 (0.11) | 1.38 (0.11) | 1.55 (0.10) | 5.178 |

| PreLikelyToVoteModi | 2.15 (0.12) | 2.12 (0.12) | 2.06 (0.12) | 0.202 |

| PostImpressionGandhi | 5.78 (0.10) | 5.52 (0.10) | 5.35 (0.10) | 9.552** |

| PostImpressionKejriwal | 6.50 (0.09) | 6.96 (0.09) | 6.70 (0.08) | 14.288** |

| PostImpressionModi | 7.27 (0.10) | 7.26 (0.10) | 7.60 (0.09) | 7.860* |

| PostLikableGandhi | 5.62 (0.10) | 5.46 (0.10) | 5.26 (0.10) | 6.322* |

| PostLikableKejriwal | 6.37 (0.09) | 6.84 (0.09) | 6.64 (0.08) | 13.456** |

| PostLikableModi | 7.24 (0.11) | 7.20 (0.11) | 7.47 (0.10) | 3.874 |

| PostTrustGandhi | 5.71 (0.11) | 5.48 (0.10) | 5.22 (0.10) | 11.386* |

| PostTrustKejriwal | 6.38 (0.10) | 6.89 (0.10) | 6.68 (0.08) | 15.840*** |

| PostTrustModi | 7.18 (0.11) | 7.20 (0.11) | 7.49 (0.10) | 4.758 |

*P < 0.05; **P < 0.01; and ***P < 0.001: Kruskal–Wallis tests were conducted between all three groups. Preferences were measured for each candidate separately on 10-point Likert scales.

Table 4.

Postsearch shifts in voting preferences for study 3

| Candidate | Rating | χ2 | Gandhi bias, mean (SE) | Kejriwal bias, mean (SE) | Modi bias, mean (SE) |

| Gandhi | Impression | 3.61 | −0.16 (0.06) | −0.21 (0.06) | −0.30 (0.06) |

| Gandhi | Trust | 21.19*** | 0.14 (0.06) | −0.04 (0.07) | −0.20 (0.06) |

| Gandhi | Like | 12.99** | −0.09 (0.07) | −0.17 (0.06) | −0.34 (0.06) |

| Gandhi | Voting likelihood | 10.79** | 0.16 (0.07) | −0.04 (0.07) | −0.18 (0.07) |

| Kejriwal | Impression | 17.75*** | −0.30 (0.06) | −0.11 (0.06) | −0.39 (0.05) |

| Kejriwal | Trust | 26.69*** | −0.17 (0.07) | 0.15 (0.06) | −0.16 (0.06) |

| Kejriwal | Like | 24.74*** | −0.31 (0.06) | 0.05 (0.06) | −0.23 (0.06) |

| Kejriwal | Voting likelihood | 13.22** | −0.03 (0.06) | 0.17 (0.07) | −0.12 (0.06) |

| Modi | Impression | 24.98*** | −0.22 (0.06) | −0.21 (0.06) | 0.12 (0.05) |

| Modi | Trust | 18.78*** | −0.04 (0.06) | −0.10 (0.06) | 0.23 (0.06) |

| Modi | Like | 16.89*** | −0.16 (0.05) | −0.09 (0.06) | 0.19 (0.06) |

| Modi | Voting likelihood | 31.07*** | −0.07 (0.07) | −0.10 (0.06) | 0.33 (0.06) |

**P < 0.01 and ***P < 0.001: for each rating, a Kruskal–Wallis χ2 test was used to assess significance of group differences.

Before Web research, we found no significant differences among the three groups with respect to voting proportions (Table 5). Following Web research, significant differences emerged among the three groups for this measure (Table 5), and the VMP was 10.6% (95% CI, 8.3–12.8%; McNemar’s test, P < 0.001). Using poststratification and weights obtained from the 2011 India Census data on literate Indians (53)—scaled to size for age, sex, and location (grouped into state or union territory)—the VMP was 9.4% (95% CI, 8.2–10.6%; McNemar’s test, P < 0.001). When weighted using the same demographics via classical regression poststratification (Table S7), the VMP was 9.5% (95% CI, 8.3–10.7%; McNemar’s test, P < 0.001).
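The poststratification step reweights the sample so that its demographic mix matches the census. As a simplified illustration, the Python sketch below computes cell weights for age group alone, using the counts reported in Table S5. The weights used in the study were scaled for age, sex, and location together, so this single-margin version is an assumption made for brevity, and the helper names are hypothetical.

```python
# Age-group counts copied from Table S5: study 3 sample vs. 2011 census (literates).
SAMPLE_N = {"18-24": 602, "25-44": 1410, "45-64": 124, "65+": 14}
CENSUS_N = {
    "18-24": 160_241_457,
    "25-44": 347_587_712,
    "45-64": 188_197_343,
    "65+": 66_185_333,
}


def poststratification_weights(sample, population):
    """Return, for each cell, population share divided by sample share."""
    sample_total = sum(sample.values())
    population_total = sum(population.values())
    return {
        cell: (population[cell] / population_total) / (sample[cell] / sample_total)
        for cell in sample
    }


def weighted_mean(cell_values, sample, weights):
    """Mean of per-cell values, with every subject counted at its cell weight."""
    numerator = sum(cell_values[c] * sample[c] * weights[c] for c in sample)
    denominator = sum(sample[c] * weights[c] for c in sample)
    return numerator / denominator


weights = poststratification_weights(SAMPLE_N, CENSUS_N)
for cell, weight in weights.items():
    print(f"{cell}: weight = {weight:.3f}")
```

Weights above 1 mark age groups that are underrepresented in the sample relative to the census (here, subjects aged 45 and over), and weights below 1 mark overrepresented groups.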

Table 5.

Comparison of voting proportions before and after Web research for study 3

| Group | Before Web research: Gandhi | Before Web research: Kejriwal | Before Web research: Modi | χ2 (before) | After Web research: Gandhi | After Web research: Kejriwal | After Web research: Modi | χ2 (after) | VMP |

| 1 | 115 | 164 | 430 | 3.070 | 144 | 152 | 413 | 16.935** | 10.6%*** |

| 2 | 112 | 183 | 393 | | 113 | 199 | 376 | | |

| 3 | 127 | 196 | 430 | | 117 | 174 | 462 | | |

McNemar's test was conducted to assess VMP significance. VMP, percent increase in subjects in the bias groups combined who said that they would vote for the favored candidate.

**P < 0.01; and ***P < 0.001: Pearson χ2 tests were conducted among all three groups.
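As a check on how the VMP in Table 5 is defined, the following minimal Python sketch recomputes it from the tabulated vote counts, together with the Pearson χ2 statistics. It assumes, consistent with the table note, that the favored candidate in each group is the one toward whom that group's rankings were biased (group 1, Gandhi; group 2, Kejriwal; group 3, Modi). The function names are illustrative and are not taken from the study's analysis code.

```python
# Simulated votes from Table 5. Rows are groups 1-3 (Gandhi, Kejriwal, Modi bias);
# columns are votes for Gandhi, Kejriwal, Modi.
PRE = [[115, 164, 430], [112, 183, 393], [127, 196, 430]]
POST = [[144, 152, 413], [113, 199, 376], [117, 174, 462]]


def vmp(pre, post):
    """Percent increase in votes for the favored candidate, bias groups combined."""
    # Group k saw rankings favoring candidate k, so favored counts lie on the diagonal.
    before = sum(pre[k][k] for k in range(len(pre)))
    after = sum(post[k][k] for k in range(len(post)))
    return 100.0 * (after - before) / before


def pearson_chi2(table):
    """Pearson chi-square statistic for an r x c contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            expected = row_totals[r] * col_totals[c] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic


print(f"VMP = {vmp(PRE, POST):.1f}%")
print(f"chi2 before = {pearson_chi2(PRE):.2f}")
print(f"chi2 after = {pearson_chi2(POST):.2f}")
```

Under these assumptions the sketch agrees with the tabulated values to within rounding (VMP of about 10.6%, χ2 of about 3.07 before and 16.94 after Web research). McNemar’s test is not reproduced here because it requires each subject's paired before and after votes, which the table does not report.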

Table S7.

Treatment effect estimates for study 3 voting preferences

| Predictor variable | Presearch vote: coefficient | Presearch vote: SE | Postsearch vote: coefficient | Postsearch vote: SE |

| Intercept | −0.716 | 0.090*** | −0.552 | 0.088*** |

| Sex | ||||

| Male | 0 | Referent | 0 | Referent |

| Female | 0.168 | 0.100 | 0.030 | 0.099 |

| Age group, y | ||||

| 18–24 | 0 | Referent | 0 | Referent |

| 25–44 | 0.031 | 0.103 | 0.067 | 0.101 |

| 45–64 | −0.222 | 0.217 | −0.057 | 0.208 |

| 65+ | −0.213 | 0.598 | −0.366 | 0.598 |

| Location | ||||

| State | 0 | Referent | 0 | Referent |

| Union Territory | −0.401 | 0.294 | −0.321 | 0.279 |

The presearch and postsearch columns report the estimate and variance for both of the treatment groups using classical regression poststratification. Data for sex, age group, and location came from the 2011 India Census.

***P < 0.001.

To obtain a more sensitive measure of voting preference in study 3, we asked subjects to estimate the likelihood, on three separate 11-point scales from −5 to +5, that they would vote for each of the candidates if the election were held today. Before Web research, we found no significant differences among the three groups with respect to the likelihood of voting for any of the candidates (Table S6). Following Web research, significant differences emerged among the three groups with respect to the likelihood of voting for Rahul Gandhi and Arvind Kejriwal but not Narendra Modi (Table S6), and all likelihoods shifted in the predicted directions (Table 4). The proportion of subjects showing no awareness of the manipulation in experiment 5 was 99.5%.

In study 3, as in study 2, we found substantial differences in how vulnerable different demographic groups were to SEME (SI Text). Consistent with the findings of study 2 and previous findings on the moderators of order effects (30–32), for example, we found that subjects reporting a low familiarity with the candidates (familiarity less than 5 on a scale from 1 to 10) were more vulnerable to SEME (VMP = 13.7%; 95% CI, 4.3–23.2%; McNemar’s test, P = 0.17) than were subjects who reported high familiarity with the candidates (VMP = 10.3%; 95% CI, 8.0–12.6%; McNemar’s test, P < 0.001), although this difference was not significant (χ2 = 0.575, P = 0.45).

As in study 2, although the demographic groups we examined were overlapping and somewhat arbitrary, if one were manipulating an election, information about such differences would have enormous practical value. For example, we found that subjects between ages 18 and 24 were less vulnerable to SEME (VMP = 8.9%; 95% CI, 5.0–12.8%; McNemar’s test, P < 0.05) than were subjects between ages 45 and 64 (VMP = 18.9%; 95% CI, 6.3–31.5%; McNemar’s test, P = 0.10) and that self-labeled Christians were more vulnerable (VMP = 30.7%; 95% CI, 20.2–41.1%; McNemar’s test, P < 0.001) than were self-labeled Hindus (VMP = 8.7%; 95% CI, 6.3–11.1%; McNemar’s test, P < 0.001). Among the most vulnerable groups we identified were unemployed males from Kerala (VMP = 72.7%; 95% CI, 46.4–99.0%; McNemar’s test, P < 0.05), whereas among the least vulnerable groups were female conservatives (VMP = −11.8%; 95% CI, −29.0 to 5.5%; McNemar’s test, P = 0.62).

A negative VMP might suggest oppositional attitudes or an underdog effect for that group (54). No negative VMPs were found in the demographic groups examined in study 2, but it is understandable that they would be found in an election in which people are highly familiar with the candidates (study 3). As a practical matter, where a search engine company has the ability to send people customized rankings and where biased search rankings are likely to produce an oppositional response with certain voters, such rankings would probably not be sent to them. Eliminating the 2.6% of our sample (n = 56) with oppositional responses, the overall VMP in this experiment increases from 10.6% to 19.8% (95% CI, 16.8–22.8%; n = 2,094; McNemar’s test: P < 0.001).

As we found in study 2, awareness of the manipulation appeared to enhance the effect rather than nullify it. The VMP for people who showed no awareness of the biased search rankings (n = 2,140) was 10.5% (95% CI, 8.3–12.7%; McNemar’s test, P < 0.001), whereas the VMP for people who showed awareness of the bias (n = 10) was 33.3%.

The rankings and Web pages we used in study 3 were selected by the investigators based on our limited understanding of Indian politics and perspectives. To optimize the rankings, midway through the election process we hired a native consultant who was familiar with the issues and perspectives pertinent to undecided voters in the 2014 Lok Sabha elections. Based on the recommendations of the consultant, we made slight changes to our rankings on 30 April 2014. In the preoptimized rankings group (n = 1,259), the VMP was 9.5% (95% CI, 6.8–12.2%; McNemar’s test, P < 0.001); in the postoptimized rankings group (n = 891), the VMP increased to 12.3% (95% CI, 8.5–16.1%; McNemar’s test, P < 0.001). Eliminating the 3.1% of the subjects in the postoptimization sample with oppositional responses (n = 28), the VMP increased to 24.5% (95% CI, 19.3–29.8%; n = 863).

Discussion

Elections are often won by small vote margins. Fifty percent of US presidential elections were won by vote margins under 7.6%, and 25% of US senatorial elections in 2012 were won by vote margins under 6.0% (55, 56). In close elections, undecided voters can make all of the difference, which is why enormous resources are often focused on those voters in the days before the election (57, 58). Because search rankings biased toward one candidate can apparently sway the voting preferences of undecided voters without their awareness and, at least under some circumstances, without any possible competition from opposing candidates, SEME appears to be an especially powerful tool for manipulating elections. The Australian election used in studies 1 and 2 was won by a margin of only 0.24% and perhaps could easily have been turned by such a manipulation. The Fox News Effect, which is small compared with SEME, is believed to have shifted between 0.4% and 0.7% of votes to conservative candidates: more than enough, according to the researchers, to have had a “decisive” effect on a number of close elections in 2000 (40).

Political scientists have identified two of the most common methods political candidates use to try to win elections. The core voter model describes a strategy in which resources are devoted to mobilizing supporters to vote (59). As noted earlier, Zittrain recently pointed out that a company such as Facebook could mobilize core voters to vote on election day by sending “get-out-and-vote” messages en masse to supporters of only one candidate. Such a manipulation could be used undetectably to flip an election in what might be considered a sort of digital gerrymandering (44, 45). In contrast, the swing voter model describes a strategy in which candidates target their resources toward persuasion—attempting to change the voting preferences of undecided voters (60). SEME is an ideal method for influencing such voters.

Although relatively few voters have actively sought political information about candidates in the past (61), the ease of obtaining information over the Internet appears to be changing that: 73% of online adults used the Internet for campaign-related purposes during the 2010 US midterm elections (61), and 55% of all registered voters went online to watch videos related to the 2012 US election campaign (62). Moreover, 84% of registered voters in the United States were Internet users in 2012 (62). In our nationwide study in the United States (study 2), 86.0% of our subjects reported having used search engines to get information about candidates. Meanwhile, the number of people worldwide with Internet access is increasing rapidly, predicted to increase to nearly 4 billion by 2018 (63). By 2018, Internet access in India is expected to rise from the 213 million users who had access in 2013 to 526 million (63). Worldwide, it is reasonable to conjecture that both proportions will increase substantially in future years; that is, more people will have Internet access, and more people will obtain information about candidates from the Internet. In the context of the experiments we have presented, this suggests that whatever the effect sizes we have observed now, they will likely be larger in the future.

The power of SEME to affect elections in a two-person race can be roughly estimated by making a small number of fairly conservative assumptions. Where $i$ is the proportion of voters with Internet access, $u$ is the proportion of those voters who are undecided, and VMP, as noted above, is the proportion of those undecided voters who can be swayed by SEME, $W$ (the maximum win margin controllable by SEME) can be estimated by the following formula: $W = i \times u \times \mathrm{VMP}$.

In a three-person race, the maximum controllable win margin $W$ will vary between 75% and 100% of its value in a two-person race, depending on how the votes are distributed between the two losing candidates. (Derivations of formulas in the two-candidate and three-candidate cases are available in SI Text.) In both cases, the size of the population is irrelevant.

Knowing the values for $i$ and $u$ for a given election, along with the projected win margin, the minimum VMP needed to put one candidate ahead can be calculated (Table S8). In theory, continuous online polling would allow search rankings to be optimized continuously to increase the value of VMP until, in some instances, it could conceivably guarantee an election’s outcome, much as “conversion” and “click-through” rates are now optimized continuously in Internet marketing (64).

Table S8.

Minimum VMP levels needed to impact two-person races with various projected win margins and proportions of undecided Internet voters

| Proportion of undecided Internet voters in the population (i*u), by projected win margin | 0.01 | 0.02 | 0.03 | 0.04 | 0.05 | 0.06 | 0.07 | 0.08 | 0.09 | 0.10 |

| 0.01 | 1.000 | — | — | — | — | — | — | — | — | — |

| 0.02 | 0.500 | 1.000 | — | — | — | — | — | — | — | — |

| 0.03 | 0.333 | 0.667 | 1.000 | — | — | — | — | — | — | — |

| 0.04 | 0.250 | 0.500 | 0.750 | 1.000 | — | — | — | — | — | — |

| 0.05 | 0.200 | 0.400 | 0.600 | 0.800 | 1.000 | — | — | — | — | — |

| 0.06 | 0.167 | 0.333 | 0.500 | 0.667 | 0.833 | 1.000 | — | — | — | — |

| 0.07 | 0.143 | 0.286 | 0.429 | 0.571 | 0.714 | 0.857 | 1.000 | — | — | — |

| 0.08 | 0.125 | 0.250 | 0.375 | 0.500 | 0.625 | 0.750 | 0.875 | 1.000 | — | — |

| 0.09 | 0.111 | 0.222 | 0.333 | 0.444 | 0.556 | 0.667 | 0.778 | 0.889 | 1.000 | — |

| 0.10 | 0.100 | 0.200 | 0.300 | 0.400 | 0.500 | 0.600 | 0.700 | 0.800 | 0.900 | 1.000 |

| 0.11 | 0.091 | 0.182 | 0.273 | 0.364 | 0.455 | 0.545 | 0.636 | 0.727 | 0.818 | 0.909 |

| 0.12 | 0.083 | 0.167 | 0.250 | 0.333 | 0.417 | 0.500 | 0.583 | 0.667 | 0.750 | 0.833 |

| 0.13 | 0.077 | 0.154 | 0.231 | 0.308 | 0.385 | 0.462 | 0.538 | 0.615 | 0.692 | 0.769 |

| 0.14 | 0.071 | 0.143 | 0.214 | 0.286 | 0.357 | 0.429 | 0.500 | 0.571 | 0.643 | 0.714 |

| 0.15 | 0.067 | 0.133 | 0.200 | 0.267 | 0.333 | 0.400 | 0.467 | 0.533 | 0.600 | 0.667 |

| 0.16 | 0.063 | 0.125 | 0.188 | 0.250 | 0.313 | 0.375 | 0.438 | 0.500 | 0.563 | 0.625 |

| 0.17 | 0.059 | 0.118 | 0.176 | 0.235 | 0.294 | 0.353 | 0.412 | 0.471 | 0.529 | 0.588 |

| 0.18 | 0.056 | 0.111 | 0.167 | 0.222 | 0.278 | 0.333 | 0.389 | 0.444 | 0.500 | 0.556 |

| 0.19 | 0.053 | 0.105 | 0.158 | 0.211 | 0.263 | 0.316 | 0.368 | 0.421 | 0.474 | 0.526 |

| 0.20 | 0.050 | 0.100 | 0.150 | 0.200 | 0.250 | 0.300 | 0.350 | 0.400 | 0.450 | 0.500 |

For example, if (i) 80% of eligible voters had Internet access, (ii) 10% of those individuals were undecided at some point, and (iii) SEME could be used to increase the number of people in the undecided group who were inclined to vote for the target candidate by 25%, that would be enough to control the outcome of an election in which the expected win margin was as high as 2%. If SEME were applied strategically and repeatedly over a period of weeks or months to increase the VMP, and if, in some locales and situations, $i$ and $u$ were larger than in the example given, the controllable win margin would be larger. That possibility notwithstanding, because nearly 25% of national elections worldwide are typically won by margins under 3%,¶ SEME could conceivably impact a substantial number of elections today even with fairly low values of $i$, $u$, and VMP.
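The win-margin arithmetic can be sketched in a few lines of Python. The sketch assumes that the two-person estimate is the product of the three proportions (Internet access, undecided share, and VMP), which is consistent with the worked example above and with the entries in Table S8. The function names are illustrative.

```python
def max_win_margin(internet_share, undecided_share, vmp):
    """Largest win margin controllable in a two-person race (assumed product form)."""
    return internet_share * undecided_share * vmp


def min_vmp(win_margin, undecided_internet_share):
    """Smallest VMP that covers a projected win margin, or None if none can.

    undecided_internet_share is the product i*u used for the rows of Table S8.
    """
    required = win_margin / undecided_internet_share
    # A VMP above 1 would mean swaying more than all undecided Internet voters.
    return required if required <= 1.0 else None


# Worked example from the text: 80% Internet access, 10% undecided, VMP of 25%.
print(f"controllable margin = {max_win_margin(0.80, 0.10, 0.25):.3f}")

# One Table S8 cell: i*u = 0.03 with a projected win margin of 0.01.
print(f"minimum VMP = {min_vmp(0.01, 0.03):.3f}")
```

The second function returns None where Table S8 shows a dash, that is, where the required VMP would exceed 1.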

Given our procedures, however, we cannot rule out the possibility that SEME produces only a transient effect, which would limit its value in election manipulation. Laboratory manipulations of preferences and attitudes often impact subjects for only a short time, sometimes just hours (65). That said, if search rankings were being manipulated with the intent of altering the outcome of a real election, people would presumably be exposed to biased rankings repeatedly over a period of weeks or months. We produced substantial changes not only in voting preferences but in multiple ratings of attitudes toward candidates given just one exposure to search rankings linking to Web pages favoring one candidate, with average search times in the 277- to 635-s range. Given hundreds or thousands of exposures of this sort, we speculate not only that the resulting attitudes and preferences would be stable, but that they would become stronger over time, much as brand preferences become stronger when advertisements are presented repeatedly (66).

Our results also suggest that it is a relatively simple matter to mask the bias in search rankings so that it is undetectable to virtually every user. In experiment 3, using only a simple mask, none of our subjects appeared to be aware that they were seeing biased rankings, and in our India study, only 0.5% of our subjects appeared to notice the bias. When people are subjected to forms of influence they can identify—in campaigns, that means speeches, billboards, television commercials, and so on—they can defend themselves fairly easily if they have opposing views. Invisible sources of influence can be harder to defend against (67–69), and for people who are impressionable, invisible sources of influence not only persuade, they also leave people feeling that they made up their own minds—that no external force was applied (70, 71). Influence is sometimes undetectable because key stimuli act subliminally (72–74), but search results and Web pages are easy to perceive; it is the pattern of rankings that people cannot see. This invisibility makes SEME especially dangerous as a means of control, not just of voting behavior but perhaps of a wide variety of attitudes, beliefs, and behavior. Ironically, and consistent with the findings of other researchers, we found that even those subjects who showed awareness of the biased rankings were still impacted by them in the predicted directions (75).

One weakness in our studies was the manner in which we chose to determine whether subjects were aware of bias in the search rankings. As noted, so as not to generate false-positive responses, we did not ask leading questions that referred specifically to bias; instead, we asked a fairly vague question about whether anything had bothered subjects about the search rankings, and we then gave subjects an opportunity to type out the details of their concerns. In so doing, we probably underestimated the number of detections (47), and this is a matter that should be studied further. That said, because people who showed awareness of the bias were still vulnerable to our manipulation, people who use SEME to manipulate real elections might not be concerned about detection, except, perhaps, by regulators.

Could regulators in fact detect SEME? Theoretically, by rating pages and monitoring search rankings on an ongoing basis, regulators might be able to identify and track search ranking bias related to elections; as a practical matter, however, we believe that biased rankings would be impossible or nearly impossible for regulators to detect. The results of studies 2 and 3 suggest that vulnerability to SEME can vary dramatically from one demographic group to another. It follows that if one were using biased search rankings to manipulate a real election, one would focus on the most vulnerable demographic groups. Indeed, if one had access to detailed online profiles of millions of individuals, which search engine companies do (76–78), one would presumably be able to identify those voters who appeared to be undecided and impressionable and focus one’s efforts on those individuals only, a strategy that has long been standard in political campaigns (79–84) and remains important today (85). With search engine companies becoming increasingly adept at sending users customized search rankings (76–78, 86–88), it seems likely that only customized rankings would be used to influence elections, thus making it difficult or impossible for regulators to detect a manipulation. Rankings that appear to be unbiased on the regulators’ screens might be highly biased on the screens of select individuals.

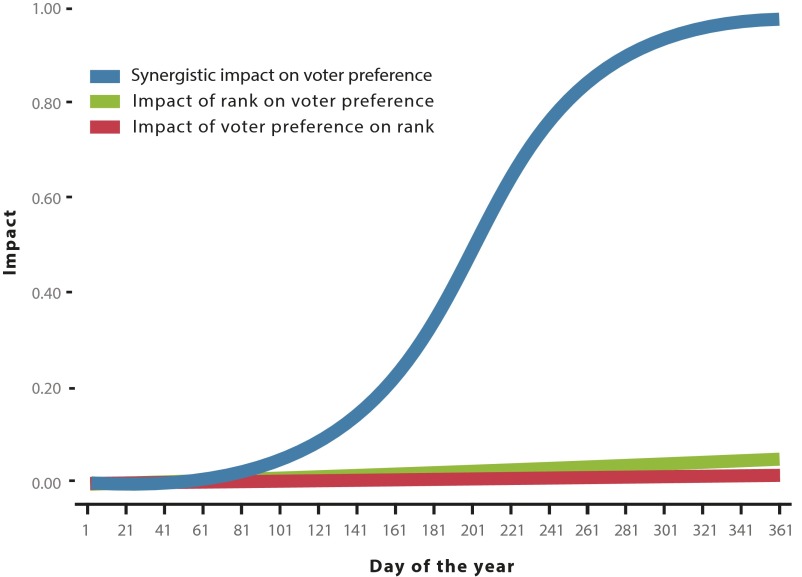

Even if a statistical analysis did show that rankings consistently favored one candidate over another, those rankings could always be attributed to algorithm-guided dynamics driven by market forces—so-called “organic” forces (89)—rather than by deliberate manipulation by search engine company employees. This possibility suggests yet another potential danger of SEME. What if election-related search results are indeed being left to the vagaries of market forces? Do such forces end up pushing some candidates to the top of search rankings? If so, it seems likely that those high rankings are cultivating additional supporters for those candidates in a kind of digital bandwagon effect. In other words, for several years now and with greater impact each year (as more people get election-related information through the Internet), SEME has perhaps already been affecting the outcomes of close elections. To put this another way, without human intention or direction, algorithms have perhaps been having a say in selecting our leaders.

Because search rankings are based, at least in part, on the popularity of Web sites (90), it is likely that voter preferences impact those rankings to some extent. Given our findings that search rankings can in turn affect voter preferences, these phenomena might interact synergistically, causing a substantial increase in support for one candidate at some point even when the effects of the individual phenomena are small.||

Our studies produced a wide range of VMPs. In a real election, what proportion of undecided voters could actually be shifted using SEME? Our first two studies, which relied on a campaign and candidates that were unfamiliar to our subjects, produced overall VMPs in the range 36.7–63.3%, with demographic shifts occurring with VMPs as high as 80.0%. Our third study, with real voters in the midst of a real election, produced, overall, a lower VMP: just 10.6%, with optimizing our rankings raising the VMP to 12.3% and with the elimination of a small number of oppositional subjects raising the VMP to 24.5%, which is the value we would presumably have found if our search rankings had been optimized from the start and if we had advance knowledge about oppositional groups. In the third study, VMPs in some demographic groups were as high as 72.7%. If a search engine company optimized rankings continuously and sent customized rankings only to vulnerable undecided voters, there is no telling how high the VMP could be pushed, but it would almost certainly be higher than our modest efforts could achieve. Our investigation suggests that with optimized, targeted rankings, a VMP of at least 20% should be relatively easy to achieve in real elections. Even if only 60% of a population had Internet access and only 10% of voters were undecided, that would still allow control of elections with win margins up to 1.2%—five times greater than the win margin in the 2010 race between Gillard and Abbott in Australia.

Conclusions

Given that search engine companies are currently unregulated, our results could be viewed as a cause for concern, suggesting that such companies could affect—and perhaps are already affecting—the outcomes of close elections worldwide. Restricting search ranking manipulations to voters who have been identified as undecided while also donating money to favored candidates would be an especially subtle, effective, and efficient way of wielding influence.

Although voters are subjected to a wide variety of influences during political campaigns, we believe that the manipulation of search rankings might exert a disproportionately large influence over voters for four reasons:

First, as we noted, the process by which search rankings affect voter preferences might interact synergistically with the process by which voter preferences affect search rankings, thus creating a sort of digital bandwagon effect that magnifies the potential impact of even minor search ranking manipulations.

Second, campaign influence is usually explicit, but search ranking manipulations are not. Such manipulations are difficult to detect, and most people are relatively powerless when trying to resist sources of influence they cannot see (66–68). Of greater concern in the present context, when people are unaware they are being manipulated, they tend to believe they have adopted their new thinking voluntarily (69, 70).

Third, candidates normally have equal access to voters, but this need not be the case with search engine manipulations. Because the majority of people in most democracies use a search engine provided by just one company, if that company chose to manipulate rankings to favor particular candidates or parties, opponents would have no way to counteract those manipulations. Perhaps worse still, if that company left election-related search rankings to market forces, the search algorithm itself might determine the outcomes of many close elections.

Finally, with the attention of voters shifting rapidly toward the Internet and away from traditional sources of information (12, 61, 62), the potential impact of search engine rankings on voter preferences will inevitably grow over time, as will the influence of people who have the power to control such rankings.

We conjecture, therefore, that unregulated election-related search rankings could pose a significant threat to the democratic system of government.

Materials and Methods

We used 102 subjects in each of experiments 1–3 to give us an equal number of subjects in all three groups and both counterbalancing conditions of the experiments.

Nonparametric statistical tests such as the Mann–Whitney U and the Kruskal–Wallis H are used throughout the present report because Likert scale scores, which were used in each of the studies, are ordinal.

In study 3, the procedure was identical to that of studies 1 and 2; only the Web pages and search results were different: that is, Web pages and search results were pertinent to the three leading candidates in the 2014 Lok Sabha general elections. The questions we asked subjects were also adjusted for a three-person race.

Fig. S1.

A possible synergistic relationship between the impact that search rankings have on voter preferences and the impact that voter preferences have on search rankings. The lower curves (red and green) show slow increases that might occur if each of the processes acted alone over the course of a year (365 iterations of the model). The upper curve (blue) shows the result of a possible synergy between these two processes using the same parameters that generated the two lower curves. The curves are generated by an iterative model using equations of the general form $V_{n+1} = V_n + r[R_n \times (1 - V_n)] + r[O_n \times (1 - V_n)]$, where V is voter preference for one candidate, R is the impact of voter preferences on search rankings, O is the impact (randomized with each iteration) of other influences on voter preferences, and r is a rate-of-change factor. Because a change in voter preference alters the proportion of votes available, its value in the model cannot exceed 1.0.
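The general form of the iterative model in this caption can be sketched as follows. This is only an illustration under stated assumptions: the caption does not say how R and O are computed at each step, so the sketch takes them as caller-supplied functions, and the starting preference, rate, and random range used in the usage lines are hypothetical values, not the parameters that generated the figure.

```python
import random


def step(v, r_n, o_n, rate):
    """One iteration of the general form in the caption:
    V_{n+1} = V_n + r[R_n * (1 - V_n)] + r[O_n * (1 - V_n)], capped at 1.0."""
    v_next = v + rate * (r_n * (1.0 - v)) + rate * (o_n * (1.0 - v))
    return min(v_next, 1.0)


def simulate(v0, rate, ranking_impact, other_impact, iterations=365):
    """Iterate the model and return the preference at every step.

    ranking_impact(v) -> R_n and other_impact() -> O_n are hypothetical
    callables supplied by the caller; the source does not define them.
    """
    history = [v0]
    for _ in range(iterations):
        v = history[-1]
        history.append(step(v, ranking_impact(v), other_impact(), rate))
    return history


# Hypothetical usage: R_n is assumed to equal the current preference,
# and O_n is drawn at random on each iteration.
rng = random.Random(0)
curve = simulate(
    v0=0.30,
    rate=0.01,
    ranking_impact=lambda v: v,
    other_impact=lambda: rng.uniform(0.0, 0.1),
)
print(len(curve))  # 366 values: the start plus 365 iterations
```

Passing a function that always returns 0 for either callable isolates the other process, which is one way to compare each process acting alone against the two acting together.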

SI Text

Demographic Differences in VMP.

In study 2, we found substantial differences in how vulnerable different demographic groups were to SEME. Although the groups we examined are somewhat arbitrary, overlapping, and by no means definitive, they do establish a range of vulnerability to SEME. Ten groups (n ≥ 50) that appeared to be highly vulnerable in study 2, as indicated by their VMP scores, were, in order from highest to lowest, as follows:

- i) Moderate Republicans (80.0%; 95% CI, 62.5–97.5%)
- ii) Moderate Libertarians (73.3%; 95% CI, 51–95.7%)
- iii) Female conservatives age 30 and over (67.7%; 95% CI, 52.5–82.7%)
- iv) People from North Carolina (66.7%; 95% CI, 42.8–90.5%)
- v) Male Republicans (66.1%; 95% CI, 54–78.2%)
- vi) People from Virginia (60.0%; 95% CI, 38.5–81.5%)
- vii) People earning between $15,000 and $19,999 (60.0%; 95% CI, 42.5–77.5%)
- viii) Hispanics (59.4%; 95% CI, 42.4–76.4%)
- ix) Independents with no political leaning (58.3%; 95% CI, 38.6–78.1%)
- x) Female conservatives (54.7%; 95% CI, 41.3–68.1%)

Ten groups that appeared to show little vulnerability to SEME, as indicated by their VMP scores, were, in order from highest to lowest, as follows:

- i) People from California (24.1%; 95% CI, 15.1–33.1%)
- ii) Moderate independents (24.0%; 95% CI, 15.4–32.5%)
- iii) Liberal independents (23.4%; 95% CI, 13.1–33.8%)
- iv) People from Texas (22.9%; 95% CI, 11–34.8%)
- v) Liberal Libertarians (22.7%; 95% CI, 5.2–40.2%)
- vi) People earning between $40,000 and $49,999 (22.5%; 95% CI, 13.8–31.1%)
- vii) Female independents (22.0%; 95% CI, 13.5–30.5%)
- viii) Male moderates age 30 and over (19.3%; 95% CI, 9.1–29.5%)
- ix) Female independent moderates (17.9%; 95% CI, 13.5–30.5%)
- x) People with an uncommon political party (15.0%; 95% CI, −0.6% to 30.6%)

In study 3, as in study 2, we found substantial differences in how vulnerable different demographic groups were to SEME. Although the groups we examined are somewhat arbitrary, overlapping, and by no means definitive, they do establish a range of vulnerability to SEME. Ten groups (n ≥ 50) that appeared to be highly vulnerable in study 3, as indicated by their VMP scores, were, in order from highest to lowest, as follows:

-

i)