Abstract

Background

Alcohol, tobacco, and other drug use remains highly prevalent among US adolescents and threatens both their well-being and public health. Evidence from clinical trials and meta-analyses supports the effectiveness of Screening, Brief Intervention and Referral to Treatment (SBIRT) for adolescents with substance misuse, but primary care providers have been slow to adopt this evidence-based approach. The purpose of this paper is to describe the theoretically informed methodology of an ongoing implementation study.

Methods

This study protocol describes a multi-site cluster randomized trial (N = 7 sites), guided by Proctor’s conceptual model of implementation research, that compares two principal approaches to SBIRT delivery within adolescent medicine: Generalist vs. Specialist. In the Generalist Approach, the primary care provider delivers the brief intervention (BI) for substance misuse. In the Specialist Approach, BIs are delivered by behavioral health counselors. The study will also examine the effectiveness of integrating HIV risk screening within an SBIRT model. Implementation Strategies employed include integrated team development of the service delivery model, modifications to the electronic medical record, and regular performance feedback and supervision. Implementation outcomes include Acceptability, Appropriateness, Adoption, Feasibility, Fidelity, Costs/Cost-Effectiveness, Penetration, and Sustainability.

Discussion

The study will fill a major gap in scientific knowledge regarding the best SBIRT implementation strategy at a time when SBIRT is poised to be brought to scale under health care reform. It will also provide novel data to inform the expansion of the SBIRT model to address HIV risk behaviors among adolescents. Finally, the study will generate important cost data to guide policymakers and clinic directors in decisions about adopting SBIRT in adolescent health care.

Keywords: implementation, brief intervention, SBIRT, primary care, adolescents

1. Introduction

In the U.S., approximately one in ten youth under the age of 18 reports past-month illicit drug use, tobacco use, or binge drinking (Substance Abuse and Mental Health Services Administration, 2010). Although recent studies have shown an overall decline in reported illicit drug use, the most recent Monitoring the Future survey found that 37% of 12th graders reported past-month alcohol use, 35% reported past-year marijuana use, and past-year use of non-prescribed medications such as Adderall, Vicodin, and tranquilizers ranged from 4.7% to 6.8% (Johnston, 2014). Youth with more severe substance use problems often struggle in school (Miller, Naimi, Brewer, & Jones, 2007), and many are no longer in school by 12th grade; because a school-based survey does not reach these youth, these figures likely under-represent the extent of substance use and related problems. More broadly, studies have found that the deleterious short- and long-term consequences of substance use include mental health problems (Mathers, Toumbourou, Catalano, Williams, & Patton, 2006; Moore et al., 2007), deteriorating school performance (Miller et al., 2007), risky sexual activity and victimization (Fergusson & Lynskey, 1996; Miller et al., 2007), placing oneself in danger by riding with an impaired driver (Miller et al., 2007), suicide attempts (Windle, 2004), and elevated risk of mortality (Clark, Martin, & Cornelius, 2008). Moreover, substance misuse during adolescence may negatively affect critical stages of brain development (Volkow, 2005; Lubman, 2007). Perhaps the most obvious long-term consequence of adolescent substance use is an increased risk of substance use disorders later in life (Englund, Egeland, Oliva, & Collins, 2008; Hingson, Heeren, & Winter, 2006; Mathers et al., 2006; McCambridge, McAlaney, & Rowe, 2011; Swift, Coffey, Carlin, Degenhardt, & Patton, 2008).

These findings have prompted efforts to develop better screening and interventions across the range of substance use problems, which in turn led to the development of the Screening, Brief Intervention and Referral to Treatment (SBIRT) model.

SBIRT typically uses universal screening (S) with validated brief self-report questionnaires to identify those at risk for substance use problems (Knight, Sherritt, Harris, Gates, & Chang, 2003; Knight, Sherritt, Shrier, Harris, & Chang, 2002; Reinert & Allen, 2007). Those who screen positive are given a Brief Intervention (BI), or a referral to treatment (RT) if specialized treatment for substance use disorders appears warranted. In this way, SBIRT can be used to address varying degrees of substance use severity.

In randomized clinical trials with adolescents in school and health care settings, brief interventions have had significant effects on consumption of alcohol, tobacco, and marijuana (McCambridge & Strang, 2004); smoking frequency and long-term cessation (Colby et al., 2005; Heckman, Egleston, & Hofmann, 2010; Hollis et al., 2005; Peterson et al., 2009); alcohol use (Monti et al., 1999; Spirito et al., 2004); alcohol use together with antisocial and aggressive behaviors (Walton et al., 2010); attempts to quit drinking (J. Bernstein et al., 2010); frequency of drug use and related consequences (Winters & Leitten, 2007); marijuana use and the number of friends who smoke marijuana (E. Bernstein et al., 2009; D'Amico, Miles, Stern, & Meredith, 2008; Martin & Copeland, 2008); and referral to substance abuse treatment (Tait, Hulse, & Robertson, 2004). Meta-analyses of RCTs of BIs for adolescent alcohol and other substance use across a variety of settings have reported positive findings (Tait & Hulse, 2003; Tanner-Smith & Lipsey, 2015; Tripodi, Bender, Litschge, & Vaughn, 2010). However, some BI studies focusing on adolescent drug use have produced mixed results (Walker et al., 2011), and others have found no effect of BIs on drug use in adults (Saitz et al., 2014).

Most adolescents in the U.S. see a health care provider at least annually, making primary care an ideal venue in which to deliver substance misuse interventions for this population (Newacheck, Brindis, Cart, Marchi, & Irwin, 1999). Unlike traditional substance abuse treatment or extended prevention programs, SBIRT is a service model well suited for integration into primary care (Erickson, Gerstle, & Feldstein, 2005). Although the U.S. Preventive Services Task Force (USPSTF) found insufficient evidence to support providing BIs to adolescents in primary care, the American Academy of Pediatrics and the National Institute on Alcohol Abuse and Alcoholism (NIAAA) recommend that pediatricians provide substance use screening and counseling to all adolescents (American Academy of Pediatrics, 2010). Yet research shows that most physicians do not follow this recommendation (Millstein & Marcell, 2003).

Given how rarely screening and intervention are implemented in pediatric settings, research is needed to determine how best to implement SBIRT services for adolescents in primary care. Understanding the costs of SBIRT is also important for policymakers and treatment providers deciding when and where to implement an SBIRT program. Yet there is little information on the cost of SBIRT under different implementation scenarios, and without such information providers cannot gauge the investment required to implement a sustainable program (Aalto, Pekuri, & Seppa, 2003; Moyer & Finney, 2004; Zarkin, Bray, Davis, Babor, & Higgins-Biddle, 2003).

1.1. Conceptual Model of Implementation Research

Implementation science is a nascent field, and a number of conceptual models have recently been proposed to guide research efforts (Damschroder et al., 2009; Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; E. K. Proctor et al., 2009; Simpson & Flynn, 2007). The comprehensive implementation research model proposed by Proctor and colleagues (E. Proctor et al., 2011; E. K. Proctor et al., 2009), within which various theories and strategies can be situated, links key implementation strategies with implementation outcomes. Proctor’s model includes the following implementation outcomes: Penetration (the integration of a practice into a service setting); Adoption (the decision to employ an evidence-based practice); Sustainability (the extent to which an implemented process is maintained over time); Acceptability (stakeholders’ perception that an evidence-based practice is palatable); Appropriateness (the perceived fit of the evidence-based practice); Fidelity (the degree to which the evidence-based practice was implemented as prescribed); Feasibility (the extent to which the evidence-based practice can be carried out within the setting); and Implementation Cost (E. Proctor et al., 2011; E. K. Proctor et al., 2009). The breadth of these outcomes, which include Acceptability and Appropriateness, highlights the importance of examining the fit between what is being implemented (e.g., a practice like SBIRT) and the system into which it is implemented (e.g., a primary care setting), and points to the many factors within that system that can impede successful service delivery.

1.1.1. SBIRT Implementation Approach: Generalist or Specialist

A major consideration in implementing SBIRT in a health care setting is the type of staff responsible for administering its components. Because SBIRT must fit within an existing practice environment, the roles and responsibilities of specific staff members are critical. Various BI delivery agents and modes have been used in efficacy and effectiveness trials, including physicians (Boekeloo & Griffin, 2007), nurses (Babor & Kadden, 2005; Wachtel & Staniford, 2010), peer educators (J. Bernstein et al., 2005; J. Bernstein et al., 2010), tele-intervention (Peterson et al., 2009), and even computers (Ondersma, Svikis, & Schuster, 2007; Walton et al., 2010). Yet few prospective studies have compared different SBIRT implementation approaches (Babor & Kadden, 2005). Our study builds on existing knowledge by comparing the two principal implementation approaches used for behavioral health problems in primary care (Bower & Gilbody, 2005; Pincus, 1980, 1987) that have also emerged for SBIRT: the Generalist (BIs delivered by primary care providers) vs. the Specialist (BIs delivered by on-site behavioral health counselors).

While the Generalist and Specialist implementation approaches are widely used in primary care and behavioral health, data are lacking on their relative effectiveness and cost for delivering adolescent SBIRT in primary care settings. Each has strengths and weaknesses. The Generalist approach potentially offers greater access to services, because BIs are delivered during the primary care provider (PCP) encounter. It may also use resources more efficiently by reserving specialists for individuals with substance use disorders. Potential weaknesses include PCPs’ uneven willingness and ability to deliver SBIRT in the course of a busy practice and the potential for reduced fidelity to the BI model, again because of competing demands on their time.

The Specialist approach has emerged as a viable alternative to the Generalist SBIRT model (Gryczynski et al., 2011; Madras et al., 2009; Mitchell et al., in press). Its strengths include service delivery by trained behavioral health counselors (BHCs), who presumably have more time available than the PCP to deliver an intervention. BHCs may also feel better equipped to address substance use because of their professional training, although not all are familiar with brief interventions for substance use problems. Its weaknesses, however, may include the limited availability of counselors at the health center, the lack of a pre-existing relationship with the patient, conflicting demands on counselors’ time (e.g., seeing other patients when needed to deliver a BI during a medical appointment), and the potential for losing patients during the referral or hand-off process because of the patient’s unwillingness or inability to see another provider. Patients’ comfort and willingness to discuss their substance use may also differ between their PCP, with whom they may have a pre-existing relationship, and a BHC, who may be a new and unfamiliar provider.

Risky sexual behavior is a problem among youth and should be routinely addressed in primary care visits regardless of a youth’s involvement with substance use. Moreover, given the frequent co-occurrence of substance use and risky sexual behaviors, providers should integrate discussions of sexually transmitted infections and HIV risk reduction into their work with adolescents, regardless of which BI model is employed. Research is needed on how best to implement sexual risk counseling among substance-using youth in primary care settings.

To contribute to the growing field of implementation science, this paper describes a theoretically informed methodology for conducting implementation studies. It presents the methodology of an ongoing, National Institute on Drug Abuse-funded, multi-site implementation study, conducted under Proctor’s theoretical model (E. Proctor et al., 2011; E. K. Proctor et al., 2009), that compares implementation of the Generalist vs. the Specialist BI model for adolescents receiving primary care in a multi-site Federally Qualified Health Center (FQHC) organization in Baltimore, Maryland. The project recently completed the Implementation Phase and has entered the Sustainability Phase of the study.

1.1.2. SBIRT Implementation Strategy

The service delivery protocols tailored to the Generalist and Specialist conditions were implemented using a combination of implementation strategies targeting different system levels, including: integrated team development of service delivery protocols (to address Acceptability, Adoption, Appropriateness, and Feasibility), Electronic Medical Record (EMR) modifications (to address Feasibility, Fidelity, and Sustainability), regular performance feedback and supervision (to address Fidelity and Penetration), manual development and training modifications (to address Sustainability), and patient satisfaction surveys (to assess program acceptance from the consumer perspective).

The study is being implemented within a Federally Qualified Health Center (FQHC), a system in flux as the result of recent health care changes, which has created both additional complexity and additional opportunities to examine factors impacting implementation and sustainability. The Friends Research Institute’s Institutional Review Board approved the study, which is due to be completed in June 2016.

2. Methods

2.1. Study Sites

The participating organization is a large, urban Federally Qualified Health Center that provides adolescent medicine at 7 of its sites throughout Baltimore City and serves a predominantly African American patient population. This FQHC provides primary care services, including internal medicine, pediatrics, adolescent primary care, and obstetrics and gynecology. The study is being conducted at these 7 community clinics, which together serve approximately 3,600 adolescent patients each year.

2.2. Randomization to Organizational Implementation Strategy

The FQHC sites (N = 7) were randomly assigned to implement adolescent SBIRT for primary care patients ages 12–17 years, inclusive, using either the Generalist or the Specialist service delivery approach. A matched (stratified) randomization approach was used: clinics were ranked by the size of their adolescent patient population and grouped into pairs matched as closely as possible on size. Because one of the 7 sites was twice as large as the next largest, it was matched against a group of two other sites. Within each matched grouping, one side was randomly assigned to the Specialist Condition and the other to the Generalist Condition, resulting in 3 Specialist sites (including the largest clinic) and 4 Generalist sites.
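The matched-group procedure can be sketched in a few lines of Python. This is an illustration only: the site labels and panel sizes are hypothetical, and because the text does not say which two sites were matched against the largest clinic, the sketch assumes they were the next two largest.

```python
import random


def matched_group_randomization(site_sizes, seed=None):
    """Sketch of matched-group cluster randomization for 7 clinics.

    site_sizes maps a hypothetical site label to its adolescent panel size.
    Assumption: the largest site is matched against the next two largest
    sites, and the remaining four sites are paired in rank order.
    """
    if len(site_sizes) != 7:
        raise ValueError("this sketch assumes exactly 7 sites")
    rng = random.Random(seed)
    # Rank sites from largest to smallest adolescent patient population.
    ranked = sorted(site_sizes, key=site_sizes.get, reverse=True)
    # Each grouping has two sides; one side goes to each condition.
    groupings = [
        ([ranked[0]], ranked[1:3]),  # largest site vs. two other sites (assumed)
        ([ranked[3]], [ranked[4]]),  # remaining sites paired by size
        ([ranked[5]], [ranked[6]]),
    ]
    assignment = {}
    for side_a, side_b in groupings:
        # A fair coin decides which side of the grouping becomes Specialist.
        if rng.random() < 0.5:
            specialist, generalist = side_a, side_b
        else:
            specialist, generalist = side_b, side_a
        for site in specialist:
            assignment[site] = "Specialist"
        for site in generalist:
            assignment[site] = "Generalist"
    return assignment


# Illustrative panel sizes only (not study data).
EXAMPLE_SIZES = {"Site A": 1000, "Site B": 500, "Site C": 480, "Site D": 460,
                 "Site E": 440, "Site F": 380, "Site G": 340}

print(matched_group_randomization(EXAMPLE_SIZES, seed=2016))
```

When the coin places the largest site on the Specialist side, the split is 3 Specialist and 4 Generalist sites, as in the realized allocation; otherwise it is 4 and 3.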

2.3. Participant Recruitment

Adolescent health care providers (pediatricians, family practitioners, and nurse practitioners), nurses, clinical assistants, and other staff at the 7 clinics, as well as members of the organization’s leadership, were asked to provide informed consent to participate in the study. Temporary staff members were not included, nor were staff who worked only at non-participating clinics or in departments that do not see pediatric patients for routine care. Participants (n = 92) completed periodic surveys and interviews, which were conducted, whenever possible, in conjunction with a staff training. Staff who declined to provide informed consent were not required to participate in the study assessments. Nonetheless, the FQHC required them to participate in implementation activities, because the service delivery model and the meetings associated with increasing adherence to it were considered part of their job duties. A subsample of staff completed semi-structured qualitative interviews annually. Qualitative interview participants (n = 20) were purposively selected from all 7 participating sites to represent a diverse range of roles and organizational perspectives, including primary care providers, nurses, medical assistants, behavioral health counselors, and administrators. Interviewees were drawn from both Generalist and Specialist sites.

2.3.2. Patient Data

As part of the study, de-identified patient-level data are being drawn from service encounter records in the electronic medical record (EMR). In addition, patients who receive a BI are asked to complete an anonymous patient satisfaction survey. The Friends Research Institute IRB granted an exemption from obtaining patient consent for the collection of these anonymous data.

2.4. Start-up Phase

Throughout the Start-up Phase, the research team collaborated with the study’s training and implementation experts, a local consulting firm with an ongoing consulting relationship with the FQHC. Researchers and the consultants met frequently with clinic leadership, including the medical director (a pediatrician), site medical practice managers, and behavioral health supervisors, to develop the Generalist and Specialist service delivery protocols.

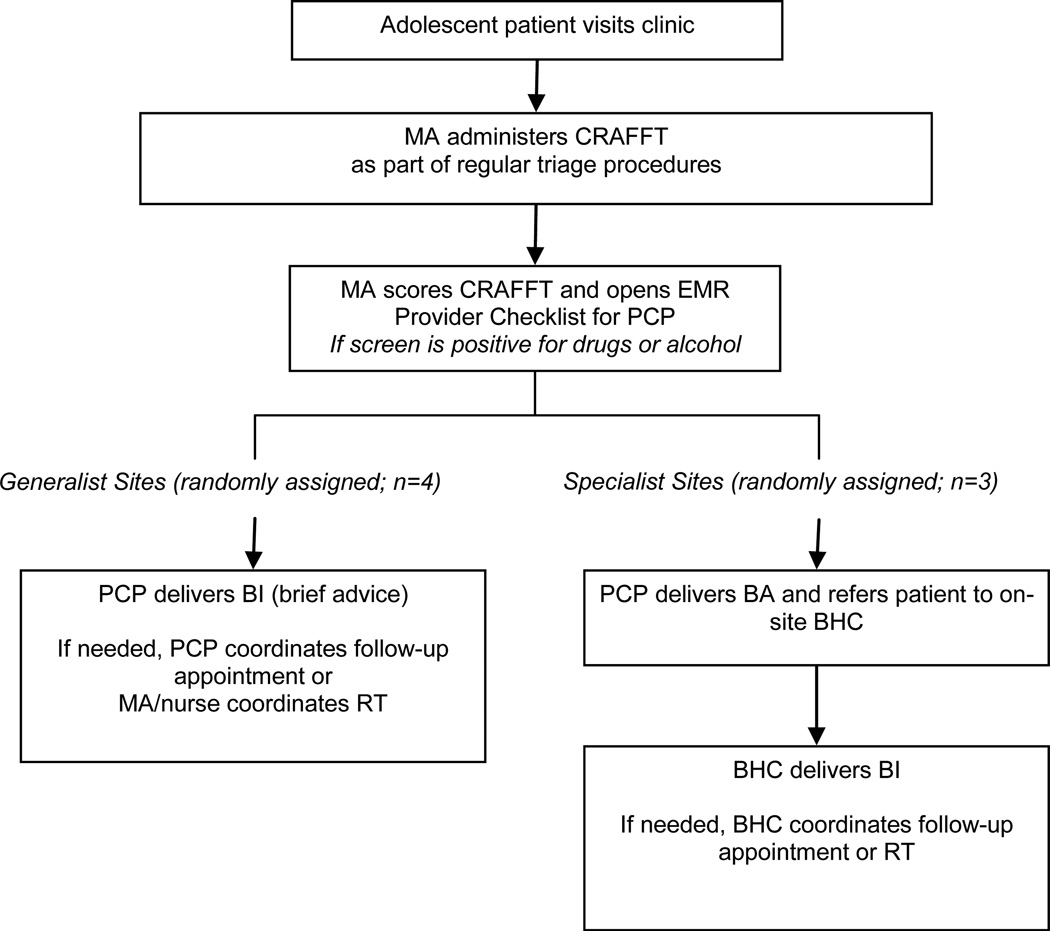

As seen in Figure 1, the training content varied by site depending on whether the site used the Generalist or Specialist approach to provide the BI and RT for patients who screened positive. We strove to fully integrate the intervention into the unique workflow at each site, paying careful attention to the specific differences in the protocol between the Generalist and Specialist conditions, particularly the hand-off for the BI in the Specialist model.

Fig. 1. Patient Flow in Generalist vs. Specialist Sites.

Note: EMR = Electronic Medical Record; MA = Medical Assistant; PCP = Primary Care Provider; BHC = Behavioral Health Counselor; CRAFFT = screening instrument for drug and alcohol use; BI = Brief Intervention; BA = Brief Advice; RT = Referral to Treatment

The consultants, medical director, and study staff also met with the EMR vendor on several occasions to develop the adolescent SBIRT screening and clinical encounter items to be added to the EMR and to create reports to be generated by the system.

2.4.1. Adopting a screening tool

Prior to the study, clinicians were not systematically or consistently asking adolescent patients about drug, alcohol, or tobacco use or risky sexual behaviors. Although the State required that the CRAFFT alcohol and drug screening tool be administered annually to adolescents, it was administered on paper forms by the clinic assistants and was neither scored nor readily accessible in the EMR during the patient’s visit; as a result, providers reported that they frequently did not check the results. Providers who did ask their adolescent patients about drug or alcohol use did so in their own way and had no set method for determining the need for follow-up care, particularly for low or moderate substance use. Thus, the first step in implementing adolescent SBIRT was to modify the workflow and train providers to use the CRAFFT more effectively and consistently across both the Generalist and Specialist sites. The medical assistants (MAs) were trained to administer the CRAFFT at every visit attended by patients 12–17 years old, not just at annual exams, and PCPs were instructed to always review the CRAFFT results with patients, integrating them into the patient encounter. Trainers emphasized that doing so would help providers normalize the discussion of drugs and alcohol, assess changes in their patients’ behaviors, and address drug, alcohol, and tobacco use before it becomes problematic.

2.4.2. Modifying the Electronic Medical Record

To improve providers’ use of the CRAFFT, the clinic’s EMR was modified to include a more user-friendly version of the CRAFFT, and the instrument was moved to a section that primary care providers commonly accessed during both routine and acute care visits. A tobacco use item already present in the EMR was moved so that it would be asked in conjunction with the CRAFFT. Sex risk items from the EMR’s history and physical section were also linked with the screening items so that they could be completed as part of the screening process without duplicate data entry. Monthly reports were then developed to answer questions about adherence to the service delivery model and its reach.

Seven months into the Implementation Phase, the clinic switched to a new EMR, and the development process had to be repeated entirely. Unfortunately, although the screening data were placed in a section of the new EMR that was supposed to have reporting capabilities, this feature proved largely unworkable. The Implementation team was still able to extract the data needed to continue generating summary reports for this study; however, the process was too complex and labor-intensive for the organization to continue once the Implementation Phase was complete.

2.4.3. Initial Staff Trainings

At the conclusion of the Start-up Phase, the initial implementation trainings were conducted. Clinic personnel who interacted with or treated adolescent patients at each of the 7 sites received in-person training from the consultants. The 90-minute, largely didactic training covered: (1) background information on the prevalence and incidence of adolescent tobacco, alcohol, and drug use; (2) an overview of SBIRT; (3) directions for the use, scoring, and interpretation of the screening measure, namely the CRAFFT (Knight et al., 2003; Knight et al., 2002) plus the tobacco question (together termed the “CRAFFT+”), for all youth ages 12–17 years, along with additional questions on sexual risk behaviors for youth who screened positive on the CRAFFT; and (4) instructions and practice guidelines for conducting a BI (at Generalist sites) or Brief Advice (BA) and a “warm handoff” to the BHC (at Specialist sites). Primary care providers at the 4 sites randomized to the Generalist Condition received additional training in motivational interviewing techniques and in providing a BI to adolescents who screened positive (a CRAFFT score of at least 2 or reported past-year tobacco use). They were also trained to refer patients believed to need additional care either for further assessment by the organization’s behavioral health staff or to community-based specialty substance abuse treatment. At sites assigned to the Specialist Condition, primary care providers were trained to provide Brief Advice and then refer patients who screened positive (a CRAFFT score of at least 2 or reported past-year tobacco use) to the on-site behavioral health counselors (BHCs). The BHCs were trained to provide a BI based on motivational interviewing and to refer patients to specialty services as needed.

Although the initial staff training process was identical across the Generalist and Specialist approaches, the Specialist trainings took slightly longer because of the more complex service delivery protocol. The Specialist approach also required training the behavioral health counselors, who were trained as a group.
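To make the screening rule concrete, the following Python sketch scores the CRAFFT+ as described in the training content. It assumes the six standard CRAFFT yes/no items, scored as the count of “yes” answers; the item keys and function names are hypothetical labels chosen for illustration, not fields from the FQHC’s EMR.

```python
# Assumption: the six standard CRAFFT yes/no items; the score is the count of "yes" answers.
# Item keys are hypothetical labels, not EMR field names.
CRAFFT_ITEMS = ("car", "relax", "alone", "forget", "friends", "trouble")
POSITIVE_THRESHOLD = 2  # "scored at least 2 on the CRAFFT"


def crafft_score(answers):
    """Count 'yes' answers across the six CRAFFT items."""
    missing = [item for item in CRAFFT_ITEMS if item not in answers]
    if missing:
        raise ValueError(f"missing CRAFFT items: {missing}")
    return sum(1 for item in CRAFFT_ITEMS if answers[item])


def is_positive_screen(answers, past_year_tobacco):
    """CRAFFT+ positive: CRAFFT score of at least 2 OR any past-year tobacco use."""
    return crafft_score(answers) >= POSITIVE_THRESHOLD or past_year_tobacco


def needs_sex_risk_questions(answers):
    """Sexual risk questions were added for youth who screened positive on the CRAFFT itself."""
    return crafft_score(answers) >= POSITIVE_THRESHOLD


# Hypothetical patient: "yes" to two items, no tobacco use.
example = dict.fromkeys(CRAFFT_ITEMS, False)
example.update(car=True, relax=True)
print(crafft_score(example), is_positive_screen(example, past_year_tobacco=False))  # prints: 2 True
```

Keeping the tobacco item outside the CRAFFT score mirrors the text, where tobacco use can trigger a positive CRAFFT+ screen but only the CRAFFT score triggers the sexual risk questions.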

2.5. Implementation Phase

2.5.1. Delivery of Generalist and Specialist Approaches

The Medical Assistants (MAs) administered the CRAFFT+ to all adolescents between 12 and 17 years of age at all visits as part of patient triage/intake procedures. Staff at the sites were permitted to adapt how the CRAFFT+ was administered depending on the circumstances (e.g., handing the youth a hard copy of the form to complete independently, administering the questions orally, or allowing the youth to enter responses directly into the EMR). The MA then entered the patient’s responses into the EMR, scored the CRAFFT+, indicated whether the patient’s parent was present during the screening, and opened the Provider Intervention in the EMR for the primary care provider to complete the remaining documentation. For patients who reported no past-year drug, alcohol, or tobacco use, the primary care provider offered positive feedback and congratulated the patient on their healthy behaviors. For patients who reported any past-year alcohol, drug, or tobacco use, the provider responded as outlined below, depending on the Condition to which the site was randomly assigned.

2.5.1.1. Generalist

At the 4 sites randomly assigned to deliver the Generalist approach, the Primary Care Provider (PCP) discussed the risky behaviors the adolescent endorsed on the CRAFFT+. PCPs were trained to conduct BIs based on motivational interviewing techniques, integrating the BI into their ongoing conversation with the patient. Sessions were estimated to last approximately 5 minutes on average. The provider then counseled the patient to reduce or stop their alcohol, drug, or tobacco use and developed a plan with the patient to reach that goal. Patients who scored 2 or more on the CRAFFT were also asked the sex risk questions by the PCP, and these issues were included in the BI discussion when appropriate.

Upon concluding the session, the primary care providers, at their discretion based upon the clinical severity of the patient’s substance use and the patient’s willingness to engage in further intervention, either made a follow-up appointment for a longer discussion with the patient, continued the conversation at the patient’s next regularly scheduled primary care visit, or referred the patient to specialty substance abuse services, if indicated. Patients were invited by the PCP to complete an anonymous patient satisfaction questionnaire regarding the SBIRT services received and deposit the questionnaire in a locked box located in the exam room.

2.5.1.2. Specialist

At the 3 sites randomly assigned to deliver the Specialist approach, patients who reported any past-year drug, alcohol, or tobacco use received Brief Advice (approximately 1–2 minutes on average). Patients who scored 2 or more on the CRAFFT or reported more frequent drug or alcohol use received BA from the PCP, who then encouraged the patient to accept a “warm handoff” (i.e., a referral) to the BHC. The provider alerted the nurse or MA that the patient needed to see the BHC, who, if available, either saw the patient in the exam room or took the patient to the BHC’s office (located in the same building) to conduct the BI (estimated to last approximately 15 minutes). The BHC then developed a plan with the patient for reducing use, as appropriate, and encouraged the patient to accept a referral for a substance abuse evaluation and/or scheduled a follow-up visit to assess the patient’s progress. Patients were invited by the BHC to complete an anonymous patient satisfaction questionnaire regarding the SBIRT services received and to deposit it in a locked box in the exam room or office. If the BHC was not available to conduct the BI, the nurse or MA scheduled an appointment for the patient within one week and notified the PCP.
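The branching of the two service delivery protocols can be summarized as a small routing function. This is a hedged sketch, not the study’s EMR logic: the step labels are hypothetical; the positive-screen flag is assumed to follow the training definition (a CRAFFT score of at least 2 or past-year tobacco use), even though the Specialist protocol words the handoff criterion somewhat differently and its “more frequent use” criterion is not modeled; and, following the Generalist protocol description, any reported past-year use at a Generalist site is assumed to lead to a PCP-delivered BI.

```python
from enum import Enum


class Condition(Enum):
    GENERALIST = "Generalist"
    SPECIALIST = "Specialist"


def route_patient(condition, any_past_year_use, positive_screen, bhc_available=True):
    """Return the ordered service steps for one adolescent visit (illustrative sketch)."""
    if positive_screen and not any_past_year_use:
        raise ValueError("a positive screen implies some past-year use")
    if not any_past_year_use:
        # Both conditions: the PCP reinforces healthy behavior.
        return ["PCP: positive feedback"]
    if condition is Condition.GENERALIST:
        # The PCP delivers a motivational-interviewing-based BI (~5 min),
        # then decides on follow-up or referral at their discretion.
        return ["PCP: brief intervention (BI)",
                "PCP: follow-up visit or referral, as indicated"]
    # Specialist condition: any past-year use receives Brief Advice (~1-2 min).
    steps = ["PCP: brief advice (BA)"]
    if positive_screen:
        if bhc_available:
            steps.append("BHC: warm handoff and BI (~15 min)")
        else:
            steps.append("Nurse/MA: schedule BHC visit within one week; notify PCP")
    return steps


print(route_patient(Condition.SPECIALIST, any_past_year_use=True,
                    positive_screen=True, bhc_available=False))
```

Treating BHC availability as an explicit input makes the fallback path (scheduling within one week) visible, which is the step most likely to lose patients during the hand-off.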

2.5.2. Implementation Strategies Utilized

Throughout the Implementation Phase, regular performance feedback and technical assistance were provided to different groups in different formats (see Table 1). Most of the feedback consisted of de-identified data that were extracted from the EMR, summarized, and presented comparatively and longitudinally to show changes and overall trends.

Table 1.

Implementation strategies used for Generalist and Specialist approaches.

| Implementation Strategy | Description | Target Audience | Schedule |
|---|---|---|---|
| Electronic Medical Record (EMR) Modification | Substance use screening (CRAFFT) and documentation fields addressing follow-up services provided (e.g., BI provided) were added to the EMR. | Administrators, Primary Care Providers, Behavioral Health Counselors, Nurses, Medical Assistants | Prior to Implementation Phase and repeated when the new EMR was adopted |
| Initial Staff Trainings | In-person trainings addressing adolescent substance use prevalence and incidence, SBIRT overview, items and scoring of CRAFFT, service delivery protocol, and EMR documentation. A list of adolescent substance use providers in the community was also provided. Trainings were conducted at each site for all staff. BHCs were trained as a group, independent of site. | Primary Care Providers, Behavioral Health Counselors, Nurses, Medical Assistants | Start of Implementation Phase |
| Bi-monthly Feedback | De-identified encounter data from the EMR were aggregated by site and summarized into a single-page overview. Feedback compared the site’s rates of screening and BI completion over time and with the other clinics’ completion rates. | Administrators | Repeated every other month for duration of Implementation Phase |
| Quarterly Booster Trainings | On-site quarterly boosters presented the site’s EMR data for screening and BI completion and provided an opportunity to clarify confusion regarding service delivery processes by Implementation Approach and to identify and overcome barriers to screening, BI/BA delivery, and warm handoff completion. | Primary Care Providers, Nurses, Medical Assistants | Repeated every 3 months for duration of Implementation Phase |
| Quarterly Feedback to Providers | EMR data for each adolescent patient seen by each provider were summarized and provided in a quarterly single-page letter to demonstrate performance trends and offer reminders for adherence to their assigned Implementation Approach. | Primary Care Providers | Repeated every 3 months for duration of Implementation Phase |

2.5.2.1. Bi-monthly Feedback to Medical Practice Managers

The medical director and consultants together set benchmarks for key clinical activities indicating adherence to the implementation model. To monitor adherence, de-identified encounter data were collected from the EMR, aggregated by site, and used to assess Adherence to the study implementation approach and to determine Penetration of the Generalist and Specialist service delivery models with respect to: (1) how many patients in the age group were seen during the time period; (2) how many of those screened should have received a BI, BA, or RT; and (3) how many received the prescribed intervention. These data were provided every other month to the Medical Practice Managers (administrative site leadership) via email and hard copy. Medical Practice Managers were encouraged to share this information with clinic staff and to hold their staff accountable.
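The three counts used for site-level feedback can be computed from encounter rows roughly as follows. The sketch is illustrative: the field names are hypothetical, it counts encounters rather than unique patients (counting patients would require a de-identified patient key), and the screening and completion rates it derives are one plausible way to express Penetration and Adherence, not the study’s benchmark definitions.

```python
from collections import defaultdict


def site_adherence_summary(encounters):
    """Aggregate de-identified encounter rows by site (illustrative sketch).

    Each row is a dict with hypothetical keys: site, age, screened,
    intervention_indicated, intervention_received (the last three booleans).
    Counts are per encounter, not per unique patient.
    """
    counts = defaultdict(lambda: {"seen": 0, "screened": 0, "indicated": 0, "received": 0})
    for row in encounters:
        if not 12 <= row["age"] <= 17:
            continue  # only adolescents ages 12-17 are in scope
        c = counts[row["site"]]
        c["seen"] += 1                          # (1) patients seen in the period
        if row["screened"]:
            c["screened"] += 1
            if row["intervention_indicated"]:
                c["indicated"] += 1             # (2) should have received BI, BA, or RT
                if row["intervention_received"]:
                    c["received"] += 1          # (3) received the prescribed intervention
    summary = {}
    for site, c in counts.items():
        summary[site] = dict(
            c,
            screening_rate=c["screened"] / c["seen"] if c["seen"] else None,
            completion_rate=c["received"] / c["indicated"] if c["indicated"] else None,
        )
    return summary


# Hypothetical rows for one site (not study data); the 19-year-old is excluded.
example_rows = [
    {"site": "A", "age": 14, "screened": True, "intervention_indicated": True, "intervention_received": True},
    {"site": "A", "age": 15, "screened": True, "intervention_indicated": True, "intervention_received": False},
    {"site": "A", "age": 16, "screened": False, "intervention_indicated": False, "intervention_received": False},
    {"site": "A", "age": 19, "screened": True, "intervention_indicated": True, "intervention_received": True},
]
print(site_adherence_summary(example_rows))
```

Returning raw counts alongside the rates keeps small denominators visible, which matters when a single site’s month contains only a handful of positive screens.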

2.5.2.2. Quarterly Booster Trainings

Quarterly booster trainings were conducted at each clinic to provide detailed feedback regarding Penetration and Adherence to the implementation approach and to troubleshoot problems (e.g., confusion regarding process or EMR documentation). EMR data from each site for each month of the quarter were presented and compared with performance across the other 6 sites, and trends in performance were discussed. All MAs, nurses, PCPs, and BHCs (at Specialist sites only) were invited to attend these trainings, which customarily took place during monthly staff meeting time slots. However, PCPs at some clinics infrequently attended the Booster Trainings, and only one BHC ever attended. As a result, Booster Training discussions tended to focus on screening issues and on ways to better coordinate positive screening results with the PCPs or BHCs.

2.5.2.3. Quarterly Feedback to Primary Care Providers

EMR data for each adolescent patient seen by each provider were summarized and provided quarterly to demonstrate performance trends and offer reminders concerning implementation model components. The medical director requested that this detailed quarterly feedback be presented in written form, with an invitation to discuss performance with the medical director or to seek assistance from the training consultants.

2.6. Sustainability Phase

During the Sustainability Phase (currently ongoing), all of the implementation strategies described above are withdrawn. The research team will continue to extract EMR data and to monitor ongoing activities and changes within the organization that could influence adherence to the Generalist or Specialist implementation approaches.

2.7. Implementation Outcome Measures

Implementation outcome measures (shown in Table 2, below) are divided by Specific Aim and drawn from Proctor’s conceptual model of implementation (E. Proctor et al., 2011; E. K. Proctor et al., 2009). For each site, the study start-up period was 6 months, the implementation period was 20 months, and the sustainability period will be 12 months.

Table 2.

Implementation outcomes and measures by Study Aim.

| Aim | Implementation Outcome | Description | Measure Source | Measurement Schedule |
|---|---|---|---|---|
| AIM 1 | Penetration | Delivery of (i) BI and (ii) RT to those for whom indicated | CRAFFT+ and provider intervention items in EMR | Ongoing for each encounter; administrative encounter data abstracted monthly |
| AIM 2 | Cost and Cost-Effectiveness | Cost of service delivery; full implementation costs; ICERs for BI and RT | SASCAP, clinic records, organizational expenditures, budget | Month 12 (clinic costs); Month 25 (full implementation costs) |
| AIM 3 | Acceptability | Provider attitudes, perceived need, self-competence | Provider surveys; qualitative interviews | Repeated each period (∼months 4, 15, and 30) |
| | Timeliness | Delivery of services on the same day as screening vs. at a future appointment | Provider intervention items in EMR | Ongoing for each encounter; administrative encounter data abstracted monthly |
| | Adherence | Provider adherence to key components of the two SBIRT strategies (i.e., Generalist vs. Specialist) | Provider intervention items in EMR; qualitative interviews | Completed after each encounter in which a BI is indicated |
| | Satisfaction | Patient satisfaction with services | Anonymous patient questionnaire | Ongoing voluntary questionnaire for each BI/RT encounter; data abstracted monthly |
| | Sustainability | Endurance of services after removal of support resources | CRAFFT+ and provider intervention items in EMR | Continued ongoing collection of administrative encounter data with monthly abstraction |
| AIM 4 | Feasibility and Acceptability | Incorporation of HIV risk behavior screening into the SBIRT process | Provider surveys; qualitative interviews | Repeated each period |

Aim 1

To examine the relative effectiveness of the Generalist condition vs. the Specialist condition in terms of Penetration of Brief Intervention (BI) for those adolescents for whom BI is indicated and referral to specialty substance abuse treatment for those adolescents for whom such treatment is indicated.

Penetration captures the extent to which patients receive services under the adopted practice and will be assessed from clinic encounter records using the methodology described above.

Aim 2

To determine the cost and cost-effectiveness of the two SBIRT models.

Aim 3

To examine the Generalist condition vs. the Specialist condition in terms of key implementation factors: Acceptability, Timeliness, Adherence, Satisfaction, and Sustainability.

Acceptability captures the degree to which individual providers and stakeholders view the practice as beneficial to patients, appropriate, and worth doing. Providers’ perceived self-competence in delivering the evidence-based practice also plays a role in acceptability. Acceptability will be measured by administering a Likert-scale survey to primary care providers and behavioral health counselors. The survey will ask questions tapping attitudes, comfort, and competence with respect to SBIRT for adolescent patients. These surveys will be conducted prior to implementation of SBIRT and twice thereafter (midway through the implementation and sustainability periods). Information on acceptability will also be obtained from qualitative interviews with providers.
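As an illustration of how Likert responses might be turned into a per-provider acceptability score, the sketch below averages item ratings for each provider and then averages providers within each condition. The 1 to 5 scale, the assumption that higher ratings are more favorable, and the 80% completeness rule are hypothetical choices, not details taken from the study’s survey.

```python
from statistics import mean
from typing import Dict, List, Optional


def acceptability_score(responses: List[Optional[int]], scale_max: int = 5,
                        min_answered: float = 0.8) -> Optional[float]:
    """Mean Likert rating for one provider at one survey wave.

    Assumptions (hypothetical): items are scored 1..scale_max with higher meaning
    more favorable, and a score is computed only when at least `min_answered`
    of the items were answered.
    """
    answered = [r for r in responses if r is not None]
    if not responses or len(answered) / len(responses) < min_answered:
        return None  # too many skipped items to score reliably
    if any(not 1 <= r <= scale_max for r in answered):
        raise ValueError("response outside the Likert range")
    return mean(answered)


def mean_by_condition(scores: Dict[str, List[Optional[float]]]) -> Dict[str, float]:
    """Average provider scores within each study condition, skipping unscored providers."""
    return {cond: mean(s for s in vals if s is not None) for cond, vals in scores.items()}
```

Computing these scores at each of the three survey waves would give the per-condition trajectories that the multi-level models described under Data Analysis are meant to test.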

Timeliness refers to how quickly a patient receives needed services. It will be measured from administrative encounter records, which indicate whether the patient received the BI or RT on the same day as the screening.
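The same-day comparison can be expressed directly on dates. This minimal sketch assumes each indicated service is represented as a pair of a screening date and a service date (None if no BI or RT was documented); the three labels and the choice to compute the same-day share only among delivered services are illustrative assumptions.

```python
from collections import Counter
from datetime import date
from typing import Iterable, Optional, Tuple


def classify_timeliness(screen_date: date, service_date: Optional[date]) -> str:
    """Label one indicated BI/RT relative to the screening date."""
    if service_date is None:
        return "not delivered"
    if service_date < screen_date:
        raise ValueError("service dated before the screening")
    return "same day" if service_date == screen_date else "future appointment"


def timeliness_summary(
    pairs: Iterable[Tuple[date, Optional[date]]]
) -> Tuple[Counter, Optional[float]]:
    """Count labels and return the same-day share among delivered services."""
    counts = Counter(classify_timeliness(s, v) for s, v in pairs)
    delivered = counts["same day"] + counts["future appointment"]
    share = counts["same day"] / delivered if delivered else None
    return counts, share
```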

Adherence refers to the extent to which providers adhere to the core components of their particular implementation approach. PCPs will complete the provider checklist indicating their response to the patient’s screening at the end of each encounter. Reasons for adherence or non-adherence will also be assessed in qualitative interviews with providers.

Patient Satisfaction will be assessed with the 6-item Patient Questionnaire that was used in the SBIRT Implementation evaluation by the OMNI Institute (2010). Items address satisfaction with specific aspects of the SBIRT services as well as satisfaction with the provider-patient relationship (e.g., “My provider made me feel comfortable talking about my alcohol, drug or tobacco use.”). Providers were trained to distribute the surveys to patients who received a BI. This measure will allow us to compare patient satisfaction between study conditions. Patients will be asked to deposit the anonymous questionnaires in a box located in either the exam room or the office where the BI took place. The clinics did not have access to these boxes, as research staff held the keys to them and collected the questionnaires regularly.

Finally, we will assess Sustainability. While the study includes robust training and technical support for both implementation conditions, a novel design feature is the cessation of all training and technical support toward the end of the study. This will allow us to gauge the relative sustainability of the implementation models for 12 months after the removal of all implementation support resources. We will incorporate a statistical analysis of sustainability (drawing on the clinic encounter records) and will also obtain provider perspectives on sustainability through qualitative interviews.
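The planned statistical analysis of sustainability is not specified here. As a purely descriptive sketch, one could compare mean monthly Penetration after support is withdrawn with the mean during the Implementation Phase, and fit a least-squares slope to the post-withdrawal months to see whether delivery is drifting downward. The function name and inputs are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def sustainability_summary(
    implementation_rates: Sequence[float], sustainability_rates: Sequence[float]
) -> Tuple[float, float]:
    """Descriptive comparison of monthly Penetration before and after support ends.

    Returns (mean sustainability-phase rate / mean implementation-phase rate,
    least-squares slope of the sustainability-phase rates per month).
    """
    ratio = mean(sustainability_rates) / mean(implementation_rates)
    months = range(len(sustainability_rates))
    m_bar, r_bar = mean(months), mean(sustainability_rates)
    sxx = sum((m - m_bar) ** 2 for m in months)
    sxy = sum((m - m_bar) * (r - r_bar) for m, r in zip(months, sustainability_rates))
    slope = sxy / sxx if sxx else 0.0  # a single month has no trend
    return ratio, slope
```

A ratio near 1 with a flat slope would suggest that a delivery model held up after support ended; a falling slope would flag erosion worth probing in the qualitative interviews.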

Aim 4

To examine the feasibility and acceptability of integrating HIV risk behavior screening within an adolescent SBIRT program, providing HIV risk behavior intervention, and offering HIV testing to adolescents at risk.

Feasibility will be assessed by determining the extent to which providers report providing HIV risk behavior screening to their patients. As part of the above-described provider checklist at the end of each encounter, PCPs will indicate whether they counseled regarding risky sexual behaviors. Reasons for adherence or non-adherence will also be assessed in qualitative interviews with providers.

2.8. Data Analysis (for Aims 1, 3, and 4)

Multi-level modeling (known alternatively in various disciplines as hierarchical linear modeling, random effects models, random coefficient models, nested models, and variance components models) is regarded as the optimal analytical strategy for cluster randomized trials because of its potential advantages in precision and efficiency. The multi-level modeling approach also handles unbalanced data well (e.g., clinics of different sizes with differing base rates of BI eligibility), although this imbalance will be mitigated by randomization stratified by clinic size. Thus, we will apply a multi-level modeling approach to test hypotheses related to Aim 1 (i.e., rates of appropriate SBIRT service delivery). We will also use this technique to examine those questions under Aims 3 and 4 that lend themselves to quantitative analysis (e.g., to model change in provider attitudes and acceptance of SBIRT under each implementation model).
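For illustration only, a two-level random-intercept logistic model for Aim 1 might take the form below. It assumes a binary outcome for each BI-eligible encounter and includes the clinic-size stratum used in randomization as a covariate; the protocol does not state the exact specification, and the symbols are introduced here.

$$
\operatorname{logit} \Pr(Y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{Specialist}_j + \beta_2\,\mathrm{Size}_j + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

Here $Y_{ij}$ indicates whether eligible patient $i$ at clinic $j$ received the indicated service, $\mathrm{Specialist}_j$ is 1 for Specialist clinics and 0 for Generalist clinics, $\mathrm{Size}_j$ codes the size stratum, and $u_j$ is the clinic random intercept that absorbs between-clinic variation; $\exp(\beta_1)$ is then the odds ratio comparing the two approaches.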

2.8.1. Qualitative Data

Semi-structured qualitative interviews will be recorded, professionally transcribed, and analyzed using a grounded theory approach with Atlas.ti qualitative analysis software. Grounded theory is a systematic, inductive approach to the analysis of qualitative data that uses the data itself to generate underlying theories of the key phenomena under investigation. It entails an iterative coding process in which themes, concepts, and ideas within a narrative are continually identified, categorized, questioned, and revised. Two independent coders will analyze the data separately, meet to discuss their findings and coding schemas, and reconcile differences until consensus is reached. We have used this approach extensively in our previous research (Peterson, 2010; Reisinger, 2009), and we will apply this rigorous strategy in the current study.
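The protocol reconciles coding differences through discussion to consensus rather than through a reliability statistic. If a team wanted a numeric check on agreement before reconciliation, one common option is Cohen’s kappa. The sketch below is offered in that spirit: it assumes each coder assigns exactly one code per text segment, and it is neither part of the described procedure nor an Atlas.ti feature.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two coders over the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Agreement expected if each coder assigned codes at their own base rates.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / n ** 2
    if expected == 1.0:
        # Both coders used one identical code everywhere; kappa is undefined,
        # and this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For example, two coders who agree on 3 of 4 segments, where one used each of two codes equally often and the other favored one code 3 to 1, get a kappa of 0.5 rather than the raw 75% agreement.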

2.9. Cost Data (for Aim 2)

The economic study is designed to estimate the costs and cost-effectiveness associated with implementing the adolescent SBIRT interventions under study. To estimate costs, we follow an activity-based costing approach in which relevant activities are identified and the resource use and costs associated with each activity are collected (Zarkin, 2004). Costs, collected from the treatment provider perspective, are separated into labor (e.g., time spent by clinical staff in intervention-related activities) and non-labor costs (e.g., contracted services, materials, and space). Research-related activities, such as data collection and analysis, are excluded from the cost estimates because they would not be performed in routine practice.

Costs are collected in two categories: (1) the costs associated with setting up and preparing for full implementation (i.e., start-up costs), and (2) the costs related to delivering the intervention (i.e., ongoing implementation costs). Start-up costs are typically incurred only once over a relatively short time frame. Start-up activities include administrative activities to set up and implement SBIRT (e.g., discussing logistics and planning how SBIRT will be implemented at each site, making relevant policy changes and changes to forms/systems, and getting buy-in from key stakeholders), staff training, and providing quality assurance and technical assistance (TA) during start-up. Ongoing activities are needed to maintain the SBIRT practice once the intervention program is operational. They include the screening and brief intervention itself and any other activities that support it, such as hand-offs for referral to outside specialist treatment, TA, and ongoing training.
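A minimal sketch of the activity-based costing logic described above: each activity carries staff hours by role plus non-labor costs, labor is valued at an hourly wage, and totals are accumulated separately for start-up and ongoing activities while research-only activities are dropped. The `Activity` fields, role names, and wage figures are hypothetical and stand in for the study’s actual cost instruments.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable


@dataclass
class Activity:
    """Hypothetical cost record for one SBIRT-related activity."""
    name: str
    category: str                       # "startup", "ongoing", or "research"
    staff_hours: Dict[str, float] = field(default_factory=dict)  # hours by role
    non_labor: float = 0.0              # contracted services, materials, space ($)


def activity_costs(activities: Iterable[Activity],
                   hourly_wage: Dict[str, float]) -> Dict[str, float]:
    """Labor + non-labor cost by category, excluding research-only activities."""
    totals = {"startup": 0.0, "ongoing": 0.0}
    for act in activities:
        if act.category == "research":
            continue  # e.g., study data collection would not occur in routine practice
        if act.category not in totals:
            raise ValueError(f"unknown category: {act.category}")
        labor = sum(hours * hourly_wage[role] for role, hours in act.staff_hours.items())
        totals[act.category] += labor + act.non_labor
    return totals
```

With hypothetical wages of $100/hour for a PCP and $20/hour for an MA, a training session using 2 PCP hours, 3 MA hours, and $50 of materials adds $310 to start-up costs, while a 15-minute PCP-delivered BI adds $25 to ongoing costs.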

Ongoing implementation costs will be used in a cost-effectiveness analysis to compare the cost and implementation success of each delivery model. If one implementation model is both more expensive and more effective, we will compute an Incremental Cost-Effectiveness Ratio (ICER), which is the ratio of the difference in costs to the difference in implementation outcomes and represents how much more a decision maker would have to pay to achieve one additional unit of the outcome (Drummond, 2005; Gold, 1996).
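The ICER definition above amounts to ΔC/ΔE. The sketch below, with hypothetical argument names, first checks for dominance (one model no more costly and at least as effective), in which case no ratio is needed, and otherwise returns the ratio. “Effect” here stands for any implementation outcome, for example the number of adolescents who received an indicated BI.

```python
from typing import Optional, Tuple


def icer(cost_a: float, effect_a: float,
         cost_b: float, effect_b: float) -> Tuple[str, Optional[float]]:
    """Compare delivery model A with model B on cost and an implementation outcome."""
    d_cost, d_effect = cost_a - cost_b, effect_a - effect_b
    if d_cost == 0 and d_effect == 0:
        return "identical", None
    if d_cost <= 0 and d_effect >= 0:
        return "A dominates", None   # no more costly and at least as effective
    if d_cost >= 0 and d_effect <= 0:
        return "B dominates", None
    # Remaining cases: one model is both more costly and more effective.
    return "icer", d_cost / d_effect  # extra cost per extra unit of outcome
```

For instance, with invented figures where model A costs $1,000 and delivers 30 indicated BIs while model B costs $600 and delivers 20, `icer(1000, 30, 600, 20)` returns `("icer", 40.0)`, i.e., $40 per additional BI delivered.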

2.10. System Dynamics Modeling

Holmes and colleagues (Holmes, 2012) argued that “Complex systems are not predictable and therefore cannot be understood through reductionism; however it is possible to achieve a level of understanding of a complex system by studying how it operates” (p. 177). The comprehensive scope of data being collected for this study, along with the longitudinal nature of our data collection, presents an opportunity both to observe and to examine the complexity of the primary care clinics’ delivery of the Generalist and Specialist approaches and of the implementation process strategies used.

Working in collaboration with selected clinic-based project stakeholders, we are developing and validating a system dynamics (SD) model that can be used to conduct simulated comparative effectiveness analyses of the Generalist vs. Specialist approach to the SBIRT intervention, examining implementation factors such as Acceptability, Timeliness, Adherence, Satisfaction, and Sustainability at the site (clinic) level.

2.10.2. Overview of SD modeling approach

SD model-building is an iterative research process that is complete when the model achieves sufficient ‘structural’ and ‘behavioral’ validity for its intended purpose (Barlas, 1989, 1996; Martinez-Moyano & Richardson, 2013; Martinez-Moyano, 2012). Procedures for establishing structural and behavioral validity are organized around the purpose of the model, the type and quality of the sources of evidence, and model calibration. In the current study, the purpose of the SD model is to help inform new organizational procedures and policies that adequately address implementation challenges and support the long-term sustainability of the intervention. The model is a working set of algebraic and ordinary differential equations, generally shown as a stock-and-flow diagram (Ventana Systems, Inc., 2008), which can then be used as a tool to explore hypotheses about the factors that contribute to the stated problem and to compare problem-solving strategies (Hovmand & Gillespie, 2010; Repenning, 2002). Major steps in SD model-building include problem identification, system conceptualization, model formulation, model simulation, and, finally, model evaluation (Roberts, 1983).
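To make the stock-and-flow idea concrete, the sketch below integrates a single hypothetical stock, the number of trained providers at one clinic, with Euler’s method. Training moves untrained staff into the stock and turnover moves trained staff out (departing providers are assumed to be replaced by untrained hires, so clinic staff size stays fixed). The structure, parameter values, and function name are illustrative assumptions, not the study’s model.

```python
from typing import List, Tuple


def simulate_trained_providers(trained0: float, months: float, dt: float = 0.25,
                               staff_size: float = 20.0, training_rate: float = 0.15,
                               turnover_rate: float = 0.03) -> Tuple[List[float], float, float]:
    """Euler integration of d(Trained)/dt = training - turnover (hypothetical model).

    training [providers/month] = training_rate [1/month] * (staff_size - Trained) [providers]
    turnover [providers/month] = turnover_rate [1/month] * Trained [providers]
    Returns the stock history plus cumulative inflow and outflow.
    """
    trained = trained0
    total_in = total_out = 0.0
    history = [trained]
    for _ in range(int(round(months / dt))):
        inflow = training_rate * (staff_size - trained)
        outflow = turnover_rate * trained
        trained += (inflow - outflow) * dt   # stock update: net flow x time step
        total_in += inflow * dt
        total_out += outflow * dt
        history.append(trained)
    return history, total_in, total_out
```

With these assumed rates the stock settles near 20 × 0.15 / (0.15 + 0.03) ≈ 16.7 trained providers; raising `turnover_rate` lowers that level, which is the kind of what-if question the staffing and turnover data listed below could inform.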

2.10.3. Sources of modeling data

The system dynamics modeling will be informed by quantitative data obtained through the current study’s multi-site, cluster-randomized design and by qualitative input obtained from stakeholders, namely MAs, nurses, PCPs, BHCs, and administrators. Five data sources are available to support our proposed SD modeling: (1) training data, i.e., detailed records of initial and booster training sessions (longitudinal); (2) patient visit and screening data, including the numbers of adolescent and non-adolescent patients (medical records, individual patients, longitudinal); (3) staffing data, i.e., clinical staffing and staff turnover (longitudinal); (4) structured provider interviews and semi-structured qualitative provider interviews about knowledge of barriers and facilitators (baseline and follow-up during the sustainability period); and (5) organizational impact data about facilitators or inhibitors of the intervention’s implementation, such as a catastrophic breakdown of a clinic’s electronic medical records system or an abrupt change in clinic leadership priorities relating to the intervention.

2.10.4. Final Assessment of Model Performance

We will conduct extensive tests to ensure that the system dynamics models meet established validation standards, applying procedures based on recommendations by Forrester (1980). Three categories of tests will be conducted: (1) verification tests, which confirm that the parameters of the model are logical, supported by one or more sources of information, and properly entered; (2) validation tests, which address the extent to which the simulated behavior of the model is realistic, that is, like the actual ‘real world’ dynamics it is intended to represent (e.g., the model must not produce nonsensical values, such as ‘negative persons’); and (3) legitimation tests, which affirm that the differential equations used to construct the model follow commonly accepted mathematical principles, namely that the model must be dimensionally valid (i.e., the units of measurement or quantification of the constructs or variables on each side of an equation must be the same) and, for material (i.e., physical) variables, must maintain ‘conservation of flow’ (i.e., everything that enters the system must be accounted for at any point within the model’s time horizon).
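Some of these checks are mechanical enough to automate. The sketch below covers one validation check (no negative stocks) and two legitimation checks (dimensional consistency and conservation of flow); verifying parameter sources still requires human review. Units are represented as hypothetical dictionaries mapping base units to exponents, so `{"person": 1, "month": -1}` means persons per month.

```python
import math
from collections import Counter
from typing import Dict, Sequence

Units = Dict[str, int]  # hypothetical: base unit -> exponent


def units_mul(a: Units, b: Units) -> Units:
    """Multiply two units by adding exponents; drop units that cancel."""
    out = Counter(a)
    for unit, exp in b.items():
        out[unit] += exp
    return {unit: exp for unit, exp in out.items() if exp != 0}


def dimensionally_valid(stock: Units, flow: Units, time_step: Units) -> bool:
    """Legitimation check: flow x time step must carry the stock's units."""
    return units_mul(flow, time_step) == stock


def no_negative_stock(history: Sequence[float]) -> bool:
    """Validation check: e.g., the model must never report 'negative persons'."""
    return all(value >= 0 for value in history)


def conserves_flow(stock0: float, stock_final: float, total_in: float,
                   total_out: float, tol: float = 1e-9) -> bool:
    """Legitimation check: everything entering or leaving the stock is accounted for."""
    return math.isclose(stock0 + total_in - total_out, stock_final, abs_tol=tol)
```

A stock measured in persons fed by a flow in persons per month passes the dimensional check over a one-month step, whereas a flow mistakenly specified in plain persons fails it.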

3. Discussion

The present adolescent SBIRT Implementation study seeks to add to the existing knowledge base with respect to both effective implementation strategies for use within primary care environments and implementation approaches for delivering adolescent SBIRT services in primary care settings. Those settings are routinely visited by adolescents but have historically lacked strategies for identifying and addressing substance use. Successful integration of SBIRT into primary care holds promise for reducing the significant social and economic consequences associated with alcohol, tobacco, and other drug use by youth, including impaired driving and injury, high-risk sexual behavior, violence, delinquency and future criminal activity, development of health problems and drug dependence throughout the life course, and escalation of future healthcare costs (J. Bernstein et al., 2010; D'Amico et al., 2008; McCambridge & Strang, 2004; Mitchell et al., in press; Spirito et al., 2004; Tait & Hulse, 2003; Walton et al., 2010; Winters & Leitten, 2007). Identifying an effective implementation model could pave the way to bring adolescent SBIRT to scale across the nation.

The present study uses Proctor’s model because of the breadth of implementation outcomes it considers, capturing both the complex system and the nuanced “fit” between the intervention being implemented and the people tasked with carrying it out. Proctor’s model incorporates several components of the RE-AIM framework (albeit under somewhat different terms) but expands upon that framework by specifically examining the perceptions of the providers themselves, which we judged would provide a more comprehensive picture when implementing a practice in a primary care setting.

Although reimbursement mechanisms to support SBIRT have existed for several years (Goplerud & Anderson, 2008), many providers, while endorsing the idea of SBIRT, fail to implement an SBIRT program. Likewise, existing SBIRT programs have faced challenges to sustainability following the expiration of federal funding (Gonzales, 2012). Estimates of the cost of SBIRT vary widely because of diverse screening and intervention methods and variability in costing methodology (Cowell, Bray, Mills, & Hinde, 2010). While several economic studies have found support for the cost-benefit or cost-effectiveness of SBIRT in adults (Fleming et al., 2000, 2002; Gentilello, Ebel, Wickizer, Salkever, & Rivara, 2005; Kunz, French, & Bazargan-Hejazi, 2004; Wutzke, Shiell, Gomel, & Conigrave, 2001), this literature provides limited guidance on the implementation costs of adolescent SBIRT. Only one study has compared the costs of SBIRT, specifically its screening and BI components, across providers and different models of implementation (Zarkin et al., 2003). That study used activity-based costing to separate costs into start-up and ongoing implementation costs, a distinction that had been overlooked in previous studies. It was also the only study that estimated the costs of the technical assistance required to establish and assure quality in an SBIRT program. The present study will provide important data on the cost-effectiveness of the principal competing implementation strategies for adolescent SBIRT.

Rather than over-simplifying these complex health care environments, our study will use SD modeling to examine the service delivery process in an attempt to determine the points at which the service delivery process is disrupted or inhibited in some manner, thereby identifying more effective implementation strategies to use in future implementation efforts. Given the national emphasis on integrating substance abuse screening and treatment within medical care environments, much can be learned from this ongoing study.

Although we have only recently completed the Implementation phase and are currently in the Sustainability phase of our study, several preliminary “lessons learned” have already emerged. First, attempting to implement a more integrated service delivery strategy within an organization with co-located mental health services poses numerous challenges across both the start-up and Implementation phases, adding layers of communication and coordination while attempting to ensure that delivering adolescent SBIRT interventions is equally emphasized across both systems. Second, asking about substance use can often uncover co-occurring mental health issues. Ensuring that behavioral health staff are brought in at the correct time and for appropriate reasons, irrespective of the service delivery strategy being employed, is essential. Finally, flexibility is critical when working within a complex health system, both from an organizational standpoint (when implementing a new initiative) and from the providers’ standpoint (as they strive to meet the diverse health needs of their patients on a daily basis).

Highlights.

This on-going cluster randomized trial of Adolescent SBIRT is being implemented in 7 Federally Qualified Health Center clinics.

Proctor’s Implementation outcomes are being examined.

Two different service delivery approaches are being compared: Generalist (BI provided by primary care physician) vs. Specialist (BI provided by a behavioral health counselor).

The integration of HIV discussions within the Brief Intervention is also being examined.

Acknowledgement

We thank Ms. Faye Royale-Larkins and the staff of Total Health Care for their collaboration on this implementation project. We also thank Mr. Josh Li and Ms. Lauren Restivo for their assistance with manuscript preparation and Drs. Tisha Wiley and Lori Ducharme for their continued guidance.

Declarations of interest and source of funding: The study was supported through National Institute on Drug Abuse (NIDA) Grant 1R01DA034258-01 (PI Mitchell). NIDA had no role in the design and conduct of the study; data acquisition, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript.

Footnotes


Disclosures: No financial disclosures.

Clinical Trials Registration: Clinicaltrials.gov NCT01829308

References

- Aalto M, Pekuri P, Seppa K. Obstacles to carrying out brief intervention for heavy drinkers in primary health care: a focus group study. Drug Alcohol Rev. 2003;22(2):169–173. doi: 10.1080/09595230100100606.

- Babor TF, Kadden RM. Screening and interventions for alcohol and drug problems in medical settings: what works? J Trauma. 2005;59(3 Suppl):S80–S87; discussion S94–S100. doi: 10.1097/01.ta.0000174664.88603.21.

- Barlas Y. Multiple tests for validation of system dynamics type of simulation models. European Journal of Operational Research. 1989;42(1):59–87.

- Barlas Y. Formal aspects of model validity and validation in system dynamics. System Dynamics Review. 1996;12(3):183–210.

- Bernstein E, Edwards E, Dorfman D, Heeren T, Bliss C, Bernstein J. Screening and brief intervention to reduce marijuana use among youth and young adults in a pediatric emergency department. Acad Emerg Med. 2009;16(11):1174–1185. doi: 10.1111/j.1553-2712.2009.00490.x.

- Bernstein J, Bernstein E, Tassiopoulos K, Heeren T, Levenson S, Hingson R. Brief motivational intervention at a clinic visit reduces cocaine and heroin use. Drug Alcohol Depend. 2005;77(1):49–59. doi: 10.1016/j.drugalcdep.2004.07.006.

- Bernstein J, Heeren T, Edward E, Dorfman D, Bliss C, Winter M, Bernstein E. A brief motivational interview in a pediatric emergency department, plus 10-day telephone follow-up, increases attempts to quit drinking among youth and young adults who screen positive for problematic drinking. Acad Emerg Med. 2010;17(8):890–902. doi: 10.1111/j.1553-2712.2010.00818.x.

- Boekeloo BO, Griffin MA. Review of clinical trials testing the effectiveness of physician approaches to improving alcohol education and counseling in adolescent outpatients. Current Pediatric Reviews. 2007;3:93–101. doi: 10.2174/157339607779941679.

- Bower P, Gilbody S. Managing common mental health disorders in primary care: conceptual models and evidence base. BMJ. 2005;330(7495):839–842. doi: 10.1136/bmj.330.7495.839.

- Clark DB, Martin CS, Cornelius JR. Adolescent-onset substance use disorders predict young adult mortality. J Adolesc Health. 2008;42(6):637–639. doi: 10.1016/j.jadohealth.2007.11.147.

- Colby SM, Monti PM, O'Leary Tevyaw T, Barnett NP, Spirito A, Rohsenow DJ, Lewander W. Brief motivational intervention for adolescent smokers in medical settings. Addict Behav. 2005;30(5):865–874. doi: 10.1016/j.addbeh.2004.10.001.

- Cowell AJ, Bray JW, Mills MJ, Hinde JM. Conducting economic evaluations of screening and brief intervention for hazardous drinking: Methods and evidence to date for informing policy. Drug Alcohol Rev. 2010;29(6):623–630. doi: 10.1111/j.1465-3362.2010.00238.x.

- D'Amico EJ, Miles JN, Stern SA, Meredith LS. Brief motivational interviewing for teens at risk of substance use consequences: a randomized pilot study in a primary care clinic. J Subst Abuse Treat. 2008;35(1):53–61. doi: 10.1016/j.jsat.2007.08.008.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- Englund MM, Egeland B, Oliva EM, Collins WA. Childhood and adolescent predictors of heavy drinking and alcohol use disorders in early adulthood: a longitudinal developmental analysis. Addiction. 2008;103(Suppl 1):23–35. doi: 10.1111/j.1360-0443.2008.02174.x.

- Erickson SJ, Gerstle M, Feldstein SW. Brief interventions and motivational interviewing with children, adolescents and their parents in pediatric health care settings. Arch Pediatr Adolesc Med. 2005;159:1173–1180. doi: 10.1001/archpedi.159.12.1173.

- Fergusson DM, Lynskey MT. Alcohol misuse and adolescent sexual behaviors and risk taking. Pediatrics. 1996;98(1):91–96.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231); 2005.

- Fleming MF, Mundt MP, French MT, Manwell LB, Stauffacher EA, Barry KL. Benefit-cost analysis of brief physician advice with problem drinkers in primary care settings. Med Care. 2000;38(1):7–18. doi: 10.1097/00005650-200001000-00003.

- Fleming MF, Mundt MP, French MT, Manwell LB, Stauffacher EA, Barry KL. Brief physician advice for problem drinkers: long-term efficacy and benefit-cost analysis. Alcohol Clin Exp Res. 2002;26(1):36–43.

- Gentilello LM, Ebel BE, Wickizer TM, Salkever DS, Rivara FP. Alcohol interventions for trauma patients treated in emergency departments and hospitals: a cost benefit analysis. Ann Surg. 2005;241(4):541–550. doi: 10.1097/01.sla.0000157133.80396.1c.

- Goplerud E, Anderson DR. 2008: The breakout year for SBI. Alcoholism and Drug Abuse Weekly. 2008;20(1):5.

- Gryczynski J, Mitchell SG, Peterson TR, Gonzales A, Moseley A, Schwartz RP. The relationship between services delivered and substance use outcomes in New Mexico's Screening, Brief Intervention, Referral and Treatment (SBIRT) Initiative. Drug Alcohol Depend. 2011;118(2–3):152–157. doi: 10.1016/j.drugalcdep.2011.03.012. PMCID: PMC21482039.

- Heckman CJ, Egleston BL, Hofmann MT. Efficacy of motivational interviewing for smoking cessation: a systematic review and meta-analysis. Tob Control. 2010;19(5):410–416. doi: 10.1136/tc.2009.033175.

- Hingson RW, Heeren T, Winter MR. Age at drinking onset and alcohol dependence: age at onset, duration, and severity. Arch Pediatr Adolesc Med. 2006;160(7):739–746. doi: 10.1001/archpedi.160.7.739.

- Hollis JF, Polen MR, Whitlock EP, Lichtenstein E, Mullooly JP, Velicer WF, Redding CA. Teen reach: outcomes from a randomized, controlled trial of a tobacco reduction program for teens seen in primary medical care. Pediatrics. 2005;115(4):981–989. doi: 10.1542/peds.2004-0981.

- Hovmand PS, Gillespie DF. Implementation of Evidence-Based Practice and Organizational Performance. Journal of Behavioral Health Services & Research. 2010;37(1):79–94. doi: 10.1007/s11414-008-9154-y.


- Knight JR, Sherritt L, Harris SK, Gates EC, Chang G. Validity of brief alcohol screening tests among adolescents: a comparison of the AUDIT, POSIT, CAGE, and CRAFFT. Alcohol Clin Exp Res. 2003;27(1):67–73. doi: 10.1097/01.ALC.0000046598.59317.3A. [DOI] [PubMed] [Google Scholar]

- Knight JR, Sherritt L, Shrier LA, Harris SK, Chang G. Validity of the CRAFFT substance abuse screening test among adolescent clinic patients. Arch Pediatr Adolesc Med. 2002;156(6):607–614. doi: 10.1001/archpedi.156.6.607. [DOI] [PubMed] [Google Scholar]

- Kunz FM, Jr, French MT, Bazargan-Hejazi S. Cost-effectiveness analysis of a brief intervention delivered to problem drinkers presenting at an inner-city hospital emergency department. J Stud Alcohol. 2004;65(3):363–370. doi: 10.15288/jsa.2004.65.363. [DOI] [PubMed] [Google Scholar]

- Madras BK, Compton WM, Avula D, Stegbauer T, Stein JB, Clark HW. Screening, brief interventions, referral to treatment (SBIRT) for illicit drug and alcohol use at multiple healthcare sites: comparison at intake and 6 months later. Drug Alcohol Depend. 2009;99(1–3):280–295. doi: 10.1016/j.drugalcdep.2008.08.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martin G, Copeland J. The adolescent cannabis check-up: randomized trial of a brief intervention for young cannabis users. J Subst Abuse Treat. 2008;34(4):407–414. doi: 10.1016/j.jsat.2007.07.004.

- Martinez-Moyano IJ, Richardson GP. Best practices in system dynamics modeling. Syst Dyn Rev. 2013;29(2):102–123.

- Martinez-Moyano IJ. Documentation for model transparency. Syst Dyn Rev. 2012;28(2):199–208.

- Mathers M, Toumbourou JW, Catalano RF, Williams J, Patton GC. Consequences of youth tobacco use: a review of prospective behavioural studies. Addiction. 2006;101(7):948–958. doi: 10.1111/j.1360-0443.2006.01438.x.

- McCambridge J, McAlaney J, Rowe R. Adult consequences of late adolescent alcohol consumption: a systematic review of cohort studies. PLoS Med. 2011;8(2):e1000413. doi: 10.1371/journal.pmed.1000413.

- McCambridge J, Strang J. The efficacy of single-session motivational interviewing in reducing drug consumption and perceptions of drug-related risk and harm among young people: results from a multi-site cluster randomized trial. Addiction. 2004;99(1):39–52. doi: 10.1111/j.1360-0443.2004.00564.x.

- Miller JW, Naimi TS, Brewer RD, Jones SE. Binge drinking and associated health risk behaviors among high school students. Pediatrics. 2007;119(1):76–85. doi: 10.1542/peds.2006-1517.

- Millstein SG, Marcell AV. Screening and counseling for adolescent alcohol use among primary care physicians in the United States. Pediatrics. 2003;111(1):114–122. doi: 10.1542/peds.111.1.114.

- Mitchell SG, Gryczynski J, Peterson T, Gonzales A, Moseley A, Schwartz RP. Screening, brief intervention, and referral to treatment (SBIRT) for substance use in a school-based program: services and outcomes. Am J Addict. In press. doi: 10.1111/j.1521-0391.2012.00299.x.

- Monti PM, Colby SM, Barnett NP, Spirito A, Rohsenow DJ, Myers M, Lewander W. Brief intervention for harm reduction with alcohol-positive older adolescents in a hospital emergency department. J Consult Clin Psychol. 1999;67(6):989–994. doi: 10.1037//0022-006x.67.6.989.

- Moore BA, Fiellin DA, Barry DT, Sullivan LE, Chawarski MC, O'Connor PG, Schottenfeld RS. Primary care office-based buprenorphine treatment: comparison of heroin and prescription opioid dependent patients. J Gen Intern Med. 2007;22(4):527–530. doi: 10.1007/s11606-007-0129-0.

- Moyer A, Finney JW. Brief interventions for alcohol problems: factors that facilitate implementation. Alcohol Res Health. 2004;28(1):44–50. Available at: http://pubs.niaaa.nih.gov/publications/arh28-41/44-50.pdf.

- Newacheck PW, Brindis CD, Cart CU, Marchi K, Irwin CE. Adolescent health insurance coverage: recent changes and access to care. Pediatrics. 1999;104(2 Pt 1):195–202. doi: 10.1542/peds.104.2.195.

- Ondersma SJ, Svikis DS, Schuster CR. Computer-based brief intervention: a randomized trial with postpartum women. Am J Prev Med. 2007;32(3):231–238. doi: 10.1016/j.amepre.2006.11.003.

- Peterson AV, Jr, Kealey KA, Mann SL, Marek PM, Ludman EJ, Liu J, Bricker JB. Group-randomized trial of a proactive, personalized telephone counseling intervention for adolescent smoking cessation. J Natl Cancer Inst. 2009;101(20):1378–1392. doi: 10.1093/jnci/djp317.

- Pincus HA. Linking general health and mental health systems of care: conceptual models of implementation. Am J Psychiatry. 1980;137(3):315–320. doi: 10.1176/ajp.137.3.315.

- Pincus HA. Patient-oriented models for linking primary care and mental health care. Gen Hosp Psychiatry. 1987;9(2):95–101. doi: 10.1016/0163-8343(87)90020-x.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- Reinert DF, Allen JP. The alcohol use disorders identification test: an update of research findings. Alcohol Clin Exp Res. 2007;31(2):185–199. doi: 10.1111/j.1530-0277.2006.00295.x.

- Repenning NA. A simulation-based approach to understanding the dynamics of innovation implementation. Organ Sci. 2002;13:109–127.

- Saitz R, Palfai TP, Cheng DM, Alford DP, Bernstein JA, Lloyd-Travaglini CA, Meli SM, Chaisson CE, Samet JH. Screening and brief intervention for drug use in primary care: the ASPIRE randomized clinical trial. JAMA. 2014;312(5):502–513. doi: 10.1001/jama.2014.7862.

- Simpson DD, Flynn PM. Moving innovations into treatment: a stage-based approach to program change. J Subst Abuse Treat. 2007;33(2):111–120. doi: 10.1016/j.jsat.2006.12.023.

- Spirito A, Monti PM, Barnett NP, Colby SM, Sindelar H, Rohsenow DJ, Myers M. A randomized clinical trial of a brief motivational intervention for alcohol-positive adolescents treated in an emergency department. J Pediatr. 2004;145(3):396–402. doi: 10.1016/j.jpeds.2004.04.057.

- Swift W, Coffey C, Carlin JB, Degenhardt L, Patton GC. Adolescent cannabis users at 24 years: trajectories to regular weekly use and dependence in young adulthood. Addiction. 2008;103(8):1361–1370. doi: 10.1111/j.1360-0443.2008.02246.x.

- Tait RJ, Hulse GK. A systematic review of the effectiveness of brief interventions with substance using adolescents by type of drug. Drug Alcohol Rev. 2003;22(3):337–346. doi: 10.1080/0959523031000154481.

- Tait RJ, Hulse GK, Robertson SI. Effectiveness of a brief-intervention and continuity of care in enhancing attendance for treatment by adolescent substance users. Drug Alcohol Depend. 2004;74(3):289–296. doi: 10.1016/j.drugalcdep.2004.01.003.

- Tanner-Smith EE, Lipsey MW. Brief alcohol interventions for adolescents and young adults: a systematic review and meta-analysis. J Subst Abuse Treat. 2015;51:1–18. doi: 10.1016/j.jsat.2014.09.001.

- Tripodi SJ, Bender K, Litschge C, Vaughn MG. Interventions for reducing adolescent alcohol abuse: a meta-analytic review. Arch Pediatr Adolesc Med. 2010;164(1):85–91. doi: 10.1001/archpediatrics.2009.235.

- Ventana Systems Inc. Vensim: Simulator for Business Open Education Resources. 2008.

- Wachtel T, Staniford M. The effectiveness of brief interventions in the clinical setting in reducing alcohol misuse and binge drinking in adolescents: a critical review of the literature. J Clin Nurs. 2010;19(5–6):605–620. doi: 10.1111/j.1365-2702.2009.03060.x.

- Walker DD, Stephens R, Roffman R, Demarce J, Lozano B, Towe S, Berg B. Randomized controlled trial of motivational enhancement therapy with nontreatment-seeking adolescent cannabis users: a further test of the teen marijuana check-up. Psychol Addict Behav. 2011;25(3):474–484. doi: 10.1037/a0024076.

- Walton MA, Chermack ST, Shope JT, Bingham CR, Zimmerman MA, Blow FC, Cunningham RM. Effects of a brief intervention for reducing violence and alcohol misuse among adolescents: a randomized controlled trial. JAMA. 2010;304(5):527–535. doi: 10.1001/jama.2010.1066.

- Windle M. Suicidal behaviors and alcohol use among adolescents: a developmental psychopathology perspective. Alcohol Clin Exp Res. 2004;28(5 Suppl):29S–37S. doi: 10.1097/01.alc.0000127412.69258.ee.

- Winters KC, Leitten W. Brief intervention for drug-abusing adolescents in a school setting. Psychol Addict Behav. 2007;21(2):249–254. doi: 10.1037/0893-164X.21.2.249.

- Wutzke SE, Shiell A, Gomel MK, Conigrave KM. Cost effectiveness of brief interventions for reducing alcohol consumption. Soc Sci Med. 2001;52(6):863–870. doi: 10.1016/s0277-9536(00)00189-1.

- Zarkin GA, Bray JW, Davis KL, Babor TF, Higgins-Biddle JC. The costs of screening and brief intervention for risky alcohol use. J Stud Alcohol. 2003;64(6):849–857. doi: 10.15288/jsa.2003.64.849.