Abstract

In order to facilitate the extraction of quantitative data from live cell image sets, automated image analysis methods are needed. This paper presents an introduction to the general principle of an overlap cell tracking software developed by the National Institute of Standards and Technology (NIST). This cell tracker has the ability to track cells across a set of time lapse images acquired at high rates based on the amount of overlap between cellular regions in consecutive frames. It is designed to be highly flexible, requires little user parameterization, and has a fast execution time.

Keywords: cell motility, live-cell imaging, overlap-based cell tracking, time-lapse cell imaging

1. Introduction

Automated microscopy has facilitated the large scale acquisition of live cell image data [Sig06, Gor07, Dav07, and Bah05]. In the case of low magnification imaging in transmission mode, the migration, morphology, and lineage development of large numbers of single cells in culture can be monitored. However, obtaining quantitative data related to single cell behavior requires image analysis methods that can accurately segment and track cells. When fluorescence protein gene reporters are used, the activity of specific genes can be related to phenotypic changes at a single cell level. The analysis of living, single cells also provides information on the variability that exists within homogeneous cell populations [Ras05 and Si206]. Furthermore, multiple fluorescence protein reporters transfected into single cells can be used to understand the sequence of transcriptional changes that occurs in response to perturbations. In order to facilitate the extraction of quantitative data from live cell image sets, automated image analysis methods are needed.

The diversity of both cell imaging techniques and the cell lines used in biological research is enormous making the task of developing reliable segmentation and cell tracking algorithms even harder. Many popular cell tracking techniques are based on complex probabilistic models. In [Bah05] Gaussian probability density functions are used to characterize the selected tracking criteria. In [Mar06] cells are tracked by fitting their tracks to a persistent random walk model based on mean square displacement. In [Lia08] the final cell trajectories and lineages are established based on the entire tracking history by using the interacting multiple models (IMM) filter [Gen06]. In [Kha05], a Markov Chain Monte Carlo based particle filter is used to initially detect the position of the targets and then a Rao-Blackwellized particle filter is applied. An important class of tracking techniques consists of level set methods [Bes00, Man02, and Shi05]. They produce fairly accurate tracking results but are difficult to implement and computationally expensive. The tracking techniques proposed in [Dor02, Ray02, Zim02] are commonly referred to as active contour or snake techniques. In general they do not consider all possible tracking candidates in the frame, but focus on the candidates corresponding to a predefined model (e.g., located around a reference initial position). Finally, tracking techniques based on mean-shift algorithms provide a fast solution, but often do not provide accurate information about object contours [Col03, Com03, Deb05]. Many available techniques are computationally expensive and have a large number of parameters to adjust for every track. We propose a new technique that can produce accurate tracking with a small set of adjustable parameters in situations where cell movement between consecutive frames is limited so that there is typically some cell pixel overlap between frames.

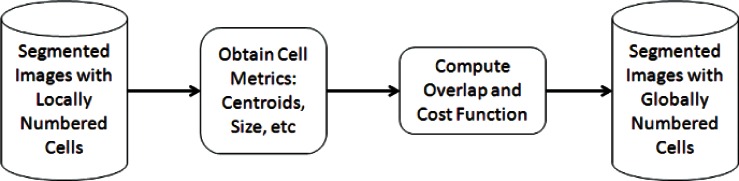

Our experience shows that when acquiring time-lapse images at intervals ranging from 5 min to 15 min, the movement of cultured mammalian cells between two consecutive frames will be relatively small. This means that between consecutive frames a typical cell will occupy nearly the same position. In order to effectively analyze large volumes of data (> 10 000 images) an automated process requiring very little manual intervention and involving a simple and meaningful set of parameters is needed. The overlap-based cell tracking software developed by NIST was designed with this goal in mind. It tracks cells across a set of time lapse images based on the amount of overlap between cellular regions in consecutive frames. It is designed to be highly flexible and suitable for use in a wide range of applications, requires little user interaction during the tracking process, and has a fast execution time. Though it requires that the change in a cell’s location from one frame to the next be relatively small to work reliably, acquiring images at 5 min to 15 min intervals is feasible with standard automated live cell imaging systems and provides image data that is suitable for an overlap-based algorithm. The core tracking algorithm is shown in Fig. 1.

Fig. 1.

Core algorithm

In this paper, a general formulation of the motion tracking problem will be given, followed by a brief description of the input data and of the tracking criteria employed. Some instances of application of the tracking software will be presented to further illustrate its capabilities. We will conclude with a brief summary of our results.

2. Problem Statement

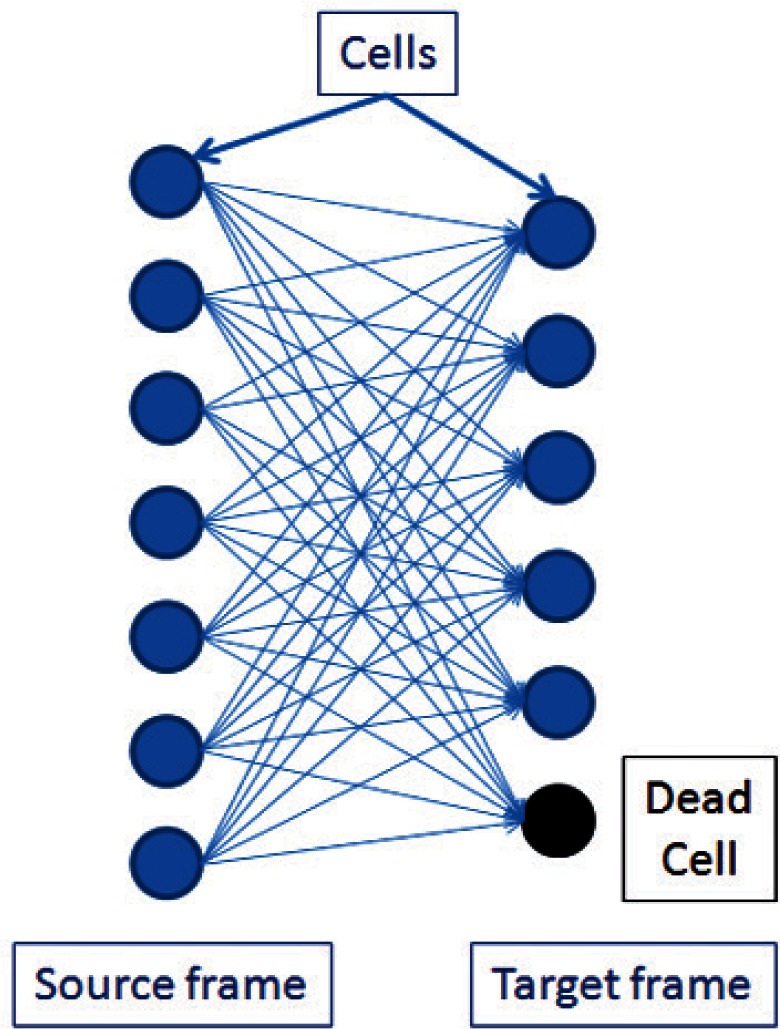

Cellular tracking techniques are used to obtain motion and life cycle behavior information about cells by following the cells of interest through multiple, time sequential images. The cell tracking problem can be defined as: given a cell A from a current (source) image, identify the corresponding cell B, if any, in the subsequent (target) image. If cell A is tracked to B, then the two cells are the same cell at successive moments in time. This process involves examining all possible combinatorial mappings of the cells in a source image to the cells in the target image (Fig. 2) and finding the optimal mapping. The process is then repeated using the target image as the source image and the next image in the set as the target image until the entire set of images has been traversed. The image to image mappings are then chained together to form a complete life-cycle track of every individual cell in the image set.

Fig. 2.

Possible combinatorial tracking between two consecutive frames.

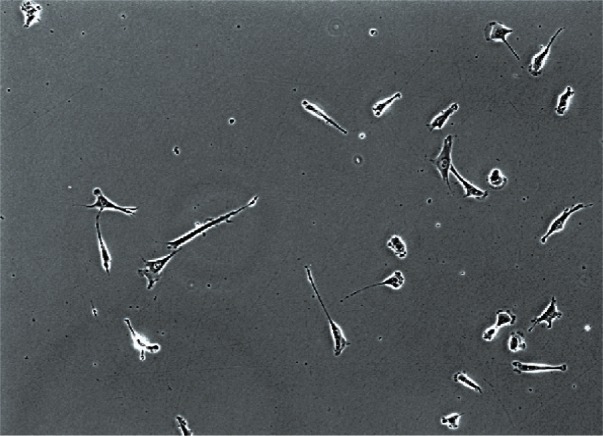

Many different types of imagery can be obtained with modern cellular microscopy instruments—in our case we will be working with phase contrast images of NIH-3T3 fibroblasts, shown in Fig. 3 below.

Fig. 3.

Example of a phase contrast microscopy image.

3. Image Data and Preliminary Definitions

The input of the tracking algorithm is a series of segmented images (masks) derived from the raw microscopy data. The masks identify the individual pixels in an image that correspond to a cellular region and are generated from the raw phase contrast microscope images using automated image segmentation. Many segmentation techniques exist in the literature; some are general purpose and others are specific to a cell line and/or image acquisition parameters. The specifics of the segmentation algorithm used in this project will not be addressed here and in general the NIST cell tracking algorithm can be used with any segmentation algorithm. It is important to note however that the reliability of the tracking outcome is highly dependent on the accuracy of the segmentation.

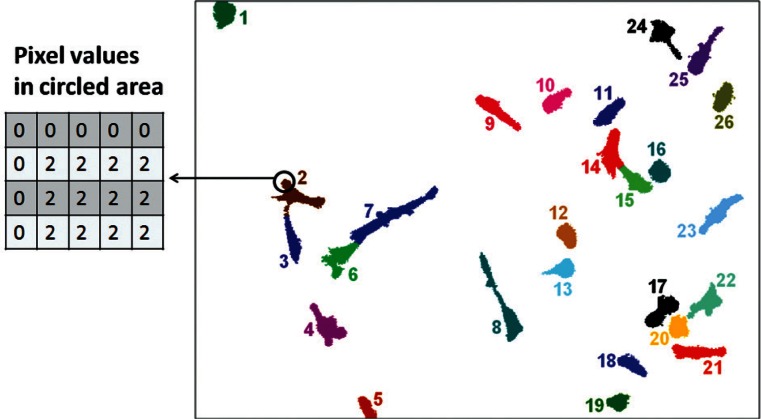

The notation used to refer to a segmented image or mask is Ik, with k = 1,2, …, N, Ik is the kth image in the set and N is the total number of images in the set. The segmentation process sets the value of all background pixels in the mask to zero. It sets the value of all pixels segmented into a cellular region to a positive integer value called the cell number (Fig. 4). The cell numbers are assigned to each segmented region starting at 1 and continuing incrementally until all segmented regions have been labeled. The regions are numbered in the order in which the cells are encountered. The notation used to represent a given pixel at a location in the image is p(x, y), where:

| (1) |

Fig. 4.

Segmented image mask for the example image in Fig. 3.

The notation is used to identify cell number i from the kth image. i = 1,2,…, Mk. Mk represents the total number of cells that are present in the kth image. For visual clarity, each number is also represented by a unique color when plotted. Figure 4 shows the segmented image generated from the phase contrast image in Fig. 3.

4. The Overlap-Based Tracking Concept

The NIST cell tracking algorithm computes a cost for each possible cell-to-cell mapping based on some simple tracking criteria. The cost value represents a measure of the probability that cell from image Ik should be tracked to cell in the subsequent image. The cost function has been defined in such a way that the higher the cost value is, the lower the probability that the two cells should be identified as being the same cell across frames. A general definition of the cost function between a pair of cells from two different images is given as follows:

| (2) |

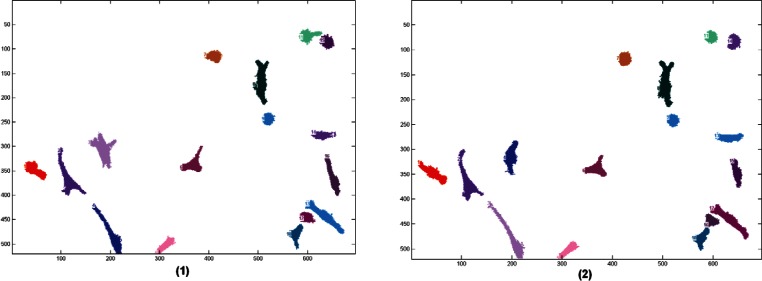

Before describing in detail the tracking criteria used in this paper, consider the two consecutive segmented phase-contrast images shown in Fig. 5 below. Note, that individual cells do not significantly change their position between consecutive frames. This is more easily seen in Fig. 6 where the images are superimposed. This suggests that the number of common pixels (the overlap) between a pair of cells can be used as the principal measure of cost. If a pair of cells shares a large number of overlapping pixels, then these two cells are most likely the same cell in different images. If more than two cells overlap we will need to employ additional criteria to further refine the cost. It is important to note that for this technique to work reliably the images must be acquired at a sufficiently high rate to minimize cell movement between successive frames. If the images are too far apart in time the cells may migrate great distances across the image window and will exhibit little or no overlap. At low acquisition rates cell motion may appear so chaotic that even a human observer will find it difficult to identify them correctly. The acquisition rate used for the NIST 3T3 cells tracked in this paper is typical for this type of cell line.

Fig. 5.

Image 1 and Image 2—two consecutive segmented images.

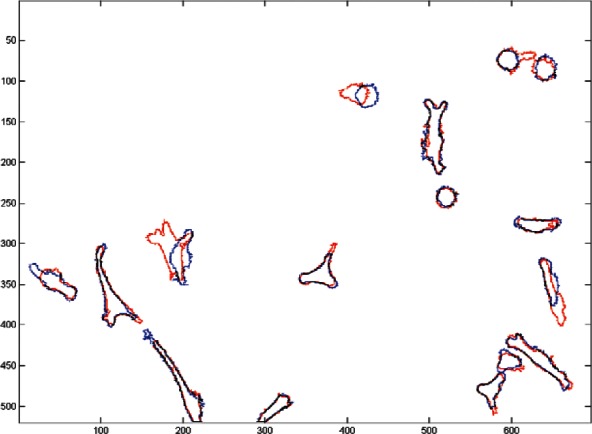

Fig. 6.

Image 1 (red outline) superimposed on Image 2 (blue outline).

The cost function uses the following criteria for computing the cost of a mapping:

The amount of overlap between source and target cells.

The Euclidean distance (offset) between the centroids of the source and target cells.

The difference in size between the source and target cells.

The metrics used for quantifying these criteria are normalized between 0 and 1. A value of zero denotes a perfect match between a pair of cells: all pixels overlap, the centroids are in the same location and cells have the same size. The cost function is defined as a sum of the individual metrics, each representing a tracking criterion. Hence, lower values of the cost function indicate a higher probability that the source and target cells are the same cell. This mathematical representation carries desirable properties such as differentiability and the ease of including additional tracking criteria by adding new terms. Since the terms of the summation were defined in such a way that they are independent, they can be modified as needed without affecting the remaining terms.

A more complete mathematical statement of the cost function is:

where:

wo = the weight of the overlap term,

O = an overlap metric,

wc = the weight of the centroid offset term,

δc = a centroid offset metric,

ws = the weight of the cell size term, and

δs = a cell size metric.

The weights are provided for flexibility and allow the basic algorithm to be tailored for use with different cell lines and image acquisition conditions. For example if the image acquisition rate were high and cells overlap greatly between two consecutive frames then wo should be set to a high value. If the size of the cells changes very little between two consecutive frames then a larger weight can be given for the size term. The weights used in the examples presented in this paper are:

4.1 Pathological Filtering

Some source/target pairs are so obviously undesirable that they are filtered prior to applying the cost function. Specifically, if the source and target cells have no pixels in common and the distance (in pixels) between their centroids is greater than a user defined threshold value, then the mapping is assigned an arbitrarily high cost (MAX_COST) to ensure that it will never be chosen. For example, a cell in the upper right corner should not be tracked to a cell in the lower left corner (cells don’t jump that much between consecutive frames). By definition mappings with a cost of MAX_COST are invalid. This filtering is derived from common sense and experience with cell biology and cell morphology.

4.2 The Overlap Metric

The overlap metric for a source/target pair is a measure of the number of pixels the two cells have in common between two consecutive frames. It is computed using the formula:

where:

= the size in pixels of the source cell,

= the size in pixels of the target cell, and

= the number of pixels the two cells have in common.

4.3 The Centroid Metric

The centroid metric is a measure of the Euclidean distance between the centroids of the source and target cells between two consecutive frames. Let the width and height (in pixels) of a frame be represented by the symbols Iwidth and Iheight and denote the centroid coordinates (in pixels) of cell i in frame k by the symbols . The centroid metric for a source/target pair is computed as:

4.4 The Size Metric

The size metric is a measure of the relative difference in the sizes of the source and target cells in two consecutive frames. It is computed as:

4.5 Tracking Solution

Once the individual cell mappings between consecutive frames have been computed, the frame-to-frame mappings are combined to produce a complete life cycle track of all the cells in the set of images. The sequentially assigned cell numbers given by the segmentation process for the cells in each frame are replaced with uniquely numbered track numbers that identify the movement of each cell in time across the entire set of images. Therefore a unique track number tn will be associated to each uniquely identified cell, n = 1,2, …, T where T represents the total number of unique cells found in the image set. The pixels in the images are relabeled to reflect the new track numbers such that when a pair of cells has been assigned with a tracking number the pixels from all images that belong to a given cell will all have the same value.

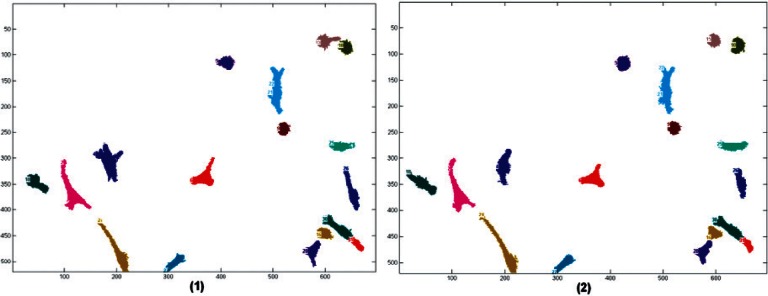

In Fig. 5, in each segmented image, the cells were numbered randomly from 1 to max. When these cells are given a global number, they will carry the same number thru time. Figure 7 shows that this is also reflected by the colors of the cells, the same cell will have the same color throughout the images.

Fig. 7.

Two consecutive tracked images. The cells that were identified as being the same were given the same number and color in both images.

5. Results and Outputs

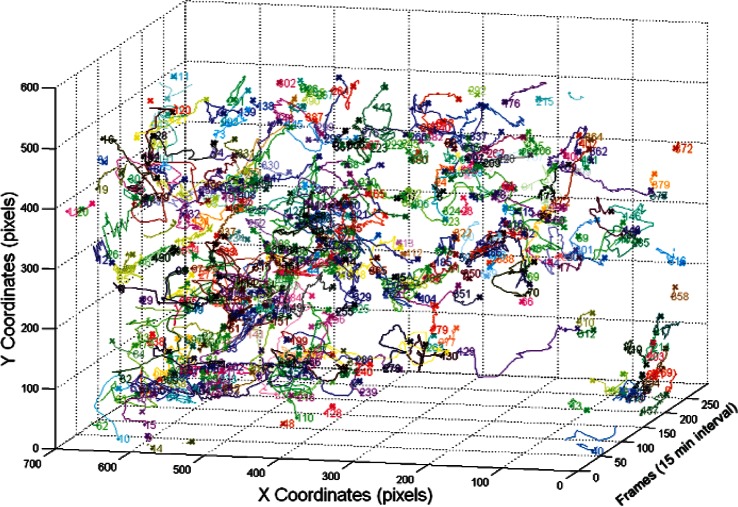

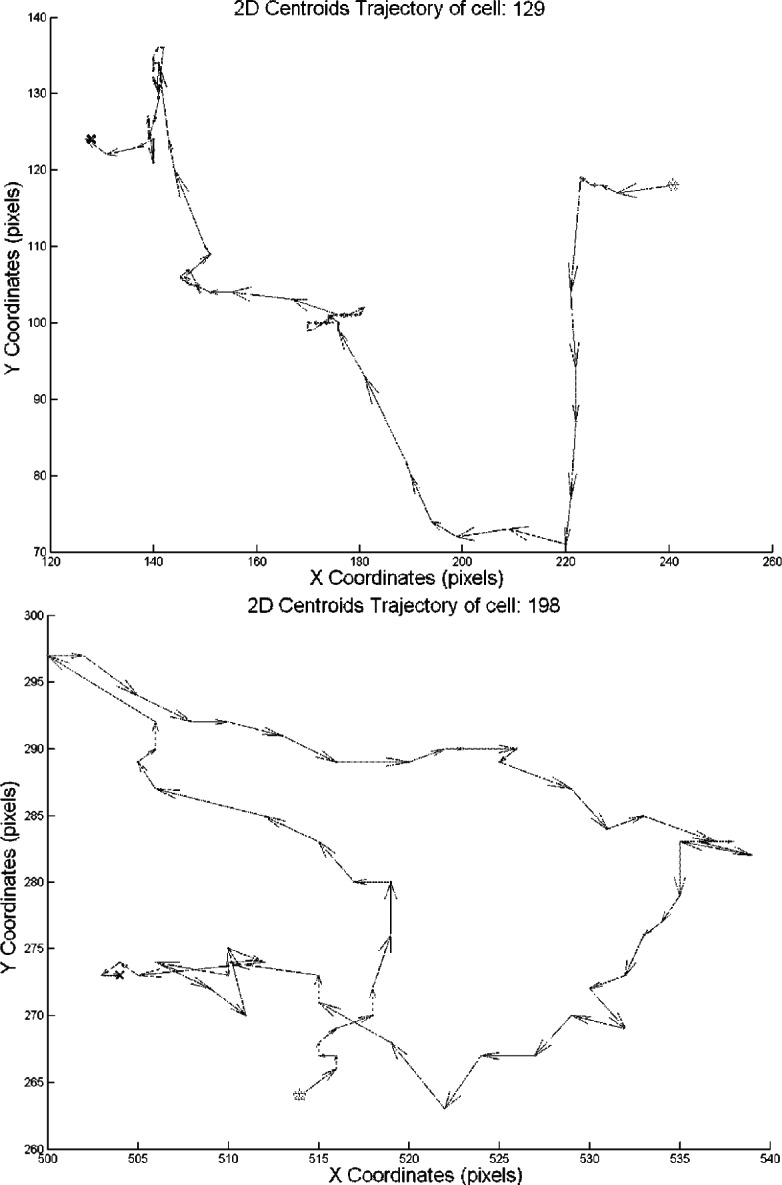

After applying the cell tracker on the segmented images, the results are documented and saved in the cell tracker output folder as matrices. This enables fast access to the output when needed. Figures 8, 9 and 10 show the centroid trajectories of the cells in 2D and 3D. This will help to determine the traveling rate of cells.

Fig. 8.

2D cell centroid trajectories. Each arrow in the image represents the direction and the distance traveled by the cell between two consecutive frames. There is 15 min interval between each frame.

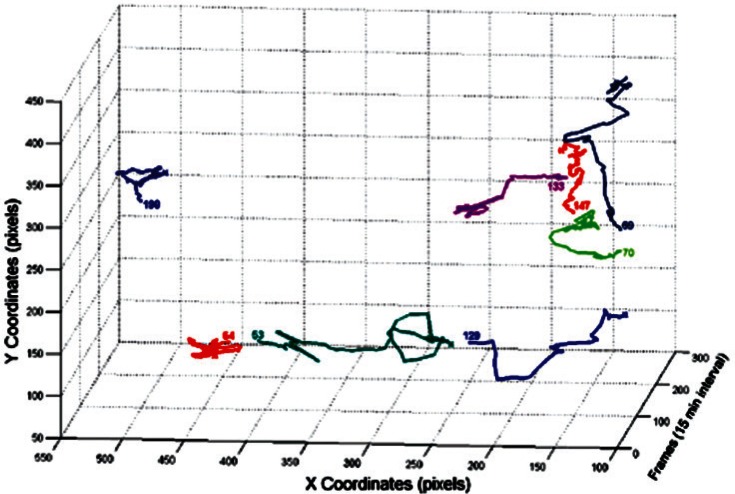

Fig. 9.

3D cell centroid trajectories for some cells.

Fig. 10.

3D cell centroid trajectories for all cells.

6. Conclusion

An overlap cell tracking software developed by NIST was described. This cell tracker has the ability to track cells across a set of time lapse images acquired at high rates based primarily on the amount of overlap between cellular regions in consecutive frames. It was designed to be highly flexible, requires little user parameterization, and has a fast execution time.

Future enhancements are planned for the cell tracker. The ability to detect mitosis (when a source cell divides into two new cells) will be added along with capability of detecting colliding cells and giving a feedback to segmentation when such behavior occurs. A cell shape metric will be used to add a shape weight to the cost function. This metric was not needed for tracking the 3T3 fibroblasts as they typically change shape rapidly between consecutive frames. However, a shape-based metric is in general needed to improve the tracking of cell lines or other objects that are more morphologically stable and it should increase the cell tracker’s suitability for use in a wider range of applications.

The average computation time for tracking 500 cells in our set of 252 images (520 × 696 pixels) on a single core Pentium 3.4 GHz 3 GB RAM is 47 s. This translates to an average speed of 5.36 frames/s.

Biography

About the authors: Joe Chalfoun and Antonio Cardone are Research Associates, Alden Dima is a Computer Scientist, Daniel Allen was a Computer Specialist prior to his retirement. All are with the Software and Systems Division of the Information Technology Laboratory of NIST. Michael Halter is a Research Scientist with the Biochemical Science Division of the Material Measurement Laboratory of NIST. The National Insitute of Standards and Technology is an agency of the U.S. Department of Commerce.

Footnotes

Editor’s Note: This paper is based on NIST IR 7663, Overlap-Based Cell Tracker. Most of the content remains the same.

Contributor Information

Joe Chalfoun, Email: joe.chalfoun@nist.gov.

Antonio Cardone, Email: antonio.cardone@nist.gov.

Alden A. Dima, Email: alden.dima@nist.gov.

Daniel P. Allen, Email: oldhokie@verizon.net.

Michael W. Halter, Email: michael.halter@nist.gov.

7. References

- [Bah05].Bahnson A, Athanassiou C, Koebler D, Qian L, Shun T, Shields D, Yu H, Wang H, Goff J, Cheng T, Houck R, Cowsert L. Automated measurement of cell motility and proliferation. BMC Cell Biology. 2005;6(1):19. doi: 10.1186/1471-2121-6-19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [Bes00].Besson S, Barlaud M, Aubert G. Detection and tracking of moving objects using a new level set based method; In Proc of the International Conference on Pattern Recognition; 2000. pp. 1100–1105. [Google Scholar]

- [Col03].Comaniciu D, Ramesh V, Meer P. Kernel-based object tracking. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2003;25(5):564–575. [Google Scholar]

- [Com03].Collins R. Mean-shift blob tracking through scale space; Proceedings of Computer Vision and Pattern Recognition (CVPR’03); 2003. pp. 234–241. [Google Scholar]

- [Dav07].Davis PJ, Kosmacek EA, Sun Y, Ianzini F, Mackey MA. The large-scale digital cell analysis system: an open system for nonperturbing live cell imaging. J Microsc. 2007;228(Pt. 3):296–308. doi: 10.1111/j.1365-2818.2007.01847.x. [DOI] [PubMed] [Google Scholar]

- [Deb05].Debeir O, VanHam P, Kiss R, Decaestecker C. Tracking of migrating cells under phase-contrast video microscopy with combined mean-shift processes. IEEE Transactions on Medical Imaging. 2005;24(6):697–711. doi: 10.1109/TMI.2005.846851. [DOI] [PubMed] [Google Scholar]

- [Dor02].Dormann D, Libotte T, Weijer CJ, Bretschneider T. Simultaneous quantification of cell motility and protein-membrane-association using active contours. Cell Motility and the Cytoskeleton. 2002;52:221–230. doi: 10.1002/cm.10048. [DOI] [PubMed] [Google Scholar]

- [Gen06].Genovesio A, Liedl T, Emiliani V, Parak WJ, Coppey-Moisan M, Olivo-Marin JC. Multiple particle tracking in 3-D+t microscopy: Method and application to the tracking of endocytosed quantum dots. IEEE Transactions of Medical Imaging. 2006;15:1062–1070. doi: 10.1109/tip.2006.872323. [DOI] [PubMed] [Google Scholar]

- [Gor07].Gordon A, Colman-Lerner A, Chin TE, Benjamin KR, Yu RC, Brent R. Single-cell quantification of molecules and rates using open-source microscope-based cytometry. Nat Methods. 2007;4(2):175–181. doi: 10.1038/nmeth1008. [DOI] [PubMed] [Google Scholar]

- [Kha05].Khan Z, Balch TR, Dellaert F. Multitarget Tracking with Split and Merged Measurements; Proceedings of 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; 2005. pp. 605–610. [Google Scholar]

- [Lia08].Lia K, Millera ED, Chenb M, Kanadea T, Weissa LE, Campbella PG. Cell population tracking and lineage construction with spatiotemporal context. Medical Image Analysis. 2008;12(5):546–566. doi: 10.1016/j.media.2008.06.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [Man02].Mansouri A. Region tracking via level set PDEs without motion computation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2002;24(7):947–961. [Google Scholar]

- [Mar06].Martens L, Monsieur G, Ampe C, Gevaert K, Vandekerckhove J. Cell-motility: a cross-platform, open source application for the study of cell motion paths. BMC Bioinformatics. 2006;7:289. doi: 10.1186/1471-2105-7-289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [Ras05].Raser JM, O’Shea EK. Noise in gene expression: origins, consequences, and control. Science. 2005;309(5743):2010–2013. doi: 10.1126/science.1105891. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [Ray02].Ray N, Acton ST, Ley K. Tracking leukocytes in vivo with shape and size constrained active contours. IEEE Transactions on Medical Imaging. 2002;21(10):1222–1235. doi: 10.1109/TMI.2002.806291. [DOI] [PubMed] [Google Scholar]

- [Set99].Sethian J. Level Set Methods and Fast Marching Methods. New York: Cambridge Univ. Press; 1999. [Google Scholar]

- [Shi05].Shi Y, Karl WC. Real-time tracking using level sets; Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition; 2005. pp. 34–41. [Google Scholar]

- [Sig06].Sigal A, Milo R, Cohen A, Geva-Zatorsky N, Klein Y, Alaluf I, Swerdlin N, Perzov N, Danon T, Liron Y, Raveh T, Carpenter AE, Lahav G, Alon U. Dynamic proteomics in individual human cells uncovers widespread cell-cycle dependence of nuclear proteins. Natl Methods. 2006;3(7):525–531. doi: 10.1038/nmeth892. [DOI] [PubMed] [Google Scholar]

- [Si206].Sigal A, Milo R, Cohen A, Geva-Zatorsky N, Klein Y, Liron Y, Rosenfeld N, Danon T, Perzov N, Alon U. Variability and memory of protein levels in human cells. Nature. 2006;444(7119):643–646. doi: 10.1038/nature05316. [DOI] [PubMed] [Google Scholar]

- [Zim02].Zimmer C, Labruyere E, Meas-Yedid V, Guillen N, Olivo-Marin JC. Segmentation and tracking of migrating cells in video microscopy with parametric active contours: A tool for cell-based drug testing. IEEE Transactions on Medical Imaging. 2002;21(10):1212–1221. doi: 10.1109/TMI.2002.806292. [DOI] [PubMed] [Google Scholar]