Abstract

Objectives

Nonlinear frequency compression is a signal processing technique used to increase the audibility of high frequency speech sounds for hearing aid users with sloping, high frequency hearing loss. However, excessive compression ratios may reduce spectral contrast between sounds and negatively impact speech perception. This is of particular concern in infants and young children who may not be able to provide feedback about frequency compression settings. This study explores the use of an objective cortical auditory evoked potential that is sensitive to changes in spectral contrast, the acoustic change complex (ACC), in the verification of frequency compression parameters.

Design

ACC responses were recorded from adult listeners to a spectral ripple contrast stimulus that was processed using a range of frequency compression ratios (1:1, 1.5:1, 2:1, 3:1, and 4:1). Vowel identification, consonant identification, speech recognition in noise (QuickSIN), and behavioral ripple discrimination thresholds were also measured under identical frequency compression conditions. In Experiment 1, these tasks were completed in 10 adults with normal hearing. In Experiment 2, these same tasks were repeated in 10 adults with sloping, high frequency hearing loss.

Results

Repeated measures ANOVAs were completed for each task and each group with frequency compression ratio as the within-subjects factor. Increasing the compression ratio did not affect vowel identification for the normal hearing group but did cause a significant decrease in vowel identification for the hearing-impaired listeners. Increases in compression ratio were associated with significant decrements in ACC amplitudes, consonant identification scores, ripple discrimination thresholds, and speech perception in noise scores for both groups of listeners.

Conclusions

The ACC response, like speech and non-speech perceptual measures, is sensitive to frequency compression ratio. Further work is needed to establish optimal stimulus and recording parameters for the clinical application of this measure in the verification of hearing aid frequency compression settings.

Keywords: nonlinear frequency compression, acoustic change complex, auditory evoked potentials

Introduction

Nonlinear frequency compression is a signal processing technique used in modern hearing aids to improve the audibility of high frequency sounds for individuals with high frequency hearing loss. This technique compresses the spectral bandwidth of acoustic signals above a start frequency that is selected by the audiologist based on the configuration of the individual’s hearing loss. Sounds below that start frequency are not altered. The result is that high frequency information that would have otherwise been inaudible is moved to a frequency region with better hearing (see Alexander 2013 for review). For some individuals with hearing loss, this approach can result in improved speech recognition; however, nonlinear frequency compression can also distort the spectral cues important for speech recognition (Alexander 2013). Excessive compression could then be defined as those settings that increase distortion without any concomitant improvements in audibility of the sounds of speech.

To date, several studies have compared the performance of listeners with high frequency hearing loss using nonlinear frequency compression with their performance using conventional amplification. Some have shown modest group level benefit from frequency compression in consonant and plural recognition in quiet (Glista et al. 2009; Wolfe et al. 2010, 2011) and monosyllabic words in quiet (McCreery et al. 2014). However, others have reported that some hearing aid users do not exhibit any benefit from nonlinear frequency compression (Simpson et al. 2005, 2006; Bohnert et al. 2010). A lack of overall benefit from frequency compression has been reported for a variety of speech perception measures, including consonant-vowel (CV) syllables in quiet and spondees in noise (Hillock-Dunn et al. 2014) and word recognition in quiet (Bentler et al. 2014). Perreau et al. (2013) reported group data showing significantly poorer performance for spondees in noise and vowel perception tasks when the study participants used nonlinear frequency compression compared to conventional hearing aid sound processing.

Some of the variance in benefit reported in the literature could be due to the differing approaches to prescribing nonlinear frequency compression settings, all of which depend on cooperation from the hearing-impaired listener to varying extents. One approach involves: (i) selection of the initial start frequency and compression ratio with the goal of maximizing audibility, (ii) use of the fitting software to examine the aided audibility of high frequency speech/noise bands, and (iii) fine tuning of the compression settings based on patient feedback and/or aided discrimination/detection tasks for high frequency consonant sounds such as /s/ and /ʃ/ (Glista et al. 2009; Wolfe et al. 2010, 2011). Others have used the maximum aided audible frequency (defined as the frequency at which hearing loss exceeds 90 dB HL, for example) as the default start frequency for nonlinear frequency compression, which was then adjusted based on the hearing aid users’ judgments of sound quality, keeping the compression ratio constant (Simpson et al. 2005, 2006). Frequency compression settings may also be selected based on listener feedback alone (Bohnert et al. 2010). Another approach is to use an algorithm (SoundRecover Fitting Assistant, Alexander 2013) that selects frequency compression settings from a range of suggested settings generated by the manufacturer software with the goal of maximizing the audible bandwidth for the listener’s audiogram (Perreau et al. 2013; Hillock-Dunn et al. 2014; McCreery et al. 2014). The suitability of the first three approaches, which depend on varying degrees of subjective listener feedback and participation, is questionable for infants and young children. Use of audiogram-based methods may also be problematic given that ABR- and ASSR-based estimates of audiometric thresholds may not be precise and often are not available at frequencies above 4 kHz (Gorga et al. 1985, 2006; Sininger et al. 1997; Stapells and Oates 1997; Oates and Stapells 1998; Stapells 2000; Herdman and Stapells 2001; Cone-Wesson et al. 2002; Vander Werff et al. 2002; Stueve and O’Rourke 2003). Current standards for clinical verification of frequency-lowering hearing aid processing in infants and young children call for simulated real-ear electroacoustic measurements of hearing aid output for fricatives and narrowband noise stimuli, which establish estimated audibility with this feature enabled (Glista and Scollie, 2009). While this is an essential step in the fitting process, such measures may not be sensitive to possible negative effects on the discriminability of spectrally complex sounds such as fricatives. Given that nonlinear frequency compression is currently enabled by default in manufacturer programming software and there is potential for decrements in performance with this feature, it is troubling that there is not currently a clinical tool that is sensitive to changes in spectral contrast.

In the early years after ABR was first described, there were attempts to use this response to establish aided audibility objectively (Kiessling, 1982; Gorga, Beauchaine, and Reiland, 1987). Unfortunately, stimuli such as clicks or brief tone bursts that are optimal for evoking an ABR are often accompanied by significant output distortion when processed by a wide dynamic range compression hearing aid, especially a hearing aid with higher gain characteristics (Garnham et al. 2000). Cortical auditory evoked potentials (CAEPs) have been used to help establish audibility of sound for infants following hearing aid fitting (Golding et al. 2007; Carter et al. 2010; Chang et al. 2012; Van Dun, Carter, and Dillon 2012). Although CAEPs may objectively establish the aided audibility of sounds post-fitting, the amplitude of these responses may not be sensitive to changes in hearing aid gain (Billings, Tremblay, and Miller 2011; Marynewich, Jenstad, and Stapells 2012). However, these long-latency neural responses may be elicited by spectrally complex and/or steady-state sounds, including speech, and do not require sharp stimulus onsets in order to be recorded at the scalp, as is the case with ABR. At this time, however, there are few reports demonstrating the use of cortical evoked potentials in the evaluation of frequency compression settings. Glista et al. (2012) reported that frequency compression increased the amplitude of aided CAEPs elicited by a 4 kHz tone burst relative to a conventional hearing aid processing condition in five adolescent hearing aid users. While this method may be sensitive to changes in audibility for high frequency sounds with frequency compression, it does not establish preservation of sufficient spectral detail for accurate identification and discrimination of high frequency speech sounds.

The acoustic change complex (ACC) is a CAEP that is correlated with detection of spectral and/or intensity changes in an ongoing stimulus (Ostroff et al. 1998; Martin and Boothroyd 2000). The ACC reflects physiological auditory discrimination at the level of the cortex and it can be evoked using spectrally complex signals, such as speech sounds. These properties suggest that the ACC could allow clinicians to detect decrements in spectral contrast caused by excessive frequency compression.

The aim of this work was to describe the effects of nonlinear frequency compression on (1) the ACC, (2) speech perception and (3) perception of complex non-speech sounds. To accomplish this aim, a series of experiments were conducted with adults with normal hearing (Experiment 1) and with high frequency sensorineural hearing loss (Experiment 2). The stimuli used in these physiological and behavioral assessments were processed using a custom MATLAB program to simulate a broad range of hearing aid nonlinear frequency compression settings. For each frequency compression condition, ACC response recordings, vowel identification, consonant identification, and speech perception in noise tasks were completed. The effect of frequency compression was also tested using behavioral discrimination of spectral ripple contrasts. It was hypothesized that for the normal hearing listeners in Experiment 1, vowel identification, consonant identification, ripple discrimination thresholds, and ACC amplitudes would decrease and that the 50% signal-to-noise ratio threshold for speech identification would increase (become poorer) with increasing frequency compression ratios. In Experiment 2, individualized gain adjustments were made to the experimental frequency-compressed stimuli using each subject’s audiometric thresholds and a standard prescriptive algorithm. Then the subjects completed the same tasks used in Experiment 1. For this group of hearing-impaired listeners, it was hypothesized that: (i) mild compression settings would either have no impact or possibly increase ACC amplitude and improve performance on vowel, consonant, behavioral ripple discrimination, and speech identification in noise tasks, and (ii) as the amount of compression was increased, ACC amplitude would decrease and performance on the behavioral tasks would become poorer.

Materials and Methods

Participants

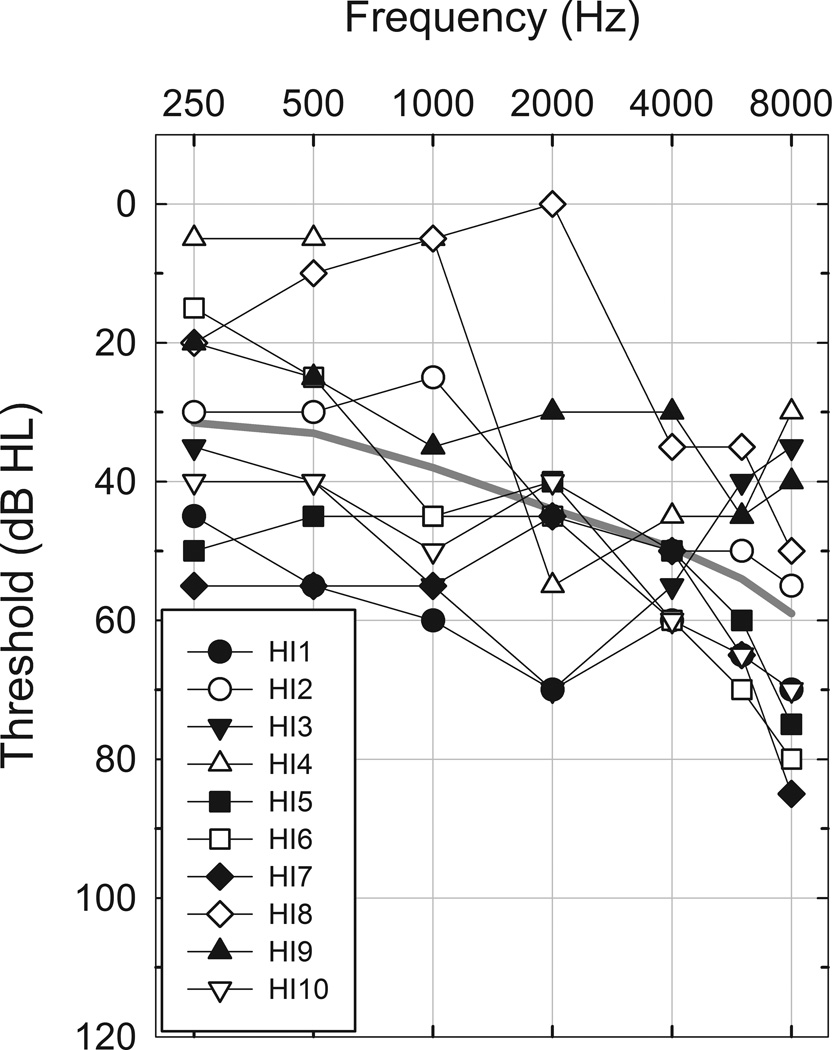

Experiment 1 involved the participation of 10 adults with normal hearing (9 F, 1 M, Mean age=23.8 years, SD = 3.7 years, Range = 19–32 years). Normal hearing was defined as air conduction thresholds < 15 dB HL for octave intervals from 250 to 8000 Hz. Experiment 2 involved the participation of 10 adults (5 F, 5 M, Mean age=54.7 years, SD = 9.0 years, Range = 38–65 years) with sensorineural hearing loss. For this group of participants, audiometric thresholds for pure tones presented by air conduction were measured at octave intervals from 250 to 8000 Hz and at 6000 Hz using standard clinical procedures. Figure 1 shows mean and individual audiometric thresholds for this hearing-impaired group. Table 1 provides greater detail about the individuals who took part in Experiment 2. All subjects were native speakers of English and had no self-disclosed history of neurological or cognitive impairment.

Figure 1.

Individual thresholds of the hearing-impaired participants of Experiment 2. The solid gray line shows mean thresholds.

Table 1.

Participant information for Experiment 2

| Subject ID | Age (years) | Gender | Age at which hearing loss was identified (years) | Duration of HA use (years) | HA Type |
|---|---|---|---|---|---|
| HI1 | 64 | F | 55 | 8 | BTE (AU) |
| HI2 | 40 | F | 40 | 0¹ | - |
| HI3 | 38 | M | ~2–4 | <1 | BTE (AU) |
| HI4 | 58 | M | 44 | 0² | - |
| HI5 | 65 | F | 61 | 3 | BTE (AU) |
| HI6 | 58 | M | 28 | ~1³ | ITE (AD) |
| HI7 | 59 | M | 45 | 6 | BTE (AU) |
| HI8 | 54 | F | 16 | 20 | ITE (AS) |
| HI9 | 55 | M | 48 | 1 | BTE (CROS) |
| HI10 | 56 | F | 46 | <1 | BTE (AU) |

¹ Subject HI2 was undergoing a hearing aid evaluation concurrent with participation in this study.

² Subject HI4 was not a current or past hearing aid user.

³ Subject HI6 previously wore an ITE hearing aid for approximately 1 year, but had discontinued use at the time of his participation in this study.

HA = hearing aid, BTE = behind the ear, ITE = in the ear, CROS = Phonak CROS hearing aid for single-sided deafness

General Procedures

Prior to beginning the protocol, consent was obtained and audiometric testing was completed. The study protocol had three components: perceptual testing using speech, perceptual testing using ripple noise stimuli, and evoked potential testing. Completion of the full protocol required 5–6 hours. Breaks were provided as needed and in some cases the results were obtained in two or more sessions. Study participants were compensated for their participation and all procedures were approved by the University of Iowa Institutional Review Board.

Stimuli

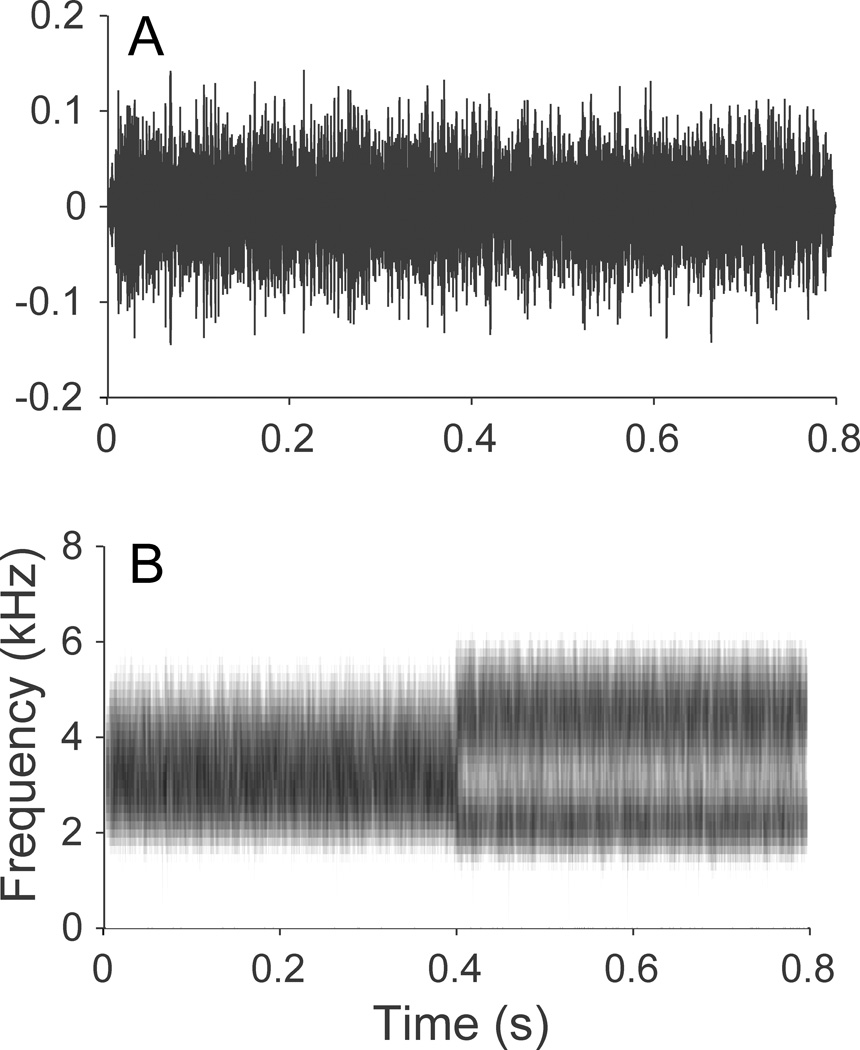

The stimulus used to elicit the ACC was a spectral ripple contrast. The spectral ripple contrast consisted of a broadband noise whose spectrum was modulated by a rectified sine function, with sine starting phase over the first half of its duration and a switch to cosine phase at the midpoint. The use of spectral ripple contrast stimuli in an ACC paradigm is established in the cochlear implant (CI) literature (Won et al. 2011), where the response has been shown to be sensitive to experimental conditions that affect spectral resolution (e.g. number of active CI electrodes/channels). Each half of the ripple contrast stimulus consisted of a broadband noise, 400 ms in length, constructed from 400 summed sinusoids with frequencies distributed logarithmically between 1500 and 6000 Hz; the spectrum of the broadband stimulus was then modulated with a maximum modulation depth of 30 dB using a rectified sinusoidal spectral envelope with sine or cosine starting phase. The ripple contrast stimulus was created by concatenating the leading sine-phase ripple with its cosine counterpart. Figure 2 shows a time waveform and spectrogram of the ripple contrast stimulus. The spectrum of each ripple stimulus was filtered using a modified raised sine window that was symmetrical in log frequency with a 1 octave plateau and ½ octave ramps, similar to Anderson et al. (2011). This step was taken to mitigate spectral edge effects, which may inflate behavioral ripple discrimination thresholds. A single ripple density, 0.5 ripples per octave (RPO), was used for the ACC experiment. This single value was selected to decrease test time compared to a physiological ripple threshold procedure reported by Won et al. (2011), where recordings were made at multiple ripple densities. Pilot data indicated that ripple contrasts of 0.5 RPO are behaviorally discriminable for listeners with normal hearing and sloping sensorineural hearing loss.
Stimulus levels were calibrated in a 2cc coupler using a 1 inch condenser microphone and Quest model 2700 sound level meter. Stimuli were presented monaurally via an ER-3A insert earphone at 70 dBA peak level with a 1.2 second interstimulus interval. The non-test ear was plugged. The test ear was counterbalanced across subjects. During testing, study participants sat in a reclining chair and were encouraged to read, play with an iPad, or watch captioned videos in order to stay alert.
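The spectral envelope of one half of the ripple contrast can be illustrated with a short sketch. This is not the authors' stimulus-generation code: the function name `ripple_levels_db`, the expression of component levels relative to the ripple peak, and the form |sin(π · RPO · octaves + phase)| for the rectified envelope are assumptions made for illustration, and the raised sine spectral window is omitted.

```python
import math


def ripple_levels_db(n_components=400, f_lo=1500.0, f_hi=6000.0,
                     rpo=0.5, depth_db=30.0, cosine_phase=False):
    """Return (frequency_hz, level_db) pairs for one half of the contrast.

    Levels are relative to the ripple peak (0 dB at a peak, -depth_db at a
    trough). The rectified-sinusoid form used here is an assumption.
    """
    phase = math.pi / 2 if cosine_phase else 0.0
    n_octaves = math.log2(f_hi / f_lo)
    components = []
    for i in range(n_components):
        # Components are spaced equally in log frequency.
        octaves = n_octaves * i / (n_components - 1)
        freq = f_lo * 2.0 ** octaves
        envelope = abs(math.sin(math.pi * rpo * octaves + phase))
        components.append((freq, depth_db * (envelope - 1.0)))
    return components


sine_half = ripple_levels_db()                     # first 400 ms
cosine_half = ripple_levels_db(cosine_phase=True)  # second 400 ms
```

Under these assumptions the sine-phase half has its spectral peak one octave above 1500 Hz and troughs at the band edges, while the cosine-phase half has the opposite pattern, so the midpoint of the stimulus swaps peaks and troughs without changing the overall bandwidth.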

Figure 2.

Time waveform (A) and spectrogram (B) of ripple contrast stimulus. 0.5 ripples per octave, 800 ms duration.

EEG Recording

Prior to evoked response recording, surface electrodes were applied to the scalp on the side of the head contralateral to the test ear. A ground electrode was placed on the forehead and electroencephalography (EEG) activity was measured differentially from a single recording channel: vertex (+) to contralateral mastoid (−). Recordings in pilot subjects established that amplitudes of the P1-N1-P2 onset and change responses were comparatively robust for this particular recording channel. Eyeblinks were monitored using two additional electrodes placed above and lateral to the stimulus-contralateral eye; sweeps with excessive eyeblink artifact were rejected online. Responses were band-pass filtered between 1 and 30 Hz and amplified (gain=10,000) prior to averaging using custom LabView software. Each stimulus was presented 200 times, in blocks of 100 sweeps. Stimulus conditions were randomized and counterbalanced across the recording session to avoid effects of adaptation or changes in patient state.

Analysis Procedure

The evoked potentials recorded in this study were analyzed off-line using custom-designed (MATLAB, R2013a) software. Replications recorded in each frequency compression condition were averaged together and smoothed using a 40 millisecond wide boxcar filter before peak-picking. The analysis window was 1200 milliseconds in length with 0 milliseconds defined as the onset of the stimulus. The software automatically picked the N1 and P2 peaks. N1 was defined as the most negative point within a latency interval ranging from 80 to 200 milliseconds post stimulus onset for the onset response and from 480 to 600 milliseconds post stimulus onset for the change potential. For both the onset and change potentials, the P2 peak was defined as the most positive voltage recorded within a time window of 120 milliseconds duration after N1. Infrequently, when the averaged waveform was relatively noisy and ACC responses were longer latency or had broader peaks, the algorithm would incorrectly pick N1-P2 before P1. In these cases the points identified by the software as N1 and P2 were adjusted by the first author based on visual inspection of the waveforms. In a select few cases in the more aggressive frequency compression conditions, the automatic peak-picker identified ACC responses that were deemed questionable upon visual inspection of the waveform. Rather than override the peak-picker and mark these responses as zero amplitude, which would tend to exaggerate amplitude differences between the frequency compression conditions, the picked peaks were left unchanged. Peak-to-peak amplitude of both the onset and change potentials was calculated from the voltage difference between the N1 trough and the following P2 peak. ACC amplitudes were defined as the absolute voltage difference between N1 and P2 peaks of the response following the change in the stimulus. 
ACC amplitudes were normalized by dividing by the amplitude of the N1-P2 response elicited by the stimulus onset to control for possible differences in amplitude related to changes in patient state and recording conditions and to allow for simpler comparisons across subjects.
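The peak-picking and normalization steps described above can be sketched as follows. This is a minimal illustration rather than the authors' MATLAB software: the function names, the 1000 Hz sampling rate, and the synthetic waveform are all hypothetical, and the manual adjustment step is not modeled.

```python
import math


def boxcar_smooth(wave, width):
    """Centered moving average (width + 1 samples when width is even);
    the window shrinks at the edges of the record."""
    half = width // 2
    smoothed = []
    for i in range(len(wave)):
        segment = wave[max(0, i - half): i + half + 1]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def n1_p2_amplitude(wave, fs_hz, n1_window_ms, p2_span_ms=120):
    """N1 = most negative point in n1_window_ms; P2 = most positive point
    within p2_span_ms after N1. Returns the P2 minus N1 voltage."""
    lo = int(n1_window_ms[0] * fs_hz / 1000)
    hi = int(n1_window_ms[1] * fs_hz / 1000)
    n1 = min(range(lo, hi + 1), key=lambda i: wave[i])
    p2_end = min(len(wave) - 1, n1 + int(p2_span_ms * fs_hz / 1000))
    p2 = max(range(n1, p2_end + 1), key=lambda i: wave[i])
    return wave[p2] - wave[n1]


def normalized_acc(wave, fs_hz=1000, smooth_ms=40):
    """Change-response N1-P2 amplitude divided by onset N1-P2 amplitude."""
    smoothed = boxcar_smooth(wave, int(smooth_ms * fs_hz / 1000))
    onset = n1_p2_amplitude(smoothed, fs_hz, (80, 200))
    change = n1_p2_amplitude(smoothed, fs_hz, (480, 600))
    return change / onset


def bump(t_ms, center_ms, sd_ms=15.0):
    return math.exp(-0.5 * ((t_ms - center_ms) / sd_ms) ** 2)


# Hypothetical 1200 ms average: the change response is half the onset size.
demo_wave = [-4 * bump(t, 100) + 4 * bump(t, 180)
             - 2 * bump(t, 500) + 2 * bump(t, 580) for t in range(1200)]
demo_ratio = normalized_acc(demo_wave)
```

Because the change response is expressed as a fraction of the same recording's onset response, a value near 0.5 for this synthetic waveform simply reflects that its change response was built at half the onset amplitude.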

Behavioral Tasks

The perceptual consequences of frequency compression were assessed with four tasks. All stimuli in each of the tests were presented to the test ear using an ER-3A insert earphone. First, closed set vowel identification was measured across conditions of frequency compression using a ten alternative forced choice procedure. The vowel stimuli consisted of medial vowels in an /hVd/ context (the ten English vowels /i/, /u/, /æ/, /eɪ/, /ʌ/, /ɛ/, /ɑ/, /ʊ/, /ɪ/, and /oʊ/), each recorded from ten adult female talkers (Hillenbrand et al. 1995) and presented in random order, for a total of 100 stimuli per condition. Second, closed set consonant identification was measured. This test (Turner et al. 1995) consists of sixteen consonant sounds, in an /aCa/ context, produced by two adult males and two adult females for a total of 64 trials per condition. Stimulus level for these tests was 70 dBA. Stimuli for each of these tests were presented and participant responses were registered using custom MATLAB software in conjunction with PsyLab (Hansen 2006) experimental interfaces. Participants indicated the perceived speech sound using a touch sensitive monitor and graphical user interface. With the exception of a brief familiarization with the task, no feedback on performance was given during testing.

Third, speech perception in noise for these same frequency compression conditions was evaluated using the QuickSIN test (Killion et al. 2001). Presentation level for this test was set at 70 dB HL, with volume units in the audiometer channel adjusted to 0 for the standard calibration tone, per the manufacturer instructions. The test consists of key word identification from sentences spoken by an adult female talker in multi-talker babble background noise. Two lists, selected at random, were used to estimate the signal-to-noise ratio at which performance was approximately 50% correct (i.e. ‘SNR-50’) for each frequency compression condition. Choice of this particular test was motivated by the results of Ellis and Munro (2013). They found significant negative effects of frequency compression on speech in noise performance in normal hearing individuals using IEEE sentences from which the QuickSIN test was derived.

The final method used to assess how frequency compression alters perception was a behavioral spectral ripple discrimination task. Spectral ripple discrimination thresholds, defined as the maximum number of spectral peaks per octave for which the listener can discern standard vs. inverted spectral ripples, were measured using a standard, adaptive, three interval, forced-choice (3AFC) procedure (Levitt 1971) comparable to that described in earlier works (Henry et al. 2005). A 1 dB level increase and a 1 dB decrease were applied randomly to two of three intervals to control for possible loudness difference cues. Threshold was estimated from the mean ripples per octave of the last four reversals in a run. Three runs were completed per compression condition and mean threshold for each condition was calculated from the three runs. Again, stimuli were presented and participant responses were registered using custom MATLAB software and the PsyLab interface. Frequency compression conditions applied to the raw stimuli were the same as those used in the electrophysiology task. Stimulus intensity was calibrated for a peak presentation level of 70 dBA. All stimuli were presented monaurally with an ER-3A insert earphone. Response feedback was enabled throughout the test interval.
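The threshold calculation for one condition (mean of the last four reversals in each run, then the mean of three runs) can be sketched as below. The adaptive step rule itself is not specified in this section, so only the scoring is shown; the function names and the ripple-density tracks are hypothetical.

```python
def reversal_values(track):
    """Ripple densities at which the adaptive track changed direction."""
    reversals = []
    last_direction = 0
    for previous, current in zip(track, track[1:]):
        direction = (current > previous) - (current < previous)
        if direction and last_direction and direction != last_direction:
            reversals.append(previous)
        if direction:
            last_direction = direction
    return reversals


def run_threshold(track, n_last=4):
    """Mean ripples per octave over the last n_last reversals of one run."""
    reversals = reversal_values(track)[-n_last:]
    if not reversals:
        raise ValueError("track contains no reversals")
    return sum(reversals) / len(reversals)


def condition_threshold(tracks):
    """Mean of the per-run thresholds for one compression condition."""
    per_run = [run_threshold(track) for track in tracks]
    return sum(per_run) / len(per_run)


# Hypothetical tracks (ripples per octave presented on successive trials).
demo_tracks = [
    [1, 2, 3, 2, 3, 4, 3, 2, 3, 2],
    [1, 2, 3, 4, 3, 4, 3, 4, 3],
    [2, 3, 2, 3, 2, 3, 2],
]
demo_threshold = condition_threshold(demo_tracks)
```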

Signal Processing Procedures

All sound files were encoded in .WAV format with a 44100 Hz sampling rate and 16-bit depth. Manipulation of frequency compression parameters was accomplished using signal processing software. Five experimental frequency compression conditions were created. Using custom MATLAB software, the raw sound files used for each experimental task were 1) segmented using a moving, 882 point, raised cosine window, 2) converted by FFT to the frequency domain, and 3) filtered into low pass and high pass components using a rectangular filter with 300 dB attenuation slopes within the bandwidth of a single FFT bin. Frequency compression was applied to the high pass input frequency vector with the compression function described by Simpson et al. (2005) and used in their wearable experimental device. No compression was applied to the low frequency component of the input segment. The low pass and compressed high pass components were then converted to the time domain using an inverse FFT and summed. The next segment was likewise compressed and summed with the preceding compressed segment using a half-overlap increment. For this study, the start frequency was fixed at 1500 Hz in all conditions. This boundary for the low pass and high pass segments coincided with the lowest frequency typically used in commercial frequency compression hearing aids. The five signal processing conditions were defined by the compression ratios, which were 1:1 (no compression), 1.5:1, 2:1, 3:1, and 4:1, respectively. These ratios were selected to encompass the range of compression ratios used in commercial frequency compression aids. It should be noted that these were not individually optimized frequency compression start frequencies and compression ratios for these listeners.
To the contrary, these settings were specifically selected to result in poorer speech perception performance in both groups and allow us to test if behavioral spectral ripple discrimination and amplitude of ACC responses elicited by spectral ripple contrasts are both affected under these same conditions.
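The frequency mapping can be illustrated with a small sketch. It assumes that the compression function takes a power-law form on a log-frequency scale, F_out = F_start^(1 − 1/CR) · F_in^(1/CR) for inputs above the start frequency; this form is an assumption about the cited function rather than something stated in this section, and the function name is hypothetical. The window duration and FFT bin width follow directly from the stated 882 point window and 44100 Hz sampling rate.

```python
SAMPLE_RATE_HZ = 44100
WINDOW_POINTS = 882

# Quantities implied by the stated analysis parameters.
WINDOW_MS = 1000.0 * WINDOW_POINTS / SAMPLE_RATE_HZ  # segment duration
BIN_WIDTH_HZ = SAMPLE_RATE_HZ / WINDOW_POINTS        # FFT bin spacing


def compress_frequency(f_in_hz, start_hz=1500.0, ratio=2.0):
    """Map an input frequency to its output frequency.

    Frequencies at or below start_hz are unchanged. Above it, the distance
    from start_hz in octaves is divided by `ratio` (assumed power-law form).
    """
    if f_in_hz <= start_hz:
        return f_in_hz
    return start_hz ** (1.0 - 1.0 / ratio) * f_in_hz ** (1.0 / ratio)


# Output frequency of a 6000 Hz component in each experimental condition.
RATIOS = (1.0, 1.5, 2.0, 3.0, 4.0)
mapped_6k = {ratio: compress_frequency(6000.0, ratio=ratio) for ratio in RATIOS}
```

Under this assumed form, a 2:1 ratio moves a 6000 Hz component to about 3000 Hz, so the two octaves above the 1500 Hz start frequency are squeezed into one, while a 4:1 ratio squeezes them into half an octave.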

In addition to the nonlinear frequency compression manipulations described above, individually prescribed gain adjustments were applied to the experimental stimuli for the hearing-impaired participants in Experiment 2. Each participant’s gain profile was calculated by subtracting DSL i/o (Scollie et al. 2005) audibility targets for a listener with normal hearing (0 dB HL thresholds, 250–8000 Hz) for a 65 dB SPL speech stimulus from targets calculated based on the subject’s own audiometric thresholds. These calculated gain settings were implemented using an audiometer (Grason-Stadler GSI-61) to adjust overall level in line with a multi-band digital programmable equalizer (Alesis DEQ 830) to create 1/3 octave band-specific gain adjustments.

Data Analyses

Data analysis for Experiments 1 and 2 included univariate repeated measures ANOVAs with frequency compression ratio as the within-subjects main effect of interest. P-values of paired t-tests were adjusted for multiple comparisons according to the Holm-Bonferroni method (Holm, 1979). Vowel and consonant scores, expressed as percent correct, were transformed into rationalized arcsine units (RAU) to better approximate the normal distribution (Sherbecoe and Studebaker, 2004). Results for each frequency compression condition were compared to the non-compressed condition as baseline performance on each task. In those cases where a Mauchly test revealed that the repeated measures data significantly departed from the sphericity assumption of the ANOVA, the reported p-value of the repeated measures F-test was modified using a Greenhouse-Geisser correction to control for possible inflation of the Type I error rate and the epsilon value (ε) is reported with the F-test results. In addition, linear mixed effects analyses were completed for each of the behavioral measures with ACC amplitude and a categorical factor representing frequency compression condition included as predictors. These were done to determine whether individual differences in ACC amplitude were predictive of performance on the behavioral measures across frequency compression conditions. The models also included a random subject effect to account for the repeated measures completed with each participant. Statistical analyses were completed using the R programming environment (Version 3.1.1).
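Two of the steps above, the Holm-Bonferroni adjustment and the rationalized arcsine transform, are simple enough to sketch directly. The analyses in this study were run in R; the Python functions below are an illustrative re-implementation with hypothetical names and input values, and the RAU expression used is the commonly cited form of the transform, assumed here to match the one applied to these data.

```python
import math


def holm_adjust(p_values):
    """Holm-Bonferroni adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, index in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, ...
        candidate = min(1.0, (m - rank) * p_values[index])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[index] = running_max
    return adjusted


def rau(n_correct, n_items):
    """Rationalized arcsine units for n_correct out of n_items."""
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1)))
             + math.asin(math.sqrt((n_correct + 1) / (n_items + 1))))
    return (146.0 / math.pi) * theta - 23.0


# Hypothetical unadjusted p-values for three comparisons against baseline.
demo_adjusted = holm_adjust([0.01, 0.04, 0.03])

# A score of 50 out of 100 items maps to 50 RAU.
demo_rau_mid = rau(50, 100)
```

The transform leaves mid-range scores nearly unchanged and stretches scores near 0% and 100%, which is why it is useful for a task such as vowel identification where performance was close to ceiling.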

Results

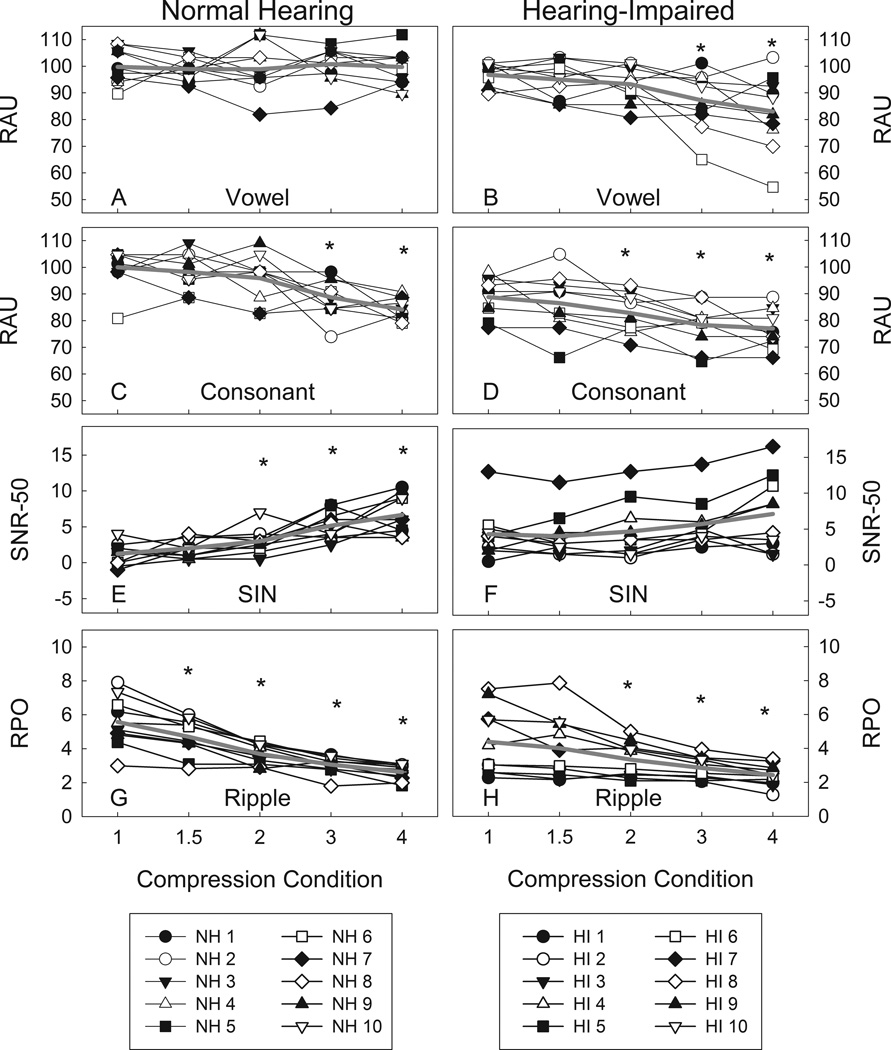

Figure 3 shows group means and individual results for the normal hearing and hearing-impaired listeners for vowel identification, consonant identification, speech perception in noise, and behavioral ripple discrimination.

Figure 3.

Group mean (gray line) and individual results (connected symbols) for normal hearing (left column) and hearing-impaired listeners (right column) for vowel perception (A and B), consonant perception (C and D), speech perception in noise (E and F), and ripple discrimination (G and H). A “*” indicates conditions significantly different from the 1:1 compression condition at an α = 0.05 level.

Analysis of the vowel perception data revealed no effect of frequency compression ratio on identification scores for the normal hearing listeners (F(4,36) = 0.18, p = 0.95). Performance was generally high on this task (above 90%) and remained so across the frequency compression conditions.

Analysis of the vowel perception data for the hearing-impaired listeners revealed a significant main effect of frequency compression ratio on identification score (F(4,36) = 6.46, p = 0.01, ε = 0.47). Vowel identification scores were similar to those of the normal hearing listeners in the baseline (no frequency compression) condition. Unlike the results for the normal hearing listeners in Experiment 1, paired t-tests indicated that vowel identification scores were significantly poorer in the 3:1 (t(9) = 3.48, p = 0.02) and 4:1 (t(9) = 3.60, p = 0.02) conditions compared to baseline for the hearing-impaired listeners.

Analysis of the consonant perception data for the listeners with normal hearing revealed a significant main effect of frequency compression condition on identification scores (F(4,36) = 12.12, p < 0.001). Paired t-tests indicated that consonant identification score was significantly poorer in the 3:1 (t(9) = 3.63, p = 0.02) and 4:1 conditions (t(9) = 7.25, p < 0.001) relative to the baseline condition. This result was consistent with our hypothesis that greater frequency compression ratios would have a negative impact on consonant perception.

Analysis of the consonant perception data for the listeners with hearing loss revealed a significant main effect of frequency compression condition on identification score (F(4,36)= 12.29, p < 0.001). Paired t-tests indicated that consonant identification score was significantly poorer in the 2:1 (t(9) = 2.89, p = 0.04), 3:1 (t(9) = 7.76, p < 0.001) and 4:1 (t(9) = 9.12, p < 0.001) conditions relative to the baseline condition. It was hypothesized that consonant identification would be significantly poorer in the highest frequency compression ratio conditions in the subjects with hearing loss, and these results are consistent with that hypothesis.

A repeated measures analysis of variance procedure revealed a significant main effect of frequency compression on performance on the QuickSIN for the listeners with normal hearing (F(4,36) = 17.65, p < 0.001). Paired t-tests indicated that speech identification in noise was significantly poorer in the 2:1 (t(9) = −3.95, p = 0.01), 3:1 (t(9) = −4.78, p = 0.003), and 4:1 (t(9) = −6.70, p < 0.001) frequency compression conditions compared to the no compression baseline condition. This result was consistent with our hypothesis that frequency compression would have a negative effect on speech perception in noise for listeners with normal hearing. It is also consistent with the findings of Ellis and Munro (2013), who found significant negative effects of nonlinear frequency compression ratio on normal hearing listeners’ speech perception in noise. Analysis of the QuickSIN data also revealed a significant main effect of frequency compression condition on estimates of SNR-50 for the listeners with hearing loss (F(4,36) = 6.30, p < 0.001). However, paired t-tests indicated that SNR-50 was not significantly different from baseline in any of the other frequency compression conditions at an α = 0.05 level following correction for multiple comparisons.

Results of a repeated measures ANOVA revealed a significant main effect of frequency compression on ripple discrimination threshold in the listeners with normal hearing (F(4,36) = 51.09, p < 0.001, ε = 0.40). Paired t-tests revealed that ripple discrimination threshold was significantly poorer in the 1.5:1 (t(9) = 4.59, p = 0.001), 2:1 (t(9) = 5.97, p < 0.001), 3:1 (t(9) = 7.33, p < 0.001), and 4:1 (t(9) = 8.45, p < 0.001) frequency compression conditions relative to the baseline no compression condition. This result is consistent with our hypothesis that behavioral ripple threshold would decrease with increasing frequency compression ratio in normal hearing listeners. Analysis of the behavioral ripple data also revealed a significant main effect of frequency compression on ripple discrimination threshold for the listeners with hearing loss (F(4,36) = 8.16, p < 0.001). Paired t-tests revealed that ripple discrimination threshold was significantly poorer in the 2:1 (t(9) = 2.94, p = 0.03), 3:1 (t(9) = 5.10, p = 0.003), and 4:1 (t(9) = 4.23, p = 0.007) frequency compression conditions. These results were similar to those of the listeners with normal hearing in Experiment 1 and consistent with our hypothesis that ripple discrimination would be significantly poorer at the highest frequency compression conditions.

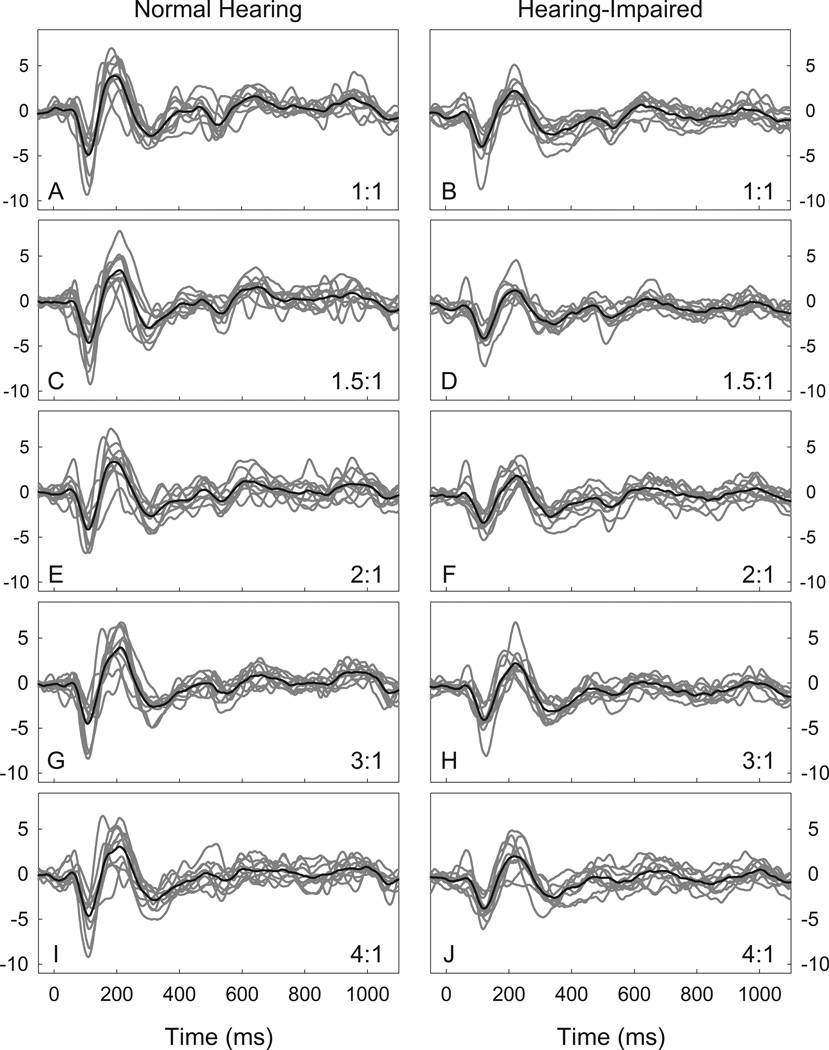

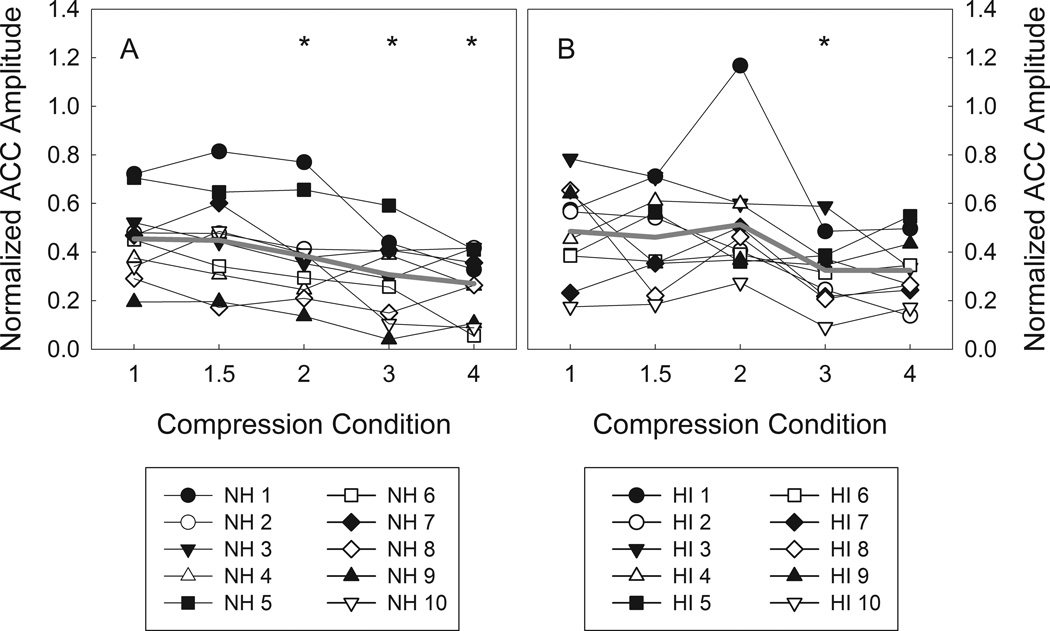

Figure 4 shows individual and grand mean waveforms for each frequency compression condition for the normal hearing and hearing-impaired listeners. As compression ratio increases, ACC N1-P2 amplitude is noticeably smaller. Figure 5 shows normalized ACC amplitudes for normal hearing and hearing-impaired listeners. Analysis of the ACC response data revealed a significant main effect of frequency compression on normalized ACC amplitude in the listeners with normal hearing (F(4,36) = 8.16, p < 0.001). Compared to the no compression condition (M = 0.45, SD = 0.17), paired t-tests revealed that normalized ACC amplitude was significantly smaller in the 2:1 (M = 0.38, SD = 0.20, t(9) = 2.94, p = 0.03), 3:1 (M = 0.31, SD = 0.17, t(9) = 5.10, p = 0.003), and 4:1 (M = 0.27, SD = 0.14, t(9) = 4.23, p = 0.007) frequency compression conditions. The physiological data show an effect analogous to that of the behavioral ripple discrimination task for compression ratios of 2:1, 3:1, and 4:1. This tendency of smaller ACC amplitudes at higher compression ratios, with no effect for mild compression ratios (e.g. 1.5:1), is consistent with our hypothesis for the ACC measure.

Figure 4.

Grand mean (black) and individual waveforms (gray) for normal hearing (n=10, left column) and hearing-impaired listeners (n=10, right column) for ripple contrast stimuli. Compression ratio increases from 1:1 at the top (A and B) to 4:1 at the bottom (I and J).

Figure 5.

Grand mean (gray line) and individual normalized ACC amplitudes (connected symbols) for normal hearing (A) and hearing-impaired listeners (B). A “*” indicates conditions significantly different from the 1:1 compression condition at an α = 0.05 level.

As was the case with the listeners with normal hearing in Experiment 1, ACC N1-P2 amplitude appears to become smaller in the 3:1 and 4:1 compression conditions relative to the baseline condition for the hearing-impaired listeners. Analysis of the ACC response data revealed a significant main effect of frequency compression on normalized ACC amplitude (F(4,36) = 4.42, p = 0.005). Relative to the baseline condition (M = 0.49, SD = 0.20), paired t-tests revealed that normalized ACC amplitude was significantly smaller in the 3:1 (M = 0.33, SD = 0.14, t(9) = 3.39, p = 0.03) but not the 4:1 (M = 0.32, SD = 0.14, t(9) = 2.45, p = 0.11) frequency compression condition. As with the normal hearing listeners in Experiment 1, the tendency of smaller ACC amplitudes in the listeners with hearing loss at higher compression ratios is consistent with our hypothesis for the ACC measure.

ACC amplitudes and performance on the behavioral measures both tended to decrease with increasing frequency compression ratio in the normal hearing and hearing-impaired groups. However, individual variability in ACC amplitude was not predictive of vowel identification, consonant identification, SNR-50, or ripple threshold at an α = 0.05 level in either group of listeners after including a categorical factor accounting for the effect of the frequency compression condition in the mixed effects models.

Discussion

The goal of these experiments was to investigate whether CAEPs, in particular the ACC, are sensitive to changes in nonlinear frequency compression ratio. The experimental conditions applied to the stimuli consisted of five frequency compression ratios (1:1, 1.5:1, 2:1, 3:1, and 4:1), encompassing the range of ratios that can be implemented in a wearable personal hearing aid. It was not a goal of this study to select or define optimal frequency compression settings on an individual basis, but rather to determine performance over a wide range of settings. This selection of parameters differs from previous investigations of the effects of nonlinear frequency compression, in which only a single frequency compression setting was compared to conventional processing. A second goal was to determine the degree to which performance on measures of speech perception (vowels, consonants, and sentences in noise) and behavioral discrimination of spectrally complex non-speech sounds are also affected in these same frequency compression conditions.

Previous work has demonstrated that amplitude of ACC responses elicited by ripple contrast stimuli is smaller in signal processing conditions that reduce spectral contrast, such as in cochlear implant vocoder simulations with varying numbers of simulated channels (Won et al. 2011) or with frequency allocation manipulations in cochlear implant mapping (Scheperle 2013). In the current experiments, we demonstrated significant negative effects of increasing frequency compression ratio on ACC amplitude in normal hearing and hearing-impaired listeners, consistent with diminished spectral contrast with increasing frequency compression. The contribution of loss of spectral contrast to decrements in ACC amplitude is supported by our finding that behavioral ripple discrimination thresholds were likewise negatively affected for compression ratios of 2:1, 3:1, and 4:1 in both normal hearing and hearing-impaired listeners. Ripple contrast stimuli, like the ones used in this study, are appealing in that they can readily be modified to have start frequencies matched to different knee points of compression (1500 Hz was used for these experiments). While the 0.5 RPO density stimulus was sufficient to elicit ACC responses from both normal hearing and hearing-impaired listeners across a range of frequency compression settings, it may be the case that alternate densities (e.g. 1 RPO, 2 RPO, etc.) or the use of a series of ripple densities to determine physiologic ripple threshold (similar to Won et al. 2011) may also be applied to evaluation of the effects of different frequency compression parameters. It may be that physiological threshold for the ACC response to ripple contrasts would serve as a better predictor of performance on various behavioral tasks across frequency compression conditions. This approach would necessarily be more time-consuming than one using a single density contrast stimulus. More work is needed to identify optimal stimulus characteristics for the purpose of using the ACC response to evaluate nonlinear frequency compression parameters.
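To illustrate why higher compression ratios would be expected to reduce spectral contrast in a ripple stimulus, the sketch below assumes a log-domain compression law above the knee point, a common formulation of nonlinear frequency compression that may differ from the exact processing used in these experiments. Under that assumption, octave spacing above the knee shrinks by the compression ratio, so a ripple of a given density in ripples per octave (RPO) at the input is packed more densely at the output. The function names are hypothetical.

```python
import math


def compress_frequency(f_in_hz, knee_hz=1500.0, ratio=2.0):
    """Map input to output frequency under an assumed log-domain law."""
    if ratio < 1.0:
        raise ValueError("compression ratio must be at least 1")
    if f_in_hz <= knee_hz:
        # Frequencies at or below the knee point are left unchanged.
        return f_in_hz
    # Above the knee, the distance from the knee in octaves is divided by ratio.
    return knee_hz * (f_in_hz / knee_hz) ** (1.0 / ratio)


def octaves_above_knee(f_hz, knee_hz=1500.0):
    """Distance of a frequency from the knee point in octaves."""
    return math.log2(f_hz / knee_hz)


def effective_ripple_density(input_rpo, ratio):
    """Ripple density at the output, above the knee, under the same law."""
    return input_rpo * ratio


for ratio in (1.0, 1.5, 2.0, 3.0, 4.0):
    f_out = compress_frequency(6000.0, 1500.0, ratio)
    rpo_out = effective_ripple_density(0.5, ratio)
    print(f"{ratio}:1  6000 Hz -> {f_out:7.1f} Hz, 0.5 RPO -> {rpo_out:.2f} RPO")
```

Under this assumed law, the two octaves between 1500 and 6000 Hz occupy half an octave at a 4:1 ratio, and a 0.5 RPO ripple above the knee is delivered at an effective 2 RPO, closer to the limits of spectral resolution.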

Results of the vowel identification task in Experiment 2, which indicated negative effects of combined low start frequency and high compression ratio on performance, are consistent with the findings of Perreau et al. (2013), who found significant negative effects of frequency compression (implemented in a commercial hearing aid) on vowel identification in listeners with hearing loss. As stated previously, many of the participants in the Perreau et al. (2013) study were fit with frequency compression settings selected using SoundRecover Fitting Assistant that were nearly identical to the maximum frequency compression condition in this study (1.5 kHz knee point, 4:1 compression ratio). This would suggest that those conditions necessary to maximize audibility of high frequency consonant sounds may contribute to deleterious effects on vowel identification in some users. It is, however, noteworthy that similar reduction in vowel identification scores using these same frequency compression conditions was not observed in the listeners with normal hearing. This differential effect may be due to broadening of spectral filters in the periphery in listeners with sensorineural hearing loss. It has been demonstrated that these listeners require greater peak to trough amplitude and/or greater separation of peaks in order to accurately discriminate between different formant distributions and that they need relatively more contrast to perform well as the degree of hearing loss increases (Leek et al. 1987).

Negative effects on consonant identification were found for both groups of listeners at the two highest frequency compression ratios. Other studies (Simpson et al. 2006) have shown negative effects on consonant identification scores in a subset of listeners where frequency compression settings were adjusted on an individual basis prior to testing. Our experimental design did not include individual adjustment of these parameters. It may be the case that our highest frequency compression conditions (3:1 and 4:1 ratios) were more aggressive than would have been typically prescribed for these listeners, contributing to these decrements. This highlights the negative effects on consonant identification of excessive nonlinear frequency compression settings. Clearly, great care should be taken in selecting these settings in young hearing aid users given that “optimal” and “excessive” frequency compression parameters may be more difficult to define in this population.

Significant negative effects on speech recognition in noise were also observed with increasing frequency compression ratio. This result is consistent with the findings of Ellis and Munro (2013), who found significant decrements in performance using similar materials (IEEE sentences) with ratios greater than or equal to 2:1 and a similarly low knee point of frequency compression (1.6 kHz). Though a significant negative main effect on speech in noise performance was observed in our hearing-impaired cohort, differences relative to the baseline (1:1) condition were not significant on the group level following correction for multiple comparisons. It may be that, with two lists per condition and each list consisting of only six sentences with five keywords each, the test was under-powered with respect to the size of the effect in hearing-impaired listeners.
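The coarse resolution of the measure can be seen from the scoring arithmetic. The sketch below assumes the standard QuickSIN scoring described by Killion et al. (2004), in which the six sentences of a list are presented from 25 to 0 dB SNR in 5 dB steps and SNR-50 is estimated as 27.5 minus the total number of keywords correct; the keyword counts are hypothetical and the exact scoring used in this study may differ.

```python
def quicksin_snr50(words_correct_per_sentence):
    """Estimate SNR-50 (dB) for one six-sentence QuickSIN list."""
    if len(words_correct_per_sentence) != 6:
        raise ValueError("a QuickSIN list has six sentences")
    if any(not 0 <= w <= 5 for w in words_correct_per_sentence):
        raise ValueError("each sentence has five keywords")
    # Starting level (25 dB) plus half the step size (2.5 dB) minus total correct.
    return 27.5 - sum(words_correct_per_sentence)


def condition_snr50(lists):
    """Average the SNR-50 estimates of the lists presented in one condition."""
    estimates = [quicksin_snr50(lst) for lst in lists]
    return sum(estimates) / len(estimates)


# Hypothetical keyword counts for the two lists of one condition.
list_a = [5, 5, 4, 3, 1, 0]
list_b = [5, 5, 5, 3, 2, 0]
print(quicksin_snr50(list_a))             # 9.5
print(quicksin_snr50(list_b))             # 7.5
print(condition_snr50([list_a, list_b]))  # 8.5
```

Because each list contributes only 30 keywords, a single keyword changes the list estimate by 1 dB and the two-list average by 0.5 dB, which limits the size of effect that can be resolved.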

Conclusions

The cumulative results of these experiments demonstrate the following: 1) the amplitude of ACC responses elicited by spectral ripple contrast stimuli is sensitive to frequency compression settings, and 2) performance on speech perception and ripple discrimination tasks is negatively affected as frequency compression ratios increase. Further work is needed to determine optimal stimulus and recording parameters for the application of the ACC response to the evaluation of frequency compression settings. These preliminary results suggest that the ACC measure may be a promising clinical tool for the verification and selection of appropriate frequency lowering settings in hearing aids for infants and young children, contributing to optimal speech and language outcomes in this population.

Acknowledgements

Financial support for this project was provided by the National Institutes of Health, National Institute on Deafness and Other Communication Disorders under awards F31DC012961, P50DC000242, and R01DC012082.

Wu Yu-Hsiang and Shawn Goodman provided invaluable technical advice without which this work could not have been completed.

Financial Disclosures/Conflicts of Interest:

This research was funded by the NIH/NIDCD.

References

- Anderson ES, Nelson DA, Kreft H, et al. Comparing spatial tuning curves, spectral ripple resolution, and speech perception in cochlear implant users. J Acoust Soc Am. 2011;130(1):364–375. doi: 10.1121/1.3589255.

- Alexander JM. Individual variability in recognition of frequency-lowered speech. Semin Hear. 2013;34:86–109.

- Bentler R, Walker E, McCreery R, et al. Nonlinear Frequency Compression in Hearing Aids: Impact on Speech and Language Development. Ear Hear. 2014;35(4):e143–e152. doi: 10.1097/AUD.0000000000000030.

- Billings CJ, Tremblay KL, Miller CW. Aided cortical auditory evoked potentials in response to changes in hearing aid gain. Int J Audiol. 2011;50(7):459–467. doi: 10.3109/14992027.2011.568011.

- Bohnert A, Nyffeler M, Keilmann A. Advantages of a non-linear frequency compression algorithm in noise. Eur Arch Otorhinolaryngol. 2010;267:1045–1053. doi: 10.1007/s00405-009-1170-x.

- Carter L, Golding M, Dillon H, et al. The detection of infant cortical auditory evoked potentials (CAEPs) using statistical and visual detection techniques. J Am Acad Audiol. 2010;21(5):347–356. doi: 10.3766/jaaa.21.5.6.

- Chang HW, Dillon H, Carter L, Van Dun B, Young ST. The relationship between cortical auditory evoked potential (CAEP) detection and estimated audibility in infants with sensorineural hearing loss. Int J Audiol. 2012;51(9):663–670. doi: 10.3109/14992027.2012.690076.

- Cone-Wesson B, Dowell RC, Tomlin D, et al. The auditory steady-state response: comparisons with the auditory brainstem response. J Am Acad Audiol. 2002;13(4):173–187.

- Ellis RJ, Munro KJ. Does cognitive function predict frequency compressed speech recognition in listeners with normal hearing and normal cognition? Int J Audiol. 2013;52(1):14–22. doi: 10.3109/14992027.2012.721013.

- Garnham J, Cope Y, Durst C, et al. Assessment of aided ABR thresholds before cochlear implantation. Br J Audiol. 2000;34(5):267–278. doi: 10.3109/03005364000000138.

- Glista D, Easwar V, Purcell DW, et al. A pilot study on cortical auditory evoked potentials in children: Aided CAEPs reflect improved High-Frequency audibility with frequency compression hearing aid technology. Int J Otolaryngol. 2012;2012:1–12. doi: 10.1155/2012/982894.

- Glista D, Scollie S. Modified verification approaches for frequency lowering devices. The Hearing Journal. 2009 Nov:1–11. Retrieved from http://www.audiologyonline.com/articles/modified-verification-approaches-for-frequency-871.

- Glista D, Scollie S, Bagatto M, et al. Evaluation of nonlinear frequency compression: Clinical outcomes. Int J Audiol. 2009;48(9):632–644. doi: 10.1080/14992020902971349.

- Golding M, Pearce W, Seymour J, et al. The relationship between obligatory cortical auditory evoked potentials (CAEPs) and functional measures in young infants. J Am Acad Audiol. 2007;18(2):117–125. doi: 10.3766/jaaa.18.2.4.

- Gorga MP, Beauchaine KA, Reiland JK. Comparison of onset and steady-state responses of hearing aids: implications for use of the auditory brainstem response in the selection of hearing aids. J Speech Hear Res. 1987;30(1):130–136. doi: 10.1044/jshr.3001.130.

- Gorga MP, Johnson TA, Kaminski JK, et al. Using a combination of click-and toneburst-evoked auditory brainstem response measurements to estimate pure-tone thresholds. Ear Hear. 2006;27(1):60–74. doi: 10.1097/01.aud.0000194511.14740.9c.

- Gorga MP, Worthington DW, Reiland JK, et al. Some comparisons between auditory brainstem response thresholds, latencies, and the pure-tone audiogram. Ear Hear. 1985;6(2):105–112. doi: 10.1097/00003446-198503000-00008.

- Hansen M. Lehre und Ausbildung in Psychoakustik mit psylab: freie Software fur psychoakustische Experimente. Fortschritte der Akustik. 2006;32(2):591.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118(2):1111–1121. doi: 10.1121/1.1944567.

- Herdman AT, Stapells DR. Thresholds determined using the monotic and dichotic multiple auditory steady-state response technique in normal-hearing subjects. Scand Audiol. 2001;30(1):41–49. doi: 10.1080/010503901750069563.

- Hillock-Dunn A, Buss E, Duncan N, et al. Effects of nonlinear frequency compression on speech identification in children with hearing loss. Ear Hear. 2014;35(3):353–365. doi: 10.1097/AUD.0000000000000007.

- Hillenbrand J, Getty LA, Clark MJ, et al. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97(5):3099–3111. doi: 10.1121/1.411872.

- Holm S. A simple sequentially rejective multiple test procedure. Scand J Stat. 1979:65–70.

- Killion MC, Niquette PA, Gudmundsen GI, et al. Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. J Acoust Soc Am. 2004;116(4):2395–2405. doi: 10.1121/1.1784440.

- Kiessling J. Hearing aid selection by brainstem audiometry. Scand Audiol. 1982;11(4):269–275. doi: 10.3109/01050398209087478.

- Leek MR, Dorman MF, Summerfield Q. Minimum spectral contrast for vowel identification by normal-hearing and hearing-impaired listeners. J Acoust Soc Am. 1987;81(1):148–154. doi: 10.1121/1.395024.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49(2B):467–477.

- Martin BA, Boothroyd A. Cortical, auditory, evoked potentials in response to changes of spectrum and amplitude. J Acoust Soc Am. 2000;107(4):2155–2161. doi: 10.1121/1.428556.

- Marynewich S, Jenstad LM, Stapells DR. Slow cortical potentials and amplification—part I: N1-P2 measures. Int J Otolaryngol. 2012;2012:1–11. doi: 10.1155/2012/921513.

- McCreery RW, Alexander J, Brennan MA, et al. The Influence of Audibility on Speech Recognition with Nonlinear Frequency Compression for Children and Adults with Hearing Loss. Ear Hear. 2014;35(4):440–447. doi: 10.1097/AUD.0000000000000027.

- Oates P, Stapells DR. Auditory brainstem response estimates of the pure-tone audiogram: Current status. Semin Hear. 1998;19(1):61–85.

- Ostroff JM, Martin BA, Boothroyd A. Cortical evoked response to acoustic change within a syllable. Ear Hear. 1998;19(4):290–297. doi: 10.1097/00003446-199808000-00004.

- Perreau AE, Bentler RA, Tyler RS. The contribution of a frequency-compression hearing aid to contralateral cochlear implant performance. J Am Acad Audiol. 2013;24(2):105–120. doi: 10.3766/jaaa.24.2.4.

- Scheperle RA. Doctoral dissertation. 2013. Relationships among peripheral and central electrophysiological measures of spatial / spectral resolution and speech perception in cochlear implant users. Retrieved from Iowa Research Online (http://ir.uiowa.edu/etd/5055/).

- Scollie S, Seewald R, Cornelisse L, et al. The desired sensation level multistage input/output algorithm. Trends Amplif. 2005;9(4):159–197. doi: 10.1177/108471380500900403.

- Sherbecoe RL, Studebaker GA. Supplementary formulas and tables for calculating and interconverting speech recognition scores in transformed arcsine units. Int J Audiol. 2004;43(8):442–448. doi: 10.1080/14992020400050056.

- Simpson A, Hersbach AA, McDermott HJ. Improvements in speech perception with an experimental nonlinear frequency compression hearing device. Int J Audiol. 2005;44(5):281–292. doi: 10.1080/14992020500060636.

- Simpson A, Hersbach AA, McDermott HJ. Frequency-compression outcomes in listeners with steeply sloping audiograms. Int J Audiol. 2006;45(11):619–629. doi: 10.1080/14992020600825508.

- Sininger YS, Abdala C, Cone-Wesson B. Auditory threshold sensitivity of the human neonate as measured by the auditory brainstem response. Hear Res. 1997;104(1–2):27–38. doi: 10.1016/s0378-5955(96)00178-5.

- Stapells DR. Threshold estimation by the tone-evoked auditory brainstem response: a literature meta-analysis. J Speech Lang Hear Res. 2000;24(2):74–83.

- Stapells DR, Oates P. Estimation of the pure-tone audiogram by the auditory brainstem response: a review. Audiol Neurootol. 1997;2(5):257–280. doi: 10.1159/000259252.

- Stueve MP, O'Rourke C. Estimation of hearing loss in children: comparison of auditory steady-state response, auditory brainstem response, and behavioral test methods. Am J Audiol. 2003;12(2):125–136. doi: 10.1044/1059-0889(2003/020).

- Turner CW, Souza PE, Forget LN. Use of temporal envelope cues in speech recognition by normal and hearing-impaired listeners. J Acoust Soc Am. 1995;97(4):2568–2576. doi: 10.1121/1.411911.

- Van Dun B, Carter L, Dillon H. Sensitivity of cortical auditory evoked potential detection for hearing-impaired infants in response to short speech sounds. Audiol Res. 2012;2(1):e13, 65–76. doi: 10.4081/audiores.2012.e13.

- Vander Werff KR, Brown CJ, Gienapp BA, et al. Comparison of auditory steady-state response and auditory brainstem response thresholds in children. J Am Acad Audiol. 2002;13(5):227–235.

- Wolfe J, John A, Schafer E, et al. Evaluation of nonlinear frequency compression for school-age children with moderate to moderately severe hearing loss. J Am Acad Audiol. 2010;21(10):618–628. doi: 10.3766/jaaa.21.10.2.

- Wolfe J, John A, Schafer E, et al. Long-term effects of non-linear frequency compression for children with moderate hearing loss. Int J Audiol. 2011;50(6):396–404. doi: 10.3109/14992027.2010.551788.

- Won JHH, Clinard CG, Kwon S, et al. Relationship between behavioral and physiological spectral-ripple discrimination. J Assoc Res Otolaryngol. 2011;12(3):375–393. doi: 10.1007/s10162-011-0257-4.