Abstract

Objectives

The hypothesis that extending the audible frequency bandwidth beyond the range currently implemented in most hearing aids can improve speech understanding was tested for normal-hearing and hearing-impaired participants using target sentences and spatially separated masking speech.

Design

The Hearing in Noise Test (HINT) speech corpus was re-recorded and four masking talkers were recorded at a sample rate of 44.1 kHz. All talkers were male native speakers of American English. Reception thresholds for sentences (RTS) were measured in two spatial configurations. In the asymmetric configuration, the target was presented from −45° azimuth and two colocated masking talkers were presented from +45° azimuth. In the diffuse configuration, the target was presented from 0° azimuth and four masking talkers were each presented from a different azimuth: +45°, +135°, −135°, and −45°. The new speech sentences, masking materials and configurations, collectively termed the ‘Hearing in Speech Test (HIST)’, were presented using lowpass filter cutoff frequencies of 4, 6, 8, and 10 kHz. For the normal-hearing participants, stimuli were presented in the sound field using loudspeakers. For the hearing-impaired participants, the spatial configurations were simulated using earphones, and a multi-band wide dynamic range compressor with a modified CAM2 fitting algorithm was used to compensate for each participant’s hearing loss.

Results

For the normal-hearing participants (N=24, mean age 40 years), the RTS improved significantly by 3.0 dB when the bandwidth was increased from 4 to 10 kHz, and a significant improvement of 1.3 dB was obtained from extending the bandwidth from 6 to 10 kHz, in both spatial configurations. Hearing-impaired participants (N=25, mean age 71 years) also showed a significant improvement in RTS with extended bandwidth, but the effect was smaller than for the normal-hearing participants. The mean decrease in RTS when the bandwidth was increased from 4 to 10 kHz was 1.3 dB for the asymmetric condition and 0.5 dB for the diffuse condition.

Conclusions

Extending bandwidth from 4 to 10 kHz can improve the ability of normal-hearing and hearing-impaired participants to understand target speech in the presence of spatially separated masking speech. Future studies of the benefits of extended high-frequency amplification should investigate other realistic listening situations, masker types, spatial configurations, and room reverberation conditions, to determine added value in overcoming the technical challenges associated with implementing a device capable of providing extended high-frequency amplification.

INTRODUCTION

Although the highest audible frequency for people with normal hearing is about 20 kHz, the effective upper frequency limit of most hearing aids is only 5–6 kHz, with many limited to 4 kHz (Dillon 2012; Moore et al. 2001; Valente 2002; Aazh et al. 2012). Newer fitting formulae, such as CAM2 (Moore et al. 2010), NAL-NL2 (Keidser et al. 2012) and DSL[i/o] (Scollie et al. 2005), require output and prescribe large amounts of gain to restore at least partial audibility for frequencies above 5 kHz. The large gain is required because the spectral energy of speech is lower for high-frequency than for low- or mid-frequency bands (Moore et al. 2008) and because hearing loss typically increases with increasing frequency. Large amounts of prescribed gain at high frequencies are difficult to achieve in hearing aids because of problems with acoustic feedback, especially when an open-canal fitting is used. An obvious question is whether extending the effective bandwidth of hearing aids beyond that currently available would provide benefit for hearing-impaired listeners, given the technical challenges associated with doing so.

High-frequency acoustic information has been shown to be beneficial for normal-hearing listeners for speech understanding (Amos & Humes 2007; Carlile & Schonstein 2006; Moore et al. 2010), sound quality (Moore & Tan 2003), and sound localization (Best et al. 2005). However, recent studies of the ability of hearing-impaired listeners to make use of high-frequency information, when its audibility was restored through amplification, have come to mixed conclusions (Plyler & Fleck 2006; Horwitz et al. 2007; Hornsby et al. 2011; Moore et al. 2010; Ahlstrom et al. 2014).

Some studies of hearing-impaired listeners have shown that hearing impairment greater than 55–60 dB, which may be associated with cochlear dead regions (Moore 2004), may limit the ability to make use of high-frequency amplification, negating its possible effectiveness and even leading to worse performance with amplification (Hogan & Turner 1998; Vickers et al. 2001; Baer et al. 2002). Other studies have shown that listeners with steeply-sloping high-frequency hearing loss may not judge sound quality to be better when high-frequency amplification is provided (Ricketts et al. 2008; Moore et al. 2011). In addition, methods for predicting speech intelligibility, such as the Speech Intelligibility Index (SII) (ANSI 1997), predict minimal additional benefit from high-frequency speech information in quiet situations, provided that the speech spectrum is fully audible for frequencies up to about 4 kHz, because most speech information is contained in the low and mid-range frequencies; this prediction has been borne out in some studies of hearing-impaired listeners (ANSI 1997; Hogan & Turner 1998; Plyler & Fleck 2006; Horwitz et al. 2011).

Yet, recent studies have also shown that hearing-impaired listeners are able to make use of high-frequency amplification to significantly improve speech understanding, especially in noisy or complex listening situations (Turner & Henry 2002; Hornsby et al. 2011; Plyler & Fleck 2006; Hornsby et al. 2006; Hornsby & Ricketts 2003; Moore et al. 2010; Ahlstrom et al. 2014). This has been shown for speech masked by noise and by speech, and for speech materials varying from consonants to sentences. High-frequency amplification may be most beneficial when the listener is bilaterally aided and under conditions with spatial separation between the target and masking sounds, which is common in real-world conditions (Keidser et al. 2006; Mendel 2007; Carlile & Schonstein 2006; Moore et al. 2010). Other research shows that children with hearing impairment are able to make use of high frequencies to improve learning of words in quiet and in noise (Stelmachowicz et al. 2002; Stelmachowicz et al. 2004; Pittman 2008).

A complication in assessing the benefit of high-frequency amplification is that the definition of what constitutes “high frequencies” for a hearing-impaired individual varies across studies. Generally, studies of the benefit of high-frequency amplification can be separated into two categories: the first defines high-frequency amplification as that which a hearing aid can provide (e.g., Plyler & Fleck 2006), and the second defines high-frequency amplification as that which extends beyond the range of what hearing aids currently provide (Hornsby et al. 2011; Moore et al. 2010). For the first category, both laboratory and field studies can be used, but for the second only simulations in the laboratory can be used. More research is needed to determine the benefits of extending bandwidth above the range commonly used in hearing aids, especially in complex environments.

The goal of this study was to determine the benefit of extended high-frequency amplification for participants with mild to severe hearing loss. Specifically, the study was designed to determine if normal-hearing and hearing-impaired participants would show significant improvement in speech understanding in the presence of spatially separated masking speech when the effective upper frequency limit was extended from 4 to 10 kHz in 2-kHz steps.

First, a corpus with extended bandwidth for both target sentences and masking speech was developed and characterized on a population of normal-hearing participants. Second, reception thresholds for sentences (RTS) for normal-hearing participants were measured in two spatial configurations to determine the effects of extending the upper cutoff frequency. Lastly, a device capable of providing sufficient output and gain through 10 kHz was simulated using insert earphones, and RTS values were measured for hearing-impaired participants for the same two spatial configurations, to determine the effect of extending the upper cutoff frequency. These results are compared with results for hearing-impaired participants listening unaided and aided with a novel contact hearing device (CHD) (Fay et al. 2013). The CHD uses a transducer that drives the tympanic membrane directly. The transducer receives both signal and power via a light source from a behind-the-ear (BTE) device. The CHD has a maximum effective output level of 90–110 dB SPL and gain before feedback of up to 40 dB in the frequency range from 0.125 to 10 kHz.

HEARING IN SPEECH TEST (HIST)

The Hearing in Speech Test (HIST) was developed to allow RTS values to be measured using speech materials with a wide bandwidth (Fay et al. 2013). The HIST is similar to the Hearing in Noise Test (HINT), but the HIST uses two to four competing speech maskers instead of one steady noise, and has a bandwidth of about 20 kHz compared to a bandwidth of 8 kHz for the HINT materials (Nilsson et al. 1994).

HIST Materials and Conditions

All HIST materials were recorded at a sample rate of 44.1 kHz in a single-walled soundproof booth (Acoustic Systems, Austin, Texas) that meets the standard for acceptable room noise for audiometric rooms (ANSI 1995). The Bruel and Kjaer type 4134 microphone used had a response that was essentially flat (±2 dB) from 0.004 to 20 kHz. The 25 lists of 10 target sentences from the HINT corpus were re-recorded using a male native speaker of American English. The talker was instructed to speak at a conversational rate and level, and care was taken to ensure that the level of the speech was maintained over the course of each sentence. The long-term root-mean square (RMS) level of each sentence was matched after recording. Pairs of lists were selected from the 25 ten-sentence lists to form twelve 20-sentence lists. HINT practice lists were also re-recorded and processed in the same manner.

Four male native speakers of American English (M1, M2, M3, and M4) were recorded as masking talkers. Each read the Rainbow Passage (Fairbanks 1960) followed by the Television Passage (Nilsson et al. 2005) at a conversational rate and level. Target and masking materials were high-pass filtered using a cutoff frequency of 70 Hz in order to remove low-frequency building noise. Masking files were edited to remove gaps between sentences and gaps corresponding to pauses for breath lasting longer than 50 ms, but natural gaps between words were not altered. Each talker was asked to maintain the level of the speech over the course of the recording, and if there was a part where the level dropped off noticeably, this section was removed from the masker file. The processed masking files were each approximately 3 minutes long. The RMS level across each entire file was matched to the RMS level of the target sentences.

Figure 1 shows spectra of the HIST target (dashed black line) and masking materials (M1–M4). The spectra were calculated using 2-ERBN-wide analysis bands, where ERBN is the equivalent rectangular bandwidth of the auditory filter for listeners with normal hearing (Glasberg & Moore 1990). The levels in such bands provide a more perceptually relevant representation of the high-frequency spectral energy of speech for a hearing-impaired ear than the commonly used one-third octave filter bands (Moore et al. 2008). The figure shows that both the target and masking HIST materials have significant energy up to and above 10 kHz, in contrast to the HINT materials (dash-dotted curve). The spectra of the HIST materials are generally in agreement with those of Moore et al. (2008) for extended-frequency British English speech materials. Differences of up to 10 dB in the target speech materials at the higher frequencies might be the result of different talkers or differences between American and British English.

Fig. 1.

Levels in 2-ERBN-wide bands of the new HIST materials, the original HINT materials (Nilsson et al. 1994), the wide-bandwidth materials from Moore et al. (2008), and the Minimum Audible Pressure (MAP) (Killion 1978).
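As an illustration of the 2-ERBN-wide analysis bands, the sketch below uses the ERBN and ERBN-number equations of Glasberg & Moore (1990). The function names are hypothetical, and the band layout (one ERBN-number unit on either side of a chosen center frequency) is an assumption; the exact band placement used for Fig. 1 is not stated here.

```python
import math

def erb_n_hz(f_hz):
    """ERB_N (Hz) of the normal auditory filter centered at f_hz."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)

def hz_to_erb_number(f_hz):
    """ERB_N-number (Cam) corresponding to frequency f_hz."""
    return 21.4 * math.log10(4.37 * f_hz / 1000.0 + 1.0)

def erb_number_to_hz(cam):
    """Inverse of hz_to_erb_number."""
    return (10.0 ** (cam / 21.4) - 1.0) * 1000.0 / 4.37

def two_erb_band_edges(fc_hz):
    """Edges of a band reaching 1 Cam either side of fc_hz (assumed layout)."""
    cam = hz_to_erb_number(fc_hz)
    return erb_number_to_hz(cam - 1.0), erb_number_to_hz(cam + 1.0)

for fc in (1000.0, 4000.0, 10000.0):
    lo, hi = two_erb_band_edges(fc)
    print(f"{fc:7.0f} Hz: ERB_N = {erb_n_hz(fc):6.1f} Hz, "
          f"2-ERB_N band {lo:7.0f} to {hi:7.0f} Hz")
```

With this layout, the band width in Hz is within about 1% of twice the ERBN at the center frequency.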

The HIST uses the same adaptive testing protocol as the HINT (Nilsson et al. 1994). The masker level is held constant while the target level is varied. Initially, the target is presented at a low level, such that correct repetition is unlikely. The first sentence is presented repeatedly and the level increased in 4-dB steps until the participant repeats it correctly. This allows the level corresponding to the RTS to be approached without “wasting” sentences. All other sentences are presented only once, with the level determined by the participant's response to the previous sentence. For sentences 2–4, the level is decreased by 4 dB after a correct response (all words repeated correctly) and increased by 4 dB after an incorrect response. A preliminary RTS estimate is then obtained by averaging the levels of the first four sentences and the level at which the fifth sentence would have been presented, based on the response to the fourth sentence. Sentence 5 is presented at this estimated RTS level, and adaptation continues with the step size reduced from 4 dB to 2 dB. The final RTS estimate is determined by averaging the levels of sentences 5–20 and the level at which a 21st sentence would have been presented. This RTS estimate is expressed as the level of the target relative to the overall level of the maskers, in dB.
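The adaptive track described above can be sketched as follows. This is a minimal illustration, not the test software: `run_rts_track` and the `respond` callback are hypothetical names, levels are expressed in dB relative to the masker level, and two details are assumptions (the level recorded for sentence 1 is the level at which it was finally repeated correctly, and that correct response lowers the level of sentence 2 by 4 dB).

```python
def run_rts_track(respond, start_level_db, n_sentences=20, max_repeats=50):
    """Return the RTS estimate (dB re masker level).

    respond(sentence_number, level_db) -> True if all words were repeated
    correctly (hypothetical callback standing in for the listener).
    """
    level = start_level_db
    # Sentence 1: repeat, raising the level in 4-dB steps, until correct.
    for _ in range(max_repeats):
        if respond(1, level):
            break
        level += 4.0
    else:
        raise RuntimeError("sentence 1 never repeated correctly")
    early_levels = [level]
    level -= 4.0  # assumption: the correct response lowers the next level

    # Sentences 2-4: 4-dB steps, down after correct, up after incorrect.
    for n in range(2, 5):
        early_levels.append(level)
        level += -4.0 if respond(n, level) else 4.0

    # Preliminary estimate: levels of sentences 1-4 plus the level at
    # which sentence 5 would have been presented.
    level = (sum(early_levels) + level) / 5.0

    # Sentences 5 to n_sentences: 2-dB steps starting at the estimate.
    late_levels = []
    for n in range(5, n_sentences + 1):
        late_levels.append(level)
        level += -2.0 if respond(n, level) else 2.0

    # Final estimate includes the level of the hypothetical next sentence.
    return (sum(late_levels) + level) / (len(late_levels) + 1)


# Idealized listener who is correct whenever the level is at least -4 dB.
rts = run_rts_track(lambda n, level: level >= -4.0, start_level_db=-20.0)
print(f"RTS estimate: {rts:.2f} dB")
```

For this idealized listener the track oscillates around the −4 dB threshold after sentence 5, so the estimate falls between the two levels visited.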

Testing was performed using either two (M1 and M2) or four masking talkers. The starting point within the M1 masker recording was randomly selected, and starting points for the other maskers were staggered at 5-second intervals within the masker recordings to ensure that the same sentence was not produced by more than one talker at the same time. All maskers were presented simultaneously, starting 2 seconds before the target speech and ending simultaneously with the target speech. The level for all maskers combined was fixed at 65 dB(A). When stimuli were presented in the free field, all loudspeakers were at head level and at a distance of 1 meter. Testing took place in a semi-anechoic soundproof booth with loudspeakers (Boston Acoustics RS 230) placed on monitor stands (On Stage Stands) at about ear level, 38” above the floor, and driven by QSC Audio RMX 850 amplifiers. The order of presentation of the sentence lists was automatically randomized by the software, and no lists were repeated for a participant.
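The masker timing and level arrangement can be sketched as below. The sketch rests on assumptions that are not stated in the text: the maskers are treated as equal in level and mutually uncorrelated, so that their powers add, and a start point that runs past the end of a recording is wrapped to its beginning. The function names are hypothetical.

```python
import math
import random

def per_masker_level_db(total_level_db, n_maskers):
    """Level of each masker so that n equal, uncorrelated maskers sum to
    total_level_db (power summation; assumed, not taken from the text)."""
    return total_level_db - 10.0 * math.log10(n_maskers)

def staggered_starts(n_maskers, file_duration_s, stagger_s=5.0, rng=random):
    """Random start point for the first masker; the others follow at
    stagger_s intervals, wrapping at the end of the file (assumption)."""
    first = rng.uniform(0.0, file_duration_s)
    return [(first + k * stagger_s) % file_duration_s
            for k in range(n_maskers)]

for n in (2, 4):
    print(n, "maskers:", round(per_masker_level_db(65.0, n), 1), "dB each")
print(staggered_starts(4, file_duration_s=180.0, rng=random.Random(1)))
```

Under these assumptions each masker would be about 3 dB below the combined level with two talkers and about 6 dB below it with four.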

HIST Validation Methods

For test validation purposes, the target and maskers were all presented from a loudspeaker at 0° azimuth (straight ahead), to allow comparison with the HINT using its standard method of administration. Participants were instructed to sit still during the presentation of the stimuli with their heads pointed straight ahead. RTS values for the HIST with both two and four competing talkers (M1 and M2 or M1, M2, M3, and M4) were determined by testing 24 normal-hearing participants, 11 male and 13 female, with a mean age of 36 years (range 22–61 years). Their audiometric thresholds were ≤ 20 dB HL from 0.25 to 10 kHz and speech discrimination scores were ≥ 92% using NU-6 test materials. Five RTS measurements were obtained for each participant for each HIST condition. For comparison, the same participants were tested with the HINT, using the standard unmodulated speech-shaped noise.

HIST Validation Results

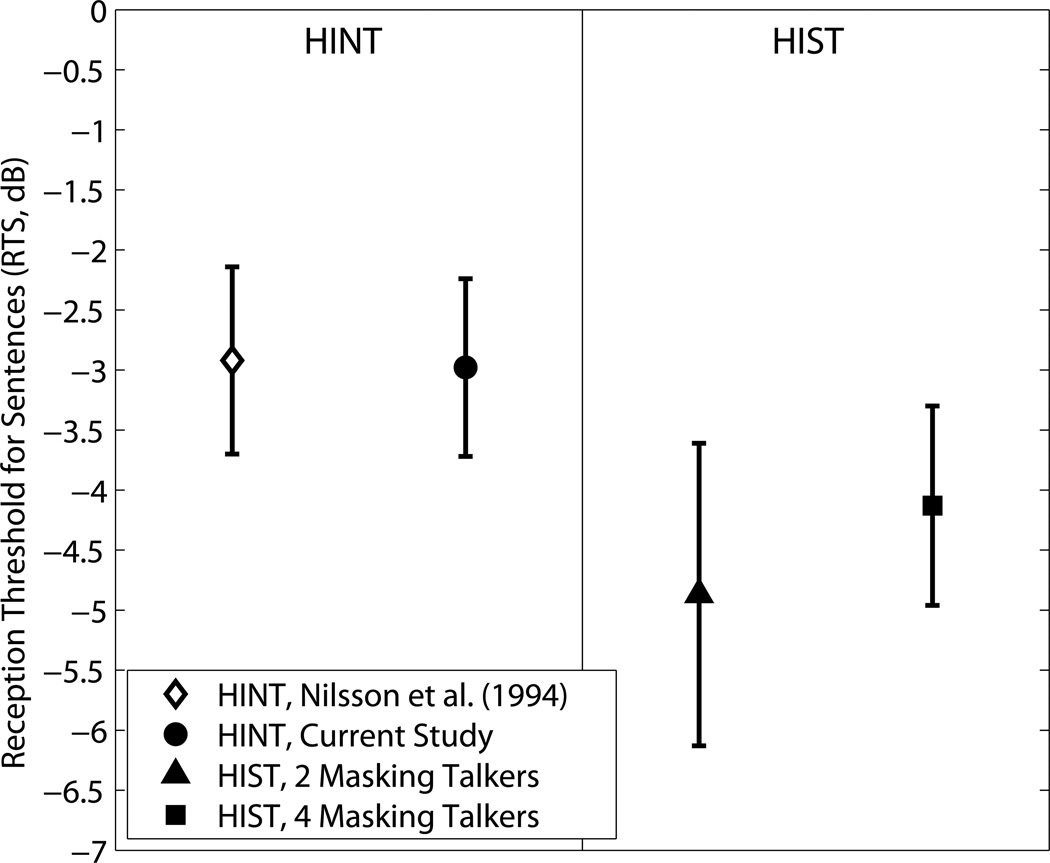

The RTS means and standard deviations (SD) for the HIST and HINT materials are shown in Fig. 2, together with published normative HINT scores for the colocated condition from Nilsson et al. (1994). The mean and SD of the normative scores (−2.9 ± 0.8 dB) for the HINT from Nilsson et al. (1994) are similar to the HINT scores measured in the current study (−3.0 ± 0.74 dB). A t-test showed no significant difference between the mean RTS values for the two studies. These results indicate that the sound-field setup was sufficient for the purposes of these measurements, and that the 24 normal-hearing participants tested in this study showed a similar range of scores to those found for the 24 normal-hearing participants in the 1994 study.

Fig. 2.

Mean Reception Threshold for Sentences (RTS) ± 1 standard deviation (SD) for the HINT from the normative study of Nilsson et al. (1994) (diamond), for the HINT from the current study (circle), and for the HIST 2- and 4-masking talker conditions (triangle and square, respectively).

The mean RTS and SD were −4.9 ± 1.26 dB for the HIST 2-masking-talker condition and −4.1 ± 0.83 dB for the HIST 4-masking-talker condition. The HIST scores were expected to be more variable than the HINT scores, since level fluctuations over time are greater for speech maskers than for a steady noise masker (Baer & Moore 1994). By this same principle, the HIST 2-masking-talker condition was expected to give more variable scores than the HIST 4-masking-talker condition, because adding more independent masking talkers together reduces the level fluctuations. However, the difference in variability across the different conditions was small. It should be noted that additional variability may occur for spatially separated configurations, as individuals may vary in their ability to utilize spatial separation.

EXPERIMENT 1: EFFECT OF EXTENDED BANDWIDTH ON SPEECH RECEPTION FOR NORMAL-HEARING PARTICIPANTS

Experiment 1 was designed to determine the effect of bandwidth on RTS values for normal-hearing participants. The upper frequency limit was varied from 4 to 10 kHz in 2-kHz increments using two conditions where the target speech and maskers were spatially separated.

Methods

All HIST materials (target and maskers) were lowpass filtered with cutoff frequencies of 4, 6, 8, and 10 kHz. The filters had a stop band attenuation of 60 dB and a 100-Hz transition region from pass band to stop band. The level for all masking talkers combined was set to 65 dB(A), and the level of the target was adapted to determine the RTS. Participants were seated in a soundproof booth in the center of the loudspeaker array, with all loudspeakers at a distance of 1 meter from the center of the participant’s head. Two spatial configurations were used, referred to as "asymmetric" and "diffuse". In the asymmetric configuration (Fig. 3a), the target was presented from −45° azimuth and two colocated masking talkers (M1 and M2) were presented from +45° azimuth. This configuration was chosen to allow the participant to take advantage of the head-shadow effect; the long-term target-to-masker ratio (TMR) was higher for the left than for the right ear, especially at high frequencies (Moore et al. 2010). In the diffuse configuration (Fig. 3b), the target was presented from 0° azimuth and the masking talkers, M1, M2, M3, and M4, were presented from +45°, +135°, −135°, and −45° azimuth, respectively. The symmetry of this configuration meant that there was not a “better ear” with a more favorable long-term TMR. However, the configuration may allow short-term “glimpses” of the target when the momentary TMR is greater at one ear than at the other (Bronkhorst & Plomp 1988). Also, the spatial separation of the target and maskers may help to overcome effects of informational masking (Freyman et al. 1999). Participants were instructed to keep their heads facing straight ahead and stationary during the presentation of the stimuli. For each participant, the RTS was measured once for each spatial configuration and bandwidth condition, and the order of testing of the different conditions was randomized.

Fig. 3.

(a) HIST asymmetric (2 masking talkers) and (b) diffuse (4 masking talkers) spatial configurations.
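To give a sense of how demanding the lowpass filter specification in the Methods is (60 dB stop-band attenuation, 100-Hz transition region, 44.1-kHz sample rate), the sketch below applies Kaiser's empirical formula for the length of a windowed linear-phase FIR filter. The filter type actually used is not stated in the text, so the FIR assumption and the function name are illustrative only.

```python
import math

def kaiser_num_taps(atten_db, transition_hz, fs_hz):
    """Approximate FIR length from Kaiser's formula:
    order ~ (A - 7.95) / (2.285 * delta_omega), delta_omega in rad/sample."""
    delta_omega = 2.0 * math.pi * transition_hz / fs_hz
    order = math.ceil((atten_db - 7.95) / (2.285 * delta_omega))
    return order + 1  # number of taps = order + 1

n_taps = kaiser_num_taps(atten_db=60.0, transition_hz=100.0, fs_hz=44100.0)
print(n_taps, "taps, about", round(1000.0 * n_taps / 44100.0, 1), "ms long")
```

Under these assumptions the estimate is on the order of 1600 taps, or roughly 36 ms of impulse response, which is practical for offline processing of recorded materials.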

Participants

A new group of 24 normal-hearing participants (thresholds ≤ 20 dB HL from 0.25 to 10 kHz and speech discrimination scores of ≥92%) was tested. There were 10 male and 14 female participants, with a mean age of 40 years (range 22–58 years).

Results

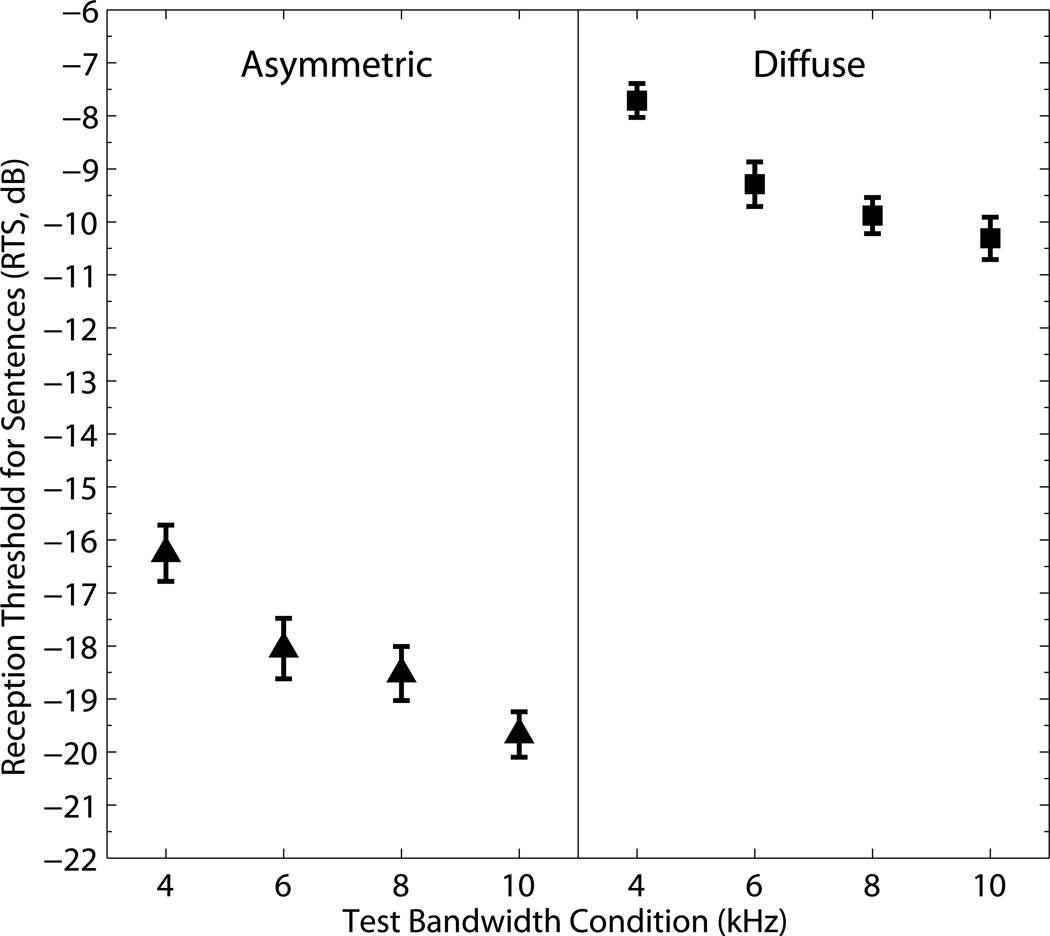

Figure 4 shows the measured RTS ± 1 standard error of the mean (SEM) for the asymmetric and diffuse configurations for the lowpass cutoff frequencies of 4, 6, 8, and 10 kHz. An analysis of variance (ANOVA) for repeated measures with within-subjects factors of spatial configuration and bandwidth revealed significant main effects of spatial configuration [F(1,23) = 498.97, p <0.001] and bandwidth [F(3,69) = 32.71, p <0.001], and no significant interaction [F(3,69) = 0.89, p = 0.5]. The significant effect of spatial configuration reflects the fact that RTS values were lower (better) for the asymmetric condition than for the diffuse condition, as expected.

Fig. 4.

Mean RTS ±1 SEM for the 24 normal-hearing participants for the HIST asymmetric (triangles) and diffuse (squares) spatial configurations with bandwidths of 4, 6, 8, and 10 kHz.

Post-hoc tests with Bonferroni correction showed significant differences (all p <0.001) between RTS values for the 4-kHz bandwidth and the other three bandwidths (6, 8, and 10 kHz), and a significant difference between the 6 and 10 kHz bandwidths (p <0.001). RTS values for the other two pairs of bandwidths (6 vs 8 and 8 vs 10 kHz) did not differ significantly. Increasing the bandwidth from 4 to 10 kHz improved the RTS by an average of 3.0 dB; 1.7 dB of that benefit came from increasing the bandwidth from 4 to 6 kHz, and 1.3 dB came from increasing the bandwidth from 6 to 10 kHz.

Summary

Normal-hearing participants were able to make use of the information from frequency components above 4 kHz to achieve lower RTS values in two spatial configurations. A relatively large improvement of 3 dB was found when the bandwidth was extended from 4 to 10 kHz, and a significant improvement of 1.3 dB was obtained from extending the bandwidth from 6 to 10 kHz. This indicates that extending the bandwidth beyond the frequency range achieved in most currently available hearing aids (above 6 kHz) can significantly improve speech intelligibility in some complex environments for normal-hearing participants.

EXPERIMENT 2: EFFECT OF EXTENDED BANDWIDTH ON SPEECH RECEPTION FOR HEARING-IMPAIRED PARTICIPANTS

Experiment 2 investigated whether hearing-impaired participants also obtained benefit from extending the bandwidth in the two HIST spatial configurations. Participants were tested with insert earphones, using signal processing that simulated the spatial conditions and provided gain prescribed by a modified version of the CAM2 fitting algorithm (Moore et al. 2010). Testing conditions included a simulated “unaided” reference condition, and aided conditions with effective upper frequency limits from 4 to 10 kHz in 2-kHz increments.

Methods

The HIST asymmetric and diffuse conditions as described in Experiment 1 and shown in Fig. 3 were simulated and a gain prescription was applied as described below. The equivalent diffuse-field masker level was set to 65 dB(A), and the level of the target was adapted. To simulate the spatial configurations, impulse responses were measured on KEMAR (Burkhard & Sachs 1975) at two microphone locations for each ear: the Tympanic Membrane (TM) location, which represents an “unaided” location, and a BTE location, which represents an “aided” microphone location above the pinna. Impulse responses were measured at both locations from the loudspeakers at 0°, ±45° and ±135° at a distance of 1 m while KEMAR was facing the front loudspeaker (0°), the same configuration as shown in Fig. 3b. A Bullet card (Mimosa Acoustics) driven by SYSid (Version 7, SPL, Sunnyvale, CA), generated chirp signals in the semi-anechoic soundbooth, and deconvolved the chirp to obtain an impulse response. Each impulse response was high-pass filtered with a Butterworth filter to remove frequency components below 70 Hz, and time-windowed with a 1-ms rise and 5-ms fall cosine-squared window.

For each aided condition tested, the HIST test materials were convolved with the appropriate right-ear impulse responses (BTE from the appropriate loudspeaker location). Then the right-ear signals were low-pass filtered with the appropriate cutoff frequency (4, 6, 8, or 10 kHz), summed, and passed through a multi-channel compressor, which was programmed to provide the prescribed gain, as described below. The stimulus was then presented to the right ear through an Etymotic ER-5A insert earphone. The same procedure was followed for the left ear. For the unaided condition, the simulation procedure was the same, except that no lowpass filtering was performed on the test materials, the TM microphone position was used, and the compressor was programmed to provide no gain.

Compressor and CAM2 Fitting

For the aided conditions, gain was provided by two independent 12-channel wide dynamic range compressors, one for each ear. Compression was used because it is required in everyday life to make weak sounds audible while preventing intense sounds from becoming uncomfortably loud. Low-level expansion was used to prevent microphone noise from being audible, as is commonly done in hearing aids (Dillon 2012). Table 1 shows the participant-independent compressor characteristics, including the frequency range, initial compression threshold (prior to possible modification as described below), expansion threshold, attack time, and release time for each channel. For each channel, the expansion threshold was set to the estimated channel level for 35 dB SPL speech, based on the data reported by Moore et al. (2008), or to the estimated microphone noise level for a typical hearing aid microphone, whichever was higher. The expansion ratio was 0.5 for all channels. The initial compression threshold was set to the estimated channel level for 45 dB SPL speech, or to 3 dB above the expansion threshold, whichever was higher. Time constants were designed to provide approximately the same number of cycles across the channels, which corresponds to a slow response at low frequencies and a fast response at high frequencies.

Table 1.

Participant-independent settings of the multi-channel compressor used to provide the prescribed gain.

| Channel # | # of bins | Low cutoff freq (Hz) | High cutoff freq (Hz) | Center freq (Hz) | Audiometric frequencies in channel (Hz) | Initial compression threshold (dB) | Expansion threshold (dB) | T attack (ms) | T release (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 0 | 182 | 91 | 125 | 31 | 25 | 55 | 550 |
| 2 | 1 | 182 | 545 | 364 | 250, 500 | 42 | 32 | 55 | 550 |
| 3 | 1 | 545 | 909 | 727 | 750 | 38 | 28 | 28 | 280 |
| 4 | 1 | 909 | 1273 | 1091 | 1000 | 31 | 21 | 18 | 180 |
| 5 | 1 | 1273 | 1636 | 1455 | 1500 | 29 | 19 | 14 | 140 |
| 6 | 2 | 1636 | 2363 | 2000 | 2000 | 30 | 20 | 10 | 100 |
| 7 | 2 | 2363 | 3091 | 2727 | 3000 | 27 | 17 | 7 | 70 |
| 8 | 3 | 3091 | 4181 | 3636 | 4000 | 25 | 16 | 6 | 60 |
| 9 | 5 | 4181 | 5999 | 5090 | -- | 23 | 17 | 4 | 40 |
| 10 | 6 | 5999 | 8181 | 7090 | 6000, 8000 | 22 | 18 | 3 | 30 |
| 11 | 5 | 8181 | 9999 | 9090 | 9000 | 20 | 17 | 3 | 20 |
| 12 | 4 | 9999 | 11635 | 10817 | 10000 | 20 | 17 | 3 | 20 |
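The threshold rules and the resulting static gain curve for one channel can be sketched as follows. This is an illustration under stated assumptions rather than the compressor that was used: gain is taken to be constant at its maximum between the expansion and compression thresholds, the expansion ratio is interpreted as input change divided by output change (so a ratio of 0.5 lowers the output by 2 dB per 1-dB drop in input), and the function names and example inputs are hypothetical.

```python
def channel_thresholds(speech35_db, speech45_db, mic_noise_db):
    """Expansion and initial compression thresholds for one channel.

    speech35_db / speech45_db: estimated channel levels for 35 and
    45 dB SPL speech; mic_noise_db: estimated microphone noise level.
    """
    expansion_thr = max(speech35_db, mic_noise_db)
    compression_thr = max(speech45_db, expansion_thr + 3.0)
    return expansion_thr, compression_thr

def static_gain_db(level_db, max_gain_db, cr, compression_thr,
                   expansion_thr, expansion_ratio=0.5):
    """Static gain (dB) at a given channel input level (assumed shape)."""
    if level_db >= compression_thr:
        # Compression: gain falls by (1 - 1/CR) dB per dB of input.
        return max_gain_db - (level_db - compression_thr) * (1.0 - 1.0 / cr)
    if level_db >= expansion_thr:
        # Linear region: maximum gain.
        return max_gain_db
    # Expansion: gain falls by (1/ER - 1) dB per dB below the threshold.
    return max_gain_db - (expansion_thr - level_db) * (1.0 / expansion_ratio - 1.0)

# Hypothetical inputs in which microphone noise dominates at low levels.
print(channel_thresholds(speech35_db=10.0, speech45_db=12.0, mic_noise_db=17.0))
# Channel 8 thresholds from Table 1 with a hypothetical 30-dB gain and CR of 2.
for level in (10.0, 20.0, 45.0):
    print(level, static_gain_db(level, 30.0, 2.0, 25.0, 16.0))
```

When the microphone noise estimate exceeds both speech-based levels, the two rules place the compression threshold exactly 3 dB above the expansion threshold, which is the spacing seen for channels 11 and 12 in Table 1.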

Each ear’s compressor was programmed to compensate for each individual participant's hearing loss using a modified version of the CAM2 fitting algorithm (Moore et al. 2010). Note that CAM2 does not prescribe compression thresholds or expansion thresholds. These were selected as described above. The purpose of the modification to CAM2 was to limit the maximum prescribed gain in each channel to what was believed to be achievable in a future wearable device (the CHD described in the introduction), based on feedback considerations of a widely-vented ear canal (Levy et al. 2013). Table 2 shows the gain limits that were used. The gain limits vary across frequency in the same way as for the CHD. For each channel, CAM2 prescribes a compression ratio (CR) and an insertion gain at the compression threshold (IGCT), which is the maximum gain applied. If the IGCT exceeded the gain limit, the prescription was modified by reducing the IGCT to the gain limit and raising the compression threshold (CT) to retain the same gain curve above the CT, using the formula CTnew = CTorig + (IGCTnew−IGCTorig) × CR / (1−CR). No gain reduction was applied for any ear for channels 1–6. For channels 7–12, the mean reduction in IGCT (with SD and maximum value in parentheses) was 0.1 (0.5, 3), 1.9 (2.6, 8), 3.2 (3.3, 10), 5.9 (5.2, 16), 11.1 (7.5, 24), and 11.2 (7.5, 25) dB, respectively. Some gain reduction was applied for 48 out of 50 ears, but the gain reductions were always ≤ 10 dB for channels 1–9 (frequencies up to 6 kHz).

Table 2.

Maximum gain limitations imposed on the CAM2 fitting algorithm.

| Frequency, kHz | 0.125 | 0.25 | 0.5 | 0.75 | 1.0 | 1.5 | 2.0 | 3.0 | 4.0 | 6.0 | 8.0 | 10.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Max. Gain, dB | 40 | 40 | 40 | 40 | 40 | 40 | 40 | 30 | 30 | 40 | 40 | 40 |
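The gain-limiting rule, CTnew = CTorig + (IGCTnew − IGCTorig) × CR / (1 − CR), can be checked with a short worked example. The sketch below is illustrative only: the function names and the numerical values are hypothetical, and the gain above the compression threshold is assumed to fall by (1 − 1/CR) dB per dB of input.

```python
def limit_prescription(igct_db, ct_db, cr, gain_limit_db):
    """Apply a maximum-gain limit while keeping the gain curve above CT.

    Returns (new IGCT, new CT). The prescription is unchanged when the
    IGCT is already within the limit.
    """
    if igct_db <= gain_limit_db:
        return igct_db, ct_db
    if cr <= 1.0:
        raise ValueError("formula is undefined for a linear channel (CR = 1)")
    new_igct = gain_limit_db
    new_ct = ct_db + (new_igct - igct_db) * cr / (1.0 - cr)
    return new_igct, new_ct

def gain_above_ct(level_db, igct_db, ct_db, cr):
    """Gain (dB) for an input level at or above the compression threshold."""
    return igct_db - (level_db - ct_db) * (1.0 - 1.0 / cr)

# Hypothetical prescription: 50 dB IGCT, CT of 25 dB, CR of 2, 40-dB limit.
new_igct, new_ct = limit_prescription(50.0, 25.0, 2.0, 40.0)
print(new_igct, new_ct)
print(gain_above_ct(60.0, 50.0, 25.0, 2.0),
      gain_above_ct(60.0, new_igct, new_ct, 2.0))
```

In this example the threshold rises from 25 to 45 dB, which is the input level at which the original prescription had already fallen to 40 dB of gain, so the two prescriptions give identical gains for all higher input levels.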

Procedures

For each ear of each participant, the insert earphone response at the participant's TM was determined using the Thévenin-equivalent source calibration method described by Lewis et al. (2009). In this method, the total sound pressure in the ear canal is decomposed into forward- and reverse-traveling pressure wave components, and pressure at the TM is estimated as the sum of the magnitudes of these two components. This method allows participant-specific estimation of TM pressure without the need for probe microphone measurements at the TM. The resulting insert earphone response was used to calibrate the compressor output to ensure that signals were presented at the desired level.
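The final step of this estimate, summing the magnitudes of the forward and reverse components, can be illustrated as below. The decomposition itself (which requires the Thévenin source parameters) is not shown; the complex pressures are hypothetical values in pascals at a single frequency, and the function names are illustrative.

```python
import math

P_REF_PA = 20e-6  # reference pressure for dB SPL

def level_db_spl(pressure_pa):
    """Level in dB SPL of a pressure magnitude given in pascals."""
    return 20.0 * math.log10(pressure_pa / P_REF_PA)

def tm_pressure_estimate(p_forward, p_reverse):
    """Estimated TM pressure: sum of the component magnitudes."""
    return abs(p_forward) + abs(p_reverse)

# Hypothetical components that are nearly out of phase at the probe,
# as happens near a standing-wave minimum in the ear canal.
p_fwd = complex(0.020, 0.0)
p_rev = complex(-0.016, 0.0)

print("measured at probe:", round(level_db_spl(abs(p_fwd + p_rev)), 1), "dB SPL")
print("estimated at TM:  ", round(level_db_spl(tm_pressure_estimate(p_fwd, p_rev)), 1), "dB SPL")
```

In this example the pressure at the probe underestimates the level by about 19 dB because the two components nearly cancel there, whereas the sum of magnitudes does not depend on their relative phase.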

For each participant, the RTS was measured once for each spatial and cutoff-frequency condition. The trials were presented in blocks with aided and unaided conditions to avoid programming and reprogramming the compressor multiple times during the testing. The presentation order of the blocks was randomized, as was the order of the conditions within each block.

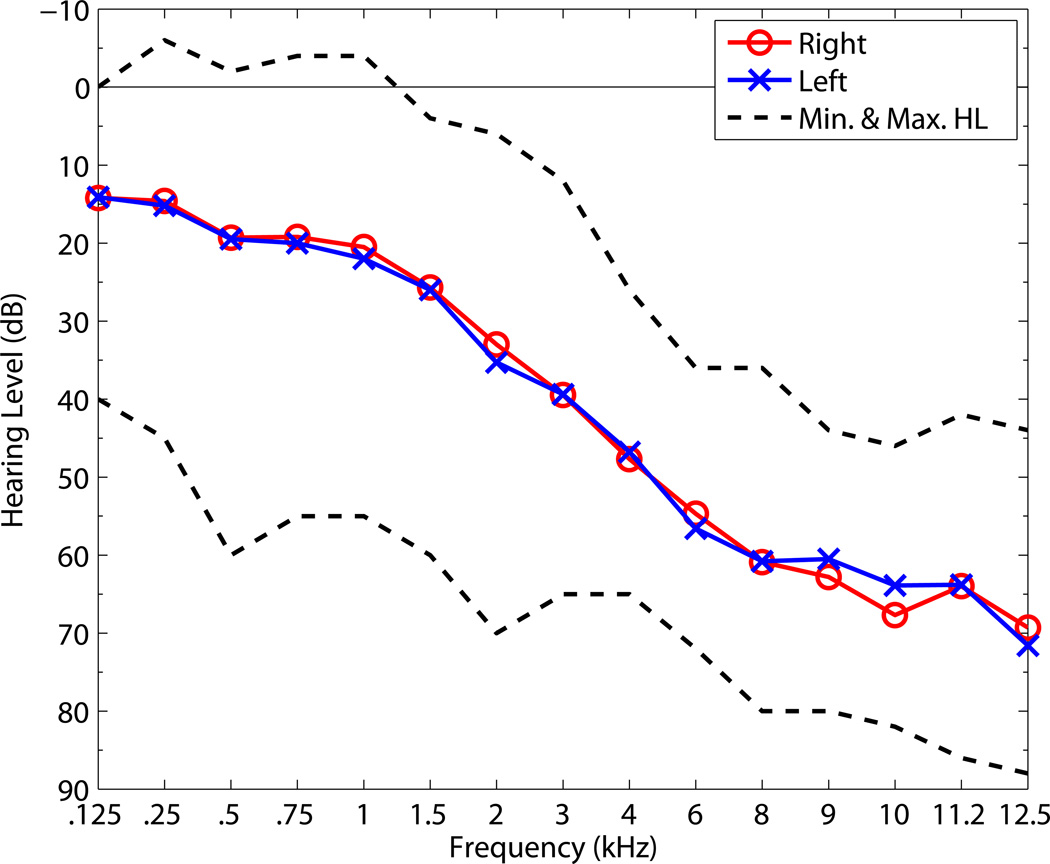

Participants

A group of 25 participants with symmetric mild to severe sensorineural hearing loss and speech discrimination scores of ≥70% was tested. See Fig. 5 for the mean right and left ear audiograms. There were 12 male and 13 female participants, with a mean age of 71 years (range 49–85 years).

Fig. 5.

Mean right ear (O) and left ear (X) hearing thresholds, in the frequency range 0.125 to 10 kHz, for the 25 hearing-impaired participants tested in Experiment 2, together with the minimum and maximum thresholds for each audiometric frequency (dashed lines). The mean thresholds are slightly offset from their correct positions along the abscissa.

Results

Figure 6 shows the measured RTS ± 1 SEM for the aided asymmetric and diffuse configurations at the 4-, 6-, 8-, and 10-kHz bandwidths, together with the RTS values for the unaided configurations. An ANOVA for repeated measures with within-subjects factors of spatial configuration (asymmetric and diffuse) and bandwidth (aided conditions only, with cutoff frequencies of 4, 6, 8, and 10 kHz), revealed a significant main effect of spatial configuration (F(1,24) = 474.199, p <0.0001); the RTS values were lower (better) for the asymmetric than for the diffuse condition, as expected. There was also a significant effect of bandwidth (F(3,72) = 2.730, p <0.05); performance improved with increasing bandwidth. The interaction was not significant (F(3,72) = 1.011, p = 0.393). Post hoc analyses with Bonferroni correction showed that the RTS was significantly lower for the 10-kHz than for the 4-kHz bandwidth (p = 0.016, for the combined asymmetric and diffuse configurations). No other pairwise comparisons were significant. Although the interaction was not significant, there was a trend for the effect of bandwidth to be larger for the asymmetric than for the diffuse condition; the mean decrease in RTS when the bandwidth was increased from 4 to 10 kHz was 1.3 dB for the asymmetric condition and 0.5 dB for the diffuse condition.

Fig. 6.

Mean RTS ± 1 SEM for the hearing-impaired participants in the HIST asymmetric (triangles) and diffuse (squares) configurations with test bandwidths of 4, 6, 8, and 10 kHz in the aided condition (BTE microphone location). RTS values are also shown for the reference “unaided” full bandwidth condition (TM microphone location).

The effect of aiding was assessed by comparing RTS values for the unaided configurations with those for the aided configurations with the 10-kHz bandwidth (since the stimuli were not lowpass filtered for the unaided configurations). A repeated-measures ANOVA was conducted with factors spatial configuration and aiding (unaided or aided). The effect of spatial configuration was significant (F(1,24) = 280.6, p < 0.0001), as expected. The effect of aiding was also significant (F(1,24) = 5.481, p = 0.028); overall performance was better in the aided condition. The interaction was significant (F(1,24) = 33.447, p < 0.0001). Post hoc tests showed that the mean RTS was 2.8 dB lower in the aided than in the unaided condition for the asymmetric configuration (p < 0.0001). The mean RTS was 0.7 dB higher in the aided condition than in the unaided condition for the diffuse configuration, but the difference was not significant (p = 0.27).

Discussion

For the asymmetric configuration, the hearing-impaired participants performed significantly better in the aided condition with a bandwidth of 10 kHz than in the unaided (wideband) condition. For the two spatial configurations taken together, the participants obtained significant benefit from extending the aided bandwidth from 4 to 10 kHz. This indicates that the hearing-impaired participants in this study were able to benefit from the extended bandwidth in these complex spatial configurations. The monotonic improvement in RTS for the asymmetric configuration as the bandwidth was increased from 4 to 6, 8, and 10 kHz suggests that the modified CAM2 algorithm, with the maximum gain limitation, at least partially restored the audibility of frequency components above 4 kHz. While the hearing-impaired participants clearly benefited from extended bandwidth, they obtained less benefit than the normal-hearing participants, which is not surprising and is consistent with the results of other studies, as discussed later.

The mean RTS was 2.8 dB lower in the aided than in the unaided condition for the asymmetric configuration, but was 0.7 dB higher in the aided than in the unaided condition for the diffuse configuration, although the latter difference was not statistically significant. The lack of a benefit from aiding in the diffuse configuration probably reflects the effects of microphone location. Recall that in the unaided condition, participants were tested using a simulated free-field, with a simulated microphone position at the TM. In contrast, in the aided condition, participants were tested using a simulated BTE microphone position. The use of a TM microphone location might have improved the ability to use spatial cues provided by the pinna in the unaided condition (Jin et al. 2002). A recent study comparing a pinna cue-preserving microphone position with a position that did not preserve pinna cues showed benefit for front-back sound localization for the former but no benefit for spatial release from speech-on-speech masking (Jensen et al. 2013). Also, in the diffuse configuration, the TM microphone location used in the unaided condition would have provided some shielding from the masking sounds towards the rear of the head, leading to a better TMR.

To assess the magnitude of the effect of microphone location on the TMR, we calculated the difference in effective TMR for the two locations, using the KEMAR head-related transfer functions (HRTFs) and weighting the contribution of different frequency regions using the critical band SII weighting values from Table 1 of ANSI (1997). The TMR in the free field was set to 0 dB. The steps were: (1) At each frequency, the ratio of the target HRTF to the masker HRTF was calculated using the HRTFs for the BTE microphone location. When there were multiple maskers, the denominator was a power-sum of the HRTFs for the different masker locations; (2) Step 1 was repeated using the HRTFs for the TM microphone location; (3) The difference (in dB) between the outcomes for Steps 1 and 2 was calculated; (4) The differences obtained in Step 3 were weighted using the SII weights and integrated across frequency to get the SII-weighted difference in effective TMR in dB.
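The four steps above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names are our own, the HRTF magnitudes and band weights in the example are hypothetical placeholders (the real values would come from the KEMAR measurements and from Table 1 of ANSI, 1997), and the difference is taken as TM minus BTE so that a positive value means a higher effective TMR at the TM location. Because only the difference between the two microphone locations is computed, a fixed free-field TMR offset cancels out.

```python
import math


def per_band_tmr_db(target_db, maskers_db):
    """Steps 1 and 2: TMR (dB) in each band for one microphone location.

    target_db  -- HRTF magnitude (dB) per band for the target direction
    maskers_db -- one list per masker, each holding HRTF magnitudes (dB) per band
    Multiple maskers are combined as a power sum in the denominator.
    """
    tmr = []
    for band, target in enumerate(target_db):
        masker_power = sum(10.0 ** (m[band] / 10.0) for m in maskers_db)
        tmr.append(target - 10.0 * math.log10(masker_power))
    return tmr


def sii_weighted_tmr_difference_db(tmr_tm_db, tmr_bte_db, band_weights):
    """Steps 3 and 4: weighted sum across bands of the (TM - BTE) TMR difference.

    Weights are normalised to sum to 1 (an assumption; it has no effect if the
    band-importance weights already sum to 1).
    """
    total = sum(band_weights)
    return sum(
        w * (tm - bte) for w, tm, bte in zip(band_weights, tmr_tm_db, tmr_bte_db)
    ) / total


# HYPOTHETICAL three-band example with two maskers (placeholder values only)
weights = [0.5, 0.3, 0.2]
tmr_bte = per_band_tmr_db([0.0, 0.0, 0.0], [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
tmr_tm = per_band_tmr_db([0.0, 1.0, 2.0], [[0.0, -1.0, -2.0], [0.0, -1.0, -2.0]])
diff = sii_weighted_tmr_difference_db(tmr_tm, tmr_bte, weights)
print(f"SII-weighted difference in effective TMR: {diff:.1f} dB")
```

The calculation would be run separately for each ear and each spatial configuration, using the HRTFs appropriate to that ear.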

We adopt the convention that a higher effective TMR for the TM microphone location has a positive sign. For the asymmetric configuration, when the target was to the left, the difference in effective TMR was 0.6 dB for the left ear and −0.4 dB for the right ear. Thus, the overall effect was small. For the diffuse configuration, the difference in effective TMR was 2.1 dB for the left ear and 2.7 dB for the right ear. Thus, the TMR was markedly higher for the TM location. This can account for the lack of benefit of aiding for the diffuse condition, as shown in Figure 6. If the aided condition had simulated an in-the-ear or completely-in-the-canal hearing aid, then a benefit of aiding would probably have been obtained even in the diffuse configuration.

It is possible that the fast-acting compression used here somehow interfered with the ability to listen in the dips at high frequencies in the diffuse condition. However, it has been shown that fast-acting compression can actually slightly improve the ability to listen in the dips of a masker with very distinct spectral and temporal dips when the target and masker are colocated (Moore et al. 1999). Possibly, the independent fast-acting compression at the two ears disrupted the use of interaural level cues, but if that were a material effect one would have expected aiding to have a deleterious effect in the asymmetric condition, which was not the case.

We do not think that the lack of benefit from aiding in the diffuse configuration was due to the use of low-level expansion in the simulated hearing aid. The expansion thresholds had low values, and the expansion would not have affected the audibility of speech cues when the overall level of the speech was above 50 dB SPL. The target speech level at the RTS for the diffuse configuration was always above 56 dB SPL.

The results can be compared to those obtained using a similar cohort of 13 hearing-impaired participants, also using the HIST materials and protocol, with the CHD described in the introduction (Fay et al. 2013). The study of Fay et al. did not investigate the effects of bandwidth, but instead measured performance when amplification was provided over the entire frequency range up to 10 kHz. Thus, the appropriate RTS values for comparison from the current study are those for the unaided condition and for the aided condition with 10-kHz bandwidth. These RTS values are shown in Table 3. The 13 participants in the CHD study consisted of a subset of 12 of the 25 participants used in this study, and one additional participant. Table 3 shows both the mean data from the current study and data for the 12 participants who were common to both studies.

Table 3.

Comparison of the present results (unaided and aided with 10-kHz bandwidth) with those obtained by Fay et al. (2013) using a CHD, for the HIST asymmetric and diffuse configurations.

| | Asymmetric, unaided | Asymmetric, aided | Diffuse, unaided | Diffuse, aided |
|---|---|---|---|---|
| This study | −8.9 ± 1.0 | −11.8 ± 0.7 | −3.2 ± 0.7 | −2.3 ± 0.5 |
| Subset of 12 participants common to both studies | −10.1 ± 1.0 | −12.0 ± 0.9 | −3.4 ± 0.8 | −2.8 ± 0.8 |
| CHD (13 participants) | −9.9 ± 1.1 | −12.7 ± 1.1 | −5.0 ± 1.0 | −4.7 ± 0.9 |

Each cell shows the mean RTS for that condition ± 1 SEM.

For the aided conditions for the subset of 12 participants, the mean RTS values obtained using the CHD are somewhat better (more negative) than for the present study, by about 1 dB for the asymmetric configuration and by about 2 dB for the diffuse configuration. However, the unaided RTS values were also slightly better for the CHD study. The overall benefit of aiding for the asymmetric configuration was larger for the CHD than for the same 12 participants tested here. Methodological differences between the studies could account for these differences. In the present study, the spatially separated sound sources were simulated using KEMAR HRTFs, whereas for the CHD study, speech materials were presented over loudspeakers in the free field, which effectively means that participants were listening using their own HRTFs. Also, in the real free-field environment, participants can make use of small head movements to better localize the target and masker speech and to optimize the TMR, while in the simulated environment the fixed HRTFs do not allow any benefit from head movements (Begault et al. 2001). Participants in the CHD study were instructed to keep their heads still, but there is no guarantee that they did this.
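As a quick check on this comparison, the benefit of aiding can be computed directly from the rounded means in Table 3, taking unaided minus aided RTS so that a positive value means the aided RTS was lower (better). This is only an illustrative sketch; the dictionary layout and the function name `aiding_benefit_db` are our own, and differences computed from rounded table entries may deviate slightly from values derived from the unrounded data.

```python
# Mean RTS values (dB) transcribed from Table 3, stored as (unaided, aided)
TABLE_3 = {
    "This study": {"asymmetric": (-8.9, -11.8), "diffuse": (-3.2, -2.3)},
    "Subset of 12": {"asymmetric": (-10.1, -12.0), "diffuse": (-3.4, -2.8)},
    "CHD (13 participants)": {"asymmetric": (-9.9, -12.7), "diffuse": (-5.0, -4.7)},
}


def aiding_benefit_db(unaided, aided):
    """Benefit of aiding in dB; positive means the aided RTS was lower (better)."""
    return round(unaided - aided, 1)


for group, configs in TABLE_3.items():
    for config, (unaided, aided) in configs.items():
        benefit = aiding_benefit_db(unaided, aided)
        print(f"{group}, {config}: {benefit:+.1f} dB")
```

For the asymmetric configuration this gives a benefit of about 2.8 dB for the CHD and about 1.9 dB for the subset of 12 participants, in line with the larger benefit noted for the CHD.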

GENERAL DISCUSSION

Overall, the hearing-impaired participants had poorer (more positive) RTS values in both spatial configurations than did the normal-hearing participants. In the asymmetric condition, the effect of extending the bandwidth from 4 to 10 kHz was smaller for the hearing-impaired participants than for the normal-hearing participants, although for both populations the effect was significant. The normal-hearing participants showed a progressive benefit of increasing the bandwidth from 4 to 10 kHz in the asymmetric condition, whereas a previous study (Moore et al. 2010) showed a benefit of increasing the bandwidth from 5 to 7.5 kHz, but no further benefit of increasing the bandwidth to 10 kHz. Moore et al. (2010) used British English target speech and two-talker masker speech and a spatial configuration similar to the asymmetric configuration, but with the loudspeakers located at ±60°, instead of the present ±45°. It is not clear whether this can account for the difference in results across studies.

In the asymmetric configuration, the head-shadow effect led to a long-term TMR advantage at the left ear, and this advantage increased with increasing frequency (Masterton et al. 1969; Heffner & Heffner 2008), being especially large above 5 kHz (Moore et al. 2010). This probably contributed to the benefit obtained from increasing bandwidth for that configuration. However, the hearing-impaired participants showed less benefit than the normal-hearing participants. There are at least four possible reasons for this. One is connected with “listening in the dips”, which occurs when the masking sounds have distinct amplitude fluctuations, as in the present study. The benefit from extended bandwidth presumably depends on the ability to listen in the dips at high frequencies. The hearing-impaired participants may have been less able than the normal-hearing participants to do this, because the former require higher TMRs to reach the RTS, and at high TMRs dip listening becomes less effective (Bernstein & Grant 2009). A second possible reason is that, at the higher frequencies, the prescribed gain was limited to 40 dB, whereas CAM2 (without the gain limits implemented in the current study) actually prescribes substantially higher gains for low-level signals. Thus, at the higher frequencies, only partial audibility was restored, limiting the benefit from amplification for the hearing-impaired participants, and perhaps also restricting the ability to listen in the dips. The use of low-level expansion in the simulated hearing aid may have contributed to the limited restoration of audibility, since the RTS values for five participants correspond to target speech levels slightly below 50 dB SPL, the level below which the expansion would have led to reduced audibility. A comparison of CAM2 and NAL-NL2 (which prescribes less gain at high frequencies than CAM2) showed a slight preference by hearing-impaired participants for CAM2 (Moore & Sek 2013). Thus, further study with the full CAM2 prescription or other high-frequency gain prescription algorithms would be useful to investigate the maximum benefit that could be achieved by hearing-impaired participants.

A third possible reason for the lower benefit of hearing-impaired participants from extended bandwidth is the reduced frequency selectivity that is often associated with cochlear hearing loss (Glasberg & Moore 1986). This would lead to greater upward spread of masking from low frequencies to higher frequencies. Finally, some of the participants may have had dead regions at high frequencies, which would limit their ability to make use of amplified high-frequency components (Moore 2004).

In the diffuse configuration, the normal-hearing participants showed progressive benefits from increased bandwidth (Fig. 4, right half), whereas the hearing-impaired participants showed a much smaller and less regular effect (Fig. 6, right half). A possible explanation for this is that the diffuse configuration is symmetric, so the long-term TMR is the same at the two ears. Normal-hearing participants may still be able to use information from “glimpses” of the target speech during brief time periods when the momentary TMR is higher at one ear than at the other (Bronkhorst & Plomp 1988). The glimpses are potentially more useful at high frequencies than at low frequencies, since the head-shadow effect is greater at high frequencies. Hearing-impaired participants may be less able to take advantage of glimpses at high frequencies (Hopkins & Moore 2009; Ruggles & Shinn-Cunningham 2011). In addition, the fast compression used at high frequencies would have introduced “cross modulation” between the target and maskers, which might have made it harder to perceptually segregate the target and maskers (Stone & Moore 2003; Stone & Moore 2004).

Differences between studies in what is considered “high frequency” amplification may affect the overall conclusions reached about the benefit of extended high-frequency amplification. It is interesting to note that significant benefits of increasing bandwidth would not have been observed for the hearing-impaired group if the maximum cutoff frequency had been limited to 6 kHz, which is the limit of most current devices. Thus, a different conclusion about the benefit of high-frequency amplification may be drawn depending on the definition of “high frequency” that is used.

PROPOSALS FOR FURTHER WORK

Reverberation may have strong effects on the ability to use high frequencies in real-world scenarios, as reverberation smears temporal modulations, which can lead to poor intelligibility for hearing-impaired participants (Plomp & Duquesnoy 1980). Importantly, reverberation time typically decreases with increasing frequency in real rooms, since room surfaces and furnishings tend to absorb high frequencies more than low frequencies. Increasing the bandwidth of hearing aids may be especially important under reverberant conditions, since the high-frequency components in speech will be less corrupted by reverberation. This may shift the relative importance of different frequency regions in speech towards higher frequencies. In other words, the frequency-importance functions assumed in procedures like the SII may not be valid for reverberant spatially complex environments (see also Apoux et al. 2013). Further work is needed to explore the benefit of extended bandwidth in hearing aids under reverberant conditions.

The effects of the microphone location described earlier bring up an interesting question: if the BTE microphone had been moved to a more favorable location, closer to the ear-canal entrance, could the hearing-impaired participants have used the pinna shadow to obtain a larger benefit of increased bandwidth in the diffuse condition? Further investigation into the effect of microphone location separately from and in addition to the benefit of extended bandwidth is important, as is assessment of the interaction between the two. A microphone closer to the ear canal may bring benefits in terms of pinna shadow, but such a location may limit the maximum gain that can be provided (and thus the effective bandwidth provided) in an open-canal configuration, due to air-conducted feedback (Levy et al. 2013).

An alternative way of making high-frequency information in speech available to a hearing-impaired listener is via frequency lowering (Robinson et al. 2007). Many manufacturers of hearing aids now provide some form of frequency lowering as an option. However, there is on-going debate about the effectiveness of frequency lowering (Souza et al. 2013). Further research is needed to compare the relative benefits of extended bandwidth and frequency lowering, and to assess candidature for one or the other.

In summary, it is clear from this study that there are advantages of extended bandwidth for the hearing impaired in at least some circumstances. Future studies of the benefits of extended high-frequency amplification should investigate other realistic listening situations, masker types, spatial configurations, and room reverberation conditions, to determine if there is real value in overcoming the technical challenges associated with implementing a device capable of providing extended high-frequency amplification. As all controlled laboratory studies of speech intelligibility have limitations, wearable devices providing extended high-frequency amplification, such as the CHD, allow the best assessment of “real-world” benefit, including subjective benefit.

CONCLUSIONS

A wideband version of the HINT, called the HIST, has been developed and validated. The HIST uses speech maskers rather than a noise masker.

Extending bandwidth from 4 to 10 kHz improved speech recognition in the presence of spatially separated masking speech for normal-hearing and hearing-impaired participants, but the effect was smaller for the latter than for the former. The benefit of extended bandwidth tended to be larger for the asymmetric than for the diffuse spatial configuration, but this effect was not statistically significant.

More investigation is needed into the benefit of providing extended high-frequency amplification for hearing-impaired participants in complex spatial environments, including environments with reverberation. Also, the effects of microphone location, compression amplification, and low-level expansion on the benefit from high-frequency amplification need further investigation.

ACKNOWLEDGMENTS

Funding for this study was provided in part by SBIR grant R44 DC008499 from the NIDCD of NIH to EarLens Corporation. The authors declare that they have a financial interest in EarLens Corporation. We thank the three reviewers for helpful comments on an earlier version of this paper.

REFERENCES

- Aazh H, Moore BCJ, Prasher D. The accuracy of matching target insertion gains with open-fit hearing aids. Am J Audiol. 2012;21:175–180. doi: 10.1044/1059-0889(2012/11-0008).

- Ahlstrom JB, Horwitz AR, Dubno JR. Spatial separation benefit for unaided and aided listening. Ear Hear. 2014;35:72–85. doi: 10.1097/AUD.0b013e3182a02274.

- Amos NE, Humes LE. Contribution of high frequencies to speech recognition in quiet and noise in listeners with varying degrees of high-frequency sensorineural hearing loss. J Speech Lang Hear Res. 2007;50:819–834. doi: 10.1044/1092-4388(2007/057).

- ANSI. ANSI/ASA S12.2-1995, Criteria for Evaluating Room Noise. New York: American National Standards Institute; 1995.

- ANSI. ANSI/ASA S3.5-1997, Methods for the calculation of the speech intelligibility index. New York: American National Standards Institute; 1997.

- Apoux F, Yoho SE, Youngdahl CL, et al. Role and relative contribution of temporal envelope and fine structure cues in sentence recognition by normal-hearing listeners. J Acoust Soc Am. 2013;134:2205–2212. doi: 10.1121/1.4816413.

- Baer T, Moore BCJ. Effects of spectral smearing on the intelligibility of sentences in the presence of interfering speech. J Acoust Soc Am. 1994;95:2277–2280. doi: 10.1121/1.408640.

- Baer T, Moore BCJ, Kluk K. Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. J Acoust Soc Am. 2002;112:1133–1144. doi: 10.1121/1.1498853.

- Begault DR, Wenzel EM, Anderson MR. Direct comparison of the impact of head tracking, reverberation, and individualized head-related transfer functions on the spatial perception of a virtual speech source. J Audio Eng Soc. 2001;49:904–916.

- Bernstein JG, Grant KW. Auditory and auditory-visual intelligibility of speech in fluctuating maskers for normal-hearing and hearing-impaired listeners. J Acoust Soc Am. 2009;125:3358–3372. doi: 10.1121/1.3110132.

- Best V, Carlile S, Jin C, et al. The role of high frequencies in speech localization. J Acoust Soc Am. 2005;118:353–363. doi: 10.1121/1.1926107.

- Bronkhorst AW, Plomp R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. J Acoust Soc Am. 1988;83:1508–1516. doi: 10.1121/1.395906.

- Burkhard MD, Sachs RM. Anthropometric manikin for acoustic research. J Acoust Soc Am. 1975;58:214–222. doi: 10.1121/1.380648.

- Carlile S, Schonstein D. Frequency bandwidth and multi-talker environments. Presented at the 120th Convention of the Audio Engineering Society. 2006;118:353–363.

- Dillon H. Hearing Aids. 2nd ed. Turramurra, Australia: Boomerang Press; 2012.

- Fairbanks G. Voice and articulation drillbook. 2nd ed. New York: Harper; 1960.

- Fay JP, Perkins R, Levy SC, et al. Preliminary evaluation of a light-based contact hearing device for the hearing impaired. Otol Neurotol. 2013;34:912–921. doi: 10.1097/MAO.0b013e31827de4b1.

- Freyman RL, Helfer KS, McCall DD, et al. The role of perceived spatial separation in the unmasking of speech. J Acoust Soc Am. 1999;106:3578–3588. doi: 10.1121/1.428211.

- Glasberg BR, Moore BCJ. Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments. J Acoust Soc Am. 1986;79:1020–1033. doi: 10.1121/1.393374.

- Glasberg BR, Moore BCJ. Derivation of auditory filter shapes from notched-noise data. Hear Res. 1990;47:103–138. doi: 10.1016/0378-5955(90)90170-t.

- Heffner H, Heffner R. High-frequency hearing. In: Dallos P, Oertel D, editors. Audition. Vol. 3. San Diego: Elsevier; 2008. pp. 55–60.

- Hogan CA, Turner CW. High-frequency audibility: benefits for hearing-impaired listeners. J Acoust Soc Am. 1998;104:432–441. doi: 10.1121/1.423247.

- Hopkins K, Moore BCJ. The contribution of temporal fine structure to the intelligibility of speech in steady and modulated noise. J Acoust Soc Am. 2009;125:442–446. doi: 10.1121/1.3037233.

- Hornsby BW, Johnson EE, Picou E. Effects of degree and configuration of hearing loss on the contribution of high- and low-frequency speech information to bilateral speech understanding. Ear Hear. 2011;32:543–555. doi: 10.1097/AUD.0b013e31820e5028.

- Hornsby BW, Ricketts TA. The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. J Acoust Soc Am. 2003;113:1706–1717. doi: 10.1121/1.1553458.

- Hornsby BW, Ricketts TA, Johnson EE. The effects of speech and speechlike maskers on unaided and aided speech recognition in persons with hearing loss. J Am Acad Audiol. 2006;17:432–447. doi: 10.3766/jaaa.17.6.5.

- Horwitz AR, Ahlstrom JB, Dubno JR. Speech recognition in noise: estimating effects of compressive nonlinearities in the basilar-membrane response. Ear Hear. 2007;28:682–693. doi: 10.1097/AUD.0b013e31812f7156.

- Horwitz AR, Ahlstrom JB, Dubno JR. Level-dependent changes in detection of temporal gaps in noise markers by adults with normal and impaired hearing. J Acoust Soc Am. 2011;130:2928–2938. doi: 10.1121/1.3643829.

- Jensen NS, Neher T, Laugesen S, et al. Laboratory and field study of the potential benefits of pinna cue-preserving hearing aids. Trends Hear. 2013;17:171–188. doi: 10.1177/1084713813510977.

- Jin C, Best V, Carlile S, et al. Speech Localization. Audio Engineering Society Convention. 2002:112.

- Keidser G, Dillon H, Carter L, et al. NAL-NL2 empirical adjustments. Trends Amplif. 2012;16:211–223. doi: 10.1177/1084713812468511.

- Keidser G, Rohrseitz K, Dillon H, et al. The effect of multi-channel wide dynamic range compression, noise reduction, and the directional microphone on horizontal localization performance in hearing aid wearers. Int J Audiol. 2006;45:563–579. doi: 10.1080/14992020600920804.

- Killion MC. Revised estimate of minimum audible pressure: where is the "missing 6 dB"? J Acoust Soc Am. 1978;63:1501–1508. doi: 10.1121/1.381844.

- Levy SC, Freed DJ, Puria S. Characterization of the available feedback gain margin at two device microphone locations, in the fossa triangularis and behind the ear, for the light-based contact hearing device. J Acoust Soc Am. 2013;134:4062.

- Lewis JD, McCreery RW, Neely ST, et al. Comparison of in-situ calibration methods for quantifying input to the middle ear. J Acoust Soc Am. 2009;126:3114–3124. doi: 10.1121/1.3243310.

- Masterton B, Heffner H, Ravizza R. The evolution of human hearing. J Acoust Soc Am. 1969;45:966–985. doi: 10.1121/1.1911574.

- Mendel LL. Objective and subjective hearing aid assessment outcomes. Am J Audiol. 2007;16:118–129. doi: 10.1044/1059-0889(2007/016).

- Moore BCJ. Dead regions in the cochlea: conceptual foundations, diagnosis, and clinical applications. Ear Hear. 2004;25:98–116. doi: 10.1097/01.aud.0000120359.49711.d7.

- Moore BCJ, Fullgrabe C, Stone MA. Effect of spatial separation, extended bandwidth, and compression speed on intelligibility in a competing-speech task. J Acoust Soc Am. 2010;128:360–371. doi: 10.1121/1.3436533.

- Moore BCJ, Fullgrabe C, Stone MA. Determination of preferred parameters for multichannel compression using individually fitted simulated hearing aids and paired comparisons. Ear Hear. 2011;32:556–568. doi: 10.1097/AUD.0b013e31820b5f4c.

- Moore BCJ, Peters RW, Stone MA. Benefits of linear amplification and multichannel compression for speech comprehension in backgrounds with spectral and temporal dips. J Acoust Soc Am. 1999;105:400–411. doi: 10.1121/1.424571.

- Moore BCJ, Sek A. Comparison of the CAM2 and NAL-NL2 hearing aid fitting methods. Ear Hear. 2013;34:83–95. doi: 10.1097/AUD.0b013e3182650adf.

- Moore BCJ, Stone MA, Alcántara JI. Comparison of the electroacoustic characteristics of five hearing aids. Br J Audiol. 2001;35:307–325. doi: 10.1080/00305364.2001.11745249.

- Moore BCJ, Stone MA, Fullgrabe C, et al. Spectro-temporal characteristics of speech at high frequencies, and the potential for restoration of audibility to people with mild-to-moderate hearing loss. Ear Hear. 2008;29:907–922. doi: 10.1097/AUD.0b013e31818246f6.

- Moore BCJ, Tan CT. Perceived naturalness of spectrally distorted speech and music. J Acoust Soc Am. 2003;114:408–419. doi: 10.1121/1.1577552.

- Nilsson M, Ghent RM, Bray V, et al. Development of a test environment to evaluate performance of modern hearing aid features. J Am Acad Audiol. 2005;16:27–41. doi: 10.3766/jaaa.16.1.4.

- Nilsson M, Soli SD, Sullivan JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Pittman AL. Short-term word-learning rate in children with normal hearing and children with hearing loss in limited and extended high-frequency bandwidths. J Speech Lang Hear Res. 2008;51:785–797. doi: 10.1044/1092-4388(2008/056).

- Plomp R, Duquesnoy AJ. Room acoustics for the aged. J Acoust Soc Am. 1980;68:1616–1621. doi: 10.1121/1.385216.

- Plyler PN, Fleck EL. The effects of high-frequency amplification on the objective and subjective performance of hearing instrument users with varying degrees of high-frequency hearing loss. J Speech Lang Hear Res. 2006;49:616–627. doi: 10.1044/1092-4388(2006/044).

- Ricketts TA, Dittberner AB, Johnson EE. High-frequency amplification and sound quality in listeners with normal through moderate hearing loss. J Speech Lang Hear Res. 2008;51:160–172. doi: 10.1044/1092-4388(2008/012).

- Robinson JD, Baer T, Moore BCJ. Using transposition to improve consonant discrimination and detection for listeners with severe high-frequency hearing loss. Int J Audiol. 2007;46:293–308. doi: 10.1080/14992020601188591.

- Ruggles D, Shinn-Cunningham B. Spatial selective auditory attention in the presence of reverberant energy: individual differences in normal-hearing listeners. J Assoc Res Otolaryngol. 2011;12:395–405. doi: 10.1007/s10162-010-0254-z.

- Scollie S, Seewald R, Cornelisse L, et al. The Desired Sensation Level multistage input/output algorithm. Trends Amplif. 2005;9:159–197. doi: 10.1177/108471380500900403.

- Souza PE, Arehart KH, Kates JM, et al. Exploring the limits of frequency lowering. J Speech Lang Hear Res. 2013;56:1349–1363. doi: 10.1044/1092-4388(2013/12-0151).

- Stelmachowicz PG, Pittman AL, Hoover BM, et al. Aided perception of /s/ and /z/ by hearing-impaired children. Ear Hear. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007.

- Stelmachowicz PG, Pittman AL, Hoover BM, et al. The importance of high-frequency audibility in the speech and language development of children with hearing loss. Arch Otolaryngol Head Neck Surg. 2004;130:556–562. doi: 10.1001/archotol.130.5.556.

- Stone MA, Moore BCJ. Effect of the speed of a single-channel dynamic range compressor on intelligibility in a competing speech task. J Acoust Soc Am. 2003;114:1023–1034. doi: 10.1121/1.1592160.

- Stone MA, Moore BCJ. Side effects of fast-acting dynamic range compression that affect intelligibility in a competing speech task. J Acoust Soc Am. 2004;116:2311–2323. doi: 10.1121/1.1784447.

- Turner CW, Henry BA. Benefits of amplification for speech recognition in background noise. J Acoust Soc Am. 2002;112:1675–1680. doi: 10.1121/1.1506158.

- Valente M. Hearing Aids: Standards, Options, and Limitations. 2nd ed. New York: Thieme; 2002.

- Vickers DA, Moore BCJ, Baer T. Effects of low-pass filtering on the intelligibility of speech in quiet for people with and without dead regions at high frequencies. J Acoust Soc Am. 2001;110:1164–1175. doi: 10.1121/1.1381534.