Abstract

Nonadherence is a major problem in clinical trials of new medications. To evaluate the extent of nonadherence, this study evaluated pharmacokinetic sampling from 1765 subjects receiving active therapy across eight psychiatric trials conducted between 2001 and 2011. With nonadherence defined as > 50% of plasma samples below the limit of quantification for study drug, the percentage of nonadherent subjects ranged from 12.8% to 39.2%. There was a trend toward increased nonadherence in studies with greater numbers of subjects but an association with nonadherence was not apparent for other study design parameters or subject characteristics. For two trials with multiple recruitment sites in geographical proximity, several subjects attempted to simultaneously enroll at separate site locations. The construct of “professional subjects,” those who enroll in trials only for financial gain, is gaining attention, and we therefore modeled the impact of professional subjects on medication efficacy trials. The results indicate that enrollment of professional subjects who are destined to succeed (those who will appear to achieve treatment success regardless of study drug assignment) can substantially increase both the apparent placebo response rate and the sample size requirement for statistical power, while decreasing the observed effect size. The overlapping nature of nonadherence, professional subjects, and placebo response suggests that these issues should be considered and addressed together. Following this approach, we describe a novel clinical trial design to minimize the adverse effects of professional subjects on trial outcomes, and discuss methods to monitor adherence.

Keywords: medication adherence, professional subjects, reinforcement interventions

INTRODUCTION

Developing new medications is a risky and expensive undertaking, with average costs estimated to exceed $1 billion for each new molecular entity (NME) that receives regulatory approval.1;2 Costs are even higher for central nervous system (CNS) medications, which take longer to develop and often fail after large and expensive efficacy trials.3–5 It is therefore of increasing importance to better understand the reasons for failures and modify the discovery and development process to increase success rates for CNS medication candidates.

Medication nonadherence (synonymous with noncompliance, but less suggestive of coercion6;7) is a common problem in medical practice8 and can preclude the detection of an efficacy signal in clinical trials.9;10 Beyond concerns about nonadherence in “real world patients,” clinical trials have to contend with purposeful nonadherence. Thus, some individuals participate in clinical trials only for financial gain and may have no intention of taking study medication. Referred to henceforth as “professional subjects,” these individuals present a challenging problem. Among a surveyed11 group of repeat clinical trial participants, 25% admitted to exaggerating health problems, and 14% to pretending to have the disorder under study. A subject who feigns illness to gain enrollment and then answers questions truthfully during the course of a medication trial will appear to be a responder, regardless of treatment assignment or rate of adherence. In recent years, apparent placebo response rates have increased in several CNS disorders,12–17 and an increase in professional subjects may be contributing to this phenomenon.15;18 Clearly, professional subjects are not responsible for all cases of medication nonadherence in clinical trials, and rising placebo response rates may reflect additional causes, such as the surreptitious use of exclusionary medications,19 clinician bias to enroll inappropriate subjects for financial gain,13 or changing study designs.20 Nevertheless, the overlapping nature of nonadherence, professional subjects, and placebo response suggests these issues should be considered and addressed together.

First, we review the extent of nonadherence in psychiatry trials conducted by AstraZeneca over a ten-year period (2001–2011) that included assays of NMEs in biological fluids (plasma). Second, through modeling, we describe the impact of professional subjects on outcomes of efficacy trials. Finally, we describe a novel study design that may mitigate the impact of medication nonadherence, apparent placebo responders, and professional subjects on trial outcomes.

METHODS

Evaluation of Medication Nonadherence and Potential Predictors of Nonadherence

A set of 14 studies was identified from the AstraZeneca internal clinical document repository using the following criteria: studies targeting psychiatric indications; phase II or later; reported between 2001 and 2011; and including one or more measurements of drug in plasma or urine. Baseline and pharmacokinetic (PK) subject-level data for non-placebo patients were retrieved for eight of 14 studies. Six studies were excluded because they involved supervised drug administration prior to PK sampling. Subjects were classified as “nonadherent” if more than half their PK samples had a drug concentration below the lower limit of quantification (BLQ). Additionally, adherence was assessed by pill counts.
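The classification rule above can be sketched in a few lines of Python. This is only an illustration of the stated definition (more than half of a subject's PK samples BLQ); the function name, argument names, and the example concentrations are hypothetical and are not taken from the study data.

```python
from typing import Sequence


def is_nonadherent(concentrations: Sequence[float], lloq: float) -> bool:
    """Return True if more than half of the PK samples are below the
    lower limit of quantification (BLQ).

    `concentrations` and `lloq` must be in the same units; both names
    are illustrative, not from the original analysis.
    """
    if not concentrations:
        raise ValueError("at least one PK sample is required")
    n_blq = sum(1 for c in concentrations if c < lloq)
    # Strictly more than half: exactly half BLQ does not count.
    return n_blq > len(concentrations) / 2


# Hypothetical subject with three samples, two of them BLQ.
print(is_nonadherent([0.0, 0.0, 2.0], lloq=0.5))
```

Note that for a study with a single PK sample, this rule reduces to whether that one sample is BLQ.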

Relationships with PK-defined nonadherence were evaluated for 17 study design parameters that varied between studies and were considered potentially related to adherence: number of subjects randomized; diagnosis; age; presence of multiple drug arms; use of active control; add-on or monotherapy experimental therapy; number of tablets; number of tablets to be taken at each dosing time; use of titration; number of drug concentration measurements; length of treatment; number of visits; number of centers; geographic region; enrollment duration; study phase; and planned enrollment per site. These parameters were evaluated by visual inspection using Tibco Spotfire software and by plotting odds-ratios in a tree diagram.

At the subject-level, relationships with nonadherence were evaluated for demographic variables (age, race/ethnicity, gender, diagnosis, and Clinical Global Impression) that could be evaluated across all 8 studies, as well as the results of baseline clinical assessments (Hamilton Anxiety Scale, Montgomery-Asberg Depression Rating Scale, and Hospital Anxiety and Depression Scale), when available. The first set of variables was chosen to represent widely applied characteristics of a study population and the second set to characterize the clinical severity of subjects at baseline. Evaluations were conducted by visual inspection using Tibco Spotfire software and by the random forests data mining approach.21

Modeling the Impact of Professional Subjects on Efficacy Trial Outcomes

In modeling impact on efficacy trials, two types of professional subjects were considered: 1) those who are “destined to succeed” regardless of randomization to placebo or active medication and 2) those who are “destined to fail” regardless of randomization. An example of the first category would be a subject who pretends to be depressed to gain enrollment in an antidepressant trial but then provides truthful answers during assessments after randomization. An example of the second category would be a smoker who enrolls in a smoking cessation trial with no intention of taking study drug or trying to quit.

The modeling studies utilized binary, success/failure analyses. Success rates in legitimate (non-professional) subjects were set at 5% for placebo and 15% for active treatment. In the first modeling study, results were compared for study populations that included 0%, 10% and 20% “destined to succeed” professional subjects (assuming all other subjects were legitimate). In the second modeling study, results were compared for study populations that included 0%, 10% and 20% “destined to fail” professional subjects (assuming all other subjects were legitimate). The following outcomes were evaluated in both modeling studies: 1) apparent success rates; 2) number of subjects required for 80% power (alpha 0.05, two-sided Pearson’s chi squared test); and 3) apparent effect size (odds ratio).
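The arithmetic implied by this description can be sketched as a simple mixture: each arm's apparent success rate is a weighted average of the legitimate-subject rate and the professional-subject rate (1 for “destined to succeed,” 0 for “destined to fail”). The sketch below assumes professional subjects are distributed equally between arms; the function names are illustrative and this is not necessarily the authors' own implementation.

```python
def apparent_rate(legit_rate: float, prof_fraction: float,
                  destined_to_succeed: bool) -> float:
    """Apparent success rate in one arm when a fraction of the arm
    consists of professional subjects with a fixed outcome."""
    prof_rate = 1.0 if destined_to_succeed else 0.0
    return prof_fraction * prof_rate + (1.0 - prof_fraction) * legit_rate


def odds_ratio(p_active: float, p_placebo: float) -> float:
    """Odds ratio of success, active versus placebo."""
    return (p_active / (1.0 - p_active)) / (p_placebo / (1.0 - p_placebo))


# Legitimate-subject success rates taken from the text above.
PLACEBO, ACTIVE = 0.05, 0.15

for succeed in (True, False):
    label = "destined to succeed" if succeed else "destined to fail"
    for frac in (0.0, 0.10, 0.20):
        p_a = apparent_rate(ACTIVE, frac, succeed)
        p_p = apparent_rate(PLACEBO, frac, succeed)
        print(f"{label}, {frac:.0%}: placebo={p_p:.3f} "
              f"active={p_a:.3f} OR={odds_ratio(p_a, p_p):.2f}")
```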

RESULTS

Evaluation of Medication Nonadherence and Potential Predictors of Nonadherence

Table 1 shows the indication, number of subjects who received active treatment, and nonadherence levels based on PK sampling and pill counts for each of the eight studies. At the evaluated doses, the PK assay for each drug was sufficiently sensitive to capture trough plasma levels during Phase I studies with observed dosing; therefore, it is likely that BLQ samples reflected nonadherence rather than poor absorption or rapid elimination. With nonadherence defined as > 50% of PK samples BLQ, the average rate of nonadherence was 23%. Using this same definition of nonadherence and excluding the studies with only one PK measurement, the average rate of nonadherence was very similar, at 19%. On average, 18.5% of subjects had all PK samples BLQ in the eight studies. In contrast, pill counts resulted in very low estimates of nonadherence (ranging from 0.0 to 5.1%).

TABLE 1.

Medication Nonadherence in AstraZeneca Psychiatry Studies, 2001–2011

| Indication | Number of Subjects Receiving Active Treatment | Name of Drug Under Study | ClinicalTrials.gov Identifier (NCT#) | Subjects with any PK Sample BLQ (%) | Subjects with > Half of PK Samples BLQ (%) | Subjects with all PK Samples BLQ (%) | Nonadherence Calculated from Pill Counts (%) |
|---|---|---|---|---|---|---|---|
| MDD | 39 | AZD2066* | NCT01145755 | 12.8 | 12.8 | 2.6 | NC |
| MDD | 91 | AZD7268† | NCT01020799 | 28.6 | 16.5 | 12.1 | 2.9 |
| MDD | 100 | AZD5077† (quetiapine) | NCT00326144 | 26.0¶ | 26.0¶ | 26.0¶ | 2.2 |
| GAD | 169 | AZD7325‡ | NCT00807937 | 33.0 | 22.5 | 16.0 | 2.8 |
| GAD | 309 | AZD7325‡ | NCT00808249 | 33.7 | 21.7 | 13.6 | 5.1 |
| CIAS | 313 | AZD3480§ | NCT00528905 | 34.8 | 20.1 | 15.0 | 4.6 |
| MDD | 331 | AZD5077† (quetiapine) | NCT00320268 | 23.3¶ | 23.3¶ | 23.3¶ | 0.0 |
| GAD | 413 | AZD5077† (quetiapine) | NCT00329264 | 39.2¶ | 39.2¶ | 39.2¶ | NC |

PK indicates Pharmacokinetic; BLQ, Below the Limit of Quantification; MDD, Major Depressive Disorder; GAD, Generalized Anxiety Disorder; CIAS, Cognitive Impairment Associated with Schizophrenia; NC, Not Calculated.

*Limit of Quantification (LQ) = 1.00 nmol/L.

†LQ = 0.5 ng/mL.

‡LQ = 0.05 ng/mL.

§LQ = 0.04 nmol/L.

¶Only one PK sample was obtained in the study.

The only study design parameter that showed a possible relationship to nonadherence was the number of subjects randomized. The studies in Table 1 are sorted by this parameter to facilitate visual evaluation. A trend toward greater nonadherence (based on PK sampling) in studies with greater numbers of subjects can be seen. Evaluation of the relationship between the number of subjects receiving active treatment and the percentage of subjects with > half of PK samples BLQ revealed a Pearson’s correlation coefficient of 0.68 (p = 0.06, uncorrected for multiple comparisons). No pattern was observed for any other study design parameter or any subject-level variable.
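As a check on the correlation reported above, Pearson's r can be recomputed directly from the two relevant columns of Table 1. The sketch below uses only the Python standard library; variable and function names are illustrative. It also prints the corresponding t statistic on n − 2 degrees of freedom, from which a two-sided p-value can be looked up.

```python
import math

# Columns from Table 1: subjects receiving active treatment, and
# percentage of subjects with > half of PK samples BLQ.
n_active = [39, 91, 100, 169, 309, 313, 331, 413]
pct_nonadherent = [12.8, 16.5, 26.0, 22.5, 21.7, 20.1, 23.3, 39.2]


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


r = pearson_r(n_active, pct_nonadherent)
df = len(n_active) - 2
t_stat = r * math.sqrt(df / (1.0 - r ** 2))
print(f"r = {r:.2f}, t = {t_stat:.2f} on {df} degrees of freedom")
```

With only eight studies, the estimate is sensitive to individual points (notably the largest study), which is consistent with the authors' description of the result as a trend.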

Further, review of data from the two AZD7325 generalized anxiety disorder trials revealed that approximately 4% of subjects attempted to enroll in both studies simultaneously, at different locations. These data were not collected for the other studies, which did not have overlapping enrollment periods and sites in close proximity.

Modeling the Impact of Professional Subjects on Efficacy Trial Outcomes

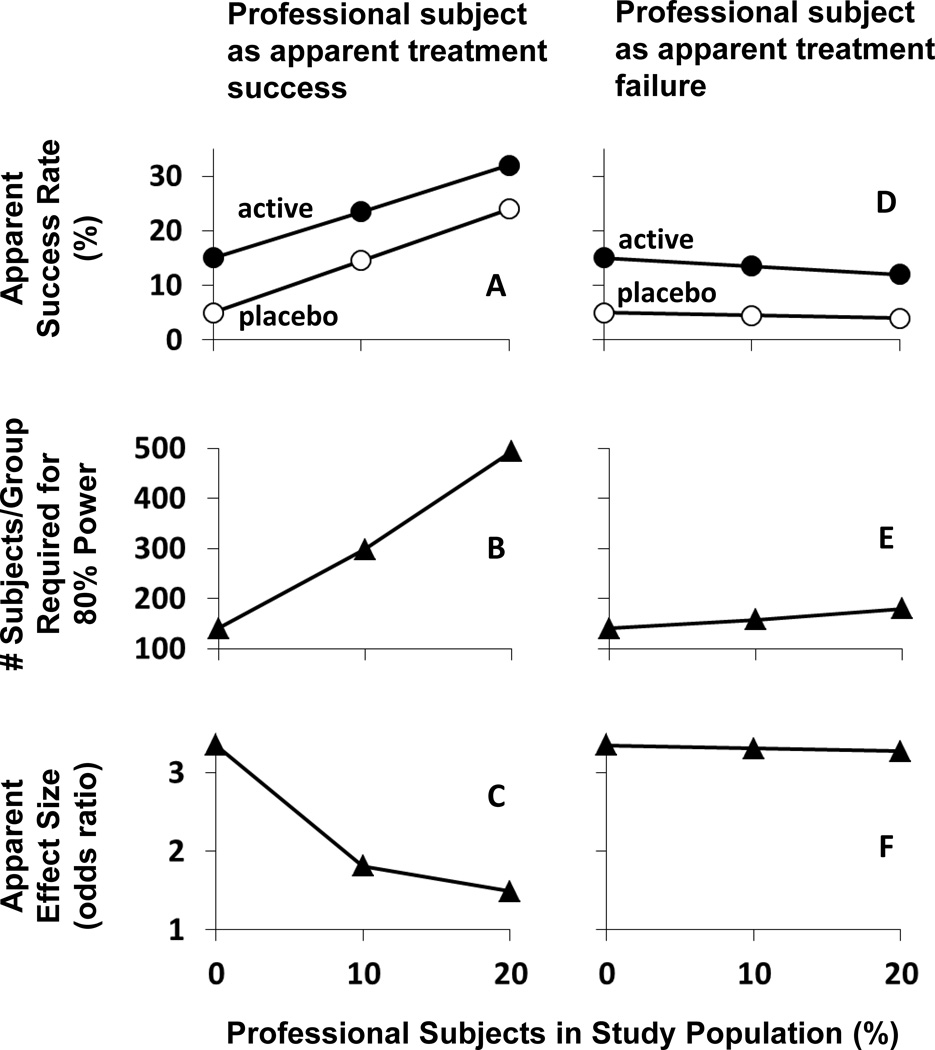

Figure 1 shows the impact of professional subjects who are “destined to succeed” regardless of treatment randomization (panels A–C) or “destined to fail” (panels D–F) on clinical trial outcomes. With no professional subjects in the study population and with success rates set at 5% for placebo and 15% for active treatment, 141 subjects per group were required for 80% power (panels B & E) and the resulting odds ratio was 3.35 (panels C & F). If 10% of the study population was composed of professional subjects who were “destined to succeed,” then 298 subjects per group were required for 80% power (panel B) and the apparent odds ratio was reduced to 1.81 (panel C). If 20% of the study population was composed of professional subjects who were “destined to succeed,” then 494 subjects per group were required for 80% power (panel B) and the apparent odds ratio was further reduced to 1.49.

FIGURE 1.

Impact of professional subjects who appear to achieve treatment success (panels A–C) or treatment failure (panels D–F) on an efficacy trial. A and D: Apparent success rate, with success of legitimate subjects set at 15% for active medication (filled circles) and at 5% for placebo (open circles). B and E: Number of subjects/group required to achieve 80% power in detecting a significant treatment effect (alpha 0.05; 2-sided). C and F: Apparent effect size (odds ratio). In all cases, there is an equal distribution of professional subjects among the placebo and active medication groups.

In contrast, professional subjects who were “destined to fail” had substantially less impact on outcomes when present in similar proportions. For example, when “destined to fail” professional subjects represented 20% of the study population, 180 subjects per group were required for 80% power (panel E) and the effect size was almost unchanged, at 3.27 (panel F).
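The sample-size figures quoted in this section can be approximated with a standard normal-approximation formula for comparing two proportions (pooled variance under the null, no continuity correction). The source states only that a two-sided Pearson's chi squared test with alpha 0.05 and 80% power was used, so the specific formula below is an assumption; function names are illustrative.

```python
import math
from statistics import NormalDist


def n_per_group(p1: float, p2: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Subjects per group for a two-sided test of two proportions
    (normal approximation, equal group sizes)."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2.0
    numerator = (z_alpha * math.sqrt(2.0 * p_bar * (1.0 - p_bar))
                 + z_beta * math.sqrt(p1 * (1.0 - p1) + p2 * (1.0 - p2))) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


def mix(legit_rate: float, frac: float, prof_rate: float) -> float:
    """Apparent rate when a fraction `frac` has fixed outcome `prof_rate`."""
    return frac * prof_rate + (1.0 - frac) * legit_rate


# prof_rate = 1.0: "destined to succeed"; prof_rate = 0.0: "destined to fail".
for prof_rate in (1.0, 0.0):
    for frac in (0.0, 0.10, 0.20):
        n = n_per_group(mix(0.05, frac, prof_rate), mix(0.15, frac, prof_rate))
        print(f"prof_rate={prof_rate:.0f} fraction={frac:.0%}: n/group={n}")
```

Under these assumptions the absolute difference between arms shrinks by the factor (1 − fraction) in both scenarios; the two cases differ because “destined to succeed” subjects push both rates toward 50%, where binomial variance is largest, whereas “destined to fail” subjects push both rates toward zero.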

DISCUSSION

The high rates of nonadherence assessed by measuring drug in plasma samples from these eight clinical trials stand in sharp contrast to a report22 suggesting a high adherence rate (93% of doses taken) in a large set of recent randomized controlled trials. However, this high adherence rate was primarily based on data from pill counts and subject self-report. The present study and past reports10;23;24 indicate that pill counts and self-report greatly overestimate medication adherence relative to PK sampling. Subjects who are financially compensated for their participation in clinical trials may feel compelled to hide medication noncompliance. Furthermore, because clinical trials attract professional subjects who are untruthful about their health information,11;18;25;26 a tendency toward dishonesty may be “enriched” in clinical trial populations. Clearly, these PK data indicate that medication nonadherence is a critical issue that must be addressed to understand the true efficacy of medication candidates.

Although basic statistical considerations would argue that larger clinical trials provide more accurate information about medication efficacy than smaller trials, this may not be true in practice. The argument that statistical power increases as the size of a study population increases is based on the assumption that subject characteristics (e.g., the level of medication adherence) are constant. The data from Table 1 show a trend toward greater medication nonadherence in studies with greater numbers of subjects. Similarly, an evaluation27 of four phase III depression trials with paroxetine showed that the percentage of placebo responders increased in correspondence with the number of subjects enrolled. The fourth quarter of subjects enrolled showed especially high placebo response rates. The authors speculated that “a depleted pool of depressed patients” as well as “differences in the sources of patients over time (referrals versus responders to advertisements)” may contribute to greater placebo response rates in larger trials and over study durations. Because “successful” professional subjects go undetected, they may be responsible for the phenomenon of increased placebo response rates. As the size of a trial increases, sponsors may rely more heavily on advertising, increasing the likelihood that professional subjects will be recruited. Thus, “bigger may not necessarily be better” in clinical trials.

While professional subjects who are “destined to fail” have a marginal impact on statistical power, those who are “destined to succeed” appear to be the greatest threat to medications development. Figure 1 demonstrates that a fairly small percentage of these subjects can have an insurmountable impact on the evaluation of medication efficacy within a study population. This example illustrates that a heroic increase in sample size can restore statistical power (Figure 1, panel B), but even large samples are challenged by a decrease in apparent effect size (Figure 1, panel C). As a consequence, medications that would be highly effective in the intended patient populations could appear unworthy of continued development. If we accept the possibility that increasing numbers of professional subjects have been feigning psychiatric illnesses to gain enrollment in efficacy trials, the implications are clear. We must either improve our ability to identify and exclude such subjects from trials or design trials that mitigate their impact.

Enrichment Strategies

Recent U.S. Food and Drug Administration (FDA) guidance28 states that nonadherent subjects identified after randomization generally cannot be removed from efficacy analyses; however, identifying and selecting patients who are likely to comply with treatment can be an acceptable enrichment strategy prior to randomization. As examples of how this approach has been used to reduce non-drug related variability and increase study power, the guidance cites two studies conducted with marketed medications: 1) a 1960s study29 demonstrating that antihypertensive therapy reduces morbidity and mortality and 2) the 1980s Physician’s Health Study30 demonstrating that aspirin can prevent myocardial infarction. In both studies, adherence was assessed during a placebo run-in period and nonadherent subjects were excluded from randomization.

While the FDA-cited studies29;30 demonstrate the potential benefit of enriching medication adherence within a study population, their approach of dropping nonadherent subjects requires careful consideration. Subjects in both studies received no financial compensation for their time and inconvenience, obviating concerns about professional subjects. In modern-day NME trials, we must consider the potential impact of information contained in Informed Consent Forms (ICFs), as well as word-of-mouth communication between study subjects. If factors such as medication nonadherence and early treatment response result in termination of study participation, it may be impossible to conceal this fact from professional subjects, and they could modify their behavior to remain in the study. There is evidence this may occur in antidepressant trials that drop early placebo responders. In a comparison31 of one-week vs. two-week single-blind placebo run-in periods in antidepressant trials, no difference in placebo response rates was seen at the end of the run-in periods, but placebo response rates increased dramatically after randomization, regardless of whether randomization occurred after one or two weeks. Thus, it appears that subjects may “remain depressed” long enough to ensure continued study participation.

The RAMPUP Study Design

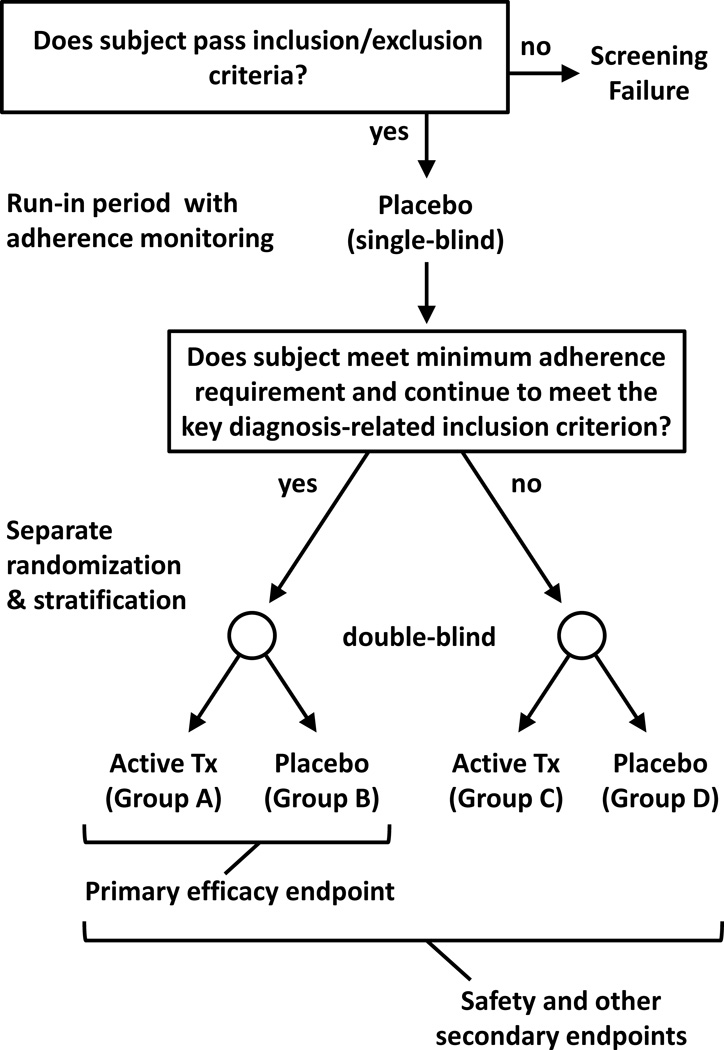

Figure 2 illustrates a study design to mitigate the impact of subjects who are either medication nonadherent or apparent early placebo responders, including professional subjects. Termed the “Run-in with Adherence Monitoring for Prequalification but Undiminished Participation” (RAMPUP) design, a key design feature is that study participation is not terminated for subjects who fail to qualify for the enriched study population. Subjects who meet a specified minimum adherence requirement during the run-in period and maintain stability with regard to key diagnostic criteria “prequalify” for the primary efficacy endpoint comparison; these are the subjects randomized to Groups A and B in Figure 2. Subjects who do not prequalify are retained in the study but are randomized to separate groups, Groups C and D in Figure 2. While only data from Groups A and B are used for the primary efficacy endpoint, data from all subjects are used for safety and secondary efficacy endpoints. The design can also be used with more than two treatment groups. If data required for prequalification are not available at the time of randomization (e.g., due to assay turn-around-time), a single randomization can be conducted and prequalified subjects can be identified during data analysis; however, the assessments or biological samples used for prequalification must correspond to the pre-randomization period.

FIGURE 2.

Illustration of the RAMPUP study design. Subjects meeting study inclusion/exclusion criteria participate in a single-blind placebo (or adherence marker capsule) run-in period with adherence monitoring, followed by reassessment of a key diagnosis-related inclusion criterion (e.g., HAM-D score). Subjects who meet a specified minimum adherence requirement during the run-in period and continue to meet the inclusion criterion are prequalified for the primary efficacy endpoint (these subjects are randomized to Groups A & B in the illustration). An important feature of the study design is that all subjects continue to participate, even those who do not prequalify for the primary efficacy endpoint (these subjects are randomized to Groups C & D in the illustration). Data from all subjects are used for safety evaluations and other secondary endpoints.

Retention of medication nonadherent subjects and/or apparent early placebo responders after the run-in period could be beneficial in several ways. First, professional subjects may be more likely to demonstrate medication nonadherence and/or early placebo response during the run-in period if there is no prospect of early study termination for these behaviors. Second, evaluation of adverse events in both adherent and nonadherent subjects may generate a safety profile that is more relevant to “real world” patients. Third, retaining all subjects is responsive to corporate pressure for speed in the process of medications development because safety data from all subjects will contribute to establishing the required safety package for regulatory approval. Finally, to evaluate the merits of any enrichment strategy, it is critically important to evaluate efficacy in subjects who fail to qualify for the enriched population. If the RAMPUP design is beneficial, then greater statistical power and a greater effect size should be apparent in the enriched population. While the theoretical basis of the RAMPUP design is strong and multiple CNS disorders may benefit from its application, its merits must be tested in each patient population. If a specific enrichment strategy does not selectively shift nonadherent subjects and early responders away from the efficacy evaluation, then use of the RAMPUP design could decrease statistical power.

Because the RAMPUP design is being proposed for efficacy trials in multiple CNS disorders, Figure 2 is necessarily vague in some areas. To provide a specific example, an NME smoking cessation study, jointly planned by the National Institute on Drug Abuse (NIDA) and AstraZeneca (AZ), is outlined. Subjects who pass all inclusion and exclusion criteria will participate in a one-week, single-blind run-in period. If medication adherence is inadequate, as evidenced by Medication Event Monitoring System (MEMS) cap openings, or if a subject produces an exhaled CO reading that is < 10 ppm during the run-in period, the subject will not qualify for the primary efficacy evaluation but will continue in the study. A novel reinforcement intervention to improve the accuracy of adherence monitoring will be utilized, as outlined below. The duration of the placebo run-in period is set at one week because of concerns that smokers who are highly motivated to quit would not tolerate long delays before the quit date. For other indications, longer run-in periods may be desirable.
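The prequalification logic of this example can be sketched as follows. The 10 ppm exhaled CO threshold comes from the text; the minimum number of adherent days (`min_adherent_days`) and the rule of counting a day as adherent when the MEMS cap was opened at least once are hypothetical placeholders, since the text does not specify the adherence requirement.

```python
from typing import Sequence

CO_THRESHOLD_PPM = 10.0  # from the text: any reading < 10 ppm disqualifies


def prequalifies(mems_openings_per_day: Sequence[int],
                 co_readings_ppm: Sequence[float],
                 min_adherent_days: int = 6) -> bool:
    """Decide whether a subject enters the enriched population.

    `min_adherent_days` is a hypothetical threshold, not from the source.
    """
    adherent_days = sum(1 for n in mems_openings_per_day if n >= 1)
    adherence_ok = adherent_days >= min_adherent_days
    co_ok = all(co >= CO_THRESHOLD_PPM for co in co_readings_ppm)
    return adherence_ok and co_ok


def randomization_stratum(prequalified: bool) -> str:
    """All subjects are randomized; only the stratum differs."""
    if prequalified:
        return "Groups A/B (primary efficacy endpoint)"
    return "Groups C/D (retained for safety and secondary endpoints)"


# Hypothetical subject: cap opened daily, but one low CO reading.
status = prequalifies([1, 1, 1, 1, 1, 1, 1], [18.0, 8.0])
print(randomization_stratum(status))
```

The point the sketch makes explicit is that failing prequalification changes the randomization stratum, not whether the subject continues in the study.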

Methods of Monitoring Adherence

Selection of a method of adherence monitoring for use with the RAMPUP design requires careful consideration. All methods have some advantages and drawbacks.

Self-report and pill counts

These appear to be the least reliable methods for collecting adherence data. Self-reports are subject to social desirability as well as recall and response biases, and their reliability and validity can vary depending on the sample, disease, medication and study demand characteristics, as well as the format of the self-report inventory and time frame of assessment.32–39 Among the various techniques for collecting self-report data, completion of daily written or electronic diaries generates the most detailed and reliable data.40 Interactive Voice Response (IVR) systems can also phone participants immediately after dosing windows and inquire about recent medication ingestion.41 While requiring completion of daily diaries or IVR surveys may improve the accuracy of self-report data from legitimate subjects, professional subjects may be untruthful about their adherence and discard study medication to create false records.

Medication Packaging/Organizers with Memory Chips

At least a dozen products track medication adherence electronically with memory chips imbedded in bottle caps, medication organizers, or blister packs.42 The most extensively used product is the MEMS cap. The cap records the date and time of each opening, and if used appropriately by subjects, it produces a precise record of medication dosing. However, if a subject takes too many (or no) pills when the cap is opened or removes pills and takes them later, a false record of dosing is created.

Direct Observation, Photo- or Video-Assisted Observation, and Automated Direct Observation

Although direct observation of dosing may yield the most valid adherence data, it is neither convenient nor feasible for most dosing regimens. In lieu of direct observation, researchers have instructed study participants to use camera/video-capable cell phones to transmit images of capsules before ingestion43 or to record and send videos of ingestion.44 Clearly, subjects could photograph capsules and then discard them. In video recordings, subjects could put capsules or tablets in their mouths without swallowing them or consume non-study products (e.g., vitamins). To guard against these possibilities, a recent study45 with video-assisted observation required a close-up view of the pill itself, a full-face video of ingestion, and finally a shot of an empty mouth. Still, the method is not infallible, and drawbacks include the time required for staff to view and validate video recordings, as well as subject burden. In a similar vein, Ai Cure Technologies, Inc. (New York, NY) has developed interactive software that utilizes the camera in a handheld electronic device to allow automated direct observation. The software can be customized for facial and medication recognition, reminds subjects of dosing times, recognizes medication ingestion, and transmits data to a secure database that investigators can access. This technology appears to reduce burden on research staff to review videos, but the burden on subjects to record and send videos remains.

PK sampling for NMEs and adherence markers

While determination of NME concentrations in plasma or urine can provide a direct measure of adherence for subjects receiving active medication, no useful information is obtained for subjects receiving placebo. To assess adherence in all subjects by PK sampling, compounds may be added to capsules or tablets as adherence markers. Examples include riboflavin46;47 and potassium bromide,48 as well as subtherapeutic doses of medications, such as barbiturates,49;50 digoxin,51 quinine,52 and acetazolamide (15 mg dose; Hampson et al., poster presentation at the 2014 Annual Meeting of the College on Problems of Drug Dependence). A drawback to monitoring adherence through plasma or urine sampling is that only snapshots of adherence are captured, corresponding to times when samples can be collected. Additionally, the coformulation of adherence markers and NMEs may present complex challenges.

Xhale, Inc. (Gainesville, FL) has developed a device to monitor adherence with a breath test by incorporating 2-butanol into capsules as an adherence marker.53 After ingestion, 2-butanol is metabolized to 2-butanone, which is eliminated through the lungs and detected by the device. Subjects are instructed to breathe into the device immediately before dosing and again about 20 minutes later. Data can be transmitted via the Internet or stored in the device, which records a picture of the subject with each exhalation to allow identity verification. While this approach allows remote collection of adherence data, it also places a substantial burden on subjects to document dosing.

Ingestible Sensors

Proteus Digital Health, Inc. (Redwood City, CA) and eTect, Inc. (Newberry, FL) developed ingestible sensors that can be added to placebo and/or NME capsule formulations. The sensors transmit electronic signals after activation by stomach acid, and the signals are detected by adhesive sensors worn on the skin. For the Proteus product, signals are relayed through a subject’s smartphone or tablet computer.54–56 The eTect system is similar in principle, but it does not utilize a handheld electronic device; instead, signals from the ingestible sensors are transmitted directly to digital networks from a watch-like patch worn by subjects. Both products hold promise for improving adherence monitoring in clinical trials, and especially for preventing professional subjects from creating false records of adherence. On the other hand, the burden of constantly wearing a patch (and, in the case of the Proteus product, the burden of using a handheld device) may not be acceptable to all subjects. This could lead to slower enrollment, increased dropout rates, and/or missing data. Without subject cooperation, these products will not create an accurate record of dosing.

Reinforcement Interventions to Improve Adherence Monitoring

Reinforcement interventions incorporate principles of behavioral psychology and behavioral economics. Simply put, a behavior that is reinforced will increase in frequency,57 and these interventions are highly successful in improving patient behaviors.58–61 While direct reinforcement of medication ingestion may be acceptable for medications with established efficacy and well-documented safety profiles,62 the tactic of directly rewarding ingestion of NMEs during efficacy trials would be viewed as coercive. Subjects must feel free to report side effects and discontinue study medication.

In NME trials, reinforcement interventions may prove especially useful to improve subject cooperation with adherence monitoring, using any of the approaches above. If subjects are rewarded for their cooperation regardless of the level of medication adherence, then accuracy should increase. This is an important feature of the planned NIDA/AZ smoking cessation study mentioned above. In this study, subjects will use MEMS caps to record dosing, and will be instructed to take a 15 mg acetazolamide (adherence marker) capsule once daily during the one-week, single-blind run-in period. On Study Day 8, a plasma sample will be taken for determination of acetazolamide concentration. Subjects will receive a cash reward if there is no discrepancy between the record of dosing based on MEMS cap openings and assay results. For example, if a subject opens the MEMS cap once each day, returns an empty bottle on Study Day 8, and has a plasma acetazolamide concentration that is consistent with daily dosing, the subject will receive the cash reward. At the other extreme, if a subject returns a full, unopened bottle on Study Day 8 and has no detectable plasma acetazolamide, the subject will also receive the cash reward. Equally important, a subject who does not take medication and creates a false record of dosing by opening the MEMS cap and discarding capsules will not receive the reward. Similar reinforcement interventions could be designed to increase subject cooperation with other types of adherence monitoring. For example, in studies using ingestible sensors, rewards could be provided to subjects for always wearing the required skin patches and for returning the appropriate number of untaken capsules based on the record of ingestion events.
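The reward rule described above pays for agreement between the dosing record and the assay, not for dosing itself. A deliberately simplified sketch follows: it reduces both sources to a yes/no signal, whereas the planned study compares the record against a plasma concentration “consistent with daily dosing.” All names and the binary simplification are assumptions made for illustration.

```python
def record_indicates_dosing(mems_openings: int,
                            capsules_dispensed: int,
                            capsules_returned: int) -> bool:
    """True if the MEMS record and returned bottle suggest capsules
    were taken (simplified to any opening with capsules missing)."""
    return mems_openings > 0 and capsules_returned < capsules_dispensed


def earns_reward(mems_openings: int, capsules_dispensed: int,
                 capsules_returned: int, marker_detected: bool) -> bool:
    """Reward concordance between the dosing record and the plasma
    adherence-marker result, regardless of adherence level."""
    claimed = record_indicates_dosing(
        mems_openings, capsules_dispensed, capsules_returned)
    return claimed == marker_detected


# The three cases described in the text, with hypothetical counts.
print(earns_reward(7, 7, 0, marker_detected=True))    # adherent, honest record
print(earns_reward(0, 7, 7, marker_detected=False))   # nonadherent, honest record
print(earns_reward(7, 7, 0, marker_detected=False))   # false record of dosing
```

A real implementation would need a quantitative criterion for what marker concentration is consistent with the recorded number of doses, which the text does not specify.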

Professional subjects, who are more financially motivated than legitimate subjects, should be especially responsive to reward interventions. As long as they feel comfortable that honesty about their level of medication adherence (or nonadherence) will not lead to reprimand or negative financial consequences, a high level of cooperation should result.

CONCLUSIONS

Improving success rates for CNS medication candidates requires a multifaceted approach. Medication nonadherence appears to contribute to trial failure and is not well addressed by current trial designs. The RAMPUP design should improve the quality of subjects who participate in efficacy evaluations, but it is not a panacea. Other steps must be taken to improve outcomes. We cannot ignore the issue of medication nonadherence in legitimate subjects, who may be forgetful or experience lapses in motivation to take study medications. Regardless of the selected clinical trial design, subjects should be coached, from the day of randomization through the end of treatment, about the importance of taking study medication. In addition, when weighing the merits of various recruitment methods, we must carefully consider the relative likelihood that professional subjects will be attracted to screening. To the extent that the use of advertising can be minimized in favor of outreach to patients identified during clinic visits or through referral by non-investigator healthcare providers, the number of professional subjects entering screening may be greatly reduced. Avoiding excessive financial compensation may be somewhat helpful, but we must keep in mind that any level of compensation may be attractive to unemployed professional subjects. Finally, an improved ability to identify professional subjects during screening and prevent their enrollment in clinical trials is critical. To this end, clinical trial subject registries may be useful.63–65 Ideally, an international registry or a set of registries covering large geographical areas (e.g., the U.S. and the E.U.) could help researchers identify professional subjects who attempt simultaneous study enrollment. In the previously mentioned study of repeat clinical trial subjects,11 those who admitted to using deception to gain study enrollment reported participating in an average of 12.8 studies during the previous 12-month period! This rate of study enrollment suggests that professional subjects often participate in concurrent studies without detection, a practice that increases the risk of adverse drug interactions and could lead to incorrect characterization of NME safety profiles.63;64 Thus, a broadly adopted subject registry would serve to improve both subject safety and study integrity.

In summary, we hypothesize that the RAMPUP study design will lessen the impact of professional subjects, medication nonadherence, and apparent early placebo responders on efficacy trial outcomes. In addition, we encourage efforts to prevent the enrollment of inappropriate subjects in clinical trials and to increase medication adherence. Through this combined approach, we are hopeful that success rates will improve for CNS medication candidates.

Acknowledgments

The authors thank Phil Skolnick (NIDA) and Earle Bain (AbbVie) for useful discussions during data analysis and manuscript preparation.

Source of Funding: David J. McCann is an employee of the National Institutes of Health. Nancy M. Petry is an employee of the University of Connecticut. Robert C. Alexander is an employee of AstraZeneca. Anders Bresell, Eva Isacsson, and Ellis Wilson were employees of AstraZeneca at the time of this study. AstraZeneca sponsored all clinical trials analyzed in this study.

Footnotes

Conflicts of Interest: The authors declare no other conflicts of interest.

Reference List

- 1. Adams CP, Brantner VV. Spending on new drug development. Health Econ. 2010;19:130–141. doi: 10.1002/hec.1454.

- 2. Paul SM, Mytelka DS, Dunwiddie CT, et al. How to improve R&D productivity: the pharmaceutical industry's grand challenge. Nat Rev Drug Discov. 2010;9:203–214. doi: 10.1038/nrd3078.

- 3. Kaitin KI, Milne CP. A dearth of new meds. Sci Am. 2011;305:16. doi: 10.1038/scientificamerican0811-16.

- 4. DiMasi JA, Feldman L, Seckler A, et al. Trends in risks associated with new drug development: success rates for investigational drugs. Clin Pharmacol Ther. 2010;87:272–277. doi: 10.1038/clpt.2009.295.

- 5. Adams CP, Brantner VV. Estimating the cost of new drug development: is it really 802 million dollars? Health Aff. 2006;25(2):420–428. doi: 10.1377/hlthaff.25.2.420.

- 6. Barofsky I. Compliance, adherence and the therapeutic alliance: steps in the development of self-care. Soc Sci Med. 1978;12:369–376.

- 7. Romano PE. Semantic follow-up: adherence is a better term than compliance is a better term than cooperation. Arch Ophthalmol. 1988;106:450. doi: 10.1001/archopht.1988.01060130492013.

- 8. Cutler DM, Everett W. Thinking outside the pillbox--medication adherence as a priority for health care reform. N Engl J Med. 2010;362:1553–1555. doi: 10.1056/NEJMp1002305.

- 9. Haynes RB, Dantes R. Patient compliance and the conduct and interpretation of therapeutic trials. Control Clin Trials. 1987;8:12–19. doi: 10.1016/0197-2456(87)90021-3.

- 10. Czobor P, Skolnick P. The secrets of a successful clinical trial: compliance, compliance, and compliance. Mol Interv. 2011;11:107–110. doi: 10.1124/mi.11.2.8.

- 11. Devine EG, Waters ME, Putnam M, et al. Concealment and fabrication by experienced research subjects. Clin Trials. 2013;10:935–948. doi: 10.1177/1740774513492917.

- 12. Walsh BT, Seidman SN, Sysko R, et al. Placebo response in studies of major depression: variable, substantial, and growing. JAMA. 2002;287:1840–1847. doi: 10.1001/jama.287.14.1840.

- 13. Fava M, Evins AE, Dorer DJ, et al. The problem of the placebo response in clinical trials for psychiatric disorders: culprits, possible remedies, and a novel study design approach. Psychother Psychosom. 2003;72:115–127. doi: 10.1159/000069738.

- 14. Chen YF, Wang SJ, Khin NA, et al. Trial design issues and treatment effect modeling in multi-regional schizophrenia trials. Pharm Stat. 2010;9:217–229. doi: 10.1002/pst.439.

- 15. Kemp AS, Schooler NR, Kalali AH, et al. What is causing the reduced drug-placebo difference in recent schizophrenia clinical trials and what can be done about it? Schizophr Bull. 2010;36:504–509. doi: 10.1093/schbul/sbn110.

- 16. Khin NA, Chen YF, Yang Y, et al. Exploratory analyses of efficacy data from major depressive disorder trials submitted to the US Food and Drug Administration in support of new drug applications. J Clin Psychiatry. 2011;72:464–472. doi: 10.4088/JCP.10m06191.

- 17. Khin NA, Chen YF, Yang Y, et al. Exploratory analyses of efficacy data from schizophrenia trials in support of new drug applications submitted to the US Food and Drug Administration. J Clin Psychiatry. 2012;73:856–864. doi: 10.4088/JCP.11r07539.

- 18. Shiovitz TM, Zarrow ME, Shiovitz AM, et al. Failure rate and "professional subjects" in clinical trials of major depressive disorder. J Clin Psychiatry. 2011;72:1284–1285. doi: 10.4088/JCP.11lr07229.

- 19. Greenblatt DJ, Harmatz JS, Shader RI. Plasma alprazolam concentrations. Relation to efficacy and side effects in the treatment of panic disorder. Arch Gen Psychiatry. 1993;50:715–722. doi: 10.1001/archpsyc.1993.01820210049006.

- 20. Khan A, Bhat A, Kolts R, Thase ME, Brown W. Why has the antidepressant-placebo difference in antidepressant clinical trials diminished over the past three decades? CNS Neurosci Ther. 2010;16:217–226. doi: 10.1111/j.1755-5949.2010.00151.x.

- 21. Breiman L. Random Forests. Machine Learning. 2001;45:5–32.

- 22. Gossec L, Tubach F, Dougados M, et al. Reporting of adherence to medication in recent randomized controlled trials of 6 chronic diseases: a systematic literature review. Am J Med Sci. 2007;334:248–254. doi: 10.1097/MAJ.0b013e318068dde8.

- 23. Anderson AL, Li SH, Biswas K, et al. Modafinil for the treatment of methamphetamine dependence. Drug Alcohol Depend. 2012;120:135–141. doi: 10.1016/j.drugalcdep.2011.07.007.

- 24. Somoza EC, Winship D, Gorodetzky CW, et al. A multisite, double-blind, placebo-controlled clinical trial to evaluate the safety and efficacy of vigabatrin for treating cocaine dependence. JAMA Psychiatry. 2013;70:630–637. doi: 10.1001/jamapsychiatry.2013.872.

- 25. Tishler CL, Bartholomae S. Repeat participation among normal healthy research volunteers: professional guinea pigs in clinical trials? Perspect Biol Med. 2003;46:508–520. doi: 10.1353/pbm.2003.0094.

- 26. Apseloff G, Swayne JK, Gerber N. Medical histories may be unreliable in screening volunteers for clinical trials. Clin Pharmacol Ther. 1996;60:353–356. doi: 10.1016/S0009-9236(96)90063-6.

- 27. Liu KS, Snavely DB, Ball WA, et al. Is bigger better for depression trials? J Psychiatr Res. 2008;42:622–630. doi: 10.1016/j.jpsychires.2007.07.003.

- 28. US Department of Health and Human Services Food and Drug Administration. Guidance for industry: enrichment strategies for clinical trials to support approval of human drugs and biological products [FDA Web site]. December 2012. Available at: http://www.fda.gov/downloads/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/UCM332181.pdf. Accessed April 1, 2015.

- 29. Veterans Administration Cooperative Study Group on Antihypertensive Agents. Effects of treatment on morbidity in hypertension. Results in patients with diastolic blood pressures averaging 115 through 129 mm Hg. JAMA. 1967;202:1028–1034.

- 30. Young FE, Nightingale SL, Temple RA. The preliminary report of the findings of the aspirin component of the ongoing Physicians' Health Study. The FDA perspective on aspirin for the primary prevention of myocardial infarction. JAMA. 1988;259:3158–3160.

- 31. Faries DE, Heiligenstein JH, Tollefson GD, et al. The double-blind variable placebo lead-in period: results from two antidepressant clinical trials. J Clin Psychopharmacol. 2001;21:561–568. doi: 10.1097/00004714-200112000-00004.

- 32. AlGhurair SA, Hughes CA, Simpson SH, et al. A Systematic Review of Patient Self-Reported Barriers of Adherence to Antihypertensive Medications Using the World Health Organization Multidimensional Adherence Model. J Clin Hypertens. 2012;14(12):877–886. doi: 10.1111/j.1751-7176.2012.00699.x.

- 33. Cook CL, Wade WE, Martin BC, et al. Concordance among three self-reported measures of medication adherence and pharmacy refill records. J Am Pharm Assoc. 2005;45(2):151–159. doi: 10.1331/1544345053623573.

- 34. DiMatteo MR. Variations in patients' adherence to medical recommendations: a quantitative review of 50 years of research. Med Care. 2004;42:200–209. doi: 10.1097/01.mlr.0000114908.90348.f9.

- 35. Doro P, Benko R, Czako A, et al. Optimal recall period in assessing the adherence to antihypertensive therapy: a pilot study. Int J Clin Pharm. 2011;33:690–695. doi: 10.1007/s11096-011-9529-7.

- 36. Farmer KC. Methods for measuring and monitoring medication regimen adherence in clinical trials and clinical practice. Clin Ther. 1999;21:1074–1090. doi: 10.1016/S0149-2918(99)80026-5.

- 37. Garber MC, Nau DP, Erickson SR, et al. The concordance of self-report with other measures of medication adherence: a summary of the literature. Med Care. 2004;42:649–652. doi: 10.1097/01.mlr.0000129496.05898.02.

- 38. Simoni JM, Kurth AE, Pearson CR, et al. Self-report measures of antiretroviral therapy adherence: A review with recommendations for HIV research and clinical management. AIDS Behav. 2006;10:227–245. doi: 10.1007/s10461-006-9078-6.

- 39. Wang PS, Benner JS, Glynn RJ, et al. How well do patients report noncompliance with antihypertensive medications?: a comparison of self-report versus filled prescriptions. Pharmacoepidemiol Drug Saf. 2004;13:11–19. doi: 10.1002/pds.819.

- 40. Quittner AL, Modi AC, Lemanek KL, et al. Evidence-based assessment of adherence to medical treatments in pediatric psychology. J Pediatr Psychol. 2008;33:916–936. doi: 10.1093/jpepsy/jsm064.

- 41. Tucker JA, Simpson CA, Huang J, et al. Utility of an interactive voice response system to assess antiretroviral pharmacotherapy adherence among substance users living with HIV/AIDS in the rural South. AIDS Patient Care STDS. 2013;27:280–286. doi: 10.1089/apc.2012.0322.

- 42. Park LG, Howie-Esquivel J, Dracup K. Electronic Measurement of Medication Adherence. West J Nurs Res. 2015;37:28–49. doi: 10.1177/0193945914524492.

- 43. Galloway GP, Coyle JR, Guillen JE, et al. A simple, novel method for assessing medication adherence: capsule photographs taken with cellular telephones. J Addict Med. 2011;5:170–174. doi: 10.1097/ADM.0b013e3181fcb5fd.

- 44. Hoffman JA, Cunningham JR, Suleh AJ, et al. Mobile direct observation treatment for tuberculosis patients: a technical feasibility pilot using mobile phones in Nairobi, Kenya. Am J Prev Med. 2010;39:78–80. doi: 10.1016/j.amepre.2010.02.018.

- 45. Petry NM, Alessi SM, Byrne S, et al. Reinforcing adherence to antihypertensive medications. J Clin Hypertens. 2015;17(1):33–38. doi: 10.1111/jch.12441.

- 46. Deuschle KW, Jordahl C, Hobby GL. Clinical usefulness of riboflavin-tagged isoniazid for self-medication in tuberculous patients. Am Rev Respir Dis. 1960;82:1–10. doi: 10.1164/arrd.1960.82.1.1.

- 47. Fuller R, Roth H, Long S. Compliance with disulfiram treatment of alcoholism. J Chronic Dis. 1983;36:161–170. doi: 10.1016/0021-9681(83)90090-5.

- 48. Braam RL, van Uum SH, Lenders JW, et al. Bromide as marker for drug adherence in hypertensive patients. Br J Clin Pharmacol. 2008;65:733–736. doi: 10.1111/j.1365-2125.2007.03068.x.

- 49. Feely M, Cooke J, Price D, et al. Low-dose phenobarbitone as an indicator of compliance with drug therapy. Br J Clin Pharmacol. 1987;24:77–83. doi: 10.1111/j.1365-2125.1987.tb03139.x.

- 50. Karbwang J, Fungladda W, Pickard CE, et al. Initial evaluation of low-dose phenobarbital as an indicator of compliance with antimalarial drug treatment. Bull World Health Organ. 1998;76(Suppl 1):67–73.

- 51. Maenpaa H, Javela K, Pikkarainen J, et al. Minimal doses of digoxin: a new marker for compliance to medication. Eur Heart J. 1987;8(Suppl I):31–37. doi: 10.1093/eurheartj/8.suppl_i.31.

- 52. Geisler A, Thomsen K. Drug defaulting estimated by the use of a drug marker. Curr Med Res Opin. 1973;1:596–602. doi: 10.1185/03007997309111727.

- 53. Morey TE, Booth M, Wasdo S, et al. Oral adherence monitoring using a breath test to supplement highly active antiretroviral therapy. AIDS Behav. 2013;17:298–306. doi: 10.1007/s10461-012-0318-7.

- 54. Au-Yeung KY, Moon GD, Robertson TL, et al. Early clinical experience with networked system for promoting patient self-management. Am J Manag Care. 2011;17:e277–e287.

- 55. Kane JM, Perlis RH, Dicarlo LA, et al. First experience with a wireless system incorporating physiologic assessments and direct confirmation of digital tablet ingestions in ambulatory patients with schizophrenia or bipolar disorder. J Clin Psychiatry. 2013;74:e533–e540. doi: 10.4088/JCP.12m08222.

- 56. Eisenberger U, Wuthrich RP, Bock A, et al. Medication adherence assessment: high accuracy of the new Ingestible Sensor System in kidney transplants. Transplantation. 2013;96:245–250. doi: 10.1097/TP.0b013e31829b7571.

- 57. Ferster CB, Skinner BF. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- 58. Volpp KG, Troxel AB, Pauly MV, et al. A randomized, controlled trial of financial incentives for smoking cessation. N Engl J Med. 2009;360:699–709. doi: 10.1056/NEJMsa0806819.

- 59. Volpp KG, John LK, Troxel AB, et al. Financial incentive-based approaches for weight loss: a randomized trial. JAMA. 2008;300:2631–2637. doi: 10.1001/jama.2008.804.

- 60. Petry NM, Andrade LF, Barry D, et al. A randomized study of reinforcing ambulatory exercise in older adults. Psychol Aging. 2013;28:1164–1173. doi: 10.1037/a0032563.

- 61. Petry NM, Cengiz E, Wagner JA, et al. Incentivizing behaviour change to improve diabetes care. Diabetes Obes Metab. 2013;15:1071–1076. doi: 10.1111/dom.12111.

- 62. Petry NM, Rash CJ, Byrne S, et al. Financial reinforcers for improving medication adherence: findings from a meta-analysis. Am J Med. 2012;125:888–896. doi: 10.1016/j.amjmed.2012.01.003.

- 63. Boyar D, Goldfarb NM. Preventing overlapping enrollment in clinical studies. Journal of Clinical Research Best Practices. 2010;6:1–4.

- 64. Resnik DB, Koski G. A national registry for healthy volunteers in phase 1 clinical trials. JAMA. 2011;305:1236–1237. doi: 10.1001/jama.2011.354.

- 65. Shiovitz TM, Wilcox CS, Gevorgyan L, et al. CNS sites cooperate to detect duplicate subjects with a clinical trial subject registry. Innov Clin Neurosci. 2013;10:17–21.