Abstract

Small area estimation is a statistical technique used to produce reliable estimates for smaller geographic areas than those for which the original surveys were designed. Such small area estimates (SAEs) often lack rigorous external validation. In this study, we validated our multilevel regression and poststratification SAEs from 2011 Behavioral Risk Factor Surveillance System data using direct estimates from 2011 Missouri County-Level Study and American Community Survey data at both the state and county levels. Coefficients for correlation between model-based SAEs and Missouri County-Level Study direct estimates for 115 counties in Missouri were all significantly positive (0.28 for obesity and no health-care coverage, 0.40 for current smoking, 0.51 for diabetes, and 0.69 for chronic obstructive pulmonary disease). Coefficients for correlation between model-based SAEs and American Community Survey direct estimates of no health-care coverage were 0.85 at the county level (811 counties) and 0.95 at the state level. Unweighted and weighted model-based SAEs were compared with direct estimates; unweighted models performed better. External validation results suggest that multilevel regression and poststratification model-based SAEs using single-year Behavioral Risk Factor Surveillance System data are valid and could be used to characterize geographic variations in health indicators at local levels (such as counties) when high-quality local survey data are not available.

Keywords: American Community Survey, Behavioral Risk Factor Surveillance System, external validation, Missouri County-Level Study, multilevel regression and poststratification, small area estimation

Small area estimation is a statistical technique used to produce statistically reliable estimates for smaller geographic areas than those for which the original surveys were designed (1). Typically, “small areas” means small geographic areas, such as counties or subcounty areas in the United States. For national health surveys, these small areas usually have such small sample sizes that direct estimates have large variances and are not reliable. Often, many of these small areas have no sampled respondents at all. Substantial variations in population health outcomes have been observed at local geographic levels, such as neighborhoods (census tracts) (2, 3), zip codes (4), cities (5), and counties (6, 7). Thus, small area estimates (SAEs) of population health conditions and behaviors at local levels are critical for informing local health policy-makers, improving community-based public health program planning and intervention strategy development, and facilitating public health resource allocation and delivery.

In order to meet the growing need for local-level data in public health practice, a variety of small area estimation methods, especially model-based methods, have been applied to produce SAEs using data from US national health surveys, such as the National Health and Nutrition Examination Survey (8), the National Health Interview Survey (9, 10), and the National Survey of Children’s Health (11). The Behavioral Risk Factor Surveillance System (BRFSS) has been a major data source that has been used to produce model-based SAEs at the levels of the county (12–26), zip code (27–30), and census tract (31).

In general, these model-based SAEs are generated under the assumption that small area models constructed from survey sample data are applicable to the entire target population of interest (32). This strong assumption needs to be evaluated, particularly by examining model results, to confirm the validity of model-based SAEs. Validation of model-based SAEs includes 1) internal validation to evaluate their consistency with direct estimates from the surveys from which the SAEs are derived and 2) external validation to evaluate their consistency with reliable external measurements from other local surveys or administrative data, such as a census. Most small area estimation studies have used internal validation, while only a few investigators have conducted rigorous external validation (10, 13, 33). External validation has been difficult to carry out because 1) few local health surveys or administrative data sources were originally designed to generate county-level or subcounty-level health indicators and 2) US Census data, which include rich information on small area demographic and socioeconomic factors, usually lack relevant population health measures.

We recently developed a multilevel regression and poststratification (MRP) approach for estimating the prevalence of chronic obstructive pulmonary disease (COPD) at the levels of the census block, census tract, congressional district, and county using 2011 BRFSS data (31). Our internal validation confirmed strong consistency between our model-based SAEs and BRFSS direct estimates at both the state and county levels (31). However, as in most previous reports of SAEs, we did not have an external data source with which to conduct external validation.

Strictly speaking, there are no absolute “gold standard” health surveys or Census Bureau surveys for performing external validation, especially for population health measures; even data from the conventional decennial long-form Census (census survey data) are based on approximately 5% of the US population. However, we found 2 surveys well suited for external validation of our MRP methodology: the 2011 Missouri County-Level Study (MO-CLS) and the Census Bureau’s American Community Survey (ACS). MO-CLS was originally designed to produce reliable county-level prevalence estimates of chronic disease conditions and risk factors for all 115 counties within the state of Missouri. MO-CLS used the same survey questions as those in the regular BRFSS survey; therefore, MO-CLS county-level direct estimates could be treated as a relative gold standard with which to validate the model-based SAEs using BRFSS data applied to Missouri counties. Both the ACS and BRFSS surveys ask respondents about current health insurance coverage. ACS direct estimates of no health-care coverage at various geographic levels could therefore serve as another reliable gold standard for validating the model-based SAEs across the United States. The congruity of these survey instruments with the BRFSS provides a solid basis for valid comparisons between BRFSS model-based SAEs and direct estimates from the MO-CLS and ACS at both the state and county levels.

Our objective in this study was to validate our model-based SAEs using the MRP approach based on the BRFSS data by 1) comparing our model-based SAEs with MO-CLS county-level direct prevalence estimates of current smoking, obesity, diabetes, COPD among adults aged ≥18 years, and lack of health-care coverage among adults aged 18–64 years; and 2) comparing state- and county-level model-based SAEs for the prevalence of no health-care coverage among adults aged 18–64 years with those provided by the ACS. We included health indicators that have different levels of prevalence in this study to determine whether the methodology works well with indicators of various levels of prevalence. We generated model-based estimates with and without inclusion of BRFSS survey weights in the model fitting (weighted and unweighted model-based SAEs) to assess the validity of the common practice among some investigators of ignoring survey weights in unit-level small area estimation models (34).

METHODS

The MRP approach involves 2 basic steps. First, multilevel models are constructed and fitted to health survey data (in this study, the nationwide state-based BRFSS) to estimate simultaneously how individual demographic factors and geographic contexts are associated with population health conditions and behaviors. Second, the fitted multilevel models are applied to US Census population counts at the smallest geographic level (the census block) to generate predictions, which can then be aggregated to produce reliable health indicator estimates at any higher geographic level of interest in public health practice. For this validation study of MRP, we used the 2011 MO-CLS (http://health.mo.gov/data/cls) to compare its direct estimates for all 115 Missouri counties with 2011 county-level BRFSS (http://www.cdc.gov/brfss/annual_data/annual_2011.htm) model-based SAEs for the following population health indicators, which were covered by both surveys: COPD, diabetes, current smoking, obesity, and proportion of uninsured adults. The prevalences of these selected BRFSS indicators in the United States ranged from 6% (COPD) to 30% (obesity). We then used the 2011 ACS data (http://www.census.gov/acs/www/) to compare ACS direct survey estimates of the percentage of uninsured adults aged 18–64 years at both the state and county levels (n = 811 counties) with the corresponding model-based SAEs from the 2011 BRFSS survey.
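The aggregation in the second step amounts to a population-weighted average of predicted probabilities. The sketch below assumes the fitted model has already produced a predicted probability for every census block × demographic cell; the tuple layout, the IDs, and the function name `poststratify` are hypothetical illustrations, not the authors' code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical cell layout:
# (block_id, county_id, state_id, population_count, predicted_probability)
Cell = Tuple[str, str, str, int, float]


def poststratify(cells: Iterable[Cell]) -> Dict[str, Dict[str, float]]:
    """Aggregate block-level cell predictions to county and state prevalences.

    Each cell is one census block x demographic group. Its expected number of
    cases is population * predicted probability; summing expected cases and
    population over every cell in an area gives a population-weighted prevalence.
    """
    cases = {"county": defaultdict(float), "state": defaultdict(float)}
    pop = {"county": defaultdict(float), "state": defaultdict(float)}
    for _block, county, state, n, p_hat in cells:
        for level, area in (("county", county), ("state", state)):
            cases[level][area] += n * p_hat  # expected cases in this cell
            pop[level][area] += n            # population in this cell
    return {
        level: {area: cases[level][area] / pop[level][area]
                for area in pop[level] if pop[level][area] > 0}
        for level in pop
    }


# Toy data (hypothetical): two blocks in county c1, one block in county c2.
cells = [
    ("b1", "c1", "s1", 100, 0.10),
    ("b2", "c1", "s1", 300, 0.20),
    ("b3", "c2", "s1", 600, 0.05),
]
estimates = poststratify(cells)
print(estimates["county"])  # c1 ≈ 0.175, c2 ≈ 0.05
print(estimates["state"])   # s1 ≈ 0.10
```

Because blocks nest within counties and counties within states, the same cell predictions can be rolled up to any coarser geography simply by adding another level key.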

Data sources

Behavioral Risk Factor Surveillance System

The BRFSS is a nationwide, state-based random-digit-dialed telephone survey of the noninstitutionalized US adult population aged ≥18 years (http://www.cdc.gov/brfss/). The survey uses a disproportionate stratified sample design and is administered annually to households with landlines or cellular telephones by state health departments in collaboration with the Centers for Disease Control and Prevention. The median of the 2011 survey response rates for all 50 states and the District of Columbia (DC) was 49.7%, ranging from 33.8% for New York to 64.1% for South Dakota. The 2011 Missouri BRFSS survey response rate was 52.8%.
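Because a disproportionate stratified design samples some groups at higher rates than others, an unweighted sample mean can misstate a population prevalence, so direct survey estimates weight each respondent. A minimal sketch of the weighted (ratio) point estimate follows, assuming final respondent weights are already available as a plain list; the function name is hypothetical, and the sketch ignores strata and primary sampling units, so it does not produce design-based standard errors.

```python
from typing import Sequence


def weighted_prevalence(y: Sequence[int], w: Sequence[float]) -> float:
    """Weighted proportion sum(w * y) / sum(w) for a 0/1 health indicator y."""
    if len(y) != len(w) or len(y) == 0:
        raise ValueError("y and w must be non-empty and of equal length")
    total_weight = sum(w)
    return sum(wi * yi for wi, yi in zip(w, y)) / total_weight


# Toy data (hypothetical): two oversampled respondents (small weights) report
# the condition; two undersampled respondents (large weights) do not.
y = [1, 1, 0, 0]
w = [10.0, 10.0, 40.0, 40.0]
print(weighted_prevalence(y, w))  # weighted estimate
print(sum(y) / len(y))            # unweighted sample mean, for comparison
```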

We selected the following 5 health indicators from the 2011 BRFSS, which were also available from the MO-CLS: diagnosed COPD (1 = COPD, 0 = no COPD); diagnosed diabetes (1 = diabetes, 0 = no diabetes); obesity (body mass index (weight (kg)/height (m)²) ≥30 (1 = obese, 0 = nonobese), calculated from self-reported heights and weights); current smoking (1 = current smoker, 0 = not current smoker) among adults aged ≥18 years; and lack of any health-care coverage among adults aged 18–64 years (1 = uninsured, 0 = insured). Diagnosed conditions were based on responses to questions that began with “Has a doctor, nurse, or other health professional ever told you that you had any of the following [chronic conditions]?” We excluded respondents who had missing values, refused to answer the question, or did not know. Gestational diabetes diagnosed during pregnancy was defined as not having diabetes. Current smokers were respondents who reported having smoked at least 100 cigarettes during their lifetime and who reported currently smoking on some days or every day. We excluded respondents with biologically unlikely body mass index values (<12 or >70). Lack of health-care coverage was defined as a “no” response to the question, “Do you have any kind of health-care coverage, including health insurance, prepaid plans such as health maintenance organizations, or government plans such as Medicare or the Indian Health Service?” Thus, all of the indicators were binary. For the validation studies, there were 489,391 eligible BRFSS respondents aged ≥18 years from 3,127 counties (county-level sample sizes ranged from 1 to 4,415, with a mean of 157 and a median of 53) and 332,573 respondents aged 18–64 years from 3,114 counties (county-level sample sizes ranged from 1 to 3,214, with a mean of 106 and a median of 35) in the entire United States.
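The indicator definitions above translate directly into small coding rules. The sketch below is illustrative only: the response strings (for example, "some days" or "yes, only during pregnancy") and the function names are hypothetical stand-ins, not actual BRFSS variable codes, and `None` marks a respondent excluded from analysis.

```python
from typing import Optional


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight (kg) / height (m) squared."""
    return weight_kg / height_m ** 2


def obesity_indicator(weight_kg: float, height_m: float) -> Optional[int]:
    """1 = obese (BMI >= 30), 0 = nonobese, None = excluded as implausible."""
    value = bmi(weight_kg, height_m)
    if value < 12 or value > 70:  # biologically unlikely BMI values are dropped
        return None
    return 1 if value >= 30 else 0


def current_smoker_indicator(smoked_100: Optional[bool],
                             frequency: Optional[str]) -> Optional[int]:
    """Hypothetical coding: frequency is 'every day', 'some days', or 'not at all'.

    None (missing, refused, or don't know) leads to exclusion.
    """
    if smoked_100 is None:
        return None
    if not smoked_100:
        return 0  # fewer than 100 lifetime cigarettes: not a current smoker
    if frequency is None:
        return None
    return 1 if frequency in ("every day", "some days") else 0


def diabetes_indicator(response: Optional[str]) -> Optional[int]:
    """Hypothetical response codes; gestational-only diabetes counts as no diabetes."""
    mapping = {"yes": 1, "yes, only during pregnancy": 0, "no": 0}
    return mapping.get(response) if response is not None else None


print(obesity_indicator(95.0, 1.75))                 # BMI about 31.0
print(current_smoker_indicator(True, "some days"))
print(diabetes_indicator("yes, only during pregnancy"))
```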
In Missouri, there were 6,331 respondents aged ≥18 years (county-level sample sizes ranged from 6 to 684, with a mean of 55 and a median of 27) and 4,178 respondents aged 18–64 years (county-level sample sizes ranged from 3 to 479, with a mean of 36 and a median of 17) from all 115 counties.

Missouri County-Level Study

The 2011 MO-CLS followed the standard Centers for Disease Control and Prevention BRFSS protocol. The sample was drawn from all 115 counties (including the City of St. Louis) in Missouri. The sample size was approximately 800 for Jackson County, St. Louis County, and the City of St. Louis and approximately 400 for each of the 112 remaining counties. The overall sample size in the study was 52,089, including 47,261 landline users and 4,828 cellphone-only users. The questionnaire included the core and optional questions of the Adult Tobacco Survey (35), as well as selected BRFSS questions on key chronic diseases and behavioral risk factors and the BRFSS demographic questions. The overall survey response rate was 58.7%. Data were weighted to be representative of the noninstitutionalized adult population (aged ≥18 years) of each Missouri county using iterative proportional fitting (raking). For the validation study, there were 50,690 eligible MO-CLS respondents aged ≥18 years and 29,171 respondents aged 18–64 years.
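Raking adjusts survey weights until weighted sample totals match known population totals on several variables at once. A minimal textbook iterative proportional fitting sketch follows; the raking variables, categories, and target totals in the example are hypothetical, and production weighting steps (base weights, trimming, collapsing sparse categories) are omitted.

```python
from typing import Dict, List, Sequence


def rake(base_weights: Sequence[float],
         margins: Dict[str, Sequence[str]],
         targets: Dict[str, Dict[str, float]],
         max_iter: int = 100,
         tol: float = 1e-8) -> List[float]:
    """Iterative proportional fitting (raking) of survey weights.

    margins[name][i] is respondent i's category on raking variable `name`;
    targets[name][category] is the known population total for that category.
    Each pass rescales the weights so that one margin at a time matches its
    targets, cycling until the weights stop changing.
    """
    w = list(base_weights)
    for _ in range(max_iter):
        max_change = 0.0
        for name, labels in margins.items():
            # Current weighted total in each category of this margin.
            current: Dict[str, float] = {}
            for wi, cat in zip(w, labels):
                current[cat] = current.get(cat, 0.0) + wi
            # Scale every respondent's weight by target / current for its category.
            for i, cat in enumerate(labels):
                new_w = w[i] * targets[name][cat] / current[cat]
                max_change = max(max_change, abs(new_w - w[i]))
                w[i] = new_w
        if max_change < tol:
            break
    return w


# Toy example (hypothetical): three respondents raked to sex and age totals.
weights = rake(
    [1.0, 1.0, 1.0],
    margins={"sex": ["M", "M", "F"], "age": ["18-44", "45+", "45+"]},
    targets={"sex": {"M": 50.0, "F": 50.0}, "age": {"18-44": 40.0, "45+": 60.0}},
)
print([round(x, 4) for x in weights])  # should approach [40.0, 10.0, 50.0]
```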

American Community Survey

The ACS is currently the largest continuous nationwide sample survey conducted by the US Census Bureau; it is designed to produce reliable estimates for cities, counties, states, and the entire country. The 2011 ACS sampled approximately 3.3 million housing-unit addresses in all 50 states and DC. As with the decennial Census, response to the ACS is mandatory. The ACS has collected demographic, housing, social, and economic data since 2000 and information on health insurance coverage since 2008. Since 2005, single-year ACS estimates have been available for census geographic areas with populations of 65,000 or more. Thus, in this study, we used the 2011 ACS estimates of the percentage of the population aged 18–64 years without any health-care coverage for all 50 states and DC and for the 811 counties with a population of at least 65,000. In addition, the Census Bureau’s Small Area Health Insurance Estimates (SAHIE) program (http://www.census.gov/did/www/sahie/) uses ACS data to produce single-year model-based estimates of health insurance coverage for every county in the United States.

Data analysis

MRP with BRFSS

Using an MRP approach, we estimated the prevalences of the 5 health indicators for all 50 states and DC and all 3,143 counties in the United States. Our MRP modeling framework with the BRFSS involved the following 4 basic steps: 1) construct multilevel prevalence models using BRFSS data; 2) apply multilevel prediction models to the census population; 3) generate model-based SAEs via poststratification; and 4) validate model-based SAEs (31).

In this study, the same multilevel prevalence model was constructed for all 5 population health indicators. It was a multilevel logistic model that included individual-level predictors (age group (18–24, 25–29, 30–34, 35–39, 40–44, 45–49, 50–54, 55–59, 60–64, 65–69, 70–74, 75–79, or ≥80 years), sex, and race/ethnicity (non-Hispanic white; non-Hispanic black; American Indian or Alaska Native; Asian, Native Hawaiian, or other Pacific Islander; other single race; 2 or more races; or Hispanic)) and a county-level predictor (poverty), as well as state-level and nested county-level random effects. The multilevel prediction models for all 5 indicators followed the same format as the multilevel prevalence models. The multilevel prevalence models were fitted both with and without BRFSS survey weights; the resulting models generated the weighted and unweighted model-based SAEs, respectively. County-level poverty information was obtained from 5-year ACS (2007–2011) estimates, and Census 2010 population counts were used in poststratification.
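One way to write the multilevel logistic model and the poststratification step in symbols is shown below. This is a sketch in our own notation: it assumes that county poverty enters as a single linear term and that the state and county random effects are normally distributed, details the text does not spell out. Here a[i], g[i], and r[i] are the age group, sex, and race/ethnicity of respondent i; s[i] and c[i] are the respondent's state and county; x_c is the county poverty measure; and j indexes census block × demographic cells with population N_j in area A.

$$
\operatorname{logit} \Pr(y_i = 1) = \beta_0 + \alpha^{\mathrm{age}}_{a[i]} + \alpha^{\mathrm{sex}}_{g[i]} + \alpha^{\mathrm{race}}_{r[i]} + \beta_{\mathrm{pov}}\, x_{c[i]} + u_{s[i]} + v_{c[i]}, \qquad u_s \sim N(0, \sigma_u^2), \quad v_c \sim N(0, \sigma_v^2)
$$

$$
\hat{p}_j = \operatorname{logit}^{-1}\!\left(\hat\beta_0 + \hat\alpha^{\mathrm{age}}_{a[j]} + \hat\alpha^{\mathrm{sex}}_{g[j]} + \hat\alpha^{\mathrm{race}}_{r[j]} + \hat\beta_{\mathrm{pov}}\, x_{c[j]} + \hat u_{s[j]} + \hat v_{c[j]}\right), \qquad \hat\theta_A = \frac{\sum_{j \in A} N_j \hat{p}_j}{\sum_{j \in A} N_j}
$$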

Internal validation with BRFSS direct survey estimates

We implemented internal validation of our model-based SAEs by comparing them with BRFSS direct estimates for all 50 states and DC and for counties with at least 50 respondents. Basic summary statistics (minimum, first quartile, median, third quartile, maximum, interquartile range, and range) were used to compare the distributions of our model-based SAEs and BRFSS direct estimates, and Pearson correlation coefficients were used to evaluate their internal consistency.
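The summary statistics and Pearson correlation used for the comparisons can be computed as sketched below. The quartile definition is an assumption: the text does not say which one was used, so the sketch uses the "inclusive" (linear interpolation) method of Python's `statistics.quantiles`; the function names are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence


def summarize(values: Sequence[float]) -> Dict[str, float]:
    """Minimum, quartiles, median, maximum, IQR (Q3 - Q1), and range (max - min)."""
    # 'inclusive' quartiles (linear interpolation); assumed, not from the source.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    lo, hi = min(values), max(values)
    return {"min": lo, "q1": q1, "median": statistics.median(values),
            "q3": q3, "max": hi, "iqr": q3 - q1, "range": hi - lo}


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between paired area-level estimates."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired estimates (percentages) for five areas.
direct = [4.0, 5.2, 6.1, 7.7, 9.9]
model = [4.1, 5.3, 6.1, 7.6, 9.8]
print(summarize(direct))
print(summarize(model))
print(pearson(direct, model))
```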

External validation with MO-CLS and ACS direct estimates

We compared our county-level model-based prevalence estimates of the 5 population health indicators with the MO-CLS direct survey estimates for all 115 Missouri counties. We also compared other basic summary statistics (minimum, first quartile, median, third quartile, maximum, interquartile range, and range) and calculated the Pearson correlations of prevalence estimates. We counted the number of model-based SAEs within the 95% confidence intervals of 115 MO-CLS direct estimates. We ranked the model-based SAEs and the 95% confidence intervals of MO-CLS direct estimates and compared their ranking consistency.
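The 95% confidence interval coverage count and the Spearman rank correlation can be computed as sketched below. Ties receive average ranks, the usual Spearman convention. The separate ranking-consistency check (model-based ranks compared with confidence intervals of the direct-estimate rankings) is not reproduced, because the text does not spell out how those rank intervals were constructed; all function names here are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def count_within_ci(model: Sequence[float], lower: Sequence[float],
                    upper: Sequence[float]) -> Tuple[int, float]:
    """Number and percentage of areas whose model-based estimate lies inside
    the 95% CI of the corresponding direct estimate."""
    hits = sum(1 for m, lo, hi in zip(model, lower, upper) if lo <= m <= hi)
    return hits, 100.0 * hits / len(model)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical county data: model estimates and direct-estimate 95% CIs.
hits, pct = count_within_ci([9.7, 5.0, 12.0], [7.0, 6.0, 10.0], [12.0, 8.0, 14.0])
print(hits, round(pct, 1))
print(spearman([9.7, 5.0, 12.0], [9.5, 7.0, 12.0]))
```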

We conducted a similar comparison between our model-based prevalence estimates of no health-care coverage and the ACS direct estimates available for all 50 states and DC and for 811 counties. In addition, we compared our model-based prevalence estimates of no health-care coverage with SAHIE estimates, which were available for all 50 states and DC and for 3,142 counties.

RESULTS

Internal validation

For all 5 population health indicators, the Pearson correlation coefficients for correlation between BRFSS model-based estimates and BRFSS direct estimates at the state level were consistently higher than 0.99 for weighted estimates and higher than 0.94 for unweighted estimates (Table 1). Slightly lower correlations were observed at the county level, with correlation coefficients higher than 0.85 for weighted estimates and higher than 0.73 for unweighted estimates. Overall, the coefficients for correlation between weighted estimates and direct estimates were higher than those for correlation between unweighted estimates and direct estimates. Compared with direct survey estimates at both the state and county levels, BRFSS model-based estimates tended to have a narrower range (the difference between the highest and lowest prevalence estimates) (Table 1).

Table 1.

Comparisons of Direct State-Level and County-Level Estimates of the Prevalence of Selected Health Indicators Among Adults Aged ≥18 Years With Weighted and Unweighted Model-Based Estimates, Behavioral Risk Factor Surveillance System, United States^a, 2011

| Indicator and Type of Estimate | ρ^b | No.^c | Minimum, % | Quartile 1, % | Median, % | Quartile 3, % | Maximum, % | IQR^d, % | Range^e, % |
|---|---|---|---|---|---|---|---|---|---|
| State Level | | | | | | | | | |
| COPD | | | | | | | | | |
| Direct^f | | 51 | 4.01 | 5.22 | 6.06 | 7.68 | 9.85 | 2.46 | 5.84 |
| Weighted^g | 0.997 | 51 | 4.11 | 5.28 | 6.07 | 7.60 | 9.85 | 2.32 | 5.73 |
| Unweighted^h | 0.970 | 51 | 3.74 | 5.02 | 5.84 | 7.29 | 9.23 | 2.26 | 5.49 |
| Current smoking | | | | | | | | | |
| Direct | | 51 | 11.84 | 19.11 | 21.21 | 23.03 | 28.99 | 3.92 | 17.15 |
| Weighted | 0.998 | 51 | 12.18 | 19.16 | 21.32 | 23.26 | 28.94 | 4.10 | 16.76 |
| Unweighted | 0.970 | 51 | 11.39 | 17.50 | 19.12 | 21.63 | 25.99 | 4.13 | 14.60 |
| Diabetes | | | | | | | | | |
| Direct | | 51 | 6.69 | 8.42 | 9.51 | 10.38 | 12.35 | 1.96 | 5.66 |
| Weighted | 0.994 | 51 | 6.65 | 8.45 | 9.48 | 10.17 | 12.39 | 1.72 | 5.74 |
| Unweighted | 0.945 | 51 | 6.32 | 8.23 | 8.99 | 9.88 | 12.61 | 1.65 | 6.29 |
| Obesity^i | | | | | | | | | |
| Direct | | 51 | 20.71 | 25.10 | 27.82 | 29.65 | 34.90 | 4.54 | 14.18 |
| Weighted | 0.997 | 51 | 20.67 | 25.20 | 27.52 | 29.39 | 34.82 | 4.19 | 14.15 |
| Unweighted | 0.980 | 51 | 19.79 | 24.06 | 27.11 | 29.10 | 33.75 | 5.04 | 13.97 |
| Uninsured^j | | | | | | | | | |
| Direct | | 51 | 7.73 | 15.61 | 21.27 | 24.95 | 34.62 | 9.35 | 26.90 |
| Weighted | 0.999 | 51 | 7.77 | 15.62 | 21.04 | 24.72 | 34.44 | 9.10 | 26.67 |
| Unweighted | 0.984 | 51 | 6.73 | 14.91 | 18.58 | 22.50 | 31.55 | 7.58 | 24.82 |
| County Level^k | | | | | | | | | |
| COPD | | | | | | | | | |
| Direct | | 569 | 0.00 | 5.18 | 6.88 | 9.37 | 33.20 | 4.19 | 33.20 |
| Weighted | 0.941 | 569 | 2.28 | 5.47 | 6.86 | 8.71 | 18.82 | 3.24 | 16.54 |
| Unweighted | 0.852 | 569 | 2.77 | 5.23 | 6.60 | 8.04 | 17.08 | 2.81 | 14.31 |
| Current smoking | | | | | | | | | |
| Direct | | 1,232 | 0.00 | 18.45 | 23.05 | 28.34 | 55.49 | 9.89 | 55.49 |
| Weighted | 0.957 | 1,232 | 8.54 | 19.21 | 23.16 | 27.18 | 46.68 | 7.97 | 38.14 |
| Unweighted | 0.787 | 1,232 | 8.09 | 18.24 | 21.14 | 24.03 | 44.17 | 5.80 | 36.08 |
| Diabetes | | | | | | | | | |
| Direct | | 886 | 2.99 | 8.39 | 10.16 | 12.91 | 28.37 | 4.52 | 25.37 |
| Weighted | 0.854 | 886 | 3.97 | 8.66 | 10.10 | 11.92 | 20.98 | 3.27 | 17.01 |
| Unweighted | 0.738 | 886 | 4.47 | 8.41 | 9.80 | 11.44 | 19.88 | 3.03 | 15.41 |
| Obesity | | | | | | | | | |
| Direct | | 1,492 | 7.03 | 25.08 | 29.57 | 34.68 | 69.04 | 9.59 | 62.01 |
| Weighted | 0.929 | 1,492 | 13.35 | 26.22 | 29.82 | 33.32 | 51.59 | 7.10 | 38.24 |
| Unweighted | 0.731 | 1,492 | 13.77 | 26.24 | 29.02 | 31.73 | 45.41 | 5.49 | 31.64 |
| Uninsured | | | | | | | | | |
| Direct | | 873 | 4.28 | 17.78 | 23.56 | 29.83 | 63.45 | 12.05 | 59.18 |
| Weighted | 0.960 | 873 | 4.69 | 18.43 | 23.52 | 28.93 | 60.89 | 10.50 | 56.20 |
| Unweighted | 0.847 | 873 | 5.18 | 17.11 | 21.42 | 25.67 | 62.47 | 8.56 | 57.29 |

Abbreviations: BRFSS, Behavioral Risk Factor Surveillance System; COPD, chronic obstructive pulmonary disease; IQR, interquartile range.

^a All 50 states and the District of Columbia.

^b Pearson correlation coefficient.

^c Number of states or counties included in comparison.

^d Third quartile minus first quartile.

^e Difference between the maximum and minimum values.

^f BRFSS direct survey estimates.

^g Small area estimates based on the multilevel prevalence models with the BRFSS survey weights in the model-fitting.

^h Small area estimates based on the multilevel prevalence models without the BRFSS survey weights in the model-fitting.

^i Obesity was defined as body mass index (weight (kg)/height (m)²) ≥30.

^j Percentage of adults aged 18–64 years without health insurance.

^k Limited to counties with at least 50 respondents and their BRFSS direct survey estimates with a coefficient of variation less than 0.30.

External validation

Pearson linear and Spearman rank correlation coefficients for correlations between BRFSS model-based estimates and MO-CLS direct estimates were significantly positive for all indicators, ranging from 0.28 for obesity and no health-care coverage to 0.69 for COPD in linear correlation and from 0.17 for obesity to 0.63 for COPD in rank correlation (Table 2). Compared with MO-CLS direct estimates, model-based estimates produced much smaller prevalence ranges. Again, the unweighted models produced the narrowest ranges, which were less than half of those produced by MO-CLS direct estimates (Table 2).

Table 2.

Comparisons of MO-CLS Direct Estimates of the Prevalence of Selected Health Indicators With BRFSS Model-Based Estimates Among Adults Aged ≥18 Years for All 115 Counties in Missouri^a, 2011

| Indicator and Type of Estimate | ρ^b | γ^c | Minimum, % | Quartile 1, % | Median, % | Quartile 3, % | Maximum, % | IQR^f, % | Range^g, % | Accuracy^d, No. | Accuracy^d, % | Ranking^e, No. | Ranking^e, % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| COPD | | | | | | | | | | | | | |
| MO-CLS^h | | | 3.8 | 7.8 | 9.6 | 12.3 | 20.7 | 4.5 | 16.9 | | | | |
| Weighted^i | 0.56 | 0.54 | 5.1 | 8.7 | 9.7 | 11.1 | 16.9 | 2.4 | 11.8 | 94 | 81.7 | 84 | 73.0 |
| Unweighted^j | 0.69 | 0.63 | 5.9 | 8.4 | 9.3 | 10.4 | 13.2 | 2.0 | 7.3 | 95 | 82.6 | 88 | 76.5 |
| Current smoking | | | | | | | | | | | | | |
| MO-CLS | | | 8.4 | 21.1 | 24.2 | 27.0 | 45.5 | 5.9 | 37.1 | | | | |
| Weighted | 0.32 | 0.20 | 17.4 | 22.9 | 25.4 | 29.6 | 44.4 | 6.7 | 27.0 | 85 | 73.9 | 80 | 69.6 |
| Unweighted | 0.40 | 0.32 | 20.2 | 23.3 | 24.7 | 26.2 | 31.7 | 2.9 | 11.5 | 96 | 83.5 | 88 | 76.5 |
| Obesity^k | | | | | | | | | | | | | |
| MO-CLS | | | 20.5 | 28.8 | 32.9 | 35.8 | 50.4 | 7.0 | 29.9 | | | | |
| Weighted | 0.30 | 0.22 | 23.2 | 29.3 | 31.7 | 34.4 | 44.3 | 5.1 | 21.1 | 91 | 79.1 | 87 | 75.7 |
| Unweighted | 0.28 | 0.17 | 24.7 | 29.1 | 30.9 | 32.5 | 38.7 | 3.4 | 14.0 | 98 | 85.2 | 91 | 79.1 |
| Diabetes | | | | | | | | | | | | | |
| MO-CLS | | | 6.3 | 9.8 | 11.9 | 13.6 | 22.6 | 3.8 | 16.3 | | | | |
| Weighted | 0.52 | 0.45 | 6.8 | 10.6 | 11.5 | 12.6 | 18.3 | 2.0 | 11.5 | 97 | 84.3 | 92 | 80.0 |
| Unweighted | 0.51 | 0.50 | 7.3 | 9.7 | 10.8 | 11.7 | 14.8 | 2.0 | 7.5 | 99 | 86.1 | 91 | 79.1 |
| Uninsured^l | | | | | | | | | | | | | |
| MO-CLS | | | 12.2 | 20.1 | 25.4 | 31.4 | 61.3 | 11.3 | 49.1 | | | | |
| Weighted | 0.28 | 0.19 | 11.1 | 20.4 | 23.2 | 26.8 | 45.2 | 6.4 | 34.1 | 84 | 73.0 | 79 | 68.7 |
| Unweighted | 0.28 | 0.22 | 13.5 | 20.0 | 21.7 | 23.7 | 30.6 | 3.7 | 17.1 | 83 | 72.2 | 78 | 67.8 |

Abbreviations: BRFSS, Behavioral Risk Factor Surveillance System; COPD, chronic obstructive pulmonary disease; IQR, interquartile range; MO-CLS, Missouri County-Level Study.

^a 114 Missouri counties and the City of St. Louis.

^b Pearson linear correlation coefficient.

^c Spearman rank correlation coefficient.

^d Number and percentage of counties with BRFSS model-based county-level estimates that were within the 95% confidence intervals of corresponding MO-CLS direct survey estimates.

^e Number and percentage of counties with rankings based on BRFSS model-based county-level estimates that were within the 95% confidence intervals of the rankings based on MO-CLS direct survey estimates.

^f Third quartile minus first quartile.

^g Difference between the maximum and minimum values.

^h Direct survey estimates from the MO-CLS.

^i Small area estimates based on the multilevel prevalence models with the BRFSS survey weights in the model-fitting.

^j Small area estimates based on the multilevel prevalence models without the BRFSS survey weights in the model-fitting.

^k Obesity was defined as body mass index (weight (kg)/height (m)²) ≥30.

^l Percentage of adults aged 18–64 years without health insurance.

The numbers (percentages) of counties with model-based estimates within the 95% confidence intervals of the corresponding direct estimates ranged from 84 (73.0%) for no health-care coverage to 97 (84.3%) for diabetes with weighted models and from 83 (72.2%) for no health-care coverage to 99 (86.1%) for diabetes with unweighted models. These numbers and percentages tended to be higher for unweighted models. Similar patterns were observed for the numbers and percentages of counties whose rankings based on model-based estimates fell within the 95% confidence intervals of the rankings based on direct survey estimates (Table 2).

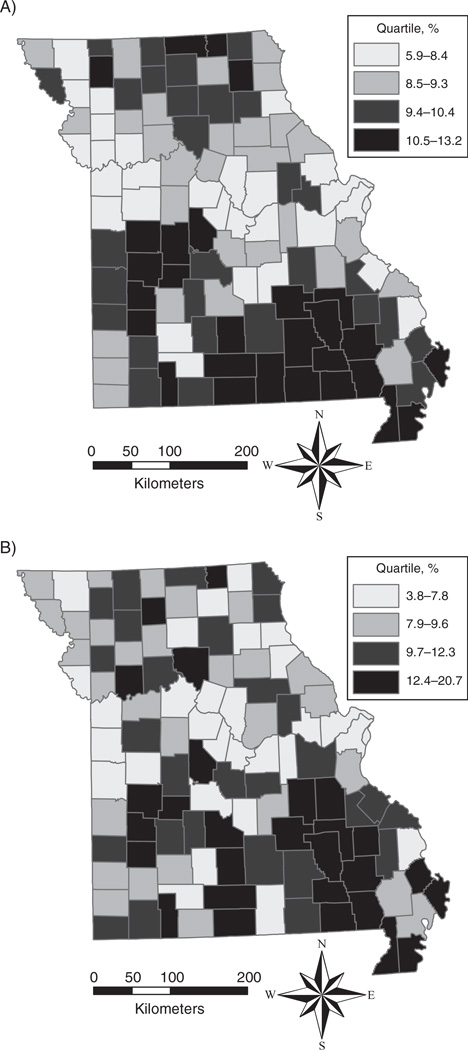

Figure 1 compares 2 Missouri maps that depict quartiles of unweighted BRFSS model-based estimates and MO-CLS direct estimates for COPD prevalence. Similar geographic clustering of the highest and lowest levels of COPD is shown in these maps.

Figure 1.

Comparison of county-level geographic variations in chronic obstructive pulmonary disease prevalence among adults aged ≥18 years, Missouri, 2011. A) Unweighted model-based small area estimates from Behavioral Risk Factor Surveillance System data; B) direct survey estimates from the Missouri County-Level Study.

Table 3 provides a nationwide comparison of the Census Bureau’s ACS direct estimates, SAHIE model-based estimates, and BRFSS model-based SAEs of the percentage of adults aged 18–64 years without health-care coverage in 2011. For 3,142 US counties, Pearson correlation coefficients for correlation between BRFSS model-based estimates and SAHIE model-based estimates were 0.76 (weighted) and 0.83 (unweighted). For the 811 counties with ACS direct estimates, Pearson coefficients for correlation between BRFSS model-based estimates and ACS direct survey estimates were 0.79 (weighted) and 0.85 (unweighted). Figure 2 illustrates county-level geographic variation across the entire United States for unweighted BRFSS model-based estimates (A) and SAHIE model-based estimates (B) in 2011. Again, the geographic clustering of uninsured adults was very similar between the 2 methods. At the state level, BRFSS model-based estimates and ACS direct estimates were strongly correlated, with Pearson correlation coefficients of 0.96 for both weighted and unweighted estimates. Very similar patterns were observed for Spearman rank correlations (Table 3). Weighted BRFSS model-based estimates had wider ranges than either unweighted estimates or ACS direct estimates.

Table 3.

Comparisons of Direct Survey Estimates of the Prevalence of Uninsured Adults Aged 18–64 Years From the US Census Bureau’s ACS and Model-Based SAHIE Data With BRFSS Model-Based Estimates at the County and State Levels, United States, 2011

| Type of Estimate | ρ^a vs ACS^d | ρ^a vs SAHIE^e | γ^b vs ACS | γ^b vs SAHIE | No.^c | Minimum, % | Quartile 1, % | Median, % | Quartile 3, % | Maximum, % | IQR^f, % | Range^g, % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| County Level | | | | | | | | | | | | |
| All counties | | | | | | | | | | | | |
| SAHIE | | 1.00 | | 1.00 | 3,142 | 3.9 | 16.9 | 21.5 | 26.2 | 52.5 | 9.3 | 48.6 |
| BRFSS | | | | | | | | | | | | |
| Weighted^h | | 0.76 | | 0.77 | 3,143 | 4.6 | 17.0 | 22.4 | 28.8 | 75.3 | 11.8 | 70.7 |
| Unweighted^i | | 0.83 | | 0.85 | 3,143 | 5.2 | 15.6 | 20.3 | 25.0 | 65.1 | 9.4 | 59.9 |
| Large counties | | | | | | | | | | | | |
| ACS | 1.00 | 0.96 | 1.00 | 0.96 | 811 | 3.0 | 14.0 | 18.9 | 24.7 | 54.9 | 10.7 | 51.9 |
| SAHIE | | 1.00 | | 1.00 | 811 | 3.9 | 15.0 | 19.7 | 24.5 | 52.5 | 9.5 | 48.6 |
| BRFSS | | | | | | | | | | | | |
| Weighted | 0.79 | 0.83 | 0.79 | 0.82 | 811 | 4.6 | 15.1 | 20.5 | 26.4 | 60.9 | 11.2 | 56.3 |
| Unweighted | 0.85 | 0.90 | 0.85 | 0.90 | 811 | 5.2 | 14.6 | 18.9 | 23.7 | 58.3 | 9.1 | 53.2 |
| State Level | | | | | | | | | | | | |
| ACS | 1.00 | >0.99 | 1.00 | >0.99 | 51 | 5.9 | 14.9 | 19.7 | 23.5 | 30.9 | 8.7 | 25.0 |
| SAHIE | | 1.00 | | 1.00 | 51 | 6.0 | 15.4 | 19.6 | 23.5 | 31.2 | 8.1 | 25.2 |
| BRFSS | | | | | | | | | | | | |
| Weighted | 0.96 | 0.95 | 0.96 | 0.96 | 51 | 7.8 | 15.6 | 21.0 | 24.7 | 34.4 | 9.1 | 26.7 |
| Unweighted | 0.96 | 0.95 | 0.95 | 0.95 | 51 | 6.7 | 14.9 | 18.6 | 22.5 | 31.6 | 7.6 | 24.8 |

Abbreviations: ACS, American Community Survey; BRFSS, Behavioral Risk Factor Surveillance System; IQR, interquartile range; SAHIE, Small Area Health Insurance Estimates.

^a Pearson linear correlation coefficient.

^b Spearman rank correlation coefficient.

^c Number of states or counties included in comparison.

^d ACS direct survey estimates (n = 811).

^e Model-based estimates from the Census Bureau’s SAHIE program (http://www.census.gov/did/www/sahie/).

^f Third quartile minus first quartile.

^g Difference between the maximum and minimum values.

^h Small area estimates based on the multilevel prevalence models with the BRFSS survey weights in the model-fitting.

^i Small area estimates based on the multilevel prevalence models without the BRFSS survey weights in the model-fitting.

Figure 2.

Comparison of geographic variations in county-level prevalence of uninsured adults aged 18–64 years, United States, 2011. A) Unweighted model-based small area estimates from Behavioral Risk Factor Surveillance System data; B) model-based estimates from the Census Bureau’s Small Area Health Insurance Estimates (SAHIE) program.

DISCUSSION

Our systematic validation study showed that BRFSS model-based SAEs obtained by MRP demonstrated both high internal consistency with BRFSS direct survey estimates and good consistency with reliable external estimates (36). To our knowledge, this is the first study to have used both a local survey and a large national survey to validate county-level model-based SAEs of population health indicators. The main validation results empirically confirmed that MRP could provide reliable and sensible SAEs of population health indicators using a nationwide state-based health survey (31). They also confirmed our basic statistical assumption that the multilevel models constructed from BRFSS data with both fixed effects (individual demographic characteristics and local poverty) and random effects (state and county contexts) could be applied to the target census population to capture local geographic variations in the prevalence of health indicators (31).

Correlations between BRFSS model-based SAEs and MO-CLS direct estimates were higher for chronic diseases, such as COPD and diabetes, than for health behaviors and chronic conditions, such as smoking and obesity. One explanation is differential self-report bias between chronic diseases and health behaviors. Reports of COPD and diabetes were based on having been told by a health professional that one had the condition, so respondents may report these variables accurately. By contrast, respondents may report their health risk behaviors with more bias. It is well known that obesity status determined from self-reported height and weight is substantially biased and that this bias differs by demographic factors (37, 38). In addition to reporting bias, another important reason for the lower correlations could be the impact of local public health programs. A good example involves 2 adjacent counties, Andrew and Nodaway, in the northwestern corner of Missouri. Their populations are predominantly non-Hispanic white (>90%). Nodaway County's poverty rate is more than double that of Andrew County, but Nodaway County has a very active local tobacco control coalition. The model-based estimates of current smoking prevalence were 24.2% and 24.0% for Andrew and Nodaway counties, respectively, whereas the corresponding MO-CLS direct estimates were 25.3% and 13.5%. The MO-CLS data captured this program impact, but our model-based estimates did not fully capture it. In the absence of a strong local public health program impact, our model-based estimates were quite close to reliable direct survey estimates and could reflect local geographic variations in health indicators. Where local public health programs have a substantial impact, however, our model-based estimates could differ substantially from the directly observed local prevalence. Thus, without reliable local information about public health programs, our model-based local estimates should not be used to evaluate the impact of local public health programs.

This study had additional limitations. First, we could not perform an external validation of subcounty-level estimates. Second, different small area estimation methods have been applied to BRFSS data (13, 18, 19, 29); comparing these methods through external validation might give a clearer picture of how small area estimation performs with health surveys.

The comparison of BRFSS model-based SAEs with MO-CLS direct estimates showed that the model-based SAEs had smaller ranges and tended to smooth out local geographic variations in population health outcomes, underestimating prevalence in small areas with high direct estimates and overestimating it in small areas with low direct estimates. This is expected, because small area statistical models borrow strength across areas and therefore tend to smooth the final predictions of population outcomes and underestimate the true ranges. Conversely, direct survey estimates tend to overestimate the true ranges of small area values, especially when survey measurement errors are larger (32, 39).
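The range pattern described above can be reproduced in a toy simulation. The sketch below does not use MRP: as an assumption, it substitutes a simple composite (empirical-Bayes-style) estimator that shrinks each direct estimate toward the overall mean, and every parameter value (200 areas, 30 respondents per area, mean prevalence 20%, between-area standard deviation 3 percentage points) is hypothetical.

```python
import numpy as np


def simulate_ranges(n_areas=200, n_per_area=30, mean_prev=0.20, sd_prev=0.03, seed=1):
    """Toy comparison of the ranges of true, direct, and shrinkage estimates."""
    rng = np.random.default_rng(seed)
    # Hypothetical true prevalence in each small area.
    true_p = np.clip(rng.normal(mean_prev, sd_prev, n_areas), 0.01, 0.99)
    # Direct estimate: observed proportion in a small sample from each area.
    direct = rng.binomial(n_per_area, true_p) / n_per_area
    # Method-of-moments split of the variance of the direct estimates into
    # sampling variance and between-area variance.
    grand_mean = direct.mean()
    sampling_var = grand_mean * (1.0 - grand_mean) / n_per_area
    between_var = max(direct.var(ddof=1) - sampling_var, 0.0)
    # Composite (shrinkage) estimate: pull each direct estimate toward the mean.
    shrink_weight = between_var / (between_var + sampling_var)
    model_based = shrink_weight * direct + (1.0 - shrink_weight) * grand_mean

    def spread(x):
        return float(x.max() - x.min())

    return {"true": spread(true_p), "direct": spread(direct), "model": spread(model_based)}


ranges = simulate_ranges()
print(ranges)
```

With these settings the direct estimates typically span a wider range than the true values, because sampling error adds spread, while the shrinkage estimates span a narrower one, because each is a weighted average of the direct estimate and the overall mean.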

In addition to comparing results within a single state, we took advantage of the largest household survey conducted by the US Census Bureau, the ACS, for a nationwide external validation. The ACS uses a completely different sample design but can estimate the percentage of the population with no current health-care coverage. Thus, in terms of the outcome measurement itself, the ACS and BRFSS were comparable for this variable. The strengths of the ACS included nationwide coverage, mandatory participation, and less nonresponse bias than in the MO-CLS. The comparison of BRFSS model-based SAEs of the percentage of uninsured adults with ACS direct estimates confirmed their good external consistency. We observed near-perfect correlations between BRFSS model-based SAEs and ACS direct estimates at the state level (0.95) and very strong correlations at the county level (0.85). Consistency was also observed in the distribution of SAEs, including their ranges.
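The comparison statistics defined in the table footnotes above (Pearson and Spearman correlation coefficients, interquartile range, and range) can be computed as in the following minimal sketch. The input values in the usage line are hypothetical, and NumPy's default linear-interpolation quartiles are an assumption; the software used for the published analysis may define quartiles slightly differently.

```python
import numpy as np


def average_ranks(values):
    """Ranks 1..n, with tied values sharing the mean of their ranks."""
    x = np.asarray(values, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # mean 1-based rank of the tie block
        i = j + 1
    return ranks


def compare_estimates(model_based, direct):
    """Correlation and spread summaries for paired area-level estimates."""
    model_based = np.asarray(model_based, dtype=float)
    direct = np.asarray(direct, dtype=float)

    def spread(x):
        q1, q3 = np.percentile(x, [25, 75])
        return {"IQR": q3 - q1, "range": x.max() - x.min()}

    return {
        "n": len(direct),
        "pearson": np.corrcoef(model_based, direct)[0, 1],
        # Spearman coefficient = Pearson coefficient of the ranks.
        "spearman": np.corrcoef(average_ranks(model_based), average_ranks(direct))[0, 1],
        "model": spread(model_based),
        "direct": spread(direct),
    }


summary = compare_estimates([10.0, 12.0, 15.0, 20.0], [9.0, 14.0, 13.0, 25.0])
print(round(summary["spearman"], 3))  # 0.8
```

Reporting both coefficients is useful because the Pearson coefficient measures linear agreement in prevalence levels, whereas the Spearman coefficient measures only agreement in how areas are ordered.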

Unit-level model-based small area estimators often do not use unit-level survey weights and have been criticized because, unlike direct survey estimators, they may lack design consistency (34). The comparison of uninsured estimates with ACS direct estimates showed that unweighted BRFSS model-based SAEs were more consistent with the ACS than weighted ones. When both sets of BRFSS model-based SAEs (weighted and unweighted) were further compared with SAHIE model-based county-level estimates for 3,142 US counties, the unweighted SAEs again showed better correlation. Further studies are needed to determine whether conventional survey sample weights are necessary for unit-level model-based small area estimators, especially those producing SAEs via poststratification by age, sex, and race/ethnicity within small census geographic units such as counties (10).
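The contrast between weighted and unweighted model fitting can be illustrated with a single-level logistic regression. This is a minimal sketch, assuming survey weights enter the fit as a pseudo-likelihood (each respondent's log-likelihood contribution multiplied by its weight), which is one common approach; it omits the state and county random effects, so it is not the weighted multilevel model used in this study, and `fit_logistic` and the data are hypothetical.

```python
import numpy as np


def fit_logistic(X, y, weights=None, max_iter=50, tol=1e-10):
    """Newton-Raphson fit of a (pseudo-)likelihood logistic regression.

    With weights=None every respondent counts equally (unweighted fit);
    otherwise each respondent's log-likelihood contribution is multiplied
    by its survey weight (pseudo-maximum likelihood). No random effects.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.ones(len(y)) if weights is None else np.asarray(weights, dtype=float)
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ beta)))
        score = X.T @ (w * (y - p))                      # weighted gradient
        info = X.T @ (X * (w * p * (1.0 - p))[:, None])  # weighted information matrix
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


# Intercept-only example with hypothetical responses and survey weights.
X = np.ones((5, 1))
y = np.array([1, 0, 0, 1, 0])
w = np.array([4.0, 1.0, 1.0, 1.0, 1.0])
print(fit_logistic(X, y))     # logit(2/5), the unweighted sample proportion
print(fit_logistic(X, y, w))  # logit(5/8), the weighted proportion
```

In the intercept-only case the weighted fit reproduces the weighted direct proportion and the unweighted fit reproduces the sample proportion; multiplying all weights by a constant leaves the estimates unchanged.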

Population-based external validation of model-based SAEs is critical for evaluating the quality of statistical small area estimators. In the United States, a few studies have used Census long-form (Summary File 3) data to validate corresponding model-based SAEs (10, 13, 33). Malec et al. (10) used Census 1990 long-form data to validate model-based state-level disability estimates, based on 1985–1994 National Health Interview Survey data, for the 50 states and DC and for subpopulations within states. Hudson (33) used Census 2000 long-form data to validate model-based state-level estimates of mental disability based on the 2001–2002 National Comorbidity Survey, and used local administrative hospitalization data (the Massachusetts Acute Hospital Case Mix databases, 1994–2000) to validate model-based estimates of mental disability for towns, cities, and even zip codes in Massachusetts. Jia et al. (13) also used Census 2000 long-form data to validate county-level BRFSS model-based SAEs and confirmed that their multilevel regression model could produce the most valid and precise estimates of county-level disability prevalence. However, the disability measures in the BRFSS and the Census long form may have differed substantially, which could have introduced significant bias into that external validation. Two studies in the United Kingdom validated SAEs at the local neighborhood (ward) level with local health surveys (36, 40), but those surveys were not designed to produce reliable local estimates at the ward level.

In this study, we took advantage of both a local health survey (MO-CLS) and a nationwide survey with health information (ACS) to validate our MRP approach for SAEs of population health outcomes using the BRFSS. The advantages of our validation study include the following: 1) the population health measures in the BRFSS are highly consistent with those in the MO-CLS and ACS; 2) we used data from the same single year (2011) for all 3 surveys; 3) multiple population health indicators from the MO-CLS were compared with their corresponding BRFSS model-based SAEs at the county level; and 4) both state- and county-level BRFSS model-based estimates were compared with ACS direct estimates across the entire United States.

In conclusion, the external validation of BRFSS model-based SAEs, especially using ACS direct estimates for the entire United States, suggests that the model-based SAEs obtained from MRP methodology with single-year BRFSS data are valid and could be used to characterize local geographic variations in population health indicators when high-quality local survey data are not available.

ACKNOWLEDGMENTS

The findings and conclusions presented in this article are those of the authors and do not necessarily represent the views of the Centers for Disease Control and Prevention or the Missouri Department of Health and Senior Services.

Abbreviations

- ACS: American Community Survey
- BRFSS: Behavioral Risk Factor Surveillance System
- COPD: chronic obstructive pulmonary disease
- DC: District of Columbia
- MO-CLS: Missouri County-Level Study
- MRP: multilevel regression and poststratification
- SAEs: small area estimates
- SAHIE: Small Area Health Insurance Estimates

Footnotes

Conflict of interest: none declared.

REFERENCES

- 1. Rao JNK. Small Area Estimation. New York, NY: John Wiley & Sons, Inc; 2003.

- 2. Drewnowski A, Rehm CD, Arterburn D. The geographic distribution of obesity by census tract among 59 767 insured adults in King County, WA. Int J Obes (Lond). 2014;38(6):833–839. doi: 10.1038/ijo.2013.179.

- 3. Margellos-Anast H, Shah AM, Whitman S. Prevalence of obesity among children in six Chicago communities: findings from a health survey. Public Health Rep. 2008;123(2):117–125. doi: 10.1177/003335490812300204.

- 4. Arrieta M, White HL, Crook ED. Using zip code-level mortality data as a local health status indicator in Mobile, Alabama. Am J Med Sci. 2008;335(4):271–274. doi: 10.1097/maj.0b013e31816a49c0.

- 5. Cui Y, Baldwin SB, Lightstone AS, et al. Small area estimates reveal high cigarette smoking prevalence in low-income cities of Los Angeles County. J Urban Health. 2012;89(3):397–406. doi: 10.1007/s11524-011-9615-0.

- 6. Chien LC, Yu HL, Schootman M. Efficient mapping and geographic disparities in breast cancer mortality at the county-level by race and age in the U.S. Spat Spatiotemporal Epidemiol. 2013;5:27–37. doi: 10.1016/j.sste.2013.03.002.

- 7. Will JC, Nwaise IA, Schieb L, et al. Geographic and racial patterns of preventable hospitalizations for hypertension: Medicare beneficiaries, 2004–2009. Public Health Rep. 2014;129(1):8–18. doi: 10.1177/003335491412900104.

- 8. Malec D, Davis WW, Cao X. Model-based small area estimates of overweight prevalence using sample selection adjustment. Stat Med. 1999;18(23):3189–3200. doi: 10.1002/(sici)1097-0258(19991215)18:23<3189::aid-sim309>3.0.co;2-c.

- 9. Malec D, Sedransk J. Bayesian predictive inference for units with small sample sizes. The case of binary random variables. Med Care. 1993;31(5 suppl):YS66–YS70. doi: 10.1097/00005650-199305001-00010.

- 10. Malec D, Sedransk J, Moriarity CL, et al. Small area inference for binary variables in the National Health Interview Survey. J Am Stat Assoc. 1997;92(439):815–826.

- 11. Zhang X, Onufrak S, Holt JB, et al. A multilevel approach to estimating small area childhood obesity prevalence at the census block-group level. Prev Chronic Dis. 2013;10:E68. doi: 10.5888/pcd10.120252.

- 12. Jia H, Link M, Holt J, et al. Monitoring county-level vaccination coverage during the 2004–2005 influenza season. Am J Prev Med. 2006;31(4):275–280.e4. doi: 10.1016/j.amepre.2006.06.005.

- 13. Jia H, Muennig P, Borawski E. Comparison of small-area analysis techniques for estimating county-level outcomes. Am J Prev Med. 2004;26(5):453–460. doi: 10.1016/j.amepre.2004.02.004.

- 14. Xie D, Raghunathan TE, Lepkowski JM. Estimation of the proportion of overweight individuals in small areas—a robust extension of the Fay-Herriot model. Stat Med. 2007;26(13):2699–2715. doi: 10.1002/sim.2709.

- 15. Zhang Z, Zhang L, Penman A, et al. Using small-area estimation method to calculate county-level prevalence of obesity in Mississippi, 2007–2009. Prev Chronic Dis. 2011;8(4):pA85.

- 16. Goodman MS. Comparison of small-area analysis techniques for estimating prevalence by race. Prev Chronic Dis. 2010;7(2):A33.

- 17. Barker LE, Thompson TJ, Kirtland K, et al. Bayesian small area estimates of diabetes incidence by United States county, 2009. J Data Sci. 2013;11(2):249–269.

- 18. Cadwell BL, Thompson TJ, Boyle JP, et al. Bayesian small area estimates of diabetes prevalence by U.S. county, 2005. J Data Sci. 2010;8(1):173–188.

- 19. Srebotnjak T, Mokdad AH, Murray CJL. A novel framework for validating and applying standardized small area measurement strategies. Popul Health Metr. 2010;8:26. doi: 10.1186/1478-7954-8-26.

- 20. Schneider KL, Lapane KL, Clark MA, et al. Using small-area estimation to describe county-level disparities in mammography. Prev Chronic Dis. 2009;6(4):A125.

- 21. Raghunathan TE, Xie D, Schenker N, et al. Combining information from two surveys to estimate county-level prevalence rates of cancer risk factors and screening. J Am Stat Assoc. 2007;102(478):474–486.

- 22. Olives C, Myerson R, Mokdad AH, et al. Prevalence, awareness, treatment, and control of hypertension in United States counties, 2001–2009. PLoS One. 2013;8(4):e60308. doi: 10.1371/journal.pone.0060308.

- 23. Eberth JM, Hossain MM, Tiro JA, et al. Human papillomavirus vaccine coverage among females aged 11 to 17 in Texas counties: an application of multilevel, small area estimation. Womens Health Issues. 2013;23(2):e131–e141. doi: 10.1016/j.whi.2012.12.005.

- 24. Eberth JM, Zhang X, Hossain MM, et al. County-level estimates of human papillomavirus vaccine coverage among young adult women in Texas, 2008. Tex Public Health J. 2013;65(1):37–40.

- 25. D’Agostino L, Goodman MS. Multilevel reweighted regression models to estimate county-level racial health disparities using PROC GLIMMIX. Presented at SAS Global Forum 2013; April–May 2013; San Francisco, California.

- 26. Dwyer-Lindgren L, Mokdad AH, Srebotnjak T, et al. Cigarette smoking prevalence in US counties: 1996–2012. Popul Health Metr. 2014;12(1):5. doi: 10.1186/1478-7954-12-5.

- 27. Li W, Kelsey JL, Zhang Z, et al. Small-area estimation and prioritizing communities for obesity control in Massachusetts. Am J Public Health. 2009;99(3):511–519. doi: 10.2105/AJPH.2008.137364.

- 28. Li W, Land T, Zhang Z, et al. Small-area estimation and prioritizing communities for tobacco control efforts in Massachusetts. Am J Public Health. 2009;99(3):470–479. doi: 10.2105/AJPH.2007.130112.

- 29. Congdon P. A multilevel model for cardiovascular disease prevalence in the US and its application to micro area prevalence estimates. Int J Health Geogr. 2009;8:6. doi: 10.1186/1476-072X-8-6.

- 30. Congdon P, Lloyd P. Estimating small area diabetes prevalence in the US using the Behavioral Risk Factor Surveillance System. J Data Sci. 2010;8(2):235–252.

- 31. Zhang X, Holt JB, Lu H, et al. Multilevel regression and poststratification for small-area estimation of population health outcomes: a case study of chronic obstructive pulmonary disease prevalence using the Behavioral Risk Factor Surveillance System. Am J Epidemiol. 2014;179(8):1025–1033. doi: 10.1093/aje/kwu018.

- 32. Pfeffermann D. New important developments in small area estimation. Stat Sci. 2013;28(1):40–68.

- 33. Hudson CG. Validation of a model for estimating state and local prevalence of serious mental illness. Int J Methods Psychiatr Res. 2009;18(4):251–264. doi: 10.1002/mpr.294.

- 34. You Y, Rao JNK. A pseudo-empirical best linear unbiased prediction approach to small area estimation using survey weights. Can J Stat. 2002;30(3):431–439.

- 35. McClave AK, Whitney N, Thorne SL, et al. Adult tobacco survey—19 states, 2003–2007. MMWR Surveill Summ. 2010;59(3):1–75.

- 36. Scarborough P, Allender S, Rayner M, et al. Validation of model-based estimates (synthetic estimates) of the prevalence of risk factors for coronary heart disease for wards in England. Health Place. 2009;15(2):596–605. doi: 10.1016/j.healthplace.2008.10.003.

- 37. Connor Gorber S, Tremblay MS. The bias in self-reported obesity from 1976 to 2005: a Canada-US comparison. Obesity (Silver Spring). 2010;18(2):354–361. doi: 10.1038/oby.2009.206.

- 38. Ezzati M, Martin H, Skjold S, et al. Trends in national and state-level obesity in the USA after correction for self-report bias: analysis of health surveys. J R Soc Med. 2006;99(5):250–257. doi: 10.1258/jrsm.99.5.250.

- 39. Judkins DR, Liu J. Correcting the bias in the range of a statistic across small areas. J Off Stat. 2000;16(1):1–13.

- 40. Twigg L, Moon G. Predicting small area health-related behaviour: a comparison of multilevel synthetic estimation and local survey data. Soc Sci Med. 2002;54(6):931–937. doi: 10.1016/s0277-9536(01)00065-x.