Abstract

Stroke patients report hand function as the most disabling motor deficit. Current evidence shows that learning new motor skills is essential for inducing functional neuroplasticity and functional recovery. Adaptive training paradigms that continually and interactively move a motor outcome closer to the targeted skill are important to motor recovery. Computerized virtual reality simulations, when interfaced with robots, movement tracking and sensing glove systems, are particularly adaptable, allowing for online and offline modifications of task-based activities using the participant’s current performance and success rate. We have developed a second-generation system that can exercise the hand and the arm together or in isolation and provides for both unilateral and bilateral hand and arm activities in three-dimensional space. We demonstrate that by providing haptic assistance for the hand and arm and adaptive anti-gravity support, the system can accommodate patients with lower level impairments. We hypothesize that combining training in a virtual environment (VE) with observation of motor actions can bring additional benefits. We present a proof of concept of a novel system that integrates interactive VE with functional neuroimaging to address this issue. Three components of this system are synchronized: the presentation of the visual display of the virtual hands, the collection of fMRI images and the collection of hand joint angles from the instrumented gloves. We show that interactive VEs can facilitate activation of brain areas during training by providing appropriately modified visual feedback. We predict that visual augmentation can become a tool to facilitate functional neuroplasticity.

Keywords: virtual environment, haptics, fMRI, stroke, cerebral palsy

Introduction

During the past decade the intersection of knowledge gained within the fields of engineering, neuroscience and rehabilitation has provided the conceptual framework for a host of innovative rehabilitation treatment paradigms. These newer treatment interventions are taking advantage of technological advances such as the improvement in robotic design, the development of haptic interfaces, and the advent of human-machine interactions in virtual reality and are in accordance with current neuroscience literature in animals and motor control literature in humans. We therefore find ourselves on a new path in rehabilitation.

Studies have shown that robotically-facilitated repetitive movement training might be an effective stimulus for normalizing upper extremity motor control in persons with moderate to severe impairments who have difficulty performing unassisted movements [1][2]. An important feature of the robots is their ability to measure the kinematic and dynamic properties of a subject’s movements and provide the assistive force necessary for the subject to perform the activity, with the robot adjusting the assistance and transitioning to resistance as the subject’s abilities expand [2]. Most of these first generation robotic devices train unilateral gross motor movements [3][4] and a few upper extremity devices have the capability of training bilateral motion [2][5]. None of these systems allow for three dimensional arm movements with haptic assistance. Robotics for wrist and hand rehabilitation is much less developed [6] and systems for training the hand and arm together are non-existent.

Virtual reality simulations, when interfaced with robots, movement tracking and sensing glove systems, can provide an engaging, motivating environment where the motion of the limb displayed in the virtual world is a replication of the motion produced in the real world by the subject. Virtual environments (VEs) can be used to present complex multimodal sensory information to the user and have been used in military training, entertainment simulations, surgical training, training in spatial awareness and more recently as a therapeutic intervention for phobias [7][8]. Our hypothesis for the use of a virtual reality/robotic system for rehabilitation is that this environment can monitor the specificity and frequency of visual and auditory feedback, and can provide adaptive learning algorithms and graded assistive or resistive forces that can be objectively and systematically manipulated to create individualized motor learning paradigms. Thus, it provides a rehabilitation tool that can be used to exploit the nervous system’s capacity for sensorimotor adaptation and provide plasticity-mediated therapies.

This chapter describes the design and feasibility testing of a second-generation system, a revised and advanced version of the virtual reality based exercise system that we have used in our past work [9][10]. The current system has been tested on patients post-stroke [11][12][13] and on children with cerebral palsy [14]. By providing haptic assistance and adaptive anti-gravity support and guidance, the system can now accommodate patients with greater physical impairments. The revised version of this system can exercise the hand alone, the arm alone and the arm and hand together, as well as provide for unilateral and bilateral upper extremity activities. Through adaptive algorithms it can provide assistance or resistance during the movement, directly linking the assistance to the patient’s force generation.

1. Description of the System

1.1. Hardware

The game architecture was designed so that various inputs can be seamlessly used to track the hands as well as retrieve the finger angles. The system supports the use of a pair of 5DT [15] or CyberGlove [16] instrumented gloves for hand tracking and a CyberGrasp [16] for haptic effects. The CyberGrasp device is a lightweight, force-reflecting exoskeleton that fits over a CyberGlove data glove and adds resistive force feedback to each finger. In patients with more pronounced deficits, the CyberGrasp is used in our simulations to resist flexion of the adjacent fingers, thus allowing for individual movement of each finger.

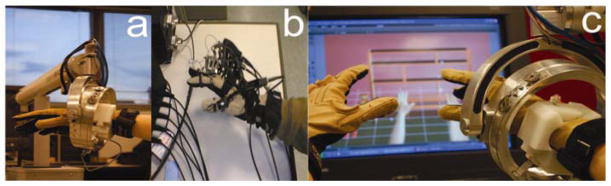

The arm simulations utilize the Haptic MASTER [17], a 3-degree-of-freedom, admittance-controlled (force-controlled) robot. Three more degrees of freedom (yaw, pitch and roll) can be added to the arm by using a gimbal, with force feedback available for pronation/supination (roll). A three-dimensional force sensor measures the external force exerted by the user on the robot. In addition, the velocity and position of the robot’s endpoint are measured. These variables are used in real time to generate reactive motion based on the properties of the virtual haptic environment in the vicinity of the current location of the robot’s endpoint. This allows the robotic arm to act as an interface between the participants and the virtual environments, enabling multiplanar movements against gravity in a 3D workspace. The haptic interface provides the user with a realistic haptic sensation that closely simulates the weight and force found in upper extremity tasks [18] (Figure 1).

Figure 1.

a. Hand & Arm Training System using a CyberGlove and Haptic Master interface that provides the user with a realistic haptic sensation that closely simulates the weight and force found in upper extremity tasks. b. Hand & Arm Training System using a CyberGlove, a CyberGrasp and Flock of Birds electromagnetic trackers. c. Close view of the haptic interface in a bimanual task.

Hand position and orientation as well as finger flexion and abduction are recorded in real time and translated into three-dimensional movements of the virtual hands shown on the screen in a first-person perspective. The Haptic MASTER robot or the Ascension Flock of Birds motion trackers [19] are used for arm tracking.
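The admittance control principle described above for the arm robot (measure the user's force, then generate motion) can be illustrated with a minimal sketch. This is not the Haptic MASTER controller: the function name `admittance_step`, the virtual mass, the time step and the simple Euler integration are all assumptions chosen for illustration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def admittance_step(
    position: Vec3,
    velocity: Vec3,
    user_force: Vec3,
    env_force: Vec3,
    virtual_mass: float = 2.0,  # hypothetical value, in kg
    dt: float = 0.001,          # hypothetical control period, in s
) -> Tuple[Vec3, Vec3]:
    """Advance a simulated endpoint by one control step.

    The measured user force and the force from the virtual environment
    are summed, divided by a virtual mass to get an acceleration, and
    integrated to the velocity and position the robot should track.
    """
    if virtual_mass <= 0:
        raise ValueError("virtual_mass must be positive")
    acceleration = tuple(
        (fu + fe) / virtual_mass for fu, fe in zip(user_force, env_force)
    )
    new_velocity = tuple(v + a * dt for v, a in zip(velocity, acceleration))
    new_position = tuple(p + v * dt for p, v in zip(position, new_velocity))
    return new_position, new_velocity
```

In such a scheme, a lighter virtual mass makes the endpoint respond more readily to small forces, which is one way an admittance-controlled device could be tuned for weaker users.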

1.2. Simulations

We have developed a comprehensive library of gaming simulations: two exercise the hand alone, five exercise the arm alone, and five exercise the hand and arm together. Eight of these gaming simulations facilitate bilateral, symmetrical movement of the two upper extremities. To convey the richness of these virtual worlds and the sophistication of the haptic modifications for each game, we describe some of them in detail.

Most of the games have been programmed using C++/OpenGL or the Virtools software package [20] with the VRPack plug-in, which communicates with the open source VRPN (Virtual Reality Peripheral Network) [21]. In addition, two activities were adapted from existing Pong games in which we have transferred the game control from the computer mouse to one of our input devices (e.g., CyberGlove or Haptic Master). The Haptic Master measures position, velocity and force in three dimensions at a rate of up to 1000 Hz and records them for off-line analysis. We used the Haptic Master’s Application Programming Interface (API) to program the robot to produce haptic effects, such as spring, damper and global force. Virtual haptic objects, including blocks, cylinders, toruses, spheres, walls and complex surfaces, can be created.
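To make the spring, damper and global force effects concrete, the sketch below computes the force that each would contribute at the robot endpoint using simple linear models. It does not use or reproduce the Haptic Master API; the function names and the numeric gains in the usage note are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def spring_force(position: Vec3, anchor: Vec3, stiffness: float) -> Vec3:
    """Linear spring pulling the endpoint toward `anchor`: F = -k (x - x0)."""
    return tuple(-stiffness * (p - a) for p, a in zip(position, anchor))


def damper_force(velocity: Vec3, damping: float) -> Vec3:
    """Viscous damper opposing motion: F = -b v."""
    return tuple(-damping * v for v in velocity)


def total_effect_force(
    position: Vec3,
    velocity: Vec3,
    anchor: Vec3,
    stiffness: float,
    damping: float,
    global_force: Vec3 = (0.0, 0.0, 0.0),
) -> Vec3:
    """Sum of spring, damper and a constant (global) force.

    A constant upward global force is one way to model anti-gravity
    support; that interpretation is an assumption of this sketch.
    """
    fs = spring_force(position, anchor, stiffness)
    fd = damper_force(velocity, damping)
    return tuple(s + d + g for s, d, g in zip(fs, fd, global_force))
```

For example, `total_effect_force((0.5, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), 100.0, 10.0, (0.0, 0.0, 5.0))` combines a spring pulling toward the origin, damping along the direction of motion, and a 5 N upward support force.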

1.2.1. Piano Trainer

The piano trainer is a refinement and elaboration of one of our previous simulations [22] and is designed to help improve the ability of subjects to move each finger in isolation (fractionation). It consists of a complete virtual piano that plays the appropriate notes as they are pressed by the virtual fingers (Figure 2a). The position and orientation of both hands as well as the flexion and abduction of the fingers are recorded in real time and translated into 3D movement of the virtual hands, shown on the screen in a first-person perspective. The simulation can be utilized for training the hand alone to improve individuated finger movement, or the hand and the arm together to improve the arm trajectory along with finger motion. This is achieved by manipulating the octaves on which the songs are played. These tasks can be done unilaterally or bilaterally. The subjects play short recognizable songs, scales, and random notes. Color-coding between the virtual fingers and piano keys serves as a cue as to which notes are to be played. The activity can be made more challenging by changing the fractionation angles required for successful key pressing (see 1.2.7. Movement assessment). When playing the songs bilaterally, the notes are key-matched. When playing the scales and the random notes bilaterally, the fingers of both hands are either key-matched or finger-matched. Knowledge of results and knowledge of performance are provided through visual and auditory feedback.

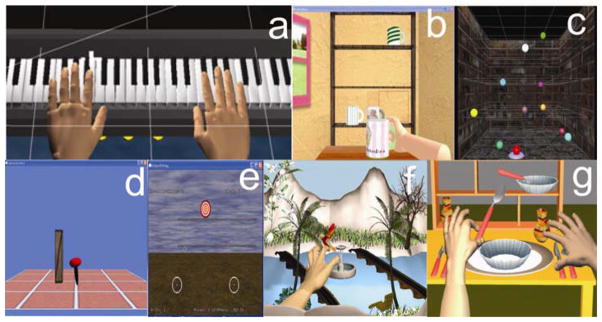

Figure 2.

a. The piano trainer consists of a complete virtual piano that plays the appropriate notes as they are pressed by the virtual fingers. b. Placing Cups displays a three-dimensional room with a haptically rendered table and shelves. c. Reach/Touch is accomplished in the context of aiming/reaching type movements in a normal, functional workspace. d. The Hammer Task trains a combination of three-dimensional reaching and repetitive finger flexion and extension. Targets are presented in a scalable 3D workspace. e. Catching Falling Objects enhances movement of the paretic arm by coupling its motion with the less impaired arm. f. Hummingbird Hunt depicts a hummingbird as it moves through an environment filled with trees, flowers and a river. g. The full screen displays a three-dimensional room containing three shelves and a table.

1.2.2. Hummingbird Hunt

This simulation depicts a hummingbird as it moves through an environment filled with trees, flowers and a river. Water and bird sounds provide a pleasant, encouraging environment in which to practice repeated arm and hand movements (Figure 2f). The game provides practice in the integration of reach, hand-shaping and grasp using a pincer grip to catch and release the bird while it is perched on different objects located on different levels and sections of the workspace. The flight path of the bird is programmed into three different levels (low, medium and high), allowing for progression in the range of motion required to successfully transport the arm to catch the bird. Adjusting the target position as well as the size scales the difficulty of the task and the precision required for a successful grasp and release.

1.2.3. Placing Cups

The goal of the “Placing Cups” task is to improve upper extremity range and smoothness of motion in the context of a functional reaching movement. The screen displays a three-dimensional room with a haptically rendered table and shelves (Figure 2b). The participants use their virtual hand (hemiparetic side) to lift the virtual cups and place them onto one of nine spots on one of three shelves. Target spots on the shelves (represented by red squares) are presented randomly for each trial. To accommodate patients with varying degrees of impairment, several haptic effects can be applied to this simulation: gravity and antigravity forces can be applied to the cups, global damping can be provided for dynamic stability and to facilitate smoother movement patterns, and the three dimensions of the workspace can be calibrated to increase the range of motion required for successful completion of the task. The intensity of these effects can be modified to challenge the patients as they improve.

1.2.4. Reach/Touch

The goal of the Reach/Touch game is to improve speed, smoothness and range of motion of shoulder and elbow movement patterns. This is accomplished in the context of aiming/reaching type movements (Figure 2c). Subjects view a 3-dimensional workspace aided by stereoscopic glasses [23] to enhance depth perception, to increase the sense of immersion and to facilitate the full excursion of upper extremity reach. The participant moves a virtual cursor through this space in order to touch ten targets presented randomly. Movement initiation is cued by a haptically rendered activation target (torus at the bottom of the screen). In this simulation, there are three control mechanisms used to accommodate varying levels of impairment. The first mechanism is an adjustable spring-like assistance that draws the participant’s arm/hand toward the target if they are unable to reach it in 5 seconds. The spring stiffness gradually increases when hand velocity and force applied by the subject do not exceed predefined thresholds within 5 seconds after movement onset. Current values of active force and hand velocity are compared online with threshold values and the assistive force increases if both velocity and force are under threshold. If either velocity or force is above threshold, spring stiffness starts to decrease in 5 N/m increments. The range of the spring stiffness is from 0 to 10000 N/m. The velocity threshold is predefined for each of the ten target spheres based on the mean velocity of movement recorded from a group of neurologically healthy subjects. The second mechanism, a haptic ramp (an invisible tilted floor that goes through the starting point and the target), decreases the force necessary to move the upper extremity toward the target. This can be added or removed as needed. Finally, a range restriction limits the participant’s ability to deviate from an ideal trajectory toward each target. This restriction can be decreased to provide less guidance as participants’ accuracy improves. To hold children’s attention, we modified this game to make it more dynamic by enhancing the visual and auditory presentation. The spheres, rather than just disappearing, now explode, accompanied by an appropriate bursting sound.
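The adaptive spring assistance described for Reach/Touch can be sketched as a single update rule applied on each control cycle. The 5 N/m decrement, the 0 to 10000 N/m range and the 5 second delay come from the text; the function name, the use of the same 5 N/m step for increases, and the choice to hold stiffness constant during the first 5 seconds are assumptions of this sketch.

```python
K_MIN = 0.0           # N/m, lower bound stated in the text
K_MAX = 10000.0       # N/m, upper bound stated in the text
K_STEP = 5.0          # N/m, decrement stated in the text; reused for increases (assumption)
ONSET_DELAY_S = 5.0   # s, delay after movement onset before assistance grows


def update_stiffness(
    stiffness: float,
    hand_velocity: float,
    active_force: float,
    velocity_threshold: float,
    force_threshold: float,
    time_since_onset: float,
) -> float:
    """Return the assistive spring stiffness for the next control cycle."""
    below_both = (
        hand_velocity <= velocity_threshold and active_force <= force_threshold
    )
    if below_both:
        # Assistance grows only once the subject has had time to move unaided.
        if time_since_onset >= ONSET_DELAY_S:
            stiffness += K_STEP
    else:
        # Either velocity or force exceeds its threshold: withdraw assistance.
        stiffness -= K_STEP
    return min(K_MAX, max(K_MIN, stiffness))
```

Because the rule withdraws assistance as soon as either measure exceeds its threshold, the spring only contributes while the subject is both slow and generating little force.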

1.2.5. Hammer Task

The Hammer Task trains a combination of three dimensional reaching and repetitive finger flexion and extension. Targets are presented in a scalable 3D workspace (Figure 2d). There are two versions of this simulation. One game exercises movement of the hand and arm together by having the subjects reach towards a wooden cylinder and then use their hand (finger extension or flexion) to hammer the cylinders into the floor. The other uses supination and pronation to hammer the wooden cylinders into a wall. The haptic effects allow the subject to feel the collision between the hammer and target cylinders as they are pushed through the floor or wall. Hammering sounds accompany collisions as well. The subjects receive feedback regarding their time to complete the series of hammering tasks. Adjusting the size of the cylinders, the amount of anti-gravity assistance provided by the robot to the arm and the time required to successfully complete the series of cylinders adaptively modifies the task requirements and game difficulty.

1.2.6. Catching Falling Objects

The goal of this bilateral task simulation, Catching Falling Objects, is to enhance movement of the paretic arm by coupling its motion with the less impaired arm (Figure 2e). Virtual hands are presented in a mono-view workspace. Each movement is initiated by placing both virtual hands on two small circles. The participant’s arms then move in a synchronized symmetrical action to catch virtual objects with both hands as they drop from the top of the screen. Real-time 3-D position of the less affected arm is measured from either a Flock of Birds sensor attached to the less impaired hand or a second Haptic Master robot. The position of the less affected arm guides the movement of the impaired arm. For the bilateral games, an initial symmetrical (relative to the patient’s midline) relationship between the two arm positions is established prior to the start of the game and maintained throughout the game utilizing a virtual spring mechanism. At the highest levels of the virtual spring’s stiffness, the Haptic Master guides the subject’s arm in a perfect 1:1 mirrored movement. As the trajectory of the subject’s hemiparetic arm deviates from a mirrored image of the trajectory of the less involved arm, the assistive virtual spring is stretched, exerting a force on the subject’s impaired arm. This force draws the arm back to the mirrored image of the trajectory of the uninvolved arm. The Catching Falling Objects simulation requires a quick, symmetrical movement of both arms towards an object falling along the midline of the screen. If the subject successfully hits the falling object three times in a row, the spring stiffness diminishes. The subject then has to exert a greater force with their hemiplegic arm in order to maintain the symmetrical arm trajectory required for continuous success. If the subject cannot touch the falling object appropriately by exerting the necessary force, the virtual spring stiffens again to assist the subject. In this way, the adaptive algorithm maximizes the active force generated by the impaired arm. The magnitude of the active force measured by the robot defines the progress and success in the game; therefore this adaptive algorithm ensures that the patient continually utilizes their arm and does not rely on the Haptic Master to move it for them.
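The mirrored virtual spring and its success-based adaptation can be sketched as follows. The three-in-a-row rule and the stiffening after a miss follow the description above; the class name `MirrorSpring`, the step size, the stiffness bounds, and the convention that the patient's midline is the plane x = 0 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MirrorSpring:
    stiffness: float          # N/m, current assistive stiffness
    step: float = 50.0        # N/m per adaptation, hypothetical
    k_min: float = 0.0        # N/m, hypothetical lower bound
    k_max: float = 10000.0    # N/m, hypothetical upper bound
    streak: int = 0           # consecutive successful catches

    def assist_force(self, less_affected: Vec3, impaired: Vec3) -> Vec3:
        """Force pulling the impaired arm toward the mirrored position.

        The mirror image is taken about the plane x = 0, which stands in
        for the patient's midline in this sketch.
        """
        mirrored = (-less_affected[0], less_affected[1], less_affected[2])
        return tuple(self.stiffness * (m - p) for m, p in zip(mirrored, impaired))

    def record_trial(self, caught: bool) -> None:
        """Soften the spring after three straight catches, stiffen after a miss."""
        if caught:
            self.streak += 1
            if self.streak == 3:
                self.stiffness = max(self.k_min, self.stiffness - self.step)
                self.streak = 0
        else:
            self.streak = 0
            self.stiffness = min(self.k_max, self.stiffness + self.step)
```

When the impaired arm exactly mirrors the less affected arm the spring is unstretched and the assistive force is zero, so any progress in the game must come from the subject's own active force.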

1.2.7. Movement assessment

Several kinematic measures are derived from the training simulations. Each task in a simulation consists of a series of movements, e.g. pressing a series of piano keys to complete a song, or placing 9 cups on the virtual shelves. Time to complete a task, range of motion and peak velocity for each individual movement were measured in each simulation. Accuracy, which denotes the proportion of correct key presses, and fractionation are measures specific to the hand. Peak fractionation score quantifies the ability to isolate each finger’s motion and is calculated online by subtracting the mean of the MCP and PIP joint angles of the most flexed non-active finger from the mean angle of the active finger. When the actual fractionation score becomes greater than the target score during the trial, a successful key press will take place (assuming the subject’s active finger was over the correct piano key). The target fractionation score starts at 0 for each finger. After each trial, and for each finger, our algorithm averages the fractionation achieved when the piano key is pressed. If the average fractionation score is greater than 90% of the target, the target fractionation will increase by 0.005 radians. If the average fractionation is less than 75% of the target, the target will decrease by the same amount. Otherwise, the target will remain the same. There is a separate target for each finger and for each hand (10 targets in total). Once a key is displayed for the subject to press, the initial threshold will be the set target. This will decrease during the trial according to the Bezier Progression (interpolation according to a Bezier curve). Thresholds will start at the target value and decrease to zero or to a predefined negative number over the course of one minute. Negative limits for the target score will be used to allow more involved subjects to play the game. To calculate movement smoothness, we compute the normalized integrated third derivative of hand displacement [24][25]. Finally, Active Force denotes the mean force applied by the subject to move the robot to the target during the movement.
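The fractionation score and its adaptive target can be sketched in a few lines. The 90% and 75% bands, the 0.005 radian step and the one-minute decay come from the description above. The function names, the assumption that larger angles mean more flexion, and the particular cubic Bezier control points used for the threshold decay are illustrative choices, since the text does not specify them.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

TARGET_STEP_RAD = 0.005   # adjustment per trial, from the text
RAISE_FRACTION = 0.90     # raise the target above this fraction of it
LOWER_FRACTION = 0.75     # lower the target below this fraction of it
DECAY_SECONDS = 60.0      # threshold decays over one minute


def fractionation_score(
    joint_angles: Dict[str, Tuple[float, float]], active_finger: str
) -> float:
    """Mean (MCP, PIP) angle of the active finger minus that of the
    most flexed non-active finger. Angles in radians, flexion positive."""
    finger_means = {
        name: (mcp + pip) / 2.0 for name, (mcp, pip) in joint_angles.items()
    }
    most_flexed_other = max(
        angle for name, angle in finger_means.items() if name != active_finger
    )
    return finger_means[active_finger] - most_flexed_other


def update_target(target: float, scores_at_key_press: Sequence[float]) -> float:
    """Adjust one finger's target after a trial."""
    average = mean(scores_at_key_press)
    if average > RAISE_FRACTION * target:
        return target + TARGET_STEP_RAD
    if average < LOWER_FRACTION * target:
        return target - TARGET_STEP_RAD
    return target


def threshold_at(target: float, elapsed_s: float, floor: float = 0.0) -> float:
    """Within-trial threshold decaying from `target` to `floor` along a
    cubic Bezier curve. Control points (target, target, floor, floor)
    are a hypothetical ease-in/ease-out choice."""
    t = min(max(elapsed_s / DECAY_SECONDS, 0.0), 1.0)
    p0, p1, p2, p3 = target, target, floor, floor
    return (
        (1 - t) ** 3 * p0
        + 3 * (1 - t) ** 2 * t * p1
        + 3 * (1 - t) * t ** 2 * p2
        + t ** 3 * p3
    )
```

Passing a negative `floor` corresponds to the negative limits mentioned above, which let a key press succeed even when the active finger is less flexed than its neighbours.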

2. Training paradigms

2.1. Training the hand

We trained patients using three different paradigms: the hand alone, the hand and arm separately, and the hand and arm together. We trained the hemiplegic hand of 8 subjects in the chronic phase post-stroke [9][10]. Examination of the group effects using analysis of variance of the data from the first two days of training, last two days of training, and the one-week retention test showed significant changes in performance in each of the parameters of hand movement that were trained in the virtual environment. Post-hoc analyses revealed that subjects as a group improved in finger fractionation (a measurement of finger flexion independence), thumb range of motion, finger range of motion, thumb speed and finger speed. The Jebsen Test of Hand Function (JTHF) [26], a timed test of hand function and dexterity, was used to determine whether the kinematic improvements gained through practice in the VE transferred to real world functional activities. After training, the average task completion time for all seven subtests of the JTHF for the affected hand decreased from 196 (62) sec to 172 (45) sec (group mean (SD); paired t-test, t=2.4, p<.05). In contrast, no changes were observed for the unaffected hand (t=.59, p=.54). Analysis of variance of the Jebsen scores from the pre-therapy, post-therapy and one-week retention tests demonstrated significant improvement in the scores. The subjects’ affected hand improved in this test (pre-therapy versus post-therapy) on average by 12%. Finally, scores obtained during the retention testing were not significantly different from post-therapy scores.

2.2. Training the hand and arm

Four other subjects (mean age=51; years post stroke=3.5) practiced approximately three hours/day for 8 days on simulations that trained the arm and hand separately (Reach/Touch, Placing Cups, Piano/Hand alone). Four other subjects (mean age=59; years post stroke=4.75) practiced for the same amount of time on simulations that trained the arm and hand together (Hammer, Plasma Pong, Piano/Hand/Arm, Hummingbird Hunt). All subjects were tested pre and post training on two of our primary outcome measures, the JTHF and the Wolf Motor Function Test (WMFT), a time-based series of tasks that evaluates upper extremity performance [27]. The group that practiced arm and hand tasks separately (HAS) showed a 14% change in the WMFT and a 9% change in the JTHF, whereas the group that practiced using the simulations that trained the arm and hand together (HAT) showed a 23% (WMFT) and 29% (JTHF) change in these tests of real world hand and arm movements.

There were also notable changes in the secondary outcome measures; the kinematics and force data derived from the virtual reality simulations during training. These kinematic measures include time to task completion (duration), accuracy, velocity, smoothness of hand trajectory and force generated by the hemiparetic arm. Subjects in both groups showed similar changes in the time to complete each game, 36%–42% decrease, depending on the specific simulation. Additionally three of the four subjects in the HAS group improved the smoothness of their hand trajectories (in the range of 50%–66%) indicating better control [28].

However, the subjects in the HAT group showed a more pronounced decrease in the path length. This suggests a reduction in extraneous and inaccurate arm movement with more efficient limb segment interactions. Figure 3 shows the hand trajectories generated by a representative subject in the Placing Cup activity pre and post training. Figure 3a depicts a side view of a trajectory generated without haptic assistance, and another trajectory generated with additional damping and increased antigravity support. At the beginning of the training the subject needed the addition of the haptic effects to stabilize the movement and to provide enough arm support for reaching the virtual shelf. However, Figure 3b shows that after two weeks of training this subject demonstrated a more normalized trajectory even without haptic assistance.

Figure 3.

Trajectories of a representative subject performing single repetitions of the cup reaching simulation. a. The dashed line represents the subject’s performance without any haptic effects on Day 1 of training. The solid line represents the subject’s performance with the trajectory stabilized by the damping effect and with the work against gravity decreased by the robot. Also note the collision with the haptically rendered shelf during this trial. b. The same subject’s trajectory while performing the cup placing task without haptic assistance following 9 days of training. Note the coordinated, up and over trajectory, consistent with normal performance of a real world placing task.

2.3. Bilateral training

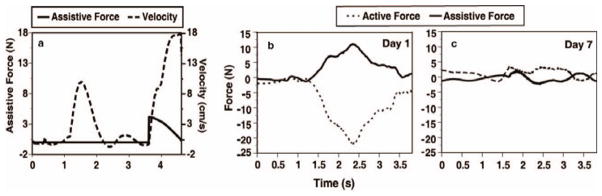

In the upper arm bilateral games, movement of the unimpaired hand guides the movement of the impaired hand. Importantly, an adaptive algorithm continually modifies the amount of force assistance provided by the robot. This is based upon the force generation and success in the game achieved by the subject. This adaptive algorithm thereby ensures that the patient continually utilizes their arm and does not rely on the Haptic Master to move it for them. Figure 4 shows the change in the relationship between the assistive force provided by the robot and the active force generated by a representative subject on Day 1 and Day 7 of training. With the aid of this algorithm, the subjects were able to minimize their reliance on the assistance provided by the robot during training, and greatly increase the force they could generate to successfully complete the catching task during the final days of training. Active force was calculated as the amount of force generated to move the robot towards the target and did not take into account the force required to support the arm against gravity. The mean active force produced by the impaired upper extremity during this bilateral elbow-shoulder activity increased by 82% and 95% for two subjects who were more impaired (pre-training WMFT scores of 180 sec and 146 sec).

Figure 4.

The interaction between the subject and robot, which is coordinated by on-line assistance algorithms. Figure 4a depicts the performance of a repetition of Reach/Touch. The dashed line plots the hand velocity over time. As the subject moves toward the target, the assistive force, depicted by the solid line, stays at a zero level unless the subject fails to reach the target within a predefined time window. As the subject’s progress toward the target slows, the assistive force increases until progress resumes and then starts to decrease after velocity exceeds a predefined threshold value. Figure 4b and c describe two repetitions of the bilateral Catching Falling Objects simulation. Performance on Day 1 (b) requires Assistive Force from the robot (solid line) when the subject is unable to overcome gravity and move the arm towards the target (Active Force (dashed line) dips below zero). Figure 4c represents much less assistance from the robot to perform the same task because the subject is able to exert active force throughout the task.

Two other subjects who were less impaired (pre-training WMFT scores of 67 sec and 54 sec) improved their active force by 17% and 22%, respectively.

Questionnaires have been used to assess subjects’ perception and satisfaction with the training sessions, the physical and mental effort involved in the training and an evaluation of the different exercises. The subjects were eager to participate in the project. They found the computer sessions required a lot of mental concentration, were engaging and helped improve their hand motion. They found the exercises to be tiring but wished this form of training had been part of their original therapy. When comparing the hand simulations they stated that playing the piano one finger at a time (fractionation exercise) required the most physical and mental effort.

3. Virtual Reality as a tool for engaging targeted brain networks

Studies show that training in virtual environments (VE) has had positive effects on motor recovery [9][10][22][29][30] and neural [31][32] adaptations. However, what remains untested is whether these benefits emerge simply because VR is an entertaining practice environment or whether interacting in a specially-designed VE can be used to selectively engage a frontoparietal action observation and action production network.

It is important to understand the neural mechanism underlying these innovative rehabilitation strategies. Little is understood about susceptibility of brain function to various sensory (visual, tactile, auditory) manipulations within the VE. It is critical to determine the underlying neurological mechanisms of moving and interacting within a VE and to consider how they may be exploited to facilitate activation in neural networks associated with sensorimotor learning.

Empirical data suggest that sensory input can be used to facilitate reorganization in the sensorimotor system. Additionally, recent studies have shown that a distributed neural network, which includes regions containing mirror neurons, can be activated through observation of actions when intending to imitate those actions. Regions within the frontoparietal network, namely the opercular region of the inferior frontal gyrus (IFG) and the adjacent precentral gyrus (which we will collectively refer to as the IFG) and the rostral extent of the inferior parietal lobule (IPL), have been extensively researched for their role in higher-order representation of action [33][34][35][36]. Mirror and canonical neurons may play a central role. Detailed accounts and physiological characteristics of these neurons are extensively documented (for review, see [37]). A key property of a mirror cell, however, is that it is equally activated by either observing or actuating a given behavior. Though initially identified in non-human primates, there is now compelling evidence for the existence of a human mirror neuron system [37][38]. Although the nature of tasks and functions that may most reliably capture this network remains under investigation (for example, see [36]), neurophysiological evidence suggests that mirror neurons may be the link that allows the sensorimotor system to resonate when observing actions, such as for motor learning. Notably, the pattern of muscle activation evoked by TMS to primary motor cortex while observing a grasping action was found to be similar to the pattern of muscle activation seen during actual execution of that movement [39][40], suggesting that the neural architecture for action recognition overlaps with and can prime the neural architecture for action production [41]. This phenomenon may have profound clinical implications [42].

The literature on the effects of observation on action and motor learning suggests the potential for using observation within a virtual environment to facilitate activation of targeted neural circuits. If we can show proof of concept for using virtual reality feedback to selectively drive brain circuits in healthy individuals, then this technology can have profound implications for use in diagnosis, rehabilitation, and the study of basic brain mechanisms (e.g., neuroplasticity). We have conducted several pilot experiments using MRI-compatible data gloves to combine virtual environment experiences with fMRI to test the feasibility of using VE-based sensory manipulations to recruit select sensorimotor networks. In this chapter, in addition to the data supporting the feasibility of our enhanced training system, we also present preliminary data indicating that through manipulations in the VE, one can activate specific neural networks, particularly those associated with sensorimotor learning.

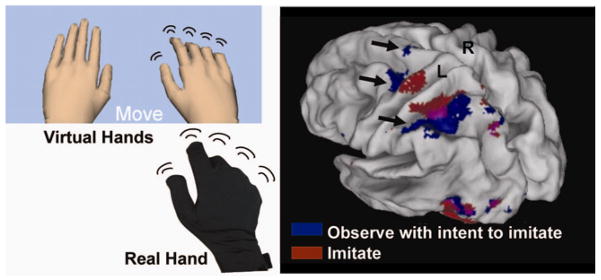

3.1. fMRI Compatible Virtual Reality System

Three components of this system are synchronized: the presentation of the visual display of the virtual hands, the collection of fMRI images, and the collection of hand joint angles from the MRI-compatible (5DT) data gloves. We have extracted the essential elements common to all of our environments, the virtual hands, in order to test the ability of visual feedback provided through our virtual reality system to affect brain activity (Figure 5, left panel). Subjects performed simple sequential finger flexion movements with their dominant right hand (index through pinky fingers) as if they were pressing imaginary piano keys at a rate of 1 Hz. Subjects’ finger motion was recorded and the joint angles were transmitted in real time to a computer controlling the motion of the virtual hands. Thus we measured event-related brain responses in real time as subjects interacted in the virtual environment.

Figure 5.

Left panel. Subject’s view during the fMRI experiment (top). The real hand in a 5DT glove is shown below that. Movement of the virtual hand can be generated as an exact representation of the real hand, or can be distorted to study action-observation interaction inside a virtual environment. Right panel. Observing finger sequences with the intention to imitate afterwards. Significant BOLD activity (p<0.001) is rendered on an inflated cortical surface template. Arrows show activation in the dorsal premotor cortex, BA 5, rostral portion of the IPS, supramarginal gyrus, and (pre)supplementary motor area, likely associated with planning sequential finger movements.
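The glove-to-virtual-hand mapping described in this section can be illustrated with a minimal sketch. It assumes a simple two-point linear calibration per finger and a single flexion angle per finger; the names `FingerCalibration`, `raw_to_angle`, and `virtual_hand_pose`, the 90-degree full-flexion value, and the `gain` parameter are hypothetical and are not taken from the system described here. The `mirror` flag shows how movement of the right hand could drive the left virtual hand, and `gain` shows one possible way to distort the displayed movement.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class FingerCalibration:
    """Hypothetical per-finger calibration recorded before the experiment."""
    raw_open: float                # raw sensor reading with the finger extended
    raw_closed: float              # raw sensor reading with the finger flexed
    max_flexion_deg: float = 90.0  # assumed full-flexion angle of the virtual joint


def raw_to_angle(raw: float, cal: FingerCalibration) -> float:
    """Linearly map a raw glove reading to a flexion angle in degrees."""
    span = cal.raw_closed - cal.raw_open
    if span == 0:
        raise ValueError("calibration readings must differ")
    fraction = (raw - cal.raw_open) / span
    fraction = min(1.0, max(0.0, fraction))  # clamp to the calibrated range
    return fraction * cal.max_flexion_deg


def virtual_hand_pose(raw_sample: Sequence[float],
                      calibration: Sequence[FingerCalibration],
                      gain: float = 1.0,
                      mirror: bool = False) -> Dict[str, object]:
    """Build one frame of virtual-hand joint angles from a right-hand glove sample.

    gain   -- scales the displayed flexion (1.0 = exact representation)
    mirror -- if True, the sample animates the LEFT virtual hand instead
    """
    angles: List[float] = []
    for raw, cal in zip(raw_sample, calibration):
        angle = gain * raw_to_angle(raw, cal)
        angles.append(min(cal.max_flexion_deg, max(0.0, angle)))
    return {"hand": "left" if mirror else "right", "angles_deg": angles}
```

For example, `virtual_hand_pose(sample, calibration, mirror=True)` returns the same joint angles labeled for the left virtual hand, which corresponds to the mirrored-feedback manipulation used later in this chapter.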

The virtual hand on the display was sized in proportion to the subject’s actual hand and its movement was calibrated for each subject before the experiment. After calibration, glove data collection was synchronized with the first functional volume of each functional imaging run by a back-tic TTL transmitted from the scanner to the computer controlling the glove. From that point, glove data was collected in a continuous stream until termination of the visual presentation program at the end of each functional run. As glove data was acquired, it was time-stamped and saved for offline analysis. fMRI data was realigned, coregistered, normalized, and smoothed (10 mm Gaussian filter) and analyzed using SPM5 (http://www.fil.ion.ucl.ac.uk/spm/). Activation was considered significant if it exceeded a threshold level of p<0.001 and a voxel extent of 10 voxels. Finger motion data was analyzed offline using custom-written Matlab software to confirm that subjects produced the instructed finger sequences and rested in the appropriate trials. Finger motion amplitude and frequency were analyzed using standard multivariate statistical approaches to ensure that differences in finger movement did not account for any differences in brain activation.
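The offline check of the glove stream described above can be sketched as follows. This is an illustrative Python example, not the custom Matlab software used in the study: it re-references time stamps to the scanner trigger, assigns samples to functional volumes, and estimates the movement rate from upward threshold crossings of a joint angle. The repetition time `tr_s`, the 30-degree threshold, and all function names are assumptions made for the example.

```python
import math
from typing import List, Sequence, Tuple


def relative_times(timestamps: Sequence[float], ttl_time: float) -> List[float]:
    """Re-reference time stamps to the trigger; drop samples before it."""
    return [t - ttl_time for t in timestamps if t >= ttl_time]


def volume_index(t_rel: float, tr_s: float) -> int:
    """Index of the functional volume being acquired at time t_rel.

    tr_s is the repetition time in seconds (an assumed value; it is not
    reported in this section).
    """
    return int(t_rel // tr_s)


def flexion_onsets(times: Sequence[float], angles_deg: Sequence[float],
                   threshold_deg: float = 30.0) -> List[float]:
    """Times at which the joint angle crosses the threshold going upward."""
    onsets = []
    for i in range(1, len(angles_deg)):
        if angles_deg[i - 1] < threshold_deg <= angles_deg[i]:
            onsets.append(times[i])
    return onsets


def mean_rate_hz(onsets: Sequence[float]) -> float:
    """Mean movement rate from consecutive onsets (0.0 if fewer than two)."""
    if len(onsets) < 2:
        return 0.0
    return (len(onsets) - 1) / (onsets[-1] - onsets[0])


def synthetic_trace(duration_s: float = 5.0, fs_hz: int = 50,
                    rate_hz: float = 1.0) -> Tuple[List[float], List[float]]:
    """Synthetic flexion trace (0 to 90 degrees) used only to exercise the code."""
    n = int(duration_s * fs_hz)
    times = [i / fs_hz for i in range(n)]
    angles = [45.0 * (1.0 - math.cos(2.0 * math.pi * rate_hz * t)) for t in times]
    return times, angles
```

Applied to a trace recorded during a movement miniblock, `mean_rate_hz(flexion_onsets(times, angles))` should be close to the instructed 1 Hz, and the same onset list should be empty during rest trials.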

First, we investigated whether observing virtual hand actions with the intention to imitate those actions afterwards activates known frontoparietal observation-execution networks. After signing institutionally approved consent, eight right-handed subjects who were naïve to the virtual reality environment and free of neurological disease were tested in two conditions: 1) Watch Virtual Hands: observe finger sequences performed by a virtual hand model with the understanding that they would imitate the sequence after it was demonstrated, that is, observe with intent to imitate (OTI); 2) Move and Watch Hands (Move+Watch): execute the observed sequence while receiving real-time feedback from the virtual hands (actuated by the subject’s motion). The trials were arranged as 9-second-long miniblocks separated by a random interval lasting 5–10 seconds. Each subject completed four miniblocks of each condition.
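The miniblock timing described above can be sketched as a simple schedule generator. The block duration, rest interval range, and number of miniblocks per condition follow the text; the shuffled block order, the rest period before the first block, and the function name `build_schedule` are assumptions made for illustration, since the ordering of conditions is not specified here.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple


def build_schedule(conditions: Sequence[str] = ("OTI", "Move+Watch"),
                   blocks_per_condition: int = 4,
                   block_s: float = 9.0,
                   gap_range_s: Tuple[float, float] = (5.0, 10.0),
                   seed: Optional[int] = None) -> List[Dict[str, object]]:
    """Return miniblock onsets and offsets in seconds from the start of the run."""
    rng = random.Random(seed)
    # Assumed ordering: all miniblocks shuffled together.
    order = [c for c in conditions for _ in range(blocks_per_condition)]
    rng.shuffle(order)

    schedule = []
    t = 0.0
    for condition in order:
        t += rng.uniform(*gap_range_s)  # random rest interval before the block
        schedule.append({"condition": condition,
                         "onset_s": t,
                         "offset_s": t + block_s})
        t += block_s
    return schedule
```

Calling `build_schedule(seed=1)` yields eight miniblocks (four per condition); the onsets could then be used both to drive the visual presentation and to define regressors for the analysis.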

In the Move+Watch condition, significant activation was noted in a distributed network traditionally associated with motor control: contralateral sensorimotor, premotor, and posterior parietal cortex, basal ganglia, and ipsilateral anterior intermediate cerebellum. In the OTI condition, significant activation was noted in the contralateral dorsal premotor cortex, the (pre)supplementary motor area, and the parietal cortex. Parietal activation included regions in the superior and inferior parietal lobules and overlapped with activation noted in the Move+Watch condition in the rostral extent of the intraparietal sulcus (see Figure 5). The common activation noted in this region for intentional observation and execution of action is in line with other reports using video playback of real hands moving (Hamilton et al., 2005; Dinstein et al., 2007) and suggests that a well-constructed VE may tap into similar neural networks.

Having demonstrated the ability to use movement observation and execution in an interactive VE to activate brain areas often recruited for real-world observation and movement, we then tested the ability of VE to facilitate select regions in the brain. If this proves successful, VE can offer a powerful tool to clinicians treating patients with various pathologies. As a vehicle for testing our proof of concept, we chose a common challenge facing the stroke patient population: hemiparesis. Particularly early after stroke, facilitating activation in the lesioned motor cortex is extremely challenging since paresis during this phase is typically most pronounced. We hypothesized that viewing a virtual hand corresponding to the patient’s affected side and animated by movement of the patient’s unaffected hand could selectively facilitate the motor areas in the affected hemisphere. This design and hypothesis were inspired by studies by Altschuler and colleagues [43], who demonstrated that viewing hand motion through a mirror placed in the sagittal plane during bilateral arm movements may facilitate hand recovery in patients post stroke.

Three healthy subjects and one patient who had a right subcortical stroke performed sequences of finger flexions and extensions with their right hand in four sessions. During each session, subjects performed 50 trials while receiving one of four types of visual feedback: (1) left virtual hand motion, (2) right virtual hand motion, (3) left virtual blob motion, (4) right virtual blob motion. Data were submitted to a fixed effects model at the first level (factors: left VR hand, right VR hand, left blob, right blob). We created an ROI mask (right primary motor cortex) based on significant activation in a Movement > Rest contrast (mean ROI centers: healthy subjects: [42 −12 64], radius 20 mm; stroke patient: [34 −18 64], radius 20 mm) using the ‘simpleROIbuilder’ extension [44]. We then applied the mask to the contrast of interest using the ‘Volumes’ toolbox extension [45].
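The geometry of a spherical ROI such as the one described above can be illustrated with a short sketch. This is not the ‘simpleROIbuilder’ implementation; it only shows, under the assumption that coordinates are expressed in millimetres in the normalized template space, how voxel centres within 20 mm of an ROI centre could be selected. The 2 mm voxel size and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def in_sphere(point_mm: Sequence[float], center_mm: Sequence[float],
              radius_mm: float = 20.0) -> bool:
    """True if the point lies within radius_mm of the ROI centre."""
    return math.dist(point_mm, center_mm) <= radius_mm


def sphere_voxels(center_mm: Sequence[float], radius_mm: float = 20.0,
                  voxel_mm: float = 2.0) -> List[Coord]:
    """Voxel centres on a regular grid (assumed voxel size) inside the sphere."""
    n = int(radius_mm // voxel_mm)
    voxels: List[Coord] = []
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            for k in range(-n, n + 1):
                p = (center_mm[0] + i * voxel_mm,
                     center_mm[1] + j * voxel_mm,
                     center_mm[2] + k * voxel_mm)
                if in_sphere(p, center_mm, radius_mm):
                    voxels.append(p)
    return voxels


# ROI centres reported in the text.
HEALTHY_CENTER = (42.0, -12.0, 64.0)
PATIENT_CENTER = (34.0, -18.0, 64.0)
```

Restricting a contrast map to the voxels returned by `sphere_voxels(HEALTHY_CENTER)` would be analogous to applying the ROI mask before inspecting the contrast of interest.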

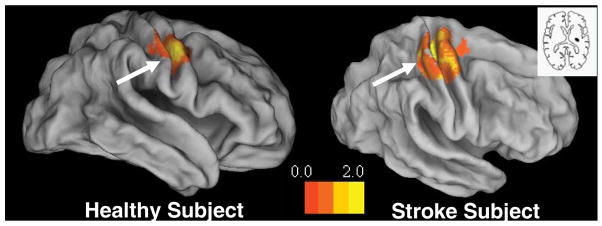

Figure 6 shows activation in the ROI that was greater when the LEFT (relative to RIGHT) virtual hand was actuated by the subject’s physical movement of their right hand. In other words, this contrast represents greater activation when seeing the mirrored virtual hand than when seeing the corresponding hand. This simple sensory manipulation was sufficient to selectively facilitate lateralized activity in the cortex representing the observed (mirrored) virtual hand. As our preliminary data suggest in the case of stroke patients, this visual manipulation in VE may be effective in facilitating the sensorimotor cortex in the lesioned hemisphere and may help explain the positive therapeutic effects noted by Altschuler and colleagues [43] when training stroke patients using mirror therapy.

Figure 6.

A representative healthy subject (left panel) and a chronic stroke patient (right panel) performed a finger sequence with the RIGHT hand. The inset in the right panel shows the lesion location in the stroke patient (see also Merians et al., 2006). For each subject, the panels show the activations that were significantly greater when viewing the corresponding finger motion of the LEFT more than the RIGHT virtual hand (i.e. activation related to ‘mirror’ viewing). Note that viewing the LEFT virtual hand led to significantly greater activation of the primary motor cortex IPSILATERAL to the moving hand (i.e. contralateral to the observed virtual hand) (see arrow). Significant BOLD activity (p<.01) is rendered on an inflated cortical surface template using Caret software.

4. Discussion

Rehabilitation of the upper extremity is difficult. It has been reported that 75%–95% of patients post stroke learn to walk again, but 55% have continuing problems with upper extremity function [46][47]. The complexity of sensorimotor control required for hand function as well as the wide range of recovery of manipulative abilities makes rehabilitation of the hand even more challenging. Walking drives the integration of both the affected and unaffected limbs, while functional activities performed with the upper extremity may be completed with one limb, therefore allowing the individual to transfer a task to the remaining good limb and neglect the affected side.

Although we demonstrated positive outcomes with the original system, it was only appropriate for patients with mild impairments. Our second generation system, combining movement tracking, virtual reality therapeutic gaming simulations, and robotics, appears to be a viable possibility for patients with more significant impairments of the upper extremity. The haptic mechanisms, such as the spring assistance, the damping to stabilize trajectories, and the adaptable anti-gravity assistance, allowed patients with greater impairments to successfully participate in activities in which they could not usually partake. From the therapist’s perspective, the interventions can be tailored to address the particular needs of each patient, and from the patients’ perspective, it was clear throughout the testing of the system that the patients enjoyed the activities and were challenged by the intervention.

Brewer [6] suggests that, in addition to their use in providing more intense therapy of longer duration, robotics have the potential to address the challenge of conducting clinically relevant research. An example of this is the comparison we described above of training the hand and arm separately versus training them together. It is controversial whether training the upper extremity as an integrated unit leads to better outcomes than training the proximal and distal components separately. The current prevailing paradigm for upper extremity rehabilitation describes the need to develop proximal control and mobility prior to initiating training of the hand. During recovery from a lesion, the hand and arm are thought to compete with each other for neural territory [48]. Therefore, training proximal control first or along with distal control may actually have deleterious effects on the neuroplasticity and functional recovery of the hand. However, neural control mechanisms of arm transport and hand-object interaction are interdependent. Therefore, complex multisegmental motor training is thought to be more beneficial for skill retention. Our preliminary results demonstrate that, in addition to providing an initial proof of concept, the system allows for the systematic testing of such controversial treatment interventions.

Our second goal was to design a sensory stimulation paradigm for acute and severe patients with limited ability to participate in therapy. A practice condition used during a therapy session is that of visual demonstration or modeling. Current neurological evidence indicates that the observation of motor actions is more than an opportunity to understand the requirements of the movement to be executed. Many animal and human studies have shown activation of the motor cortex during observation of actions done by others [37]. Observation of motor actions may actually activate neural pathways similar to those involved in the performance of the observed action. These findings provide an additional potential avenue of therapeutic intervention to induce neural activation.

However, some studies indicate that neural processing is not the same when observing real actions and when observing virtual actions, suggesting that observing virtual models of human arms could have a significantly weaker facilitation effect than video clips of real arm motion [49]. We found that when our subjects viewed the movement of the virtual hands with the intention of imitating that action, the premotor and posterior parietal areas were activated. Furthermore, we showed in both healthy subjects and in one subject post-stroke that when the left virtual hand was actuated by the subject’s physical movement of their right hand, this selectively facilitated activity in the cortex ipsilateral to the real moving hand (contralateral to the moving virtual hand).

We hypothesized that viewing a virtual hand corresponding to the patient’s affected side and animated by movement of the patient’s unaffected hand could selectively facilitate the motor areas in the affected hemisphere. This sensory manipulation takes advantage of the capabilities of virtual reality to induce activation through observation and to perturb the reality in order to target particular networks. We are optimistic about our preliminary findings and suggest that this visual manipulation in VE should be further explored to determine its effectiveness in facilitating sensorimotor areas in a lesioned hemisphere.

We believe that VR is a promising tool for rehabilitation. We found that adding haptic control mechanisms to the system enabled subjects with greater impairments to successfully participate in these intensive computerized training paradigms. Finally, we tested the underlying mechanism of interacting within a virtual environment. We found that the value of training in a virtual environment is not limited to its ability to provide an intensive practice environment; specially designed VEs can also be used to selectively activate a frontoparietal action observation and action production network. This finding opens a doorway to a potential tool for clinicians treating patients with a variety of neuropathologies.

Acknowledgments

This work was supported in part by funds provided by New York University, Steinhardt School of Culture, Education, and Human Development, and by RERC grant # H133E050011 from the National Institute on Disability and Rehabilitation Research.

References

- 1. Patton JL, Mussa-Ivaldi FA. Robot-assisted adaptive training: custom force fields for teaching movement patterns. IEEE Transactions in Biomedical Engineering. 2004;51(4):636–646. doi: 10.1109/TBME.2003.821035.

- 2. Lum PS, Burgar CG, Shor PC, Majmundar M, Van der Loos M. MIME robotic device for upper limb neurorehabilitation in subacute stroke subjects: A follow up study. Journal of Rehabilitation Research and Development. 2006;42:631–642. doi: 10.1682/jrrd.2005.02.0044.

- 3. Krebs HI, Hogan N, Aisen ML, Volpe BT. Robot-aided neurorehabilitation. IEEE Transactions in Rehabilitation Engineering. 1998;6(1):75–87. doi: 10.1109/86.662623.

- 4. Kahn LE, Lum PS, Rymer WZ, Reinkensmeyer DJ. Robot assisted movement training for the stroke impaired arm: Does it matter what the robot does? Journal of Rehabilitation Research and Development. 2006;43:619–630. doi: 10.1682/jrrd.2005.03.0056.

- 5. McCombe-Waller S, Whittall J. Fine motor coordination in adults with and without chronic hemiparesis: baseline comparison to non-disabled adults and effects of bilateral arm training. Archives of Physical Medicine and Rehabilitation. 2004;85(7):1076–1083. doi: 10.1016/j.apmr.2003.10.020.

- 6. Brewer BR, McDowell SK, Worthen-Chaudhari LC. Poststroke upper extremity rehabilitation: A review of robotic systems and clinical results. Topics in Stroke Rehabilitation. 2007;14(6):22–44. doi: 10.1310/tsr1406-22.

- 7. Stanney KM. Handbook of Virtual Environments: Design, Implementation and Applications. London: Lawrence Erlbaum; 2002.

- 8. Burdea GC, Coiffet P. Virtual Reality Technology. New Jersey: Wiley; 2003.

- 9. Merians AS, Poizner H, Boian R, Burdea G, Adamovich S. Sensorimotor training in a virtual reality environment: does it improve functional recovery poststroke? Neurorehabilitation and Neural Repair. 2006;20(2):252–67. doi: 10.1177/1545968306286914.

- 10. Adamovich SV, Merians AS, Boian R, Tremaine M, Burdea GC, Recce M, Poizner H. A virtual reality (VR)-based exercise system for hand rehabilitation after stroke. Presence. 2005;14:161–174.

- 11. Merians AS, Lewis J, Qiu Q, Talati B, Fluet GG, Adamovich SA. Strategies for Incorporating Bilateral Training into a Virtual Environment. IEEE International Conference on Complex Medical Engineering; New York, IEEE. 2007.

- 12. Adamovich SA, Qiu Q, Talati B, Fluet GG, Merians AS. Design of a Virtual Reality Based System for Hand and Arm Rehabilitation. IEEE 10th International Conference on Rehabilitation Robotics; New York, IEEE. 2007; pp. 958–963.

- 13. Adamovich SA, Fluet GG, Merians AS, Mathai A, Qiu Q. Incorporating haptic effects into three-dimensional virtual environments to train the hemiparetic upper extremity. IEEE Transactions on Neural Engineering and Rehabilitation. 2008; under revision. doi: 10.1109/TNSRE.2009.2028830.

- 14. Qiu Q, Ramirez DA, Swift K, Parikh HD, Kelly D, Adamovich SA. Virtual environment for upper extremity rehabilitation in children with hemiparesis. NEBC / IEEE 34th Annual Northeast Bioengineering Conference; 2008.

- 15. 5DT. 5DT Data Glove 16 MRI. http://www.5dt.com.

- 16. Immersion. CyberGlove. 2006. http://www.immersion.com.

- 17. Moog FCS Corporation. Haptic Master. 2006. http://www.fcs-cs.com.

- 18. Van der Linde RQ, Lammertse P, Frederiksen E, Ruiter B. The HapticMaster, a new high-performance haptic interface. Proceedings Eurohaptics. 2002:1–5.

- 19. Ascension. Flock of Birds. 2006. http://www.ascension-tech.com.

- 20. Dassault Systemes. Virtools Dev 3.5. 2005. www.virtools.com.

- 21. Taylor RM. The Virtual Reality Peripheral Network (VRPN). 2006. http://www.cs.unc.edu/Research/vrpn.

- 22. Merians A, Jack D, Boian R, Tremaine M, Burdea GC, Adamovich SV, Recce M, Poizner H. Virtual reality-augmented rehabilitation for patients following stroke. Physical Therapy. 2002;82:898–915.

- 23. RealD/StereoGraphics. CrystalEyes shutter eyewear. 2006. http://www.reald.com.

- 24. Poizner H, Feldman AG, Levin MF, Berkinblit MB, Hening WA, Patel A, Adamovich SV. The timing of arm-trunk coordination is deficient and vision-dependent in Parkinson’s patients during reaching movements. Experimental Brain Research. 2000;133(3):279–92. doi: 10.1007/s002210000379.

- 25. Adamovich SV, Berkinblit MB, Hening W, Sage J, Poizner H. The interaction of visual and proprioceptive inputs in pointing to actual and remembered targets in Parkinson’s disease. Neuroscience. 2001;104(4):1027–41. doi: 10.1016/s0306-4522(01)00099-9.

- 26. Jebsen RH, Taylor N, Trieschmann RB, Trotter MJ, Howard LA. An objective and standardized test of hand function. Archives of Physical Medicine and Rehabilitation. 1969;50(6):311–319.

- 27. Wolf S, Thompson P, Morris D, Rose D, Winstein C, Taub E, Giuliani C, Pearson S. The EXCITE Trial: Attributes of the Wolf Motor Function Test in Patients with Sub acute Stroke. Neurorehabilitation & Neural Repair. 2005;19:194–205. doi: 10.1177/1545968305276663.

- 28. Rohrer B, Fasoli S, Krebs HI, Hughes R, Volpe B, Frontera WR, Stein J, Hogan N. Movement smoothness changes during stroke recovery. Journal of Neuroscience. 2002;22(18):8297–8304. doi: 10.1523/JNEUROSCI.22-18-08297.2002.

- 29. Deutsch JE, Merians AS, Adamovich SV, Poizner HP, Burdea GC. Development and application of virtual reality technology to improve hand use and gait of individuals post-stroke. Restorative Neurology and Neuroscience. 2004;22:341–386.

- 30. Holden MK. NeuroRehabilitation using ‘Learning by Imitation’ in Virtual Environments. Proceedings of HCI International. London: Lawrence Erlbaum Assoc; 2001.

- 31. You SH, Jang SH, Kim YH, Hallett M, Ahn SH, Kwon YH, Kim JH, Lee MY. Virtual reality-induced cortical reorganization and associated locomotor recovery in chronic stroke: an experimenter-blind randomized study. Stroke. 2005;36(6):1166–71. doi: 10.1161/01.STR.0000162715.43417.91.

- 32. You SH, Jang SH, Kim YH, Kwon YH, Barrow I, Hallett M. Cortical reorganization induced by virtual reality therapy in a child with hemiparetic cerebral palsy. Developmental Medicine and Child Neurology. 2005;47(9):628–35.

- 33. Hamilton AF, Grafton ST. Goal representation in human anterior intraparietal sulcus. J Neurosci. 2006;26(4):1133–1137. doi: 10.1523/JNEUROSCI.4551-05.2006.

- 34. Cross ES, Hamilton AF, Grafton ST. Building a motor simulation de novo: Observation of dance by dancers. Neuroimage. 2006;31(3):1257–1267. doi: 10.1016/j.neuroimage.2006.01.033.

- 35. Tunik E, Schmitt P, Grafton ST. BOLD coherence reveals segregated functional neural interactions when adapting to distinct torque perturbations. Journal of Neurophysiology. 2007;97(3):2107–2120. doi: 10.1152/jn.00405.2006.

- 36. Dinstein I, Hasson U, Rubin N, Heeger DJ. Brain areas selective for both observed and executed movements. Journal of Neurophysiology. 2007;98(3):1415–1427. doi: 10.1152/jn.00238.2007.

- 37. Rizzolatti G, Craighero L. The mirror neuron system. In: Hyman S, editor. Annual Review of Neuroscience. Vol. 27. 2004. pp. 169–192.

- 38. Iacoboni M, Molnar-Szakacs I, Gallese V, Buccino G, Mazziotta JC, Rizzolatti G. Grasping the intentions of others with one’s own mirror neuron system. PLoS Biol. 2005;3(3):e79. doi: 10.1371/journal.pbio.0030079.

- 39. Fadiga L, Fogassi L, Pavesi G, Rizzolatti G. Motor facilitation during action observation: a magnetic stimulation study. Journal of Neurophysiology. 1995;73(6):2608–2611. doi: 10.1152/jn.1995.73.6.2608.

- 40. Gangitano M, Mottaghy FM, Pascual-Leone A. Phase-specific modulation of cortical motor output during movement observation. Neurology Report. 2001;12(7):1489–1499. doi: 10.1097/00001756-200105250-00038.

- 41. Buccino G, Binkofski F, Riggio L. The mirror neuron system and action recognition. Brain & Language. 2004;89(2):370–376. doi: 10.1016/S0093-934X(03)00356-0.

- 42. Iacoboni M, Mazziotta JC. Mirror neuron system: basic findings and clinical applications. Annals of Neurology. 2007;62(3):213–218. doi: 10.1002/ana.21198.

- 43. Altschuler EL, Wisdom SB, Stone L, Foster C, Galasko D, Llewellyn DM, Ramachandran VS. Rehabilitation of hemiparesis after stroke with a mirror. Lancet. 1999;353:2035–2036. doi: 10.1016/s0140-6736(99)00920-4.

- 44. Welsh RC. simpleROIbuilder. 2008. http://wwwpersonal.umich.edu/~rcwelsh/SimpleROIBuilder

- 45. ‘Volumes’ toolbox extension. http://sourceforge.net/projects/spmtools

- 46. Olsen T. Arm and leg paresis as outcome predictors in stroke rehabilitation. Stroke. 1990;21:247–251. doi: 10.1161/01.str.21.2.247.

- 47. Hiraoka K. Rehabilitation effort to improve upper extremity function in post stroke patients: A meta-analysis. Journal of Physical Therapy Science. 2001;13:5–9.

- 48. Muellbacher W, Richards C, Ziemann U, Wittenberg G, Weltz D, Boroojerdi B, Cohen L, Hallett M. Improving hand function in chronic stroke. Archives of Neurology. 2002;59(8):1278–1282. doi: 10.1001/archneur.59.8.1278.

- 49. Perani D, Fazio F, Borghese NA. Different brain correlates for watching real and virtual hand actions. NeuroImage. 2001;14:749–758. doi: 10.1006/nimg.2001.0872.