Abstract

Humans are capable of moving about the world in complex ways. Every time we move, our self-motion must be detected and interpreted by the central nervous system in order to make appropriate sequential movements and informed decisions. The vestibular labyrinth consists of two unique sensory organs, the semi-circular canals and the otoliths, which are specialized to detect rotation and translation of the head, respectively. While thresholds for pure rotational and translational self-motion are well understood, surprisingly little research has investigated the relative role of each organ in determining thresholds for more complex motion. Eccentric (off-center) rotations, during which the participant faces away from the center of rotation, stimulate both organs and are thus well suited for investigating the integration of rotational and translational sensory information. Ten participants completed a psychophysical direction discrimination task for pure head-centered rotations, translations, and eccentric rotations with 5 different radii. Discrimination thresholds for eccentric rotations decreased with increasing radius, indicating that the additional tangential accelerations (which increase with radius) increased sensitivity. Two competing models were used to predict the eccentric thresholds from the pure rotation and translation thresholds: one assuming that information from the two organs is integrated in an optimal fashion, and another assuming that motion discrimination relies solely on the sensor that is most strongly stimulated. Our findings clearly show that information from the two organs is integrated. However, the measured thresholds for 3 of the 5 eccentric rotations are even lower than predicted by the optimal integration model, suggesting that additional non-vestibular sources of information may be involved.

Introduction

Reliable and robust self-motion perception is essential for everyday life, since we are constantly moving about in the world. Understanding exactly how the central nervous system creates robust percepts from the available sensory information is crucial for assessing and treating neural disorders, as well as for designing motion simulators or vestibular prostheses that can accurately induce desired self-motion percepts. One of the fundamental methodologies for investigating self-motion perception in humans is the measurement of perceptual thresholds. Thresholds have been studied intensively for translational motions [1–4] as well as rotational motions [5–8], and a good understanding of how thresholds vary with the presented motion profile has been achieved. However, thresholds for more complex motions that include both translational and rotational components have received less attention [9]. The goal of the present work is to systematically investigate how thresholds change as the ratio of translational to rotational cue intensity varies. Specifically, we try to distinguish between two alternative scenarios: either canal and otolith cues are integrated to form a percept, or the stronger cue dominates perception.

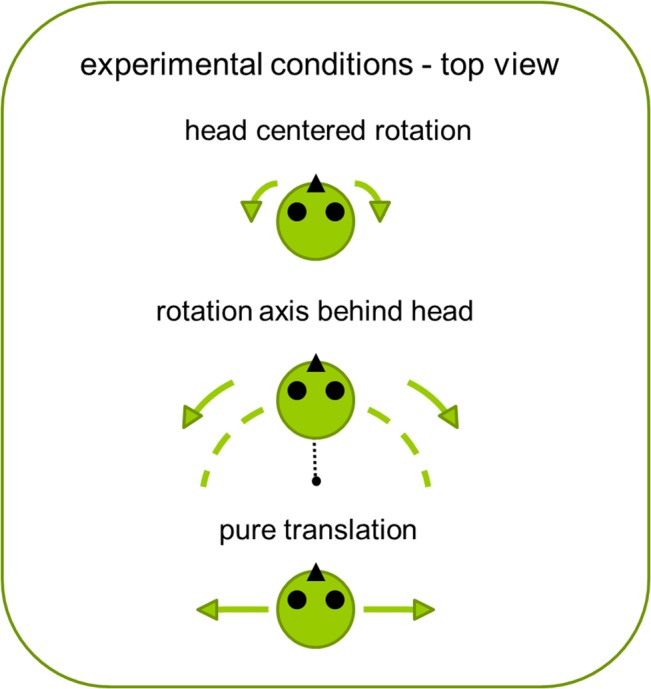

In this study, we used a direction discrimination task in which participants had to judge whether a motion was leftward or rightward, and thresholds were measured for translational motions, head-centered rotations, and eccentric rotations (Fig 1). Eccentric rotations (also known as off-center rotations) are motions along a circular trajectory whose center of rotation is located behind the participant, so that participants face away from the center of rotation. Such motions include both translational and rotational cues and are therefore well suited for studying potential cue integration or dominance.

Fig 1. Conditions.

Description of the experimental conditions as they were presented to the participants.

It has been shown that direction discrimination of passive whole-body motions in the dark is dominated by the vestibular system [4,10]. The vestibular labyrinth consists of two unique sensory organs: the semi-circular canals (SCC) and the otolith organs (OO), which are specialized to detect rotation and translation of the head, respectively. When the rotation is eccentric (not head-centered), a tangential acceleration arises that can be detected by the otolith organs, in addition to the rotational signal detected by the semi-circular canals. The direction of this tangential acceleration depends on the direction of the eccentric rotation, so it provides additional information that can be used to solve the direction discrimination task. Note that the motion stimulus also contains a centrifugal acceleration, which points away from the center of rotation. However, this centrifugal acceleration does not depend on the direction of the motion and can therefore be neglected, since it carries no information for a direction discrimination task.

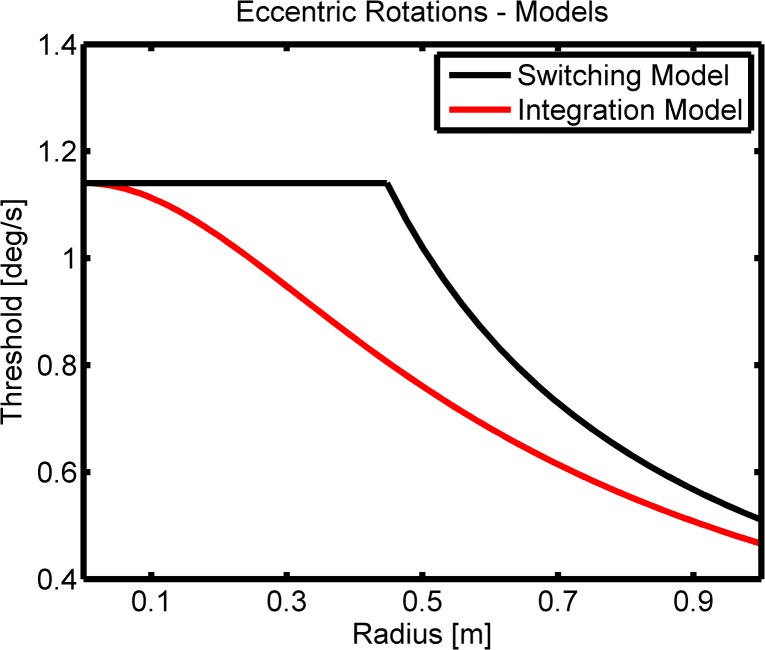

The strength of the tangential acceleration t for a given rotational acceleration α depends on the radius r of the rotation: t = r ⋅ α (with α in rad/s²). Therefore, it should be possible to observe a transition from SCC-dominated to OO-dominated direction discrimination by increasing the radius of the eccentric rotation and thereby the tangential acceleration. For intermediate radii there is a regime in which the SCC and OO cues are similar in perceived intensity. This range is the most interesting for the purpose of the experiment, because it can reveal whether the cues from both organs are integrated or whether discrimination performance is dominated by the stronger cue. Fig 2 illustrates the two alternatives, which we refer to as the switching model and the integration model. In the switching model, the threshold stays constant up to the radius at which the tangential acceleration becomes larger than the threshold for discriminating translational motions. Beyond this "switching radius", the rotational threshold decreases continuously with increasing radius, since less rotational acceleration is needed to produce the tangential acceleration required for OO-dominated detection. In the integration model, the rotational and translational cues are optimally combined according to each cue's relative reliability. The thresholds for discriminating the direction of eccentric rotations therefore begin to decrease already at small radii, and performance at intermediate radii is better than with the switching model. For large radii the thresholds predicted by the two models converge again, because the translational cue is so strong that the additional rotational cue no longer improves discrimination. Measuring thresholds for eccentric rotations with small and intermediate radii will therefore allow us to discriminate between these two alternatives. Detailed model descriptions are provided in the Methods section.

Fig 2. Models.

Description of the two alternative models, which we refer to as the switching model and the integration model. For both models, less rotational velocity is needed with increasing radius to discriminate the motion direction. However, for intermediate radii the models differ clearly in their threshold behavior, with the integration model being more sensitive.

To our knowledge, how perceptual thresholds for direction discrimination change during eccentric rotations, and whether and how cues from the SCC and the OO are integrated, have not been investigated previously. Eccentric rotations have been studied in the context of centrifugation [11,12]. However, in these experiments the participant is often aligned with the direction of motion and there are prolonged periods of constant centrifugation, whereas our experiment investigates more natural, transient eccentric rotations of one second duration. Vestibulo-ocular reflexes (VORs) have also been studied during eccentric rotations, and both rotational and translational cues were found to be integrated and to contribute to the resulting VORs [13–15].

MacNeilage et al. [9] investigated canal-otolith interaction for detection thresholds during curved-path motion and found that translation detection thresholds became significantly worse with increasing concurrent angular motion. Note that the curved-path motion used in their study was not an eccentric rotation, although it also stimulated the SCC and OO simultaneously. Furthermore, the task was different: participants did not make a judgment about the whole motion percept, but were asked to judge only the translational motion while concurrent rotational motion was present. The authors suggest that “conscious perception might not have independent access to separate estimates of linear and angular movement parameters during curved-path motion. Estimates of linear (and perhaps angular) components might instead rely on integrated information from canals and otoliths” [9]. The purpose of the present paper is to assess whether canal and otolith cues are integrated or, alternatively, drive self-motion perception based on their relative dominance.

Another study, on the ability to reproduce self-rotations, found that in off-center trials (eccentric motions) the additional information from the OO seemed to interfere with the reproduction of movement duration [16]. In this instance, additional information from the OO hindered rather than helped the task. Overall, these examples show that canal-otolith interaction is not trivial and suggest that there might be no independent perceptual access to either the SCC or the OO signals; instead, the resulting percept might rely on integrated information from both organs.

Canal-otolith integration is also involved in another crucial function of the vestibular system: distinguishing gravitational from inertial accelerations. Otolith signals alone are ambiguous, since they cannot distinguish tilt relative to gravity from linear translation; they are therefore integrated with semi-circular canal signals and compared to internal representations of gravity [17,18]. Consequently, models describing eye movements or perception have to take this interaction into account as well [19,20]. Both perception and action are determined by sensory signals from the vestibular system; however, differences in the response dynamics of perception and action have been reported and might reflect additional involvement of central processing [21–26]. It is thus necessary to study specifically how information is integrated in different scenarios in order to better understand the neural mechanisms underlying perceived self-motion. Note that both the switching and the integration models assume independent access to the tangential component of the gravito-inertial force vector. The implications of this assumption are examined in more detail in the Discussion.

Here we measured how thresholds for eccentric rotations change with increasing radius in order to distinguish between two possible scenarios: either the information from both organs is integrated during direction discrimination (“integration model”), or the decision is based on the stronger of the two signals (“switching model”). To this end, we first measured thresholds for translations, for rotations, and for five eccentric rotations with increasing radii, in order to observe the transition from SCC-dominated to OO-dominated detection. Next, the thresholds obtained in the translation and rotation conditions were used to predict the eccentric rotation thresholds, and these predictions were compared with the observations.

Methods

Participants

Ten participants (5 female) took part in the study. They were 21–29 years old and reported no vestibular problems. The participants were paid a standard fee and signed a consent form prior to the experiment. They did not receive any feedback about their performance during the study.

Ethics Statement

The experiment was conducted in accordance with the requirements of the Helsinki Declaration and all procedures were reviewed and approved by the ethics committee of the Eberhard-Karls-Universität Tübingen.

Motion Stimuli

Participants were tested in 7 conditions: a lateral translational motion, a head-centered yaw rotation, and 5 eccentric rotations with different radii. The center of rotation of the eccentric rotations was located behind the participant, and the 5 radii were 0.1, 0.2, 0.3, 0.5 and 0.8 m. The motion profiles had a duration of T = 1 s (1 Hz) and were sinusoidal accelerations with varying peak amplitudes $a_{max}$:

$$a(t) = a_{max} \sin\left(\frac{2\pi t}{T}\right), \qquad 0 \le t \le T = 1\,\mathrm{s}.$$

The sinusoidal acceleration profile is also known as a raised cosine bell profile (referring to its shape in the velocity domain). For this type of stimulus, peak acceleration equals peak velocity times π (for T = 1 s), so one can easily convert between acceleration and velocity units. This is useful because rotational thresholds are usually reported in terms of velocity, whereas we are interested in the relation between tangential and rotational acceleration and therefore prefer acceleration units for describing rotations. Short 1 Hz motions were chosen to avoid the translational components being interpreted as tilt [27] and to prevent velocity storage mechanisms from influencing the perception of rotation [28]. Based on threshold knowledge from previous experiments [1,5] and preliminary testing data, the radii were chosen such that the transition from SCC-dominated to OO-dominated detection could be readily observed.
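As a quick check of the stated conversion, the following Python sketch samples the sinusoidal acceleration profile, integrates it numerically to velocity, and compares the peak velocity with the closed-form value $a_{max} T / \pi$, which reduces to $a_{max}/\pi$ for T = 1 s. The function names are illustrative and not taken from the original study.

```python
import math


def raised_cosine_profile(a_max, duration=1.0, n_steps=1000):
    """Sample a(t) = a_max * sin(2*pi*t / T) on [0, T] and integrate it to velocity.

    Velocity is obtained with the trapezoidal rule, i.e. it is a numerical
    approximation of v(t) = a_max * T / (2*pi) * (1 - cos(2*pi*t / T)).
    """
    dt = duration / n_steps
    times = [i * dt for i in range(n_steps + 1)]
    acc = [a_max * math.sin(2.0 * math.pi * t / duration) for t in times]
    vel = [0.0]
    for i in range(n_steps):
        vel.append(vel[-1] + 0.5 * (acc[i] + acc[i + 1]) * dt)
    return times, acc, vel


def peak_velocity(a_max, duration=1.0):
    """Closed form: the velocity peak a_max * T / pi is reached at t = T / 2."""
    return a_max * duration / math.pi


# Illustration with a_max = pi and T = 1 s.
times, acc, vel = raised_cosine_profile(a_max=math.pi)
print(max(vel), peak_velocity(math.pi), vel[-1])
```

With $a_{max}$ = π and T = 1 s, the numerically integrated peak velocity is close to 1 and the velocity returns to zero at the end of the motion, as expected for a raised cosine bell.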

Stimulus intensities ranged between 0.2 and 40 deg/s² for the head-centered and the eccentric rotations and between 0.001 and 0.14 m/s² for the lateral translations. Since the eccentric motions consist of both rotational and translational components, they can also be described in terms of their tangential acceleration t instead of their rotational acceleration α. Both are related through the radius r of the motion:

$$t = r \cdot \alpha \cdot \frac{\pi}{180}$$

(the factor π/180 converts from degrees to radians). Therefore, tangential accelerations ranged between 0.0003 m/s² and 0.07 m/s² for r = 0.1 m and between 0.0028 m/s² and 0.56 m/s² for r = 0.8 m. Note that the tangential accelerations follow the same sinusoidal profile as the rotational accelerations. We report both parameterizations (rotational and translational) for the eccentric rotations in the Results in order to clarify their relationship. For the sake of simplicity, we only use the rotational parameterization for the modeling. However, the whole analysis could also be done using the translational parameterization, leading to the same conclusions.
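The conversion between the two parameterizations can be sketched in Python as follows; the function names are hypothetical. Applied to the rotational stimulus range of 0.2 to 40 deg/s², it reproduces the tangential ranges stated above for r = 0.1 m and r = 0.8 m.

```python
import math

RADII_M = (0.1, 0.2, 0.3, 0.5, 0.8)  # radii of the eccentric conditions


def tangential_acceleration(alpha_deg_s2, radius_m):
    """t = r * alpha * pi / 180: rotational (deg/s^2) -> tangential (m/s^2)."""
    return radius_m * alpha_deg_s2 * math.pi / 180.0


def rotational_acceleration(t_m_s2, radius_m):
    """Inverse mapping: tangential (m/s^2) -> rotational (deg/s^2)."""
    return t_m_s2 * 180.0 / (math.pi * radius_m)


for r in RADII_M:
    low = tangential_acceleration(0.2, r)    # weakest rotational stimulus
    high = tangential_acceleration(40.0, r)  # strongest rotational stimulus
    print(f"r = {r} m: {low:.4f} to {high:.2f} m/s^2")
```

Because the mapping is linear in α for a fixed radius, the tangential acceleration inherits the sinusoidal time course of the rotational acceleration.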

Motion stimuli were presented with the Max Planck Institute CyberMotion Simulator, which is based on a Robocoaster (KUKA Roboter GmbH, Germany); further details on its hardware and software are given in [29–32]. Since the setup in this study is similar to those used in our previous work measuring perceptual self-motion thresholds, we refer the interested reader to [1,5,33] for further information.

Experimental Procedures

A within-participants design was employed. To counterbalance possible learning effects, the presentation sequence of the conditions was varied between participants (the sequences can be found in the supplementary material [34]). Participants were seated in a chair with a 5-point harness and wore light-proof goggles. They were instructed to keep their head still, and a neck brace was additionally used to restrict head movements. Acoustic white noise was played via headphones during the movements. Participants wore long-sleeved clothing and trousers, and a fan was directed towards the participant’s face to mask possible air-movement cues during simulator motion. Participants were tested in two 2-hour sessions on two separate days. Each condition consisted of 150 direction discrimination trials and took approximately 15 minutes, with a 5-minute break between conditions to prevent fatigue. Before each condition, participants were informed about the type of motion they would experience to ensure that they understood the task: they received verbal explanations of the upcoming motion and saw the pictures shown in Fig 1. Because they knew what kind of motion to expect in each condition, there was no ambiguity in the instructions. Participants reported the direction of motion, irrespective of whether it consisted of translational or rotational cues.

A one-interval two-alternative forced-choice task was used to measure the psychometric function for direction discrimination. Participants initiated a trial with a button press and, after a one second pause, the movement began. They were moved either leftward or rightward, were instructed to indicate the direction of their motion as fast as possible via a button press, and were then moved back to the starting position with the same speed as the stimulus previously delivered. In total there was at least a two second period of standstill between consecutive motions (longer if participants did not immediately start the next trial).

Psychometric Function Estimation

A Bayesian adaptive procedure based on the method proposed by Kontsevich and Tyler [35] was used to choose stimuli [36]. After each newly acquired data point, this method fits a psychometric function to the whole data set. By simulating the response on the next trial for each possible acceleration stimulus, it calculates which stimulus would change the fit of the psychometric function the most. This stimulus is considered the most informative and is presented on the next trial. The method allows fast and accurate estimation of the psychometric function.
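In Kontsevich and Tyler's method, the "most informative" stimulus is the one that minimizes the expected entropy of the posterior distribution over the psychometric parameters (μ, σ). The Python sketch below illustrates that idea on a parameter grid. The class and method names, the grids, the numerical clipping, the posterior-mean estimate and the simulated noiseless observer are all illustrative assumptions; this is not the implementation used in [36].

```python
import math


def psi(x, mu, sigma):
    """Cumulative normal psychometric function: P(rightward | stimulus x)."""
    p = 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))
    return min(max(p, 1e-9), 1.0 - 1e-9)  # numerical guard against exact 0 or 1


def entropy(weights):
    return -sum(w * math.log(w) for w in weights if w > 0.0)


class EntropyStaircase:
    """Hypothetical grid-based adaptive procedure in the spirit of Kontsevich & Tyler."""

    def __init__(self, stimuli, mu_grid, sigma_grid):
        self.stimuli = list(stimuli)
        self.params = [(m, s) for m in mu_grid for s in sigma_grid]
        self.posterior = [1.0 / len(self.params)] * len(self.params)  # uniform prior
        # Precompute P(rightward | x, mu, sigma) for every stimulus and grid point.
        self.p_right = {x: [psi(x, m, s) for m, s in self.params] for x in self.stimuli}

    def _posterior_after(self, x, response):
        """Return (posterior given this response, predicted probability of the response)."""
        lik = self.p_right[x] if response == 1 else [1.0 - p for p in self.p_right[x]]
        unnorm = [w * l for w, l in zip(self.posterior, lik)]
        p_response = sum(unnorm)
        return [u / p_response for u in unnorm], p_response

    def next_stimulus(self):
        """Pick the stimulus that minimizes the expected posterior entropy."""
        best_x, best_h = None, float("inf")
        for x in self.stimuli:
            post_r, p_r = self._posterior_after(x, 1)
            post_l, p_l = self._posterior_after(x, 0)
            expected_h = p_r * entropy(post_r) + p_l * entropy(post_l)
            if expected_h < best_h:
                best_x, best_h = x, expected_h
        return best_x

    def update(self, x, response):
        self.posterior, _ = self._posterior_after(x, response)

    def estimate(self):
        """Posterior means of (mu, sigma)."""
        mu = sum(w * m for w, (m, _) in zip(self.posterior, self.params))
        sigma = sum(w * s for w, (_, s) in zip(self.posterior, self.params))
        return mu, sigma


# Simulated observer with an assumed bias of 0.5 and no response noise (illustration only).
staircase = EntropyStaircase(
    stimuli=[0.25 * i for i in range(-16, 17)],
    mu_grid=[0.2 * i for i in range(-10, 11)],
    sigma_grid=[0.2 * i for i in range(1, 16)],
)
for _ in range(30):
    x = staircase.next_stimulus()
    staircase.update(x, 1 if x > 0.5 else 0)
print(staircase.estimate())
```

The sketch returns posterior means for brevity; the thresholds reported in this study were obtained by maximum-likelihood fitting of the full data set, as described next.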

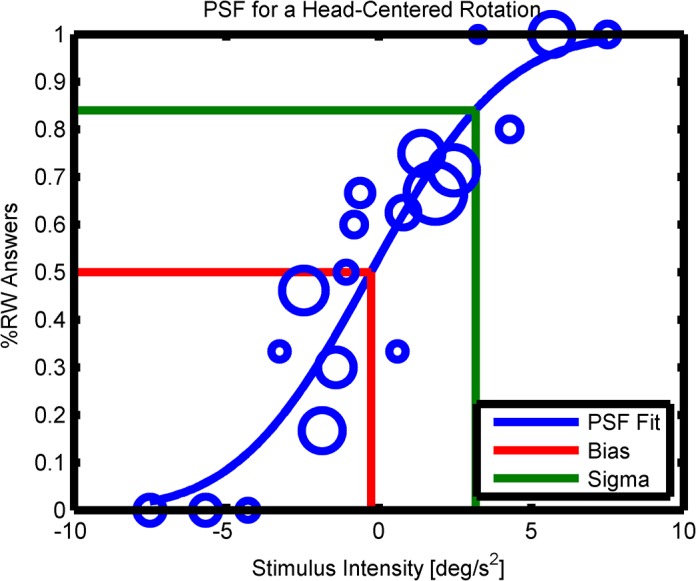

Data for a discrimination task can be analyzed by fitting a psychometric function that ranges from 0% to 100% rightward answers. This provides an estimate of the point of subjective equality between leftward and rightward motions (the bias μ) as well as an estimate of the discrimination threshold σ (Fig 3). The psychometric function was modeled as a cumulative normal distribution with the two parameters μ and σ:

$$\psi(x; \mu, \sigma) = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x - \mu}{\sigma\sqrt{2}}\right)\right].$$

The best-fitting parameters given the measurements were found by maximizing the log-likelihood of the model:

$$\log L(\mu, \sigma) = \sum_{i=1}^{150} \Big[ \mathrm{response}_i \log \psi(\mathrm{stimulus}_i; \mu, \sigma) + (1 - \mathrm{response}_i) \log\big(1 - \psi(\mathrm{stimulus}_i; \mu, \sigma)\big) \Big],$$

where the sum runs over all 150 trials, response_i equals 1 if the response was rightward and 0 otherwise, and stimulus_i is the stimulus intensity of the i-th trial.

Fig 3. PSF Fit.

A psychometric function (PSF) fit: the percentage of rightward responses is plotted against the stimulus intensity (positive intensities correspond to rightward motions). The sizes of the blue circles correspond to how often a certain stimulus was tested. The blue line shows the fit for the psychometric function based on maximum likelihood estimation.
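The maximum-likelihood fit described above can be illustrated with a minimal Python sketch. It assumes a simple exhaustive grid search over (μ, σ); the paper does not state which optimizer was used, and the function names, grids and synthetic data below are illustrative only.

```python
import math


def psi(x, mu, sigma):
    """Cumulative normal psychometric function: P(rightward | stimulus x)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def log_likelihood(mu, sigma, counts):
    """Bernoulli log-likelihood; counts maps stimulus -> (n_rightward, n_trials)."""
    eps = 1e-12  # avoids log(0) when psi saturates at 0 or 1
    ll = 0.0
    for x, (k, n) in counts.items():
        p = min(max(psi(x, mu, sigma), eps), 1.0 - eps)
        ll += k * math.log(p) + (n - k) * math.log(1.0 - p)
    return ll


def fit_psychometric(stimuli, responses, mu_grid, sigma_grid):
    """Maximum-likelihood (mu, sigma) by exhaustive search over a parameter grid.

    responses[i] is 1 for 'rightward' and 0 otherwise. Trials with the same
    stimulus are pooled, which leaves the log-likelihood unchanged.
    """
    counts = {}
    for x, r in zip(stimuli, responses):
        k, n = counts.get(x, (0, 0))
        counts[x] = (k + r, n + 1)
    _, mu_hat, sigma_hat = max(
        (log_likelihood(m, s, counts), m, s) for m in mu_grid for s in sigma_grid
    )
    return mu_hat, sigma_hat


# Synthetic illustration (assumed mu = 0.2, sigma = 1.0; 13 levels x 1000 trials).
levels = [-3.0 + 0.5 * i for i in range(13)]
stimuli, responses = [], []
for x in levels:
    k = round(1000 * psi(x, 0.2, 1.0))  # expected number of rightward answers
    stimuli += [x] * 1000
    responses += [1] * k + [0] * (1000 - k)
mu_hat, sigma_hat = fit_psychometric(
    stimuli, responses,
    mu_grid=[-1.0 + 0.05 * i for i in range(41)],
    sigma_grid=[0.5 + 0.05 * i for i in range(31)],
)
print(mu_hat, sigma_hat)
```

Because the synthetic response counts are set to their expected values, the grid search recovers parameters close to μ = 0.2 and σ = 1.0. A continuous optimizer would normally replace the grid; the grid only keeps the sketch free of external dependencies.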

Modeling

It is unclear exactly how rotational and tangential accelerations are combined to solve the direction discrimination task during eccentric rotations. The discrimination thresholds given only rotational cues (r = 0, $\sigma_{rot}$) and only translational cues (r = ∞, lateral translation, $\sigma_{trans}$) describe the sensitivity of the respective sensor. During eccentric rotations, both cues (rotational and translational) are present. Our aim is to model performance during the eccentric rotations ($\sigma_{ecc}$) based on the discrimination thresholds $\sigma_{trans}$ and $\sigma_{rot}$ and the radii of the eccentric rotations. To understand how the discrimination thresholds change with increasing radius, we propose two plausible models and test which one fits the data best. For simplicity of notation, thresholds σ within this section are assumed to be given in acceleration units (rotational accelerations in rad/s², so that $t = r \cdot \alpha$), whereas the results are reported in velocity units, as is usual in the literature. As explained above, one can easily convert between these units, since peak acceleration equals peak velocity times π.

Switching model

The switching model is based on the assumption that the discrimination task is solved using either only rotational or only translational cues, depending on which cue is stronger. Consider a head-centered rotation with threshold stimulus intensity $\sigma_{rot}$. Since $\sigma_{rot}$ is defined as the standard deviation of the underlying normal distribution, a participant (assuming zero bias) should perceive approximately 84% of such trials as rightward motions, because the standard normal cumulative distribution evaluated at one standard deviation is Φ(1) ≈ 0.84. When going from head-centered to eccentric rotations with the same rotational acceleration intensity $\sigma_{rot}$, the motion also includes the tangential acceleration t. As described above, rotational accelerations α and tangential accelerations t are coupled through the radius: $t = r \cdot \alpha$. Once the radius becomes so large that $t = \sigma_{trans}$, each cue on its own should result in 84% of trials being judged as rightward motions (using either one of the sensors). For larger radii, less rotational acceleration is needed to achieve $t = \sigma_{trans}$, and the translational cue dominates the discrimination task. The transition radius can be calculated as:

$$r_{transition} = \frac{\sigma_{trans}}{\sigma_{rot}}.$$

This model implies that up to $r_{transition}$ the discrimination threshold $\sigma_{ecc}$ for eccentric motions is the same as the threshold for head-centered rotations, $\sigma_{rot}$. Only when the rotational acceleration α produces a tangential acceleration t (due to the radius r) with $t > \sigma_{trans}$ does the translational cue contribute more to the discrimination task than the rotational cue. Therefore, $\sigma_{ecc}$ can be modeled as:

$$\sigma_{ecc}(r) = \begin{cases} \sigma_{rot}, & r \le r_{transition} \\[4pt] \dfrac{\sigma_{trans}}{r}, & r > r_{transition} \end{cases}$$

see Fig 2 (black line).
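A minimal Python sketch of the switching model, with $\sigma_{rot}$ in rad/s², $\sigma_{trans}$ in m/s² and r in m. The function names are illustrative, and the threshold values in the example are hypothetical placeholders, not the thresholds measured in this study.

```python
def transition_radius(sigma_rot, sigma_trans):
    """Radius at which a rotation at sigma_rot yields a tangential acceleration of sigma_trans."""
    return sigma_trans / sigma_rot


def switching_threshold(r, sigma_rot, sigma_trans):
    """Switching-model threshold sigma_ecc(r) in rotational units (rad/s^2)."""
    if r <= transition_radius(sigma_rot, sigma_trans):
        return sigma_rot      # canal cue dominates
    return sigma_trans / r    # otolith cue dominates


# Hypothetical thresholds for illustration only (not the measured values):
SIGMA_ROT = 0.1     # rad/s^2
SIGMA_TRANS = 0.02  # m/s^2
for r in (0.1, 0.2, 0.3, 0.5, 0.8):
    print(r, switching_threshold(r, SIGMA_ROT, SIGMA_TRANS))
```

For r > 0 the piecewise definition is equivalent to taking the smaller of the two single-cue thresholds, $\min(\sigma_{rot}, \sigma_{trans}/r)$, and it is continuous at $r_{transition}$.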

Integration model

An alternative model is one in which the discrimination task is solved by taking both rotational and translational cues into account at the same time. This should lead to better performance at intermediate radii than relying on only one of the two available cues. For small radii the rotational cue should dominate the discrimination process, whereas for large radii the strong tangential acceleration should be easier to detect than the corresponding rotational acceleration. In between, we would expect a regime in which the cues are equally strong and the benefit of using both cues is largest.

An integration framework with these properties is the maximum-likelihood integration scheme, which has been successfully applied to many tasks in which sensory information is combined to reach an optimal judgment [37–42]. Assuming independent Gaussian noise sources underlying the direction discrimination performance for translational and rotational motions, and uniform priors, the threshold of the combined maximum-likelihood estimate is given by [43]:

$$\sigma_{ecc}(r) = \frac{\sigma_{rot} \, \sigma_{trans,r}}{\sqrt{\sigma_{rot}^2 + \sigma_{trans,r}^2}},$$

where $\sigma_{trans,r} = \sigma_{trans} / r$. The conversion of $\sigma_{trans}$ is necessary to obtain comparable quantities in terms of rotational acceleration: $\sigma_{trans,r}$ expresses how much rotational acceleration is needed to produce a tangential acceleration equal to $\sigma_{trans}$, and therefore it describes the sensitivity to translational motions at radius r in units of rotational acceleration.
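The integration model can be sketched in Python in the same units ($\sigma_{rot}$ in rad/s², $\sigma_{trans}$ in m/s², r in m). The function name and the threshold values are hypothetical placeholders chosen for illustration; the loop also prints the smaller single-cue threshold for comparison.

```python
import math


def integration_threshold(r, sigma_rot, sigma_trans):
    """Maximum-likelihood integration threshold sigma_ecc(r) in rotational units (rad/s^2).

    sigma_trans_r = sigma_trans / r expresses the translational threshold as the
    rotational acceleration that produces a tangential acceleration of sigma_trans.
    """
    sigma_trans_r = sigma_trans / r
    return sigma_rot * sigma_trans_r / math.sqrt(sigma_rot ** 2 + sigma_trans_r ** 2)


# Hypothetical thresholds for illustration only (not the measured values):
SIGMA_ROT = 0.1     # rad/s^2
SIGMA_TRANS = 0.02  # m/s^2
for r in (0.1, 0.2, 0.3, 0.5, 0.8):
    best_single_cue = min(SIGMA_ROT, SIGMA_TRANS / r)  # switching-model threshold
    print(r, integration_threshold(r, SIGMA_ROT, SIGMA_TRANS), best_single_cue)
```

With these hypothetical values the transition radius is 0.2 m. There, the integration threshold equals $\sigma_{rot}/\sqrt{2}$, about 71% of the switching-model threshold, which is the largest relative advantage of integration over switching; for very small and very large radii the two models converge.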

For small and large radii, the switching and integration models produce similar results (if they use the same parameters $\sigma_{trans}$ and $\sigma_{rot}$), since either the rotational or the translational cue dominates the discrimination task. In between, however, there is a range of radii for which the models differ clearly (Fig 2).

Note that $\sigma_{ecc}$ is given in rotational units, meaning that $\sigma_{ecc}$ indicates how much rotational acceleration is needed to be at threshold level. Therefore, in the extreme cases of r = 0 (head-centered rotation) and r = ∞ (translation), we expect $\sigma_{ecc}(r = 0) = \sigma_{rot}$ and $\sigma_{ecc}(r = \infty) = 0$, because at r = ∞ an infinitesimally small rotational acceleration would already lead to an infinitely large translation. Strictly speaking, $\sigma_{ecc}$ is not defined for r = 0 and r = ∞; we discuss these cases only to clarify the asymptotic behavior of $\sigma_{ecc}$. As r → 0, $\sigma_{trans,r}$ tends to ∞, so the term $\sigma_{rot}^2$ in the denominator of $\sigma_{ecc}$ can be neglected; the remaining $\sigma_{trans,r}$ terms cancel and $\sigma_{ecc} \to \sigma_{rot}$. As r → ∞, $\sigma_{trans,r}$ tends to 0, so $\sigma_{trans,r}^2$ can be neglected in the denominator; the $\sigma_{rot}$ terms cancel and $\sigma_{ecc} \to \sigma_{trans,r} \to 0$.

Results

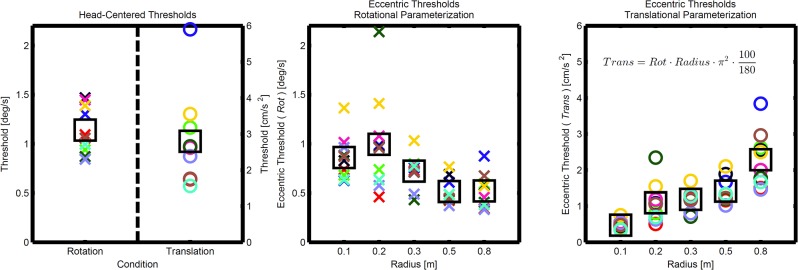

The maximum-likelihood parameters for the biases and the discrimination thresholds, estimated by fitting psychometric functions to each participant’s data for all 7 conditions, as well as the raw data showing every single response, can be found in the supplementary material [34]. Table 1 shows the mean biases for all conditions and Fig 4 shows the discrimination thresholds. Note that all means reported here are arithmetic means, since our data did not justify the use of geometric means, which are often used for vestibular-dominated self-motion perception thresholds (see the data distribution in Fig 4). Nevertheless, we also performed the analysis using geometric means, and the results did not differ substantially. The discrimination thresholds for the eccentric rotations are shown in both parameterizations, which can be converted using the formula given in Fig 4. We provide both parameterizations to illustrate their relation, but from here on we use only the rotational parameterization. All statistical analyses were performed using IBM SPSS Statistics 21 with a significance level of 0.05.

Table 1. Results for the Biases.

| Condition | Rotation | r = 0.1 m | r = 0.2 m | r = 0.3 m | r = 0.5 m | r = 0.8 m | Translation |
|---|---|---|---|---|---|---|---|
| Unit | [deg/s] | [deg/s] | [deg/s] | [deg/s] | [deg/s] | [deg/s] | [cm/s²] |
| Mean Bias | 0.03 | -0.02 | -0.04 | 0.05 | -0.01 | 0.05 | 0.4 |
| Standard Error | 0.13 | 0.04 | 0.06 | 0.05 | 0.04 | 0.04 | 0.2 |

Fig 4. Discrimination Thresholds.

The left panel shows the thresholds for the head-centered rotation and translation. Each participant’s data is shown (crosses and circles) together with the arithmetic means (squares). The middle panel shows the thresholds for the eccentric rotation conditions (varying radii) in the rotational parameterization. The right panel shows the same thresholds but in the translational parameterization together with the conversion formula. The effect of the radius on the thresholds can be seen.

One-sample t-tests showed no significant deviation of the biases from zero in any condition. A one-way repeated-measures ANOVA was conducted to test for an effect of radius on discrimination thresholds across all rotation conditions. There was a statistically significant effect of radius on discrimination thresholds, F(5, 5) = 20.47, p = .002; Wilks' Λ = 0.047, partial η² = .95.

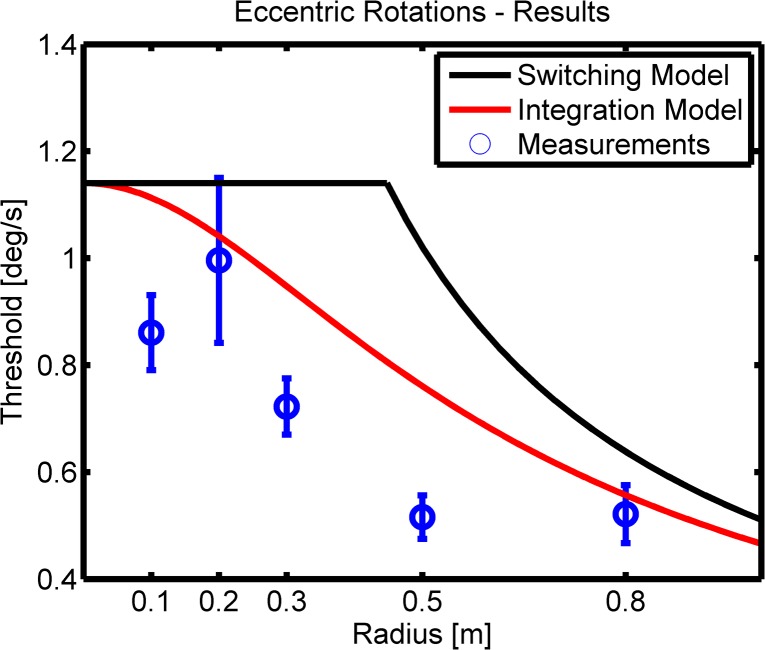

Model Predictions

The predictions of the integration and switching models for the eccentric thresholds are shown together with the measurements in Fig 5. The integration model (red) fits the measurements (blue) better than the switching model (black). However, only 2 of the 5 measured thresholds are actually well predicted by the integration model. To quantify this, one-sample t-tests were performed to test whether the measured threshold distribution at a given radius differs significantly from the integration model prediction for that radius. For r = 0.1, 0.3 and 0.5 m we found significant differences (p = .006, p = .002 and p < .001, respectively), whereas for r = 0.2 and 0.8 m there were no significant differences (p = .78 and p = .53).

Fig 5. Model Results.

Mean results for the eccentric discrimination thresholds together with standard errors are shown (blue). Model fits are shown in black for the switching model and in red for the integration model. It can be clearly seen that the integration model predicts the eccentric thresholds better, but 3 out of 5 thresholds are even significantly lower than what the optimal integration model predicts.
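The per-radius comparison against the model can be illustrated with a short Python sketch of the one-sample t statistic, where the reference value would be the integration-model prediction at that radius. The function name and the example numbers are hypothetical, and the study's analysis was carried out in SPSS. The two-sided p-value then follows from the t distribution with n − 1 degrees of freedom, for example via `2 * scipy.stats.t.sf(abs(t), df)`, and `scipy.stats.ttest_1samp` performs both steps at once.

```python
import math
import statistics


def one_sample_t(values, reference):
    """One-sample t statistic and degrees of freedom for H0: mean(values) == reference."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)  # sample SD uses n - 1
    return (statistics.mean(values) - reference) / standard_error, n - 1


# Hypothetical numbers for illustration only (not data from this study).
t_value, df = one_sample_t([2.0, 4.0, 6.0], reference=2.0)
print(t_value, df)
```

A negative t value would indicate measured thresholds below the model prediction, which is the direction observed for the three radii with significant differences.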

Discussion

We measured 10 participants' ability to discriminate leftward from rightward motions. Head-centered yaw rotation, lateral translation and 5 eccentric rotations with the rotation center located behind the participant were tested. Psychometric functions (cumulative normal) were fit to the data in order to extract each participant’s bias and discrimination sensitivity. The mean biases did not significantly differ from zero, and, as expected, the discrimination sensitivity increased with increasing radius. This increase is likely due to the fact that eccentric rotations with a given rotational acceleration exhibit tangential accelerations that increase with the radius. These tangential accelerations can be perceived in addition to the rotational accelerations, and due to this increase in sensory information it becomes easier to solve the task.

To our knowledge, this is the first study that systematically investigates the changes in thresholds with increasing radius, and it therefore represents an important step towards a better understanding of the perception of more complex motions that stimulate the SCC and the OO at the same time. Such motions occur, for example, in driving or flight simulators, whose motion cueing algorithms make use of perceptual thresholds to optimize the perceived motions. Currently, tilt thresholds based on head-centered measurements are often used for limiting simulator motions [44]. However, thresholds based on eccentric rather than head-centered rotations would often be more realistic. Our findings could be applied directly to understand how thresholds change with eccentricity and might therefore lead to better motion cueing algorithms. Future research should investigate whether these results generalize and whether similar threshold improvements can be found for other types of motion that concurrently stimulate both the SCC and the OO.

Our findings show that task performance increases (thresholds decrease) with concurrent SCC and OO stimulation. These findings are especially interesting given that previous research on concurrent stimulation found performance decreases in motion detection and reproduction tasks [9,16]. Note that those tasks differed in that participants did not make judgments about their whole motion percept, but were asked to judge individual components of the whole motion, to which they might not have independent access. Sensory integration therefore seems to be task dependent, and further research is necessary to understand why this is the case.

Our results suggest that information from the SCC and the OO is integrated during eccentric rotations, resulting in better performance in a direction discrimination task than switching between the two sensors would yield. This finding is in line with results from VOR research [13] and with many other examples of sensory integration in perceptual tasks. However, most previous perceptual research in humans has focused on visual-vestibular integration and has not examined integration within the vestibular system for direction discrimination tasks. Our research therefore adds valuable perceptual data showing how information from the organs of the vestibular system is integrated. The eccentric rotation paradigm allows systematic tuning of the ratio between translational and rotational cue intensity, and it therefore represents an interesting task that could help bridge the gap between psychophysical and physiological accounts of cue integration [45].

We showed that an integration model predicts our data better than a switching model. However, some of the measured thresholds were even lower than an optimal integration model would predict. This hints at an additional source of information about the direction of motion. Our model assumes independent access to the tangential component of the gravito-inertial force (GIF), but this is likely not the case. Otolith information about the GIF is combined with SCC information in order to resolve the GIF into its gravitational and inertial components [17]. At threshold level, however, this resolution might not be easily possible, because the contributing signals from the OO and SCC are close to the noise level. Nevertheless, our data suggest that the information from the two sensory organs is combined, and that task performance increases even beyond what one would expect from combining tangential and rotational acceleration alone. If GIF resolution were not possible, we would rather have expected the opposite effect: a worsening of task performance, because no independent access to the constituent signals would be possible.

As discussed in the Introduction, the stimulus also has a centrifugal component, which could generate a sensation of pitch in the participants. Although this potential pitch sensation would not be informative about the direction of motion, we still want to quantify its contribution. At threshold level, the highest centrifugal acceleration f occurs in the r = 0.8 m condition: $f = r \cdot \omega^2 = 7 \cdot 10^{-5}$ m/s², where ω is the rotational velocity at threshold level in radians per second. This acceleration is so small that it would only tilt the gravito-inertial vector by $\arctan(f/g) \approx 4 \cdot 10^{-4}$ deg (with g ≈ 9.81 m/s²), which should not influence task performance.
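The centrifugal estimate can be reproduced with a small Python sketch. The function names are illustrative, and g = 9.81 m/s² is an assumed value for gravitational acceleration; the angular velocity at threshold is not restated here, so the example starts from the value of f given above.

```python
import math

G = 9.81  # m/s^2, assumed value of gravitational acceleration


def centrifugal_acceleration(radius_m, omega_rad_s):
    """f = r * omega^2 for an angular velocity omega in rad/s."""
    return radius_m * omega_rad_s ** 2


def gif_tilt_deg(f_m_s2, g=G):
    """Tilt of the gravito-inertial vector caused by a horizontal acceleration f."""
    return math.degrees(math.atan2(f_m_s2, g))


# f at threshold for r = 0.8 m, as stated in the text.
print(gif_tilt_deg(7e-5))
```

For f = 7 · 10⁻⁵ m/s² the tilt is about 4 · 10⁻⁴ deg, several orders of magnitude below typical tilt perception thresholds.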
One factor not included in our model that could explain the additional performance gain is the presence of somatosensory cues that help discriminate the direction of motion. As mentioned in the introduction, discrimination of passive whole-body motion in the dark is dominated by the vestibular system, but somatosensory cues are also present and can be used for direction discrimination [4]. These explanations remain speculative: we cannot yet say why performance exceeds the optimal-integration prediction, and more research is needed to understand how thresholds for eccentric rotations behave for radii in the range where integration can be observed. In summary, cues from both vestibular organs are integrated, yielding a significant performance increase relative to the predictions of a switching model.

Acknowledgments

The authors wish to thank Suzanne Nooij, Karl Beykirch, Alessandro Nesti, Michael Kerger and Harald Teufel for scientific discussion and technical support.

Data Availability

All supplementary files are available from the FigShare database under the following URL: http://figshare.com/s/9f10f890e81111e49f5306ec4b8d1f61.

Funding Statement

This research was supported by Max Planck Society stipends and by the WCU (World Class University) program through the National Research Foundation of Korea funded by the Ministry of Education, Science and Technology (R31–10008).

References

- 1. Soyka F, Robuffo Giordano P, Beykirch K, Bülthoff HH. Predicting direction detection thresholds for arbitrary translational acceleration profiles in the horizontal plane. Exp Brain Res. 2011;209: 95–107. 10.1007/s00221-010-2523-9

- 2. Benson AJ, Spencer MB, Stott JRR. Thresholds for the detection of the direction of whole-body, linear movement in the horizontal plane. Aviat Space Environ Med. 1986;57: 1088–1096.

- 3. Heerspink HM, Berkouwer WR, Stroosma O, Van Paassen MM, Mulder M, Mulder JA. Evaluation of Vestibular Thresholds for Motion Detection in the SIMONA Research Simulator. AIAA Model Simul Technol Conf Exhib. 2005; 1–20.

- 4. Valko Y, Lewis RF, Priesol AJ, Merfeld DM. Vestibular Labyrinth Contributions to Human Whole-Body Motion Discrimination. J Neurosci. 2012;32: 13537–13542. 10.1523/JNEUROSCI.2157-12.2012

- 5. Soyka F, Giordano PR, Barnett-Cowan M, Bülthoff HH. Modeling direction discrimination thresholds for yaw rotations around an earth-vertical axis for arbitrary motion profiles. Exp Brain Res. 2012;220: 89–99. 10.1007/s00221-012-3120-x

- 6. Grabherr L, Nicoucar K, Mast FW, Merfeld DM. Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp Brain Res. 2008;186: 677–681. 10.1007/s00221-008-1350-8

- 7. Roditi RE, Crane BT. Directional asymmetries and age effects in human self-motion perception. JARO—J Assoc Res Otolaryngol. 2012;13: 381–401.

- 8. Benson AJ, Hutt ECB, Brown SF. Thresholds for the perception of whole body angular movement about a vertical axis. Aviat Space Environ Med. 1989;60: 205–213.

- 9. MacNeilage PR, Turner AH, Angelaki DE. Canal-otolith interactions and detection thresholds of linear and angular components during curved-path self-motion. J Neurophysiol. 2010;104: 765–73. 10.1152/jn.01067.2009

- 10. Walsh EG. Role of the Vestibular Apparatus in the Perception of Motion on a Parallel Swing. J Physiol. 1961;155: 506–513.

- 11. Merfeld DM, Zupan LH, Gifford CA. Neural processing of gravito-inertial cues in humans. II. Influence of the semicircular canals during eccentric rotation. J Neurophysiol. 2001;85: 1648–1660.

- 12. Mittelstaedt M, Mittelstaedt H. The influence of otoliths and somatic gravireceptors on angular velocity estimation. J Vestib Res. 1996;6: 355–366.

- 13. Seidman S, Paige G, Tomlinson R, Schmitt N. Linearity of canal-otolith interaction during eccentric rotation in humans. Exp Brain Res. 2002;147: 29–37. 10.1007/s00221-002-1214-6

- 14. Crane BT, Demer JL. A linear canal-otolith interaction model to describe the human vestibulo-ocular reflex. Biol Cybern. 1999;81: 109–118.

- 15. Merfeld DM. Modeling human vestibular responses during eccentric rotation and off vertical axis rotation. Acta Otolaryngol Suppl. 1995;520 Pt 2: 354–359.

- 16. Israël I, Crockett M, Zupan L, Merfeld D. Reproduction of ON-center and OFF-center self-rotations. Exp Brain Res. 2005;163: 540–6. 10.1007/s00221-005-2323-9

- 17. Angelaki DE, McHenry MQ, Dickman JD, Newlands SD, Hess BJ. Computation of inertial motion: neural strategies to resolve ambiguous otolith information. J Neurosci. 1999;19: 316–327.

- 18. Zupan LH, Peterka RJ, Merfeld DM. Neural processing of gravito-inertial cues in humans. I. Influence of the semicircular canals following post-rotatory tilt. J Neurophysiol. 2000;84: 2001–2015.

- 19. Bos JE, Bles W. Theoretical considerations on canal-otolith interaction and an observer model. Biol Cybern. 2002;86: 191–207. 10.1007/s00422-001-0289-7

- 20. Zupan LH, Merfeld DM, Darlot C. Using sensory weighting to model the influence of canal, otolith and visual cues on spatial orientation and eye movements. Biol Cybern. 2002;86: 209–230.

- 21. Merfeld DM, Park S, Gianna-Poulin C, Black FO, Wood S. Qualitatively different strategies are used to elicit reflexive and cognitive responses. J Vestib Res. 2004;14: 109–110.

- 22. Barnett-Cowan M, Dyde RT, Harris LR. Is an internal model of head orientation necessary for oculomotor control? Ann N Y Acad Sci. 2005;1039: 314–324.

- 23. Merfeld DM, Park S, Gianna-Poulin C, Black FO, Wood S. Vestibular perception and action employ qualitatively different mechanisms. I. Frequency response of VOR and perceptual responses during Translation and Tilt. J Neurophysiol. 2005;94: 186–98. 10.1152/jn.00904.2004

- 24. Merfeld DM, Park S, Gianna-Poulin C, Black FO, Wood S. Vestibular perception and action employ qualitatively different mechanisms. II. VOR and perceptual responses during combined Tilt&Translation. J Neurophysiol. 2005;94: 199–205.

- 25. Bertolini G, Ramat S, Laurens J, Bockisch CJ, Marti S, Straumann D, et al. Velocity storage contribution to vestibular self-motion perception in healthy human subjects. J Neurophysiol. 2011;105: 209–223. 10.1152/jn.00154.2010

- 26. Bertolini G, Ramat S, Bockisch CJ, Marti S, Straumann D, Palla A. Is vestibular self-motion perception controlled by the velocity storage? Insights from patients with chronic degeneration of the vestibulo-cerebellum. PLoS One. 2012;7.

- 27. Merfeld DM, Zupan LH. Neural processing of gravitoinertial cues in humans. III. Modeling tilt and translation responses. J Neurophysiol. 2002;87: 819–833.

- 28. MacNeilage PR, Ganesan N, Angelaki DE. Computational approaches to spatial orientation: from transfer functions to dynamic Bayesian inference. J Neurophysiol. 2008;100: 2981–96. 10.1152/jn.90677.2008

- 29. Teufel H, Nusseck H, Beykirch K, Butler J, Kerger M, Bülthoff HH. MPI motion simulator: Development and analysis of a novel motion simulator. AIAA Model Simul Technol Conf Exhib. 2007; 1–11. Available: http://arc.aiaa.org/doi/pdf/10.2514/6.2007-6476

- 30. Barnett-Cowan M, Meilinger T, Vidal M, Teufel H, Bülthoff HH. MPI CyberMotion Simulator: Implementation of a Novel Motion Simulator to Investigate Multisensory Path Integration in Three Dimensions. J Vis Exp. 2012;63: 1–6.

- 31. Robuffo Giordano P, Masone C, Tesch J, Breidt M, Pollini L, Bülthoff HH. A novel framework for closed-loop robotic motion simulation—Part II: Motion cueing design and experimental validation. Proceedings—IEEE International Conference on Robotics and Automation. 2010. pp. 3896–3903.

- 32. Robuffo Giordano P, Masone C, Tesch J, Breidt M, Pollini L, Bülthoff HH. A novel framework for closed-loop robotic motion simulation—Part I: Inverse kinematics design. Proceedings—IEEE International Conference on Robotics and Automation. 2010. pp. 3876–3883.

- 33. Soyka F, Bülthoff HH, Barnett-Cowan M. Temporal processing of self-motion: Modeling reaction times for rotations and translations. Exp Brain Res. 2013;228: 51–62. 10.1007/s00221-013-3536-y

- 34. Soyka F. Supplementary Material [Internet]. 2015. Available: http://figshare.com/s/9f10f890e81111e49f5306ec4b8d1f61

- 35. Kontsevich LL, Tyler CW. Bayesian adaptive estimation of psychometric slope and threshold. Vision Res. 1999;39: 2729–2737.

- 36. Tanner T. Generalized adaptive procedure for psychometric measurement. Perception. 2008;37: 93.

- 37. Ernst MO, Bülthoff HH. Merging the senses into a robust percept. Trends Cogn Sci. 2004;8: 162–169.

- 38. Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29: 15601–12. 10.1523/JNEUROSCI.2574-09.2009

- 39. Hillis JM, Ernst MO, Banks MS, Landy MS. Combining sensory information: mandatory fusion within, but not between, senses. Science. 2002;298: 1627–1630.

- 40. Butler JS, Smith ST, Campos JL, Bülthoff HH. Bayesian integration of visual and vestibular signals for heading. J Vis. 2010;10: 23.

- 41. Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nature. 2004;427: 244–247.

- 42. Van Beers RJ, Sittig AC, Gon JJ. Integration of proprioceptive and visual position-information: An experimentally supported model. J Neurophysiol. 1999;81: 1355–1364.

- 43. Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415: 429–433. Available: http://www.nature.com/nature/journal/v415/n6870/abs/415429a.html

- 44. Nesti A, Masone C, Barnett-Cowan M, Giordano PR, Bülthoff HH, Pretto P. Roll rate thresholds and perceived realism in driving simulation. Driv Simul Conf. 2012.

- 45. Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci. 2013;14: 429–42. 10.1038/nrn3503