Abstract

The superior temporal sulcus (STS) is implicated in a variety of social processes, ranging from language perception to simulating the mental processes of others (theory of mind). In a new study, Deen and colleagues use fMRI to show a regular anterior-to-posterior organization in the STS for different social tasks.

In the human brain, the superior temporal sulcus (STS) is second in size only to the central sulcus. Although the basic organization of the central sulcus has long been known, there is no generally accepted scheme for the organization of the STS. The fMRI study by Deen and colleagues [1] represents a step forward in understanding this fascinating chunk of the brain.

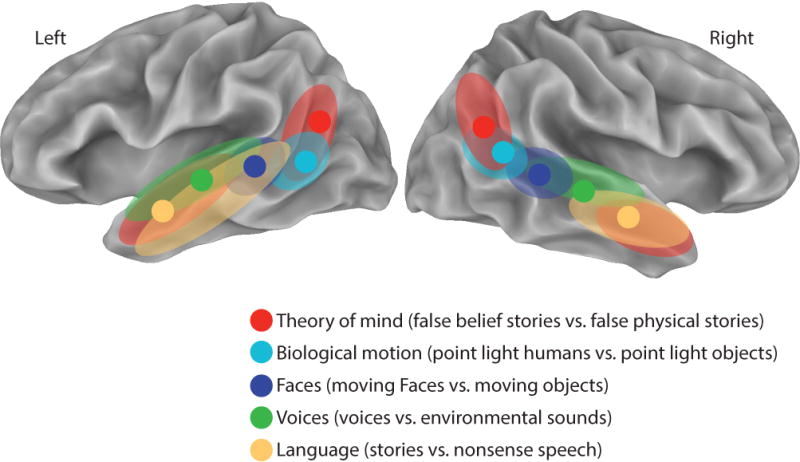

The first cognitive function ascribed to the STS was language comprehension. What we now term Wernicke’s area consists of a posterior section of the superior temporal gyrus and middle temporal gyrus, and by extension the cortex between them in the posterior STS. The explosion of BOLD fMRI studies has since linked many other cognitive functions, especially those related to social cognition and perception, to the STS. To search for functional specialization within the STS, Deen and colleagues used a traditional block-design fMRI approach: the response to each condition was measured relative to a fixation baseline, and the responses to selected conditions were compared to create contrasts. For the theory of mind contrast, the response to stories about beliefs was compared with the response to stories about physical properties. The remaining contrasts were point-light displays of humans vs. geometric shapes (biological motion), moving faces vs. objects (faces), voices vs. environmental sounds (voices), and stories vs. nonsense speech (language).
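To make the contrast logic concrete, here is a minimal Python sketch of how a block-design contrast can be estimated with an ordinary least-squares general linear model. Everything in it is an illustrative assumption rather than Deen et al.'s pipeline: the single-gamma response function, the hypothetical helpers `gamma_hrf`, `block_regressor` and `contrast_t`, the block timings, and the simulated voxel time series. Each condition gets a boxcar regressor convolved with the response function, fixation is the implicit baseline absorbed by the constant column, and a theory of mind style contrast is the vector [1, -1, 0], i.e. the belief estimate minus the physical-story estimate.

```python
import math

import numpy as np


def gamma_hrf(tr, duration=30.0, shape=6.0, scale=1.0):
    """Single-gamma haemodynamic response sampled every `tr` seconds.

    Assumed, illustrative parameters (peak near 5 s); not the study's model.
    """
    t = np.arange(0.0, duration, tr)
    h = t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale**shape)
    return h / h.sum()  # unit area, so a long block plateaus near 1


def block_regressor(onsets, block_len, n_vols, tr):
    """Boxcar for one condition (onsets in seconds), convolved with the HRF."""
    box = np.zeros(n_vols)
    n_block = int(round(block_len / tr))
    for onset in onsets:
        start = int(round(onset / tr))
        box[start:start + n_block] = 1.0
    return np.convolve(box, gamma_hrf(tr))[:n_vols]


def contrast_t(y, X, c):
    """OLS fit of y on design X; returns (contrast estimate c @ beta, t statistic)."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - rank)
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    effect = c @ beta
    return effect, effect / np.sqrt(var_c)


# Hypothetical run: 120 volumes at TR = 2 s, 12 s story blocks separated by fixation.
tr, n_vols = 2.0, 120
belief = block_regressor([20, 100, 180], 12, n_vols, tr)
physical = block_regressor([60, 140, 220], 12, n_vols, tr)
X = np.column_stack([belief, physical, np.ones(n_vols)])  # constant = fixation baseline

# Simulated voxel: belief response 2, physical response 1, plus small noise.
rng = np.random.default_rng(0)
y = 2.0 * belief + 1.0 * physical + 100.0 + rng.normal(0.0, 0.1, n_vols)

effect, t = contrast_t(y, X, np.array([1.0, -1.0, 0.0]))  # belief > physical
```

Real analysis packages add drift regressors, autocorrelation modeling and more realistic response functions; the sketch keeps only the core idea that a contrast is a weighted difference of fitted condition estimates, and a response "relative to fixation" is simply a contrast such as [1, 0, 0].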

Figure 1 summarizes two different analyses in the paper. The first analysis examined only the peak response for each contrast. The most posterior peak was for the theory of mind contrast, located where the STS ascends sharply into the inferior parietal lobule and terminates in the temporo-parietal junction (TPJ). Peak responses to biological motion were more inferior, where the STS begins its ascent. Peak responses to moving faces, voices, and language were progressively more anterior along the sulcus, with the language peak almost 60 mm anterior to the theory of mind peak.
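The peak analysis can be sketched in a few lines, assuming each subject's contrast map is a NumPy array in a common stereotaxic space with a 4 × 4 voxel-to-mm affine and RAS+ axes, so that y increases toward anterior. The functions `peak_mm`, `group_peak`, `posterior_to_anterior` and `distance_mm` are hypothetical helpers, not code from the paper, and the volume at the end is synthetic. Averaging per-subject peak coordinates mirrors the filled circles in Figure 1, which show peaks averaged across subjects.

```python
import numpy as np


def peak_mm(stat_map, mask, affine):
    """(x, y, z) in mm of the largest statistic inside a boolean mask (e.g. an STS mask)."""
    masked = np.where(mask, stat_map, -np.inf)  # voxels outside the mask can never win
    ijk = np.unravel_index(np.argmax(masked), stat_map.shape)
    return (affine @ np.array([*ijk, 1.0]))[:3]


def group_peak(stat_maps, masks, affine):
    """Mean of the per-subject peak coordinates."""
    return np.mean([peak_mm(s, m, affine) for s, m in zip(stat_maps, masks)], axis=0)


def posterior_to_anterior(peaks):
    """Order contrast names by y coordinate; assumes RAS+ (y grows anteriorly)."""
    return sorted(peaks, key=lambda name: peaks[name][1])


def distance_mm(a, b):
    """Euclidean distance in mm between two coordinates."""
    return float(np.linalg.norm(np.asarray(a, float) - np.asarray(b, float)))


# Synthetic check: a 10 x 10 x 10 volume with 2 mm isotropic voxels.
affine = np.diag([2.0, 2.0, 2.0, 1.0])
vol = np.zeros((10, 10, 10))
vol[3, 4, 5] = 5.0  # inside the mask
vol[8, 8, 8] = 9.0  # larger, but outside the mask, so ignored
mask = np.zeros(vol.shape, dtype=bool)
mask[:6] = True
peak = peak_mm(vol, mask, affine)  # x, y, z = 6, 8, 10 mm
```

The same `distance_mm` helper is what one would use to compare a new peak with a coordinate reported in an earlier study.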

Figure 1. Organization of Social Perception and Cognition within the STS.

Results of Deen et al. [1] shown on an inflated cortical surface model of the left and right hemispheres of the N27 atlas brain. Filled circles show the location of the peak activation, averaged across subjects, for each contrast. Colored regions show the extent of the activation for each contrast (some contrasts have multiple colored regions). Created from Table 1 of Deen et al. and their description of the activations.

The stimuli used in the study were largely taken from previously published fMRI experiments, so it is no surprise that the reported activation peaks are similar to those found in previous experiments. For instance, the TPJ activity for theory of mind in the Deen study was within 6 mm of that previously reported [2]. This consistency means that the posterior-to-anterior organization of peak coordinates can be compared with that found in a meta-analysis of fMRI papers reporting STS activation [3]. Reassuringly, the results of the two approaches are in good agreement. For instance, both report posterior and anterior theory of mind foci, with an intervening middle STS region responsive to biological motion.

A second analysis conducted by Deen et al. could not have been generated with a peak-coordinate meta-analysis, because it examined the entire STS activation for each contrast instead of a single peak coordinate. The spatial extent of the STS activation map for each contrast was calculated, followed by the overlap across contrasts. Methodologically, this analysis was properly performed within individual subjects: using group-average data grossly overestimates the degree of overlap, as demonstrated by recent studies that used individual-subject analysis to find overlapping auditory, visual and somatosensory responses in the STS but not in nearby areas [4, 5]. The extent and overlap measures are related: if two contrasts each activate a large fraction of the STS, they will necessarily have a high overlap. Indeed, overlap was observed between the language and theory of mind contrasts, two contrasts that in the left hemisphere activated most of the length of the STS. Strong overlap was also observed between moving faces and voices, contrasts that both activated a region of mid-posterior STS.
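The extent and overlap measures, and the reason group averaging inflates overlap, can be illustrated with a small Python sketch. It assumes boolean activation maps restricted to an STS mask and uses the Dice coefficient as the overlap measure; Dice is one common choice, and Deen et al. may have quantified overlap differently. The helpers `extent`, `dice` and `forced_overlap` are hypothetical names, and the one-dimensional "STS" with four subjects is entirely synthetic, not data from [4, 5]. `forced_overlap` makes the "by necessity" argument explicit: since both maps lie inside the STS, |A ∩ B| ≥ |A| + |B| − |STS|.

```python
import numpy as np


def extent(active, sts_mask):
    """Fraction of STS voxels that are active for one contrast."""
    return float(active[sts_mask].mean())


def dice(a, b):
    """Dice coefficient 2|A & B| / (|A| + |B|) for two boolean maps."""
    total = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / total if total else 0.0


def forced_overlap(extent_a, extent_b):
    """Smallest shared fraction of the STS implied by the two extents alone."""
    return max(0.0, extent_a + extent_b - 1.0)


# Toy 1-D "STS" of 100 voxels. In each hypothetical subject, contrast A and
# contrast B occupy adjacent, non-overlapping 10-voxel patches, but the
# patches sit at a different position in each subject's anatomy.
n_vox, offsets = 100, [20, 25, 30, 35]
a_maps, b_maps = [], []
for s in offsets:
    a = np.zeros(n_vox, dtype=bool)
    a[s:s + 10] = True
    b = np.zeros(n_vox, dtype=bool)
    b[s + 10:s + 20] = True
    a_maps.append(a)
    b_maps.append(b)

# Individual-subject analysis: no subject shows any overlap.
within_subject = [dice(a, b) for a, b in zip(a_maps, b_maps)]

# Group analysis: average the maps and keep voxels active in >= half the subjects.
group_a = np.mean(a_maps, axis=0) >= 0.5
group_b = np.mean(b_maps, axis=0) >= 0.5
group_overlap = dice(group_a, group_b)  # 1/3: overlap appears only after averaging
```

In this toy case every subject has a Dice coefficient of 0, yet the averaged maps share five voxels, which is the kind of spurious overlap that within-subject analysis avoids.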

A few cautions are in order. First, the authors examine only the STS, masking out all other brain areas even though whole-brain fMRI data were collected. This is problematic because the STS is densely connected with both early sensory cortex and association areas. Because many of these other areas were almost certainly also active, understanding the pattern of overlap across contrasts for the whole brain would provide valuable additional information. Second, the approach of Deen et al. reduces complex cognitive functions such as “language” to single A vs. B contrasts. Although the authors used different contrasts to localize different social cognitive functions, it is not clear whether even a very large number of contrasts would fully elucidate the organization of the STS without both theoretical models of the cognitive processes isolated by each contrast and neurobiological models of the neural computations underlying each process.

One conceptual framework that may help guide future progress is provided by the field of multisensory integration. The authors note that a visual contrast (faces vs. objects) and an auditory contrast (voices vs. environmental sounds) both activated an overlapping focus in mid-posterior STS. However, when we speak face-to-face, we use both visual information from the talker’s face and auditory information from the talker’s voice. A theoretical model that supposes that specific visual mouth movements become linked with specific auditory speech features during language learning predicts that neurons sensitive to both complex auditory and visual features should be important for face-to-face speech perception. Correspondingly, the STS of humans and non-human primates shares an organization in which small regions of cortex that respond to unisensory visual and auditory stimuli, including faces and vocalizations, are juxtaposed with regions that respond to audiovisual stimuli [6, 7]. Evidence for a link between this anatomical organization and language processing is provided by studies showing that activity in multisensory STS is correlated with behavioral measures of multisensory speech perception in adults [8] and children [9], and that disrupting the STS with transcranial magnetic stimulation interferes with multisensory speech perception [10]. More generally, many forms of social communication, both verbal and non-verbal, are multisensory: a friendly pat on the back, a warm smile, a murmur of encouragement. One attractive possibility is that over the course of evolution and development, the multisensory anatomy of the STS has been recruited for social processes, such as theory of mind, that make use of information from multiple modalities.

Acknowledgments

The author would like to acknowledge Tony Ro for helpful discussions. Supported by NIH R01 NS065395.

References

- 1. Deen B, Koldewyn K, Kanwisher N, Saxe R. Functional organization of social perception and cognition in the superior temporal sulcus. Cereb Cortex. 2015. doi: 10.1093/cercor/bhv111.

- 2. Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19:1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

- 3. Hein G, Knight RT. Superior temporal sulcus–It’s my area: or is it? J Cogn Neurosci. 2008;20:2125–2136. doi: 10.1162/jocn.2008.20148.

- 4. Jiang F, Beauchamp MS, Fine I. Re-examining overlap between tactile and visual motion responses within hMT+ and STS. Neuroimage. 2015. doi: 10.1016/j.neuroimage.2015.06.056.

- 5. Beauchamp MS, Yasar NE, Frye RE, Ro T. Touch, sound and vision in human superior temporal sulcus. Neuroimage. 2008;41:1011–1020. doi: 10.1016/j.neuroimage.2008.03.015.

- 6. Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci. 2004;7:1190–1192. doi: 10.1038/nn1333.

- 7. Dahl CD, Logothetis NK, Kayser C. Spatial organization of multisensory responses in temporal association cortex. J Neurosci. 2009;29:11924–11932. doi: 10.1523/JNEUROSCI.3437-09.2009.

- 8. Nath AR, Beauchamp MS. A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. Neuroimage. 2012;59:781–787. doi: 10.1016/j.neuroimage.2011.07.024.

- 9. Nath AR, Fava EE, Beauchamp MS. Neural correlates of interindividual differences in children’s audiovisual speech perception. J Neurosci. 2011;31:13963–13971. doi: 10.1523/JNEUROSCI.2605-11.2011.

- 10. Beauchamp MS, Nath AR, Pasalar S. fMRI-guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. J Neurosci. 2010;30:2414–2417. doi: 10.1523/JNEUROSCI.4865-09.2010.