Abstract

Reward learning plays a central role in decision making and adaptation. Accumulating evidence suggests that the striatum contributes enormously to reward learning but that its subregions may have distinct functions. A recent article by Tricomi and Lempert (J Neurophysiol. First published 22 October 2014, doi:10.1152/jn.00086.2014.) found that ventral striatum tracks reward value, whereas dorsolateral and dorsomedial striatum track the trialwise reward probability. In this Neuro Forum we reinterpret their findings and provide additional insights.

Keywords: striatum, reward learning, model free, model based

Reward learning plays a central role in decision making and adaptation. Two major forms of reward learning that have been studied extensively over the past century are Pavlovian learning (or classical conditioning) and instrumental learning (or operant conditioning) (Dayan and Berridge 2014). In Pavlovian learning, subjects predict the value of a reward from a conditioned cue that precedes it. In instrumental learning, on the other hand, subjects have to predict which cue will be rewarded and choose among cues.

Recent research employing a computational framework has greatly clarified the processes underlying reward learning (Daw et al. 2005, 2011; Dayan and Berridge 2014). Under this framework, subjects predict the reward value of cues according to mathematical value functions. Value functions can be updated through trial-and-error experience, i.e., by reward prediction error (RPE), or by forward planning based on internal cognitive models of the environment. The former is known as model-free learning, which is habitual and slow to change. The latter is known as model-based learning, which is goal directed and flexible.

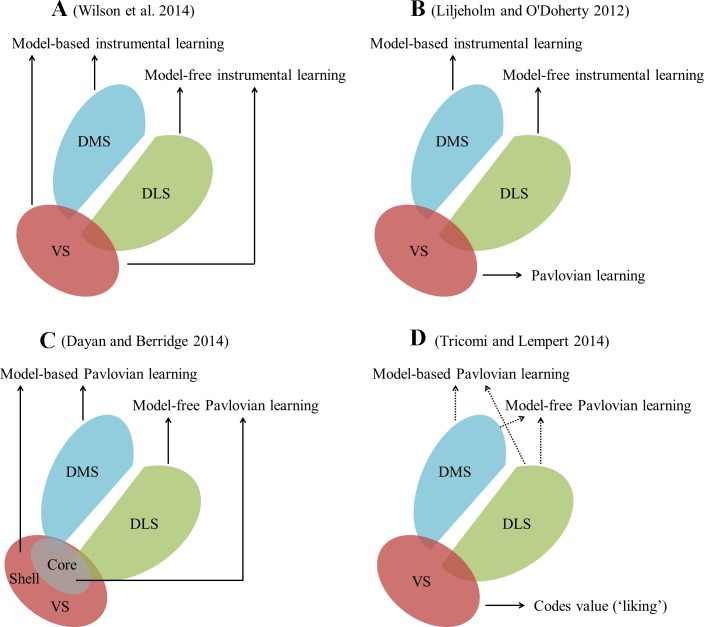

It was originally proposed in the literature on instrumental learning that model-free instrumental learning depends on the dopamine-rich striatum (Daw et al. 2005; Gläscher et al. 2010), whereas model-based instrumental learning involves the prefrontal cortex (PFC), typically the lateral PFC (Gläscher et al. 2010), the ventromedial PFC (Wunderlich et al. 2012), and the orbitofrontal cortex (Wilson et al. 2014). Surprisingly, however, recent lesion and functional magnetic resonance imaging (fMRI) studies suggest that dorsomedial striatum (DMS; for a brief anatomic introduction to the striatum, see Fig. 1) is implicated in model-based instrumental learning (Liljeholm and O'Doherty 2012; Wunderlich et al. 2012). To complicate the picture further, using a task dissociating the two types of learning, Daw et al. (2011) found that ventral striatum (VS) signal encodes both model-free and model-based instrumental learning. The available evidence has given rise to the popular view that dorsolateral striatum (DLS) is involved in model-free, DMS in model-based, and VS in both model-free and model-based instrumental learning (Wilson et al. 2014; Fig. 1A). However, Liljeholm and O'Doherty (2012) argued that rather than playing a direct role in instrumental learning per se, the VS is more involved in Pavlovian learning and that it is the latter that potentially supports instrumental learning (Fig. 1B). More recently, Dayan and Berridge (2014) brought the model-free and model-based distinction into Pavlovian learning. They suggested that DLS and the core of VS are involved in model-free Pavlovian learning, whereas DMS and the shell of VS are necessary for model-based Pavlovian learning (Fig. 1C). Thus the role of the striatum in reward learning might be more significant and complex than originally thought, and research interest in the heterogeneous functions of its subregions has been growing. In a recent article, Tricomi and Lempert (2014), employing a selective reward devaluation task, provided valuable insights into the dissociable roles of the subregions of the striatum in reward processing (Fig. 1D).

Fig. 1.

Current understanding of the heterogeneous role of the subregions of striatum in reward processing (A–C) and the findings of Tricomi and Lempert (2014) (D). Anatomically, striatum is dissected into ventral striatum (VS), dorsolateral striatum (DLS), and dorsomedial striatum (DMS). VS includes the core and shell of nucleus accumbens, whereas DLS and DMS correspond to putamen and caudate, respectively.

In this study, Tricomi and Lempert (2014) first trained subjects to associate five fractal images with one of two foods or no reward (omission). During each trial, a fractal image was presented along with three buttons below it. Subjects were explicitly informed that if they pressed the yellow button, they would have the chance to earn a food. Therefore, what subjects needed to do was to associate the fractal images with different reward outcomes (with different probabilities ranging from 3% to 88%). Since subjects could not choose between the fractal image cues, this task was in essence more Pavlovian than instrumental. After the training phase, subjects were selectively satiated with one of the two foods. Both at the beginning of the experiment and after the devaluation procedure, subjects were asked to rate the pleasantness of the two foods. The pleasantness reflects the hedonic impact of a food in the consummatory phase, i.e., subjective “liking” (Dayan and Berridge 2014). The authors found that, following the selective satiation procedure, the pleasantness rating of the devalued food decreased significantly more than that of the valued food. Therefore, the sated food was successfully devalued.

Following the devaluation procedure, subjects were tested again during fMRI scanning. By comparing fMRI blood oxygen level-dependent (BOLD) responses between the reward outcome and the devalued outcome, Tricomi and Lempert (2014) could detect which brain areas were sensitive to the loss of value (devaluation), or liking. They found that the VS showed less activation for the devalued outcome than for the reward outcome. These findings suggest that VS codes value or liking. Previous studies have generally utilized the reward devaluation task to generate RPEs or to examine the brain structures or neurochemicals that are involved in model-free and model-based instrumental learning (Daw et al. 2005; Wilson et al. 2014). Tricomi and Lempert (2014), however, employed this task from a different perspective and elegantly showed that the VS tracks reward value. This is consistent with another recent study (Miller et al. 2014), which found a similar association between activity in VS and self-reported liking during instrumental learning. Coding value may be one of the reasons why this brain area is necessary for both model-free and model-based learning, since both have to track the value of the reward outcome.

Furthermore, the authors analyzed the trialwise probability of the reward outcome (the still valued outcome, not the devalued one) and related it to changes in fMRI BOLD signal at the time of outcome. They found that BOLD signal in DLS and DMS correlated positively with the trialwise probability of reward receipt (other areas showing a significant effect included central orbitofrontal cortex and the anterior lobe of the cerebellum, among others). At first glance, it is tempting to conclude that these two areas track the probability of reward and therefore code model-based learning (Daw et al. 2005, 2011; Dayan and Berridge 2014). However, after a closer examination, we argue that this is not necessarily the case. Because model-free learning updates the expected reward function by relying fully on reinforcement history, its value function can be specified as Expected reward value = Reward probability × Reward value; that is, model-free learning tracks reward probability together with reward value but does not differentiate them. In contrast, model-based learning tracks these two variables separately. In the present study, the authors analyzed only the still valued reward outcome. Therefore, the reward value in the above formula was fixed, and the expected reward value updated by model-free learning was proportional to reward probability. In other words, the increased BOLD signal in DLS and DMS correlating with increased trialwise probability of reward receipt may reflect either model-free or model-based Pavlovian learning (or both). This interpretation is in line with previous findings that DLS codes model-free, whereas DMS processes model-based, instrumental learning (Liljeholm and O'Doherty 2012; Wunderlich et al. 2012). It suggests that the neural computation underlying Pavlovian learning may be similar to that of instrumental learning (Dayan and Berridge 2014). However, the failure to incorporate the devalued outcome, and thus the failure to separate model-free from model-based Pavlovian learning, remains a limitation of Tricomi and Lempert (2014).
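The argument that a fixed reward value makes the two accounts indistinguishable can be illustrated with a minimal simulation. The sketch below is ours and is not part of the analysis of Tricomi and Lempert (2014): the function names, the learning rate, the number of trials, and the intermediate probability of 0.5 are hypothetical choices, and the model-free learner is assumed to be a simple delta-rule learner. With reward value fixed at 1, the cached model-free value settles close to the reward probability, which is also what a model-based learner computes from separately tracked probability and value. Only after devaluation do the two diverge.

```python
import random


def model_free_value(p_reward, reward_value, alpha=0.01, n_trials=5000, seed=0):
    """Cached value learned from reinforcement history alone (delta rule).

    alpha, n_trials, and seed are illustrative assumptions.
    """
    rng = random.Random(seed)
    q = 0.0
    for _ in range(n_trials):
        outcome = reward_value if rng.random() < p_reward else 0.0
        q += alpha * (outcome - q)  # update by reward prediction error
    return q


def model_based_value(p_reward, reward_value):
    """Value computed from separately tracked probability and value."""
    return p_reward * reward_value


# Reward value fixed at 1.0 (still valued food): both accounts scale with p.
# 0.03 and 0.88 are the extremes given in the text; 0.5 is hypothetical.
for p in (0.03, 0.5, 0.88):
    cached = model_free_value(p, reward_value=1.0)
    computed = model_based_value(p, reward_value=1.0)
    print(f"p={p:.2f}  model-free={cached:.2f}  model-based={computed:.2f}")

# After devaluation (value set to 0 here for illustration) the model-based
# value changes immediately, whereas the cached value learned earlier does not.
cached_after = model_free_value(0.88, reward_value=1.0)
computed_after = model_based_value(0.88, reward_value=0.0)
print(f"after devaluation: model-free={cached_after:.2f}  model-based={computed_after:.2f}")
```

Under these assumptions, a regressor built from reward probability alone would correlate with both quantities as long as only the still valued outcome is analyzed.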

As their third finding, Tricomi and Lempert (2014) reported that DLS and DMS, but not VS, were also more activated following the reward cue than the reward omission cue in the anticipation phase before outcomes were received. To confirm this result and provide stronger evidence, the authors additionally fitted a computational model and performed a model-based fMRI analysis (O'Doherty et al. 2007). The computational model used was a simple model-free Rescorla-Wagner (RW) model, which assumes that subjects rely exclusively on RPE to update the expected value function. In the RW model, if, following a cue on trial t, value $Q_t$ is expected and reward $r_t$ is finally received, then $\mathrm{RPE}_t$ is calculated as

$$\mathrm{RPE}_t = r_t - Q_t \tag{1}$$

The expected value of the cue in a forthcoming trial t + 1 is updated according to the following formula:

$$Q_{t+1} = Q_t + \alpha \cdot \mathrm{RPE}_t \tag{2}$$

where α is the learning rate, which reflects the efficiency of subjects in using RPE to update the expected value function. In model-based fMRI, briefly speaking, a computational model is first fit to observed behavior, and the trialwise predictions of the best-fitting model are then regressed against the fMRI BOLD signal changes over time. In this way, statistical correlations between the cognitive process and BOLD signal change can be detected. This allows a more precise understanding of the neural computation underlying the cognitive process, which goes beyond the mere comparison of “activation” or “response” of brain areas in traditional neuroimaging studies (O'Doherty et al. 2007). Tricomi and Lempert (2014) did not analyze the behavioral data, so the learning rate could not be estimated from behavior. Instead, the fMRI data of the first five subjects were modeled iteratively using learning rates ranging from 0.1 to 0.7, and the best-fitting value was chosen. Using model-based fMRI, the authors found that only DLS showed a significant effect of expected value. However, none of the subregions of the striatum showed a significant effect of RPE (the only region showing this effect was the left inferior frontal gyrus).
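For readers less familiar with the RW model, the update can be sketched in a few lines. The code below assumes the standard form of the model, in which the prediction error is the received reward minus the expected value and the expected value is then moved toward the reward by a fraction α of that error. It is only an illustration: the function name, the outcome sequence, and the step of 0.1 between candidate learning rates are our assumptions, and the sketch does not reproduce the fMRI fitting procedure of Tricomi and Lempert (2014).

```python
def rescorla_wagner(rewards, alpha, q0=0.0):
    """Return trialwise expected values, RPEs, and the final value for one cue.

    rewards: sequence of received rewards r_t
    alpha:   learning rate
    q0:      initial expected value (assumed to be 0 here)
    """
    q = q0
    expected_values, rpes = [], []
    for r in rewards:
        rpe = r - q               # prediction error on trial t
        expected_values.append(q)
        rpes.append(rpe)
        q = q + alpha * rpe       # expected value carried to trial t + 1
    return expected_values, rpes, q


# Hypothetical outcome sequence for a single cue (1 = reward, 0 = no reward).
rewards = [1, 1, 0, 1, 0, 1, 1, 1]

# Candidate learning rates spanning 0.1 to 0.7; the step of 0.1 is an assumption.
learning_rates = [round(0.1 * k, 1) for k in range(1, 8)]

# One pair of trialwise regressors (expected value, RPE) per learning rate.
regressors = {alpha: rescorla_wagner(rewards, alpha) for alpha in learning_rates}

for alpha, (values, rpes, _) in regressors.items():
    print(alpha, [round(v, 3) for v in values])
```

In a model-based fMRI analysis, each such pair of trialwise series would serve as parametric regressors, and the learning rate whose regressors best explain the imaging data would be retained.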

The absence of a significant effect in VS for both expected value and RPE, as reported by Tricomi and Lempert (2014), is consistent with previous suggestions that this brain area might not be necessary for learning cue-reward associations (Saunders and Robinson 2012) and that it does not code RPE in Pavlovian learning (Garrison et al. 2013). As we have mentioned earlier, the frequent involvement of VS in Pavlovian and instrumental learning may be accounted for by its role in coding reward value. These findings together challenge the popular belief that VS is implicated in Pavlovian learning, whereas dorsal striatum is involved primarily in instrumental learning (DLS in model-free and DMS in model-based learning) (Liljeholm and O'Doherty 2012; see Fig. 1).

The observation that DLS is involved in coding expected value accords with the earlier finding that activity in DLS and DMS correlated with the trialwise probability of reward receipt. It was surprising that the authors did not find a significant effect of RPE in either DLS or DMS, since there is evidence that these areas might calculate RPE in Pavlovian learning (Garrison et al. 2013). One possible explanation lies in the reward coding method used by Tricomi and Lempert (2014) in the computational model. The authors chose 1 for the expected value as well as the received value of the still valued food but chose 0 for both the devalued and omitted outcomes. That is, they did not differentiate the devalued outcome from the omitted one. However, the neural responses to devalued and omitted reward outcomes are likely to differ, and mixing the two may have prevented the authors from obtaining significant findings. Another possibility relates to the use of the RW model itself. The temporal difference model, which also takes all future reward into consideration, has been reported to be more consistent with the neural data than the RW model (Garrison et al. 2013). Furthermore, algorithms incorporating both model-based and model-free learning are much closer to the reality that subjects generally utilize these two kinds of strategies in combination (Daw et al. 2011; Gläscher et al. 2010; Wunderlich et al. 2012), especially in the face of reward devaluation (Daw et al. 2005; Wilson et al. 2014). Future research should try to address the limitations of Tricomi and Lempert (2014) and deepen our understanding of the neural computation underlying Pavlovian learning.
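The consequence of coding devalued and omitted outcomes identically can be made concrete with a small sketch. The code below is illustrative only: the outcome sequences, the learning rate of 0.3, and the graded coding that assigns 0.5 to the devalued food are hypothetical and are not taken from Tricomi and Lempert (2014). Under the 1/0/0 coding described above, a cue paired with the devalued food generates exactly the same RPE series as a cue that is never rewarded, so the model cannot separate the two; under a coding that distinguishes the outcomes, the series differ.

```python
def rpe_sequence(outcomes, coding, alpha=0.3, q0=0.0):
    """Trialwise RPEs from a delta-rule update under a given reward coding.

    alpha and q0 are illustrative assumptions.
    """
    q = q0
    rpes = []
    for outcome in outcomes:
        rpe = coding[outcome] - q
        rpes.append(rpe)
        q += alpha * rpe
    return rpes


# Coding described in the text: devalued and omitted outcomes both coded as 0.
binary_coding = {"valued": 1.0, "devalued": 0.0, "omitted": 0.0}

# Hypothetical alternative that separates the devalued outcome (0.5 is arbitrary).
graded_coding = {"valued": 1.0, "devalued": 0.5, "omitted": 0.0}

# Hypothetical outcome sequences for two cues.
devalued_food_cue = ["devalued", "omitted", "devalued", "devalued"]
never_rewarded_cue = ["omitted", "omitted", "omitted", "omitted"]

same_under_binary = (
    rpe_sequence(devalued_food_cue, binary_coding)
    == rpe_sequence(never_rewarded_cue, binary_coding)
)
same_under_graded = (
    rpe_sequence(devalued_food_cue, graded_coding)
    == rpe_sequence(never_rewarded_cue, graded_coding)
)
print(same_under_binary, same_under_graded)
```

Whether a graded coding of this kind would better match the neural data is an empirical question that the sketch does not address.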

In conclusion, Tricomi and Lempert (2014), employing a reward devaluation task, confirmed that the striatum is not a single, homogeneous region with respect to reward learning (Fig. 1). In particular, VS tracks reward value but may not be associated with Pavlovian learning per se. In contrast, DLS and DMS track the trialwise probability of reward receipt and are involved in either model-free or model-based Pavlovian learning (or both). Future studies are warranted to validate these findings and to further specify the likely distinct roles of DLS and DMS in model-based vs. model-free Pavlovian learning.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

C.C., Y.O., and S.Y. conception and design of research; C.C. prepared figures; C.C. drafted manuscript; C.C., Y.O., and S.Y. edited and revised manuscript; C.C., Y.O., and S.Y. approved final version of manuscript.

ACKNOWLEDGMENTS

We are grateful to Nathaniel Daw for valuable discussion on tracking reward probability by model-based vs. model-free learning. We also thank Yukiko Ogura and two anonymous reviewers for helpful comments on the manuscript. However, we take full responsibility for the ideas discussed in this article.

REFERENCES

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans' choices and striatal prediction errors. Neuron 69: 1204–1215, 2011.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci 8: 1704–1711, 2005.

- Dayan P, Berridge KC. Model-based and model-free Pavlovian reward learning: revaluation, revision, and revelation. Cogn Affect Behav Neurosci 14: 473–492, 2014.

- Garrison J, Erdeniz B, Done J. Prediction error in reinforcement learning: a meta-analysis of neuroimaging studies. Neurosci Biobehav Rev 37: 1297–1310, 2013.

- Gläscher J, Daw N, Dayan P, O'Doherty JP. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron 66: 585–595, 2010.

- Liljeholm M, O'Doherty JP. Contributions of the striatum to learning, motivation, and performance: an associative account. Trends Cogn Sci 16: 467–475, 2012.

- Miller EM, Shankar MU, Knutson B, McClure SM. Dissociating motivation from reward in human striatal activity. J Cogn Neurosci 26: 1075–1084, 2014.

- O'Doherty JP, Hampton A, Kim H. Model-based fMRI and its application to reward learning and decision making. Ann NY Acad Sci 1104: 35–53, 2007.

- Saunders BT, Robinson TE. The role of dopamine in the accumbens core in the expression of Pavlovian-conditioned responses. Eur J Neurosci 36: 2521–2532, 2012.

- Tricomi E, Lempert KM. Value and probability coding in a feedback-based learning task utilizing food rewards. J Neurophysiol. First published 22 October 2014; doi: 10.1152/jn.00086.2014.

- Wilson RC, Takahashi YK, Schoenbaum G, Niv Y. Orbitofrontal cortex as a cognitive map of task space. Neuron 81: 267–279, 2014.

- Wunderlich K, Dayan P, Dolan RJ. Mapping value based planning and extensively trained choice in the human brain. Nat Neurosci 15: 786–791, 2012.