Abstract

Objectives

Adverse drug event (ADE) detection is an important priority of patient safety research. Trigger tools have been developed to help identify ADEs. As part of a larger study, we developed complex and specific trigger algorithms intended for concurrent use with clinical care to detect outpatient ADEs. This paper assesses the use of a modified-Delphi process to obtain expert consensus on the value of these triggers.

Methods

We selected a panel of distinguished clinical and research experts to participate in the modified-Delphi process. We created a set of outpatient ADE triggers based on literature review, clinical input, and methodological expertise. Panelists rated each trigger on the importance of the targeted ADE, the importance of the associated drug class, and the trigger logic. Specific criteria were developed to establish consensus.

Results

The modified-Delphi process established consensus on six outpatient ADE triggers to test with patient-level data based on high ratings of utility for patient-level interventions. These triggers focused on detecting ADEs caused by the following drugs or drug classes: bone-marrow toxins, potassium raisers, potassium reducers, creatinine, warfarin, and sedative hypnotics. Participants reported including all aspects of the trigger in their ratings, despite our efforts to separate evaluation of clinical need and trigger logic. Participants’ expertise affected the evaluation of trigger rules, leading to contradictory feedback on how to improve trigger design.

Conclusions

The efficiency of the modified-Delphi method could be improved by allowing participants to produce an overall summary score that incorporates both the clinical value and general logic of the trigger. Revising and improving trigger design should be conducted in a separate process limited only to trigger experts.

INTRODUCTION

Improvements in the ability to accurately identify adverse drug events (ADEs) have led to more efficient and effective strategies to prevent patient harm.1-3 An important development in ADE detection is the use of trigger tools: algorithms that search patient-level data and flag patterns consistent with a possible past, current, or future ADE.4 Computerized trigger tools for inpatient ADEs perform moderately well, are relatively inexpensive to use, and are being deployed in many hospitals.5-9 Despite the high burden of outpatient ADEs and the opportunity to apply inpatient ADE triggers to the outpatient setting, research in this area is limited.10,11

Outpatient triggers have been challenging to develop and implement for several reasons. First, electronic medical records (EMRs) are less common in outpatient care, and processing triggers with data from multiple care settings and healthcare systems is difficult.12-15 Second, outpatient laboratory and pharmacy data are sparse compared with the inpatient setting, which makes trends harder to identify.1 These data challenges are more serious for trigger systems that operate concurrently with clinical care.16 The goal of concurrent systems is to make a difference in real time; however, the persistent limitations of electronic outpatient data have resulted in only a handful of healthcare systems adopting trigger systems for outpatient intervention.17,18

To make concurrent triggers more relevant to clinical care and adapt them to relatively sparse data in the outpatient setting, we developed complex logic for a variety of outpatient ADEs.19 In this paper, we describe and critique the process used to identify outpatient ADE triggers with the highest value to healthcare systems for real-time application. We used a modified-Delphi panel to obtain consensus on the most promising triggers to test with patient data.20-22 This modified approach, based on the original RAND Corporation’s model, is widely used in the development of patient safety and quality improvement tools.23 Based on our own review and feedback from participants, we assessed whether the modified-Delphi process was an appropriate approach for developing outpatient ADE triggers.

METHODS

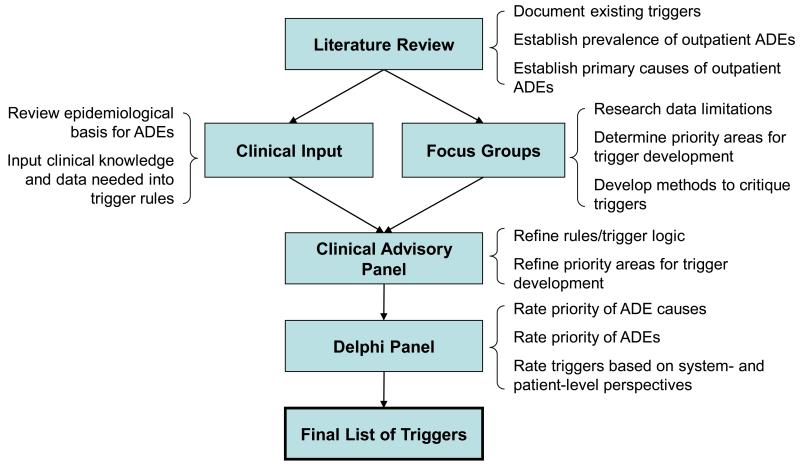

Consistent with the RAND method, the consensus-building process followed specific steps in collecting information about ADE triggers (see Figure 1). As part of a broader study on outpatient ADEs, we reviewed the literature on adverse event (AE) triggers designed for any setting, including outpatient care, to identify sources of greatest harm for outpatient AEs.24 We began with literature obtained in previous work, and then systematically searched PubMed, Medline, ISI Web of Science, the Cochrane databases, EBM reviews and PsychInfo using the following keyword combinations:

adverse event surveillance, signal, alert, trigger;

medical errors, medication errors, postoperative complications, iatrogenic disease;

safety management, risk management, quality assurance;

data collection, decision support systems, clinical reminder systems, medication systems, hospital medical records systems, computerized monitoring, physiologic drug monitoring, adverse drug reaction reporting systems, clinical laboratory information systems, user-computer interface; and,

ambulatory care, primary health care, outpatients, inpatients, emergency service, hospital.

Figure 1.

Description of outpatient adverse drug event (ADE) trigger development process

We reviewed all abstracts identified in the literature search, excluding those that were irrelevant to triggers or AE detection. Many of the excluded articles focused on post-marketing population-level surveillance of drug/vaccine safety using voluntary report databases. Relevant articles were abstracted by one of three reviewers (HM, SS, and HK) using a Microsoft Access form. The Access database included fields on AE detection setting (e.g., hospital/inpatient), trigger type (e.g., medical, surgical, ADE), trigger name (e.g., warfarin trigger), and trigger testing results (e.g., positive predictive value). The literature search retrieved 505 abstracts; 54 of these were abstracted. The majority of these retained articles focused on ADE triggers.

The clinician researchers on the project team used the information in the literature database, as well as their own expertise, to create a set of potential outpatient ADE triggers based on clinical logic and epidemiological principles.8 Outpatient triggers were designed to have high positive predictive value by isolating the clinical characteristics of an ADE and excluding events that could be explained as routine care. Many of the triggers were drawn from the literature and adapted for the outpatient setting using information on timing and type of prescription fills, and laboratory values.

Concurrent with the development of outpatient ADE trigger logic, we held focus groups at three large healthcare institutions (Boston Medical Center and the Boston VA Healthcare System, both in Boston, MA, and Intermountain Healthcare in Salt Lake City, UT). We presented results from the literature review on ADE severity and prevalence and asked potential end users and other stakeholders to identify priorities for outpatient trigger design.25

In the final stage of outpatient trigger development, the project’s Clinical Advisory Panel, composed of seven clinicians and two pharmacists, refined the list of outpatient ADE trigger algorithms based on focus group input and gaps in the trigger literature. This process resulted in a set of outpatient triggers for evaluation and assessment of face validity by a modified-Delphi panel.

We recruited national experts in inpatient trigger design, medical informatics, clinical care, and patient safety research to participate in the modified-Delphi process (Table 1 presents the participants’ characteristics). Experts were expected to spend approximately 8-12 hours on the study and were reimbursed for their participation. Most of our participants were well known in their respective fields. Our modified-Delphi process consisted of three rounds over a 2-month period, followed by a group phone call. In each round, a Microsoft Excel workbook was emailed to each participant. Responses were collected via email and summarized anonymously in subsequent rounds.

Table 1.

Characteristics and Participation of Delphi Panelists

| Professional Degrees | Specialty | Employer | Round 1 | Round 2 | Round 3 | Phone Call |
|---|---|---|---|---|---|---|
| M.D. | Surgery | Federal healthcare organization | ✓ | ✓ | ✓ | |
| M.D. | Patient safety, trigger research | Non-profit research and advocacy organization | ✓ | | | |
| M.D. | Patient safety, trigger research | Private consulting company | ✓ | ✓ | | |
| M.D., M.P.H. | Pediatric care, patient safety research | Teaching hospital | ✓ | ✓ | ✓ | ✓ |
| Ph.D. | Patient safety research | Teaching hospital | ✓ | ✓ | ✓ | |
| M.D. | Emergency medicine, trigger research | Teaching hospital | ✓ | ✓ | ✓ | ✓ |
| M.D., M.Stat. | Patient safety research | Teaching hospital | ✓ | ✓ | ✓ | ✓ |
| Ph.D., M.S. | Informatics, trigger research | Teaching hospital | ✓ | ✓ | ✓ | |
| M.D., M.P.H. | Patient safety research | Federal healthcare organization | ✓ | ✓ | ✓ | |
| M.D., M.P.H. | Surgery | Federal healthcare organization | ✓ | ✓ | | |
| M.D., M.S. | Surgery | Teaching hospital | ✓ | ✓ | ✓ | |

The workbook presented all the triggers developed and refined through the Clinical Advisory Panel stage. We also presented summaries from the literature on rates and severity of outpatient ADEs and associated drug classes and asked the participants to rate the importance of designing triggers to address these causes and events. Trigger information included:

- the cause (the drug or drug class),
- the event,
- the trigger rule (algorithm),
- the goal (the harm the trigger was designed to prevent), and
- a description of each trigger.

For example, the trigger Angiotensin Converting Enzyme Inhibitor (ACE Inhibitor, trigger 2) addressed cardiovascular agents (cause) and respiratory problems (AE); the rule was ‘New order for Angiotensin Receptor Blocker (ARB) within 3 months of starting an Angiotensin Converting Enzyme Inhibitor (ACEI);’ the goal was to ‘Detect cough from an ACEI;’ and the description was ‘Look for switch from ACEI to ARB.’
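As an illustration only, the ACE Inhibitor rule quoted above can be sketched in Python. The function name, the argument names, and the reading of "3 months" as 90 days are assumptions made for this sketch; they are not taken from the study's implementation.

```python
from datetime import date, timedelta
from typing import Iterable


def acei_arb_switch_trigger(
    acei_start: date,
    arb_order_dates: Iterable[date],
    window_days: int = 90,  # assumption: "3 months" approximated as 90 days
) -> bool:
    """Flag a new ARB order placed after, and within the window of, an ACEI start."""
    window_end = acei_start + timedelta(days=window_days)
    # Assumption: an ARB ordered on the same day as the ACEI is not a "switch".
    return any(acei_start < ordered <= window_end for ordered in arb_order_dates)


# Hypothetical patient: ACEI started 1 Jan, ARB ordered 15 Feb of the same year.
flagged = acei_arb_switch_trigger(date(2010, 1, 1), [date(2010, 2, 15)])
```

A flag from a rule like this suggests a possible ACEI-related cough; it does not confirm one, which is why the panel was asked to judge the rule's face validity.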

Based on trigger goals and feedback from the focus groups, we asked Delphi participants to rate each ADE trigger’s utility for patient-level interventions to reduce patient harm. This criterion was considered the most important for rating ADE triggers for real-time applications to prevent specific harm to patients. We used a scale from 1-9 to rate triggers, with 1 as the most useful and 9 as the least useful for helping patients.26 We also asked participants to respond to open-ended questions about the strengths and weaknesses of each trigger and suggest modifications to improve trigger design. Finally, we solicited feedback, including information about the expertise and comfort participants had in rating triggers, and general comments on how to improve the consensus building process. Overall, experts assessed the face validity of each trigger, helping to determine whether or not the trigger would be recommended for further testing on patient-level data.

In Round 2, the workbook was customized to show the overall ratings and each participant’s individual responses. Modifications to the trigger algorithms were incorporated into the list of triggers, with specific changes to the text bolded and identified in red. Round 3 presented the Round 2 median and ranges and asked participants to justify Round 3 ratings if they differed by more than 2 units from the Round 2 median.
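The Round 3 feedback step can be illustrated with a short Python sketch. It computes the summary shown to participants (number of raters, median, and range) and checks whether a new rating differs from the Round 2 median by more than 2 units. The function names and the example ratings are hypothetical; individual ratings are not reported in this paper.

```python
from statistics import median


def format_round_summary(ratings):
    """Summarize one trigger's ratings as 'N=n, median (low-high)'."""
    return f"N={len(ratings)}, {median(ratings):.1f} ({min(ratings)}-{max(ratings)})"


def needs_justification(round3_rating, round2_median, max_shift=2):
    """True when a Round 3 rating differs from the Round 2 median by more than max_shift."""
    return abs(round3_rating - round2_median) > max_shift


# Hypothetical Round 2 ratings for a single trigger (1 = most useful, 9 = least).
round2_ratings = [2, 3, 3, 4, 6, 3, 7]
summary = format_round_summary(round2_ratings)
```

With these made-up ratings the Round 2 median is 3, so a Round 3 rating of 6 would call for a written justification, whereas a rating of 5 would not.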

We held a phone call after Round 3 to obtain comments and feedback on the Delphi process and to review the list of triggers recommended for further testing. The primary goal of the post-Delphi phone call was to better understand the rationale behind divergent ratings. For participants who could not attend the group call but were interested in providing feedback, we held individual calls.

To determine which ADE triggers to test using patient-level data, we applied two criteria: 1) a median rating for “utility for patient-level interventions” better than 3 (on our scale, lower numbers denote greater utility), and 2) sufficient agreement between participants. We defined agreement according to the RAND/UCLA method: all but one of the participants’ ratings must be within a range of 3 points around the median to achieve consensus.20
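A minimal Python sketch of these two selection criteria follows. It rests on two assumptions that the text does not settle: the median must be numerically below 3 (since 1 is the most useful rating), and "within a range of 3 points around the median" is read as an absolute distance of at most 3 from the median. Both are exposed as parameters so that other readings can be tried. The function name and the example ratings are hypothetical.

```python
from statistics import median


def meets_selection_criteria(ratings, utility_cutoff=3.0, tolerance=3.0, allowed_outliers=1):
    """Apply a median cutoff plus an 'all but one rater near the median' agreement rule."""
    if not ratings:
        return False
    med = median(ratings)
    # Assumption: a rating is "near" the median when it lies within `tolerance` points of it.
    outliers = sum(1 for r in ratings if abs(r - med) > tolerance)
    # Assumption: lower scores are better, so the median must fall below the cutoff.
    return med < utility_cutoff and outliers <= allowed_outliers


# Hypothetical ratings for three triggers (1 = most useful, 9 = least useful).
strong_agreement = meets_selection_criteria([1, 1, 1, 2, 2, 1])
low_utility = meets_selection_criteria([5, 5, 4, 9, 6, 5])
split_panel = meets_selection_criteria([1, 1, 2, 2, 8, 9])
```

Under these assumptions the first panel passes both criteria, the second fails on the median, and the third fails on agreement because two raters sit far from the median.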

RESULTS

Eleven experts participated in the Delphi process (see Table 1). The majority of participants worked in teaching hospitals and all but two held medical degrees.

We developed and presented 19 outpatient ADE triggers to Delphi participants. Table 2 shows the trigger rule, the number of respondents, and medians and ranges for the ADE triggers from Rounds 1, 2 and 3. We ordered triggers by ratings and highlighted rows that met consensus criteria for testing with patient-level data.

Table 2.

Results, ranked by final rating, for Rounds 1-3 assessing 19 outpatient ADE triggers for utility of patient interventions

Ratings are for utility of patient intervention (1 = high, 9 = low).

| Name | Rule | Goal | Description | Round 1 N | Round 1 Median (Range) | Round 2 N | Round 2 Median (Range) | Round 3 N | Round 3 Median (Range) |
|---|---|---|---|---|---|---|---|---|---|
| Warfarin | [Started on warfarin within 14 days AND (International Normalized Ratio (INR)>3.0 AND INR increased by 1 within 2 days) AND no repeat INR within 2 days] OR (Started on warfarin longer than 14 days prior AND INR>4 AND no repeat INR within 2 weeks) OR (INR>6 AND no repeat INR within 2 days) | Detect rapid or excessive anticoagulation to prevent bleed | Look for over anticoagulation and no evidence of rechecking within reasonable window | 8 | 1.0 (1-5) | 7 | 1.0 (1-2) | 6 | 1.0 (1-2) |
| Potassium-high | [(K+>5.5 and up by >10% since last measurement) OR (K+>6.0)] AND (Potassium (K) raiser active OR Potassium reducer discontinued 1 day to 4 weeks prior) AND No new potassium reducer OR Decrease in potassium raiser within 5 days of triggering result | Detect hyperkalemia to prevent further increase and arrhythmia | Look for rising potassium and no evidence of change | 7 | 1.0 (1-6) | 6 | 1.0 (1-2) | 5 | 1.0 (1-3) |
| Potassium-low | Use of potassium (K) reducer AND [K <3.0 OR (K < 3.5 AND K decreased by >15%) versus previous measurement] AND (No new potassium raiser OR decreased potassium reducer) within 5 days of triggering potassium result | Detect hypokalemia to prevent further decline and arrhythmia | Look for dropping potassium and no evidence of change | 7 | 2.0 (1-7) | 6 | 2.0 (1-3) | 5 | 2.0 (1-3) |
| Delirium | Active prescription of sedative hypnotic including anticholinergic AND Subsequent new diagnosis of (dementia, fall, delirium) | Detect impairment in consciousness and cognition to improve quality of life | Look for psychotropic with subsequent decline in consciousness or cognition | 7 | 2.0 (1-7) | 6 | 2.0 (1-3) | 5 | 2.0 (1-3) |
| Bone Marrow Toxin | (On bone-marrow-toxic drug with a course more than 2 weeks AND No chemotherapy within 2 weeks) AND [(white blood cell count (WBCs)<2,500 AND decrease from before course by more than 2,000) OR (WBCs<2,000 AND decrease from before course by more than 1,000) OR (Platelets<50k AND decrease by 75k within 1 week)] AND (no repeat complete blood count OR no decrease in drug) within 5 days of triggering result | Detect early signs of myelo-suppression to prevent more severe cases | Look for decrease in cells after non-cancer drug and no evidence of recognition | 7 | 1.0 (1-3) | 6 | 1.0 (1-2) | 5 | 2.0 (1-4) |
| Hepatotoxic | Active prescription for hepatotoxic drug AND aspartate aminotransferase (AST) or alanine aminotransferase (ALT) levels >150 | Detect potential hepatotoxicity to prevent serious liver damage | Look for development of hepatotoxicity while on drug that can cause hepatotoxicity | 7 | 2.0 (1-3) | 6 | 2.0 (1-3) | 5 | 2.0 (1-4) |
| Thyroid | Abnormal thyroid-stimulating hormone (TSH) test on thyroid replacement and no repeat test or new prescription of thyroid replacement within 2 months of triggering value. | Detect out of range thyroid to prevent related problems | Look for out-of-range TSH without evidence of follow up | 8 | 2.5 (1-7) | 7 | 3.0 (1-4) | 6 | 3.0 (2-4) |
| Antibiotic | Trough levels of antibiotic more than double the recommended maximum AND at least two days left in the course AND no reduction in dose of the antibiotic. | Prevent antibiotic toxicity (hearing and renal function) | Look for dangerously high trough levels | 8 | 2.0 (1-3) | 7 | 2.0 (1-3) | 6 | 3.0 (2-4) |
| Steroid | Long-term prescription of oral glucocorticoid (written for 3-months supply or for 6 months of refills or renewed after 3 months) AND at 6 weeks after steroid started no bisphosphonate prescribed | Detect long-term steroid course with inappropriate monitoring/treatment for prevention of related problems | Look for long term course of steroid and no bisphosphonate or no glucose check | 8 | 3.0 (1-6) | 7 | 3.0 (2-6) | 6 | 3.0 (3-6) |
| Blood Pressure | Diagnosis code for (fall, dizziness, orthostatic hypotension) AND antihypertensive started within 3 months AND systolic BP below 140 AND no reduction in or new prescription for any antihypertensive medication | Detect symptomatic low BP to prevent further problems | Look for problem associated with relatively low BP and no evidence of addressing the problem | 7 | 4.0 (1-9) | 6 | 3.0 (1-8) | 6 | 3.0 (1-6) |
| Anti-Platelet | Receiving antiplatelet agent AND platelets < 50,000/mm³ AND no repeat complete blood count within 2 weeks | Detect platelet impairment with thrombocytopenia to prevent bleed | Look for low platelets in a patient receiving an antiplatelet agent | 7 | 3.0 (1-9) | 6 | 3.0 (1-8) | 6 | 3.0 (2-9) |
| Creatinine | [New order or increase in (direct GFR reducer OR volume reducer OR nephrotoxin) within (1-5 days OR 1 day to last creatinine measure) AND (No new trimethoprim within 5 days)] AND (No decrease in any meds above since the last creatinine measure OR no repeat order for creatinine) AND (>25% reduction in creatinine clearance since initiation or increase of above med AND resulting creatinine clearance < 50) | Detect decreased renal function to prevent reactions from other drugs that are renally cleared | Soon after starting a drug that might decrease creatinine, look for a decrease in creatinine clearance to a concerning level and make sure that the new drug has not been decreased. | 7 | 3.0 (1-9) | 5 | 3.0 (2-9) | 6 | 3.5 (2-7) |
| Calcium Channel Blocker | Weight increased >6 pounds between 2 weeks and 3 months of starting dihydropyridine calcium-channel blocker (CCB) AND no diagnosis of congestive heart failure AND (no discontinuation of CCB OR no new antihypertensive other than a diuretic) | Detect edema to mitigate it | Look for rapid weight gain soon after starting CCB that may induce LE edema and no evidence of recognition | 7 | 3.0 (2-9) | 6 | 3.5 (2-6) | 6 | 4.5 (3-8) |
| Theophylline | Theophylline level >20 μg/ml OR Theophylline given for >12 months with no level measured | Detect potential or actual dangerous theophylline level to prevent dangerous adverse reactions | Look for high theophylline level or inadequate monitoring | 7 | 4.0 (2-8) | 6 | 4.5 (3-8) | 5 | 5.0 (4-8) |
| Parkinsonism | Change from (typical to atypical antipsychotic OR from any antipsychotic to quetiapine) within 4 weeks of starting therapy OR [prescription of dopaminergic medication OR new diagnosis of (akathisia OR parkinsonism OR tremor)] within 3 months of starting | Detect drug induced parkinsonism | Look for early change to less toxic antipsychotic or new diagnosis of common adverse effects of antipsychotic medication | 7 | 5.0 (2-9) | 6 | 5.0 (3-9) | 5 | 5.0 (3-8) |
| ACE Inhibitor | New order for Angiotensin II Receptor Blockers (ARB) within 3 months of starting Angiotensin Converting Enzyme Inhibitor (ACEI) | Detect cough from ACE inhibitor | Look for switch from ACE inhibitor to ARB. | 7 | 5.0 (2-9) | 6 | 5.0 (4-9) | 6 | 5.0 (4-9) |
| C-Diff | Prescription of (oral vancomycin or metronidazole OR Order for Clostridium Difficile culture or toxin OR ICD9 code for C. diff colitis or similar codes) within (1 day after start AND before 4 weeks after the completion) of an antibiotic course OR Prescription of antidiarrheal within (1 day after start AND before 3 weeks after the completion) of an antibiotic course | Detect antibiotic-associated diarrhea | Look for evidence of clinical suspicion of C. difficile colitis or regular antibiotic-associated diarrhea | 8 | 6.0 (1-9) | 7 | 6.0 (1-9) | 6 | 6.0 (3-7) |
| Fungal | Prescription of an oral or vaginal antifungal between 3 days after start of antibiotic and 10 days after end of course. | Detect antibiotic-associated fungal infection | Look for treatment of mucosal fungal infection | 8 | 7.0 (3-9) | 7 | 7.0 (3-9) | 6 | 7.0 (3-9) |
| Antidepressant | Within a time frame of 1 day to 6 weeks after the prescription of an antidepressant, prescription of a different antidepressant. | Detect any adverse event from an antidepressant | Look for new antidepressant within a time frame that is more suggestive of replacing a prior antidepressant than augmentation therapy. | 7 | 7.0 (3-9) | 6 | 7.5 (3-9) | 6 | 7.5 (3-8) |

ADE=Adverse drug event

N= number of participants who rated the trigger

Highlighted cells indicate triggers that met consensus criteria in Round 2 and were selected for testing with patient-level data
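To show how a compound rule of this kind might be evaluated, the Warfarin rule in Table 2 can be sketched in Python. This is a sketch under stated assumptions and not the study's code: the `InrResult` record and its field names are hypothetical, "within 14 days" is treated as inclusive, and "INR increased by 1" is read as a rise of at least 1.0.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InrResult:
    """Hypothetical record describing one INR result and its follow-up."""
    days_since_warfarin_start: int
    inr: float
    rise_over_prior_2_days: float          # 0.0 when no earlier INR exists in the window
    days_until_repeat_inr: Optional[int]   # None when no repeat INR is on record


def no_repeat_within(result: InrResult, days: int) -> bool:
    """True when no repeat INR was recorded within `days` of this result."""
    return result.days_until_repeat_inr is None or result.days_until_repeat_inr > days


def warfarin_trigger(r: InrResult) -> bool:
    # Branch 1: recent start, high and rapidly rising INR, no recheck within 2 days.
    early = (r.days_since_warfarin_start <= 14 and r.inr > 3.0
             and r.rise_over_prior_2_days >= 1.0 and no_repeat_within(r, 2))
    # Branch 2: established therapy, INR > 4, no recheck within 2 weeks.
    established = (r.days_since_warfarin_start > 14 and r.inr > 4.0
                   and no_repeat_within(r, 14))
    # Branch 3: very high INR at any time, no recheck within 2 days.
    critical = r.inr > 6.0 and no_repeat_within(r, 2)
    return early or established or critical


# Hypothetical case: day 7 of therapy, INR 3.4 after a 1.2-point rise, never rechecked.
fires = warfarin_trigger(InrResult(7, 3.4, 1.2, None))
```

Each branch pairs an abnormal value with missing follow-up, so an elevated INR that was promptly rechecked does not fire the rule.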

Round 1 resulted in several suggested modifications to trigger algorithms to increase sensitivity or specificity:

- Calcium Channel Blocker was changed from a weight gain of 8 lbs to a gain of 6 lbs or more.
- Delirium specified that the subsequent diagnosis of dementia, fall, or delirium must be ‘new.’
- Thyroid initially required ‘new prescription of thyroid replacement within 2 months of triggering value;’ however, this criterion was changed from ‘and’ to ‘or.’
- Steroid removed the following component: ‘OR no glucose/Hgb A1c ordered since 1 week after steroid started.’

Initially, the triggers that met our consensus criteria included Antibiotics, Bone Marrow Toxin, and Hepatotoxic. However, although Bone Marrow Toxin was rated highly, several participants felt that associated ADEs were more likely to occur in the inpatient setting. Participants also noted that the Hepatotoxic trigger would be too complicated to implement and could result in a high proportion of false negatives. Round 1 feedback was presented anonymously to participants in the Round 2 workbooks. Four new triggers met the consensus criteria in Round 2: Warfarin, Potassium-low, Potassium-high, and Delirium.

Although we achieved consensus on the triggers to test in Round 2, we proceeded with Round 3 to better understand the rationale behind divergent ratings. Participants’ reasons for giving a higher or lower rating included: overly complicated logic, difficulties in intervening or preventing the ADE, low prevalence of the criteria for the ADE, and low expected sensitivity for the trigger. These reasons were further discussed with the three participants who took part in the final phone call. The discussion led to two revisions to our trigger list. Hepatotoxic had a high rating; however, it was excluded because phone participants agreed that it had performed poorly in other studies and was likely to result in a high number of false negatives. The Creatinine trigger had divergent ratings largely because several participants assumed that the outpatient ADE was similar to events caused by creatinine in the inpatient setting. Once the outpatient creatinine ADE was described on the call, participants were able to reach consensus, and the trigger was given a high utility rating for patient-level intervention. The final six triggers for testing (highlighted in Table 2) focused on detecting ADEs caused by the following drugs or drug classes: bone marrow toxins, potassium raisers, potassium reducers, creatinine, warfarin, and sedative hypnotics.

Participants provided feedback on the Delphi process using the workbook’s structured and open-ended questions, although several sent their feedback by email instead. Overall, many participants felt the process was too time consuming; in fact, completing the workbook took some participants twice as long as estimated, while others finished in the time frame we expected. We also learned that several participants did not feel their expertise permitted them to comment on certain triggers: three surgical experts on the panel refrained from rating many of the ADE triggers. Finally, experts in trigger development were more likely to spend a long time completing the workbook and to provide very specific comments and changes on the components of the trigger rule.

DISCUSSION

Experts rapidly reached consensus on 5 of 19 triggers for concurrent detection of ADEs and consensus on an additional trigger outside the Delphi process. Based on evidence from the modified-Delphi process, it appears that the triggers we developed addressed important ADEs and drug classes and would be likely to perform relatively well in practice. Future studies will evaluate the performance of the six outpatient ADE triggers using patient data. Our experience of establishing consensus on outpatient ADE triggers using a modified-Delphi process presented a number of lessons learned.

We asked participants to separately rate the importance of the ADE, the importance of the drug class, and the utility of the trigger. Based on feedback from participants, the rating process was burdensome and overly complex. The original rationale for separating these criteria was to avoid “double barreled” or “triple barreled” ratings and maintain conceptual purity. However, it became clear during the modified-Delphi process that the rating of trigger utility was often considered simultaneously with the importance of both the ADE and drug class. Additionally, results suggested that ratings of the targeted ADEs or drugs were not as valuable in determining the utility of a trigger as the anticipated performance. The consensus rejection of the liver failure trigger (Hepatotoxic) is a case in point; the targeted ADE is severe, yet previous experience with triggers addressing this event has shown that the event can only be detected after it is too late or with far too many false positives. Our criteria for selecting triggers to test with patient-level data focused only on the utility rating, a decision we did not share with Delphi participants, yet this proved appropriate in light of how they rated the triggers.

The study’s strengths included access to an esteemed panel of patient safety experts with diverse knowledge and experience with trigger tools. However, we also had some limitations. The varying backgrounds of the participants resulted in inconsistent feedback. Those who had published on trigger systems using very simple logic generally expressed skepticism about the rules’ complexity. Experts who had used trigger systems before or were more familiar with complex rules provided recommendations intended to improve trigger validity. As a result, participants gave contradictory advice on how to refine trigger logic. Some suggested shorter monitoring intervals, while others suggested longer intervals for the same aspect of the trigger rule. Differences in opinion on trigger logic were consistent with clinical versus non-clinical expertise, and non-clinicians were more likely to suggest logic changes and to spend more time completing the workbooks in each round.

Given the issues with rating criteria and the conflicting comments on trigger logic, we believe that two separate, consecutive processes would have more effectively used the expertise of participants as well as reduced the time and complexity of trigger evaluation. The modified-Delphi could have been conducted more efficiently by first holding a separate, open discussion on the trigger rules with experts in the design of trigger logic and clinical experts knowledgeable about the targeted ADE. The anonymous consensus-building of the Delphi approach could have focused on one overall summary rating for each trigger that simultaneously considered the importance of the ADE, the drug class, and the summarized trigger rule. Separating evaluation of trigger logic from consensus-building would improve the efficiency of trigger development.

CONCLUSIONS

This study developed and prioritized concurrent outpatient ADE triggers based on utility for patient interventions and generated important lessons for others who might consider a modified-Delphi process to develop tools for AE detection. Our analytical approach to the Delphi process, whereby we had a national panel of experts separately rate the importance of ADE causes, importance of events, and utility of logic, established consensus on outpatient ADE triggers but was overly burdensome. In discussions with participants, we learned that they considered all factors together and that a single rating of trigger utility would have been possible and also more efficient. Additionally, we used the modified-Delphi process to confirm the detailed logic of trigger rules, when a separate process with a different set of experts would have been more appropriate for this goal. The results and lessons learned in this study should be helpful to future studies developing adverse event triggers.

Acknowledgments

This research was funded through contract number HHSA29020060012 Task Order #3 awarded to Amy Rosen, PhD by the Agency for Healthcare Research and Quality (AHRQ).

References

- 1.Bates DW, Evans RS, Murff H, Stetson PD, Pizziferri L, Hripcsak G. Detecting adverse events using information technology. J Am Med Inform Assoc. 2003 Mar-Apr;10(2):115–128. doi: 10.1197/jamia.M1074. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Budnitz DS, Pollock DA, Weidenbach KN, Mendelsohn AB, Schroeder TJ, Annest JL. National surveillance of emergency department visits for outpatient adverse drug events. Jama. 2006 Oct 18;296(15):1858–1866. doi: 10.1001/jama.296.15.1858. [DOI] [PubMed] [Google Scholar]

- 3.Classen DC, Pestotnik SL, Evans RS, Burke JP. Computerized surveillance of adverse drug events in hospital patients. Qual Saf Health Care. 2005 Jun;14(3):221–225. doi: 10.1136/qshc.2002.002972. 1991. discussion 225-226. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Resar RK, Rozich JD, Classen D. Methodology and rationale for the measurement of harm with trigger tools. Qual Saf Health Care. 2003 Dec;12(Suppl 2):ii39–45. doi: 10.1136/qhc.12.suppl_2.ii39. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Classen D, Lloyd RC, Provost L, Griffin FA, Resar R. Development and evaluation of the Institute for Healthcare Improvement Global Trigger Tool. J Patient Saf. 2008;4(3):169–177. [Google Scholar]

- 6.Gardner RM, Evans RS. Using computer technology to detect, measure, and prevent adverse drug events. J Am Med Inform Assoc. 2004 Nov-Dec;11(6):535–536. doi: 10.1197/jamia.M1651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Sharek PJ, Horbar JD, Mason W, et al. Adverse events in the neonatal intensive care unit: development, testing, and findings of an NICU-focused trigger tool to identify harm in North American NICUs. Pediatrics. 2006 Oct;118(4):1332–1340. doi: 10.1542/peds.2006-0565. [DOI] [PubMed] [Google Scholar]

- 8.Szekendi MK, Sullivan C, Bobb A, et al. Active surveillance using electronic triggers to detect adverse events in hospitalized patients. Qual Saf Health Care. 2006 Jun;15(3):184–190. doi: 10.1136/qshc.2005.014589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Nebeker JR, Stoddard GJ, Rosen AK. Considering Sensitivity and Positive Predictive Value in Comparing the Performance of Triggers Systems for Iatrogenic Adverse Events; Triggers and Targeted Injury Detection Systems (TIDS) Expert Panel Meeting: Conference Summary; Agency for Healthcare Research and Quality. Rockville, MD. February 2009.

- 10. Cantor MN, Feldman HJ, Triola MM. Using trigger phrases to detect adverse drug reactions in ambulatory care notes. Qual Saf Health Care. 2007 Apr;16(2):132–134. doi: 10.1136/qshc.2006.020073.

- 11. Thomsen LA, Winterstein AG, Sondergaard B, Haugbolle LS, Melander A. Systematic review of the incidence and characteristics of preventable adverse drug events in ambulatory care. Ann Pharmacother. 2007 Sep;41(9):1411–1426. doi: 10.1345/aph.1H658.

- 12. Andrews JE, Pearce KA, Sydney C, Ireson C, Love M. Current state of information technology use in a US primary care practice-based research network. Inform Prim Care. 2004;12(1):11–18. doi: 10.14236/jhi.v12i1.103.

- 13. Gandhi TK, Weingart SN, Borus J, et al. Adverse drug events in ambulatory care. N Engl J Med. 2003 Apr 17;348(16):1556–1564. doi: 10.1056/NEJMsa020703.

- 14. Honigman B, Lee J, Rothschild J, et al. Using computerized data to identify adverse drug events in outpatients. J Am Med Inform Assoc. 2001 May-Jun;8(3):254–266. doi: 10.1136/jamia.2001.0080254.

- 15. Mull HJ. Using Electronic Medical Records Data for Health Services Research Case Study: Development and Use of Ambulatory Adverse Event Trigger Tools; Paper presented at: AcademyHealth Annual Research Meeting; Boston, MA. 2010.

- 16. Nebeker JR, Shimada SL. Definitions for Trigger Terminology; Triggers and Targeted Injury Detection Systems (TIDS) Expert Panel Meeting: Conference Summary; Agency for Healthcare Research and Quality. Rockville, MD. February 2009.

- 17. Lederer J, Best D. Reduction in anticoagulation-related adverse drug events using a trigger-based methodology. Jt Comm J Qual Patient Saf. 2005 Jun;31(6):313–318. doi: 10.1016/s1553-7250(05)31040-3.

- 18. Kennerly D, Snow D, Rosen A, Nebeker J. In: Classen D, editor. Use of Trigger Tools To Identify Risks and Hazards to Patient Safety; AHRQ 2010 Annual Conference; Bethesda, MD: AHRQ. 2010.

- 19. Mull HJ, Nebeker JR. Informatics tools for the development of action-oriented triggers for outpatient adverse drug events. AMIA Annu Symp Proc. 2008:505–509.

- 20. Fitch K, Bernstein SJ, Aguilar MS, et al. The RAND/UCLA Appropriateness Method User’s Manual. RAND; 2001.

- 21. Jones J, Hunter D. Consensus methods for medical and health services research. BMJ (Clinical research ed.). 1995 Aug 5;311(7001):376–380. doi: 10.1136/bmj.311.7001.376.

- 22. Brook RH, Chassin MR, Fink A, Solomon DH, Kosecoff J, Park RE. A method for the detailed assessment of the appropriateness of medical technologies. Int J Technol Assess Health Care. 1986;2(1):53–63. doi: 10.1017/s0266462300002774.

- 23. Black N, Murphy M, Lamping D, et al. Consensus development methods: a review of best practice in creating clinical guidelines. J Health Serv Res Policy. 1999 Oct;4(4):236–248. doi: 10.1177/135581969900400410.

- 24. Mull HJ, Shimada S, Nebeker JR, Rosen AK. A Review of the Trigger Literature: Adverse Events Targeted and Gaps in Detection; Triggers and Targeted Injury Detection Systems (TIDS) Expert Panel Meeting: Conference Summary; Agency for Healthcare Research and Quality. Rockville, MD. February 2009. AHRQ Pub. No. 09-0003.

- 25. Shimada S, Rivard P, Nebeker J, et al. Priorities and Preferences of Potential Ambulatory Trigger Tool Users; Paper presented at: AcademyHealth Annual Research Meeting; Chicago, IL. 2009.

- 26. Preston CC, Colman AM. Optimal number of response categories in rating scales: reliability, validity, discriminating power, and respondent preferences. Acta Psychol (Amst). 2000 Mar;104(1):1–15. doi: 10.1016/s0001-6918(99)00050-5.