Abstract

Background

The extent to which articles are cited is a surrogate of the impact and importance of the research conducted; poorly cited papers may identify research of limited use and potential wasted investments. We assessed trends in the rates of poorly cited articles and journals in the cardiovascular literature from 1997–2007.

Methods and Results

We identified original articles published in cardiovascular journals and indexed in the Scopus citation database from 1997–2007. We defined poorly cited articles as those with ≤5 citations in the 5 years following publication and poorly cited journals as those with >75% of journal content poorly cited. We identified 164,377 articles in 222 cardiovascular journals from 1997–2007. From 1997–2007, the number of cardiovascular articles and journals increased by 56.9% and 75.2% respectively. Of all the articles, 75,550 (46.0%) were poorly cited, of which 25,650 (15.6% overall) had no citations. From 1997–2007, the proportion of poorly cited articles declined slightly (52.1% to 46.2%, trend P<0.001), although the absolute number of poorly cited articles increased by 2,595 (trend P<0.001). At a journal level, 44% of cardiovascular journals had more than three quarters of the journal’s content poorly cited at 5 years.

Conclusion

Nearly half of all peer-reviewed articles published in cardiovascular journals are poorly cited 5 years after publication, and many are not cited at all. The cardiovascular literature, and the number of poorly cited articles, have both increased substantially from 1997–2007. The high proportion of poorly cited articles and journals suggests inefficiencies in the cardiovascular research enterprise.

Keywords: Citation analysis, Bibliometrics, Scopus, Impact Factor

INTRODUCTION

The cardiovascular literature has expanded rapidly, with the number of cardiovascular journals more than doubling between 1998 and 2010.1 Scientific advances, greater access to data, increasing resources for research, and the demand on researchers to produce publications are likely contributors to this rapid growth. Concurrently, the availability of many articles online and the emergence of open-access journals provide increasing access to this body of literature. On the surface, these changes are encouraging as they suggest growth and more effective dissemination of knowledge in the cardiovascular sciences.

However, many of these articles and journals may have limited impact. For example, not all research is novel or of high quality. Limitations such as unnecessary duplication of research,2 prolonged delays to publication,3 and methodological flaws4–7 may reduce the impact and value of an article to readers. Furthermore, the academic phenomenon of ‘publish or perish’ may lead to persistent pressure to publish less useful or poorly performed science. Many medical journals are for-profit enterprises or generate substantial revenues for non-profit entities, and the growth in journals may reflect publishers’ motivation to increase profits. A potential downside of increased publications is that useful and high-quality articles may be overlooked. In this context, an article may make minimal impact because its scientific contribution and value are unrecognized. Generation of research output of limited impact, irrespective of the reason, may ultimately reflect inefficiency and waste in the research enterprise.

One measure of the impact or importance of a published article is the number of citations it accrues. Citation, a widely used metric in publishing, reflects recognition that the knowledge generated by the article has influenced others’ research or practice.8 While the number of citations has limitations as a measure of impact, highly cited articles generally identify research that is useful to others. In contrast, poorly cited articles may indicate resources spent on research output that is either unrecognized or of limited use. Furthermore, if the expansion of publications is due to growth in useful research output, an increasing number of published articles are more likely to be cited often, especially given the increased number of articles available to cite them. In contrast, if the expansion is due to articles that are either unrecognized or of limited use, then more of these cardiovascular articles may remain poorly cited.

To provide perspective on the value of increased publications, we determined the number of articles published in cardiovascular journals that were poorly cited 5 years after publication, assessed whether these articles clustered within certain journals, and examined the trends from 1997 through 2007. Furthermore, because article access and visibility may affect the number of citations, we evaluated the 5-year citation of articles in the subset of open-access cardiovascular journals.

METHODS

Data Source

We identified articles in cardiovascular journals from Scopus, a citation database produced by Elsevier. Scopus is the largest database available for citation analysis and contains >50 million records from over 21,000 titles, including 100% coverage of articles indexed in MEDLINE and PubMed.9 Scopus captures citation of any article by other records within the Scopus database and provides a yearly citation count for each article. Scopus has broader coverage and retrieves a greater number of citations compared with other citation databases.10 As stated by Scopus, all indexed journals in Scopus publish peer-reviewed content and have, at minimum, an English language abstract for each article.

Selection of cardiovascular articles and journals

From this dataset, we identified all journals from 1997 to 2007 containing primarily cardiovascular content using SCImago, a third party provider that categorizes journals into fields based on data obtained from Scopus.11 We then reviewed and refined the SCImago list of cardiovascular journals to ensure that we only included journals primarily publishing content relevant to the cardiovascular field. For each journal, we determined the open-access status of the journal based on journal data published by Elsevier.12 From the identified cardiovascular journals, we then selected documents indexed in Scopus as articles for analysis as these are typically original articles and contain original content. We excluded documents indexed as reviews and other non-citable documents such as editorials, letters, and conference abstracts because these documents do not typically contain original content and are normally excluded from citation analysis.13

Outcomes

Rates of poorly cited cardiovascular articles

We assessed the number and proportion of poorly cited articles recorded in Scopus 5 years following the year of publication. We defined poorly cited articles using a conservative cutoff of five or fewer citations at 5 years after publication. We chose the 5-year outcome period because the peak citation of articles typically occurs within the first 2–4 years after publication.14 Therefore, this time horizon should provide sufficient time for an article to be recognized and potentially cited. We also included author and journal self-citations within the 5-year period because many authors (and journals) may use prior published work to advance their subsequent work.
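The article-level definition above can be sketched in a few lines of Python. This is only an illustration, not the code used in the study: the `Article` class, its field names, and the helper functions are hypothetical, and the sketch assumes that the 5-year window covers the publication year plus the four following calendar years (consistent with the 1997–2001 window used for 1997 articles in the post-hoc analysis).

```python
from dataclasses import dataclass
from typing import Dict

POORLY_CITED_MAX = 5  # "five or fewer citations" (from the text)
WINDOW_YEARS = 5      # 5-year outcome period (from the text)


@dataclass
class Article:
    """Hypothetical record: publication year plus yearly citation counts."""
    pub_year: int
    citations_by_year: Dict[int, int]


def citations_in_window(article: Article) -> int:
    # Assumption: the window is pub_year .. pub_year + 4 inclusive.
    # Self-citations are counted, as described in the text.
    years = range(article.pub_year, article.pub_year + WINDOW_YEARS)
    return sum(article.citations_by_year.get(year, 0) for year in years)


def is_poorly_cited(article: Article) -> bool:
    return citations_in_window(article) <= POORLY_CITED_MAX


def is_uncited(article: Article) -> bool:
    # Uncited articles are a subset of poorly cited articles.
    return citations_in_window(article) == 0


example = Article(pub_year=1997, citations_by_year={1997: 1, 1999: 2, 2003: 10})
print(citations_in_window(example), is_poorly_cited(example), is_uncited(example))
```

Citations received after the window (the 10 citations in 2003 in the example) do not change the classification, which is why the article in the example still counts as poorly cited.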

Rates of poorly cited cardiovascular journals

To assess whether poorly cited articles cluster within certain journals, we assessed the proportion of poorly cited articles within each journal. We further defined a journal as a poorly cited journal if more than 75% of its article content was poorly cited 5 years after publication. We defined moderately cited journals as those with 26%–75% of their article content poorly cited and well cited journals as those with 25% or less of their article content poorly cited using the above definition. For this outcome, we limited our analysis to journals publishing at least 20 articles per year to obtain an accurate estimate of the journal citation rates. To further characterize citation patterns within journals, we reviewed each journal’s stated aim, scope and “author instructions” to categorize all journals into those that solely published basic science articles and those that accepted clinical or population health articles. Journals that accepted both types of publications were categorized as mixed. We then evaluated the proportion of poorly cited article content within each journal type.
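The journal-level categories can be expressed as a simple threshold rule. The following is a minimal sketch under stated assumptions: the function name is hypothetical, the restriction to journals with at least 20 articles per year is not implemented, and shares that fall between 25% and 26% (which the text does not assign to a category) are treated here as moderately cited.

```python
def classify_journal(poorly_cited: int, total: int) -> str:
    """Categorize a journal by its share of poorly cited articles."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= poorly_cited <= total:
        raise ValueError("poorly_cited must be between 0 and total")
    share = poorly_cited / total
    if share > 0.75:
        return "poorly cited"      # more than 75% of content poorly cited
    if share > 0.25:
        return "moderately cited"  # assumption: anything above 25% up to 75%
    return "well cited"            # 25% or less of content poorly cited


# Counts for Circulation as reported in Table 1 (1,502 of 10,646 articles).
print(classify_journal(1502, 10646))
```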

Statistical Analysis

We summarized data as frequencies and percentages for categorical variables. We present citation counts as median and interquartile range. For the analysis of yearly trend at the article level, we compared the absolute number and the proportion of poorly cited articles within each year from 1997 to 2007. For yearly trend analysis at a journal level, we compared the yearly trend in the proportion of poorly cited journals. To assess the 5-year citation rate among articles where access to the publication is not a barrier, we repeated our analysis (at the article and journal level) among the subset of open-access cardiovascular journals. We used the Cochran–Armitage test for all trend analyses. All statistical tests were 2-sided, and a P value <0.05 was considered significant. We used SAS 9.2 (SAS Institute Inc., Cary, NC).
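For readers unfamiliar with the Cochran–Armitage test for trend in proportions, the sketch below implements its standard large-sample form in plain Python. It is not the SAS procedure used in this study; the function name, the equally spaced default scores, and the yearly counts in the usage example are all illustrative assumptions (the example counts are hypothetical and are not study data).

```python
import math
from typing import Optional, Sequence, Tuple


def cochran_armitage_trend(
    events: Sequence[int],
    totals: Sequence[int],
    scores: Optional[Sequence[float]] = None,
) -> Tuple[float, float]:
    """Return (z, two-sided p) for a linear trend in proportions.

    events[i] is the number of poorly cited articles in year i,
    totals[i] is the number of all articles in year i.
    """
    if scores is None:
        scores = list(range(len(totals)))  # assumption: equally spaced years
    if not (len(events) == len(totals) == len(scores)):
        raise ValueError("events, totals and scores must have equal length")

    n_total = sum(totals)
    p_bar = sum(events) / n_total  # pooled proportion under the null

    # Trend statistic: score-weighted deviations from the pooled expectation.
    t_stat = sum(s * (r - n * p_bar) for s, r, n in zip(scores, events, totals))

    # Variance of the trend statistic under the null hypothesis.
    s1 = sum(n * s for n, s in zip(totals, scores))
    s2 = sum(n * s * s for n, s in zip(totals, scores))
    variance = p_bar * (1 - p_bar) * (s2 - s1 * s1 / n_total)
    if variance <= 0:
        raise ValueError("trend is undefined for these data")

    z = t_stat / math.sqrt(variance)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_two_sided


# Hypothetical counts for three consecutive years (not study data).
z, p = cochran_armitage_trend(events=[52, 50, 46], totals=[100, 100, 100])
print(f"z = {z:.3f}, two-sided P = {p:.3f}")
```

A negative z indicates a declining proportion over time; with two groups only, the statistic reduces to the usual two-proportion z-test.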

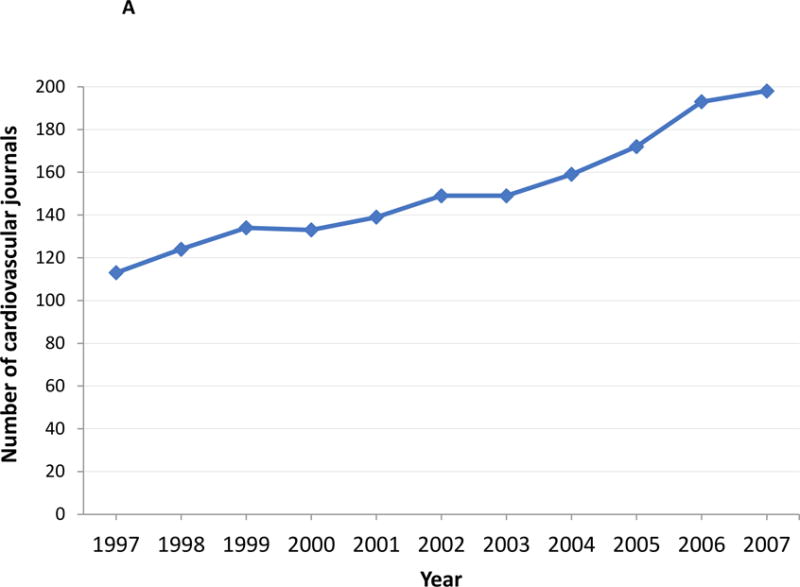

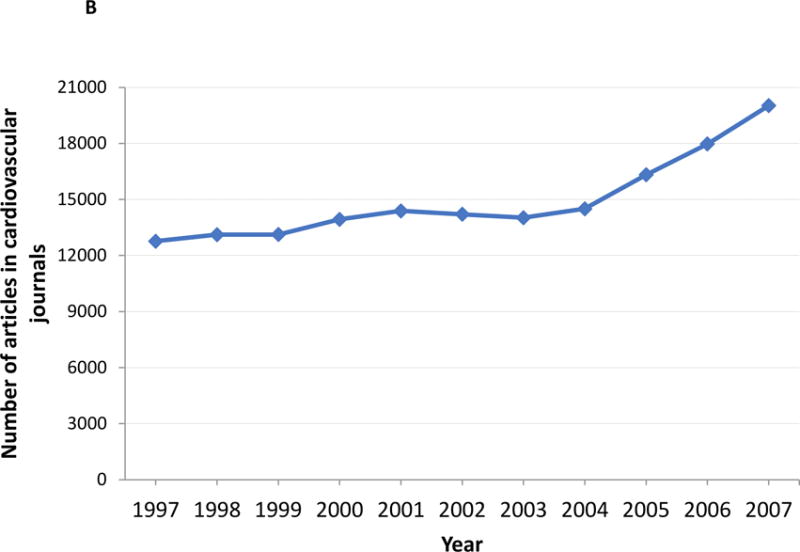

RESULTS

We identified 222 unique cardiovascular journals indexed at any time from 1997–2007 that contained 164,377 original articles over the same period. The number of unique cardiovascular journals indexed in Scopus increased from 113 in 1997 to 198 in 2007, representing an increase of 75.2% (trend P <0.001, Figure 1A). Of the 113 journals from 1997, 103 (91%) were still indexed in Scopus in 2007. The remaining 10 journals (9%) from 1997 were no longer indexed in 2007. Of the 198 journals indexed in Scopus in 2007, 103 (52%) were, therefore, present in 1997 and the remaining 95 journals (48%) were new journals. The number of articles indexed in these journals by Scopus increased by 56.9%, from 12,761 in 1997 to 20,024 in 2007 (trend P <0.001, Figure 1B).

Figure 1.

Figure 1 shows the yearly trend in the number of cardiovascular journals (1A) and articles in cardiovascular journals (1B).

Rates of Poorly Cited Articles

Of the 164,377 articles, 75,550 (46.0%) were poorly cited, of which 25,650 (15.6% of all articles) were not cited at all 5 years after publication. Poorly cited articles were cited a median of 2 times (IQR 1–4 citations) during the 5 years post-publication. The remaining 88,827 (54.0%) articles were well cited. Well cited articles were cited a median of 17 times (IQR 10–32 citations) during the 5 years post-publication.

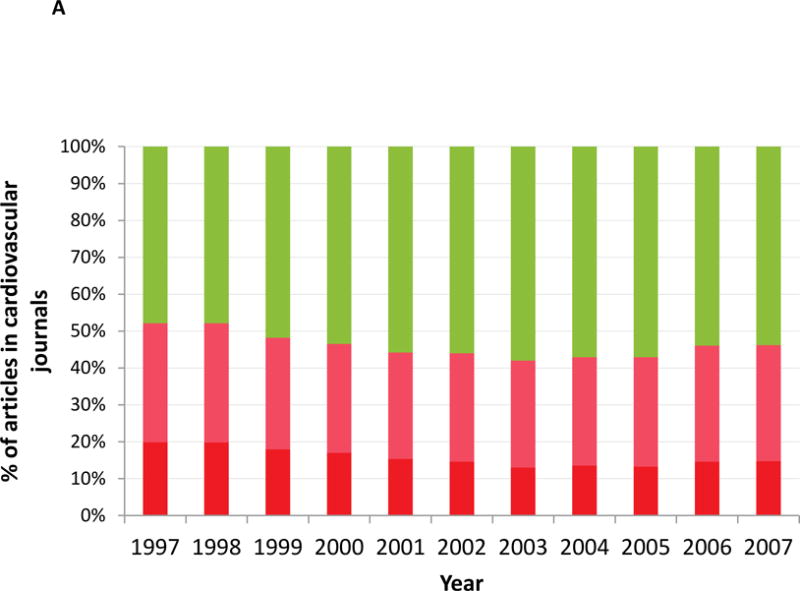

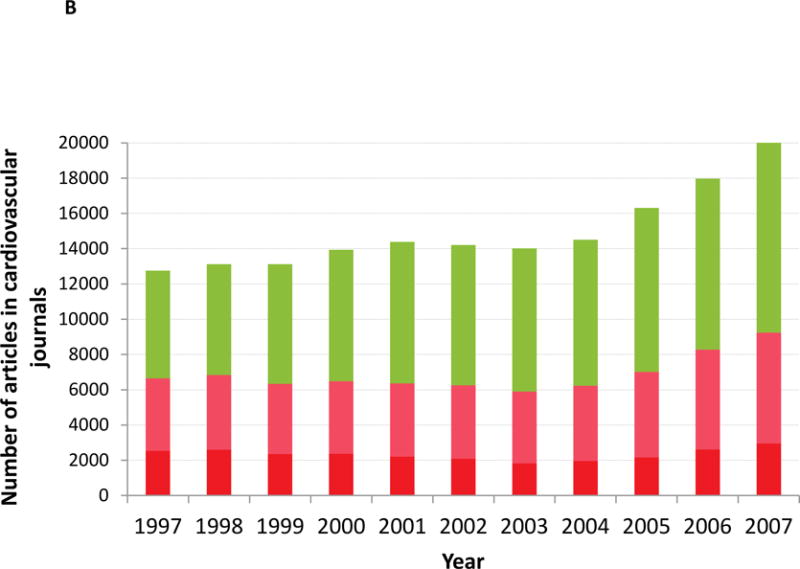

From 1997 to 2007, the proportion of poorly cited articles decreased from 52.1% to 46.2% (trend P <0.001, Figure 2A), largely because of a decrease in the proportion of articles with no citations during the same period (19.1% overall in 1997 to 14.7% overall in 2007, trend P <0.001). However, because of the growth in the volume of all articles, the absolute number of poorly cited articles increased from 6,648 in 1997 to 9,243 in 2007, an increase of 2,595 articles, of which 410 had no citations (Figure 2B).

Figure 2.

Figure 2 shows the yearly trend in the proportion (2A) and absolute number (2B) of poorly cited and well cited articles from 1997–2007. The red bars indicate poorly cited articles with the darker red bars indicating the subset of uncited articles among the poorly cited articles. The green bars indicate well cited articles.
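The apparent tension between a falling proportion and a rising absolute number follows directly from the growth of the denominator. A short arithmetic check using only the 1997 and 2007 counts reported in the text (variable names are illustrative):

```python
# Counts reported in the Results for the first and last study years.
articles = {1997: 12_761, 2007: 20_024}
poorly_cited = {1997: 6_648, 2007: 9_243}

# Share of poorly cited articles in each year, in percent.
share = {year: 100 * poorly_cited[year] / articles[year] for year in articles}

# Absolute change in the number of poorly cited articles.
absolute_increase = poorly_cited[2007] - poorly_cited[1997]

# Relative growth in the total number of articles, in percent.
article_growth = 100 * (articles[2007] - articles[1997]) / articles[1997]

for year in sorted(share):
    print(year, f"{share[year]:.1f}% poorly cited")
print("absolute increase:", absolute_increase)
print(f"growth in all articles: {article_growth:.1f}%")
```

Because the total number of articles grew by more than half while the poorly cited share fell by only about six percentage points, the absolute count of poorly cited articles still rose.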

To assess the possible effect of citations accrued beyond 5 years, we conducted a post-hoc analysis of 2002–2007 citations for journal articles published in 1997. This analysis showed that 91% of the articles that were poorly cited in the first 5 years (1997–2001) continued to be poorly cited within the next 5 years (2002–2007) (Supplemental Figure 1 and Supplemental Table 1).

Rates of poorly cited cardiovascular journals

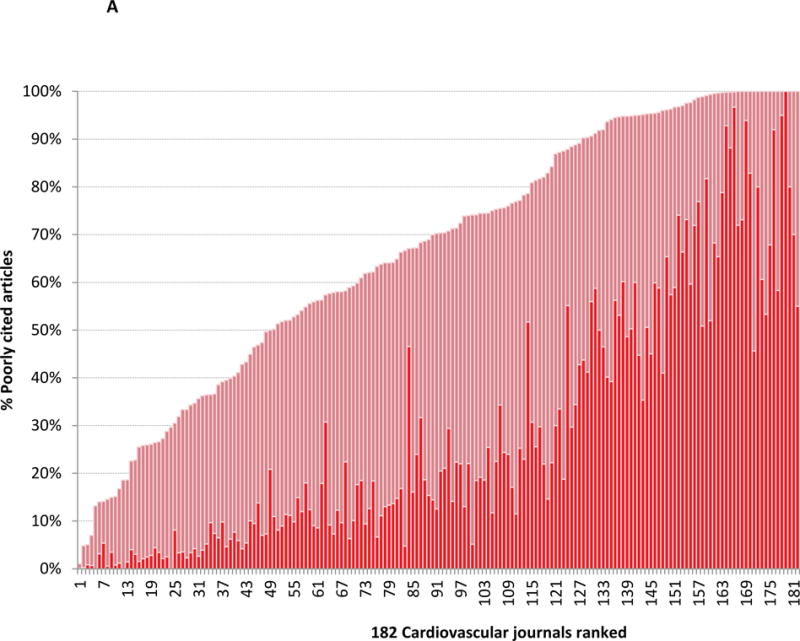

When all cardiovascular journals published from 1997–2007 were ranked by the proportion of poorly cited article content, 44% were poorly cited (i.e. more than three quarters of the journal’s content was poorly cited 5 years after publication, Figure 3A). Of the remaining journals, 47.2% were moderately cited (i.e. contained 26%–75% poorly cited content) and 7.9% were well cited (i.e. contained ≤25% poorly cited content). Table 1 identifies the journals with the lowest proportion of poorly cited content (Supplemental Table 2 includes the full list of journals). While our focus was to assess poorly cited content within journals, we performed a post-hoc analysis of very highly cited articles (>25 citations). We note such articles were rare among poorly cited journals (Supplemental Figure 2).

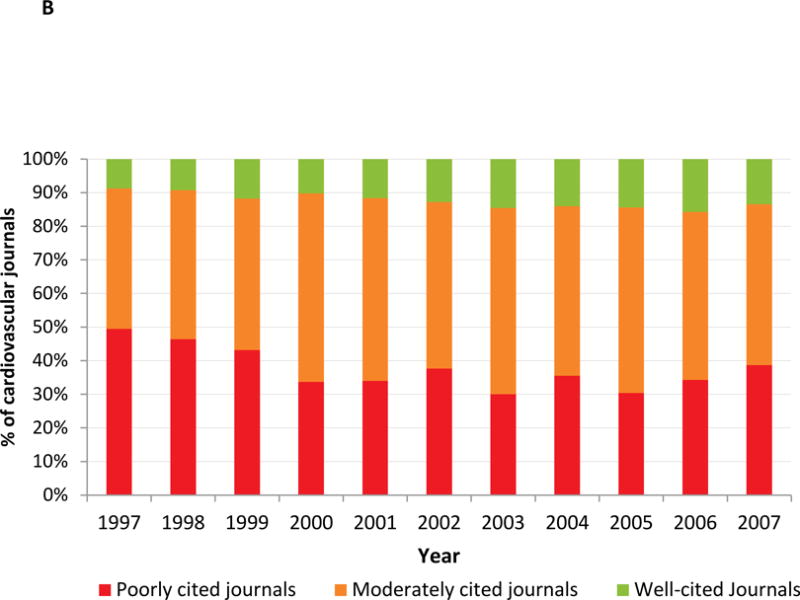

Figure 3.

Figure 3A shows cardiovascular journals ranked by the proportion of poorly cited article content. Journals with the lowest proportion of poorly cited content are placed on the left of the graph (journal 1) and those with the highest proportion of poorly cited content are placed on the right of the graph (journal 182). The red bars indicate the proportion of poorly cited content. The dark red bars indicate the proportion of uncited content, a subset of the poorly cited content. Only journals with at least 20 publications in a given year were included in this analysis. Figure 3B shows the yearly trend in poorly cited journals from 1997 to 2007. The red bars indicate the proportion of poorly cited journals. Orange bars indicate the proportion of moderately cited journals and the green bars indicate the proportion of well cited journals. Only journals with at least 20 publications in a given year were included in this analysis.

Table 1.

Top 10 cardiovascular journals with the lowest proportion of article content that remained poorly cited 5 years after publication#

| Journal Name | 1997 Impact Factor* | 2007 Impact Factor* | Poorly cited | Well cited | Total | % Poorly cited articles from 1997–2007 |
|---|---|---|---|---|---|---|
| CIRCULATION RESEARCH | 8.4 | 9.7 | 32 | 3067 | 3099 | 1% |
| STROKE | 4.3 | 6.3 | 217 | 4260 | 4477 | 5% |
| JOURNAL OF THE AMERICAN COLLEGE OF CARDIOLOGY | 6.7 | 11.1 | 262 | 4921 | 5183 | 5% |
| HYPERTENSION | 4.9 | 7.2 | 241 | 3199 | 3440 | 7% |
| PROGRESS IN CARDIOVASCULAR DISEASES | 3.2 | 2.8 | 7 | 46 | 53 | 13% |
| CARDIOVASCULAR DIABETOLOGY | Not Reported† | Not Reported† | 9 | 55 | 64 | 14% |
| CIRCULATION | 9.8 | 12.8 | 1502 | 9144 | 10646 | 14% |
| JOURNAL OF MOLECULAR AND CELLULAR CARDIOLOGY | 3.3 | 5.2 | 285 | 1668 | 1953 | 15% |
| EUROPEAN HEART JOURNAL | 2.1 | 7.9 | 361 | 2052 | 2413 | 15% |
| ATHEROSCLEROSIS | 2.9 | 4.3 | 475 | 2658 | 3133 | 15% |
| JOURNAL OF HYPERTENSION | 2.8 | 4.4 | 401 | 1991 | 2392 | 17% |

#Only cardiovascular journals with at least 20 published articles in a given year were included in this analysis.

*The journal Impact Factor is derived from Journal Citation Reports for 1997 and 2007 published by Thomson Reuters. The Impact Factor is calculated using the Web of Science citation database and is not based on the citation of articles in the Scopus citation database used in this study.

†Impact Factor not reported in Journal Citation Reports.

From 1997–2007, the proportion of poorly cited cardiovascular journals declined by 10.8 percentage points (from 49.5% to 38.7%, trend P <0.001, Figure 3B). Correspondingly, the proportions of moderately cited and well cited journals increased by 6.1 and 4.7 percentage points, respectively (all trend P <0.05). However, because of the growth in the number of journals, the absolute number of poorly cited journals increased from 45 to 63 journals (trend P <0.01). Similarly, the absolute number of moderately cited journals increased from 38 to 78 and the number of well cited journals increased from 8 to 22 (both trend P <0.01).

When journals were categorized by the type of articles published, six were categorized as publishing purely basic science research, 72 as publishing clinical or population health content, and 104 as publishing mixed article content. The proportion of poorly cited content was 14.4% among the basic science journals, 63.3% among clinical or population health journals, and 40.3% among journals that published mixed article content.

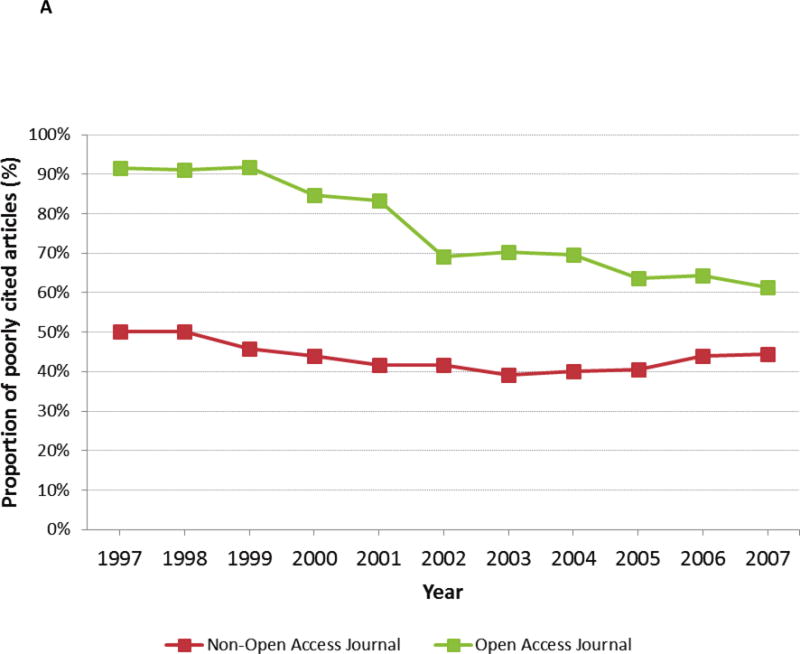

Poorly cited articles in the subset of open-access cardiovascular journals

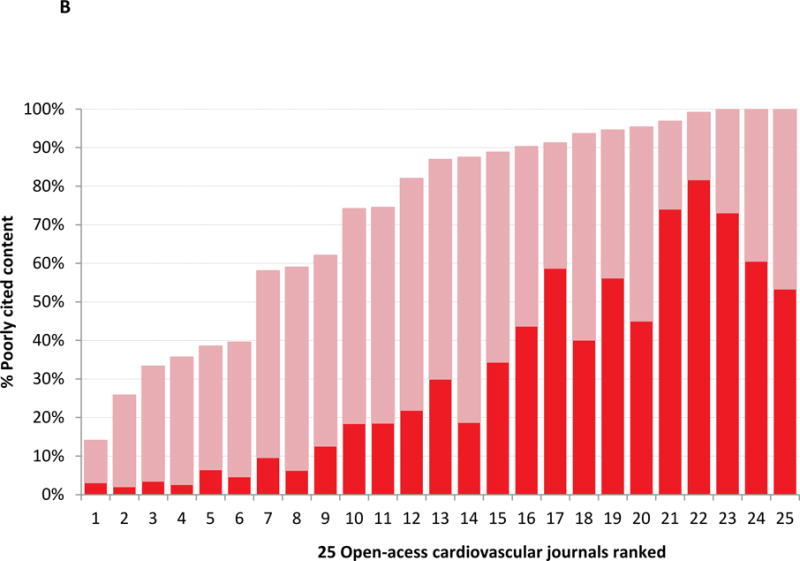

Of the cardiovascular journals included in the study, 25 (11.3%) were open-access journals that contributed 12,829 (7.8%) of all included articles. Of the articles in these open-access journals, 71.8% were poorly cited, with 28.7% overall having no citations at 5 years. From 1997–2007, the proportion of poorly cited articles in open-access journals declined by 30.2 percentage points (from 91.5% to 61.3%, trend P <0.001, Figure 4A). Despite the declining trend, the proportion of uncited and poorly cited articles published in open-access journals remained substantially higher than in non-open-access journals throughout the study period. At a journal level, 14 of the 25 open-access journals (56%) were poorly cited journals with more than three-quarters of the journal’s content poorly cited at 5 years (Figure 4B). Comparatively, 42% (66/157) of non-open-access journals were poorly cited journals.

Figure 4.

Figure 4A shows the trend in uncited and poorly cited articles stratified by the open-access status of the journal. Figure 4B shows open-access cardiovascular journals ranked by the proportion of poorly cited article content. The red bars indicate the proportion of poorly cited content. The dark red bars indicate the proportion of uncited content, a subset of the poorly cited content.

DISCUSSION

In our study of all articles published in peer-reviewed cardiovascular journals from 1997 through 2007, we found that nearly half of all articles were poorly cited 5 years after publication. Although this proportion declined modestly over the decade, the proliferation of journals over the same period has resulted in a substantial increase in the absolute number of poorly cited articles. Furthermore, we found that 44% of journals have more than three-quarters of their content poorly cited 5 years after publication. Using a conservative measure of poor citation that included self-citations, our findings suggest that many of the articles published in the cardiovascular literature are rarely or never cited in subsequent articles. Such high rates of poorly cited articles may indicate significant waste in the research enterprise.

Several reasons may explain poor citation of articles. The literature frequently notes studies that lack novelty, address topics of little relevance, or have methodological limitations; such studies, lacking in quality or usefulness, may be poorly cited because they make minimal contributions to scientific advancement. Even novel and high-quality articles may remain poorly cited because of lack of recognition of their scientific merit.15, 16 For example, it is well known that researchers often fail to recognize and incorporate appropriate references, a problem that may be compounded by the ever-increasing volume of articles that are produced.16 Furthermore, factors relating to article recognition, such as the number of keywords and authors, are known to influence citation independent of the article’s scientific content. However, our observations occurred during a period of marked expansion of Internet access and the emergence of open-access journals, public repositories such as PubMed Central, and social media; articles’ accessibility, visibility, and potential for recognition are perhaps more favorable now than ever before. For this reason, lack of recognition alone is unlikely to explain the near 50% poor citation rates observed in our study.

Our findings extend the literature by showing the temporal growth in the number of poorly cited articles. In the early 1990s, several cross-sectional studies reported that 16–55% of articles in the field of science were not cited, with rates as high as 98% reported in the arts and humanities literature.17–19 These observations raised the question of whether such large-scale ‘uncitedness’ reflected waste of research resources. However, concern existed that citations were not comprehensively captured because citation databases at the time were relatively small and indexed only the main journals.15, 16, 20 We used a citation database that is several-fold larger and more likely to comprehensively capture citations than those used in prior investigations of this topic. We show the presence of a high proportion of poorly cited articles in the cardiovascular literature and a concerning growth in the number of poorly cited articles. We focus on the cardiovascular literature because citation patterns can be variable between subspecialties with autonomous journal networks;15, 16, 21 focusing on the cardiovascular literature allowed us to closely examine citation patterns within a specialty that has experienced rapid publication growth. However, the growth in publications is a widespread phenomenon in medicine1 and our findings are likely to be of relevance to the broader medical literature.

We also show for the first time that a large proportion of cardiovascular journals are poorly cited and that the absolute number of poorly cited journals is increasing, an observation that questions the value of many of the new cardiovascular journals. Journal publishing is a profitable enterprise. The continued expansion of cardiovascular journals raises the possibility that monetary gain in the context of demand to publish, rather than the presence of high-quality articles, is the primary driver of the continued journal expansion. Indeed, an increasing presence of open-access ‘pay for publishing’ journals with dubious peer-review processes, or so-called ‘predatory’ journals, is well documented.22 While journals indexed in Scopus are required to meet ethical publishing guidelines, and Scopus is therefore unlikely to include such journals, this trend nevertheless highlights the presence of perverse incentives for continued journal expansion. Of note, we observe a modest decline in the proportion of poorly cited journals with time that may suggest a gradual improvement in the distribution of cited articles among cardiovascular journals.

Irrespective of the cause, poorly cited articles are a cause for concern. Research typically consumes researchers’ time, is expensive, creates demand on regulatory processes such as institutional review board review, and may involve sizable contributions from study subjects. This is equally applicable to both clinical and basic science research. For example, 53% of the US $35.5 billion in federal research funding for Health in 2009 was for basic science research.23 Furthermore, basic science research also requires many (and often extensive) regulatory processes such as institutional review board approvals for animal studies, tissue banking or genetic testing. If the end research output is of limited use, or its value is not recognized, then these resources and contributions may be wasted. Many of the poorly cited articles that we identify may well be low-cost studies produced with minimal resources. However, poor quality studies, and misleading findings, are frequent even among trials registered in clinical trials registries,6 leading some to suggest waste in the research enterprise is a widespread and costly problem.24 Several recently proposed strategies may reduce such waste and increase the use of research including (1) prioritizing research addressing concerns of patients and clinicians to ensure research output is relevant to end users;25 (2) improving research design, conduct, analysis, and reporting to improve the quality of research output;26 (3) ensuring research protocols, full study reports, and data are accessible to the end users;27 and (4) streamlining regulatory and study management processes so that they are appropriate to the risks associated with the research thereby minimizing undue burden on researchers and patients.26 Rigorous application of these steps in the research process may generate output of greater use to others.

Our findings have implications for how we value articles, journals, and research productivity more broadly.28 The number of publications by an author is the most commonly used measure of research performance29 and often a deciding factor in awarding qualifications, academic promotions, and granting of competitive research funding. However, our observations suggest that the number of publications alone may be a poor indicator of the use of the research output. Alternatives such as the H-index,30 which considers both the number of publications and the number of citations, or adoption of a panel of indices31 or article level metrics,32 may provide a more robust assessment of the author’s research productivity and the value of the research output.
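To illustrate how the H-index combines publication and citation counts, the following minimal sketch computes it from a list of per-article citation counts, using Hirsch's definition (the largest h such that h articles have at least h citations each). The function name and the example citation counts are illustrative assumptions, not data from this study.

```python
from typing import Iterable


def h_index(citation_counts: Iterable[int]) -> int:
    """Largest h such that h articles each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank  # the top `rank` articles all have >= rank citations
        else:
            break
    return h


# Two hypothetical authors with five articles each.
print(h_index([10, 8, 5, 4, 3]))
print(h_index([30, 1, 0, 0, 0]))
```

The second hypothetical author has the same number of publications as the first but a much lower index, because most of those articles are poorly cited; a simple publication count would not distinguish the two.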

Our analysis has several limitations. First, article citation is the most common quantitative indicator of article impact. However, research may have different types of impact and achieve these impacts by a multitude of diverse routes, which may not be readily measurable or transparent. For example, citation does not consider how well an article is viewed, read, discussed and integrated into grant applications for future research. Alternative measures of research impact include the willingness of others to invest in the research or pay for the knowledge generated,28 financial returns of the research output, dissemination of the findings into guidelines and clinical practice, and the broader health policy, social, economic, and environmental impact. Second, increasing numbers of publications are likely to lead to increased citation of prior publications. This endogeneity bias, over time, may lead to an increase in citation of articles and a progressive reduction in the proportion of articles that are poorly cited. However, despite the endogeneity bias, we only observed a relatively modest reduction in the proportion of poorly cited articles with time, together with an increase in the absolute number of poorly cited articles. Third, we selected documents indexed in Scopus as articles to identify original research rather than individually reviewing articles. While the latter method is perhaps more precise, it was impractical due to the volume of articles included in this analysis. Fourth, research that is likely to lead to the highest impact often tends to be ‘high-risk’; in ‘high risk, high reward’ situations, the research may still warrant investment despite a high degree of anticipated failure. However, even negative or unexpected findings from a well-conducted study are likely to generate important knowledge and one would expect such research output to be well cited. Lastly, the knowledge within an article may be slow to be recognized. However, 5 years is a reasonable duration of time from publication for an article to be recognized.

CONCLUSION

Nearly half of all peer-reviewed articles published in cardiovascular journals are poorly cited 5 years after publication, with continued growth in the absolute number of poorly cited articles from 1997 to 2007. Furthermore, 44% of cardiovascular journals have more than three-quarters of their article content poorly cited at 5 years. While citation is one of many measures of article use and value, such a high proportion of poorly cited articles and journals suggests the presence of waste in the cardiovascular research enterprise, spent on generation of research output that is either unrecognized or of limited use to end-users.

Supplementary Material

Acknowledgments

Funding Sources: This study was supported by grant 1U01HL105270-03 (Center for Cardiovascular Outcomes Research at Yale University) from the National Heart, Lung, and Blood Institute. Dr. Isuru Ranasinghe is supported by an Early Career Fellowship co-funded by the National Health and Medical Research Council of Australia and the National Heart Foundation of Australia. Dr. Ross is supported by the National Institute on Aging (K08 AG032886) and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program.

Disclosures: Drs. Krumholz and Ross receive support from Medtronic, Inc. and Johnson & Johnson to develop methods of clinical trial data sharing, from the Centers for Medicare & Medicaid Services (CMS) to develop and maintain performance measures that are used for public reporting, and from the Food and Drug Administration (FDA) and Medtronic to develop methods for post-market surveillance of medical devices. Dr. Krumholz is Editor of the American Heart Association’s Circulation: Cardiovascular Quality and Outcomes and of the Massachusetts Medical Society’s Journal Watch Cardiology and CardioExchange. Dr. Masoudi is Associate Editor of the American Heart Association’s Circulation: Cardiovascular Quality and Outcomes and of the Massachusetts Medical Society’s Journal Watch Cardiology. Dr. Spertus is the Deputy Editor of the American Heart Association’s Circulation: Cardiovascular Quality and Outcomes. Dr. Ross is Associate Editor of the American Medical Association’s JAMA Internal Medicine.

References

- 1. Zhang Y, Kou J, Zhang XG, Zhang L, Liu SW, Cao XY, Wang YD, Wei RB, Cai GY, Chen XM. The evolution of academic performance in nine subspecialties of internal medicine: an analysis of journal citation reports from 1998 to 2010. PLoS One. 2012;7:e48290. doi: 10.1371/journal.pone.0048290.

- 2. Cooper NJ, Jones DR, Sutton AJ. The use of systematic reviews when designing studies. Clin Trials. 2005;2:260–264. doi: 10.1191/1740774505cn090oa.

- 3. Ross JS, Mulvey GK, Hines EM, Nissen SE, Krumholz HM. Trial Publication after Registration in ClinicalTrials.Gov: A Cross-Sectional Analysis. Plos Med. 2009;6 doi: 10.1371/journal.pmed.1000144.

- 4. Hewitt C, Hahn S, Torgerson DJ, Watson J, Bland MJ. Adequacy and reporting of allocation concealment: review of recent trials published in four general medical journals. Brit Med J. 2005;330:1057–1058. doi: 10.1136/bmj.38413.576713.AE.

- 5. Rutjes AWS, Reitsma JB, Di Nisio M, Smidt N, van Rijn JC, Bossuyt PMM. Evidence of bias and variation in diagnostic accuracy studies. Can Med Assoc J. 2006;174 doi: 10.1503/cmaj.050090.

- 6. Califf RM, Zarin DA, Kramer JM, Sherman RE, Aberle LH, Tasneem A. Characteristics of clinical trials registered in ClinicalTrials.gov, 2007–2010. JAMA. 2012;307:1838–47. doi: 10.1001/jama.2012.3424.

- 7. Glasziou P, Meats E, Heneghan C, Shepperd S. What is missing from descriptions of treatment in trials and reviews? Brit Med J. 2008;336:1472–1474. doi: 10.1136/bmj.39590.732037.47.

- 8. Garfield EMM, Small H. Citation Data as Science Indicators. In: L J, Elkana Y, Thackray A, Merton RK, Zuckerman H, editors. Toward a Metric of Science: The Advent of Science Indicators. New York: John Wiley & Sons; 1978. pp. 181–182.

- 9. Elsevier. Scopus. 2013;2013

- 10. Kulkarni AV, Aziz B, Shams I, Busse JW. Comparisons of citations in Web of Science, Scopus, and Google Scholar for articles published in general medical journals. JAMA. 2009;302:1092–6. doi: 10.1001/jama.2009.1307.

- 11. SCImago. SJR - SCImago Journal & Country Rank. 2007;2013

- 12. Elsevier. Scopus Title List

- 13. McVeigh ME, Mann SJ. The journal impact factor denominator: defining citable (counted) items. JAMA. 2009;302:1107–9. doi: 10.1001/jama.2009.1301.

- 14. Amin M, Mabe MA. Impact factors: use and abuse. Medicina-Buenos Aire. 2003;63:347–354.

- 15. Macroberts MH, Macroberts BR. Problems of Citation Analysis – a Critical-Review. J Am Soc Inform Sci. 1989;40:342–349.

- 16. MacRoberts MH, MacRoberts BR. Problems of Citation Analysis: A Study of Uncited and Seldom-Cited Influences. J Am Soc Inf Sci Tec. 2010;61:1–12.

- 17. Pendlebury DA. Science, citation, and funding. Science. 1991;251:1410–1. doi: 10.1126/science.251.5000.1410-b.

- 18. Hamilton DP. Publishing by–and for?–the numbers. Science. 1990;250:1331–2. doi: 10.1126/science.2255902.

- 19. Hamilton DP. Research papers: who’s uncited now? Science. 1991;251:25. doi: 10.1126/science.1986409.

- 20. Seglen PO. Citations and journal impact factors: questionable indicators of research quality. Allergy. 1997;52:1050–6. doi: 10.1111/j.1398-9995.1997.tb00175.x.

- 21. Garfield E. I had a dream … about Uncitedness. The Scientist. 1998;12:10.

- 22. Haug C. The downside of open-access publishing. N Engl J Med. 2013;368:791–3. doi: 10.1056/NEJMp1214750.

- 23. Foundation NS. Federal Funds for Research and Development: Fiscal Years 2009–11. 2012

- 24. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374:86–9. doi: 10.1016/S0140-6736(09)60329-9.

- 25. Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, Gulmezoglu AM, Howells DW, Ioannidis JP, Oliver S. How to increase value and reduce waste when research priorities are set. Lancet. 2014;383:156–65. doi: 10.1016/S0140-6736(13)62229-1.

- 26. Al-Shahi Salman R, Beller E, Kagan J, Hemminki E, Phillips RS, Savulescu J, Macleod M, Wisely J, Chalmers I. Increasing value and reducing waste in biomedical research regulation and management. Lancet. 2014;383:176–85. doi: 10.1016/S0140-6736(13)62297-7.

- 27. Chan AW, Song F, Vickers A, Jefferson T, Dickersin K, Gotzsche PC, Krumholz HM, Ghersi D, van der Worp HB. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383:257–66. doi: 10.1016/S0140-6736(13)62296-5.

- 28. Krumholz HM. How do we know the value of our research? Circ Cardiovasc Qual Outcomes. 2013;6:371–2. doi: 10.1161/CIRCOUTCOMES.113.000423.

- 29. Patel VM, Ashrafian H, Ahmed K, Arora S, Jiwan S, Nicholson JK, Darzi A, Athanasiou T. How has healthcare research performance been assessed? A systematic review. J Roy Soc Med. 2011;104:251–261. doi: 10.1258/jrsm.2011.110005.

- 30. Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005;102:16569–72. doi: 10.1073/pnas.0507655102.

- 31. Martin BR. The use of multiple indicators in the assessment of basic research. Scientometrics. 1996;36:343–362.

- 32. Neylon C, Wu S. Article-level metrics and the evolution of scientific impact. PLoS Biol. 2009;7:e1000242. doi: 10.1371/journal.pbio.1000242.
