Abstract

Linking the outcomes from interprofessional education to improvements in patient care has been hampered by educational assessments that primarily measure the short-term benefits of specific curricular interventions. Competencies recently published by the Interprofessional Education Collaborative (IPEC) elaborate overarching goals for interprofessional education by specifying desired outcomes for graduating health professions students. The competencies define a transition point between the prescribed and structured educational experience of a professional degree program and the more self-directed, patient-oriented learning associated with professional practice. Drawing on the IPEC competencies for validity, we created a 42-item questionnaire to assess outcomes related to collaborative practice at the degree program level. To establish the usability and psychometric properties of the questionnaire, we administered it to all students on a health science campus at a large urban university in the mid-Atlantic region of the United States. The student responses (n = 481) defined four components aligned in part with the four domains of the IPEC competencies. In addition, the results demonstrated differences in scores by domain that can be used to structure future curricula. These findings suggest that a questionnaire based on the IPEC competencies might provide a measure to assess programmatic outcomes related to interprofessional education. We discuss directions for future research, such as a comparison of results within and between institutions, and how these results could provide valuable insights about the effect of different curricular approaches to interprofessional education and the success of various educational programs at preparing students for collaborative practice.

INTRODUCTION

For more than forty years, policy makers and curriculum planners have proposed interprofessional education and collaborative practice as a means to reduce errors, improve the quality of care, and control health care costs (Institute of Medicine, 1972; Institute of Medicine, 2003; World Health Organization, 2010). However, those who study education in health care continue to struggle to identify methods of interprofessional education that lead to better practice (Reeves, et al., 2009; Reeves, et al., 2011; Barr, 2013; Reeves, Perrier, Goldman, Freeth, & Zwarenstein, 2013). To bridge the gap between education and practice, educators need measures of interprofessional competency to assess the outcomes from health professional degree programs and to determine what approaches to interprofessional education benefit patients and communities. Yet, our search of the literature did not find a suitable instrument to measure graduating competency in interprofessional practice.

A recent review demonstrated the limited number of well-evaluated assessment tools for interprofessional education (Thannhauser, Russell-Mayhew, & Scott, 2010). Tools with well-established psychometric properties are restricted to certain settings, such as measurement of attitudes toward interprofessional learning (Parsell & Bligh, 1999) or profession-specific attitudes resulting from select experiences in interprofessional education (Luecht, Madsen, Taugher, & Petterson, 1990). As a result, almost all published studies of interprofessional education measure changes in short-term educational outcomes (Abu-Rish, et al., 2012). While existing tools make important contributions to an assessment toolbox, a measure is needed to describe how effective a program of study (e.g., a degree program such as four years of professional school) is at training the collaborative competencies needed to support successful interprofessional care.

Useful measurement tools are valid, reliable, and practical and have well-defined sources of error (Nunnally & Bernstein, 1994). Validity emerges from a theoretical understanding of the state being studied, but measures of interprofessional collaboration struggle because of the lack of construct clarity about interprofessional collaboration (Ødegård & Bjørkly, 2012). To provide a theoretical basis for an assessment tool, we examined the competencies for interprofessional education defined by the Interprofessional Education Collaborative (IPEC) (Interprofessional Education Collaborative Expert Panel, 2011). Published for the United States, the IPEC competencies built on preceding work in Canada (Canadian Interprofessional Health Collaborative, 2010) and for the World Health Organization (World Health Organization, 2010). The IPEC competencies were produced by an expert panel and are described as “behavioral learning objectives to be achieved by the end of pre-licensure or pre-certification education” (Interprofessional Education Collaborative Expert Panel, 2011). They are divided into four domains – Values and Ethics, Roles and Responsibilities, Interprofessional Communication, and Teams and Teamwork – which are linked conceptually and purposefully to the Institute of Medicine's core competencies for all health professionals (Institute of Medicine, 2003). Each domain includes eight to eleven specific competencies representing guidelines for institutional planners to use in program and curriculum development. These competencies have been put forth as a possible foundation for common accreditation language in the health professions (Zorek & Raehl, 2013).

The current report describes the initial phase of the development of a questionnaire designed to explore the utility of using the IPEC competencies for assessment. Measuring the interprofessional competency of health professional students could: 1) inform curriculum planning with valid and reliable information, 2) track the effects of degree programs on interprofessional competency, and 3) provide data that can be used within and between institutions to compare programmatic outcomes. Herein, we report the usability and initial psychometric properties of the questionnaire from its initial administration on a health science campus. We also describe differences in scores by competency, year of study, and profession to provide an example of the potential implications of these findings for curriculum planners and other stakeholders.

METHODS

Questionnaire Development

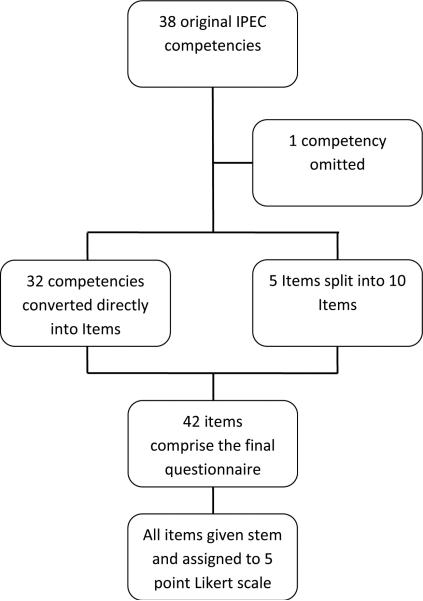

To begin the process of developing questionnaire items (Figure 1), two authors (AD and DDG) adapted 32 of the 38 competencies directly into items with minimal change. Five competencies were found to address two concepts, such as individual and team perspectives; these competencies were divided into 10 survey items.

Figure 1.

Derivation of IPEC Questionnaire from IPEC Competencies

One competency was omitted from the questionnaire because it referred to a group rather than individual behavior. The stem ‘I am able to’ was added to the beginning of each item, and each item was rated on a 5-point Likert scale (1-Strongly Disagree, 2-Disagree, 3-Neither Agree nor Disagree, 4-Agree, and 5-Strongly Agree). To ensure item clarity before distribution, two fourth-year medical students vetted the questionnaire, and edits were made based on their comments to create the final version.

Study Population

The study population consisted of students (N = 3236) enrolled in clinical degree programs for the 2012 academic year on the health science campus at our institution, a major academic health center in an urban environment. The health science campus occupies 53 acres and includes five health science schools (allied health, dentistry, medicine, nursing, and pharmacy) and a tertiary-referral health system. The School of Allied Health comprises nine departments with programs such as physical therapy, occupational therapy, nurse anesthesia, health administration, and gerontology. The School of Dentistry includes both Doctor of Dental Surgery and dental hygiene programs. The School of Medicine enrolls students in the Doctor of Medicine program and graduate students in basic science programs. The School of Nursing educates students for Bachelor's, Master's, and Doctoral degrees in Nursing. The School of Pharmacy has primarily Doctor of Pharmacy students with a small number of other graduate students in Master's or Doctoral programs. Although occasional interprofessional education activities involving fewer than fifty students were ongoing at the time of the survey, no large-scale or high-visibility interprofessional education courses had been implemented at this juncture.

Procedure

After approval by the Institutional Review Board, the questionnaire was administered electronically to the study population in April 2012 using REDCap, an electronic data collection and analysis software program. The students received invitation emails with a link to the online tool. These invitation emails were sent once a week for four weeks by educational leaders, such as the Associate Dean of Education for each school, but these individuals were not aware of whether students had completed the survey. On the first page of the questionnaire, students were asked to enter their email address if they wished to complete the survey. The email address was required for two purposes: 1) to contact five students each week who were randomly selected to receive a $25 gift card, and 2) to link student responses across subsequent years of study and performance. Demographic questions about program of study, year of study, gender, race, and prior healthcare experience were appended to the questionnaire.

Data Analysis

Using SPSS 20.0 software, we analyzed the data with standard statistical procedures (Howell, 2002; Tabachnick & Fidell, 2012). First, the data were screened for outliers and item normality. They were then checked to determine the appropriateness of conducting an exploratory factor analysis on all 42 IPEC survey items. Several well-recognized criteria were used to test the factorability of the items. Bartlett's test of sphericity, which tests the null hypothesis that the correlation matrix is an identity matrix, was significant. The Kaiser-Meyer-Olkin measure of sampling adequacy was .98, above the recommended minimum of .6. Finally, the communalities were all above .3 (see Table 1).
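The two factorability checks described above can be sketched in a few lines. This is a minimal illustration, not the SPSS procedure used in the study: the function names `bartlett_sphericity` and `kmo` are hypothetical, and the data are synthetic stand-ins for Likert responses rather than study data.

```python
import numpy as np
from scipy.stats import chi2


def bartlett_sphericity(data):
    """Bartlett's test that the correlation matrix is an identity matrix."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    # Test statistic: -(n - 1 - (2p + 5) / 6) * ln|R|
    _, logdet = np.linalg.slogdet(corr)
    statistic = -(n - 1 - (2 * p + 5) / 6) * logdet
    dof = p * (p - 1) / 2
    return statistic, dof, chi2.sf(statistic, dof)


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations are derived from the inverse correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diagonal = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diagonal] ** 2)
    q2 = np.sum(partial[off_diagonal] ** 2)
    return r2 / (r2 + q2)


# Synthetic data (assumption): six items driven by one shared trait plus noise.
rng = np.random.default_rng(0)
trait = rng.normal(size=(300, 1))
responses = trait + 0.5 * rng.normal(size=(300, 6))

chi_square, dof, p_value = bartlett_sphericity(responses)
kmo_value = kmo(responses)
```

A significant Bartlett statistic together with a KMO value above .6 is the pattern the paragraph above reports as supporting factorability.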

Table 1.

Factor Loading and Associated Variance for IPEC Competency Survey (n = 481 respondents)

| Item Number | Original IPEC Domain | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Communality |
|---|---|---|---|---|---|---|
| 31 | TT | .786 | .201 | .152 | .271 | 0.755 |
| 40 | TT | .739 | .367 | .351 | .236 | 0.860 |
| 41 | TT | .737 | .356 | .290 | .204 | 0.796 |
| 36 | TT | .708 | .267 | .303 | .292 | 0.750 |
| 32 | TT | .698 | .258 | .201 | .438 | 0.786 |
| 35 | TT | .689 | .313 | .327 | .313 | 0.778 |
| 33 | TT | .679 | .269 | .251 | .431 | 0.782 |
| 25 | IC | .657 | .283 | .356 | .201 | 0.679 |
| 39 | TT | .635 | .324 | .448 | .231 | 0.762 |
| 37 | TT | .609 | .427 | .343 | .332 | 0.781 |
| 34 | TT | .603 | .310 | .295 | .498 | 0.795 |
| 42 | TT | .583 | .425 | .433 | .285 | 0.789 |
| 29 | IC | .571 | .316 | .499 | .307 | 0.769 |
| 30 | IC | .558 | .394 | .489 | .275 | 0.781 |
| 20 | IC | .527 | .354 | .388 | .456 | 0.762 |
| 7 | VE | .287 | .786 | .330 | .261 | 0.877 |
| 9 | VE | .235 | .786 | .382 | .233 | 0.873 |
| 3 | VE | .355 | .771 | .259 | .254 | 0.852 |
| 5 | VE | .315 | .758 | .239 | .357 | 0.858 |
| 4 | VE | .374 | .749 | .306 | .160 | 0.820 |
| 1 | VE | .253 | .743 | .306 | .315 | 0.809 |
| 10 | VE | .243 | .740 | .367 | .317 | 0.842 |
| 2 | VE | .303 | .737 | .320 | .242 | 0.796 |
| 6 | VE | .331 | .729 | .352 | .275 | 0.841 |
| 8 | VE | .422 | .624 | .296 | .271 | 0.729 |
| 12 | RR | .170 | .441 | .680 | .325 | 0.791 |
| 27 | IC | .404 | .397 | .669 | .169 | 0.797 |
| 22 | IC | .297 | .262 | .639 | .341 | 0.681 |
| 38 | TT | .392 | .405 | .637 | .159 | 0.749 |
| 21 | IC | .321 | .447 | .631 | .334 | 0.813 |
| 26 | IC | .430 | .477 | .629 | .180 | 0.840 |
| 24 | IC | .434 | .464 | .620 | .213 | 0.833 |
| 23 | IC | .466 | .407 | .576 | .212 | 0.760 |
| 28 | IC | .488 | .398 | .572 | .277 | 0.800 |
| 11 | RR | .318 | .446 | .560 | .390 | 0.766 |
| 15 | RR | .388 | .373 | .270 | .669 | 0.810 |
| 14 | RR | .449 | .316 | .253 | .658 | 0.798 |
| 13 | RR | .470 | .373 | .241 | .656 | 0.848 |
| 16 | RR | .457 | .370 | .263 | .643 | 0.828 |
| 17 | RR | .391 | .421 | .369 | .571 | 0.792 |
| 19 | RR | .456 | .421 | .360 | .548 | 0.815 |
| 18 | RR | .444 | .422 | .370 | .528 | 0.791 |
| Eigenvalues | | 10.267 | 9.962 | 7.447 | 5.760 | |
| Percentage of total variance | | 24.446 | 23.718 | 17.730 | 13.714 | Total variance: 79.608 |

Note. Factor loadings grouped by cutoff loading of >.5. TT = Teams and Teamwork domain, VE = Values and Ethics domain, IC = Interprofessional Communication domain, RR = Roles and Responsibilities domain. See appendix for full wording of each item.

Given these overall indicators, all 42 items were retained. A principal components analysis with varimax rotation was performed to empirically summarize the dataset and group items into components. For each component and for the tool overall, Cronbach's alphas were calculated to assess reliability. In addition, for each initial IPEC competency domain, mean and median scores were calculated. After completing the exploratory factor analysis, we compared median scores across domains. We then conducted analyses to examine whether there were differences across domains by discipline and by level of education.
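The extraction and rotation step can be sketched as follows. This is an illustrative implementation on synthetic data, not the SPSS routine used in the study: `pca_loadings` and `varimax` are hypothetical helper names, and the rotation shown is raw varimax without the Kaiser normalization that statistical packages often apply by default.

```python
import numpy as np


def pca_loadings(data, n_components):
    """Component loadings from a PCA of the item correlation matrix."""
    corr = np.corrcoef(data, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(corr)
    order = np.argsort(eigenvalues)[::-1][:n_components]
    # Loadings are eigenvectors scaled by the square root of their eigenvalues.
    return eigenvectors[:, order] * np.sqrt(eigenvalues[order])


def varimax(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation via the standard SVD iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        column_scale = np.diag(np.sum(rotated ** 2, axis=0))
        u, s, vt = np.linalg.svd(
            loadings.T @ (rotated ** 3 - rotated @ column_scale / p)
        )
        rotation = u @ vt
        new_criterion = np.sum(s)
        if new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation


# Synthetic data (assumption): four items load on one trait, three on another.
rng = np.random.default_rng(1)
f1 = rng.normal(size=(500, 1))
f2 = rng.normal(size=(500, 1))
data = np.hstack([
    f1 + 0.5 * rng.normal(size=(500, 4)),
    f2 + 0.5 * rng.normal(size=(500, 3)),
])

unrotated = pca_loadings(data, n_components=2)
rotated = varimax(unrotated)
# Each item is assigned to the component on which it loads most strongly.
dominant = np.argmax(np.abs(rotated), axis=1)
```

Because the rotation is orthogonal, each item's communality (the row sum of squared loadings) is the same before and after rotation; only the distribution of loadings across components changes.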

RESULTS

Respondent Characteristics

The questionnaire was completed by 481 of 3236 students for an overall response rate of 14.9%. Response rates for specific populations were 25.4% for Doctor of Medicine students (194/763), 26.3% for Doctor of Pharmacy students (137/520), and 7.8% for Bachelor of Science in Nursing and Master of Science in Nursing students combined (70/902). The majority of the respondents were female (71.1%) and Caucasian (67.9%); the remaining respondents identified as Asian (23.9%), African-American (4.9%), or Other (6.8%) (respondents could select more than one race). These data mirror the overall student population on the health science campus. The percentages of students who described their prior healthcare experience as none, shadowing, part-time employment, and prior career were 7.1%, 41.8%, 27.4%, and 22.5%, respectively.
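The response rates above follow directly from the reported counts. The short check below uses only the numbers given in the text; the dictionary labels and the `response_rate` helper are illustrative names.

```python
# (completed, invited) counts as reported in the text.
counts = {
    "All students": (481, 3236),
    "Doctor of Medicine": (194, 763),
    "Doctor of Pharmacy": (137, 520),
    "BSN and MSN combined": (70, 902),
}


def response_rate(completed, invited):
    """Response rate as a percentage, rounded to one decimal place."""
    return round(100 * completed / invited, 1)


rates = {group: response_rate(*pair) for group, pair in counts.items()}
```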

Measurement Tool Characteristics

Principal components analysis, using a criterion of eigenvalues greater than or equal to 1.0, revealed four components in the response data (Table 1). A loading threshold of 0.50 was used to assist in defining the components. Because of the alignment of each component with a domain of the initial IPEC competencies, the first component was defined as the ‘Teams and teamwork’ domain; the second component, the ‘Values and ethics’ domain; the third component, the ‘Interprofessional communication’ domain; and the fourth component, the ‘Roles and responsibilities’ domain. The eigenvalues showed that the first component explained 24.4% of the variance; the second component, 23.7%; the third component, 17.7%; and the fourth component, 13.7%. In total, the components accounted for 79.6% of the variance in the response data. Each component demonstrated a high degree of internal consistency with overall alphas ranging from 0.96 to 0.98 (see Tables 2, 3, 4 and 5).
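The variance percentages can be recovered from the eigenvalues in Table 1. The sketch below assumes the analysis was run on the correlation matrix, so that each of the 42 standardized items contributes one unit of variance; the source does not state this explicitly, but the reported percentages are consistent with it.

```python
# Eigenvalues for the four retained components, as reported in Table 1.
eigenvalues = [10.267, 9.962, 7.447, 5.760]
n_items = 42  # total variance of 42 standardized items (assumption)

# Share of total variance carried by each component, in percent.
percent_variance = [100 * value / n_items for value in eigenvalues]
total_percent = sum(percent_variance)

# Retention criterion from the text: keep components with eigenvalue >= 1.0.
retained = [value for value in eigenvalues if value >= 1.0]
```

Small differences in the third decimal place relative to Table 1 reflect rounding of the published eigenvalues.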

Table 2.

Alpha statistics for Factor 1 (“Teams and Teamwork” factor)

| Item Number | Original IPEC Domain | Corrected Item – Total Correlation | Cronbach's Alpha if Item Deleted |
|---|---|---|---|
| 31 | TT | .792 | .975 |
| 40 | TT | .907 | .973 |
| 41 | TT | .853 | .974 |
| 36 | TT | .838 | .975 |
| 32 | TT | .840 | .975 |
| 35 | TT | .862 | .974 |
| 33 | TT | .854 | .974 |
| 25 | IC | .784 | .975 |
| 39 | TT | .841 | .975 |
| 37 | TT | .858 | .974 |
| 34 | TT | .857 | .974 |
| 42 | TT | .861 | .974 |
| 29 | IC | .844 | .974 |
| 30 | IC | .848 | .974 |
| 20 | IC | .836 | .975 |

Note. Overall alpha = .976

Table 3.

Alpha statistics for Factor 2 (“Values and Ethics” factor)

| Item Number | Original IPEC Domain | Corrected Item – Total Correlation | Cronbach's Alpha if Item Deleted |
|---|---|---|---|
| 7 | VE | .918 | .972 |
| 9 | VE | .907 | .973 |
| 3 | VE | .897 | .973 |
| 5 | VE | .897 | .973 |
| 4 | VE | .871 | .974 |
| 1 | VE | .872 | .974 |
| 10 | VE | .893 | .973 |
| 2 | VE | .868 | .974 |
| 6 | VE | .898 | .973 |
| 8 | VE | .817 | .975 |

Note. Overall alpha = .976

Table 4.

Alpha statistics for Factor 3 (“Interprofessional Communication” factor)

| Item Number | Original IPEC Domain | Corrected Item – Total Correlation | Cronbach's Alpha if Item Deleted |
|---|---|---|---|
| 12 | RR | .821 | .964 |
| 27 | IC | .855 | .962 |
| 22 | IC | .757 | .966 |
| 38 | TT | .823 | .964 |
| 21 | IC | .876 | .962 |
| 26 | IC | .891 | .961 |
| 24 | IC | .893 | .961 |
| 23 | IC | .845 | .963 |
| 28 | IC | .859 | .962 |
| 11 | RR | .839 | .963 |

Note. Overall alpha = .966

Table 5.

Alpha statistics for Factor 4 (“Roles and Responsibilities” factor)

| Item Number | Original IPEC Domain | Corrected Item – Total Correlation | Cronbach's Alpha if Item Deleted |
|---|---|---|---|
| 15 | RR | .854 | .957 |
| 14 | RR | .840 | .958 |
| 13 | RR | .886 | .955 |
| 16 | RR | .885 | .955 |
| 17 | RR | .860 | .957 |
| 19 | RR | .876 | .955 |
| 18 | RR | .863 | .956 |

Note. Overall alpha = .962
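The three statistics reported in Tables 2 through 5 (overall alpha, corrected item-total correlation, and alpha if item deleted) can be computed as sketched below. The function names are hypothetical and the five-respondent response matrix is invented for illustration; it is not study data.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a (respondents x items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


def item_statistics(items):
    """Per item: corrected item-total correlation and alpha if item deleted."""
    items = np.asarray(items, dtype=float)
    rows = []
    for j in range(items.shape[1]):
        rest = np.delete(items, j, axis=1)
        # "Corrected" means the item is excluded from the total it is
        # correlated with.
        r = np.corrcoef(items[:, j], rest.sum(axis=1))[0, 1]
        rows.append((j, r, cronbach_alpha(rest)))
    return rows


# Hypothetical ratings: five respondents, three items on a 1-5 scale.
ratings = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(ratings)
stats = item_statistics(ratings)
```

When "alpha if item deleted" barely changes from the overall alpha, as in the tables above, no single item is driving or degrading the reliability of the component.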

Differences across Domains, Year of Education, and Discipline

Three sets of analyses are reported herein. First, we compared medians across domains with no consideration given to the level of education variable. Because the data were not normally distributed, non-parametric tests were used for all analyses (Howell, 2002; Tabachnick & Fidell, 2012). The second set of analyses, which resulted in no statistically significant findings, compared medians on each domain to determine whether there were differences across year of education (e.g., Were first year students scoring higher or lower on the ‘Values and Ethics’ domain as compared to their counterparts in their 2nd, 3rd, and 4th+ year of education?). Third, we compared medians on each domain to determine if there were differences across disciplines (e.g., Were medical students scoring higher or lower on each domain as compared to the other disciplines?).

In our first set of analyses, which compared scores across the IPEC domains, the median score on the ‘Values and Ethics’ domain was greater than the median scores on all other domains (p<.001). In addition, the median score on the ‘Teams and Teamwork’ domain was lower than the median scores on all other domains (p=.002). Significant differences were not found between the scores for the ‘Roles and Responsibilities’ and ‘Interprofessional Communication’ domains (p = .89). Table 6 provides summary data across domains.

Table 6.

Means, medians, and standard deviations across domains for all respondents

| Domain | n | M | SD | Md |
|---|---|---|---|---|
| Values and Ethics* | 478 | 4.330 | .889 | 4.600 |
| Roles and Responsibilities | 475 | 4.126 | .888 | 4.222 |
| Interprofessional Communication | 475 | 4.138 | .862 | 4.272 |
| Teams and Teamwork* | 475 | 3.998 | .882 | 4.000 |

*Scores for the ‘Values and Ethics’ and ‘Teams and Teamwork’ domains were significantly different from those for the other domains (p<.01)
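Domain scores of this kind can be derived from item ratings as sketched below. The item-to-domain mapping is read from the "Original IPEC Domain" column of Table 1. Scoring each domain as the mean of its item ratings is an assumption: it is consistent with the reported medians (for example, 4.222 equals 38/9 for the nine Roles and Responsibilities items), but the scoring rule is not stated explicitly. The respondent shown is hypothetical.

```python
from statistics import mean

# Item numbers per original IPEC domain, as listed in Table 1.
DOMAIN_ITEMS = {
    "Values and Ethics": range(1, 11),
    "Roles and Responsibilities": range(11, 20),
    "Interprofessional Communication": range(20, 31),
    "Teams and Teamwork": range(31, 43),
}


def domain_scores(responses):
    """Mean rating per domain for one respondent.

    `responses` maps item number (1-42) to a rating on the 1-5 scale.
    """
    return {
        domain: mean(responses[item] for item in items)
        for domain, items in DOMAIN_ITEMS.items()
    }


# Hypothetical respondent: 'Strongly Agree' on Values and Ethics items,
# 'Agree' on everything else.
respondent = {item: (5 if item <= 10 else 4) for item in range(1, 43)}
scores = domain_scores(respondent)
```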

Next, we examined the differences across the competency domains to determine whether differences existed across year of education. Due to the data not being normally distributed and groups having unequal sample sizes, a non-parametric Kruskal-Wallis test was performed. Results indicated no statistically significant differences across level of education for the ‘Values and Ethics’, ‘Roles and Responsibilities’, ‘Interprofessional Communication’, and ‘Teams and Teamwork’ domains (p=.311, p=.915, p=.400, p=.967; respectively).

For the third analysis, we excluded health professions schools with a low number of respondents: analyzed response data included only students in the schools of medicine, nursing, and pharmacy. We conducted a Kruskal-Wallis non-parametric test to examine whether differences in each domain existed across disciplines. When differences in medians were examined across disciplines, no significant differences were found for scores in the ‘Values and Ethics’ (p=.327), ‘Interprofessional Communication’ (p=.316), or ‘Teams and Teamwork’ (p=.119) domains. However, for the ‘Roles and Responsibilities’ domain, the median score for nursing students differed significantly from the scores of the other disciplines (p=.047).
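A Kruskal-Wallis comparison of this kind can be run with `scipy.stats.kruskal`, which takes one sample per group and applies a tie correction. The scores below are invented to illustrate the call and are not study data; the study itself used SPSS.

```python
from scipy.stats import kruskal

# Hypothetical 'Roles and Responsibilities' domain scores by school.
medicine = [4.2, 4.0, 3.9, 4.4, 4.1, 4.3]
pharmacy = [4.1, 4.2, 4.0, 3.8, 4.3]
nursing = [4.7, 4.6, 4.9, 4.4, 4.8, 5.0, 4.5]

# H statistic and p-value for the null hypothesis that all groups come
# from the same distribution; unequal group sizes are allowed.
statistic, p_value = kruskal(medicine, pharmacy, nursing)
significant = p_value < 0.05
```

The test works on ranks rather than raw scores, which is why it suits skewed Likert-derived data with unequal group sizes. A significant result indicates only that at least one group differs, so pairwise follow-up comparisons would be needed to identify which one.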

DISCUSSION

To measure overall competency in interprofessional collaboration, we sought to develop an assessment tool based on the IPEC competencies (Interprofessional Education Collaborative Expert Panel, 2011). We assessed the initial psychometrics of the tool by collecting data across the student population of a health science campus. Based on data collected from 481 student respondents, the tool was found to have four discrete components that accounted for 79.6% of the variance in response data. The items in these components aligned with the original domains defined by the IPEC group, strengthening the evidence for these constructs within the IPEC competencies. Because interprofessional competency frameworks vary (for example, the Canadian Interprofessional Health Collaborative competencies have six domains; Canadian Interprofessional Health Collaborative, 2010), future research could include direct comparisons of various competency frameworks to define the relative significance of each domain and determine how different constructs contribute to the conceptualization of interprofessional collaboration.

Each item had a high degree of communality with the other items. In addition, the tool as a whole and each component within the tool demonstrated high reliability coefficients. This finding suggests that the items are measuring related concepts. Future efforts to develop the tool should include a confirmatory factor analysis within a different population representing several institutions. Based on these results, the scale may be able to be shortened and still retain the discriminative properties important for a measurement tool. In addition, examining the responses from practitioners who engage in interprofessional care effectively could strengthen the tool and improve conceptual clarity about interprofessional care.

The differences in ratings by IPEC domain help define potential curricular directions, specifically competency-driven, sequenced learning experiences that span programs of study (Blue, Mitcham, Smith, Raymond, & Greenberg, 2010). For example, the high ratings in the ‘Values and Ethics’ domain suggest students enter health professions education with a positive view of collaborative practice. Further, the study results suggest these views are largely maintained throughout the students’ educational experience. In contrast, the low scores in the domain of ‘Teams and Teamwork’ underscore this domain's complex concepts in which students find more difficulty obtaining competency. The ‘Teams and Teamwork’ competencies may build on the attitudinal foundation of the ‘Values and Ethics’ domain through the knowledge and skills defined by the intermediately scored domains of ‘Roles and Responsibilities’ and ‘Interprofessional Communication’. One strategy would be to support the commitment of students to interprofessional care denoted by the scores in the ‘Values and Ethics’ domain through institutional emphasis on interprofessional education. Concurrently, early classroom-based curricula on ‘Roles and Responsibilities’ and best practices in ‘Interprofessional Communication’ could be followed by simulation- or clinically-based education designed to develop and demonstrate competency in the more advanced concepts of communication and teamwork. Following the changes in domain scores over time could help track the overall success of educational programs at building these competencies.

Although prior studies of tools for measuring interprofessional concepts have found significant differences between health professions (Horsburgh, Lamdin, & Williamson, 2001; Braithwaite, et al., 2013), we found a difference in only one domain: higher scores in the ‘Roles and Responsibilities’ domain for nursing students. This constellation of findings may stem from different tools measuring separate concepts (i.e., attitudes towards interprofessional education and care versus ratings of competency) or from differences in study populations or settings. Overall, our findings suggest students identify similar unmet needs in competencies related to interprofessional care and support centralized, institutional programs to develop interprofessional education. While a larger group of respondents may increase the statistical power to detect a difference between professions, the clinical impact of any observed variation should also be quantified.

Analysis of student responses showed no improvement of interprofessional competency as students progressed through training. Although no formal, large-scale interprofessional education programs existed at our institution at the time of the survey, we expected existing experiences in the clinical environment to shape interprofessional knowledge, skills, and attitudes (Hafferty, 1998), perhaps for the worse, as in prior studies that found decreasing empathy during the clinical education of medical students (Hojat, et al., 2009). Although we saw no decrements related to acculturation in the clinical environment, educators should prepare students to assess their own interprofessional competency and account for the potential effects of the practice environment on attitudes and overall performance (Institute of Medicine, 2009). Another possibility is that the tool may not discriminate changes in competency over time and is better used as a cross-sectional rather than longitudinal measure.

Because the goal of this questionnaire is to help define the success of educational institutions at graduating collaborative health professions students, the results of this survey are most valuable when viewed in comparison to data from other institutions. Other outcome surveys, such as the Association of American Medical Colleges (AAMC) Graduation Questionnaire, have been used to compare institutions and identify curricular needs (Lockwood, Sabharwal, Danoff, & Whitcomb, 2004). Being able to compare the outcomes of interprofessional education programs across institutions would allow educational planners to measure the effects of the culture and various interprofessional education experiences at an institution and adopt the most beneficial practices from across the educational landscape. In addition, other parties concerned with the interprofessional competency of graduates – such as employers, accreditors, directors of training programs, and prospective students – could use these comparative data to assess how well a program prepares its students for interprofessional practice. Future studies could expand this comparative framework to clinical units, practitioners, and continuing professional development.

Major limitations of this study include the low response rate and the restriction to one academic health center; our findings may not generalize to other sites. However, because the survey respondents mirrored our campus population in gender and race, the findings may be applicable locally. In addition, directed efforts to recruit participants in certain schools (e.g. nursing) may increase the ability to draw conclusions in the future. People also tend to overrate their abilities on instruments of self-assessed competency, particularly in situations where the self-raters are not being stimulated by relevant situational factors (Eva & Regehr, 2011). Linking self-assessment data from this instrument to objective measures of performance from the clinical realm (including interprofessional practice sites) or educational settings such as simulation should provide an opportunity to further validate the survey.

CONCLUDING COMMENTS

Based on responses from students across a health science campus, a questionnaire created from the IPEC competencies (Interprofessional Education Collaborative Expert Panel, 2011) demonstrated four components that aligned with the four domains of the IPEC competencies. These findings strengthen the theoretical basis for the competencies while also suggesting some approaches to improve the instrument and our understanding of interprofessional practice. Comparison of scores by domain demonstrated high attitudinal scores and lower ratings for skills and behaviors. These ratings can also be used by educational planners to structure curricula in interprofessional care. Few differences were seen in scores by program or year of study, suggesting a homogeneous student population unaffected by current educational experiences. Further study is needed to refine the instrument, especially across other populations, including students of other institutions and practicing health professionals. With a well-established instrument to measure interprofessional competency, outcomes between institutions may be compared in order to appraise the merits of different institutional approaches to interprofessional education and to guide certification, hiring, and matriculation decisions related to desired competency in interprofessional practice.

Table 7.

Overall Mean Scores by School Grouped by IPEC Domain

| IPEC Domain | Medicine (N=194) | Pharmacy (N=137) | Nursing (N=70) |
|---|---|---|---|
| Values and Ethics | 4.335 (0.784); 4.55 | 4.358 (0.861); 4.70 | 4.407 (0.913); 4.80 |
| Roles and Responsibilities | 4.116 (0.807); 4.22 | 4.121 (0.832); 4.22 | 4.311 (0.929); 4.67* |
| Interprofessional Communication | 4.149 (0.790); 4.18 | 4.112 (0.803); 4.18 | 4.255 (0.885); 4.45 |
| Teams and Teamwork | 3.996 (0.796); 4.00 | 3.995 (0.849); 4.00 | 4.155 (0.910); 4.25 |

Note. Each cell shows the mean (SD) followed by the median score for that school.

*Denotes statistically significant difference in comparison with other schools (p<.05)

Acknowledgments

The authors would like to thank Molly Madden and Jessica Evans for their assistance in study development and data collection and the faculty and participants of the Harvard-Macy Institute's Program for Educators in the Health Professions for guidance in study development.

Alan Dow is supported by a faculty fellowship grant from the Josiah H. Macy, Jr. Foundation and by a grant from the Donald W. Reynolds Foundation. Deborah DiazGranados and Paul Mazmanian are funded by a grant award (UL1TR000058) from the NIH. Alan Dow and Paul Mazmanian are supported by a grant award (UD7HP26044) from the Department of Health and Human Services (DHHS), Health Resources and Services Administration, the Bureau of Health Professions (BHPr), Division of Nursing, under the Nurse Education, Practice, Quality, and Retention (NEPQR) Program - Interprofessional Collaborative Practice. The contents are solely the responsibility of the authors and do not necessarily represent official views of the National Center for Advancing Translational Sciences, the Josiah H. Macy Jr. Foundation, the Donald W. Reynolds Foundation, the National Institutes of Health, the U.S. Government, DHHS, BHPr or the Division of Nursing.

Footnotes

Declaration of Interest

The authors report no conflict of interest. The authors alone are responsible for the writing and content of this paper.

REFERENCES

- Abu-Rish E, Kim S, Choe L, Varpio L, Malik E, White A, et al. Current trends in interprofessional education of health sciences students: A literature review. Journal of Interprofessional Care. 2012;26(6):444–451. doi: 10.3109/13561820.2012.715604. doi: 10.3109/13561820.2012.715604. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barr H. Toward a theoretical framework for interprofessional education. Journal of Interprofessional Care. 2013;27(1):4–9. doi: 10.3109/13561820.2012.698328.

- Blue A, Mitcham M, Smith T, Raymond J, Greenberg R. Changing the future of health professions: embedding interprofessional education within an academic health center. Academic Medicine. 2010:1290–1295. doi: 10.1097/ACM.0b013e3181e53e07.

- Braithwaite J, Westbrook M, Nugus P, Greenfield D, Travaglia J, Runciman W, et al. Continuing differences between health professions' attitudes: the saga of accomplishing systems-wide interprofessionalism. International Journal for Quality in Health Care. 2013;25(1):8–15. doi: 10.1093/intqhc/mzs071.

- Canadian Interprofessional Health Collaborative. A National Interprofessional Competency Framework. University of British Columbia; Vancouver: 2010.

- Eva K, Regehr G. Exploring the divergence between self-assessment and self-monitoring. Advances in Health Sciences Education: Theory and Practice. 2011;16(3):311–329. doi: 10.1007/s10459-010-9263-2.

- Hafferty F. Beyond Curriculum Reform: Confronting Medicine's Hidden Curriculum. Academic Medicine. 1998:403–407. doi: 10.1097/00001888-199804000-00013.

- Hojat M, Vergare M, Maxwell K, Brainard G, Herrine S, Isenberg G, et al. The devil is in the third year: a longitudinal study of erosion of empathy in medical school. Academic Medicine. 2009:1182–1191. doi: 10.1097/ACM.0b013e3181b17e55.

- Horsburgh M, Lamdin R, Williamson E. Multiprofessional learning: the attitudes of medical, nursing and pharmacy students to shared learning. Medical Education. 2001;35(9):876–883. doi: 10.1046/j.1365-2923.2001.00959.x.

- Howell DC. Statistical Methods for Psychology. 5th ed. Duxbury; Pacific Grove, CA: 2002.

- Institute of Medicine. Educating for the health team. National Academy of Sciences; Washington, DC: 1972.

- Institute of Medicine. Health professions education: a bridge to quality. National Academy of Sciences; 2003.

- Institute of Medicine. Redesigning Continuing Education in the Health Professions. National Academies Press; Washington, DC: 2009.

- Interprofessional Education Collaborative Expert Panel. Core competencies for interprofessional collaborative practice: report of an expert panel. Interprofessional Education Collaborative; Washington, DC: 2011.

- Lockwood J, Sabharwal R, Danoff D, Whitcomb M. Quality improvement in medical students' education: the AAMC medical school graduation questionnaire. Medical Education. 2004;38(3):234–236. doi: 10.1111/j.1365-2923.2004.01760.x.

- Luecht R, Madsen M, Taugher M, Petterson B. Assessing professional perceptions: design and validation of an Interdisciplinary Education Perception Scale. Journal of Allied Health. 1990;19(2):181–191.

- Nunnally J, Bernstein I. Psychometric Theory. McGraw-Hill; New York, NY: 1994.

- Parsell G, Bligh J. The development of a questionnaire to assess the readiness of health care students for interprofessional learning (RIPLS). Medical Education. 1999;33(2):95–100. doi: 10.1046/j.1365-2923.1999.00298.x.

- Reeves S, Goldman J, Gilbert J, Tepper J, Silver I, Suter E, et al. A scoping review to improve conceptual clarity of interprofessional interventions. Journal of Interprofessional Care. 2011:167–174. doi: 10.3109/13561820.2010.529960.

- Reeves S, Perrier L, Goldman J, Freeth D, Zwarenstein M. Interprofessional education: effects on professional practice and healthcare outcomes (update). The Cochrane Database of Systematic Reviews. 2013;3:CD002213. doi: 10.1002/14651858.CD002213.pub3.

- Reeves S, Zwarenstein M, Goldman J, Barr H, Freeth D, Hammick M, et al. Interprofessional education: effects on professional practice and health care outcomes. The Cochrane Database of Systematic Reviews. 2008:CD002213. doi: 10.1002/14651858.CD002213.pub2.

- Tabachnick BG, Fidell LS. Using Multivariate Statistics. 6th ed. Allyn & Bacon; Needham Heights, MA: 2012.

- Thannhauser J, Russell-Mayhew S, Scott C. Measures of interprofessional education and collaboration. Journal of Interprofessional Care. 2010;24(4):336–349. doi: 10.3109/13561820903442903.

- World Health Organization. Framework for action on interprofessional education and collaborative practice. World Health Organization; Geneva: 2010.

- Zorek J, Raehl C. Interprofessional education accreditation standards in the USA: A comparative analysis. Journal of Interprofessional Care. 2013;27(2):123–130. doi: 10.3109/13561820.2012.718295.