Abstract

Screaming is arguably one of the most relevant communication signals for survival in humans. Despite their practical relevance and their theoretical significance as innate [1] and virtually universal [2, 3] vocalizations, what makes screams a unique signal and how they are processed is not known. Here, we use acoustic analyses, psychophysical experiments, and neuroimaging to isolate those features that confer to screams their alarming nature, and we track their processing in the human brain. Using the modulation power spectrum (MPS, [4, 5]), a recently developed neurally-informed characterization of sounds, we demonstrate that human screams cluster within a restricted portion of the acoustic space (between ∼30–150 Hz modulation rates) that corresponds to a well-known perceptual attribute, roughness. In contrast to the received view that roughness is irrelevant for communication [6], our data reveal that the acoustic space occupied by the rough vocal regime is segregated from other signals, including speech, a prerequisite to avoid false alarms in normal vocal communication. We show that roughness is present in natural alarm signals as well as in artificial alarms, and that the presence of roughness in sounds boosts their detection in various tasks. Using fMRI, we show that acoustic roughness engages subcortical structures critical for rapidly appraising danger. Altogether, these data demonstrate that screams occupy a privileged acoustic niche that, being separated from other communication signals, ensures their biological and ultimately social efficiency.

Results

Screams result from the bifurcation of regular phonation to a chaotic regime, thereby making screams particularly difficult to predict and ignore [2]. While previous research in humans suggested that acoustic parameters such as ‘jitter’ and ‘shimmer’ [7-9] are modulated in screams, whether such dynamics and parameters correspond to a specific acoustic regime and how such sounds impact receivers' brains remain unclear.

To characterize the spectro-temporal specificity of screams, we used the modulation power spectrum (MPS) (Figure 1). The MPS, beyond classical representations such as the waveform and spectrogram (Figure 1A and B, upper and middle panels), displays the time-frequency power in modulation across both spectral and temporal dimensions (Figure 1A and B, lower panels). The MPS has become a particularly useful tool in auditory neuroscience because it provides a neurally and ecologically relevant parameterization of sounds [5, 6, 10].
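As an illustration of the representation described above, the following minimal sketch computes an MPS as the two-dimensional Fourier transform of a spectrogram, using the amplitude-modulated tone of Figure 1A as input. It is not the analysis code used in this study: the function names, the 16 kHz sampling rate, the window and hop sizes, the modulation depth, and the use of a linear-amplitude (rather than log-amplitude) spectrogram are all assumptions made for the example.

```python
import numpy as np

def spectrogram(x, win_len=256, hop=32):
    """Magnitude spectrogram (frequency x time) from a Hann-windowed STFT."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack(
        [x[i * hop : i * hop + win_len] * window for i in range(n_frames)], axis=1
    )
    return np.abs(np.fft.rfft(frames, axis=0))

def modulation_power_spectrum(x, fs, win_len=256, hop=32):
    """Power of the 2D Fourier transform of the spectrogram.

    Returns the MPS (spectral modulation x temporal modulation), the temporal
    modulation axis in Hz, and the spectral modulation axis in cycles/Hz.
    """
    spec = spectrogram(x, win_len, hop)
    spec = spec - spec.mean()  # remove the overall mean before transforming
    mps = np.abs(np.fft.fftshift(np.fft.fft2(spec))) ** 2
    wt = np.fft.fftshift(np.fft.fftfreq(spec.shape[1], d=hop / fs))
    wf = np.fft.fftshift(np.fft.fftfreq(spec.shape[0], d=fs / win_len))
    return mps, wt, wf

# 1000 Hz tone, amplitude modulated at 25 Hz, 1 s long (assumed parameters).
fs = 16000
t = np.arange(fs) / fs
tone = (1 + 0.9 * np.cos(2 * np.pi * 25 * t)) * np.sin(2 * np.pi * 1000 * t)

mps, wt, wf = modulation_power_spectrum(tone, fs)
positive = wt > 0
profile = mps[:, positive].sum(axis=0)  # collapse across spectral modulations
peak_rate = wt[positive][np.argmax(profile)]
print(f"strongest temporal modulation: {peak_rate:.1f} Hz")
```

With these settings the strongest temporal modulation falls in the bin closest to the 25 Hz modulation rate, which corresponds to the peak highlighted in the MPS of Figure 1A.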

Figure 1.

The modulation power spectrum (MPS): examples and ecological relevance.

(A) Representations of a 1000 Hz tone amplitude modulated at 25 Hz. Top, waveform. Middle, spectrogram. Bottom, MPS: power modulations in the spectral (y-axis) and temporal (x-axis) domains. 25 Hz modulation highlighted.

(B) As in A, for a spoken sentence.

(C) Modulations in human vocal communication. Perceptual attributes occupy distinct areas of the MPS and encode distinct categories of information. Modulations corresponding to pitch (blue) carry gender/size information [6, 11]. Temporal modulations below 20 Hz (green) encode linguistic meaning [13, 15]. Orange rectangles delimit ‘roughness’ [16, 17]. This unpleasant attribute has not yet been linked to ecologically relevant functions. We hypothesize that this part of the MPS space might be dedicated to alarm signals.

In speech, spectro-temporal attributes encode distinct categories of information, which in turn occupy distinct areas of the MPS (Figure 1B and C). For instance, whereas the fundamental frequency of the voice informs the listener about the gender of the speaker [6, 11, 12] (Figure 1C, blue region), slow temporal fluctuations carry cues such as the syllabic or prosodic information that underlie parsing and decoding speech to extract meaning [13-15] (Figure 1C, green region). Interestingly, the large region of the MPS that corresponds to temporal modulations between 30 and 150 Hz (orange zones in Figure 1C) has, to date, not been associated with any ecological function – and is generally considered irrelevant for human communication [6]. This spectro-temporal region corresponds to a perceptual attribute called roughness [16, 17]. Sounds in this region correspond to amplitude modulations ranging from 30 to 150 Hz and typically induce unpleasant, rough auditory percepts.

To ensure communication efficacy, screams should be acoustically well segregated from other communication signals. Conventional features that can further modulate or accentuate speech, such as increased loudness or high pitch, contribute to potentiate fear responses [18-20] but are not sufficiently distinctive, as these attributes accompany a wide range of utterances. Therefore we conjectured that screams might occupy a dedicated part of the MPS, so that false alarms, i.e. confusions with non-alarm signals, are unlikely to occur. The roughness region (Figure 1C) is unexploited by speech, and therefore constitutes a plausible candidate space to encode alarm communication signals.

Screams selectively exploit the roughness acoustic regime that is unused by speech

To examine whether screams versus other communication sounds (speech) exploit distinct spectro-temporal features, we compared the MPS of screamed and spoken utterances with equivalent communicative content. We analyzed the MPS of 4 types of vocalizations, recorded from nineteen participants, according to 2 factors: scream and sentence (Figure 2A and B). A 2-way repeated-measures ANOVA was performed using the MPS of each vocalization. As hypothesized, screamed vocalizations contain stronger temporal modulations in the 30–150 Hz roughness window than non-screamed ones (Figure 2C, left; averaged clusters statistic: F = 64.8, P = 2.5 × 10⁻⁶, see also Figure S1). On the other hand, consistent with the literature [6, 14], linguistic information in sentences (including syllabic and prosodic cues) is encoded in slower temporal modulations (<20 Hz, Figure 2C, right; averaged clusters statistic: F(2,40) = 76.5, P = 0.001). This finding demonstrates that speech mainly uses slow temporal modulations (green region in Figure 1C) whereas screams occupy the unused spectro-temporal modulation space (orange rectangles in Figure 1C). Our observations further support the view that signals communicating distinct types of information (i.e. danger versus gender versus meaning) are segregated into distinct parts of the acoustic sensorium that match perceptual attributes, and that ‘rough’ temporal modulations between 30 and 150 Hz are used to communicate danger.

Figure 2.

Acoustic characterization of screamed vocalizations.

(A) Example spectrograms of the 4 utterance types, produced by one participant: screamed vocalizations, vowel [a] (top left); sentence (top right); neutral vocalizations, vowel [a] (bottom left); spoken sentence (bottom right).

(B) Average MPS across participants (n=19) for each type. For the factorial analysis, the sentence factor (vertical dashed line) determines whether the utterance contains sentential information or the vowel [a]; the scream factor (horizontal dashed line) determines whether the utterance was screamed or neutral.

(C) Main effect of scream.

(D) Main effect of sentence.

In B and C, contours delimit statistical thresholds of P < 0.001 (Bonferroni corrected).

Roughness is exploited in both natural and artificial alarm signals

We next tested the hypothesis that roughness in screams is selectively used to signal danger and should therefore not be exploited to the same degree in other kinds of communication signals. We performed a series of comparisons with other, vocal and non-vocal, stimuli. We first compared the average magnitude of temporal modulations in the roughness range (30–150 Hz) between sentential vocalizations (normal speaking), musical vocalizations (a cappella singing) and screaming (Figure 3A, left). The MPS values in the roughness range were significantly stronger in screams than in sung (unpaired t-test: P = 6 × 10⁻¹⁹) and spoken (unpaired t-test: P = 8 × 10⁻²⁷) vocalizations. In order to explore whether rough sound modulations might be used in other languages, we compared the roughness index between English, French and Chinese neutrally spoken sentences. We found that roughness indices did not differ across languages (F = 0.04, P = 0.957, Figure S2) and were consistently smaller than those of screamed sentences in English (F = 24.97, P = 9 × 10⁻¹⁴). Together, these results suggest that, regardless of communicative intention, only screamed vocalizations (whether sentential or not) maintain their invariant niche in the rough modulation regime.

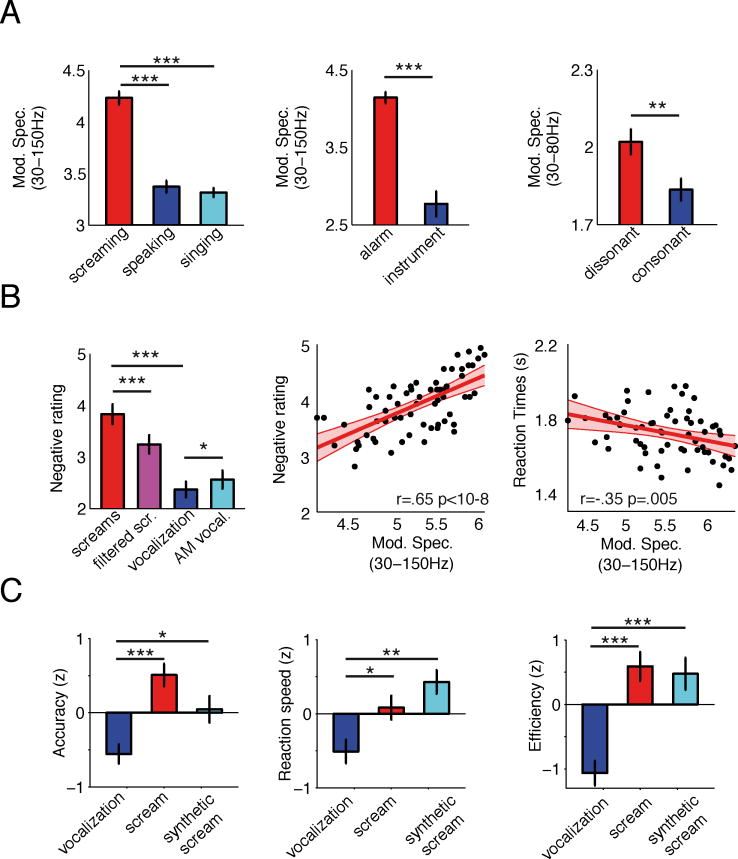

Figure 3.

Roughness modulations: natural and artificial sounds and behavior.

(A) MPS roughness across categories. Left: screams, neutral speech, and musical (a cappella) vocalizations. Center: artificial alarms versus musical instruments. Right: dissonant versus consonant sounds.

(B) Perceived fear induced by natural and acoustically altered vocalizations. Left: Averaged rating (on a 1–5 negative scale) across participants, as a function of vocalization type: scream, filtered scream, neutral vocalization [a] and amplitude modulated (AM) neutral vocalization. Middle: Negative subjective ratings increase with MPS values in roughness range (red shading: 95% regression confidence interval). Right: average reaction times decrease with increasing roughness.

(C) Spatial localization of screams, neutral vocalizations, and artificial screams. Left: localization accuracy. Center: speed. Right: efficiency.

*** P < 0.001; ** P < 0.01; * P < 0.05; Error bars indicate SEM.

If sound roughness is an effective feature for screams to constitute an alarm signal, it might also be exploited by man-made technological devices that generate non-biological acoustic signals to alert humans to danger. To address this, we compared the MPS values in the roughness range of artificial alarm signals (buzzers, horns, etc.; Table S1) to those of musical instruments (e.g., strings or keyboards), which also have spectro-temporally complex structure but are not a priori designed to trigger danger-related reactions. This comparison (Figure 3A, center) reveals that alarm but not musical sounds exploit scream-like rough modulations (unpaired t-test: P = 9 × 10⁻¹⁰). The fact that roughness appears to be used in the design of artificial alarm signals in human culture, perhaps unwittingly, underlines both the perceptual salience and ecological relevance of rough sounds. This discovery is intriguing, as roughness is barely ever mentioned as a relevant feature in the applied acoustics literature on alarm signals [21].

Dissonant intervals elicit temporal modulations in the (screams') rough regime

The observation that roughness induces an unpleasant percept is reminiscent of the foundational work of Hermann von Helmholtz on musical consonance [16]. The origin of consonance has been debated for centuries. Empirical studies generally point to roughness [22] and harmonicity [23] as factors underlying the perception of dissonance [24]. Current views suggest that roughness is unlikely to be the main or unique determinant of dissonance (harmonicity matters, as does experience and cultural exposure [25]). However, the fact that roughness is exploited to communicate danger via screams argues for its behavioral and neural relevance and points to a possible (if not unique) biological origin of dissonance. One possibility is that sound intervals that contain rough modulation frequencies elicit responses in those neural circuits that induce the unpleasant percept in response to roughness. By comparing the roughness values provided by the MPS analysis of a set of consonant and dissonant tone intervals (Figure 3A, right; see Table S2), we find that dissonant intervals generate stronger modulations in the lower half (30–80 Hz) of the roughness window (unpaired t-test: P = 0.006). This result reveals that dissonant sounds elicit temporal modulations in the spectro-temporal regime that is also exploited to communicate danger and hence dovetails nicely with von Helmholtz's intuition that roughness constitutes one possible biological origin of dissonance. Note that the aim here is merely to revisit Helmholtz's hypothesis in the light of the observation that there is a surprising convergence between roughness, screams, and dissonance.
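The link between narrow intervals and rough modulations can be made concrete with the classical beating argument: two pure tones of equal amplitude sum to a carrier whose envelope fluctuates at their frequency difference. The sketch below applies this to two equal-tempered intervals built on C5 (523.25 Hz). The base note, the choice of intervals and the function names are illustrative assumptions; the intervals actually analyzed are those listed in Table S2.

```python
ROUGHNESS_LOW_HZ, ROUGHNESS_HIGH_HZ = 30.0, 150.0  # window used in the text

def beat_rate(f_low_hz, semitones):
    """Envelope beat rate (Hz) of two pure tones `semitones` apart.

    Assumes equal temperament; sin(a) + sin(b) has an envelope that
    fluctuates at the difference frequency |f_high - f_low|.
    """
    f_high_hz = f_low_hz * 2 ** (semitones / 12)
    return f_high_hz - f_low_hz

def in_roughness_window(rate_hz):
    """True if a modulation rate falls in the 30-150 Hz roughness window."""
    return ROUGHNESS_LOW_HZ <= rate_hz <= ROUGHNESS_HIGH_HZ

base_hz = 523.25  # C5, chosen only for illustration
minor_second = beat_rate(base_hz, 1)   # dissonant interval
perfect_fifth = beat_rate(base_hz, 7)  # consonant interval

for name, rate in [("minor second", minor_second), ("perfect fifth", perfect_fifth)]:
    print(f"{name}: {rate:.1f} Hz, in roughness window: {in_roughness_window(rate)}")
```

In this example the minor second beats at roughly 31 Hz, inside the lower half of the roughness window, whereas the perfect fifth yields a difference frequency of roughly 261 Hz, well outside it.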

Scream roughness confers a behavioral advantage to react efficiently

We next addressed whether roughness is merely incidentally and epiphenomenally stronger in screams or whether this modulation window is universally exploited because of its causal relevance to behavior. We conjectured that if roughness informs conspecifics about danger, rough screams should induce more fearful subjective percepts than less rough vocalizations. To address this hypothesis, we asked twenty participants to rate the fear induced by screams and neutral vocalizations [a] on a subjective scale, ranging from neutral (1) to fearful (5). To assess the effect of rough modulations on perceived fear, we tested two additional conditions in which (i) we low-pass filtered screams' temporal modulations (<20 Hz) to remove those in the roughness range and (ii) we added rough temporal modulations to neutral vocalizations (see Supplemental Experimental Procedures). As expected, the data show (Figure 3B, left) that screams are perceived as more fearful than neutral vocalizations (paired t-test: P = 4 × 10⁻⁹). Furthermore, screams were perceived as more fearful than filtered screams (paired t-test: P = 4 × 10⁻⁴); in complementary fashion, modulating neutral vocalizations in the roughness range increased perceived fear (paired t-test: P = 0.045).

To test whether this effect generalizes to artificial alarm signals, we performed a similar experiment using the same acoustic alteration procedures on the set of artificial sounds. Thirteen participants rated the perceived ‘alarmness’ on a subjective scale, ranging from neutral (1) to alarming (5). As found for human vocalizations, the data show (Figure S3) that alarm sounds are perceived as more alarming than instrument sounds (paired t-test: P = 8 × 10⁻⁹). Also, alarm sounds were perceived as more alarming than filtered alarm sounds (paired t-test: P = 0.035), whereas musical instrument sounds modulated in the roughness range yielded increased perceived alarmness ratings (paired t-test: P = 5 × 10⁻⁵). Taken together, these results are consistent with the hypothesis that roughness contributes to induce an aversive percept, regardless of the nature (vocal or artificial) of the sound. We further tested whether screams' roughness scaled with subjective ratings, querying eleven participants who rated the perceived fear induced by scream recordings (Table S3). The data reveal (Figure 3B, middle) that the rougher the screams, the more fearful the induced emotional reaction (Pearson's r = 0.65, P = 10⁻⁸). Interestingly, the speed of behavioral responses (Figure 3B, right) also scaled with scream roughness (Pearson's r = -0.35, P = 0.005). Roughness hence not only increases the perceived fear valence of screams but also enables a faster appraisal of danger.
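The scaling analyses above rest on Pearson's correlation between per-sound roughness and the behavioral measures. The sketch below shows the computation on a handful of made-up values that only mimic the direction of the reported effects; the numbers and variable names are hypothetical and are not data from this study.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical per-scream values, for illustration only.
roughness = [0.10, 0.20, 0.30, 0.40, 0.50]   # MPS power in the 30-150 Hz window
fear_rating = [1.5, 2.0, 2.2, 3.1, 3.4]      # mean rating on the 1-5 scale
reaction_time_s = [0.95, 0.90, 0.92, 0.80, 0.78]

r_fear = pearson_r(roughness, fear_rating)
r_rt = pearson_r(roughness, reaction_time_s)
print(f"roughness vs fear rating: r = {r_fear:.2f}")
print(f"roughness vs reaction time: r = {r_rt:.2f}")
```

A positive coefficient for ratings and a negative one for reaction times correspond to the pattern shown in Figure 3B (middle and right panels).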

Rapid, accurate evaluation of danger (as indexed by the valence of screams) is presumably crucial for adaptive behavior. In that context, the precise location of the scream source in the environment is of critical relevance. To assess whether roughness improves the ability to localize vocalizations, we implemented a spatial localization behavioral experiment. We measured in twenty-one participants the speed and accuracy to detect whether normal vocalizations and screams were presented on their left or right sides using inter-aural time difference cues (ITD). In addition to natural vocalizations, we also tested a control set of ‘synthetic screams’, constructed by modulating neutral vocalizations in the roughness range (Figure S4). As anticipated, accuracy and speed varied as a function of vocalization type (Figure 3C, left and center panels; repeated-measures ANOVA: accuracy: F(2,40) = 7.01, P = 0.004, reaction speed: F(2,40) = 5.8, P = 0.006). Participants were both more accurate and faster at localizing natural (paired t-test, for accuracy: P = 3 × 10⁻⁶; reaction speed: P = 0.013) and synthetic screams (t-test, for accuracy: P = 0.03; reaction speed: P = 0.003) than normal vocalizations. To control for a potential speed-accuracy trade-off, we tested the combined effects of speed and accuracy using a composite measure, efficiency. This analysis reveals a robust effect of vocalization type on localization efficiency (Figure 3C, right; repeated-measures ANOVA: F(2,40) = 11.63, P = 2 × 10⁻⁴) and establishes that spatial localization performance is better for both natural screams (t-test, P = 1.5 × 10⁻⁶) and synthetic screams (t-test, P = 6 × 10⁻⁴) than for regular vocalizations. Interestingly, natural and synthetic screams are equally efficient (t-test, P = 0.789). The fact that ‘adding’ roughness to normal vocalizations considerably improves localization efficiency underscores the causal importance of this acoustic feature.
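The exact definition of the efficiency measure is not given in this section. One common way to combine speed and accuracy, assumed here purely for illustration, is to divide the proportion of correct responses by the mean reaction time, so that a condition scores higher only if accuracy gains are not bought with slower responses. The function name and the trial counts below are hypothetical.

```python
def localization_efficiency(n_correct, n_trials, mean_rt_s):
    """Composite score: proportion correct per second of reaction time.

    This definition is an assumption for the example, not necessarily the
    measure used in the study.
    """
    accuracy = n_correct / n_trials
    return accuracy / mean_rt_s

# Hypothetical single-participant summaries for two conditions.
neutral = localization_efficiency(n_correct=80, n_trials=100, mean_rt_s=0.60)
scream = localization_efficiency(n_correct=90, n_trials=100, mean_rt_s=0.50)
print(f"neutral: {neutral:.2f} correct/s, scream: {scream:.2f} correct/s")
```

Under this definition a participant who is both faster and more accurate in one condition necessarily has a higher efficiency in that condition, while a participant who is faster but less accurate may not.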

The current findings show that rough temporal modulations (i) are characteristic of screams, (ii) are selectively exploited to communicate danger across signal types, (iii) are perceived as more fear-inducing, and (iv) confer a behavioral advantage by increasing the speed and accuracy of spatially localizing screamed vocalizations. These findings suggest that rough vocalizations recruit dedicated neural processes that prioritize fast reaction to danger over detailed contextual evaluation.

Rough temporal modulations induce selective responses in the amygdala

Since the current work is the first, to our knowledge, to identify the relevance of roughness for auditory processing of danger, we assessed the neural responses to rough temporal modulations. We performed an fMRI experiment in which sixteen participants listened to sounds selected for diversity of acoustic content and levels of roughness. As above, we used three different categories of sounds in a neutral and unpleasant version, respectively: human vocalizations (normal voices, screams), artificial sounds (instruments, alarms), and musical intervals (consonant, dissonant; Tables S2–S4). We identified regions involved in processing unpleasantness by contrasting responses to unpleasant versus neutral sounds (regardless of sound category). This analysis revealed that unpleasant sounds induce larger hemodynamic responses in the bilateral anterior amygdala and primary auditory cortices (Figure 4A and Table S4). To determine whether these regions encode specific subparts of the MPS, we implemented a reverse-correlation approach and related single-trial BOLD response estimates with the MPS of the corresponding sound (after removal of the variance explained by the valence of the stimuli, as indexed by individual participant ratings, see [26]). We found that the amygdala – but not auditory cortex – is specifically sensitive to temporal modulations in the roughness range (Figure 4B). These results demonstrate that rough sounds specifically target neural circuits involved in fear/danger processing [27, 28] and hence provide evidence that roughness constitutes an efficient acoustic attribute to trigger adapted reactions to danger.
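The logic of the reverse-correlation step can be sketched as follows: single-trial response estimates are first residualized with respect to the valence ratings, and the residuals are then correlated with the MPS power of the corresponding sounds, bin by bin. The code below is a generic illustration on simulated data, not the pipeline used here (see the Supplemental Experimental Procedures and [26]). The use of a per-bin Pearson correlation, the array shapes and all names are assumptions.

```python
import numpy as np

def residualize(y, covariate):
    """Remove the part of y linearly explained by the covariate (plus intercept)."""
    design = np.column_stack([np.ones(len(covariate)), covariate])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef

def reverse_correlation_map(betas, mps_stack, ratings):
    """Correlate valence-residualized betas with every MPS bin.

    betas: (n_trials,), mps_stack: (n_trials, n_wf, n_wt), ratings: (n_trials,)
    Returns a (n_wf, n_wt) map of Pearson correlation coefficients.
    """
    resid = residualize(betas, ratings)
    resid = (resid - resid.mean()) / resid.std()
    flat = mps_stack.reshape(len(betas), -1)
    flat = (flat - flat.mean(axis=0)) / flat.std(axis=0)
    r = (flat * resid[:, None]).mean(axis=0)
    return r.reshape(mps_stack.shape[1:])

# Simulated example: responses driven by one MPS bin plus a valence effect.
rng = np.random.default_rng(0)
n_trials = 200
mps_stack = rng.random((n_trials, 4, 6))
ratings = rng.integers(1, 6, size=n_trials).astype(float)
betas = 2.0 * mps_stack[:, 1, 4] + 0.5 * ratings + 0.1 * rng.standard_normal(n_trials)

r_map = reverse_correlation_map(betas, mps_stack, ratings)
peak_bin = tuple(int(i) for i in np.unravel_index(np.argmax(r_map), r_map.shape))
print("most strongly correlated MPS bin:", peak_bin)
```

In the simulation the map peaks at the bin that was used to generate the responses, even though the valence ratings also contribute to the raw response estimates.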

Figure 4.

fMRI measurement of roughness and screams.

(A) Main effect of unpleasantness across all sound categories. Unpleasant (rough) sounds induce larger responses bilaterally in the amygdala (left) and the primary auditory cortex (right). Contrasts are rendered at P < 0.005 threshold for display; see Table S4 for a summary of activations and associated anatomical coordinates.

(B) Reverse-correlation analysis between single-trial beta values and MPS profiles of the corresponding sounds. The amygdala – but not primary auditory cortex – is maximally sensitive to the restricted spectro-temporal window corresponding to roughness. Contours delimit statistical thresholds of P < 0.05, cluster-corrected for multiple comparisons.

In this series of acoustic, behavioral and neuroimaging experiments we characterized the spectral modulation of various natural and artificial sounds and demonstrated the ecological, behavioral, and neural relevance of roughness, a well-known perceptual attribute hitherto unrelated to any specific communicative function. The findings support the view that roughness, as featured in screams, improves the efficiency of warning signals, possibly by targeting sub-cortical neural circuits that promote the survival of the individual and speed up reaction to danger.

Experimental Procedures

A bank of sounds containing several types of human vocalizations (screams and sentences), artificial sounds (alarm and instrument sounds) and sound intervals (pure tone intervals) was constructed for subsequent acoustic characterization. Sounds were edited to last 1000 ms and were root mean square (RMS) normalized. In order to quantify the power in temporal and spectral modulations, the two-dimensional Fourier transform of the spectrogram was calculated to obtain the Modulation Power Spectrum (MPS) of each sound [6]. A repeated-measures ANOVA (n=19 speakers) was performed on the vocalizations' MPS to test for specific scream and sentence effects. After identifying a restricted window in the roughness domain (30–150 Hz) for screamed vocalizations, we compared the averaged MPS values in this window between the different categories of the sound bank using ANOVAs and unpaired t-tests.
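Two steps of this procedure, RMS normalization and averaging of MPS values within the roughness window, can be sketched as below. The sketch operates on an MPS array and its temporal-modulation axis, however they were obtained. The target RMS level, the toy MPS arrays and the function names are assumptions for the example rather than details of the actual analysis.

```python
import numpy as np

def rms_normalize(x, target_rms=0.1):
    """Scale a waveform so that its root mean square equals target_rms."""
    return x * (target_rms / np.sqrt(np.mean(x ** 2)))

def roughness_index(mps, wt, lo_hz=30.0, hi_hz=150.0):
    """Mean MPS power for temporal modulation rates within [lo_hz, hi_hz].

    mps: (n_spectral_mod, n_temporal_mod) array; wt: temporal modulation
    axis in Hz. Positive and negative rates are both included.
    """
    in_window = (np.abs(wt) >= lo_hz) & (np.abs(wt) <= hi_hz)
    return mps[:, in_window].mean()

# RMS normalization of a 1000 ms test tone (16 kHz sampling rate assumed).
fs = 16000
t = np.arange(fs) / fs
sound = rms_normalize(3.0 * np.sin(2 * np.pi * 440 * t))

# Toy MPS arrays: power concentrated at 70 Hz ("rough") or 5 Hz ("smooth").
wt = np.linspace(-250, 250, 501)
rough_mps = np.zeros((8, wt.size))
rough_mps[:, np.isclose(np.abs(wt), 70.0)] = 1.0
smooth_mps = np.zeros((8, wt.size))
smooth_mps[:, np.isclose(np.abs(wt), 5.0)] = 1.0

rough_value = roughness_index(rough_mps, wt)
smooth_value = roughness_index(smooth_mps, wt)
print(f"rough: {rough_value:.4f}, smooth: {smooth_value:.4f}")
```

Only the toy MPS with power at 70 Hz yields a non-zero index, since 5 Hz lies in the slow modulation range associated with speech rather than in the roughness window.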

The influence of MPS values in the roughness range was assessed in four behavioral experiments. The first three experiments tested the relationship between roughness and behavioral ratings in both natural and artificial sounds. The fourth experiment tested the influence of roughness on the spatial localization of vocalizations. We measured the localization performance, reaction times and efficiency during the perception of lateralized vocalizations [a], screams and synthetic screams (100 Hz amplitude modulated vocalizations [a]).
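A synthetic scream of the kind described above can be approximated by multiplying a waveform with a 100 Hz sinusoidal modulator. In the minimal sketch below a 1000 Hz pure tone stands in for the recorded vocalization [a]; the full modulation depth, the rescaling to the original RMS level, the sampling rate and the function name are assumptions, not parameters taken from the study.

```python
import numpy as np

def add_roughness(x, fs, rate_hz=100.0, depth=1.0):
    """Amplitude-modulate x at rate_hz and restore the original RMS level."""
    t = np.arange(len(x)) / fs
    modulator = 1.0 + depth * np.sin(2 * np.pi * rate_hz * t)
    y = x * modulator
    return y * np.sqrt(np.mean(x ** 2) / np.mean(y ** 2))

fs = 16000
t = np.arange(fs) / fs                    # 1000 ms
neutral = np.sin(2 * np.pi * 1000 * t)    # stand-in for a neutral vocalization
synthetic_scream = add_roughness(neutral, fs)

# With a 1 s signal, rfft bin k corresponds to k Hz.
spectrum = np.abs(np.fft.rfft(synthetic_scream))
sideband_ratio = spectrum[900] / spectrum[1000]
print(f"sideband-to-carrier amplitude ratio: {sideband_ratio:.2f}")
```

Amplitude modulation at 100 Hz adds spectral sidebands 100 Hz below and above each component of the original sound, which is what places energy in the roughness range of the temporal modulation axis.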

Finally, we used fMRI to explore the neural structures implicated in the processing of such sounds. We executed a sparse-sampling experiment in which participants rated the unpleasantness (on a 1–5 scale) of three types of sounds (human vocalizations, artificial sounds and tone intervals). After identifying the brain regions that responded to the unpleasantness of these sounds, a reverse-correlation approach was used to investigate the relative hemodynamic sensitivity of these regions to sub-regions of the MPS.

Supplementary Material

Highlights.

We provide the first evidence of a special acoustic regime (roughness) for screams.

Roughness is used in both natural and artificial alarm signals.

Roughness confers a behavioral advantage to react rapidly and efficiently.

Acoustic roughness selectively activates amygdala, involved in danger processing.

Acknowledgments

We thank Jan Manent for useful discussions; Jess Rowland, Tobias Overath, Jean M. Zarate, Mariane Haddad and Josh Barocas for technical assistance; and Gregory Hickok, Shihab A. Shamma, Gregory B. Cogan, Nai Ding and Keith B. Doelling for comments on the manuscript. This work was supported, in part, by the Fondation Fyssen and the Philippe Foundation (LHA), the Fondation Louis Jeantet (LHA, AK) and 2R01DC05660 (DP).

Footnotes

Supplemental Information: Supplemental Information includes four figures, four tables, Supplemental Discussion and Supplemental Experimental Procedures and can be found with this article online.

Author Contributions: LHA designed the experiments, performed the research, analyzed the data and wrote the manuscript; AF contributed to analysis tools; AK, ALG and DP wrote the manuscript.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Lieberman P. The Physiology of Cry and Speech in Relation to Linguistic Behavior. In: Lester B, Zachariah Boukydis CF, editors. Infant Crying. Springer; US: 1985. pp. 29–57.

- 2. Fitch WT, Neubauer J, Herzel H. Calls out of chaos: the adaptive significance of nonlinear phenomena in mammalian vocal production. Animal Behaviour. 2002;63:407–418.

- 3. Lingle S, Wyman MT, Kotrba R, Teichroeb LJ, Romanow CA. What makes a cry a cry? A review of infant distress vocalizations. Current Zoology. 2012;58

- 4. Chi T, Gao Y, Guyton MC, Ru P, Shamma S. Spectro-temporal modulation transfer functions and speech intelligibility. J Acoust Soc Am. 1999;106:2719–2732. doi: 10.1121/1.428100.

- 5. Theunissen FE, Elie JE. Neural processing of natural sounds. Nat Rev Neurosci. 2014;15:355–366. doi: 10.1038/nrn3731.

- 6. Elliott TM, Theunissen FE. The modulation transfer function for speech intelligibility. PLoS Comput Biol. 2009;5:e1000302. doi: 10.1371/journal.pcbi.1000302.

- 7. Kato K, Ito A. Intelligent Information Hiding and Multimedia Signal Processing, 2013 Ninth International Conference on. IEEE; 2013. Acoustic Features and Auditory Impressions of Death Growl and Screaming Voice; pp. 460–463.

- 8. Scherer KR. Vocal affect expression: a review and a model for future research. Psychological Bulletin. 1986;99:143.

- 9. Rothgänger H, Lüdge W, Grauel EL. Jitter-index of the fundamental frequency of infant cry as a possible diagnostic tool to predict future developmental problems. Early Child Development and Care. 1990;65:145–152.

- 10. Chi T, Ru P, Shamma SA. Multiresolution spectrotemporal analysis of complex sounds. J Acoust Soc Am. 2005;118:887–906. doi: 10.1121/1.1945807.

- 11. Fant G. Acoustic theory of speech production: with calculations based on X-ray studies of Russian articulations. Vol. 2. Walter de Gruyter; 1971.

- 12. Pisanski K, Rendall D. The prioritization of voice fundamental frequency or formants in listeners' assessments of speaker size, masculinity, and attractiveness. The Journal of the Acoustical Society of America. 2011;129:2201–2212. doi: 10.1121/1.3552866.

- 13. Rosen S. Temporal information in speech: acoustic, auditory and linguistic aspects. Philos Trans R Soc Lond B Biol Sci. 1992;336:367–373. doi: 10.1098/rstb.1992.0070.

- 14. Drullman R, Festen JM, Plomp R. Effect of reducing slow temporal modulations on speech reception. J Acoust Soc Am. 1994;95:2670–2680. doi: 10.1121/1.409836.

- 15. Giraud AL, Poeppel D. Cortical oscillations and speech processing: emerging computational principles and operations. Nat Neurosci. 2012;15:511–517. doi: 10.1038/nn.3063.

- 16. v Helmholtz H. Die Lehre von den Tonempfindungen als Physiologische Grundlage für die Theorie der Musik. Braunschweig: F. Vieweg und Sohn; 1863.

- 17. Fastl H, Zwicker E. Psychoacoustics: facts and models. 2001.

- 18. Bach DR, Schächinger H, Neuhoff JG, Esposito F, Di Salle F, Lehmann C, Herdener M, Scheffler K, Seifritz E. Rising sound intensity: an intrinsic warning cue activating the amygdala. Cerebral Cortex. 2008;18:145–150. doi: 10.1093/cercor/bhm040.

- 19. Maier JX, Ghazanfar AA. Looming biases in monkey auditory cortex. The Journal of Neuroscience. 2007;27:4093–4100. doi: 10.1523/JNEUROSCI.0330-07.2007.

- 20. Zeskind PS, Collins V. Pitch of infant crying and caregiver responses in a natural setting. Infant Behavior and Development. 1987;10:501–504.

- 21. Lemaitre G, Susini P, Winsberg S, McAdams S, Letinturier B. The sound quality of car horns: Designing new representative sounds. Acta Acustica united with Acustica. 2009;95:356–372.

- 22. Terhardt E. Pitch, consonance, and harmony. J Acoust Soc Am. 1974;55:1061–1069. doi: 10.1121/1.1914648.

- 23. McDermott JH, Lehr AJ, Oxenham AJ. Individual differences reveal the basis of consonance. Curr Biol. 2010;20:1035–1041. doi: 10.1016/j.cub.2010.04.019.

- 24. McDermott JH, Oxenham AJ. Music perception, pitch, and the auditory system. Curr Opin Neurobiol. 2008;18:452–463. doi: 10.1016/j.conb.2008.09.005.

- 25. Lundin RW. Toward a cultural theory of consonance. The Journal of Psychology. 1947;23:45–49.

- 26. Kumar S, von Kriegstein K, Friston K, Griffiths TD. Features versus feelings: dissociable representations of the acoustic features and valence of aversive sounds. J Neurosci. 2012;32:14184–14192. doi: 10.1523/JNEUROSCI.1759-12.2012.

- 27. Scott SK, Young AW, Calder AJ, Hellawell DJ, Aggleton JP, Johnson M. Impaired auditory recognition of fear and anger following bilateral amygdala lesions. Nature. 1997;385:254–257. doi: 10.1038/385254a0.

- 28. Phelps EA, LeDoux JE. Contributions of the Amygdala to Emotion Processing: From Animal Models to Human Behavior. Neuron. 2005;48:175–187. doi: 10.1016/j.neuron.2005.09.025.
