Abstract

Speakers around the globe gesture when they talk, and young children are no exception. In fact, children's first foray into communication tends to be through their hands rather than their mouths. There is now good evidence that children typically express ideas in gesture before they express the same ideas in speech. Moreover, the age at which these ideas are expressed in gesture predicts the age at which the same ideas are first expressed in speech. Gesture thus not only precedes, but also predicts, the onset of linguistic milestones. These facts set the stage for using gesture in two ways in children who are at risk for language delay. First, gesture can be used to identify individuals who are not producing gesture in a timely fashion, and can thus serve as a diagnostic tool for pinpointing subsequent difficulties with spoken language. Second, gesture can facilitate learning, including word learning, and can thus serve as a tool for intervention, one that can be implemented even before a delay in spoken language is detected.

Even before young children begin to use words, they gesture. Moreover, gesture does not disappear from a young child's communicative repertoire after the onset of speech. Rather, it becomes integrated with speech, often serving a communicative function in its own right. For example, a child says, “open,” while pointing at a box; the gesture makes it clear which object the child wants opened. Thus, at certain times in development, gesture can extend a child's range of communicative devices. Importantly, there is variability across individual children in the way they use gesture, and this variability can be used to predict differences in the children's onset of linguistic milestones (Goldin-Meadow, 2003). My first goal in this paper is to review the evidence that gesture not only precedes the onset of linguistic constructions in speech, but also predicts them.

I then consider the implications of these findings for children at risk for language delay. Because early gesture indexes when a child is likely to acquire a particular linguistic construction in speech, it has the potential to serve as a diagnostic tool, one that can be used to identify children who are likely to miss the milestone before the delay is detectable in speech. My second goal is to provide evidence that gesture can serve this diagnostic function.

My third goal is to suggest that gesture not only can index a child's potential for linguistic growth, but can also play a role in bringing that growth about. Encouraging children to gesture on a task has been found to facilitate their acquisition of that task (e.g., Goldin-Meadow, Cook & Mitchell, 2009). I provide evidence that telling children to gesture at very early ages can increase the size of their spoken vocabularies. Gesture thus has the potential to be used as a tool for intervention.

Gesture not only precedes but also predicts the onset of linguistic milestones

At a time in development when children are limited in the words they know and use, gesture offers a way to extend their communicative range. Children typically begin to gesture between 8 and 12 months (Bates, 1976; Bates, Benigni, Bretherton, Camaioni, & Volterra, 1979), first producing deictic gestures (pointing at objects, people, and places in the immediate environment, or holding up objects to draw attention to them), and later producing iconic gestures that capture aspects of the objects, actions, or attributes they represent (e.g., flapping arms to refer to a bird or to flying; Iverson, Capirci, & Caselli, 1994). The fact that gesture allows children to communicate meanings that they do not yet express in speech opens up the possibility that gesturing itself facilitates language learning. If so, changes in gesture should not only predate, but also predict, changes in language. And they do, for a variety of linguistic constructions.

Vocabulary

The more children gesture early on, the more words they are likely to have in their spoken vocabularies later in development (Acredolo & Goodwyn, 1988; Rowe, Özçalışkan, & Goldin-Meadow, 2008; Colonnesi, Stams, Koster, & Noom, 2010). In fact, the well-described disparity in vocabulary size between children from low vs. high socioeconomic status (SES) homes when they first enter school (Hart & Risley, 1995) can be traced, in part, to the number of different gesture types they produced at 14 months (Rowe & Goldin-Meadow, 2009a).

Although child gesture and SES are correlated, they are not perfectly aligned, allowing us to explore the impact of both variables on vocabulary growth. Rowe, Raudenbush and Goldin-Meadow (2012) modeled cumulative vocabulary growth (using the number of different words children produced at 4-month intervals between the ages of 14 and 46 months) and explored the impact of child gesture and SES on this growth. To see the joint role of child gesture and family SES, they plotted four hypothetical growth trajectories based on high vs. low (75th vs. 25th percentile) SES levels and high vs. low (75th vs. 25th percentile) gesture levels (holding parent input constant at its mean). At the earliest ages, the growth trajectories separate into two lines based only on child gesture: vocabulary growth is lower for low-gesturers than for high-gesturers, regardless of SES. However, around 2 years of age, the trajectories within each gesture group begin to separate and the effect of SES becomes more apparent. From this point on, estimated vocabulary growth is highest for high-gesturers in high-SES families, and lowest for low-gesturers in low-SES families. Around 3 years of age, the two middle trajectories (high-gesturers in low-SES families and low-gesturers in high-SES families) become virtually indistinguishable, indicating that (controlling for input) a high-gesturer in a low-SES family typically has a vocabulary by age 3 that has caught up to the vocabulary of a low-gesturer in a high-SES family.

Not only can we predict the size of children's spoken vocabulary from looking at the size of their early gesture vocabulary, but we can also predict which particular words will enter a child's spoken vocabulary by looking at the objects that child indicated using deictic gestures several months earlier (Iverson & Goldin-Meadow, 2005). For example, a child who does not know the word “dog,” but communicates about dogs by pointing at them is likely to learn the word “dog” within three months (Iverson & Goldin-Meadow, 2005). Gesture paves the way for children's early words.

Sentences

Even though they treat gestures like words in some respects, children very rarely combine their gestures with other gestures, and if they do, the phase tends to be short-lived (Goldin-Meadow & Morford, 1985). But children often combine gestures with words, and they produce these gesture+speech combinations well before they produce word+word combinations. Children's earliest gesture+speech combinations contain gestures that convey information that complements the information conveyed in speech; for example, pointing at a bottle while saying “bottle” (Capirci, Iverson, Pizzuto, & Volterra, 1996; de Laguna, 1927; Greenfield & Smith, 1976; Guillaume, 1927; Leopold, 1939–49). Soon after, children begin to produce combinations in which gesture conveys information that is different from and supplements the information conveyed in the accompanying speech; for example, pointing at a bottle while saying “here” to request that the bottle be moved to a particular spot (Goldin-Meadow & Morford, 1985; Greenfield & Smith, 1976; Masur, 1982, 1983; Morford & Goldin-Meadow, 1992; Zinober & Martlew, 1985).

As in the acquisition of words, we find that changes in gesture (in this case, changes in the relationship gesture holds to the speech it accompanies) predict changes in language (the onset of sentences). The age at which children first produce supplementary gesture+speech combinations (e.g., point at bird+“nap”) reliably predicts the age at which they first produce two-word utterances (e.g., “bird nap”) (Goldin-Meadow & Butcher, 2003; Iverson, Capirci, Volterra, & Goldin-Meadow, 2008; Iverson & Goldin-Meadow, 2005). The age at which children first produce complementary gesture+speech combinations (e.g., point at bird+“bird”) does not.

Moreover, supplementary combinations selectively relate to the syntactic complexity of children's later sentences. Rowe and Goldin-Meadow (2009b) found that the number of supplementary gesture+speech combinations children produce at 18 months reliably predicts the complexity of their sentences (as measured by the IPSyn, Scarborough, 1990) at 42 months, but the number of different meanings they convey in gesture at 18 months does not. Conversely, the number of different meanings children convey in gesture at 18 months reliably predicts their spoken vocabulary (as measured by the Peabody Picture Vocabulary Test, PPVT, Dunn & Dunn, 1997) at 42 months, but the number of supplementary gesture+speech combinations they produce at 18 months does not. Gesture is thus not merely an early index of global communicative skill, but is a harbinger of specific linguistic steps children will soon take—early gesture words predict later spoken vocabulary, and early gesture sentences predict later spoken syntax.

Gesture does more than open the door to sentence construction: the particular gesture+speech combinations children produce predict the onset of corresponding linguistic milestones. Özçalışkan and Goldin-Meadow (2005) observed children at 14, 18, and 22 months, and found that the types of supplementary combinations the children produced changed over time and, critically, presaged changes in their speech. For example, the children began producing “two-verb” complex sentences in gesture+speech combinations (“I like it” + EAT gesture) several months before they produced complex sentences entirely in speech (“help me find it”). Supplementary gesture+speech combinations thus continue to provide stepping-stones to increasingly complex linguistic constructions.

Nominal Constituents

As mentioned earlier, the age at which children first produce complementary gesture+speech combinations in which gesture indicates the object labeled in speech (e.g., point at bird+“bird”) does not reliably predict the onset of two-word utterances (Iverson & Goldin-Meadow, 2005), reinforcing the point that it is the specific way in which gesture is combined with speech, rather than the ability to combine gesture with speech per se, that signals the onset of future linguistic achievements. The gesture in a complementary gesture+speech combination has traditionally been considered redundant with the speech it accompanies but, as Clark and Estigarribia (2011) point out, gesture typically locates the object being labeled and, in this sense, has a different function from speech. Complementary gesture+speech combinations have, in fact, recently been found to point forward, but to the onset of nominal constituents rather than to the onset of sentential constructions.

If children are using nouns to classify the objects they label (as recent evidence suggests infants do when hearing spoken nouns; Parise & Csibra, 2012), then producing a complementary point with a noun could serve to specify an instance of that category. In this sense, a pointing gesture could be functioning like a determiner. Cartmill, Hunsicker and Goldin-Meadow (2014) analyzed all of the utterances containing nouns that a sample of children produced between 14 and 30 months, and focused on (a) utterances containing an unmodified noun combined with a complementary pointing gesture (e.g., point at ball+“ball”), and (b) utterances containing a noun modified by a determiner (e.g., “the/a/that ball”).

Cartmill and colleagues (2014) found that the age at which children first produced complementary point+noun combinations (point at ball+“ball”) selectively predicted the age at which the children first produced determiner+noun combinations (“the ball”). Not only did complementary point+noun combinations precede and predict the onset of determiner+noun combinations in speech, but these point+noun combinations also decreased in number once children gained productive control over determiner+noun combinations, suggesting a tight relation between the two types of constructions. When children point to and label an object simultaneously, they appear to be on the cusp of developing an understanding of nouns as a modifiable unit of speech.

Perspective-taking in Narratives

Gesture has also been found to predict changes in narrative structure later in development. Demir, Levine and Goldin-Meadow (2014) asked children to retell a cartoon at age 5 and then again at ages 6, 7, and 8. Although their narrative structure continued to improve from age 5 to age 8, the children showed no evidence of framing their narratives from a character's perspective in speech even at age 8. However, at age 5, many of the children were able to take a character's viewpoint into account in gesture. For example, to describe a woodpecker's actions, a child moved her upper body and head back and forth, thus assuming the perspective of the bird (as opposed to moving a beak-shaped hand back and forth and thus taking the perspective of someone looking at the bird; cf. McNeill, 1992). Moreover, the children who produced character-viewpoint gestures at age 5 were more likely than children who did not produce character-viewpoint gestures to produce well-structured stories in the later years. Gesture thus continues to act as a harbinger of change as it assumes new roles in relation to discourse and narrative structure.

Why does early gesture forecast later linguistic skills? Early gesture might be an early index of global communicative skill. Children who convey a large number of different meanings in their early gestures might be generally verbally facile. If so, not only should these children have large vocabularies later in development, but their sentences ought to be relatively complex as well. However, we have seen that particular types of early gesture use are specifically related to particular aspects of later spoken language use (Rowe & Goldin-Meadow, 2009b). A child who conveys a large number of different meanings via gesture early in development is likely to have a relatively large vocabulary several years later, but not necessarily to produce complex sentences. Conversely, a child who frequently combines gesture and speech to create sentence-like meanings (e.g., point at location where ball belongs+“ball” = “ball [goes] there”) early in development is likely to produce relatively complex spoken sentences several years later, but not necessarily to have a large vocabulary. Thus, the specific skills displayed in gesture selectively predict linguistic milestones, paving the way for gesture to be used as a diagnostic tool to identify children at risk for language delay.

Gesture as a possible tool for diagnosis

We have seen that individual differences in early gesture can predict later differences in speech in children who are developing language at a typical pace. What would happen if we were to extend the range and examine children with brain injury who are at risk for language delay? Although many studies have explored the relation between biological characteristics (lesion laterality, location, and size; seizure history) and differences in language skill in children with brain injury (e.g., Feldman, 2005), few studies have examined the gestures that children with unilateral brain injury produce, particularly in relation to their subsequent linguistic development. Can we identify children who are at risk for language delay by examining their early gestures?

There is, in fact, evidence that early gesture can predict later productive and receptive vocabulary in children with brain injury. Sauer, Levine and Goldin-Meadow (2010) categorized 11 children with pre- or perinatal unilateral brain injury (BI) into two groups based on whether their gesture use at 18 months was within or below the range for a sample of 53 typically developing (TD) children: (1) Children in the LOW BI group (n=5) fell below the 25th percentile for gesture production at 18 months in the TD group. (2) Children in the HIGH BI group (n=6) fell above the 25th percentile.
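The grouping rule can be made concrete with a short sketch. It assumes a linearly interpolated percentile (the source does not state which percentile definition Sauer et al. used, nor how a score falling exactly on the cutoff was classified); the function names and the example scores are hypothetical and are not the study's data.

```python
from statistics import quantiles


def percentile_cutoff(reference_scores, pct=25):
    """Return the pct-th percentile of a reference (e.g., TD) distribution.

    quantiles(..., n=100) returns the 99 cut points between percentiles;
    method="inclusive" interpolates linearly between observed scores.
    (Assumption: the original study's percentile method is not stated.)
    """
    cut_points = quantiles(reference_scores, n=100, method="inclusive")
    return cut_points[pct - 1]


def assign_gesture_group(child_score, cutoff):
    """Label a child LOW if below the cutoff, otherwise HIGH.

    Assumption: a score exactly at the cutoff counts as HIGH.
    """
    return "LOW" if child_score < cutoff else "HIGH"


# Hypothetical example values, not data from Sauer et al. (2010).
td_gesture_types = [0, 10, 20, 30, 40]
cutoff = percentile_cutoff(td_gesture_types)
bi_gesture_types = {"child_a": 5, "child_b": 12}
groups = {child: assign_gesture_group(score, cutoff)
          for child, score in bi_gesture_types.items()}
```

With this toy reference sample the 25th-percentile cutoff is 10.0 gesture types, so the hypothetical child with 5 types is assigned to the LOW group and the child with 12 types to the HIGH group.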

Importantly, there was stability in the children's gesture use between 18 and 26 months. All 5 of the children assigned to the LOW gesture group on the basis of their 18-month performance fell below the 25th percentile cut-off on at least one of the other two sessions (22 and 26 months); 2 fell below on both sessions. Conversely, all 6 of the children assigned to the HIGH gesture group on the basis of their 18-month performance fell above the cut-off on both of the other two sessions. Thus, early differences in gesture use in the children with BI were stable over time.

But what about speech? On average, the children with BI produced 15.36 (SD = 14.64) speech types at 18 months, 56.91 (SD = 36.44) at 22 months, and 123.36 (SD = 77.23) at 26 months. Thus, as a group, the children with BI increased the number of different words they produced over time. However, there was a great deal of variability within the group. Can this variability be related to gesture use at 18 months?

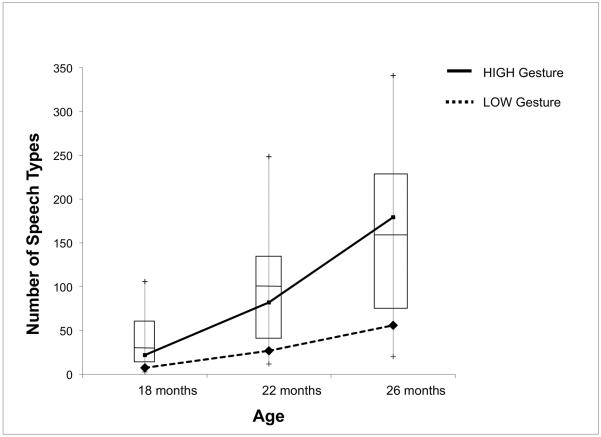

Figure 1 presents the mean number of speech types children with BI in the LOW and HIGH gesture groups produced at each age, displayed in relation to the speech type data from the TD children.

Figure 1.

Mean number of speech types produced by children with pre- or perinatal unilateral brain lesions (BI) in the HIGH and LOW gesture groups at each observation session. Children with BI were divided into gesture groups based on their production of gesture types at 18 months. The boxes in the graph represent the interquartile range for speech types in the typically developing (TD) children; the line in the middle of each box represents the median for the TD children, and the tails represent the 5th and 95th percentiles. Reprinted from Figure 3 in Sauer, Levine & Goldin-Meadow (2010).

Children with BI whose gesture use was within the TD range at 18 months (the HIGH group) went on to develop a productive vocabulary at 22 and 26 months that was within the TD range, indeed close to the mean. In contrast, children with BI whose gesture use was below the TD range at 18 months (the LOW group) remained outside of (and below) the range for the TD children at both 22 and 26 months. The number of speech types produced by the HIGH gesture group differed significantly from the number produced by the LOW gesture group at 22 and 26 months (p < .005), but not at 18 months. Moreover, Spearman rank-order correlations showed that gesture production at 18 months was significantly related to productive vocabulary at both 22 months (ρ = .81, p = .002) and 26 months (ρ = .81, p = .003).
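Spearman's ρ is a Pearson correlation computed on ranks, with tied values sharing the average of their ranks, which makes it suitable for small samples such as this one where only the ordering of children matters. The sketch below illustrates that definition only; it does not compute p-values, and the function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        # Sorted positions start..end correspond to ranks start+1..end+1.
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

For example, `spearman_rho([1, 2, 3, 4], [1, 4, 9, 16])` returns 1.0: the relation is perfectly monotonic even though it is not linear, which is exactly the kind of association a rank-order correlation is designed to capture.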

The children with BI displayed a similar pattern for receptive vocabulary (PPVT administered at 30 months). There was a significant correlation between the number of gesture types a child produced at 18 months and that child's PPVT score at 30 months (ρ = .80, p = .003). Importantly, the number of speech types a child produced at 18 months did not significantly correlate with that child's PPVT score at 30 months (ρ = .34, p = .31), presumably because there was very little variation in the number of speech types these children produced at 18 months.

These findings suggest that early gesture use can predict subsequent spoken vocabulary, both receptive and productive, not only in children who are learning language at a typical pace, but also in children who are exhibiting delays. Early gesture thus has the potential to identify which children with unilateral pre- or perinatal lesions are likely to experience delays in word learning at a time when their language-learning trajectory is thought to be most malleable.

Özçalışkan, Levine, and Goldin-Meadow (2013) found similar effects at a group level with respect to the onset of different types of sentence constructions. For example, on average, children with BI produced their first instance of a sentence containing two arguments in gesture+speech (“mama”+point at stairs) at 22.0 (SD = 2.86) months, compared to 18.8 (SD = 2.67) months for the TD children, and the children with BI were comparably delayed in their first instance of a two-argument sentence conveyed entirely in speech (“dad inside”): 26.26 (SD = 4.00) months for the children with BI, compared to 22.93 (SD = 3.88) months for the TD children. The children with BI displayed the same pattern for argument+predicate sentences, producing them first in gesture+speech (“drink”+point at juice) and only later entirely in speech (“pour the tea”), both at a 4-month delay relative to the TD children. Interestingly, the children with BI (unlike the TD children) did not reliably produce predicate+predicate constructions in gesture+speech (“I see”+GIVE gesture) before producing them entirely in speech (“I get zipper and zip this up”). There was some evidence that motoric limitations in the children with BI constrained the types of iconic gestures they produced; this restricted range, in turn, may have had an impact on the breadth of predicate+predicate constructions the children with BI produced in speech, which were more limited than the TD children's predicate+predicate constructions. These findings raise the possibility that producing particular gesture+speech combinations not only predicts the emergence of parallel constructions in speech, but may also help children take their first steps into these constructions.

Gesture as a possible tool for intervention

Although the findings described thus far underscore the point that gesture and speech form an integrated system (cf. McNeill, 1992), they do not bear on the degree to which one component of the gesture-speech system impacts the other. Does gesture merely correlate with subsequent vocabulary development, or can it play a causal role in fostering that development? Gesture has been shown to play a causal role in older children learning to solve math problems. Telling 9- and 10-year-old children to gesture either prior to (Broaders, Cook, Mitchell, & Goldin-Meadow, 2007) or during (Cook, Mitchell, & Goldin-Meadow, 2008; Goldin-Meadow, Cook, & Mitchell, 2009; Novack, Congdon, Hemani-Lopez, & Goldin-Meadow, 2014) a math lesson facilitates the children's ability to profit from that lesson. In one case (Broaders et al., 2007), children were told to move their hands while explaining their responses to a set of math problems. Children complied and gestured, but the interesting result is that the gestures they produced when told to move their hands revealed knowledge about the problem that they had not previously revealed in either speech or their solutions to the problem. Having articulated this knowledge in the manual modality, the children were then more open to input from a math lesson.

Can gesture play the same type of causal role for young children in the early stages of language learning? Children's own actions have been shown to direct their attention to aspects of the environment important for acquiring particular skills (for review, see Rakison & Woodward, 2008). For example, Needham, Barrett and Peterman (2002) gave 3-month-old infants Velcro-covered “sticky mittens”; when the infants swiped at an object, the object would often “stick” to the infants' mittens, thus simulating a grabbing behavior well before the infants were able to grab on their own. Importantly, this experience led infants to increase attention to and exploration of novel objects, even when they were not wearing the mittens. The gestures a child produces are, in fact, actions and, when produced in a naming context, could increase attention to both the objects named and to the names themselves. Increasing attention in this way has the potential to be useful for early word learning, particularly since object names are among the first words learned and account for a majority of children's early spoken lexicons (Fenson et al., 1994). In addition, increasing pointing gestures in labeling contexts could emphasize the pragmatic utility of pointing for the transmission of knowledge, including transmitting names for objects (Csibra & Gergely, 2009). A child's own gesturing thus has the potential to affect the child's word learning.

Very little experimental work has been done to determine whether child gesture plays a causal role in language learning. The one exception is a study by Goodwyn, Acredolo and Brown (2000), who trained parents to model gestures for their 11-month-old infants in everyday interactions. They found that children in this gesture training condition performed significantly better than controls on a variety of language measures assessed through age 3 years. However, the study did not directly assess whether child gesture was the cause of the experimental effects on child language. Children's gesture was not directly observed, and effects of increases in child gesture on child speech were not tested. Thus, it is possible that the effects that parent gesture training had on child language operated through a mechanism other than child gesture.

To determine whether child gesture plays a causal role in vocabulary learning, we need to go beyond observing the spontaneous gestures young language-learners produce; we need to manipulate the gestures they produce. LeBarton, Goldin-Meadow and Raudenbush (2013) accomplished this goal by showing children pictures of objects, pointing to one of the pictures while labeling the object, and then telling the child to put his or her pointing finger on the picture (e.g., “that's a dress,” said while pointing at a picture of a dress, followed by, “can you put your finger here”). They compared this experimental condition (Child & Experimenter Gesture, C&EG) to two control conditions, one in which the experimenter labeled the picture while pointing at it but did not instruct the child to point (Experimenter Gesture, EG), and one in which the experimenter labeled the picture and did nothing else (No Gesture, NG). Fifteen children aged 17 months participated in an 8-week at-home intervention study consisting of 6 weekly training sessions plus a follow-up session 2 weeks later. All of the children were exposed to object words, but only some (the C&EG group) were told to point at the named objects. Before each of the 6 training sessions and at the follow-up session, children interacted naturally with their caregivers for at least a half-hour to establish a baseline against which changes in communication could be measured.

The first goal of the study was to see whether children's pointing behavior in interactions with the experimenter could be changed. The second was to determine whether this experimental manipulation would have a spillover effect on the children's gesturing in spontaneous interactions with their caregivers. If so, the third goal was to assess whether these increases in child gesturing would lead to increases in the children's spoken vocabulary.

Does instructing children to gesture increase child gesture during the experiment? As expected, children told to gesture (C&EG) produced more gestures during the experiment (M = 46.6, SD = 21.3) than children who were not told to gesture (M = 10.7, SD = 6.3). Moreover, this difference did have an effect on child gesturing overall: Children who were told to gesture (C&EG) had a greater increase in gesturing with their caregivers over the course of the study (M = 2.06, SD = 1.49) than children who were not told to gesture (M = 0.16, SD = 1.31). Gesturing is a behavior that can be manipulated.

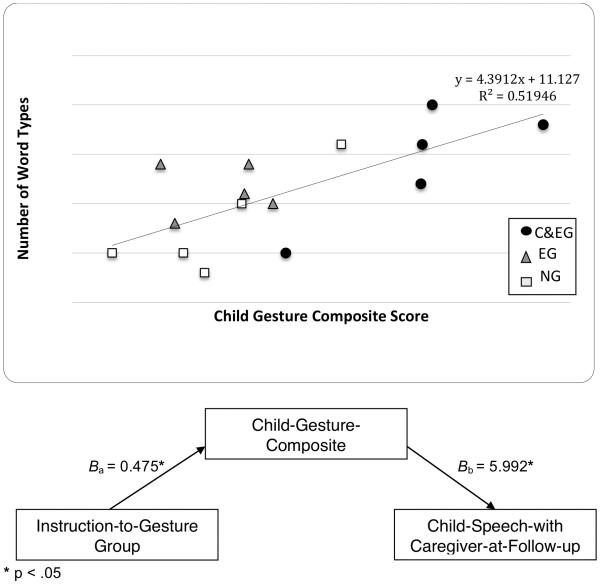

The key question is whether experimentally increasing gesturing had an impact on the number of words children produced after training in naturalistic interactions with their caregivers. To increase the stability of their gesture measure, LeBarton et al. (2013) combined their two child gesture measures (child gesture in experiment plus child gesture with caregiver) into one composite score. They found that the more children gestured during the 6-week training period (i.e., the higher their composite gesture scores), the bigger their vocabularies 2 weeks after the training period ended (i.e., the more different words they produced when interacting with their caregivers after the training ended; Figure 2, top).

Figure 2.

A scatter plot (top) showing the relation between child gesture (a composite score of the gestures produced with the experimenter plus the increase in gestures produced with the child's caregiver) and child speech at follow-up (word types children produced with the caregiver two weeks after session 6); each symbol represents a child and his or her training condition: C&EG = Child produces own Gesture and sees Experimenter Gesture; EG = child produces no gesture but sees Experimenter Gesture; NG = child produces and sees No Gesture. The Indirect Effects analysis (bottom) shows that the experimental manipulation (Instruction-to-Gesture, C&EG) had an effect on child speech after training (Child-Speech-with-Caregiver-at-Follow-up), which was mediated by child gesture during training (Child-Gesture-Composite). Reprinted from Figure 5 in LeBarton, Goldin-Meadow & Raudenbush (in press).

Importantly, LeBarton et al. (2013) also found a significant indirect effect (p < .001) of their experimental manipulation (telling children to gesture) on outcome (child speech with caregiver at follow-up) that operated through child gesture (child gesture composite score) (Figure 2, bottom). It is thus possible to experimentally increase the rate at which children gesture and, by doing so, increase the number of different words they produce in spontaneous interactions with their caregivers.
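The logic of an indirect effect can be illustrated with the classic product-of-coefficients approach: path a is the effect of the manipulation on the mediator (child gesture), path b is the effect of the mediator on the outcome (child speech) holding the manipulation constant, and the indirect effect is a × b. This sketch is offered only as an illustration under that assumption; the source does not describe the estimation model or the significance test LeBarton et al. (2013) used, no significance test is included here, and the variable names and toy data are hypothetical.

```python
def _mean(values):
    return sum(values) / len(values)


def ols_slope(x, y):
    """Slope from regressing y on a single predictor x (with intercept)."""
    mx, my = _mean(x), _mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def mediator_coefficient(x, m, y):
    """Coefficient on m when y is regressed on both x and m (with intercept).

    Solves the 2x2 normal equations on centered data with Cramer's rule.
    """
    mx, mm, my = _mean(x), _mean(m), _mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((c - mm) ** 2 for c in m)
    sxm = sum((a - mx) * (c - mm) for a, c in zip(x, m))
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    smy = sum((c - mm) * (b - my) for c, b in zip(m, y))
    det = sxx * smm - sxm ** 2  # zero if x and m are perfectly collinear
    return (sxx * smy - sxm * sxy) / det


def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect of x on y through m.

    x: hypothetical 0/1 indicator for being told to gesture (1 = C&EG)
    m: hypothetical child gesture composite score (the mediator)
    y: hypothetical word types produced with the caregiver at follow-up
    """
    a = ols_slope(x, m)                # path a: manipulation -> gesture
    b = mediator_coefficient(x, m, y)  # path b: gesture -> speech, x held constant
    return a * b
```

For instance, with x = [0, 0, 1, 1], m = [1, 2, 4, 5], and y = [3, 6, 12, 15] (so that y is exactly 3 × m), both a and b equal 3 and the indirect effect is 9. In practice the significance of such a product is usually assessed with a bootstrap or a related resampling procedure, which this sketch omits.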

Conclusion

There are two take-home messages from the literature on early gesture that are relevant to children with language delay. First, charting early gesture allows us to predict when a child is likely to acquire particular linguistic constructions in speech. Gesture thus has the potential to serve as a diagnostic tool to identify individuals at risk for language delay. The Sauer et al. (2010) findings suggest that a diagnostic tool based on the number of different meanings a child produces in gesture during the earliest stages of language learning could be used to identify children at risk for later vocabulary deficits well before those children can be identified using speech. Given that we see the first signs of sentence construction in gesture+speech combinations in typically developing children, we may also be able to use the number and types of gesture+speech combinations children produce prior to the onset of two-word combinations to identify individual children who are at risk for later deficits in sentence construction (e.g., Iverson, Longobardi & Caselli, 2003).

However, a great deal of work will have to be done to make a gesture diagnostic feasible. Clinicians and teachers do not have time to take long samples of child speech and examine them for gesture. But it should be possible to construct elicitation tasks that generate gesture, norm those tasks on typically developing children, and then use the tasks to assess gesture production in children at risk for language delay. Moreover, the advantage of a gesture test is that potential delays can be detected even before the onset of speech, providing an earlier start for intervention, and a longer time during which to intervene before school entry. Early identification would also help focus attention on children most at risk for language delay, and thus in need of intervention, before they display delays. Given limited resources, it would be useful to identify, among children at risk for language delay, which children are more likely to require intervention to end up within the range for typically developing children.

Second, encouraging children to gesture at very early ages has the potential to increase the size of their spoken vocabularies at school entry, and perhaps to facilitate the onset of a variety of linguistic constructions. Gesture thus has the potential to serve as a tool for intervention. There are at least two reasons why gesture might be an ideal candidate around which to design an intervention program. First, SES differences in vocabulary are already well established by the time children enter school. To alleviate social disparities, we need to intervene with low-SES children early in development, and we therefore need to focus on early-appearing skills; gesture is just such a skill. Second, unlike SES, which is extremely difficult to alter, gesturing can be manipulated, as demonstrated in the LeBarton et al. (2013) study.

Child gesture has the potential to influence language learning directly by giving children an opportunity to practice producing particular meanings by hand at a time when those meanings are difficult to produce by mouth (Iverson & Goldin-Meadow, 2005). Child gesture could also play a more indirect role in language learning by eliciting timely speech from listeners. Gesture can alert listeners (parents, teachers, clinicians) to the fact that a child is ready to learn a particular word or sentence; listeners might then adjust their talk, providing just the right input to help the child learn the word or sentence. For example, a child who does not yet know the word “cat” points at a cat, and his mother obligingly responds, “Yes, that's a cat.” Because they are finely tuned to a child's current state (cf. Vygotsky's, 1986, zone of proximal development), parental responses of this sort can be effective in teaching children how to express a particular idea in the language they are learning (Goldin-Meadow, Goodrich, Sauer, & Iverson, 2007; see also Golinkoff, 1986; Masur, 1982, 1983). It may therefore be beneficial for parents, teachers, and clinicians to encourage children to gesture, and then to use those gestures to guide the linguistic input they offer the children.

Acknowledgments

Research reported in this publication was supported by the Eunice Kennedy Shriver National Institute of Child Health & Human Development of the National Institutes of Health under Award Numbers P01HD040605 and R01HD47450, and by the National Science Foundation under Award Numbers BCS0925595 and SBE0541957 through the Spatial Intelligence and Learning Center. The content is solely the responsibility of the author and does not necessarily represent the official views of the National Institutes of Health or the National Science Foundation.

References

- Acredolo LP, Goodwyn SW. Symbolic gesturing in normal infants. Child Development. 1988;59:450–466.

- Bates E. Language and context: The acquisition of pragmatics. Academic Press; New York, NY: 1976.

- Bates E, Benigni L, Bretherton I, Camaioni L, Volterra V. The emergence of symbols: Cognition and communication in infancy. Academic Press; New York, NY: 1979.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136(4):539–550. doi: 10.1037/0096-3445.136.4.539.

- Capirci O, Iverson JM, Pizzuto E, Volterra V. Communicative gestures during the transition to two-word speech. Journal of Child Language. 1996;23:645–673.

- Cartmill E, Hunsicker D, Goldin-Meadow S. Pointing and naming are not redundant: Children use gesture to modify nouns before they modify nouns in speech. Developmental Psychology. 2014;50(6):1660–1666. doi: 10.1037/a0036003.

- Clark EV, Estigarribia B. Using speech and gesture to introduce new objects to young children. Gesture. 2011;11(1):1–23.

- Colonnesi C, Stams GJJM, Koster I, Noom MJ. The relation between pointing and language development: A meta-analysis. Developmental Review. 2010;30:352–366.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Csibra G, Gergely G. Natural pedagogy. Trends in Cognitive Sciences. 2009;13:148–153. doi: 10.1016/j.tics.2009.01.005.

- de Laguna G. Speech: Its function and development. Indiana University Press; Bloomington, IN: 1927.

- Demir OE, Levine S, Goldin-Meadow S. A tale of two hands: Children's early gesture use in narrative production predicts later narrative structure in speech. Journal of Child Language. 2014. doi: 10.1017/S0305000914000415.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. American Guidance Service; Circle Pines, MN: 1997.

- Feldman HM. Language learning with an injured brain. Language Learning and Development. 2005;1(3–4):265–288.

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59 (Serial No. 242).

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Harvard University Press; Cambridge, MA: 2003.

- Goldin-Meadow S, Butcher C. Pointing toward two-word speech in young children. In: Kita S, editor. Pointing: Where language, culture, and cognition meet. Erlbaum Associates; Mahwah, NJ: 2003.

- Goldin-Meadow S, Cook SW, Mitchell ZA. Gesturing gives children new ideas about math. Psychological Science. 2009;20(3):267–272. doi: 10.1111/j.1467-9280.2009.02297.x.

- Goldin-Meadow S, Goodrich W, Sauer E, Iverson J. Young children use their hands to tell their mothers what to say. Developmental Science. 2007;10:778–785. doi: 10.1111/j.1467-7687.2007.00636.x.

- Goldin-Meadow S, Morford M. Gesture in early child language: Studies of deaf and hearing children. Merrill-Palmer Quarterly. 1985;31:145–176.

- Golinkoff R. I beg your pardon?: The preverbal negotiation of failed messages. Journal of Child Language. 1986;13:455–476. doi: 10.1017/s0305000900006826.

- Goodwyn SW, Acredolo LP, Brown CA. Impact of symbolic gesturing on early language development. Journal of Nonverbal Behavior. 2000;24:81–103.

- Greenfield P, Smith J. The structure of communication in early language development. Academic Press; New York, NY: 1976.

- Guillaume P. Les débuts de la phrase dans le langage de l'enfant. Journal de Psychologie. 1927;24:1–25.

- Hart B, Risley TR. Meaningful differences in the everyday experiences of young children. Paul H. Brookes Publishing; Baltimore, MD: 1995.

- Iverson JM, Goldin-Meadow S. Gesture paves the way for language development. Psychological Science. 2005;16:368–371. doi: 10.1111/j.0956-7976.2005.01542.x.

- Iverson JM, Capirci O, Caselli MC. From communication to language in two modalities. Cognitive Development. 1994;9:23–43.

- Iverson JM, Capirci O, Volterra V, Goldin-Meadow S. Learning to talk in a gesture-rich world: Early communication of Italian vs. American children. First Language. 2008;28:164–181. doi: 10.1177/0142723707087736.

- Iverson JM, Longobardi E, Caselli MC. Relationship between gestures and words in children with Down's syndrome and typically developing children in the early stages of communicative development. International Journal of Language and Communication Disorders. 2003;38:179–197. doi: 10.1080/1368282031000062891.

- LeBarton ES, Goldin-Meadow S, Raudenbush S. Experimentally-induced increases in early gesture lead to increases in spoken vocabulary. Journal of Cognition and Development. 2013. doi: 10.1080/15248372.2013.858041.

- Leopold WF. Speech development of a bilingual child: A linguist's record. Volumes 1–4. Northwestern University Press; Evanston, IL: 1939–49.

- Masur EF. Mothers' responses to infants' object-related gestures: Influences on lexical development. Journal of Child Language. 1982;9:23–30. doi: 10.1017/s0305000900003585.

- Masur EF. Gestural development, dual-directional signaling, and the transition to words. Journal of Psycholinguistic Research. 1983;12:93–109.

- McNeill D. Hand and mind: What gestures reveal about thought. University of Chicago Press; Chicago: 1992.

- Morford M, Goldin-Meadow S. Comprehension and production of gesture in combination with speech in one-word speakers. Journal of Child Language. 1992;19:559–580. doi: 10.1017/s0305000900011569.

- Needham A, Barrett T, Peterman K. A pick-me-up for infants' exploratory skills: Early simulated experiences reaching for objects using “sticky mittens” enhances young infants' exploration skills. Infant Behavior and Development. 2002;25:279–295.

- Novack M, Congdon E, Hemani-Lopez N, Goldin-Meadow S. From action to abstraction: Using the hands to learn math. Psychological Science. 2014;25(4):903–910. doi: 10.1177/0956797613518351.

- Özçalışkan S, Goldin-Meadow S. Gesture is at the cutting edge of early language development. Cognition. 2005;96:B101–B113. doi: 10.1016/j.cognition.2005.01.001.

- Özçalışkan S, Levine S, Goldin-Meadow S. Gesturing with an injured brain: How gesture helps children with early brain injury learn linguistic constructions. Journal of Child Language. 2013;40(1):69–105. doi: 10.1017/S0305000912000220.

- Parise E, Csibra G. Electrophysiological evidence for the understanding of maternal speech by 9-month-old infants. Psychological Science. 2012;23(7):728–733. doi: 10.1177/0956797612438734.

- Rakison D, Woodward A. New perspectives on the effects of action on perceptual and cognitive development. Developmental Psychology. 2008;44:1209–1213. doi: 10.1037/a0012999.

- Rowe M, Goldin-Meadow S. Differences in early gesture explain SES disparities in child vocabulary size at school entry. Science. 2009a;323:951–953. doi: 10.1126/science.1167025.

- Rowe M, Goldin-Meadow S. Early gesture selectively predicts later language learning. Developmental Science. 2009b;12(1):182–187. doi: 10.1111/j.1467-7687.2008.00764.x.

- Rowe M, Özçalışkan S, Goldin-Meadow S. Learning words by hand: Gesture's role in predicting vocabulary development. First Language. 2008;28(2):185–203. doi: 10.1177/0142723707088310.

- Rowe ML, Raudenbush SW, Goldin-Meadow S. The pace of early vocabulary growth helps predict later vocabulary skill. Child Development. 2012;83(2):508–525. doi: 10.1111/j.1467-8624.2011.01710.x.

- Sauer E, Levine SC, Goldin-Meadow S. Early gesture predicts language delay in children with pre- or perinatal brain lesions. Child Development. 2010;81:528–539. doi: 10.1111/j.1467-8624.2009.01413.x.

- Scarborough HS. Index of productive syntax. Applied Psycholinguistics. 1990;11:1–22.

- Vygotsky L. Thought and language. The MIT Press; Cambridge, MA: 1986.

- Zinober B, Martlew M. Developmental changes in four types of gesture in relation to acts and vocalizations from 10 to 21 months. British Journal of Developmental Psychology. 1985;3:293–306.
