Abstract

Background:

Hospital-level measures of patient satisfaction and quality are now reported publically by the Centers for Medicare and Medicaid Services. There are limited metrics specific to cancer patients. We examined whether publically reported hospital satisfaction and quality data were associated with surgical oncologic outcomes.

Methods:

The Nationwide Inpatient Sample was utilized to identify patients with solid tumors who underwent surgical resection in 2009 and 2010. The hospitals were linked to Hospital Compare, which collects data on patient satisfaction, perioperative quality, and 30-day mortality for medical conditions (pneumonia, myocardial infarction [MI], and congestive heart failure [CHF]). The risk-adjusted hospital-level rates of morbidity and mortality were calculated for each hospital and the means compared between the highest and lowest performing hospital quartiles and reported as absolute reduction in risk (ARR), the difference in risk of the outcome between the two groups. All statistical tests were two-sided.

Results:

A total of 63197 patients treated at 448 hospitals were identified. For patients at high vs low performing hospitals based on Hospital Consumer Assessment of Healthcare Providers and Systems scores, the ARR in perioperative morbidity was 3.1% (95% confidence interval [CI] = 0.4% to 5.7%, P = .02). Similarly, the ARR for mortality based on the same measure was -0.4% (95% CI = -1.5% to 0.6%, P = .40). High performance on perioperative quality measures resulted in an ARR of 0% to 2.2% for perioperative morbidity (P > .05 for all). Similarly, there was no statistically significant association between hospital-level mortality rates for MI (ARR = 0.7%, 95% CI = -1.0% to 2.5%), heart failure (ARR = 1.0%, 95% CI = -0.6% to 2.7%), or pneumonia (ARR = 1.6%, 95% CI = -0.3% to 3.5%) and complications for oncologic surgery patients.

Conclusion:

Currently available measures of patient satisfaction and quality are poor predictors of outcomes for cancer patients undergoing surgery. Specific metrics for long-term oncologic outcomes and quality are needed.

The measurement of quality has become a major focus of US medicine (1,2). Prior work has shown that quality is often highly variable. While patients often do not receive treatments and interventions that are known to be beneficial, overuse of unnecessary and sometimes harmful treatments is also common (3).

A number of sources have advocated the public reporting of hospital quality and satisfaction data to help medical consumers make informed choices about where they receive care and to help hospitals improve quality (4). The Hospital Quality Alliance, a public and private collaborative, collects data on process measures for common medical conditions including acute myocardial infarction, pneumonia, heart failure, and perioperative surgical care, as well as measures of patient satisfaction (4). These hospital-level quality data are updated regularly and have now been made available to the public (5).

Although hospital satisfaction and quality data are now widely available, whether these measures correlate with improved patient outcomes remains uncertain (6–8). Studies in other disciplines have reported mixed results on whether process measures and satisfaction correlate with outcomes (7,9–14). In oncology, although a number of initiatives are underway to improve the quality of cancer care, public reporting of outcomes and the subjective experiences of cancer patients remains infrequent (15,16). Aside from data on procedural volume, comparative data to guide newly diagnosed cancer patients requiring surgery are often sparse. Given the lack of specific data in oncology, cancer patients are forced to rely on publically available hospital data that is often derived from general medical and surgical patients to guide decision making.

Given the relative lack of publically reported hospital data for inpatient oncologic care and outcomes, we explored whether currently available publically reported hospital data for medical and surgical conditions could be used as a surrogate for quality of cancer care. Specifically, we examined whether hospital-level patient satisfaction and quality metrics for common medical and surgical conditions were correlated with perioperative outcomes for patients undergoing inpatient oncologic surgery.

Methods

Nationwide Inpatient Sample

Data from the Nationwide Inpatient Sample (NIS) (2009–2010) were linked to Hospital Compare (2008) data describing hospital-level patient satisfaction, compliance with perioperative process measures, and medical condition mortality. NIS, developed and maintained by the Agency for Healthcare Research and Quality, includes a random sample of 20% of all hospital discharges within the United States. In 2010, NIS captured approximately eight million hospital stays from 45 states (17). The study was deemed exempt by the Columbia University Institutional Review Board.

Patients and Procedures

Patients with an ICD-9 code for esophageal, pancreatic, breast, lung, gastric, colon, uterine, ovarian, prostate, and bladder cancer in combination with an ICD-9 code for the site-specific cancer-directed surgery were included (Supplementary Table 1, available online). For each surgical procedure, the extent of surgery (ie, partial vs total organ resection) and utilization of a minimally invasive surgical approach were noted. Patients with codes for multiple tumor types were excluded. All patients were deidentified so written informed consent was not required.

Each patient’s age (<40, 40–49, 50–59, 60–69, 70–79, ≥80 years), gender, race (white, black, Hispanic, other, unknown), income (low, medium, high, highest, unknown), and insurance status (Medicare, Medicaid, commercial, self pay, other, unknown) were noted. The hospital admission was classified as elective, urgent, or other/unknown. Comorbid medical conditions were measured using the Elixhauser index (18).

Hospitals

Hospitals were classified based on teaching status (teaching, nonteaching), location (urban, rural), region (northeast, midwest, south, west), size (small, medium, large), and ownership (government, private/not for profit, private/for profit, and unknown). Procedural volume was estimated as annualized volume (total number of procedures divided by the number of years in which a given hospital performed at least one procedure). Separate volume calculations were performed for each procedure and hospital volume classified as low, intermediate, or high.
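The annualized volume definition above can be illustrated with a minimal Python sketch. The function names and the cut points passed to `volume_category` are hypothetical; the article does not report the thresholds used to define low, intermediate, and high volume.

```python
def annualized_volume(procedure_years):
    """Mean number of procedures per active year for one hospital and procedure.

    procedure_years: one calendar year per procedure performed at the hospital.
    Only years with at least one procedure count toward the denominator.
    """
    years = list(procedure_years)
    if not years:
        return 0.0
    active_years = len(set(years))  # years in which at least one procedure was done
    return len(years) / active_years


def volume_category(volume, low_cutoff, high_cutoff):
    """Classify a volume as low, intermediate, or high.

    The cut points are hypothetical inputs, not values taken from the study.
    """
    if volume < low_cutoff:
        return "low"
    if volume < high_cutoff:
        return "intermediate"
    return "high"
```

For example, a hospital with two procedures in 2009 and one in 2010 would have an annualized volume of 1.5 under this definition.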

Outcomes

The primary outcomes were hospital-level risk-adjusted mortality, risk-adjusted complication rates, and failure to rescue. Risk-adjusted mortality rates for each hospital were calculated as previously described (19,20). A logistic regression model was used to predict the probability of death for each individual patient. The models included the clinical and demographic characteristics of patients, procedure volume, type of surgery, and hospital characteristics. The predicted probabilities of all patients at a given hospital were then summed to determine the hospital-specific expected mortality. The risk-adjusted mortality rate for each hospital was then estimated by multiplying the ratio of the observed to expected mortality by the overall mortality of the cohort.
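As a rough illustration of the final step described above, the following Python sketch computes a risk-adjusted rate from an observed death count and per-patient predicted probabilities. It assumes the predicted probabilities have already been produced by a fitted logistic regression model; the function name and inputs are illustrative and are not the authors' SAS code.

```python
import math


def risk_adjusted_rate(observed_deaths, predicted_probs, overall_rate):
    """Observed-to-expected ratio scaled by the overall cohort rate.

    observed_deaths: number of deaths observed at the hospital.
    predicted_probs: model-predicted probability of death for each patient
                     treated at the hospital (assumed to come from a
                     previously fitted logistic regression model).
    overall_rate:    mortality rate of the entire cohort (as a proportion).
    """
    expected_deaths = math.fsum(predicted_probs)  # hospital-specific expected mortality
    if expected_deaths <= 0:
        raise ValueError("expected number of deaths must be positive")
    return observed_deaths / expected_deaths * overall_rate
```

A hospital with 3 observed deaths and 2 expected deaths in a cohort with 2.5% overall mortality would have a risk-adjusted mortality rate of 1.5 × 2.5% = 3.75%.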

We examined major perioperative complications including myocardial infarction, cardiopulmonary arrest, renal failure, respiratory failure, stroke, venous thromboembolism, shock, hemorrhage, gastrointestinal bleeding, pneumonia, bacteremia/sepsis, abscess, and wound complications (19,21). Risk-adjusted complication rates were calculated for each hospital using methodology similar to the analysis of risk-adjusted mortality. Failure to rescue was defined as death in patients with any of the complications listed above (19,20,22). The failure-to-rescue rate for each hospital was determined by dividing the number of patients who died after a complication by the total number of patients with a complication in the given hospital.
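The failure-to-rescue definition above can be sketched in a few lines of Python. The input format (one pair of flags per patient) is an assumption made for illustration only.

```python
def failure_to_rescue_rate(patients):
    """Proportion of patients with a complication who died.

    patients: iterable of (had_complication, died) boolean pairs for one hospital.
    Returns None when no patient at the hospital had a complication, because
    the rate is undefined in that case.
    """
    deaths_among_complicated = [died for had_complication, died in patients
                                if had_complication]
    if not deaths_among_complicated:
        return None
    return sum(deaths_among_complicated) / len(deaths_among_complicated)
```

Deaths in patients without a recorded complication do not enter either the numerator or the denominator.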

Hospital Compare

Hospital Compare is a repository of data that is publically reported and includes structural measures, process measures, outcomes, hospital resource utilization, and patient satisfaction (5). It is estimated that 98% of hospitals now participate (8). Hospital-level data on patient satisfaction (Hospital Consumer Assessment of Healthcare Providers and Systems), surgical process measures (Surgical Care Improvement Project), and mortality for medical conditions were obtained from Hospital Compare.

Hospital Consumer Assessment of Healthcare Providers and Systems

Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) is a survey administered nationally to measure patients’ perceptions of their hospital experience. HCAHPS has been validated by the Centers for Medicare and Medicaid Services (CMS) and the Agency for Healthcare Research and Quality (AHRQ) (23).

The HCAHPS survey consists of 27 questions about hospital experience that focus on communication with staff, staff responsiveness, cleanliness and quietness, pain management, medication communication, and discharge planning. The survey contains two global questions in which patients are asked to rate their overall experience (responses from 0, worst to 10, best, and grouped as low [0–6], medium [7–8], and high [9–10]) and whether they would recommend the hospital to others (responses as no [probably not or definitely not], yes, probably recommend, and yes, definitely recommend). Additionally, the survey includes a number of items to characterize patient attributes that are used for risk adjustment across hospitals. The survey is administered to a random sample of patients between 48 hours and six weeks after discharge (23,24).

Our analysis focused on the two questions directed at the global assessment of patient experiences. For overall satisfaction, we ranked hospitals based on the percentage of patients who reported their experience as high (9–10). Similarly, we ranked hospitals based on the proportion of respondents who would definitely recommend the hospital to family or friends (23).
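The grouping of the 0 to 10 overall rating and the ranking of hospitals by the share of high ratings can be sketched as follows. This is a simplified illustration using raw responses; it ignores the patient-mix adjustment applied to published HCAHPS scores, and the function names and data layout are hypothetical.

```python
def rating_group(rating):
    """Map a 0-10 overall rating to the low (0-6), medium (7-8), or high (9-10) group."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    if rating <= 6:
        return "low"
    if rating <= 8:
        return "medium"
    return "high"


def percent_high(ratings):
    """Percentage of respondents who rated the hospital 9 or 10."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    n_high = sum(1 for r in ratings if rating_group(r) == "high")
    return 100.0 * n_high / len(ratings)


def rank_hospitals(ratings_by_hospital):
    """Order hospital identifiers from highest to lowest percentage of high ratings."""
    scores = {hospital: percent_high(ratings)
              for hospital, ratings in ratings_by_hospital.items()}
    return sorted(scores, key=scores.get, reverse=True)
```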

Surgical Care Improvement Project

The Surgical Care Improvement Project (SCIP) measures consist of perioperative process measures (25). SCIP measures use of perioperative antibiotics for high-risk surgical patients (antibiotic administered within one hour of incision, appropriate antibiotic chosen, antibiotic discontinued within 24 hours of surgery), hair removal, urinary catheter removal, perioperative glucose control, temperature monitoring, and compliance with venous thromboembolism (VTE) prophylaxis (VTE prophylaxis ordered, VTE prophylaxis administered). Data from SCIP is abstracted and is one of the Joint Commission’s core measures (25). We utilized compliance with the three perioperative antibiotic measures and the two perioperative VTE prophylaxis measures.

Mortality for Medical Conditions

CMS and Hospital Quality Alliance (HQA) began reporting hospital-level 30-day mortality rates for acute myocardial infarction (AMI) and heart failure (HF) in 2007 and for pneumonia (PN) in 2008. These mortality measures are risk-standardized to facilitate comparisons across hospitals (26). We ranked hospitals based on their 30-day risk-standardized mortality rates for AMI, HF, and PN.

Statistical Analysis

Hospital-level data from Hospital Compare (HCAHPS scores, SCIP measures, and the mortality for medical conditions) are displayed as medians with interquartile ranges. Patients and hospitals were grouped into quartiles based on HCAHPS scores for satisfaction (based on percentage of patients reporting high satisfaction) and willingness to recommend the hospital (based on percentage of patients who would definitely recommend the hospital). Across these quartiles, frequency distributions between categorical variables were compared using χ2 tests, while continuous variables were compared with t tests, analysis of variance, and Wilcoxon rank-sum tests.

The correlations between the HCAHPS scores for satisfaction and willingness to recommend the hospital and risk-adjusted mortality and risk-adjusted complication rates are reported using Pearson’s correlation coefficient. Each hospital’s HCAHPS scores and SCIP and mortality measures were determined. Hospitals were then ranked based on their respective scores for each measure. We then compared surgical outcomes (risk-adjusted mortality, complication rates, and failure-to-rescue rates) between high- and low-performing hospitals. Specifically, we compared surgical outcomes between hospitals performing at the highest quartile (75th percentile) and lowest quartile (25th percentile) for HCAHPS, SCIP, and the mortality measures. We report the percentage absolute risk reduction (ARR) for the outcomes between the highest- and lowest-performing hospitals for the measures. The ARR is the incremental difference in risks of an outcome between two groups. All analyses were performed with SAS version 9.4 (SAS Institute Inc, Cary, NC). All statistical tests were two-sided. A P value of less than .05 was considered statistically significant.
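The quartile comparison and the correlation described above can be sketched in Python. This is a simplified illustration, not the SAS analysis used in the study: it takes the ARR as the mean risk-adjusted outcome in the lowest-performing quartile minus that in the highest-performing quartile, uses an unequal-variance standard error, and substitutes a normal approximation for the t distribution when forming the confidence interval. It also assumes that a higher score means better performance. All function names are hypothetical.

```python
from statistics import NormalDist, mean, quantiles, variance


def arr_between_quartiles(scores, outcomes, confidence=0.95):
    """ARR (lowest minus highest performing quartile) with an approximate CI.

    scores:   hospital-level performance scores (higher assumed to be better).
    outcomes: hospital-level risk-adjusted outcome rates, in the same order.
    """
    q1, _, q3 = quantiles(scores, n=4)  # 25th, 50th, and 75th percentile cut points
    low = [o for s, o in zip(scores, outcomes) if s <= q1]
    high = [o for s, o in zip(scores, outcomes) if s >= q3]
    arr = mean(low) - mean(high)
    # Unequal-variance standard error of the difference in means.
    se = (variance(low) / len(low) + variance(high) / len(high)) ** 0.5
    # Normal approximation (an assumption); a t quantile would be slightly wider.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return arr, (arr - z * se, arr + z * se)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5
```

With this sign convention, a positive ARR indicates a lower rate of the outcome at the highest-performing hospitals.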

Results

From 2009 to 2010, we identified 135132 surgical oncology patients treated at 1384 hospitals in the NIS database. In 2010, 18 states did not supply hospital-level identifiers to NIS; thus, 92041 (68.1%) patients at 797 (57.6%) hospitals were successfully matched to Hospital Compare. The final cohort included the 63197 (46.8%) patients at 448 (32.4%) hospitals that reported responses to HCAHPS questions.

Overall, the median value for high patient satisfaction across hospitals was 62.0% (IQR = 56.0%-69.0%), while the median value for whether patients would recommend the hospital was 68.0% (IQR = 61.0%-74.0%) (Table 1). The median hospital-level scores for the five SCIP measures we examined ranged from 83.0% (receipt of appropriate antibiotics) to 95.0% (discontinuation of antibiotics within 24 hours of surgery). The median hospital-level mortality rates for medical conditions were 10.8% (IQR = 10.1%-11.7%) for heart failure, 11.3% (IQR = 10.3%-12.2%) for pneumonia, and 15.9% (IQR = 15.3%-16.7%) for myocardial infarction.

Table 1.

Median hospital performance on measures of satisfaction, quality, and outcomes*

| Metric | Hospitals | Patients | Median, % | IQR, % |
|---|---|---|---|---|
| HCAHPS | | | | |
| Overall satisfaction | 448 | 63 197 | 62.0 | 56.0–69.0 |
| Recommend hospital to family or friends | 448 | 63 197 | 68.0 | 61.0–74.0 |
| SCIP | | | | |
| Received antibiotics one hour before incision | 338 | 47 878 | 90.0 | 85.0–94.0 |
| Received appropriate antibiotic | 332 | 46 879 | 83.0 | 72.0–89.0 |
| Antibiotics stopped within 24 hours of surgery | 338 | 47 878 | 95.0 | 92.0–97.0 |
| VTE prophylaxis ordered | 332 | 46 879 | 87.5 | 79.0–92.0 |
| VTE prophylaxis received | 338 | 47 878 | 85.0 | 76.0–91.0 |
| Mortality measures | | | | |
| Myocardial infarction | 440 | 63 013 | 15.9 | 15.3–16.7 |
| Heart failure | 442 | 62 924 | 10.8 | 10.1–11.7 |
| Pneumonia | 442 | 62 318 | 11.3 | 10.3–12.2 |

* HCAHPS = Hospital Consumer Assessment of Healthcare Providers and Systems; IQR = interquartile range; SCIP = Surgical Care Improvement Project; VTE = venous thromboembolism.

Hospitals were then stratified into quartiles based on the median overall satisfaction scores. The median overall satisfaction rate was 52.0% (IQR = 46.0%-55.0%) at the lowest-satisfaction hospitals, 60.0% (IQR = 59.0%-61.0%) at the intermediate-satisfaction hospitals, 65.0% (IQR = 64.0%-67.0%) at the high-satisfaction facilities, and 72.0% (IQR = 70.0%-75.0%) at the highest-satisfaction hospitals (Table 2). Patients at low-satisfaction hospitals were older (P < .001), more often female (P < .001), had higher reported incomes (P < .001), more frequently had Medicare (P < .001), and were more often black or Hispanic (P < .001) than those at the highest-satisfaction hospitals. Patients at the lowest-satisfaction hospitals were more often admitted urgently (P < .001) and had a greater number of comorbid medical conditions (P < .001). Low-satisfaction hospitals were more often large centers (P = .03), were more often located outside the Midwest (P = .01), and were more often private, for-profit centers (P < .001). Similar trends were noted when hospitals were stratified based on how often patients would recommend the hospital.

Table 2.

Comparison of hospitals based on adjusted HCAHPS scores for high patient satisfaction

| Characteristic | Low satisfaction | Intermediate satisfaction | High satisfaction | Highest satisfaction | P† |
|---|---|---|---|---|---|
| | No. (%) | No. (%) | No. (%) | No. (%) | |
| Hospitals | 113 (25.2) | 116 (25.9) | 106 (23.7) | 113 (25.2) | |
| Patients | 13 279 (21.0) | 16 451 (26.0) | 17 555 (27.8) | 15 912 (25.2) | |
| Range | 33.0–56.0 | 57.0–62.0 | 63.0–68.0 | 69.0–91.0 | |
| Mean (SD)* | 49.6 (5.99) | 59.9 (1.68) | 65.4 (1.63) | 73.0 (4.27) | <.001 |
| Median (IQR)* | 52.0 (46.0–55.0) | 60.0 (59.0–61.0) | 65.0 (64.0–67.0) | 72.0 (70.0–75.0) | <.001 |
| Patient characteristics | | | | | |
| Age, y | | | | | <.001 |
| <40 | 344 (2.6) | 417 (2.5) | 453 (2.6) | 383 (2.4) | |
| 40–49 | 1141 (8.6) | 1416 (8.6) | 1515 (8.6) | 1323 (8.3) | |
| 50–59 | 2787 (21.0) | 3732 (22.7) | 4126 (23.5) | 3703 (23.3) | |
| 60–69 | 4075 (30.7) | 5055 (30.7) | 5701 (32.5) | 5377 (33.8) | |
| 70–79 | 3005 (22.6) | 3671 (22.3) | 3811 (21.7) | 3444 (21.6) | |
| ≥80 | 1927 (14.5) | 2160 (13.1) | 1949 (11.1) | 1682 (10.6) | |
| Sex | | | | | <.001 |
| Male | 5557 (41.8) | 7206 (43.8) | 8306 (47.3) | 7578 (47.6) | |
| Female | 7673 (57.8) | 9130 (55.5) | 9189 (52.3) | 8290 (52.1) | |
| Unknown | 49 (0.4) | 115 (0.7) | 60 (0.3) | 44 (0.3) | |
| Primary site | | | | | <.001 |
| Bladder | 254 (1.9) | 390 (2.4) | 431 (2.5) | 378 (2.4) | |
| Breast | 2316 (17.4) | 2999 (18.2) | 3191 (18.2) | 2781 (17.5) | |
| Colon | 3573 (26.9) | 4007 (24.4) | 3532 (20.1) | 3333 (20.9) | |
| Esophageal | 38 (0.3) | 69 (0.4) | 176 (1.0) | 126 (0.8) | |
| Lung | 1544 (11.6) | 2458 (14.9) | 2400 (13.7) | 2261 (14.2) | |
| Ovary | 824 (6.2) | 728 (4.4) | 743 (4.2) | 697 (4.4) | |
| Pancreas | 246 (1.9) | 298 (1.8) | 444 (2.5) | 437 (2.7) | |
| Prostate | 2585 (19.5) | 3506 (21.3) | 4577 (26.1) | 4104 (25.8) | |
| Gastric | 357 (2.7) | 382 (2.3) | 419 (2.4) | 355 (2.2) | |
| Uterine | 1542 (11.6) | 1614 (9.8) | 1642 (9.4) | 1440 (9.0) | |
| Race | | | | | <.001 |
| White | 9254 (69.7) | 11 288 (68.6) | 13 555 (77.2) | 10 885 (68.4) | |
| Black | 1369 (10.3) | 1668 (10.1) | 1207 (6.9) | 1075 (6.8) | |
| Hispanic | 1300 (9.8) | 1498 (9.1) | 1165 (6.6) | 449 (2.8) | |
| Other | 1114 (8.4) | 921 (5.6) | 791 (4.5) | 961 (6.0) | |
| Unknown | 242 (1.8) | 1076 (6.5) | 837 (4.8) | 2542 (16.0) | |
| Year of surgery | | | | | <.001 |
| 2009 | 7484 (56.4) | 9139 (55.6) | 7280 (41.5) | 8857 (55.7) | |
| 2010 | 5795 (43.6) | 7312 (44.4) | 10 275 (58.5) | 7055 (44.3) | |
| Income | | | | | <.001 |
| Low | 2236 (16.8) | 2715 (16.5) | 2850 (16.2) | 3301 (20.7) | |
| Intermediate | 3147 (23.7) | 3194 (19.4) | 4171 (23.8) | 3903 (24.5) | |
| High | 3827 (28.8) | 4026 (24.5) | 4656 (26.5) | 3370 (21.2) | |
| Highest | 3753 (28.3) | 6225 (37.8) | 5547 (31.6) | 5005 (31.5) | |
| Unknown | 316 (2.4) | 291 (1.8) | 331 (1.9) | 333 (2.1) | |
| Insurance status | | | | | <.001 |
| Medicare | 6540 (49.3) | 7783 (47.3) | 8119 (46.2) | 7363 (46.3) | |
| Medicaid | 791 (6.0) | 1032 (6.3) | 961 (5.5) | 652 (4.1) | |
| Commercial | 5390 (40.6) | 7043 (42.8) | 7892 (45.0) | 7331 (46.1) | |
| Self pay | 267 (2.0) | 194 (1.2) | 237 (1.4) | 231 (1.5) | |
| Other | 291 (2.2) | 396 (2.4) | 333 (1.9) | 330 (2.1) | |
| Unknown | 0 | 3 (0.02) | 13 (0.1) | 5 (0.03) | |
| Admission type | | | | | <.001 |
| Urgent | 2244 (16.9) | 2052 (12.5) | 1739 (9.9) | 1849 (11.6) | |
| Elective | 8219 (61.9) | 10 326 (62.8) | 12 292 (70.0) | 11 528 (72.4) | |
| Other | 2816 (21.2) | 4073 (24.8) | 3524 (20.1) | 2535 (15.9) | |
| Admission source | | | | | <.001 |
| ER | 476 (3.6) | 462 (2.8) | 371 (2.1) | 180 (1.1) | |
| Hospital/facility | 9074 (68.3) | 11 107 (67.5) | 13 126 (74.8) | 12 635 (79.4) | |
| Other | 3729 (28.1) | 4882 (29.7) | 4058 (23.1) | 3097 (19.5) | |
| Elixhauser comorbidity | | | | | <.001 |
| 1 | 6870 (51.7) | 8749 (53.2) | 9741 (55.5) | 8989 (56.5) | |
| 2 | 3669 (27.6) | 4531 (27.5) | 4731 (26.9) | 4305 (27.1) | |
| ≥3 | 2740 (20.6) | 3171 (19.3) | 3083 (17.6) | 2618 (16.5) | |
| Hospital characteristics | | | | | |
| Hospital size | | | | | .03 |
| Small | 13 (11.5) | 25 (21.6) | 23 (21.7) | 36 (31.9) | |
| Medium | 39 (34.5) | 37 (31.9) | 33 (31.1) | 32 (28.3) | |
| Large | 61 (54.0) | 54 (46.6) | 50 (47.2) | 45 (39.8) | |
| Region | | | | | .01 |
| Northeast | 33 (29.2) | 37 (31.9) | 35 (33.0) | 27 (23.9) | |
| Midwest | 6 (5.3) | 18 (15.5) | 22 (20.8) | 25 (22.1) | |
| South | 38 (33.6) | 22 (19.0) | 20 (18.9) | 30 (26.5) | |
| West | 36 (31.9) | 39 (33.6) | 29 (27.4) | 31 (27.4) | |
| Location | | | | | .01 |
| Rural | 20 (17.7) | 25 (21.6) | 17 (16.0) | 38 (33.6) | |
| Urban | 93 (82.3) | 91 (78.4) | 89 (84.0) | 75 (66.4) | |
| Teaching status | | | | | .97 |
| Nonteaching | 82 (72.6) | 82 (70.7) | 77 (72.6) | 83 (73.5) | |
| Teaching | 31 (27.4) | 34 (29.3) | 29 (27.4) | 30 (26.5) | |
| Hospital ownership | | | | | <.001 |
| Government | 5 (4.4) | 6 (5.2) | 8 (7.5) | 15 (13.3) | |
| Private, not for profit | 19 (16.8) | 27 (23.3) | 21 (19.8) | 32 (28.3) | |
| Private, for profit | 33 (29.2) | 10 (8.6) | 12 (11.3) | 4 (3.5) | |
| Unknown | 56 (49.6) | 73 (62.9) | 65 (61.3) | 62 (54.9) | |

* Mean and median represent values of Hospital Consumer Assessment of Healthcare Providers and Systems scores within each group. HCAHPS = Hospital Consumer Assessment of Healthcare Providers and Systems; IQR = interquartile range.

† Analysis of variance and Wilcoxon rank-sum tests were used to compare continuous variables, while χ2 tests were used to compare categorical variables. All tests were two-sided.

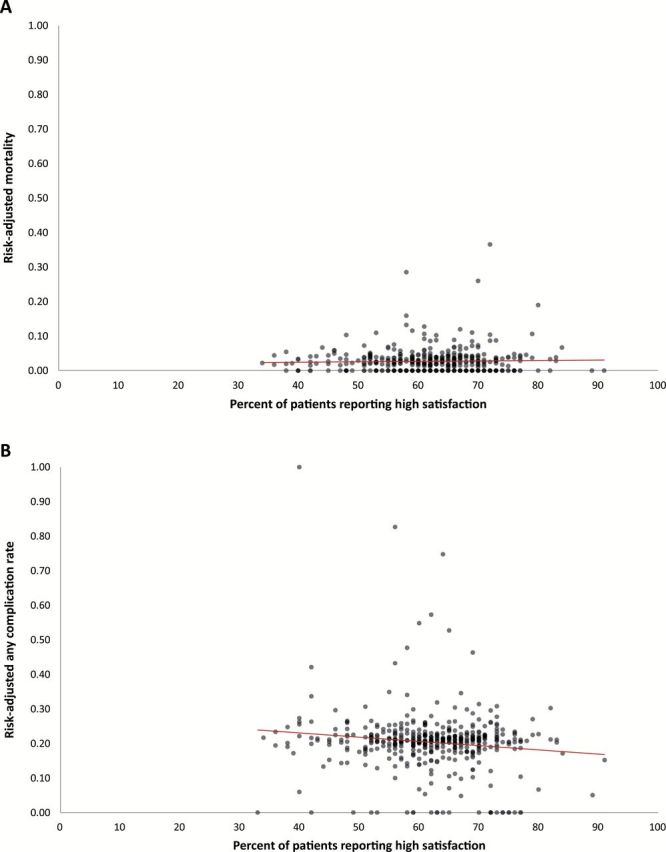

Figure 1 displays the correlation graphs comparing risk-adjusted, hospital-level outcomes with HCAHPS satisfaction scores. There was a weak correlation between high hospital satisfaction and risk-adjusted mortality (r = 0.03) and risk-adjusted complication rates (r = -0.13). Similarly, when hospitals were stratified based on the percentage of HCAHPS respondents that would definitely recommend the hospital, there was a weak correlation with risk-adjusted mortality (r = 0.02) and a weak negative correlation with risk-adjusted complication rates (r = -0.09).

Figure 1.

Comparison between patient satisfaction and outcomes. A) Correlation between hospital-level satisfaction and risk-adjusted mortality. B) Correlation between hospital-level satisfaction and risk-adjusted complication rates.

After risk adjustment, outcomes were compared between hospitals in the 25th and 75th percentile for each measure (Table 3). For example, among hospitals with an overall satisfaction score in the 25th percentile, the risk-adjusted complication rate was 21.8%, while the complication rate was 18.8% among hospitals in the 75th percentile of patient satisfaction. The absolute risk reduction in complications between low- and high-performing hospitals based on this rating was 3.1% (95% CI = 0.4% to 5.7%, P = .02). The ARR in complications was 1.1% (95% CI = -1.4% to 3.5%, P = .38) across low- and high-performing hospitals based on patient recommendation. The ARR based on performance on the SCIP measures ranged from 0% to 2.2% across the measures, although none of the measures was associated with a statistically significant reduction in complications. Similarly, there was no statistically significant association between hospital-level mortality rates for myocardial infarction (MI) (ARR = 0.7%, 95% CI = -1.0% to 2.5%), heart failure (ARR = 1.0%, 95% CI = -0.6% to 2.7%), or pneumonia (ARR = 1.6%, 95% CI = -0.3% to 3.5%) and complications for oncologic surgery patients.

Table 3.

Comparison of absolute risk for complications, mortality, and failure to rescue between lowest-performing (25th percentile) and highest-performing (75th percentile) hospitals for each hospital measure (HCAHPS, SCIP, and mortality measures)

| Metric | Perioperative complications, % | | | | Perioperative mortality, % | | | | Failure to rescue, % | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 25th | 75th | ARR (95% CI) | P* | 25th | 75th | ARR (95% CI) | P* | 25th | 75th | ARR (95% CI) | P* |
| HCAHPS | | | | | | | | | | | | |
| Highest (9–10) overall rating | 21.8 | 18.8 | 3.1 (0.4 to 5.7) | .02 | 2.4 | 2.9 | -0.4 (-1.5 to 0.6) | .40 | 5.2 | 5.3 | -0.1 (-2.0 to 1.8) | .92 |
| Would definitely recommend hospital | 20.5 | 19.4 | 1.1 (-1.4 to 3.5) | .38 | 2.8 | 2.6 | 0.2 (-0.7 to 1.2) | .67 | 5.3 | 5.4 | -0.1 (-1.9 to 1.9) | .99 |
| SCIP | | | | | | | | | | | | |
| Received antibiotics within 1 hour of surgery | 21.4 | 19.2 | 2.2 (-0.8 to 5.2) | .15 | 3.0 | 2.6 | 0.4 (-0.8 to 1.6) | .51 | 6.2 | 5.3 | 0.8 (-1.3 to 2.9) | .43 |
| Received treatment to prevent blood clots | 20.6 | 20.6 | 0 (-3.2 to 3.1) | .99 | 2.6 | 2.2 | 0.4 (-0.5 to 1.4) | .35 | 5.2 | 4.4 | 0.8 (-0.9 to 2.5) | .35 |
| Received appropriate antibiotics | 21.7 | 20.4 | 1.2 (-1.7 to 4.2) | .40 | 2.2 | 3.2 | -1.0 (-2.3 to 0.2) | .09 | 4.1 | 6.1 | -2.0 (-3.8 to -0.2) | .03 |
| Doctor ordered treatment to prevent blood clot | 20.9 | 19.0 | 1.9 (-0.4 to 4.2) | .10 | 2.6 | 2.3 | 0.3 (-0.7 to 1.3) | .56 | 5.3 | 5.1 | 0.2 (-1.8 to 2.2) | .84 |
| Patients whose antibiotics were stopped within 24 hours after surgery | 20.8 | 20.5 | 0.3 (-2.9 to 3.5) | .85 | 2.8 | 2.1 | 0.7 (-0.4 to 1.9) | .20 | 5.6 | 4.3 | 1.3 (-0.6 to 3.2) | .19 |
| Mortality measures | | | | | | | | | | | | |
| 30-day death from MI | 21.2 | 20.4 | 0.7 (-1.0 to 2.5) | .41 | 2.6 | 2.3 | 0.3 (-0.5 to 1.0) | .50 | 5.1 | 5.3 | -0.2 (-1.8 to 1.5) | .85 |
| 30-day death from heart failure | 21.3 | 20.2 | 1.0 (-0.6 to 2.7) | .20 | 2.2 | 3.2 | -0.9 (-1.7 to -0.2) | .01 | 4.6 | 6.7 | -2.1 (-3.7 to -0.6) | .008 |
| 30-day death from pneumonia | 20.9 | 19.4 | 1.6 (-0.3 to 3.5) | .10 | 2.7 | 2.8 | -0.1 (-1.0 to 0.8) | .79 | 6.0 | 5.5 | 0.5 (-1.3 to 2.3) | .56 |

* Two-sample t tests were used, and all tests were two-sided. ARR = absolute risk reduction; HCAHPS = Hospital Consumer Assessment of Healthcare Providers and Systems; SCIP = Surgical Care Improvement Project.

The absolute risk reductions for perioperative mortality based on performance on HCAHPS scores (range = -0.4% to 0.2%), SCIP measures (range = -1.0% to 0.7%), and medical condition mortality measures (range = -0.9% to 0.3%) were small. The only statistically significant association was for heart failure mortality, in which mortality for surgical oncology patients was 2.2% at the highest heart failure mortality hospitals compared with 3.2% at those centers in the 75th percentile for mortality from heart failure (ARR = -0.9%, 95% CI = -1.7% to -0.2%, P = .01). Similar trends were noted for failure to rescue; hospitals in the highest quartile of mortality from heart failure had higher failure-to-rescue rates.

Discussion

Our findings suggest that publically reported hospital performance data is a poor surrogate for outcomes for cancer patients undergoing surgical resection. Hospital satisfaction, measures of adherence to surgical quality measures, and mortality for common medical conditions were all associated with very small differences in morbidity and mortality for oncologic surgery.

The measurement of patient experience has become an important focus of hospitals and is now tied to reimbursement by CMS through its Value-Based Purchasing initiative (27). However, whether subjective measures of patient satisfaction correlate with quality of care has been difficult to demonstrate (4,28–33). An analysis of over 800 hospitals noted an association between patient experiences measured by HCAHPS and medical and surgical process measures of quality and found some association between HCAHPS scores and patient safety indicators, although results for some of these indicators were mixed (28). A second study examined the association between patient satisfaction and quality indicators after myocardial infarction. Among over 1800 respondents, the investigators noted that there was no association between satisfaction and either quality of care or survival (32). Further, patient perception of quality often differs from reported quality (34). We were unable to identify a consistent association between hospital satisfaction scores and perioperative outcomes for patients undergoing cancer-directed surgery.

Unlike patient satisfaction, which is largely subjective, process measures that are reported by hospitals represent best practice and are typically developed based on rigorous data of an intervention’s association with outcomes. Despite the rationale for reporting process measures, prior work has shown mixed results in linking process measure adherence and outcomes (7,9–12). An analysis of Hospital Compare performance measures for acute myocardial infarction, pneumonia, and heart failure found that hospital compliance with performance measures was associated with only small differences in mortality (7). A large analysis of the SCIP measures noted that compliance with the composite of all measures was associated with a reduction in infectious morbidity; however, there was no association between any of the individual measures, which are the data that are publically reported, and infection rates (12). Similarly, we found no association between SCIP compliance rates and all-cause morbidity and mortality among oncologic surgery patients. While process metrics may have a direct effect on outcome, there is growing recognition that these measures likely also reflect other underlying hospital characteristics and unmeasured factors (11).

To date, quality in surgical oncology has focused largely on procedural volume and process measures. The recognition of the importance of the relationship between procedural volume and outcomes for high-risk procedures led to public reporting of volume data and the development of recommended volume minimums for some procedures, including pancreatectomy and esophagectomy (35–39). Importantly, there is evidence that the recognition of this relationship has not only altered referral patterns but also improved survival (40). More recently, national quality metrics have been proposed for breast, colon, and rectal cancer (41). These metrics largely focus on process measures such as lymph node counts but currently are not easily accessible by health consumers. A recent analysis of Hospital Compare data found that accredited cancer centers performed better on measures of patient experience and process measures but worse on outcome measures (42). The modest associations we found between publically reported, hospital-level data from Hospital Compare and outcomes for surgical oncology patients suggest that using available data for decision-making is of limited value.

We recognize a number of important limitations. First, we were unable to perform complete risk adjustment. Risk adjustment was performed for numerous characteristics, although detailed oncologic characteristics of patients that may have influenced outcomes were limited. Further, given that disease severity in oncology is often highly variable, differences in case mix and unmeasured confounders may have influenced our findings (43). Second, the magnitude of differences in scores for individual measures between high- and low-performing hospitals was often modest. Although we performed a number of sensitivity analyses stratifying hospitals into deciles and tertiles in which our findings were largely unchanged, differences may exist between some hospitals. Additional sensitivity analyses were also performed, examining the other individual HCAHPS metrics.

Third, measurement of inpatient care using administrative data may undercapture complications (44). To mitigate this bias, we examined only major complications likely to generate a code. Further, overall mortality and failure to rescue after a complication do not rely on billing data, so their ascertainment should not vary across hospitals. We also recognize that patient satisfaction scores and adherence to process measures were not specific to patients undergoing oncologic surgery; however, the goal of our study was to determine whether publicly reported hospital data were associated with outcomes for surgical oncology patients. Although our risk-adjustment models correct for hospital volume, differing sample sizes across hospitals may have influenced our findings. Lastly, because some states do not report hospital identifiers to NIS, we were unable to match all hospitals, and some hospitals did not provide HCAHPS scores.

Based on these data, currently available publicly reported hospital quality and satisfaction data appear to be of modest value for cancer patients attempting to determine hospital outcomes for cancer-directed surgery. Encouragingly, a number of initiatives specific to cancer patients are currently underway to measure the quality of both inpatient and outpatient care (45,46). Efforts should be made to ensure that these metrics correlate with outcomes, measure both short- and long-term outcomes, and are readily available to newly diagnosed cancer patients.

Funding

Dr. Wright (NCI R01 CA169121) and Dr. Hershman (NCI R01 CA166084) are recipients of grants, and Dr. Tergas is the recipient of a fellowship (NCI R25 CA094061-11) from the National Cancer Institute.

The sponsor had no role in the design, collection, or analysis of data; the preparation of the manuscript; or the decision to submit the data for publication.

The authors have no conflicts of interest or disclosures.

References

- 1. Birkmeyer JD, Dimick JB, Birkmeyer NJ. Measuring the quality of surgical care: structure, process, or outcomes? J Am Coll Surg. 2004;198(4):626–632.

- 2. Lilford R, Mohammed MA, Spiegelhalter D, Thomson R. Use and misuse of process and outcome data in managing performance of acute medical care: avoiding institutional stigma. Lancet. 2004;363(9415):1147–1154.

- 3. Wright JD, Neugut AI, Ananth CV, et al. Deviations from guideline-based therapy for febrile neutropenia in cancer patients and their effect on outcomes. JAMA Intern Med. 2013;173(7):559–568.

- 4. Jha AK, Li Z, Orav EJ, Epstein AM. Care in U.S. hospitals--the Hospital Quality Alliance program. N Engl J Med. 2005;353(3):265–274.

- 5. Hospital Compare. http://www.medicare.gov/hospitalcompare/search.html Accessed April 6, 2014.

- 6. Jha AK, Orav EJ, Zheng J, Epstein AM. Patients’ perception of hospital care in the United States. N Engl J Med. 2008;359(18):1921–1931.

- 7. Werner RM, Bradlow ET. Relationship between Medicare’s hospital compare performance measures and mortality rates. JAMA. 2006;296(22):2694–2702.

- 8. Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299(18):2180–2187.

- 9. Werner RM, Bradlow ET, Asch DA. Hospital performance measures and quality of care. LDI issue brief. 2008;13(5):1–4.

- 10. Werner RM, Bradlow ET. Public reporting on hospital process improvements is linked to better patient outcomes. Health Aff (Millwood). 2010;29(7):1319–1324.

- 11. Werner RM, Bradlow ET, Asch DA. Does hospital performance on process measures directly measure high quality care or is it a marker of unmeasured care? Health Serv Res. 2008;43(5 Pt 1):1464–1484.

- 12. Stulberg JJ, Delaney CP, Neuhauser DV, Aron DC, Fu P, Koroukian SM. Adherence to surgical care improvement project measures and the association with postoperative infections. JAMA. 2010;303(24):2479–2485.

- 13. Friedberg MW, Schneider EC, Rosenthal MB, Volpp KG, Werner RM. Association between participation in a multipayer medical home intervention and changes in quality, utilization, and costs of care. JAMA. 2014;311(8):815–825.

- 14. Rosenthal MB, Landrum MB, Meara E, Huskamp HA, Conti RM, Keating NL. Using performance data to identify preferred hospitals. Health Serv Res. 2007;42(6 Pt 1):2109–2119; discussion 294–323.

- 15. Birkmeyer JD, Finlayson EV, Birkmeyer CM. Volume standards for high-risk surgical procedures: potential benefits of the Leapfrog initiative. Surgery. 2001;130(3):415–422.

- 16. Birkmeyer JD, Dimick JB. Potential benefits of the new Leapfrog standards: effect of process and outcomes measures. Surgery. 2004;135(6):569–575.

- 17. Nationwide Inpatient Sample (NIS). Healthcare Cost and Utilization Project (HCUP). 2007–2009. Agency for Healthcare Research and Quality, Rockville, MD. http://www.hcup-us.ahrq.gov/nisoverview.jsp Accessed January 26, 2014.

- 18. van Walraven C, Austin PC, Jennings A, Quan H, Forster AJ. A modification of the Elixhauser comorbidity measures into a point system for hospital death using administrative data. Med Care. 2009;47(6):626–633.

- 19. Ghaferi AA, Birkmeyer JD, Dimick JB. Complications, failure to rescue, and mortality with major inpatient surgery in Medicare patients. Ann Surg. 2009;250(6):1029–1034.

- 20. Ghaferi AA, Birkmeyer JD, Dimick JB. Hospital volume and failure to rescue with high-risk surgery. Med Care. 2011;49(12):1076–1081.

- 21. Wright JD, Herzog TJ, Siddiq Z, et al. Failure to rescue as a source of variation in hospital mortality for ovarian cancer. J Clin Oncol. 2012;30(32):3976–3982.

- 22. Ghaferi AA, Birkmeyer JD, Dimick JB. Variation in hospital mortality associated with inpatient surgery. N Engl J Med. 2009;361(14):1368–1375.

- 23. HCAHPS: Patients’ Perspectives of Care Survey. http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/HospitalHCAHPS.html Accessed April 6, 2014.

- 24. Hospital Consumer Assessment of Healthcare Providers and Systems. http://www.hcahpsonline.org/home.aspx Accessed April 6, 2014.

- 25. Surgical Care Improvement Project. http://www.jointcommission.org/core_measure_sets.aspx Accessed April 6, 2014.

- 26. Outcome Measures. http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/OutcomeMeasures.html Accessed April 6, 2014.

- 27. Hospital Value-Based Purchasing. http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/hospital-value-based-purchasing/index.html?redirect=/hospital-value-based-purchasing/ Accessed April 15, 2014.

- 28. Isaac T, Zaslavsky AM, Cleary PD, Landon BE. The relationship between patients’ perception of care and measures of hospital quality and safety. Health Serv Res. 2010;45(4):1024–1040.

- 29. Girotra S, Cram P, Popescu I. Patient satisfaction at America’s lowest performing hospitals. Circ Cardiovasc Qual Outcomes. 2012;5(3):365–372.

- 30. Glickman SW, Boulding W, Manary M, et al. Patient satisfaction and its relationship with clinical quality and inpatient mortality in acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3(2):188–195.

- 31. Chang JT, Hays RD, Shekelle PG, et al. Patients’ global ratings of their health care are not associated with the technical quality of their care. Ann Intern Med. 2006;144(9):665–672.

- 32. Lee DS, Tu JV, Chong A, Alter DA. Patient satisfaction and its relationship with quality and outcomes of care after acute myocardial infarction. Circulation. 2008;118(19):1938–1945.

- 33. Sandoval GA, Brown AD, Sullivan T, Green E. Factors that influence cancer patients’ overall perceptions of the quality of care. Int J Qual Health Care. 2006;18(4):266–274.

- 34. Bickell NA, Neuman J, Fei K, Franco R, Joseph KA. Quality of breast cancer care: perception versus practice. J Clin Oncol. 2012;30(15):1791–1795.

- 35. Bach PB, Cramer LD, Schrag D, Downey RJ, Gelfand SE, Begg CB. The influence of hospital volume on survival after resection for lung cancer. N Engl J Med. 2001;345(3):181–188.

- 36. Begg CB, Riedel ER, Bach PB, et al. Variations in morbidity after radical prostatectomy. N Engl J Med. 2002;346(15):1138–1144.

- 37. Birkmeyer JD, Siewers AE, Finlayson EV, et al. Hospital volume and surgical mortality in the United States. N Engl J Med. 2002;346(15):1128–1137.

- 38. Birkmeyer JD, Stukel TA, Siewers AE, Goodney PP, Wennberg DE, Lucas FL. Surgeon volume and operative mortality in the United States. N Engl J Med. 2003;349(22):2117–2127.

- 39. Birkmeyer JD, Sun Y, Wong SL, Stukel TA. Hospital volume and late survival after cancer surgery. Ann Surg. 2007;245(5):777–783.

- 40. Finks JF, Osborne NH, Birkmeyer JD. Trends in hospital volume and operative mortality for high-risk surgery. N Engl J Med. 2011;364(22):2128–2137.

- 41. American College of Surgeons. CoC Quality of Care Measures. http://www.facs.org/cancer/qualitymeasures.html Accessed March 26, 2014.

- 42. Merkow RP, Chung JW, Paruch JL, Bentrem DJ, Bilimoria KY. Relationship between cancer center accreditation and performance on publicly reported quality measures. Ann Surg. 2014;259(6):1091–1097.

- 43. Stukel TA, Fisher ES, Wennberg DE, Alter DA, Gottlieb DJ, Vermeulen MJ. Analysis of observational studies in the presence of treatment selection bias: effects of invasive cardiac management on AMI survival using propensity score and instrumental variable methods. JAMA. 2007;297(3):278–285.

- 44. Quan H, Li B, Saunders LD, et al. Assessing validity of ICD-9-CM and ICD-10 administrative data in recording clinical conditions in a unique dually coded database. Health Serv Res. 2008;43(4):1424–1441.

- 45. Jacobson JO, Neuss MN, McNiff KK, et al. Improvement in oncology practice performance through voluntary participation in the Quality Oncology Practice Initiative. J Clin Oncol. 2008;26(11):1893–1898.

- 46. Spinks TE, Walters R, Feeley TW, et al. Improving cancer care through public reporting of meaningful quality measures. Health Aff (Millwood). 2011;30(4):664–672.
