Abstract

The basis of musical consonance has been debated for centuries without resolution. Three interpretations have been considered: (i) that consonance derives from the mathematical simplicity of small integer ratios; (ii) that consonance derives from the physical absence of interference between harmonic spectra; and (iii) that consonance derives from the advantages of recognizing biological vocalization and human vocalization in particular. Whereas the mathematical and physical explanations are at odds with the evidence that has now accumulated, biology provides a plausible explanation for this central issue in music and audition.

Keywords: consonance, biology, music, audition, vocalization

Why we humans hear some tone combinations as relatively attractive (consonance) and others as less attractive (dissonance) has been debated for over 2,000 years (1–4). These perceptual differences form the basis of melody when tones are played sequentially and harmony when tones are played simultaneously.

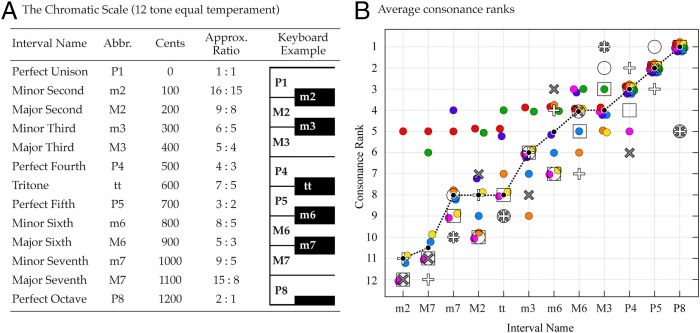

Musicians and theorists have long considered consonance and dissonance to depend on the fundamental frequency ratio between tones (regularly repeating sound signals that we perceive as having pitch). A number of studies have asked listeners to rank the relative consonance of a standard set of two-tone chords whose fundamental frequency ratios range from 1:1 to 2:1 (Fig. 1A) (5–8). The results show broad agreement about which chords are more consonant and which less (Fig. 1B). But why two-tone combinations with different frequency ratios are differently appealing to listeners has never been settled.

Fig. 1.

The relative consonance of musical intervals. (A) The equal tempered chromatic scale used in modern Western and much other music around the world. Each interval is defined by the ratio between a tone’s fundamental frequency and that of the lowest (tonic) tone in the scale. In equal temperament, small adjustments to these ratios ensure that every pair of adjacent tones is separated by 100 cents (a logarithmic measure of frequency). (B) The relative consonance assigned by listeners to each of the 12 chromatic intervals played as two-tone chords. The filled black circles and dashed line show the median rank for each interval; colored circles represent data from ref. 5; open circles from ref. 6; crosses from ref. 7; open squares from ref. 8. These data were collected between 1898 and 2012, in Germany, Austria, the United Kingdom, the United States, Japan, and Singapore.
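To make the cent measure in the caption concrete, the sketch below converts frequency ratios to cents (1200 × log2 of the ratio) and compares a few small-integer ratios with their equal-tempered counterparts. The small-integer ratios are common 5-limit choices assumed for illustration. They are not necessarily the exact values shown in Fig. 1A.

```python
import math

def cents(ratio: float) -> float:
    """Size of a frequency ratio in cents: 1200 * log2(ratio), so an octave (2:1) is 1200."""
    return 1200.0 * math.log2(ratio)

def equal_tempered_ratio(semitones: int) -> float:
    """Frequency ratio of an interval spanning `semitones` steps of 12-tone equal temperament."""
    return 2.0 ** (semitones / 12)

# (semitones, small-integer ratio) for a few intervals; assumed 5-limit values, for illustration.
JUST_RATIOS = {
    "minor third": (3, 6 / 5),
    "major third": (4, 5 / 4),
    "perfect fourth": (5, 4 / 3),
    "perfect fifth": (7, 3 / 2),
    "octave": (12, 2 / 1),
}

for name, (semitones, just) in JUST_RATIOS.items():
    et = equal_tempered_ratio(semitones)
    # Positive deviation: the equal-tempered interval is wider than the small-integer ratio.
    print(f"{name:15s} just {cents(just):7.2f} c   ET {cents(et):7.2f} c   "
          f"deviation {cents(et) - cents(just):+6.2f} c")
```

For example, the equal-tempered fifth is about 2 cents narrower than 3:2, whereas the equal-tempered major third is about 14 cents wider than 5:4.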

In what follows, we discuss the major ways that mathematicians, physicists, musicians, psychologists, and philosophers have sought to rationalize consonance and dissonance, concluding that the most promising framework for understanding consonance is evolutionary biology.

Consonance Based on Mathematical Simplicity

Most accounts of consonance begin with the interpretation of the Greek mathematician and philosopher Pythagoras in the sixth century BCE. According to legend, Pythagoras showed that tones generated by plucked strings whose lengths were related by small integer ratios were pleasing. In light of this observation, the Pythagoreans limited permissible tone combinations to the octave (2:1), the perfect fifth (3:2), and the perfect fourth (4:3), ratios that all had spiritual and cosmological significance in Pythagorean philosophy (9, 10).

The mathematical range of Pythagorean consonance was extended in the Renaissance by the Italian music theorist and composer Gioseffo Zarlino. Zarlino expanded the Pythagorean “tetractys” (the numbers 1 through 4) to include the numbers 5 and 6, thus accommodating the major third (5:4), minor third (6:5), and major sixth (5:3), which had become increasingly popular in the polyphonic music of the Late Middle Ages (2). Echoing the Pythagoreans, Zarlino based his rationale on the numerological significance of 6, which is the first integer that equals the sum of its divisors other than itself (1 + 2 + 3 = 6), a sum that also equals their product (1 × 2 × 3 = 6). Additional reasons included the natural world as it was then understood (six “planets” in the sky), and Christian theology (the world was created in 6 days) (11). According to one music historian, Zarlino sought to create “a divinely ordained natural sphere within which the musician could operate freely” (ref. 10, p. 103).

Although Pythagorean beliefs have long been derided as numerological mysticism, the coincidence of numerical simplicity and pleasing perceptual effect continues to influence music theory and concepts of consonance even today (12, 13). The idea that tone combinations are pleasing because they are simple, however, raises the question of why simple is pleasing. And theories of consonance based on mathematical simplicity have no better answer today than did Pythagoras.

Consonance Based on Physics

Enthusiasm for mathematical explanations of consonance waned during the scientific revolution in the 17th century, which introduced a physical understanding of musical tones. The science of sound attracted many scholars of that era, including Vincenzo and Galileo Galilei, René Descartes, and later Daniel Bernoulli and Leonhard Euler. Vincenzo Galilei, who had studied under Zarlino, undermined theories based on mathematical simplicity by demonstrating the presence of more complex ratios in numerologically “pure” scales (e.g., 32:27 between the second and fourth tones of Zarlino’s justly tuned major scale). He also showed that simple ratios do not account for consonance when their terms express the relative weights of hammers or volumes enclosed in bells, as proponents of mathematical theories had assumed. Galileo confirmed his father’s work, and went on to show that, properly conceived, the terms of consonant ratios express the frequencies at which objects vibrate (10).
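The 32:27 example is easy to verify with exact fractions. The sketch below assumes the standard 5-limit just major scale (1, 9/8, 5/4, 4/3, 3/2, 5/3, 15/8, 2) as the justly tuned scale in question; dividing the fourth degree by the second gives 32/27 even though each degree is a simple ratio of the tonic.

```python
from fractions import Fraction

# Ratio of each degree of a justly tuned major scale to the tonic (the standard
# 5-limit just major scale, assumed here to match the scale discussed in the text).
JUST_MAJOR = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(4, 3),
              Fraction(3, 2), Fraction(5, 3), Fraction(15, 8), Fraction(2)]

def interval(lower_degree: int, upper_degree: int) -> Fraction:
    """Exact frequency ratio between two scale degrees, numbered from 1 (tonic)."""
    return JUST_MAJOR[upper_degree - 1] / JUST_MAJOR[lower_degree - 1]

print(interval(1, 4))  # 4/3: the fourth above the tonic is a simple ratio
print(interval(2, 4))  # 32/27: the same "pure" scale also contains a complex ratio
print(interval(2, 6))  # 40/27: a fifth (second to sixth degree) that is not 3:2
```

Because `Fraction` keeps every ratio exact and reduced, no floating-point rounding can hide or create the complex ratios.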

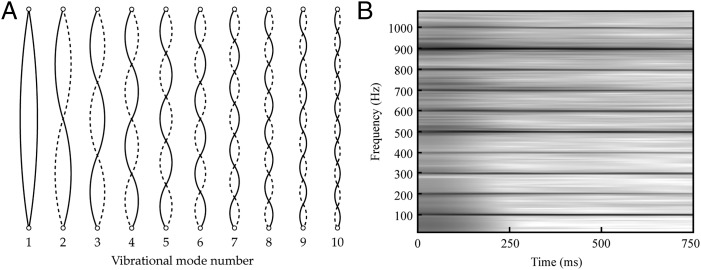

At about the same time, interest grew in the sounds produced by vibrating objects that do not correspond to the pitch of the fundamental frequency. Among the early contributors to this further issue was the French theologian and music theorist Marin Mersenne, who correctly concluded that the pitches of these “overtones” corresponded to specific musical intervals above the fundamental (10). A physical basis for overtones was provided shortly thereafter by the French mathematician and physicist Joseph Sauveur, who showed that the overtones of a plucked string arise from different vibrational modes and that their frequencies are necessarily integer multiples of the fundamental (Fig. 2). By the early 19th century, further contributions from Bernoulli, Euler, Jean le Rond d’Alembert, and Joseph Fourier had provided a complete description of this “harmonic series,” which arises not only from strings, but also from air columns and other physical systems (14).

Fig. 2.

The harmonic series generated by a vibrating string. (A) Diagram of the first 10 vibrational modes of a string stretched between fixed points. (B) A spectrogram of the frequencies produced by a vibrating string with a fundamental frequency of 100 Hz. Each of the dark horizontal lines is generated by one of the vibrational modes in A. The first mode gives rise to the fundamental at 100 Hz, the second mode to the component at 200 Hz, the third mode to the component at 300 Hz, etc. These component vibrations are called harmonics, overtones, or partials and their frequencies are integer multiples of the fundamental frequency. Note that many ratios between harmonic frequencies correspond to ratios used to define musical intervals (cf. Fig. 1A).
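The correspondence noted at the end of the caption can be checked directly: the frequency ratio between harmonics m and n of the same tone is simply n:m. The sketch below lists the first 10 harmonics of a 100 Hz fundamental and collects the harmonic pairs whose ratios match a handful of interval ratios; the choice of which ratios to label is an assumption for illustration.

```python
from fractions import Fraction

def harmonic_series(f0: float, n: int) -> list[float]:
    """Frequencies of the first n harmonics (integer multiples) of fundamental f0."""
    return [f0 * k for k in range(1, n + 1)]

# A few interval ratios used for labeling (an illustrative selection, not exhaustive).
INTERVAL_NAMES = {
    Fraction(2, 1): "octave", Fraction(3, 2): "perfect fifth",
    Fraction(4, 3): "perfect fourth", Fraction(5, 3): "major sixth",
    Fraction(5, 4): "major third", Fraction(6, 5): "minor third",
    Fraction(8, 5): "minor sixth",
}

def intervals_between_harmonics(n: int) -> dict[Fraction, list[tuple[int, int]]]:
    """Map each labeled ratio to the (lower, upper) harmonic-number pairs that produce it."""
    found: dict[Fraction, list[tuple[int, int]]] = {}
    for lower in range(1, n + 1):
        for upper in range(lower + 1, n + 1):
            ratio = Fraction(upper, lower)  # (upper * f0) / (lower * f0), reduced exactly
            if ratio in INTERVAL_NAMES:
                found.setdefault(ratio, []).append((lower, upper))
    return found

print(harmonic_series(100.0, 10))
for ratio, pairs in sorted(intervals_between_harmonics(10).items(), reverse=True):
    print(f"{INTERVAL_NAMES[ratio]:15s} {str(ratio):4s} harmonics {pairs}")
```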

The ratios among harmonic overtones also drew the attention of the 18th century French music theorist and composer Jean-Philippe Rameau, who used their correspondence to musical intervals to conclude that the harmonic series was the foundation of musical harmony (14). He asserted that all physical objects capable of producing tonal sounds generate harmonic vibrations, the most prominent being the octave, perfect fifth, and major third. For Rameau, this conclusion justified the appeal of the major triad and made consonance a direct consequence of musical ratios naturally present in tones. Dissonance, on the other hand, occurred when intervals did not easily fit into this harmonic structure (2, 14).

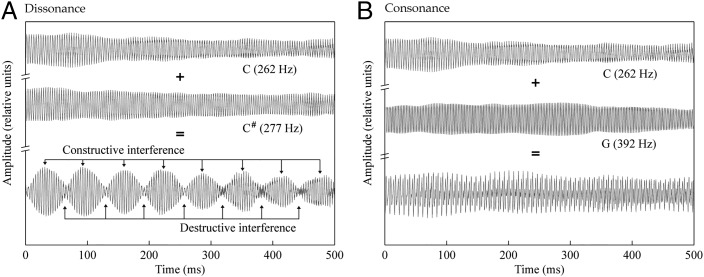

Despite these earlier insights, the major contributor to modern physical theories of consonance and dissonance was the 19th century polymath Hermann von Helmholtz, whose ideas are still regarded by many as the most promising approach to understanding these phenomena (15). Helmholtz credited Rameau and d’Alembert with the concept of the harmonic series as critical to music but bridled at the idea that a tone combination is consonant because it is natural, arguing that “in nature we find not only beauty but ugliness… proof that anything is natural does not suffice to justify it esthetically” (ref. 16, p. 232). He proposed instead that consonance arises from the absence of “jarring” amplitude fluctuations that can be heard in some tone combinations but not others. The basis for this auditory “roughness” is the physical interaction of sound waves with similar frequencies, whose combination gives rise to alternating periods of constructive and destructive interference (Fig. 3). Helmholtz took these fluctuations in amplitude to be inherently unpleasant, suggesting that “intermittent excitation” of auditory nerve fibers prevents “habituation.” He devised an algorithm for estimating the expected roughness of two-tone chords and showed that the combinations perceived as relatively consonant indeed exhibited little or no roughness, whereas those perceived as dissonant had relatively more. Helmholtz concluded that auditory roughness is the “true and sufficient cause of consonance and dissonance in music” (ref. 16, p. 227).

Fig. 3.

Auditory roughness as the basis of dissonance and its absence as the basis of consonance. (A) Acoustic waveforms produced by middle C, C#, and their combination (a minor second) played on an organ. Small differences in the harmonic frequencies that comprise these tones give rise to an alternating pattern of constructive and destructive interference perceived as auditory roughness. (B) Waveforms produced by middle C, G, and their combination (a perfect fifth). Helmholtz argued that the relative lack of auditory roughness in this interval is responsible for its consonance.
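The interference pattern in Fig. 3A can be illustrated with two pure (sine) tones instead of full organ spectra, a simplification assumed here. By the sum-to-product identity, sin(2πf1t) + sin(2πf2t) = 2 cos(π(f1 − f2)t) sin(π(f1 + f2)t), so the summed wave oscillates near the mean frequency while its envelope |2 cos(π(f1 − f2)t)| rises and falls |f1 − f2| times per second.

```python
import math

def summed_wave(f1: float, f2: float, t: float) -> float:
    """Instantaneous value of two equal-amplitude sine tones added together."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)

def envelope(f1: float, f2: float, t: float) -> float:
    """Slowly varying amplitude of the sum, from the sum-to-product identity."""
    return abs(2 * math.cos(math.pi * (f1 - f2) * t))

def beat_rate(f1: float, f2: float) -> float:
    """Envelope maxima (moments of full constructive interference) per second."""
    return abs(f1 - f2)

C4, CSHARP4, G4 = 261.63, 277.18, 392.00  # equal-tempered fundamentals in Hz

# Minor second: the fundamentals themselves beat at a rate of about 15.5 per second.
print(f"C4 vs C#4 fundamentals: {beat_rate(C4, CSHARP4):.2f} beats/s")
# Perfect fifth: the closest partials (3rd harmonic of C4, 2nd of G4) nearly coincide,
# so their interference fluctuates only slowly.
print(f"3*C4 vs 2*G4: {beat_rate(3 * C4, 2 * G4):.2f} beats/s")
```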

Studies based on more definitive physiological and psychophysical data in the 20th century generally supported Helmholtz’s interpretation. Georg von Békésy’s mapping of physical vibrations along the basilar membrane in response to sine tones made it possible to compare responses of the inner ear with the results of psychoacoustical studies (17, 18). This comparison gave rise to the idea of “critical bands,” regions ∼1 mm in length along the basilar membrane within which the inner ear integrates frequency information (19–21). Greenwood (18) related critical bands to auditory roughness by comparing estimates of their bandwidth to the psychophysics of roughness perception (22). The result suggested that tones falling within the same critical band are perceived as rough whereas tones falling in different critical bands are not (see also ref. 23). A link was thus forged between Helmholtz’s conception of dissonance and modern sensory physiology, and the phrase “sensory dissonance” was coined to describe this synthesis (24–26). The fact that perceived roughness tracked physical interactions on the basilar membrane was taken as support for Helmholtz’s theory.
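Greenwood's frequency-position function offers one way to translate a ∼1 mm critical band into frequency. The sketch below uses the human constants commonly cited from Greenwood's later work (A = 165.4 Hz, a = 2.1, k = 0.88), an assumed 35 mm basilar membrane, and a fixed 1 mm band. All three are simplifications for illustration, not the procedure of refs. 18 or 23.

```python
import math

# Greenwood frequency-position function F(x) = A * (10**(a*x) - k), with x the
# fractional distance from the cochlear apex. Human constants assumed as commonly cited.
A, a, k = 165.4, 2.1, 0.88
COCHLEA_LENGTH_MM = 35.0  # approximate human basilar-membrane length (assumption)

def frequency_at(x: float) -> float:
    """Characteristic frequency (Hz) at fractional position x (0 = apex, 1 = base)."""
    return A * (10 ** (a * x) - k)

def position_mm(freq: float) -> float:
    """Inverse map: distance (mm) from the apex of the place tuned to `freq`."""
    x = math.log10(freq / A + k) / a
    return x * COCHLEA_LENGTH_MM

def same_critical_band(f1: float, f2: float, band_mm: float = 1.0) -> bool:
    """Crude test: are the places excited by two tones closer than one band?"""
    return abs(position_mm(f1) - position_mm(f2)) < band_mm

print(frequency_at(0.0), frequency_at(1.0))  # approximate range of the map, in Hz
print(same_critical_band(261.63, 277.18))    # minor second: places ~0.27 mm apart
print(same_critical_band(261.63, 392.00))    # perfect fifth: places ~2 mm apart
```

Under these assumptions the fundamentals of a minor second fall within one band and those of a perfect fifth do not, which is the pattern the critical-band account of roughness relies on.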

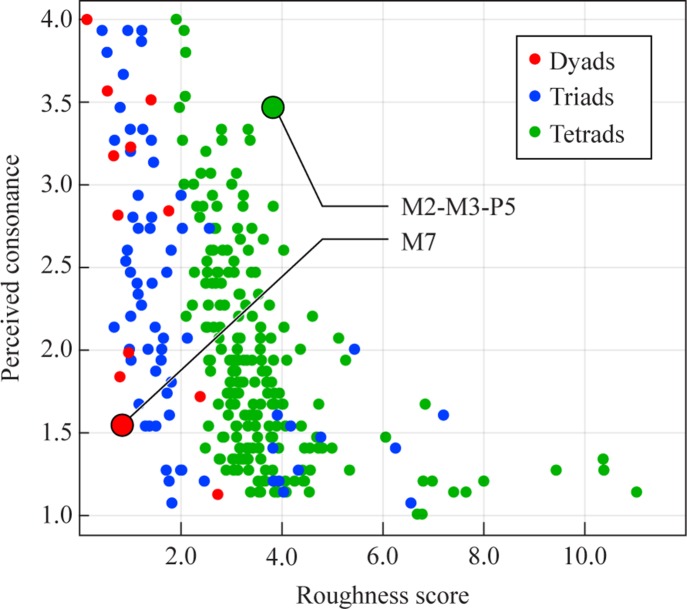

Despite these further observations, problems with this physical theory were also apparent. One concern is that perceptions of consonance and dissonance persist when the tones of a chord are presented independently to the ears, precluding physical interaction at the input stage and greatly reducing the perception of roughness (6, 27–29). Another problem is that the perceived consonance of a chord does not necessarily increase when roughness is artificially removed by synthesizing chords that lack interacting harmonics (30). Moreover, although the addition of tones to a chord generally increases roughness, chords with more tones are not necessarily perceived as less consonant. Many three- and four-tone chords are perceived as more consonant than two-tone chords despite exhibiting more auditory roughness (Fig. 4) (8, 26, 30).

Fig. 4.

Auditory roughness versus perceived consonance. Roughness scores plotted against mean consonance ratings for the 12 possible two-tone chords (dyads), 66 three-tone chords (triads), and 220 four-tone chords (tetrads) that can be formed using the intervals of the chromatic scale. The labeled chords highlight an example of a rough chord (the tetrad composed of a major second, major third, and perfect fifth) that is perceived as more consonant than a chord with less roughness (the major-seventh dyad). The chord stimuli consisted of synthesized piano tones with fundamental frequencies tuned according to the ratios in Fig. 1A and adjusted to maintain a mean frequency of 262 Hz (middle C). Roughness scores were calculated algorithmically as described in ref. 4. Consonance ratings were made by 15 music students at the Yong Siew Toh Conservatory of Music in Singapore using a seven-point scale that ranged from “quite dissonant” to “quite consonant.” (Data from ref. 8.)
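As an illustration only, the sketch below computes a roughness score of the general kind used in Fig. 4: a Plomp–Levelt-style dissonance curve summed over every pair of partials, with constants commonly associated with Sethares' formulation (ref. 4). The six harmonics, 1/k amplitudes, equal-tempered tuning, and fixed tonic are assumptions, so its numbers will not reproduce the figure. It also shows where the chord counts come from: fixing the tonic and choosing 1, 2, or 3 of the 12 intervals above it gives 12, 66, and 220 chords.

```python
import math
from itertools import combinations

# Plomp-Levelt-style dissonance curve with constants commonly used in Sethares'
# formulation (ref. 4). Treat them as assumptions: they need not match Fig. 4.
DSTAR, S1, S2 = 0.24, 0.0207, 18.96
B1, B2, SCALE = 3.51, 5.75, 5.0

def pair_roughness(f1: float, a1: float, f2: float, a2: float) -> float:
    """Roughness contributed by two sinusoidal partials (zero when they coincide)."""
    s = DSTAR / (S1 * min(f1, f2) + S2)  # scales df to a critical-band-like width
    df = abs(f2 - f1)
    return min(a1, a2) * SCALE * (math.exp(-B1 * s * df) - math.exp(-B2 * s * df))

def tone_partials(f0: float, n_harmonics: int = 6) -> list[tuple[float, float]]:
    """Harmonic partials of a synthetic tone; the 1/k amplitudes are an assumption."""
    return [(f0 * k, 1.0 / k) for k in range(1, n_harmonics + 1)]

def chord_roughness(fundamentals: list[float]) -> float:
    """Sum pair_roughness over every pair of partials in the combined spectrum."""
    partials = [p for f0 in fundamentals for p in tone_partials(f0)]
    return sum(pair_roughness(f1, a1, f2, a2)
               for (f1, a1), (f2, a2) in combinations(partials, 2))

TONIC = 261.63  # middle C (Hz); the study's mean-frequency adjustment is omitted

def chord(semitones) -> list[float]:
    """Equal-tempered chord: the tonic plus tones the given semitones above it."""
    return [TONIC] + [TONIC * 2 ** (s / 12) for s in semitones]

# Fix the tonic and choose 1, 2, or 3 of the 12 intervals above it (minor second..octave).
intervals = range(1, 13)
dyads = [chord(c) for c in combinations(intervals, 1)]
triads = [chord(c) for c in combinations(intervals, 2)]
tetrads = [chord(c) for c in combinations(intervals, 3)]
print(len(dyads), len(triads), len(tetrads))  # 12 66 220

print(chord_roughness(chord([1])) > chord_roughness(chord([7])))  # True: m2 rougher than P5
```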

Perhaps the most damning evidence against roughness theory has come from studies that dissociate roughness from consonance, showing that perceptions of consonance and of roughness are independent. McDermott et al. (29) examined the relationship between consonance and roughness by asking participants to rate the “pleasantness” of consonant and dissonant chords, as well as pairs of sine tones with interacting fundamental frequencies presented diotically (both tones to both ears) or dichotically (one tone to each ear). For each participant, the difference between their ratings of consonant and dissonant chords was used as a measure of the strength of their preference for consonance. Participants with a strong consonance preference rated consonant chords as more pleasing than dissonant chords, whereas participants with a weak consonance preference rated consonant and dissonant chords about equally. Likewise, the differences between ratings of diotically and dichotically presented sine tone pairs were used to calculate the strength of a participant’s aversion to auditory roughness. The results showed that, across participants, the strength of consonance preferences was not significantly related to the strength of aversion to roughness, suggesting that these two aspects of tone perception are independent.
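The individual-differences logic reduces to two steps: compute one difference score per participant for each measure, then correlate those scores across participants. The sketch below is an assumed minimal version; it omits the significance testing and any other analysis details of the original study.

```python
from statistics import mean

def preference_strength(ratings_preferred: list[float], ratings_other: list[float]) -> float:
    """One participant's difference between mean ratings of two stimulus classes,
    e.g., consonant minus dissonant chords, or dichotic minus diotic sine-tone pairs."""
    return mean(ratings_preferred) - mean(ratings_other)

def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation across participants between two preference measures."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)
```

For roughness aversion, the "preferred" set would be the dichotic pairs (no physical interaction at the ear) and the "other" set the diotic pairs. Each participant then contributes one consonance score and one roughness score, and a weak, nonsignificant correlation between the two lists is the result the text describes.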

Building on this result, Cousineau et al. (31) played the same stimuli to participants with congenital amusia, a neurogenetic disorder characterized by a deficit in melody processing and reduced connectivity between the right auditory and inferior frontal cortices (32–34). In contrast to a control group, amusics showed smaller differences between ratings of consonant and dissonant chords but did not differ with respect to the perception of auditory roughness. The fact that amusics exhibit abnormal consonance perception but normal roughness perception further weakens the idea that the absence of roughness is the basis of consonance. Although most people find auditory roughness irritating and in that sense unpleasant, this false dichotomy (rough vs. not rough) neglects the possibility that consonance can be appreciated apart from roughness. By analogy, arguing that sugar tastes sweet because it is not sour misses that sweetness can be appreciated apart from sourness.

Consonance Based on Biology

A third framework for understanding consonance and dissonance is biological. Most natural sounds, such as those generated by forces like wind, moving water, or the movements of predators or prey, have little or no periodicity. When periodic sounds do occur in nature, they are almost always sound signals produced by animals for social communication. Although many periodic animal sounds occur in the human auditory environment, the most biologically important for our species are those produced by other humans: hearing only a second or less of human vocalization is often enough to form an impression of the source’s sex, age, emotional state, and identity. This efficiency reflects an auditory system tuned to the benefits of attending to and processing conspecific vocalization.

Like most musical tones, vocalizations are harmonic. As with strings, vocal fold vibration generates sounds with a fundamental frequency and harmonic overtones at integer multiples of the fundamental. The presence of a harmonic series is thus characteristic of the sound signals that define human social life, attracting attention and processing by neural circuitry that responds with special efficiency. With respect to music, these facts suggest that our attraction to harmonic tones and tone combinations derives in part from their relative similarity to human vocalization. To the extent that our appreciation of tonal sounds has been shaped by the benefits of responding to conspecific vocalization, it follows that the more voice-like a tone combination is, the more we should “like” it.

Although the roots of this idea can be traced back to Rameau (14), it was not much pursued until the 1970s when Ernst Terhardt argued that harmonic relations are learned through developmental exposure to speech (15). According to his “virtual pitch theory,” this learning process results in an auditory system that functions as a harmonic pattern matcher, recognizing harmonic relations between the components of tones and associating them with physical or virtual fundamental frequencies (35). Although Terhardt proposed that virtual pitches account for several aspects of tonal music, he did not consider virtual pitch an explanation for the relative consonance of tone combinations, which, following Helmholtz, he explained by the absence of roughness (15).
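The idea of a harmonic pattern matcher can be illustrated with a toy template search. This is not Terhardt's virtual-pitch algorithm; it is a simplified sketch, assuming integer-hertz candidates and a 1% mistuning tolerance, that credits a candidate fundamental for each partial lying close to one of its integer multiples. Given partials at 400, 600, 800, and 1000 Hz, it recovers the missing 200 Hz fundamental.

```python
def estimate_f0(partials: list[float], lo: int = 50, hi: int = 500, tol: float = 0.01) -> int:
    """Toy harmonic-template matcher: return the candidate fundamental (integer Hz in
    [lo, hi]) whose integer multiples best account for the observed partials."""
    best_key, best_f0 = None, None
    for f0 in range(lo, hi + 1):
        matched, error = 0, 0.0
        for f in partials:
            n = max(1, round(f / f0))      # nearest harmonic number of this candidate
            rel_err = abs(f - n * f0) / f  # relative mistuning from that harmonic
            if rel_err <= tol:
                matched += 1
                error += rel_err
        # Rank by partials explained, then by total mistuning, then prefer the higher
        # candidate so that subharmonics (f0/2, f0/4, ...) do not win ties.
        key = (matched, -error, f0)
        if best_key is None or key > best_key:
            best_key, best_f0 = key, f0
    return best_f0

# Partials with no energy at 200 Hz: the "virtual" fundamental is still recovered.
print(estimate_f0([400, 600, 800, 1000]))  # 200
```

The tie-break matters: 100 Hz and 50 Hz also explain every partial exactly, so any such matcher needs some preference against subharmonics.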

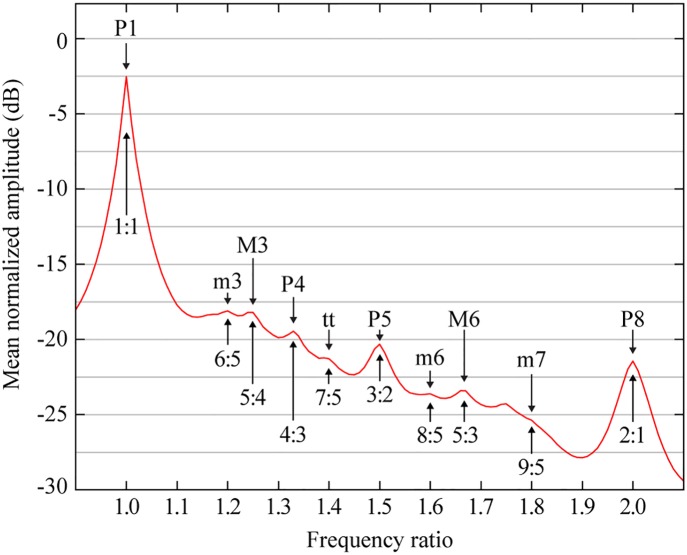

A direct exploration of musical consonance based on experience with vocalization was not undertaken until the turn of the century. To approximate human experience with vocal tones, Schwartz et al. (36) extracted thousands of voiced segments from speech databases and determined their spectra. When the frequency values were expressed as ratios and averaged across segments, popular musical intervals appeared as peaks in the distribution (Fig. 5). Furthermore, the prominence of these peaks (measured in terms of residual amplitude or local slope) tracked the consonance ranking depicted in Fig. 1B. Although musical ratios can be extracted from any harmonic series, these observations show that the intervals we perceive as consonant are specifically emphasized in vocal spectra.

Fig. 5.

Musical intervals in voiced speech. The graph shows the statistical prominence of physical energy at different frequency ratios in voiced speech. Each labeled peak corresponds to one of the musical intervals in Fig. 1A. The data were produced by averaging the spectra of thousands of voiced speech segments, each normalized with respect to the amplitude and frequency of its most powerful spectral peak. This method makes no assumptions about the structure of speech sounds or how the auditory system processes them; the distribution simply reflects ratios emphasized by the interaction of vocal fold vibration with the resonance properties of the vocal tract. (Data from ref. 36.)
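The normalization described in the caption can be sketched as follows. The input format (a list of (frequency, amplitude) spectral peaks per segment), the bin width, and the restriction to ratios between 1 and 2 are assumptions for illustration; the original analysis (ref. 36) worked from full spectra of recorded speech. The example shows one way a fifth (3:2) can emerge: when the second harmonic is the strongest peak, the third harmonic lands at a normalized ratio of 1.5.

```python
def normalized_ratio_profile(segments, lo=1.0, hi=2.0, n_bins=100):
    """Average of spectra normalized to their most powerful peak.

    Each segment is a list of (frequency_hz, amplitude) peaks. Frequencies are divided
    by the frequency of the segment's strongest peak and amplitudes by its amplitude;
    normalized amplitudes are summed into ratio bins over [lo, hi], then averaged.
    """
    width = (hi - lo) / n_bins
    totals = [0.0] * n_bins
    for peaks in segments:
        f_max, a_max = max(peaks, key=lambda p: p[1])  # most powerful spectral peak
        for f, amp in peaks:
            ratio = f / f_max
            if lo <= ratio <= hi:
                idx = min(int((ratio - lo) / width), n_bins - 1)  # ratio == hi goes in last bin
                totals[idx] += amp / a_max
    return [t / len(segments) for t in totals]

# Two hypothetical harmonic spectra (f0 = 120 Hz) that differ in which harmonic is strongest.
seg_a = [(120 * k, 1.0 / k) for k in range(1, 9)]                 # fundamental strongest
seg_b = [(120 * k, 1.0 if k == 2 else 0.3) for k in range(1, 9)]  # 2nd harmonic strongest
profile = normalized_ratio_profile([seg_a, seg_b])
for i, value in enumerate(profile):
    if value > 0:
        print(f"ratio bin starting at {1.0 + i * 0.01:.2f}: mean amplitude {value:.2f}")
```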

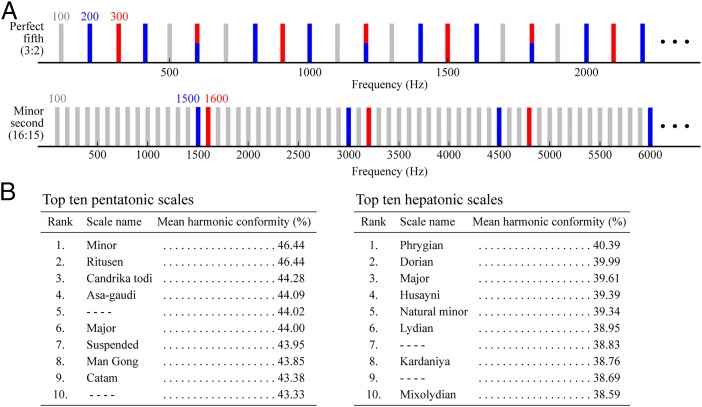

Appreciation of vocal similarity in musical tones also seems to have influenced the scales that humans prefer as vehicles for music. Although the human auditory system is capable of dividing an octave into billions of different arrangements, only a relatively small number of scales are used to create music. To address this issue, a related study developed a method for assessing how closely the spectra of two-tone chords conform to the uniform harmonic series that characterizes tonal vocalization (Fig. 6A) (37). By applying this measure iteratively to all of the possible two-tone chords in a given scale and calculating an average score, the overall conformance of any scale to a harmonic series could be measured. The results for millions of possible pentatonic and heptatonic scales showed that overall harmonic conformity predicted the popularity of scales used in different musical traditions (Fig. 6B). Although the primary aim of this study was to rationalize scale preferences, the analysis also predicts the consonance ranking in Fig. 1B (see table 1 in ref. 37).

Fig. 6.

The conformity of widely used pentatonic and heptatonic scales to a uniform harmonic series. (A) Schematic representations of the spectra of a relatively consonant (perfect fifth) and a relatively dissonant (minor second) two-tone chord. Blue bars represent the harmonic series of the lower tone, red bars indicate the harmonic series of the upper tone, and blue/red bars indicate coincident harmonics. Gray bars indicate the harmonic series defined by the greatest common divisor of the red and blue tones. The metric used by Gill and Purves (37) defined harmonic conformity as the percentage of harmonics in the gray series that are actually filled in by chord spectra (for another metric, see “harmonic entropy” in ref. 4). (B) When ranked according to harmonic conformity, the top ten pentatonic and heptatonic scales out of >40 million examined corresponded to popular scales used in different musical traditions. (Data from ref. 37.)
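A minimal sketch of a conformity score of this kind, and of averaging it over a scale, follows. It rests on one reading of the caption's metric, which is an assumption: the greatest-common-divisor series is counted over one period of the combined pattern (up to the least common multiple of the ratio's terms), and exact just ratios are used. It is not the code or exact procedure of ref. 37. Under this reading, a reduced ratio p:q fills p + q − 1 of the pq slots, giving 100% for the octave, about 66.7% for the perfect fifth, and 12.5% for a 16:15 minor second.

```python
from fractions import Fraction
from itertools import combinations
from math import lcm  # Python 3.9+

def harmonic_conformity(ratio: Fraction) -> float:
    """Percent of the GCD harmonic series filled by a dyad's harmonics.

    For a reduced ratio p/q with GCD frequency g, the tones sit at q*g (lower) and
    p*g (upper). GCD harmonics 1..lcm(p, q) are counted as filled when they are a
    multiple of q (lower tone's harmonics) or of p (upper tone's harmonics).
    """
    p, q = ratio.numerator, ratio.denominator
    span = lcm(p, q)
    filled = sum(1 for h in range(1, span + 1) if h % q == 0 or h % p == 0)
    return 100.0 * filled / span

def scale_conformity(degrees: list[Fraction]) -> float:
    """Mean conformity over every two-tone chord formed by pairs of scale degrees."""
    scores = [harmonic_conformity(upper / lower)
              for lower, upper in combinations(sorted(degrees), 2)]
    return sum(scores) / len(scores)

for name, r in [("octave", Fraction(2)), ("perfect fifth", Fraction(3, 2)),
                ("minor second", Fraction(16, 15))]:
    print(f"{name:14s} {harmonic_conformity(r):6.2f}%")

# A just major pentatonic scale; these ratios are assumed for illustration.
pentatonic = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(3, 2),
              Fraction(5, 3), Fraction(2)]
print(f"mean conformity: {scale_conformity(pentatonic):.1f}%")
```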

The importance of harmonic conformity in consonance was also examined by McDermott et al. (29) and Cousineau et al. (31). In addition to assessing the strength of consonance preferences and aversion to roughness, these authors evaluated the strength of preferences for “harmonicity.” Participants rated the pleasantness of single tones comprising multiple frequency components arranged harmonically (i.e., related to the fundamental by integer multiples) or inharmonically (i.e., not related to the fundamental by integer multiples); the difference between these ratings was used to calculate the strength of their preference for harmonicity. In contrast to roughness aversion, the strength of the participants’ harmonicity preferences covaried with the strength of their consonance preferences (29). Similarly, Cousineau et al. found that the impairment in consonance perception observed in participants with amusia was accompanied by a diminished ability to perceive harmonicity (31). Unlike the control group, the amusics considered harmonic and inharmonic tones to be equally pleasant.
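The harmonic/inharmonic contrast can be sketched at the level of partial frequencies. The jittering scheme below (a random displacement of each partial above the first, by at most 30% of the fundamental) is an assumed stand-in, not necessarily the stimulus construction used in refs. 29 and 31.

```python
import random

def harmonic_partials(f0: float, n: int) -> list[float]:
    """Partials at exact integer multiples of the fundamental."""
    return [f0 * k for k in range(1, n + 1)]

def inharmonic_partials(f0: float, n: int, jitter: float = 0.3, seed: int = 0) -> list[float]:
    """Partials near, but randomly displaced from, integer multiples of f0.

    Each partial above the first is shifted by up to +/- jitter * f0; keeping jitter
    below 0.5 keeps the partials in ascending order. Jitter size and seeding are
    assumptions for this sketch.
    """
    rng = random.Random(seed)
    return [f0] + [f0 * k + rng.uniform(-jitter, jitter) * f0 for k in range(2, n + 1)]

def deviation_from_harmonicity(partials: list[float], f0: float) -> float:
    """Largest distance (in units of f0) of any partial from the nearest integer multiple."""
    return max(abs(p / f0 - round(p / f0)) for p in partials)

f0 = 200.0
print(deviation_from_harmonicity(harmonic_partials(f0, 8), f0))    # 0.0
print(deviation_from_harmonicity(inharmonic_partials(f0, 8), f0))  # greater than 0
```

A listener's harmonicity preference would then be the difference between mean pleasantness ratings of tones built from the first kind of partials and tones built from the second.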

Finally, the importance of harmonics in tone perception is supported by auditory neurobiology. Electrophysiological experiments in monkeys show that some neurons in primary auditory cortex are driven not only by tones with fundamentals at the frequency to which an auditory neuron is most sensitive, but also by integer multiples and ratios of that frequency (38). Furthermore, when tested with two tones, many auditory neurons show stronger facilitation or inhibition when the tones are harmonically related. In addition, in regions bordering primary auditory cortex, neurons are found that respond to both isolated fundamental frequencies and their associated harmonic series, even when the latter is presented without the fundamental (39). These experiments led Wang to propose that sensitivity to harmonic stimuli is an organizational principle of the auditory cortex in primates, with the connections of at least some auditory neurons determined by the harmonics of the frequency they respond to best (40).

Although current neuroimaging technologies lack the combined temporal and spatial resolution to observe harmonic effects in humans, functional MRI studies suggest an integration of harmonic information comparable with that observed in nonhuman primates (41–43), as does the nature of human pitch perception (44).

Some Caveats

Whereas the sum of present evidence suggests that recognizing harmonic vocalization is central to consonance, this interpretation should not be taken to imply that vocal similarity is the only factor underlying our attraction to musical tone combinations. Indeed, other observations suggest that it is not. For example, experience and familiarity also play important roles. The evidence for consonance preferences in infants is equivocal (45–51). It is clear, however, that musical training sharpens the perception of harmonicity and consonance (29). Furthermore, many percussive instruments (e.g., gongs, bells, and metallophones) produce periodic sounds that lack harmonically related overtones, indicating that tonal preferences are not rigidly fixed and that interaction with other factors such as rhythmic patterning also shapes preferences.

Another obstacle for the biological interpretation advanced here is why consonance depends on tone combinations at all, given that isolated tones perfectly represent uniform harmonic series. One suggested explanation for our attraction to tone combinations rather than isolated tones is that successfully parsing complex auditory signals generates a greater dopaminergic reward (26). Another is that tone combinations imply vocal cooperation, social cohesion, and the positive emotions they entail (52, 53). Another possibility is that isolated harmonic tones are indeed attractive compared with other sound sources, but irrelevant in a musical context.

Finally, several studies have explored consonance in nonhuman animals, so far with inconclusive and sometimes perplexing results (54–58). For example, male hermit thrush songs comprise tones with harmonically related fundamental frequencies, despite the fact that their vocalizations do not exhibit strong harmonics (58). The authors argue that rather than attraction to conspecific vocalization, small-integer ratios may be more easily remembered or processed by the auditory system, an idea for which there is also some support in humans (50). Based on the evidence reviewed here, however, it seems fair to suggest that any species that generates harmonics in vocal communication possesses the biological wherewithal to develop a sense of consonance. What seems lacking is the evolution of the social and cultural impetus to do so.

Conclusion

The basis for the relative consonance and dissonance of tone combinations has long been debated. An early focus was on mathematical simplicity, an approach first attributed to Pythagoras. In the Renaissance, interest shifted to tonal preferences based on physics, with Helmholtz’s roughness theory becoming prevalent in the 19th and 20th centuries. Although physical (and to a lesser degree mathematical) theories continue to have their enthusiasts, neither accounts for the phenomenology of consonance or explains why we are attracted to tonal stimuli. In light of present evidence, the most plausible explanation for consonance and related tonal phenomenology is an evolved attraction to the harmonic series that characterize conspecific vocalizations, based on the biological importance of social sound signals. If correct, this explanation of consonance would rationalize at least some aspects of musical aesthetics.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1.Cazden N. Sensory theories of musical consonance. J Aesthet Art Crit. 1962;20:301–319.

- 2.Tenney J. A History of ‘Consonance’ and ‘Dissonance’. Excelsior Music Publishing; New York: 1988.

- 3.Burns EM. Intervals, scales, and tuning. In: Deutsch D, editor. The Psychology of Music. 2nd Ed. Elsevier; San Diego: 1999. pp. 215–264.

- 4.Sethares WA. 2005. Tuning, Timbre, Spectrum, Scale (Springer, London), 2nd Ed.

- 5.Malmberg CF. The perception of consonance and dissonance. Psychol Monogr. 1918;25:93–133.

- 6.Guernsey M. Consonance and dissonance in music. Am J Psychol. 1928;40(2):173–204.

- 7.Butler JW, Daston PG. Musical consonance as musical preference: A cross-cultural study. J Gen Psychol. 1968;79(1st Half):129–142. doi: 10.1080/00221309.1968.9710460.

- 8.Bowling DL. 2012. The biological basis of emotion in musical tonality. PhD dissertation (Duke University, Durham, NC)

- 9.Crocker RL. Pythagorean mathematics and music. J Aesthet Art Crit. 1963;22:189–198.

- 10.Palisca CV. Scientific empiricism in musical thought. In: Rhys HH, editor. Seventeenth-Century Science and the Arts. Princeton Univ Press; Princeton: 1961. pp. 91–137.

- 11.Palisca CV. 1968. Introduction. The Art of Counterpoint: Part Three of Le Istitutioni Harmoniche, by Gioseffo Zarlino (Norton, New York), pp ix-xxii.

- 12.Boomsliter P, Creel W. The long pattern hypothesis in harmony and hearing. J Music Theory. 1961;5(1):2–31.

- 13.Roederer JG. Introduction to the Physics and Psychophysics of Music. Springer; New York: 1973.

- 14.Christensen T. Rameau and Musical Thought in the Enlightenment. Cambridge University Press; Cambridge, UK: 1993.

- 15.Terhardt E. The concept of musical consonance: A link between music and psychoacoustics. Music Percept. 1984;1(3):276–295.

- 16.Helmholtz H. 1885. Die Lehre von den Tonempfindungen (Longman, London); trans Ellis AJ (1954) [On the Sensations of Tone] (Dover, New York), 4th Ed. German.

- 17.Békésy G. Experiments in Hearing. McGraw-Hill; New York: 1960.

- 18.Greenwood DD. Critical bandwidth and the frequency coordinates of the basilar membrane. J Acoust Soc Am. 1961;33(10):1344–1356.

- 19.Fletcher H. 1995. The ASA Edition of Speech and Hearing in Communication, ed Allen JB (Acoustical Society of America, Melville, NY)

- 20.Zwicker E, Flottorp G, Stevens SS. Critical band width in loudness summation. J Acoust Soc Am. 1957.

- 21.Greenwood DD. Auditory masking and the critical band. J Acoust Soc Am. 1961;33(4):484–502. doi: 10.1121/1.1912668.

- 22.Mayer AM. Research in acoustics, no. IX. Philos Mag Ser 5. 1894;37(226):259–288.

- 23.Plomp R, Levelt WJ. Tonal consonance and critical bandwidth. J Acoust Soc Am. 1965;38(4):548–560. doi: 10.1121/1.1909741.

- 24.Kameoka A, Kuriyagawa M. Consonance theory part I: Consonance of dyads. J Acoust Soc Am. 1969;45(6):1451–1459. doi: 10.1121/1.1911623.

- 25.Greenwood DD. Critical bandwidth and consonance in relation to cochlear frequency-position coordinates. Hear Res. 1991;54(2):164–208. doi: 10.1016/0378-5955(91)90117-r.

- 26.Huron D. 2002. A new theory of sensory dissonance: A role for perceived numerosity. Proceedings of the 7th International Conference for Music Perception and Cognition (ICMPC)

- 27.Licklider JCR, Webster JC, Hedlund JM. On the frequency limits of binaural beats. J Acoust Soc Am. 1950;22(4):468–473.

- 28.Bidelman GM, Krishnan A. Neural correlates of consonance, dissonance, and the hierarchy of musical pitch in the human brainstem. J Neurosci. 2009;29(42):13165–13171. doi: 10.1523/JNEUROSCI.3900-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.McDermott JH, Lehr AJ, Oxenham AJ. Individual differences reveal the basis of consonance. Curr Biol. 2010;20(11):1035–1041. doi: 10.1016/j.cub.2010.04.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Nordmark J, Fahlén LE. 1988. Beat theories of musical consonance. KTH Royal Institute of Technology STL-QPSR 29(1):111–122.

- 31.Cousineau M, McDermott JH, Peretz I. The basis of musical consonance as revealed by congenital amusia. Proc Natl Acad Sci USA. 2012;109(48):19858–19863. doi: 10.1073/pnas.1207989109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Ayotte J, Peretz I, Hyde K. Congenital amusia: A group study of adults afflicted with a music-specific disorder. Brain. 2002;125(Pt 2):238–251. doi: 10.1093/brain/awf028.

- 33. Peretz I, Cummings S, Dubé MP. The genetics of congenital amusia (tone deafness): A family-aggregation study. Am J Hum Genet. 2007;81(3):582–588. doi: 10.1086/521337.

- 34. Loui P, Alsop D, Schlaug G. Tone deafness: A new disconnection syndrome? J Neurosci. 2009;29(33):10215–10220. doi: 10.1523/JNEUROSCI.1701-09.2009.

- 35. Terhardt E. Pitch, consonance, and harmony. J Acoust Soc Am. 1974;55(5):1061–1069. doi: 10.1121/1.1914648.

- 36. Schwartz DA, Howe CQ, Purves D. The statistical structure of human speech sounds predicts musical universals. J Neurosci. 2003;23(18):7160–7168. doi: 10.1523/JNEUROSCI.23-18-07160.2003.

- 37. Gill KZ, Purves D. A biological rationale for musical scales. PLoS One. 2009;4(12):e8144. doi: 10.1371/journal.pone.0008144.

- 38. Kadia SC, Wang X. Spectral integration in A1 of awake primates: Neurons with single- and multipeaked tuning characteristics. J Neurophysiol. 2003;89(3):1603–1622. doi: 10.1152/jn.00271.2001.

- 39. Bendor D, Wang X. The neuronal representation of pitch in primate auditory cortex. Nature. 2005;436(7054):1161–1165. doi: 10.1038/nature03867.

- 40. Wang X. The harmonic organization of auditory cortex. Front Syst Neurosci. 2013;7:114. doi: 10.3389/fnsys.2013.00114.

- 41. Patterson RD, Uppenkamp S, Johnsrude IS, Griffiths TD. The processing of temporal pitch and melody information in auditory cortex. Neuron. 2002;36(4):767–776. doi: 10.1016/s0896-6273(02)01060-7.

- 42. Penagos H, Melcher JR, Oxenham AJ. A neural representation of pitch salience in nonprimary human auditory cortex revealed with functional magnetic resonance imaging. J Neurosci. 2004;24(30):6810–6815. doi: 10.1523/JNEUROSCI.0383-04.2004.

- 43. Bendor D, Wang X. Cortical representations of pitch in monkeys and humans. Curr Opin Neurobiol. 2006;16(4):391–399. doi: 10.1016/j.conb.2006.07.001.

- 44. Plack CJ, Oxenham AJ. The psychophysics of pitch. In: Fay RR, Popper AN, editors. Springer Handbook of Auditory Research. Vol 24. Springer; New York: 2005. pp. 7–55.

- 45. Crowder RG, Reznick JS, Rosenkrantz SL. Perception of the major/minor distinction: V. Preferences among infants. Bull Psychon Soc. 1991;29:187–188.

- 46. Zentner MR, Kagan J. Infants’ perception of consonance and dissonance in music. Infant Behav Dev. 1998;21(3):483–492.

- 47. Trainor LJ, Heinmiller BM. The development of evaluative responses to music. Infant Behav Dev. 1998;21:77–88.

- 48. Trainor LJ, Tsang CD, Cheung VHW. Preference for sensory consonance in 2- and 4-month-old infants. Music Percept. 2002;20(2):187–194.

- 49. Masataka N. Preference for consonance over dissonance by hearing newborns of deaf parents and of hearing parents. Dev Sci. 2006;9(1):46–50. doi: 10.1111/j.1467-7687.2005.00462.x.

- 50. Trehub SE. Human processing predispositions and musical universals. In: Wallin NL, Merker B, Brown S, editors. The Origins of Music. MIT Press; New York: 2000. pp. 427–448.

- 51. Plantinga J, Trehub SE. Revisiting the innate preference for consonance. J Exp Psychol Hum Percept Perform. 2014;40(1):40–49. doi: 10.1037/a0033471.

- 52. Roederer J. The search for a survival value of music. Music Percept. 1984;1:350–356.

- 53. Hagen EH, Bryant GA. Music and dance as a coalition signaling system. Hum Nat. 2003;14(1):21–51. doi: 10.1007/s12110-003-1015-z.

- 54. McDermott J, Hauser M. Are consonant intervals music to their ears? Spontaneous acoustic preferences in a nonhuman primate. Cognition. 2004;94(2):B11–B21. doi: 10.1016/j.cognition.2004.04.004.

- 55. McDermott J, Hauser MD. Probing the evolutionary origins of music perception. Ann N Y Acad Sci. 2005;1060:6–16. doi: 10.1196/annals.1360.002.

- 56. Borchgrevink HM. Musikalske akkordpreferanser hos mennesket belyst ved dyreforsøk. Tidsskr Nor Laegeforen. 1975;95(6):356–358.

- 57. Chiandetti C, Vallortigara G. Chicks like consonant music. Psychol Sci. 2011;22(10):1270–1273. doi: 10.1177/0956797611418244.

- 58. Doolittle EL, Gingras B, Endres DM, Fitch WT. Overtone-based pitch selection in hermit thrush song: Unexpected convergence with scale construction in human music. Proc Natl Acad Sci USA. 2014;111(46):16616–16621. doi: 10.1073/pnas.1406023111.