Abstract

Background

Adverse event (AE) surveillance may be enhanced by the Institute for Healthcare Improvement’s Global Trigger Tool (GTT). A pilot study of the GTT was conducted in one Veterans Health Administration (VA) facility to assess the rates, types, and harm of AEs detected, and to examine the overlap in AE detection between the GTT and existing surveillance mechanisms.

Methods

GTT guidelines were followed and medical charts were reviewed for 17 weeks of acute-care hospitalizations. Investigators met monthly, first to adjudicate discordant reviewer categorizations of harm and later to categorize the AEs detected using standardized definitions. GTT-detected AEs were compared with incident reports, Patient Safety Indicators, and the VA Surgical Quality Improvement Program.

Results

Medical charts were reviewed for 273 cases out of 1,980 eligible cases. Using the GTT, a total of 109 AEs were identified. More than 1 out of 5 hospitalizations (21%) were associated with an AE. The majority of AEs detected (60%) were minor harms; there were no deaths attributable to medical care. Ninety-six of the 109 AEs (88%) were not detected by other measures.

Conclusions

The GTT identified previously undetected AEs at one VA. The GTT has the potential to track AEs and guide quality improvement efforts in conjunction with existing AE surveillance mechanisms.

Keywords: quality measurement, trigger tools, health services research, patient safety, harm, adverse event epidemiology/detection

INTRODUCTION

Adverse event (AE) surveillance is one of the key practices associated with patient safety improvement.1 Chart review is widely considered the gold standard for AE detection;2 however, because chart review is resource-intensive, many AE surveillance programs rely on other mechanisms, such as voluntary incident reports,3 or administrative data-based tools such as the Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators (PSIs).4,5 Despite the lower costs of these other systems, providers and hospital leaders place more trust in chart review-based results, and therefore are more motivated to address the patient safety problems detected by these processes.6

The cost effectiveness of chart review for AE detection can be improved through the use of triggers that filter medical record data to identify cases with a high probability of a true AE.7 A trigger is an algorithm that characterizes a pattern of utilization consistent with a potential AE. In 2007, the Institute for Healthcare Improvement (IHI) released the Global Trigger Tool (GTT) for use in hospital AE detection.8 Unlike traditional chart review, the IHI GTT process imposes a 20-minute time limit in which a trained reviewer, typically a nurse, records whether any of 52 triggers are evident. Trigger-flagged cases then undergo a second round of review by a physician to confirm the event and assign a rating of degree of harm using a validated harm scale.9 The second review may take as little as 2–5 minutes. Studies have found high reliability in the measurement of AEs within facilities over time.8,10

Recent studies comparing the IHI GTT with other methods of patient safety measurement (e.g., incident reports and PSIs) demonstrate not only the high yield of harmful events detected by the GTT, but also the number of events missed by existing tools.10–12 The GTT may be a promising method for detecting harmful AEs with a higher yield of true events than automated methods,11 requiring a lower resource investment than traditional chart review.13 Although the GTT has been evaluated in a number of healthcare systems,14–19 it has not been applied in the Veterans Health Administration (VA), the largest integrated healthcare delivery system in the United States with a reputation for exceptional patient safety.20,21

VA has made a strong commitment to improving patient safety in the last decade, and centrally tracks a variety of AEs through various mechanisms, including incident reports, PSIs and healthcare-associated infections (HAIs). Furthermore, VA applies a chart review-based approach to detect specific AEs in a limited selection of surgical patients through the VA Surgical Quality Improvement Program (VASQIP;22 called the National Surgical Quality Improvement Program (NSQIP) in the private sector23). A pilot study of the IHI GTT was conducted at one VA inpatient facility to assess the value of the GTT approach alongside existing AE surveillance programs. Although chart review is an expensive process, detecting heretofore unidentified AEs may lead to needed patient safety initiatives that may be well worth the cost.24 The specific aims of the study were to 1) assess the rates, types, and harm of AEs detected according to the GTT methodology, 2) examine the overlap in AE detection between the GTT and incident reports, PSIs, and VASQIP events, and 3) compare healthcare utilization following hospital discharge between patients with and without an AE detected by the GTT.

METHODS

Design and Sample

This pilot study applied the IHI GTT methodology based on the GTT White Paper version 2 published in 2009.8 Study findings were presented to hospital and national leadership. The study population included medical/surgical hospitalizations at one large VA facility with a discharge date between July 1 and October 27, 2012. VA Boston Healthcare System and the participating facility’s Institutional Review Board approved this study.

Adaptation of the GTT Method for VA

The GTT White Paper guidelines for chart review were generally followed with some process changes recommended by Good et al.10 and Landrigan et al.12 and further changes required to fit the VA healthcare system.

Review Team

The GTT White Paper recommends that the review team include two nurse reviewers and one physician reviewer, and that “exhaustive studies to measure reliability” should not be undertaken.8 This pilot deviated from the GTT White Paper in that only one nurse was used for reviews. While the GTT White Paper recommends two nurses for chart review, the purpose is to increase the yield of potential AEs, not to check the reliability of findings.8 The GTT entails a limited chart review (20 minutes), and if one reviewer identifies different events than the other reviewer, this discrepancy may reflect which part of the chart was reviewed in the timeframe allotted rather than an issue with reliability. Given the pilot nature of this study, one nurse reviewed medical charts and inter-rater reliability was checked by having a nurse co-investigator review the same set of patients’ charts in week 5. The charts of all trigger-flagged cases were re-reviewed by a physician to ensure the validity of the events detected.

Sampling

The White Paper guidelines were followed on sampling patient records by randomly selecting patients discharged more than 30 days prior with a LOS greater than 1 day and age greater than 18 years (n=1,980). Per GTT methodology, there were 20 randomly selected cases per week for chart review for 17 weeks, with an additional 10 cases in week 1 used for training. This pilot deviated from the White Paper in that nearly twice as many medical charts were reviewed as required to generate AE rates (the White Paper recommends 5–10 records per week). The higher volume of cases was deliberately undertaken to increase the yield of GTT-detected events for comparison with AEs detected by other surveillance systems. After the first month of reviews, the research team decided to review 15 cases per week as opposed to 20, because reviewing 15 cases weekly was a more manageable workload for nurse and physician reviewers.
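The weekly sampling step can be sketched as follows. This is a minimal illustration, not the study's actual tooling: the `Discharge` record, its field names, and the `sample_week` helper are hypothetical, and the eligibility rule simply restates the criteria above (LOS greater than 1 day, age greater than 18 years, discharged more than 30 days before the draw).

```python
import random
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Discharge:
    """Hypothetical record for one hospitalization (field names are assumptions)."""
    case_id: int
    age_years: int
    los_days: int
    discharge_date: date


def is_eligible(d: Discharge, review_date: date) -> bool:
    """Apply the sampling criteria described in the text."""
    discharged_long_enough = (review_date - d.discharge_date) > timedelta(days=30)
    return d.los_days > 1 and d.age_years > 18 and discharged_long_enough


def sample_week(discharges, review_date, n_cases=20, seed=None):
    """Randomly draw up to n_cases eligible discharges without replacement."""
    eligible = [d for d in discharges if is_eligible(d, review_date)]
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(eligible, min(n_cases, len(eligible)))
```

Passing `n_cases=15` would mirror the reduced weekly workload adopted after the first month of reviews.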

Review Process

The entire research team (co-principal investigators, nurse co-investigator, nurse reviewer and physician reviewer) completed the IHI GTT training process described in the White Paper8 and consulted with a representative from IHI to resolve questions. The review process consisted of a nurse conducting a 20-minute review of key sections of the patient’s medical record for any of the GTT triggers; after documenting the trigger, the nurse reviewer then determined whether an AE occurred. Cases with an AE were forwarded to a physician for a brief re-review of the medical chart to confirm the AE and the associated level of harm. Research team members familiar with the VA electronic health record (EHR) prioritized chart review to begin with the sections most likely to hold relevant information about AEs (e.g., coding summary, discharge summary, operative report). Similar to both Good et al.10 and Landrigan et al.12, additional data to collect as part of the GTT review process were discussed. In addition to indicating whether an AE was present-on-admission, the research team also wanted to know where the harm was detected in the EHR (to facilitate further investigation of the event, if necessary) and where the harm occurred in the facility (to assist hospital leadership in looking for patterns of possible patient safety problems). Finally, given that VA treats primarily an elderly male population, and no obstetrics services are provided, the Perinatal Module triggers were removed, with the exception of P3 “Platelet count less than 50,000,” because thrombocytopenia can be important beyond perinatal patients. These process changes were reviewed with the representative from IHI, who concurred with the decisions.

In previous work using medical chart review, electronic chart review tools were developed using Microsoft InfoPath and Access.5 A similar tool was created to capture GTT chart review results. On a weekly basis the study coordinator sent the nurse reviewer an encrypted file with the patient identifiers, case identifiers, admission and discharge dates. The nurse then used the file to find the hospitalization in the patient’s EHR, and entered de-identified results into the InfoPath form. When the nurse review was complete, the study coordinator reviewed cases in the Access database, and sent the physician reviewer an encrypted file with only the patients who had a potential AE. The physician completed the review by accessing the InfoPath form by case identifier, and typically reviewed the identified trigger in the EHR, before approving or disapproving the level of harm detected.

The GTT methodology defines an AE as patient harm, ranging from “temporary harm to the patient that required intervention” (Category E) to “death” (Category I).11 Nurse reviewers were asked to consider triggers and potential AEs from the perspective of the patient and to record only harms where there was documentation in the EHR of the harm itself. Harms that were the result of the patient’s medical condition as opposed to medical care were not considered AEs. Reviewers were also instructed to record any AEs detected in the 20-minute review time regardless of whether the AE was detected because of a trigger.
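The harm scale and the inclusion rule described above can be represented with a small data structure. This is a sketch only: the class and function names are hypothetical, and the category wording follows the harm levels listed in Table 2.

```python
from enum import Enum


class HarmCategory(Enum):
    """Harm categories E through I, least to most severe."""
    E = "Temporary harm to the patient and required intervention"
    F = "Temporary harm to the patient and required initial or prolonged hospitalization"
    G = "Permanent patient harm"
    H = "Intervention required to sustain life"
    I = "Patient death"


def is_adverse_event(harm_documented: bool, caused_by_medical_care: bool) -> bool:
    """A finding counts as an AE only if the harm is documented in the record
    and is attributed to medical care rather than the patient's condition."""
    return harm_documented and caused_by_medical_care


def at_least(category: HarmCategory, threshold: HarmCategory) -> bool:
    """True if `category` is as severe as, or more severe than, `threshold`."""
    order = list(HarmCategory)  # Enum members iterate in definition order
    return order.index(category) >= order.index(threshold)
```

For example, `at_least(HarmCategory.H, HarmCategory.F)` identifies a Category H event as one of the more serious harms (Category F and higher).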

Following the GTT White Paper protocol, the study team met monthly to discuss any cases with disagreement between the nurse and physician reviewers and to address issues related to this initial pilot experience of the GTT in the VA system. For example, in the second research team meeting, inter-rater reliability results for week 5 were reviewed and, differing from the standard GTT methodology, it was determined that cases with disagreement should not be decided solely by the physician reviewer. Many of these disagreements centered on the distinction between harm attributable to medical care versus natural disease progression. In most cases of disagreement, the physician attributed harm to the patient’s condition, while the nurse characterized it as attributable to medical care. Team discussions revealed that the GTT methodology did not give the two clinical opinions equal value. It was agreed that the pilot study results must be acceptable to patient safety leadership in VA, many of whom are nurses, and therefore nurse and physician input should be valued comparably. Thus, group meetings evolved first to include a review of all cases with disagreement, then to discuss cases with high severity of harm, and finally to categorize the AEs detected into standardized AE types (described below). The entire research team participated in these meetings and infrequently a subsequent review of the medical chart was conducted to clarify events and achieve consensus regarding decisions on level of harm.

Analysis

Sample Demographics

Demographic and clinical characteristics of the chart review sample (n=273) were compared with the characteristics of all patients admitted to the facility in the 17 weeks of the study (N=1,707) to confirm whether the sample was generalizable to the patient population. Variables included sex, age, length of stay, medical versus surgical admission type (i.e., Diagnosis-Related Group, DRG), and patient disease burden (i.e., Elixhauser comorbidity score).25 Characteristics of patients with and without an AE in the sample undergoing chart review were compared next. All comparisons used either the two-sided Fisher’s Exact test or the Satterthwaite t-test for unequal variance, and SAS programming software was used for all analyses.26

GTT Analyses

The following rates were calculated according to the GTT methodology: AEs per 1,000 patient days, AEs per 100 admissions, the percent of admissions with an AE, and the severity of harm among the AEs detected.8

Categorization of AEs

The pilot study expanded upon the standard GTT analyses to present the types of AEs detected. AEs were categorized to determine the primary types of harms and to assess overlap with existing patient safety measures that target specific AEs (e.g., pressure ulcer or falls). The World Health Organization International Classification for Patient Safety (ICPS)27 and the AHRQ Common Formats28 were used as a starting point for classification. These categories include: Blood or Blood Product; Device or Medical/Surgical Supply, including Health Information Technology (HIT); Fall; Healthcare-associated Infection; Medication or Other Substance; Pressure Ulcer; Surgery or Anesthesia; and Venous Thromboembolism. As a team, each AE was reviewed to determine its classification and new categories were created as necessary. AEs could be classified from the perspective of causation versus remediation; the goal of this study was to define AE types by cause in order to facilitate quality improvement efforts at the facility.

Overlap with Other Measures

Harms detected by the GTT were compared with AEs detected by three other patient safety surveillance programs used in VA: voluntary incident reports, PSIs, and VASQIP. The incident report data were obtained from the local patient safety manager; the PSI data were obtained from VA’s Inpatient Evaluation Center; and the VASQIP data were obtained from the local VASQIP nurse with approval from the Chief of Surgery. AEs were cross-referenced from each source using hospitalization dates and patient identifiers. Overlap was determined at the case level (i.e., did each measure identify the case as having any AE?) and at the event level (i.e., did each measure identify the same AE?). Overlap in surgical versus medical patients was also examined.
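The two levels of overlap can be illustrated with set intersections. This is a sketch under stated assumptions: the `DetectedEvent` record and its fields are hypothetical, and treating two events as "the same AE" when they share a case and an AE type label is a simplification introduced here, not necessarily how the study team matched events.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DetectedEvent:
    """Hypothetical record of one AE flagged by one surveillance source."""
    patient_id: str
    admit_date: date
    discharge_date: date
    ae_type: str  # e.g. "Fall" or "Pressure Ulcer"


def case_key(e: DetectedEvent):
    """Identify the hospitalization by patient identifier and dates."""
    return (e.patient_id, e.admit_date, e.discharge_date)


def event_key(e: DetectedEvent):
    """Identify the event by hospitalization plus AE type (an assumption)."""
    return case_key(e) + (e.ae_type,)


def overlap(gtt_events, other_events):
    """Return (case-level overlap, event-level overlap) as sets of keys."""
    cases = {case_key(e) for e in gtt_events} & {case_key(e) for e in other_events}
    events = {event_key(e) for e in gtt_events} & {event_key(e) for e in other_events}
    return cases, events
```

A case can overlap without any event overlapping, for instance when the GTT and a PSI flag the same hospitalization for different harms.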

Comparison of Healthcare Utilization

Finally, future (post-hospitalization) healthcare utilization was compared between patients with and without an AE detected by the GTT. A Poisson regression model was constructed to predict future outpatient visits, as well as a logistic regression to calculate the odds of a subsequent hospitalization in the six months after the index hospitalization as a function of whether an AE was detected. Both models controlled for 12-month prior inpatient and outpatient utilization, sex, age, number of comorbidities, and length of stay in the index admission.
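One way to write the two models is shown below. The notation is an assumption introduced here for illustration and does not appear in the original analysis: $Y_i$ is the number of outpatient visits for patient $i$ in the follow-up period, $H_i$ indicates a subsequent hospitalization, $\mathrm{AE}_i$ indicates a GTT-detected AE in the index admission, and $\mathbf{x}_i$ collects the control variables listed above.

```latex
\begin{align}
\log \mathbb{E}[Y_i] &= \beta_0 + \beta_1\,\mathrm{AE}_i + \boldsymbol{\gamma}^{\top}\mathbf{x}_i \\
\log \frac{\Pr(H_i = 1)}{1 - \Pr(H_i = 1)} &= \alpha_0 + \alpha_1\,\mathrm{AE}_i + \boldsymbol{\delta}^{\top}\mathbf{x}_i
\end{align}
```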

RESULTS

Sample Demographics

There were 273 randomly sampled cases that underwent review out of 1,980 eligible cases. Table 1 shows the demographics of the review sample, as well as the demographic differences between cases with at least one AE and those without. There were 109 AEs over 17 weeks and 2,079 hospital days (calculated by summing the length of stay for all cases that were sampled for review of medical charts). Two of the cases in the sample with AEs were removed because they were found to be sub-acute care cases (not inpatient cases) miscoded in the VA administrative data file. Many of the cases with an AE had multiple AEs, such that 109 AEs were detected among 59 cases with any AE. A higher proportion of cases with an AE were surgical cases (i.e., identified by the presence of a surgical DRG at discharge), although this difference was not significant. Compared to cases without an AE, those with an AE had longer lengths of stay (mean 11.8 days versus 4.9 days, p-value<0.0001) and were older (mean 71.1 years versus 67.5 years, p-value=0.04).

Table 1.

Demographics of Overall Patient Population, Chart Review Sample and Patients With and Without an Adverse Event (AE)

| | VA Inpatient Facility (N=1,707) | Chart Review Sample (n=273) | p-value | Chart Review Sample - No AE (n=214) | Chart Review Sample - Any AE (n=59) | p-value |
|---|---|---|---|---|---|---|
| % Male | 96.4% | 95.6% | 0.5 | 94.9% | 98.3% | 0.5 |
| Length of stay in days, mean (sd) | 6.5 (6.9) | 6.4 (5.2) | 0.7 | 4.9 (2.8) | 11.8 (7.9) | **<0.0001** |
| Age in years, mean (sd) | 67.6 (12.6) | 68.2 (12.6) | 0.4 | 67.5 (12.8) | 71.1 (11.5) | **0.04** |
| Number of Elixhauser comorbidities, mean (sd) | 2.3 (1.4) | 2.3 (1.4) | 0.5 | 2.2 (1.4) | 2.4 (1.4) | 0.32 |
| % of admissions with a surgery DRG | 27.5% | 28.2% | 0.8 | 25.7% | 37.2% | 0.1 |

Bold indicates statistically significant difference (p value < 0.05) based on either the two-sided Fisher’s Exact test or the Satterthwaite t-test for unequal variance

Abbreviations: sd= standard deviation; DRG = diagnosis-related group
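The Satterthwaite (Welch) t-test named in the table note can be approximated from the summary statistics in Table 1. The sketch below is illustrative only: the function name is hypothetical, and because it works from rounded means and standard deviations rather than patient-level data, it will only approximate the statistics behind the published p-values.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Satterthwaite degrees of freedom
    computed from group means, standard deviations, and sizes."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1 mean
    v2 = sd2 ** 2 / n2  # squared standard error of group 2 mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Length of stay: any AE (n=59) versus no AE (n=214), values from Table 1
t_los, df_los = welch_t(11.8, 7.9, 59, 4.9, 2.8, 214)
# Age: any AE versus no AE, values from Table 1
t_age, df_age = welch_t(71.1, 11.5, 59, 67.5, 12.8, 214)

print(f"Length of stay: t = {t_los:.2f}, df = {df_los:.1f}")
print(f"Age: t = {t_age:.2f}, df = {df_age:.1f}")
```

The degrees of freedom for length of stay fall well below the pooled value because the two groups have very different variances, which is the situation the unequal-variance test is designed for.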

GTT Analyses

Of the 288 cases reviewed (273 unique patients), 15 cases had their medical charts dual-reviewed by 2 nurses. The second review of the 15 cases generated new information such that the final sample of reviewed cases was 288. The inter-rater reliability check found a high level of agreement between the nurse reviewer and the nurse co-investigator in terms of the triggers found and whether harm was associated with the trigger. The test of nurse agreement confirmed that in cases with a LOS greater than 7 days, there was less overlap in AEs detected largely because more triggers were detected overall. The dual review process also found that adding a second nurse resulted in the identification of more triggers but only 1 new case with an AE.
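Agreement between two reviewers on whether a case contains an AE can be summarized as shown below. The text does not state which agreement statistic was used, so percent agreement and Cohen's kappa are offered here only as common choices; the function names and the example ratings are hypothetical and are not study data.

```python
def percent_agreement(a, b):
    """Share of cases on which two reviewers gave the same yes/no rating."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa for two reviewers making binary (0/1) ratings."""
    n = len(a)
    p_observed = percent_agreement(a, b)
    p_a = sum(a) / n  # proportion of cases reviewer A rated as AE
    p_b = sum(b) / n  # proportion of cases reviewer B rated as AE
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if p_expected == 1:
        return float("nan")  # kappa is undefined when chance agreement is total
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings for 15 dual-reviewed cases (1 = AE found, 0 = no AE)
nurse_reviewer = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
nurse_co_investigator = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

print(percent_agreement(nurse_reviewer, nurse_co_investigator))
print(cohens_kappa(nurse_reviewer, nurse_co_investigator))
```

Kappa discounts the agreement expected by chance, which matters when most cases have no AE and two reviewers would agree often even without reading the chart closely.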

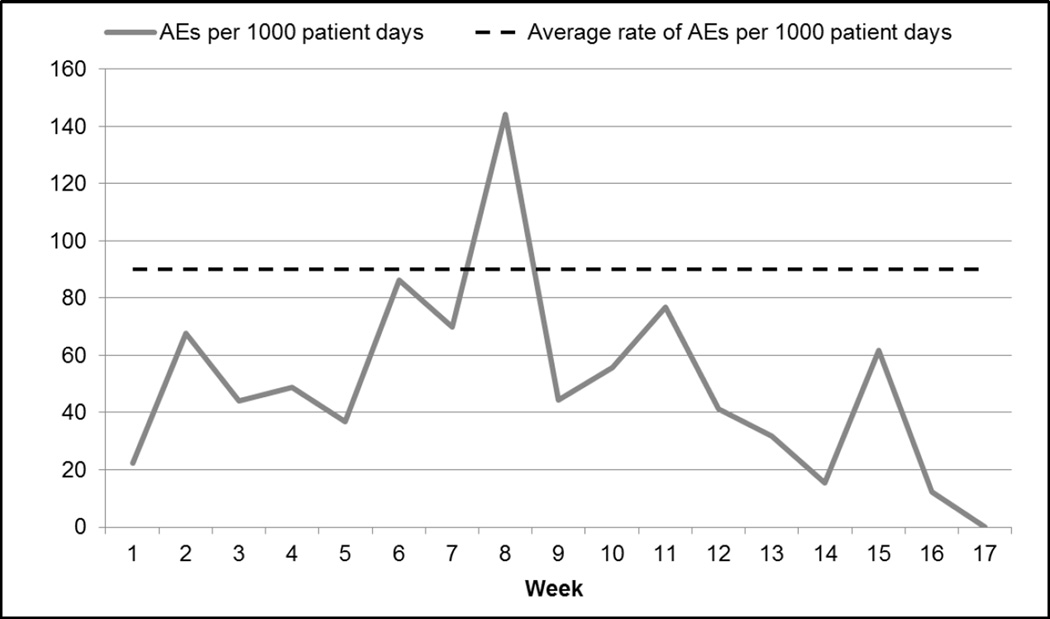

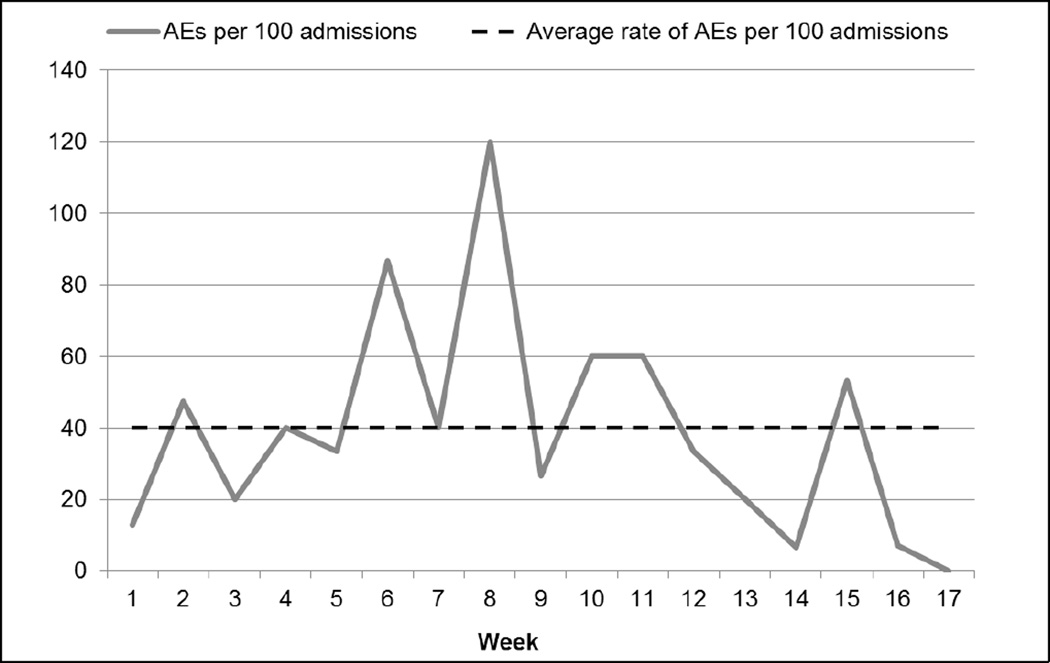

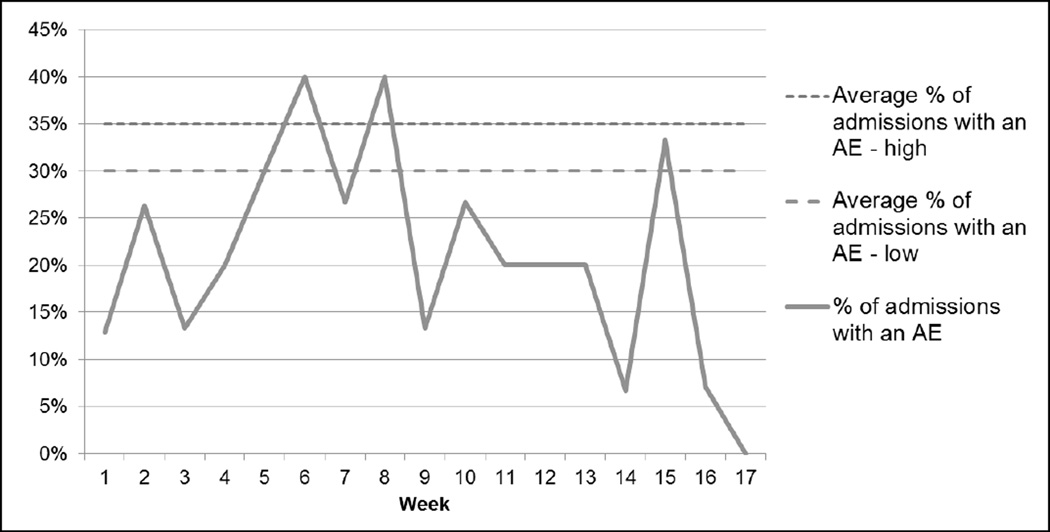

Over the review period, there were 52 AEs per 1,000 patient days and 38 AEs per 100 admissions (see Figures 1 and 2). The VA facility had an overall rate of 21% of admissions with an AE (see Figure 3). The majority (60%) of AEs detected were minor; no deaths were found to be attributable to medical care.
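The first two rates can be reproduced from the totals reported in this section (109 AEs, 2,079 hospital days, 288 reviewed cases). The sketch below is a worked arithmetic check with hypothetical function names; the third function shows the form of the percent-of-admissions measure without restating its numerator.

```python
def aes_per_1000_patient_days(n_aes, patient_days):
    """AEs per 1,000 patient days."""
    return 1000 * n_aes / patient_days


def aes_per_100_admissions(n_aes, n_admissions):
    """AEs per 100 admissions."""
    return 100 * n_aes / n_admissions


def pct_admissions_with_ae(n_admissions_with_ae, n_admissions):
    """Percent of admissions with at least one AE."""
    return 100 * n_admissions_with_ae / n_admissions


# Totals taken from the text and figure captions
per_days = aes_per_1000_patient_days(n_aes=109, patient_days=2079)
per_admissions = aes_per_100_admissions(n_aes=109, n_admissions=288)

print(f"AEs per 1,000 patient days: {per_days:.1f}")
print(f"AEs per 100 admissions: {per_admissions:.1f}")
```

Rounded to whole numbers, both values agree with the 52 and 38 reported above.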

Figure 1.

Rate of Adverse Events (AEs) per 1,000 patient days (109 events in 288 cases over 17 weeks)

Figure 2.

Rate of Adverse Events (AEs) per 100 admissions (109 events in 288 cases over 17 weeks)

Figure 3.

Percent of Admissions with an Adverse Event (AE) (109 events in 288 cases over 17 weeks)

Note: The average range of admissions with an adverse event is from the Institute for Healthcare Improvement Global Trigger Tool White Paper, 2009.8

Categorization of AEs

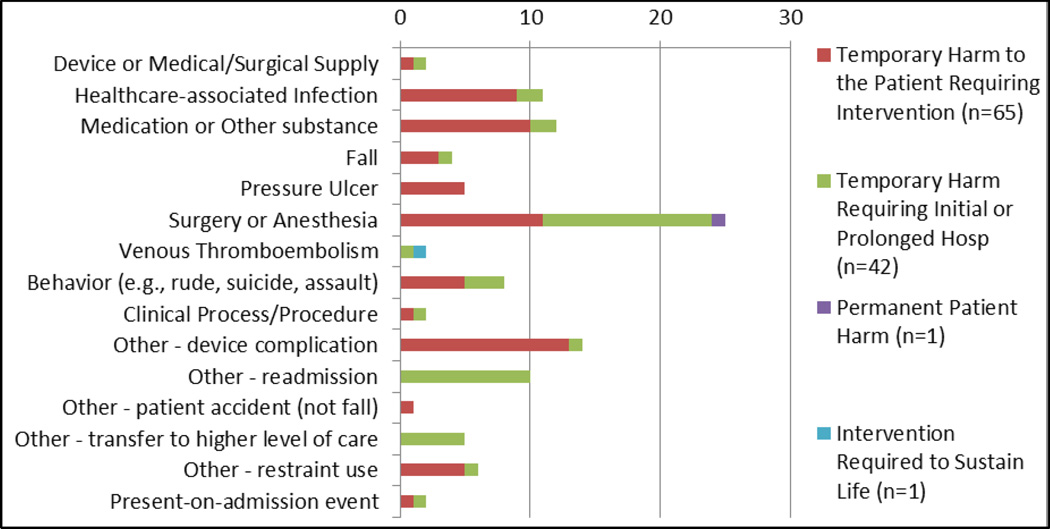

In the categorization process, 65% of the 109 AEs detected could be classified into the ICPS or AHRQ Common Formats categories. Six new “other” categories were also created: 1) 30-day readmissions from a VA facility, 2) device complications primarily related to problems with catheters, 3) patient accidents – not falls – causing injury, 4) transfers to higher levels of care, 5) patient restraint use, and 6) present-on-admission events. Most of the harms were problems related to intra- or post-operative care (23%); however, there were moderate frequencies of device complications (13%), medication problems (11%), and healthcare-associated infections (10%) (see Figure 4). The highest level of harm detected (intervention required to sustain life, Category H) was attributed to a Venous Thromboembolism. The majority of more serious harms (Category F and higher) were Surgery and Anesthesia cases or hospital readmissions.

Figure 4.

Distribution of Harm by Adverse Event Type (109 events in 288 cases over 17 weeks)

Overlap with Other Measures

Thirteen of the 109 AEs (12%) were also detected by other measures: 1 case was detected by an incident report, 5 cases were detected by PSIs (3 of these were also detected by VASQIP) and 9 cases were detected by VASQIP (see Table 2). Of the 13 events detected by multiple measures, 11 (84%) were detected in patients with a surgical DRG. The other 2 events were detected by the GTT and PSIs, and were associated with temporary harm to the patient that required initial or prolonged hospitalization.

Table 2.

Summary of Harms Detected by Patient Safety Measures over 17 weeks

| Level of Harm | GTT (% of Total) | IRs* | PSIs* | VASQIP* |
|---|---|---|---|---|
| Temporary harm to the patient and required intervention | 65 (60%) | 1 | 5 | |
| Temporary harm to the patient and required initial or prolonged hospitalization | 42 (39%) | 4 | 4 | |
| Permanent patient harm | 1 (1%) | 1 | | |
| Intervention required to sustain life | 1 (1%) | | | |
| Patient death | 0 | | | |
| TOTAL | 109 | 1 | 5 | 9 |

*These AEs were detected by both the GTT and the other measurement methods.

GTT=Global Trigger Tool

IR= Incident Report

PSI=Patient Safety Indicator

VASQIP=Veterans Affairs Surgical Quality Improvement Program

Comparison of Healthcare Utilization

The Poisson regression model found a statistically significant relationship between having an AE detected by the GTT and future outpatient utilization. Patients with an AE had 22% more visits within 6 months after their hospitalization than those who did not have an AE. The logistic regression model did not show a relationship between patient harm and future hospitalizations.
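In a Poisson model with a log link, the coefficient on a binary indicator translates into a percent change in the expected count through exponentiation. The sketch below shows that conversion. The coefficient is back-calculated from the reported 22% and is not a published estimate, and the function names are hypothetical.

```python
import math


def pct_change_from_coef(beta):
    """Percent change in the expected count for a one-unit increase
    in a predictor, given its log-link coefficient."""
    return (math.exp(beta) - 1) * 100


def coef_from_pct_change(pct):
    """Inverse conversion: the log-link coefficient implied by a percent change."""
    return math.log(1 + pct / 100)


# Coefficient implied by "22% more visits" (an inferred value, not from the paper)
beta_ae = coef_from_pct_change(22)
print(f"Implied coefficient: {beta_ae:.3f}")
print(f"Percent change: {pct_change_from_coef(beta_ae):.1f}%")
```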

DISCUSSION

This is the first pilot of the GTT in VA and the findings are similar to private sector experiences with GTT implementation in several respects. Like Good et al., this study found that 60% of the AEs detected by the GTT involved minimal harm, and very few harms caused permanent damage or life-threatening situations.10 The results also showed that many of the AEs detected (e.g., healthcare-associated infections, falls and surgical problems) were well-known safety problems that were already addressed through existing surveillance and quality improvement (QI) efforts in the hospital. As Classen et al.11 and Naessens et al.16 found, there was very little overlap between IRs, PSIs and VASQIP, and more importantly, the GTT detected more events than other methods.

Several of the results added new information to published GTT implementation efforts. Unlike the range reported in the GTT White Paper (i.e., 30–35% of admissions with an AE),8 this study found only 21% of the 17 weeks of reviewed admissions in the VA facility had an AE. Further, there was no stabilization of rates by the 12-week mark, as the White Paper suggested. When harms detected by other patient safety surveillance mechanisms were compared, it was revealed that the GTT had very little overlap with VASQIP, a chart-review AE detection program that had not yet been compared to the GTT. Further, 84% of the AEs detected by multiple surveillance mechanisms were in surgical patients. Finally, patients with an AE had 22% more post-hospitalization outpatient visits than patients without an AE.

The pilot was designed and conducted with the goal of presenting results and recommendations to facilitate a larger implementation effort, should the VA decide to move forward with the GTT methodology. The process changes made in this pilot may be helpful to other healthcare systems considering the use of the GTT to increase AE surveillance. The pilot employed one nurse reviewer because of resource constraints, but healthcare systems may decide to add a second reviewer to increase the yield of AEs detected in the limited chart review time (as the inter-rater reliability check showed). Furthermore, the White Paper did not recommend categorizing the AEs by type, or presenting the harms by location in the hospital, yet this study found that these data were more useful and actionable to the facility-based patient safety leadership. The final report to the facility used AE types to identify problems that patient safety leaders may not have been actively tracking, and the categorization of AEs revealed that many AEs associated with catheter use (categorized as either device complications, behavior problems for patients pulling out their catheters, or restraint use to prevent removing catheters) were not actively monitored at the facility. This observation illustrates the value of the GTT in combination with other patient safety measures; unlike the PSIs and VASQIP, the GTT is not limited to tracking specifically defined AEs in specifically defined patients and can therefore reveal previously unmeasured sources of patient harm.24,29

The IHI acknowledges that a significant barrier to adoption of the GTT methodology is cost.8 This research was a pilot study and no cost data were collected; however, the results on the potential long-term cost to the healthcare system of AEs showed that patients with GTT-detected harms had higher outpatient utilization 6 months following the hospitalization with an AE. This finding suggests an important source of healthcare costs that should be considered in an economic analysis. The research team evaluated individual time and effort related to GTT training, sampling cases, reviewing medical charts, attending team meetings and categorizing AEs. This work amounted to 49 hours at an estimated cost higher than $10,000, translating to a cost of $140 per AE detected. Notably, this is not a true estimate of the value of implementing the GTT in the healthcare system, as the facility staff may not have the same hourly costs as members of the research team. Furthermore, the cost of utilizing the GTT results in terms of developing and implementing QI must be considered.

This pilot study has notable strengths. This is the first reported test of the GTT in a VA facility. Moreover, this study is the first to compare AEs detected by the GTT with those identified by VASQIP. The VA-tailored application of the GTT methodology included a modification to review potential AEs as an inter-professional group and classify them according to standardized definitions. Although this innovation increased resource burden, additional review improved the reliability of findings, and the categorization of AEs made communication of results more actionable for patient safety managers and hospital leadership. Furthermore, categorizing AEs enabled comparison across AE detection programs within VA to determine whether the GTT identified new patient safety problems.

This research also has limitations. Previous implementation efforts suggested modifications to the GTT methodology that were not always used. For example, Adler et al. recommended hiring reviewers with 5+ years of experience, and indicated that 3–4 months were required before reviewers established reliability.9 While the nurse reviewer in this study had only 1 year of experience reviewing charts in VA prior to the pilot work, the study collected more than 4 months of data and the inter-rater reliability check found nurse agreement was high. This was a pilot study in one VA facility and the findings may not generalize to other VA facilities or outside the VA healthcare system. Finally, this study did not pilot the implementation of the GTT in the VA facility, in that the facility staff who would continue GTT reviews if the methodology were adopted for long-term use did not participate. Furthermore, formal feedback from local patient safety leadership regarding the GTT results and how they would be used to develop and implement QI activities was not obtained.

Despite the longstanding commitment to patient safety in VA, the GTT detected AEs not identified by existing programs and appears to be valuable in appropriately targeting QI efforts. This research was a pilot study and cost effectiveness was not calculated; however, any assessment of cost effectiveness must consider the costs of developing and implementing QI initiatives to reduce AEs. Certainly if no action is taken in response to the GTT findings, the methodology is not adding value to the healthcare system. In future work, the research team proposes to expand the implementation of the GTT for patient safety surveillance in order to both evaluate the cost of the implementation and measure the benefits of associated QI activities. The GTT is a promising tool for AE surveillance.

Acknowledgements

We would like to acknowledge the contributions of Frank Federico, RPh, from the Institute for Healthcare Improvement (IHI), for guidance and support throughout our study. William O’Brien provided programming support to complete the analyses of our data.

Funding

This work was supported by the VA National Center for Patient Safety (NCPS) through the Patient Safety Center of Inquiry (PSCI) at VA Boston Healthcare System (VA NCPS XVA 68-023, Director: Amy Rosen, PhD).

Footnotes

Disclosures

The authors report no conflicts of interest.

REFERENCES

- 1.Institute of Medicine (IOM) Patient Safety: Achieving a New Standard of Care. Washington, DC: National Academy Press; 2003. [Google Scholar]

- 2.Leape LL, Brennan TA, Laird N, et al. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med. 1991 Feb 7;324(6):377–384. doi: 10.1056/NEJM199102073240605. [DOI] [PubMed] [Google Scholar]

- 3.Nuckols TK, Bell DS, Liu H, Paddock SM, Hilborne LH. Rates and types of events reported to established incident reporting systems in two US hospitals. Qual Saf Health Care. 2007 Jun;16(3):164–168. doi: 10.1136/qshc.2006.019901. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Agency for Healthcare Research and Quality (AHRQ) AHRQ Patient Safety Indicators Overview. [Accessed Mar 3, 2013];AHRQ Homepage. 2013 http://www.qualityindicators.ahrq.gov/Modules/psi_overview.aspx.

- 5.Rosen AK, Itani K, Cevasco M, et al. Validating the Patient Safety Indicators (PSIs) in the Veterans Health Administration: Do They Accurately Identify True Safety Events? Medical Care. 2012;50(1):74–85. doi: 10.1097/MLR.0b013e3182293edf. [DOI] [PubMed] [Google Scholar]

- 6.Liebhaber A, Draper DA, Cohen GR. Hospital Strategies to Engage Physicians in Quality Improvement. Center for Studying Health System Change; 2009. [PubMed] [Google Scholar]

- 7.Classen DC, Lloyd RC, Provost L, Griffin FA, Resar R. Development and evaluation of the Institute for Healthcare Improvement Global Trigger Tool. J Patient Saf. 2008;4(3):169–177. [Google Scholar]

- 8.Griffin FA, Resar R. IHI Global Trigger Tool for Measuring Adverse Events (Second Edition). IHI Innovation Series white paper. Cambridge, MA: Institute for Healthcare Improvement; 2009. (Available on www.IHI.org)2009. [Google Scholar]

- 9.Adler L, Denham CR, McKeever M, et al. Global Trigger Tool: Implementation Basics. Journal of Patient Safety. 2008;4(4):245–249. 210.1097/PTS.1090b1013e31818e31818a31887. [Google Scholar]

- 10.Good VS, Saldana M, Gilder R, Nicewander D, Kennerly DA. Large-scale deployment of the Global Trigger Tool across a large hospital system: refinements for the characterisation of adverse events to support patient safety learning opportunities. BMJ Qual Saf. 2011 Jan;20(1):25–30. doi: 10.1136/bmjqs.2008.029181. [DOI] [PubMed] [Google Scholar]

- 11.Classen DC, Resar R, Griffin F, et al. 'Global trigger tool' shows that adverse events in hospitals may be ten times greater than previously measured. Health Aff (Millwood) 2011 Apr;30(4):581–589. doi: 10.1377/hlthaff.2011.0190.

- 12.Landrigan CP, Parry GJ, Bones CB, Hackbarth AD, Goldmann DA, Sharek PJ. Temporal trends in rates of patient harm resulting from medical care. N Engl J Med. 2010 Nov 25;363(22):2124–2134. doi: 10.1056/NEJMsa1004404.

- 13.Naessens JM, Campbell CR, Huddleston JM, et al. A comparison of hospital adverse events identified by three widely used detection methods. Int J Qual Health Care. 2009 Aug;21(4):301–307. doi: 10.1093/intqhc/mzp027.

- 14.Kirkendall ES, Kloppenborg E, Papp J, et al. Measuring Adverse Events and Levels of Harm in Pediatric Inpatients With the Global Trigger Tool. Pediatrics. 2012 Oct 8; doi: 10.1542/peds.2012-0179.

- 15.Lipczak H, Neckelmann K, Steding-Jessen M, Jakobsen E, Knudsen JL. Uncertain added value of Global Trigger Tool for monitoring of patient safety in cancer care. Dan Med Bull. 2011 Nov;58(11):A4337.

- 16.Naessens JM, O'Byrne TJ, Johnson MG, Vansuch MB, McGlone CM, Huddleston JM. Measuring hospital adverse events: assessing inter-rater reliability and trigger performance of the Global Trigger Tool. Int J Qual Health Care. 2010 Aug;22(4):266–274. doi: 10.1093/intqhc/mzq026.

- 17.Schildmeijer K, Nilsson L, Perk J, Arestedt K, Nilsson G. Strengths and weaknesses of working with the Global Trigger Tool method for retrospective record review: focus group interviews with team members. BMJ Open. 2013;3(9):e003131. doi: 10.1136/bmjopen-2013-003131.

- 18.Sharek PJ, Parry G, Goldmann D, et al. Performance characteristics of a methodology to quantify adverse events over time in hospitalized patients. Health Serv Res. 2011 Apr;46(2):654–678. doi: 10.1111/j.1475-6773.2010.01156.x.

- 19.von Plessen C, Kodal AM, Anhoj J. Experiences with global trigger tool reviews in five Danish hospitals: an implementation study. BMJ Open. 2012;2(5) doi: 10.1136/bmjopen-2012-001324.

- 20.Department of Veterans Affairs. Veterans Health Administration. 2013. http://www.va.gov/health/aboutVHA.asp. [Accessed Oct 17, 2013]

- 21.Leape LL, Berwick DM. Five years after To Err Is Human: what have we learned? JAMA. 2005 May 18;293(19):2384–2390. doi: 10.1001/jama.293.19.2384.

- 22.Khuri SF, Daley J, Henderson W, et al. The Department of Veterans Affairs' NSQIP: the first national, validated, outcome-based, risk-adjusted, and peer-controlled program for the measurement and enhancement of the quality of surgical care. National VA Surgical Quality Improvement Program. Ann Surg. 1998 Oct;228(4):491–507. doi: 10.1097/00000658-199810000-00006.

- 23.Fink AS, Campbell DA, Jr, Mentzer RM, Jr, et al. The National Surgical Quality Improvement Program in non-veterans administration hospitals: initial demonstration of feasibility. Ann Surg. 2002 Sep;236(3):344–353. doi: 10.1097/00000658-200209000-00011.

- 24.Pham JC, Frick KD, Pronovost PJ. Why Don't We Know Whether Care Is Safe? Am J Med Qual. 2013 Mar 24; doi: 10.1177/1062860613479397.

- 25.Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998 Jan;36(1):8–27. doi: 10.1097/00005650-199801000-00004.

- 26.SAS Institute Inc. SAS 9.1.3 Help and Documentation. 9.1 ed. Cary, NC: SAS Institute Inc.; 2000–2004.

- 27.Sherman H, Castro G, Fletcher M, et al. Towards an International Classification for Patient Safety: the conceptual framework. Int J Qual Health Care. 2009 Feb;21(1):2–8. doi: 10.1093/intqhc/mzn054.

- 28.Agency for Healthcare Research and Quality. AHRQ Common Formats Version 1.1 - March 2010 Release Users Guide. 2010.

- 29.Garrett PR, Jr, Sammer C, Nelson A, et al. Developing and implementing a standardized process for global trigger tool application across a large health system. Jt Comm J Qual Patient Saf. 2013 Jul;39(7):292–297. doi: 10.1016/s1553-7250(13)39041-2.