Abstract

Key properties of inferior temporal cortex neurons are described, and then, the biological plausibility of two leading approaches to invariant visual object recognition in the ventral visual system is assessed to investigate whether they account for these properties. Experiment 1 shows that VisNet performs object classification with random exemplars comparably to HMAX, except that the final layer C neurons of HMAX have a very non-sparse representation (unlike that in the brain) that provides little information in the single-neuron responses about the object class. Experiment 2 shows that VisNet forms invariant representations when trained with different views of each object, whereas HMAX performs poorly when assessed with a biologically plausible pattern association network, as HMAX has no mechanism to learn view invariance. Experiment 3 shows that VisNet neurons do not respond to scrambled images of faces, and thus encode shape information. HMAX neurons responded with similarly high rates to the unscrambled and scrambled faces, indicating that low-level features including texture may be relevant to HMAX performance. Experiment 4 shows that VisNet can learn to recognize objects even when the view provided by the object changes catastrophically as it transforms, whereas HMAX has no learning mechanism in its S–C hierarchy that provides for view-invariant learning. This highlights some requirements for the neurobiological mechanisms of high-level vision, and how some different approaches perform, in order to help understand the fundamental underlying principles of invariant visual object recognition in the ventral visual stream.

Electronic supplementary material

The online version of this article (doi:10.1007/s00422-015-0658-2) contains supplementary material, which is available to authorized users.

Keywords: Visual object recognition, Invariant representations , Inferior temporal visual cortex, VisNet, HMAX, Trace learning rule

Introduction

The aim of this research is to assess the biological plausibility of two models that aim to be biologically plausible or at least biologically inspired by performing investigations of how biologically plausible they are and comparing them to the known responses of inferior temporal cortex neurons. Four key experiments are performed to measure the firing rate representations provided by neurons in the models: whether the neuronal representations are of individual objects or faces as well as classes; whether the neuronal representations are transform invariant; whether whole objects with the parts in the correct spatial configuration are represented; and whether the systems can correctly represent individual objects that undergo catastrophic view transforms. In all these cases, the performance of the models is compared to that of neurons in the inferior temporal visual cortex. The overall aim is to provide insight into what must be accounted for more generally by biologically plausible models of object recognition by the brain, and in this sense, the research described here goes beyond these two models. We do not consider non-biologically plausible models here as our aim is neuroscience, how the brain works, but we do consider in the Discussion some of the factors that make some other models not biologically plausible, in the context of guiding future investigations of biologically plausible models of how the brain solves invariant visual object recognition. We note that these biologically inspired models are intended to provide elucidation of some of the key properties of the cortical implementation of invariant visual object recognition, and of course as models the aim is to include some modelling simplifications, which are referred to below, in order to provide a useful and tractable model.

One of the major problems that are solved by the visual system in the primate including human cerebral cortex is the building of a representation of visual information that allows object and face recognition to occur relatively independently of size, contrast, spatial frequency, position on the retina, angle of view, lighting, etc. These invariant representations of objects, provided by the inferior temporal visual cortex (Rolls 2008, 2012a), are extremely important for the operation of many other systems in the brain, for if there is an invariant representation, it is possible to learn on a single trial about reward/punishment associations of the object, the place where that object is located, and whether the object has been seen recently, and then to correctly generalize to other views, etc., of the same object (Rolls 2008, 2014). In order to understand how the invariant representations are built, computational models provide a fundamental approach, for they allow hypotheses to be developed, explored and tested, and are essential for understanding how the cerebral cortex solves this major computation.

We next summarize some of the key and fundamental properties of the responses of primate inferior temporal cortex (IT) neurons (Rolls 2008, 2012a; Rolls and Treves 2011) that need to be addressed by biologically plausible models of invariant visual object recognition. Then we illustrate how models of invariant visual object recognition can be tested to reveal whether they account for these properties. The two leading approaches to visual object recognition by the cerebral cortex that are used to highlight whether these generic biological issues are addressed are VisNet (Rolls 2012a, 2008; Wallis and Rolls 1997; Rolls and Webb 2014; Webb and Rolls 2014) and HMAX (Serre et al. 2007c, a, b; Mutch and Lowe 2008). By comparing these models, and how they perform on invariant visual object recognition, we aim to make advances in the understanding of the cortical mechanisms underlying this key problem in the neuroscience of vision. The architecture and operation of these two classes of network are described below.

Some of the key properties of IT neurons that need to be addressed, and that are tested in this paper, include:

Inferior temporal visual cortex neurons show responses to objects that are typically translation, size, contrast, rotation, and in many cases view invariant, that is, they show transform invariance (Hasselmo et al. 1989; Tovee et al. 1994; Logothetis et al. 1995; Booth and Rolls 1998; Rolls 2012a; Trappenberg et al. 2002; Rolls and Baylis 1986; Rolls et al. 1985, 1987, 2003; Aggelopoulos and Rolls 2005).

Inferior temporal cortex neurons show sparse distributed representations, in which individual neurons have high firing rates to a few stimuli and lower firing rates to more stimuli, in which much information can be read from the responses of a single neuron from its firing rates (because they are high to relatively few stimuli), and in which neurons encode independent information about a set of stimuli, as least up to tens of neurons (Tovee et al. 1993; Rolls and Tovee 1995; Rolls et al. 1997a, b; Abbott et al. 1996; Baddeley et al. 1997; Rolls 2008, 2012a; Rolls and Treves 2011).

Inferior temporal cortex neurons often respond to objects and not to low-level features, in that many respond to whole objects, but not to the parts presented individually nor to the parts presented with a scrambled configuration (Perrett et al. 1982; Rolls et al. 1994).

Inferior temporal cortex neurons convey information about the individual object or face, not just about a class such as face versus non-face, or animal versus non-animal (Rolls and Tovee 1995; Rolls et al. 1997a, b; Abbott et al. 1996; Baddeley et al. 1997; Rolls 2008, 2012a; Rolls and Treves 2011). This key property is essential for recognizing a particular person or object and is frequently not addressed in models of invariant object recognition, which still focus on classification into, e.g. animal versus non-animal, hats versus bears versus beer mugs (Serre et al. 2007c, a, b; Mutch and Lowe 2008; Yamins et al. 2014).

The learning mechanism needs to be physiologically plausible, that is, likely to include a local synaptic learning rule (Rolls 2008). We note that lateral propagation of weights, as used in the neocognitron (Fukushima 1980), HMAX (Riesenhuber and Poggio 1999; Mutch and Lowe 2008; Serre et al. 2007a), and convolution nets (LeCun et al. 2010), is not biologically plausible.

Methods

Overview of the architecture of the ventral visual stream model, VisNet

The architecture of VisNet (Rolls 2008, 2012a) is summarized briefly next, with a full description provided after this.

Fundamental elements of Rolls’ (1992) theory for how cortical networks might implement invariant object recognition are described in detail elsewhere (Rolls 2008, 2012a). They provide the basis for the design of VisNet, which can be summarized as:

A series of competitive networks organized in hierarchical layers, exhibiting mutual inhibition over a short range within each layer. These networks allow combinations of features or inputs occurring in a given spatial arrangement to be learned by neurons using competitive learning (Rolls 2008), ensuring that higher-order spatial properties of the input stimuli are represented in the network. In VisNet, layer 1 corresponds to V2, layer 2 to V4, layer 3 to posterior inferior temporal visual cortex, and layer 4 to anterior inferior temporal cortex. Layer one is preceded by a simulation of the Gabor-like receptive fields of V1 neurons produced by each image presented to VisNet (Rolls 2012a).

A convergent series of connections from a localized population of neurons in the preceding layer to each neuron of the following layer, thus allowing the receptive field size of neurons to increase through the visual processing areas or layers, as illustrated in Fig. 1.

A modified associative (Hebb-like) learning rule incorporating a temporal trace of each neuron’s previous activity, which, it has been shown (Földiák 1991; Rolls 1992, 2012a; Wallis et al. 1993; Wallis and Rolls 1997; Rolls and Milward 2000), enables the neurons to learn transform invariances.

The learning rates for each of the four layers were 0.05, 0.03, 0.005, and 0.005, as these rates were shown to produce convergence of the synaptic weights after 15–50 training epochs. Fifty training epochs were run.

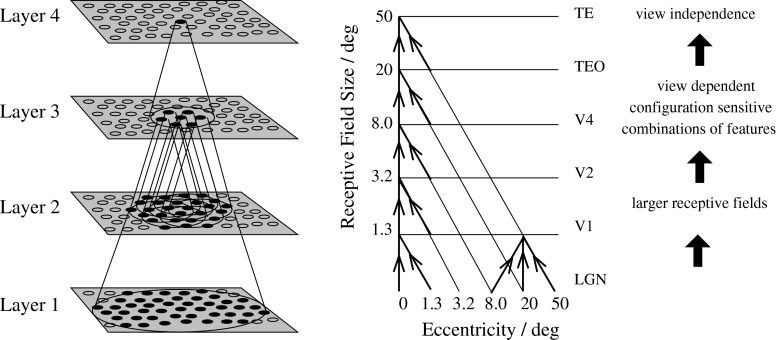

Fig. 1.

Convergence in the visual system. Right as it occurs in the brain. V1, visual cortex area V1; TEO, posterior inferior temporal cortex; TE, inferior temporal cortex (IT). Left as implemented in VisNet. Convergence through the network is designed to provide fourth layer neurons with information from across the entire input retina

VisNet trace learning rule

The learning rule implemented in the VisNet simulations utilizes the spatio-temporal constraints placed upon the behaviour of ‘real-world’ objects to learn about natural object transformations. By presenting consistent sequences of transforming objects, the cells in the network can learn to respond to the same object through all of its naturally transformed states, as described by Földiák (1991), Rolls (1992, 2012a), Wallis et al. (1993), and Wallis and Rolls (1997). The learning rule incorporates a decaying trace of previous cell activity and is henceforth referred to simply as the ‘trace’ learning rule. The learning paradigm we describe here is intended in principle to enable learning of any of the transforms tolerated by inferior temporal cortex neurons, including position, size, view, lighting, and spatial frequency (Rolls 1992, 2000, 2008, 2012a; Rolls and Deco 2002).

Various biological bases for this temporal trace have been advanced as follows: The precise mechanisms involved may alter the precise form of the trace rule which should be used. Földiák (1992) describes an alternative trace rule which models individual NMDA channels. Equally, a trace implemented by temporally extended cell firing in a local cortical attractor could implement a short-term memory of previous neuronal firing (Rolls 2008).

The persistent firing of neurons for as long as 100–400 ms observed after presentations of stimuli for 16 ms (Rolls and Tovee 1994) could provide a time window within which to associate subsequent images. Maintained activity may potentially be implemented by recurrent connections between as well as within cortical areas (Rolls and Treves 1998; Rolls and Deco 2002; Rolls 2008). The prolonged firing of anterior ventral temporal / perirhinal cortex neurons during memory delay periods of several seconds and associative links reported to develop between stimuli presented several seconds apart (Miyashita 1988) are on too long a time scale to be immediately relevant to the present theory. In fact, associations between visual events occurring several seconds apart would, under normal environmental conditions, be detrimental to the operation of a network of the type described here, because they would probably arise from different objects. In contrast, the system described benefits from associations between visual events which occur close in time (typically within 1 s), as they are likely to be from the same object.

The binding period of glutamate in the NMDA channels, which may last for 100 ms or more, may implement a trace rule by producing a narrow time window over which the average activity at each presynaptic site affects learning (Rolls 1992; Rhodes 1992; Földiák 1992; Spruston et al. 1995; Hestrin et al. 1990).

Chemicals such as nitric oxide may be released during high neural activity and gradually decay in concentration over a short time window during which learning could be enhanced (Földiák 1992; Montague et al. 1991; Garthwaite 2008).

The trace update rule used in the baseline simulations of VisNet (Wallis and Rolls 1997) is equivalent to both Földiák’s used in the context of translation invariance (Wallis et al. 1993) and the earlier rule of Sutton and Barto (1981) explored in the context of modelling the temporal properties of classical conditioning and can be summarized as follows:

| 1 |

where

| 2 |

and : jth input to the neuron; : Trace value of the output of the neuron at time step ; : Synaptic weight between jth input and the neuron; y: Output from the neuron; : Learning rate; : Trace value. The optimal value varies with presentation sequence length.

At the start of a series of investigations of different forms of the trace learning rule, Rolls and Milward (2000) demonstrated that VisNet’s performance could be greatly enhanced with a modified Hebbian trace learning rule (Eq. 3) that incorporated a trace of activity from the preceding time steps, with no contribution from the activity being produced by the stimulus at the current time step. This rule took the form

| 3 |

The trace shown in Eq. 3 is in the postsynaptic term. The crucial difference from the earlier rule (see Eq. 1) was that the trace should be calculated up to only the preceding timestep. This has the effect of updating the weights based on the preceding activity of the neuron, which is likely given the spatio-temporal statistics of the visual world to be from previous transforms of the same object (Rolls and Milward 2000; Rolls and Stringer 2001). This is biologically not at all implausible, as considered in more detail elsewhere (Rolls 2008, 2012a), and this version of the trace rule was used in this investigation.

The optimal value of in the trace rule is likely to be different for different layers of VisNet. For early layers with small receptive fields, few successive transforms are likely to contain similar information within the receptive field, so the value for might be low to produce a short trace. In later layers of VisNet, successive transforms may be in the receptive field for longer, and invariance may be developing in earlier layers, so a longer trace may be beneficial. In practice, after exploration we used values of 0.6 for layer 2, and 0.8 for layers 3 and 4. In addition, it is important to form feature combinations with high spatial precision before invariance learning supported by a temporal trace starts, in order that the feature combinations and not the individual features have invariant representations (Rolls 2008, 2012a). For this reason, purely associative learning with no temporal trace was used in layer 1 of VisNet (Rolls and Milward 2000).

The following principled method was introduced to choose the value of the learning rate for each layer. The mean weight change from all the neurons in that layer for each epoch of training was measured and was set so that with slow learning over 15–50 trials, the weight changes per epoch would gradually decrease and asymptote with that number of epochs, reflecting convergence. Slow learning rates are useful in competitive nets, for if the learning rates are too high, previous learning in the synaptic weights will be overwritten by large weight changes later within the same epoch produced if a neuron starts to respond to another stimulus (Rolls 2008). If the learning rates are too low, then no useful learning or convergence will occur. It was found that the following learning rates enabled good operation with the 100 transforms of each of 4 stimuli used in each epoch in the present investigation: Layer 1 ; Layer 2 (this is relatively high to allow for the sparse representations in layer 1); Layer 3 ; Layer 4 .

To bound the growth of each neuron’s synaptic weight vector, for the ith neuron, its length is explicitly normalized [a method similarly employed by Malsburg (1973) which is commonly used in competitive networks (Rolls 2008)]. An alternative, more biologically relevant implementation, using a local weight bounding operation which utilizes a form of heterosynaptic long-term depression (Rolls 2008), has in part been explored using a version of the Oja (1982) rule (see Wallis and Rolls 1997).

Network implemented in VisNet

The network itself is designed as a series of hierarchical, convergent, competitive networks, in accordance with the hypotheses advanced above. The actual network consists of a series of four layers, constructed such that the convergence of information from the most disparate parts of the network’s input layer can potentially influence firing in a single neuron in the final layer—see Fig. 1. This corresponds to the scheme described by many researchers (Van Essen et al. 1992; Rolls 1992, 2008, for example) as present in the primate visual system—see Fig. 1. The forward connections to a cell in one layer are derived from a topologically related and confined region of the preceding layer. The choice of whether a connection between neurons in adjacent layers exists or not is based upon a Gaussian distribution of connection probabilities which roll-off radially from the focal point of connections for each neuron. (A minor extra constraint precludes the repeated connection of any pair of cells.) In particular, the forward connections to a cell in one layer come from a small region of the preceding layer defined by the radius in Table 1 which will contain approximately 67 % of the connections from the preceding layer. Table 1 shows the dimensions for the research described here, a (16) larger version than the version of VisNet used in most of our previous investigations, which utilized neurons per layer. For the research on view and translation invariance learning described here, we decreased the number of connections to layer 1 neurons to 100 (from 272), in order to increase the selectivity of the network between objects. We increased the number of connections to each neuron in layers 2–4 to 400 (from 100), because this helped layer 4 neurons to reflect evidence from neurons in previous layers about the large number of transforms (typically 100 transforms, from 4 views of each object and 25 locations) each of which corresponded to a particular object.

Table 1.

VisNet dimensions

| Dimensions | No. of connections | Radius | |

|---|---|---|---|

| Layer 4 | 400 | 48 | |

| Layer 3 | 400 | 36 | |

| Layer 2 | 400 | 24 | |

| Layer 1 | 100 | 24 | |

| Input layer | – | – |

Figure 1 shows the general convergent network architecture used. Localization and limitation of connectivity in the network are intended to mimic cortical connectivity, partially because of the clear retention of retinal topology through regions of visual cortex. This architecture also encourages the gradual combination of features from layer to layer which has relevance to the binding problem, as described elsewhere (Rolls 2008, 2012a).

Competition and lateral inhibition in VisNet

In order to act as a competitive network some form of mutual inhibition is required within each layer, which should help to ensure that all stimuli presented are evenly represented by the neurons in each layer. This is implemented in VisNet by a form of lateral inhibition. The idea behind the lateral inhibition, apart from this being a property of cortical architecture in the brain, was to prevent too many neurons that received inputs from a similar part of the preceding layer responding to the same activity patterns. The purpose of the lateral inhibition was to ensure that different receiving neurons coded for different inputs. This is important in reducing redundancy (Rolls 2008). The lateral inhibition is conceived as operating within a radius that was similar to that of the region within which a neuron received converging inputs from the preceding layer (because activity in one zone of topologically organized processing within a layer should not inhibit processing in another zone in the same layer, concerned perhaps with another part of the image). The lateral inhibition in this investigation used the parameters for as shown in Table 3.

Table 3.

Lateral inhibition parameters

| Layer | 1 | 2 | 3 | 4 |

|---|---|---|---|---|

| Radius, | 1.38 | 2.7 | 4.0 | 6.0 |

| Contrast, | 1.5 | 1.5 | 1.6 | 1.4 |

The lateral inhibition and contrast enhancement just described are actually implemented in VisNet2 (Rolls and Milward 2000) and VisNet (Perry et al. 2010) in two stages, to produce filtering of the type illustrated elsewhere (Rolls 2008, 2012a). The lateral inhibition was implemented by convolving the activation of the neurons in a layer with a spatial filter, I, where controls the contrast and controls the width, and a and b index the distance away from the centre of the filter

| 4 |

The second stage involves contrast enhancement. A sigmoid activation function was used in the way described previously (Rolls and Milward 2000):

| 5 |

where r is the activation (or firing rate) of the neuron after the lateral inhibition, y is the firing rate after the contrast enhancement produced by the activation function, and is the slope or gain and is the threshold or bias of the activation function. The sigmoid bounds the firing rate between 0 and 1 so global normalization is not required. The slope and threshold are held constant within each layer. The slope is constant throughout training, whereas the threshold is used to control the sparseness of firing rates within each layer. The (population) sparseness of the firing within a layer is defined (Rolls and Treves 1998, 2011; Franco et al. 2007; Rolls 2008) as:

| 6 |

where n is the number of neurons in the layer. To set the sparseness to a given value, e.g. 5 %, the threshold is set to the value of the 95th percentile point of the activations within the layer.

The sigmoid activation function was used with parameters (selected after a number of optimization runs) as shown in Table 2.

Table 2.

Sigmoid parameters

| Layer | 1 | 2 | 3 | 4 |

|---|---|---|---|---|

| Percentile | 99.2 | 98 | 88 | 95 |

| Slope | 190 | 40 | 75 | 26 |

In addition, the lateral inhibition parameters are as shown in Table 3.

Input to VisNet

VisNet is provided with a set of input filters which can be applied to an image to produce inputs to the network which correspond to those provided by simple cells in visual cortical area 1 (V1). The purpose of this is to enable within VisNet the more complicated response properties of cells between V1 and the inferior temporal cortex (IT) to be investigated, using as inputs natural stimuli such as those that could be applied to the retina of the real visual system. This is to facilitate comparisons between the activity of neurons in VisNet and those in the real visual system, to the same stimuli. In VisNet no attempt is made to train the response properties of simple cells, but instead we start with a defined series of filters to perform fixed feature extraction to a level equivalent to that of simple cells in V1, as have other researchers in the field (Hummel and Biederman 1992; Buhmann et al. 1991; Fukushima 1980), because we wish to simulate the more complicated response properties of cells between V1 and the inferior temporal cortex (IT). The elongated orientation-tuned input filters used in accord with the general tuning profiles of simple cells in V1 (Hawken and Parker 1987) and were computed by Gabor filters. Each individual filter is tuned to spatial frequency (0.0626–0.5 cycles/pixel over four octaves); orientation ( to in steps of ); and sign (). Of the 100 layer 1 connections, the number to each group in VisNet is shown in Table 4. Any zero D.C. filter can of course produce a negative as well as positive output, which would mean that this simulation of a simple cell would permit negative as well as positive firing. The response of each filter is zero thresholded and the negative results used to form a separate anti-phase input to the network. The filter outputs are also normalized across scales to compensate for the low-frequency bias in the images of natural objects.

Table 4.

VisNet layer 1 connectivity

| Frequency | 0.5 | 0.25 | 0.125 | 0.0625 |

|---|---|---|---|---|

| No. of connections | 74 | 19 | 5 | 2 |

The frequency is in cycles per pixel

The Gabor filters used were similar to those used previously (Deco and Rolls 2004; Rolls 2012a; Rolls and Webb 2014; Webb and Rolls 2014). Following Daugman (1988) the receptive fields of the simple cell-like input neurons are modelled by 2D Gabor functions. The Gabor receptive fields have five degrees of freedom given essentially by the product of an elliptical Gaussian and a complex plane wave. The first two degrees of freedom are the 2D locations of the receptive field’s centre; the third is the size of the receptive field; the fourth is the orientation of the boundaries separating excitatory and inhibitory regions; and the fifth is the symmetry. This fifth degree of freedom is given in the standard Gabor transform by the real and imaginary part, i.e. by the phase of the complex function representing it, whereas in a biological context this can be done by combining pairs of neurons with even and odd receptive fields. This design is supported by the experimental work of Pollen and Ronner (1981), who found simple cells in quadrature-phase pairs. Even more, Daugman (1988) proposed that an ensemble of simple cells is best modelled as a family of 2D Gabor wavelets sampling the frequency domain in a log-polar manner as a function of eccentricity. Experimental neurophysiological evidence constrains the relation between the free parameters that define a 2D Gabor receptive field (De Valois and De Valois 1988). There are three constraints fixing the relation between the width, height, orientation, and spatial frequency (Lee 1996). The first constraint posits that the aspect ratio of the elliptical Gaussian envelope is 2:1. The second constraint postulates that the plane wave tends to have its propagating direction along the short axis of the elliptical Gaussian. The third constraint assumes that the half-amplitude bandwidth of the frequency response is about 1–1.5 octaves along the optimal orientation. Further, we assume that the mean is zero in order to have an admissible wavelet basis (Lee 1996). Cells of layer 1 receive a topologically consistent, localized, random selection of the filter responses in the input layer, under the constraint that each cell samples every filter spatial frequency and receives a constant number of inputs. The mathematical details of the Gabor filtering are described elsewhere (Rolls 2012a; Rolls and Webb 2014; Webb and Rolls 2014).

Recent developments in VisNet implemented in the research described here

The version of VisNet used in this paper differed from the versions used for most of the research published with VisNet before 2012 (Rolls 2012a) in the following ways. First, Gabor filtering was used here, with a full mathematical description provided here, as compared to the difference of Gaussian filters used earlier. Second, the size of VisNet was increased from the previous neurons per layer to the neurons per layer described here. Third, the steps described in the Method to set the learning rates to values for each layer that encouraged convergence in 20–50 learning epochs were utilized here. Fourth, the method of pattern association decoding described in Sect. 2.8.2 to provide a biologically plausible way of decoding the outputs of VisNet neurons was used in the research described here. Fuller descriptions of the rationale for the design of VisNet, and of alternative more powerful learning rules not used here, are provided elsewhere (Rolls 2008, 2012a; Rolls and Stringer 2001).

HMAX models used for comparison with VisNet

The performance of VisNet was compared against a standard HMAX model (Mutch and Lowe 2008; Serre et al. 2007a, b). We note that an HMAX family model has in the order of 10 million computational units (Serre et al. 2007a), which is at least 100 times the number contained within the current implementation of VisNet (which uses neurons in each of 4 layers, i.e. 65,536 neurons). HMAX has as an ancestor the neocognitron (Fukushima 1980, 1988), which is also a hierarchical network that uses lateral copying of filter analysers within each layer. Both approaches select filter analysers using feedforward processing without a teacher, in contrast to convolutional and deep learning networks (LeCun et al. 2010) which typically use errors from a teacher backpropagated through multiple layers that do not aim for biological plausibility (Rolls 2008, 2016).

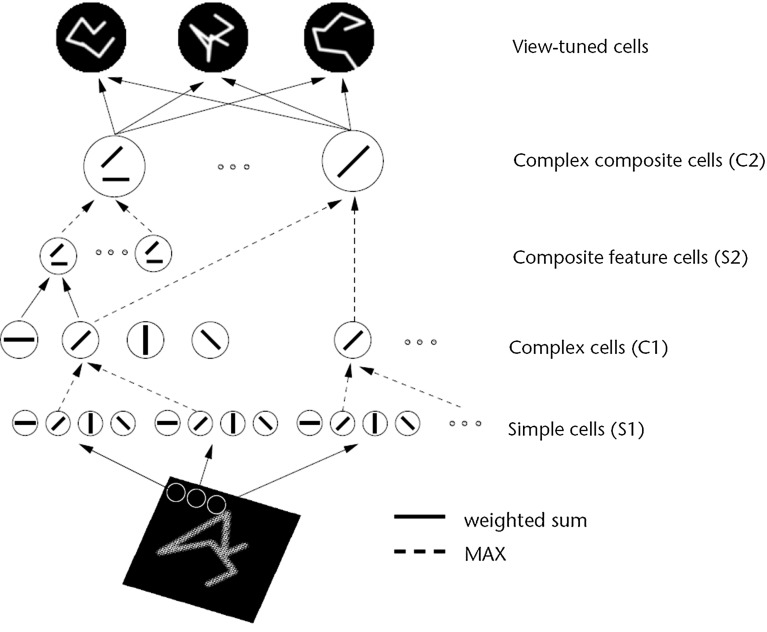

HMAX is a multiple layer system with simple and complex cell layers alternating that sets up connections to simple cells based on randomly chosen exemplars, and a MAX function performed by the complex cells of their simple cell inputs. The inspiration for this architecture Riesenhuber and Poggio (1999) may have come from the simple and complex cells found in V1 by Hubel and Wiesel (1968). A diagram of the model as described by Riesenhuber and Poggio (1999) is shown in Fig. 2. The final complex cell layer is then typically used as an input to a non-biologically plausible support vector machine or least squares computation to perform classification of the representations into object classes. The inputs to both HMAX and VisNet are Gabor-filtered images intended to approximate V1. One difference is that VisNet is normally trained on images generated by objects as they transform in the world, so that view, translation, size, rotation, etc., invariant representations of objects can be learned by the network. In contrast, HMAX is typically trained with large databases of pictures of different exemplars of, for example, hats and beer mugs as in the Caltech databases, which do not provide the basis for invariant representations of objects to be learned, but are aimed at object classification.

Fig. 2.

Sketch of Riesenhuber and Poggio (1999) HMAX model of invariant object recognition. The model includes layers of ‘S’ cells, which perform template matching (solid lines), and ‘C’ cells (solid lines), which pool information by a non-linear MAX function to achieve invariance (see text) (After Riesenhuber and Poggio 1999.)

When assessing the biological plausibility of the output representations of HMAX, we used the implementation of the HMAX model described by Mutch and Lowe (2008) using the code available at http://cbcl.mit.edu/jmutch/cns/index.html#hmax. In this instantiation of HMAX with 2 layers of S–C units, the assessment of performance was typically made using a support vector machine applied to the top layer C neurons. However, that way of measuring performance is not biologically plausible. However, Serre et al. (2007a) took the C2 neurons as corresponding to V4 and following earlier work in which view-tuned units were implemented (Riesenhuber and Poggio 1999) added a set of view-tuned units (VTU) which might be termed an S3 layer which they suggest corresponds to the posterior inferior temporal visual cortex. We implemented these VTUs in the way described by Riesenhuber and Poggio (1999) and Serre et al. (2007a) with an S3 VTU layer, by setting up a moderate number of view-tuned units, each one of which is set to have connection weights to all neurons in the C2 layer that reflect the firing rate of each C2 unit to one exemplar of a class. (This will produce the firing for any VTU that would be produced by one of the training views or exemplars of a class.) The S3 units that we implemented can thus be thought of as representing posterior inferior temporal cortex neurons (Serre et al. 2007a). The VTU output is classified by a one-layer error minimization network, i.e. a perceptron with one neuron for each class.

To ensure that the particular implementation of HMAX that we used for the experiments described in the main text, that of Mutch and Lowe (2008), was not different generically in the results obtained from other implementations of HMAX, we performed further investigations with the version of HMAX described by Serre et al. (2007a), which has 3 S–C layers. The S3 layer is supposed to correspond to posterior inferior temporal visual cortex, and the C3 layer, which is followed by S4 view-tuned units, to anterior inferior temporal visual cortex. The results with this version of HMAX were found to be generically similar in our investigations to those with the version implemented by Mutch and Lowe (2008), and the results with the version described by Serre et al. (2007a) are described in the Supplementary Material. We note that for both these versions of HMAX, the code is available at http://cbcl.mit.edu/jmutch/cns/index.html#hmax and that code defines the details of the architecture and the parameters, which were used unless otherwise stated, and for that reason the details of the HMAX implementations are not considered in great detail here. In the Supplementary Material, we do provide some further information about the HMAX version implemented by Serre et al. (2007a) which we used for the additional investigations reported in the Supplementary Material.

Measures for network performance

Information theory measures

The performance of VisNet was measured by Shannon information-theoretic measures that are identical to those used to quantify the specificity and selectiveness of the representations provided by neurons in the brain (Rolls and Milward 2000; Rolls 2012a; Rolls and Treves 2011). A single cell information measure indicated how much information was conveyed by the firing rates of a single neuron about the most effective stimulus. A multiple cell information measure indicated how much information about every stimulus was conveyed by the firing rates of small populations of neurons and was used to ensure that all stimuli had some neurons conveying information about them.

A neuron can be said to have learnt an invariant representation if it discriminates one set of stimuli from another set, across all transforms. For example, a neuron’s response is translation invariant if its response to one set of stimuli irrespective of presentation is consistently higher than for all other stimuli irrespective of presentation location. Note that we state ‘set of stimuli’ since neurons in the inferior temporal cortex are not generally selective for a single stimulus but rather a subpopulation of stimuli (Baylis et al. 1985; Abbott et al. 1996; Rolls et al. 1997a; Rolls and Treves 1998, 2011; Rolls and Deco 2002; Rolls 2007; Franco et al. 2007; Rolls 2008). We used measures of network performance (Rolls and Milward 2000) based on information theory and similar to those used in the analysis of the firing of real neurons in the brain (Rolls 2008; Rolls and Treves 2011). A single cell information measure was introduced which is the maximum amount of information the cell has about any one object independently of which transform (here position on the retina and view) is shown. Because the competitive algorithm used in VisNet tends to produce local representations (in which single cells become tuned to one stimulus or object), this information measure can approach bits, where is the number of different stimuli. Indeed, it is an advantage of this measure that it has a defined maximal value, which enables how well the network is performing to be quantified. Rolls and Milward (2000) also introduced a multiple cell information measure used here, which has the advantage that it provides a measure of whether all stimuli are encoded by different neurons in the network. Again, a high value of this measure indicates good performance.

For completeness, we provide further specification of the two information-theoretic measures, which are described in detail by Rolls and Milward (2000) (see Rolls 2008 and Rolls and Treves 2011 for an introduction to the concepts). The measures assess the extent to which either a single cell or a population of cells responds to the same stimulus invariantly with respect to its location, yet responds differently to different stimuli. The measures effectively show what one learns about which stimulus was presented from a single presentation of the stimulus at any randomly chosen transform. Results for top (4th) layer cells are shown. High information measures thus show that cells fire similarly to the different transforms of a given stimulus (object) and differently to the other stimuli. The single cell stimulus-specific information, I(s, R), is the amount of information the set of responses, R, has about a specific stimulus, s (see Rolls et al. 1997b and Rolls and Milward 2000). I(s, R) is given by

| 7 |

where r is an individual response from the set of responses R of the neuron. For each cell the performance measure used was the maximum amount of information a cell conveyed about any one stimulus. This (rather than the mutual information, I(S, R) where S is the whole set of stimuli s) is appropriate for a competitive network in which the cells tend to become tuned to one stimulus. (I(s, R) has more recently been called the stimulus-specific surprise (DeWeese and Meister 1999; Rolls and Treves 2011). Its average across stimuli is the mutual information I(S, R).)

If all the output cells of VisNet learned to respond to the same stimulus, then the information about the set of stimuli S would be very poor and would not reach its maximal value of of the number of stimuli (in bits). The second measure that is used here is the information provided by a set of cells about the stimulus set, using the procedures described by Rolls et al. (1997a) and Rolls and Milward (2000). The multiple cell information is the mutual information between the whole set of stimuli S and of responses R calculated using a decoding procedure in which the stimulus that gave rise to the particular firing rate response vector on each trial is estimated. (The decoding step is needed because the high dimensionality of the response space would lead to an inaccurate estimate of the information if the responses were used directly, as described by Rolls et al. 1997a and Rolls and Treves 1998.) A probability table is then constructed of the real stimuli s and the decoded stimuli . From this probability table, the mutual information between the set of actual stimuli S and the decoded estimates is calculated as

| 8 |

This was calculated for the subset of cells which had as single cells the most information about which stimulus was shown. In particular, in Rolls and Milward (2000) and subsequent papers, the multiple cell information was calculated from the first five cells for each stimulus that had maximal single cell information about that stimulus, that is, from a population of 35 cells if there were seven stimuli (each of which might have been shown in, for example, 9 or 25 positions on the retina).

Pattern association decoding

In addition, the performance was measured by a biologically plausible one-layer pattern association network using an associative synaptic modification rule. There was one output neuron for each class (which was set to a firing rate of 1.0 during training of that class but was otherwise 0.0) and 10 input neurons per class to the pattern associator. These 10 neurons for each class were the most selective neurons in the output layer of VisNet or HMAX to each object. The most selective output neurons of VisNet and HMAX were identified as those with the highest mean firing rate to all transforms of an object relative to the firing rates across all transforms of all objects and a high corresponding stimulus-specific information value for that class. Performance was measured as the per cent correct object classification measured across all views of all objects.

The output of the inferior temporal visual cortex reaches structures such as the orbitofrontal cortex and amygdala, where associations to other stimuli are learned by a pattern association network with an associative (Hebbian) learning rule (Rolls 2008, 2014). We therefore used a one-layer pattern association network (Rolls 2008) to measure how well the output of VisNet could be classified into one of the objects. The pattern association network had one output neuron for each object or class. The inputs were the 10 neurons from layer 4 of VisNet for each of the objects with the best single cell information and high firing rates. For HMAX, the inputs were the 10 neurons from the C2 layer (or from 5 of the view-tuned units) for each of the objects with the highest mean firing rate for the class when compared to the firing rates over all the classes. The network was trained with the Hebb rule:

| 9 |

where is the change of the synaptic weight that results from the simultaneous (or conjunctive) presence of presynaptic firing and postsynaptic firing or activation , and is a learning rate constant that specifies how much the synapses alter on any one pairing. The pattern associator was trained for one trial on the output of VisNet produced by every transform of each object.

Performance on the training or test images was tested by presenting an image to VisNet and then measuring the classification produced by the pattern associator. Performance was measured by the percentage of the correct classifications of an image as the correct object.

This approach to measuring the performance is very biologically appropriate, for it models the type of learning thought to be implemented in structures that receive information from the inferior temporal visual cortex such as the orbitofrontal cortex and amygdala (Rolls 2008, 2014). The small number of neurons selected from layer 4 of VisNet might correspond to the most selective for this stimulus set in a sparse distributed representation (Rolls 2008; Rolls and Treves 2011). The method would measure whether neurons of the type recorded in the inferior temporal visual cortex with good view and position invariance are developed in VisNet. In fact, an appropriate neuron for an input to such a decoding mechanism might have high firing rates to all or most of the view and position transforms of one of the stimuli, and smaller or no responses to any of the transforms of other objects, as found in the inferior temporal cortex for some neurons (Hasselmo et al. 1989; Perrett et al. 1991; Booth and Rolls 1998), and as found for VisNet layer 4 neurons (Rolls and Webb 2014). Moreover, it would be inappropriate to train a device such as a support vector machine or even an error correction perceptron on the outputs of all the neurons in layer 4 of VisNet to produce 4 classifications, for such learning procedures, not biologically plausible (Rolls 2008), could map the responses produced by a multilayer network with untrained random weights to obtain good classifications.

Results

Categorization of objects from benchmark object image sets: Experiment 1

The performance of HMAX and VisNet was compared on a test that has been used to measure the performance of HMAX (Mutch and Lowe 2008; Serre et al. 2007a, b) and indeed typical of many approaches in computer vision, the use of standard datasets such as the CalTech-256 (Griffin et al. 2007) in which sets of images from different object classes are to be classified into the correct object class.

Object benchmark database

The Caltech-256 dataset (Griffin et al. 2007) is comprised of 256 object classes made up of images that have many aspect ratios and sizes and differ quite significantly in quality (having being manually collated from web searches). The objects within the images show significant intra-class variation and have a variety of poses, illumination, scale, and occlusion as expected from natural images (see examples in Fig. 3). In this sense, the Caltech-256 database has been considered to be a difficult challenge to object recognition systems. We come to the conclusion below that the benchmarking approach with this type of dataset is not useful for training a system that must learn invariant object representations. The reason for this is that the exemplars of each object class in the CalTech-256 dataset are too discontinuous to provide a basis for learning transform-invariant object representations. For example, the image exemplars within an object class in these datasets may be very different indeed.

Fig. 3.

Example images from the Caltech256 database for two object classes, hats and beer mugs

Performance on a Caltech-256 test

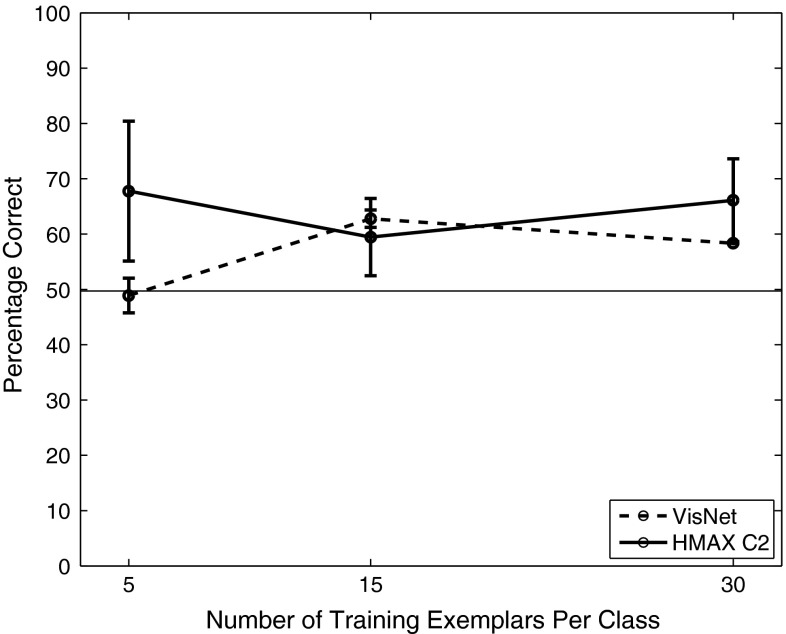

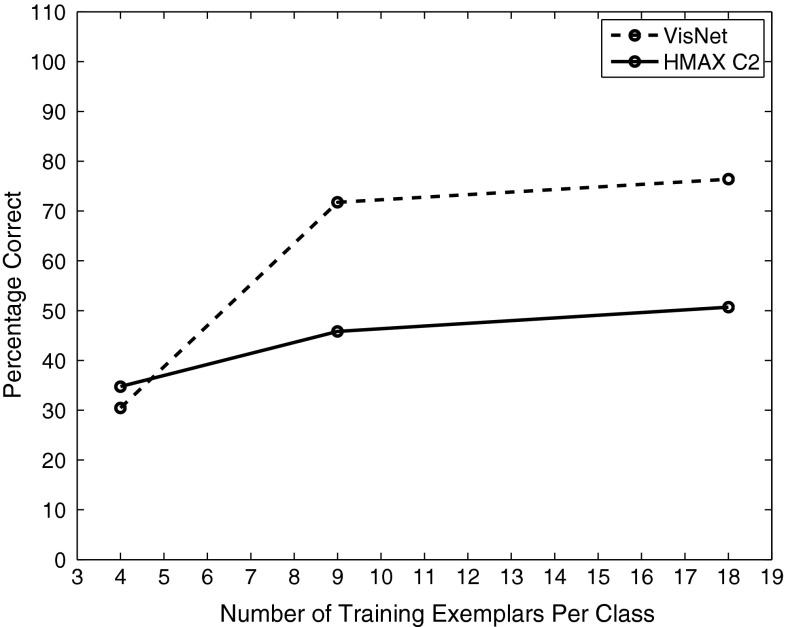

VisNet and the HMAX model were trained to discriminate between two object classes from the Caltech-256 database, the beer mugs and cowboy-hat (see examples in Fig. 3). The images in each class were rescaled to and converted to grayscale, so that shape recognition was being investigated. The images from each class were randomly partitioned into training and testing sets with performance measured in this cross-validation design over multiple random partitions. Figure 4 shows the performance of the VisNet and HMAX models when performing the task with these exemplars of the Caltech-256 dataset. Performance of HMAX and VisNet on the classification task was measured by the proportion of images classified correctly using a linear support vector machine (SVM) on all the C2 cells in HMAX [chosen as the way often used to test the performance of HMAX (Mutch and Lowe 2008; Serre et al. 2007a, b)] and on all the layer 4 (output layer) cells of VisNet. The error bars show the standard deviation of the means over three cross-validation trials with different images chosen at random for the training set and test set on each trial. The number of training exemplars is shown on the abscissa. There were 30 test examples of each object class. Chance performance at 50 % is indicated. Performance of HMAX and VisNet was similar, but was poor, probably reflecting the fact that there is considerable variation of the images within each object class, making the cross-validation test quite difficult. The nature of the performance of HMAX and VisNet on this task is assessed in the next section.

Fig. 4.

Performance of HMAX and VisNet on the classification task (measured by the proportion of images classified correctly) using the Caltech-256 dataset and linear support vector machine (SVM) classification. The error bars show the standard deviation of the means over three cross-validation trials with different images chosen at random for the training set on each trial. There were two object classes, hats and beer mugs, with the number of training exemplars shown on the abscissa. There were 30 test examples of each object class. All cells in the C2 layer of HMAX and layer 4 of Visnet were used to measure the performance. Chance performance at 50 % is indicated

Biological plausibility of the neuronal representations of objects that are produced

In the temporal lobe visual cortical areas, neurons represent which object is present using a sparse distributed representation (Rolls and Treves 2011). Neurons typically have spontaneous firing rates of a few spikes/s and increase their firing rates to 30–100 spikes/s for effective stimuli. Each neuron responds with a graded range of firing rates to a small proportion of the stimuli in what is therefore a sparse representation (Rolls and Tovee 1995; Rolls et al. 1997b). The information can be read from the firing of single neurons about which stimulus was shown, with often 2–3 bits of stimulus-specific information about the most effective stimulus (Rolls et al. 1997b; Tovee et al. 1993). The information from different neurons increases approximately linearly with the number of neurons recorded (up to approximately 20 neurons), indicating independent encoding by different neurons (Rolls et al. 1997a). The information from such groups of responsive neurons can be easily decoded (using, for example, dot product decoding utilizing the vector of firing rates of the neurons) by a pattern association network (Rolls et al. 1997a; Rolls 2008, 2012a; Rolls and Treves 2011). This is very important for biological plausibility, for the next stage of processing, in brain regions such as the orbitofrontal cortex and amygdala, contains pattern association networks that associate the outputs of the temporal cortex visual areas with stimuli such as taste (Rolls 2008, 2014).

We therefore compared VisNet and HMAX in the representations that they produce of objects, to analyse whether they produce these types of representation, which are needed for biological plausibility. We note that the usual form of testing for VisNet does involve the identical measures used to measure the information present in the firing of temporal cortex neurons with visual responses (Rolls and Milward 2000; Rolls 2012a; Rolls et al. 1997a, b). On the other hand, the output of HMAX is typically read and classified by a powerful and artificial support vector machine (Mutch and Lowe 2008; Serre et al. 2007a, b), so it is necessary to test its output with the same type of biologically plausible neuronal firing rate decoding used by VisNet. Indeed, the results shown in Sect. 3.1.2 were obtained with support vector machine decoding used for both HMAX and VisNet. In this section, we analyse the firing rate representations produced by VisNet and HMAX, to assess the biological plausibility of their output representations. The information measurement procedures are described in Sect. 2.8, and in more detail elsewhere (Rolls and Milward 2000; Rolls 2012a; Rolls et al. 1997a, b).

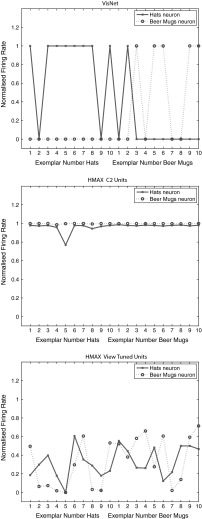

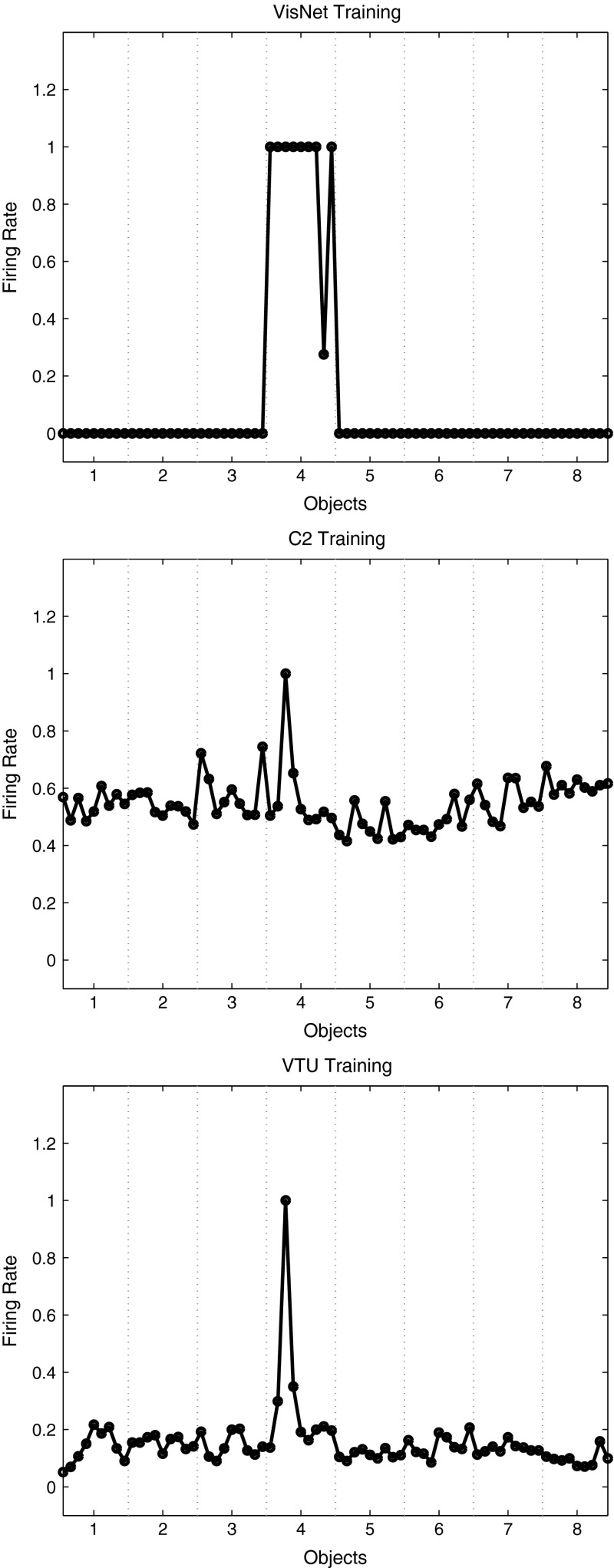

Figure 5 Upper shows the firing rates of two VisNet neurons for the test set, in the experiment with the Caltech-256 dataset using two object classes, beer mugs and hats, when trained on 50 exemplars of each class, and then tested in a cross-validation design with 10 test exemplars of each class that had not been seen during training.

Fig. 5.

Top firing rate of two output layer neurons of VisNet, when tested on two of the classes, hats and beer mugs, from the Caltech 256. The firing rates to 10 untrained (i.e. testing) exemplars of each of the two classes are shown. One of the neurons responded more to hats than to beer mugs (solid line). The other neuron responded more to beer mugs than to hats (dashed line). Middle firing rate of two C2 tuned units of HMAX when tested on two of the classes, beer mugs and hats, from the Caltech 256. Bottom firing rate of a view-tuned unit of HMAX when tested on two of the classes, hats (solid line) and beer mugs (dashed line), from the Caltech 256. The neurons chosen were those with the highest single cell information that could be decoded from the responses of a neuron to 10 exemplars of each of the two objects (as well as a high firing rate) in the cross-validation design

For the testing (untrained, cross validation) set of exemplars, one of the neurons responded with a high rate to 8 of the 10 untrained exemplars of one class (hats) and to 1 of the exemplars of the other class (beer mugs). The single cell information was 0.38 bits. The other neuron responded to 5 exemplars of the beer mugs class and to no exemplars of the hats class, and its single cell information was 0.21 bits. The mean stimulus-specific single cell information across the 5 most informative cells for each class was 0.28 bits.

The results for the cross-validation testing mode shown in Fig. 5(upper) thus show that VisNet can learn about object classes and can perform reasonable classification of untrained exemplars. Moreover, these results show that VisNet can do this using simple firing rate encoding of its outputs, which might potentially be decoded by a pattern associator. To test this, we trained a pattern association network on the output of VisNet to compare with the support vector machine results shown in Fig. 4. With 30 training exemplars, classification using the best 10 neurons for each class was 61.7 % correct, compared to chance performance of 50 % correct.

Figure 5 (middle) shows two neurons in the C2 and (bottom) two neurons in the view-tuned unit layer of HMAX on the test set of 10 exemplars of each class in the same task. It is clear that the C2 neurons both responded to all 10 untrained exemplars of both classes, with high firing rates to almost presented images. The normalized mean firing rate of one of the neurons was 0.905 to the beer mugs and 0.900 to the hats. We again used a pattern association network on the output of HMAX C2 neurons to compare with the support vector machine results shown in Fig. 4. With 30 training exemplars, classification using the best 10 neurons for each class was 63 % correct, compared to chance performance of 50 % correct. When biologically plausible decoding by an associative pattern association network is used, the performance of HMAX is poorer than when the performance of HMAX is measured with powerful least squares classification. The mean stimulus-specific single cell information across the 5 most informative cells for each class was 0.07 bits. This emphasizes that the output of HMAX is not in a biologically plausible form.

The relatively poor performance of VisNet (which produces a biologically plausible output), and of HMAX when its performance is measured in a biologically plausible way, raises the point that training with a diverse sets of exemplars of an object class as in the Caltech dataset is not a very useful way to test object recognition networks of the type found in the brain. Instead, the brain produces view-invariant representations of objects, using information about view invariance simply not present in the Caltech type of dataset, because it does not provide training exemplars shown with different systematic transforms (position over up to 70, size, rotation and view) for transform invariance learning. In the next experiment, we therefore investigated the performance of HMAX and VisNet with a dataset in which different views of each object class are provided, to compare how HMAX and VisNet perform on this type of problem.

Figure 5 (bottom) shows the firing rates of two view-tuned layer units of HMAX. It is clear that the view-tuned neurons had lower firing rates (and this is just a simple function of the value chosen for , which in this case was 1), but that again the firing rates differed little between the classes. For example, the mean firing rate of one of the VTU neurons to the beer mugs was 0.3 and to the hats was 0.35. The single cell stimulus-specific information measures were 0.28 bits for the hats neuron and 0.24 bits for the beer mugs neuron. The mean stimulus-specific single cell information across the 5 most informative VTUs for each class was 0.10 bits.

We note that if the VTU layer was classified with a least squares classifier (i.e. a perceptron, which is not biologically plausible, but is how the VTU neurons were decoded by Serre et al. 2007a), then performance was at 67 %. (With a pattern associator, the performance was 66 % correct.) Thus the performance of the VTU outputs (introduced to make the HMAX outputs otherwise of C neuron appear more biologically plausible) was poor on this type of CalTech-256 problem when measured both by a linear classifier and by a pattern association network.

Figure 1 of the Supplementary Material shows that similar results were obtained for the HMAX implementation by Serre et al. (2007a).

Evaluation of categorization when tested with large numbers of images presented randomly

The benchmark type of test using large numbers of images of different object classes presented in random sequence has limitations, in that an object can look quite different from different views. Catastrophic changes in the image properties of objects can occur as they are rotated through different views (Koenderink 1990). One example is that any view from above a cup into the cup that does not show the sides of the cup may look completely different from any view where some of the sides or bottom of the cup are shown. In this situation, training any network with images presented in a random sequence (i.e. without a classification label for each image) is doomed to failure in view-invariant object recognition. This applies to all such approaches that are unsupervised and that attempt to categorize images into objects based on image statistics. If a label for its object category is used for each image during training, this may help to produce good classification, but is very subject to over-fitting, in which small pixel changes in an image that do not affect which object it is interpreted as by humans may lead to it being misclassified (Krizhevsky et al. 2012; Szegedy et al. 2014).

In contrast, the training of VisNet is based on the concept that the transforms of an object viewed from different angles in the natural world provide the information required about the different views of an object to build a view-invariant representation and that this information can be linked together by the continuity of this process in time. Temporal continuity (Rolls 2012a) or even spatial continuity (Stringer et al. 2006; Perry et al. 2010) and typically both (Perry et al. 2006) provide the information that enables different images of an object to be associated together. Thus two factors, continuity of the image transforms as the object transforms through different views, and a principle of spatio-temporal closeness to provide a label of the object based on its property of spatio-temporal continuity, provide a principled way for VisNet, and it is proposed for the real visual system of primates including humans, to build invariant representations of objects (Rolls 1992, 2008, 2012a). This led to Experiment 2.

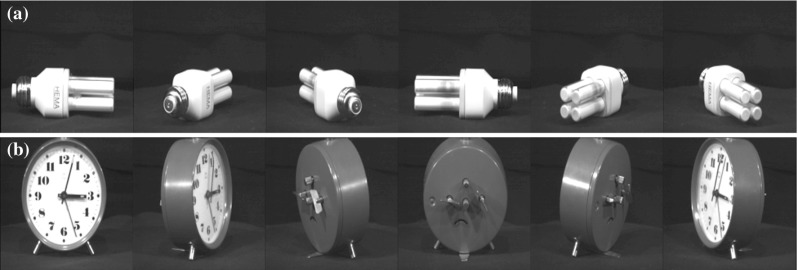

Performance with the Amsterdam library of images: Experiment 2

Partly because of the limitations of the Caltech-256 database for training in invariant object recognition, we also investigated training with the Amsterdam Library of Images (ALOI) database (Geusebroek et al. 2005) (http://staff.science.uva.nl/~aloi/). The ALOI database takes a different approach to the Caltech-256 and instead of focussing on a set of natural images within an object category or class, provides images of objects with a systematic variation of pose and illumination for 1000 small objects. Each object is placed onto a turntable and photographed in consistent conditions at increments, resulting in a set of images that not only show the whole object (with regard to out of plane rotations), but does so with some continuity from one image to the next (see examples in Fig. 6).

Fig. 6.

Example images from the two object classes within the ALOI database, a 293 (light bulb) and b 156 (clock). Only the increments are shown

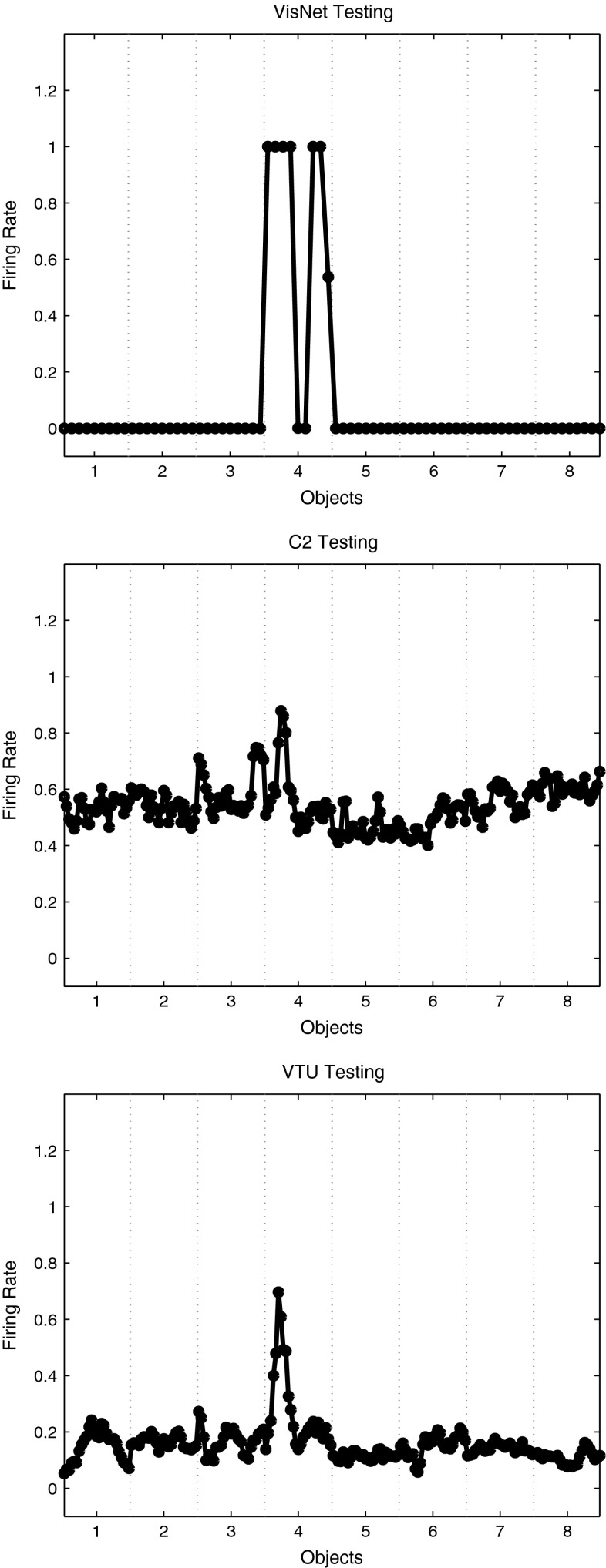

Eight classes of object (with designations 156, 203, 234, 293, 299, 364, 674, 688) from the dataset were chosen (see Fig. 6 for examples). Each class or object comprises of 72 images taken at increments through the full horizontal plane of rotation. Three sets of training images were used as follows. The training set consisted of 4 views of each object spaced apart; 9 views spaced apart; or 18 views spaced apart. The test set of images was in all cases a cross-validation set of 18 views of each object spaced apart and offset by from the training set with 18 views and not including any training view. The aim of using the different training sets was to investigate how close in viewing angle the training images need to be and also to investigate the effects of using different numbers of training images. The performance was measured with a pattern association network with one neuron per object and 10 inputs for each class that were the most selective neurons for an object in the output layer of VisNet or the C2 layer of HMAX. The best cells of VisNet or HMAX for a class were selected as those with the highest mean rate across views to the members of that class relative to the firing rate to all views of all objects and with a high stimulus-specific information for that class.

Figure 7 shows (measuring performance with a pattern associator trained on the 10 best cells for each of the 8 classes) that VisNet performed moderately well as soon as there were even a few training images, with the coding of its outputs thus shown to be suitable for learning by a pattern association network. In a statistical control, we found that an untrained VisNet performed at 18 % correct when measured with the pattern association network compared with the 73 % correct after training with 9 exemplars that is shown in Fig. 7. HMAX performed less well than VisNet. There was some information in the output of the HMAX C2 neurons, for if a powerful linear support vector machine (SVM) was used across all output layer neurons, the performance in particular for HMAX improved, with 78 % correct for 4 training views and 93 % correct for 9 training views and 92 % correct for 18 training views (which in this case was also achieved by VisNet).

Fig. 7.

Performance of VisNet and HMAX C2 units measured by the percentage of images classified correctly on the classification task with 8 objects using the Amsterdam Library of Images dataset and measurement of performance using a pattern association network with one output neuron for each class. The training set consisted of 4 views of each object spaced apart; or 9 views spaced apart; or 18 views spaced apart. The test set of images was in all cases a cross-validation set of 18 views of each object spaced apart and offset by from the training set with 18 views and not including any training view. The 10 best cells from each class were used to measure the performance. Chance performance was 12.5 % correct

What VisNet can do here is to learn view-invariant representations using its trace learning rule to build feature analysers that reflect the similarity across at least adjacent views of the training set. Very interestingly, with 9 training images, the view spacing of the training images was , and the test images in the cross-validation design were the intermediate views, away from the nearest trained view. This is promising, for it shows that enormous numbers of training images with many different closely spaced views are not necessary for VisNet. Even 9 training views spaced apart produced reasonable training.

We next compared the outputs produced by VisNet and HMAX, in order to assess their biological plausibility. Figure 8 Upper shows the firing rate of one output layer neuron of VisNet, when trained on 8 objects from the Amsterdam Library of Images, with 9 exemplars of each object with views spaced apart (set 2 described above). The firing rates on the training set are shown. The neuron responded to all 9 views of object 4 (a light bulb) and to no views of any other object. The neuron illustrated was chosen to have the highest single cell stimulus-specific information about object 4 that could be decoded from the responses of a neuron to the 9 exemplars of object 4 (as well as a high firing rate). That information was 3 bits. The mean stimulus-specific single cell information across the 5 most informative cells for each class was 2.2 bits. Figure 8 Middle shows the firing rate of one C2 unit of HMAX when trained on the same set of images. The unit illustrated was that with the highest mean firing rate across views to object 4 relative to the firing rates across all stimuli and views. The neuron responded mainly to one of the 9 views of object 4, with a small response to 2 nearby views. The neuron provided little information about object 4, even though it was the most selective unit for object 4. Indeed, the single cell stimulus-specific information for this C2 unit was 0.68 bits. The mean stimulus-specific single cell information across the 5 most informative C2 units for each class was 0.28 bits. Figure 8 Bottom shows the firing rate of one VTU of HMAX when trained on the same set of images. The unit illustrated was that with the highest firing rate to one view of object 4. Small responses can also be seen to view 2 of object 4 and to view 9 of object 4, but apart from this, most views of object 4 were not discriminated from the other objects. The single cell stimulus-specific information for this VTU was 0.28 bits. The mean stimulus-specific single cell information across the 5 most informative VTUs for each class was 0.67 bits.

Fig. 8.

Top firing rate of one output layer neuron of VisNet, when trained on 8 objects from the Amsterdam Library of Images, with 9 views of each object spaced apart. The firing rates on the training set are shown. The neuron responded to all 9 views of object 4 (a light bulb) and to no views of any other object. The neuron illustrated was chosen to have the highest single cell stimulus-specific information about object 4 that could be decoded from the responses of the neurons to all 72 exemplars shown, as well as a high firing rate to object 4. Middle firing rate of one C2 unit of HMAX when trained on the same set of images. The unit illustrated was that the highest mean firing rate across views to object 4 relative to the firing rates across all stimuli and views. Bottom firing rate of one view-tuned unit (VTU) of HMAX when trained on the same set of images. The unit illustrated was that the highest firing rate to one view of object 4

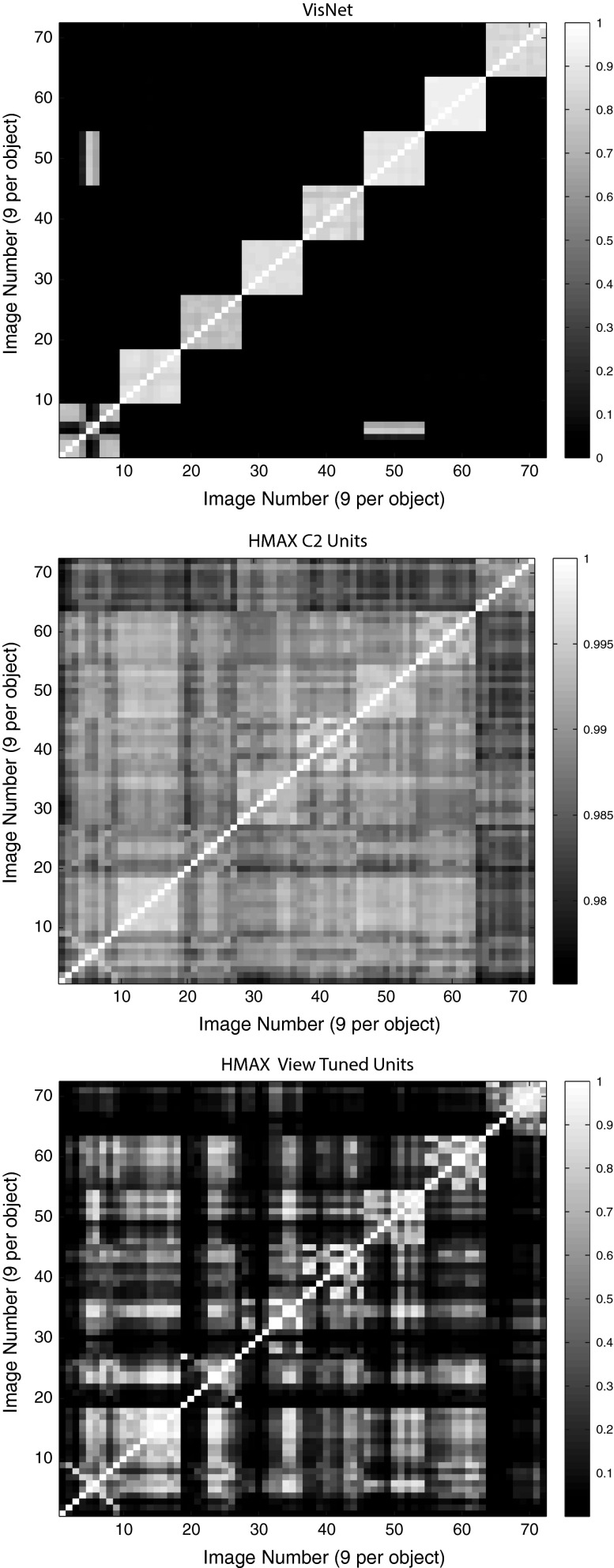

The stimulus-specific single unit information measures show that the neurons of VisNet have much information in their firing rates about which object has been shown, whereas there is much less information in the firing rates of HMAX C2 units or view-tuned units. The firing rates for different views of an object are highly correlated for VisNet, but not for HMAX. This is further illustrated in Fig. 10, which shows the similarity between the outputs of the networks between the 9 different views of 8 objects produced by VisNet (top), HMAX C2 (middle), and HMAX VTUs (bottom) for the Amsterdam Library of Images test. Each panel shows a similarity matrix (based on the cosine of the angle between the vectors of firing rates produced by each object) between the 8 stimuli for all output neurons of each network. The maximum similarity is 1, and the minimal similarity is 0. The results are from the simulations with 9 views of each object spaced apart during training, with the testing results illustrated for the 9 intermediate views from the nearest trained view. For VisNet (top), it is shown that the correlations measured across the firing rates of all output neurons are very similar for all views of each object (apart from 2 views of object 1) and that the correlations with all views of every other object are close to 0.0. For HMAX C2 units, the situation is very different, with the outputs to all views of all objects being rather highly correlated, with a minimum correlation of 0.975. In addition, the similarity of the outputs produced by the different views of any given object is little more than the similarity with the views of other objects. This helps to emphasize the point that the firing within HMAX does not reflect well even a view of one object as being very different from the views of another object, let alone that different views of the same object produce similar outputs. This emphasizes that for HMAX to produce measurably reasonable performance, most of the classification needs to be performed by a powerful classifier connected to the outputs of HMAX, not by HMAX itself. The HMAX VTU firing (bottom) was more sparse ( was 1.0), but again the similarities between objects are frequently as great as the similarities within objects.

Fig. 10.

Similarity between the outputs of the networks between the 9 different views of 8 objects produced by VisNet (top), HMAX C2 (middle), and HMAX VTUs (bottom) for the Amsterdam Library of Images test. Each panel shows a similarity matrix (based on the cosine of the angle between the vectors of firing rates produced by each object) between the 8 stimuli for all output neurons of each type. The maximum similarity is 1, and the minimal similarity is 0

Figure 2 of the Supplementary Material shows that similar results were obtained for the HMAX implementation by Serre et al. (2007a).

Experiment 2 thus shows that with the ALOI training set, VisNet can form separate neuronal representations that respond to all exemplars of each of 8 objects seen in different view transforms and that single cells can provide perfect information from their firing rates to any exemplar about which object is being presented. The code can be read in a biologically plausible way with a pattern association network, which achieved 77 % correct on the cross-validation set. Moreover, with training views spaced apart, VisNet performs moderately well (72 % correct) on the intermediate views ( away from the nearest training view) (Fig. 9 Top). In contrast, C2 output units of HMAX discriminate poorly between the object classes (Fig. 9 Middle), view-tuned units of HMAX respond only to test views that are away from the training view, and the performance of HMAX tested with a pattern associator is correspondingly poor.

Fig. 9.

Top firing rate during cross-validation testing of one output layer neuron of VisNet, when trained on 8 objects from the Amsterdam Library of Images, with 9 exemplars of each object with views spaced apart. The firing rates on the cross-validation testing set are shown. The neuron was selected to respond to all views of object 4 of the training set, and as shown responded to 7 views of object 4 in the test set each of which was from the nearest training view and to no views of any other object. Middle firing rate of one C2 unit of HMAX when tested on the same set of images. The neuron illustrated was that the highest mean firing rate across training views to object 4 relative to the firing rates across all stimuli and views. The test images were away from the test images. Bottom firing rate of one view-tuned unit (VTU) of HMAX when tested on the same set of images. The neuron illustrated was that the highest firing rate to one view of object 4 during training. It can be seen that the neuron responded with a rate of 0.8 to the two training images (1 and 9) of object 4 that were away from the image for which the VTU had been selected

Effects of rearranging the parts of an object: Experiment 3

Rearranging parts of an object can disrupt identification of the object, while leaving low-level features still present. Some face-selective neurons in the inferior temporal visual cortex do not respond to a face if its parts (e.g. eyes, nose, and mouth) are rearranged, showing that these neurons encode the whole object and do not respond just to the features or parts (Rolls et al. 1994; Perrett et al. 1982). Moreover, these neurons encode identity in that they respond differently to different faces (Baylis et al. 1985; Rolls et al. 1997a, b; Rolls and Treves 2011). We note that some other neurons in the inferior temporal visual cortex respond to parts of faces such as eyes or mouth (Perrett et al. 1982; Issa and DiCarlo 2012), consistent with the hypothesis that the inferior temporal visual cortex builds configuration-specific whole face or object representations from their parts, helped by feature combination neurons learned at earlier stages of the ventral visual system hierarchy (Rolls 1992, 2008, 2012a, 2016) (Fig. 1).

To investigate whether neurons in the output layers of VisNet and HMAX can encode the identity of whole objects and faces (as distinct from their parts, low-level features, etc.), we tested VisNet and HMAX with normal faces and with faces with their parts scrambled. We used 8 faces from the ORL database of faces (http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html) each with 5 exemplars of different views, as illustrated in Fig. 11. The scrambling was performed by taking quarters of each face and making 5 random permutations of the positions of each quarter. The procedure was to train on the set of unscrambled faces and then to test how the neurons that responded best to each face then responded when the scrambled versions of the faces were shown, using randomly scrambled versions of the same eight faces each with the same set of 5 view exemplars.

Fig. 11.

Examples of images used in the scrambled faces experiment. Top two of the 8 faces in 2 of the 5 views of each. Bottom examples of the scrambled versions of the faces

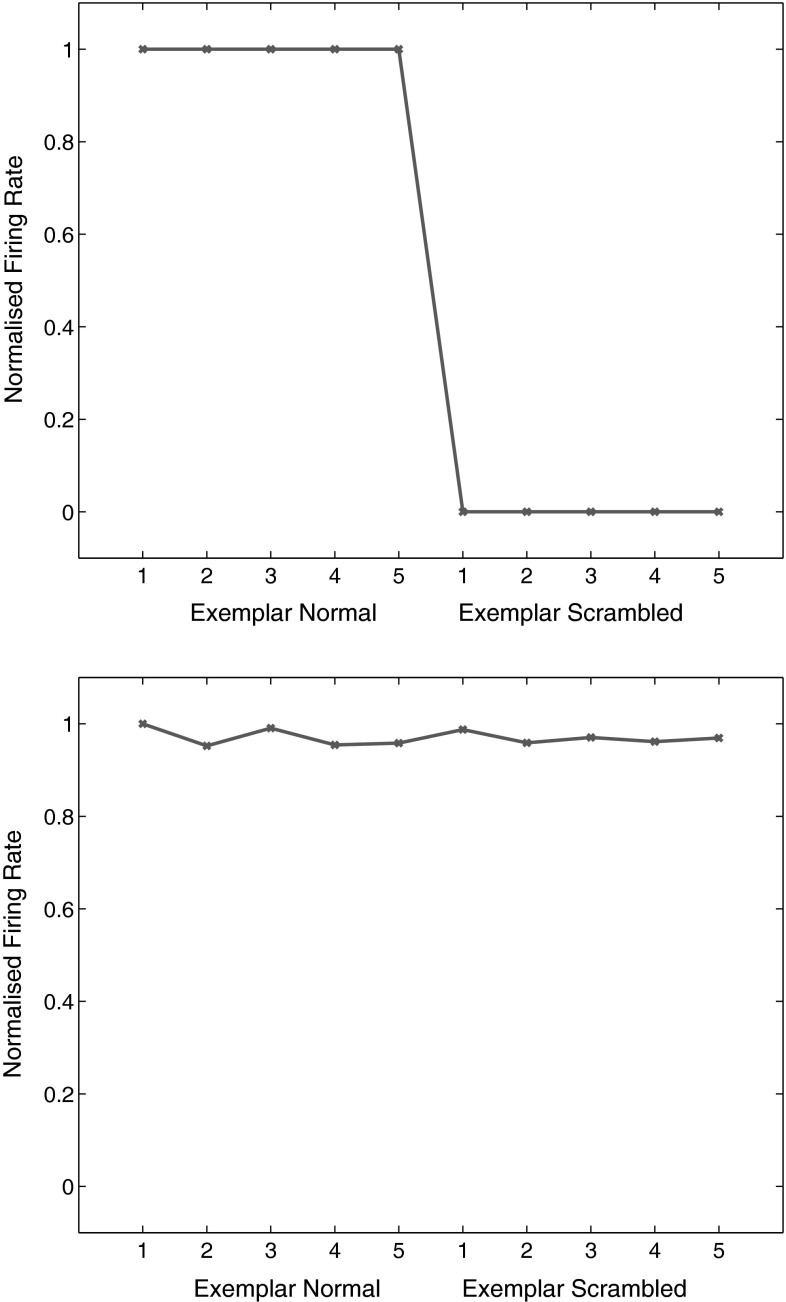

VisNet was trained for 20 epochs and performed 100 % correct on the training set. When tested with the scrambled faces, performance was at chance, 12.5 % correct, with 0.0 bits of multiple cell information using the 5 best cells for each class. An example of a VisNet layer 4 neuron that responded to one of the faces after training is shown in Fig. 12 top. The neuron responded to all the different view exemplars of the unscrambled face (and to no other faces in the training set). When the same neuron was then tested with the randomly scrambled versions of the same face stimuli, the firing rate was zero. In contrast, HMAX neurons did not show a reduction in their activity when tested with the same scrambled versions of the stimuli. This is illustrated in Fig. 12 bottom, in which the responses of a view-tuned neuron (selected as the neuron with most selectivity between faces, and a response to exemplar 1 of one of the non-scrambled faces) were with similarly high firing rates to the scrambled versions of the same set of exemplars. Similar results were obtained for the HMAX implementation by Serre et al. (2007a) as shown in the Supplementary Material.

Fig. 12.

Top effect of scrambling on the responses of a neuron in VisNet. This VisNet layer 4 neuron responded to one of the faces after training and to none of the other 7 faces. The neuron responded to all the different view exemplars 1–5 of the unscrambled face (exemplar normal). When the same neuron was then tested with the randomly scrambled versions of the same face stimuli (exemplar scrambled), the firing rate was zero. Bottom effect of scrambling on the responses of a neuron in HMAX. This view-tuned neuron of HMAX was chosen to be as discriminating between the 8 face identities as possible. The neuron responded to all the different view exemplars 1–5 of the unscrambled face. When the same neuron was then tested with the randomly scrambled versions of the same face stimuli, the neuron responded with similarly high rates to the scrambled stimuli

This experiment provides evidence that VisNet learns shape-selective responses that do not occur when the shape information is disrupted by scrambling. In contrast, HMAX must have been performing its discrimination between the faces based not on the shape information about the face that was present in the images, but instead on some lower-level property such as texture or feature information that was still present in the scrambled images. Thus VisNet performs with scrambled images in a way analogous to that of neurons in the inferior temporal visual cortex (Rolls et al. 1994).

The present result with HMAX is a little different from that reported by Riesenhuber and Poggio (1999) where some decrease in the responses of neurons in HMAX was found after scrambling. We suggest that the difference is that in the study by Riesenhuber and Poggio (1999), the responses were not of natural objects or faces, but were simplified paper-clip types of image, in which the degree of scrambling used would (in contrast to scrambling natural objects) leave little feature or texture information that may normally have a considerable effect on the responses of neurons in HMAX.

View-invariant object recognition: Experiment 4

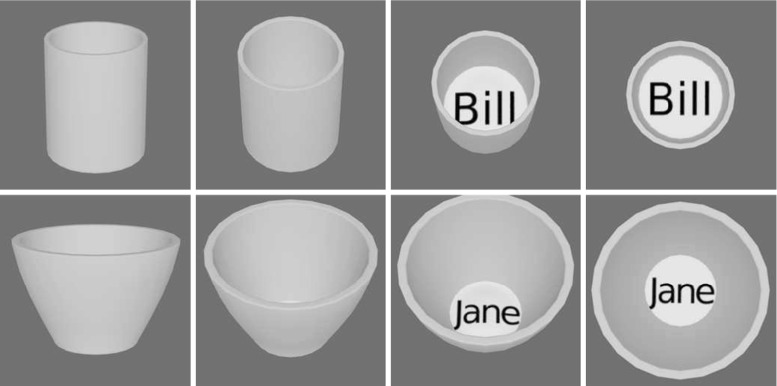

Some objects look different from different views (i.e. the images are quite different from different views), yet we can recognize the object as being the same from the different views. Further, some inferior temporal visual cortex neurons respond with view-invariant object representations, in that they respond selectively to some objects or faces independently of view using a sparse distributed representation (Hasselmo et al. 1989; Booth and Rolls 1998; Logothetis et al. 1995; Rolls 2012a; Rolls and Treves 2011). An experiment was designed to compare how VisNet and HMAX operate in view-invariant object recognition, by testing both on a problem in which objects had different image properties in different views. The prediction is that VisNet will be able to form by learning neurons in its output layer that respond to all the different views of one object and to none of the different views of another object, whereas HMAX will not form neurons that encode objects, but instead will have its outputs dominated by the statistics of the individual images.

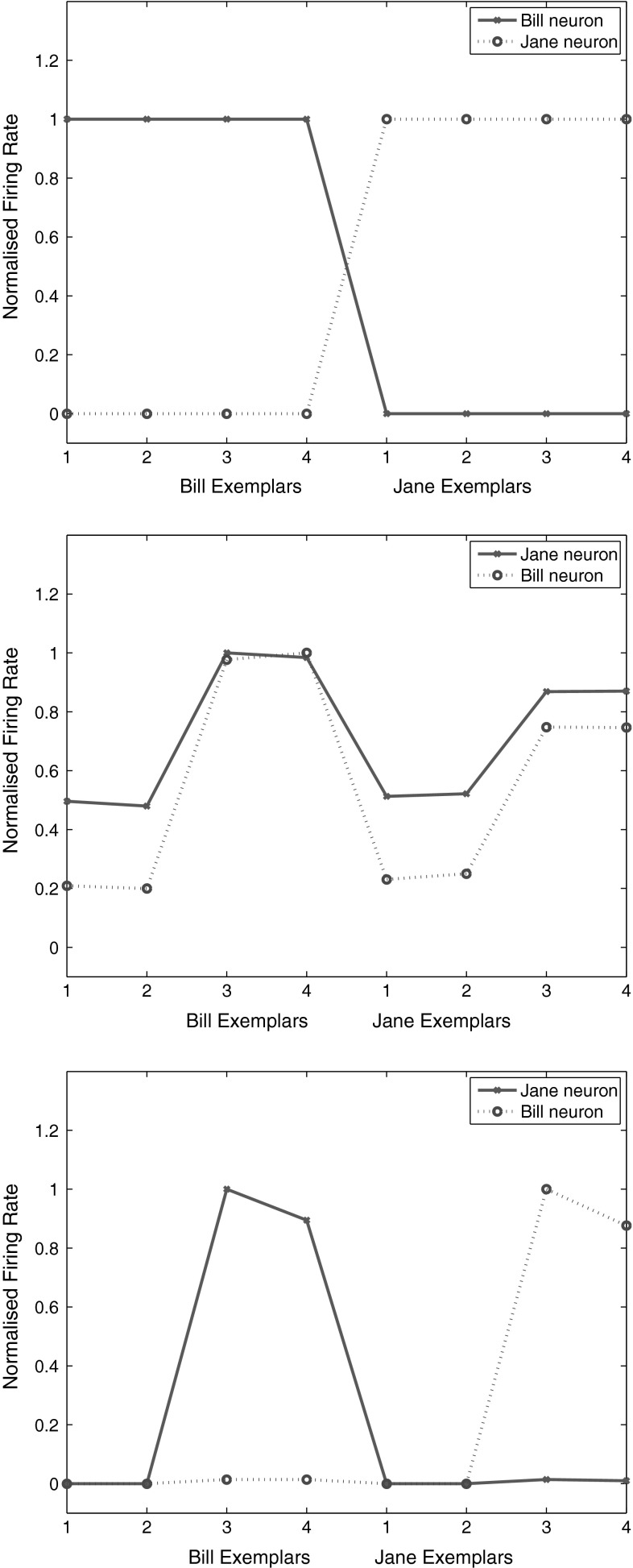

The objects used in the experiment are shown in Fig. 13. There were two objects, two cups, each with four views, constructed with Blender. VisNet was trained for 10 epochs, with all views of one object shown in random permuted sequence, then all views of the other object shown in random permuted sequence, to enable VisNet to use its temporal trace learning rule to learn about the different images that occurring together in time were likely to be different views of the same object. VisNet performed 100 % correct in this task by forming neurons in its layer 4 that responded either to all views of one cup (labelled ‘Bill’) and to no views of the other cup (labelled ‘Jane’), or vice versa, as illustrated in Fig. 14 top.

Fig. 13.

View-invariant representations of cups. The two objects, each with four views

Fig. 14.