Abstract

Objective

To develop a robotic surgery training regimen integrating objective skill assessment for otolaryngology and head and neck surgery trainees consisting of training modules of increasing complexity and leading up to procedure specific training. In particular, we investigate applications of such a training approach for surgical extirpation of oropharyngeal tumors via a transoral approach using the da Vinci Robotic system.

Study Design

Prospective blinded data collection and objective evaluation (OSATS) of three distinct phases using the da Vinci Robotic surgical system.

Setting

Academic University Medical Engineering/Computer Science laboratory

Methods

Between September 2010 and July 2011, 8 Otolaryngology Head and Neck Surgery residents and 4 staff “experts” from an academic hospital participated in three distinct phases of robotic surgery training involving 1) robotic platform operational skills, 2) set-up of the patient side system, and 3) a complete ex-vivo surgical extirpation of an oropharyngeal “tumor” located in the base of tongue. Trainees performed multiple (4) approximately equally spaced training sessions in each stage of the training. In addition to trainees, baseline performance data was obtained for the experts. Each surgical stage was documented with motion and event data captured from the application programming interfaces (API) of the da Vinci system, as well as separate video cameras as appropriate. All data was assessed using automated skill measures of task efficiency, and correlated with structured assessment (OSATS, and similar Likert scale) from three experts to assess expert and trainee differences, and compute automated and expert assessed learning curves.

Results

Our data show that such training results in an improved didactic robotic knowledge base and improved clinical efficiency with respect to the set-up and console manipulation. Experts (e.g., average OSATS 25, SD 3.1, module 1 suturing) and trainees (average OSATS 15.9, SD 3.9, week 1) are well separated at the beginning of the training, and the separation reduces significantly by the conclusion of the training (module 3: expert average OSATS 27.6, SD 2.7; trainee average OSATS 24.2, SD 6.8). Learning curves in each of the three stages show diminishing differences between the experts and trainees, also consistent with expert assessment. Subjective assessment by experts verified the clinical utility of the module 3 surgical environment, and a survey of trainees consistently rated the curriculum as very useful in progression to human operating room assistance.

Conclusions

Structured curricular robotic surgery training with objective assessment promises to reduce the overhead for mentors, allow detailed assessment of human-machine interface skills and create customized training models for individualized training. This preliminary study verifies the utility of such training in improving human-machine operations skills (module 1), and operating room and surgical skills (module 2 and 3). In contrast to current coarse measures of total operating time and subjective assessment of error for short mass training sessions, these methods may allow individual tasks to be removed from the trainee regimen when skill levels are within the standard deviation of the experts for these tasks, which can greatly enhance overall efficiency of the training regimen and allow time for additional more complex training to be incorporated in the same timeframe.

Level of Evidence

NA

Keywords: Head and Neck, Oral cavity, Oropharynx, TORS, robotic surgery, robotic surgery training

Introduction

It is estimated that a large majority (65% in 2008 [1]) of prostatic surgical procedures are now performed [2,3] with minimally invasive techniques, such as with the da Vinci Surgical System (Intuitive Surgical, Inc. CA). A large number of robotic procedures are also performed in other surgical specialties including gynecology [4], cardiothoracic surgery [5,6], and general surgery (e.g. gastrointestinal, colorectal) [7]. Although these and other surgical specialties have been using robotic surgical assistance for over a decade, otolaryngology/head and neck surgery has only relatively recently begun to apply robotics in any significant volumes.

In head and neck surgery, one of the most significant developments in the last several years has been the application of robotic surgery to oropharyngeal tumors. Traditional open approaches to the oropharynx included large transcervical incisions and mandibulotomies with their inherent morbidities. Historically these approaches increased the risk for debilitating functional and wound complications including pharyngocutaneous fistulae and loss of mandibulo-facial integrity [8–12]. This has led to a predilection for non-surgical therapies (radiation and chemotherapy) for oropharyngeal cancers in many institutions [1]. The da Vinci surgical system was first reported as a minimally invasive approach to the oropharynx in 2005 [2, 3]. This has since been demonstrated to be safe and feasible [7–9]. Many studies, albeit retrospective and with single treatment arms, demonstrated overall and disease-free survival of greater than 90% even for advanced disease, rates that are comparable to those in non-surgical modalities [4–6]. Primary surgical treatment affords the most accurate tumor staging, and consequently the opportunity to intensify or de-intensify adjuvant therapy, which has implications for functional recovery [7–10]. Not only is robotic surgery changing treatment paradigms in oropharyngeal cancer patients [11, 12], but its utility is also increasing in thyroid surgery. Transaxillary robotic thyroidectomy is seen as a promising alternative approach to select thyroid tumors, eliminating neck incision scars [13]. A recent modification of this technique utilizes a postauricular, face-lift approach that may facilitate further adoption of robotic technology in the neck [14]. Robotic surgical access to the lateral skull base [15], anterior skull base [16], parapharyngeal space [30], hypopharynx, and larynx [31] are all also under investigation, along with transoral access to neck sites [23, 24].

With increasing implementation of robotic techniques, training and credentialing of otolaryngologists/head and neck surgeons is imperative to standardize patient care and safety [12]. This includes training programs for residents, with basic robotic training to hone skills in a laboratory setting [17, 18]. These training methods have traditionally relied on manual assessment by experts, and traditional metrics (task completion, counting error rates) for assessing robotic surgical skills. The skills that are needed for robotic head and neck surgery include clinical acumen for patient selection, competence with endoscopic surgical technique, and skill in setup and manipulation of the surgeon-machine interfaces. Traditional training methods and measures applied so far may have limited capabilities in trending progress of a trainee, providing objective timely feedback, and developing customizable training programs to match an individual trainee’s progress.

By contrast, we report on the first head and neck residency-focused training program that incorporates analysis of instrument motion, system configuration information, and endoscopic video assessment to perform objective assessment of a trainee’s progress. This work describes the training study and expert structured assessment, while continued validation of our previously described automated metrics [17] will be reported in a follow-on article.

Materials and methods

Institutional Review Board approval was obtained for a prospective multi-module investigation in July 2010 and eight otolaryngology residents were enrolled in the study. Each resident provided informed consent with written documentation. They completed an online da Vinci Robotic Surgery didactic module and a pre-training questionnaire surveying demographics, self-assessment of hand-eye coordination, gaming/computing experience and da Vinci training experience. Four practicing head and neck surgeons with robotic surgery expertise (proficient at human surgery) voluntarily provided baseline performance data by performing the same three training modules two times.

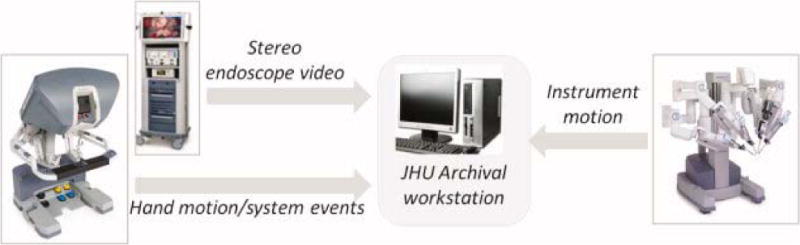

All robotic training was performed using the da Vinci S Surgical System installed in the Swirnow Mock Operating Room of the Visual Imaging and Surgical Robotics Laboratory (VISR) at the Johns Hopkins University, Homewood Campus. Our experimental video and instrument motion data was collected using a PC-based recording system [17]. A small VISR workstation and connecting cables were installed in the vision cart of the da Vinci Surgical System (Figure 1). No modifications of the system were required and no configuration was needed for data recording. Subjects were not involved in nor had access to any of the data collection. Our recording system acquires multiple video channels (left and right endoscopic cameras, operating room views when needed) time-stamped and synchronized with the corresponding motion data from the da Vinci Application Programming Interface (API) [19]. These quantitative measurements include motion data (hand and instrument tip), and system events (e.g. camera, clutch pedal presses). The detailed motion data recorded includes instrument, camera and master handle motion, such as joint angles, Cartesian position and velocity, gripper angle, joint velocity, and torque data. Over each hour of training this generated over 20GB of video and motion data. Acquired data was transferred to a data cartridge and archived using our web-integrated archival infrastructure. Apart from allowing our subjects and experts to remotely view and assess video data, the archive also supports Objective Structured Assessment of Technical Skills (OSATS) evaluations [20].
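The pairing of time-stamped video frames with motion samples can be illustrated with a minimal sketch. It assumes both streams carry timestamps in seconds on a shared clock, sorted in ascending order. The function name and the sample timestamps are hypothetical; they do not describe the actual recording system or the da Vinci API.

```python
from bisect import bisect_left


def nearest_sample_index(timestamps, t):
    """Return the index of the motion sample whose timestamp is closest to t.

    Assumes `timestamps` is a non-empty list sorted in ascending order.
    """
    if not timestamps:
        raise ValueError("timestamps must not be empty")
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    # Pick whichever neighbor is closer in time; ties go to the earlier sample.
    return i if (after - t) < (t - before) else i - 1


# Hypothetical timestamps: motion samples every 20 ms, two video frames.
motion_times = [0.00, 0.02, 0.04, 0.06]
frame_times = [0.000, 0.033]
matches = [nearest_sample_index(motion_times, t) for t in frame_times]
```

A binary search keeps each lookup logarithmic in the number of motion samples, which matters when a session holds many more motion samples than video frames.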

Figure 1.

Data collection setup. The Johns Hopkins University (JHU) Visual Imaging and Surgical Robotics Laboratory archival station acquires both endoscopic video and hand motion without the need for subject intervention. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]

© IF THIS IMAGE HAS BEEN PROVIDED BY OR IS OWNED BY A THIRD PARTY, AS INDICATED IN THE CAPTION LINE, THEN FURTHER PERMISSION MAY BE NEEDED BEFORE ANY FURTHER USE. PLEASE CONTACT WILEY’S PERMISSIONS DEPARTMENT ON PERMISSIONS@WILEY.COM OR USE THE RIGHTSLINK SERVICE BY CLICKING ON THE ‘REQUEST PERMISSION’ LINK ACCOMPANYING THIS ARTICLE. WILEY OR AUTHOR OWNED IMAGES MAY BE USED FOR NON-COMMERCIAL PURPOSES, SUBJECT TO PROPER CITATION OF THE ARTICLE, AUTHOR, AND PUBLISHER.

The hands-on training during the study consisted of the following three modules each performed by trainees at approximately weekly intervals. Subjects were enrolled as available during the yearlong study. Each subject completed at least 4 training sessions for each of the following modules over the approximately 3 month long training period:

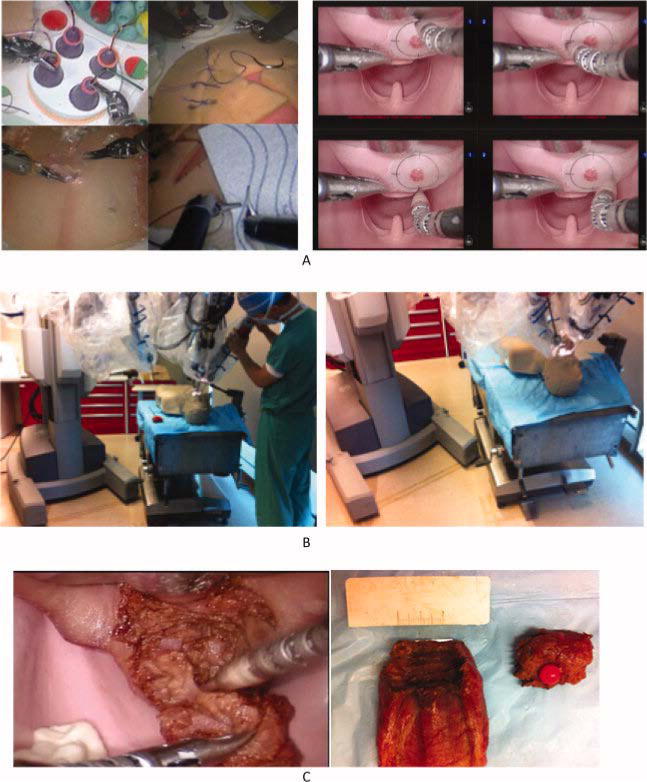

Module 1: In the orientation module of robotic surgery training, we collected data from four basic minimally invasive surgery skills performed on inanimate training task pods (The Chamberlain Group, Figure 2,a). The four tasks were suturing, object manipulation, dissection, and transection. These tasks also form part of the Intuitive Surgical System Skill Practicum [21] and are stepping stones to developing proficiency with the da Vinci robot controls. We have previously used this module in our other training protocols [17–18].

Module 2: Since transoral robotic surgery (TORS) requires a setup distinct from other robotic procedures, our system setup module focused on docking the da Vinci robot in operating position for a TORS procedure. A half-body mannequin (Airway Larry, Simulaids; head, neck, and thoracic torso) was set up on an operating table to simulate a patient. The mannequin tongue was retracted with a Crowe-Davis mouth gag with the mouth open in the approximate position to receive the robotic instrument cannulas (Figure 2,b). A 5mm tumor target with peripheral margins was visible at the base of tongue. Residents were assessed on their performance placing the robotic patient side cart into proper position adjacent to the operative table, docking the robotic arms to gain visualization, and confirming circumferential access to the tumor target. A 0 degree endoscope (as compared to the 30 degree endoscope typically used for TORS), a 5mm Maryland dissector, and a monopolar cautery instrument were used. Correct instrument installation and configuration was also assessed by reviewing the endoscopic view as the residents drove the instruments from the console to demonstrate adequate range of motion and access to the tumor.

Module 3: Subjects reviewed a video of a human TORS base of tongue tumor resection and studied the detailed training protocol to learn the surgical approach steps prior to the first session of this module. This procedure module assessed resident performance removing the tongue “tumor” with appropriate 5mm peripheral and deep margins of normal tongue tissue (Figure 2,c). A porcine tongue implanted with a visible 5mm simulated tumor (a colored hard fiducial) was configured for a TORS procedure with a Crowe-Davis mouth gag in the mannequin mouth. Each trainee performed the procedure alone as console surgeon, removing the “tumor”. The required 5mm margin was measured using a scale on the excised specimen.

Figure 2.

Study training tasks. Each subject practiced four training tasks in module 1, three (cart setup, port placement, workspace test) in module 2, and an ex vivo procedure in module 3. (A) Module 1. Four tasks for first module (left), endoscopic workspace test for module 2 (right). (B) Module 2 patient cart, port, and instrument setup. (C) Tumor excision and margin measurement in module 3. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]


As noted previously, each module was performed four times by the trainees prior to advancing to the next module. All trainees completed a post-training questionnaire after the final training session of the third module. Subjects transitioned to human operating room assistance after completion of the training.

Evaluation

Video documentation of each module was scored by 3 experts using a 5-point Likert scale based on the Objective Structured Assessment of Technical Skills (OSATS) scale [20]. Elements of the OSATS scale not used during a training module (for example, use of assistants in the first and third modules) were omitted in the assessment for that module. The first module used six Likert scales of 1 to 5 for: Respect for tissue (R), Time and motion (TM), Instrument handling (IH), Knowledge of instruments (K), Flow of procedure (F), and Knowledge of procedure (KP) for a total score of 0–30. The second module used the TM, IH, K, and KP elements for a score of 0–20, and the third module was again scored 0–30 using the six OSATS elements used in module 1. Given moderate to strong inter-observer agreement (for example, the average Kappa at week 1 was 0.53, 0.39, 0.30, and 0.54 for module 1 suturing, manipulation, transection, and dissection, respectively), we averaged the three expert scores to obtain a composite expert rating for each module.
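The composite rating described above can be sketched as follows: each rater's Likert ratings are summed over the elements used in a module, and the per-rater totals are averaged. The function names and the example ratings are hypothetical and serve only to illustrate the arithmetic; instrument handling is abbreviated IH throughout.

```python
from statistics import mean

# OSATS elements scored in each module, as described in the text.
MODULE_ELEMENTS = {
    1: ("R", "TM", "IH", "K", "F", "KP"),
    2: ("TM", "IH", "K", "KP"),
    3: ("R", "TM", "IH", "K", "F", "KP"),
}


def total_score(ratings, module):
    """Sum one rater's 1-5 Likert ratings over the elements used in a module."""
    elements = MODULE_ELEMENTS[module]
    for element in elements:
        if not 1 <= ratings[element] <= 5:
            raise ValueError(f"rating for {element} must be between 1 and 5")
    return sum(ratings[element] for element in elements)


def composite_score(rater_ratings, module):
    """Average the per-rater totals to obtain a composite expert rating."""
    return mean(total_score(ratings, module) for ratings in rater_ratings)


# Hypothetical ratings from three raters for one module 1 task.
example_ratings = [
    {"R": 4, "TM": 4, "IH": 4, "K": 4, "F": 4, "KP": 4},
    {"R": 3, "TM": 4, "IH": 4, "K": 5, "F": 4, "KP": 5},
    {"R": 4, "TM": 4, "IH": 4, "K": 5, "F": 4, "KP": 4},
]
example_composite = composite_score(example_ratings, module=1)
```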

Measures of correlation were computed between known skill levels and expert assessment, and learning curves were computed for each module for structured expert assessments. These expert annotated datasets will be used to validate automated assessment methods. Automated measurements to be computed by processing the instrument and hand motion data acquired above include instrument and hand efficiency, hand and instrument volumes, and related motion efficiency and safety measures based on distances and velocities.
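As a rough illustration of the kind of motion measures listed above, the sketch below computes a path length, a bounding-box workspace volume, and a mean speed from sampled Cartesian tip positions. These definitions are assumptions chosen for illustration and are not the validated metrics from [17]; all function names are hypothetical.

```python
import math


def path_length(positions):
    """Total distance traveled along a sequence of (x, y, z) positions."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def bounding_volume(positions):
    """Volume of the axis-aligned box enclosing all positions (assumed proxy
    for instrument or hand workspace volume)."""
    xs, ys, zs = zip(*positions)
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))


def mean_speed(positions, timestamps):
    """Path length divided by elapsed time between first and last sample."""
    duration = timestamps[-1] - timestamps[0]
    if duration <= 0:
        raise ValueError("timestamps must span a positive duration")
    return path_length(positions) / duration


# Hypothetical tip trajectory (arbitrary length units) sampled once per second.
tip_positions = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
tip_times = [0.0, 1.0, 2.0]
tip_path = path_length(tip_positions)
tip_volume = bounding_volume(tip_positions)
tip_speed = mean_speed(tip_positions, tip_times)
```

Under these definitions, a shorter path and a smaller workspace volume for the same completed task would indicate more economical motion.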

Results

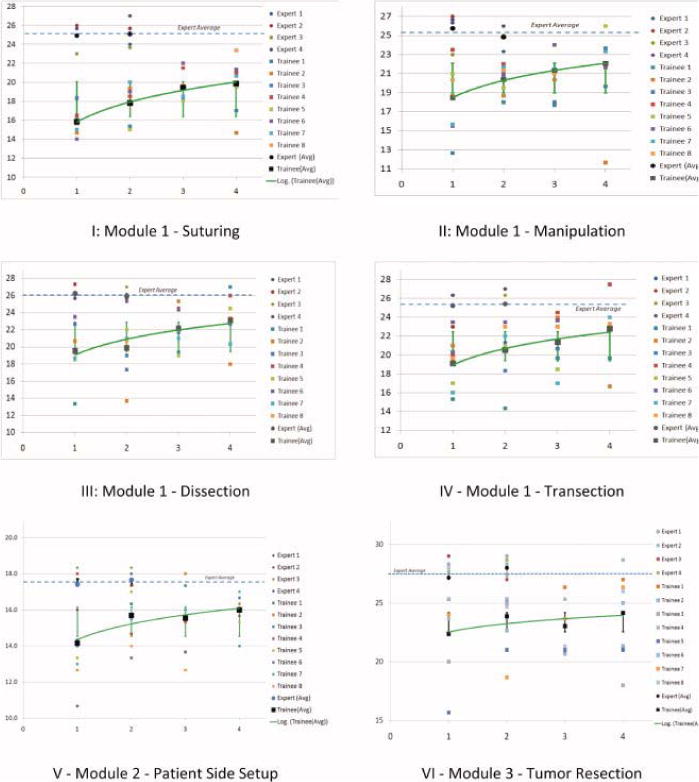

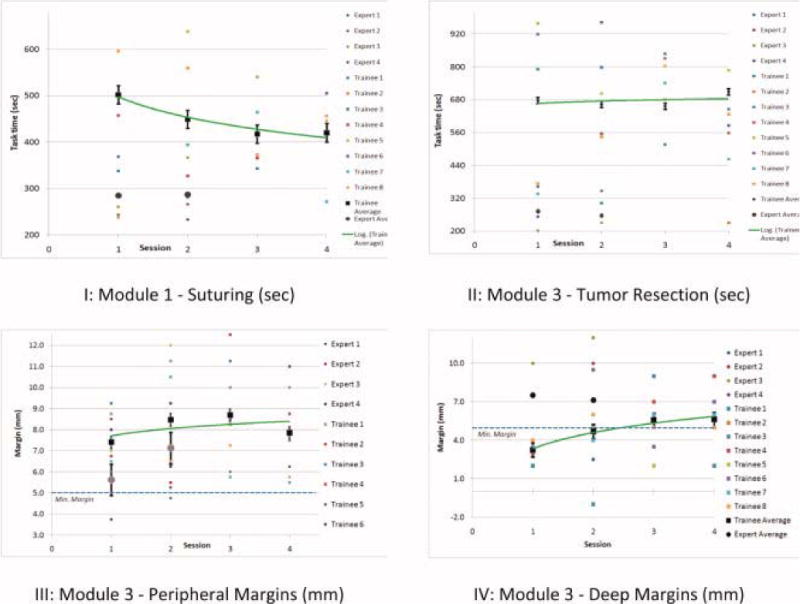

Table 1 shows a measurable separation between trainees and experts at the beginning of the training for a selected range of metrics. These trends were consistent across the training modules. Our trainees gradually improved their performance metrics towards the average of the experts, as shown by the learning curves (Figures 3 and 4) and by clinical outcomes measured by margins maintained in module 3.

Table 1.

Average scores for OSATS elements for Experts and trainees at Week 1. Scores are averages across the four skills in module 1 and ratings by three experts. Note the score differences of a full OSATS element point or more, as well as large Std. dev in trainees.

| | Time and Motion (TM) | Instrument Handling (IH) | Knowledge of Instruments (K) | Flow of Operation (F) | Knowledge of Procedure (KP) | Respect for Tissue (R) |
|---|---|---|---|---|---|---|
| Expert 1 | 4.08 | 4.00 | 4.50 | 4.25 | 4.50 | 4.25 |
| Expert 2 | 4.17 | 4.08 | 4.58 | 4.33 | 4.50 | 4.17 |
| Expert 3 | 3.83 | 4.08 | 4.42 | 3.83 | 4.17 | 4.33 |
| Expert 4 | 4.08 | 4.25 | 4.50 | 4.17 | 4.92 | 4.17 |
| Average | 4.04 | 4.10 | 4.50 | 4.15 | 4.52 | 4.23 |
| Stdev. | 0.14 | 0.10 | 0.07 | 0.22 | 0.31 | 0.08 |
| Trainee 1 | 2.17 | 2.17 | 3.00 | 2.00 | 3.09 | 2.25 |
| Trainee 2 | 3.08 | 2.83 | 3.50 | 2.92 | 3.58 | 2.83 |
| Trainee 3 | 3.00 | 3.00 | 3.58 | 3.08 | 4.00 | 3.75 |
| Trainee 4 | 3.00 | 3.00 | 3.63 | 3.13 | 3.88 | 3.25 |
| Trainee 5 | 2.50 | 2.63 | 3.25 | 3.00 | 3.50 | 3.25 |
| Trainee 6 | 2.88 | 3.00 | 3.50 | 2.88 | 3.63 | 3.25 |
| Trainee 7 | 2.50 | 2.33 | 2.75 | 2.50 | 3.08 | 3.17 |
| Trainee 8 | 2.83 | 3.00 | 3.17 | 2.83 | 3.58 | 3.33 |
| Average | 2.74 | 2.74 | 3.30 | 2.79 | 3.54 | 3.14 |
| Stdev. | 0.32 | 0.34 | 0.31 | 0.37 | 0.33 | 0.44 |

Figure 3.

Selected learning curves. OSATS scores range from 0 to 30 over the four sessions and three modules. Experts appear in the upper left hand corner. In future iterations of the training regimen, the average expert scores will be used to graduate trainees to the next module. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]


Figure 4.

Task completion times and margins over four sessions of respective modules. Experts appear at the bottom left. Task completion times decrease and assessed task quality improves with training (compared with Fig. 3). [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]


Figures 3 and 4 show learning curves derived from the times required to complete a task, and the corresponding learning curves based on expert OSATS structured assessment (Table 2). Figure 3 also demonstrates convergence towards the experts in each module. These results were significant at the 5% level for each session (two-tail P-values 9.17E-09, 4.17E-09, 6.35E-10, and 3.45E-10 for suturing, manipulation, transection, and dissection, respectively, at week 1 for module 1). Table 3 details further correlation of known skill levels, expert measurements, and margins for selected measurements.

Table 2.

Task times and Measured Margins for the ex-vivo TORS procedure training module (module 3 at week 1). Note the high separation of task times, as well as low deep margins for trainees. The peripheral margin is the average of the four measured surface margins.

| | Task Time (sec) | Excised Margin M1 (mm) | Excised Margin M2 (mm) | Excised Margin M3 (mm) | Excised Margin M4 (mm) | Peripheral Margin (mm) | Deep Margin (mm) |
|---|---|---|---|---|---|---|---|
| Expert 1 | 362.4 | 5.0 | 5.0 | 5.0 | 5.0 | 5.0 | 2.0 |
| Expert 2 | – | 5.0 | 10.0 | 7.0 | 5.0 | 6.8 | 15.0 |
| Expert 3 | 201.7 | 5.0 | 10.0 | 7.0 | 6.0 | 7.0 | 10.0 |
| Expert 4 | 252.7 | 2.0 | 6.0 | 4.0 | 3.0 | 3.8 | 3.0 |
| Average | 272.3 | 4.3 | 7.8 | 5.8 | 4.8 | 5.6 | 7.5 |
| Stdev. | 113.7 | 1.5 | 2.63 | 1.5 | 1.26 | 1.53 | 6.14 |
| Trainee 1 | 791.3 | 5.0 | 10.0 | 10.0 | 10.0 | 8.8 | 2.0 |
| Trainee 2 | 171.9 | 5.0 | 7.0 | 5.0 | 17.0 | 8.5 | 3.0 |
| Trainee 3 | 1190.7 | 5.0 | 10.0 | 7.0 | 15.0 | 9.3 | 3.0 |
| Trainee 4 | – | 5.0 | 10.0 | 7.0 | 5.0 | 6.8 | 3.0 |
| Trainee 5 | 958.4 | 4.0 | 5.0 | 5.0 | 10.0 | 6.0 | 4.0 |
| Trainee 6 | 919.1 | 7.0 | 8.0 | 7.0 | 10.0 | 8.0 | 3.5 |
| Trainee 7 | 336.2 | 9.0 | 5.0 | 8.0 | 4.0 | 6.5 | 3.5 |
| Trainee 8 | 372.8 | 5.0 | 4.0 | 4.0 | 9.0 | 5.5 | 4.0 |
| Average | 677.2 | 4.25 | 7.75 | 5.75 | 4.75 | 7.4 | 3.3 |
| Stdev. | 382.7 | 1.50 | 2.63 | 1.50 | 1.26 | 1.39 | 0.65 |
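The derived quantities in Table 2 can be reproduced with a short sketch: the peripheral margin is the mean of the four surface margins, and mean task times skip sessions where no time was recorded (shown as "–" in the table and represented here as `None`). The function names are hypothetical; the input values are taken from the rows for Trainee 1 and the four experts.

```python
from statistics import mean


def peripheral_margin(surface_margins_mm):
    """Average of the four measured surface margins (M1 to M4), in mm."""
    if len(surface_margins_mm) != 4:
        raise ValueError("expected four surface margins")
    return mean(surface_margins_mm)


def mean_task_time(times_sec):
    """Mean task time in seconds, ignoring missing (None) recordings."""
    recorded = [t for t in times_sec if t is not None]
    if not recorded:
        raise ValueError("no recorded task times")
    return mean(recorded)


# Trainee 1 surface margins M1 to M4 from Table 2.
trainee1_peripheral = peripheral_margin([5.0, 10.0, 10.0, 10.0])

# Expert task times from Table 2; Expert 2 has no recorded time.
expert_mean_time = mean_task_time([362.4, None, 201.7, 252.7])
```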

Table 3.

Two tail p-values for pair-wise t-tests (alpha = 0.05): Structured assessment (OSATS scores) versus task time (manipulation, module I), structured assessment vs. known skill levels (suturing, module I), known skill vs. task time (module III) for each of the 4 sessions in the respective modules.

| Session | OSATS/time | OSATS/skill | Skill/time |
|---|---|---|---|
| 1 | 0.001 | 1.66E-7 | 0.001 |
| 2 | 5.64E-5 | 1.48E-8 | 0.0006 |
| 3 | 0.003 | 8.38E-9 | 0.0004 |
| 4 | 0.008 | 3.68E-7 | 0.0034 |
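To illustrate how expert and trainee scores can be compared with a t-test, the sketch below computes a two-sample t statistic on the week 1 Time and Motion scores from Table 1. It uses Welch's unequal-variance form, which is an assumption made here for illustration and not necessarily the exact test behind Table 3. It returns the statistic and approximate degrees of freedom only; obtaining a p-value would additionally require the t distribution.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    # Squared standard errors of each sample mean (sample variance / n).
    se2_a = variance(sample_a) / len(sample_a)
    se2_b = variance(sample_b) / len(sample_b)
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Week 1 Time and Motion (TM) scores from Table 1.
expert_tm = [4.08, 4.17, 3.83, 4.08]
trainee_tm = [2.17, 3.08, 3.00, 3.00, 2.50, 2.88, 2.50, 2.83]
t_stat, dof = welch_t(expert_tm, trainee_tm)
```

Welch's form is used in this sketch because the trainee scores in Table 1 vary considerably more than the expert scores.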

In each module, trainee performance improved with time as indicated by lower task completion times (Figure 4). As previously observed in our work on robotic surgery training [17], expert task performance displayed a much smaller variability between the two executions.

Discussion

The da Vinci master-slave type robotic surgical system has been used to demonstrate safe and feasible transoral robotic surgical approaches (TORS) [22–24], and evidence of decreased length of hospital stay and morbidity is now also becoming available [7, 8]. Based on favorable oncologic and functional outcomes demonstrated in multiple studies [4, 7–10, 25, 26] and the increasing incidence of oropharyngeal carcinoma secondary to the HPV epidemic in younger, healthier patients, TORS is gaining increasing adoption as a useful surgical technique for these tumors. Correspondingly, TORS and other head and neck procedures are now the subject of multiple training efforts at the residency level [27–30].

The OSATS Global Rating Scale (Table 4 [20]) consists of skill-related variables that are typically graded using a 5-point Likert scale; with 1,3 and 5 points anchored with explicit descriptors. We customized our composite scale for considerations suitable for assessing the performance of the surgical and training tasks obtained from the expert users. OSATS assessments of surgical skill have been validated previously for consistency and utility (e.g. [17,18], and references contained within) in robotic surgery. Robotic surgery also provides a unique opportunity to compare automatically measured metrics with such expert assessment.

Table 4.

The customized Objective Structured Assessment of Technical Skill (OSATS) [20] global rating scale.

| Measure | 1 | 3 | 5 |
|---|---|---|---|
| Respect for tissue | Used unnecessary force, caused damage. | Careful handling but occasional minor mistakes. | Consistent handling, with minimal damage. |
| Time and motion | Many unnecessary moves, jerks, and pauses. | Efficient time and motion, but some unneeded or out of view moves. | Efficient movement with no mistakes. |
| Instrument handling | Awkward pose or tentative moves. | Competent use, but a few occasional awkward moves. | Fluid movement with good instrument pose. |
| Knowledge of instruments | Used wrong or inappropriate instrument. | Knew the names and used appropriate instruments. | Obviously familiar with the instruments. |
| Use of assistants | No use or poor use of assistants. | Occasional delay or lack of use of assistants. | Used assistants to best advantage. |
| Flow of operation | Frequent pauses, shows lack of forward planning. | Demonstrated ability for forward planning, occasional pause. | Demonstrated planned operation without pauses. |
| Knowledge of procedure | Deficient knowledge of protocol or procedure. | Knew all important aspects of the operation, occasional instruction. | Demonstrated knowledge of all aspects. |

Our longitudinal, multi-module training program aims to transparently monitor development of surgical and system skills through each step of the robotic curriculum, subject only to the continued access to trainee data and robotic systems. Our analysis aims to reduce the workload for the mentor, and aims to provide objective, and specific formative feedback to the trainee. Towards this end, this work performs initial construct validity analysis of manually computed measures of both surgical technique (expert assessment) and clinical outcomes (margins). Such evidence is crucial in the curricular development process, and for assessment and feedback during the training program.

In this report, we describe a longitudinal study of robotic surgery trainees, including preliminary assessment (OSATS) by experts as well as traditional margin-based outcomes. Using multiple modules, appropriate tasks, and objective measures, we develop separate learning curves for robotic trainees that can be monitored, and the skills assessed can be used to compose a proficiency-based curriculum instead of the current time-bound practice. We note strong agreement between assessment of task performance using OSATS and margins. Corresponding automatically computed objective measures of motion (to be reported in a follow-on publication) could be used to automatically trigger graduation between modules, as well as to remove from the training curriculum in any module those skills assessments where proficiency has been reached (as measured by standard deviation from expert performance). Additional computed measures not described here, including camera management, abnormal events, and reactions to abnormal events, are to be reported separately.
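A proficiency rule of this kind can be sketched as follows. The sketch assumes a task counts as mastered once the trainee's score is no more than `k` expert standard deviations below the expert mean (scores above the expert mean also qualify); this threshold rule, the function names, and the trainee scores are hypothetical. The expert means and standard deviations are the module 1 suturing and module 3 values reported in the abstract.

```python
def is_proficient(score, expert_mean, expert_sd, k=1.0):
    """True if the score is within k expert standard deviations below the
    expert mean, or higher (assumed rule, for illustration only)."""
    return score >= expert_mean - k * expert_sd


def remaining_tasks(trainee_scores, expert_stats, k=1.0):
    """Tasks that should stay in the trainee's regimen."""
    remaining = []
    for task, score in trainee_scores.items():
        expert_mean, expert_sd = expert_stats[task]
        if not is_proficient(score, expert_mean, expert_sd, k):
            remaining.append(task)
    return remaining


# Expert OSATS (mean, SD) as reported in the abstract.
expert_stats = {"suturing": (25.0, 3.1), "tumor resection": (27.6, 2.7)}

# Hypothetical scores for a single trainee.
trainee_scores = {"suturing": 23.0, "tumor resection": 22.0}
still_to_train = remaining_tasks(trainee_scores, expert_stats)
```

With these example numbers, suturing would be retired from the regimen while the tumor resection task would remain.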

In this first iteration, the residents participating included at least one resident at each PGY level, including 2 PGY3 and 2 research students. Given the small number of samples at each PGY level, comparative performance analysis between PGY levels will be performed after additional iterations of the protocol. The trainees had no prior robotic experience. The expert surgeons were trained robotic educators familiar with resident training programs in robotic surgery. These surgeons included at least one expert who is also a proctor for the Intuitive Surgical head and neck surgery training program. Intuitive Surgical also recommends a combined TORS protocol. This protocol includes massed inanimate training (the same as module 1), followed by one animal procedure and human surgery observation. The Intuitive Surgical protocol does not include motion data collection that might provide comparable assessment. As might be expected, massed practice (a large number of trials), particularly using the simple familiarity exercises included in module 1, is not expected to provide procedure-specific proficiency for TORS. We will aim to perform a comparative assessment in follow-on protocols.

The presented protocol should yield increased learning by the resident and likely increased efficiency within the operating room environment. With ever increasing work-hour restrictions on our residents and a greater demand for proficiency-based training rather than just case volume, our robotic training curriculum is particularly attractive. Robotic training allows for the objective assessment of skill acquisition and as we’ve demonstrated allows for the comparison to expert performance. Therefore, our training program will require the attainment of a requisite skill level for each module prior to graduating to the next level. The rate of progression will depend on the individual resident’s skill level rather than the number of times a module has been performed. We believe such a proficiency-based training program optimizes the use of our residents’ time. Furthermore, this model could be diversified to include other TORS procedures such as radical tonsillectomy or supraglottic laryngectomy based on mannequin modifications. This program is currently being considered for incorporation into the Johns Hopkins Otolaryngology / Head and Neck Surgery residency training program to prepare all residents in this ever promising field of TORS and enable them in conjunction with supervised console time and surgical experience to actively participate in this “shift in paradigm” for the treatment of this cancer population.

Conclusion

Transoral robotic surgery is rapidly being adopted by many otolaryngologists-head and neck surgeons for the treatment of benign and malignant disease of the upper aerodigestive tract. Most otolaryngology residents therefore have significant exposure to robotic surgery today through their training programs. We have developed a comprehensive, three-module, pre-clinical TORS training program to develop the skills requisite for the novice robotic surgeon to begin TORS. The training modules will also be further refined based on these results in future iterations of the training protocol. This structured robotic surgery curriculum allows for objective assessment of performance and promises to reduce the overhead for mentors, allows detailed assessment of human-machine interface skills, and creates customized training models for individualized training.

Acknowledgments

Grant Support: This work was supported in part by NIH grant # 1R21EB009143-01A1 and Johns Hopkins Hospital internal funds. A collaborative agreement with Intuitive Surgical, Inc with no financial support provided the da Vinci robotic instruments and access to the API data. The sponsors played no role in study development, data collection, interpretation of data, or analysis.

Footnotes

Disclosures: This work was supported by the NIH grants above. Data collection was supported by donated surgical instruments and a collaborative agreement with Intuitive Surgical, Inc. provided access to the API motion data. Neither agreement included any financial support. The study sponsors played no role in collection, analysis or interpretation of data. Author Richmon provides proctoring services for robotic surgery training to trainee surgeons. Such (compensated) proctoring is unrelated to the presented work and does not create any conflicts of interest. J.A. Califano is the Director of Research of the Milton J. Dance Head and Neck Endowment. The terms of this arrangement are being managed by the Johns Hopkins University in accordance with its conflict of interest policies.

Author Contributions- Authors Curry, Richmon, and Kumar designed the study, and were involved in data acquisition, developed the analysis methods, and performed the evaluation. Authors Malpani, Jog, and Tantillo were involved in the data collection. Authors Malpani and Kumar performed the automated data analysis. Authors Richmon, Kumar, Blanco, Ha, and Califano were involved in clinical interpretation of the data and analysis.

Contributor Information

Martin Curry, Department of Otolaryngology-Head and Neck Surgery, Johns Hopkins Hospital, Baltimore, MD.

Anand Malpani, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore, MD.

Ryan Li, Department of Otolaryngology-Head and Neck Surgery, Johns Hopkins Hospital, Baltimore, MD.

Thomas Tantillo, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore, MD.

Amod Jog, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore, MD.

Ray Blanco, Department of Otolaryngology-Head and Neck Surgery, Greater Baltimore Medical Center, Baltimore, MD.

Patrick K Ha, Department of Otolaryngology-Head and Neck Surgery, Johns Hopkins Medical Institutions, and Milton J. Dance Head and Neck Center, Greater Baltimore Medical Center, Baltimore, MD.

Joseph Califano, Department of Otolaryngology-Head and Neck Surgery, Johns Hopkins Medical Institutions, and Milton J. Dance Head and Neck Center, Greater Baltimore Medical Center, Baltimore, MD.

Rajesh Kumar, Department of Computer Science, Johns Hopkins Hospital, Baltimore, MD.

Jeremy Richmon, Department of Otolaryngology-Head and Neck Surgery, Johns Hopkins Hospital, Baltimore, MD.
