Abstract

We report the development of a high-throughput whole slide imaging (WSI) system by adapting a cost-effective optomechanical add-on kit to existing microscopes. Inspired by the phase detection concept in professional photography, we attached two pinhole-modulated cameras at the eyepiece ports for instant focal plane detection. By adjusting the positions of the pinholes, we can effectively change the view angle for the sample, and as such, we can use the translational shift of the two pinhole-modulated images to identify the optimal focal position. By using a small pinhole size, the focal-plane-detection range is on the order of millimeters, orders of magnitude longer than the objective’s depth of field. We also show that, by analyzing the phase correlation of the pinhole-modulated images, we can determine whether the sample contains one thin section, folded sections, or multiple layers separated by certain distances, which is an important piece of information prior to a detailed z scan. In order to achieve system automation, we deployed a low-cost programmable robotic arm to perform sample loading and $14 stepper motors to drive the microscope stage to perform x-y scanning. Using a 20X objective lens, we can acquire a 2 gigapixel image with a 14 mm by 8 mm field of view in 90 seconds. The reported platform may find applications in biomedical research, telemedicine, and digital pathology. It may also provide new insights for the development of high-content screening instruments.

OCIS codes: (170.0180) Microscopy, (170.3010) Image reconstruction techniques, (110.0110) Imaging systems, (110.1220) Apertures

1. Introduction

Whole slide imaging (WSI) systems are an important tool for biomedical research and clinical diagnosis. In particular, the advances in computer and image sensor technologies in recent years have significantly accelerated the development of WSI systems for high-content screening, telemedicine, and digital pathology. One important aspect of WSI systems is maintaining the sample at the optimal focal position over a large field of view. Autofocusing for WSI systems is still an active research area due to its great potential in industrial and clinical applications. There are two main types of autofocus methods in WSI systems: 1) laser-reflection methods and 2) image-contrast-related methods. In laser-reflection methods [1–3], an infrared laser beam is reflected by the sample surface and creates a reference point for determining the distance between the surface and the objective lens. This approach only works well for samples that sit at a fixed distance from the reflecting surface. If the distance between the sample and the surface varies, it cannot maintain the optimal focal position. In contrast, image-contrast-related methods [2, 4–6] are able to track topographic variations and identify the optimal focal position through image processing. These methods acquire multiple images by moving the sample along the z direction and calculate the focal position by maximizing a figure of merit (such as image contrast, entropy, or frequency content) of the acquired images. Since z-stacking increases the total scanning time, image-contrast-related methods achieve better imaging performance at the expense of system throughput. Nevertheless, due to the topographic variation of pathology slides, most WSI systems employ image-contrast-related methods for tracking the focus [2].

In this paper, we report the development of a WSI platform by adapting an optomechanical add-on kit to a regular microscope. Inspired by the phase detection concept in professional photography [7], we attached two pinhole-modulated cameras at the eyepiece ports for focal plane detection. By adjusting the positions of the pinholes, we can effectively change the view angle through the two eyepiece ports. The focal position can be recovered by calculating the phase correlation of the two corresponding pinhole-modulated images. There are several advantages of the reported platform: 1) By deploying a small-sized pinhole in both cameras, autofocusing can reach the millimeter range, orders of magnitude longer than the objective’s depth of field. In contrast, the conventional image-contrast-based method relies on the images captured from the main camera port, which will be blurred out if the sample is defocused by a long distance. 2) The two images captured by the pinhole-modulated cameras provide additional information about the sample’s tomographic structure in the z direction. By analyzing the phase correlation curve, we can readily determine whether the sample contains one thin section, folded sections, or multiple layers separated by certain distances. Different z-sampling strategies can then be used in conjunction with the reported method for better image acquisition. For example, we can perform z-stacking for the area that contains folded sections or multiple layers. We can also avoid air bubbles by comparing the layered structure with the surrounding areas. 3) One of the major barriers to the adoption of WSI systems is the cost. In the reported platform, we used a cost-effective mechanical add-on kit to convert a regular microscope into a WSI system, making it affordable to small research labs. For each x-y position, the reported platform is able to directly move the stage to the optimal focal position; no z-stacking is needed and the focus error will not propagate to other x-y positions. 4) In the reported platform, we employed a cost-effective programmable robotic arm (uArm from Kickstarter) for sample loading. We can easily expand its capability for handling other samples (such as Petri dishes) and integrate other image recognition strategies for better and more affordable laboratory automation.

This paper is structured as follows: in section 2, we will report the design and the operation principle of the pinhole-modulated camera. In section 3, we will report the use of the phase correlation curve for peeking at the sample structure in the z direction. In section 4, we will report the design of the add-on kit for converting a conventional microscope into a WSI system. Finally, we will summarize the results and discuss the future directions in section 5.

2. Instant focal plane detection using pinhole-modulated cameras

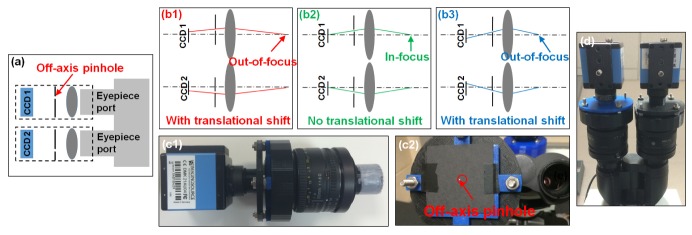

Inspired by the phase detection concept in professional photography [7], we attached two pinhole-modulated cameras at the eyepiece ports for instant focal plane detection, as shown in Fig. 1(a), where the pinhole is inserted at the Fourier plane of the lens. By adjusting the positions of the two pinholes, we can effectively change the view angle of the sample. If the sample is placed at the in-focus position, the two captured images will be identical (Fig. 1(b2)). If the sample is placed at an out-of-focus position, the sample will be projected at two different view angles, causing a translational shift between the two captured images (Fig. 1(b1) and 1(b3)). The translational shift is proportional to the defocus distance of the sample. Therefore, by identifying the translational shift between the two captured images, we can recover the optimal focal position of the sample without a z-scan.

Fig. 1.

(a) Pinhole-modulated cameras for instant focal plane detection. (b) By inserting an off-axis pinhole at the Fourier plane, we can effectively change the view angle of the sample. (c1) A 3D-printed plastic case was used to assemble the pinhole-modulated camera. (c2) The off-axis pinhole was punched with a needle in a sheet of printing paper. (d) We attached the assembly to the eyepiece ports of a microscope platform.

The design of the pinhole-modulated camera is shown in Fig. 1(c), where we used a 3D-printed case to assemble a 50 mm Nikon photographic lens (f/1.8), a pinhole, and a CCD detector. We used a needle to punch a hole in a sheet of printing paper, as shown in Fig. 1(c2). The size of the pinhole is ~0.5 mm, and it is located ~1.5 mm away from the optical axis. To adjust the position of the pinhole, we increase the off-axis distance until the image vanishes in the camera. The whole module was attached to the eyepiece ports of a microscope (Fig. 1(d)).

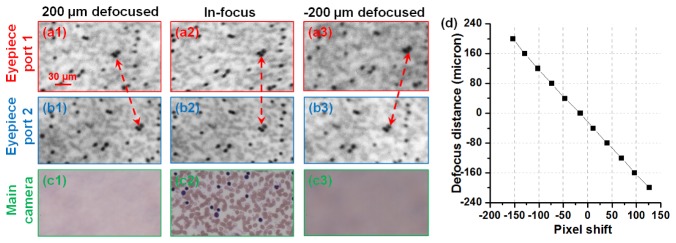

Figure 2 shows the experimental characterization of the instant focal plane detection scheme. By putting the sample at different positions, we can see different translational shifts between the two pinhole-modulated images (Fig. 2(a) and 2(b)). The images captured at the main camera port are shown in Fig. 2(c) as a comparison. We can see that the depth of field of the pinhole-modulated images is orders of magnitude longer than that of the high-resolution image captured through the main camera port. Figure 2(d) shows the measured relationship between the translational shift and the defocus distance of the sample. For imaging new samples, we first identify the translational shift of the two pinhole-modulated images and then use this calibration curve to recover the focal position.

Fig. 2.

The captured images through the pinhole-modulated cameras (a)-(b), and the main camera (c). (d) The measured relationship between the translational shift of the two pinhole-modulated images and the defocus distance.
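
The calibration step described above can be illustrated with a minimal sketch. It assumes the calibration curve is well described by a straight line, which is consistent with the stated proportionality between shift and defocus; the constant offset term, the function names, and the sample numbers are assumptions made for illustration and are not taken from Fig. 2(d).

```python
import numpy as np

def fit_calibration(defocus_um, shift_px):
    """Least-squares line: shift = slope * defocus + offset."""
    slope, offset = np.polyfit(defocus_um, shift_px, deg=1)
    return slope, offset

def defocus_from_shift(shift_px, slope, offset):
    """Invert the calibration line to estimate the defocus distance."""
    return (shift_px - offset) / slope

# Hypothetical calibration data (NOT the measured values of Fig. 2(d)):
# an assumed slope of 0.05 pixel per micrometer and a 1-pixel offset that
# could arise from slightly mismatched pinhole positions.
defocus_um = np.array([-400.0, -200.0, 0.0, 200.0, 400.0])
shift_px = 0.05 * defocus_um + 1.0

slope, offset = fit_calibration(defocus_um, shift_px)

# A new tile whose two pinhole-modulated images are shifted by 6 pixels.
estimated_defocus_um = defocus_from_shift(6.0, slope, offset)
```

With these assumed numbers the estimated defocus is about 100 µm, which is the distance the focus drive would need to correct. A real calibration curve may call for a higher-order fit or a lookup table.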

3. Unveiling sample’s tomographic structure using the phase correlation curve

In the reported platform, we used phase correlation to identify the translational shift of the two pinhole-modulated images. The use of phase correlation for subpixel registration is an established technique in image processing [8]. In this paper, we explore the use of the phase correlation curve to peek at the sample’s tomographic structure without a detailed z-scan.
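
For readers unfamiliar with phase correlation, the following is a minimal NumPy sketch of the standard integer-pixel version of the technique. It is not the implementation used in the reported platform, and it omits the subpixel extension of [8]; the function names and the synthetic test image are assumptions made for illustration.

```python
import numpy as np

def phase_correlation(img_a, img_b, eps=1e-12):
    """Return the phase correlation surface of two equally sized images."""
    fa = np.fft.fft2(img_a)
    fb = np.fft.fft2(img_b)
    cross = fa * np.conj(fb)
    cross /= np.abs(cross) + eps  # discard magnitude, keep only the phase
    return np.real(np.fft.ifft2(cross))

def integer_shift(img_a, img_b):
    """Estimate the (row, col) shift d such that img_a ~ np.roll(img_b, d)."""
    surface = phase_correlation(img_a, img_b)
    peak = np.unravel_index(np.argmax(surface), surface.shape)
    # Peaks in the upper half of each axis correspond to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, surface.shape))

# Synthetic check: a random image circularly shifted by a known amount.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
shifted = np.roll(reference, shift=(5, -7), axis=(0, 1))
recovered = integer_shift(shifted, reference)
```

Here `recovered` equals the applied shift of 5 rows and -7 columns. With real pinhole-modulated images the shift is not circular, so the peak is less sharp than in this synthetic case.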

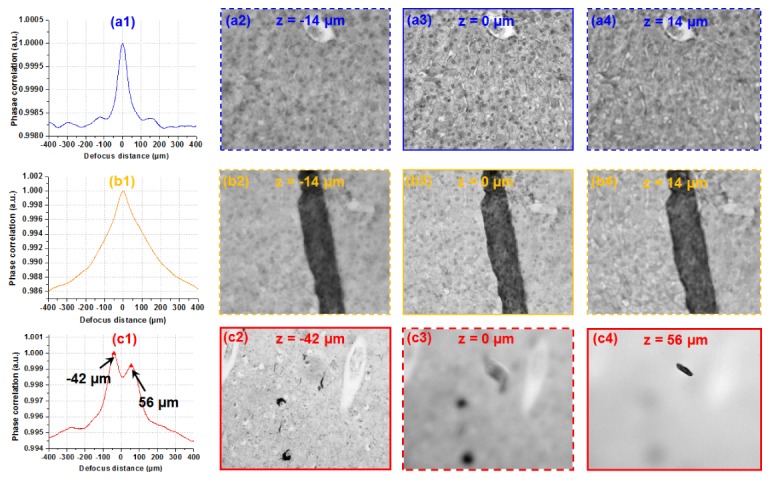

Figure 3 demonstrates that different samples have different characteristics in their phase correlation curves. A thin section renders a single sharp peak (Fig. 3(a)), while a sample with folded sections has a peak with a broader full width at half maximum (FWHM) (Fig. 3(b)). For samples with multiple layers, we can see multiple peaks in the curve, as shown in Fig. 3(c). In particular, in Fig. 3(c), the two layers are separated by 100 µm. The reported platform is able to recover this information over such a long depth of field. The sample information along the z direction is valuable for determining the sampling strategy. For example, we can perform multilayer sampling according to the peaks or the FWHM of the phase correlation curve. Further research is needed to relate the phase correlation characteristics to the sample properties [9]. In the reported platform, we simply identify the maximum point of the phase correlation curve to recover the focal position of the sample; no z-scanning was used.

Fig. 3.

Using the phase correlation curve for exploring sample structures along the z direction. Samples with one thin section (a), a folded section (b), and two different layers separated by a certain distance (c).
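
The qualitative distinction drawn above (one sharp peak, one broad peak, or several peaks) could be automated along the following lines. This is only a sketch under stated assumptions: the curve is treated as a 1-D array, the peak threshold (`rel_height`) and the width limit (`fwhm_limit`) are hypothetical tuning parameters, and the Gaussian test curves are synthetic stand-ins rather than data from Fig. 3.

```python
import numpy as np

def find_peaks_simple(curve, rel_height=0.5):
    """Indices of local maxima at least rel_height times the global maximum."""
    c = np.asarray(curve, dtype=float)
    threshold = rel_height * c.max()
    interior = np.arange(1, len(c) - 1)
    is_peak = (c[interior] > c[interior - 1]) & (c[interior] >= c[interior + 1])
    return [int(i) for i in interior[is_peak] if c[i] >= threshold]

def fwhm(curve):
    """Number of contiguous samples around the global maximum that stay
    at or above half of the maximum (an integer-sample FWHM)."""
    c = np.asarray(curve, dtype=float)
    half = c.max() / 2.0
    left = right = int(np.argmax(c))
    while left > 0 and c[left - 1] >= half:
        left -= 1
    while right < len(c) - 1 and c[right + 1] >= half:
        right += 1
    return right - left + 1

def classify(curve, fwhm_limit=10):
    """Label a curve using the peak count first, then the peak width."""
    if len(find_peaks_simple(curve)) > 1:
        return "multiple layers"
    return "folded section" if fwhm(curve) > fwhm_limit else "thin section"

# Synthetic stand-ins for the three cases (hypothetical, not measured data).
x = np.arange(200)
gauss = lambda center, sigma: np.exp(-0.5 * ((x - center) / sigma) ** 2)
thin = gauss(100, 2)
folded = gauss(100, 8)
two_layers = gauss(60, 2) + 0.8 * gauss(140, 2)

labels = [classify(c) for c in (thin, folded, two_layers)]
```

The resulting labels could then drive the z-sampling strategy, for example by triggering a z-stack only for tiles labeled as folded or multilayered.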

4. Mechanical design and high-throughput gigapixel imaging

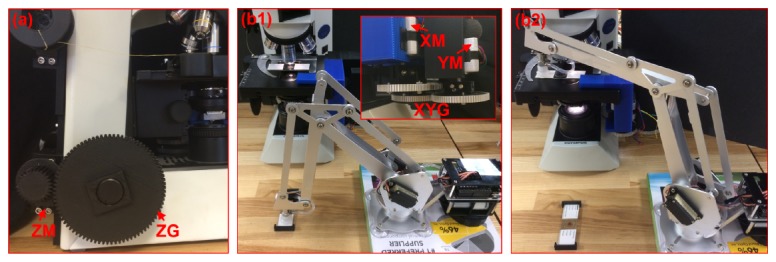

In order to achieve system automation, we used a low-cost programmable robotic arm (uArm, Kickstarter) to perform sample loading and stepper motors (NEMA-17, Adafruit) to drive the microscope stage to perform x-y-z scanning. In our implementation, we used 3D-printed plastic gears to control the focus knob for sample autofocusing, as shown in Fig. 4(a). The smallest z-step is 350 nm in our design. If needed, one can change the size ratio of the two mechanical gears in Fig. 4(a) to achieve a better z resolution. Figure 4(b) shows the mechanical add-on kit for controlling sample scanning in the x-y plane and the programmable robotic arm for automatic sample loading. We used an Arduino microcontroller to control the scanning process.

Fig. 4.

Sample loading and mechanical scanning schemes in the reported platform. (a) 3D-printed plastic gear for controlling the focus knob. (b) Sample scanning using a mechanical kit and sample loading using a programmable robotic arm. XM: x-axis motor; YM: y-axis motor; XYG: x-y scanning gear group; ZM: z-axis motor; ZG: z-axis scanning gear. Also refer to Visualization 1 and Visualization 2.
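
To illustrate how the gear ratio sets the z resolution, the sketch below computes the axial travel per motor step for a motor gear driving a focus-knob gear. All numerical values (knob travel per revolution, steps per revolution, tooth counts) are hypothetical and are not the design parameters of the reported prototype; the function name is likewise an assumption.

```python
def z_step_nm(knob_travel_um_per_rev, motor_steps_per_rev, motor_teeth, knob_teeth):
    """Axial travel (nm) per motor step for a motor gear driving a knob gear."""
    # One motor revolution turns the knob by motor_teeth / knob_teeth revolutions.
    knob_rev_per_motor_rev = motor_teeth / knob_teeth
    travel_um_per_motor_rev = knob_travel_um_per_rev * knob_rev_per_motor_rev
    return 1000.0 * travel_um_per_motor_rev / motor_steps_per_rev

# Hypothetical example: 100 um of focus travel per knob revolution and a
# 200-step-per-revolution motor (assumed values, for illustration only).
base_step = z_step_nm(100.0, 200, motor_teeth=20, knob_teeth=40)
finer_step = z_step_nm(100.0, 200, motor_teeth=20, knob_teeth=80)
```

With these assumed values the step is 250 nm for the 20:40 gear pair and 125 nm for the 20:80 pair, showing that doubling the knob gear size halves the z-step.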

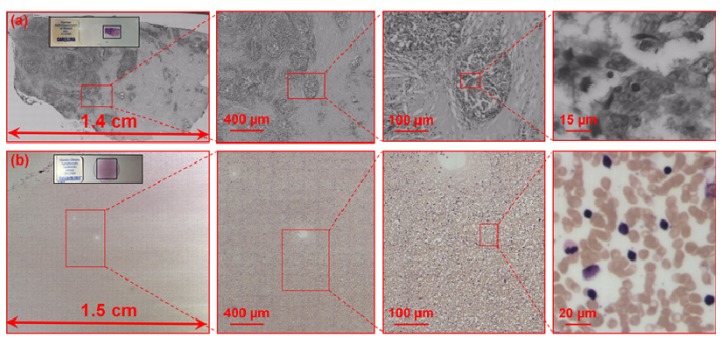

Figure 5 shows the gigapixel images captured using the reported platform. In Fig. 5(a), we used a 9 megapixel monochromatic CCD camera (Prosilica GT 34000, 3.69 µm pixel size) to capture a pathology slide. Using a 20X, 0.75 numerical aperture objective lens, it took 90 seconds to acquire a 2 gigapixel image with a 14 mm by 8 mm field of view. This image contains 340 segments, and the image acquisition of each segment takes ~0.24 second using a regular desktop computer with an Intel i5 processor. The detailed breakdown of the acquisition time is as follows: 1) 0.1 second for the pinhole-modulated cameras to acquire two images from the eyepiece ports; 2) 0.02 second to calculate the phase correlation and recover the optimal focal position; 3) 0.04 second to drive the focus knob; 4) 0.02 second to trigger the main camera to capture the high-resolution in-focus image; 5) 0.06 second to drive the x-y stage to another position. The main speed limitation is the data readout from the pinhole-modulated cameras. In this early prototype, we used an old camera model (31AU03, IC Capture, 1024 by 768 pixels). A CMOS webcam with faster data readout can reduce the acquisition time of a single segment to 0.16 second (~40% improvement). In Fig. 5(b), we used a 1.5 megapixel color CMOS camera (Infinity lite, 4.2 µm pixel size) to acquire a color image of a blood smear. The total acquisition time is 16 minutes and the field of view is 15 mm by 15 mm with 2400 segments. The longer acquisition time is caused by the detector size being much smaller than that of the CCD used in Fig. 5(a) and by the absence of hardware triggering.

Fig. 5.

Gigapixel images captured by using the reported platform. (a) A captured image of a pathology slide using a 9 megapixel CCD. The field of view is 14 mm by 8 mm and the acquisition time is 90 seconds. (b) A captured image of a blood smear using a 1.5 megapixel color CMOS sensor. The field of view is 15 mm by 15 mm and the acquisition time is 16 minutes. These images can be viewed at: http://gigapan.com/profiles/SmartImagingLab.
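
The per-segment time budget quoted above can be checked with a few lines of arithmetic. The step durations and segment count are the figures given in the text; the dictionary keys and variable names are labels chosen here for illustration. Durations are kept in integer milliseconds to avoid floating-point rounding in the sum.

```python
# Per-segment step durations from the text, in milliseconds.
STEP_MS = {
    "pinhole_camera_readout": 100,  # two images from the eyepiece ports
    "phase_correlation": 20,        # compute shift and focal position
    "focus_drive": 40,              # turn the focus knob
    "main_camera_trigger": 20,      # capture the in-focus image
    "xy_stage_move": 60,            # move to the next segment
}
N_SEGMENTS = 340  # segments in the image of Fig. 5(a)

per_segment_ms = sum(STEP_MS.values())
total_s = per_segment_ms * N_SEGMENTS / 1000

# Projected total if a faster webcam brings each segment down to 160 ms.
faster_total_s = 160 * N_SEGMENTS / 1000
```

The itemized steps add up to 240 ms per segment, or about 82 seconds for 340 segments; the difference from the reported 90 seconds is not itemized in the text. At 160 ms per segment the same scan would take roughly 54 seconds.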

To test the autofocusing capability, we also moved the sample to 25 pre-defined z-positions and used the reported approach to recover the z-positions. The standard deviation between the ground truth and our recovery is ~300 nm, much smaller than the depth of field of the employed objective. Finally, we note that the use of stepper motors to drive a microscope is not a new idea [6]; however, integrating it with the proposed autofocusing scheme for high-throughput WSI is new and may find various biomedical applications.

5. Discussion and conclusion

In summary, we have demonstrated the use of pinhole-modulated cameras for instant focal plane detection. We have developed a WSI add-on kit to convert a regular microscope into a WSI system. For each x-y position, the reported WSI platform is able to directly move the stage to the optimal focal position; no z-stacking is needed for focal plane searching and the focus error will not accumulate across other x-y positions. By using the reported platform, we demonstrated the acquisition of a 2 gigapixel image (14 mm by 8 mm) in 90 seconds. Compared to laser-reflection methods, the reported approach is able to track the topographic variations of the tissue section; neither an external laser source nor angle-tracking optics is needed. Compared to image-contrast methods, the reported approach has an ultra-long autofocusing range and requires no z-scanning for focal plane detection. From the cost point of view, the mechanical kit, including the stepper motor and related drivers, costs ~$50 (Amazon). The camera lens at the eyepiece port can be replaced by a low-cost eyepiece adapter with a 0.5X reduction lens (~$25, Amscope). The pinhole can be inserted into the Fourier plane of the reduction lens. Lastly, we can use a low-cost stereo Minoru webcam (~$20, Amazon) or other low-cost webcams at the eyepiece port to capture the pinhole-modulated images. The rest of the system remains the same as the regular microscope. The reported design may enable the dissemination of high-throughput imaging/screening instruments for the broad biomedical community. It can also be directly combined with other cost-effective imaging schemes for high-throughput multimodal microscopy imaging [10, 11].

There are several areas we plan to improve in the next phase: 1) Due to the large data set we acquire, we use free software (Image Composite Editor) to perform image stitching off-line. We need to convert the captured data into individual images and manually upload them to the software. The software blindly stitches the images without making use of the positional information of individual segments. The entire process takes about 40 minutes to generate the image shown in Fig. 5(a). We plan to develop a memory-efficient program to perform stitching during the image acquisition process. 2) The current speed limitation comes from data readout from the pinhole-modulated cameras (15 fps). A camera with a higher frame rate can be used to further shorten the acquisition time by 40%. The sensor area and the total number of pixels of the pinhole-modulated camera are not important in the reported approach. 3) We used plastic cases in various parts of our prototype to mount the pinhole-modulated cameras. Due to the weight of the cameras, stability is a concern for the reported prototype. A metal mount with better optomechanical design is needed in the future (for example, using the commercially available eyepiece adapter with a 0.5X reduction lens). 4) The reported method can be used for fluorescence imaging. In this case, the photon budget for the pinhole-modulated cameras will be low. We may need to study the effect of shot noise on the phase correlation curve. 5) The use of the phase correlation curve for peeking at the sample’s tomographic structure is an unexplored area. Further research is needed to study the phase correlation characteristics and the associated sample properties. 6) In the reported platform, we employed a programmable robotic arm for sample loading (Visualization 1). The use of a robotic arm for sample loading is not a new idea. However, low-cost and open-source robotic arms have become available only very recently. We can expand their capability for handling different samples and integrate other image recognition strategies for better and more affordable laboratory automation.

References and links

1. Liron Y., Paran Y., Zatorsky N. G., Geiger B., Kam Z., “Laser autofocusing system for high-resolution cell biological imaging,” J. Microsc. 221(2), 145–151 (2006). doi:10.1111/j.1365-2818.2006.01550.x

2. Montalto M. C., McKay R. R., Filkins R. J., “Autofocus methods of whole slide imaging systems and the introduction of a second-generation independent dual sensor scanning method,” J. Pathol. Inform. 2(1), 44 (2011). doi:10.4103/2153-3539.86282

3. Liu C.-S., Hu P.-H., Wang Y.-H., Ke S.-S., Lin Y.-C., Chang Y.-H., Horng J.-B., “Novel fast laser-based auto-focusing microscope,” in Sensors, 2010 IEEE, (IEEE, 2010), 481–485.

4. Yazdanfar S., Kenny K. B., Tasimi K., Corwin A. D., Dixon E. L., Filkins R. J., “Simple and robust image-based autofocusing for digital microscopy,” Opt. Express 16(12), 8670–8677 (2008). doi:10.1364/OE.16.008670

5. Firestone L., Cook K., Culp K., Talsania N., Preston K., Jr., “Comparison of autofocus methods for automated microscopy,” Cytometry 12(3), 195–206 (1991). doi:10.1002/cyto.990120302

6. McKeogh L., Sharpe J., Johnson K., “A low-cost automatic translation and autofocusing system for a microscope,” Meas. Sci. Technol. 6(5), 583–587 (1995). doi:10.1088/0957-0233/6/5/020

7. Kinba A., Hamada M., Ueda H., Sugitani K., Ootsuka H., “Auto focus detecting device comprising both phase-difference detecting and contrast detecting methods,” (Google Patents, 1997).

8. Foroosh H., Zerubia J. B., Berthod M., “Extension of phase correlation to subpixel registration,” IEEE Trans. Image Process. 11(3), 188–200 (2002). doi:10.1109/83.988953

9. Awwal A. A. S., “What can we learn from the shape of a correlation peak for position estimation?” Appl. Opt. 49(10), B40–B50 (2010). doi:10.1364/AO.49.000B40

10. Zheng G., Kolner C., Yang C., “Microscopy refocusing and dark-field imaging by using a simple LED array,” Opt. Lett. 36(20), 3987–3989 (2011). doi:10.1364/OL.36.003987

11. Guo K., Bian Z., Dong S., Nanda P., Wang Y. M., Zheng G., “Microscopy illumination engineering using a low-cost liquid crystal display,” Biomed. Opt. Express 6(2), 574–579 (2015). doi:10.1364/BOE.6.000574