Summary

Neurons in prefrontal cortex (PFC) encode rules, goals and other abstract information thought to underlie cognitive, emotional, and behavioral flexibility. Here we show that the amygdala, a brain area traditionally thought to mediate emotions, also encodes abstract information that could underlie this flexibility. Monkeys performed a task in which stimulus-reinforcement contingencies varied between two sets of associations, each defining a context. Reinforcement prediction required identifying a stimulus and knowing the current context. Behavioral evidence indicated that monkeys utilized this information to perform inference and adjust their behavior. Neural representations in both amygdala and PFC reflected the linked sets of associations implicitly defining each context, a process requiring a level of abstraction characteristic of cognitive operations. Surprisingly, when errors were made, the context signal weakened substantially in the amygdala. These data emphasize the importance of maintaining abstract cognitive information in the amygdala to support flexible behavior.

Introduction

Sensory stimuli can elicit cognitive, physiological and behavioral responses that reflect a subject’s emotional state. This capacity can be acquired through conditioning procedures in which a subject learns that a particular sensory cue predicts a rewarding or aversive event (LeDoux, 2000; Schultz, 2006). Subjects can later learn to inhibit responses to the same stimulus through extinction (Milad and Quirk, 2012), or to alter responses to the stimulus if its associated reinforcement changes in value (Pickens and Holland, 2004). However, subjects can also regulate emotional responses to stimuli flexibly by using cognitive operations. Consider the game of Blackjack. Here, being dealt the same card, such as a king, can cause joy in one hand, if a player makes ‘21’, and distress in another hand because the player goes bust. Players apply the rules of the game to regulate their emotional responses instantaneously upon seeing the king. Re-learning of stimulus-outcome associations need not occur to adjust responses. This flexible behavior exemplifies the cognitive control of emotion (Ochsner and Gross, 2005).

In this study, we investigated mechanisms relevant to the cognitive control of emotion by training monkeys to perform a task in which a cognitive strategy can be used to predict reinforcement more efficiently. Monkeys performed a serial reversal trace-conditioning task. In every block of trials, one conditioned stimulus (CS) predicted reward (unconditioned stimulus, US), and another CS did not. Both CSs switched reinforcement contingencies simultaneously upon block changes, and the two types of blocks reversed many times in every experiment.

We defined a context in each experiment as the block of trials in which a particular set of stimulus-outcome contingencies is in effect. Context thereby parallels ‘task set’ (Sakai, 2008), i.e. the set of associations characterizing a block of trials. On 40% of trials, the context was signaled by a contextual cue, but on the other 60% of trials, context information was not explicitly presented and instead had to be represented internally as a cognitive variable.

In principle, monkeys could perform this task by learning each CS-US contingency independently after every block switch, a strategy that does not rely on information about context. However, monkeys’ behavioral performance indicated that they abstracted a representation of context to perform the task more efficiently. After a block switch, upon experiencing one CS as having switched its contingency, monkeys adjusted their behavior on the first trial of the other CS even when it was not accompanied by a contextual cue and even though the new contingency for that CS had yet to be experienced. This indicates that monkeys use a representation of context to infer what reinforcement to expect. Since the context is un-cued when monkeys display inference, we refer to it as a “cognitive context”.

Many studies have established that the amygdala participates in learning and representing the relationship between sensory stimuli and upcoming reinforcement to direct behavioral and physiological expressions of emotional state (Ambroggi et al., 2008; Baxter and Murray, 2002; Carelli et al., 2003; Everitt et al., 2003; LeDoux, 2000; Paton et al., 2006; Quirk et al., 1995; Salzman and Fusi, 2010; Schoenbaum et al., 1998; Shabel and Janak, 2009; Tye et al., 2008). These studies indicate that the amygdala coordinates emotional responses, but the critical cognitive processing thought to underlie emotional flexibility is conventionally thought to reside in the prefrontal cortex (PFC). The PFC has been shown to encode rules and other abstract information (Buckley et al., 2009; Durstewitz et al., 2010; Salzman and Fusi, 2010; Stokes et al., 2013; Wallis et al., 2001). Furthermore, it has been proposed that a component of the PFC – orbitofrontal cortex – represents “cognitive maps” of task space, a type of representation that could be important for the implementation of reinforcement learning algorithms and flexible decision-making (Wilson et al., 2014).

The demonstration that monkeys utilize knowledge of a cognitive context to infer upcoming reinforcement afforded us the opportunity to study the neurophysiological processes that could mediate this flexible behavior. While monkeys performed the task, we recorded the activity of single neurons in the amygdala and the two parts of the PFC most densely interconnected with the amygdala, the anterior cingulate and orbitofrontal cortices (ACC and OFC) (Ghashghaei et al., 2007; Stefanacci and Amaral, 2000). Neural activity in the amygdala encoded abstract information about context even when it was not cued by sensory stimuli. This signal reflected the linked sets of CS-US associations defining each context, a process requiring the cognitive abstraction and internalization of an unobservable variable. Activity in OFC and ACC also represented cognitive (i.e. un-cued) context. In all 3 brain areas, the context signal was present when monkeys utilized inference. Notably, when monkeys’ behavioral responses did not anticipate reward accurately, the context representation weakened substantially in the amygdala. The maintenance of abstract cognitive information in the amygdala may therefore be a signature of successful flexible behavior on this task.

Results

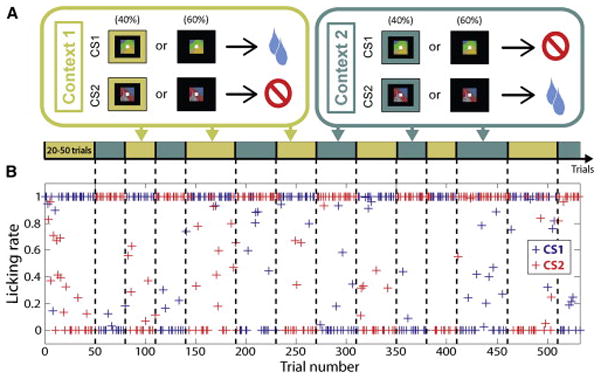

Two rhesus monkeys performed a trace-conditioning task in which the reinforcement predicted by two conditioned stimuli reversed many times within an experiment (Fig. 1A). The two CSs were computer-generated fractal patterns novel to the monkey in each session. On every trial, monkeys fixated and viewed one CS or the other. In the initial block of trials, CS1 predicted delivery of a liquid reward US after a trace interval; no reinforcement followed CS2. The reinforcement contingencies of CS1 and CS2 switched simultaneously and without warning multiple times in every experiment. This created two types of blocks of trials, one where CS1 was rewarded, and the other where CS2 was rewarded. We defined context as the block of trials in which a particular set of stimulus-outcome contingencies is in effect. Context was explicitly signaled by a visual cue on the first trial of a block switch and in a random subset of trials within the blocks; overall, this cue appeared throughout the experiment on 40% of trials.

Figure 1. Task design and example licking behavior.

(A) Task design (see Experimental Procedures). (B) Licking rate (defined as the proportion of time spent licking during the last 500 ms of the trace interval) as a function of trial number throughout an example experimental session. CS1 is paired with reward in Context 1 and paired with no reward in Context 2. Block transitions are indicated by vertical dashed lines and the context in effect for each block is indicated by the diagram in (A).

We assessed monkeys’ reward expectation by measuring anticipatory licking in the last 500 ms of the trace interval. Monkeys consistently showed higher levels of anticipatory licking on rewarded trials than on non-rewarded ones (Fig. 1B). Neither the presence of the contextual cue, nor how recently a contextual cue appeared, had a significant impact on the monkey’s propensity to anticipate reinforcement correctly (Fig. S1).

Behavioral evidence of an abstract internal representation of context

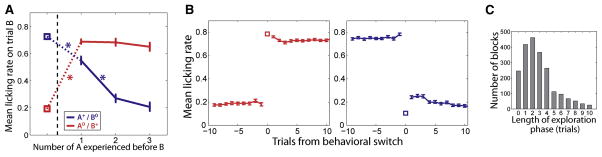

Behavioral evidence indicates that monkeys inferred the reinforcement expected from a CS viewed for the first time after a context switch, so long as they had first viewed the other switched CS. Figure 2A shows the average licking rate on the first trial in which CSB appears after a block switch as a function of the number of CSA trials experienced since the block switch (where CSA is the first CS shown in the new context and CSB is the other CS). Anticipatory licking after viewing CSB changed significantly after experiencing one or more instances of CSA, regardless of whether in the new block CSB predicted reward or not. Crucially, this analysis excluded CSB trials in which the contextual cue appeared, so the monkeys had to rely on an internal representation of the context to adjust licking. These results were observed for both monkeys, and results were similar on trials containing the contextual cue.

Figure 2. Behavioral evidence that monkeys utilize abstract representations of context.

(A) Mean licking rate on the first CS B trial after a block switch as a function of the number of CS A trials experienced since the block transition, where A and B are the two CSs of the task. Block transition is represented by dashed vertical line and squares represent the value of the licking rate for CS B on its last occurrence before the transition. The CS that is rewarded or non-rewarded after the block transition is indicated by + or 0 superscript, respectively. Error bars: s.e.m. Asterisks: significant difference between two consecutive data points (p<10e-10, Wilcoxon rank-sum test). The color code is conserved for a given CS before and after the transition. Error bars for pre-transition values are smaller than symbols. (B) Mean licking rate in response to the CS that changes from non-rewarded to rewarded (left, red) and from rewarded to non-rewarded (right, blue) as a function of trials from the ‘behavioral switch’, defined as the first rewarded trial after the block transition in which the monkey’s anticipatory licking is high (left) or as the first non-rewarded trial in which the licking is low (right) (see Experimental Procedures). The mean licking rate on the behavioral switch trial is represented by a square. Error bars: s.e.m. Error bar on trial 0 is smaller than symbol. (C) Distribution of lengths of exploration phases for all blocks of all sessions. We define the exploration phase as the set of trials between the beginning of the block and the first non-rewarded trial in which the monkeys decreased their licking (see Experimental Procedures). Histogram was truncated at 10 for visual clarity. Mean ± s.d. = 3.3 ± 3.2 trials. See also Figure S2.

The monkeys’ performance on this task did not derive from their having extensive experience viewing the two CSs in each context. The CSs were novel in each experiment, and restricting the analysis to the first block transition of each experiment showed that on average monkeys utilize inference on the very first context switch (p<0.05, Wilcoxon rank-sum test). Monkeys were therefore able to generalize their knowledge of task structure immediately to CS-US combinations never experienced previously. This knowledge was utilized to infer expected reinforcement.
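The logic of this inference can be made concrete with a minimal sketch. The function names below are hypothetical and serve only as illustration. The sketch restates the task rule (CS1 is rewarded in context 1, CS2 in context 2) and shows that a single experienced CS-outcome pair is sufficient, in principle, to predict the outcome of the other CS.

```python
def is_rewarded(cs: int, context: int) -> bool:
    """Task rule from the text: CS1 is rewarded in context 1, CS2 in context 2."""
    return cs == context


def infer_context(cs: int, got_reward: bool) -> int:
    """Recover the un-cued context from a single experienced CS-outcome pair."""
    other_context = 3 - cs  # maps 1 -> 2 and 2 -> 1
    return cs if got_reward else other_context


def predict_other_cs(cs: int, got_reward: bool) -> bool:
    """Predict reinforcement for the CS not yet seen since the block switch."""
    other_cs = 3 - cs
    return is_rewarded(other_cs, infer_context(cs, got_reward))


# After a switch, CS1 is experienced without reward, so CS2 should now be rewarded.
print(predict_other_cs(cs=1, got_reward=False))  # True
```

A strategy that relearns each CS-US contingency independently has no counterpart to `infer_context`; it would have to experience the second CS before adjusting its prediction.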

The capacity to employ inference indicates that monkeys possess an abstract understanding of the nature of the contexts in this task. Consistent with this, once monkeys changed their licking rates upon viewing a CS after a block change, they did so in a switch-like fashion. Figure 2B shows the mean licking rate around a block switch for the CS that becomes rewarded (left) and for the CS that becomes non-rewarded (right) aligned to the first trial where licking rate switched. This event marks a sharp transition between, roughly, the two asymptotic levels of licking. On average, for the newly rewarded CS the switch in licking rate occurred on the very first trial, but for a newly unrewarded CS, the switch in behavior appeared after a variable number of trials.

On one or more trials immediately after a block switch, monkeys thus licked regardless of the contingencies. We label such trials immediately after the block switch as “exploration” trials. On average 3.3 exploration trials occurred after block switches (Fig. 2C). Both monkeys exited the exploration phase abruptly, often only having experienced one of the CSs. They then switched to the behavioral mode corresponding to the current context in which licking reflected the reinforcement contingencies of both CSs. Exploration has been described previously as resulting from switches in the rule linking cues and rewards (Quilodran et al., 2008) or cues and operant responses (Fusi et al., 2007).
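As an illustration of how the length of an exploration phase could be counted for one block, the sketch below uses the definition given in the legend of Fig. 2C (the trials preceding the first non-rewarded trial on which licking decreased). The threshold `low_lick_threshold` and the function name are assumptions made for this example; the actual criterion for "decreased licking" is specified in the Experimental Procedures and is not reproduced here.

```python
def exploration_length(rewarded, lick_rate, low_lick_threshold=0.5):
    """Count trials from block start up to (not including) the first
    non-rewarded trial with low anticipatory licking.

    rewarded:   list of bools, one per trial since the block switch
    lick_rate:  list of licking rates (proportion of time spent licking)
    low_lick_threshold: hypothetical cutoff for "low" licking
    """
    for i, (is_rewarded_trial, lick) in enumerate(zip(rewarded, lick_rate)):
        if not is_rewarded_trial and lick < low_lick_threshold:
            return i
    # Licking never dropped on a non-rewarded trial within this block.
    return len(rewarded)


# Toy block: the animal keeps licking on the first non-rewarded trial,
# then withholds licking on the second one.
rewarded = [True, False, True, False]
lick_rate = [0.9, 0.8, 0.9, 0.1]
print(exploration_length(rewarded, lick_rate))  # 3
```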

Single neurons in the prefrontal cortex and amygdala encode all task-relevant variables

We recorded the activity of 527 individual neurons in the prefrontal cortex and amygdala in two monkeys while they performed our task (Fig. 3). Of these, 187 cells (97 and 90 in each monkey) were recorded in OFC, 160 cells (84 and 76) were recorded in ACC and 180 cells (77 and 103) were recorded in the amygdala. The inference demonstrated by monkeys relies on their knowing CS identity and the current context to compute expected reinforcement, suggesting that the brain must represent this information. Many PFC neurons encoded context, CS identity, and reinforcement expectation (Fig. 4A–F). All three of these variables, including the parameter ‘context’, were also encoded in the amygdala (Fig. 4G–I). For example, the amygdala neuron in Fig. 4G showed increased activity during context 1 relative to context 2 even though only trials that lacked a contextual cue were used to plot these data.

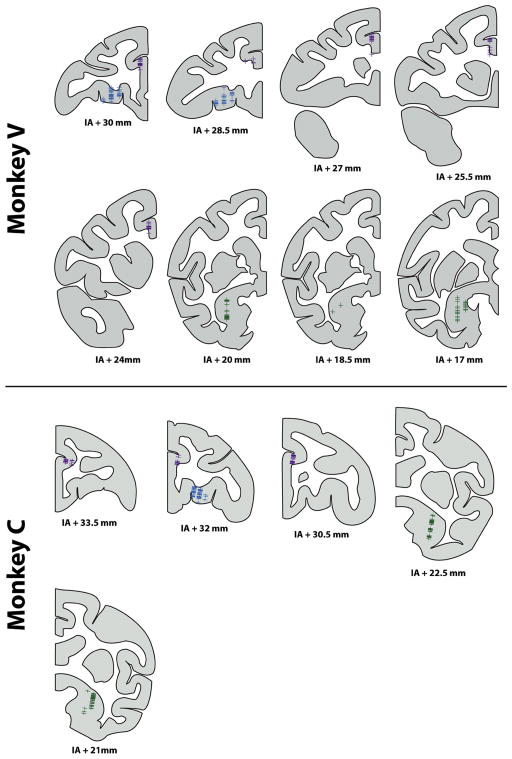

Figure 3. Recording locations.

The location of each recorded neuron is indicated by a + symbol on the corresponding coronal slice. Blue: OFC neurons; purple: ACC; green: amygdala. Anterior-posterior coordinate of each slice is specified relative to the inter-aural plane (IA). Diagrams were constructed from anatomical MRIs of each subject.

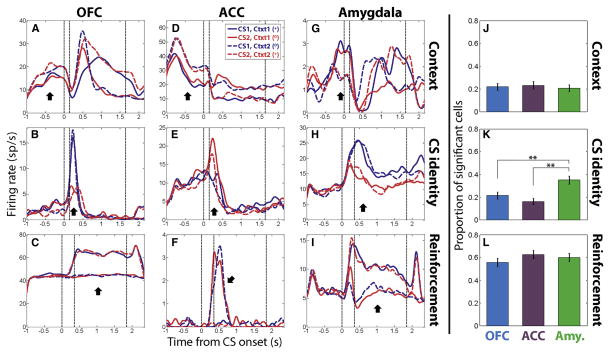

Figure 4. Single neurons in OFC, ACC and the amygdala encode context, CS identity, and reinforcement expectation.

(A–C) Example OFC cells encoding context (A), CS identity (B) and reinforcement expectation of reward or no reward (C). Each plot is a peristimulus time histogram (PSTH) aligned on CS onset. (D–F) Example neurons from ACC. (G–I) Example neurons from amygdala. For all plots, fixation is acquired 1 sec before CS onset. PSTHs of example context-selective neurons exclude trials where the contextual cue was shown. By convention, CS1 is the stimulus that is paired with reward in Context 1. Thus, blue solid lines and red dashed lines both correspond to rewarded trials (indicated by + symbol), while red solid lines and blue dashed lines both correspond to non-rewarded trials (0 symbol). Vertical dashed lines represent, in order, CS onset, CS offset and US onset. Black arrows show where in the trial the specified feature is encoded. (J–L) Proportion of neurons in each brain area that significantly encode context (J), CS identity (K) and reinforcement expectation (L) as determined by linear regression analysis (Experimental Procedures). Asterisks indicate significant differences in proportions (* p<0.05, ** p<0.01, z-test for different proportions). Error bars indicate the estimated standard error of each proportion based on a binomial distribution.

We used a linear regression model to determine whether each neuron recorded encoded CS identity, context, or reinforcement expectation (see Experimental Procedures). Approximately 20% of neurons in each brain area encoded context (p < 0.05, Fig. 4J). CS identity was also encoded in all 3 brain areas, though the amygdala contained significantly more neurons selective for this parameter (p < 0.01, z-test, Fig. 4K). Reinforcement expectation was the most frequently encoded variable in neurons from each of the 3 brain areas (Fig. 4L).
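For readers who wish to reproduce the kind of comparison shown in Fig. 4J–L, the following sketch computes a binomial standard error for a proportion and a two-sided z-test for the difference between two proportions. It assumes the pooled-variance form of the test, which may differ from the exact variant used in the analysis. The population sizes (180 amygdala and 187 OFC neurons) are taken from the text, whereas the counts of selective neurons are invented for illustration.

```python
import math


def binomial_se(k, n):
    """Standard error of a proportion k/n under a binomial model."""
    p = k / n
    return math.sqrt(p * (1 - p) / n)


def two_proportion_z_test(k1, n1, k2, n2):
    """Two-sided z-test for a difference in proportions (pooled variance)."""
    p1, p2 = k1 / n1, k2 / n2
    pooled = (k1 + k2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # For a standard normal variable, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts of CS-identity-selective neurons (not the reported data).
z, p_value = two_proportion_z_test(90, 180, 56, 187)
print(f"z = {z:.2f}, p = {p_value:.2g}")
print(f"SE of amygdala proportion = {binomial_se(90, 180):.3f}")
```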

Population-level decoding of task-relevant variables

Single cells often had different types of selectivity in different time windows, such as, for example, context selectivity during the fixation interval and reinforcement expectation selectivity during the trace interval (Fig. 4A, D). The population of cells encoding a particular trial feature was therefore not necessarily identical at two different time points within a trial. Given this, we examined the encoding of task-relevant signals as a function of time by considering populations of neurons collectively (see e.g. Rigotti et al., 2013). A linear decoder was trained to read out trial features from the spike counts of the populations of neurons recorded in each brain area. This approach combines information from the entire population of neurons, including neurons that demonstrate mixed selectivity, defined as selectivity to specific combinations of trial features (e.g. CS1 in context 1 only) (Rigotti et al., 2013). The decoder accuracy provides a single summary statistic that quantifies how accurately the whole neuronal population represents information about any particular variable. This decoding accuracy reflects the mean difference in population spike counts between two conditions, relative to the variance of population spike counts. These spike counts are first averaged over the population by weighting each neuron according to the weight parameters found by training the decoder to discriminate maximally between two conditions (see Experimental Procedures). Fig. S3 shows the relation between the weight assigned to each neuron by the decoding algorithm and the coefficients found by fitting our linear regression model to each neuron.
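As a rough illustration of how a linear readout of this kind operates, the sketch below assigns one weight per neuron, projects each trial onto those weights, and classifies the trial by comparing the weighted sum with a threshold. It is a deliberately simple stand-in (a mean-difference readout applied to toy spike counts), not the decoder used in this study, whose details are given in the Experimental Procedures. All names and numbers are hypothetical.

```python
from statistics import mean


def train_readout(cond1, cond2):
    """Fit a mean-difference linear readout.

    cond1, cond2: lists of trials; each trial is a list of spike counts,
    one entry per neuron. Returns (weights, threshold).
    """
    n_neurons = len(cond1[0])
    mu1 = [mean(trial[i] for trial in cond1) for i in range(n_neurons)]
    mu2 = [mean(trial[i] for trial in cond2) for i in range(n_neurons)]
    weights = [a - b for a, b in zip(mu1, mu2)]
    # Threshold halfway between the two projected condition means.
    threshold = sum(w * (a + b) / 2 for w, a, b in zip(weights, mu1, mu2))
    return weights, threshold


def weighted_sum(weights, trial):
    """Project one trial's spike counts onto the readout weights."""
    return sum(w * x for w, x in zip(weights, trial))


def accuracy(weights, threshold, cond1, cond2):
    """Fraction of held-out trials assigned to the correct condition."""
    hits = sum(weighted_sum(weights, t) > threshold for t in cond1)
    hits += sum(weighted_sum(weights, t) <= threshold for t in cond2)
    return hits / (len(cond1) + len(cond2))


# Toy population of three neurons: two with opposite preferences, one untuned.
train_1 = [[5, 1, 3], [6, 2, 3]]
train_2 = [[1, 5, 3], [2, 6, 3]]
weights, threshold = train_readout(train_1, train_2)

# Accuracy is evaluated on trials that were not used for training.
print(accuracy(weights, threshold, [[4, 2, 3]], [[2, 4, 2]]))  # 1.0
```

In this toy case the untuned neuron receives a weight of zero, so it contributes nothing to the weighted sum.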

We used the decoder to compare and contrast the encoding of task-relevant variables within and between brain areas. The decoder was trained and tested on the same number of cells for each area (see Experimental Procedures). In our task, CS identity and context may be conceptualized as “inputs” needed to compute expected reinforcement, which is the “output” signal needed to direct behavior. Figure 5A plots cross-validated decoding accuracy for context and CS identity as a function of time on trials in which monkeys’ behavior anticipated the reinforcement correctly (“Correct trials”). The observed context selectivity was not based upon neural responses to visual contextual cues, as this analysis excluded trials in which a contextual cue appeared. Moreover, if the decoder was trained on a subset of trials that did not contain a contextual cue and then tested on held-out trials that did or did not contain a contextual cue, the decoding of context was almost the same regardless of whether a contextual cue appeared (Fig. S4). The context encoding therefore is not stimulus-driven but instead reflects the monkeys’ internal representation of context, an internal cognitive variable which was present in the amygdala as well as in PFC. Reinforcement expectation, the presumed output of the neural computation mediating task performance, also was encoded by neural representations in all 3 brain areas (Fig. 5B), consistent with prior observations (Cai and Padoa-Schioppa, 2012; Kennerley et al., 2011; Morrison et al., 2011; Niki and Watanabe, 1979; Padoa-Schioppa and Assad, 2006; Paton et al., 2006).

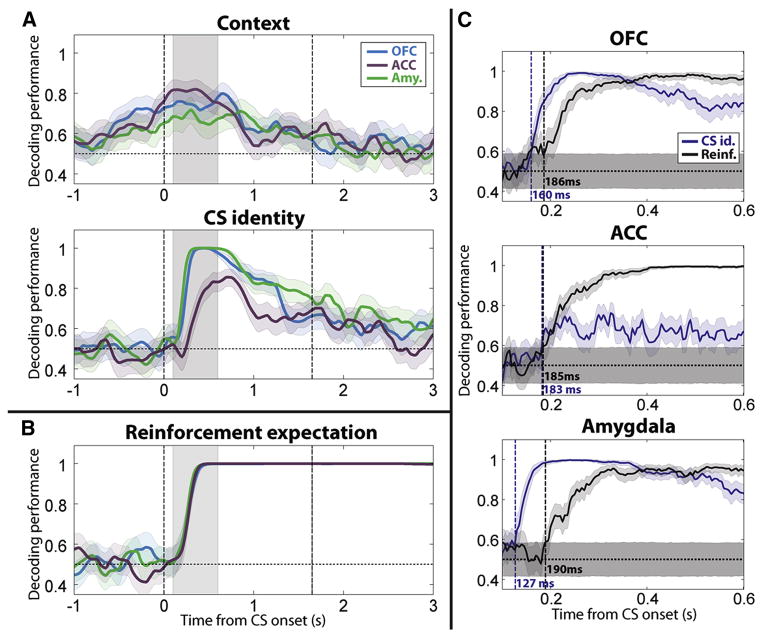

Figure 5. Population-level encoding of context and CS identity occurs fast enough to account for the correct anticipation of reinforcement.

(A) Performance of the linear decoder at reading out two task-relevant trial variables, context and CS identity, during “correct” trials (defined in the Experimental Procedures). These variables could be used as “inputs” to a neural computation of expected reinforcement. The decoding accuracy was computed on a 250-ms sliding window stepped every 50 ms across the trial for the three brain areas separately: blue, OFC; purple, ACC; green, amygdala. The number of cells from each population used in the decoder was equalized for comparisons across areas (see Experimental Procedures). Shaded areas indicate 95% confidence intervals (bootstrap). Vertical dashed lines represent CS onset and earliest possible US onset. Grey shaded area corresponds to 500-ms window from 100 to 600 ms after CS onset used in subsequent analyses. (B) Performance of the linear decoder at reading out reinforcement expectation, the output of a neural computation mediating task performance. (C) Relative timing between the CS identity signal (blue) and the reinforcement expectation signal (black) in OFC, ACC and the amygdala. The performance of the linear decoder was computed on a 50-ms sliding window stepped every 5 ms across the 500-ms time window shown in panels (A) and (B) (shaded area). Vertical dashed lines and corresponding labels indicate the first time bin where the decoding performance is significantly above chance level and remains above it for the 10 subsequent time bins. Shaded areas around chance level indicate 95% confidence intervals for the decoding performances when the two trial types (CS1 and CS2 or reward and no reward) were randomly labeled. See also Figures S3 and S4.

Neural representations of CS identity and context should become apparent at latencies as short as or shorter than those of representations of reinforcement expectation to support the computation underlying task performance. Decoding performance for context was significantly above the 50% chance level (95% confidence intervals, bootstrap) in amygdala, OFC and ACC commencing shortly after fixation point onset, long before reinforcement expectation was encoded. We determined whether CS identity signals also emerged fast enough to account for the computation of reinforcement expectation. Two linear decoders were trained to read out CS identity and reinforcement expectation with greater temporal precision during the 500-ms time window starting 100 ms after CS onset in which decoding performance for reinforcement expectation transitioned from chance level to nearly 100% (shaded area, Fig. 5A–B). In the amygdala, the CS identity signal appeared significantly earlier than the reinforcement expectation signal (p<0.05, bootstrap; Fig. 5C). In OFC and ACC, the CS identity signal became significantly greater than chance levels at about the same time as the reinforcement expectation signal (p>0.05, bootstrap; Fig. 5C). The CS identity signal appeared in the amygdala significantly earlier than it did in OFC and ACC (p<0.001 for both, bootstrap). Overall, CS identity and context information were present as early as or earlier than information about reinforcement expectation in all 3 brain areas, a requirement if these signals are being utilized to perform the task.
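The onset criterion described in the legend of Fig. 5C (the first time bin in which decoding performance is significantly above chance and remains so for the 10 subsequent bins) can be sketched as follows. The function name and the representation of "significantly above chance" as exceeding a per-bin upper bound of the shuffled-label confidence interval are assumptions made for this example.

```python
def onset_bin(accuracy, chance_upper, n_subsequent=10):
    """Index of the first bin that exceeds the chance bound and stays above
    it for the next `n_subsequent` bins; None if no such bin exists.

    accuracy:      decoding accuracy per time bin
    chance_upper:  upper bound of the chance-level interval per time bin
    """
    above = [a > c for a, c in zip(accuracy, chance_upper)]
    run_length = n_subsequent + 1  # the onset bin itself plus the following bins
    for i in range(len(above) - run_length + 1):
        if all(above[i:i + run_length]):
            return i
    return None


# Toy time course: a transient excursion at bin 1 is ignored because it is
# not sustained; the sustained rise begins at bin 3.
accuracy = [0.5, 0.7, 0.5] + [0.8] * 12
chance_upper = [0.6] * 15
print(onset_bin(accuracy, chance_upper))  # 3
```

Requiring a sustained run guards against labeling a single noisy bin as the signal onset.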

We next determined whether context and CS identity were encoded on trials where monkeys demonstrated inference unequivocally (i.e., in the first non-rewarded CSB trial after a block switch, Fig. 2A). Neural activity was analyzed during the 500-ms period where the reinforcement expectation signal emerges (100–600 ms after CS onset, shading in Fig. 5A, B). The amygdala, OFC, and ACC all encoded context and CS identity (Fig. 6A), indicating that the required signals were present when monkeys demonstrated inference. All 3 brain areas also signaled expected reinforcement accurately on these trials (Fig. 6B).

Figure 6. Context and CS identity signals are present during inference and reflect the linked sets of CS-US associations that define context.

(A) Decoding performance for context and CS identity on trials where subjects correctly inferred reinforcement expectation, as revealed by their licking behavior, on the first non-rewarded trial following one or more rewarded trials after a block switch. Neural activity was taken during the 500-ms time window shown starting 100 ms after CS onset. Error bars indicate 95% confidence intervals (bootstrap). (B) Decoding performance for reinforcement expectation on the same trials as in (A). (C) Decoding performance for context on trials following non-rewarded trials (CS1⁰ and CS2⁰) for a decoder trained on trials following rewarded trials (CS1⁺ and CS2⁺). Neural activity was analyzed during a time window extending from 400 ms before CS onset to 100 ms after CS appearance on trials without a contextual cue; this window ends before CS-related responses commence. Error bars indicate 95% confidence intervals (bootstrap).

Neural signals encoding context reflect cognitive abstraction

The utilization of inference indicates that monkeys knew the sets of CS-US associations that defined the two contexts. We therefore hypothesized that the observed neural representations of context reflect the process of abstraction that links together the pairs of CS-US associations within each context. In this case, the representation of context should be similar following both types of CS-US pairs (i.e. both trial types) that appear within a context. Alternatively, neurons might merely represent a memory of the CS-US pairing from the previous trial. In this case, decoding performance for context could be above chance since each CS-US pair appears only in one of the two contexts. However, in this scenario, the signal on trials following different CS-US pairs would not necessarily be similar.

We performed an analysis to determine whether the observed neural representations reflect the linked sets of CS-US associations defining each context, or instead the memory trace of the CS-US pair that appeared on the previous trial. The linear decoder was trained to decode context on trials preceded by two CS-US pairs belonging to different contexts (CS1 and CS2 when they were rewarded). We then tested whether one could decode context on trials preceded by the other two CS-US pairings (non-rewarded CS1 and CS2). If context can be correctly decoded, then the neural representations observed in the two types of trials of the same context must have something in common, which would indicate that CS1-Reward and CS2-NoReward trial types, defining context 1, are linked together in the neural representation. Analogously, CS1-NoReward and CS2-Reward trials, which define context 2, would also be linked together.

Context was decoded significantly at above chance levels in all 3 brain areas during the 500-ms epoch immediately before responses to CSs begin (Fig. 6C). The analysis was performed on trials that did not contain a contextual cue, so decoding could not have been based on a response to the contextual cue on the current trial. Moreover, the decoding of context was not related to the outcome of the preceding trials, since the decoder was tested on trials that followed two trial types with the same reinforcement outcome. Finally, the decoding of context was not due to the CS identity of the preceding trials, since CS1 was categorized as context 1 during training and context 2 during testing. Decoding context based on the CS identity of the previous trial would therefore produce an incorrect categorization. This analysis rules out the possibility that the decoding of context can merely be attributed to signals that reflect a memory trace of the CS-US association of the previous trial. The data indicate that the context signal instead reflects the linked sets of CS-US associations within each context.
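The reasoning behind this cross-condition test can be illustrated with a toy simulation. The sketch below is not the analysis code of this study: it uses a simple mean-difference readout and two invented populations. One carries an abstract context signal; the other carries only a memory of the previous CS. The readout is trained on trials that follow rewarded CSs and tested on trials that follow non-rewarded CSs. All names and spike counts are hypothetical.

```python
from statistics import mean


def fit_readout(ctx1_trials, ctx2_trials):
    """Return a function mapping a trial (list of spike counts) to context 1 or 2."""
    n = len(ctx1_trials[0])
    mu1 = [mean(t[i] for t in ctx1_trials) for i in range(n)]
    mu2 = [mean(t[i] for t in ctx2_trials) for i in range(n)]
    w = [a - b for a, b in zip(mu1, mu2)]
    thr = sum(wi * (a + b) / 2 for wi, a, b in zip(w, mu1, mu2))
    return lambda trial: 1 if sum(wi * x for wi, x in zip(w, trial)) > thr else 2


def generalization_accuracy(population):
    """Train after rewarded CSs, test after non-rewarded CSs."""
    train_ctx1 = population(context=1, prev_cs=1)  # previous trial: rewarded CS1
    train_ctx2 = population(context=2, prev_cs=2)  # previous trial: rewarded CS2
    test_ctx1 = population(context=1, prev_cs=2)   # previous trial: non-rewarded CS2
    test_ctx2 = population(context=2, prev_cs=1)   # previous trial: non-rewarded CS1
    decode = fit_readout(train_ctx1, train_ctx2)
    hits = sum(decode(t) == 1 for t in test_ctx1)
    hits += sum(decode(t) == 2 for t in test_ctx2)
    return hits / (len(test_ctx1) + len(test_ctx2))


JITTER = [-1, 0, 1]  # deterministic trial-to-trial variation for the toy data


def abstract_population(context, prev_cs):
    """Neuron 0 fires more in context 1, whatever the previous CS was."""
    base = 10 if context == 1 else 4
    return [[base + j, 5] for j in JITTER]


def memory_population(context, prev_cs):
    """Neuron 0 fires more after CS1, whatever the context is."""
    base = 10 if prev_cs == 1 else 4
    return [[base + j, 5] for j in JITTER]


print(generalization_accuracy(abstract_population))  # 1.0
print(generalization_accuracy(memory_population))    # 0.0
```

The memory-only population is classified systematically wrongly because the previous CS identity maps onto opposite contexts in the training and testing sets, which is why above-chance generalization argues against a pure memory-trace account.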

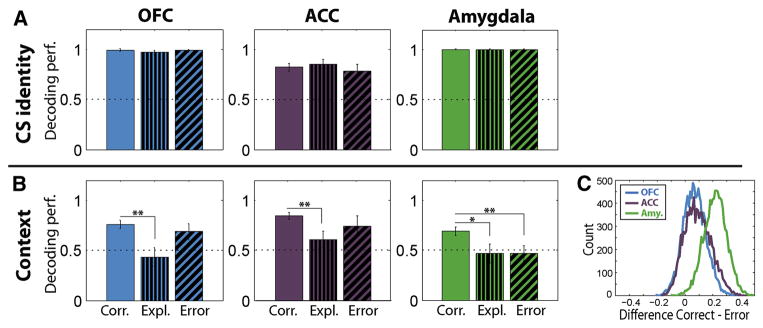

The neural representation of context during exploration and error trials

If neural encoding in OFC, ACC and/or the amygdala underlies the behavior that reflects the animal’s reward expectation, an alteration in encoding should be apparent when licking behavior does not predict reinforcement accurately. We therefore examined the relationship between neural representations and performance by separating trials into three types: exploration, error, and correct trials (Table S1). We trained the decoder to read out CS identity and context in a subset of correct trials, and we asked if using the decoder weightings established on correct trials would demonstrate reduced decoding accuracy on exploration and error trials. Training and testing were done in the time window where the reinforcement expectation signal emerges on correct trials (100–600 ms after CS onset). Both context and CS identity could be decoded with maximum accuracy within this time epoch on correct trials (Fig. 5A).

The encoding of CS identity remained strong during exploration phase and error trials in all 3 brain areas (Fig. 7A). Exploration phase and error trial behavior therefore appear unlikely to arise from an incorrect representation of CS identity. In contrast, a weakened representation of context could be responsible for exploration phase behavior: decoding accuracy of context was near chance levels and significantly reduced in all 3 brain areas (p<0.05, bootstrap, Fig. 7B). On error trials, only the decoding of context in the amygdala decreased significantly to chance level (p<0.01, bootstrap, Fig. 7B). The decoding performance for context information in OFC and ACC on error trials was not statistically different from correct trials (p>0.05, bootstrap). The amygdala exhibited a larger difference in decoding accuracy on correct vs. error trials than either OFC or ACC (p<0.01, Wilcoxon rank-sum test, Fig. 7C). The decreased decoding performance for context in the amygdala appeared to be due to a failure to maintain this representation during the CS interval. Decoding accuracy for context during the time epoch preceding responses to the CS was significantly above chance in all 3 brain areas (Fig. S5).

Figure 7. Neural correlates of correct, exploration and error behavior: decoder analyses.

Comparison of neural encoding of the two task-relevant trial features between correct trials, exploration-phase trials and error trials in the 500-ms time window starting 100 ms after CS onset (shaded area in Fig. 5A, B). (A) CS identity signal. (B) Context signal. Left: OFC. Middle: ACC. Right: amygdala. The number of trials used in the decoder was equalized across correct, exploration and error conditions for comparison purposes (see Experimental Procedures). Error bars indicate 95% confidence intervals (bootstrap). Horizontal, dotted line indicates chance level. Asterisks indicate significant differences in performance between conditions. * p<0.05, ** p<0.01, bootstrap. (C) Distribution of the differences in the decoding performance for context information between correct and error trials for OFC, ACC and the amygdala. The distributions were generated by repeated partitioning of the trials into training and testing sets (Experimental Procedures). See also Figure S5.

We next sought to understand whether the observed decreased decoding performance for context was due to decreased signal, increased noise, or both. As described above, training the decoder to discriminate between contexts during correct trials furnishes a weight for each neuron. These weights are used to compute a weighted sum of the activity of the neurons within a given population (see Experimental Procedures and Supplemental Experimental Procedures sections entitled “Definition and quantification of encoding signal”), which then determines the decision of the decoder. We computed this weighted sum within the same time interval as in Fig. 7B (100–600 ms after CS presentation). The mean difference between the weighted sum of spike counts in context 1 and context 2 trials provides a measure of context selectivity, which we label as the “context encoding signal” (Fig. 8A). Analogously, the variance of the weighted sum of spike counts (the “context encoding variance”) was computed during the same time epoch on correct, exploration, and error trials (Fig. 8B).
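To make the two quantities concrete, the sketch below computes a signal term and a variance term from fixed readout weights and toy spike counts. The signal follows the definition in the text (the difference between the mean weighted sums in the two contexts). The variance term is one plausible reading only: it averages the within-context sample variances of the weighted sums, and the exact estimator is defined in the Supplemental Experimental Procedures. All names and numbers are hypothetical.

```python
from statistics import mean, variance


def weighted_sums(weights, trials):
    """Weighted sum of spike counts across neurons, one value per trial."""
    return [sum(w * x for w, x in zip(weights, trial)) for trial in trials]


def context_encoding(weights, ctx1_trials, ctx2_trials):
    """Return (signal, noise) for a set of trials.

    signal: mean weighted sum in context 1 minus mean weighted sum in context 2
    noise:  within-context sample variance of the weighted sums, averaged over
            the two contexts (an assumed estimator, for illustration only)
    """
    s1 = weighted_sums(weights, ctx1_trials)
    s2 = weighted_sums(weights, ctx2_trials)
    signal = mean(s1) - mean(s2)
    noise = (variance(s1) + variance(s2)) / 2
    return signal, noise


# Weights as they might be obtained from training on correct trials (toy values).
weights = [1, -1]

# Toy "correct" trials: the two contexts are well separated.
correct = context_encoding(weights,
                           [[8, 2], [10, 2], [9, 2]],
                           [[2, 8], [2, 10], [2, 9]])

# Toy "error" trials: same trial-to-trial spread, much smaller separation.
error = context_encoding(weights,
                         [[5, 5], [7, 5], [6, 5]],
                         [[5, 5], [5, 7], [5, 6]])

print(correct)  # signal 14, variance 1
print(error)    # signal 2, variance 1
```

In this toy example, decoding would degrade on "error" trials solely because the signal term shrinks while the variance term is unchanged.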

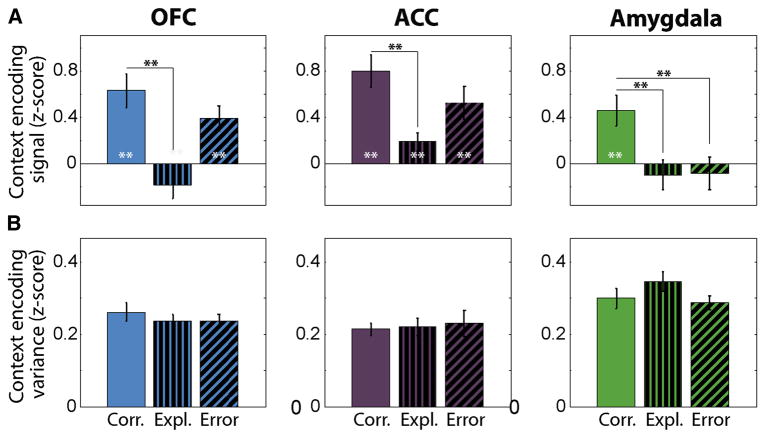

Figure 8. A decrease in encoding signal, not an increase in noise, accounts for the lower decoding accuracy of context.

(A) Context encoding signal, defined as the weighted sum (across neurons) of the mean difference in neural activity on context 1 and context 2 trials, is plotted for each brain area on correct, exploration and error trials. The weighted sum uses the weights from the decoder procedure (see Experimental Procedures and Supplemental Experimental Procedures). The context encoding signal is computed in the same time interval used in Fig. 7B (0.1–0.6 s after CS presentation). The drops in context encoding signal are consistent with the decreases in decoding accuracy in Fig. 7B. Grey asterisks inside bars indicate a significant context encoding signal. Black asterisks indicate significant differences between correct and exploration or error trials. * p<0.05, ** p<0.01, bootstrap. (B) Context encoding variance plotted for each brain area during correct, exploration and error trials from the same time epoch as in (A). The context encoding variance is a measure of the variability of the context encoding signal quantified as the sample variance of the difference of the weighted sums of neural activity between context 1 and context 2 trials. The context encoding variance does not show a significant change between correct, exploration and error trials in any brain area (p>0.05 for all comparisons, bootstrap).

The decreased decoding of context during exploration trials in all 3 brain areas, and during error trials in the amygdala, reflected a diminished strength of the context encoding signal within the neuronal populations rather than an increase in the variance (Fig. 8). On exploration trials, the context encoding signal decreased significantly as compared to correct trials in all 3 brain areas (p <0.01, bootstrap, Fig. 8A). On error trials, the context signal decreased significantly compared to correct trials only in the amygdala (p < 0.01, bootstrap, Fig. 8A), where the signal was not significantly above chance levels. By contrast, the variance of the context signal - a measure of noise due to neural variability - did not exhibit a significant change between correct, exploration and error trials in any brain area (Fig. 8B).

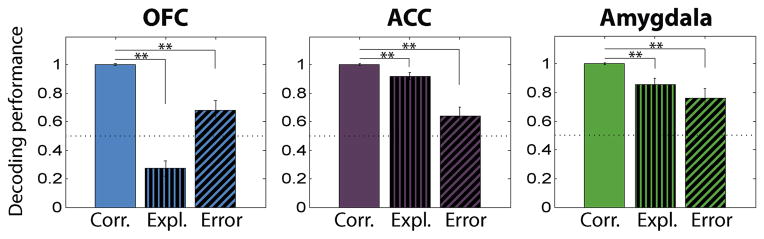

The neural representation of reinforcement expectation during exploration and error trials

The inconsistent licking behavior observed during exploration and error trials also correlated with a reduced encoding of reinforcement expectation during the trace interval. In the 1-sec time window preceding the US, performance of the population decoder during both the exploration phase and error trials decreased relative to correct trials in all 3 neural populations (p<0.01, bootstrap, Fig. 9). Although reduced, decoding accuracy for reinforcement expectation remained significantly above chance (or, for OFC during exploration trials, significantly below chance level, suggesting a persistence of the CS-US associations from the previous block). This result was qualitatively identical when performing this analysis in the same 500-ms window used for the CS identity and context signals. We do not know why monkeys fail to exploit the weakened, though still present, representation of reinforcement expectation to avoid errors in anticipatory behavior. It is possible that neural activity in brain regions downstream from the amygdala, OFC and ACC lacks reinforcement expectation encoding altogether on error trials.

Figure 9. Representation of reinforcement expectation during correct, exploration and error trials.

Comparison of neural encoding of reinforcement expectation between correct trials, exploration-phase trials and error trials in the 1-sec time window preceding the US delivery. Besides the time window used, methodology and conventions are the same as in Fig. 7.

Discussion

Emotions often arise upon seeing a stimulus associated with looming reinforcement. Adaptive emotional behavior requires brain mechanisms that regulate such emotions using cognitive operations. Here monkeys performed a trace-conditioning task in which the sets of CS-US associations reversed many times for two CSs, creating two task sets, or contexts. Monkeys used an internal representation of context to infer that the reinforcement contingencies of one CS had switched if they had first experienced the other CS-US pair after a reversal (Fig. 2A). This inference led to an abrupt and persistent behavioral transition, reflected by anticipatory licking switching in one trial to asymptotic levels (Fig. 2B). Behavioral adjustment in this task therefore appeared to be supported by a rapid switch-like activation of the internal representation of the current context (Rigotti et al., 2010). Remarkably, this even occurred on the first block switch of each session, before monkeys had experienced each CS in both contexts.

Neurophysiological recordings in the amygdala, OFC, and ACC revealed that all 3 brain areas provide a neural representation of cognitive context, or task set. This signal was present on trials that lacked contextual sensory cues (Figs. 4, 5) and in which monkeys used inference to adjust their anticipatory behavior (Fig. 6A). The context signal reflected a process of abstraction that linked the set of CS-US associations defining each context (Fig. 6C). The PFC has traditionally been proposed to provide a cognitive map of task space (O’Reilly, 2010; Wilson et al., 2014), a signal related to what we observed. The amygdala has not traditionally been described as playing this role in mediating behavior. The representation of cognitive context in the amygdala was strongly coupled to accurate reinforcement prediction (Fig. 7B and Fig. 8A). These data suggest that during cognitive regulation, the amygdala actively participates in the maintenance of cognitively relevant information.

Neural correlates of exploration

Immediately after a block switch, which was always signaled by a contextual cue, monkeys tended to lick on the first trial of the new block, and this default strategy could continue for a few trials. We refer to this behavior as “exploratory”, and it appears to compete with the strategy to employ inference based on knowledge of the task structure. Exploration involves an active sampling of the environment (Quilodran et al., 2008), and can arise from a mismatch between predictions about reward and environmental feedback (Cohen et al., 2007). Licking in response to a CS in our task is necessary to collect rewards, and it is one way a monkey can sample the environment to determine if a CS is rewarded. In our experiments, we classified trials as belonging to an exploratory phase using a behavioral criterion (Fig. 2C). Context encoding decreased dramatically in the amygdala, OFC, and ACC on these trials (Fig. 7B), which could be attributed to a decrease in signal rather than an increase in noise (Fig. 8). The decreased signal could arise from the fact that the exploration strategy does not require the active re-expression of abstract information about context.

Humans also exhibit exploratory phases, and the presence of such strategies can be adaptive. For example, if one uses a task that contains three or more contexts, or if a task employs unreliable feedback, immediate switching becomes impossible, and exploration is necessary to figure out the new context (Koechlin, 2014; Yu and Dayan, 2005; see also Collins and Koechlin, 2012; Donoso et al., 2014, who refer to a context as a “task set”). Exploration is also an important component of optimal behavior when new contexts are occasionally introduced. Humans tend to adopt the same strategy whether contexts are recurrent or new, even though learning new contexts is significantly more challenging (Collins and Koechlin, 2012). Thus subjects tend to use a strategy that is optimal for the most difficult situations, which may be encountered more often in the real world, even though this strategy may be sub-optimal for simpler cases.

Neural correlates of errors

Once monkeys exit the exploratory phase, their anticipatory licking predicts whether a trial is rewarded or not with high probability. Occasionally, however, monkeys either lick on a non-rewarded trial or they do not lick on a rewarded trial. In the amygdala, but not in OFC or ACC, we observed a significant decrease in the decoding performance of our linear decoder for the context signal on error trials during CS presentation (Fig. 7B). Further analysis revealed that this drop in decoding accuracy could be attributed to a loss in encoding signal, as indexed by changes in mean firing rates, and not to an increase in noise, as indexed by increases in firing rate variance (Fig. 8). Previous studies have also noted that activity in PFC representing abstract rules does not differ on correct compared to error trials (Mansouri et al., 2006).

Conceivably, the observed decrease in accuracy for decoding context could have resulted from a change in the encoding scheme on error trials rather than from a loss of context information. We consider this unlikely because the linear classifier successfully decoded context before the CS presentation on error trials (Fig. S5). The change in encoding scheme would therefore have to occur abruptly during error trials, an unlikely scenario. In general, our approach postulates that the performance of a linear decoder is a proxy for the information that can be decoded by a local downstream circuit. We assume that brain structures downstream of the areas we have studied are tuned to the encoding scheme utilized during correct trials, which are the trials on which we train the decoder. It seems unlikely that these downstream areas can instantaneously adapt to arbitrary codes on error trials.

Comparison with studies of context-dependent modulation of behavior and neural activity

The neural representation of cognitive context emerges in the amygdala, OFC, and ACC before a CS appears, and then is sustained during CS presentation, even when context is not cued by a sensory stimulus. We showed that this neural signal encodes the task set that defines a context by training a linear decoder to decode context on trials following 2 CS-US trial types, one from each of the 2 contexts, and then successfully decoding context on trials following the other 2 CS-US trial types (Fig. 6C). The neural representations of the pairs of CS-US associations that define each context are thereby linked. This representation reflects a process of cognitive abstraction connecting together the sets of associations that define each context.

The method through which we define and establish context in our experiments differs from prior studies investigating contextual fear-conditioning or context-specific extinction. In those studies, contexts are typically defined by a set of sensory stimuli appearing during presentation of a CS (Hobin et al., 2003; Maren et al., 2013; Orsini et al., 2013); these paradigms do not require the brain to activate an internal, uncued representation of context to respond appropriately. As a result, those paradigms likely invoke distinct brain mechanisms from those investigated here.

Studies that employ explicit sensory cues to signal context have suggested that the hippocampus represents this information (Anderson and Jeffery, 2003; Hayman et al., 2003; Holland and Bouton, 1999). By contrast, the amygdala has been found to play a critical role in forming and storing associative links between contexts (or cues) and reinforcing stimuli (Maren and Quirk, 2004; Maren et al., 2013). Consistent with this view, context-dependent modulation of responses to sensory stimuli predicting reinforcement has previously been reported in the amygdala (Bermudez and Schultz, 2010; Hirai et al., 2009; Hobin et al., 2003; Orsini et al., 2013) and elsewhere when animals undergo context-specific extinction (Bouton and Todd, 2014; Milad and Quirk, 2012; Orsini et al., 2013). The neural responses described in those studies could be related to changes in the subjective valuation of a CS depending upon context. For example, during context-specific extinction, a subject learns that a CS no longer predicts aversive stimulus delivery in one context. In a different context, the CS is not extinguished, so it still can elicit an emotional response. The observed context-dependent modulation of responses to a CS therefore could reflect the differential associative meaning of the CSs in each context, and not context per se.

The neural signals reflecting reinforcement expectation in our study correspond to the inferred value of a CS (Stalnaker et al., 2014), a context-dependent response which is the output of the computation that underlies monkeys’ performance. By contrast, neural signals representing CS identity and context are the input signals required to perform inference. The neural representations of cognitive context in the amygdala, OFC, and ACC are therefore fundamentally different, both in their nature and in their computational role, from prior observations of context-dependent modulations of neural activity in response to a CS.

Our task design bears resemblance to occasion setting tasks in which there is not a one-to-one mapping of a CS onto a US (Schmajuk and Holland, 1998). In these tasks, the interpretation of a CS depends upon the stimuli that “set the occasion” for the CS presentation. The hippocampus, and perhaps related structures like the rhinal cortices, are likely important for occasion setting (Holland et al., 1999; Yoon et al., 2011). These structures are interconnected with the amygdala (Stefanacci et al., 1996). Two aspects of our study distinguish it from typical occasion setting investigations. First, 60% of trials in our task did not contain a contextual cue (the equivalent of an occasion setter), and information about the current context had to be represented internally across trials. Second, monkeys exhibited inference on our task, which has not typically been reported during studies of occasion setting in rodent models. Thus our task probably engages distinct neural mechanisms from those revealed during studies of occasion setting.

Neural representations of cognitive context for Reinforcement Learning algorithms

Recent influential work has suggested that goal-directed learning is instantiated by model-based Reinforcement Learning (RL) algorithms in which an agent estimates state values by implementing a “cognitive search” procedure over an internal model of the environment (Daw, 2012; Daw et al., 2005; Doya, 1999; Rangel et al., 2008; Redish et al., 2008). This proposal is empirically supported by trial-by-trial fitting procedures that reveal high correlation between BOLD signals and the variables of model-based RL algorithms (Daw, 2011). A recent study shows that BOLD signals in the amygdala representing value and precision may be better correlated with model-based, as compared to model-free, algorithms on a Pavlovian serial reversal task (Prévost et al., 2013).

Our data are compatible with the notion that the amygdala might contribute to model-based RL, since the abstraction of cognitive variables corresponding to the states of an internal representation of the environment is a necessary premise for model-based computation. Furthermore, since monkeys adjusted their behavior by using inference, a process that cannot be explained in terms of re-learning changed CS-US contingencies, their behavior would not be captured by the model-free RL algorithms considered by Prévost et al. (2013). We emphasize, however, that once neural representations of internal states corresponding to external hidden variables like context are available, an agent might mimic goal-directed instrumental responses by implementing a reactive habitual policy over these internal states (Gershman et al., 2010; Redish et al., 2007; Rigotti et al., 2010). In this case, the agent would not need to perform an explicit search process. Our study does not determine which of these two algorithms, a model-based search over an internal representation of the environment or a reactive policy over internal states, accounts for how inference is implemented. Instead, we demonstrate the existence of a neural representation of the element that is common to both strategies: the abstraction and representation of an internal cognitive variable in amygdala, OFC, and ACC.

Traditional views of amygdala function have held that the amygdala learns about the motivational significance of stimuli so as to coordinate emotional responses (LeDoux, 2000), but have not ascribed to it a role in the maintenance of abstract cognitive information. The conceptual framework instead suggested by our data posits that representations of abstract information in the amygdala may play an important role in supporting computations of reinforcement expectation, thereby enabling subjects to respond flexibly to stimuli whose meaning differs depending upon the situation.

Experimental Procedures

Animals and behavioral task

Two rhesus monkeys (Macaca mulatta, one female, 5 kg, one male, 10 kg) were used in these experiments. All experimental procedures were in accordance with the National Institutes of Health guide for the care and use of laboratory animals and the Animal Care and Use Committees at New York State Psychiatric Institute and Columbia University. Methods are described in further detail in Supplemental Experimental Procedures.

Monkeys performed a serial-reversal trace-conditioning task in which they were presented one of two novel conditioned stimuli (CSs, fractal patterns) for 0.35 sec (monkey V) or for 0.15 sec (monkey C). A shorter CS presentation was used for monkey C to prevent systematic fixation breaks upon seeing the non-rewarded CS. After a 1.5 sec trace epoch, either a liquid reward US or nothing was delivered depending upon which CS had appeared. Rewarded and non-rewarded trials followed a pseudo-random schedule. The association between CS and US depended on the context in which the trial occurred. In Context 1, CS1 was paired with reward and CS2 was paired with no-reward; in Context 2 the associations were reversed. Blocks were randomly selected to last 30, 40 or 50 trials for monkey V and 20, 30 or 40 trials for monkey C. Context 1 and Context 2 blocks alternated 12–30 times during experiments. A contextual cue consisting of a color frame (Context 1, yellow; Context 2, blue) at the periphery of the screen appeared from fixation point onset until the end of the trace epoch on the first trial of a new block and overall on 40% of trials randomly selected.
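The block structure described above can be made concrete with a short simulation. The following is only an illustrative sketch, not the code used to run the experiments: the function name `make_session`, the use of independent random draws for CS selection (the actual schedule was pseudo-random) and the approximation of the 40% cue rate by a per-trial probability are all assumptions.

```python
import random

def make_session(n_blocks=12, block_lengths=(30, 40, 50), cue_fraction=0.4, seed=0):
    """Generate trial records for one hypothetical session (monkey V block lengths)."""
    rng = random.Random(seed)
    trials = []
    context = 1
    for block in range(n_blocks):
        n_trials = rng.choice(block_lengths)
        for k in range(n_trials):
            cs = rng.choice((1, 2))
            # Context 1: CS1 rewarded, CS2 not; Context 2: associations reversed.
            rewarded = (cs == 1) == (context == 1)
            # The cue is always shown on the first trial of a block; on other
            # trials it is shown with probability cue_fraction (an approximation).
            cued = (k == 0) or (rng.random() < cue_fraction)
            trials.append({"block": block, "context": context, "cs": cs,
                           "rewarded": rewarded, "cued": cued})
        context = 2 if context == 1 else 1  # contexts alternate between blocks
    return trials

trials = make_session()
```

Each record carries the hidden context, so downstream sketches can separate cued from uncued trials in the same way the analyses discard cued trials.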

Behavioral measures

Anticipatory licking behavior was measured by detecting the interruption of an infrared laser beam passing between the monkey’s lips and the reward delivery spout. Licking rate was defined as the proportion of time spent licking during the last 500 ms of the trace epoch. A threshold was applied to the licking rate to separate trials with high licking from trials with low licking (Fig. S6).

We defined the exploration phase as the set of trials at the beginning of a block that ends on the trial preceding the first non-rewarded trial in which the monkey’s licking rate was below threshold (Table S1). We defined error trials as trials after the exploration phase in a block in which the binary licking rate (reflecting monkey’s reward expectation) did not match the reinforcement delivered at the end of the trial (i.e. licking in a non-rewarded trial or not licking in a rewarded trial). Correct trials were defined as non-error trials outside of exploration phases.
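The trial classification just defined can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function name `classify_block` is hypothetical, "high licking" is taken to mean a licking rate strictly above threshold, and a block with no low-licking non-rewarded trial is treated as exploration throughout.

```python
def classify_block(rewarded, lick_rate, threshold):
    """Label each trial of one block as 'exploration', 'correct' or 'error'."""
    licked = [rate > threshold for rate in lick_rate]

    # Exploration ends on the trial preceding the first non-rewarded trial
    # with below-threshold licking, so that trial is the first one outside it.
    end = len(rewarded)
    for i, (rew, lick) in enumerate(zip(rewarded, licked)):
        if not rew and not lick:
            end = i
            break

    labels = []
    for i, (rew, lick) in enumerate(zip(rewarded, licked)):
        if i < end:
            labels.append("exploration")
        elif lick == rew:
            labels.append("correct")   # licking matches the delivered outcome
        else:
            labels.append("error")     # lick without reward, or no lick with reward
    return labels

labels = classify_block(
    rewarded=[True, False, True, False, True, False, True],
    lick_rate=[0.9, 0.8, 0.9, 0.1, 0.9, 0.7, 0.2],
    threshold=0.5,
)
```

In this toy block the monkey licks indiscriminately for three trials, withholds licking on the fourth (non-rewarded) trial, and later makes one error of each kind.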

Electrophysiological recordings

In each session, we individually advanced up to 8 tungsten electrodes into the 3 brain areas (1–4 to each area; impedance ~2 MΩ; FHC Instruments) using a motorized multi-electrode drive (NAN Instruments). Analog signals were amplified, band-pass filtered (250 Hz – 8 kHz) and digitized (40 kHz) using a Plexon MAP system (Plexon, Inc.). Single units were isolated offline using Plexon Offline Sorter. Recording sites in OFC were located between the medial orbital sulcus and the lateral orbital sulcus (Brodmann areas 13m and 13l). Recording sites in ACC were in the ventral bank of the anterior cingulate sulcus (area 24c). Amygdala recordings were largely in the basolateral complex.

Data Analysis

Linear regression analysis

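We fitted the firing rate of each cell with the following linear regression model:

$$\mathrm{FR} = \alpha + \beta_1\,\mathrm{US} + \beta_2\,\mathrm{CTXT} + \beta_3\,\mathrm{CS} + \varepsilon$$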

where US, CTXT and CS are binary vectors representing, for each trial, the reinforcement, the context and the CS, respectively; α is a constant term; β1, β2 and β3 are the coefficients and ε is the residual error. Trials in which the contextual cue was shown were excluded from the regression. The firing rate was taken from three time windows: Fixation interval (fixation point onset to CS onset), CS/Trace interval (CS onset to US onset) and US interval (US onset to US onset + 0.5 s). Cells were defined as context-coding if the CTXT term of the model significantly explained the firing rate in any of the three time windows (p<0.05, t-statistic with Bonferroni correction for multiple comparisons). Cells were defined as CS- or reinforcement-coding if the firing rate in the CS/Trace interval was significantly explained by the CS or US terms, respectively.
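A per-cell fit of this kind can be sketched with ordinary least squares. The example below is a hedged illustration on synthetic data, not the original analysis code: the function name `fit_cell` and the simulated coefficients are assumptions, and the Bonferroni-corrected significance test is left out.

```python
import numpy as np

def fit_cell(rate, us, ctxt, cs):
    """Least-squares fit of rate = a + b1*US + b2*CTXT + b3*CS + residual.

    Returns the coefficients (a, b1, b2, b3) and their t-statistics.
    """
    rate = np.asarray(rate, dtype=float)
    X = np.column_stack([np.ones(len(rate)), us, ctxt, cs]).astype(float)
    beta, *_ = np.linalg.lstsq(X, rate, rcond=None)
    resid = rate - X @ beta
    dof = len(rate) - X.shape[1]
    sigma2 = resid @ resid / dof                      # residual variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return beta, beta / se

# Synthetic cell (assumed values): reward follows from context and CS as in the task.
rng = np.random.default_rng(0)
ctxt = rng.integers(0, 2, 200)
cs = rng.integers(0, 2, 200)
us = (ctxt == cs).astype(int)
rate = 5 + 2 * us + 3 * ctxt - 1 * cs + rng.normal(0, 0.5, 200)
beta, tvals = fit_cell(rate, us, ctxt, cs)
```

Because reinforcement is the conjunction of context and CS rather than a linear combination of them, the three regressors are not collinear as long as all four context-CS combinations occur.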

Population decoding

Pseudo-simultaneous population response vectors

We used a population decoding algorithm for analyzing population neural activity; details appear in supplementary material. Briefly, the algorithm was based on a population decoder trained on pseudo-simultaneous population response vectors (Meyers et al., 2008). The components of these vectors corresponded to the spike counts of the recorded neurons in specific time bins. Within each trial we aligned the activity of neurons on CS onset and computed the spike count over time bins of 250 ms (Fig. 5A–B), 50 ms (Fig. 5C) or 500 ms (Figs. 6, 7, 8) that we displaced in steps of 50 ms (Fig. 5A–B) or 5 ms (Fig. 5C).
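The sliding-window spike counts can be illustrated as follows. This is a small sketch assuming spike times are expressed in milliseconds relative to CS onset; the function name `sliding_counts` and the example spike train are hypothetical.

```python
def sliding_counts(spike_times, start, stop, width, step):
    """Count spikes in windows [t, t + width) for t = start, start + step, ...

    All arguments are in ms relative to CS onset; the last window ends at stop.
    """
    n_windows = (stop - width - start) // step + 1
    counts = []
    for k in range(n_windows):
        t0 = start + k * step
        counts.append(sum(t0 <= s < t0 + width for s in spike_times))
    return counts

# 250-ms bins displaced in 50-ms steps, as used for Fig. 5A-B.
counts = sliding_counts([10, 120, 130, 260, 400], start=0, stop=500, width=250, step=50)
```

Overlapping windows trade temporal resolution for smoother estimates, which is why successive counts in the example change by only a spike or two.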

Given a task condition c and a time bin t (for instance, between 0 and 250 ms after CS presentation) we generated pseudo-simultaneous population response vectors by sampling, for every neuron i, the z-scored spike count in a trial in condition c, which we denote by $n^c_i(t)$. This procedure resulted in the single-trial population response vector $n^c(t) = (n^c_1(t), n^c_2(t), \ldots, n^c_N(t))$, where N is the number of recorded neurons in the area under consideration. We used the same number of neurons for all areas, randomly discarding excess neurons so that we could meaningfully compare findings across brain areas. We also discarded neurons for which we had fewer than 10 trials of “correct” behavior in each condition (i.e. in each context-CS combination). In total, this analysis focused on 143 neurons recorded in each brain area.
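The construction of pseudo-simultaneous vectors can be sketched as below. It is an illustration only: the helper names `zscore` and `pseudo_population` are hypothetical, and z-scoring each neuron over the trials passed in is an assumption, since the exact normalization is given in the supplementary material.

```python
import numpy as np

def zscore(counts):
    """Z-score one neuron's spike counts (assumed: over the trials supplied)."""
    counts = np.asarray(counts, dtype=float)
    sd = counts.std()
    centered = counts - counts.mean()
    return centered / sd if sd > 0 else centered

def pseudo_population(counts_by_neuron, n_samples, rng):
    """Combine independently recorded neurons into population vectors.

    counts_by_neuron: one 1-D array per neuron with its z-scored counts on
    trials of a single condition and time bin. For every output vector, each
    neuron contributes a count drawn with replacement from its own trials.
    Returns an array of shape (n_samples, n_neurons).
    """
    columns = [rng.choice(np.asarray(c), size=n_samples, replace=True)
               for c in counts_by_neuron]
    return np.column_stack(columns)

rng = np.random.default_rng(0)
pools = [zscore([3, 5, 4, 8]), zscore([0, 1, 0, 2, 1]), zscore([7, 7, 9])]
vectors = pseudo_population(pools, n_samples=1000, rng=rng)
```

Sampling each neuron independently discards trial-by-trial correlations between neurons, a known limitation of pseudo-population analyses.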

Training and testing the population decoder

Every trial in our task was indexed by one of 4 conditions given by the combination of CS identity (CS1 or CS2) and the reinforcement outcome (rewarded or non-rewarded). In all decoding analyses we discarded trials where the contextual cue was presented, eliminating contextual-cue selectivity as a possible explanation for apparent context selectivity.

All decoding analyses consisted of training a population decoder to discriminate between population response vectors belonging to two distinct classes that corresponded to two sets of experimental conditions for either CS identity, context, or expected reinforcement, and then testing the performance of the trained decoder on held-out trials in discriminating between the two classes. The decoder was always trained on trials where the licking behavior was “correct”. We tested decoder performance on correct, error and exploration trials. This allowed us to assess how the neural patterns of activity differ between the state in which the monkey’s prediction is correct and states in which the monkey is engaged in exploratory behavior or is incorrectly anticipating reinforcement.
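The train-on-correct, test-elsewhere logic can be sketched with a simple linear readout. The decoder below is a mean-difference classifier chosen for brevity, not the decoder described in the Supplemental Experimental Procedures, and the data are synthetic: the "error" vectors are generated with no class separation purely to show how a lost signal lowers accuracy. All names are hypothetical.

```python
import numpy as np

def train_linear(X_a, X_b):
    """Mean-difference linear readout: returns weights w and threshold b."""
    mu_a, mu_b = X_a.mean(axis=0), X_b.mean(axis=0)
    w = mu_a - mu_b
    b = w @ (mu_a + mu_b) / 2.0       # midpoint between projected class means
    return w, b

def accuracy(w, b, X_a, X_b):
    """Fraction of vectors assigned to the right class (class a if w.x > b)."""
    hits = np.sum(X_a @ w > b) + np.sum(X_b @ w <= b)
    return hits / (len(X_a) + len(X_b))

rng = np.random.default_rng(1)
n_neurons = 20

def draw(shift, n_vectors=100):
    """Synthetic population vectors with a common mean shift per neuron."""
    return rng.normal(shift, 1.0, size=(n_vectors, n_neurons))

# Train on "correct" trials only, then test on held-out data of each kind.
w, b = train_linear(draw(+0.5), draw(-0.5))
acc_correct = accuracy(w, b, draw(+0.5), draw(-0.5))   # signal present
acc_error = accuracy(w, b, draw(0.0), draw(0.0))       # signal absent (assumed)
```

Keeping the weights fixed after training is what makes the comparison meaningful: any drop in accuracy then reflects a change in the neural activity, not a change in the readout.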

Population response vectors for training and testing were generated by randomly sampling (with replacement) trial pools for each neuron 1,000 times. The average decoding performance, together with its statistical significance and confidence intervals, was estimated by repeating this procedure either 1,000 (Fig. 5A, B) or 10,000 times (Figs. 5C, 6, 7) (Golland et al., 2005). The significance of the decoding performance was determined by the percentage of partitions yielding a performance above 0.5 (chance level). Similarly, significant decreases in performance (Fig. 7) were defined by the percentage (95% or 99%) of partitions yielding a performance difference above 0.
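The resampling-based significance criterion can be illustrated as follows. This is a sketch under assumptions: the function names are hypothetical, the performance values are made up for the example, and the percentile rule for the confidence interval is one simple convention among several.

```python
def fraction_at_or_below(values, reference):
    """Share of resampled values that fail to exceed the reference level."""
    return sum(v <= reference for v in values) / len(values)

def percentile_interval(values, level=0.95):
    """Simple percentile confidence interval over resampled values."""
    ordered = sorted(values)
    lo = int((1 - level) / 2 * (len(ordered) - 1))
    hi = int((1 + level) / 2 * (len(ordered) - 1))
    return ordered[lo], ordered[hi]

# Hypothetical decoding performances from 50 repetitions of the procedure.
performances = [i / 100 for i in range(48, 98)]
p_value = fraction_at_or_below(performances, 0.5)   # test against chance level
interval = percentile_interval(performances)
```

The same helper applies to differences between conditions: a decrease counts as significant at the 5% level when fewer than 5% of the resampled differences are at or below zero.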

Definition and quantification of encoding signal

The decoding algorithm employed allowed us to quantify the signal collectively encoded in the neural population regarding context by summing the contribution of each neuron to the decoding performance in discriminating Context 1 and Context 2. This is accomplished by weighting the preference (i.e. the difference in trial average activity) of each neuron by the weight that the decoder attributes to each neuron as a consequence of the training procedure (see Supplemental Experimental Procedures for details). We define the encoding signal S as the (weighted) average preference across the population with regard to the two conditions (Contexts 1 and 2). The encoding signal is a global measure of the “strength” of the signal that our particular decoder can use in order to discriminate between the two different contexts. Analogously to the encoding signal S, we compute the corresponding encoding variance N, which measures the trial-to-trial variability of S (see Supplemental Experimental Procedures).

This method allows us to examine separately the encoding signal and the encoding variance, both of which may contribute to decoding performance. We could then determine whether a decrease in decoding performance between two experimental conditions is due to a decrease in signal (which quantifies the neural population selectivity) or an increase in variance (which quantifies the spike count variability across all neurons).
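The separation of signal and variance can be sketched by projecting each trial onto the decoder weights. The code below is an assumption-laden illustration: the function name is hypothetical, the weights are taken as given, and the variance is computed as the sum of the sample variances of the two projected distributions (the variance of the difference under independence), which may differ in normalization from the definition in the Supplemental Experimental Procedures.

```python
import numpy as np

def encoding_signal_and_variance(w, X1, X2):
    """Project trials onto decoder weights and summarize the two contexts.

    w:  decoder weights, shape (n_neurons,)
    X1: population vectors from Context 1, shape (n_trials_1, n_neurons)
    X2: population vectors from Context 2, shape (n_trials_2, n_neurons)
    """
    y1, y2 = X1 @ w, X2 @ w                      # one weighted sum per trial
    signal = y1.mean() - y2.mean()               # weighted mean difference
    variance = y1.var(ddof=1) + y2.var(ddof=1)   # assumed noise measure
    return signal, variance

w = np.array([1.0, 2.0])
X1 = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])   # projects to 1, 3, 5
X2 = np.array([[0.0, 0.0], [0.0, 1.0], [0.0, 2.0]])   # projects to 0, 2, 4
signal, variance = encoding_signal_and_variance(w, X1, X2)
```

Because the projection is linear, the signal equals the weighted sum of per-neuron mean differences, matching the verbal definition of S above.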

Comparison with alternative population decoder algorithms

The linear decoder described differs little from those using a Fisher discriminant method (Fisher, 1936). The Fisher discriminant method is equivalent to a multidimensional ROC analysis on the firing rates of all neurons simultaneously and is therefore very directly related to the activity of individual neurons. The decoding accuracy simply represents differences in mean spike counts between different conditions, averaged over the population by weighting each neuron with the strength of its selectivity.
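For reference, the textbook form of the Fisher discriminant for two classes can be written as below. The symbols are not taken from the original text and are introduced here for illustration: $\mu_1$ and $\mu_2$ are the class mean response vectors, $\Sigma$ is the pooled within-class covariance, $\sigma_i^2$ is the variance of neuron $i$, and $\theta$ is a decision threshold.

```latex
w \;\propto\; \Sigma^{-1}\,(\mu_1 - \mu_2), \qquad
\hat{c}(x) =
\begin{cases}
1 & \text{if } w^{\top} x > \theta \\
2 & \text{otherwise}
\end{cases}
\qquad\text{and, for diagonal } \Sigma:\quad
w_i \;\propto\; \frac{\mu_{1,i} - \mu_{2,i}}{\sigma_i^{2}}
```

In the diagonal case each neuron's weight is its mean difference scaled by its variance, which is one way to read the statement that neurons are weighted by the strength of their selectivity.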

The linear decoder we used is also tightly linked to conventional approaches such as regression analyses of single unit data. Figure S3 shows that the average weight (averaged across bagging folds within one training instance, see Supplemental Experimental Procedures) that the trained decoder attributes to each neuron is strongly correlated with the coefficient found when fitting the firing rate of each individual neuron with a simple linear regression model.

Supplementary Material

Highlights.

Monkeys used knowledge of contexts defined by task sets to predict upcoming rewards

Neurons in prefrontal cortex and the amygdala represented these abstract contexts

These representations reflected the linked sets of associations defining each context

Errors in performance correlated with reduced encoding of context in the amygdala

Acknowledgments

This work was supported by National Institutes of Health grant R01 MH082017, the James S. McDonnell Foundation, the Brain and Behavior Research Foundation and the Kavli Institute for Brain Science. S.O. was supported by the European Community’s Seventh Framework Programme through a Marie Curie International Outgoing Fellowship for Career Development and France’s Agence Nationale de la Recherche ANR-11-0001-02 PSL* and ANR-10-LABX-0087.

Footnotes

Author Contributions

A.S. and C.D.S. designed the study with contributions from S.F.; A.S. performed the experiments; A.S., M.R., S.O., S.F. and C.D.S. designed analyses; A.S., M.R. and S.O. performed analyses; A.S., M.R. and C.D.S. wrote the manuscript with contributions from S.O. and S.F.

References

- Agustín-Pavón C, Braesicke K, Shiba Y, Santangelo AM, Mikheenko Y, Cockroft G, Asma F, Clarke H, Man MS, Roberts AC. Lesions of ventrolateral prefrontal or anterior orbitofrontal cortex in primates heighten negative emotion. Biol Psychiatry. 2012;72:266–272. doi: 10.1016/j.biopsych.2012.03.007. [DOI] [PubMed] [Google Scholar]

- Ambroggi F, Ishikawa A, Fields HL, Nicola SM. Basolateral amygdala neurons facilitate reward-seeking behavior by exciting nucleus accumbens neurons. Neuron. 2008;59:648–661. doi: 10.1016/j.neuron.2008.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson MI, Jeffery KJ. Heterogeneous modulation of place cell firing by changes in context. J Neurosci Off J Soc Neurosci. 2003;23:8827–8835. doi: 10.1523/JNEUROSCI.23-26-08827.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balleine BW, Daw ND, O’Doherty JP. Multiple forms of value learning and the function of dopamine. Neuroeconomics Decis Mak Brain. 2008:367–385. [Google Scholar]

- Baxter MG, Murray EA. The amygdala and reward. Nat Rev Neurosci. 2002;3:563–573. doi: 10.1038/nrn875. [DOI] [PubMed] [Google Scholar]

- Bermudez M, Schultz W. Responses of amygdala neurons to positive reward-predicting stimuli depend on background reward (contingency) rather than stimulus-reward pairing (contiguity) J Neurophysiol. 2010;103:1158–1170. doi: 10.1152/jn.00933.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouton ME, Todd TP. A fundamental role for context in instrumental learning and extinction. Behav Processes. 2014;104:13–19. doi: 10.1016/j.beproc.2014.02.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bromberg-Martin E, Matsumoto M, Hong S, Hikosaka O. A pallidus-habenula-dopamine pathway signals inferred stimulus values. J Neurophysiol. 2010;104:1068–1076. doi: 10.1152/jn.00158.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckley M, Mansouri F, Hoda H, Mahboubi M, Browning P, Kwok S, Phillips A, Tanaka K. Dissociable Components of Rule-Guided Behavior Depend on Distinct Medial and Prefrontal Regions. Science. 2009;325:52–58. doi: 10.1126/science.1172377. [DOI] [PubMed] [Google Scholar]

- Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. J Neurosci Off J Soc Neurosci. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carelli RM, Williams JG, Hollander JA. Basolateral amygdala neurons encode cocaine self-administration and cocaine-associated cues. J Neurosci Off J Soc Neurosci. 2003;23:8204–8211. doi: 10.1523/JNEUROSCI.23-23-08204.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen JD, McClure SM, Yu AJ. Should I stay or should I go? How the human brain manages the trade-off between exploitation and exploration. Philos Trans R Soc Lond B Biol Sci. 2007;362:933–942. doi: 10.1098/rstb.2007.2098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Collins A, Koechlin E. Reasoning, learning, and creativity: frontal lobe function and human decision-making. PLoS Biol. 2012;10:e1001293. doi: 10.1371/journal.pbio.1001293. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daw ND. Trial-by-trial data analysis using computational models. Decision Making, Affect, and Learning: Attention and Performance. 2011;XXIII:3–38. [Google Scholar]

- Daw ND. Cognitive Search: Evolution, Algorithms and the Brain. MIT Press; 2012. Model-based reinforcement learning as cognitive search: neurocomputational theories. [Google Scholar]

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Doll B, Simon D, Daw N. The ubiquity of model-based reinforcement learning. Curr Opin Neurobiol. 2012;22:1075–1081. doi: 10.1016/j.conb.2012.08.003.

- Donoso M, Collins AGE, Koechlin E. Human cognition. Foundations of human reasoning in the prefrontal cortex. Science. 2014;344:1481–1486. doi: 10.1126/science.1252254.

- Doya K. What are the computations of the cerebellum, the basal ganglia and the cerebral cortex? Neural Netw. 1999;12:961–974. doi: 10.1016/s0893-6080(99)00046-5.

- Durstewitz D, Vittoz N, Floresco S, Seamans J. Abrupt transitions between prefrontal neural ensemble states accompany behavioral transitions during rule learning. Neuron. 2010;66:438–448. doi: 10.1016/j.neuron.2010.03.029.

- Everitt BJ, Cardinal RN, Parkinson JA, Robbins TW. Appetitive behavior: impact of amygdala-dependent mechanisms of emotional learning. Ann N Y Acad Sci. 2003;985:233–250.

- Fisher RA. The Use of Multiple Measurements in Taxonomic Problems. Ann Eugen. 1936;7:179–188.

- Frankland PW, Bontempi B, Talton LE, Kaczmarek L, Silva AJ. The Involvement of the Anterior Cingulate Cortex in Remote Contextual Fear Memory. Science. 2004;304:881–883. doi: 10.1126/science.1094804.

- Fusi S, Asaad WF, Miller EK, Wang XJ. A neural circuit model of flexible sensorimotor mapping: learning and forgetting on multiple timescales. Neuron. 2007;54:319–333. doi: 10.1016/j.neuron.2007.03.017.

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613.

- Gershman S, Blei D, Niv Y. Context, learning, and extinction. Psychol Rev. 2010;117:197–209. doi: 10.1037/a0017808.

- Ghashghaei H, Barbas H. Pathways for emotion: interactions of prefrontal and anterior temporal pathways in the amygdala of the rhesus monkey. Neuroscience. 2002;115:1261–1279. doi: 10.1016/s0306-4522(02)00446-3.

- Ghashghaei H, Hilgetag C, Barbas H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. NeuroImage. 2007;34:905–923. doi: 10.1016/j.neuroimage.2006.09.046.

- Golland P, Liang F, Mukherjee S, Panchenko D. Permutation Tests for Classification. In: Auer P, Meir R, editors. Learning Theory. Springer; Berlin Heidelberg: 2005. pp. 501–515.

- Hampton A, Adolphs R, Tyszka M, O’Doherty J. Contributions of the Amygdala to Reward Expectancy and Choice Signals in Human Prefrontal Cortex. Neuron. 2007;55:545–555. doi: 10.1016/j.neuron.2007.07.022.

- Hayman RMA, Chakraborty S, Anderson MI, Jeffery KJ. Context-specific acquisition of location discrimination by hippocampal place cells. Eur J Neurosci. 2003;18:2825–2834. doi: 10.1111/j.1460-9568.2003.03035.x.

- Hirai D, Hosokawa T, Inoue M, Miyachi S, Mikami A. Context-dependent representation of reinforcement in monkey amygdala. Neuroreport. 2009;20:558–562. doi: 10.1097/WNR.0b013e3283294a2f.

- Hobin J, Goosens K, Maren S. Context-dependent neuronal activity in the lateral amygdala represents fear memories after extinction. J Neurosci. 2003;23:8410–8416. doi: 10.1523/JNEUROSCI.23-23-08410.2003.

- Holland PC, Bouton ME. Hippocampus and context in classical conditioning. Curr Opin Neurobiol. 1999;9:195–202. doi: 10.1016/s0959-4388(99)80027-0.

- Holland P, Lamoureux J, Han JS, Gallagher M. Hippocampal lesions interfere with Pavlovian negative occasion setting. Hippocampus. 1999;9:143–157. doi: 10.1002/(SICI)1098-1063(1999)9:2<143::AID-HIPO6>3.0.CO;2-Z.

- Kennerley SW, Behrens TEJ, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- Koechlin E. An evolutionary computational theory of prefrontal executive function in decision-making. Philos Trans R Soc Lond B Biol Sci. 2014;369. doi: 10.1098/rstb.2013.0474.

- LeDoux JE. Emotion circuits in the brain. Annu Rev Neurosci. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.

- Mansouri FA, Tanaka K. Behavioral evidence for working memory of sensory dimension in macaque monkeys. Behav Brain Res. 2002;136:415–426. doi: 10.1016/s0166-4328(02)00182-1.

- Mansouri F, Matsumoto K, Tanaka K. Prefrontal Cell Activities Related to Monkeys’ Success and Failure in Adapting to Rule Changes in a Wisconsin Card Sorting Test Analog. J Neurosci. 2006;26:2745–2756. doi: 10.1523/JNEUROSCI.5238-05.2006.

- Maren S, Quirk GJ. Neuronal signalling of fear memory. Nat Rev Neurosci. 2004;5:844–852. doi: 10.1038/nrn1535.

- Maren S, Phan L, Liberzon I. The contextual brain: implications for fear conditioning, extinction and psychopathology. Nat Rev Neurosci. 2013;14:417–428. doi: 10.1038/nrn3492.

- Meyers EM, Freedman DJ, Kreiman G, Miller EK, Poggio T. Dynamic population coding of category information in inferior temporal and prefrontal cortex. J Neurophysiol. 2008;100:1407–1419. doi: 10.1152/jn.90248.2008.

- Milad MR, Quirk GJ. Fear extinction as a model for translational neuroscience: ten years of progress. Annu Rev Psychol. 2012;63:129–151. doi: 10.1146/annurev.psych.121208.131631.

- Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron. 2011;71:1127–1140. doi: 10.1016/j.neuron.2011.07.016.

- Murray EA, Wise SP. Interactions between orbital prefrontal cortex and amygdala: advanced cognition, learned responses and instinctive behaviors. Curr Opin Neurobiol. 2010;20:212–220. doi: 10.1016/j.conb.2010.02.001.

- Niki H, Watanabe M. Prefrontal and cingulate unit activity during timing behavior in the monkey. Brain Res. 1979;171:213–224. doi: 10.1016/0006-8993(79)90328-7.

- Ochsner KN, Gross JJ. The cognitive control of emotion. Trends Cogn Sci. 2005;9:242–249. doi: 10.1016/j.tics.2005.03.010.

- O’Reilly RC. The What and How of Prefrontal Cortical Organization. Trends Neurosci. 2010;33:355–361. doi: 10.1016/j.tins.2010.05.002.

- Orsini CA, Yan C, Maren S. Ensemble coding of context-dependent fear memory in the amygdala. Front Behav Neurosci. 2013;7:199. doi: 10.3389/fnbeh.2013.00199.

- Padoa-Schioppa C, Assad J. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- Pickens CL, Holland PC. Conditioning and cognition. Neurosci Biobehav Rev. 2004;28:651–661. doi: 10.1016/j.neubiorev.2004.09.003.

- Prévost C, McNamee D, Jessup RK, Bossaerts P, O’Doherty JP. Evidence for Model-based Computations in the Human Amygdala during Pavlovian Conditioning. PLoS Comput Biol. 2013;9:e1002918. doi: 10.1371/journal.pcbi.1002918.

- Quilodran R, Rothe M, Procyk E. Behavioral Shifts and Action Valuation in the Anterior Cingulate Cortex. Neuron. 2008;57:314–325. doi: 10.1016/j.neuron.2007.11.031.

- Quirk G, Mueller D. Neural mechanisms of extinction learning and retrieval. Neuropsychopharmacology. 2008;33:56–72. doi: 10.1038/sj.npp.1301555.

- Quirk GJ, Repa C, LeDoux JE. Fear conditioning enhances short-latency auditory responses of lateral amygdala neurons: parallel recordings in the freely behaving rat. Neuron. 1995;15:1029–1039. doi: 10.1016/0896-6273(95)90092-6.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- Redish AD, Jensen S, Johnson A, Kurth-Nelson Z. Reconciling reinforcement learning models with behavioral extinction and renewal: implications for addiction, relapse, and problem gambling. Psychol Rev. 2007;114:784–805. doi: 10.1037/0033-295X.114.3.784.

- Redish AD, Jensen S, Johnson A. A unified framework for addiction: vulnerabilities in the decision process. Behav Brain Sci. 2008;31:415–437; discussion 437–487. doi: 10.1017/S0140525X0800472X.

- Rescorla RA. Behavioral Studies of Pavlovian Conditioning. Annu Rev Neurosci. 1988;11:329–352. doi: 10.1146/annurev.ne.11.030188.001553.

- Rich EL, Wallis JD. Medial-lateral organization of the orbitofrontal cortex. J Cogn Neurosci. 2014;26:1347–1362. doi: 10.1162/jocn_a_00573.

- Rigotti M, Ben Dayan Rubin D, Morrison SE, Salzman CD, Fusi S. Attractor concretion as a mechanism for the formation of context representations. NeuroImage. 2010;52:833–847. doi: 10.1016/j.neuroimage.2010.01.047.

- Rigotti M, Barak O, Warden MR, Wang XJ, Daw ND, Miller EK, Fusi S. The importance of mixed selectivity in complex cognitive tasks. Nature. 2013;497:585–590. doi: 10.1038/nature12160.

- Roberts AC, Reekie Y, Braesicke K. Synergistic and regulatory effects of orbitofrontal cortex on amygdala-dependent appetitive behavior. Ann N Y Acad Sci. 2007;1121:297–319. doi: 10.1196/annals.1401.019.

- Rudy JW, Huff NC, Matus-Amat P. Understanding contextual fear conditioning: insights from a two-process model. Neurosci Biobehav Rev. 2004;28:675–685. doi: 10.1016/j.neubiorev.2004.09.004.

- Saddoris MP, Gallagher M, Schoenbaum G. Rapid associative encoding in basolateral amygdala depends on connections with orbitofrontal cortex. Neuron. 2005;46:321–331. doi: 10.1016/j.neuron.2005.02.018.

- Sakai K. Task Set and Prefrontal Cortex. Annu Rev Neurosci. 2008;31:219–245. doi: 10.1146/annurev.neuro.31.060407.125642.

- Salzman D, Fusi S. Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annu Rev Neurosci. 2010;33:173–202. doi: 10.1146/annurev.neuro.051508.135256.

- Schmajuk NA, Holland PC. Occasion setting: Associative learning and cognition in animals. Washington, DC, US: American Psychological Association; 1998.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Senn V, Wolff S, Herry C, Grenier F, Ehrlich I, Gründemann J, Fadok J, Müller C, Letzkus J, Lüthi A. Long-range connectivity defines behavioral specificity of amygdala neurons. Neuron. 2014;81:428–437. doi: 10.1016/j.neuron.2013.11.006.

- Shabel SJ, Janak PH. Substantial similarity in amygdala neuronal activity during conditioned appetitive and aversive emotional arousal. Proc Natl Acad Sci U S A. 2009;106:15031–15036. doi: 10.1073/pnas.0905580106.

- Stalnaker TA, Cooch NK, McDannald MA, Liu TL, Wied H, Schoenbaum G. Orbitofrontal neurons infer the value and identity of predicted outcomes. Nat Commun. 2014;5:3926. doi: 10.1038/ncomms4926.

- Stefanacci L, Amaral DG. Topographic organization of cortical inputs to the lateral nucleus of the macaque monkey amygdala: A retrograde tracing study. J Comp Neurol. 2000;421:52–79. doi: 10.1002/(sici)1096-9861(20000522)421:1<52::aid-cne4>3.0.co;2-o.

- Stefanacci L, Suzuki WA, Amaral DG. Organization of connections between the amygdaloid complex and the perirhinal and parahippocampal cortices in macaque monkeys. J Comp Neurol. 1996;375:552–582. doi: 10.1002/(SICI)1096-9861(19961125)375:4<552::AID-CNE2>3.0.CO;2-0.

- Stokes M, Kusunoki M, Sigala N, Nili H, Gaffan D, Duncan J. Dynamic Coding for Cognitive Control in Prefrontal Cortex. Neuron. 2013;78:364–375. doi: 10.1016/j.neuron.2013.01.039.

- Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948;55:189–208. doi: 10.1037/h0061626.

- Tye KM, Stuber GD, de Ridder B, Bonci A, Janak PH. Rapid strengthening of thalamo-amygdala synapses mediates cue-reward learning. Nature. 2008;453:1253–1257. doi: 10.1038/nature06963.

- Wallis J, Anderson K, Miller E. Single neurons in prefrontal cortex encode abstract rules. Nature. 2001;411:953–956. doi: 10.1038/35082081.