Abstract

There is growing consensus about the factors critical for development and productivity of multidisciplinary teams, but few studies have evaluated their longitudinal changes. We present a longitudinal study of 10 multidisciplinary translational teams (MTTs), based on team process and outcome measures, evaluated before and after 3 years of CTSA collaboration. Using a mixed methods approach, an expert panel of five judges (familiar with the progress of the teams) independently rated team performance based on four process and four outcome measures, and achieved a rating consensus. Although all teams made progress in translational domains, other process and outcome measures were highly variable. The trajectory profiles identified four categories of team performance. Objective bibliometric analysis of CTSA‐supported MTTs with positive growth in process scores showed that these teams tended to have enhanced scientific outcomes and published in new scientific domains, indicating the conduct of innovative science. Case exemplars revealed that MTTs that experienced growth in both process and outcome evaluative criteria also experienced greater innovation, defined as publications in different areas of science. Of the eight evaluative criteria, leadership‐related behaviors were the most resistant to the interventions introduced. Well‐managed MTTs demonstrate objective productivity and facilitate innovation.

Keywords: translational research, multidisciplinary teams, team evolution

Introduction

The case for teams in translational science

Biomedical investigators are increasingly challenged to integrate insights from high‐throughput “omics” research, focused hypothesis‐driven basic research, and epidemiological studies to advance human health. Consequently, understanding larger biosocial health problems requires interdisciplinary research teams.1 Major biomedical science advances are more often the result of multiinvestigator studies, and collaborative work has higher scientific impact and utility.2, 3

Translational research has also increasingly adopted interdisciplinary approaches. Accordingly, there is considerable potential to apply knowledge from the nascent field of the “Science of Team Science” to facilitate translational research within the Clinical and Translational Sciences Award (CTSA) framework.4, 5, 6 Based on experience in other fields, it is reasonable to suggest that effective adoption of team approaches would accelerate translation of new discoveries into useful products and/or interventions.7 Previously, we evaluated best practices from a systematic survey of 200 publications and proposed an academic‐industry hybrid team model, the Multidisciplinary Translational Team (MTT), to generate new knowledge and deliverable products.8 MTTs are a unique combination of several team types adapted for an academic environment, with a dynamic core of scientists who interact on a common translational problem. Senior leadership provides domain‐specific expertise, practical guidance, and modeling of interprofessional and leadership skills to trainees (project managers). MTTs require complementary activity of their participants, but not necessarily domain integration, which is a goal of transdisciplinarity.8

The status of team science

Team science presently incorporates multiinstitutional collaborations,2, 3 multidisciplinary approaches to complex research,9 and training of scientists.10 Because the field is rapidly evolving, theoretical frameworks for studying the formation, evolution, and effectiveness of teams are in their infancy.5, 6, 7 The effects of institutional context and interventions on translational research teams are also unclear.6, 9

Instantiation of MTTs in the CTSA

MTTs are composed of a strategic core structure, with academically defined individual scientific roles including those of a principal investigator, collaborating scientists from multiple disciplines, a project manager, and trainees. The strategic core may change over time as projects are initiated and concluded; these dynamic changes influence collaboration and performance within the team. MTTs were selected for CTSA support through a request for applications (RFA) and a two‐tier competitive peer review by a Scientific Review Committee. The first tier focused on the scientific impact and innovation of the proposed science (a translational research project involving human subjects); the second tier focused on team leadership, qualities of the principal investigator(s), involvement of trainees, and a team development plan. The CTSA embedded a team coach who met regularly with the MTT and worked to improve the development plan in order to increase team effectiveness and capacity. MTTs selected for funding received a start‐up pilot grant as well as access to CTSA‐supported Key Resources, and focused their initial effort on conducting a specific collaborative translational project. Currently, 14 teams collaborate with the CTSA, involving 273 investigators (mean = 21 ± 9.8 members/MTT); of these, 59 are graduate/postgraduate students (mean = 4.9 ± 6 trainees/MTT) and 54 are junior faculty trainees (mean = 4.1 ± 3 assistant professors/MTT). All CTSA‐supported KL2 scholars participate in MTTs. Attendance at typical MTT meetings ranged from 4 to 15 people.

General CTSA interventions

The CTSA provided several important infrastructure and team development interventions to all MTTs. Teams underwent an “onboarding” meeting at their initiation to orient the team to the CTSA, and to familiarize team members with the constructs of team science, operational considerations of the CTSA, and mutual expectations. Subsequently, the CTSA helped each team to produce a Team Development Planner, in which specific tasks and objectives related to team and individual (e.g., a Project Manager) development were articulated. We organized a weekly lecture series in translational science, and special monthly seminars in team science. To facilitate communication and interteam collaboration within the CTSA, the CTSA leadership also organized a Team Leadership Council (TLC), which functioned as a peer mentoring network. The TLC met monthly to share best practices in team leadership, and review team progress. CTSA leadership conducted periodic meetings with MTT leadership, and periodically attended MTT meetings and provided feedback. The CTSA also organized a team‐building workshop, in which all MTTs participated. Other general support included a postdoctoral certificate in team science, a rich set of team science resources on our Web site, and ad hoc communications with CTSA leadership.

Measuring team performance

In an academic environment, an MTT may persist for years; thus, it is essential to measure how teams evolve, whether effective teams lead to increased scientific progress, and how institutional infrastructure can better promote productivity and innovation. To understand the evolution of MTTs, we generated mixed method assessments of the outcomes and team processes of MTTs using expert reviewers.11 We studied a cohort of teams and developed a reproducible classification from which to characterize the time‐dependent evolution of MTTs.

A fundamental limitation in studying team science is the lack of sophisticated and useful evaluation models, techniques, and methods.5, 12 Due to the complexity of team science, most investigators recognize the need to employ mixed methods approaches,7, 13 including social network analysis,14, 15 interviews and focus groups,16, 17 and surveys.12, 18

Evaluation methodology is informed by the outcomes important for specific team structures. In some cases, outcome evaluation (e.g., milestones and timelines) should predominate, but in other cases, process evaluation (e.g., team interaction, communication, and development) may be more important.11 Hence, a key factor in evaluation is determining the components of team function that are most important to that team's scientific questions.9, 19 A range of contextual (e.g., external environment, task design, group size), process (e.g., norms, cohesion, communication patterns), and cognitive variables (e.g., mental models, efficacy beliefs, and memory) influence effective team states (e.g., satisfaction, commitment) and outcomes (e.g., performance, publications, grants, turnover).20, 21, 22, 23

We built upon a previous effort11 and examined translational team longitudinal performance trajectories. There were three broad objectives of this study. First, we measured team performance (team processes and team outcomes) over time. Second, we identified team trajectory patterns, and used illustrative case information to understand context. Third, we developed recommendations for team assembly, management, and developmental interventions.

Methods

Overview of mixed method approach

Because interdisciplinary teams involve collaborations that change over time, blend diverse disciplines,6 and involve boundary‐spanning collaborations,12, 24 assessment and evaluation are inherently complex.7 Some consensus exists that mixed method approaches might best address these complexities, and allow for analyses that neither qualitative nor quantitative approaches could provide by themselves.25, 26 Application of mixed methods involves thoughtful selection of design options, careful sampling practices, and a sophisticated process of data analysis and inference.25, 27 Extending our previous efforts,8, 11 we employed an approach using various data sources: scored grant applications, milestone completion reports, citation indices, team meeting notes, Web‐based surveys, observation scales, and team development planners. Outcome‐based evaluation here refers to team‐based goal accomplishment (e.g., milestones, publications, etc.), and process evaluation refers to team‐based processes (e.g., leadership, collaboration, team development, etc.).

Team evaluation criteria

Using data from these measures and methods, we applied an existing team evaluation model.11 Data from the qualitative and quantitative sources above were reduced to produce a balanced panel of eight criteria (Table 1), comprising four process measures and four outcome measures.

Table 1.

Evaluation factors/criteria and list of teams

| Research/scientific outcome factors | Team process development factors |
|---|---|
| RP: research plan | VC: vision and charter |
| Novel and sophisticated plan | Established identity |
| Conceptually or technically innovative | Shared vision of future |
| Developed from team consensus | |
| RG: research generation | TL: transformative leadership |
| Productivity, data collection, analysis | Leader solicits and integrates contributions from team |
| Appropriate use of key resources | Leader builds consensus |
| Periodic exploration of new opportunities | |
| Explore synergy | |
| RC: research communication/program growth | MM: meeting management |
| Publications | Regular meetings |
| Grant application success | Agenda‐driven meeting |
| PT: progress in translation | EC: external communication/collaboration |
| Clinical or community impact | Interaction with collaborators beyond the team and outside the institution |
| Additive or synergistic research productivity | |
| Teams: | |
| Addictions and impulse control disorders | Aging muscle and sarcopenia |
| Burns injury and response | Epidemiology of estrogens |
| Hepatocellular carcinoma biomarkers | Maternal fetal medicine |
| Novel therapeutics for Clostridium difficile | Obesity and its metabolic complications |
| Pediatric respiratory infections—bronchiolitis | Pediatric respiratory infections—otitis media |
| Phenotypes of severe asthma | Reproductive women's health |

Data collection

Data from 10 different MTTs were collected at two time points, in 2011 and 2013. These teams were diverse in disease focus; research areas are summarized in Table 1 and were previously described.11

Using eight criteria (process measures: Vision and Charter [VC], Transformative Leadership [TL], Meeting Management [MM], and External Communication/Collaboration [EC]; outcome measures: Research Plan [RP], Research Generation [RG], Research Communication [RC], and Progress in Translation [PT]; Table 1), expert panel members reviewed reports, data tables, publication statistics, narrative documents compiled from interviews with team leaders, initial funding documents, scored grant applications, and annual reports. Each evaluative criterion (i.e., the four research/scientific and four team development/maturation criteria) was scored independently. For each team, expert panel members scored each of the eight criteria as 0 (not present), 1 (low), 2 (medium), or 3 (high). Because each domain had four criteria, total scores ranged from 0 to 12 for each domain (i.e., 0–12 for research/scientific progress and 0–12 for team maturation/development).
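The scoring arithmetic above is simple, but a short sketch makes the domain totals explicit. The Python below assumes each criterion carries one integer consensus score on the 0 to 3 scale; the function name `domain_totals` and the example ratings are hypothetical, not data from the study.

```python
# Minimal sketch of the domain-total arithmetic, assuming one integer
# consensus score (0-3) per criterion. Names and ratings are hypothetical.
PROCESS = ("VC", "TL", "MM", "EC")   # team process/development criteria
OUTCOME = ("RP", "RG", "RC", "PT")   # research/scientific outcome criteria
VALID_SCORES = {0, 1, 2, 3}          # 0 = not present, 1 = low, 2 = medium, 3 = high


def domain_totals(scores: dict[str, int]) -> dict[str, int]:
    """Sum the four criterion scores in each domain (each total ranges 0-12)."""
    missing = set(PROCESS + OUTCOME) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for criterion, value in scores.items():
        if value not in VALID_SCORES:
            raise ValueError(f"{criterion}: score {value} is outside 0-3")
    return {
        "process": sum(scores[c] for c in PROCESS),
        "outcome": sum(scores[c] for c in OUTCOME),
    }


# Hypothetical ratings for one team (not data from the study).
example = {"VC": 2, "TL": 1, "MM": 2, "EC": 3, "RP": 3, "RG": 2, "RC": 1, "PT": 2}
print(domain_totals(example))  # {'process': 8, 'outcome': 8}
```

Keeping the two domains separate mirrors the text: a team can score 12 on outcomes while scoring low on process, and a single combined total would hide that.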

After panel members rated each team independently, the panel came together to reach a consensus rating on each criterion. A consensus team rating on each criterion was achieved as follows: (1) presentation of independent ratings, (2) explanation from each expert panel member supporting their initial rating, (3) discussion of differences in ratings, and (4) consensus reached for each criterion. This approach was modeled on the consensus‐building approach used during scientific review panels for grant and contract applications.
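Steps (1) and (3) of this procedure can be supported by a simple tabulation. The sketch below is illustrative only: it assumes the independent ratings are stored as `{panelist: {criterion: score}}` and flags any criterion on which panelists differ. The helper name and the ratings are hypothetical, and the consensus score itself was reached by discussion, not computed.

```python
# Minimal sketch: tabulate independent ratings before the consensus discussion.
# Assumption: ratings are {panelist: {criterion: score}}. The consensus value
# is NOT computed here; the panel reached it by discussion.

def summarize_independent_ratings(ratings):
    """Per criterion: the panel's scores, their spread, and whether they differ."""
    panelists = list(ratings)
    criteria = list(ratings[panelists[0]])
    for p in panelists:
        if set(ratings[p]) != set(criteria):
            raise ValueError(f"panelist {p} did not rate the same criteria")
    summary = {}
    for criterion in criteria:
        scores = [ratings[p][criterion] for p in panelists]
        spread = max(scores) - min(scores)
        # Any disagreement is flagged for discussion in step (3).
        summary[criterion] = {"scores": scores, "spread": spread, "discuss": spread > 0}
    return summary


panel = {  # five hypothetical panelists rating one team on two criteria
    "A": {"VC": 2, "TL": 1},
    "B": {"VC": 2, "TL": 2},
    "C": {"VC": 2, "TL": 1},
    "D": {"VC": 2, "TL": 3},
    "E": {"VC": 2, "TL": 1},
}
summary = summarize_independent_ratings(panel)
print([c for c, s in summary.items() if s["discuss"]])  # ['TL']
```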

Use of expert panels

Although imperfect,28, 29 expert panels are often considered the best way to evaluate scientific endeavors,30, 31 including grant application reviews and editorial boards. We used expert panels to balance objective data with contextual information to evaluate our MTTs.32, 33 Evaluative judgment is a helpful addition to pure metrics in evaluating translational research.34

Our panel consisted of five members: the CTSA Principal Investigator, the Director and Assistant Director of Coordination for the CTSA, a Consulting Team Coach (team development expertise), and a Consulting Team Evaluator (business team performance evaluation expertise). The same methodology was employed for both evaluation periods.

Quantitative and qualitative analysis

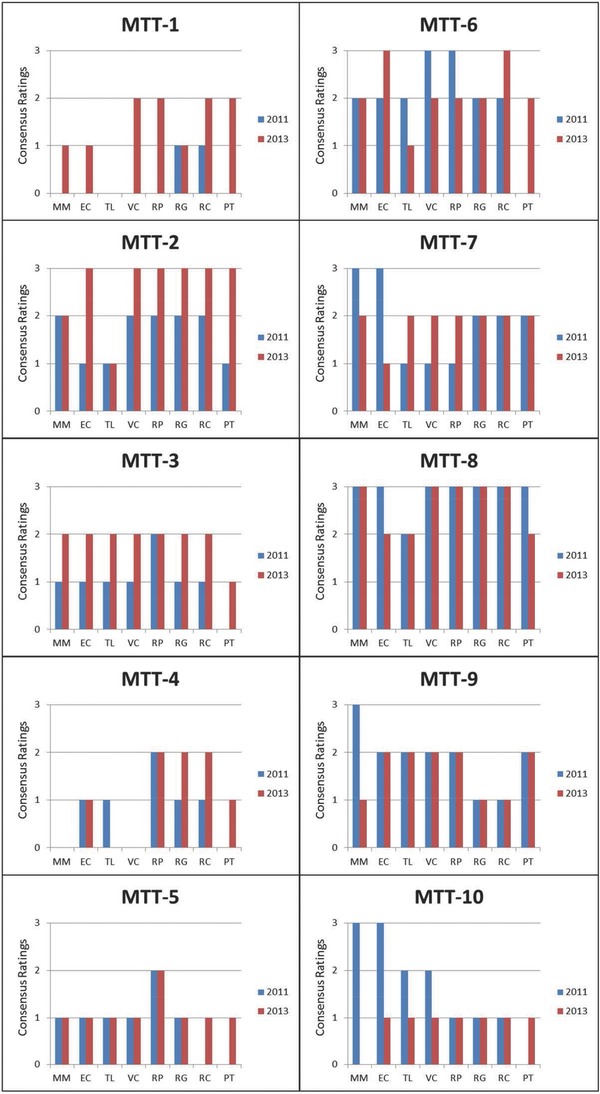

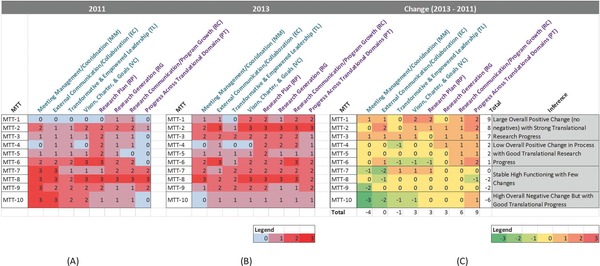

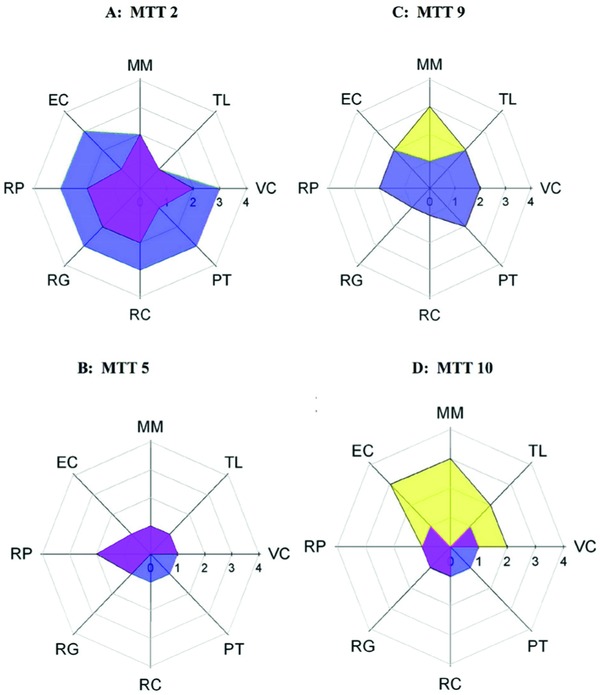

Consensus ratings were analyzed and displayed using three methods: (1) bar graphs of the entire data set (Figure 1), (2) sorted heatmaps to highlight the similarity and differences between the MTTs (Figure 2) and to identify four MTT groups, and (3) radar plots of the eight evaluation criteria plotted on the same axes to demonstrate temporal differences between 2011 and 2013 (Figure 3). We selected one team from each of the identified groups as an exemplar, and developed a case study for each of those four teams.

Figure 1.

Bar charts showing how each of the 10 MTTs changed based on eight consensus scores (four process and four outcome represented on the horizontal axis), from 2011 (blue bars) to 2013 (red bars). VC = vision, charter, goals; TL = transformative and empowered leadership; MM = meeting management/coordination; EC = external communication/collaboration; RP = research plan; RG = research generation; RC = research communication/program growth; PT = progress across translational domains.

Figure 2.

Sorted heatmaps showing the eight consensus scores (four process and four outcome) of 10 MTTs in (A) 2011, (B) 2013, and (C) the change in scores between 2011 and 2013. The sorted heatmaps revealed four categories of change across the MTTs. See Case Studies and Table 1 for additional details.

Figure 3.

Radar graphs depicting eight team variables (four outcome and four process) of four exemplar teams. Axes, clockwise from 10 o'clock, are the process factors EC = external communication/collaboration, MM = meeting management, TL = transformative leadership, and VC = vision and charter, followed by the research factors PT = progress in translation, RC = research communication and program growth, RG = research generation, and RP = research plan. For other details, see Table 1. Data from 2011 are shown in yellow and data from 2013 in purple. Areas of overlap, which represent outcomes that were maintained or improved in 2013 versus 2011, are shown in magenta.

Sorted heatmaps

We constructed three heatmaps, representing the consensus scores in 2011 (Figure 2 A), in 2013 (Figure 2 B), and the change from 2011 to 2013 (delta heatmap, Figure 2 C). In each heatmap, rows represented the 10 MTTs, and columns represented the eight process and outcome measures. The rows (MTTs) in the delta heatmap were then sorted in ascending order of their total change across all process and outcome measures, and the columns (measures) were sorted in ascending order of their total change across the MTTs. The resulting row and column order of the delta heatmap was then used to reorder the rows and columns of the 2011 and 2013 heatmaps.
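The row and column ordering described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: scores are nested dictionaries `{team: {measure: score}}`, the function name `sorted_heatmaps` and the toy data are hypothetical, and ties are left in input order because the text does not specify a tie-breaking rule (Python's sort is stable).

```python
# Minimal sketch of the sorted-heatmap ordering. Assumption: scores are
# {team: {measure: score}}; ties keep input order (Python's sort is stable).

def sorted_heatmaps(scores_2011, scores_2013, teams, measures):
    """Return row order, column order, and the three reordered matrices."""
    # Delta heatmap: change in each consensus score from 2011 to 2013.
    delta = {
        team: {m: scores_2013[team][m] - scores_2011[team][m] for m in measures}
        for team in teams
    }
    # Rows (teams) ascending by total change across all measures.
    row_order = sorted(teams, key=lambda team: sum(delta[team].values()))
    # Columns (measures) ascending by total change across all teams.
    col_order = sorted(measures, key=lambda m: sum(delta[team][m] for team in teams))

    def reorder(matrix):
        # Apply the delta-derived order to any team-by-measure matrix.
        return [[matrix[team][m] for m in col_order] for team in row_order]

    return row_order, col_order, reorder(scores_2011), reorder(scores_2013), reorder(delta)


# Hypothetical toy data: three teams, two measures.
teams = ["T1", "T2", "T3"]
measures = ["TL", "PT"]
s2011 = {"T1": {"TL": 1, "PT": 0}, "T2": {"TL": 3, "PT": 3}, "T3": {"TL": 2, "PT": 1}}
s2013 = {"T1": {"TL": 2, "PT": 2}, "T2": {"TL": 1, "PT": 3}, "T3": {"TL": 2, "PT": 2}}
rows, cols, h2011, h2013, hdelta = sorted_heatmaps(s2011, s2013, teams, measures)
print(rows, cols)  # ['T2', 'T3', 'T1'] ['TL', 'PT']
print(hdelta)      # [[-2, 0], [0, 1], [1, 2]]
```

In this toy example, team T2 has a change of 0 on PT because it scored 3 in both years; only the reordered 2011 and 2013 matrices reveal that this zero reflects a stable high score rather than a stable low one.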

Case studies

The sorted heatmaps suggested four categories of MTT trajectories; we wished to understand the contextual details that might underlie these trajectories. Our qualitative examination was accomplished using notes (team observations, team coach notes, etc.) collected by the evaluation team. We selected one exemplar from each of the four categories and qualitatively examined its composition, dynamics, and interventions. These insights led to generalizable recommendations for interventions best suited for the four different team types.

Results

Heterogeneity in longitudinal patterns

We observed considerable heterogeneity in MTT trajectories (Figure 1) for each of the four process variables (VC, TL, MM, EC) and four outcome variables (RP, RG, RC, PT). For example, MTT‐5 had low scores overall across both years, MTT‐8 had high scores overall across both years, and MTT‐3 showed strong positive change. However, this display offered little insight into commonalities and differences among the MTTs.

Categorization of teams and variables based on longitudinal patterns

Sorted heatmaps were generated to display patterns in the evaluation scores. Figures 2 A, B show the scores for each of the 10 MTTs in 2011 and 2013, respectively. Figure 2 C shows the difference between the 2013 and 2011 scores, depicting change over time. The 2011 and 2013 heatmaps together provide context to understand the delta heatmap. For example, a no‐change result in the delta heatmap (delta = 0) could result from two equally low scores (e.g., 2011 = 0, and 2013 = 0) or two equally high scores (e.g., 2011 = 3, and 2013 = 3), both of which yield the same delta result but reflect different starting conditions.

This overall method enabled us to analyze how the MTTs changed over time and how starting and ending scores influenced the trajectories. The “Total” score (Figure 2 C) ranged from +9 to –6. This range had three natural breaks, which were used to categorize the MTTs into four groups.
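The text does not state how the three natural breaks were located. One simple stand-in, shown here as an assumption rather than the method actually used, is to sort the teams by total change and cut at the three largest gaps between consecutive totals; Jenks natural breaks optimization is a more formal alternative. The function name, team labels, and totals below are hypothetical.

```python
# Minimal sketch: group teams by cutting at the largest gaps between
# consecutive total change scores. Assumption: this "largest gaps" rule is a
# stand-in for the natural breaks in the text, not necessarily the method used.

def split_at_largest_gaps(totals, n_groups=4):
    """Group team labels (highest total first) by cutting at the n_groups-1 largest gaps."""
    if not 1 <= n_groups <= len(totals):
        raise ValueError("n_groups must be between 1 and the number of teams")
    ordered = sorted(totals, key=totals.get, reverse=True)
    # Gap between each team and the next lower-scoring team, tagged with its position.
    gaps = [(totals[ordered[i]] - totals[ordered[i + 1]], i) for i in range(len(ordered) - 1)]
    # Largest gaps first; equal gaps keep the earlier position (stable sort).
    largest = sorted(gaps, key=lambda g: g[0], reverse=True)[: n_groups - 1]
    cuts = sorted(i for _, i in largest)
    groups, start = [], 0
    for i in cuts:
        groups.append(ordered[start : i + 1])
        start = i + 1
    groups.append(ordered[start:])
    return groups


# Hypothetical total change scores for ten teams (illustrative only).
totals = {"A": 8, "B": 7, "C": 6, "D": 2, "E": 1, "F": 1, "G": -1, "H": -2, "I": -2, "J": -7}
print(split_at_largest_gaps(totals))
# [['A', 'B', 'C'], ['D', 'E', 'F'], ['G', 'H', 'I'], ['J']]
```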

The first group included three MTTs (MTT‐1, MTT‐2, MTT‐3) that overall had large positive changes with no negative values. These three MTTs began with low (0) to medium (2) starting scores in 2011 (Figure 2 A), and ended with several medium to high (3) scores in 2013 (Figure 2 B). These three MTTs had strong positive changes in the four research outcome measures.

The second group (MTT‐4, MTT‐5, MTT‐6) demonstrated a different profile, in which many of the consensus values either did not change (0) or decreased (–1) over time.

The third group (MTT‐7, MTT‐8, and MTT‐9) had an overall decline or no change in total measures. These three MTTs had many unchanged scores (0) and two large negative changes (–2). This pattern could indicate low‐functioning teams, but Figures 2 A, B show that these MTTs were high‐functioning, starting with maximum scores of 3 for many of the measures. Therefore, even though these MTTs still had medium (2) to high (3) scores in 2013, their small or negative changes likely reflect a ceiling effect. Collectively, the three MTTs in this group can be regarded as stable, high‐functioning teams over time.

Finally, the fourth group (MTT‐10) had a strong overall negative score. This MTT had negative changes for all process scores and no change (0) for three of the four outcome measures. As shown in Figure 2 A, this MTT in fact started out with medium to high process scores, which fell over time resulting in a negative change score overall (Figure 2 C).

Among the process variables, the MTTs appear to have had the most difficulty improving “meeting management” and “transformative leadership” and the most success improving “vision and charter” (Figure 2 C). In contrast, the outcome variables showed larger changes, including a relatively high score (+9) for “progress across translational domains.”

Although the above sorted heatmap analysis suggested categorical groupings of MTT trajectories, it provided little causal explanation. We therefore analyzed an exemplar MTT from each group using a qualitative case study approach.

Case Studies of Exemplar Teams

MTT‐2: Exemplar of large overall positive change in process and outcome scores with strong translational research progress

Team composition and dynamics

MTT‐2 is a “legacy MTT,” instantiated prior to the initiation of our CTSA. The team has a decades‐long history of international leadership in the field. The structure of the team was prototypical of academic teams of the 1980s and 1990s, namely, highly hierarchical, centralized decision making, leadership‐based external collaborations, strong extramural funding, and exceptional publication productivity and impact. This MTT typifies many academic research groups in structure and function.

Translational project

MTT‐2 has focused on identifying predictors of mortality in severe trauma, and on proposing and testing pharmacologic interventions to mitigate mortality. In 2011, their work spanned T1 (preclinical) through T2 (observational clinical trials). In 2013, their work progressed from T2 into the T3 (influencing medical practice) domain.

CTSA interventions

The CTSA provided substantive interventions in both process and infrastructure. In the area of process, we proposed a gradual migration of leadership to a junior faculty member who took the role of “Project Manager” in the team. The purpose of this intervention was to reduce the administrative burden on the senior leader by offloading those tasks to the project manager, and to provide leadership succession planning. The project manager was funded by the CTSA as a KL2 scholar, and shouldered administrative tasks of meeting and agenda planning, meeting management, project management (milestones, timelines, and task accountability), and took a larger role in external communications (national meeting presentations, manuscript preparation, etc.).

In addition, the CTSA provided biostatistical support for the analysis of a large data set to understand the pathogenesis of injury responses. This analysis, coupled with additional systems biology insights from CTSA members, led to an innovative clinical trial. Other critical CTSA support included expertise to develop and implement a secure REDCap database for acquiring and managing an extensive data set, and editorial assistance for a grant application. The CTSA also provided support from the Ethics Key Resource, which allowed the team to develop a comprehensive ethical framework in which to conduct clinical trials, and to develop better methods to increase participation and retention of clinical trial participants.

Process and outcomes

An important process goal was to develop a team structure that elicited and encouraged substantive contributions from all members. Accomplishing this goal entailed developing a shared vision and charter, restructuring team roles and dynamics, and increasing the flexibility of team members to accept new roles and tasks. The objective outcomes of these interventions included a large federally funded clinical trial network, an expanded network of collaboration both within and beyond Texas, and continued high grant and publication productivity for the team. Moreover, MTT‐2 has initiated several multicenter clinical trials designed to reduce morbidity and mortality. Publication productivity for MTT‐2 remained very strong (in part related to the large size of the team), as did the impact index and average journal impact factor (Table 2). As a consequence of the broader range of expertise in the team, the breadth of fields represented in team publications also expanded. This effect reflects not simply the inclusion of several disciplines in the team, but genuinely collaborative publications with authors from different disciplines on each publication. Over half (27 of 50) of the scientific categories in which MTT‐2 published postintervention were new (Table 3). Balanced growth across all measured parameters over time, in both team process and team outcomes, is illustrated in Figure 3 A.

Table 2.

Bibliometrics for selected teams

| Team | Baseline (3 years): total publications* | Baseline: impact index† | Baseline: average impact factor‡ | Postintervention (3 years): total publications* | Postintervention: impact index† | Postintervention: average impact factor‡ |
|---|---|---|---|---|---|---|
| MTT‐2 | 197 | 830 | 4.21 | 169 (–14)§ | 686 (–17)§ | 4.06 |
| MTT‐5 | 6 | 34 | 5.62 | 15 (150) | 49 (44) | 3.26 |
| MTT‐9 | 17 | 156 | 9.15 | 30 (76) | 268 (72) | 8.95 |
| MTT‐10 | 15 | 92 | 6.14 | 9 (–40) | 54 (–41) | 6.01 |

*Total publications for team in 3‐year period, in PubMed‐indexed journals.

†Sum, over journals, of the number of papers published in a journal multiplied by that journal's published impact factor (Thomson Reuters).

‡Impact index divided by total publications.

§Numbers in parentheses represent % change, period‐on‐period.
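The footnote definitions can be written as three small functions. This sketch assumes a single impact factor per journal for the whole period; the journal names and impact factors in the first example are hypothetical, while the percentage changes are recomputed from the MTT‐2 row of Table 2.

```python
# Minimal sketch of the Table 2 bibliometric definitions.
# Assumption: one impact factor per journal applies to every paper counted
# in that journal for the period.

def impact_index(papers_per_journal, impact_factor):
    """Sum over journals of (papers in that journal) x (journal impact factor)."""
    return sum(n * impact_factor[journal] for journal, n in papers_per_journal.items())


def average_impact_factor(index, total_publications):
    """Impact index divided by total publications."""
    return index / total_publications


def pct_change(baseline, post):
    """Period-on-period percentage change, rounded to a whole percent."""
    return round(100 * (post - baseline) / baseline)


# Hypothetical journals and impact factors (illustrative only).
papers = {"Journal X": 3, "Journal Y": 2}
factors = {"Journal X": 4.0, "Journal Y": 2.5}
idx = impact_index(papers, factors)
print(idx, average_impact_factor(idx, sum(papers.values())))  # 17.0 3.4

# Percentage changes recomputed from the MTT-2 row of Table 2.
print(pct_change(197, 169), pct_change(830, 686))  # -14 -17
```

Python's `round` uses round-half-to-even, which only matters when a change falls exactly on a half percent.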

Table 3.

Changes in Web of Science published source titles and scientific categories by exemplar team

| Team | Scientific categories: baseline | Scientific categories: postintervention | New categories | Source titles: baseline | Source titles: postintervention | New source titles |
|---|---|---|---|---|---|---|
| MTT‐2 | 49 | 50 | 27 | 50 | 50 | 27 |
| MTT‐5 | 3 | 3 | 2 | 6 | 3 | 3 |
| MTT‐9 | 5 | 9 | 5 | 17 | 30 | 11 |
| MTT‐10 | 7 | 6 | 1 | 7 | 5 | 4 |
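The "new" columns in Table 3 can be read as a set difference: items that appear postintervention but not at baseline. The sketch below assumes each period is summarized as a set of distinct Web of Science category (or source title) names; the function name and the category names are illustrative, not MTT data.

```python
# Minimal sketch: "new" categories are those present postintervention but
# absent at baseline. Assumption: each period is a set of distinct category
# (or source title) names; the names below are illustrative only.

def new_items(baseline, post):
    """Categories (or titles) present postintervention but not at baseline."""
    return sorted(set(post) - set(baseline))


baseline = {"Surgery", "Critical Care Medicine", "Immunology"}
post = {"Surgery", "Critical Care Medicine", "Medical Ethics", "Statistics & Probability"}
new = new_items(baseline, post)
print(len(post), len(new), new)
# 4 2 ['Medical Ethics', 'Statistics & Probability']
```

Under this reading, a team can publish in the same number of categories in both periods (as MTT‐5 did) while still adding new ones, because categories dropped after baseline are not counted.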

Insights about team type and appropriate interventions

The CTSA interventions were not without risk. The legacy MTT‐2 was well funded, highly productive, internationally known, and efficiently managed, so disruptive interventions would have been counterproductive to our overall goal of enhancing translational research. Hence, our approach was to use the CTSA resources noted above as incentives for applying team science approaches to the clinical problem of interest to the team. Moreover, as team members achieved additional success by incorporating the changes, subtle but important cultural shifts occurred within the team, reinforcing its productivity.

This case exemplifies the prototypical productive academic research group and shares commonalities with the other MTTs in its group (MTT‐1 and MTT‐3). The lessons and insights gained from this MTT are likely generalizable to similar teams at other institutions. The traditional reward structure recognizes, supports, and encourages a traditional leadership style, and devalues teamwork. In that context, key lessons include the critical importance of articulating team science benefits, providing tangible support, and incorporating team science values and processes into ongoing workgroup productivity, rather than imposing an entirely new structure on an existing group.

MTT‐5: Exemplar of marginal overall positive change in process and outcome scores, with good translational progress

Team composition and dynamics

MTT‐5 was initiated in our first round of MTT funding (second year of the CTSA) as a “new team” and differed considerably from MTT‐2. The expertise of MTT‐5 was highly focused on basic sciences and scientific technology, assay development, and the protein structures that underlie a predictive biomarker. However, the MTT did not have a clinical scientist with expertise and ongoing clinical interest in the disease area of focus, nor a pool of well‐characterized patients. Consequently, MTT‐5 had limited access to human clinical samples from patients with that disease, and no clinical trial coordinators to operationalize enrollment and sample collection. Finally, despite considerable basic science expertise, the team had minimal experience leading an interdisciplinary project.

Translational project

MTT‐5 focused on viral carcinogenesis and sought to identify biomarkers associated with the transition from benign to malignant disease. By 2013, the MTT had established a proteomic marker, developed a clinical assay for its validation, and added an independent nucleic acid marker to the panel. The scope of science of MTT‐5 was largely in T1.

CTSA interventions

The purpose of the CTSA interventions was to ensure that MTT‐5 had sufficient expertise to accomplish its goal of predictive biomarker development. Necessary expertise included a physician with expertise in the disease and organ system of interest, and a clinical coordinator who could manage the day‐to‐day tasks of subject recruitment and sample collection. The Novel Methodologies Key Resource provided access to mass spectrometry‐based selective reaction monitoring (SRM) techniques that accelerated the analysis of proteins of interest in biospecimens. Biostatistics expertise was added to the team to facilitate interim and final analyses and to improve experimental design. Process interventions included training a project manager in business practices and meeting management (agendas, minutes, and a Web‐based meeting management repository), and establishing team consensus on milestones, timelines, and procedural obstacles.

Process and outcomes

The incorporation of a clinician‐investigator's expertise accelerated the identification of patients who might be recruited as research subjects. That expertise also informed discussion about the ultimate utility of the candidate biomarker. The CTSA provided a clinical trial coordinator to the team, whose responsibility was to attend various geographically dispersed clinics and enroll identified patients into the trial. The coordinator also facilitated sample collection, indexing, preliminary processing, and biobanking. The team has developed a candidate biomarker panel which, if validated, would significantly impact the practice of medicine in this field. This example of basic science impacting clinical medicine and decision making is a prototype of translational medicine. The strong basic science performance in assay development was complemented by clinical knowledge and accelerated sample acquisition. Although the process measures have not yet improved, the measurable progress in sample acquisition, sample analysis, and biostatistical insights demonstrates translational progress. Publication productivity rose from 6 to 15 publications, the publication impact index rose from 34 to 49 (Table 2), and the team published in new areas of science and new journals (Table 3). Collectively, the CTSA interventions led to measurable acceleration of translational science. MTT‐5 showed balanced growth across all measured parameters, from starting points near zero before team initiation to expanded values in 2013 (Figure 3 B).

Insights about team type and appropriate interventions

A key limitation of MTT‐5 was the lack of clinical and clinical research expertise. In translational science, the perspective of clinical medicine must always be present, if not always in the forefront. This expertise is necessary for intellectual, conceptual, and practical reasons. Clinical expertise helps to frame questions and concepts properly, so that the most important questions are addressed in the most impactful manner. Clinical expertise is also required for facile access to human specimens. Advances in basic science and technology clearly can impact the diagnosis and management of clinical disorders. Teams early in translation (basic science‐rich) should be encouraged to include clinical researchers in order to minimize obstacles that will otherwise likely be encountered later in the translational pathway, and clinical teams should be encouraged to include strong basic science members to ensure that the best science and technology are applied to the clinical question.

MTT‐9: Exemplar of stable high‐functioning team with few changes in process and outcome

Team composition and dynamics

MTT‐9 was initiated coincident with funding of our CTSA program, and thus was an "initial team." Individually, its members had significant productivity and participated in two national disease networks. The formalization of this team presaged the MTT concept, a foundation of our CTSA program. We postulated that by incorporating the expertise of other disciplines, we could augment the productivity of the national disease networks. Accordingly, systems biology, proteomics, biostatistics, and bioinformatics were formally incorporated into the team. This team intentionally used senior specialty trainees in the role of project manager to provide leadership training. Advantages of this approach included: (1) the ability of trainees, who have fewer conflicting responsibilities, to manage the logistical requirements of the team, (2) the acquisition of business skills (e.g., meeting and agenda management) and interpersonal skills (e.g., conflict management) by trainees, and (3) an opportunity for trainees to have a formalized role in determining scientific directions. However, this approach had important limitations. Periodic changes in the project manager as trainees completed their training disrupted the continuity of project flow. In addition, some trainees initially lacked sufficiently developed skill sets to manage a multidisciplinary group of high‐performing, highly motivated faculty effectively.

Translational project

The team used cytokine expression in an organ‐specific biofluid, obtained both locally and from the national networks, to develop a quantitative method for producing a molecular phenotype of subjects with mild to severe expression of the disease of interest, using existing standardized biostatistical tools and approaches. The scope of MTT‐9 was largely in T1 and T2.

CTSA interventions

Standard analytical methodologies for complex diseases are largely descriptive and provide little insight into the mechanisms underlying disease heterogeneity. To address that concern, the CTSA introduced a new team member with strong expertise in novel visual analytic methods. The purpose of the intervention was to draw additional mechanistic insights about heterogeneity from the existing data sets. Using bipartite networks, MTT‐9 identified several spatial clusters of cytokine expression that mapped to patient clusters of varying disease severity, and suggested that activation of a specific pathway was causally linked to severe disease. These insights provided a possible mechanism‐based disease classification. The CTSA also provided important infrastructural support via iSpace, a Web‐based information‐sharing portal for vision and charter documents, agendas, protocols, and minutes.

Process and outcomes

This team had strong publication productivity with high‐impact manuscripts, novel publication venues, and high interdisciplinarity, but faced a temporary shortfall in extramural funding, which constrained the scope of projects conducted during that period. Because the team members had been quite productive before the MTT was formed, team metrics show a ceiling effect. Nevertheless, publication output was strong both before and after CTSA intervention; the publication impact index rose from 156 to 268 (Table 2), and the team published in a markedly broader range of scientific categories and publication outlets (Table 3). The negative change in process measures may have resulted from the rapid turnover among project managers. These observations of relatively stable high functioning are depicted in Figure 3 C.

Insights about team type and appropriate interventions

MTT‐9 chose to use relatively junior team members in the role of project manager. In our MTT model, the project manager serves a role similar to a chief operating officer, with responsibility for managing day‐to‐day project flow, scheduling, logistics, timelines, and deliverables, and for helping to create a culture of accountability. This approach must incorporate a formalized plan for leadership succession, as trainees spend a limited time at their training institution and potentially disruptive transitions will occur. More generally, teams of established investigators may face similar challenges if a key leader moves to another institution. Many, but not all, trainees have the necessary skills (or can quickly acquire them with appropriate mentorship) to flourish in this role. Careful vetting of potential project managers is critical to ongoing team success and productivity, and individualized mentoring focused on acquisition of specific skills can mitigate limitations.

MTT‐10: Exemplar of strong overall negative change in process and outcome, but with translational progress

Team composition and dynamics

MTT‐10 was funded by a pilot MTT award near the middle of our CTSA funding cycle, and hence is a "new team." It comprised three senior investigators, two of whom had a sustained record of extramural funding. The area of study had important implications for both biology and public policy. This team began with the style and structure of a collaborative work group rather than a multidisciplinary team, as reflected in low scores on team process measures such as empowered leadership and shared vision (Figure 3 D). Communication among team members was judged to be less than optimal, and the project management, communication, and business management skill set of the initial project manager did not align with the needs of the team. Ultimately, a new project manager emerged.

Translational project

MTT‐10 explored the novel hypothesis that in utero exposures could result in fetal reprogramming that would lead to expression of disease in children and ultimately in adults. The scope of projects undertaken by MTT‐10 was in the T1 domain.

CTSA interventions

CTSA interventions for this team included an initial infusion of funds to support team development and scientific project work, as well as editorial assistance in preparing manuscripts and grant applications.

Process and outcomes

The team developed a proposal for a small federal grant that was submitted and later funded. However, objective research productivity fell, both in number of publications (15 to 9) and in publication impact index (92 to 54), although the average journal impact factor remained stable at about 6 (Table 2). Few new scientific areas or publication titles were seen (Table 3). The performance plot contracts from 2011 to 2013 (Figure 3 D).
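To compare the magnitude of the bibliometric shifts reported for the exemplar teams (Table 2), the before and after values given in the text can be expressed as relative changes. The following is a minimal Python sketch of that arithmetic; the helper name `percent_change` is ours, and relative change is an illustrative calculation rather than a metric used in the study.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("baseline value must be nonzero")
    return 100.0 * (after - before) / before


# (team, metric, before, after) as reported in the text for MTT-5, MTT-9, MTT-10
reported = [
    ("MTT-5", "publications", 6, 15),
    ("MTT-5", "impact index", 34, 49),
    ("MTT-9", "impact index", 156, 268),
    ("MTT-10", "publications", 15, 9),
    ("MTT-10", "impact index", 92, 54),
]

for team, metric, before, after in reported:
    # Signed percentage, one decimal place
    print(f"{team:7s} {metric:13s} {before:4d} -> {after:4d}  "
          f"{percent_change(before, after):+6.1f}%")
```

On these figures, MTT‐10's publication count and impact index both fell by roughly 40%. By contrast, MTT‐5 gained 150% in publication count and about 44% in impact index, and MTT‐9's impact index rose by about 72% despite its high baseline.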

Insights about team type and appropriate interventions

An important lesson from analysis of this team's trajectory is that careful vetting of the project manager's team leadership skills is critical to ongoing team success. The same observation was made in MTT‐1, an initial team. Thus, in two teams quite different in vision, composition, and scope, the same need for skills assessment in the leadership team is evident. It is important to note that the deterioration in team function was recognized, and the CTSA responded with interventions that included team coaching sessions, leadership meetings, and TLC interactions.

Discussion and Implications

Summary of findings and interventions for four team type trajectories

On the basis of expert panel evaluations, we observed four discrete team trajectories. Understanding team trajectories may enable CTSA investigators to provide needed leadership, resources, and proactive guidance through team interventions, to maximize the potential of multidisciplinary teams. We highlight four team types as prototypes: (1) teams with traditional leadership, (2) teams focused on basic science, (3) stable high‐functioning teams with junior project managers, and (4) teams with inexperienced leaders.

Teams with traditional leadership

This team type is a traditional academic research group in which productive and thoughtful leaders can embrace team science principles, resulting in rapid, novel, and productive research outcomes. As exemplified by MTT‐2, many academic research teams have a structure dominated by the Principal Investigator (PI); within that structure, two or more relatively independent project groups may report to the PI. Because the culture of research productivity is already in place, CTSA efforts can be directed toward developing team science by providing incentives for team process development and by properly attributing the team's successes to team members, not just the team leader. The balanced growth in nearly all process and outcome measures seen in MTT‐2 (see Figure 3 A) is exemplary.

Teams focused on basic science

This team type is illustrated by basic science groups that study specific diseases and can benefit from the added perspective and advice of clinically trained team members to convey the significance and health‐relatedness of the research. Translational progress will be impaired by the lack of such expertise, as exemplified in MTT‐5. Accordingly, the CTSA intervention can be the provision of necessary expertise to the team to accelerate translation.

Stable high‐functioning teams with junior project managers

Many benefits accrue to teams with actively engaged project managers who can focus much of their professional time on the goals of the team. This team type and our experience in developing project managers are chronicled elsewhere.35 Project managers, particularly early in their careers, benefit from leadership development, acquisition of business skills, positive role modeling, and management training that would otherwise not be formally provided. However, more junior team members may also have the highest rate of transition to other stages of training, resulting in team disruption, as in MTT‐9. Careful vetting of prospective project managers and informed planning for leadership succession can help to mitigate costly disruptions.

Teams with inexperienced leaders

This team type is a prototype of nascent teams with a more junior PI. Here, leadership, both scientific and interpersonal, is a key element of team dynamics and a requirement for optimal team function. Early assessment of the scientific and interpersonal leadership of team leaders and project managers, coupled with appropriate mitigation strategies, can prevent failures in team process and outcome.

CTSA impact on MTT productivity and innovation

In this analysis, we use the term "innovation" to denote the process of applying a novel idea or method to a new research domain, with specific actions and outcomes. As indicators of MTT innovation, we used changes in the Web of Science source (journal) titles and discrete subject categories of team publications, comparing the 3 years before and after CTSA intervention. Our evaluation indicates that MTTs with effective team processes develop interdisciplinary concepts and publications that would not, and perhaps could not, occur without interaction among team members. These publications also have greater impact and reach, as indicated by team bibliometrics and by the broader range of journals and scientific fields in which they appear. The consensus scores also suggested a positive correlation between the four process and four outcome scores. We interpret these findings to indicate that CTSAs have a positive impact on both scientific productivity and innovation in MTTs.
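The innovation indicator described above amounts to counting the journal titles and subject categories that appear in a team's post‐intervention publications but not in its pre‐intervention publications, that is, a set difference. The following is a minimal Python sketch under stated assumptions: the function names and every label in it are hypothetical, it does not parse an actual Web of Science export, and it uses no data from this study.

```python
from typing import Dict, Iterable, Set


def new_items(before: Iterable[str], after: Iterable[str]) -> Set[str]:
    """Labels (journal titles or subject categories) present only in the
    post-intervention window."""
    return set(after) - set(before)


def innovation_summary(pre_journals: Iterable[str], post_journals: Iterable[str],
                       pre_categories: Iterable[str],
                       post_categories: Iterable[str]) -> Dict[str, int]:
    """Count new publication outlets and new subject categories.

    Inputs are labels collected from the 3-year windows before and after
    intervention (a hypothetical simplification of bibliographic records).
    """
    return {
        "new_journals": len(new_items(pre_journals, post_journals)),
        "new_categories": len(new_items(pre_categories, post_categories)),
    }


# Hypothetical labels for illustration only; not data from this study.
pre_journals = ["Journal A", "Journal B"]
post_journals = ["Journal A", "Journal C", "Journal D"]
pre_categories = ["Immunology", "Respiratory System"]
post_categories = ["Immunology", "Biochemical Research Methods",
                   "Mathematical & Computational Biology"]

print(innovation_summary(pre_journals, post_journals,
                         pre_categories, post_categories))
# prints {'new_journals': 2, 'new_categories': 2}
```

A set difference counts only venues and fields that are new to the team. Categories a team dropped after intervention do not count against it, which matches the text's emphasis on publication in new areas of science.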

Recommendations for interventions to accelerate translational team innovation

A relatively sparse literature exists to guide the development, assessment, and interventions of translational teams.36 Although general guidance for developing team science competencies is available,37 our identification of several team types suggests that a single approach may not be appropriate for all teams, and that characteristics of the team can inform appropriate interventions. Our CTSA provided interventions in both proactive and reactive modes. When potential problems, or opportunities for improvement, are prospectively identified in MTTs, the intervention can be proactive. However, we believe that the CTSA leadership must also be closely connected to its MTTs, in order to recognize new problems quickly, and to provide reactive interventions to mitigate unforeseen problems.

We noted that one process measure, Transformative Leadership, generally did not change from year to year. One inference to be drawn from this observation is that leadership is difficult to define and even more difficult to change substantively. From a programmatic perspective, we propose five types of interventions that are applicable to translational teams (Table 4) and can be applied either proactively or reactively, as the needs of the team dictate. A testable hypothesis is that such interventions will produce balanced growth in translational teams, as depicted in Figure 3 A.

Table 4.

Team development interventions to enhance team innovation

| Intervention type | Purpose | Process | Evaluative criteria |
|---|---|---|---|
| Leadership development10, 38, 39 | Transformative leadership and coleadership | Leader vision and collaboration; use of project managers and facilitators | Research plan; team vision, charter, goals; transformative and empowered leadership; meeting management and coordination; external communication and collaboration |
| Team training40, 41 | Baseline capabilities and readiness | Basic team science knowledge, skills, attitudes | Research plan; meeting management and coordination; external communication and collaboration |
| Team building42, 43 | Effective team processes and attitude | Goal setting, interpersonal relations, role clarification, problem solving | Meeting management and coordination; external communication and collaboration |
| Knowledge management and cognitive integration44, 45, 46 | Innovation, collaboration, self‐correction | Knowledge (discipline) consideration, assimilation, accommodation, transformation; transactive memory; mental model | Research plan; team vision, charter, goals; meeting management and coordination; external communication and collaboration |
| Structure and design8, 47 | Resource efficiency and disciplinary utility | Collaborative networks, strategic core, mediated information exchange, autonomy and independence, diverse disciplinarity | Research generation; meeting management and coordination; external communication and collaboration |

Future research and team trajectory evaluation

There are three distinct areas of research critical to optimization of translational teams. First, the use of expert panels to evaluate both process and outcome measures has strengths and limitations that require better definition.

Second, improved delineation of the characteristics of transformational leadership skills among scientific team leaders is needed. This study suggests that leader‐specific criteria (e.g., meeting management and transformational leader behavior) were the most resistant to change. Acquisition of complex leadership skills, including transformational behavior, is a function of personality48 and developmental context,49 and requires longitudinal interventions with appropriate timing.50 Thus, how best to foster transformational leadership skills in research settings is a significant area for future inquiry.

Third, replicating our findings on team types would help establish the robustness of the observations and support their generalizability. We believe these four team types share common features both within our institution and at other academic health centers, but additional observations are necessary to confirm that suggestion. It is possible that the four team types we observed are situation‐specific or culture‐based. The types of interventions most helpful to each team type must also be determined and validated. Only when such team trajectories can be diagnosed and addressed will MTTs fully achieve their potential for productivity, societal impact, and accelerated innovation.

Acknowledgments

The authors thank Drs. Animesh Chandra and David Konkel for thoughtfully and critically reviewing and editing this manuscript.

This study was conducted with the support of the Institute for Translational Sciences at the University of Texas Medical Branch, supported in part by a Clinical and Translational Science Award (UL1TR000071) from the National Center for Advancing Translational Sciences, National Institutes of Health.

References

- 1. National Research Council (US) and Institute of Medicine (US) Committee on the Organizational Structure of the National Institutes of Health. Enhancing the Vitality of the National Institutes of Health: Organizational Change to Meet New Challenges. Washington, DC: National Academies Press; 2003.

- 2. Jones BF, Wuchty S, Uzzi B. Multi‐university research teams: shifting impact, geography, and stratification in science. Science. 2008; 322(5905): 1259–1262.

- 3. Wuchty S, Jones BF, Uzzi B. The increasing dominance of teams in production of knowledge. Science. 2007; 316(5827): 1036–1039.

- 4. Fiore SM. Interdisciplinarity as teamwork: how the science of team science can inform team science. Small Group Res. 2008; 39(2): 51–77.

- 5. Hall KL, Feng AX, Moser RP, Stokols D, Taylor BK. Moving the science of team science forward: collaboration and creativity. Am J Prev Med. 2008; 35(Suppl. 2): S243–S249.

- 6. Stokols D, Hall KL, Taylor BK, Moser RP. The science of team science: overview of the field and introduction to the supplement. Am J Prev Med. 2008; 35(Suppl. 2): S77–S89.

- 7. Börner K, Contractor N, Falk‐Krzesinski HJ, Fiore SM, Hall KL, Keyton J, Spring B, Stokols D, Trochim W, Uzzi B. A multi‐level systems perspective for the science of team science. Sci Transl Med. 2010; 2(49): 49cm24.

- 8. Calhoun WJ, Wooten K, Bhavnani S, Anderson KE, Freeman JE, Brasier AR. The CTSA as an exemplar framework for developing multidisciplinary translational teams. Clin Transl Sci. 2013; 6(1): 60–71.

- 9. Falk‐Krzesinski HJ, Börner K, Contractor N, Fiore SM, Hall KL, Keyton J, Spring B, Stokols D, Trochim W, Uzzi B. Advancing the science of team science. Clin Transl Sci. 2010; 3(5): 263–266.

- 10. Rubio DM, Schoenbaum EE, Lee LS, Schteingart DE, Marantz PR, Anderson KE, Platt LD, Baez A, Esposito K. Defining translational research: implications for training. Acad Med. 2010; 85: 470–475.

- 11. Wooten K, Rose RM, Ostir GV, Calhoun WJ, Ameredes BT, Brasier AR. Assessing and evaluating multidisciplinary translational teams: a case illustration of a mixed methods approach and an integrative model. Eval Health Prof. 2014; 37(1): 33–49.

- 12. Masse LC, Moser RP, Stokols D, Taylor BK, Marcus SE, Morgan LD, Hall KL, Croyle RT, Trochim W. Measuring collaboration and transdisciplinary integration in team science. Am J Prev Med. 2008; 35(2 Suppl): S151–S160.

- 13. Klein JT. Afterword: the emergent literature on interdisciplinary and transdisciplinary research evaluation. Res Eval. 2006; 15(1): 75–80.

- 14. Aboelela SW, Merrill JA, Carley KM, Larson E. Social network analysis to evaluate an interdisciplinary research center. J Res Adm. 2007; 38(1): 61–78.

- 15. Dozier AM, Martinez CA, Odeh NL, Fogg TT, Lurie SJ, Rebenstein SP, Pearson TA. Identifying emerging research collaboration and networks: method development. Eval Health Prof. 2014; 37(1): 19–32.

- 16. Stokols D, Fuqua J, Gress J, Harvey R, Phillips K, Baezconde‐Garbanati L, Unger J, Palmer P, Clark MA, Colby SM, et al. Evaluating transdisciplinary science. Nicotine Tob Res. 2003; 5(Suppl. 1): 21–39.

- 17. Kotarba JA, Wooten KC, Freeman J, Brasier AR. The culture of translational science research: a qualitative analysis. Int Rev Qual Res. 2013; 6(1): 122–421.

- 18. Hall KL, Stokols D, Moser RP, Taylor BK, Thornquist MD, Nebeling LC, Ehret CC, Barnett MJ, McTiernan A, Berger NA, et al. The collaboration readiness of transdisciplinary research teams and centers: findings from the National Cancer Institute's TREC year‐one evaluation study. Am J Prev Med. 2008; 35(2 Suppl): S161–S172.

- 19. Falk‐Krzesinski HJ, Contractor N, Fiore SM, Hall KL, Kane C, Keyton J, Thompson‐Klein J, Spring B, Stokols D, Trochim W. Mapping a research agenda for the science of team science. Res Eval. 2011; 20(2): 145–158.

- 20. Kozlowski SWJ, Ilgen DR. Enhancing the effectiveness of work groups and teams. Psychol Sci Public Interest. 2006; 7: 77–124.

- 21. Mathieu JE, Maynard MT, Rapp TL, Gilson LL. Team effectiveness 1997–2007: a review of recent advancements and a glimpse into the future. J Manage. 2008; 34(3): 410–476.

- 22. Kozlowski SWJ, Ilgen DR. Enhancing the effectiveness of work groups and teams. Psychol Sci Public Interest. 2006; 7(3): 77–124.

- 23. Ilgen DR, Hollenbeck JR, Johnson M, Jundt D. Teams in organizations: from input‐process‐output model to IMOI models. Annu Rev Psychol. 2005; 56: 517–540.

- 24. Cummings JN, Kiesler S. Collaborative research across disciplinary and organizational boundaries. Soc Stud Sci. 2005; 35(5): 703–722.

- 25. Creswell JW, Plano Clark VL. Designing and Conducting Mixed Methods Research (2nd ed). Los Angeles: Sage; 2011.

- 26. Teddlie C, Tashakkori A. Foundations of Mixed Methods Research: Integrating Quantitative and Qualitative Approaches in the Social and Behavioral Sciences. Los Angeles: Sage; 2009.

- 27. Tashakkori A, Teddlie C. Sage Handbook of Mixed Methods in Social and Behavioral Research. Los Angeles: Sage; 2010.

- 28. Olbrecht M, Bornmann L. Panel peer review of grant applications: what do we know from research in social psychology on judgment and decision making? Res Eval. 2010; 19(4): 293–304.

- 29. Langfeldt L. Expert panels evaluating research: decision making and sources of bias. Res Eval. 2004; 13(1): 51–62.

- 30. Coryn CLS, Hattie JA, Scriven M, Hartman DJ. Models and mechanisms for evaluating government funded research: an international comparison. Am J Eval. 2007; 28(4): 437–547.

- 31. Lawrenz F, Thao M, Johnson K. Expert panel reviews of research centers: the site visit. Eval Program Plann. 2012; 35(3): 390–397.

- 32. Huutoniemi K. Evaluating interdisciplinary research. In: Frodeman R, Thompson Klein J, Mitcham C, eds. The Oxford Handbook of Interdisciplinarity. Oxford: Oxford University Press; 2010: 309–320.

- 33. Klein T, Olbrecht M. Triangulation of qualitative and quantitative methods in panel peer review research. Int J Cros S Educ. 2011; 2(2): 342–348.

- 34. Scott CS, Nagasawa PR, Abernathy NF, Ramsey BW, Martin PJ, Hack BM, Schwartz HD, Brock DM, Robins LS, Wolf FM, et al. Expanding assessments of translational research programs: supplementary metrics with value judgments. Eval Health Prof. 2014; 37(1): 83–97.

- 35. Wooten KC, Dann SM, Finnerty CC, Kotarba JA. Translational science project team managers: qualitative insights and implications from current and previous post doctoral experiences. Post Doc J. 2014; 2(7): 37–49.

- 36. Wooten KC, Brasier AR. Team development interventions for the evolution of scientific teams: a proposed taxonomy for consideration by phase of research, phase of adaptation, and stage of development. Paper presented at the Fifth Annual International Science of Team Science Conference, August 2014.

- 37. Bennett LM, Gadlin H, Levine‐Finley S. Collaboration & Team Science: A Field Guide. Bethesda, MD: National Institutes of Health; 2010.

- 38. Wildman JL, Bedwell WL. Practicing what we preach: teaching teams using validated team science. Small Group Res. 2013; 44(4): 381–394.

- 39. Shuffler ML, DiazGranados D, Salas E. There's a science for that: team development interventions in organizations. Curr Dir Psychol Sci. 2011; 20(6): 363–372.

- 40. Delise LA, Gorman CA, Brooks AM, Rentsch JR, Steele‐Johnson D. The effects of team training on team outcome: a meta‐analysis. Perform Improv Q. 2010; 22(4): 53–80.

- 41. Salas E, DiazGranados D, Klein C, Burke CS, Stagl KC, Goodwin GF, Halpin SM. Does team training improve team performance? A meta‐analysis. Hum Factors. 2008; 50(6): 903–933.

- 42. Salas E, Rozell BM, Mullen B, Driskell JE. The effect of team building on performance: an integration. Small Group Res. 1999; 30(3): 309–329.

- 43. Klein C, DiazGranados D, Salas E, Le H, Burke CS, Lyons R, Goodwin GF. Does team building work? Small Group Res. 2009; 40(2): 181–222.

- 44. Salazar MR, Lant TK, Fiore SM, Salas E. Facilitating innovation in diverse science teams through integrative capacity. Small Group Res. 2012; 43(5): 523–558.

- 45. Liao J, Jimmieson NL, O'Brien AT, Restubog SLD. Developing transactive memory systems: theoretical contributions from social identity perspective. Group Organ Manage. 2012; 37(2): 204–240.

- 46. Cannon‐Bowers JA, Salas E, Converse SA. Shared mental models in expert team decision making. In: Guzzo R, Salas E, eds. Team Effectiveness and Decision Making in Organizations. San Francisco: Jossey‐Bass; 1995: 333–380.

- 47. Guimerà R, Uzzi B, Spiro J, Nunes Amaral LA. Team assembly mechanisms determine collaboration network structure and team performance. Science. 2005; 308(5722): 697–702.

- 48. Harms PD, Spain SM, Hannah ST. Leader development and the dark side of personality. Leadership Quart. 2011; 22(3): 495–509.

- 49. McCall MW. Recasting leadership development. Ind Organ Psychol. 2010; 3(1): 3–19.

- 50. Day DV, Sin HP. Longitudinal interpretive model of leadership development: charting and understanding developmental trajectories. Leadership Quart. 2011; 22(3): 545–560.