Abstract

Background

Developmental prosopagnosia is a disorder of face recognition that is believed to reflect impairments of visual mechanisms. However, voice recognition has rarely been evaluated in developmental prosopagnosia to determine whether the disorder is modality-specific or part of a multi-modal person recognition syndrome.

Objective

Our goal was to examine whether voice discrimination and/or recognition are impaired in subjects with developmental prosopagnosia.

Design/Methods

Seventy-three healthy controls and 12 subjects with developmental prosopagnosia performed a match-to-sample test of voice discrimination and a test of short-term voice familiarity, and completed a questionnaire about face and voice identification in daily life.

Results

Eleven subjects with developmental prosopagnosia scored within the normal range for voice discrimination and voice recognition. One was impaired on discrimination and borderline for recognition, with equivalent scores for face and voice recognition, despite being unaware of voice processing problems.

Conclusions

Most subjects with developmental prosopagnosia are not impaired in short-term voice familiarity, providing evidence that developmental prosopagnosia is usually a modality-specific disorder of face recognition. However, there may be heterogeneity, with a minority having additional voice processing deficits. Objective tests of voice recognition should be integrated into the diagnostic evaluation of this disorder to distinguish it from a multi-modal person recognition syndrome.

Keywords: voice perception, familiarity, semantic, multimodal, person recognition

1. INTRODUCTION

Prosopagnosia is the inability to recognize faces. In line with cognitive models of face recognition as a hierarchy of operations (Bruce et al., 1986), neuropsychological studies of acquired prosopagnosia suggest at least two functional variants: an apperceptive form, in which subjects have difficulty perceiving facial structure, and an associative/amnestic form, in which perception is relatively spared but the ability to access memories of previously seen faces is disrupted (Barton, 2008; Davies-Thompson et al., 2014). In acquired prosopagnosia, the apperceptive variant has been linked to right or bilateral occipitotemporal lesions, and the associative variant to right or bilateral anterior temporal lesions (Damasio et al., 1990; Barton, 2008).

The fact that voice recognition also activates the right anterior temporal lobes (Belin et al., 2003) has prompted questions as to whether some cases of associative prosopagnosia may instead have a multi-modal person recognition disorder, since voice recognition is seldom tested (Gainotti, 2013). This question has not only theoretical implications but also potential clinical relevance: as there is some evidence that simultaneous voice processing can enhance face learning (Bulthoff et al., 2015), impaired voice processing could accentuate prosopagnosic deficits, while intact voice processing might offer rehabilitative avenues. To address this, a recent study of acquired prosopagnosia found that voice recognition was unaffected after right anterior temporal lesions but impaired by bilateral anterior temporal lesions (Liu et al., 2014). These data tentatively suggest that impaired face recognition can be divided into an apperceptive form linked to occipitotemporal lesions, an associative form linked to right anterior temporal lesions, and a multimodal person recognition syndrome, with parallel associative deficits in face and voice processing, typically after bilateral anterior temporal lesions. These preliminary function-structure correlations require replication.

These observations are also relevant to the developmental form of prosopagnosia, whose functional and structural basis remains uncertain. Recent studies suggest the possibility of similar apperceptive, associative and other variants in developmental prosopagnosia (Stollhoff et al., 2011; Susilo et al., 2013; Dalrymple et al., 2014). Less known is whether it is a modality-specific disorder or part of a multi-modal syndrome: currently there is only one report that studied voice recognition in one developmental prosopagnosic subject, which found some impairment (von Kriegstein et al., 2006), and brief mention of inferior voice recognition in another who also had Asperger syndrome (Kracke, 1994). Addressing these functional questions in developmental prosopagnosia may help guide and refine studies of this condition.

The goal of this study was to apply the same two tests of voice discrimination and recognition used in the study of acquired prosopagnosia (Liu et al., 2014) to a cohort with developmental prosopagnosia. We asked a) whether this condition is a modality-specific disorder of face recognition or part of a multi-modal person recognition syndrome, and b) whether the results were homogeneous or heterogeneous across the cohort.

2. MATERIAL AND METHODS

2.1 Subjects

We studied 12 subjects with developmental prosopagnosia (9 females, age range 27 – 67 years, mean 41.82 years, s.d. 12.91 years). These were local residents and native English speakers recruited from www.faceblind.org. Diagnostic criteria included an in-person clinical interview that revealed a subjective report of life-long difficulty in face recognition, and objective confirmation of impaired face recognition, comprising a score at least 2 standard deviations below the control mean on the Cambridge Face Memory Test (Duchaine et al., 2006b) and a discordance between preserved word memory and impaired face memory on the Warrington Recognition Memory Test for Faces and Words (Warrington, 1984) that fell in the bottom 5th percentile (Table 1). One subject had had a unilateral retinal detachment, since corrected and now with normal acuity and fields; no other subject reported perceptual problems. Apart from mild concussions years prior in four subjects, none had other neurologic problems. None of these subjects reported any significant changes in face perception associated with their mild concussions or other medical events. All subjects had best corrected visual acuity of better than 20/60, normal visual fields on Goldmann perimetry, and normal color vision on the Farnsworth-Munsell hue discrimination test. To exclude autism spectrum disorders, all subjects had a score less than 32 on the Autism-Spectrum Quotient (AQ; Baron-Cohen et al., 2001).
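The inclusion rules above can be expressed as a single predicate. The sketch below is a hypothetical encoding, not the authors' procedure: the `Candidate` fields, and the convention that a lower `wrmt_discordance_pct` means a more extreme words-minus-faces discordance, are our assumptions, and the visual and neurological screens are omitted.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical fields; values would come from the interview and tests described above.
    lifelong_complaint: bool      # self-reported life-long face recognition difficulty
    cfmt_z: float                 # CFMT score as a z-score relative to control norms
    wrmt_discordance_pct: float   # percentile rank of the WRMT words-minus-faces discordance
                                  # (assumed: lower percentile = more abnormal discordance)
    aq_score: int                 # Autism-Spectrum Quotient total


def meets_dp_criteria(c: Candidate) -> bool:
    """Return True if a candidate satisfies the stated inclusion/exclusion rules."""
    return (
        c.lifelong_complaint
        and c.cfmt_z <= -2.0               # at least 2 SD below the control mean
        and c.wrmt_discordance_pct <= 5.0  # within the bottom 5th percentile
        and c.aq_score < 32                # AQ below 32 to exclude autism spectrum disorder
    )


# Hypothetical example values, not data from this study.
print(meets_dp_criteria(Candidate(True, -2.4, 3.0, 18)))  # True
```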

Table 1.

Demographics and face processing scores.

| Subject | Gender | Age (yr) | CFPT upright | CFPT inverted | CFMT | WRMT Faces | WRMT Words | WRMT W-F | MRI |
|---|---|---|---|---|---|---|---|---|---|
| DP003 | F | 36 | 74 | 90 | 35 | 28 | 50 | 22 | no |
| DP008 | F | 62 | 48 | 96 | 36 | 36 | 49 | 13 | yes |
| DP014 | M | 43 | 64 | 60 | 32 | 30 | 48 | 18 | yes |
| DP016 | F | 52 | 48 | 72 | 41 | 37 | 49 | 12 | yes |
| DP021 | F | 29 | 36 | 80 | 37 | 33 | 50 | 17 | yes |
| DP024 | F | 35 | 62 | 74 | 41 | 38 | 50 | 12 | yes |
| DP029 | F | 28 | 44 | 76 | 42 | 36 | 49 | 13 | no |
| DP032 | M | 67 | 68 | 84 | 42 | 37 | 47 | 10 | no |
| DP033 | F | 47 | 52 | 82 | 29 | 39 | 50 | 11 | contraindicated |
| DP035 | M | 40 | 86 | 68 | 36 | 35 | 49 | 14 | yes |
| DP038 | F | 28 | 32 | 66 | 39 | 36 | 49 | 13 | yes |
| DP044 | F | 37 | 68 | 82 | 40 | 34 | 49 | 15 | yes |

CFMT - Cambridge face memory test

CFPT - Cambridge face perception test

WRMT - Warrington recognition memory test, W-F = words score minus faces score

Bold/Underline - Impaired performance

Although it is not part of current diagnostic criteria (Behrmann et al., 2005; Susilo et al., 2013; Bate et al., 2014), eight subjects had magnetic resonance brain imaging with T1-weighted and FLAIR sequences to exclude structural lesions that would have indicated early acquired rather than developmental prosopagnosia (Barton et al., 2003); in one subject MRI was contraindicated and in the remaining three it was declined because of time limitations (Table 1). To evaluate face discrimination, subjects also completed the Cambridge Face Perception Test (CFPT; Duchaine et al., 2007).

Seventy-three control subjects completed the voice discrimination test (50 females, age range 19 – 70 years, mean 33.6 years, s.d. 15.5 years), of whom 54 also completed the voice recognition test (41 females, age range 19–70 years, mean 37.2 years, s.d. 16.4 years). All subjects were born in North America, lived in North America for at least 5 years, and spoke English as their first language. All control subjects reported normal or corrected-to-normal vision, normal hearing, and no history of brain damage. All control subjects were remunerated ten dollars per hour for their participation.

All subjects gave informed consent to a protocol approved by the University of British Columbia and Vancouver General Hospital Ethics Review Boards.

2.2 Apparatus for testing voice processing

All tests were constructed with SuperLab (www.superlab.com) software and performed on an IBM Lenovo laptop with a resolution of 1280×800 pixels. Subjects wore a Panasonic RP-HTX7 headset throughout testing and sat approximately 57 centimeters from the screen, in a quiet and dimly lit room with the door closed.

2.3 Stimuli

Audio-clips were generated from volunteers aged 20 to 31 years (Liu et al., 2014): 20 males and 20 females for the discrimination test, and 21 males and 21 females for the recognition test. No audio-clip was used more than once as a target or more than once as a distractor.

Voice discrimination

This used a match-to-sample design. Each volunteer read two different texts. For the initial sample, the volunteer read the phrase: “This is by far one of the most amazing books I have ever read, it tells the story of a Colombian family across generations”. For match and distractor stimuli, both voices read: “After a hearty breakfast, we decided to go for a walk on the beach. It was a lovely morning with the crisp smell of the ocean in the air”. All audio-clips began with 1s of silence, then approximately 10s of the voice, and ended with 1.5s of silence.

Voice Recognition

Two sets of audio-clips were made, with each volunteer contributing two recordings to each set. The first, the “question component”, was recorded in interview style, with volunteers answering two questions. For the learning phase we asked, “What was your favorite childhood activity?”, while for the test phase we asked, “What was your favorite vacation?” The second, the “passages component”, was recorded in narrative style: volunteers read a random passage from Alice Munro’s short story “Too Much Happiness” for the learning phase, and a random passage from the short story “Friend of My Youth” for the testing phase. All volunteers read different passages. For both components, each audio-clip began with 200ms of silence, followed by 12.5s of speech and then 700ms of silence.

2.4 Protocol

Voice Discrimination

Each trial started with a screen displaying “Target Voice” that was accompanied by the audio-clip of the sample voice. After a 1.5s pause, there was a ring tone lasting 875ms, which served as an auditory mask. This was followed by the audio-clip of the first choice voice accompanied by a screen displaying “Choice 1”, and then the audio-clip of the second choice voice with the visual display “Choice 2”. One of the two choice voices was the match, from the same person to whom the sample voice belonged, and the other a distractor, from a different person of the same gender. Matches and distractors were given in random order. After the last choice voice, a screen prompted the subject to indicate with a keypress which choice voice matched the sample voice. There were 40 trials given in two blocks, one with 20 male and one with 20 female trials.
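One way to generate a trial list consistent with this design is sketched below. It assumes, as the stimulus description implies but does not state exactly, that within each gender block every speaker serves once as a target and once as a distractor; the cyclic pairing scheme, the `Trial` fields and the seed are hypothetical. The block-order randomization described at the end of the Protocol is folded in.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Trial:
    block: str           # "male" or "female"
    speaker: int         # speaker of the sample and matching choice voice
    distractor: int      # a different speaker of the same gender
    match_position: int  # 1 or 2: which choice voice is the match


def build_discrimination_schedule(n_per_block: int = 20,
                                  seed: Optional[int] = None) -> List[Trial]:
    rng = random.Random(seed)
    blocks = ["male", "female"]
    rng.shuffle(blocks)  # block order randomized across subjects
    schedule = []
    for block in blocks:
        order = list(range(n_per_block))
        rng.shuffle(order)
        for k, speaker in enumerate(order):
            # Cyclic shift of a shuffled order: every speaker is a target once and a
            # distractor once, and never serves as its own distractor (needs n >= 2).
            distractor = order[(k + 1) % n_per_block]
            schedule.append(Trial(block, speaker, distractor, rng.choice((1, 2))))
    return schedule


trials = build_discrimination_schedule(seed=7)
print(len(trials))  # 40
```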

Voice Recognition

This tested the ability to remember voices over a short interval and with intervening interference, similar to standard tests of face familiarity such as the Warrington Recognition Memory Test (Warrington, 1984) and the Cambridge Face Memory Test (Duchaine et al., 2006b). Our pilot work found that healthy subjects performed at chance when asked to recall five voices sequentially. Hence our recognition test used sets of three trials. Each set started with a screen that displayed “Learning Phase” for 3s. During this phase, the subject heard audio-clips of 3 target voices in a row. During the first target voice the screen displayed “Voice A”, with the second it displayed “Voice B”, and with the third “Voice C”. Each audio-clip was followed by a 875ms ring tone. After completion of the learning phase, which lasted approximately 46s, the screen displayed “Testing Phase” for 3s. Subjects then heard 3 pairs of choice voices. In the first pair, one of the two choice voices was from the person who was “Voice A” in the learning phase, while the other was a distractor from a different person of the same gender. The temporal order of matches and distractors was randomized. When the first choice voice was playing, the screen displayed “Voice A Choice 1”, and with the second it displayed “Voice A Choice 2”. Each audio-clip was followed by a 875ms ring tone. A screen then prompted subjects to indicate by keypress which of the two choice voices matched Voice A. This was then followed by a similar sequence for Voices B and C. Each set of three trials contained at least one trial for each gender. Subjects completed seven sets (21 trials) with stimuli from the ‘question component’, and another seven with stimuli from the ‘passages component’, for a total of 42 trials.
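As a check on the stated learning-phase duration of approximately 46s, the arithmetic below adds up the component durations given in the Stimuli and Protocol sections. It assumes the 3s “Learning Phase” screen is included in that figure (the text does not say) and ignores software latency and keypress time; the constant and function names are ours.

```python
# Durations (seconds) taken from the Stimuli and Protocol descriptions.
SILENCE_BEFORE = 0.2
SPEECH = 12.5
SILENCE_AFTER = 0.7
RING_MASK = 0.875
PHASE_SCREEN = 3.0       # "Learning Phase" / "Testing Phase" title screens
VOICES_PER_SET = 3
SETS_PER_COMPONENT = 7
COMPONENTS = 2           # 'question' and 'passages'


def clip_plus_mask() -> float:
    """One audio-clip followed by its ring-tone mask."""
    return SILENCE_BEFORE + SPEECH + SILENCE_AFTER + RING_MASK


def learning_phase() -> float:
    # Assumption: the 3 s title screen counts toward the learning phase.
    return PHASE_SCREEN + VOICES_PER_SET * clip_plus_mask()


def testing_phase_audio() -> float:
    # Two choice clips per target voice; response time is not included.
    return PHASE_SCREEN + VOICES_PER_SET * 2 * clip_plus_mask()


total_trials = COMPONENTS * SETS_PER_COMPONENT * VOICES_PER_SET
print(round(learning_phase()), total_trials)  # 46 42
```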

All subjects completed the voice discrimination test first, with subjects randomized to start with either male or female stimuli, and the option for a short break between the two blocks. There was a break of at least 10 minutes before the voice recognition test, in which the ‘question component’ was presented first and the ‘passages component’ second.

2.5 Analysis

We calculated accuracy for each subject. Because our prior evaluation of these control subjects showed an effect of age but not gender (Liu et al., 2014), our individual-subject analysis regressed control accuracy on age and used the residual variance of this regression to calculate the age-adjusted 95% prediction intervals appropriate for single-subject comparisons.
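A minimal sketch of this single-subject comparison, assuming ordinary least-squares regression of control accuracy on age and the standard prediction interval for a new observation. The text does not say whether the 95% limit was one- or two-sided, so the critical t value (with n − 2 degrees of freedom, obtainable for example from `scipy.stats.t.ppf`) is passed in rather than computed; the function names are hypothetical.

```python
import math
from statistics import fmean
from typing import NamedTuple, Sequence


class AgeFit(NamedTuple):
    slope: float
    intercept: float
    resid_sd: float   # residual standard error, sqrt(SSE / (n - 2))
    mean_age: float
    sxx: float        # sum of squared age deviations
    n: int


def fit_age_regression(ages: Sequence[float], scores: Sequence[float]) -> AgeFit:
    """Ordinary least-squares regression of control accuracy on age."""
    n = len(ages)
    x_bar, y_bar = fmean(ages), fmean(scores)
    sxx = sum((x - x_bar) ** 2 for x in ages)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(ages, scores))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(ages, scores))
    return AgeFit(slope, intercept, math.sqrt(sse / (n - 2)), x_bar, sxx, n)


def lower_prediction_limit(fit: AgeFit, age: float, t_crit: float) -> float:
    """Lower bound of the prediction interval for one new subject of a given age."""
    y_hat = fit.intercept + fit.slope * age
    se_pred = fit.resid_sd * math.sqrt(1 + 1 / fit.n + (age - fit.mean_age) ** 2 / fit.sxx)
    return y_hat - t_crit * se_pred
```

Under this sketch, a prosopagnosic subject would be flagged as impaired when their accuracy falls below `lower_prediction_limit(fit, subject_age, t_crit)`, with `fit` estimated from the controls only.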

Second, we performed group analyses, using two methods to generate control groups age-matched to our prosopagnosic group. The first, ‘age-paired’ method paired each prosopagnosic subject with the control subject closest in age, giving 12 subjects in each group. For voice discrimination, this yielded a control group with mean age of 41.82 years (s.d. 12.7), equivalent to that of our prosopagnosic group (t(11) = 0.0002, p = 0.99). For voice recognition, this yielded a control group with mean age of 41.84 years (s.d. 12.7), also equivalent to that of our prosopagnosic group (t(11) = 0.005, p = 0.99).
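The ‘age-paired’ matching can be sketched as a greedy nearest-age search. The text does not state how ties or competition for the same control were resolved; this hypothetical version processes patients in the given order and uses each control at most once, so a different processing order could yield a different, equally valid matched group.

```python
from typing import List, Sequence


def age_paired_controls(patient_ages: Sequence[float],
                        control_ages: Sequence[float]) -> List[int]:
    """Greedily pair each patient with the closest-in-age unused control.

    Returns control indices in patient order. Ties go to the lower index.
    """
    available = list(range(len(control_ages)))
    chosen = []
    for age in patient_ages:
        best = min(available, key=lambda i: abs(control_ages[i] - age))
        chosen.append(best)
        available.remove(best)  # each control used at most once
    return chosen


print(age_paired_controls([30, 31], [29, 30, 50]))  # [1, 0]
```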

The second, ‘age-range’ method capitalized on the statistical advantage of the large number of control subjects. We included all controls whose ages fell within the range spanning the minimum and maximum ages of our prosopagnosic subjects. For voice discrimination, this gave a control group of 35 subjects whose mean age of 42.24 years (s.d. 13.0) did not differ from that of the prosopagnosia group (t(20) = 0.09, p = 0.93), while for voice recognition it gave a control group of 32 subjects with mean age of 43.33 years (s.d. 13.0), again not differing from the prosopagnosia group (t(19) = 0.35, p = 0.73). Voice scores were compared between groups using t-tests for both the voice discrimination and voice recognition tests.
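The ‘age-range’ selection and a two-sample comparison are sketched below. The text says only that t-tests were used; the reported degrees of freedom (for example t(20) for 12 versus 35 subjects) fall well below the pooled-variance value of 45 and are closer to what Welch's unequal-variance test would give, so we assume Welch's test here. That is our inference, not a stated method, and the function names are hypothetical.

```python
import math
from statistics import fmean, variance
from typing import List, Sequence, Tuple


def age_range_controls(patient_ages: Sequence[float],
                       control_ages: Sequence[float]) -> List[int]:
    """Indices of controls whose age lies within the patients' min-max age range."""
    lo, hi = min(patient_ages), max(patient_ages)
    return [i for i, a in enumerate(control_ages) if lo <= a <= hi]


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Welch's unequal-variance t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (fmean(a) - fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


print(age_range_controls([27, 67], [19, 27, 45, 67, 70]))  # [1, 2, 3]
```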

2.6 Questionnaire about face and voice identification

Subjects with prosopagnosia often report that they rely on voices to recognize people. However, some have noted that these subjective reports may not correlate with objective testing (Boudouresques et al., 1979; Liu et al., 2014). To examine the relationship between self-report and our objective testing, we asked all subjects to complete the questionnaire about their daily life experiences in face and voice identification that we had administered to subjects with acquired prosopagnosia (Liu et al., 2014). Five questions addressed face identification and five voice identification, and subjects responded using a 7-point Likert scale, giving separate face and voice scores out of 35, with higher scores indicating more difficulty. All developmental prosopagnosia subjects and 43 control subjects (mean age 36.2 years, s.d. 15.6, range 19–70) completed the questionnaire. Our prior study found no significant effect of age or gender on questionnaire scores in control subjects (Liu et al., 2014).

3. RESULTS

Voice discrimination

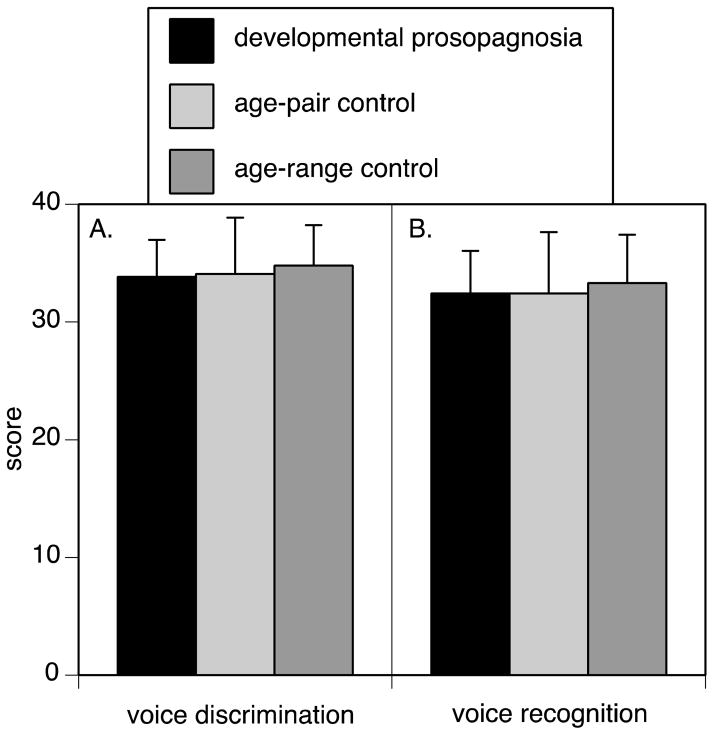

Eleven of 12 prosopagnosic subjects were normal, while one, DP035, had an abnormal score, at the 96.5th percentile (Figure 1A). In the group analysis (Figure 2), there was no difference from either the age-paired (t(11) = 0.15, p = 0.88) or the age-range controls (t(30) = 0.26, p = 0.73).

Figure 1. Voice scores plotted as a function of age.

A. voice discrimination, B. voice recognition. Control subjects (small dots) in both tests show a significant decline with age. Solid line shows the mean of the linear regression and the dotted line shows the age-adjusted lower 95% prediction limit. Subject DP035 is impaired on voice discrimination and has borderline performance on voice recognition. Subject DP021 performs normally, despite reporting voice recognition difficulties on the questionnaire.

Figure 2. Age-matched group analyses.

A. voice discrimination, B. voice recognition. The prosopagnosia group is compared with the 12 control subjects closest in age (age-paired controls), and with all control subjects falling in the range of 27 to 67 years (age-range controls). Group means are shown, with error bars showing one standard deviation.

Voice recognition

All 12 prosopagnosic subjects were normal, although DP035 was borderline, at the 94th percentile (Figure 1B). The group analysis (Figure 2) showed no difference between the prosopagnosic group and either age-paired (t(11) = 0.00, p = 1.00) or age-range controls (t(21) = 0.71, p = 0.48).

To compare the severity of short-term familiarity deficits for voices and faces, we correlated z-scores on the voice recognition test and the Cambridge Face Memory Test (Figure 3). Subject DP035 had equivalent deficits for faces and voices, but as a group, developmental prosopagnosic subjects were worse on face than on voice recognition (t(11) = 9.11, p < 0.0001).
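The face-versus-voice comparison can be sketched as converting each test to a z-score against its control norms and then running a paired t-test on the per-subject differences. The control means and standard deviations, and whether the voice z-scores were age-adjusted (for example, computed from regression residuals), are not given in this section, so the inputs here are placeholders and the function names are hypothetical.

```python
import math
from statistics import fmean, stdev
from typing import Sequence, Tuple


def z_score(score: float, control_mean: float, control_sd: float) -> float:
    """Negative values indicate performance below the control mean."""
    return (score - control_mean) / control_sd


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired t-test on per-subject differences x - y; returns (t, df)."""
    d = [a - b for a, b in zip(x, y)]
    return fmean(d) / (stdev(d) / math.sqrt(len(d))), len(d) - 1


# Placeholder norms, not the study's values.
print(z_score(60, 80, 10))  # -2.0
```

With `x` as the voice z-scores and `y` as the CFMT z-scores, a positive t indicates that the group is more impaired for faces than for voices.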

Figure 3. Voice versus face recognition in developmental prosopagnosia.

Z scores for the voice recognition test are plotted against z-scores for the Cambridge Face Memory Test (CFMT), with negative z-scores indicating impairment. Subject DP035 has equivalent deficits for face and voice recognition, but other subjects are more impaired for face recognition.

Questionnaire

All but two subjects with developmental prosopagnosia reported impairment on the face recognition portion of the questionnaire; DP008 and DP029 had borderline scores (Table 2). Only one subject (DP021) reported difficulty with voice recognition. However, this subject scored close to the control age-adjusted mean for both voice discrimination and voice recognition (Figure 1). Of note, DP035 was unaware of problems with voice recognition.

Table 2.

Voice and face processing questionnaire results.

| Subject | Faces (score 0–35) | Voices (score 0–35) |
|---|---|---|
| Control mean | 10.16 | 13.14 |
| Control s.d. | 3.88 | 4.10 |
| Control 95% upper limit | 19.39 | 22.89 |
| DP003 | 20 | 9 |
| DP008 | 18 | 12 |
| DP014 | 27 | 7 |
| DP016 | 22 | 13 |
| DP021 | 31 | 27 |
| DP024 | 24 | 8 |
| DP029 | 18 | 8 |
| DP032 | 28 | 12 |
| DP033 | 26 | 18 |
| DP035 | 32 | 14 |
| DP038 | 22 | 21 |
| DP044 | 23 | 17 |

bold underline indicates abnormal scores
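Applying the table's control limits to each subject's scores reproduces the classification reported in the Results. The sketch assumes a score counts as abnormal only when it strictly exceeds the 95% upper limit; the data structure and function name are ours.

```python
# Control 95% upper limits and subject scores copied from Table 2 (higher = more difficulty).
UPPER_LIMIT = {"faces": 19.39, "voices": 22.89}
SCORES = {  # subject: (faces, voices)
    "DP003": (20, 9), "DP008": (18, 12), "DP014": (27, 7), "DP016": (22, 13),
    "DP021": (31, 27), "DP024": (24, 8), "DP029": (18, 8), "DP032": (28, 12),
    "DP033": (26, 18), "DP035": (32, 14), "DP038": (22, 21), "DP044": (23, 17),
}


def abnormal(domain: str) -> list:
    """Subjects whose self-reported difficulty exceeds the control upper limit."""
    idx = 0 if domain == "faces" else 1
    return sorted(s for s, scores in SCORES.items() if scores[idx] > UPPER_LIMIT[domain])


print(abnormal("voices"))  # ['DP021']
```

`abnormal("faces")` returns every subject except DP008 and DP029, whose scores of 18 fall just below the 19.39 limit.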

4. DISCUSSION

Most of our subjects with developmental prosopagnosia had intact discrimination and recognition of newly learned voices, indicating a modality-specific visual agnosia rather than a multi-modal person recognition syndrome. On the other hand, one of 12 subjects had borderline results that were not definitive for either normality or abnormality. Nevertheless, his low scores on both voice tests, together with a voice recognition deficit similar in severity to his face recognition deficit, point to a possible impairment, which could indicate that a subset of developmental prosopagnosic subjects have voice recognition deficits. This is reminiscent of the report of impaired voice recognition in subject SO (von Kriegstein et al., 2006), although the parallel is not exact: SO was impaired not on a similar test of recognition of newly learned voices, but on recognition of voices from daily life. Compared to our acquired prosopagnosic subjects (Liu et al., 2014), DP035 is most functionally similar to B-ATOT2, a subject with bilateral fusiform and anterior temporal lesions, in that he is impaired not only on voice and face recognition tests but also on voice and face discrimination tests. This suggests the co-occurrence of apperceptive defects for voices and faces, rather than a single amodal recognition deficit.

The potential for voice recognition to be affected in developmental prosopagnosia rests on several grounds. First, some studies have suggested that the fusiform region is anomalous in developmental prosopagnosia (Garrido et al., 2009b; Furl et al., 2011), and neuroimaging studies indicate that the fusiform face area is sensitive to voice processing (von Kriegstein et al., 2005; von Kriegstein et al., 2006; Schweinberger et al., 2011) and has connections with voice-sensitive areas in the superior temporal sulcus (Blank et al., 2011). Against this, our study of acquired prosopagnosia failed to find any impairment of voice discrimination or recognition of recently heard voices when lesions were limited to the fusiform gyri (Liu et al., 2014). Similarly, voice recognition was intact in subject MS, an acquired prosopagnosic subject who had damage to bilateral fusiform gyri and the right anterior temporal lobe (Arnott et al., 2008), and was even superior to controls in subject SB, who had extensive right-sided damage including the fusiform gyrus and less extensive left occipitotemporal injury (Hoover et al., 2010).

A second scenario focuses on the anterior temporal lobe. Investigators noting normal activation of the fusiform face area in developmental prosopagnosia (Avidan et al., 2005; Avidan et al., 2009) have performed morphometric studies reporting abnormalities in anterior fusiform cortex and the anterior and middle temporal lobes (Behrmann et al., 2007). Functional neuroimaging has revealed temporal voice areas in the middle and anterior superior temporal sulcus, more so on the right (Belin et al., 2000), which show sensitivity to voice identity in adaptation studies (Belin et al., 2003; Warren et al., 2006) and activity correlations with the familiarity of voices (Bethmann et al., 2012). It must be noted, though, that the anterior temporal regions responding to facial identity are more ventral than temporal voice areas (Belin et al., 2000) and do not overlap (Joassin et al., 2011). Nevertheless, if anterior or middle temporal anomalies underlie developmental prosopagnosia, these could theoretically disrupt voice processing also. However, our previous study found that impairments of both face and voice recognition were more likely to occur with bilateral than just right anterior temporal damage (Liu et al., 2014).

Both of these last points are consistent with the literature on multi-modal person recognition syndromes. First, of the six cases documenting impaired familiarity for both voices and faces with preserved discrimination, all four with lesion information had anterior temporal lesions, including QR and KL (Hailstone et al., 2010), as well as B-AT1 and B-AT2 (Liu et al., 2014). Such information was not available for cases 13 and 37 (Neuner et al., 2000). Second, lesions were either bilateral, as in B-AT1, B-AT2, and case 13 (Liu et al., 2014; Neuner et al., 2000), or, if predominantly right-sided as in QR and KL (Hailstone et al., 2010), arose from atrophic degeneration, in which damage is diffuse and highly likely to involve the left hemisphere to some degree. Case 13 of Neuner et al. (2000) had a right-sided lesion, but its pathology was not given. Even if we extend this list to include subjects in whom the data on face or voice discrimination are insufficient to exclude apperceptive deficits (e.g. Emma (Gentileschi et al., 2001), Maria (Gentileschi et al., 1999), CD (Gainotti et al., 2008) and case 31 (Neuner et al., 2000)), these two points still hold: mainly anterior temporal damage, with either bilateral lesions or right-dominant lesions of an etiology that cannot exclude a degree of bilaterality. Third, in functional neuroimaging of healthy subjects, temporal voice areas are found in the middle and anterior superior temporal sulci, and while these show right dominance, they are found bilaterally (Belin et al., 2000). Likewise, fMRI adaptation studies reveal sensitivity to voice identity in the right anterior superior temporal sulcus in some studies (Belin et al., 2003) but in bilateral posterior superior temporal sulci in others (Warren et al., 2006).

At this point it is not definitive whether the pathology in developmental prosopagnosia is focal or diffuse, unilateral or bilateral, anterior temporal or fusiform, homogeneous or heterogeneous. Do the behavioural data help? First, although it was not our focus, the fact that many but not all of our subjects performed well when discriminating between faces on the Cambridge Face Perception Test suggests that most have a form of anterior temporal rather than fusiform dysfunction. This inference aligns with the conclusions of some morphometric studies suggesting abnormalities in more anterior aspects of inferotemporal cortex (Behrmann et al., 2007; Garrido et al., 2009b). Second, the preservation of voice recognition suggests a resemblance to acquired prosopagnosia with right anterior temporal rather than bilateral lesions. Some structural studies of developmental prosopagnosia have found temporal abnormalities only in the right hemisphere (Garrido et al., 2009b), while others failed to find a hemispheric asymmetry (Behrmann et al., 2007; Thomas et al., 2009). All told, though, observations by us and others (Stollhoff et al., 2011) that some developmental prosopagnosic subjects show face discrimination deficits while others do not, and that some have voice recognition deficits (von Kriegstein et al., 2006) while others do not, suggest a spectrum of structural and functional anomalies in developmental prosopagnosia.

Finally, we close with two technical points. First, our data underline the value of formal perceptual testing. This point has been made for face recognition: subjective impressions or questionnaires are not always corroborated by formal face processing tests (De Haan, 1999). Similarly, although most prosopagnosic subjects claim to rely on voices to identify others, objective tests have revealed asymptomatic voice recognition deficits in some (Boudouresques et al., 1979; Liu et al., 2014). Here we found one subject with an asymptomatic impairment and another who performed well despite claiming poor voice recognition. Thus objective tests, rather than subjective reports, are required to clarify whether a face recognition impairment is a modality-specific deficit or part of a multi-modal person recognition syndrome, and so to situate the deficit correctly in the hierarchy of cognitive operations involved in recognizing people.

Second, our test of voice recognition involved recently learned voices, as in some other studies (Hoover et al., 2010; Garrido et al., 2009a; Roswandowitz et al., 2014), rather than famous or personally familiar voices. Recently learned and long-known voices almost certainly differ behaviourally and neurally (Blank et al., 2014), given the richer emotional, episodic and semantic associations of known faces and voices. Nevertheless, there are pragmatic advantages to using recently familiarized rather than personally known voices in testing. For one, the degree of exposure to famous face and voice stimuli will vary widely across subjects for individual and cultural reasons, whereas exposure to faces made familiar during testing can be controlled and made uniform in a cohort. Personally familiar people such as relatives and friends can be used in a single case study, but not in a fashion that is equivalent across subjects and controls in a cohort study. For these reasons, the diagnosis of developmental prosopagnosia has come to rest on two things: first, self-report of lifelong impairment in face recognition, and second, impaired performance on a test of face memory, most often the Cambridge Face Memory Test (Duchaine et al., 2006a), which assesses familiarity of recently viewed faces (e.g. DeGutis et al., 2014; Shah et al., 2015). Many studies also require impairment on an additional test of face processing, which could be a test of face discrimination, configural face processing, or face familiarity (famous or newly learned; e.g. Avidan et al., 2014; Johnen et al., 2014). Importantly, in either case, it is typically not a requirement that subjects show impairment on a test of famous or personally familiar face memory. With far fewer published cases and a consequent lack of well-established objective tests, the diagnostic protocol for developmental phonagnosia is less settled. Yet a recent study used a similar approach for developmental phonagnosia, with inclusion criteria resting on reported lifelong impairment in voice recognition and impaired performance on a test of newly learned voices, but not famous voices (Roswandowitz et al., 2014).

Furthermore, while we and others still test famous face recognition despite the above reservations, famous voice recognition is notoriously poor in healthy subjects. On one test of famous voice recognition controls could name only about 50% of 79 voices (Meudell et al., 1980), and on another only 18 of 96 voices (Garrido et al., 2009a). In another study (Hailstone et al., 2010), control subjects on a 48-item test of familiarity for famous voices scored as low as 29/48, which by binomial proportions is at the upper limit of chance performance.
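The binomial argument can be made concrete. Assuming each of the 48 items is a two-alternative familiar/unfamiliar decision (so p = 0.5 for a guesser; this is an assumption, since the item format is not described here), the probability of reaching 29/48 or better by chance alone is still above the conventional 0.05 criterion. The function names are ours.

```python
from math import comb


def upper_tail(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): chance of scoring k or more by guessing."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))


def chance_ceiling(n: int, p: float = 0.5, alpha: float = 0.05) -> int:
    """Smallest score whose one-sided probability under guessing is <= alpha."""
    for k in range(n + 1):
        if upper_tail(k, n, p) <= alpha:
            return k
    return n + 1  # no achievable score is significant


print(upper_tail(29, 48) > 0.05)  # True
```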

On a conceptual level, the use of recognition tests for recently viewed faces reflects the fact that prosopagnosia is not just difficulty recognizing long-known faces, but also the inability to learn to recognize new faces (Damasio et al., 1990). In fact, while anterograde prosopagnosia exists, in which subjects cannot learn to recognize new faces but can still recognize long familiar faces (Tranel et al., 1985; Young et al., 1995), we are not aware of any subject with the converse pattern. In this respect, the behavioural and neural differences between recently learned and long familiar faces are immaterial for the diagnosis of prosopagnosia for most subjects. For all of these reasons, and until there is evidence to the contrary, we believe that the testing of familiarity for recently learned voices and faces is a justifiable and possibly superior means of assessing for the presence of phonagnosia and prosopagnosia respectively.

Acknowledgments

This work was supported by a CIHR operating grant (MOP-102567) to JB. JB was supported by a Canada Research Chair and the Marianne Koerner Chair in Brain Diseases. BD was supported by grants from the Economic and Social Research Council (UK) (RES-062-23-2426) and the Hitchcock Foundation. SC was supported by National Eye Institute of the National Institutes of Health under award number F32 EY023479-02.

Footnotes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Ran R Liu, Email: r.liu@alumni.ubc.ca.

Raika Pancaroglu, Email: raikap@gmail.com.

Brad Duchaine, Email: bradley.c.duchaine@dartmouth.edu.

Jason J S Barton, Email: jasonbarton@shaw.ca.

References

- Arnott SR, Heywood CA, Kentridge RW, Goodale MA. Voice recognition and the posterior cingulate: An fMRI study of prosopagnosia. J Neuropsychol. 2008;2(Pt 1):269–86. doi: 10.1348/174866407x246131.

- Avidan G, Behrmann M. Functional MRI reveals compromised neural integrity of the face processing network in congenital prosopagnosia. Curr Biol. 2009;19(13):1146–50. doi: 10.1016/j.cub.2009.04.060.

- Avidan G, Hasson U, Malach R, Behrmann M. Detailed exploration of face-related processing in congenital prosopagnosia: 1. Functional neuroimaging findings. J Cogn Neurosci. 2005;17(7):1150–1167. doi: 10.1162/0898929054475145.

- Avidan G, Tanzer M, Hadj-Bouziane F, Liu N, Ungerleider LG, Behrmann M. Selective dissociation between core and extended regions of the face processing network in congenital prosopagnosia. Cerebral Cortex. 2014;24:1565–1578. doi: 10.1093/cercor/bht007.

- Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E. The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J Autism Dev Disord. 2001;31(1):5–17. doi: 10.1023/a:1005653411471.

- Barton J, Cherkasova M, Press D, Intriligator J, O’Connor M. Developmental prosopagnosia: A study of three patients. Brain and Cognition. 2003;51:12–30. doi: 10.1016/s0278-2626(02)00516-x.

- Barton JJ. Structure and function in acquired prosopagnosia: Lessons from a series of 10 patients with brain damage. J Neuropsychol. 2008;2(Pt 1):197–225. doi: 10.1348/174866407x214172.

- Bate S, Cook SJ, Duchaine B, Tree JJ, Burns EJ, Hodgson TL. Intranasal inhalation of oxytocin improves face processing in developmental prosopagnosia. Cortex. 2014;50:55–63. doi: 10.1016/j.cortex.2013.08.006.

- Behrmann M, Avidan G. Congenital prosopagnosia: Face-blind from birth. Trends Cogn Sci. 2005;9(4):180–7. doi: 10.1016/j.tics.2005.02.011.

- Behrmann M, Avidan G, Gao F, Black S. Structural imaging reveals anatomical alterations in inferotemporal cortex in congenital prosopagnosia. Cereb Cortex. 2007 (epub). doi: 10.1093/cercor/bhl144.

- Belin P, Zatorre RJ. Adaptation to speaker’s voice in right anterior temporal lobe. Neuroreport. 2003;14(16):2105–9. doi: 10.1097/00001756-200311140-00019.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–12. doi: 10.1038/35002078.

- Bethmann A, Scheich H, Brechmann A. The temporal lobes differentiate between the voices of famous and unknown people: An event-related fMRI study on speaker recognition. PLoS One. 2012;7(10):e47626. doi: 10.1371/journal.pone.0047626.

- Blank H, Anwander A, von Kriegstein K. Direct structural connections between voice- and face-recognition areas. J Neurosci. 2011;31(36):12906–15. doi: 10.1523/JNEUROSCI.2091-11.2011.

- Blank H, Wieland N, von Kriegstein K. Person recognition and the brain: Merging evidence from patients and healthy individuals. Neurosci Biobehav Rev. 2014;47:717–34. doi: 10.1016/j.neubiorev.2014.10.022.

- Boudouresques J, Poncet M, Cherif AA, Balzamo M. Agnosia for faces: Evidence of functional disorganization of a certain type of recognition of objects in the physical world. Bulletin de l’Académie Nationale de Medecine. 1979;163(7):695–702.

- Bruce V, Young A. Understanding face recognition. Brit J Psychol. 1986;77:305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Bülthoff I, Newell FN. Distinctive voices enhance the visual recognition of unfamiliar faces. Cognition. 2015;137:9–21. doi: 10.1016/j.cognition.2014.12.006.

- Dalrymple KA, Garrido L, Duchaine B. Dissociation between face perception and face memory in adults, but not children, with developmental prosopagnosia. Dev Cogn Neurosci. 2014;10:10–20. doi: 10.1016/j.dcn.2014.07.003.

- Damasio AR, Tranel D, Damasio H. Face agnosia and the neural substrates of memory. Annu Rev Neurosci. 1990;13:89–109. doi: 10.1146/annurev.ne.13.030190.000513.

- Davies-Thompson J, Pancaroglu R, Barton J. Acquired prosopagnosia: Structural basis and processing impairments. Front Biosci (Elite Ed) 2014;6:159–74. doi: 10.2741/e699.

- De Haan EH. A familial factor in the development of face recognition deficits. J Clin Exp Neuropsychol. 1999;21(3):312–5. doi: 10.1076/jcen.21.3.312.917.

- DeGutis J, Cohan S, Nakayama K. Holistic face training enhances face processing in developmental prosopagnosia. Brain. 2014;137(Pt 6):1781–98. doi: 10.1093/brain/awu062.

- Duchaine B, Nakayama K. The Cambridge face memory test: Results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia. 2006a;44(4):576–85. doi: 10.1016/j.neuropsychologia.2005.07.001.

- Duchaine B, Yovel G, Nakayama K. No global processing deficit in the Navon task in 14 developmental prosopagnosics. Soc Cogn Affect Neurosci. 2007;2(2):104–13. doi: 10.1093/scan/nsm003.

- Duchaine BC, Nakayama K. The Cambridge face memory test: Results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic patients. Neuropsychologia. 2006b;44:576–85. doi: 10.1016/j.neuropsychologia.2005.07.001.

- Furl N, Garrido L, Dolan RJ, Driver J, Duchaine B. Fusiform gyrus face selectivity relates to individual differences in facial recognition ability. J Cogn Neurosci. 2011;23(7):1723–40. doi: 10.1162/jocn.2010.21545.

- Gainotti G. Is the right anterior temporal variant of prosopagnosia a form of ‘associative prosopagnosia’ or a form of ‘multimodal person recognition disorder’? Neuropsychol Rev. 2013;23(2):99–110. doi: 10.1007/s11065-013-9232-7.

- Gainotti G, Ferraccioli M, Quaranta D, Marra C. Cross-modal recognition disorders for persons and other unique entities in a patient with right fronto-temporal degeneration. Cortex. 2008;44(3):238–48. doi: 10.1016/j.cortex.2006.09.001.

- Garrido L, Eisner F, McGettigan C, Stewart L, Sauter D, Hanley JR, Schweinberger SR, Warren JD, Duchaine B. Developmental phonagnosia: A selective deficit of vocal identity recognition. Neuropsychologia. 2009a;47(1):123–31. doi: 10.1016/j.neuropsychologia.2008.08.003.

- Garrido L, Furl N, Draganski B, Weiskopf N, Stevens J, Tan GC, Driver J, Dolan RJ, Duchaine B. Voxel-based morphometry reveals reduced grey matter volume in the temporal cortex of developmental prosopagnosics. Brain. 2009b;132(Pt 12):3443–55. doi: 10.1093/brain/awp271.

- Gentileschi V, Sperber S, Spinnler H. Progressive defective recognition of people. Neurocase. 1999;5:407–424.

- Gentileschi V, Sperber S, Spinnler H. Crossmodal agnosia for familiar people as a consequence of right infero polar temporal atrophy. Cogn Neuropsychol. 2001;18(5):439–63. doi: 10.1080/02643290125835.

- Hailstone JC, Crutch SJ, Vestergaard MD, Patterson RD, Warren JD. Progressive associative phonagnosia: A neuropsychological analysis. Neuropsychologia. 2010;48(4):1104–14. doi: 10.1016/j.neuropsychologia.2009.12.011.

- Hoover AE, Demonet JF, Steeves JK. Superior voice recognition in a patient with acquired prosopagnosia and object agnosia. Neuropsychologia. 2010;48(13):3725–32. doi: 10.1016/j.neuropsychologia.2010.09.008.

- Joassin F, Pesenti M, Maurage P, Verreckt E, Bruyer R, Campanella S. Cross-modal interactions between human faces and voices involved in person recognition. Cortex. 2011;47(3):367–76. doi: 10.1016/j.cortex.2010.03.003.

- Johnen A, Schmukle SC, Huttenbrink J, Kischka C, Kennerknecht I, Dobel C. A family at risk: Congenital prosopagnosia, poor face recognition and visuoperceptual deficits within one family. Neuropsychologia. 2014;58:52–63. doi: 10.1016/j.neuropsychologia.2014.03.013.

- Kracke I. Developmental prosopagnosia in Asperger syndrome: Presentation and discussion of an individual case. Develop Med Child Neurol. 1994;36:873–86. doi: 10.1111/j.1469-8749.1994.tb11778.x.

- Liu RR, Pancaroglu R, Hills CS, Duchaine B, Barton JJ. Voice recognition in face-blind patients. Cereb Cortex. 2014. doi: 10.1093/cercor/bhu240.

- Meudell PR, Northen B, Snowden JS, Neary D. Long term memory for famous voices in amnesic and normal subjects. Neuropsychologia. 1980;18(2):133–9. doi: 10.1016/0028-3932(80)90059-7.

- Neuner F, Schweinberger SR. Neuropsychological impairments in the recognition of faces, voices, and personal names. Brain Cogn. 2000;44(3):342–66. doi: 10.1006/brcg.1999.1196.

- Roswandowitz C, Mathias SR, Hintz F, Kreitewolf J, Schelinski S, von Kriegstein K. Two cases of selective developmental voice-recognition impairments. Current Biology. 2014;24:2348–2353. doi: 10.1016/j.cub.2014.08.048.

- Schweinberger SR, Kloth N, Robertson DM. Hearing facial identities: Brain correlates of face–voice integration in person identification. Cortex. 2011;47(9):1026–37. doi: 10.1016/j.cortex.2010.11.011.

- Shah P, Gaule A, Gaigg SB, Bird G, Cook R. Probing short-term face memory in developmental prosopagnosia. Cortex. 2015;64:115–122. doi: 10.1016/j.cortex.2014.10.006.

- Stollhoff R, Jost J, Elze T, Kennerknecht I. Deficits in long-term recognition memory reveal dissociated subtypes in congenital prosopagnosia. PLoS One. 2011;6(1):e15702. doi: 10.1371/journal.pone.0015702.

- Susilo T, Duchaine B. Advances in developmental prosopagnosia research. Curr Opin Neurobiol. 2013;23(3):423–9. doi: 10.1016/j.conb.2012.12.011.

- Thomas C, Avidan G, Humphreys K, Jung KJ, Gao F, Behrmann M. Reduced structural connectivity in ventral visual cortex in congenital prosopagnosia. Nat Neurosci. 2009;12(1):29–31. doi: 10.1038/nn.2224.

- Tranel D, Damasio A. Knowledge without awareness: An autonomic index of facial recognition by prosopagnosics. Science. 1985;228:1453–1454. doi: 10.1126/science.4012303.

- von Kriegstein K, Kleinschmidt A, Giraud AL. Voice recognition and cross-modal responses to familiar speakers’ voices in prosopagnosia. Cereb Cortex. 2006;16(9):1314–22. doi: 10.1093/cercor/bhj073.

- von Kriegstein K, Kleinschmidt A, Sterzer P, Giraud AL. Interaction of face and voice areas during speaker recognition. J Cogn Neurosci. 2005;17(3):367–76. doi: 10.1162/0898929053279577.

- Warren JD, Scott SK, Price CJ, Griffiths TD. Human brain mechanisms for the early analysis of voices. Neuroimage. 2006;31(3):1389–97. doi: 10.1016/j.neuroimage.2006.01.034.

- Warrington E. Warrington recognition memory test. Los Angeles: Western Psychological Services; 1984.

- Young A, Aggleton J, Hellawell D, Johnson M, Broks P, Hanley J. Face processing impairments after amygdalotomy. Brain. 1995;118:15–24. doi: 10.1093/brain/118.1.15.