Abstract

Study Objectives:

Manual scoring of polysomnograms is a time-consuming and tedious process. To expedite the scoring of polysomnograms, several computerized algorithms for automated scoring have been developed. The overarching goal of this study was to determine the validity of the Somnolyzer system, an automated system for scoring polysomnograms.

Design:

The analysis sample comprised 97 sleep studies. Each polysomnogram was manually scored by certified technologists from four sleep laboratories and concurrently subjected to automated scoring by the Somnolyzer system. Agreement between manual and automated scoring was examined. Sleep staging and scoring of disordered breathing events were conducted using the 2007 American Academy of Sleep Medicine criteria.

Setting:

Clinical sleep laboratories.

Measurements and Results:

A high degree of agreement was noted between manual and automated scoring of the apnea-hypopnea index (AHI). The average correlation between the manually scored AHI across the four clinical sites was 0.92 (95% confidence interval: 0.90–0.93). Similarly, the average correlation between the manual and Somnolyzer-scored AHI values was 0.93 (95% confidence interval: 0.91–0.96). Thus, interscorer correlation between the manually scored results was no different from that derived from manual and automated scoring. Substantial concordance in the arousal index, total sleep time, and sleep efficiency between manual and automated scoring was also observed. In contrast, differences were noted between manually and automatically scored percentages of sleep stages N1, N2, and N3.

Conclusion:

Automated analysis of polysomnograms using the Somnolyzer system provides results that are comparable to manual scoring for commonly used metrics in sleep medicine. Although differences exist between manual and automated scoring for specific sleep stages, the level of agreement between manual and automated scoring is not significantly different from that between any two human scorers. In light of the burden associated with manual scoring, automated scoring platforms provide a viable complement of tools in the diagnostic armamentarium of sleep medicine.

Citation:

Punjabi NM, Shifa N, Dorffner G, Patil S, Pien G, Aurora RN. Computer-assisted automated scoring of polysomnograms using the Somnolyzer system. SLEEP 2015;38(10):1555–1566.

Keywords: automated scoring, polysomnography

INTRODUCTION

The current standards for scoring polysomnography require that each 30-sec epoch of the overnight recording be classified as wake, nonrapid eye movement (NREM) sleep, or rapid eye movement (REM) sleep.1 Annotating the polysomnogram also entails that a trained technologist identify electroencephalographic (EEG) arousals. Additionally, information garnered from measurements of oro-nasal airflow, respiratory effort, and oxyhemoglobin saturation must be evaluated for disordered breathing events using the criteria established by the American Academy of Sleep Medicine (AASM).1–3 Given the numerous requirements, manual scoring is a time-consuming and tedious process. Even with substantial training and supervision, manual scoring is fraught with problems of interscorer and intra-scorer reliability.4 Although low interrater reliability is a known limitation with manual scoring of arousals and of specific sleep stages (e.g., N1), manual scoring of disordered breathing events is also not without its challenges.4–9 Efforts have been made to facilitate the scoring of polysomnograms with computer-aided algorithms that utilize characteristics of various electrophysiological signals to discriminate sleep stages and to identify disordered breathing events. Technological advancements have brought forth computerized scoring systems which are being increasingly used in the research and clinical arenas.10 Studies examining the validity of automated scoring systems have generally focused on how well these systems approximate the results obtained by visual scoring.11–13 An inherent assumption in these studies is that manual scoring represents a criterion standard. In light of the substantial interscorer variability, manual scoring should be considered, at best, an imperfect reference. Because of the obvious variability in manual scoring, assessing validity of an automated system should not principally be based on how well it approximates the results of manual scoring. 
Rather, an automated scoring system should be considered a worthy alternative if the level of agreement between the automated and manual scoring is comparable to the level of agreement observed between human scorers. The overall objective of the current study was to describe the performance characteristics of a computerized scoring system by examining how well it estimates manually scored results and whether the level of agreement between automated and manual scoring parallels that between human scorers. An additional objective was to determine the value of expert review of polysomnograms after subjecting them to automated analysis. To accomplish these objectives, we used the Somnolyzer (Philips-Respironics, Murrysville, PA) scoring system, an automated system for scoring of polysomnograms, and compared it to manual scoring performed by four sleep laboratories.

METHODS

Study Sample

To assemble a heterogeneous set of polysomnograms, four sleep laboratories certified by the AASM were identified. These laboratories, which were contracted by the sponsor (Philips-Respironics, Murrysville, PA), were as follows: Arkansas Center for Sleep Medicine, Little Rock AR; Lifeline Sleep Centers, Pittsburgh PA; Delaware Sleep Disorder Centers, Wilmington DE; and Center Pointe Sleep Associates, McMurray PA. Each laboratory was asked to select a convenience sample of full-montage recordings from patients (age ≥ 18 y) undergoing polysomnography for diagnosis, titration of positive airway pressure therapy, or both (i.e., a split-night study). The decision to select a diverse set of recordings was motivated by the need to evaluate the performance characteristics of the automated scoring system in a sample representative of clinical practice. The polysomnograms were selected such that the reasons for testing were similarly distributed across the sites. Inclusion of a polysomnogram did not require that the recording be of optimal quality. All of the technicians involved with the study were certified registered polysomnographic technologists (RPSGTs). There was no oversight of the manual scoring either by the sponsor or the investigators. The protocol was approved by the institutional review board of each clinical site.

Polysomnography

The polysomnograms were performed on an Alice 4 (Philips-Respironics, Murrysville, PA) or higher recording system with a montage based on the 2007 recommendations by the AASM.1 The montage was as follows: O2-M1, C4-M1, F4-M1, O1-M2, C3-M2, and F3-M2 electroencephalograms, right and left electrooculograms, submental and bilateral anterior tibialis surface electromyograms, nasal pressure, thermocouples at the nose and mouth, thoracic and abdominal strain gauges, and a pulse oximeter. Sleep-stage scoring was performed on 30-sec epochs according to the 2007 AASM criteria to estimate the percentages of the following sleep stages: N1, N2, slow wave (N3), and REM. Apneas were identified if there was a 90% or greater reduction in thermistor-based airflow for at least 10 sec. Hypopneas were identified if there was a 30% or more reduction in nasal pressure transducer airflow for at least 10 sec that was associated with an oxyhemoglobin desaturation of at least 4% (AASM Recommended Rule 4A). Disordered breathing events were classified as obstructive, central, or mixed based on presence or absence of respiratory effort. The overall apnea-hypopnea index (AHI) was calculated as the total number of apneas and hypopneas per hour of total sleep time. Arousals were scored if there was an abrupt shift in the EEG frequency for at least 3 sec. Sleep recordings were anonymized at the field site and transmitted to a central repository. From this repository, recordings were subsequently accessed by the other sites, as well as by one other scorer from a fourth sleep laboratory for independent scoring. All scorers were certified (i.e., RPSGT) and were blinded to the results of automated scoring. Concurrently, each recording was subjected to automated analysis by the Somnolyzer system followed by expert review. The automated scoring was performed on the premises of the sponsor and the scored files were not edited in any manner prior to the expert review.
Because of the expert review by a certified RPSGT, two sets of values were generated: (1) the raw automated analysis without expert review and (2) automated analysis with the expert review. Thus, in total, six values of each variable were derived from every polysomnogram—four from manual scoring and two from the Somnolyzer system. Financial support for expert review was provided by the sponsor. Details regarding the Somnolyzer automated scoring system have been previously published.14
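The event thresholds and the AHI definition given above can be illustrated with a short sketch. This is not the Somnolyzer algorithm or any scorer's actual workflow; the `RespiratoryEvent` record, its field names, and the two helper functions are hypothetical and simply encode the numeric rules quoted in the text (90% thermistor flow reduction for apneas, 30% nasal pressure reduction with at least 4% desaturation for hypopneas, 10-sec minimum duration, events per hour of total sleep time).

```python
from dataclasses import dataclass


@dataclass
class RespiratoryEvent:
    """Hypothetical summary of one candidate event (field names are assumptions)."""
    thermistor_reduction_pct: float      # drop in thermistor-based airflow
    nasal_pressure_reduction_pct: float  # drop in nasal pressure airflow
    duration_s: float                    # event duration in seconds
    desaturation_pct: float              # associated oxyhemoglobin desaturation


def classify_event(ev):
    """Return 'apnea', 'hypopnea', or None using the thresholds quoted in the text."""
    if ev.duration_s < 10:
        return None
    if ev.thermistor_reduction_pct >= 90:
        return "apnea"
    if ev.nasal_pressure_reduction_pct >= 30 and ev.desaturation_pct >= 4:
        return "hypopnea"
    return None


def apnea_hypopnea_index(events, total_sleep_time_min):
    """Apneas plus hypopneas per hour of total sleep time."""
    if total_sleep_time_min <= 0:
        raise ValueError("total sleep time must be positive")
    n_events = sum(1 for ev in events if classify_event(ev) is not None)
    return n_events / (total_sleep_time_min / 60.0)
```

For example, two qualifying events over 360 min of sleep give an AHI of 2 / 6 events/h. The sketch ignores the obstructive, central, and mixed classification, which depends on respiratory effort signals.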

Statistical Analysis

Data on manual and automated scoring was available for the following variables: (1) AHI; (2) sleep stage percentages (N1, N2, N3, and REM sleep); (3) overall arousal index; (4) total sleep time; (5) wake after sleep onset; and (6) sleep efficiency. Given that the AHI, percentages of sleep stage N1 and N3, and the arousal index were not normally distributed, descriptive statistics include the mean, standard deviation, and the 25th, 50th (median) and 75th percentiles. The log-transform was used to normalize the AHI values, whereas the square root transform was used to normalize the distribution of sleep stage N1 and the arousal index. No appropriate transform could be identified for sleep stage N3. Differences in scoring between the four sites and between manual versus automated scoring were assessed for each variable using analysis of variance after invoking the appropriate transformation. Nonparametric methods were used to compare the percentages of sleep stage N3 because of the inability to identify an appropriate transform. Subsequently, the level of agreement between parameters derived from manual and automated scoring was assessed using the Pearson product-moment correlation coefficient. Agreement between manual and automated scoring was also examined using the method proposed by Bland and Altman.15 In addition to the graphical methods for assessing agreement, two model-based approaches were also used. First, to compare the results from manual and automated scoring, the mixed model was employed using the PROC MIXED routine of the SAS 9.4 statistical software (SAS Institute, Inc., Cary, NC). Second, the intraclass correlation coefficient (ICC) was used to quantify the level of agreement between any pairwise set of parameters. The underlying analysis of variance model assumptions for the ICC were checked and were met.
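As a minimal sketch of two of the steps described above, the following helpers apply the log and square root transforms and compute a Pearson product-moment correlation. The function names are illustrative, not part of the study's SAS code. The text does not state how AHI values of zero were handled before the log transform, nor whether correlations were computed on raw or transformed values, so the sketch simply rejects nonpositive inputs to the log and leaves that choice to the caller.

```python
import math


def log_transform(values):
    """Natural log transform (used in the text to normalize the AHI)."""
    if any(v <= 0 for v in values):
        # Handling of zeros is not described in the source; fail loudly instead.
        raise ValueError("log transform requires strictly positive values")
    return [math.log(v) for v in values]


def sqrt_transform(values):
    """Square root transform (used in the text for stage N1 and the arousal index)."""
    if any(v < 0 for v in values):
        raise ValueError("square root transform requires nonnegative values")
    return [math.sqrt(v) for v in values]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A call such as `pearson_r(manual_ahi, automated_ahi)` would give one entry of a pairwise correlation matrix; `manual_ahi` and `automated_ahi` are placeholder names for per-study values from two scoring sources.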

RESULTS

Sample Characteristics

The study sample consisted of 97 patients (62.6% men) and had an average age of 40.2 y and a mean body mass index of 31.2 kg/m2. The distribution of the polysomnograms was as follows: 27.5% were diagnostic studies, 36.3% were split-night studies, and the remaining 36.3% were for positive airway pressure titration. Most of the sample (> 95%) had obstructive sleep apnea as the primary sleep related diagnosis. Table 1 summarizes various statistics for the AHI, percentages of sleep stages, and the arousal index derived from each of the four clinical sites and from the raw and expert reviewed automated scoring. Overall, no statistically significant differences were observed in the AHI derived from manual scoring by the four sites (P = 0.13). Moreover, comparisons of the manual versus automated expert-edited scoring revealed no significant differences in the AHI (P = 0.09). Differences were noted, however, in the percentages of stage N1 (P < 0.0001), N2 (P < 0.0001), and N3 (P < 0.001) and the arousal index (P < 0.001) between manual and automated expert-edited scoring. The percentage of REM sleep and total sleep time, however, were equivalent between the manual and automated scoring results (P = 0.44 for REM sleep and P = 0.30 for total sleep time). Examining only the manually scored results revealed a similar pattern where the percentage of REM sleep (P = 0.34) was equivalent across the four technologists, whereas statistically significant interscorer differences were present for the arousal index and for the percentages of sleep stages N1 (P < 0.0001), N2 (P = 0.0005), and N3 (P < 0.0001).

Table 1.

Descriptive statistics from manual and computer-aided automated scoring.

Manual Versus Automated Scoring of the AHI

There was a high degree of correlation in the AHI between the four sites and also between the AHI derived from manual and automated scoring (Figure 1, left panel). The average correlation for the AHI across the four sites was 0.92 (95% confidence interval [CI]: 0.90, 0.93). Similarly, the average correlation between the manual and automated derived values of AHI was 0.93 (95% CI: 0.91, 0.96). The degree of interscorer correlation between the manually scored results was no different from that derived from manual and automated scoring. A matrix of all possible pairwise Bland-Altman plots, which is shown in Figure 1 (right panel), revealed that there was no systematic bias in the AHI values derived by manual scoring by the four sites or between manual scoring versus automated scoring. The average bias in the manually scored AHI values across the four sites was 1.61 events/h (95% CI: −0.93, 4.17), whereas the average bias in AHI values derived from manual versus automated scoring was 2.48 events/h (95% CI: 0.40, 4.55).
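For readers unfamiliar with the Bland-Altman quantities reported here, a minimal sketch for a single pair of scorers follows. It is an illustration under stated assumptions, not the study's analysis code: the function name and return keys are hypothetical, the confidence interval for the bias uses a normal approximation rather than a t quantile, and the way biases were averaged across pairs of scorers in the study is not reproduced.

```python
import math
from statistics import NormalDist, mean, stdev


def bland_altman(a, b, level=0.95):
    """Bias, approximate CI for the bias, and 95% limits of agreement for a - b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    bias = mean(diffs)                 # average difference between the two methods
    sd = stdev(diffs)                  # sample SD of the paired differences
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # normal approximation (assumption)
    se = sd / math.sqrt(len(diffs))
    return {
        "bias": bias,
        "bias_ci": (bias - z * se, bias + z * se),
        "limits_of_agreement": (bias - 1.96 * sd, bias + 1.96 * sd),
    }
```

A bias whose confidence interval excludes zero points to a systematic difference between the two scoring sources, whereas wide limits of agreement indicate large study-to-study scatter even when the bias is small.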

Figure 1.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for the apnea-hypopnea index (AHI). Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

In addition to the bivariate and the Bland-Altman plots, a mixed-model approach with a variance components covariance structure was used to assess heterogeneity in the AHI values from manual and automated scoring. The mixed-model approach showed that there were no statistically significant differences in the AHI when automated and manual scoring results were compared (P = 0.14). ICCs were then computed for all pairwise combinations of AHI from manual and automated scoring (Table 2). As before, irrespective of whether the AHI values from manual scoring were compared to each other or to the values from automated scoring, a high degree of agreement between AHI values was observed. Analyses that examined the level of agreement for the hypopnea index, obstructive apnea index, and central apnea index are also shown in Table 2. ICC values comparing the automated to manual scoring for each of the aforementioned measures were materially not different from the ICC values comparing manual scoring across the four sites.
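The pairwise ICC computation can be sketched as follows. The text does not specify which form of the ICC was used, so this example assumes a one-way random-effects, single-measure ICC derived from analysis of variance mean squares; the function names and the dictionary layout are hypothetical.

```python
from itertools import combinations


def icc_oneway(ratings):
    """One-way random-effects ICC; `ratings` is a list of per-study rating tuples."""
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(r) != k for r in ratings):
        raise ValueError("need >= 2 studies, each rated by the same >= 2 scorers")
    grand_mean = sum(sum(r) for r in ratings) / (n * k)
    study_means = [sum(r) / k for r in ratings]
    # Between-study and within-study mean squares from a one-way ANOVA.
    ms_between = k * sum((m - grand_mean) ** 2 for m in study_means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for r, m in zip(ratings, study_means) for x in r
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


def pairwise_icc(scores_by_rater):
    """ICC for every pair of scoring sources; values are per-study score lists."""
    result = {}
    for a, b in combinations(sorted(scores_by_rater), 2):
        pairs = list(zip(scores_by_rater[a], scores_by_rater[b]))
        result[(a, b)] = icc_oneway(pairs)
    return result
```

Passing a dictionary with one list of AHI values per site plus one for the automated system would yield a pairwise matrix of the kind summarized in Table 2, although the coefficients would match the published ones only if the same ICC form was used.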

Table 2.

Pairwise intraclass correlation coefficients (95% confidence intervals) for metrics of disordered breathing.

Manual Versus Automated Scoring of Sleep Architecture

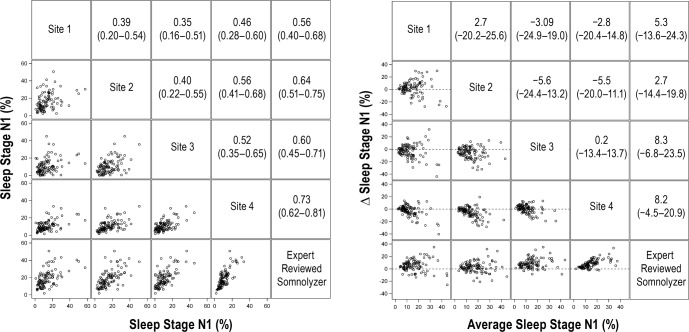

Comparisons of the arousal index and percentages of sleep stages N1, N2, and N3 revealed statistically significant differences between manual and automated scoring (Table 1). The Somnolyzer derived percentages of stage N1, N2, and N3 sleep were consistently different from the manually scored values, whereas the arousal index from automated scoring was similar to the results from three of the four sites. In contrast, no differences were observed in the percentage of REM sleep between manual and automated scoring. Figures 2 through 6 display the bivariate (left panels) and Bland-Altman plots (right panels) for sleep architecture variables comparing the results of manual and automated scoring. For stage N1, the average correlation between the four clinical sites was 0.44 (95% CI: 0.39, 0.51), whereas the average correlation between the manual versus automated scoring was 0.63 (95% CI: 0.57, 0.70). Bland-Altman analysis (Figure 2, right panel) revealed that there was a systematic bias with the percentage of stage N1 sleep from automated scoring being generally higher than that derived by manual scoring (mean bias: 6.1%; 95% CI: 3.8%, 8.4%).

Figure 2.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for sleep stage N1. Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

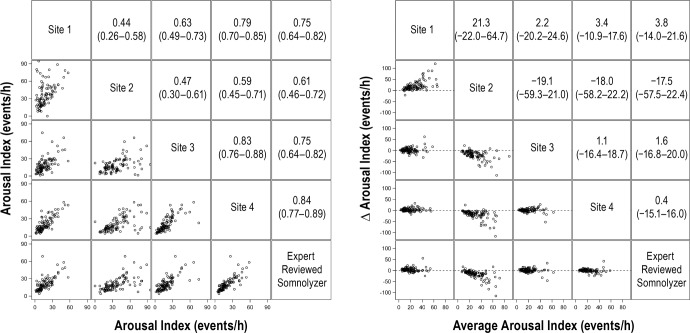

Figure 6.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for the arousal index. Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

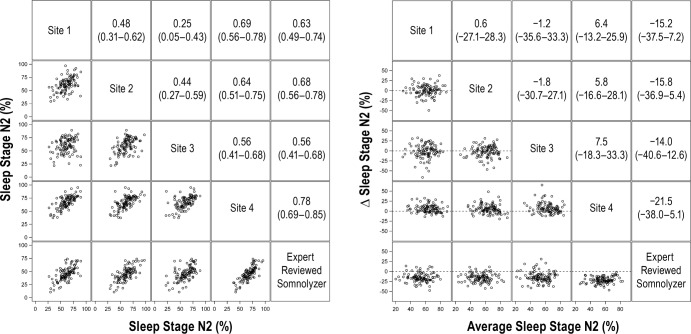

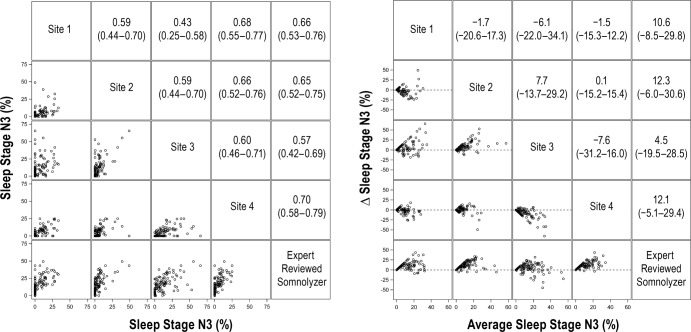

For stage N2 sleep, the average correlation for manually scored results was 0.52 (95% CI: 0.40, 0.63). Although the average correlation between the manual versus automated scoring for stage N2 sleep was slightly higher (0.66; 95% CI: 0.59, 0.74), this difference was not statistically significant (P = 0.14). Bland-Altman analysis for stage N2 sleep (Figure 3, right panel) showed that the average value from automated scoring was lower by 16.6% (95% CI: −19.5%, −13.7%) when compared to manual scoring. Results for comparisons of stage N3 and REM sleep percentages are shown in Figure 4 and Figure 5, respectively. Although the correlations for stage N3 sleep were all greater than 0.50, the level of agreement was low (Figure 4, left panel). Bland-Altman plots for stage N3 sleep (Figure 4, right panel) showed that the automated scoring results were generally higher in absolute magnitude by 9.9% (95% CI: 6.7%, 13.0%) when compared to manual scoring. In contrast to sleep stages N1, N2, and N3, there was substantial agreement in the scoring of REM sleep across the four clinical sites and between manual and automated scoring (Figure 5). Bland-Altman analysis for percentage of REM sleep (Figure 5) showed no significant bias between manual and automated scoring of REM sleep with an average difference of 0.57% (95% CI: −0.80%, 1.2%). Comparisons of the arousal index revealed strong correlations (Figure 6, left panel) with minimal bias between the manually and automatically scored results (Figure 6, right panel). Values of total sleep time and sleep efficiency derived from the four clinical sites were also highly concordant as were values obtained from manual and automated scoring for these metrics. For example, the average correlation for total sleep time for the manually scored results was 0.92 (95% CI: 0.88, 0.95), whereas the correlation between the manual and automated scoring was 0.93 (95% CI: 0.90, 0.96).
Similar findings were also noted regarding the level of agreement on sleep efficiency across the various scoring sessions, with correlations being in the range of 0.74 to 0.94.

Figure 3.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for sleep stage N2. Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

Figure 4.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for sleep stage N3. Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

Figure 5.

Correlation matrix (left panel) and Bland-Altman (right panel) plots for sleep stage rapid eye movement. Pearson correlation coefficients (95% confidence interval) and the average bias (95% confidence interval) are in the left and right panels, respectively.

In addition to the bivariate assessments of agreement, mixed-model analysis was also conducted for each of the sleep architecture metrics along with computation of the ICC values. The mixed models with variance component analysis confirmed the observations from the aforementioned bivariate analyses. Compared to manual scoring, automated scoring by the Somnolyzer system generally yielded greater percentages for stages N1 (P < 0.0001) and N3 (P < 0.0001) and resulted in a lower percentage of stage N2 (P < 0.0001). In contrast, no differences in the percentage of REM sleep (P = 0.44) were observed when the manual and automated scoring results were compared using the mixed-models approach. For the arousal index, the Somnolyzer results were generally comparable to those derived from sites 1, 3, and 4 (P = 0.14) but were markedly different from those derived from site 2 (P < 0.001). Mixed-model analyses of total sleep time and sleep efficiency revealed no systematic differences between the magnitudes of either parameter when manually scored results are compared to the Somnolyzer system. Table 3 summarizes the pairwise ICC values for each of the sleep stages and the arousal index comparing the results derived not only from the four clinical sites but also the results derived from automated scoring. When sleep stage data were examined, there was low to modest agreement for the arousal index and the percentage of sleep stages N1, N2, and N3 across the four clinical sites. The observed level of agreement between manual scoring for these metrics (e.g., N1, N2, N3, and arousal index) was not significantly different than the agreement between manual and automated scoring. Finally, high interrater agreement was observed in the scoring of REM sleep whether human scorers were compared to each other or to the automated analysis by the Somnolyzer system.

Table 3.

Pairwise intraclass correlation coefficients (95% confidence intervals) for sleep stage data and the arousal index.

Utility of Expert Review

Analyses were also undertaken to determine the added value of reviewing the Somnolyzer scored polysomnograms by a certified scorer. Figure 7 displays the bivariate plots of the expert edited versus unedited results for the AHI, arousal index, and sleep stages N1, N2, N3, and REM. Irrespective of the metric examined, a strong correlation was observed between the results of the unedited and edited polysomnograms with correlations in the range from 0.91 to 0.99. Systematic differences were, however, noted between the results from the edited and unedited scored polysomnograms. Editing of the automatically scored polysomnograms led to a higher AHI (mean bias: 2.03 events/h, 95% CI: 1.49, 2.56) and percentage of stage N1 sleep (mean bias: 1.64%, 95% CI: 0.86, 2.42). Moreover, expert editing led to a statistically significant decrease in the arousal index (mean bias: −0.76 events/h, 95% CI: −1.04, −0.48) and percentage of stage N3 sleep (mean bias: −0.92%, 95% CI: −1.36, −0.48). In contrast, the differences in percentages of stage N2 and REM sleep between the edited and unedited results were not statistically significant. Similar analyses for total sleep time, wake after sleep onset, and sleep efficiency revealed that expert review led to an average increase of 14.0 min in total sleep time (95% CI: 8.2, 19.8), a decrease in wake after sleep onset by 12.7 min (95% CI: −19.8, −5.7), and an increase in sleep efficiency by 3.1% (95% CI: 1.8, 4.4).

Figure 7.

Bivariate plots comparing the unedited and expert edited results after automated scoring. Dashed line is the line of identity. Pearson correlation coefficients and the average bias (Mean Δ = Edited − Unedited) are provided for each variable. AHI, apnea-hypopnea index; REM, rapid eye movement.

DISCUSSION

The results of the current study demonstrate a high degree of concordance between manual and automated scoring for several commonly used metrics including the AHI, total sleep time, sleep efficiency, arousal index, and the percentage of REM sleep. Differences were observed, however, between automated and manual scoring for the percentages of sleep stages N1, N2, and N3. Most importantly, interrater agreement between manual scoring from the four distinct sites was not substantively different from the interrater agreement between manual and automated scoring irrespective of the metric examined. Thus, the reliability of the automated scoring by the Somnolyzer system is similar to that of human scorers and as such provides a viable alternative for the laborious process of manual scoring. Finally, expert review of polysomnograms that are subjected to automated scoring does not alter the results to any degree of clinical significance.

Extensive use of polysomnography for population-wide screening of sleep disorders is limited, in part, by the burden imposed by manual scoring. This burden is likely to increase further as home sleep testing is adopted broadly for sleep disorders that are associated with cardiovascular morbidity and mortality. Sole reliance on manual scoring will constrain the armamentarium and limit patient throughput. Therefore, it is not surprising that computerized algorithms to identify sleep and breathing abnormalities have proliferated over the past two decades.10 In fact, algorithms for the automated detection of oxyhemoglobin desaturation events were incorporated in some of the earliest reports16–19 of portable monitors dating back to the early 1990s.20 Subsequent to these pioneering efforts, various statistical and signal processing techniques have been used to identify microarousals, distinguish sleep from wakefulness, automate sleep staging, and detect disordered breathing events. Techniques for accomplishing these tasks are generally based on extracting specific features from various electrophysiological signals and classifying these features for the purposes of discrimination. Approaches such as thresholding spectral power of frequency bands, conventional linear and nonlinear classification, and waveform detection by pattern recognition algorithms are some of the techniques that have been previously employed. A repertoire of similar approaches has also been used for the detection of apneas and hypopneas. For delineating respiratory events, some investigators have relied on single-channel recordings (e.g., oximetry or airflow),21–25 whereas others have leveraged multiple channels (i.e., airflow, effort).26–30 The specific approach notwithstanding, automated algorithms have noticeably proliferated and are now ubiquitous in most commercially available digital acquisition systems. 
Despite this proliferation, however, empirical data regarding their validity and reliability are either limited or virtually absent. Therefore, it is not surprising that many of the embedded algorithms are not commonly used and manual scoring remains the standard in clinical practice.

Over the past several years, three comprehensive automated scoring systems have been developed and are being increasingly validated across different patient samples. In one of the earliest reports, Pittman et al.26 examined the validity of the Morpheus (WideMed, Ltd., Israel) automated scoring system in patients referred to a sleep laboratory using two manual scorers as the comparators. A high degree of concordance was noted between automated and manual scoring of the respiratory disturbance index with ICCs that exceeded 0.95. Nonetheless, the automated system scored a slightly greater number of respiratory events than either of the two human scorers. The automated system also scored less stage N2 sleep and wakefulness while scoring a greater amount of slow wave sleep than manual scoring. Other notable differences in the results were that the automated system had a shorter latency to sleep onset, higher total sleep time, and a lower arousal index when compared to manual scoring. However, the observed differences were small and not of clinical significance. In a more recent study, Malhotra et al.13 similarly examined the utility of another automated scoring system using 70 polysomnograms obtained from five sleep laboratories. Each parameter from automated scoring was compared to the corresponding value averaged from manual scoring by 10 certified technologists. Interrater agreement between the automated and manual scoring of the AHI was high (ICC: 0.96) if the recommended criteria for scoring disordered breathing events were used. Not surprisingly, the level of agreement between manual and automated scoring decreased if less stringent scoring criteria were used. Other parameters with a high degree of interrater agreement between automated and manual scoring were total sleep time (ICC: 0.87), stage N2 sleep (ICC: 0.84), and sleep efficiency (ICC: 0.74).
In contrast, discrepancies between the automated and manual scoring were most evident for stage N1 sleep (ICC: 0.56), stage N3 sleep (ICC: 0.47), and arousals during REM sleep (ICC: 0.36).

Results on the validity of automated scoring by the Somnolyzer system reported herein indicate that its performance is comparable to the two aforementioned automated scoring systems for disordered breathing events, total sleep time, and sleep stages N1, N2, and N3. In contrast, the agreement between automated and manual scoring of REM sleep and arousals was greater with the Somnolyzer system than either of the other two systems. However, direct comparisons across automated scoring platforms should be tempered given the variability in the study samples, the number and expertise of the scorers, and the analytical methods used in the validation studies. In the only head-to-head comparison of automated scoring by the Morpheus and Somnolyzer systems, both were similarly comparable to manual scoring on sleep stage data.11 However, inferences regarding how well the existing systems cross-compare on other metrics (e.g., AHI) will require additional efforts that address the limitations highlighted above using a sample of polysomnograms that sufficiently capture the heterogeneity of clinical practice.

One of the fundamental assumptions in validating an automated system is that the derived results are being compared to a reference standard that is error free. However, there is a substantial body of literature4,30 documenting that manual scoring has substantial interrater variability and thus it is, at best, an imperfect standard. Failure to consider such errors in the reference standard can lead to biased assessments of performance characteristics of any automated system. The current study and the two previous studies13,26 addressed the problem of an imperfect reference by including multiple scorers. In their assessment of the Morpheus scoring system, Pittman et al.26 compared the automated results to those derived from manual scoring by two scorers. In contrast, Malhotra et al.13 used data from all 10 scorers and computed averages for the various metrics of interest. While such averaging is a reasonable approach to estimate a consensus reference, it is not without limitations. For example, variables such as the AHI and stage N3 sleep have skewed distributions and consequently the overall average is not a good measure of central tendency. Even if the average provides a summary measure that is unbiased, it remains susceptible to outliers particularly with limited sample sizes. Given the variability between human scorers, the probability of outlying values is not insignificant. Hence, in the current study, rather than utilizing a summary measure based on the four scoring sites, we employed a model-based repeated measures approach that incorporated appropriate mathematical transformations so as to not violate the assumptions of the underlying model. Moreover, our analysis placed considerable emphasis on demonstrating that the level of agreement between automated and manual scoring was equivalent to the level of agreement between any two human scorers while concurrently examining how well the automated system approximated the results from manual scoring.
A notable difference between the current and previous studies is that results from automated scoring of sleep stages N1, N2, and N3 differed from manual scoring in the current study but not in the other two studies. However, it is important to emphasize that major differences between automated and manual scoring cannot be used to infer lower accuracy on the part of the Somnolyzer system, given that the exact source of the error (computer or human) is not known.
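The concern raised above about averaging a skewed metric across scorers can be illustrated with a minimal sketch. The AHI values below are hypothetical and are not data from this study, and the log(x + 1) transformation is only one assumed example of a transformation; the text does not specify which transformations were applied in the model-based analysis.

```python
import math
import statistics

# Hypothetical AHI values (events/hour) for one polysomnogram scored at
# four sites. The fourth value is an illustrative outlier, not study data.
ahi_by_site = [12.0, 14.0, 13.0, 41.0]


def log_scale_mean(values, offset=1.0):
    """Average on the log(x + offset) scale, then back-transform.

    The log transformation and the offset of 1 are assumptions made for
    illustration only.
    """
    logs = [math.log(v + offset) for v in values]
    return math.exp(statistics.fmean(logs)) - offset


# Plain average across sites: pulled upward by the single outlying scorer.
arithmetic_mean = statistics.fmean(ahi_by_site)

# Median: unaffected by the magnitude of the outlier.
median = statistics.median(ahi_by_site)

# Back-transformed mean: less sensitive to the outlier than the plain mean.
back_transformed = log_scale_mean(ahi_by_site)

print(f"arithmetic mean:       {arithmetic_mean:.1f}")
print(f"median:                {median:.1f}")
print(f"back-transformed mean: {back_transformed:.1f}")
```

In this toy example a single outlying scorer moves the plain average well away from the values reported by the other three sites, whereas the median and the back-transformed mean are affected less.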

There are several strengths and limitations of the current study that merit discussion. First, the relatively large study sample, which included polysomnograms of various types, increases the generalizability of the results. Second, the use of certified technologists from four different clinical sites for manual scoring provides a comprehensive view of the disagreement between human experts. Third, in addition to traditional methods such as bivariate correlations and Bland-Altman analyses, mixed models were used for assessing agreement. These models facilitated comparisons of the automated and manual scoring results while incorporating the repeated measures structure of the dataset with appropriate transformations as needed. The mixed models also provided a unique approach for comparing various scorers, as they do not require the underlying assumptions that are needed to compute the ICC. Finally, the current study also examined the added value of expert review after completion of the automated scoring. Limitations of the study include the lack of comparisons with other automated scoring systems and the exclusion of patients with movement or neurological disorders. Such exclusions could certainly limit the generalizability of the results presented. Additionally, the scoring of apneas and hypopneas was based on the 2007 AASM criteria. Varying the criteria, such as the desaturation or flow thresholds, and including arousals for hypopneas could certainly influence the results and represent a future extension of the current work. These limitations notwithstanding, the results of the current study provide support that the Somnolyzer automated scoring system provides a viable complement to human experts and can assist with the tedious process of scoring, particularly at a time when there is a desperate need to constrain health care-related costs.
Finally, and perhaps most importantly, the benefits of the high reproducibility of an automated system that requires limited review by an expert are invaluable not only for clinical practice but also for the research arena.
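For readers less familiar with the Bland-Altman analysis mentioned among the traditional methods above, the following is a minimal sketch of how the bias and 95% limits of agreement are typically computed. The paired AHI values and the function name `bland_altman` are hypothetical and chosen only for illustration; this is not the analysis code used in the study.

```python
import statistics


def bland_altman(manual, automated):
    """Return (bias, lower limit, upper limit) for paired measurements.

    Bias is the mean of the paired differences (automated - manual); the
    95% limits of agreement are bias +/- 1.96 * SD of the differences.
    """
    diffs = [a - m for m, a in zip(manual, automated)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical AHI values (events/hour) for five studies; not study data.
manual_ahi = [5.0, 12.0, 30.0, 8.0, 22.0]
automated_ahi = [6.0, 11.0, 33.0, 8.0, 24.0]

bias, lower, upper = bland_altman(manual_ahi, automated_ahi)
print(f"bias: {bias:.2f}, limits of agreement: {lower:.2f} to {upper:.2f}")
```

The same function can be applied to any pair of scorers, which allows the limits of agreement between automated and manual scoring to be compared with those between two human scorers.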

DISCLOSURE STATEMENT

This study was supported by an unrestricted grant from Philips-Respironics. Dr. Punjabi was supported by a grant from the National Institutes of Health (HL075078). The other authors have indicated no financial conflicts of interest.

REFERENCES

- 1. Iber C, Ancoli-Israel S, Chesson AL, Jr., et al. The AASM manual for the scoring of sleep and associated events: rules, terminology and technical specifications. 1 ed. Westchester, IL: American Academy of Sleep Medicine; 2007.

- 2. Berry RB, Budhiraja R, Gottlieb DJ, et al. Rules for scoring respiratory events in sleep: update of the 2007 AASM Manual for the Scoring of Sleep and Associated Events. Deliberations of the Sleep Apnea Definitions Task Force of the American Academy of Sleep Medicine. J Clin Sleep Med. 2012;8:597–619. doi: 10.5664/jcsm.2172.

- 3. Ruehland WR, Rochford PD, O'Donoghue FJ, Pierce RJ, Singh P, Thornton AT. The new AASM criteria for scoring hypopneas: impact on the apnea hypopnea index. Sleep. 2009;32:150–7. doi: 10.1093/sleep/32.2.150.

- 4. Whitney CW, Gottlieb DJ, Redline S, et al. Reliability of scoring respiratory disturbance indices and sleep staging. Sleep. 1998;21:749–57. doi: 10.1093/sleep/21.7.749.

- 5. Bliwise D, Bliwise NG, Kraemer HC, Dement W. Measurement error in visually scored electrophysiological data: respiration during sleep. J Neurosci Methods. 1984;12:49–56. doi: 10.1016/0165-0270(84)90047-5.

- 6. Loredo JS, Clausen JL, Ancoli-Israel S, Dimsdale JE. Night-to-night arousal variability and interscorer reliability of arousal measurements. Sleep. 1999;22:916–20. doi: 10.1093/sleep/22.7.916.

- 7. Collop NA. Scoring variability between polysomnography technologists in different sleep laboratories. Sleep Med. 2002;3:43–7. doi: 10.1016/s1389-9457(01)00115-0.

- 8. Redline S, Budhiraja R, Kapur V, et al. The scoring of respiratory events in sleep: reliability and validity. J Clin Sleep Med. 2007;3:169–200.

- 9. Bonnet MH, Doghramji K, Roehrs T, et al. The scoring of arousal in sleep: reliability, validity, and alternatives. J Clin Sleep Med. 2007;3:133–45.

- 10. Penzel T, Hirshkowitz M, Harsh J, et al. Digital analysis and technical specifications. J Clin Sleep Med. 2007;3:109–20.

- 11. Svetnik V, Ma J, Soper KA, et al. Evaluation of automated and semiautomated scoring of polysomnographic recordings from a clinical trial using zolpidem in the treatment of insomnia. Sleep. 2007;30:1562–74. doi: 10.1093/sleep/30.11.1562.

- 12. Kuna ST, Benca R, Kushida CA, et al. Agreement in computer-assisted manual scoring of polysomnograms across sleep centers. Sleep. 2013;36:583–9. doi: 10.5665/sleep.2550.

- 13. Malhotra A, Younes M, Kuna ST, et al. Performance of an automated polysomnography scoring system versus computer-assisted manual scoring. Sleep. 2013;36:573–82. doi: 10.5665/sleep.2548.

- 14. Anderer P, Gruber G, Parapatics S, et al. An E-health solution for automatic sleep classification according to Rechtschaffen and Kales: validation study of the Somnolyzer 24 x 7 utilizing the Siesta database. Neuropsychobiology. 2005;51:115–33. doi: 10.1159/000085205.

- 15. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;1:307–10.

- 16. Stoohs R, Guilleminault C. Investigations of an automatic screening device (MESAM) for obstructive sleep apnoea. Eur Respir J. 1990;3:823–29.

- 17. Rauscher H, Popp W, Zwick H. Quantification of sleep disordered breathing by computerized analysis of oximetry, heart rate and snoring. Eur Respir J. 1991;4:655–9.

- 18. Stoohs R, Guilleminault C. MESAM 4: an ambulatory device for the detection of patients at risk for obstructive sleep apnea syndrome (OSAS). Chest. 1992;101:1221–7. doi: 10.1378/chest.101.5.1221.

- 19. Koziej M, Cieslicki JK, Gorzelak K, Sliwinski P, Zielinski J. Hand-scoring of MESAM 4 recordings is more accurate than automatic analysis in screening for obstructive sleep apnoea. Eur Respir J. 1994;7:1771–5. doi: 10.1183/09031936.94.07101771.

- 20. Penzel T, Amend G, Meinzer K, Peter JH, von WP. MESAM: a heart rate and snoring recorder for detection of obstructive sleep apnea. Sleep. 1990;13:175–82. doi: 10.1093/sleep/13.2.175.

- 21. Vazquez JC, Tsai WH, Flemons WW, et al. Automated analysis of digital oximetry in the diagnosis of obstructive sleep apnoea. Thorax. 2000;55:302–7. doi: 10.1136/thorax.55.4.302.

- 22. Varady P, Micsik T, Benedek S, Benyo Z. A novel method for the detection of apnea and hypopnea events in respiration signals. IEEE Trans Biomed Eng. 2002;49:936–42. doi: 10.1109/TBME.2002.802009.

- 23. Nakano H, Tanigawa T, Furukawa T, Nishima S. Automatic detection of sleep-disordered breathing from a single-channel airflow record. Eur Respir J. 2007;29:728–36. doi: 10.1183/09031936.00091206.

- 24. Alvarez D, Hornero R, Marcos JV, del Campo F. Multivariate analysis of blood oxygen saturation recordings in obstructive sleep apnea diagnosis. IEEE Trans Biomed Eng. 2010;57:2816–24. doi: 10.1109/TBME.2010.2056924.

- 25. Caseiro P, Fonseca-Pinto R, Andrade A. Screening of obstructive sleep apnea using Hilbert-Huang decomposition of oronasal airway pressure recordings. Med Eng Phys. 2010;32:561–8. doi: 10.1016/j.medengphy.2010.01.008.

- 26. Pittman SD, MacDonald MM, Fogel RB, et al. Assessment of automated scoring of polysomnographic recordings in a population with suspected sleep-disordered breathing. Sleep. 2004;27:1394–403. doi: 10.1093/sleep/27.7.1394.

- 27. Barbanoj MJ, Clos S, Romero S, et al. Sleep laboratory study on single and repeated dose effects of paroxetine, alprazolam and their combination in healthy young volunteers. Neuropsychobiology. 2005;51:134–47. doi: 10.1159/000085206.

- 28. Saletu B, Prause W, Anderer P, et al. Insomnia in somatoform pain disorder: sleep laboratory studies on differences to controls and acute effects of trazodone, evaluated by the Somnolyzer 24 x 7 and the Siesta database. Neuropsychobiology. 2005;51:148–63. doi: 10.1159/000085207.

- 29. Anderer P, Moreau A, Woertz M, et al. Computer-assisted sleep classification according to the standard of the American Academy of Sleep Medicine: validation study of the AASM version of the Somnolyzer 24 x 7. Neuropsychobiology. 2010;62:250–64. doi: 10.1159/000320864.

- 30. Magalang UJ, Chen NH, Cistulli PA, et al. Agreement in the scoring of respiratory events and sleep among international sleep centers. Sleep. 2013;36:591–6. doi: 10.5665/sleep.2552.