Abstract

Medical robots are widely used to assist surgeons in carrying out dexterous surgical tasks. Most of these tasks require the surgeon’s operation, directly or indirectly. A certain level of autonomy in robotic surgery could not only free the surgeon from tedious, repetitive tasks but also exploit the advantages of the robot: high dexterity and accuracy. This paper presents a semi-autonomous neurosurgical procedure for brain tumor ablation using the RAVEN surgical robot and stereo visual feedback. By integrating the behavior tree framework, the whole surgical task is modeled flexibly as nodes and leaves of a behavior tree. This paper makes three main contributions: (1) describing brain tumor ablation as an ideal candidate for autonomous robotic surgery, (2) modeling and implementing the semi-autonomous surgical task using the behavior tree framework, and (3) designing a simulated ablation experiment for feasibility study and robot performance analysis.

I. Introduction

a) Surgical Robotics

Taylor et al. [1] classified medical robots into two categories, surgical CAD/CAM and surgical assistant, depending on their role in the surgery. The commercial da Vinci™ telerobotic surgical system from Intuitive Surgical Inc. is a highly successful surgical assistant robot in minimally invasive surgery [2]. An additional category, automated surgical robots, is a new focus of research groups, in which the cycle of measurement, diagnosis, and treatment is automatically closed by the robotic device. This paper reports a laboratory test of initial technical steps within a larger project whose longer-term goal is to treat cancerous neural tissue that may remain at the margins after the bulk of a brain tumor is removed.

b) Clinical Scenario

The fraction of a brain tumor that is removed is critical to the patient’s survival and long-term quality of life. After the bulk of the tumor and margins of up to 1cm are removed, leaving a cavity, any remaining cancerous material is very dangerous.

In our proposed clinical scenario, we will apply a biomarker for brain tumors, ’Tumor Paint’, developed by Dr. James Olson [3]. ’Tumor Paint’, a molecule derived from scorpion toxin, selectively binds to brain tumor cells and fluoresces when its conjugated dye is illuminated. Our ultimate system will scan the cavity for fluorescently labeled tissue exposed by bulk tumor removal and automatically treat that material. Two posited treatment modalities are laser ablation and morcellation/suction. Because the fluorescence response of residual tumor cells can be weak, image collection requires significant integration time, which makes manual treatment of the fluorescently labeled material very tedious.

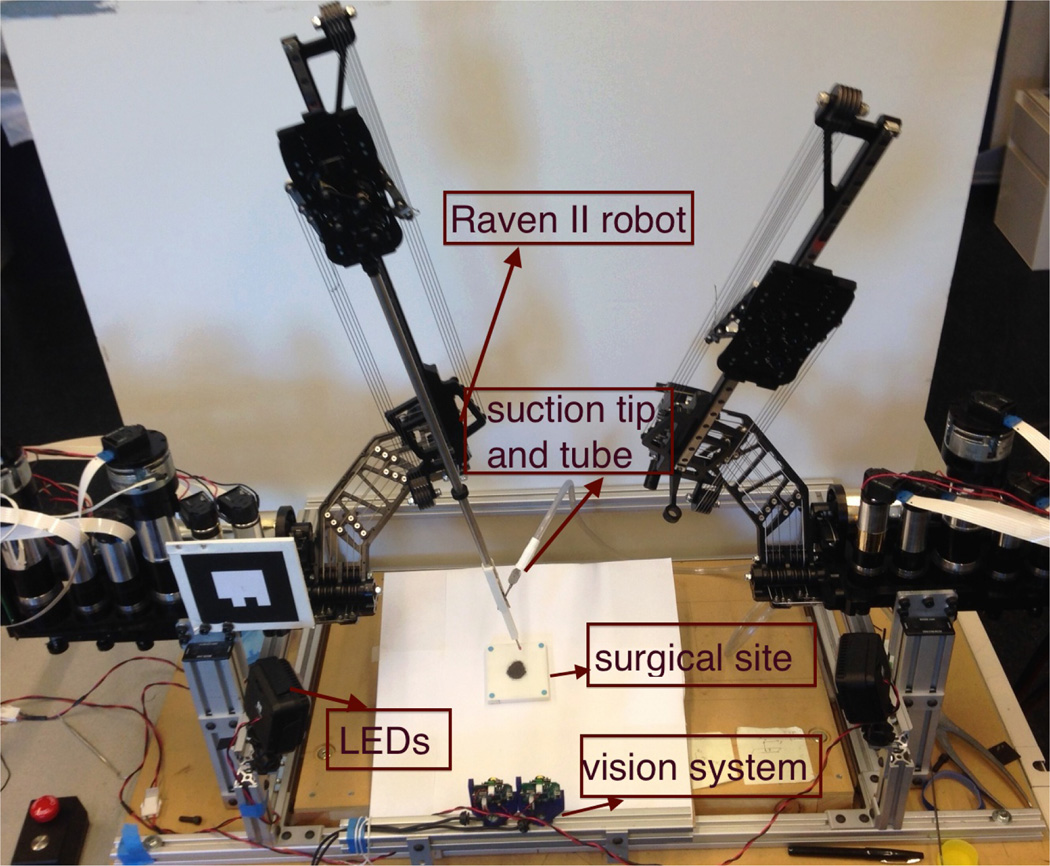

Our experimental system consists of the Raven II™ [4] surgical robotics research platform for positioning the treatment probe, as well as a near-infrared (NIR) fluorescence-based imaging system using the 1.6mm diameter Scanning Fiber Endoscope (SFE) [5] for detecting the tissue needing treatment (Figure 1).

Fig. 1.

Detection of mouse brain tumor injected with Tumor Paint in NIR fluorescence image captured by SFE: (a) standard fluorescence image of a mouse brain. (b) post-processed image of the same mouse brain ex vivo.

Our scenario assumes that the surgeon will remove the majority of the tumor manually, leaving a surgical cavity whose walls contain possibly cancerous material. Our medium-term project objective is a system that can detect and ablate labeled tumor material in an ex vivo mouse brain. The present paper represents an intermediate milestone towards this capability.

We divide semi-automated tumor ablation into six subtasks: intraoperative imaging, trajectory planning, plan selection, plan execution, and performance checking, as well as an optional recovery procedure if a suboptimal outcome occurs during execution.

Three technical contributions are reported here. 1) The desired surgical task is encoded into the Behavior Tree framework. 2) Positioning accuracy of our cable-driven flexible robotic system is improved with 3D stereo vision tracking. 3) We demonstrate the system performance by removing small particles (iron filings) from a planar surface under image guidance.

c) Behavior Trees

We represent the task with Behavior Trees (BT): a behavior modeling framework emerging from video games. BTs express each sub-task as a leaf, and combine them into treatment behaviors through higher order nodes to express sequential and other relations. More background on BTs is given below in Section III.

d) Raven

Raven II™ is an open platform for surgical robotics research now in use in 12 laboratories worldwide. Like the da Vinci, Raven was originally designed as a robot for teleoperation. As medical robotics research has moved from teleoperation toward increasing automation [6], sources of positional inaccuracy that were not apparent under teleoperation, such as cable stretch and friction, mechanical backlash, and imperfections in the kinematic model, have become significant obstacles.

e) 3D Vision

To achieve the required positioning precision (about 0.5mm), we set up a stereo vision system consisting of two Logitech QuickCam® Communicate Deluxe™ webcams to obtain more accurate pose information for the robot end-effector, similar to [6]. A four-state Kalman filter was implemented for each imaging channel. This economical and effective vision system tracks the color marker to within 0.3mm of noise when run at full resolution (1280×960) at a maximum of 7.5Hz under evenly distributed illumination. The robotic system augmented by the stereo-vision tracking system is depicted in Figure 2.
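The text does not say which four states the per-channel filter tracks. As a minimal sketch, assuming they are the marker centroid's pixel position and velocity along each image axis, and assuming independent noise on the two axes (so the four-state filter decouples into two identical two-state filters), one channel's tracker might look like the following. The class names `AxisKalman` and `MarkerTracker` and all noise values are illustrative assumptions, not the tuning used in the experiments.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (state: position, velocity)."""

    def __init__(self, dt, q_pos=1e-2, q_vel=1e-1, r_meas=1.0, p0=1e3):
        self.dt = dt
        self.q_pos, self.q_vel, self.r = q_pos, q_vel, r_meas  # assumed noise levels
        self.pos, self.vel = 0.0, 0.0
        # Covariance entries of P = [[p00, p01], [p10, p11]]
        self.p00, self.p01, self.p10, self.p11 = p0, 0.0, 0.0, p0

    def predict(self):
        dt = self.dt
        self.pos += self.vel * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(q_pos, q_vel)
        p00 = self.p00 + dt * (self.p10 + self.p01) + dt * dt * self.p11 + self.q_pos
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q_vel
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11

    def update(self, z):
        # Only the position is measured: H = [1, 0]
        s = self.p00 + self.r
        k0, k1 = self.p00 / s, self.p10 / s
        innovation = z - self.pos
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos


class MarkerTracker:
    """Four states in total (u, v, du/dt, dv/dt) for one camera channel."""

    def __init__(self, dt):
        self.u = AxisKalman(dt)
        self.v = AxisKalman(dt)

    def step(self, u_meas, v_meas):
        self.u.predict()
        self.v.predict()
        return self.u.update(u_meas), self.v.update(v_meas)
```

A tracker for one webcam would be created as `MarkerTracker(dt=1 / 7.5)` to match the 7.5Hz frame rate and fed one marker centroid per frame.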

Fig. 2.

(a) RAVEN II robotic system with stereo-vision tracking system. (b) Stereo-vision system consisting of two webcams.

This paper is organized as follows: Section II reviews the literature on autonomy in robotic surgery. Section III describes the behavior tree framework for modeling our surgical task. Section IV defines the subtasks of the ablation procedure in detail. Section V describes the design and implementation of the experimental ablation procedure and presents and discusses the results.

II. Related Work in Autonomous Robotic Surgery

Autonomy in robotic surgery does not mean absence of the surgeon [7]. Instead, it involves perception of the environment by the robotic system and a corresponding adaptation of its behavior to the changing environmental parameters [8] under the supervision of the surgeon. Combined with advanced medical imaging and modeling techniques, as well as traditional CAD/CAM technologies from industry, a certain degree of autonomy in robotic surgery may relieve the surgeon of tedious, repetitive work and improve the overall precision and accuracy of the surgical intervention.

A few applications of autonomous robotics have achieved clinical approval. These include orthopaedic surgery, neurosurgery, and radiotherapy. ROBODOC™ executes pre-planned cuts in orthopaedic surgery [9]. NeuroMate™ is a stereotactic robot for neurosurgical procedures [10], [11], e.g. deep brain stimulation (DBS) and stereotactic electroencephalography (SEEG). Autonomous robotics is also widely used in radiosurgery, for example in the CyberKnife [12] and for head positioning in the GammaKnife [13].

Recent experimental work presents more intelligent behavior in autonomous surgical procedures through the integration of visual tracking, advanced control theory, and machine learning techniques. Knot tying in suturing is one of the most intensively studied automated surgical tasks [14]–[17]. Other applications include automated debridement [6] and cochlear implantation [18], [19].

III. Surgical Procedure Modeling with Behavior Trees

The behavior tree (BT) is a graphical modeling language which has become popular for modeling artificial intelligence (AI) opponents in games [20], [21]. While simple behaviors can also be modeled using finite state machines (FSM), for large and complicated systems BTs provide more scalable and modular logic [22]. All BT nodes are periodically interrogated and return one of three states: ’running’, ’failure’ and ’success’. Transitions between nodes are specified by the type of parent node. In robotics, BTs have recently been tested in humanoid robot control [23]–[25]. Here, we explore the potential utility of BTs as a modeling language for intelligent robotic surgical procedures. The flexibility, reusability, and simple syntax of BTs will potentially help the surgeon and engineer model complicated surgical procedures and implement them in robotic surgery.

Our BT framework is adopted from the recent work of Marzinotto et al. [23]. Leaves of the BT are subtask execution modules. We add a new type of BT node, called the Recovery Node, to handle a failure or incomplete result in the robotic intervention. This node can initiate a recovery procedure and repeat a procedure until a specified status is reached. A shortcoming of existing BTs is the lack of a mechanism for communication between nodes. To address this, we implemented a Blackboard data store in addition to the BT structure. The blackboard contains data generated and consumed by leaf nodes and provides a set of methods to manipulate the data, such as read, write, and update.

Overall, surgical procedures are pre-planned. As the plan is executed, however, autonomous robotic surgery involves three important steps: sense, plan, and act [8], which are further divided into sub-steps. We divide tumor margin ablation into five subtasks: tumor scan, ablation path planning, plan selection, plan execution, and examination. To combine those subtasks, our version of BTs contains six node types:
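A blackboard of this kind can be sketched as a small keyed store. The following is a minimal illustration rather than the implementation used in this work: the class layout, the key name `contour_vision`, the lock, and the interpretation of "update" as applying a function to the stored value are all assumptions.

```python
import threading


class Blackboard:
    """Shared key-value store through which BT leaf nodes exchange data."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()  # assumed: leaves may run in different threads

    def write(self, key, value):
        with self._lock:
            self._data[key] = value

    def read(self, key, default=None):
        with self._lock:
            return self._data.get(key, default)

    def update(self, key, fn, default=None):
        """Replace the stored value with fn(current value) and return the new value."""
        with self._lock:
            self._data[key] = fn(self._data.get(key, default))
            return self._data[key]


# Hypothetical usage: a scan leaf posts a contour, a planning leaf reads it.
board = Blackboard()
board.write("contour_vision", [(0.0, 0.0, 300.0), (5.0, 0.0, 300.0)])
contour = board.read("contour_vision")
```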

Root Node ∅: generates “ticks” (enabling signals which propagate down the tree) with a certain frequency.

Sequential Node →: enables its children sequentially and returns success iff all children return success.

Selection Node ?: enables children sequentially and returns success the first time a child returns success (This node type was not used in modeling the ablation task).

Parallel Node ⇉: enables its children simultaneously and returns success iff the fraction of its children reporting success exceeds a threshold S, where 0 < S < 1.

Action Node ◊: performs a certain subtask and, at each tick, returns running, failure, or success. Action nodes form the leaves of the BT.

Recovery Node ↻: has only two children and returns success iff the first child returns success. If the first child returns failure, the second child (the recovery procedure) will be executed and brings the system to an initial state. After that, the first child is executed again.
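The node semantics listed above can be sketched in a few lines. This is a minimal, memoryless illustration and not the ROS/C++ framework used in this work. The following choices are assumptions, since the text leaves them open: a Sequential or Selection node re-ticks its children from the first one at every tick, a Parallel node fails once no child is still running and the threshold has not been exceeded, and a recovery procedure finishes within a single tick.

```python
from enum import Enum


class Status(Enum):
    RUNNING = "running"
    SUCCESS = "success"
    FAILURE = "failure"


class Action:
    """Leaf node wrapping a callable that returns a Status."""

    def __init__(self, fn):
        self.fn = fn

    def tick(self):
        return self.fn()


class Sequence:
    """Succeeds iff every child succeeds, ticking children in order."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.SUCCESS:
                return status  # RUNNING or FAILURE stops the sequence
        return Status.SUCCESS


class Selector:
    """Succeeds as soon as one child succeeds."""

    def __init__(self, *children):
        self.children = children

    def tick(self):
        for child in self.children:
            status = child.tick()
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE


class Parallel:
    """Succeeds iff the fraction of succeeding children exceeds threshold S."""

    def __init__(self, threshold, *children):
        self.threshold = threshold
        self.children = children

    def tick(self):
        results = [child.tick() for child in self.children]
        if results.count(Status.SUCCESS) / len(results) > self.threshold:
            return Status.SUCCESS
        if Status.RUNNING in results:
            return Status.RUNNING
        return Status.FAILURE  # assumed failure rule


class Recovery:
    """Runs `recovery` after `task` fails, then lets `task` be retried."""

    def __init__(self, task, recovery):
        self.task = task
        self.recovery = recovery

    def tick(self):
        status = self.task.tick()
        if status is not Status.FAILURE:
            return status
        if self.recovery.tick() is Status.FAILURE:
            return Status.FAILURE  # assumed: a failed recovery is not retried
        return Status.RUNNING  # the task is ticked again on the next tick
```

A root node would then simply call `tick()` on the top-level node at a fixed rate until it stops returning `Status.RUNNING`.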

In our BT representation of a semi-autonomous brain tumor ablation procedure (Figure 3) each subtask is an action node (leaf) of the tree. Higher level nodes provide the logic to combine the subtasks. The blackboard provides data storage so that, in our example, the planning nodes can deliver plans to the selection and execution nodes.

Fig. 3.

Behavior tree representation of semi-autonomous brain tumor ablation

The software architecture of the entire system is based on the Robot Operating System (ROS) platform in a three-level structure (Figure 4). At the top level, a BT coordinates the execution. The middle level consists of stereo-vision tracking and autonomous motion control modules. The bottom level is robot and camera control, which connects to and controls the hardware via drivers. Communication across levels and within a level is enabled via ROS Messages and Actions.

Fig. 4.

Software architecture

IV. Problem Definition and Methods

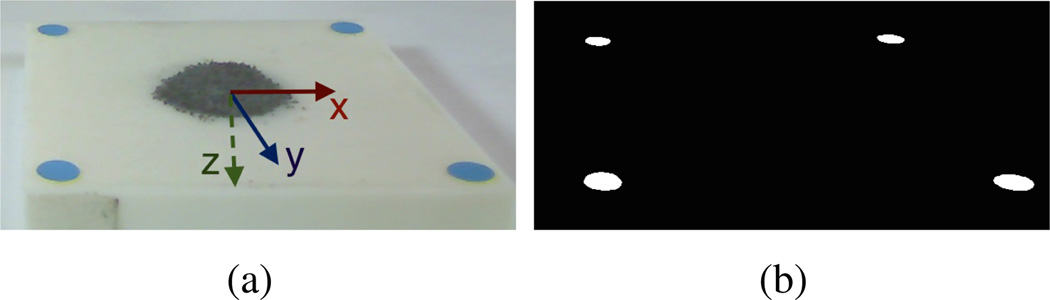

The semi-autonomous tumor margin ablation task in this study is simplified to a planar surface. In the clinical scenario, the cancerous cells are distinguished by the fluorescence image taken by the SFE (Figure 1 [26]). For this initial simulation we use a relatively high-contrast “surgical field”: a square, white, 3D-printed plane with a 5mm blue marker at each corner, viewed under visible light. Dark iron filings represent the cancerous material and are randomly placed inside the square (Figure 5). The robot motion was constrained to 3mm above the planar surgical field for ablation.

Fig. 5.

Surgical Field representation (top view)

A. Scan

The aim of the scan subtask is to locate the shape and extent of the target in 3D space using vision. We use a 2D webcam to detect the “tumor” and locate it in 3D with the assistance of stereo-vision. The location of the simulated tumor target is obtained in three steps: (1) Localization of the surgical field, (2) Localization of target with respect to surgical field and (3) Transform into vision frame.

In pending work, we will generalize this method to non-planar surfaces. Gong et al. [27] showed the feasibility and quality of 3D target reconstruction in a curved phantom using the SFE mechanically scanned by the same platform used in this study (Figure 2).

First, the hue-saturation-value (HSV) bounds of the blue markers are found by careful tuning with the lighting of the workspace fixed. Then, the centroid of each blue marker is found in pixel space after thresholding the image with the identified HSV bounds (Figure 6). The 3D locations of the four corner markers are calculated using the disparity of the centroids in the stereo pair. Finally, from the geometric constraints of the four corner markers, we compute the transform ${}^{V}T_{S}$ from the surgical field frame to the vision frame.
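The disparity step can be illustrated with the standard relation for a rectified, parallel stereo pair. This is a minimal sketch under that assumption: the function names and all camera parameters (focal length in pixels, baseline, principal point) are hypothetical and are not the calibration values of the webcams used here.

```python
def triangulate(u_left, v_left, u_right, f_px, baseline_mm, cx, cy):
    """Return (x, y, z) in mm in the left-camera frame of a rectified stereo pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f_px * baseline_mm / disparity
    x = (u_left - cx) * z / f_px
    y = (v_left - cy) * z / f_px
    return x, y, z


def depth_error_per_pixel(z_mm, f_px, baseline_mm):
    """First-order depth change caused by a one-pixel disparity error."""
    return z_mm * z_mm / (f_px * baseline_mm)


# Hypothetical numbers: f = 1000 px, baseline = 60 mm, principal point (640, 480).
point = triangulate(740.0, 480.0, 540.0, 1000.0, 60.0, 640.0, 480.0)
```

With these illustrative values the marker lies 300mm from the cameras, and a single pixel of disparity error shifts the depth estimate by about 1.5mm, which shows why depth is the noisiest coordinate in a stereo measurement.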

Fig. 6.

Detection of surgical field: (a) original left channel image with annotation of surgical field frame. (b) processed image after color segmentation.

The contour (outline) of the simulated tumor material is extracted by intensity thresholding. To avoid the large depth noise caused by disparity error in stereo vision, we instead used a single image and a Perspective-n-Point (PnP) solver from the OpenCV library to find the contour position in the surgical field frame from the 3D-to-2D correspondences of the blue corner markers. The contour (a string of points around the segmented blob) is denoted ${}^{S}C$.

The contour of the identified target in the vision frame is computed by premultiplying by the transform ${}^{V}T_{S}$:

$${}^{V}C = {}^{V}T_{S}\,{}^{S}C \tag{1}$$

Finally, the contour in the vision frame, ${}^{V}C$, is posted to the blackboard.
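Applying the surgical-field-to-vision transform to a contour amounts to one homogeneous matrix product per point. The sketch below assumes the transform is stored as a 4×4 row-major matrix; the function name and the example transform (a 90° rotation about z plus a translation) are purely illustrative.

```python
def transform_points(T, points):
    """Apply a 4x4 homogeneous transform (nested lists, row-major) to 3D points."""
    out = []
    for x, y, z in points:
        p = (x, y, z, 1.0)
        out.append(tuple(sum(T[r][c] * p[c] for c in range(4)) for r in range(3)))
    return out


# Hypothetical transform: rotate 90 degrees about z, then translate by (10, 20, 300) mm.
T_vision_from_field = [
    [0.0, -1.0, 0.0, 10.0],
    [1.0, 0.0, 0.0, 20.0],
    [0.0, 0.0, 1.0, 300.0],
    [0.0, 0.0, 0.0, 1.0],
]
contour_field = [(1.0, 2.0, 0.0), (0.0, 0.0, 0.0)]
contour_vision = transform_points(T_vision_from_field, contour_field)
```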

B. Plan

This subtask is very close to the traditional CAD/CAM procedure for pocket milling, an area that has been heavily studied since the end of the last century. Based on the contour found in the scan step and retrieved from the blackboard, the plan subtask generates the ablation paths. We implemented two algorithms for generating two types of ablation or treatment paths: zigzag and spiral.

The zigzag path planner is adopted from Park et al. [28] in three steps:

find the monotone chain along the sweeping direction.

find the intersection points between the monotone chains and parallel lines.

link the path elements into a zigzag pattern and consider possible tool retraction.
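To make the idea of a zigzag pattern concrete, the following is a deliberately simplified sweep-line sketch. It is not the monotone-chain algorithm of Park et al. [28]: it assumes a convex contour, so that every sweep line crosses the boundary exactly twice and no tool retraction is needed. The function name and the `step` spacing parameter are hypothetical.

```python
def zigzag_path(polygon, step):
    """Return zigzag waypoints covering a convex polygon given as (x, y) vertices.

    Sweep lines are horizontal, spaced `step` apart, and traversed in
    alternating directions so consecutive lines are joined end to end.
    """
    ys = [y for _, y in polygon]
    y = min(ys) + step / 2.0
    y_max = max(ys)
    n = len(polygon)
    path = []
    left_to_right = True
    while y < y_max:
        crossings = []
        for i in range(n):
            (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
            # Half-open test skips horizontal edges and avoids counting a vertex twice.
            if (y1 <= y < y2) or (y2 <= y < y1):
                crossings.append(x1 + (y - y1) * (x2 - x1) / (y2 - y1))
        if len(crossings) >= 2:
            a, b = min(crossings), max(crossings)
            path += [(a, y), (b, y)] if left_to_right else [(b, y), (a, y)]
            left_to_right = not left_to_right
        y += step
    return path


# A 10 mm square swept with 5 mm line spacing.
square = [(0, 0), (10, 0), (10, 10), (0, 10)]
waypoints = zigzag_path(square, 5.0)
```

A non-convex contour would need the monotone-chain decomposition and retraction handling described in the three steps above.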

The spiral path is generated by calculating a set of inward offset contours. The open-source library CGAL is used to compute the offset contours. Once the list of offset contours is obtained, they are linked into a spiral-shaped path (multiple paths are possible if tool retraction is required).

Both generated paths are computed in the surgical site frame and then converted to the vision frame and posted to the blackboard.

C. Selection

The selection node is a user interface step in which the user (surgeon) can select between the two plans. In the eventual application we expect that the surgeon will see a representation of the plans superimposed on the imaging data and select the best plan in his or her judgement. Furthermore, the surgeon will be able to approve only portions of the computed plans.

D. Execution

Execution of the actions derived from the selected plan is facilitated through the ROS Action protocol and ROS Messages. The ROS Action protocol allows a “client” to send goals and receive feedback callbacks, while a “server” executes goals and posts status updates through callbacks. Figure 8 illustrates this client-server communication structure as well as its relationship to the BT.

Fig. 8.

Action protocol illustration: the execution node (leaf of behavior tree) is client and autonomous motion control node at middle level is server.

We implement the BT action node, “execution”, as a ROS action client. The action client retrieves the selected path pattern from the blackboard. Based on the plan, it requests robot motion by sending desired paths as the goal to the action server, checks the feedback from the server, and updates the goal as necessary.

The action server functions as a bridge between the BT and robot control. It receives the desired goal path from the client and breaks it down into a set of destinations. Finally, it sends the corresponding motion commands to the robot via ROS Message using an existing Raven interface. The motion control with stereovision augmentation is performed in three steps: move, check, correct (Figure 9). Motion control is checked with the 3D vision system and corrective movements are generated until the accuracy is within a defined threshold.

Fig. 9.

Robot position control with stereo-vision augmentation: the actual path deviates from the ideal path due to imprecise angle information of joints. Motion commands continue updating until the end-effector reaches within tolerance of the destination.
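The move, check, correct cycle can be sketched as a short loop. This is an illustration under stated assumptions, not the controller used in this work: `read_tip` and `command_delta` are hypothetical callables standing in for the stereo-vision tracker and the Raven motion interface, and `SimRobot` is a toy model that only achieves a fixed fraction of each commanded move.

```python
import math


def move_check_correct(goal, read_tip, command_delta, tol_mm=0.5, max_steps=10):
    """Drive the tip to `goal` and return the number of motion steps used."""
    for step in range(1, max_steps + 1):
        pos = read_tip()  # check: vision-measured tip position
        delta = tuple(g - p for g, p in zip(goal, pos))
        command_delta(delta)  # move on the first pass, correct afterwards
        pos = read_tip()
        error = math.sqrt(sum((g - p) ** 2 for g, p in zip(goal, pos)))
        if error <= tol_mm:
            return step
    raise RuntimeError("destination not reached within tolerance")


class SimRobot:
    """Toy robot that undershoots every commanded displacement by a fixed gain."""

    def __init__(self, gain=0.8):
        self.pos = [0.0, 0.0, 0.0]
        self.gain = gain

    def read_tip(self):
        return tuple(self.pos)

    def command_delta(self, delta):
        self.pos = [p + self.gain * d for p, d in zip(self.pos, delta)]


robot = SimRobot(gain=0.8)
steps_used = move_check_correct((10.0, 0.0, 0.0), robot.read_tip, robot.command_delta)
```

In this toy case the first move leaves a 2mm error and one correction brings the tip within the 0.5mm tolerance, so two motion steps are used.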

V. Experiment and Results

An experiment for mimicking the surgical ablation procedure was designed to verify the feasibility of the aforementioned concepts.

A. Hardware Setup

The 60×60mm surgical field was 3D printed and marked with a blue marker at each corner. Dark gray iron filings representing the tumor were randomly poured onto the white surgical field (Figure 5). We attached a magnet to the back of the phantom to hold the iron filings roughly in place.

A tool attachment was designed and 3D printed to attach the 2mm diameter suction tool to the Raven arm (Figure 10(b)). The suction tip was marked with a 3mm wide pink band for easier vision tracking. Two surgical suction tubes connect the suction tip through a filter (0.2bar) (Figure 10). The suction tool clears filings in a 5mm diameter area when the tool is placed perpendicularly 1mm above the iron filings.

Fig. 10.

(a) Suction system overview: suction waste container and vacuum pump. (b) suction tip with pink marker attached to robot end-effector.

To prevent occlusion of the tool tip, the stereo-vision system was mounted parallel to the robot base frame, which results in simple registration between the robot and vision frames. White LEDs provide even illumination. For more accurate results from the PnP solver (Section IV-A), the surgical field was tilted at a small angle (Figure 11).

Fig. 11.

The entire system setup for the experiment

B. Software Environment

We implemented the top-level BT, the middle-level tracking algorithm and motion control, and the bottom-level camera control in C++ using the ROS platform on a computer with a 3.5GHz Intel® Core® i7 CPU and 4GB of memory, running Ubuntu 12.04 with Linux kernel 3.2.0.

C. Results and Discussion

One of the biggest challenges in this demonstration task is to make the robot perform precise motion along the desired path automatically while also clearing the iron filings by effective vacuum action. Experimentation showed that a tip speed of 10mm/sec struck a performance balance between movement accuracy and successful particle removal (ablation). For performance comparison between the zigzag and spiral ablation plans, we ran 3 trials of each plan type. In each trial, the simulated tumor size was approximately the same. The BT root node was ticked at a frequency of 1Hz.

Table I shows the average completion time of the two plans extracted from video recordings. Completion time for the other subtasks was read from the program.

TABLE I.

Completion time of each automated subtask in semi-automated ablation procedure

| Subtask | Zigzag Plan | Spiral Plan |
|---|---|---|
| Avg. time for scanning (plan-independent) | 30s | 30s |
| Avg. time for plan generation | 29ms | 26ms |
| Avg. time for plan execution | 181s | 598s |
| Avg. time for checking (plan-independent) | 51ms | 51ms |

Table I shows that execution took much longer with the spiral plan. This was due to the large number of intermediate steps and the stereo-vision-based motion correction at each step (Figure 9). Our stereo-vision system operates at 7.5Hz due to the camera bandwidth limit. For each correction, the vision system took about 1 second to provide a stable and accurate position reading of the robot tip. For the same simulated ablation target size, the spiral plan (blue nodes, Figure 12 right) usually generated more intermediate steps than the zigzag plan (blue nodes, Figure 12 left). For better suction performance, we added intermediate steps to the generated zigzag plan (red nodes, Figure 12 left).

Fig. 12.

Generated ablation plan of zigzag path and spiral path. Left - zigzag path with auxiliary points in red. Right - spiral path.

Table II summarizes the motion corrections needed while executing the zigzag ablation plan depicted in Figure 12. An average of 2.4 motion steps was needed for the robot end-effector to reach each goal point (“end position” in Figure 9) within a tolerance of 0.5mm.

TABLE II.

Performance of the zigzag plan

| Metric | Value |
|---|---|
| Completion time of ablation | 176s |
| Total number of points (incl. finishing up) | 57 |
| Total motion steps | 139 |
| Average motion steps to each point | 2.4 |
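As a quick consistency check, the ratios below follow from simple arithmetic on the values reported in Table II; they are not additional measurements.

```latex
\frac{139\ \text{motion steps}}{57\ \text{points}} \approx 2.44\ \text{steps per point},
\qquad
\frac{176\ \text{s}}{139\ \text{motion steps}} \approx 1.27\ \text{s per motion step}
```

The first ratio matches the reported average of 2.4 steps per point. The second is of the same order as the roughly 1 second the vision system needs for a stable position reading, which is consistent with vision-based correction dominating the execution time.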

The tip position from optical tracking is transformed into the surgical field frame and compared to the generated zigzag path in Figure 13(a). An enlarged view in the xy plane is displayed in Figure 13(b). The RMS error between the positions actually reached and the desired via points is 0.318mm in 3D space.
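The text does not spell out how the RMS error is defined. A minimal sketch, assuming it is the square root of the mean squared Euclidean distance between each reached position and its desired via point, is given below. The function name and the sample points are illustrative only.

```python
import math


def rms_error(reached, desired):
    """Root-mean-square 3D distance between paired reached and desired points."""
    if not reached or len(reached) != len(desired):
        raise ValueError("point lists must be non-empty and of equal length")
    squared = [
        sum((a - b) ** 2 for a, b in zip(p, q))
        for p, q in zip(reached, desired)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Illustrative data: one exact hit and one point that is 0.5 mm off.
reached_pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
desired_pts = [(0.0, 0.0, 0.0), (1.0, 0.3, 0.4)]
error_mm = rms_error(reached_pts, desired_pts)
```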

Fig. 13.

(a) Robot motion and generated paths in the surgical field frame. (b) Motion accuracy in the xy plane of the surgical field.

Our current Kalman filter for each image channel de-noises well but is relatively slow. Improving the tracking noise model used in the Kalman filter could further reduce the execution time.

We also noticed that the spiral plan did not clean up the iron filings as thoroughly as the zigzag plan. Residue usually remained where a relatively large motion occurred, because the points in the spiral plan are less evenly distributed than those in the zigzag plan. One solution is to add auxiliary points to the spiral path, at the cost of a longer execution time. For better ablation performance and a fairer comparison, both planners will be modified to equalize the distance between motion endpoints. Although the spiral plan is slow and leaves residue, it might be preferred in tumor ablation because it covers the edge of the tumor more accurately and cuts less healthy tissue than the zigzag plan.

VI. Conclusion and Future Work

We modeled and implemented a bench-top analog of semi-automated brain tumor ablation using a ROS implementation of the BT framework. We introduced a new BT node type, the “Recovery Node”. In the current system (Figure 3) the last step of the “Autonomous Ablation” task (AA) visually checks the field for missed particles (corresponding to labeled tumor material in the final application). If any are detected, the AA node fails and the recovery node restores the system to a state where the AA node can be restarted.

We demonstrated that the system is able to detect and locate the simulated tumor on a planar surgical site by a binocular vision system, and generate two ablation plans automatically. In the current version, the surgeon is required to select and approve one of the generated plans before the autonomous execution by the robot.

To increase the precision of the robot’s light-weight, cable-driven mechanism and control, we introduced an autonomous motion control method with visual end-effector sensing. An experimental performance comparison using two ablation plans showed the feasibility of the software and hardware setup for further work.

Our future work will focus on integrating SFE image reconstruction with ablation planning and execution on a convex surface (i.e. a 2D manifold), which is closer in shape to the real surgical field. In that case, the stereo-vision tracker may not be able to provide enough position information because the color marker on the robot end-effector may be occluded. Nonetheless, the position of the robot end-effector may be estimated from the SFE image using machine vision techniques (visual SLAM [29]). Additional work on the accuracy of the light-weight, cable-driven Raven structure is being pursued through improved joint sensing and control.

Fig. 7.

Detection of simulated tumor (a) original image taken from left camera. (b) processed image after thresholding

Acknowledgement

This work is supported by the U.S. National Institutes of Health, NIBIB R01 EB016457. The authors thank Angelique Berens, MD, for providing surgical suction equipment for the experiment and Di Zhang for assistance with 3D printing.

Contributor Information

Danying Hu, Email: fdanying@uw.edu.

Yuanzheng Gong, Email: fgong7@uw.edu.

Blake Hannaford, Email: blakeg@uw.edu.

Eric J. Seibel, Email: eseibelg@uw.edu.

References

- 1. Taylor RH, Stoianovici D. Medical robotics in computer-integrated surgery. IEEE Trans. on Robotics and Automation. 2003;19(5):765–781.

- 2. Mack MJ. Minimally invasive and robotic surgery. JAMA. 2001;285(5):568–572. doi: 10.1001/jama.285.5.568.

- 3. Veiseh M, Gabikian P, Bahrami S-B, Veiseh O, Zhang M, Hackman RC, Ravanpay AC, et al. Tumor paint: a chlorotoxin:Cy5.5 bioconjugate for intraoperative visualization of cancer foci. Cancer research. 2007;67(14):6882–6888. doi: 10.1158/0008-5472.CAN-06-3948.

- 4. Hannaford B, Rosen J, Friedman DW, King H, Roan P, Cheng L, Glozman D, Ma J, Kosari SN, White L. Raven-ii: an open platform for surgical robotics research. IEEE Trans. on Biomedical Engineering. 2013;60(4):954–959. doi: 10.1109/TBME.2012.2228858.

- 5. Seibel EJ, Johnston RS, Melville CD. A full-color scanning fiber endoscope. Proc. Optical Fibers and Sensors for Medical Diagnostics and Treatment Applications VI. 2006:6083–9–16.

- 6. Kehoe B, Kahn G, Mahler J, Kim J, Lee A, Lee A, Nakagawa K, Patil S, Boyd WD, Abbeel P, et al. Autonomous multilateral debridement with the raven surgical robot. Robotics and Automation (ICRA), 2014 IEEE International Conference on. 2014.

- 7. Dario P, Hannaford B, Menciassi A. Smart surgical tools and augmenting devices. IEEE Trans. on Robotics and Automation. 2003;19(5):782–792.

- 8. Moustris G, Hiridis S, Deliparaschos K, Konstantinidis K. Evolution of autonomous and semi-autonomous robotic surgical systems: a review of the literature. The Int. Journal of Medical Robotics and Computer Assisted Surgery. 2011;7(4):375–392. doi: 10.1002/rcs.408.

- 9. Bargar WL, Bauer A, Börner M. Primary and revision total hip replacement using the robodoc system. Clinical orthopaedics and related research. 1998;354:82–91. doi: 10.1097/00003086-199809000-00011.

- 10. Li QH, Zamorano L, Pandya A, Perez R, Gong J, Diaz F. The application accuracy of the neuromate robot—a quantitative comparison with frameless and frame-based surgical localization systems. Computer Aided Surgery. 2002;7(2):90–98. doi: 10.1002/igs.10035.

- 11. Varma T, Eldridge P. Use of the neuromate stereotactic robot in a frameless mode for functional neurosurgery. The Int. Journal of Medical Robotics and Computer Assisted Surgery. 2006;2(2):107–113. doi: 10.1002/rcs.88.

- 12. Adler JR, Jr, Chang S, Murphy M, Doty J, Geis P, Hancock S. The cyberknife: a frameless robotic system for radiosurgery. Stereotactic and functional neurosurgery. 1997;69(1–4):124–128. doi: 10.1159/000099863.

- 13. Wu A, Lindner G, Maitz A, Kalend A, Lunsford L, Flickinger J, Bloomer W. Physics of gamma knife approach on convergent beams in stereotactic radiosurgery. Int. Journal of Radiation Oncology* Biology* Physics. 1990;18(4):941–949. doi: 10.1016/0360-3016(90)90421-f.

- 14. Kang H, Wen JT. Autonomous suturing using minimally invasive surgical robots. Proc. IEEE International Conf. on Control Applications. 2000:742–747.

- 15. Mayer H, Gomez F, Wierstra D, Nagy I, Knoll A, Schmidhuber J. A system for robotic heart surgery that learns to tie knots using recurrent neural networks. Advanced Robotics. 2008;22(13–14):1521–1537.

- 16. Bell M, Balkcom D. Knot tying with single piece fixtures. Robotics and Automation (ICRA), 2008 IEEE International Conference on. 2008:379–384.

- 17. Van Den Berg J, Miller S, Duckworth D, Hu H, Wan A, Fu X-Y, Goldberg K, Abbeel P. Superhuman performance of surgical tasks by robots using iterative learning from human-guided demonstrations. Robotics and Automation (ICRA), 2010 IEEE International Conference on. 2010:2074–2081.

- 18. Coulson C, Taylor R, Reid A, Griffiths M, Proops D, Brett P. An autonomous surgical robot for drilling a cochleostomy: preliminary porcine trial. Clinical Otolaryngology. 2008;33(4):343–347. doi: 10.1111/j.1749-4486.2008.01703.x.

- 19. Hussong A, Rau TS, Ortmaier T, Heimann B, Lenarz T, Majdani O. An automated insertion tool for cochlear implants: another step towards atraumatic cochlear implant surgery. Int. Journal of computer assisted radiology and surgery. 2010;5(2):163–171. doi: 10.1007/s11548-009-0368-0.

- 20. Isla D. Building a better battle: The halo 3 ai objectives system. http://web.cs.wpi.edu/~rich/courses/imgd4000-d09/lectures/halo3.pdf.

- 21. Lim CU, Baumgarten R, Colton S. Evolving behaviour trees for the commercial game defcon. Applications of Evolutionary Computation. Springer; 2010. pp. 100–110.

- 22. Champandard AJ. Understanding behavior trees. http://aigamedev.com/open/article/bt-overview/.

- 23. Marzinotto A, Colledanchise M, Smith C, Ogren P. Towards a unified behavior trees framework for robot control. Robotics and Automation (ICRA), 2014 IEEE International Conference on. 2014 May:5420–5427.

- 24. Colledanchise M, Marzinotto A, Ogren P. Performance analysis of stochastic behavior trees. Robotics and Automation (ICRA), 2014 IEEE International Conference on. IEEE; 2014. pp. 3265–3272.

- 25. Bagnell JA, Cavalcanti F, Cui L, Galluzzo T, Hebert M, Kazemi M, Klingensmith M, Libby J, Liu TY, Pollard N, et al. An integrated system for autonomous robotics manipulation. IEEE/RSJ Int. Conf. on Intelligent Robots and Systems. 2012:2955–2962.

- 26. Yang C, Hou VW, Girard EJ, Nelson LY, Seibel EJ. Target-to-background enhancement in multispectral endoscopy with background autofluorescence mitigation for quantitative molecular imaging. Journal of biomedical optics. 2014;19(7):076014–1–14. doi: 10.1117/1.JBO.19.7.076014.

- 27. Gong Y, Hu D, Hannaford B, Seibel EJ. Accurate 3d virtual reconstruction of surgical field using calibrated trajectories of an image-guided medical robot. Journal of Medical Imaging. 2014;1:035002. doi: 10.1117/1.JMI.1.3.035002.

- 28. Park SC, Choi BK. Tool-path planning for direction-parallel area milling. Computer-Aided Design. 2000;32(1):17–25.

- 29. Davison AJ, Reid ID, Molton ND, Stasse O. Monoslam: Real-time single camera slam. IEEE Trans on Pattern Analysis and Machine Intelligence. 2007;29(6):1052–1067. doi: 10.1109/TPAMI.2007.1049.