Abstract

This paper presents a fully-actuated robotic system for percutaneous prostate therapy under continuously acquired live magnetic resonance imaging (MRI) guidance. The system is composed of modular hardware and software to support the surgical workflow of intra-operative MRI-guided surgical procedures. We present the development of a 6-degree-of-freedom (DOF) needle placement robot for transperineal prostate interventions. The robot consists of a 3-DOF needle driver module and a 3-DOF Cartesian motion module. The needle driver provides needle cannula translation and rotation (2-DOF) and stylet translation (1-DOF). A custom robot controller consisting of multiple piezoelectric motor drivers provides precise closed-loop control of piezoelectric motors and enables simultaneous robot motion and MR imaging. The developed modular robot control interface software performs image-based registration and kinematics calculation, and exchanges robot commands and coordinates between the navigation software and the robot controller using a new implementation of the open network communication protocol OpenIGTLink. The MRI compatibility of the robot is comprehensively evaluated inside a 3-Tesla MRI scanner using standard imaging sequences, and the signal-to-noise ratio (SNR) loss is limited to 15%. Image deterioration due to the presence and motion of the robot was not observable. Twenty-five targeted needle placements inside gelatin phantoms using an 18-gauge ceramic needle demonstrated 0.87 mm root mean square (RMS) error in 3D Euclidean distance, based on MRI volume segmentation of the image-guided robotic needle placement procedure.

Keywords: Image-guided Therapy, MRI-Guided Robotics, Biopsy, Brachytherapy, Piezoelectric Actuation

I. INTRODUCTION

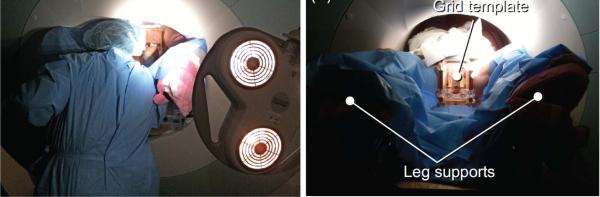

Magnetic Resonance Imaging (MRI) offers high-resolution tissue imaging at arbitrary orientations and can also monitor therapeutic agents, surgical tools, tissue properties, and physiological function, which makes MRI uniquely suitable for guiding, monitoring and controlling a wide array of localized interventions [1]. Nevertheless, space inside the scanner bore is limited, typically 60–70 cm in diameter and 170 cm in length, and the ergonomics of manual needle placement within these confines are very difficult. Fig. 1 (left) shows a radiologist reaching into the center of the scanner bore to perform a needle placement through the perineum of a patient, illustrating the challenging ergonomics of this manual insertion scenario.

Fig. 1.

(Left) A radiologist reaching the perineum of the patient inside a closed-bore MRI scanner while unable to see the navigation software, and (right) a mechanical grid template to guide manual needle placement. Figures are adapted from [2].

A. Robot-Assisted Prostate Interventions with MRI Guidance

Numerous studies [3], [4] have shown that transrectal ultrasound (TRUS)-guided prostate biopsy, the most common approach to sampling suspicious prostate tissue, provides relatively low-quality images of the tissue and needles; TRUS therefore has limited ability to localize brachytherapy seeds, especially when a dense distribution of seeds causes shadowing. Because of concerns about MRI safety and the compatibility of instrumentation, MRI is not yet commonplace for guiding prostate cancer procedures.

To overcome the aforementioned challenges, robotics has been introduced. Ordered by an increasing number of actively actuated DOFs, early MRI-guided prostate interventional systems begin with template-guided manual straight needle placement via a transperineal approach, demonstrated as a proof of concept [5] at Brigham and Women's Hospital. Fig. 1 (right) shows a patient lying on an MRI scanner table with leg supports during the clinical case. Beyersdorff et al. [6] reported transrectal needle biopsies in clinical studies employing 4-DOF passive alignment (rotation, angulation and two linear translations) of a needle sleeve (Invivo Corp, USA). Krieger et al. presented a 3-DOF passive arm and a 2-DOF motorized arm to aim a needle guide for transrectal prostate biopsy [7]. Song et al. [8] developed a 2-DOF motorized smart template guide consisting of vertical and horizontal crossbars driven by ultrasonic motors. Stoianovici et al. [9] described a pneumatic stepper motor and applied it to a new generation of robotic prostate interventions [10]. Our previous work presented a pneumatic servo system with sliding mode control [11], which was later adapted as a parallel manipulator for position-based teleoperation [12].

B. Robotic Actuation in the MRI Environment

Following the definitions of the American Society for Testing and Materials (ASTM) standard F2503-05, in 2008 the U.S. Food and Drug Administration (FDA) redefined the classifications of MRI devices as “MR Safe”, “MR Conditional” and “MR Unsafe”; the term “MR Compatible” was not redefined and is not used in current standards. The latest ASTM F2503-13 defines “MR Safe” as “composed of materials that are electrically nonconductive, nonmetallic, and nonmagnetic”. Therefore, the proposed robot, like all electromechanical systems, aims to demonstrate “safety in the MR environment within defined conditions” and thus the “MR Conditional” classification. Note that these terms address safety only; they cover neither image artifacts nor device functionality. This manuscript demonstrates both that the proposed robot is safe in the MR environment in the intended configuration and that it does not significantly degrade image quality.

From an actuation perspective, hydraulic actuation provides large power output and could potentially be MR Safe, but it is not ideal due to cavitation and fluid leakage [13]. Pneumatic actuators can be designed to be intrinsically MR Safe. Thus far, only the transperineal access robot [9] and the transrectal access robot [10] by Stoianovici et al. at the Johns Hopkins University, and the robot by Yakar et al. [14] at the Radboud University Nijmegen Medical Center, have been shown to be completely MR Safe. A major issue with actuation by pneumatic cylinders is maintaining stability: nonlinear friction and the slow response induced by long pneumatic transmission lines can cause overshoot. Yang et al. [15] found that the peak-to-peak amplitude of the oscillations before stabilization at the target location ranges from 2.5 to 5 mm with sliding mode control. Our experience with a pneumatic robot using sliding mode control [11] demonstrated 0.94 mm RMS accuracy for a single axis. Pneumatic stepper motors, in turn, have step sizes of 3.3° [9] and 60° [16], which limits their positioning capability.

Piezoelectric actuators can be very compact, provide sub-micron precision, and offer good dynamic performance without overshoot. Compared with pneumatic actuators, they offer unparalleled positioning accuracy and power density [17]. For example, the rotary piezoelectric motor in our robot (PiezoLegs, LR80, PiezoMotor AB, Sweden) has a step angle of 5.73 × 10⁻⁶° and measures 23 mm in diameter by 34.7 mm in length, whereas the novel pneumatic stepper motor described in [18] has a 3.3° step angle and measures 70 × 20 × 25 mm. However, piezoelectric motors driven by commercially available motor drivers cause unacceptable MR imaging noise (up to 40–80% signal loss) during simultaneous robot motion [7]. In previous work, Fischer and Krieger et al. evaluated various types and configurations of piezoelectric actuation options, demonstrating the limitations of commercially available piezoelectric drive systems [19]. A primary contribution of this paper is minimizing the image artifacts caused by standard motor drivers while enabling high-performance motion control, through the development of a custom control system, and leveraging the advantages of these actuators through the design of a piezoelectrically actuated robot. Historical reviews of MRI-guided robotics and of the challenges related to piezoelectric actuation can be found in [13], [20], and [21].

C. Contribution

Generally, previous work on MRI-guided prostate intervention utilizing piezoelectric actuation is limited in the following three aspects. First, most previously developed systems are not fully actuated, so procedures remain time-consuming and require moving the patient into the scanner bore for imaging and out of the bore for the interventional procedure. Second, robots utilizing commercially available drivers must interleave motion with imaging [22] to prevent electrical noise that causes 40–80% signal degradation [7], precluding real-time MR imaging. Third, most prior research systems are either scanner-dependent (i.e., they require specific electrical, mechanical, and/or software interfaces to a particular scanner model, vendor, or scanner room, or they cannot readily be transported and set up in an arbitrary MRI scanner) or platform-dependent (e.g., based on a specific control interface [23]), or they lack integrated navigation, communication, visualization and hardware control.

Hence, the primary contributions of this paper are: 1) a fully actuated 6-DOF robot capable of performing both prostate biopsy and brachytherapy procedures under MR image guidance while the patient remains inside the MRI bore; 2) a feature-rich motion control system capable of effectively driving piezoelectric motors during real-time, continuously acquired 3-Tesla MR imaging; 3) a modular hardware (robotic manipulator and control system) and software (image processing, semi-autonomous robot control, registration, and navigation) system that supports network communication and is readily extendable to other clinical procedures; and 4) a vendor-neutral, scanner room-independent system. This paper presents an evaluation with a Philips Achieva 3-Tesla scanner; similar results have been obtained with a Siemens Magnetom 3-Tesla MRI scanner [24].

II. DESIGN REQUIREMENTS AND SYSTEM ARCHITECTURE

A. System Concept and Specifications

The prostate interventional robot design aims to model the procedure on a radiologist's hand motion and to fit between the patient's legs inside an MRI scanner bore. Beyond compatibility with the MRI environment, the primary design considerations are the following:

1) Workspace

The patient lies inside the MRI scanner in the semilithotomy position, and the robot accesses the prostate through the perineal wall. The typical prostate measures 50 mm in the lateral direction, 35 mm in the anterior-posterior direction, and 40 mm in length. To cover the entire prostate volume and accommodate patient variability, the prostate is assumed to be a sphere 50 mm in diameter. The required motion range is therefore: vertical motion of 50 mm (100–150 mm above the scanner bed surface), lateral motion of ±25 mm from the center of the workspace, and a needle insertion depth of 150 mm to reach the back of the prostate from the skin entry point at the perineum.
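As a minimal sketch of how these requirements bound the reachable targets, the check below encodes the stated ranges as an axis-aligned box. The coordinate convention (lateral offset from the workspace center, height above the bed, insertion depth from the skin entry point) and all names are hypothetical, and the box ignores the robot's actual kinematics.

```python
from dataclasses import dataclass

# Workspace limits from the stated requirements (units: mm).
VERTICAL_MIN_MM = 100.0        # lowest needle height above the scanner bed surface
VERTICAL_MAX_MM = 150.0        # highest needle height above the scanner bed surface
LATERAL_HALF_RANGE_MM = 25.0   # +/- lateral travel from the workspace center
MAX_INSERTION_MM = 150.0       # insertion depth needed to reach the back of the prostate


@dataclass
class Target:
    """Hypothetical target description in workspace-aligned coordinates."""
    lateral_mm: float   # signed offset from the workspace center
    height_mm: float    # height above the scanner bed surface
    depth_mm: float     # insertion depth from the perineal skin entry point


def in_workspace(t: Target) -> bool:
    """Return True if the target lies inside the required motion range."""
    return (
        abs(t.lateral_mm) <= LATERAL_HALF_RANGE_MM
        and VERTICAL_MIN_MM <= t.height_mm <= VERTICAL_MAX_MM
        and 0.0 <= t.depth_mm <= MAX_INSERTION_MM
    )


if __name__ == "__main__":
    # A target at mid-height and on the lateral center line is reachable.
    print(in_workspace(Target(lateral_mm=0.0, height_mm=125.0, depth_mm=100.0)))  # True
    # A point 30 mm off-center laterally exceeds the +/-25 mm range.
    print(in_workspace(Target(lateral_mm=30.0, height_mm=125.0, depth_mm=100.0)))  # False
```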

2) Sterilizability

Only the plastic needle guide, collet, nut and guide sleeve have direct contact with the needle or the patient, and are removable and sterilizable. The remainder of the robot can be draped with a sterilized plastic cover.

B. System Architecture

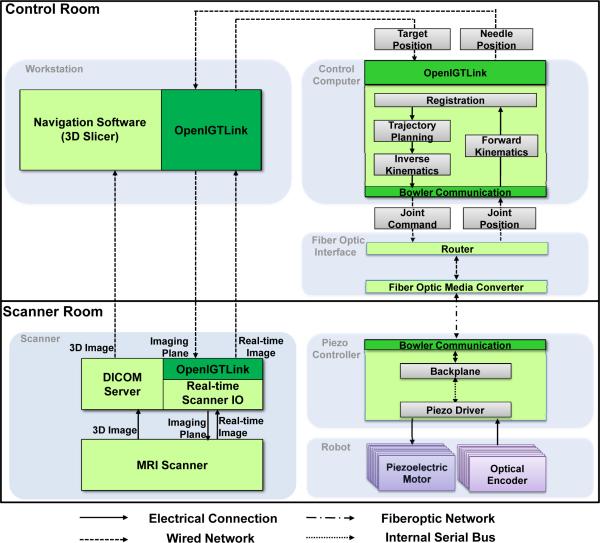

The system architecture comprises the six modules depicted in Fig. 2: 1) a needle placement robot inside the MRI scanner; 2) a piezoelectric robot controller inside the MRI scanner room; 3) an interface box with a fiber optic media converter and router; 4) a control computer running the robot control software; 5) the surgical planning, navigation and visualization software 3D Slicer; and 6) an MRI scanner and image acquisition interface. The system is designed from the ground up to be modular, readily reconfigurable, and scanner-independent. The hardware architecture achieves scanner independence by requiring only a grounded AC socket in the scanner room and a waveguide tube to pass a fiber optic cable out of the room. It does not depend on a specific patch panel configuration; patch panels may lack the required connections (or must be customized), may allow noise to pass into the scanner room, and often have filters that degrade (or completely block) piezoelectric motor driver signals. The control system is modular in the sense that all modules communicate over a network connection (dashed lines in Fig. 2), except the robot controller and the robot itself, which are adjacent. Consequently, the modules need not share the same computational platform or programming language.

Fig. 2.

System architecture and data flow of the robotic system. The six modules are shown as gray blocks, and OpenIGTLink is used to exchange control, image and position data.

The surgical navigation software 3D Slicer serves as the user interface for the surgeon. The system workflow follows a procedure of preoperative planning, fiducial frame registration, targeting, and verification, as presented in Section V. OpenIGTLink [25] is used to exchange control, position, and image data. The details of the module design are described in the following sections.
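For illustration, the sketch below packs a 4 × 4 pose into an OpenIGTLink TRANSFORM message, as might be exchanged between the robot control software and 3D Slicer. It follows our reading of the version 1 message layout (a 58-byte big-endian header followed by twelve float32 values: the three rotation columns, then the translation) and assumes the body CRC is CRC-64 ECMA-182 in its MSB-first, zero-initialized form. The timestamp is passed through without modeling its encoding, the device name and helper names are hypothetical, and a production system should rely on the reference OpenIGTLink library rather than this hand-rolled encoder.

```python
import struct
from typing import List, Sequence

# Header: version, type name, device name, timestamp, body size, CRC (big-endian, 58 bytes).
IGTL_HEADER_FMT = ">H12s20sQQQ"
CRC64_POLY = 0x42F0E1EBA9EA3693  # ECMA-182 generator polynomial
MASK64 = (1 << 64) - 1


def crc64_ecma182(data: bytes) -> int:
    """Bitwise MSB-first CRC-64 with zero initial value and no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte << 56
        for _ in range(8):
            if crc & (1 << 63):
                crc = ((crc << 1) ^ CRC64_POLY) & MASK64
            else:
                crc = (crc << 1) & MASK64
    return crc


def pack_transform(device_name: str, matrix: Sequence[Sequence[float]],
                   timestamp: int = 0) -> bytes:
    """Encode the upper 3x4 part of a row-major 4x4 matrix as a TRANSFORM message."""
    # Column order: R11 R21 R31 R12 R22 R32 R13 R23 R33 TX TY TZ.
    values: List[float] = [matrix[r][c] for c in range(4) for r in range(3)]
    body = struct.pack(">12f", *values)
    header = struct.pack(
        IGTL_HEADER_FMT,
        1,                            # header version
        b"TRANSFORM",                 # struct pads with NUL bytes to 12 characters
        device_name.encode("ascii"),  # struct pads with NUL bytes to 20 characters
        timestamp,
        len(body),
        crc64_ecma182(body),
    )
    return header + body


if __name__ == "__main__":
    pose = [[1.0, 0.0, 0.0, 10.0],
            [0.0, 1.0, 0.0, -5.0],
            [0.0, 0.0, 1.0, 2.5],
            [0.0, 0.0, 0.0, 1.0]]
    msg = pack_transform("RobotTip", pose)  # hypothetical device name
    print(len(msg))  # 106 = 58-byte header + 48-byte body
```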

Communication from the control computer (inside the console room) to the robot controller (inside the scanner room) uses fiber optic Ethernet running through a readily available waveguide tube in the patch panel. Because computer control signals and joint positions are converted to optical signals between the MRI scanner room and the console room, no electrical signal passes into or out of the scanner room. This eliminates a large source of noise: electrical cables passing through the patch panel or waveguide act as antennas and introduce stray RF noise.

III. MECHANISM DESIGN

This design significantly improves on our prior work [26] in structural rigidity, mechanical reliability and ease of assembly. The major structural components of the robot (e.g., motor fixture and belt tensioner) are made with fused deposition modeling (Dimension 1200es, Stratasys, Inc., USA) and PolyJet 3D printing (Objet Connex260, Stratasys, Inc., USA). Plastic bearings and aluminum guide rails (Igus Inc., USA) are used in the transmission mechanisms. Position is measured with optical encoders (U.S. Digital, USA) with PC5 differential line drivers, which have been shown to cause no visible MRI artifact when appropriately configured [11].

Clinical brachytherapy and biopsy needles are composed of two concentric shafts: an outer hollow cannula and an inner solid stylet. 18-gauge needles are typically used for clinical prostate brachytherapy, and radioactive seeds are typically preloaded with spacers between them, often 5.5 mm apart. Straight needle placement typically includes three decoupled tasks: (1) moving the needle tip to the entry point with 3-DOF Cartesian motion; (2) inserting the needle into the body using 1-DOF translation along a straight trajectory; and (3) firing the spring-loaded mechanism of the biopsy gun to harvest tissue, or retracting the stylet to place radioactive seeds. These three tasks are implemented with the two modules described in the subsections that follow. The device is a unified mechanism that can be configured for either biopsy or brachytherapy.
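The decomposition into three decoupled tasks can be sketched as an ordered command list. This is a hypothetical illustration, not the robot's actual command interface: the axis names, the Step structure, and the choice to leave the firing or retraction stroke unspecified (its value is not given in the text) are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Step:
    task: int                      # 1 = align to entry point, 2 = insert, 3 = fire/retract
    axis: str                      # hypothetical axis or action name
    setpoint_mm: Optional[float]   # None where the stroke is procedure-specific


def plan_straight_placement(entry_mm: Tuple[float, float, float],
                            depth_mm: float, procedure: str) -> List[Step]:
    """Order the three decoupled tasks of a straight needle placement."""
    if procedure not in ("biopsy", "brachytherapy"):
        raise ValueError(f"unknown procedure: {procedure!r}")
    x, y, z = entry_mm
    # Task 1: 3-DOF Cartesian motion brings the needle tip to the entry point.
    steps = [Step(1, "x", x), Step(1, "y", y), Step(1, "z", z)]
    # Task 2: 1-DOF translation inserts the needle along a straight trajectory.
    steps.append(Step(2, "insertion", depth_mm))
    # Task 3: fire the biopsy mechanism, or retract the stylet to release seeds.
    action = "biopsy_fire" if procedure == "biopsy" else "stylet_retract"
    steps.append(Step(3, action, None))
    return steps


if __name__ == "__main__":
    for step in plan_straight_placement((5.0, 120.0, 0.0), 90.0, "brachytherapy"):
        print(step)
```

Keeping alignment and insertion as separate steps mirrors the decoupled mechanism: the needle is never advanced while the Cartesian stage is moving.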

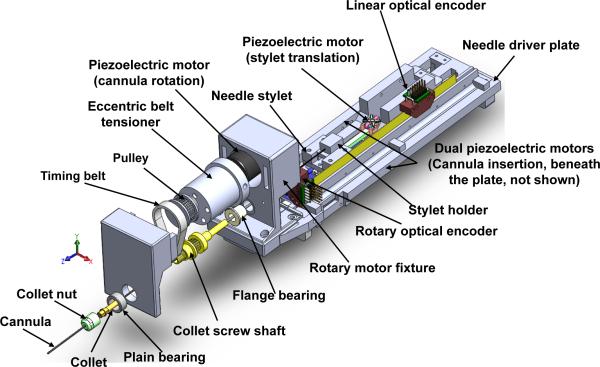

A. Needle Driver Module

Transperineal prostate needle placement requires 18 N of force to puncture the prostate capsule [27]. Two linear piezoelectric motors (PiezoLegs LL1011C, PiezoMotor AB, Sweden), each providing 10 N holding force and 1.5 cm/s speed, are therefore placed in parallel to drive the 1-DOF insertion motion. This mechanism is illustrated in Fig. 3; the motors are not clearly visible because they are placed beneath the needle driver plate. An additional DOF of collinear stylet translation is driven by another PiezoLegs actuator to coordinate its motion with respect to the cannula. For biopsy, the firing motion is likewise implemented as rapid coordinated cannula-stylet motion using the same coaxial mechanism. This approach unifies the two procedures and simplifies the mechanism design.
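The sizing argument above reduces to simple arithmetic, sketched below. It assumes that the forces of the two parallel motors add, treats the rated holding force as the available thrust, and reuses the 150 mm insertion depth from the workspace requirements; friction and needle-tissue forces beyond capsule puncture are ignored.

```python
MOTOR_FORCE_N = 10.0              # rated holding force per PiezoLegs LL1011C
NUM_INSERTION_MOTORS = 2          # motors placed in parallel
CAPSULE_PUNCTURE_FORCE_N = 18.0   # force to puncture the prostate capsule [27]
MAX_SPEED_MM_PER_S = 15.0         # 1.5 cm/s
INSERTION_DEPTH_MM = 150.0        # required insertion depth


def insertion_budget():
    """Return (total force in N, force margin in N, minimum full-depth insertion time in s)."""
    total_force = NUM_INSERTION_MOTORS * MOTOR_FORCE_N
    margin = total_force - CAPSULE_PUNCTURE_FORCE_N
    min_time = INSERTION_DEPTH_MM / MAX_SPEED_MM_PER_S
    return total_force, margin, min_time


if __name__ == "__main__":
    force, margin, t = insertion_budget()
    print(f"{force:.0f} N available, {margin:.0f} N margin, >= {t:.0f} s for full depth")
    # 20 N available, 2 N margin, >= 10 s for full depth
```

The 2 N margin (about 11% over the puncture force) shows why a single 10 N motor would not suffice.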

Fig. 3.

An exploded view of the needle driver module, including a rotary axis, a translation axis and a collinear stylet translation axis.

The needle driver accepts standard needles with diameters ranging from 25 gauge (0.51 mm) to 16 gauge (1.65 mm). A collet clamping device rigidly couples the cannula shaft (outer needle) to the driving mechanism, as shown in Fig. 3. The clamping device is connected to the rotary motor through a timing belt. A plastic bushing with an eccentric extruded cut serves as the belt tensioner; the extruded cut houses a rotary piezoelectric motor (PiezoLegs, LR80, PiezoMotor AB, Sweden). Rotating the tensioner adjusts the distance between the motor shaft and the collet shaft of the clamping mechanism. In the previous robot iteration [26], bending of the collet screw shaft was observed; the mechanism has been significantly improved with a newly designed tensioner that includes a shaft support with a plain bearing to prevent motor shaft bending. The plastic needle guide with a press-fit quick-release mechanism, the collet nut, and the guide sleeve, which are the parts in direct contact with the needle, are readily detachable and sterilizable.

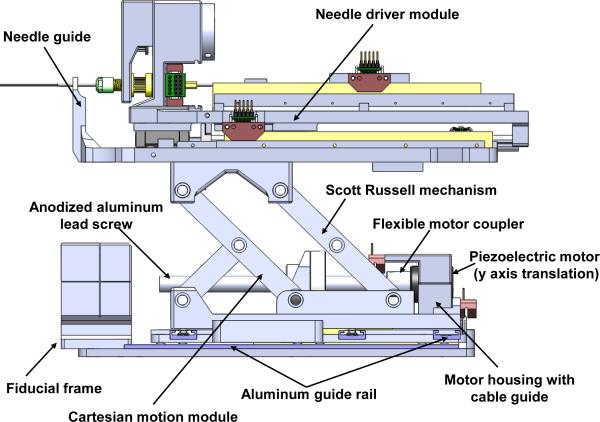

B. Cartesian Motion Module

The needle driver is mounted on top of a 3-DOF Cartesian positioning module, shown in Fig. 4. The mechanism is decoupled: separating the alignment and insertion motions keeps the kinematics simple and the motion safe. The horizontal 2-DOF motions are achieved with the same linear motors in direct drive. The vertical mechanism provides 40 mm of vertical travel. To increase rigidity over the previous prototype [26], [28], which had a single scissor mechanism, the new design uses a one-and-a-half Scott-Russell scissor mechanism, which is compact and reduces the structural flexibility introduced by the plastic bars and bearings (Fig. 4). Vertical motion is achieved by actuating this mechanism with an anodized aluminum lead screw (2 mm pitch). The piezoelectric motors brake inherently when not actively driven, providing further safety.

Fig. 4.

A side view of the 3-DOF needle driver module providing cannula translation and rotation (2-DOF) and stylet translation (1-DOF) and the 3-DOF actuated Cartesian stage module.

The piezoelectric motors used here are capable of micrometer-level positioning accuracy, so the limiting factor is the closed-loop position sensor. The optical encoders in this robot have encoding resolutions of 0.0127 mm/count (EM1-0-500-I) and 0.072°/count (EM1-1-1250-I) for linear and rotary motion, respectively.
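The stated resolutions can be reproduced with a short calculation. The calculation rests on our reading of the part numbers: the linear strip has 500 lines per inch, the rotary disk has 1250 cycles per revolution, and the quadrature signals are decoded in ×4 mode, counting every edge of both channels.

```python
MM_PER_INCH = 25.4
QUADRATURE_FACTOR = 4  # x4 decoding: every rising and falling edge of channels A and B


def linear_resolution_mm(lines_per_inch: int) -> float:
    """Linear travel per encoder count."""
    return MM_PER_INCH / (lines_per_inch * QUADRATURE_FACTOR)


def rotary_resolution_deg(cycles_per_rev: int) -> float:
    """Rotation per encoder count."""
    return 360.0 / (cycles_per_rev * QUADRATURE_FACTOR)


if __name__ == "__main__":
    print(f"{linear_resolution_mm(500):.4f} mm/count")    # 0.0127 mm/count
    print(f"{rotary_resolution_deg(1250):.3f} deg/count")  # 0.072 deg/count
```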

IV. ELECTRICAL DESIGN

A. Piezoelectric Motors

Piezoelectric motors are among the most commonly utilized classes of actuators in MRI-guided devices, because they operate on the inverse piezoelectric effect and do not require the magnetic field that traditional electromagnetic motors rely on. In terms of driving signal, piezoelectric motors fall into two main categories: harmonic and non-harmonic. Both have been shown to cause interference within the scanner bore when driven by commercially available drive systems [7]. While these motors operate on similar basic principles, the signals required to utilize and control them effectively are quite different. Harmonic motors, such as Nanomotion motors (Nanomotion Ltd., Israel), are generally driven with fixed-frequency sinusoidal signals on two channels at 38–50 kHz, and velocity is controlled through amplitude modulation over 80–300 V RMS. Shinsei harmonic motors (Shinsei Corporation, Japan), however, are speed-controlled through frequency modulation. Non-harmonic motors, such as PiezoLegs motors, operate at a lower frequency (750–3000 Hz) than harmonic motors. These actuators require a complex-shaped waveform on four channels, generated with high precision at fixed amplitude (typically a low voltage of < 50 V), and speed is controlled by modulating the drive frequency.

B. Piezoelectric Motor Driver and Control

Although there have been efforts to shield motors (e.g., with RF shielding cloth) and to ground the shielded control cables (most recently by Krieger et al. [7]), the reported MRI compatibility results still show SNR reductions of up to 80%. Our prior study [19] found that the noise originates primarily from the driving signal rather than from the motor itself. Commercially available piezoelectric motor drivers typically use class-D style amplification, which generates the waveforms by low-pass filtering high-frequency square waves. Such switching drivers (the typical approach, since the manufacturers' primary design goal was power efficiency rather than noise reduction) introduce significant RF emissions and noise on the motor drive lines, which interfere with imaging as demonstrated in [19], [7], and many other works. Although filtering can improve the results, it has not been effective in eliminating the interference and often significantly degrades motor performance.

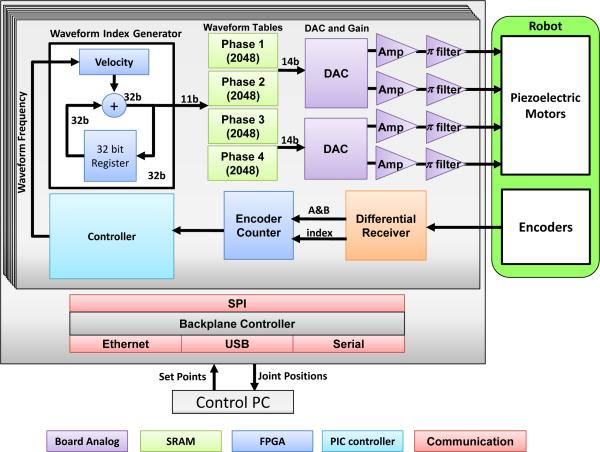

This manuscript describes our implementation of a piezoelectric motor driver whose signals are generated by a direct digital synthesizer (DDS), a high-performance multi-channel digital-to-analog converter (DAC), high-power linear amplifiers, and π-filtered outputs. In contrast to commercial drivers based on high-frequency switching voltage regulators, it can cleanly generate both high-voltage sinusoidal signals and precise low-voltage waveforms, so it can drive both harmonic (e.g., Shinsei and Nanomotion) and non-harmonic (e.g., PiezoLegs) commercial motors [24]. The driver, whose block diagram is shown in Fig. 5, is based on linear amplifiers with very high-speed digital-to-analog converters that allow precise control of the waveform shape while suppressing unwanted noise (both by defining a smooth waveform and through circuit design including isolation, filtering, and shielding), thus addressing the root cause of driving signal-induced image interference.

Fig. 5.

Block diagram of the system components of each piezoelectric motor driver board. An FPGA is configured as a waveform synthesizer generating the four phases of the drive signal which are passed to a linear amplifier stage with integrated filtering.

PiezoLegs motors were the actuators of choice in this robot and are therefore the focus of the detailed discussion and evaluation. Each motor consists of four quasi-static legs (A, B, C, and D) forming a stator; the legs alternately establish frictional contact with a ceramic drive that is preloaded by a beryllium copper leaf spring, and the motor is operated below its resonant frequency. Each bimorph leg consists of two electrically isolated piezoelectric stacks. A leg elongates when equal voltages are applied to its two stacks, and bends when different voltages are applied.
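An idealized linear model makes the elongation/bending distinction concrete: the common-mode voltage of the two stacks sets the elongation, and the differential voltage sets the bending. The gain values are hypothetical placeholders, and real bimorph legs exhibit hysteresis and nonlinear coupling that this sketch ignores.

```python
from typing import Tuple


def leg_deflection(v_stack1: float, v_stack2: float,
                   k_elong: float = 1.0, k_bend: float = 1.0) -> Tuple[float, float]:
    """Return (elongation, bending) of one bimorph leg under an idealized linear model.

    k_elong and k_bend are hypothetical gains (deflection per volt).
    """
    elongation = k_elong * (v_stack1 + v_stack2) / 2.0  # common-mode voltage
    bending = k_bend * (v_stack1 - v_stack2)            # differential voltage
    return elongation, bending


if __name__ == "__main__":
    print(leg_deflection(40.0, 40.0))  # (40.0, 0.0): equal voltages, pure elongation
    print(leg_deflection(40.0, 0.0))   # (20.0, 40.0): unequal voltages, the leg bends
```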

As shown in Fig. 5, the custom motor driver uses a microcontroller (PIC32MX460F512L, Microchip Tech.) and a field-programmable gate array (FPGA, Cyclone EP2C8Q208C8, Altera Corp.). The microcontroller is responsible for: 1) loading waveforms from the SD card into the FPGA's internal RAM; 2) communicating with the PC over Ethernet to receive position or velocity setpoints; and 3) running real-time position and velocity servo control loops. The microcontroller incorporates a joint-level controller that sets the output sample rate of the FPGA's waveform synthesizer. The FPGA is responsible for: 1) the DDS; 2) encoder decoding, where differential encoder signals pass through a differential receiver for improved noise robustness, are interpreted by the FPGA, and are fed to the on-board microcontroller; 3) over-heat and fault protection, using an internal temperature sensor in the motor amplifier (OPA549, Texas Instruments, USA) to manage thermal shutdown of the output; and 4) stall and limit detection. The control PC can communicate with the driver in three ways: Ethernet, Universal Serial Bus (USB), and serial communication; Ethernet is used in this application. The driver boards within the robot controller communicate with each other over a Serial Peripheral Interface (SPI) bus. As a safety mechanism during joint limit detection and the homing procedure, a watchdog detects motor stall after a 5 ms timeout.
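The stall watchdog can be sketched as follows: while a motor is being driven, a stall is flagged once the encoder count has not changed for the 5 ms timeout. This is a hypothetical host-side illustration with assumed names and millisecond timestamps; the actual logic runs in the driver firmware and FPGA, whose implementation is not described here.

```python
from typing import Optional

STALL_TIMEOUT_MS = 5.0


class StallWatchdog:
    """Flag a stall when a driven motor's encoder count stops changing."""

    def __init__(self, timeout_ms: float = STALL_TIMEOUT_MS) -> None:
        self.timeout_ms = timeout_ms
        self._last_count: Optional[int] = None
        self._last_change_ms = 0.0

    def update(self, now_ms: float, count: int, driving: bool) -> bool:
        """Feed one encoder sample; return True once the motor is considered stalled."""
        if not driving or count != self._last_count:
            # Motion (or the absence of a drive command) restarts the timeout window.
            self._last_count = count
            self._last_change_ms = now_ms
            return False
        return now_ms - self._last_change_ms >= self.timeout_ms


if __name__ == "__main__":
    wd = StallWatchdog()
    # Encoder stuck at 100 counts while driving, e.g. against a mechanical joint limit.
    for t in (0.0, 2.0, 4.0, 6.0):
        print(t, wd.update(t, count=100, driving=True))  # stalled only at t = 6.0
```

During homing, a stall reported while driving toward a hard stop is what identifies the joint limit.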

To drive the motor, the arbitrarily shaped waveforms provided by the manufacturer are resampled and loaded onto the SD card. One pair of legs (A and C) typically uses the same waveform, while the other pair (B and D) uses the same waveform with a 90° phase shift. The DDS on the FPGA generates these four waveforms, and the signal frequency is expressed as:

$$f_{out} = \frac{M \cdot F_{clock}}{2^{n}} \tag{1}$$

where M is the frequency tuning word from the microcontroller, n is the number of bits in the phase accumulator (32), and Fclock is the clock frequency (50 MHz). For a desired frequency, the corresponding M is calculated in the FPGA. A 32-bit register in the FPGA serves as the phase accumulator (blue block in Fig. 5) and produces the digital phase; its top 11 bits index the waveform table (a 2048-entry lookup table, green block in Fig. 5), which maps the phase to the corresponding waveform value.
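The phase-accumulator mechanism can be sketched in a few lines. The sketch models one accumulator step per synthesizer clock tick and inverts the DDS relation f_out = M · Fclock / 2^n to obtain the tuning word; the function names are hypothetical, and the 90° offset for legs B and D is expressed as a quarter of the 2048-entry table (512 entries). In the actual driver this arithmetic runs in the FPGA.

```python
from typing import List

F_CLOCK_HZ = 50_000_000           # synthesizer clock (50 MHz)
ACC_BITS = 32                     # phase accumulator width n
TABLE_BITS = 11                   # top 11 bits index the waveform table
TABLE_SIZE = 1 << TABLE_BITS      # 2048 entries
ACC_MASK = (1 << ACC_BITS) - 1
QUARTER_PERIOD = TABLE_SIZE // 4  # 90 degree offset = 512 table entries


def tuning_word(f_out_hz: float) -> int:
    """M = f_out * 2^n / F_clock, rounded to the nearest integer."""
    return round(f_out_hz * (1 << ACC_BITS) / F_CLOCK_HZ)


def output_frequency_hz(m: int) -> float:
    """f_out = M * F_clock / 2^n."""
    return m * F_CLOCK_HZ / (1 << ACC_BITS)


def table_indices(m: int, n_ticks: int, phase_offset: int = 0) -> List[int]:
    """Waveform-table addresses produced over n_ticks clock ticks."""
    acc = (phase_offset << (ACC_BITS - TABLE_BITS)) & ACC_MASK
    indices = []
    for _ in range(n_ticks):
        indices.append(acc >> (ACC_BITS - TABLE_BITS))  # top 11 bits of the phase
        acc = (acc + m) & ACC_MASK                      # wrap modulo 2^32
    return indices


if __name__ == "__main__":
    m = tuning_word(1000.0)  # a drive frequency within the PiezoLegs range
    print(m, round(output_frequency_hz(m), 4))
    # Legs A/C start at table entry 0; legs B/D start a quarter period later.
    print(table_indices(m, 1), table_indices(m, 1, QUARTER_PERIOD))
```

With a 32-bit accumulator at 50 MHz, the frequency resolution is Fclock / 2^32, roughly 0.012 Hz, far finer than the 750–3000 Hz drive range requires.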

The generated digital waveform is converted to an analog signal by a pair of two-channel high-speed DACs (DAC2904, Texas Instruments, USA), shown as the purple block in Fig. 5. The driver boards include a high-power output amplification module, which passes the signals from four linear amplifiers to the actuators through high-efficiency π filters that further remove high-frequency noise. This module includes three stages of linear amplifiers in sequence.

As shown in Equation 2, a variation of a discrete nonlinear proportional-integral-derivative (PID) controller is implemented in the microcontroller to control the motor motion. Because these piezoelectric motors have inherent braking, high bandwidth, and low inertia, derivative damping is typically not required for smooth motion without overshoot.

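$$
u(k) =
\begin{cases}
0, & |e(k)| \le d \\
\operatorname{sgn}\big(e(k)\big)\,\min\Big(\max\big(\big|k_p e(k) + k_i \sum_{j=0}^{k} e(j)\big|,\ f_{min}\big),\ f_{max}\Big), & d < |e(k)| \le l \\
\operatorname{sgn}\big(e(k)\big)\,u_{max}, & |e(k)| > l
\end{cases}
\tag{2}
$$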

where u is the control signal, e is the position error, and fmin and fmax are the minimum (750 Hz) and maximum (3 kHz) driving frequencies, respectively. kp and ki are the PI control gains; a derivative gain kd is implemented but set to zero, and is therefore omitted from this equation. d and l are the minimum and maximum position error thresholds for linear frequency control. The dead-band d is set to one encoder tick to eliminate chatter; it is implemented inside the FPGA running at 50 MHz and can stop the low-inertia motors at the setpoint almost instantaneously. umax is the saturation value that makes the motor run at the specified maximum velocity associated with fmax when the position error exceeds a predetermined value. To maintain high-speed motion without damaging the motor, the control frequency is bounded by a minimum speed (which should be reached at the target) and a maximum speed (which should never be exceeded). kp is tuned to be large so that the motor runs at the capped maximum speed until it is very close to the target.
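A minimal sketch of the controller described above follows, assuming the piecewise structure given in the text: zero output inside the dead-band d, a PI term whose magnitude is clamped to [fmin, fmax] between d and l, and saturation at the frequency associated with fmax beyond l. The sign of the position error sets the drive direction. The threshold l (linear_limit), the gains, resetting the integrator inside the dead-band, and pausing integration while saturated are all our assumptions; in the real system the dead-band is enforced in the FPGA rather than in this loop.

```python
from dataclasses import dataclass, field


@dataclass
class PiezoPIController:
    kp: float                     # proportional gain (Hz per encoder tick)
    ki: float                     # integral gain (Hz per accumulated tick)
    f_min: float = 750.0          # Hz, slowest drive frequency (reached near the target)
    f_max: float = 3000.0         # Hz, fastest drive frequency (u_max)
    deadband: int = 1             # d, in encoder ticks
    linear_limit: int = 200       # l, hypothetical threshold in encoder ticks
    integral: float = field(default=0.0, init=False)

    def update(self, error: int) -> float:
        """Return a signed drive frequency in Hz; 0.0 means stop (the motor brakes)."""
        if abs(error) <= self.deadband:
            self.integral = 0.0             # assumed: reset the integrator at the setpoint
            return 0.0
        direction = 1.0 if error > 0 else -1.0
        if abs(error) > self.linear_limit:
            return direction * self.f_max   # saturate; no integration (simple anti-windup)
        self.integral += error
        magnitude = abs(self.kp * error + self.ki * self.integral)
        return direction * min(max(magnitude, self.f_min), self.f_max)


if __name__ == "__main__":
    ctrl = PiezoPIController(kp=50.0, ki=0.0)
    for e in (1000, 30, 5, 1):
        print(e, ctrl.update(e))
```

With a large kp, the output stays at fmax until the error is small, then drops toward fmin just before the dead-band stops the motor, matching the tuning strategy described above.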

Fig. 6 shows the portable shielded aluminum robot controller enclosure, which houses the custom piezoelectric motor driver boards and is configured for up to 8-axis control. A key step in reducing image degradation from electrical noise is to encase all electronics in a continuous Faraday cage that blocks as much of the electromagnetic interference emitted by the equipment as possible. The cage is extended by the shielded cables that carry electrical signals out of the enclosure through serpentine waveguides on its vertical sides. Standard 120 V AC power is filtered and passed to a linear regulator with a 48 V output, which powers the piezoelectric motor drivers and additional regulators that generate lower voltages for the control electronics.

Fig. 6.

(Left) MRI robot controller enclosed inside a carry-on travel case. (Right) Piezoelectric motor controller. The red box shows the digital control section, with the microcontroller for closed-loop control and the FPGA for waveform synthesis. The blue box shows the high-speed parallel DACs and preamplifier circuits. The yellow box shows the high-power filtered linear amplifier stage.

V. REGISTRATION, CONTROL SOFTWARE, AND WORKFLOW

The primary function of the robot control interface software developed in this work is to enforce and integrate the system workflow, including fiducial registration, robot registration, kinematics and motion control. The software connects the navigation software to the robot controller and exchanges robot commands using the OpenIGTLink protocol. The three-dimensional surgical navigation software 3D Slicer serves as the user interface to visualize and define targets in image space; however, the modular system can readily be adapted to other platforms.

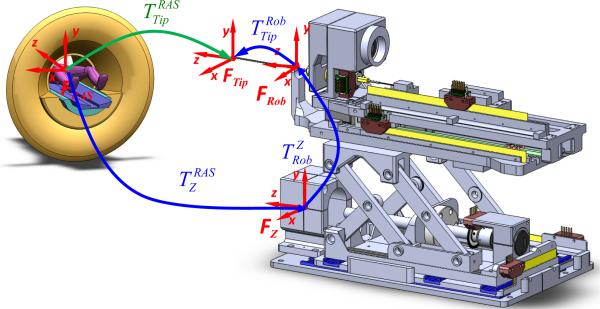

A. Surgical Navigation Coordinates

The robot is registered to the patient coordinate system (right-anterior-superior (RAS) coordinates) by imaging a fiducial frame (the Z-frame) attached to the robot, as shown in Fig. 7. Details of the multi-slice fiducial registration can be found in our recent work [29]; its evaluation of registration accuracy showed sub-pixel accuracy, with mean errors of 0.27 mm in translation and 0.16° in orientation.

Fig. 7.

Coordinate frames of the robotic system for registration of the robot to MR image space, robot kinematics and encoded needle insertion.

After the registration phase, the robot can accept target coordinates represented in RAS image coordinates.

The corresponding series of homogeneous transformations is used to determine the robot's tip location in MR image coordinates (i.e. RAS coordinates):

$${}^{RAS}T_{Tip} = {}^{RAS}T_{Z} \cdot {}^{Z}T_{G} \cdot {}^{G}T_{Tip} \tag{3}$$

where ${}^{RAS}T_{Tip}$ is the needle tip pose in the RAS patient coordinate system; ${}^{RAS}T_{Z}$ is the fiducial's 6-DOF pose in RAS coordinates, as determined by the Z-frame fiducial-based registration; ${}^{Z}T_{G}$ is the robot needle guide pose with respect to the robot base (coincident with the fiducial frame), as determined from the forward kinematics of the robot defined in Section V-B; and ${}^{G}T_{Tip}$ is the needle tip position with respect to the front face of the needle guide (typically at the skin entry point), as measured by the optical encoder along the needle insertion axis.
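The chain in Equation (3) amounts to multiplying three homogeneous matrices: the fiducial registration, the forward-kinematics pose of the needle guide, and the encoder-measured insertion. The sketch below assumes, hypothetically, that the needle insertion axis is the z axis of the needle guide frame, so the encoder reading enters as a pure translation; matrices are plain nested lists to keep the example dependency-free.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def matmul4(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    """Homogeneous transform that translates by (x, y, z)."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def needle_tip_ras(t_ras_z: Matrix, t_z_g: Matrix, insertion_mm: float) -> List[float]:
    """Needle tip position in RAS: registration x guide pose x insertion offset."""
    t_g_tip = translation(0.0, 0.0, insertion_mm)  # assumed insertion axis: guide z
    t_ras_tip = matmul4(matmul4(t_ras_z, t_z_g), t_g_tip)
    return [t_ras_tip[0][3], t_ras_tip[1][3], t_ras_tip[2][3]]


if __name__ == "__main__":
    t_ras_z = translation(10.0, 20.0, 30.0)  # hypothetical registration result
    t_z_g = translation(1.0, 2.0, 3.0)       # hypothetical forward-kinematics pose
    print(needle_tip_ras(t_ras_z, t_z_g, 50.0))  # [11.0, 22.0, 83.0]
```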

B. Robot Kinematics

Using this coordinate relation, the robot kinematics can be substituted into the kinematic chain to calculate the needle tip position and orientation. Vertical motion of the robot is provided by actuating the scissor mechanism: a linear motion of its lead screw in the Superior-Inferior (SI) direction produces motion in the Anterior-Posterior (AP) direction. The forward kinematics of the robot with respect to the fiducial frame is defined as:

| (4) |

where the horizontal linear motion of the lead screw due to the rotary motor motion is q2·p/360°, with p the lead screw pitch. q1, q2, q3, and q4 are the joint space motions of the x-axis motor translation (mm), the y-axis rotary motor rotation (degrees), the z-axis motor translation (mm), and the needle driver insertion translation (mm), respectively. L is the length of the scissor bar. The three offset terms in Equation 4 correspond to the homogeneous transformation matrix in Equation 3. q4 corresponds to the transformation of the needle tip with respect to the needle guide, and the remainder of Equation 4 corresponds to the transformation of the needle guide with respect to the fiducial frame.
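The lead-screw term is a direct unit conversion, sketched below under the assumption of a single-start screw (lead equal to the 2 mm pitch). If the 0.072°/count rotary encoder is the one on this axis (an assumption), it also gives the horizontal screw travel per encoder count. The mapping from this travel to vertical motion through the scissor bars of length L is not reproduced here.

```python
LEAD_SCREW_PITCH_MM = 2.0      # vertical-mechanism lead screw pitch
ROTARY_DEG_PER_COUNT = 0.072   # rotary encoder resolution


def screw_travel_mm(q2_deg: float, pitch_mm: float = LEAD_SCREW_PITCH_MM) -> float:
    """Horizontal nut travel for a motor rotation of q2_deg degrees (single-start screw)."""
    return q2_deg / 360.0 * pitch_mm


if __name__ == "__main__":
    print(screw_travel_mm(360.0))                           # 2.0 mm per revolution
    print(f"{screw_travel_mm(ROTARY_DEG_PER_COUNT):.4f}")  # 0.0004 mm per encoder count
```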

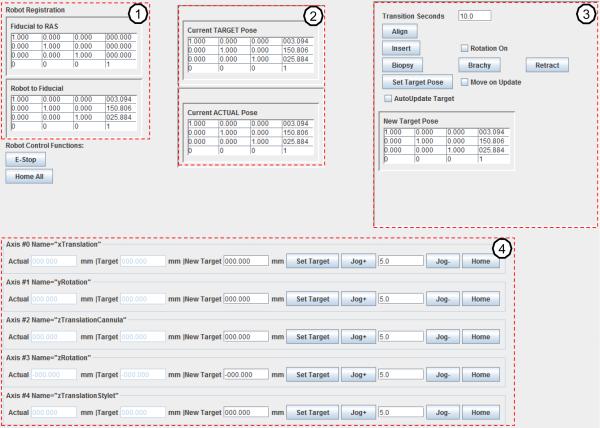

C. Control Software for Communication and Kinematics

The control software is transparent to the clinician and handles communication and the kinematics computations described in the previous subsections. A robot kinematics and OpenIGTLink communication library was developed in Java, with a graphical user interface (GUI) that coordinates system input, communication, and procedure workflow, while the clinician interacts with the higher-level navigation software (e.g., 3D Slicer). As shown in Fig. 8, the technical/engineering GUI of the robot control application, built on the Java library, includes four major modules: 1) the robot registration and calibration module (upper left column), which displays the registration matrix and matrix ; 2) the upper middle column, which displays the current target and the current actual 6-DOF pose as homogeneous transformation matrices; 3) the upper right column, which contains a homogeneous transformation representing the desired new target, along with buttons to control biopsy or brachytherapy procedure steps; and 4) the bottom row, the joint space panel, which is generated automatically for a given robot mechanism configuration from an Extensible Markup Language (XML) configuration file and shows, for each joint, its name, actual position, desired position, and motor jogging and home buttons.
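To illustrate how a joint space panel could be generated from a configuration file, the sketch below parses a small XML description with Python's standard xml.etree.ElementTree and creates one row per joint holding the actual and desired positions. The element and attribute names, joint names, and units are hypothetical; the schema actually used by the Java application is not given here.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List

# Hypothetical configuration for the 6-DOF robot.
CONFIG_XML = """
<robot name="prostate_robot">
  <joint name="x_translation" unit="mm"/>
  <joint name="y_lead_screw" unit="deg"/>
  <joint name="z_translation" unit="mm"/>
  <joint name="insertion" unit="mm"/>
  <joint name="needle_rotation" unit="deg"/>
  <joint name="stylet" unit="mm"/>
</robot>
"""


@dataclass
class JointRow:
    """One row of the joint space panel (jog and home buttons would attach here)."""
    name: str
    unit: str
    actual: float = 0.0
    desired: float = 0.0


def build_joint_panel(xml_text: str) -> List[JointRow]:
    """Create one panel row per <joint> element, in document order."""
    root = ET.fromstring(xml_text.strip())
    return [JointRow(j.get("name", "?"), j.get("unit", "")) for j in root.iter("joint")]


if __name__ == "__main__":
    for row in build_joint_panel(CONFIG_XML):
        print(f"{row.name:16s} actual={row.actual:7.2f} desired={row.desired:7.2f} {row.unit}")
```

Driving the panel from configuration rather than code is what lets the same GUI follow a change in the mechanism, such as adding or removing an axis.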

Fig. 8.

The GUI of the robot control software running on the control computer in the control room. The upper left column (1) shows robot registration and calibration, the upper middle column (2) displays the current target point transformation and the current actual robot 6-DOF pose, the upper right column (3) contains the desired target transformation and buttons to control biopsy or brachytherapy procedures, and the bottom row (4) is the joint space panel.

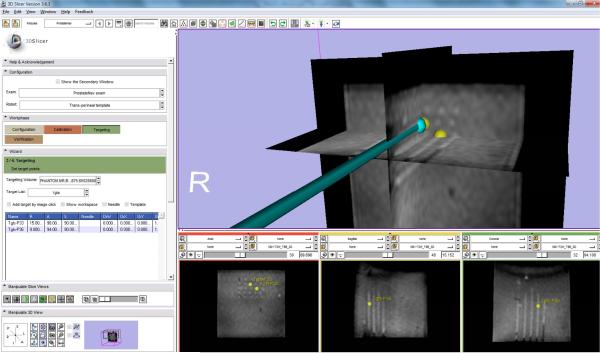

D. Clinical Software and Workflow

On top of the control software, a clinical software module was developed in 3D Slicer to manage the clinical workflow and communicate with the robot and the imaging workstation. The module displays a virtual needle whose pose is received from the robot through OpenIGTLink and overlaid on the MRI volume to ensure placement safety and confirm placement accuracy, as shown in Fig. 9, where the yellow spheres indicate the selected targets inside the MRI volume in RAS (Right-Anterior-Superior) coordinates.
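To illustrate what streaming a pose over OpenIGTLink involves, the sketch below packs a 4×4 pose into a version-1 TRANSFORM message following our reading of the public protocol description: a 58-byte big-endian header (version, type name, device name, timestamp, body size, CRC-64) followed by twelve 32-bit floats (the three rotation columns, then the translation). The device name `NeedleTip` is hypothetical, the timestamp is left at zero, and the CRC is assumed to be CRC-64/ECMA-182; this hand-rolled encoder is for explanation only, and a real system would use the official OpenIGTLink library, as the authors' Java implementation presumably does.

```python
import struct
from typing import Sequence, Tuple

# Header: version, type name, device name, timestamp, body size, CRC (58 bytes, big-endian).
IGTL_HEADER = struct.Struct(">H12s20sQQQ")
CRC64_POLY = 0x42F0E1EBA9EA3693  # ECMA-182 polynomial
MASK64 = (1 << 64) - 1


def crc64_ecma(data: bytes) -> int:
    """Bitwise, non-reflected CRC-64/ECMA-182 (initial value 0, no final XOR)."""
    crc = 0
    for byte in data:
        crc ^= byte << 56
        for _ in range(8):
            if crc & (1 << 63):
                crc = ((crc << 1) ^ CRC64_POLY) & MASK64
            else:
                crc = (crc << 1) & MASK64
    return crc


def pack_transform(device: str, pose: Sequence[Sequence[float]], timestamp: int = 0) -> bytes:
    """Encode a 4x4 homogeneous pose (row-major nested lists) as a TRANSFORM message."""
    rotation_cols = [pose[i][j] for j in range(3) for i in range(3)]  # R11 R21 R31 R12 ...
    translation = [pose[i][3] for i in range(3)]                       # TX TY TZ
    body = struct.pack(">12f", *rotation_cols, *translation)
    header = IGTL_HEADER.pack(1, b"TRANSFORM", device.encode("ascii"),
                              timestamp, len(body), crc64_ecma(body))
    return header + body


def unpack_transform(message: bytes) -> Tuple[str, str, Tuple[float, ...]]:
    """Decode type, device name and the 12 floats, checking body size and CRC."""
    _version, mtype, device, _ts, size, crc = IGTL_HEADER.unpack_from(message)
    body = message[IGTL_HEADER.size:IGTL_HEADER.size + size]
    if len(body) != size or crc64_ecma(body) != crc:
        raise ValueError("corrupt OpenIGTLink message")
    values = struct.unpack(">12f", body)
    return mtype.rstrip(b"\0").decode(), device.rstrip(b"\0").decode(), values
```

Because the translation is stored as 32-bit floats, sub-micrometer pose detail is not preserved, which is far below the millimeter-scale accuracy discussed in this paper.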

Fig. 9.

The clinical GUI integrated in 3D Slicer shows a virtual needle, whose pose is streamed from the control software, being inserted toward the selected targets (shown as yellow spheres) during the needle placement accuracy evaluation. The needle tracks are visualized inside the MRI volume. The first column of the bottom sub-figures shows the 5 × 5 needle tracks.

The workflow mimics that of traditional TRUS-guided prostate needle insertions. The components of the control software follow this workflow and support the following five phases:

1) System Initialization

The hardware and software system is initialized. In this phase, the operator prepares the robot by connecting the robot controller and attaching the sterilized needle and needle-mounting components to the robot. The robot is calibrated to a predefined home position, and the robot configuration is loaded from the XML file.

2) Planning

Pre-operative MR images are loaded into 3D Slicer and targets are selected or imported.

3) Registration

A series of transverse images of the fiducial frame are acquired. Multiple images are used to perform multi-slice registration to enhance system accuracy.

4) Targeting

The needle target is selected in 3D Slicer and the desired position is transmitted to the robot control software, which solves the inverse kinematics; the calculated joint commands drive the piezoelectric motors. Targets may also be entered or adjusted directly in the robot control interface software. Real-time MR images can be acquired during insertion, enabling visualization of the tool path in MRI with the reported robot position overlaid.
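Before inverse kinematics can run, a target picked in 3D Slicer's RAS image coordinates has to be expressed in the robot's own frame using the registration result; applying the same transform in the forward direction maps the encoder-derived tip position back to RAS for the verification phase. The sketch assumes the registration is a rigid 4×4 matrix that maps robot-frame points into RAS; the function names are hypothetical, and the subsequent joint solve of Equation 4 is not reproduced.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def invert_rigid(t: Sequence[Sequence[float]]) -> Matrix:
    """Invert a rigid 4x4 transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]  # rotation transposed
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(t: Sequence[Sequence[float]], point: Sequence[float]) -> List[float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    return [sum(t[i][k] * point[k] for k in range(3)) + t[i][3] for i in range(3)]


def target_in_robot_frame(t_ras_from_robot, target_ras):
    """Targeting phase: RAS target -> robot frame, ready for inverse kinematics."""
    return transform_point(invert_rigid(t_ras_from_robot), target_ras)


def tip_in_ras(t_ras_from_robot, tip_robot):
    """Verification phase: forward-kinematics tip position -> RAS for display."""
    return transform_point(t_ras_from_robot, tip_robot)
```

Using the closed-form rigid inverse (transpose the rotation, rotate and negate the translation) avoids a general matrix inversion and keeps the result exactly rigid.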

5) Verification

The robot forward kinematics calculates the actual needle tip position (from encoder measurements and registration results), which is displayed in 3D Slicer. Post-insertion MR images are acquired and displayed with the target and actual robot position overlaid.

VI. EXPERIMENTS AND RESULTS

To demonstrate compatibility with the MRI environment and accuracy of the robotic system, comprehensive MRI phantom experiments were performed. Imaging quality and needle placement accuracy were systematically analyzed.

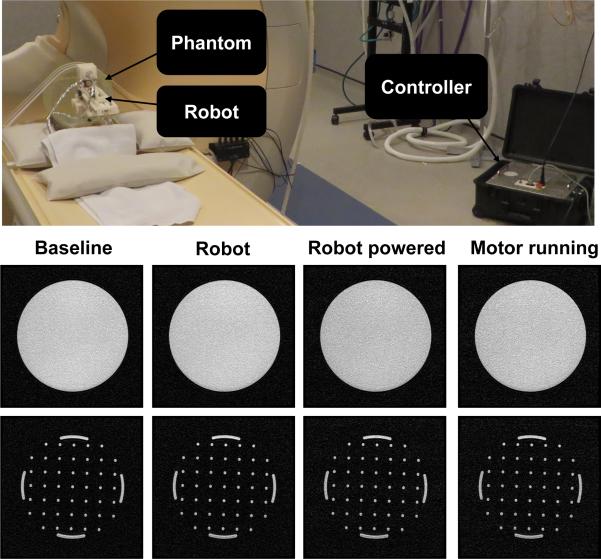

A. MR Image Quality Evaluation

We evaluated the compatibility of the needle placement robot in a Philips Achieva 3-Tesla system. The evaluation includes two assessments to quantify image quality: 1) signal-to-noise ratio (SNR) analysis based on the National Electrical Manufacturers Association (NEMA) standard MS1-2008 and 2) image deterioration factor analysis based on the method in [30]. The analysis used a Periodic Image Quality Test (PIQT) phantom (Philips, Netherlands) with complex geometric features, including a uniform cylindrical cross section and an arch/pin section. The robot was placed in close proximity (5 mm) to the phantom, and the controller was placed approximately 2 meters from the scanner bore inside the scanner room. Fig. 10 (first row) shows the system setup.

Fig. 10.

(First row) The robot prototype configuration in a 3-Tesla MRI scanner with a feature-rich PIQT phantom for the evaluation studies. (Second row) Representative T1-weighted fast field echo images of the cylindrical cross section for each of the four configurations. (Third row) Corresponding T1-weighted fast field echo images of the arch/pin cross sections.

The image quality analysis was performed with the four imaging protocols listed in Table I (the first three were used for quantitative analysis with a phantom and the fourth for qualitative assessment with a human volunteer): 1) diagnostic T1-weighted fast field echo (T1W-FFE), 2) diagnostic T2-weighted turbo spin echo for the initial surgical planning scan (T2W-TSE-Planning), 3) diagnostic T2-weighted turbo spin echo for needle confirmation (T2W-TSE-Diagnosis), and 4) a standard T2-weighted prostate imaging sequence (T2W-TSE-Prostate). All sequences were acquired with a 256 mm × 256 mm field of view (FOV), a 512 × 512 image matrix and a 0.5 mm × 0.5 mm pixel size.

TABLE I.

SCAN PARAMETERS FOR COMPATIBILITY EVALUATION

| Protocol | TE (ms) | TR (ms) | Flip angle (deg) | Slice thickness (mm) | Bandwidth (Hz/pixel) |
|---|---|---|---|---|---|
| T1W-FFE | 2.3 | 225 | 75 | 2 | 1314 |
| T2W-TSE-Planning | 90 | 4800 | 90 | 3 | 239 |
| T2W-TSE-Diagnosis | 115 | 3030 | 90 | 3 | 271 |
| T2W-TSE-Prostate | 104 | 4800 | 90 | 3 | 184 |

Four configurations were evaluated for SNR analysis: 1) baseline with the phantom only, 2) robot present but controller and robot powered off (controller power is the supply from the AC socket, and robot power is controlled by the emergency stop), 3) controller and robot powered, and 4) motors powered and robot in motion during imaging. Fig. 10 (second row) shows representative T1W-FFE images of the SNR test in the different configurations.

The following imaging sets were acquired twice for each imaging sequence in the Philips 3-Tesla scanner to calculate the image deterioration factors:

Baseline (BL1 & BL2): baseline image of the phantom without the robot.

Robot Present (RO1 & RO2): image of the phantom with the robot in place, powered off.

Robot Motion (RM1 & RM2): image of the phantom with the robot in motion.

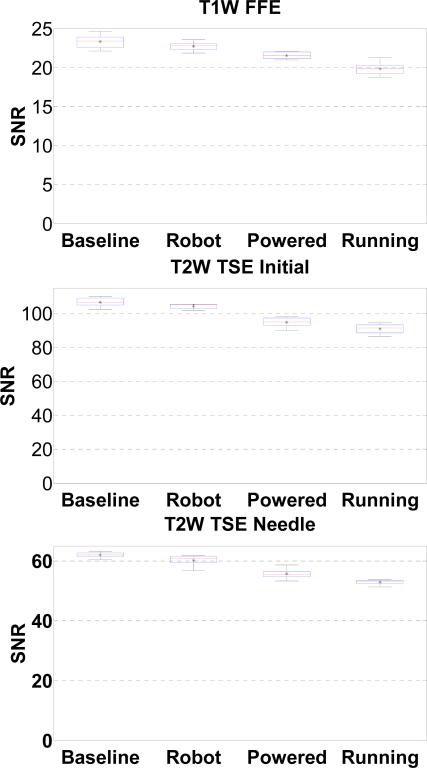

For statistical analysis, SNR based on the NEMA standard definition is used as the metric for evaluating MR image quality. SNR was calculated as the mean signal in the center of the phantom divided by the noise outside the phantom. The mean signal is defined as the mean pixel intensity in the region of interest, and the noise is defined as the average of the mean signal intensities in the four corners divided by 1.25 [31]. In Fig. 11, the boxplot shows the variation in SNR for images taken in each configuration for the three imaging sequences. The change in mean SNR is shown in Table II.
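The SNR definition above translates directly into a few lines of code. This sketch uses square regions of interest whose sizes (`signal_size`, `corner_size`) are hypothetical choices rather than the NEMA MS1-2008 prescriptions, and represents an image as a plain list of rows. The 1.25 divisor is commonly explained as the ratio between the mean background magnitude and the underlying noise standard deviation for Rayleigh-distributed magnitude noise (approximately sqrt(π/2)).

```python
from statistics import fmean
from typing import List

Image = List[List[float]]


def roi_mean(img: Image, row0: int, col0: int, size: int) -> float:
    """Mean pixel intensity of a size x size square ROI with top-left corner (row0, col0)."""
    return fmean(img[r][c] for r in range(row0, row0 + size) for c in range(col0, col0 + size))


def nema_style_snr(img: Image, signal_size: int, corner_size: int) -> float:
    """SNR = central-ROI mean / (mean of the four corner-ROI means / 1.25)."""
    rows, cols = len(img), len(img[0])
    signal = roi_mean(img, (rows - signal_size) // 2, (cols - signal_size) // 2, signal_size)
    k = corner_size
    corners = [
        roi_mean(img, 0, 0, k),
        roi_mean(img, 0, cols - k, k),
        roi_mean(img, rows - k, 0, k),
        roi_mean(img, rows - k, cols - k, k),
    ]
    noise = fmean(corners) / 1.25
    return signal / noise


def snr_reduction_percent(baseline: float, value: float) -> float:
    """Percent drop in mean SNR relative to the baseline configuration."""
    return 100.0 * (baseline - value) / baseline
```

As a consistency check, `snr_reduction_percent(106.69, 90.96)` evaluates to about 14.74, matching the T2W-TSE-Planning "Motor Running" entry of Table II, which suggests the table reports reductions relative to baseline.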

Fig. 11.

Boxplot of the SNR for four robot configurations under three scan protocols. The “*” represents the mean SNR, the horizontal line in the middle of the box represents the median, the top and bottom represent the quartiles, and the whiskers represent the limits.

TABLE II.

MEAN SNR CHANGE IN DIFFERENT CONFIGURATIONS

| Protocol | Baseline | Robot Present (% Change) | Powered (% Change) | Motor Running (% Change) |
|---|---|---|---|---|
| T1W-FFE | 23.32 | 22.73 (2.79%) | 21.54 (7.63%) | 19.86 (14.87%) |
| T2W-TSE-Planning | 106.69 | 104.27 (2.27%) | 94.80 (11.14%) | 90.96 (14.74%) |
| T2W-TSE-Diagnosis | 61.98 | 60.19 (2.89%) | 55.65 (10.23%) | 52.91 (14.64%) |

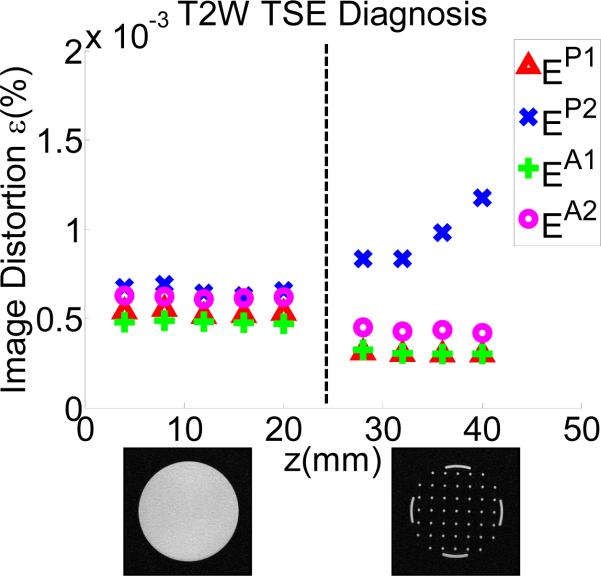

Although the SNR analysis shows promising results, it cannot fully characterize image deterioration. Therefore, image deterioration analysis [30] was conducted to establish a quantitative metric of image deterioration due to the presence of the robot in the imaging field (EP, passive test) and due to robot motion (EA, active test). As defined in [30], EP ≤ 2% and EA ≤ 1% are associated with unobservable image interference. Following the notation of [30], T denotes the passive or active configuration set. The error εT1 reflects the normal level of noise from the imaging system itself. The factors EP and EA indicate the image deterioration due to materials in the passive test (P) and due to electronics in the active test (A), respectively. Similar to [10], the ε values are defined as follows:

εP1 reflects differences between two sets taken without the robot (BL1-BL2).

εP2 reflects differences between two sets taken without and with the robot (BL1-RO1), a representation of the materials-induced image deterioration.

εA1 reflects differences between two sets taken with the robot present but not in motion (RO1-RO2).

εA2 reflects differences between two sets taken with the robot present (no motion) and with robot motion (RO1-RM1), a representation of the electronics-induced image deterioration.

Fig. 12 plots these parameters for one representative protocol (T2W-TSE-Diagnosis). The average difference between εP2 and εP1 gives the passive image deterioration factor EP, and the average difference between εA2 and εA1 gives the active factor EA. Their experimental values are shown in Table III.
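The averaging step can be sketched as below. The text defines EP and EA as averaged differences between ε values but does not restate how each ε is computed, so this sketch assumes ε is a normalized absolute difference between two co-registered images (sum of |A − B| over sum of |A|); the exact definition should be taken from [30] before reuse. The factor is the per-slice mean of ε2 − ε1, in percent, checked against the EP ≤ 2% and EA ≤ 1% thresholds.

```python
from statistics import fmean
from typing import List, Sequence

Image = List[List[float]]


def epsilon(a: Image, b: Image) -> float:
    """Assumed ε: normalized absolute difference between two co-registered images."""
    diff = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    ref = sum(abs(x) for row in a for x in row)
    return diff / ref


def deterioration_factor(eps1: Sequence[float], eps2: Sequence[float]) -> float:
    """Mean over slices of (ε2 - ε1), expressed in percent (EP or EA)."""
    if len(eps1) != len(eps2) or not eps1:
        raise ValueError("need matching, non-empty per-slice ε lists")
    return 100.0 * fmean(e2 - e1 for e1, e2 in zip(eps1, eps2))


def unobservable(e_passive: float, e_active: float) -> bool:
    """Thresholds quoted from [30]: EP <= 2 % and EA <= 1 %."""
    return e_passive <= 2.0 and e_active <= 1.0
```

For the passive test, `eps1` would hold per-slice ε(BL1, BL2) and `eps2` per-slice ε(BL1, RO1); the active test uses ε(RO1, RO2) and ε(RO1, RM1) in the same way.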

Fig. 12.

Passive (P) and active (A) image deterioration factor ε variation due to the presence and motion of the robot.

TABLE III.

DETERIORATION FACTOR FOR PASSIVE AND ACTIVE TESTS

| Protocol | EP (%) | EA (%) |
|---|---|---|
| T1W-FFE | 0.000015 | 0.00012 |
| T2W-TSE-Planning | 0.00139 | 0.00019 |
| T2W-TSE-Diagnosis | 0.00036 | 0.00013 |

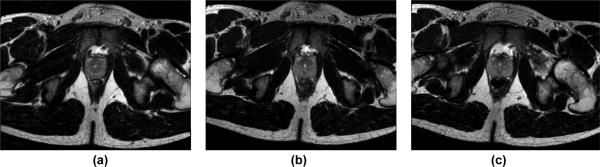

Based on the promising quantitative analysis, the robotic system was further evaluated with human prostate anatomy. A healthy volunteer lay in the semilithotomy position and was imaged with the T2W-TSE-Prostate protocol in Table I. Fig. 13 depicts the MR images under each condition: baseline without the robot, controller present and powered off, and robot running.

Fig. 13.

MR images of the human prostate under different conditions: baseline without the robot (a), controller present and unpowered (b), and robot running (c).

The major observations from these two quantitative evaluations and one qualitative evaluation include:

The different imaging sequences showed similar SNR reduction behavior, as was also observed in [7];

The presence of the robot with power off introduces 2.79%, 2.27% and 2.89% SNR reduction for T1W-FFE, T2W-TSE-Planning and T2W-TSE-Diagnosis, respectively. This reflects the material-induced SNR reduction;

The SNR reduction with the robot powered but not running (7.63%, 11.14% and 10.23% for the three sequences) is smaller than that with the motors running (14.87%, 14.74% and 14.64%). This reflects the SNR reduction caused by electrical noise. In comparison, in the study by Krieger et al. [7], the mean SNR during T1 imaging dropped from approximately 250 at baseline to 50 with robot motion (80%), while it changed from 380 to 70 (72%). Our system shows a significant improvement over [7], which used commercial motor drivers, when the robot is in motion. As shown in Figs. 10 and 13, there is no readily visible difference between configurations;

The deterioration factor shows unobservable interference for all protocols in all configurations tested, according to the requirement EP ≤ 2% and EA ≤ 1% [30].

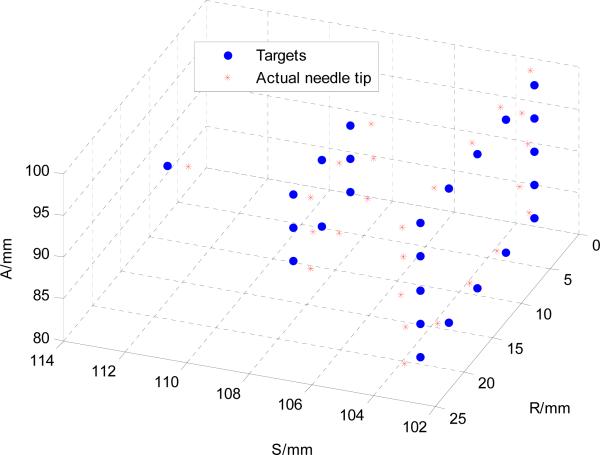

B. Phantom-based Accuracy Evaluation of Multiple Needle Placements in MRI

Joint-space accuracy was evaluated in [32], demonstrating an average tracking accuracy of 0.03 mm with the piezoelectric motors. The robot was further evaluated in a phantom study under MR imaging. The phantom was made of a muscle-like ballistic test medium (Corbin Inc., USA); this rubber-like material was molded into a 10 cm × 10 cm × 15 cm rectangular block. The phantom was placed inside a standard flex imaging coil, and the robot was initialized to its home position in front of the phantom, similar to the setup shown in Fig. 10.

The goal of this trial was to assess the robot's inherent accuracy; therefore, the authors strove to limit the paramagnetic artifacts of the needles, which would affect the assessment of the robot's accuracy. Clinical MRI needles are typically made of titanium, which still produces a needle track artifact larger than the actual needle size. Therefore, 18-gauge ceramic needles were inserted into a gelatin phantom to assess the robot instrument tip position. The ceramic needles were custom made from ceramic rods (Ortech Ceramics, USA), and the tip was ground to a symmetric diamond shape with a 60° cone. In clinical procedures, 18-gauge needles made of low-artifact titanium (Invivo Corp, USA) are typically used.

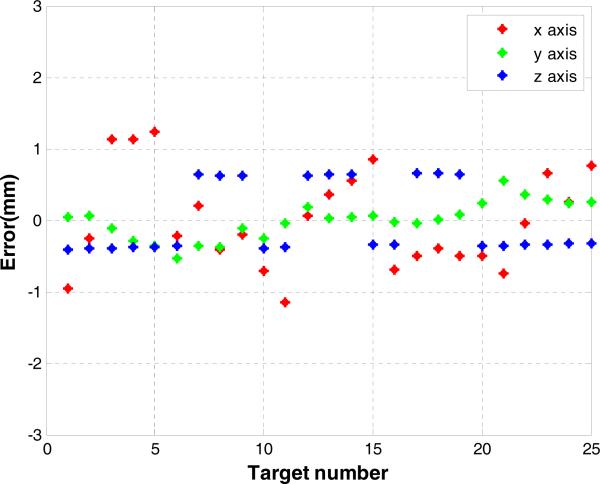

The T2W-TSE-Diagnosis protocol was used to visualize the needle insertion trajectory. Twenty-five needle placement targets (virtual targets specified in the MRI volume) forming a semi-ellipsoid were selected from the MRI volume, as shown in Fig. 14. The grid spacing in the transverse plane is 5 mm, mimicking the mechanical template used in TRUS procedures. Needles were inserted without needle rotation to demonstrate the robot accuracy independently of additional control methods that could further improve accuracy.

Fig. 14.

Twenty-five targets forming a semi-ellipse to simulate the superior part of a prostate were selected from the MRI volume. The actual needle tip positions determined from the segmented MR images are superimposed on the 25 targets.

Needle placement accuracy was evaluated along each axis, as shown in Fig. 15. The actual needle tip positions were manually segmented from the post-insertion MRI volume images, and the desired targets and the needle tip positions were aligned using point-cloud-to-point-cloud registration. The maximum error is less than 1.2 mm, as shown in Fig. 15, indicating consistent accuracy of the image-guided robotic system. The RMS error, calculated from the 3D Euclidean distance between the desired and actual needle tip positions, is 0.87 mm with a standard deviation of 0.24 mm, which is on par with the MR image resolution.
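The accuracy summary reduces to a few statistics over per-target errors. The sketch assumes targets and segmented tips are already expressed in a common frame (the point-cloud registration step is omitted) and uses the sample standard deviation, since the text does not say which variant was used; the function names are hypothetical.

```python
import math
from statistics import stdev
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def placement_errors(targets: Sequence[Point], tips: Sequence[Point]) -> list:
    """3D Euclidean distance between each desired target and its segmented tip."""
    if len(targets) != len(tips):
        raise ValueError("each target needs exactly one tip position")
    return [math.dist(t, p) for t, p in zip(targets, tips)]


def rms(values: Sequence[float]) -> float:
    """Root mean square of a sequence of values."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def summarize(targets: Sequence[Point], tips: Sequence[Point]) -> Dict[str, object]:
    """RMS, sample std and max of the 3D errors, plus per-axis RMS components."""
    errors = placement_errors(targets, tips)
    per_axis = tuple(rms([p[i] - t[i] for t, p in zip(targets, tips)]) for i in range(3))
    return {"rms": rms(errors), "std": stdev(errors), "max": max(errors), "per_axis_rms": per_axis}
```

Note that the RMS of the 3D errors equals the square root of the sum of the squared per-axis RMS values, so the x-y-z breakdown in Fig. 15 and the single 3D figure are two views of the same data.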

Fig. 15.

Needle placement errors of the 25 targeted needle placements along the x, y and z axes; the 3D Euclidean distance RMS error is 0.87 mm.

VII. DISCUSSION AND CONCLUSION

This paper presents a fully-actuated robotic system for MRI-guided prostate interventions using piezoelectric actuation. In this work, we developed and evaluated an integrated modular hardware and software system to support the surgical workflow of intra-operative MRI, with percutaneous prostate intervention as an illustrative case. Extensive compatibility experiments were conducted to quantitatively evaluate the SNR reduction and image deterioration factor, and to qualitatively assess the image quality of human prostate tissue. Because the latest ASTM F2503-13 standard considers only items made entirely of non-conductive components to be “MR Safe,” this system (based on piezoelectric actuation, which still relies on electrical currents and wires) has been demonstrated to be “MR Conditional,” which indicates that it is safe in the intended configuration. The SNR and image deterioration analyses with the complex phantom demonstrate that this robotic system causes no major image artifacts or distortion, paving the way toward regulatory clearance.

Phantom experiments validated the capability of the system to perform actuated prostate biopsy needle placement, with a spatial accuracy of 0.87 mm ± 0.24 mm. For comparison with pneumatic actuation, Yang et al. [15] reported 2.5–5 mm oscillations for each axis with sliding mode control, and our pneumatic robotic system with sliding mode control [11] demonstrated 0.94 mm RMS accuracy for a single axis. Piezoelectric actuation thus demonstrates a clear advantage over its pneumatic counterpart. Since this motor is capable of micrometer-level accuracy, encoder resolution is the effective limit; for our application, 0.03 mm single-joint motion accuracy is more than sufficient. In general, needle placement error arises from two sources: the needle and the robotic system itself. Even though our transperineal approach with MRI guidance avoids the prostate motion and deformation induced by a TRUS probe, needle-related error includes prostate translation, rotation and deformation during needle insertion, needle deflection due to needle-tissue interaction, and susceptibility artifact shift caused by the titanium or nickel-titanium needle material. Robot-related error includes fiducial registration error and intrinsic manipulator error due to motor positioning accuracy, structural flexibility, and misalignment. In this paper, we aimed to isolate the targeting accuracy of the robotic system by using virtual targets and ceramic needles. The error in this experiment therefore reflects the accumulated errors due to robot positioning limitations, image-based registration and needle deflection, excluding errors due to susceptibility artifact shift or organ motion.

Future work will address these issues, including using more rigid materials (e.g. Ultem and PEEK rather than ABS and acrylic), rotating the needle during insertion to decrease deflection, and modeling the susceptibility artifact. Because the 3D position of the needle is known, the MRI scan plane could be adjusted dynamically to visualize the needle insertion process, which could be used to track the needle trajectory and increase safety and accuracy.

Acknowledgments

This work is supported in part by the Congressionally Directed Medical Research Programs Prostate Cancer Research Program (PCRP) New Investigator Award W81XWH-09-1-0191, NIH 1R01CA111288-01A1, P41EB015898, R01CA111288, Link Foundation Fellowship, and Dr. Richard Schlesinger Award from American Society for Quality (ASQ). The content is solely the responsibility of the authors and does not necessarily represent the official views of the PCRP, NIH or ASQ. Nobuhiko Hata is a member of the Board of Directors of AZE Technology, Cambridge, MA and has an equity interest in the company. His interests were reviewed and are managed by BWH and Partners HealthCare in accordance with their conflict of interest policies.

Biography

Hao Su received the B.S. degree from the Harbin Institute of Technology, the M.S. degree in Mechanical and Aerospace Engineering from the State University of New York at Buffalo, and the Ph.D. degree in Mechanical Engineering from Worcester Polytechnic Institute. He received the Best Medical Robotics Paper Finalist Award at the IEEE International Conference on Robotics and Automation. He was a recipient of the Link Foundation Fellowship and the Dr. Richard Schlesinger Award from the American Society for Quality. He is currently a research scientist at Philips Research North America.


Weijian Shang received his B.S. degree in Precision Instruments and Mechanology from Tsinghua University in Beijing, China in 2009. He also received his M.S. degree in Mechanical Engineering from Worcester Polytechnic Institute in 2012. He is currently working towards his Ph.D. degree in Mechanical Engineering at Worcester Polytechnic Institute.


Gregory Cole received his B.S. and M.S. degrees in Mechanical Engineering from Worcester Polytechnic Institute, and he received his Ph.D. degree in Robotic Engineering in 2013. He is currently a research scientist at Philips Research North America.


Gang Li received the B.S. degree and M.S. degree in Mechanical Engineering from the Harbin Institute of Technology, Harbin, China, in 2008 and 2011, respectively. He is currently a Doctoral Candidate in the Department of Mechanical Engineering at Worcester Polytechnic Institute, Worcester, MA.


Kevin Harrington received his B.S. degree in Robotic Engineering from Worcester Polytechnic Institute, Worcester, MA.


Alex Camilo received the B.S. degree in Electrical and Computer Engineering from Worcester Polytechnic Institute, Worcester, MA.


Junichi Tokuda received the B.S. degree in engineering, the M.S. degree in information science and technology, and the Ph.D. degree in information science and technology in 2002, 2004, and 2007, respectively, all from the University of Tokyo, Tokyo, Japan. He is currently an Assistant Professor of Radiology at Brigham and Women's Hospital and Harvard Medical School, Boston, MA.


Clare M. Tempany received the MB BAO, BCH degrees from the Royal College of Surgeons, Ireland. She is currently the Ferenc Jolesz Chair and the Vice-Chair of Radiology Research, Department of Radiology, Brigham and Women's Hospital, Boston, and a Professor of Radiology at Harvard Medical School. She is also the Clinical Director of the National Image Guided Therapy Center.


Nobuhiko Hata received the B.E. degree in precision machinery engineering in 1993, and the M.E. and D.Eng. degrees in precision machinery engineering in 1995 and 1998, respectively, all from the University of Tokyo, Tokyo, Japan. He is currently an Associate Professor of Radiology at Harvard Medical School, Boston, MA.


Gregory Fischer received B.S. degrees in Electrical and Mechanical Engineering from Rensselaer Polytechnic Institute, NY and an M.S.E. in Electrical Engineering from Johns Hopkins University, MD. He received his Ph.D. in Mechanical Engineering from Johns Hopkins University in 2008. He is currently an Associate Professor of Mechanical Engineering at Worcester Polytechnic Institute and is Director of the WPI Automation and Interventional Medicine (AIM) Laboratory.


Footnotes

This paper was presented in part at the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, May 9-13, 2011.

Contributor Information

Hao Su, Philips Research North America; formerly with the Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute.

Weijian Shang, Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute, 100 Institute Road, Worcester, MA 01609, USA.

Gregory Cole, Philips Research North America; formerly with the Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute.

Gang Li, Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute, 100 Institute Road, Worcester, MA 01609, USA.

Kevin Harrington, Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute, 100 Institute Road, Worcester, MA 01609, USA.

Alexander Camilo, Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute, 100 Institute Road, Worcester, MA 01609, USA.

Junichi Tokuda, National Center for Image Guided Therapy (NCIGT), Brigham and Women's Hospital, Department of Radiology, Harvard Medical School, Boston, MA, 02115 USA.

Clare M. Tempany, National Center for Image Guided Therapy (NCIGT), Brigham and Women's Hospital, Department of Radiology, Harvard Medical School, Boston, MA, 02115 USA.

Nobuhiko Hata, National Center for Image Guided Therapy (NCIGT), Brigham and Women's Hospital, Department of Radiology, Harvard Medical School, Boston, MA, 02115 USA.

Gregory S. Fischer, Automation and Interventional Medicine (AIM) Robotics Lab, Department of Mechanical Engineering, Worcester Polytechnic Institute, 100 Institute Road, Worcester, MA 01609, USA (gfischer@wpi.edu).

REFERENCES

- 1.Jolesz FA. Intraoperative imaging in neurosurgery: where will the future take us? In: Pamir MN, Seifert V, Kiris T, editors. Intraoperative Imaging. Acta Neurochirurgica Supplement. Springer; Vienna: 2011. pp. 21–25. [Google Scholar]

- 2.Tokuda J, Tuncali K, Iordachita I, Song S-E, Fedorov A, Oguro S, Lasso A, Fennessy FM, Tempany CM, Hata N. In-bore setup and software for 3T MRI-guided transperineal prostate biopsy. Physics in Medicine and Biology. 2012 Sep;:5823–40. doi: 10.1088/0031-9155/57/18/5823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bassan HS, Patel RV, Moallem M. A novel manipulator for percutaneous needle insertion: design and experimentation. Mechatronics, IEEE/ASME Transactions on. 2009;14(6):746–761. [Google Scholar]

- 4.Hungr N, Baumann M, Long J-A, Troccaz J. A 3-D ultrasound robotic prostate brachytherapy system with prostate motion tracking. Robotics, IEEE Transactions. 2012 Dec;28:1382–1397. [Google Scholar]

- 5.D'Amico AV, Tempany CM, Cormack R, Hata N, Jinzaki M, Tuncali K, Weinstein M, Richie JP. Transperineal magnetic resonance image guided prostate biopsy. Journal of Urology. 2000 Aug;164:385–387. [PubMed] [Google Scholar]

- 6.Beyersdorff D, Winkel A, Hamm B, Lenk S, Loening SA, Taupitz M. MR imaging-guided prostate biopsy with a closed MR unit at 1.5 T: initial results. Radiology. 2005 Feb;234:576–581. doi: 10.1148/radiol.2342031887. [DOI] [PubMed] [Google Scholar]

- 7.Krieger A, Song S, Bongjoon Cho N, Iordachita I, Guion P, Fichtinger G, Whitcomb LL. Development and evaluation of an actuated MRI-compatible robotic system for MRI-guided prostate intervention. Mechatronics, IEEE/ASME Transactions on. 2012;(99):1–12. doi: 10.1109/TMECH.2011.2163523. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Song S-E, Tokuda J, Tuncali K, Tempany C, Zhang E, Hata N. Development and preliminary evaluation of a motorized needle guide template for MRI-guided targeted prostate biopsy. Biomedical Engineering, IEEE Transactions on. 2013 Nov;60:3019–3027. doi: 10.1109/TBME.2013.2240301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Stoianovici D, Song D, Petrisor D, Ursu D, Mazilu D, Muntener M, Mutener M, Schar M, Patriciu A. MRI stealth robot for prostate interventions. Minimally Invasive Therapy and Allied Technologies. 2007;16(4):241–248. doi: 10.1080/13645700701520735. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Stoianovici D, Kim C, Srimathveeravalli G, Sebrecht P, Petrisor D, Coleman J, Solomon S, Hricak H. MRI-safe robot for endorectal prostate biopsy. Mechatronics, IEEE/ASME Transactions on. 2014;PP(99):1–11. doi: 10.1109/TMECH.2013.2279775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Fischer GS, Iordachita I, Csoma C, Tokuda J, DiMaio SP, Tempany CM, Hata N, Fichtinger G. MRI-compatible pneumatic robot for transperineal prostate needle placement. Mechatronics, IEEE/ASME Transactions. 13(3):2008. doi: 10.1109/TMECH.2008.924044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Seifabadi R, Song S-E, Krieger A, Cho NB, Tokuda J, Fichtinger G, Iordachita I. Robotic system for MRI-guided prostate biopsy: feasibility of teleoperated needle insertion and ex vivo phantom study. International Journal of Computer Aided Radiology and Surgery. 2011 doi: 10.1007/s11548-011-0598-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Gassert R, Yamamoto A, Chapuis D, Dovat L, Bleuler H, Burdet E. Actuation methods for applications in MR environments. Concepts in Magnetic Resonance Part B: Magnetic Resonance Engineering. 2006;29B(4):191–209. [Google Scholar]

- 14.Yakar D, Schouten MG, Bosboom DGH, Barentsz JO, Scheenen TWJ, Futterer JJ. Feasibility of a pneumatically actuated MR-compatible robot for transrectal prostate biopsy guidance. Radiology. 2011;260(1):241–247. doi: 10.1148/radiol.11101106. [DOI] [PubMed] [Google Scholar]

- 15.Yang B, Tan U-X, McMillan AB, Gullapalli R, Desai JP. Design and control of a 1-DOF MRI-compatible pneumatically actuated robot with long transmission lines. Mechatronics, IEEE/ASME Transactions on. 2011;16(6):1040–1048. doi: 10.1109/TMECH.2010.2071393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Chen Y, Mershon C, Tse Z. A 10-mm MR-conditional unidirectional pneumatic stepper motor. Mechatronics, IEEE/ASME Transactions on. 2014;PP(99):1–7. doi: 10.1109/TMECH.2014.2305839. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Huber J, Fleck N, Ashby M. The selection of mechanical actuators based on performance indices. Proceedings of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences. 1997;453(1965):2185–2205. [Google Scholar]

- 18.Stoianovici D, Patriciu A, Petrisor D, Mazilu D, Kavoussi L. A new type of motor: pneumatic step motor. Mechatronics, IEEE/ASME Transactions on. 2007;12(1):98–106. doi: 10.1109/TMECH.2006.886258. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Fischer GS, Krieger A, Iordachita I, Csoma C, Whitcomb LL, Fichtinger G. MRI compatibility of robot actuation techniques - a comparative study. International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2008 Sep; doi: 10.1007/978-3-540-85990-1_61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Elhawary H, Tse ZTH, Hamed A, Rea M, Davies BL, Lamperth MU. The case for MR-compatible robotics: a review of the state of the art. The International Journal of Medical Robotics and Computer Assisted Surgery. 2008;4(2):105–113. doi: 10.1002/rcs.192.

- 21.Fütterer JJ, Misra S, Macura KJ. MRI of the prostate: potential role of robots. Imaging. 2010;2(5):583–592.

- 22.Goldenberg A, Trachtenberg J, Kucharczyk W, Yi Y, Haider M, Ma L, Weersink R, Raoufi C. Robotic system for closed-bore MRI-guided prostatic interventions. Mechatronics, IEEE/ASME Transactions on. 2008 Jun;13:374–379.

- 23.Elhawary H, Tse Z, Rea M, Zivanovic A, Davies B, Besant C, de Souza N, McRobbie D, Young I, Lamperth M. Robotic system for transrectal biopsy of the prostate: real-time guidance under MRI. Engineering in Medicine and Biology Magazine, IEEE. 2010 Mar;29:78–86. doi: 10.1109/MEMB.2009.935709.

- 24.Fischer GS, Cole GA, Su H. Approaches to creating and controlling motion in MRI. Engineering in Medicine and Biology Society, EMBC, Annual International Conference of the IEEE. 2011:6687–6690. doi: 10.1109/IEMBS.2011.6091649.

- 25.Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby AJ, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N. OpenIGTLink: an open network protocol for image-guided therapy environment. The International Journal of Medical Robotics and Computer Assisted Surgery. 2009;5(4):423–434. doi: 10.1002/rcs.274.

- 26.Su H, Zervas M, Cole G, Furlong C, Fischer GS. Realtime MRI-guided needle placement robot with integrated fiber optic force sensing. Robotics and Automation (ICRA), IEEE International Conference on. 2011.

- 27.Yu Y, Podder T, Zhang Y, Ng W, Misic V, Sherman J, Fu L, Fuller D, Messing E, Rubens D, et al. Robot-assisted prostate brachytherapy. Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2006:41–49. doi: 10.1007/11866565_6.

- 28.Su H, Cardona D, Shang W, Camilo A, Cole G, Rucker D, Webster R, Fischer G. MRI-guided concentric tube continuum robot with piezoelectric actuation: a feasibility study. Robotics and Automation (ICRA), IEEE International Conference on. 2012:1939–1945.

- 29.Shang W, Fischer GS. A high accuracy multi-image registration method for tracking MRI-guided robots. Proceedings of SPIE Medical Imaging. San Diego, USA: 2012.

- 30.Stoianovici D. Multi-imager compatible actuation principles in surgical robotics. The International Journal of Medical Robotics and Computer Assisted Surgery. 2005 Jan;1:86–100. doi: 10.1002/rcs.19.

- 31.The Association of Electrical and Medical Imaging Equipment Manufacturers. Determination of Signal-to-Noise Ratio (SNR) in Diagnostic Magnetic Resonance Imaging, NEMA Standard Publication MS 1-2008. 2008.

- 32.Su H, Shang W, Harrington K, Camilo A, Cole GA, Tokuda J, Tempany C, Hata N, Fischer GS. A Networked Modular Hardware and Software system for MRI-guided Robotic Prostate Interventions. Proceedings of SPIE Medical Imaging. San Diego, USA: 2012.