Abstract

In a variety of behavioral tasks, subjects exhibit an automatic and apparently suboptimal sequential effect: they respond more rapidly and accurately to a stimulus if it reinforces a local pattern in stimulus history, such as a string of repetitions or alternations, compared to when it violates such a pattern. This is often the case even if the local trends arise by chance in the context of a randomized design, such that stimulus history has no real predictive power. In this work, we use a normative Bayesian framework to examine the hypothesis that such idiosyncrasies may reflect the inadvertent engagement of mechanisms critical for adapting to a changing environment. We show that prior belief in non-stationarity can induce experimentally observed sequential effects in an otherwise Bayes-optimal algorithm. The Bayesian algorithm is shown to be well approximated by linear-exponential filtering of past observations, a feature also apparent in the behavioral data. We derive an explicit relationship between the parameters and computations of the exact Bayesian algorithm and those of the approximate linear-exponential filter. Since the latter is equivalent to a leaky-integration process, a commonly used model of neuronal dynamics underlying perceptual decision-making and trial-to-trial dependencies, our model provides a principled account of why such dynamics are useful. We also show that parameter-tuning of the leaky-integration process is possible, using stochastic gradient descent based only on the noisy binary inputs. This is a proof of concept that not only can neurons implement near-optimal prediction based on standard neuronal dynamics, but that they can also learn to tune the processing parameters without explicitly representing probabilities.

1 Introduction

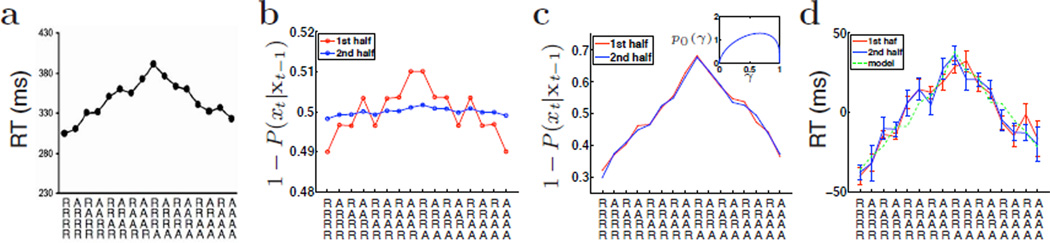

One common error human subjects make in statistical inference is that they detect hidden patterns and causes in what are genuinely random data. Superstitious behavior, or the inappropriate linking of stimuli or actions with consequences, can often arise in such situations, something also observed in non-human subjects [1, 2]. One common example in psychology experiments is that despite a randomized experimental design, which deliberately de-correlates stimuli from trial to trial, subjects pick up transient patterns such as runs of repetitions and alternations, and their responses are facilitated when a stimulus continues to follow a local pattern, and impeded when such a pattern is violated [3]. It has been observed in numerous experiments [3–5] that subjects respond more accurately and rapidly if a trial is consistent with the recent pattern (e.g. AAAA followed by A, BABA followed by B) than if it is inconsistent (e.g. AAAA followed by B, BABA followed by A). This sequential effect is more prominent when the preceding run has lasted longer. Figure 1a shows reaction time (RT) data from one such experiment [5]. Error rates follow a similar pattern, reflecting a true expectancy-based effect, rather than a shift in RT-accuracy trade-off.

Figure 1.

Bayesian modeling of sequential effects. (a) Median reaction time (RT) from Cho et al (2002) affected by recent history of stimuli, in which subjects are required to discriminate a small “o” from a large “O” using button-presses. Along the abscissa are all possible four-trial sub-sequences, in terms of repetitions (R) and alternations (A). Each sequence, read from top to bottom, proceeds from the earliest stimulus progressively toward the present stimulus. As the effects were symmetric across the two stimulus types, A and B, each bin contains data from a pair of conditions (e.g. RRAR can be AAABB or BBBAA). RT was fastest when a pattern is reinforced (RRR followed by R, or AAA followed by A); it is slowest when an “established” pattern is violated (RRR followed by A, or AAA followed by R). (b) Assuming RT decreases with predicted stimulus probability (i.e. RT increases with 1 − P(xt|xt−1), where xt is the actual stimulus seen), then FBM would predict much weaker sequential effects in the second half (blue: 720 simulated trials) than in the first half (red: 840 trials). (c) DBM predicts persistently strong sequential effects in both the first half (red: 840 trials) and second half (blue: 720 trials). Inset shows prior over γ used; the same prior was also used for the FBM in (b). α = .77. (d) Sequential effects in behavioral data were equally strong in the first half (red: 7 blocks of 120 trials each) and the second half (blue: 6 blocks of 120 trials each). Green dashed line shows a linear transformation from the DBM prediction in probability space of (c) into the RT space. The fit is very good given the errorbars (SEM) in the data.

A natural interpretation of these results is that local patterns lead subjects to expect a stimulus, whether explicitly or implicitly. They readily respond when a subsequent stimulus extends the local pattern, and are “surprised” and respond less rapidly and accurately when a subsequent stimulus violates the pattern. When such local patterns persist longer, the subjects have greater confidence in the pattern, and are therefore more surprised and more strongly affected when the pattern is violated. While such a strategy seems plausible, it is also sub-optimal. The experimental design consists of randomized stimuli, thus all runs of repetitions or alternations are spurious, and any behavioral tendencies driven by such patterns are useless. However, compared to artificial experimental settings, truly random sequential events may be rare in the natural environment, where the laws of physics and biology dictate that both external entities and the observer’s viewpoint undergo continuous transformations for the most part, leading to statistical regularities that persist over time on characteristic timescales. The brain may be primed to extract such statistical regularities, leading to what appears to be superstitious behavior in an artificially randomized experimental setting.

In section 2, we use Bayesian probability theory to build formally rigorous models for predicting stimuli based on previous observations, and compare differentially complex models to subjects’ actual behavior. Our analyses imply that subjects assume statistical contingencies in the task to persist over several trials but non-stationary on a longer time-scale, as opposed to being unknown but fixed throughout the experiment. We are also interested in understanding how the computations necessary for prediction and learning can be implemented by the neural hardware. In section 3, we show that the Bayes-optimal learning and prediction algorithm is well approximated by a linear filter that weighs past observations exponentially, a computationally simpler algorithm that also seems to fit human behavior. Such an exponential linear filter can be implemented by standard models of neuronal dynamics. We derive an explicit relationship between the assumed rate of change in the world and the time constant of the optimal exponential linear filter. Finally, in section 4, we will show that meta-learning about the rate of change in the world can be implemented by stochastic gradient descent, and compare this algorithm with exact Bayesian learning.

2 Bayesian prediction in fixed and changing worlds

One simple internal model that subjects may have about the nature of the stimulus sequence in a 2-alternative forced choice (2AFC) task is that the statistical contingencies in the task remain fixed throughout the experiment. Specifically, they may believe that the experiment is designed such that there is a fixed probability γ, throughout the experiment, of encountering a repetition (xt = 1) on any given trial t (thus probability 1−γ of seeing an alternation xt = 0). What they would then learn about the task over the time course of the experiment is the appropriate value of γ. We call this the Fixed Belief Model (FBM). Bayes’ Rule tells us how to compute the posterior:

$$p(\gamma \mid \mathbf{x}_t) \propto P(\mathbf{x}_t \mid \gamma)\, p(\gamma) \propto \gamma^{r_t + a - 1} (1 - \gamma)^{t - r_t + b - 1}$$

where rt denotes the number of repetitions observed so far (up to t), xt is the set of binary observations (x1, …, xt), and the prior distribution p(γ) is assumed to be a beta distribution: p(γ) = p0(γ) = Beta(a, b). The predicted probability of seeing a repetition on the next trial is the mean of this posterior distribution: P(xt+1 = 1|xt) = ∫ γp(γ|xt)dγ = 〈γ|xt〉.
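The FBM prediction can be sketched in a few lines. Because the Beta prior is conjugate to the Bernoulli likelihood, the posterior after t trials is Beta(a + rt, b + t − rt), and its mean is (a + rt)/(a + b + t). The function name `fbm_predictions` and the uniform default prior (a = b = 1) are illustrative assumptions, not taken from the original analysis.

```python
import numpy as np

def fbm_predictions(x, a=1.0, b=1.0):
    """Predictive probability of a repetition before each trial under the FBM.

    x    : sequence of 0/1 observations (1 = repetition, 0 = alternation).
    a, b : parameters of the Beta prior over gamma (uniform by default; an assumption).
    Returns P with P[t] = P(x[t] = 1 | x[0], ..., x[t-1]).
    """
    x = np.asarray(x, dtype=float)
    # Number of repetitions observed before each trial (0 before the first trial).
    reps_before = np.concatenate(([0.0], np.cumsum(x)[:-1]))
    trials_before = np.arange(len(x))
    # Mean of the Beta(a + r, b + n - r) posterior.
    return (a + reps_before) / (a + b + trials_before)

# Example: a truly random sequence (gamma = .5).
rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=1000)
P = fbm_predictions(x)
```

Since the denominator grows with the number of trials, each new observation moves the FBM prediction less and less, which is why sequential effects fade under this model.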

A more complex internal model that subjects may entertain is that the relative frequency of repetition (versus alternation) can undergo discrete changes at unsignaled times during the experimental session, such that repetitions are more prominent at times, and alternation more prominent at other times. We call this the Dynamic Belief Model (DBM), in which γt has a Markovian dependence on γt−1, so that with probability α, γt = γt−1, and probability 1 − α, γt is redrawn from a fixed distribution p0(γt) (same Beta distribution as for the prior). The observation xt is still assumed to be drawn from a Bernoulli process with rate parameter γt. Stimulus predictive probability is now the mean of the iterative prior, P(xt = 1|xt−1) = 〈γt|xt−1〉, where

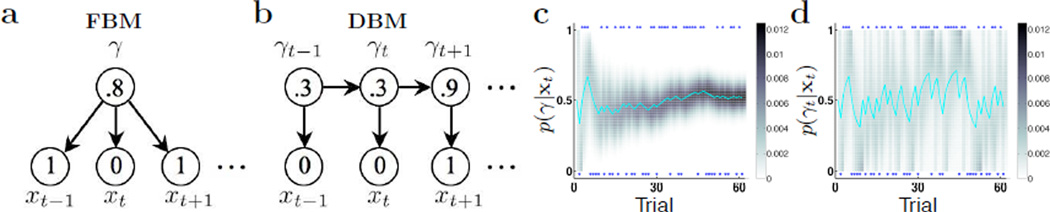

Figures 2a,b illustrate the two graphical models. Figures 2c,d demonstrate how the two models respond differently to the exact same sequence of truly random binary observations (γ = .5). While inference in FBM leads to a less variable and more accurate estimate of the underlying bias as the number of samples increases, inference in DBM is perpetually driven by local transients. Relating back to the experimental data, we plot the probability of not observing the current stimulus for each type of 5-stimulus sequence in Figure 1 for (b) FBM and (c) DBM, since RT is known to lengthen with reduced stimulus expectancy. Comparing the first half of a simulated experimental session (red) with the second half (blue), matched to the number of trials for each subject, we see that sequential effects significantly diminish in the FBM, but persist in the DBM. A re-analysis of the experimental data (Figure 1d) shows that sequential effects also persist in human behavior, confirming that Bayesian prediction based on a (Markovian) changeable world can account for behavioral data, while that based on a fixed world cannot. In Figure 1d, the green dashed line shows that a linear transformation of the DBM sequential effect (from Figure 1c) is quite a good fit of the behavioral data. It is also worth noting that in the behavioral data there is a slight overall preference (shorter RT) for repetition trials. This is easily captured by the DBM by assuming p0(γt) to be skewed toward repetitions (see Figure 1c inset). The same skewed prior cannot produce a bias in the FBM, however, because the prior only figures into Bayesian inference once at the outset, and is very quickly overwhelmed by the accumulating observations.
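A minimal numerical sketch of DBM inference follows, assuming the belief over γt is discretized on a grid. Each trial does two things: it conditions the current belief on the observation via Bayes' Rule, and then mixes the resulting posterior with the fixed prior p0 (weights α and 1 − α) to form the belief for the next trial, as implied by the generative description above. The function name `dbm_predictions`, the grid resolution, and the uniform default for p0 are assumptions made for illustration.

```python
import numpy as np

def dbm_predictions(x, alpha=0.77, a=1.0, b=1.0, n_grid=200):
    """Predictive probability P(x_t = 1 | x_1..x_{t-1}) under the DBM, on a grid.

    alpha  : probability that gamma stays the same from one trial to the next.
    a, b   : parameters of the Beta distribution p0 (uniform by default; an assumption).
    n_grid : number of grid points used to discretize gamma (an assumption).
    """
    x = np.asarray(x, dtype=int)
    gamma = (np.arange(n_grid) + 0.5) / n_grid        # midpoint grid on (0, 1)
    p0 = gamma ** (a - 1) * (1 - gamma) ** (b - 1)
    p0 /= p0.sum()
    prior = p0.copy()                                  # belief about gamma_1 before any data
    P = np.empty(len(x))
    for t, xt in enumerate(x):
        P[t] = prior @ gamma                           # mean of the iterative prior
        likelihood = gamma if xt == 1 else 1 - gamma   # Bernoulli likelihood of x_t
        posterior = likelihood * prior
        posterior /= posterior.sum()
        # With prob. alpha gamma persists; with prob. 1 - alpha it is redrawn from p0.
        prior = alpha * posterior + (1 - alpha) * p0
    return P

rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=1000)                      # truly random sequence
P = dbm_predictions(x)
```

Setting `alpha=1.0` recovers the FBM, while `alpha=0.0` ignores history entirely, so the single parameter α interpolates between the two extremes.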

Figure 2.

Bayesian inference assuming fixed and changing Bernoulli parameters. (a) Graphical model for the FBM. γ ∈ [0, 1], xt ∈ {0, 1}. The numbers in circles show example values for the variables. (b) Graphical model for the DBM. p(γt|γt−1) = αδ(γt − γt−1) + (1 − α)p0(γt), where we assume the prior p0 to be a Beta distribution. The numbers in circles show example values for the variables. (c) Grayscale shows the evolution of posterior probability mass over γ for FBM (darker color indicates concentration of mass), given the sequence of truly random (P(xt) = .5) binary data (blue dots). The mean of the distribution, in cyan, is also the predicted stimulus probability: P(xt = 1|xt−1) = 〈γ|xt−1〉. (d) Evolution of posterior probability mass for the DBM (grayscale) and predictive probability P(xt = 1|xt−1) (cyan); they perpetually fluctuate with transient runs of repetitions or alternations.

3 Exponential filtering both normative and descriptive

While Bayes’ Rule tells us in theory what the computations ought to be, the neural hardware may only implement a simpler approximation. One potential approximation is suggested by related work showing that monkeys’ choices, when tracking reward contingencies that change at unsignaled times, depend linearly on previous observations that are discounted approximately exponentially into the past [6]. This task explicitly examines subjects’ ability to track unsignaled statistical regularities, much like the kind we hypothesize to be engaged inadvertently in sequential effects.

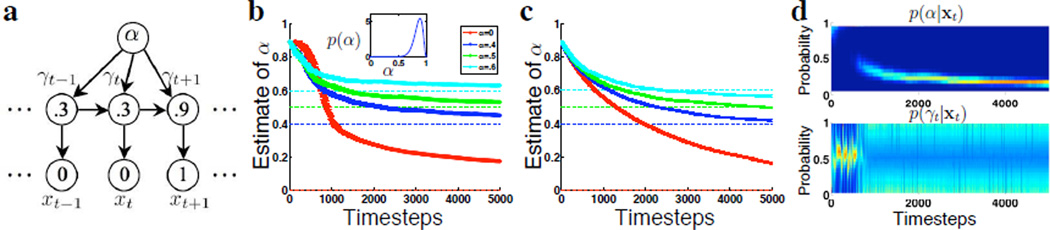

First, we regressed the subjects’ reward rate (RR) against past observations and saw that the linear coefficients decay approximately exponentially into the past (Figure 3a). We define reward rate as mean accuracy/mean RT, averaged across subjects; we thus take into account both effects in RT and accuracy as a function of past experiences. We next examined whether there is also an element of exponential discounting embedded in the DBM inference algorithm. Linear regression of the predictive probability Pt ≜ P(xt = 1|xt−1), which should correlate positively with RR (since it correlates positively with accuracy and negatively with RT), against previous observations xt−1, xt−2, … yields coefficients that also decay exponentially into the past (Figure 3b): $P_t \approx C + \eta \sum_{\tau=1}^{t-1} \beta^{\tau} x_{t-\tau}$. Linear exponential filtering thus appears to be both a good descriptive model of behavior, and a good normative model approximating Bayesian inference.

Figure 3.

Exponential discounting a good descriptive and normative model. (a) For each of the six subjects, we regressed RR on repetition trials against past observations, RT ≈ C + b1xt−1 + b2xt−2 + …, where xτ is assigned 0 if it was repetition, and 1 if alternation, the idea being that recent repetition trials should increase expectation of repetition and decrease RR, and recent alternation should decrease expectation of repetition and increase RR on a repetition trial. Separately we also regressed RR’s on alternation trials against past observations (assigning 0 to alternation trials, and 1 to repetitions). The two sets of coefficients did not differ significantly and were averaged together (red: average across subjects, error bars: SEM). Blue line shows the best exponential fit to these coefficients. (b) We regressed Pt obtained from exact Bayesian DBM inference, against past observations, and obtained a set of average coefficients (red); blue is the best exponential fit. (c) For different values of α, we repeat the process in (b) and obtain the best exponential decay parameter β (blue). Optimal β closely tracks the 2/3 rule for a large range of values of α. β is .57 in (a), so α = .77 was used to generate (b). (d) Both the optimal exponential fit (red) and the 2/3 rule (blue) approximate the true Bayesian Pt well (green dashed line shows perfect match). α = .77. For smaller values of α, the fit is even better; for larger α, the exponential approximation deteriorates (not shown). (e) For repetition trials, the greater the predicted probability of seeing a repetition (xt = 1), the faster the RT, whether trials are categorized by Bayesian predictive probabilities (red: α = .77, p0 = Beta(1.6, 1.3)), or by linear exponential filtering (blue). For alternation trials, RT’s increase with increasing predicted probability of seeing a repetition. Inset: for the biases b ∈ [.2, .8], the log prior ratio (shift in the initial starting point, and therefore change in the distance to decision boundary) is approximately linear.

An obvious question is how this linear exponential filter relates to exact Bayesian inference, in particular how the rate of decay relates to the assumed rate of change in the world (parameterized by α). We first note that the linear exponential filter has an equivalent iterative form:

$$P_t \approx C(1 - \beta) + \eta\beta\, x_{t-1} + \beta P_{t-1}$$
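The equivalence between the explicit exponential sum and the iterative (leaky-integration) form can be checked numerically. The sketch below assumes the explicit form Pt = C + η Σ β^τ xt−τ (τ = 1, …, t−1) and the iterative form Pt = C(1 − β) + ηβxt−1 + βPt−1 with P1 = C; the function names and parameter values are illustrative only.

```python
import numpy as np

def filter_explicit(x, C, eta, beta):
    """P_t = C + eta * sum_{tau=1}^{t-1} beta**tau * x_{t-tau}, evaluated directly."""
    x = np.asarray(x, dtype=float)
    P = np.empty(len(x))
    for t in range(len(x)):                      # array index t is trial t+1 in the text
        past = x[:t][::-1]                       # x_{t-1}, x_{t-2}, ..., most recent first
        weights = beta ** np.arange(1, t + 1)    # beta, beta**2, ...
        P[t] = C + eta * np.dot(weights, past)
    return P

def filter_iterative(x, C, eta, beta):
    """Same filter computed recursively, one leaky-integration step per trial."""
    x = np.asarray(x, dtype=float)
    P = np.empty(len(x))
    P[0] = C                                     # no past observations yet
    for t in range(1, len(x)):
        P[t] = C * (1 - beta) + eta * beta * x[t - 1] + beta * P[t - 1]
    return P

rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=200)
P_sum = filter_explicit(x, C=0.25, eta=0.5, beta=0.57)
P_rec = filter_iterative(x, C=0.25, eta=0.5, beta=0.57)
```

The recursive version needs only the previous prediction and the previous observation, which is what makes it a candidate for implementation by simple neuronal dynamics.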

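We then note that the nonlinear Bayesian update rule can also be written as:

$$\begin{aligned} P_t &= \tfrac{1}{2}(1-\alpha) + x_{t-1}\,\alpha\,\frac{K_t - P_{t-1}^2}{P_{t-1} - P_{t-1}^2} + \alpha P_{t-1}\,\frac{1 - K_t/P_{t-1}}{1 - P_{t-1}} \\ &\approx \tfrac{1}{2}(1-\alpha) + \tfrac{1}{3}\alpha\, x_{t-1} + \tfrac{2}{3}\alpha\, P_{t-1} \end{aligned} \tag{1}$$

where $K_t \triangleq \langle \gamma_{t-1}^2 \mid \mathbf{x}_{t-2} \rangle$ is the second moment of the predictive distribution whose mean is Pt−1, and we approximate Pt by its mean value 〈Pt〉 = 1/2, and Kt by its mean value 〈Kt〉 = 1/3. These expected values are obtained by expanding Pt and Kt in their iterative forms and assuming 〈Pt〉 = 〈Pt−1〉 and 〈Kt〉 = 〈Kt−1〉, and also assuming that p0 is the uniform distribution. We verified numerically (data not shown) that this mean approximation is quite good for a large range of α (though it gets progressively worse when α≈1, probably because the equilibrium assumptions deviate farther from reality as changes become increasingly rare).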
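The intermediate steps behind this update rule can be spelled out as follows. This is a sketch under the stated assumptions (uniform p0, so that its mean is 1/2), with Kt taken to be the second moment of the predictive distribution over γt−1 whose mean is Pt−1; the last line evaluates the two coefficients at the mean values Pt−1 = 1/2 and Kt = 1/3.

```latex
\begin{align*}
P_t &= \langle \gamma_t \mid \mathbf{x}_{t-1} \rangle
     = \tfrac{1}{2}(1-\alpha) + \alpha\,\langle \gamma_{t-1} \mid \mathbf{x}_{t-1} \rangle
     && \text{(mixture of $p_0$ and the posterior)} \\[4pt]
\langle \gamma_{t-1} \mid \mathbf{x}_{t-1} \rangle &=
  \begin{cases}
    \dfrac{K_t}{P_{t-1}}, & x_{t-1} = 1 \\[8pt]
    \dfrac{P_{t-1} - K_t}{1 - P_{t-1}}, & x_{t-1} = 0
  \end{cases}
     && \text{(Bayes' Rule with a Bernoulli likelihood)} \\[4pt]
\Rightarrow\; P_t &= \tfrac{1}{2}(1-\alpha)
     + x_{t-1}\,\alpha\,\frac{K_t - P_{t-1}^2}{P_{t-1} - P_{t-1}^2}
     + \alpha\,P_{t-1}\,\frac{1 - K_t/P_{t-1}}{1 - P_{t-1}} \\[4pt]
\left.\frac{K_t - P_{t-1}^2}{P_{t-1} - P_{t-1}^2}\right|_{P = \frac12,\, K = \frac13}
   &= \frac{\tfrac13 - \tfrac14}{\tfrac14} = \tfrac{1}{3},
\qquad
\left.\frac{1 - K_t/P_{t-1}}{1 - P_{t-1}}\right|_{P = \frac12,\, K = \frac13}
   = \frac{1 - \tfrac23}{\tfrac12} = \tfrac{2}{3}
\end{align*}
```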

Notably, our calculations imply $\beta \approx \tfrac{2}{3}\alpha$, which makes intuitive sense, since slower changes should result in a longer integration time window, whereas faster changes should result in shorter memory. Figure 3c shows that the best numerically obtained β (by fitting an exponential to the linear regression coefficients) for different values of α (blue) is well approximated by the 2/3 rule (black dashed line). For the behavioral data in Figure 3a, β was found to be .57, which implies α = .77; the simulated data in Figure 3b are in fact obtained by assuming α = .77, hence the remarkably good fit between data and model. Figure 3d shows that reconstructed Pt based on the numerically optimal linear exponential filter (red) and the 2/3 rule (blue) both track the true Bayesian Pt very well.
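How closely the 2/3 rule tracks exact DBM inference can be explored with a short simulation. The sketch below assumes a uniform p0, a grid discretization of γ, and the linear recursion Pt ≈ ½(1 − α) + ⅓αxt−1 + ⅔αPt−1; the function names, grid size, random seed, and number of trials are illustrative choices and do not reproduce the original analysis.

```python
import numpy as np

def dbm_exact(x, alpha, n_grid=200):
    """Exact (grid-based) DBM predictive probabilities with a uniform p0."""
    gamma = (np.arange(n_grid) + 0.5) / n_grid
    p0 = np.full(n_grid, 1.0 / n_grid)
    prior = p0.copy()
    P = np.empty(len(x))
    for t, xt in enumerate(x):
        P[t] = prior @ gamma
        posterior = (gamma if xt == 1 else 1 - gamma) * prior
        posterior /= posterior.sum()
        prior = alpha * posterior + (1 - alpha) * p0
    return P

def two_thirds_filter(x, alpha):
    """Linear exponential filter with beta = (2/3) * alpha."""
    P = np.empty(len(x))
    P[0] = 0.5                                   # prediction before any data
    for t in range(1, len(x)):
        P[t] = 0.5 * (1 - alpha) + alpha * x[t - 1] / 3 + 2 * alpha * P[t - 1] / 3
    return P

rng = np.random.default_rng(1)
x = rng.integers(0, 2, size=2000)                # truly random binary sequence
alpha = 0.77
exact = dbm_exact(x, alpha)
approx = two_thirds_filter(x, alpha)
corr = np.corrcoef(exact, approx)[0, 1]
mean_abs_diff = np.mean(np.abs(exact - approx))
print(f"correlation = {corr:.3f}, mean |difference| = {mean_abs_diff:.3f}")
```

Repeating the comparison for α close to 1 is one way to see the deterioration of the approximation mentioned in the text.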

In the previous section, we saw that exact Bayesian inference for the DBM is a good model of behavioral data. In this section, we saw that linear exponential filtering also seems to capture the data well. To compare which of the two better explains the data, we need a more detailed account of how stimulus history-dependent probabilities translate into reaction times. A growing body of psychological [7] and physiological data [8] supports the notion that some form of evidence integration up to a fixed threshold underlies binary perceptual decision making, which both optimizes an accuracy-RT trade-off [9] and seems to be implemented in some form by cortical neurons [8]. The idealized, continuous-time version of this, the drift-diffusion model (DDM), has a well characterized mean stopping time [10], $T = \frac{z}{A}\tanh\frac{zA}{c^2}$, where A and c are the mean and standard deviation of unit time fluctuation, and z is the distance between the starting point and decision boundary. The vertical axis for the DDM is in units of log posterior ratio $\log\frac{P(s_0 \mid \mathbf{x}_t)}{P(s_1 \mid \mathbf{x}_t)}$. An unbiased (uniform) prior over s implies a stochastic trajectory that begins at 0 and drifts until it hits one of the two boundaries ±z. When the prior is biased at b ≠ .5, it has an additive effect in the log posterior ratio space and moves the starting point to $x_0 = \log\frac{b}{1-b}$. For the relevant range of b (.2 to .8), the shift in starting point is approximately linear in b (Figure 3e inset), so that the new distance to the boundary is approximately z + kb. Thus, the new mean decision time is $\frac{z+kb}{A}\tanh\frac{(z+kb)A}{c^2}$. Typically in DDM models of decision-making, the signal-to-noise ratio is small, i.e. A ≪ c, such that tanh is highly linear in the relevant range. We therefore have $T(b) \approx \frac{(z+kb)^2}{c^2} \approx \frac{z^2}{c^2} + \frac{2zk}{c^2}\,b$ to first order in b, implying that the change in mean decision time is linear in the bias b, in units of probability.
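The two approximations used in this argument can be checked numerically: that tanh is nearly linear when A ≪ c, and that the log prior ratio is nearly linear in b over [.2, .8]. The sketch below uses the mean stopping time (z/A) tanh(zA/c²) from [10]; the specific values of z, A, and c, and the use of a least-squares line for the linear fit, are assumptions chosen for illustration.

```python
import numpy as np

def mean_decision_time(z, A, c):
    """Mean DDM stopping time for boundaries at +/-z and an unbiased starting point [10]."""
    return (z / A) * np.tanh(z * A / c**2)

# Low signal-to-noise regime (A << c): tanh(u) is close to u, so T is close to z**2 / c**2.
z, A, c = 1.0, 0.1, 1.0                  # illustrative values, not fitted to data
exact_T = mean_decision_time(z, A, c)
linear_T = z**2 / c**2
rel_err = abs(exact_T - linear_T) / exact_T

# Log prior ratio as a function of the bias b, with its best-fitting line on [.2, .8].
b = np.linspace(0.2, 0.8, 61)
log_prior_ratio = np.log(b / (1 - b))
k, k0 = np.polyfit(b, log_prior_ratio, 1)          # slope k and intercept k0
max_resid = np.max(np.abs(log_prior_ratio - (k * b + k0)))

print(f"relative error of the tanh linearization: {rel_err:.4f}")
print(f"slope k = {k:.2f}, largest deviation from the line = {max_resid:.3f}")
```

Both deviations are small relative to the quantities involved, which is what licenses treating the change in mean decision time as linear in b.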

This linear relationship between RT and b was already borne out by the good fit between sequential effects in the behavioral data and those of the DBM in Figure 1d. To examine this more closely, we run the exact Bayesian DBM algorithm and the linear exponential filter on the actual sequences of stimuli observed by the subjects, and plot median RT against predicted stimulus probabilities. In Figure 3e, we see that for both exact Bayesian (red) and exponential (blue) algorithms, RT’s decrease on repetition stimuli when predicted probability for repetition increases; conversely, RT’s increase on alternation trials when predicted probability for repetition increases (and therefore predicted probability for alternation decreases). For both Bayesian inference and linear exponential filtering, the relationship between RT and stimulus probability is approximately linear. The linear fit in fact appears better for the exponential algorithm than exact Bayesian inference, which, conditioned on the DDM being an appropriate model for binary decision making, implies that the former may be a better model of sequential adaptation than exact Bayesian inference. Further experimentation is underway to examine this prediction more carefully.

Another implication of the SPRT or DDM formulation of perceptual decision-making is that incorrect prior bias, such as that due to sequential effects in a randomized stimulus sequence, induces a net cost in accuracy (even though the RT effects wash out due to the linear dependence on prior bias). The error rate with a bias x0 in the starting point has a closed-form expression [10], implying that the error rate rises monotonically with bias in either direction. This is a quantitative characterization of our claim that extraneous prior bias, such as that due to sequential effects, induces suboptimality in decision-making.

4 Neural implementation and learning

So far, we have seen that exponential discounting of the past not only approximates exact Bayesian inference, but fits human behavioral data. We now note that it has the additional appealing property of being equivalent to standard models of neuronal dynamics. This is because the iterative form of the linear exponential filter in Equation 1 has a similar form to a large class of leaky integration neuronal models, which have been used extensively to model perceptual decision-making on a relatively fast time-scale [8, 11–15], as well as trial-to-trial interactions on a slower time-scale [16–20]. It is also related to the concept of eligibility trace in reinforcement learning [21], which is important for the temporal credit assignment problem of relating outcomes to states or actions that were responsible for them. Here, we provided the computational rationale for this exponential discounting of the past: it approximates Bayesian inference under DBM-like assumptions.

Viewed as a leaky-integrating neuronal process, the parameters of Equation 1 have the following semantics: $\tfrac{1}{2}(1-\alpha)$ can be thought of as a constant bias, $\tfrac{1}{3}\alpha\, x_{t-1}$ as the feed-forward input, and $\tfrac{2}{3}\alpha\, P_{t-1}$ as the leaky recurrent term. Equation 1 suggests that neurons utilizing a standard form of integration dynamics can implement near-optimal Bayesian prediction under the non-stationary assumption, as long as the relative contributions of the different terms are set appropriately. A natural question to ask next is how neurons can learn to set the weights appropriately. We first note that xt is a sample from the distribution P(xt|xt−1). Since P(xt|xt−1) has the approximate linear form in Equation 1, with dependence on a single parameter α, learning about near-optimal predictions can potentially be achieved based on estimating the value of α via the stochastic samples x1, x2, …. We implement a stochastic gradient descent algorithm, in which α̂ is adjusted incrementally on each trial in the direction of the gradient, which should bring α̂ closer to the true α.

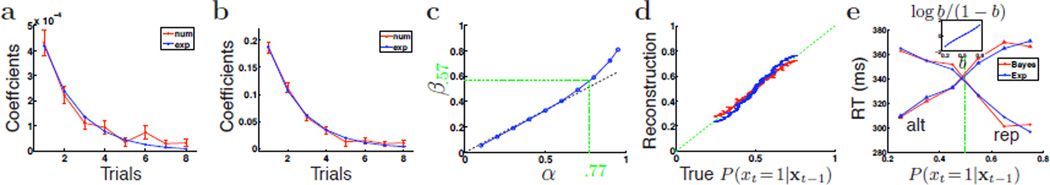

where α̂t is the estimate of α after observing xt, and P̂t is the estimate of Pt using the estimate α̂t−1 (before seeing xt). Figure 4c shows that learning via the binary samples is indeed possible: for different true values of α (dashed lines) that generated different data sets, stochastic gradient descent produced estimates of α̂ that converge to the true values, or close to them (thick lines; widths denote SEM estimated from 50 sessions of learning). A key challenge for future work is to clarify whether and how the gradient of the predictive probability with respect to α can be computed by neural machinery (perhaps approximately).
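One way such a learning rule could be sketched is shown below. It assumes a squared-error objective on the binary samples, so that the update is α̂t = α̂t−1 + ε(xt − P̂t)·dP̂t/dα, with P̂t given by the linear approximation Pt ≈ ½(1 − α) + ⅓αxt−1 + ⅔αPt−1 and its derivative carried forward recursively. The objective, the clipping of α̂ to [0, 1], and the function names are assumptions made for illustration; only the learning rate (.01) and the initial estimate (.9) follow the values reported for Figure 4c.

```python
import numpy as np

def simulate_dbm(n_trials, alpha, rng):
    """Sample binary observations from the DBM generative model with a uniform p0."""
    x = np.empty(n_trials, dtype=int)
    gamma = rng.random()                          # gamma_1 drawn from p0
    for t in range(n_trials):
        if t > 0 and rng.random() > alpha:
            gamma = rng.random()                  # redraw with probability 1 - alpha
        x[t] = rng.random() < gamma               # Bernoulli(gamma) observation
    return x

def sgd_alpha(x, alpha0=0.9, lr=0.01):
    """Track an estimate of alpha by stochastic gradient steps on (x_t - P_t)**2 / 2."""
    alpha_hat, P_prev, dP_prev = alpha0, 0.5, 0.0
    history = np.empty(len(x))
    history[0] = alpha_hat
    for t in range(1, len(x)):
        # Prediction for x[t] from the linear approximation, using the current estimate.
        P = 0.5 * (1 - alpha_hat) + alpha_hat * x[t - 1] / 3 + 2 * alpha_hat * P_prev / 3
        # Derivative of that prediction with respect to alpha (chain rule through P_prev).
        dP = -0.5 + x[t - 1] / 3 + 2 * P_prev / 3 + 2 * alpha_hat * dP_prev / 3
        # Gradient step; clipping to [0, 1] is an added safeguard, not from the text.
        alpha_hat = float(np.clip(alpha_hat + lr * (x[t] - P) * dP, 0.0, 1.0))
        history[t] = alpha_hat
        P_prev, dP_prev = P, dP
    return history

rng = np.random.default_rng(0)
x = simulate_dbm(5000, alpha=0.8, rng=rng)
alpha_trace = sgd_alpha(x)
```

Averaging `alpha_trace` over many simulated sessions, as in Figure 4c, gives a smoother picture of the learning time course than any single run.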

Figure 4.

Meta-learning about the rate of change. (a) Graphical model for exact Bayesian learning. Numbers are example values for the variables. (b) Mean of posterior p(α|xt) as a function of timesteps, averaged over 30 sessions of simulated data, each set generated from different true values of α (see legend; color-coded dashed lines indicate true α). Inset shows prior over α, p(α) = Beta(17, 3). Time-course of learning is not especially sensitive to the exact form of the prior (not shown). (c) Stochastic gradient descent with a learning rate of .01 produces estimates of α (thick lines, width denotes SEM) that converge to the true values of α (dashed lines). Initial estimate of α, before seeing any data, is .9. Learning based on 50 sessions of 5000 trials for each value of α. (d) Marginal posterior distributions over α (top panel) and γt (bottom panel) on a sample run, where probability mass is color-coded: brighter color is more mass.

For comparison, we also implement the exact Bayesian learning algorithm, which augments the DBM architecture by representing α as a hidden variable instead of a fixed known parameter:

Figure 4a illustrates this augmented model graphically. Figure 4b shows the evolution of the mean of the posterior distribution over α, or 〈α|xt〉. Based on sets of 30 sessions of 5000 trials, generated from each of four different true values of α, the mean value of α under the posterior distribution tends toward the true α over time. The prior we assume for α is a beta distribution (Beta(17, 3), shown in the inset of Figure 4b).
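A grid-based sketch of this augmented inference is given below. It assumes a joint belief over (α, γt) that is conditioned on each observation and then propagated one step: for each value of α, γ persists with probability α and is otherwise redrawn from p0. This recursion follows from the generative description of the DBM with α treated as an unknown constant; the grid sizes, the uniform p0, and the function name are assumptions, while the Beta(17, 3) prior over α is the one stated in the text.

```python
import numpy as np

def joint_alpha_gamma(x, a_alpha=17.0, b_alpha=3.0, n_alpha=50, n_gamma=100):
    """Posterior mean of alpha after each trial, from a grid over (alpha, gamma_t)."""
    alpha = (np.arange(n_alpha) + 0.5) / n_alpha
    gamma = (np.arange(n_gamma) + 0.5) / n_gamma
    p_alpha = alpha ** (a_alpha - 1) * (1 - alpha) ** (b_alpha - 1)
    p_alpha /= p_alpha.sum()                        # Beta(17, 3) prior over alpha
    p0 = np.full(n_gamma, 1.0 / n_gamma)            # uniform p0(gamma); an assumption
    # joint[i, j] approximates p(alpha_i, gamma_t = gamma_j | data so far).
    joint = np.outer(p_alpha, p0)
    mean_alpha = np.empty(len(x))
    for t, xt in enumerate(x):
        likelihood = gamma if xt == 1 else 1 - gamma
        joint = joint * likelihood                  # condition on x_t
        joint /= joint.sum()
        mean_alpha[t] = joint.sum(axis=1) @ alpha   # <alpha | x_1..x_t>
        # Propagate to trial t+1: keep gamma with prob. alpha, else redraw from p0.
        marg_alpha = joint.sum(axis=1, keepdims=True)
        joint = alpha[:, None] * joint + (1 - alpha[:, None]) * marg_alpha * p0
    return mean_alpha

rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=500)                    # truly random inputs
mean_alpha = joint_alpha_gamma(x)
```

The first observation carries no information about α under a uniform p0, so the posterior mean starts at the prior mean and moves only as runs of repetitions or alternations accumulate.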

Compared to exact Bayesian learning, stochastic gradient descent has a similar learning rate. But larger values of α (e.g. α = .6) tend to be under-estimated, possibly due to the fact that the analytical approximation for β is under-estimated for larger α. For data that were generated from a fixed Bernoulli process with rate .5, an equivalently appropriate model is the DBM with α = 0; stochastic gradient descent produced estimates of α (thick red line) that converge to 0 on the order of 50000 trials (details not shown). Figure 4d shows that the posterior inference about α and γt undergoes distinct phases when true α = 0 and there is no correlation between one timestep and the next. There is an initial phase where marginal posterior mass for α tends toward high values of α, while marginal posterior mass for γt fluctuates around .5. Note that this combination is an alternative, equally valid generative model for a completely randomized sequence of inputs. However, this joint state is somehow unstable, and α tends toward 0 while γt becomes broad and fluctuates wildly. This is because as inferred α gets smaller, there is almost no information about γt from past observations, thus the marginal posterior over γt tends to be broad (high uncertainty) and fluctuates along with each data point. α can only decrease slowly because so little information about the hidden variables is obtained from each data point. For instance, it is very difficult to infer from what is believed to be an essentially random sequence whether the underlying Bernoulli rate really tends to change once every 1.15 trials or 1.16 trials. This may explain why subjects show no diminished sequential effects over the course of a few hundred trials (Figure 1d).

While the stochastic gradient results demonstrate that, in principle, the correct values of α can be learned via the sequence of binary observations x1, x2, …, further work is required to demonstrate whether and how neurons could implement the stochastic gradient algorithm or an alternative learning algorithm.

5 Discussion

Humans and other animals constantly have to adapt their behavioral strategies in response to changing environments: growth or shrinkage in food supplies, development of new threats and opportunities, gross changes in weather patterns, etc. Accurate tracking of such changes allows the animals to adapt their behavior in a timely fashion. Subjects have been observed to readily alter their behavioral strategy in response to recent trends of stimulus statistics, even when such trends are spurious. While such behavior is sub-optimal for certain behavioral experiments, which interleave stimuli randomly or pseudo-randomly, it is appropriate for environments in which changes do take place on a slow timescale. It has been observed, in tasks where statistical contingencies undergo occasional and unsignaled changes, that monkeys weigh past observations linearly but with decaying coefficients (into the past) in choosing between options [6]. We showed that human subjects behave very similarly in 2AFC tasks with randomized design, and that such discounting gives rise to the frequently observed sequential effects found in such tasks [5]. We showed that such exponential discounting approximates optimal Bayesian inference under assumptions of statistical non-stationarity, and derived an analytical, approximate relationship between the parameters of the optimal linear exponential filter and the statistical assumptions about the environment. We also showed how such computations can be implemented by leaky integrating neuronal dynamics, and how the optimal tuning of the leaky integration process can be achieved without explicit representation of probabilities.

Our work provides a normative account of why exponential discounting is observed in both stationary and non-stationary environments, and how it may be implemented neurally. The relevant neural mechanisms seem to be engaged both in tasks when the environmental contingencies are truly changing at unsignaled times, and also in tasks in which the underlying statistics are stationary but chance patterns masquerade as changing statistics (as seen in sequential effects). This work bridges and generalizes previous descriptive accounts of behavioral choice under non-stationary task conditions [6], as well as mechanistic models of how neuronal dynamics give rise to trial-to-trial interactions such as priming or sequential effects [5, 13, 18–20]. Based on the relationship we derived between the rate of behavioral discounting and the subjects’ implicit assumptions about the rate of environmental changes, we were able to “reverse-engineer” the subjects’ internal assumptions. Subjects appear to assume α = .77, or changing about once every four trials. This may have implications for understanding why working memory has the observed capacity of 4–7 items.

In a recent human fMRI study [22], subjects appeared to have different learning rates in two phases of slower and faster changes, but notably the first phase contained no changes, while the second phase contained frequent ones. This is a potential confound, as it has been observed that adaptive responses change significantly upon the first switch but then settle into a more stable regime [23]. It is also worth noting that different levels of sequential effects/adaptive response appear to take place at different time-scales [4, 23], and different neural areas seem to be engaged in processing different types of temporal patterns [24]. In the context of our model, it may imply that there is sequential adaptation happening at different levels of processing (e.g. sensory, cognitive, motor), and their different time-scales may reflect different characteristic rates of change at these different levels. A related issue is that the brain need not have an explicit representation of the rate of environmental change, which is implicitly encoded in the “leakiness” of neuronal integration over time. This is consistent with the observation of sequential effects even when subjects are explicitly told that the stimuli are random [4]. An alternative explanation is that subjects do not have complete faith in the experimenter’s instructions [25]. Further work is needed to clarify these issues.

We used both a computationally optimal Bayesian learning algorithm, and a simpler stochastic gradient descent algorithm, to learn the rate of change (1−α). Both algorithms were especially slow at learning the case when α = 0, which corresponds to truly randomized inputs. This implies that completely random statistics are difficult to internalize, when the observer is searching over a much larger hypothesis space that contains many possible models of statistical regularity, which can change over time. This is consistent with previous work [26] showing that discerning “randomness” from binary observations may require surprisingly many samples, when statistical regularities are presumed to change over time. Although this earlier work used a different model for what kind of statistical regularities are allowed, and how they change over time (temporally causal and Markovian in ours, an acausal correlation function in theirs), as well as the nature of the inference task (on-line in our setting, and off-line in theirs), the underlying principles and conclusions are similar: it is very difficult to discriminate a truly randomized sequence, which by chance would contain runs of repetitions and alternations, from one that has changing biases for repetitions and alternations over time.

Contributor Information

Angela J. Yu, Department of Cognitive Science, University of California, San Diego, ajyu@ucsd.edu

Jonathan D. Cohen, Department of Psychology, Princeton University, jdc@princeton.edu

References

1. Skinner BF. J. Exp. Psychol. 1948;38:168–172. doi: 10.1037/h0055873.

2. Ecott CL, Critchfield TS. J. App. Beh. Analysis. 2004;37:249–265. doi: 10.1901/jaba.2004.37-249.

3. Laming DRJ. Information Theory of Choice-Reaction Times. London: Academic Press; 1968.

4. Soetens E, Boer LC, Hueting JE. JEP: HPP. 1985;11:598–616.

5. Cho R, et al. Cognitive, Affective, & Behavioral Neurosci. 2002;2:283–299. doi: 10.3758/cabn.2.4.283.

6. Sugrue LP, Corrado GS, Newsome WT. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

7. Smith PL, Ratcliff R. Trends Neurosci. 27:161–168. doi: 10.1016/j.tins.2004.01.006.

8. Gold JI, Shadlen MN. Neuron. 2002;36:299–308. doi: 10.1016/s0896-6273(02)00971-6.

9. Wald A, Wolfowitz J. Ann. Math. Statisti. 1948;19:326–339.

10. Bogacz, et al. Psychological Review. 2006;113:700–765. doi: 10.1037/0033-295X.113.4.700.

11. Cook EP, Maunsell JHR. Nat. Neurosci. 2002;5:985–994. doi: 10.1038/nn924.

12. Grice GR. Perception & Psychophysics. 1972;12:103–107.

13. McClelland JL. Attention & Performance XIV. MIT Press; pp. 655–688.

14. Smith PL. Psychol. Rev. 1995;10:567–593.

15. Yu AJ. Adv. in Neur. Info. Proc. Systems. 2007;19:1545–1552.

16. Dayan P, Yu AJ. IETE J. Research. 2003;49:171–181.

17. Kim C, Myung IJ. 17th Ann. Meeting. of Cog. Sci. Soc; 1995. pp. 472–477.

18. Mozer MC, Colagrosso MD, Huber DE. Adv. in Neur. Info. Proc. Systems. 2002;14:51–57.

19. Mozer MC, Kinoshita S, Shettel M. Integrated Models of Cog. Sys. 2007:180–193.

20. Simen P, Cohen JD, Holmes P. Neur. Netw. 2006;19:1013–1026. doi: 10.1016/j.neunet.2006.05.038.

21. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; 1998.

22. Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Nat. Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

23. Kording KP, Tenenbaum JB, Shadmehr R. Nat. Neurosci. 2007;10:779–786. doi: 10.1038/nn1901.

24. Huettel SA, Mack PB, McCarthy G. Nat. Neurosci. 2002;5:485–490. doi: 10.1038/nn841.

25. Hertwig R, Ortmann A. Behavioral & Brain Sciences. 2001;24:383–403. doi: 10.1037/e683322011-032.

26. Bialek W. Preprint q-bio.NC/0508044. Princeton University; 2005.