Abstract

In this paper, the importance of modern technology in forensic investigations is discussed. Recent technological developments are creating new possibilities to perform robust scientific measurements and studies outside the controlled laboratory environment. The benefits of real-time, on-site forensic investigations are manifold, and such technology has the potential to substantially increase the speed and efficacy of the criminal justice system. However, these benefits are only realized when quality can be guaranteed at all times and findings can be used as forensic evidence in court. At the Netherlands Forensic Institute, innovation efforts are currently being undertaken to develop integrated forensic platform solutions that allow for the forensic investigation of human biological traces, the chemical identification of illicit drugs and the study of large amounts of digital evidence. These platforms enable field investigations, yield robust and validated evidence, and allow for forensic intelligence and the targeted use of expert capacity at the forensic institutes. This technological revolution in forensic science could ultimately lead to a paradigm shift in which a new role emerges for the forensic expert as developer and custodian of integrated forensic platforms.

Keywords: forensic science, technology, integrated forensic platform, digital forensic evidence, illicit drugs, forensic DNA analysis

1. Introduction: the interface of science and technology in forensic science

Technology can be regarded as a vital catalyst in the translation of scientific findings and insights into innovation. The added value of science is realized through technology, which enables society to benefit fully from new discoveries. Such benefits are very diverse (e.g. health, economy, trade, transport, communication, sustainability, conservation of cultural heritage, safety, security and justice) but have in common that they raise the quality of life and provide progress and prosperity in societies (assuming that these benefits outweigh the potential misuse and threats that are also associated with new scientific findings). The cycle of science, innovation and growth is the rationale behind the substantial and structural investment of developed countries in science programmes. From this broad and generic perspective, it is instructive to take an in-depth look at the interface between technology and forensic science. Contemporary forensic institutes operate state-of-the-art laboratories where evidence is studied with modern instruments. Without this often high-tech and expensive equipment, the forensic expert would not be able to generate the forensic findings that are so often of vital importance to solve a crime and to ensure high-quality rulings in a court of law [1]. Ideally, new technology is made ‘fit-for-forensic-purpose’ in such a way that accredited and efficient use in the forensic laboratory is enabled, evidential value is established and criminalistic interpretation is incorporated. Contemporary forensic STR DNA profiling is the most obvious and striking example of such a merger of scientific discovery, technological advancement, and forensic application and interpretation.

At the Netherlands Forensic Institute (NFI), forensic innovation efforts are currently being undertaken to create new forensic methods that can be broadly applied in the criminal justice system rather than serving only to increase the capability of forensic laboratories. Outside the forensic domain, too, recent technological advances allow scientific data, information and insights to be obtained outside a controlled laboratory environment, as discussed in more detail in §2. In ‘connecting the forensic laboratory to the scene’, the added value can be greatly increased, especially if field methods generate not just indicative information but robust data and findings that can be used directly as evidence in court. Within the Dutch Criminal Justice System, a large gain in speed, efficiency and quality is anticipated, especially for high-volume cases with limited forensic interpretation (e.g. chemical identification of drugs of abuse or securing and classifying digital data). However, the routine use of forensic methodologies outside the controlled laboratory environment by operators who are not forensic experts requires substantial technological effort. The quality of the findings must be guaranteed at all times, the equipment needs to function robustly under variable and unfavourable conditions, and it must be very easy to operate. Only technology can meet such requirements, and efforts should be aimed at automated forensic interpretation, reporting of uncertainties and minimizing potential operator errors. Wireless communication could form the basis of a forensic platform for quality assurance and central analysis of the data gathered by numerous field devices. This technological revolution in forensic science could lead to a paradigm shift in which a new role of the forensic expert emerges as developer of evidence analysers and custodian of integrated forensic platforms.
Forensic expertise and interpretation would then find their way into evidential value algorithms and quality control procedures that form the basis of the forensic field methodology. In the criminal justice chain, this could ultimately lead to a shared interdisciplinary forensic platform that allows case officers to investigate evidence first hand, rapidly and efficiently, with off-site support from forensic experts.

In this contribution, as part of the Royal Society meeting on ‘The paradigm shift for UK forensic science’, the potential of the novel approach of integrated forensic platforms will be illustrated through current NFI innovation efforts on mobile DNA technologies (§3a), on a platform for the criminal investigation of large amounts of digital data (§3b) and on a platform allowing rapid and robust chemical identification of illicit drugs in police stations and on crime scenes (§3c).

2. The integrated forensic platform, technology to ‘connect the laboratory to the scene’

In 2009, Microsoft External Research presented a vision of the future of science and introduced the fourth paradigm in science: data-intensive scientific discovery [2]. Science first progressed from experimental to theoretical science, and the introduction of computers in recent decades enabled the step to computational science. The fourth paradigm is based on the exponential growth in the data available to scientists, driven by the global growth of science and the distribution of findings through worldwide networks. In Microsoft's vision, a sustainable e-infrastructure could facilitate and accelerate the generation of scientific knowledge by supporting new ways of data acquisition, modelling, sharing, visualization, mining and archiving.

New ways of data acquisition include the use of sensors and sensor networks to gather scientific data on an unprecedented scale, not only in terms of size but also in terms of temporal and spatial resolution. Indeed, many recent studies on wireless sensor networks report on sensor technology, network design, communication protocols, data aggregation and platform security [3]. Sensor networks are used for so-called tracking and monitoring applications. Tracking networks are employed in, for example, the military, animal conservation and logistics domains, whereas monitoring networks can have a function in, for example, health (patient monitoring) [4] and environmental settings (environmental conditions, weather) [5]. The nodes typically used in sensor networks still have limited measurement capability and usually determine a single parameter or a limited set of physical or chemical parameters (e.g. temperature, pressure, oxygenation, conductivity and pH). For example, a wireless sensor network for the online monitoring of water quality was recently constructed using ion-selective electrodes to measure nitrate, ammonium and chloride simultaneously [5].

To gather much more detailed physical, chemical and biochemical data, complex devices and equipment would have to be connected in a network. However, this poses significant technological challenges, as such measurement devices and set-ups are normally operated stand-alone under controlled laboratory conditions. Furthermore, instrument maintenance and data processing require skilled operators. A first step has been made through the development of ‘point-of-care’ devices based on regular laboratory instrumentation. Point-of-care, a term borrowed from healthcare, in this context refers to benchtop equipment that does not require strict laboratory conditions and can be operated routinely by trained but not necessarily highly skilled operators. This typically requires instrument miniaturization, robust methodology, simplified user interfaces, automation, intrinsic calibration and quality control, and integrated data processing and reporting. The final step is the deployment of fully portable, ideally hand-held devices that are connected to the sensor platform and can be operated on-site in real time. The technological challenge of creating portable devices with measurement capabilities similar to those of regular laboratory equipment is enormous. However, the reward would be equally substantial, as this would result in a mobile sensor network capable of retrieving information at any desired location and time.

Current smartphones offer an interesting technology platform for mobile sensors in a wireless network, given their intrinsic capability to capture temporal and spatial information and to transfer data, and their optimized interface, which allows users to perform a wide range of tasks. In recent literature, the use of the smartphone camera as a spectral analyser has been reported for the chemical analysis of potassium [6] and chlorine [7], for the detection of amplified DNA [8] and for estimating banana ripeness [9]. A striking example of the potential of smartphone sensor networks is the iSPEX project to monitor and map atmospheric aerosols in The Netherlands [10]. Over 3000 citizen scientists participated in this study by measuring the degree of polarization of the cloud-free sky using a special add-on for the iPhone provided by the research team.

The scientific insights and developments described above can be of great value to forensic science, specifically in high-volume casework with limited forensic interpretation. There is a strong intrinsic motivation in the criminal justice system to make forensic information available as rapidly as possible, as this assists in solving crime and makes legal proceedings more efficient. To prevent the delays that naturally occur when evidence has to be dispatched to and analysed by forensic laboratories, law enforcement has an interest in conducting forensic analyses in-house and directly at the scene of crime. However, the lack of controlled laboratory conditions, rigorous quality control procedures and forensic expert knowledge usually prevents such findings from being used as evidence in court. Because the results are presumptive, subsequent analysis at the forensic laboratory often remains necessary. Additionally, the fragmented gathering of forensic information reduces forensic oversight and insight. These problems could be tackled, and the full potential of point-of-care and mobile forensic analyses realized, if measurement devices were operated in an integrated forensic network. Through the network, the calibration and quality control measures could be taken that would enable deployable forensic instrumentation to yield robust findings that can be used directly as evidence. The network would allow forensic experts to assess data generated outside the forensic laboratory and to provide direct assistance to operators on location. These activities would also reveal which samples require a more detailed follow-up investigation at the forensic laboratory. Forensic expert capacity is thus used more effectively, and findings can be fed back into the platform, creating a continuous cycle of platform and data development.
This approach would combine central data gathering, allowing forensic intelligence and knowledge management, with rapid and efficient decentralized forensic analysis. This novel concept, although technologically challenging, could lead to a step change in the efficiency and efficacy of the forensic information gathering process. It could also cause a paradigm shift in the role of forensic institutes and forensic experts in the criminal justice system, with forensic institutes and laboratories becoming custodians of the forensic platforms and of the associated point-of-care and portable equipment and methods. It would also allow forensic institutes to develop powerful forensic intelligence tools to reveal potential case and evidence connections, to better understand criminal activities, to monitor and optimize policing, to improve the efficiency of forensic investigations and to assist in crime prevention and disruption [11–14]. The design, implementation and consequences of integrated forensic platforms are discussed in more detail in §3.

3. Integrated forensic platform projects at the Netherlands Forensic Institute

(a). The potential and challenges of mobile DNA technologies

The first hours of a crime scene investigation, the so-called ‘golden hours’, are often of crucial importance for the police to obtain more information about the identity of potential suspects and to establish relevant facts and data. Especially in high-profile cases, the criminal justice system has a strong need for immediate information to focus the investigation and formulate plausible scenarios. In the forensic setting, the time from when the crime scene sample is secured to when the results are reported in the forensic testimony is defined as the turnaround time. A recent study in The Netherlands has shown that average turnaround times (from crime scene sampling to DNA report) are 66 days for traces from serious crimes and 44 days for traces from high-volume crimes [15]. This clearly illustrates that, although the forensic DNA investigation chain meets all volume and quality requirements, it does not meet the needs of the criminal justice system, and specifically those of law enforcement, with respect to the delivery time of results.

To meet the criminal justice system's demand for fast DNA results, forensic scientists have attempted to integrate fast technologies into protocols that speed up DNA analysis and deliver a DNA profile under stringent laboratory conditions within a short, predetermined time frame. Recently, a 6-h DNA typing service has been developed and validated by the NFI [16]. This has been achieved by combining a fast PCR protocol with an inhibitor-tolerant DNA polymerase. The DNA input is controlled by using small lifting tapes that transfer enough, but not too much, DNA from a swab or fabric. With this procedure, the DNA quantification step, which is usually applied in routine analysis to avoid PCR artefacts caused by adding too much template DNA, can be skipped.

Speed-optimized methods in the forensic laboratory offer the advantage of operating in a high-quality laboratory environment that minimizes the risk of contamination and other experimental errors. Additionally, forensic DNA experts are available for the evaluation of complex profiles and criminalistic interpretation. Approved DNA profiles can quickly be added to and searched against the database. The disadvantage of this approach, however, is that the samples still have to be transported to the laboratory, which requires additional time, logistics and paperwork. Stringent, time-consuming chain-of-custody procedures (from the crime scene to the forensic facility of the police and finally to the forensic laboratory) are required to minimize the risk of sample loss, sample mix-up or incorrect labelling. The main issue remains, however, that the DNA analysis process should delay the progress of the criminal investigation only minimally. Ideally, forensic DNA information is provided instantly and at the crime scene while meeting all the quality standards of the forensic laboratory.

It is therefore expected that, in the near future, research efforts will focus on the development of technologies that improve the speed of DNA evidence analysis. This technology will aim at robust, mobile, all-in-one platforms for STR profiling that reduce the actual turnaround time from days to hours. Currently, rapid analysis of reference material such as buccal swabs, and of samples containing large amounts of DNA such as blood, is possible using the all-in-one platforms. Technical innovation in fast and mobile DNA analysis is directed towards fully integrated instruments for the analysis of DNA traces [17]. These instruments will enable the analysis of biological traces on or near the scene and allow the results to be compared directly with reference profiles in the DNA database to identify suspects, witnesses and/or victims. With these opportunities, an investigation team will be able to obtain identification information already in the initial stages of the crime scene investigation. Such a technological innovation will change the conduct of criminal investigations compared with today's practice.

When evaluating instruments for local DNA analysis, it is important to be aware of their performance limits. Although these instruments must produce equally correct and reliable DNA typing results, mobile systems are not necessarily as sensitive as testing under laboratory conditions. Existing mobile DNA platforms integrate extraction, PCR amplification, fragment separation and detection without human intervention; interpretation and statistical evaluation of the results still require human intervention and technical review [18]. One mobile platform was validated by the NFI and initial results were published [19]. Recent additional tests on the updated device showed great improvements, with successful typing results from reference samples and from crime scene stains such as saliva and blood, which inherently contain adequate amounts of template DNA. Further improvements, such as quantification and increased sensitivity, are expected in the near future. Owing to the limited sensitivity of the available systems, an evidence-based selection system for biological samples is key to deciding whether to process a sample for rapid analysis at the crime scene or to secure it for laboratory testing. This requires a substantial body of expert knowledge on which biological traces are suitable for fast mobile analysis and which need to be processed at a fully equipped forensic DNA typing laboratory. To assist this selection process, a novel ‘Lab-on-a-chip’ technology is being studied in The Netherlands [20]. The aim is to develop a semi-quantitative method to screen traces at the crime scene for human DNA, with results provided immediately to the forensic investigation team. Pre-analysis of trace samples for human DNA may also indicate whether or not a trace is likely to be relevant for further forensic DNA analysis.
Currently, biological traces are frequently secured from the crime scene without any knowledge of the presence of human DNA in the trace of interest. It is expected that the future availability of a fast and sensitive DNA semi-quantification method for use at the crime scene will improve the selection process and yield higher-quality biological samples from the crime scene.
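The selection step described above is essentially a decision rule applied to each screened trace. The sketch below illustrates it under stated assumptions: the screening method returns a semi-quantitative estimate of human DNA in nanograms, the investigator can flag a suspected mixture, and both thresholds are hypothetical placeholders rather than validated values from the NFI or any instrument. The names `TraceScreen`, `Route` and `select_route` are invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    MOBILE_RAPID = "rapid analysis on the mobile platform"
    LABORATORY = "secure for laboratory testing"
    NO_HUMAN_DNA = "no human DNA detected; store for possible later analysis"


# Hypothetical placeholder thresholds (ng of human DNA). Real values would have
# to follow from validation of a specific screening method and mobile platform.
DETECTION_LIMIT_NG = 0.05
MOBILE_MINIMUM_NG = 1.0


@dataclass
class TraceScreen:
    trace_id: str
    human_dna_ng: float      # semi-quantitative screening estimate
    suspected_mixture: bool  # e.g. flagged by the crime scene investigator


def select_route(screen: TraceScreen) -> Route:
    """Choose where a trace is analysed; low-level or possibly mixed traces
    default to the laboratory, where sensitivity and expert review are higher."""
    if screen.human_dna_ng < DETECTION_LIMIT_NG:
        return Route.NO_HUMAN_DNA
    if screen.suspected_mixture or screen.human_dna_ng < MOBILE_MINIMUM_NG:
        return Route.LABORATORY
    return Route.MOBILE_RAPID


# Usage with three hypothetical screened traces.
for screen in (TraceScreen("T1", 3.2, False),
               TraceScreen("T2", 0.4, False),
               TraceScreen("T3", 5.0, True)):
    print(screen.trace_id, "->", select_route(screen).value)
```

Routing anything low-level or possibly mixed to the laboratory by default keeps the mobile platform for the traces it handles well, such as reference samples and DNA-rich blood or saliva stains.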

Besides the remaining technological challenges, matters such as the storage of DNA traces, the possibility of performing a second analysis, data protection, privacy and a clear legal framework should also be addressed. In The Netherlands, for example, DNA profiling is only allowed upon authorization by the Public Prosecutor, the analysis must be performed by an accredited laboratory and sufficient DNA material must be stored for additional (control/second opinion) analyses. The legal and quality aspects are equally important for creating a secure, just and robust environment for the integration of DNA profiling at or near the crime scene.

Regulations and procedures regarding the integration of mobile DNA platforms should also take crime scene investigation strategies into account. Scientific data on, and understanding of, human factors and effective procedures at the crime scene are still lacking, which makes it challenging to fully understand the potential and pitfalls of real-time DNA profiling of biological traces. Finally, the limitations with respect to complex stains and forensic interpretation need to be carefully considered. For complex forensic DNA investigations, the laboratory will remain the first choice for obtaining optimal typing results from mixed and low-level biological traces. As these complex analyses and the associated criminalistic interpretation (e.g. crime relatedness, support for prosecution and defence hypotheses) are often of crucial importance, the investigation at the scene or police station should preserve all options for advanced forensic analysis at a later stage in the DNA laboratory.

(b). Hansken, a new approach to big data forensics

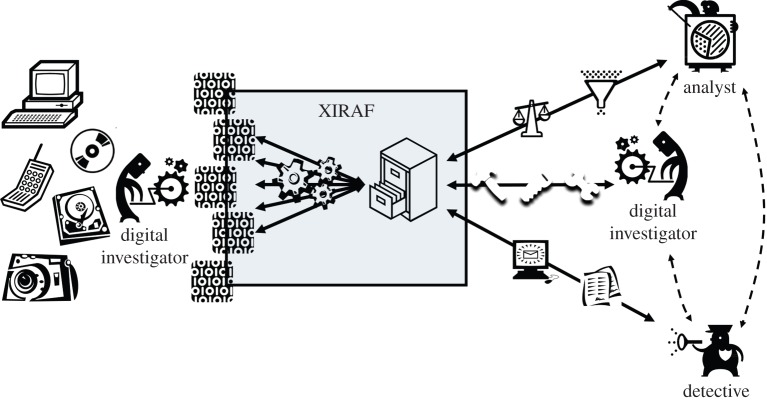

Within the NFI, a new approach called XIRAF has been realized for processing data from various sources of digital evidence, such as data storage media from computers and mobile phones [21]. As the amount of data is expanding exponentially each year, a scalable solution for processing the data is needed [22]. The XIRAF approach has been shown to reduce the case backlog by helping the end-user understand the digital material in a timely fashion. Forensic procedures in use today place on the digital investigator the burden of providing relevant digital evidence, of maintaining the digital analysis and storage equipment, and finally of providing in-depth knowledge on the origin of the digital traces found. The implementation of an automated analysis framework shifts the task of finding relevant digital traces from the digital investigator to the tactical investigator, who has more knowledge of the facts and circumstances surrounding the case. In this way, the digital investigator can devote all of their knowledge and education to supporting the tactical investigator in interpreting the digital traces found, as illustrated in figure 1. Evidence from various law enforcement agencies has shown that investigations in an urban population of roughly 15 million people generate between 4 and 20 PB of data to be analysed annually, with on average 4 TB of data per case. XIRAF presents all the data from a case in one overall view of the digital devices pertaining to the case. The investigator then applies queries to the data in order to reduce the set of traces. However, the XIRAF approach reached its limits at 1 PB of raw data, and a more scalable successor, Hansken, was designed.

Figure 1.

Infographic of NFI's XIRAF/HANSKEN technology illustrating the concept of a national platform for processing digital evidence in the Dutch Criminal Justice System.
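The data volumes quoted above can be put in perspective with a simple division. This back-of-the-envelope sketch assumes decimal units (1 PB = 1000 TB) and that the 4 TB average per case applies across the whole range; the resulting case counts are derived here, not figures reported by the law enforcement agencies.

```python
TB_PER_PB = 1000  # decimal (SI) units assumed: 1 PB = 1000 TB


def cases_for_volume(volume_pb: float, average_case_tb: float = 4.0) -> float:
    """Number of average-sized cases that together fill a given data volume."""
    return volume_pb * TB_PER_PB / average_case_tb


# 4 and 20 PB: quoted annual range; 1 PB: the limit reached by XIRAF.
for volume_pb in (4, 20, 1):
    print(f"{volume_pb:>2} PB -> about {cases_for_volume(volume_pb):.0f} cases")
# Prints:
#  4 PB -> about 1000 cases
# 20 PB -> about 5000 cases
#  1 PB -> about 250 cases
```

On these assumptions, such a population yields roughly 1000 to 5000 average-sized cases a year, and the 1 PB limit of XIRAF corresponds to about 250 such cases.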

A scalable solution can only be achieved by cutting overhead costs and by computing and storing the data predominantly in an aggregated fashion in one (logical) place. In our opinion, however, aggregation on this scale is only possible when the following principles are adhered to from the earliest architecture and design phase through to deployment:

— security;

— privacy;

— transparency;

— multi-tenancy;

— future-proofing;

— data retention;

— reliability;

— high availability.

Security, privacy and transparency are necessary to demonstrate to the public and to magistrates that the data were handled responsibly, by whom and at what point in time. Above all, however, such aggregation creates a platform where analysis methods can be developed, shared and used, thus addressing the bottleneck of knowledge transfer. The rate of knowledge transfer in the digital forensics arena is not keeping up with the rate of technological change, especially as the methods themselves become more complicated, both to execute and to understand. When an analysis is implemented in the platform, it is executed in many more cases, and more investigators use its results. Deficiencies in the analysis are therefore found sooner and more often, which leads to a continuous improvement cycle.
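One common way to make data handling demonstrable, in the spirit of the transparency principle, is a tamper-evident audit trail. The sketch below is a generic hash-chained log, not a description of Hansken's actual design; the class, field and user names are hypothetical. Each entry stores the SHA-256 hash of its predecessor, so altering any earlier record breaks verification.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    """SHA-256 over canonical JSON (sorted keys) of an entry without its hash."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditLog:
    """Append-only log in which every entry commits to the previous one."""

    def __init__(self) -> None:
        self.entries: list = []

    def record(self, user: str, action: str, item: str,
               when: Optional[str] = None) -> dict:
        body = {
            "user": user,
            "action": action,
            "item": item,
            "time": when or datetime.now(timezone.utc).isoformat(),
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = dict(body, hash=_entry_hash(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is unbroken."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _entry_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("investigator_a", "query", "case-001/device-03")
log.record("expert_b", "export", "case-001/report")
print(log.verify())  # True

log.entries[0]["user"] = "someone_else"  # simulate tampering with an old entry
print(log.verify())  # False
```

A hash chain only reveals tampering if the latest hash is also kept somewhere the platform operator cannot silently rewrite, for example in externally stored checkpoints; that part is left out of this sketch.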

As the amounts of data grow very rapidly, data reduction is needed to remain cost-effective. Several methods exist for intelligent data analysis and triage, and in future approaches several of them can be linked to Hansken. Developments in deep learning are relevant here, and experience with user queries will lead to new strategies for more efficient searches. In deep learning, different representations can capture different explanatory factors of variation in the data. Specific domain knowledge can be used to help design the representations, and generic priors can also be used. New developments in unsupervised feature learning, including probabilistic models, can be implemented [23].
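As an example of data reduction, one widely used triage technique in digital forensics (named here as an illustration; the text does not specify which methods Hansken uses) is known-file filtering: files whose cryptographic hash appears in a reference set of known, irrelevant files, such as standard operating-system files, are set aside so that investigators only see the remainder. A minimal sketch with hypothetical file names and a hash set built on the spot:

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Collection, Iterable, Iterator


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large evidence files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def filter_known(paths: Iterable[Path], known_hashes: Collection[str]) -> Iterator[Path]:
    """Yield only files whose hash is not in the reference set of known files."""
    for path in paths:
        if sha256_of(path) not in known_hashes:
            yield path


# Usage with two hypothetical files: one "known" system file and one user file.
with tempfile.TemporaryDirectory() as workdir:
    known_file = Path(workdir, "system.dll")
    known_file.write_bytes(b"known operating-system file")
    user_file = Path(workdir, "notes.txt")
    user_file.write_bytes(b"user document")
    known = {hashlib.sha256(b"known operating-system file").hexdigest()}
    remaining = [p.name for p in filter_known([known_file, user_file], known)]

print(remaining)  # ['notes.txt']
```

In practice the reference set would hold millions of hashes from curated collections, and the same lookup can also be inverted to flag files that match hashes of known illegal material.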

Within digital data analysis, it is important to provide information on the uncertainty of the digital data acquired and of the conclusions that can be drawn from them. Casey describes the different potential sources of error in digital evidence [24]. These range from corrupt log files and partial data restored from information sources to errors in the interpretation of the data. These uncertainties are characterized further in best-practice guides on digital evidence within the ISO 17025 framework [25]. As the ground truth in big datasets is often unknown, and the determination of priors and likelihood ratios, which are preferred in forensic science, can be complex, alternative approaches such as the Big Data Bootstrap method have been suggested, in which an absolute confidence interval is provided instead of a relative error [26].
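The text does not spell out the Big Data Bootstrap. Assuming it refers to a scalable bootstrap such as the Bag of Little Bootstraps (BLB), the idea is to bootstrap several small subsets of size b = n^γ, compute a confidence interval within each subset, and average the interval bounds. The sketch below applies this to the mean of synthetic data; the function name and parameter values are illustrative assumptions, not taken from [26].

```python
import math
import random
import statistics


def blb_mean_ci(data, n_subsets=5, gamma=0.7, n_resamples=50, alpha=0.05, seed=0):
    """Confidence interval for the mean in the style of the Bag of Little
    Bootstraps: bootstrap small subsets, then average their interval bounds."""
    rng = random.Random(seed)
    n = len(data)
    b = max(2, int(n ** gamma))  # subset size b = n**gamma, much smaller than n
    lower_bounds, upper_bounds = [], []
    for _ in range(n_subsets):
        subset = rng.sample(data, b)  # subsample without replacement
        estimates = []
        for _ in range(n_resamples):
            # Drawing n points with replacement from the b-point subset mimics
            # a full-size resample while using only b distinct values.
            resample = rng.choices(subset, k=n)
            estimates.append(statistics.fmean(resample))
        estimates.sort()
        lo_idx = int(alpha / 2 * (n_resamples - 1))
        hi_idx = math.ceil((1 - alpha / 2) * (n_resamples - 1))
        lower_bounds.append(estimates[lo_idx])
        upper_bounds.append(estimates[hi_idx])
    return statistics.fmean(lower_bounds), statistics.fmean(upper_bounds)


# Usage on synthetic data (normal, mean 10, standard deviation 2).
generator = random.Random(42)
measurements = [generator.gauss(10, 2) for _ in range(2000)]
low, high = blb_mean_ci(measurements)
print(f"95% interval for the mean: [{low:.3f}, {high:.3f}]")
```

Drawing n values with `random.choices` keeps the sketch short but still costs O(n) per resample; a practical implementation would instead draw multinomial counts for the b subset points and compute a weighted estimate, which is what makes the method scale.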

Images can also be linked to other sources, such as databases of camera sensor patterns from cameras that have been used in child pornography cases. Using camera identification based on photo response non-uniformity (PRNU), new links between images and cameras can be made [27]. Other databases, such as biometric databases of faces and other body features, could also be linked in the future. To improve the speed of the system, approaches that use a GPU cluster are being developed [28,29].
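The principle behind PRNU-based camera identification can be illustrated with a toy one-dimensional model. Each sensor multiplies the incoming light by a fixed pattern 1 + K; a camera fingerprint is estimated from the noise residuals W = I - F(I) of several images as K_hat = sum(W * I) / sum(I * I); and a query image is attributed by correlating its residual with I * K_hat. The sketch assumes flat synthetic scenes, a 3-point moving average as the denoiser F and made-up noise levels; real systems work on two-dimensional images with wavelet-based denoising and use statistics such as the peak-to-correlation energy.

```python
import random
import statistics

rng = random.Random(1)
N_PIXELS = 2000


def denoise(signal):
    """Crude denoiser F: 3-point moving average (2 points at the edges)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def residual(signal):
    """Noise residual W = I - F(I); the sensor pattern survives in W."""
    return [x - f for x, f in zip(signal, denoise(signal))]


def estimate_fingerprint(images):
    """Per-pixel estimate K_hat = sum(W * I) / sum(I * I) over several images."""
    num = [0.0] * len(images[0])
    den = [0.0] * len(images[0])
    for image in images:
        for j, (w, x) in enumerate(zip(residual(image), image)):
            num[j] += w * x
            den[j] += x * x
    return [a / b for a, b in zip(num, den)]


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def make_camera():
    """Hypothetical PRNU pattern K: small, fixed per-pixel gain deviations."""
    return [rng.gauss(0, 0.05) for _ in range(N_PIXELS)]


def shoot(prnu):
    """Flat synthetic scene of random brightness L, imaged as L(1 + K) + noise."""
    level = rng.uniform(80, 120)
    return [level * (1 + k) + rng.gauss(0, 1) for k in prnu]


def match_score(image, fingerprint):
    """Correlate the query residual with the expected PRNU term I * K_hat."""
    expected = [x * k for x, k in zip(image, fingerprint)]
    return correlation(residual(image), expected)


camera_a, camera_b = make_camera(), make_camera()
fingerprint_a = estimate_fingerprint([shoot(camera_a) for _ in range(5)])
same = match_score(shoot(camera_a), fingerprint_a)
other = match_score(shoot(camera_b), fingerprint_a)
print(f"same camera: {same:.2f}, other camera: {other:.2f}")
```

An image from the fingerprinted camera correlates strongly with the fingerprint, while an image from another camera gives a correlation close to zero, which is what allows images to be linked to a camera in a database.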

It is expected that in 2018 more than 70% of the data on the Internet will be video data [30]. The challenge with large amounts of video and image data, however, is that the data are heterogeneous and not standardized. For example, face recognition software is often developed for faces taken from frontal passport photographs. With variable viewing angles, distances, lighting and contrast, it becomes more difficult to process the data automatically, although improvements are being made [31]. Approaches that involve user interaction can help to reduce the examination time [32].

(c). The NFiDENT project, reliable drug analysis within a day

The problem of illicit drugs is a persistent and widespread phenomenon [33]. One of the tasks in the high-volume process of drug analysis is to reliably identify the nature of the seized material for court. Nowadays, the logistics and bureaucracy involved in the complete process of properly identifying these illicit substances are often more time-consuming than the chemical analysis itself, making the overall endeavour suboptimal. To obtain useful information at an early stage of the investigation and to use the capacity of both police and forensic institutes efficiently, all kinds of presumptive tests are used [34]. However, this does not solve the real problem at hand: the process of identifying the nature of illicit substances is inefficient and consumes too much time, and thus capacity, relative to the nature of the cases.

An ideal solution for this situation would meet the following requirements:

— the processes should be simple and fast, but of such a standard that immediate proof is attained. In this way, cases only have to be handled once, which will lead to an enormous reduction in paperwork and bureaucracy;

— the cases should be handled and finished/reported the same day that they are received. In this way, no logistic process is needed other than transport of the sample to the final storage location (if this is not already close to the place where the illicit drugs are registered and investigated);

— the identification apparatus should be close to the place where the illicit drugs are stored;

— the technology and equipment used for identification should be robust and easy to use by non-experts and preferably based on generally accepted methodologies;

— a quality system should be designed that is safe, easy to use and capable of signalling those cases where further analysis is needed, for example at a forensic laboratory with additional techniques; and

— the acquired chemical data should be accessible to the forensic expert, and no information that is crucial or relevant for the forensic interpretation of casework should be lost.
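The quality-system requirement above can be read as a routing rule applied to every measurement. A minimal sketch, assuming three inputs per measurement (whether the instrument's check with a reference standard passed, the best library hit, and its match score) together with a hypothetical validated scope and score threshold; none of these values, names or substances come from the NFiDENT project.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical validated scope and acceptance threshold; in practice both would
# follow from the validation of the specific mobile method.
VALIDATED_SCOPE = {"cocaine", "MDMA", "amphetamine", "heroin"}
MIN_MATCH_SCORE = 0.90


@dataclass
class Measurement:
    case_id: str
    qc_standard_passed: bool   # e.g. a daily check with a reference standard
    best_match: Optional[str]  # library hit, or None if nothing matched
    match_score: float         # similarity to the library spectrum, 0..1


def route(m: Measurement) -> str:
    """Return 'report' if the result may be reported directly; otherwise the
    reason the case is signalled to the forensic laboratory."""
    if not m.qc_standard_passed:
        return "refer: quality control failed"
    if m.best_match is None or m.match_score < MIN_MATCH_SCORE:
        return "refer: no reliable identification"
    if m.best_match not in VALIDATED_SCOPE:
        return "refer: substance outside validated scope"
    return "report"


print(route(Measurement("case-1", True, "cocaine", 0.97)))  # report
print(route(Measurement("case-2", True, None, 0.0)))  # refer: no reliable identification
```

Checking quality control first means that no identification is reported from an instrument whose own check failed, regardless of how good the library match looks.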

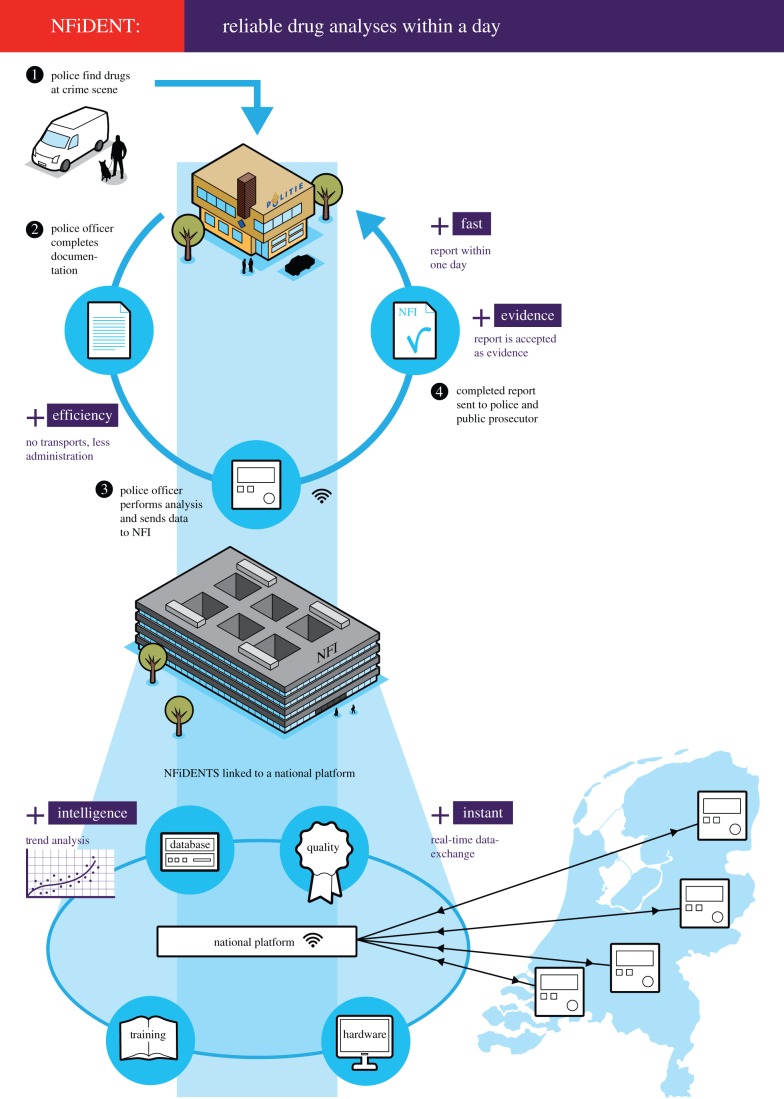

In The Netherlands, a new concept, illustrated in figure 2, has been designed on the basis of the ideal situation described above. This required a new way of cooperation between the Dutch National Police, the Public Prosecution Service and the NFI.

Figure 2.

Infographic of the NFiDENT project illustrating the concept of a national platform for accredited drug analysis and identification in the Dutch Criminal Justice System.

The key elements in this new concept are:

— the placement of mobile analysis equipment at police stations;

— drug analysis measurements that are initiated by the police;

— a platform for the mobile analysis equipment, hosted by the NFI, which facilitates and integrates analysis, quality control and database management.

The main objective and challenge in this new way of working is that the report for court is accepted as reliable evidence with the same status as regular forensic reports.

To identify illicit drugs in the complex mixtures encountered in casework, the gold standard is a combination of a separation technique and a spectroscopic technique. Typically, for the most prevalent drugs encountered on the street, gas chromatography is used in conjunction with a mass spectrometer (GC–MS) or an infrared detector (GC–IR). The traditional analysis of drug samples using a GC–MS or GC–IR system often requires time-consuming sample preparation, a chromatographic separation and finally the (automated) recording of the MS or IR spectrum. Conventionally, a gas chromatograph coupled to an MS or IR detector is a heavy, power-hungry and complex system that needs skilled people to operate it. To move the analysis outside the forensic laboratory while keeping it confirmatory, it has to be simplified so that no specific analytical skills are needed. This includes sample preparation, which should be minimized as much as possible, and the whole process of identifying the targeted illicit drugs, which should be automated. A number of GC–MS manufacturers have invested in miniaturization for the on-site analysis of (semi-)volatiles. Transportable or hand-held systems have been developed to aid homeland security at airports, in military operations and in environmental pollution control [35–37]. These developments have led to commercially available analytical equipment that has moved out of the forensic laboratory to places where less technical users need it. In the case of drug samples, the equipment is applied to confirm indicative results already obtained with the presumptive tests used by law enforcement. When the well-established GC–MS technique is used for the analysis of solid street drugs in the field without sample preparation, direct thermal desorption is a fast and reliable means of sample introduction.
This type of sample introduction is made available on a number of mobile GC–MS systems under different names, e.g. prepless sample introduction probe, direct sample introduction probe or Chromatoprobe [38]. To reduce the size of the mobile GC–MS systems, a transition to ion trap based mass spectrometric detectors is seen [39,40]. The advantage of this type of analyser is that the pumping system does not need to maintain a very high vacuum and ions can be analysed at relatively high pressures. In addition, the possibility to implement automated tandem mass spectrometry (MS/MS) is beneficial for the identification process.
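As an illustration of what an automated identification step can look like, the sketch below scores a measured mass spectrum against a small reference library using cosine (dot-product) similarity, a common library-search metric. It is a minimal sketch under stated assumptions, not the algorithm of any particular mobile GC–MS system: spectra are assumed to be unit-resolution peak lists, the library entries `reference_A` and `reference_B` and their peaks are made-up placeholders, and the acceptance threshold of 0.9 is hypothetical and would have to be set by validation.

```python
import math

# A spectrum maps unit-resolution m/z values to relative intensities.
Spectrum = dict[int, float]


def cosine_similarity(a: Spectrum, b: Spectrum) -> float:
    """Cosine of the angle between two spectra treated as intensity vectors."""
    dot = sum(a[mz] * b[mz] for mz in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def library_search(query: Spectrum, library: dict[str, Spectrum],
                   threshold: float = 0.9):
    """Return (best match, score); the match is None if the score is below threshold."""
    best_name, best_score = None, 0.0
    for name, reference in library.items():
        score = cosine_similarity(query, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score  # no sufficiently similar reference: not identified
    return best_name, best_score


# Hypothetical reference library and measured spectrum (placeholder values).
library = {
    "reference_A": {82: 100.0, 182: 80.0, 303: 20.0},
    "reference_B": {44: 100.0, 91: 30.0, 65: 10.0},
}
query = {82: 95.0, 182: 85.0, 303: 15.0, 105: 5.0}

hit, score = library_search(query, library)
print(hit, round(score, 3))  # prints: reference_A 0.997
```

Returning `None` below the threshold, rather than always reporting the closest entry, reflects the requirement that a field result must be confirmatory: a weak match is referred to the laboratory instead of being reported.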

Inevitably, the transition of analytical equipment from a laboratory-scale instrument to a miniaturized version involves a compromise in analytical performance. This is not necessarily an obstacle for mobile GC–MS systems, as long as forensic experts are confident in the identification of the drugs being reported. It does, however, require the forensic expert to validate the new GC–MS method when the analysis moves from the laboratory to the field, i.e. the police station or crime scene. One implication may be that only a limited set of illicit drugs can be identified compared with traditional GC–MS methods. As long as this set covers the larger fraction of the drug samples seized, the transition is still beneficial. This also holds for the mobile FLIR G460 GC–MS system that is currently being tested at the NFI and at a Dutch police station. The aim of the project is that at least 80% of all illicit drug cases can be dealt with at the police station.
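The 80% aim can be framed as a coverage question over historical casework: what fraction of cases contain only substances that the validated field method can identify? The sketch below uses hypothetical case data and a hypothetical target list, and assumes that a case is suitable for the police station only if every controlled substance the laboratory found in it is on the validated list.

```python
def dispatchable_fraction(cases: list[set[str]], validated_targets: set[str]) -> float:
    """Fraction of cases in which every identified substance is on the validated list."""
    if not cases:
        return 0.0
    covered = sum(1 for substances in cases if substances <= validated_targets)
    return covered / len(cases)


# Hypothetical validated target list for the field method.
validated_targets = {"cocaine", "amphetamine", "MDMA", "heroin"}

# Hypothetical historical cases: controlled substances identified by the laboratory.
historical_cases = [
    {"cocaine"}, {"cocaine"}, {"amphetamine"}, {"MDMA"}, {"heroin"},
    {"cocaine"}, {"MDMA"}, {"heroin"}, {"4-FA"}, {"ketamine", "MDMA"},
]

fraction = dispatchable_fraction(historical_cases, validated_targets)
meets_aim = fraction >= 0.80  # project aim stated in the text
print(f"{fraction:.0%} of cases covered; aim met: {meets_aim}")
```

Using the subset test `<=` means a single unvalidated substance sends the whole case to the laboratory, which is the conservative choice for evidence that must stand in court.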

For the analysis of illicit drugs, a number of other analytical methods are available that may be suitable for fast and easy identification without sample preparation. These methods are based either on mass spectrometry alone, through direct ionization of substances from (solid) drug samples followed by MS(n) [41], or on spectroscopic techniques such as (near-)infrared [42] or Raman spectroscopy [43,44]. The potential of spectroscopic techniques is underestimated in many forensic laboratories, largely because of the difficulty of obtaining robust results when illicit drugs are mixed with rare cutting agents or contain unusual and unknown excipients. However, the capabilities of modern spectroscopic equipment have improved, including direct sampling devices that minimize sample preparation and multi-component algorithms that identify illicit drugs in typical street samples [45]. The two main advantages of spectroscopic analysis are short analysis times and low costs. A drawback of these techniques used to be their relatively high limits of detection compared with the traditional GC–MS methods used for the most prevalent drugs, but improvements in chemometrics have pushed these limits lower. Unfortunately, in contrast to GC–MS analysis, no thorough validation of the discriminative power of these methods has ever been published for illicit drugs such as cocaine or amphetamine-type stimulants, and it is unknown whether these spectroscopic techniques can distinguish these substances from all possible stereoisomers and/or homologues. Depending on the spectroscopic technique and the composition of the drug sample, the spectra obtained are not always interpretable; identification then depends entirely on the ability of the chemometric software to identify the illicit drugs present and on the way the results are displayed for court presentation.
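To make the notion of a multi-component algorithm more concrete, the sketch below uses classical least squares (CLS), one standard chemometric approach that models a measured spectrum as a linear combination of pure-component reference spectra. This is an assumed technique chosen for illustration, not the method of the cited work [45]; the four-point "spectra" and the component names are invented, and practical software would add baseline correction, non-negativity constraints and residual checks that are omitted here.

```python
def solve_linear(matrix: list[list[float]], rhs: list[float]) -> list[float]:
    """Solve a small square system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("reference spectra are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def cls_unmix(mixture: list[float], references: dict[str, list[float]]) -> dict[str, float]:
    """Least-squares weights c minimising ||mixture - sum_k c_k * reference_k||^2."""
    names = list(references)
    cols = [references[name] for name in names]
    k = len(cols)
    # Normal equations (A^T A) c = A^T y, with the reference spectra as columns of A.
    ata = [[sum(p * q for p, q in zip(cols[i], cols[j])) for j in range(k)]
           for i in range(k)]
    aty = [sum(p * q for p, q in zip(cols[i], mixture)) for i in range(k)]
    return dict(zip(names, solve_linear(ata, aty)))


# Invented four-point reference spectra on a shared wavenumber grid.
references = {
    "drug": [1.0, 0.2, 0.0, 0.5],
    "cutting_agent": [0.0, 0.6, 1.0, 0.1],
}
# Synthetic mixture: 0.7 x drug + 0.3 x cutting agent.
mixture = [0.7, 0.32, 0.3, 0.38]

weights = cls_unmix(mixture, references)
print({name: round(w, 3) for name, w in weights.items()})
```

The example also hints at the limitation noted above: CLS can only attribute signal to components that are in the reference set, so an unknown excipient shows up as unexplained residual rather than as an identification.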

The use of direct ionization techniques, also known as ambient ionization techniques, for the mass spectrometric analysis of illicit drugs with limited or no sample preparation is a proven approach for easy and fast on-site identification. A large number of ambient ionization methods have been developed, of which desorption electrospray ionization (DESI) [46] and direct analysis in real time (DART) [47] are the best known. When first introduced, these ionization techniques were coupled directly to expensive, sophisticated commercial mass spectrometers that require skilled operators and laboratory conditions. Nowadays, a number of miniaturized MS systems based on different types of ion trap mass spectrometers have been developed that use an ambient ionization source for direct analysis [48].

Two promising ambient ionization techniques that can easily be installed and operated in combination with miniaturized MS systems are low-temperature plasma (LTP) desorption and paper-spray ionization. LTP desorption is based on active species formed in a low-power plasma. This gentle desorption/ionization technique has the advantage that the surface is hardly modified when chemicals are sampled directly from the surface of material containing illicit drugs [49]. In paper-spray ionization, ions are generated directly from a sample placed on a triangular paper substrate by applying a high voltage to the paper while adding a small amount of solvent(s), in a manner comparable to (nano-)electrospray ionization [48,50]. The drug sample can be applied to the paper by wiping or as a solution.

In the case of complex mixtures, many different substances can be desorbed, ionized and introduced into the ion trap. For further identification, an automated MS/MS analysis (multiple reaction monitoring, MRM) should be performed on selected drug analytes that are expected to be present on the basis of the presumptive test results. In addition, the presence in the full-scan MS spectrum of a combination of m/z values that can be ascribed to known by-products or common cutting agents of the targeted drugs may assist the identification process.
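The decision logic of such a targeted MS/MS confirmation can be sketched as follows. The sketch assumes that each target is defined by a precursor m/z and two product ions with an expected intensity ratio, and that a result counts as confirmed only when both product ions are found within a mass tolerance and their ratio lies within a relative tolerance of the expected value. The target name `target_X`, all m/z values, the ratio and both tolerances are hypothetical placeholders, not validated identification criteria.

```python
from dataclasses import dataclass

Peak = tuple[float, float]  # (m/z, intensity)


@dataclass(frozen=True)
class Target:
    name: str
    precursor_mz: float
    product_mz: tuple[float, float]  # (main product ion, qualifier product ion)
    expected_ratio: float  # qualifier intensity / main product intensity


def peak_intensity(spectrum: list[Peak], mz: float, tol: float) -> float:
    """Most intense peak within +/- tol of mz, or 0.0 if none is present."""
    return max((i for m, i in spectrum if abs(m - mz) <= tol), default=0.0)


def confirm(target: Target, scans: dict[float, list[Peak]],
            mz_tol: float = 0.5, ratio_tol: float = 0.2) -> bool:
    """scans maps each isolated precursor m/z to its product-ion spectrum."""
    for precursor, spectrum in scans.items():
        if abs(precursor - target.precursor_mz) > mz_tol:
            continue  # this scan fragmented a different precursor
        main = peak_intensity(spectrum, target.product_mz[0], mz_tol)
        qualifier = peak_intensity(spectrum, target.product_mz[1], mz_tol)
        if main > 0.0 and qualifier > 0.0:
            ratio = qualifier / main
            if abs(ratio - target.expected_ratio) <= ratio_tol * target.expected_ratio:
                return True
    return False


# Hypothetical target definition and scan data (placeholder values).
target = Target("target_X", precursor_mz=300.0, product_mz=(180.0, 80.0),
                expected_ratio=0.5)
scans = {300.1: [(180.1, 1000.0), (80.2, 540.0), (120.0, 50.0)]}
print(confirm(target, scans))
```

Requiring a second product ion and a consistent ion ratio, rather than a single transition, is what makes the result more discriminating than the presumptive test that selected the target in the first place.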

The current trend in analytical instrument development is towards smaller, high-performance instruments with sophisticated software and a simplified user interface. Ideally, the functions and capabilities of these modern devices should be tailored to the drug substances that need to be identified. For the analysis of solid drug samples, it is anticipated that a robust, easy-to-operate small mass spectrometer with direct ionization capability, adequate MS performance and fit-for-purpose software will eliminate the need for complex, time-consuming sample pretreatment and chromatographic separation.

4. Concluding remarks on the future of forensic science

Further advances in forensic science, combined with the introduction of new technology and methods that create added value (innovation) for the end-user, will certainly be able to bring about a paradigm shift within the criminal justice system. However, according to Downes' law of disruption, technology changes exponentially while social, economic and legal systems change incrementally [51]. This makes it difficult to introduce and implement new technology. Although Downes' law refers to the fast developments in the digital world, it also holds for many other areas, and especially for the criminal justice system, where new legislation and extensive quality control measures are required before new forensic methods can be applied routinely.

The application of new science and technology over the past decades, e.g. in the areas of microbiology, chemistry and information technology, has already created considerable growth in the demand for forensic science services. If new technology becomes available that can provide valuable information to solve crime, there will immediately be a strong demand for it.

It is important to realize that, within the scientific areas mentioned above, new classes of trace evidence have come into play. These classes were simply not considered previously, either because the evidence did not exist before, because no methods were available to investigate it, or both. This is certainly the case for digital forensic science, which has created a completely new world of trace classes. Nowadays, people live in a hybrid world in which they leave both physical and digital traces. In The Netherlands of the twenty-first century, there is hardly any criminal investigation in which digital traces do not play a role. It is therefore crucial for forensic service providers to be able to retrieve and analyse traces from all available digital sources.

Another reason for the growth in demand for forensic services is the end-users' awareness of what forensic science has to offer. This has also created more demanding end-users, who are able to formulate their needs for innovation [52]. This is extremely important for determining the areas in which R&D and innovation are necessary. These efforts should focus on providing investigators and legal experts with more timely and relevant information.

The success of forensic science over the past decades has come at a price. Owing to the organizational structure of the sector and of the forensic institutes within it, the growth in demand has in many instances resulted in severe backlogs and, consequently, long turnaround times. The resulting pressure on the operational side of these organizations has created a barrier to continued innovation and is thus delaying the expected paradigm shift. The basic problem is that most forensic science institutes are typically budget-oriented organizations with limited resources that cannot grow rapidly and substantially enough to keep up with the increase in demand.

Although it is tempting to look for ways to increase capacity, the backlog problem has to be tackled from a much more fundamental perspective, based on the role and efficacy of the criminal justice system as a whole. At the NFI, these insights have resulted in innovation efforts that integrate forensic science and state-of-the-art technology in order to build forensic platforms that allow a much more efficient investigation of evidence. It takes courage to work on technology intended to allow non-experts to conduct forensic investigations outside the laboratory. Can the required robustness and quality be guaranteed, and will successful implementation ultimately reduce the expertise and capacity at the forensic institutes? Our experiences in the innovation projects described in this paper have, however, so far been very positive. These projects have generated enthusiasm as well as strong support and participation from our key partners. Additionally, the forensic experts have started to discover opportunities to increase the added value of the advanced methods available in the laboratory. By providing robust field solutions for standard conditions, the integrated forensic platform will not only free up expert capacity for complex cases but also flag the cases in which such additional investigation is needed. This ultimately leads to a much more efficient use of relatively scarce skills and knowledge, by replacing a large volume of standard cases with a smaller volume of complex cases.

Clearly, one of the most important requirements for designing, developing and implementing fully integrated forensic platforms is sufficient funding for the necessary R&D and quality studies. Fortunately, national research funding organizations (e.g. science foundations) are becoming more aware of the opportunities forensic science can offer. This is also true for the European Union: research funds from DG Home Affairs and, last but not least, the Horizon 2020 funds from DG Research offer relatively large funding opportunities for forensic-science-related research. In addition, the latest technology needs to be implemented continuously in these platforms. Such technology is often created outside the forensic domain and needs to be made ‘fit-for-forensic-purpose’, including forensic scientific validation. This requires an interdisciplinary ‘triple helix’ approach in which academic institutes, innovative companies and forensic experts collaborate. Ideally, such collaborations are international and yield solutions that can be used by many countries. A more internationally oriented structure would certainly help to create more innovation potential, despite the strongly national nature of judicial systems. The current initiatives within the European Union to create a European Forensic Science Area (EFSA 2020) and within the USA to create a Forensic Science Center of Excellence will hopefully foster a more open and less fragmented structure in which integrated, international forensic solutions such as integrated platforms can emerge.

Competing interests

We declare we have no competing interests.

Funding

We received no funding for this study.

References

1. van Asten AC. 2014. On the added value of forensic science and grand innovation challenges for the forensic community. Sci. Justice 54, 170–179. (doi:10.1016/j.scijus.2013.09.003)

2. Tolle KM, Tansley DSW, Hey AJG (eds). 2009. The fourth paradigm: data-intensive scientific discovery. Microsoft Corporation. See http://research.microsoft.com/en-us/collaboration/fourthparadigm/4th_paradigm_book_complete_lr.pdf.

3. Yick J, Mukherjee B, Ghosal D. 2008. Wireless sensor network survey. Comput. Netw. 52, 2292–2330. (doi:10.1016/j.comnet.2008.04.002)

4. Alemdar H, Ersoy C. 2010. Wireless sensor networks for healthcare: a survey. Comput. Netw. 54, 2688–2710. (doi:10.1016/j.comnet.2010.05.003)

5. Capella JV, Bonastre A, Ors R, Peris M. 2010. A wireless sensor network approach for distributed in-line chemical analysis of water. Talanta 80, 1789–1798. (doi:10.1016/j.talanta.2009.10.025)

6. Garcia A, Erenas MM, Marinetto ED, Abad CA, de Orbe-Paya I, Palma AJ, Capitan-Vallvey LF. 2011. Mobile phone platform as portable chemical analyzer. Sens. Actuators B 156, 350–359. (doi:10.1016/j.snb.2011.04.045)

7. Sumriddetchkajorn S, Chaitavon K, Intaravanne Y. 2013. Mobile device-based self-referencing colorimeter for monitoring chlorine concentration in water. Sens. Actuators B 182, 592–597. (doi:10.1016/j.snb.2013.03.080)

8. Lee D, Chou WP, Yeh SH, Chen PJ, Chen PH. 2011. DNA detection using commercial mobile phones. Biosens. Bioelectron. 26, 4349–4354. (doi:10.1016/j.bios.2011.04.036)

9. Intaravanne Y, Sumriddetchkajorn S, Nukeaw J. 2012. Cell phone-based two-dimensional spectral analysis for banana ripeness. Sens. Actuators B 168, 390–394. (doi:10.1016/j.snb.2012.04.042)

10. Snik F, et al. 2014. Mapping atmospheric aerosols with a citizen science network of smartphone spectropolarimeters. Geophys. Res. Lett. 41, 7351–7358. (doi:10.1002/2014GL061462)

11. Bell C. 2006. Concepts and possibilities in forensic intelligence. Forensic Sci. Int. 162, 38–43. (doi:10.1016/j.forsciint.2006.06.030)

12. Ribaux O, Baylon A, Roux C, Delemont O, Lock E, Zingg C, Margot P. 2010. Intelligence-led crime scene processing. Part I: forensic intelligence. Forensic Sci. Int. 195, 10–16. (doi:10.1016/j.forsciint.2009.10.027)

13. Morelato M, Baechler S, Ribaux O, Beavis A, Tahtouh M, Kirkbride P, Roux C, Margot P. 2014. Forensic intelligence framework—Part I: introduction of a transversal model by comparing illicit drugs and false identity documents monitoring. Forensic Sci. Int. 236, 181–190. (doi:10.1016/j.forsciint.2013.12.045)

14. Ross A. 2015. Elements of a forensic intelligence model. Aust. J. Forensic Sci. 47, 8–15. (doi:10.1080/00450618.2014.916753)

15. Mapes AA, Kloosterman AD, de Poot CJ. In press. DNA in the criminal justice system: the DNA success story in perspective. J. Forensic Sci. (doi:10.1111/1556-4029.12779)

16. Verheij S, Harteveld J, Sijen T. 2012. A protocol for direct and rapid multiplex PCR amplification on forensically relevant samples. Forensic Sci. Int. Genet. 6, 167–175. (doi:10.1016/j.fsigen.2011.03.014)

17. Vallone PM. 2014. Rapid DNA testing at NIST. See http://www.cstl.nist.gov/strbase/pub_pres/Vallone_GIS_Sept_2014.pdf.

18. Federal Bureau of Investigation. 2014. Information on rapid DNA profiling on the FBI website. USA. See http://www.fbi.gov/about-us/lab/biometric-analysis/codis/rapid-dna-analysis.

19. Verheij S, Clarisse L, van den Berge M, Sijen T. 2013. RapidHIT™ 200, a promising system for rapid DNA analysis. Forensic Sci. Int. Genet. Suppl. Ser. 4, e254–e255. (doi:10.1016/j.fsigss.2013.10.130)

20. Bruijns BB, Kloosterman AD, de Bruin KG, van Asten AC, Gardeniers JGE. 2012. Presumptive human biological trace test for crime scenes, based on lab-on-a-chip technology—‘HuBiTT’. Poster presented at the EAFS 2012 Conf., The Hague, The Netherlands, 20–24 August 2012. ENFSI and NFI.

21. van Baar RB, van Beek HMA, van Eijk EJ. 2014. Digital forensics as a service: a game changer. Digit. Investig. 11, 54–62. (doi:10.1016/j.diin.2014.03.007)

22. Quick D, Choo K-KR. 2014. Impacts of increasing volume of digital forensic data. Digit. Investig. 11, 273–294. (doi:10.1016/j.diin.2014.09.002)

23. Bengio Y, Courville A, Vincent P. 2013. Representation learning: a review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1798–1828. (doi:10.1109/TPAMI.2013.50)

24. Casey E. 2002. Error, uncertainty, and loss in digital evidence. Int. J. Dig. Evid. 1.

25. ENFSI. 2009. Guidelines for best practice in the forensic examination of digital technology. ENFSI Best Practice Guide, v. 6. See http://www.enfsi.eu/sites/default/files/documents/forensic_it_best_practice_guide_v6_0.pdf.

26. Kleiner A, Talwalkar A, Sarkar P, Jordan M. 2012. The big data bootstrap. In Proc. 29th Int. Conf. on Machine Learning, Edinburgh, UK, 26 June–1 July 2012. ICML 2012 Handbook, p. 112.

27. Gisolf F, Malgoezar A, Baar T, Geradts Z. 2013. Improving source camera identification using a simplified total variation based noise removal algorithm. Digit. Investig. 10, 207–214. (doi:10.1016/j.diin.2013.08.002)

28. van Werkhoven B, Maassen J, Bal HE, Seinstra FJ. 2014. Optimizing convolution operations on GPUs using adaptive tiling. Future Gener. Comput. Syst. 30, 14–26. (doi:10.1016/j.future.2013.09.003)

29. Daniel G, Neeb M, Gipp M, Kugel A, Männer R. 2011. Correlation analysis on GPU systems using NVIDIA's CUDA. J. Real-Time Image Process. 6, 275–280.

30. Cisco. 2014. The Zettabyte era. White Paper. See http://www.cisco.com/c/en/us/solutions/collateral/service-provider/visual-networking-index-vni/VNI_Hyperconnectivity_WP.pdf.

31. NIST. 2013. NIST face recognition vendor test. NIST report 2013. See http://biometrics.nist.gov/cs_links/face/frvt/frvt2013/NIST_8009.pdf.

32. de Rooij O, Worring M. 2012. Efficient targeted search using a focus and context video browser. ACM Trans. Multimedia Comput. Commun. Appl. 8, 51. (doi:10.1145/2379790.2379793)

33. Executive Office of the President of the USA, Office of National Drug Control Policy. 2011. How illicit drug use affects business and the economy. See http://www.whitehouse.gov/sites/default/files/ondcp/Fact_Sheets/effects_of_drugs_on_economy_jw_5-24-11_0.pdf.

34. Tsumura Y, Mitome T, Kimoto S. 2005. False positives and false negatives with a cocaine-specific field test and modification of test protocol to reduce false decision. Forensic Sci. Int. 155, 158–164. (doi:10.1016/j.forsciint.2004.11.011)

35. Eckenrode BA. 2001. Environmental and forensic applications of field-portable GC–MS: an overview. J. Am. Soc. Mass Spectrom. 12, 683–693. (doi:10.1016/S1044-0305(01)00251-3)

36. Smith PA, Jackson Lepage CR, Koch D, Wyatt HDM, Hook GL, Betsinger G, Erickson RP, Eckenrode BA. 2004. Direct detection of gas-phase chemical warfare agents using field-portable gas chromatography-mass spectrometry systems: instrument and sampling strategy considerations. Trends Anal. Chem. 23, 296–306. (doi:10.1016/S0165-9936(04)00405-4)

37. Sekiguchi H, Matsushita K, Yamashiro S, Sano Y, Seto Y, Okuda T, Sato A. 2006. On-site determination of nerve and mustard gases using a field-portable gas chromatograph-mass spectrometer. Forensic Toxicol. 24, 17–22. (doi:10.1007/s11419-006-0004-4)

38. Wainhaus SB, Tzanani N, Dagan S, Miller ML, Amirav A. 1998. Fast analysis of drugs in a single hair. J. Am. Soc. Mass Spectrom. 9, 1311–1320. (doi:10.1016/S1044-0305(98)00108-1)

39. Contreras JA, Murray JA, Tolley SE, Oliphant JL, Tolley HD, Lammert SA, Lee ED, Later DW, Lee M. 2008. Hand-portable gas chromatograph-toroidal ion trap mass spectrometer (GC-TMS) for detection of hazardous compounds. J. Am. Soc. Mass Spectrom. 19, 1425–1434. (doi:10.1016/j.jasms.2008.06.022)

40. Gao L, Song Q, Patterson GE, Cooks RG, Ouyang Z. 2006. Handheld rectilinear ion trap mass spectrometer. Anal. Chem. 78, 5994–6002. (doi:10.1021/ac061144k)

41. Wells JM, Roth MJ, Keil AD, Grossenbacher JW, Justes DR, Patterson GE, Barket DJ Jr. 2008. Implementation of DART and DESI ionization on a fieldable mass spectrometer. J. Am. Soc. Mass Spectrom. 19, 1419–1424. (doi:10.1016/j.jasms.2008.06.028)

42. Moros J, Galipienso N, Vilches R, Garrigues S, de la Guardia M. 2008. Nondestructive direct determination of heroin in seized illicit street drugs by diffuse reflectance near-infrared spectroscopy. Anal. Chem. 80, 7257–7265. (doi:10.1021/ac800781c)

43. Vitek P, Ali EMA, Edwards HGM, Jehlicka J, Cox R, Page K. 2012. Evaluation of portable Raman spectrometer with 1065 nm excitation for geological and forensic applications. Spectrochim. Acta A 86, 320–327. (doi:10.1016/j.saa.2011.10.043)

44. Hargreaves MD, Page K, Munshi T, Tomsett R, Lynch G, Edwards HGM. 2008. Analysis of seized drugs using portable Raman spectroscopy in an airport environment—a proof of principle study. J. Raman Spectrosc. 39, 873–880. (doi:10.1002/jrs.1926)

45. Katainen E, Elomaa M, Laakkonen UM, Sippola E, Niemelä P, Suhonen J, Järvinen K. 2007. Quantification of the amphetamine content in seized street samples by Raman spectroscopy. J. Forensic Sci. 52, 88–92. (doi:10.1111/j.1556-4029.2006.00306.x)

46. Cooks RG, Ouyang Z, Takats Z, Wiseman JM. 2006. Ambient mass spectrometry. Science 311, 1566–1570. (doi:10.1126/science.1119426)

47. Cody RB, Laramée JA, Durst HD. 2005. Versatile new ion source for the analysis of materials in open air under ambient conditions. Anal. Chem. 77, 2297–2302. (doi:10.1021/ac050162j)

48. Li L, Chen T-C, Ren Y, Hendricks PI, Cooks RG, Ouyang Z. 2014. Mini 12, miniature mass spectrometer for clinical and other applications—introduction and characterization. Anal. Chem. 86, 2909–2916. (doi:10.1021/ac403766c)

49. Harper JD, Charipar NA, Mulligan CC, Zhang X, Graham Cooks R, Ouyang Z. 2008. Low-temperature plasma probe for ambient desorption ionization. Anal. Chem. 80, 9097–9104. (doi:10.1021/ac801641a)

50. Wang H, Liu J, Cooks RG, Ouyang Z. 2010. Paper spray for direct analysis of complex mixtures using mass spectrometry. Angew. Chem. 122, 889–892. (doi:10.1002/ange.200906314)

51. Downes L. 2009. The laws of disruption. New York, NY: Basic Books.

52. ACPO. 2012. Harnessing science and innovation for forensic investigation in policing. Vision document from the Association of Chief Police Officers. See https://connect.innovateuk.org/web/forensics/article-view/-/blogs/harnessing-science-and-innovation-for-forensic-investigation-in-policing.