Abstract

People with facial paralysis (FP) report social difficulties, but some attempt to compensate by increasing expressivity in their bodies and voices. We examined perceivers’ emotion judgments of videos of people with FP to understand how they interpret the combination of an inexpressive face with an expressive body and voice. Results suggest perceivers form less favorable impressions of people with severe FP, but compensatory expression is effective in improving impressions. Perceivers seemed to form holistic impressions when rating happiness and possibly sadness. Findings have implications for basic emotion research and social functioning interventions for people with FP.

The importance of facial expression during social interaction is well-documented in psychology. It communicates emotions, initiates and regulates the dynamics of conversation, helps people develop rapport, and builds social connectedness (Ekman, 1986; Tickle-Degnen, 2006). In contrast to the extensive research on facial expression, there is an extreme paucity of research on the social consequences for those who have facial paralysis or palsy (FP). An estimated 127,000 Americans develop or are born with FP each year (Bleicher, Hamiel, Gengler, & Antimarino, 1996). Although there are a variety of conditions that result in FP, all share an important social ramification: difficulty forming facial expression. FP varies in severity and can be congenital or acquired (occurring at birth or sometime later in life). Congenital FP can result from prenatal maldevelopments (e.g. Moebius syndrome or Hemifacial Microsomia) or birth trauma (e.g. from forceps delivery). Acquired FP can result from a variety of causes, including Bell’s palsy, infections, damage to the facial nerve from neoplasms (e.g. acoustic neuroma, parotid tumors), and trauma. For many of these conditions, FP may become chronic; the underlying cause for the paralysis (e.g. disease or injury) is no longer active, and the patient’s primary concern becomes social functioning (Coulson, O’Dwyer, Adams, & Croxson, 2004).

Although the way people form impressions of people with FP has not yet been examined, several studies have examined judgments of social attributes of people with Parkinson’s disease (PD), a condition which often results in an expressive mask: a poverty of expression in the face, body, and voice (Hemmesch, Tickle-Degnen, & Zebrowitz, 2009; Pentland, Pitcairn, Gray, & Riddle, 1987; Tickle-Degnen & Lyons, 2004; Tickle-Degnen, Zebrowitz, & Ma, 2011). These studies used a thin slice design in which perceivers observed video or audio clips of people with PD as short as 20 s and rated their impressions of them. Compared to people with PD who did not have masking, perceivers rated those with masking as less extraverted and more depressed (Tickle-Degnen & Lyons, 2004), and perceivers were less interested in pursuing friendships with them (Hemmesch et al., 2009).

A crucial difference between FP and PD is that FP leaves the other communication channels intact (i.e. the body and voice), and people with FP may compensate for their inexpressive faces by increasing expression in these channels (called compensatory expression; Bogart, Tickle-Degnen, & Joffe, 2012). For example, in a qualitative study of Moebius syndrome, one participant remarked:

I have this theory that my voice is my face. Obviously, we can’t express all the emotion that we want to express with our faces, but I’m a really expressive woman…I have learned over the years that because I’m musical I’ve been able to train my voice to convey the emotion that I want to show

(Bogart, Tickle-Degnen, & Joffe, 2012, p. 1216).

In a videotaped behavioral study, Bogart, Tickle-Degnen, and Ambady (2012) found that people with congenital FP displayed more compensatory expression than people with acquired FP, possibly because they have been adapting to others’ reactions for their entire lives to facilitate social interaction. However, the effectiveness of these compensations in improving others’ first impressions has not yet been tested.

Social Perceivers’ Impressions of People with Facial Paralysis

The purpose of this study was to examine how people perceive the emotions of individuals with FP, which would not only help identify applications for people with facial paralysis, but would also illuminate our understanding of emotion perception in the general population. Drawing from basic social psychological research, we propose three possible ways that perceivers may integrate an inexpressive face with an expressive body and voice when judging emotion.

Holistic perception

The first possibility is that emotion may be perceived holistically, based on a blend of information from the face (which appears to have little to no emotion) and the expressive body and voice. Consider a person with a completely paralyzed face but a normally expressive body and voice. Based on a combination of these channels, this person may be perceived as having a moderate level of emotion, but less than someone with normal facial, bodily, and vocal expression. In this case, compensatory expression would be effective in increasing the apparent emotion.

A burgeoning area of research suggests that people perceive emotional information holistically (Aviezer, Trope & Todorov, 2012a, 2012b; Van den Stock, Righart, & de Gelder, 2007). During social interaction, we typically observe a combination of cues embedded in context, the combination of which can provide the best information about the person’s emotions. Thus, holistic perception may be adaptive (Aviezer et al., 2012b). Holistic perception studies involve presenting perceivers with stimuli that are a combination of facial and bodily expressions or facial and vocal expressions that depict either congruent or incongruent emotions. For example, a happy face combined with a happy body was more likely to be categorized as happy than when combined with a fearful body (Van den Stock et al., 2007), suggesting multiple channels influence emotion perception. Based on evidence for holistic emotion perception, and the reports that compensatory expression may be a useful strategy, we predicted that emotion would be perceived holistically in this study.

Incongruence effect

Although there is stronger evidence to support the holistic hypothesis, there are alternative possibilities. Studies often find that relative to emotionally congruent pairings of channels, pairings of incongruent channels (e.g. a fearful face and a sad body) are judged less quickly and accurately (Aviezer, Trope & Todorov, 2012b; Meeren, van Heijnsbergen, & de Gelder, 2005). These findings, commonly cited as evidence for holism, may actually be better described as an incongruence effect. This indicates that participants are attempting to incorporate information from multiple channels when judging emotion, but instead of blending channel information, their emotion judgments are impaired by the conflicting information. If this is the case for perception of FP, the contrast of an inexpressive face combined with an expressive body and voice may be perceived as incongruent and distracting to perceivers, further impairing emotion recognition. If this is the case, compensatory expression would actually increase this perceptual incongruence and hinder emotional communication.

Face primacy

The third possibility is that emotion judgments may be based primarily on the face, referred to here as face primacy. In this case, the person with FP would be perceived as showing little emotion, and compensatory expression would not be effective, regardless of how expressive the body and voice are. Historically, the social perception literature has focused on facial expression, concluding that it is often the primary cue on which people base their impressions (Rosenthal, Hall, Archer, DiMatteo, & Rogers, 1979; Wallbott & Scherer, 1986). Using the Profile of Nonverbal Sensitivity, Rosenthal and colleagues found that the channels of vocal tone, body, and face contribute to emotion recognition in a ratio of 1:2:4. Barkhuysen, Krahmer, and Swerts (2010) found a more nuanced pattern, in which face primacy occurs when judging happiness, but sadness may be judged more holistically. Participants were video recorded while they read neutral or affective sentences and posed facial expressions. Positive emotions were detected equally well when perceivers viewed dynamic faces or when they observed a combination of dynamic faces and speech but were detected less well when perceivers listened to speech only. However, negative emotions were easier to detect when observing dynamic faces and speech, or when listening to speech only, compared to when viewing only the face. This may suggest that perception of positive emotions is mostly reliant on the face, while perception of negative emotions is more reliant on the voice and speech.

Methodological Innovations

It is difficult to integrate the above emotion perception findings due to several conceptual and methodological issues. First, previous studies examining holism had participants observe multiple channels but rate the emotion of only one channel. For example, the participant would view a happy face combined with a fearful body but was instructed to rate the emotion of the face only (Van den Stock et al., 2007). Thus, it is not known how perceivers would rate the emotion of the whole person, a much more naturalistic task. Second, previous studies have examined the juxtaposition of two congruent (e.g. happy face and happy body) or incongruent channels expressing emotion (e.g. a happy face and fearful body; Aviezer, Trope, & Todorov, 2012b; Meeren, van Heijnsbergen, & de Gelder, 2005; Van den Stock et al., 2007). There has been little research examining the combination of an inexpressive or neutral channel and an expressive channel. By examining how a relatively neutral channel (the face in FP) is combined with an expressive body and voice, and how this combination affects ratings of emotional intensity, this study will disambiguate whether channels are blended together (holism) or whether they are perceived as incongruent. Third, extant work has relied on artificial stimuli (for instance, stimuli that are posed, static, or created by digitally affixing a facial expression to another body). Stimuli that involve splicing together expressions may appear unnatural, and the novelty of these stimuli may be distracting. Moreover, posed expressions may not reflect the way emotions are naturally expressed, but rather, the actors’ stereotype or exaggeration of that expression. When attempting to draw conclusions about the way people integrate different channels in emotion judgments, using posed expressions limits these findings because some channels may be more exaggerated, clear, or salient than others, simply as an artifact of the posing.

Examining these questions in people with FP using a thin slice design is an innovative way to address these issues. FP allows us to examine the way perceivers judge emotion in the face—a channel that is usually a rich source of emotional information but which provides very little information in the case of FP—and determine whether additional channels influence emotion judgments. A thin slice design involves showing very short clips, called thin slices, of interpersonal behavior to participants and collecting their impressions (Ambady & Rosenthal, 1992). Thin slices have greater ecological validity than many previous channel integration study designs because they involve spontaneous, dynamic, and naturalistic stimuli which involve a greater degree of emotional richness and complexity. Thin slices have been shown to be efficient, reliable, and relatively stable across time (Ambady, Bernieri, & Richeson, 2000). People are able to make accurate judgments at levels far better than chance about others’ social attributes based on thin slices (Ambady & Rosenthal, 1992). However, thin slice research on PD shows this accuracy breaks down when people form impressions about people with expressive masking (Tickle-Degnen & Lyons, 2004).

The Present Study and Hypotheses

In the present study, participants (perceivers) observed short videos of people with FP (targets) and rated the intensity of targets’ happiness and sadness. We examined happiness because people with FP report impairment in smiling as the most challenging symptom of their condition (Neely & Neufeld, 1996). Communication of negative emotions in people with expressive disorders such as PD and FP has not yet been examined, so we also examined sadness. Thus our hypotheses are presented with more confidence for happiness, and are more exploratory for sadness. Perceivers were randomly assigned to observe different isolated or combined channels (e.g. face only or voice+speech+body, etc.) to examine the way perceivers form impressions based on different channels. Hypotheses, statistical predictions, and findings are summarized in Table 1.

Table 1.

Summary of Hypotheses, Statistical Predictions, and Results

| Hypothesis | Statistical predictions (for H2a–c: holistic account) | Statistical predictions: incongruence account | Statistical predictions: face primacy account | Hypothesis supported? |
|---|---|---|---|---|
| H1: Perceivers would rate the emotions of people with severe FP as less intense than those of people with mild FP | Significant main effects indicating that people with severe FP are rated as less happy and less sad. Significant interaction and simple effects finding people with severe FP are viewed as less happy and less sad than people with mild FP specifically in the face and all channels conditions | n/a | n/a | Supported for happiness but not sadness |
| H2a: Perceivers who observe only the face would rate targets’ emotions as less intense compared to perceivers who observe any other channel condition | Significant main effect of channel for both happy and sad ratings and planned contrasts between the face condition and each other condition indicating the following pattern for emotion intensity ratings: face < every other channel condition | Significant main effect of channel for both happy and sad ratings and planned contrasts indicating the following pattern for emotion intensity ratings: all channels < every other channel condition | Null effect | Holistic supported for both happiness and sadness |
| H2b: Compared to when perceivers observe all channels, the difference between intensity ratings of targets with severe versus mild FP would be larger when observing the face and smaller when observing conditions that do not include the face | Planned comparisons separate for happy and sad ratings indicating the following pattern for severity dampening scores: face > all channels > nonface channels | Planned comparisons separate for happy and sad ratings indicating the following pattern for severity dampening scores: all channels > face > nonface channels | Null effect | Holistic supported for happiness; inconclusive for sadness |
| H2c: Perceivers would rate the emotions of people with FP who used more compensatory expression as more intense than those who used less | Significant main effects for both happy and sad ratings indicating that targets who used more compensatory expression are rated as having more intense emotions | Significant main effects for both happy and sad ratings indicating that targets who used more compensatory expression are rated as having less intense emotions | Null effect | Holistic supported for happiness; incongruence supported for sadness |

Note. Severity dampening scores were calculated by subtracting perceivers’ ratings of severe targets from their ratings of mild targets, separate for perceivers’ ratings of targets’ happiness while describing a happy event and ratings of targets’ sadness while describing a sad event. Higher numbers indicate that perceivers rated people with mild compared to severe FP as having stronger emotions.

Hypothesis 1

Perceivers would rate the emotions of people with severe FP as less intense than those of people with mild FP. Emotional intensity was operationally defined as perceivers’ ratings of how happy targets appeared when describing a happy event and how sad they appeared when describing a sad event.

The remaining hypotheses test the holistic hypothesis against two competing accounts, the incongruence effect and the face primacy effect, with the following predictions:

Hypothesis 2a

Perceivers who observe only the face would rate targets’ emotions as less intense compared to perceivers who observe any other channel condition. This finding would be evidence for holism because it would indicate that participants rely on additional channels besides the face when forming impressions. In contrast, an incongruence effect would be supported if the all channels condition is rated as less intense than every other channel condition. This would indicate that the all channels condition, which includes a paralyzed face combined with an expressive body and voice, is perceived as incongruent, and this decreases perceivers’ ability to recognize emotion intensity, supporting the incongruence effect. If ratings in the face condition do not differ from the all channels condition, this would suggest that addition of other channels does not change perceivers’ impressions, suggesting the face primacy account.

Hypothesis 2b

If Hypothesis 1 were supported, we also predicted that, compared to when perceivers observe all channels, the difference between intensity ratings of targets with severe versus mild FP would be larger when observing the face only and smaller when perceivers could not see the face. This would suggest that the emotions of people with FP are viewed as a combination of their inexpressive faces and their expressive bodies and voices, supporting the holistic hypothesis. In contrast, if the difference between intensity ratings of targets with severe versus mild FP were larger when perceivers observed all channels compared to when they observed the face only, and smaller in conditions that do not contain the face, this would indicate that the incongruence between the face and other channels decreases perceivers’ ability to recognize emotion intensity. If there are no significant differences in severity dampening between the all channels condition and the face only condition, this would suggest that perceivers base their impressions primarily on the face and they do not take other channels into account.
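The three competing readings of Hypothesis 2b can be laid out as a simple decision rule. The sketch below is only an illustration of that logic, not part of the study's analysis: the function name `classify_h2b`, its arguments, and the example numbers are all hypothetical, the nonface conditions are collapsed into one value, and a single significance flag stands in for the planned contrasts.

```python
def classify_h2b(face, all_channels, nonface, face_vs_all_significant):
    """Map a pattern of severity dampening to the account it would favor.

    face, all_channels, nonface: mean severity dampening in the face-only
        condition, the all channels condition, and (collapsed, as a
        simplification) the conditions that exclude the face.
    face_vs_all_significant: whether the face vs. all channels contrast
        reached significance (hypothetical input; the test itself is not
        performed here).
    """
    if not face_vs_all_significant:
        # Adding body and voice did not change dampening: face primacy.
        return "face primacy"
    if face > all_channels > nonface:
        # Other channels dilute the effect of the paralyzed face: holism.
        return "holistic"
    if all_channels > face > nonface:
        # The mismatch between channels makes dampening worse: incongruence.
        return "incongruence"
    return "inconclusive"


# Hypothetical dampening values, not data from the study.
print(classify_h2b(0.9, 0.6, 0.2, True))   # holistic
print(classify_h2b(0.6, 0.9, 0.2, True))   # incongruence
print(classify_h2b(0.7, 0.7, 0.2, False))  # face primacy
```

A fuller version would also check each nonface condition against the all channels condition rather than relying on one collapsed value.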

Hypothesis 2c

Perceivers would rate the emotions of people with FP who used more compensatory expression as more intense than those who used less compensation. This would indicate that perceivers incorporate compensatory expression into their impressions, providing additional evidence for holism. The opposite pattern of findings, that perceivers rate people who use more compensatory expressive behavior as less emotional compared to those who use less compensatory expressive behavior, would indicate that the incongruence of compensatory expression impairs the ability to recognize emotional intensity. If no significant effects are found, this would suggest that perceivers base their impressions primarily on the face, and do not take other expressions into account.

Method

Stimuli

The target stimuli were drawn from an existing stimulus set of videos of people with FP, and the process for obtaining and coding the stimuli is described in Bogart, Tickle-Degnen, and Ambady (2012) and summarized here. Twenty-seven people with FP (age: M = 44.59, SD = 12.60; 66.7% female) were recruited from support groups. Inclusion criteria were as follows: 18 years or older, paralysis or palsy of at least part of the face, and ability to hold a comprehensible conversation in English. Targets’ reported diagnoses were as follows: Moebius syndrome (n = 13), benign facial tumors (n = 6), unremitted Bell’s palsy (n = 4), infection (n = 2), facial nerve trauma (n = 1), and brainstem tumor (n = 1). Targets were asked to perform autobiographical recall of a sad event, followed by a happy event (Ekman, Levenson, & Friesen, 1983) while being videotaped. They were instructed to remember an event when they were feeling sad/happy and describe it while reliving that emotion. As a manipulation check, targets rated how happy and sad they were feeling at the beginning of the interview and after each recall. Bogart, Tickle-Degnen, and Ambady (2012) reported that targets’ self-reported emotions changed during the autobiographical recall task in the expected direction. Interestingly, targets with severe FP reported feeling happier after recalling a happy event and sadder after recalling a sad event than those with mild FP.1

Coding compensatory expression

We extracted 20 s thin slice clips from each video beginning at a standardized time point from both the happy and sad autobiographical recall for each of 27 targets, yielding 54 clips in total. Targets’ verbal responses were analyzed using Linguistic Inquiry and Word Count (LIWC), a quantitative language analysis program. This measured the extent to which targets used positive (e.g. happy, joy) and negative emotion words (e.g. sad, hate; Pennebaker et al., 2007).

Five coders viewed the happy and sad clips in a random order and rated the quality, frequency, and intensity of each of the targets’ compensatory expression on a scale from 1 (low expressivity) to 5 (high expressivity), based on each of the following behaviors in the following order: inflection, laugh, talkativeness, loudness, gesture, head movement, trunk movement, and leg movement. Coders were blind to the hypotheses as well as FP severity. They observed and rated one channel at a time to avoid being influenced by other channels. When rating talkativeness and laughter, the video was not visible. When rating inflection and loudness in the voice channel, the video was not visible, and in order to prevent raters from being influenced by speech content, the audio track was content-filtered to remove speech content but retain the sound qualities of the voice (van Bezooijen & Boves, 1986). When rating the remaining behaviors, the audio was turned off and the face was cropped out. The effective reliability, or the reliability of the average of the five raters as a group (Rosenthal & Rosnow, 2008), of each of the eight items ranged from .75 to .95. Behavioral ratings were averaged across raters, separate for happy and sad recall clips. To form compensatory expression scores, the behavioral ratings and LIWC emotion scores were standardized and averaged together, separate for happy (α = .88) and sad recall clips (α = .66), and dichotomized by median split to create high and low compensatory expression categories. This resulted in 14 targets in the high and 13 in the low compensatory expression group.
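The scoring steps described above (standardize each measure, average the standardized measures per target, then split at the median) can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and all numbers are hypothetical, the population standard deviation is used for standardizing, and the target that falls exactly on the median is placed in the high group, a tie rule the text does not specify.

```python
from statistics import mean, median, pstdev


def zscores(values):
    """Standardize values to mean 0 and SD 1 (population SD, an assumption)."""
    m = mean(values)
    sd = pstdev(values)
    return [(v - m) / sd for v in values]


def composite_scores(measures):
    """Average the z-scored measures for each target.

    measures: dict mapping a measure name to a list of per-target values,
    with targets in the same order in every list.
    """
    standardized = [zscores(vals) for vals in measures.values()]
    return [mean(per_target) for per_target in zip(*standardized)]


def median_split(scores):
    """Label scores at or above the median 'high', the rest 'low'.

    Assigning the median case to 'high' is an assumption of this sketch.
    """
    cut = median(scores)
    return ["high" if s >= cut else "low" for s in scores]


# Hypothetical values for five targets (not data from the study).
measures = {
    "inflection": [1.2, 3.4, 2.8, 4.6, 2.0],
    "gesture": [1.0, 3.0, 3.2, 4.8, 1.6],
    "liwc_positive_emotion": [2.1, 5.0, 4.4, 7.9, 3.0],
}
scores = composite_scores(measures)
groups = median_split(scores)
print(groups)
```

Because each measure is standardized before averaging, a measure on a wider scale (here the hypothetical LIWC percentage) does not dominate the composite.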

Coding FP severity

Five coders viewed videos of the face (with the body cropped out and no audio) and rated the frequency, duration, and intensity of the overall expressivity of each target’s face on a scale from 1 (low expressivity) to 5 (high expressivity). Coders rated the left side of all targets, then the right side of all targets to reduce carryover effects. In order to measure facial movement ability, only clips from the happy event were rated because this is the context in which the face is maximally expressive (Takahashi, Tickle-Degnen, Coster, & Latham, 2010). For each target, ratings were averaged across raters (effective reliability r = .87) and face side (α = .90), and then dichotomized by median split into high and low FP severity. This resulted in 14 targets in the severe FP group (overall facial expressivity rating: M = 1.60, SE = .11) and 13 targets in the mild FP group (overall facial expressivity rating: M = 2.84, SE = .09). Control targets without FP were undesirable because they would differ from people with FP in appearance, life experience, and ascribed stigma. Thus, people with mild FP, who generally had moderate levels of facial expressivity, served as a comparison group for people with severe FP, who had low facial expressivity. FP severity was not significantly related to compensatory expression when recalling a happy event, r = .24, p = .23, or a sad event, r = .25, p = .21.
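Effective reliability, the reliability of the mean of several raters, is conventionally obtained from the mean inter-rater correlation with the Spearman-Brown formula, R = n·r / (1 + (n − 1)·r). The sketch below assumes that formula applies here; the function names are hypothetical, and the mean inter-rater correlation it derives is implied by the formula rather than reported in the text.

```python
def effective_reliability(mean_r, n_raters):
    """Spearman-Brown reliability of the average of n_raters judges."""
    return n_raters * mean_r / (1 + (n_raters - 1) * mean_r)


def mean_r_from_effective(effective_r, n_raters):
    """Invert Spearman-Brown: mean inter-rater r implied by a group reliability."""
    return effective_r / (n_raters - (n_raters - 1) * effective_r)


# With five coders, a group reliability of .87 implies a mean pairwise
# correlation of roughly .57 under this formula (derived, not reported).
implied_r = mean_r_from_effective(0.87, 5)
print(round(implied_r, 2))
print(round(effective_reliability(implied_r, 5), 2))
```

The point of the conversion is that pooling five coders yields a dependable average even when any two coders agree only moderately.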

Procedure

Participant perceivers were 121 undergraduates at Tufts University who participated in exchange for partial course credit. See Table 2 for participant characteristics and condition descriptions. There were no differences across conditions for perceiver age, F(5,119) = 0.86, p = .51, ηp2 = .01, gender, χ2(5) = 8.14, p = .15, or ethnicity, χ2(25) = 23.45, p = .55. Perceivers were informed that the targets had FP because otherwise, perceivers assigned to conditions that did not include the face would never know that individuals in the videos had a disability. This ensured that all perceivers in each channel condition held a similar level of disability stigma. One limitation of this design is that informing participants of targets’ facial paralysis may not have allowed a fair test of the incongruence effect. However, mere awareness of the cause of targets’ limited facial movement would be unlikely to remove the perceptual incongruence when watching the videos. Even experts in PD, who are aware of facial masking symptoms, rate targets with reduced expressivity unfavorably (Tickle-Degnen & Lyons, 2004). This suggests that it is difficult to override automatic social perception processes to take into account information that may adjust an impression, like knowledge of a diagnosis.

Table 2.

Participant Characteristics

| Condition | Condition description | Age M (SD) | Female n | Ethnicity: African descent n | Ethnicity: Asian n | Ethnicity: Southeast Asian n | Ethnicity: Caucasian n | Ethnicity: Hispanic/Latino n | Ethnicity: Middle Eastern n | Total n |
|---|---|---|---|---|---|---|---|---|---|---|
| Face | video only; body cropped out | 19 (1.12) | 10 | 0 | 4 | 0 | 16 | 0 | 0 | 20 |
| Body | video only; face cropped out | 18.57 (1.33) | 13 | 0 | 2 | 0 | 18 | 1 | 0 | 21 |
| Voice | content-filtered audio only | 18.60 (0.75) | 17 | 3 | 3 | 0 | 13 | 1 | 0 | 20 |
| Voice+speech | audio only | 19.11 (3.16) | 11 | 2 | 3 | 1 | 13 | 0 | 0 | 19 |
| Voice+speech+body | video and audio; face cropped out | 20.79 (9.31) | 12 | 2 | 3 | 0 | 11 | 3 | 0 | 19 |
| All channels | full video and audio | 18.67 (1.06) | 10 | 1 | 2 | 1 | 16 | 1 | 1 | 22 |
| Total | | 19.10 (4.00) | 73 | 8 | 17 | 2 | 87 | 6 | 1 | 121 |

Perceivers were randomly assigned to one of six channel conditions (face, voice, voice+speech, body, voice+speech+body, or all channels) and to one of two randomized clip orders. Although this design included a more complete parsing of channels than previous studies (Barkhuysen et al., 2010; Meeren et al., 2005; Van den Stock et al., 2007; Wallbott & Scherer, 1986), we did not include all possible combinations of channels. Rather, we chose channel conditions that allowed for examination of most single channels as well as specific combinations of channels of interest. These were voice+speech, which are commonly observed together (e.g. when speaking on the phone), and voice+speech+body, which included all channels except for the face, so that it could be compared with the all channels condition to observe whether the addition of the face resulted in different emotion ratings. After each of the 54 clips, perceivers rated their impressions of the target’s happiness and sadness on scales from 1 (not at all) to 5 (extremely).

Data Analysis Plan

The perceiver was the unit of analysis. To test Hypotheses 1 and 2a, we conducted 6 (channel: face, body, voice, voice+speech, voice+speech+body, all channels) × 2 (severity: severe or mild) ANOVAs with repeated measures on severity. These ANOVAs were conducted separately for perceivers’ ratings of targets’ happiness when describing a happy event and for perceivers’ ratings of targets’ sadness when describing a sad event. Bonferroni corrections were used for all contrasts to account for multiple comparisons. To test Hypothesis 2b, severity dampening scores were calculated by subtracting perceivers’ ratings of severe targets from their ratings of mild targets, separate for perceivers’ ratings of targets’ happiness while describing a happy event and ratings of targets’ sadness while describing a sad event. Higher numbers would indicate that perceivers rated targets with mild FP as having stronger emotions than targets with severe FP. Hypothesis 2c was tested with two one-way repeated measures ANOVAs comparing impressions of targets who used high vs. low compensatory expressivity, separate for perceivers’ ratings of targets’ happiness when describing a happy event and for ratings of targets’ sadness when describing a sad event. Because only perceivers assigned to the all channels condition were able to observe all compensatory expressions, we included only those participants.
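The severity dampening score described above is a per-perceiver difference: the mean rating given to mild targets minus the mean rating given to severe targets. The following sketch shows one way this could be computed and then averaged within channel condition. The data layout, function names, and ratings are hypothetical and are not taken from the study.

```python
from statistics import mean


def severity_dampening(ratings, severity):
    """Mean rating of mild targets minus mean rating of severe targets.

    ratings: dict mapping target id to one perceiver's rating (1 to 5).
    severity: dict mapping target id to "mild" or "severe".
    """
    mild = [r for target, r in ratings.items() if severity[target] == "mild"]
    severe = [r for target, r in ratings.items() if severity[target] == "severe"]
    return mean(mild) - mean(severe)


def mean_dampening_by_condition(perceivers, severity):
    """Average the per-perceiver dampening scores within each channel condition."""
    by_condition = {}
    for p in perceivers:
        score = severity_dampening(p["ratings"], severity)
        by_condition.setdefault(p["condition"], []).append(score)
    return {condition: mean(s) for condition, s in by_condition.items()}


# Hypothetical happiness ratings of four targets by three perceivers.
severity = {"t1": "mild", "t2": "mild", "t3": "severe", "t4": "severe"}
perceivers = [
    {"condition": "face", "ratings": {"t1": 4, "t2": 3, "t3": 2, "t4": 1}},
    {"condition": "face", "ratings": {"t1": 3, "t2": 3, "t3": 2, "t4": 2}},
    {"condition": "body", "ratings": {"t1": 2, "t2": 3, "t3": 2, "t4": 3}},
]
condition_means = mean_dampening_by_condition(perceivers, severity)
print(condition_means)
```

In this toy example the face condition shows positive dampening (mild targets look happier than severe targets) while the body condition shows none, which is the kind of contrast Hypothesis 2b compares across conditions.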

Results

Hypothesis 1 and 2a

Happiness ratings

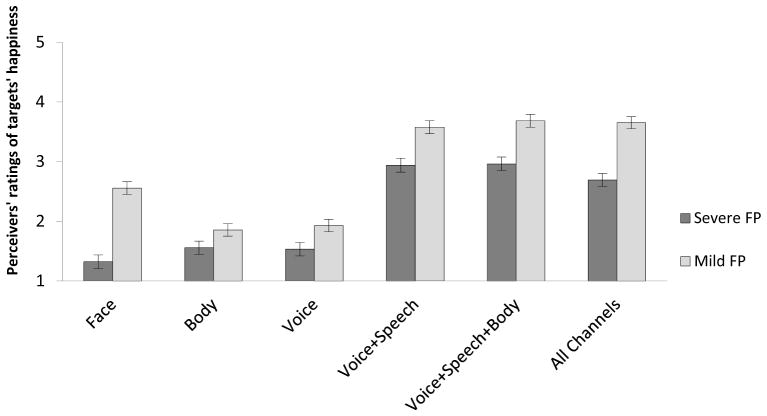

Supporting Hypothesis 1, there was a main effect indicating that, when describing a happy event, severe targets were rated as less happy (M = 2.17, SE = .05) than mild targets (M = 2.88, SE = .04), F(1, 115) = 475.07, p < .001, ηp2 = .81. This main effect was qualified by an interaction of severity × channel, F(5, 115) = 19.73, p < .001, ηp2 = .46. See Figure 1 for M and SE. Simple effects of severity at each level of channel condition showed that targets with severe FP were rated as less happy than targets with mild FP in every channel (face, F(1,115) = 240.44, p < .001, ηp2 = .68; body, F(1,115) = 14.55, p < .001, ηp2 = .11; voice, F(1,115) = 24.71, p < .001, ηp2 = .18; voice+speech, F(1,115) = 60.56, p < .001, ηp2 = .35; voice+speech+body, F(1,115) = 77.82, p < .001, ηp2 = .40; all channels, F(1,115) = 158.57, p < .001, ηp2 = .58).

Figure 1.

Perceivers’ ratings of targets recalling a happy event. Error bars represent standard errors.
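The effect sizes reported for the happiness ratings can be cross-checked against their F statistics, since partial eta squared follows from F and its degrees of freedom as ηp2 = (F × df_effect) / (F × df_effect + df_error). The helper below is a small sketch of that standard identity; the function name is our own, and the inputs are the values reported in the text.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values reported for the happiness ratings.
print(round(partial_eta_squared(475.07, 1, 115), 2))  # main effect of severity
print(round(partial_eta_squared(19.73, 5, 115), 2))   # severity x channel
print(round(partial_eta_squared(240.44, 1, 115), 2))  # simple effect, face
```

These reproduce the reported values of .81, .46, and .68, which is a quick way to catch transcription slips in a results section.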

Supporting Hypothesis 2a, a main effect of channel, F(5, 115) = 62.27, p < .001, ηp2 = .73, and contrasts indicated that the happiness ratings of perceivers observing the face did not differ from those of perceivers in the other single-channel conditions (body, voice), but were significantly lower than the ratings of perceivers in the multiple-channel conditions (voice+speech, voice+speech+body, all channels). See Table 3 for mean differences of emotion ratings by channel condition. This supports the holistic hypothesis in that perceivers who observe multiple channels are better at detecting happiness in people with FP than perceivers who observe the face alone.

Table 3.

Mean differences of emotion ratings by channel condition

| Channel condition | Face | Body | Voice | Voice+speech | Voice+speech+body |
|---|---|---|---|---|---|
| Perceivers’ ratings of targets’ happiness | | | | | |
| Body | −0.23 | | | | |
| Voice | −0.21 | 0.02 | | | |
| Voice+speech | 1.32* | 1.55* | 1.53* | | |
| Voice+speech+body | 1.38* | 1.62* | 1.59* | 0.07 | |
| All channels | 1.23* | 1.47* | 1.44* | −0.09 | −0.15 |
| Perceivers’ ratings of targets’ sadness | | | | | |
| Body | −0.11 | | | | |
| Voice | 0.37* | 0.48* | | | |
| Voice+speech | 1.36* | 1.48* | 0.99* | | |
| Voice+speech+body | 1.46* | 1.58* | 1.09* | 0.10 | |
| All channels | 1.15* | 1.26* | 0.78* | −0.21 | −0.32* |

Note. Numbers are mean differences (rows minus columns). SE range = .14–.16. An asterisk (*) denotes p < .05.

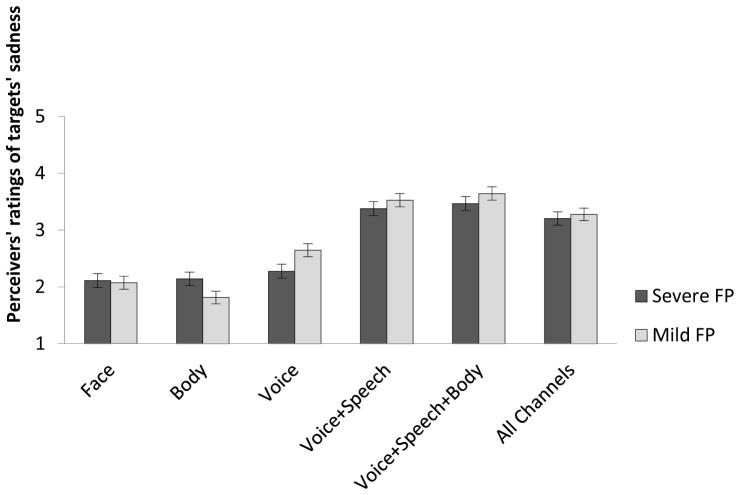

Sadness ratings

There was a main effect showing that, when describing a sad event, severe targets were rated as less sad (M = 2.76, SE = .05) than mild targets (M = 2.83, SE = .05), F(1,115) = 5.10, p = .03, ηp2 = .04. This main effect was qualified by an interaction of severity × channel, F(5, 115) = 10.97, p < .001, ηp2 = .32. See Figure 2 for M and SE. Simple effects of severity at each level of channel condition revealed that targets with severe FP were rated as less sad than targets with mild FP in the voice, F(1,115) = 26.39, p < .001, ηp2 = .19, and voice+speech+body conditions, F(1,115) = 5.74, p = .02, ηp2 = .05, and marginally in the voice+speech condition, F(1,115) = 3.91, p = .05, ηp2 = .03. On the other hand, targets with severe FP were viewed as sadder in the body condition, F(1,115) = 21.86, p < .001, ηp2 = .16. There were no differences in the sadness ratings of people with severe and mild FP in the face, F(1,115) = .29, p = .59, ηp2 = .00, or all channels condition, F(1,115) = 1.08, p = .30, ηp2 = .01. This interaction suggests that the difference between ratings of severe vs. mild targets was larger in conditions that did not involve the face, which does not support Hypothesis 1 for sadness ratings. Indeed, surprisingly, when perceivers observed the face, they rated people with severe and mild FP similarly in levels of sadness.

Figure 2.

Perceivers’ ratings of targets recalling a sad event. Error bars represent standard errors.

Supporting Hypothesis 2a, there was a main effect of channel, F(5, 115) = 39.05, p < .001, ηp² = .63. Contrasts indicated that perceivers observing the face rated targets describing a sad event as significantly less sad than perceivers in four of the other channel conditions (voice, voice+speech, voice+speech+body, all channels); there was no difference from perceivers in the body condition.
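The partial eta squared values reported in this section can be cross-checked from the F statistics and their degrees of freedom, because partial eta squared equals (F × df effect) / (F × df effect + df error). The following is a minimal sketch of that check, not the authors' analysis code; the function name `partial_eta_squared` and the labels are illustrative assumptions, and the numbers are copied from the sadness results above.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported effect size) copied from the text
reported = [
    ("severity main effect", 5.10, 1, 115, 0.04),
    ("severity x channel interaction", 10.97, 5, 115, 0.32),
    ("channel main effect", 39.05, 5, 115, 0.63),
]

for label, f_value, df_effect, df_error, reported_value in reported:
    value = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: computed {value:.2f}, reported {reported_value:.2f}")
```

Each computed value rounds to the reported one, which suggests the reported F statistics and effect sizes are internally consistent.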

Hypothesis 2b

Happiness ratings

As predicted, planned contrasts revealed that severity dampening scores were significantly smaller in the all channels condition compared to the face condition, and the all channels condition had significantly greater severity dampening compared to conditions that did not include the face. This suggests that emotions are perceived as a blend of intensities of available channels, supporting the holistic hypothesis. See Table 4 for mean differences of severity dampening scores by channel.

Table 4.

Mean differences of severity dampening scores by channel condition

Perceivers’ ratings of targets’ happiness

| Channel condition | Face | Body | Voice | Voice+speech | Voice+speech+body |
|---|---|---|---|---|---|
| Body | −0.94* | | | | |
| Voice | −0.84* | 0.10 | | | |
| Voice+speech | −0.60* | 0.34* | 0.24* | | |
| Voice+speech+body | −0.51* | 0.43* | 0.33* | 0.09 | |
| All channels | −0.28* | 0.66* | 0.56* | 0.32* | 0.24* |

Perceivers’ ratings of targets’ sadness

| Channel condition | Face | Body | Voice | Voice+speech | Voice+speech+body |
|---|---|---|---|---|---|
| Body | −0.29* | | | | |
| Voice | 0.41* | 0.70* | | | |
| Voice+speech | 0.19 | 0.48* | −0.23* | | |
| Voice+speech+body | 0.22* | 0.51* | −0.19 | 0.03 | |
| All channels | 0.11 | 0.40* | −0.30* | −0.07 | −0.11 |

Note. Numbers are mean differences (rows minus columns). SE range = .10–.12.

\* denotes p < .05
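The "rows minus columns" layout of Table 4 can be illustrated with a short sketch. It treats the Face column of the happiness panel as each condition's offset from the face condition and rebuilds the remaining lower-triangle cells by subtraction. This is only an illustration of how the table is read, not the authors' procedure; the names `offset_from_face` and `pairwise_differences` are hypothetical.

```python
from itertools import combinations

# Face-column entries of the happiness panel of Table 4: each value is that
# condition's severity dampening score minus the face condition's score.
offset_from_face = {
    "Face": 0.00,
    "Body": -0.94,
    "Voice": -0.84,
    "Voice+speech": -0.60,
    "Voice+speech+body": -0.51,
    "All channels": -0.28,
}


def pairwise_differences(offsets):
    """Return {(row, column): row minus column} for every lower-triangle cell."""
    names = list(offsets)
    # combinations() yields (earlier, later) pairs; the later condition is the row.
    return {
        (row, col): round(offsets[row] - offsets[col], 2)
        for col, row in combinations(names, 2)
    }


table = pairwise_differences(offset_from_face)
for (row, col), diff in table.items():
    print(f"{row} minus {col}: {diff:+.2f}")
```

The rebuilt cells match the published happiness panel except for all channels minus voice+speech+body, which comes out as 0.23 rather than 0.24. A discrepancy of that size is what one would expect if the published differences were computed from unrounded scores.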

Sadness ratings

Although Hypothesis 2b was supported for happiness, it could not be tested for sadness because the test of Hypothesis 1 revealed that there was no severity dampening in the face or all channels condition when rating sadness.

Hypothesis 2c

Happiness ratings

In support of this hypothesis, there was a main effect indicating that targets who used more compensatory expression were viewed as happier (M = 3.45, SE = .12) than those who used less (M = 2.83, SE = .16), F(1, 21) = 37.80, p < .001, ηp² = .64.

Sadness ratings

Contrary to our hypothesis, when rating targets describing a sad event, perceivers rated targets who used more compensatory expression as less sad (M = 2.86, SE = .11) than those who used less compensatory expression (M = 3.5, SE = .13), F(1, 21) = 167.21, p < .001, ηp² = .89. Thus, the holistic hypothesis was supported for happiness, but the incongruence hypothesis was supported for sadness.

Discussion

We predicted that social perceivers would rate the emotional expressions of people with severe FP as less intense than those with mild FP. Our hypothesis was supported for happiness, but not for sadness: perceivers rated targets with severe FP as much less happy than targets with mild FP, but in general, rated targets with severe and mild FP as equally sad. This is the first study to document that people with severe FP are judged as less happy than those with mild FP, but it complements previous findings of studies of PD in which individuals with expressive masking were viewed as having less positive psychosocial attributes than individuals without masking (Hemmesch et al., 2009; Tickle-Degnen & Lyons, 2004; Tickle-Degnen et al., 2011). Similar to these PD studies, the difference between ratings of people with severe and mild FP showed a very large effect size, indicating that people with severe FP are at an extreme disadvantage when communicating happiness. Perceivers misperceived an inexpressive face as indicating less happiness despite the finding that the targets with severe FP had previously reported feeling happier than targets with mild FP when recalling emotional events (Bogart, Tickle-Degnen, & Ambady, 2012).

Previous studies have involved targets with PD describing positive events or favorite activities (Hemmesch et al., 2009; Tickle-Degnen & Lyons, 2004; Tickle-Degnen et al., 2011), but this is the first study to examine how perceivers form impressions about targets with expressive disorders who are discussing negative events. This study suggests that perceivers’ judgments of sadness may not be affected by the severity of the FP. Inspection of Figure 2 reveals that these findings were not due to a floor or ceiling effect. It is important to note that severe FP targets had previously reported feeling sadder than those with mild FP (Bogart, Tickle-Degnen, & Ambady, 2012), so perceivers may still have underestimated the emotional intensity of severe targets’ sadness. It is safe to conclude that perceivers have much greater difficulty recognizing happiness than sadness in people with severe compared to mild FP, indicating that people with severe FP are at a particular disadvantage when expressing happiness. Happiness should be more difficult to communicate than sadness among people with FP because facial expressions of happiness involve greater facial activity than those of sadness (Neely & Neufeld, 1996; Wallbott & Scherer, 1986). Thus, people are likely to form more unfavorable first impressions of people with severe FP compared to those with mild FP.

In general, results supported the holistic hypothesis, with stronger support for ratings of happiness than for sadness. Little support was found for the alternative predictions that perceivers’ judgments would be hampered by incongruence between an inexpressive face and an expressive body and voice, or that they would be based primarily on the face. Tests of Hypothesis 2a showed that, in general, the more communication channels perceivers observed, the more intense they perceived targets’ happiness and sadness to be. This suggests they take multiple channels into account when they are available. Testing Hypothesis 2b revealed that perceivers seemed to base their happiness judgments on a blend of the inexpressive face and the expressive body and voice. When perceivers could only see the face, there was a large difference in the ratings of people with severe and mild FP. However, when they were able to observe all channels, the difference in ratings of severe and mild targets was still present, but smaller. Finally, the difference between severe and mild targets was smallest when perceivers were in conditions that did not show the face. Hypothesis 2b could not be tested for sadness ratings because it was contingent on Hypothesis 1, which was not supported for sadness.

Why would perceivers rate people with severe FP as having less intense emotions compared to people with mild FP even when they could not see the face in the voice, voice+speech, and voice+speech+body conditions? Although people with FP who did not have understandable speech were excluded, targets with severe FP may have had subtle decrements in vocal expressivity and speech clarity due to their conditions, which could contribute to the severity dampening in these conditions. Supporting this, as can be seen in Table 4, severity dampening in the body condition was lower than in the voice and voice+speech conditions for both sadness and happiness.

As predicted in Hypothesis 2c, people with FP who used more compensatory expression were perceived as happier when describing a happy event. This is the first evidence that the compensatory expression used by people with FP is effective in improving others’ impressions of their happiness, and it provides further evidence that perceivers form impressions about happiness holistically. Compensatory expression is a useful strategy for people with FP to improve the positivity of others’ impressions of them. Because a person’s adaptation to his or her disability is shaped by his or her social ecology (Livneh & Antonak, 1997), it is likely that people with FP developed these adaptations because others responded positively to such behaviors.

Surprisingly, people who used more compensatory expression when discussing a sad event were rated as less sad than those who used less compensatory expression. This pattern of results is consistent with the incongruence account. An alternative explanation for this result is that compensatory expression is better suited to express happiness because happiness typically involves increased animation, while sadness involves decreases in expressiveness (Van den Stock et al., 2007). Indeed, since facial paralysis puts people at risk of being viewed as unhappy, compensatory expression may be an attempt to appear more positive overall to create more favorable first impressions.

Our design allowed for improvements in ecological validity compared to previous holistic perception studies. The task could be likened to overhearing a person with FP telling a story at a party. Thin slices are a sufficient amount of time for people to form accurate first impressions on many everyday social attributes, and their impressions are unlikely to differ from ones based on longer observation (Ambady & Rosenthal, 1992). Although it might be argued that perceivers may need more time to form accurate impressions about people with expressive disorders, it is not known whether they would actually devote the time and energy to doing so. Unfortunately, if a perceiver forms a negative impression within the first 20 seconds, he or she may decide to avoid a person with FP based on this snap judgment and may never have the opportunity to adjust his or her impression. Even if the perceiver does interact with the person with FP, his or her impression may be anchored by the initial judgment (Epley & Gilovich, 2006). Interaction studies would be an ideal next step to examine the behavior of both the person with FP and the person interacting with the person with FP and how it might change over time. For example, a downward spiral of negative affect through behavioral mimicry may occur, particularly if people with FP do not use compensatory expression (Chartrand & Bargh, 1999).

Implications

This study has broad implications for our understanding of the way emotions are perceived. It advances the holistic emotion perception literature by demonstrating that, even when asked to form impressions about a whole person, rather than an isolated channel, and under more naturalistic circumstances, perceivers blend information from multiple channels when judging the intensity of a person’s happiness and possibly their sadness. Further, this research suggests that a relative lack of facial expression does not impair the ability to communicate sadness, supporting previous findings that the face may be less important for communicating sadness than happiness (Barkhuysen et al., 2010). While FP may be the most pronounced example of a naturally occurring “missing channel,” there are many situations in which one channel of communication may be less expressive than others, such as when one’s body is occupied with carrying an object or when one is talking over loud music. When communicating via email, we only have access to the verbal channel, but when talking on the phone, we have access to the vocal and verbal channels. People often bemoan email communication as being rife with emotional miscommunications, while phone calls provide more channels and are generally more effective in communicating nuanced emotional meaning. This study suggests that in these situations, others are able to recognize a person’s emotions based on a combination of all available channels, a highly adaptive ability.

There are many other health conditions that impair the ability to produce recognizable facial expressions and/or distort the facial features, skin, or muscles, such as PD, facial burns, cleft lip, and cerebral palsy. Reduced facial expressivity may be a powerful, yet previously unexplored, factor influencing social functioning for people with these already socially disabling conditions. No social skills interventions for people with facial paralysis currently exist, but our findings suggest two potential lines of intervention. First, we recommend developing interventions that encourage individuals with these conditions to use available compensatory channels to communicate their emotions. As speech was a particularly powerful cue to happiness, future interventions might incorporate training on verbal emotional disclosure. The second line of intervention should examine whether perceivers can be trained to focus more on the body and voice to improve impressions of people with FP. This intervention would be the first of its kind to educate people on how to interact with people with FP, and may be particularly useful for people who are likely to encounter people with FP, such as healthcare providers and educators.

Acknowledgments

Funding

This research was supported by the National Institute of Dental and Craniofacial Research NRSA Predoctoral Fellowship award number F31DE021951 awarded to Kathleen R. Bogart. We thank Michael Slepian for his comments on this article.

Footnotes

This is a surprising finding in light of the facial feedback hypothesis, which would suggest that people with severe FP might experience emotion less intensely. Follow-up work is needed to examine this hypothesis in people with FP. For the purposes of this study, these results present a robust test of our hypotheses because we predicted that participants would perceive the opposite: that people with severe FP are less emotional than people with mild FP.

Contributor Information

Kathleen Bogart, Oregon State University.

Linda Tickle-Degnen, Tufts University.

Nalini Ambady, Stanford University.

References

- Ambady N, Rosenthal R. Thin slices of expressive behavior as predictors of interpersonal consequences: A meta-analysis. Psychological Bulletin. 1992;111(2):256–274. doi: 10.1037/0033-2909.111.2.256.

- Ambady N, Bernieri FJ, Richeson JA. Toward a histology of social behavior: Judgmental accuracy from thin slices of the behavioral stream. Advances in Experimental Social Psychology. 2000;32:201–271.

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012a;338(6111):1225–1229. doi: 10.1126/science.1224313.

- Aviezer H, Trope Y, Todorov A. Holistic person processing: Faces with bodies tell the whole story. Journal of Personality and Social Psychology. 2012b;103(1):20. doi: 10.1037/a0027411.

- Barkhuysen P, Krahmer E, Swerts M. Crossmodal and incremental perception of audiovisual cues to emotional speech. Language and Speech. 2010;53(1):3–30. doi: 10.1177/0023830909348993.

- Bleicher JN, Hamiel S, Gengler JS, Antimarino J. A survey of facial paralysis: Etiology and incidence. Ear, Nose, & Throat Journal. 1996;75(6):355–358.

- Bogart KR, Tickle-Degnen L, Ambady N. Compensatory expressive behavior for facial paralysis: Adaptation to congenital or acquired disability. Rehabilitation Psychology. 2012;57(1):43–51. doi: 10.1037/a0026904.

- Bogart KR, Tickle-Degnen L, Joffe M. Social interaction experiences of adults with Moebius syndrome: A focus group. Journal of Health Psychology. 2012;17(8):1212–1222. doi: 10.1177/1359105311432491.

- Chartrand TL, Bargh JA. The chameleon effect: The perception-behavior link and social interaction. Journal of Personality and Social Psychology. 1999;76(6):893–910. doi: 10.1037/0022-3514.76.6.893.

- Coulson SE, O’Dwyer N, Adams R, Croxson GR. Expression of emotion and quality of life following facial nerve paralysis. Otology and Neurotology. 2004;25:1014–1019. doi: 10.1097/00129492-200411000-00026.

- Ekman P. Psychosocial aspects of facial paralysis. In: May M, editor. The Facial Nerve. New York: Thieme; 1986.

- Ekman P, Levenson RW, Friesen WV. Autonomic nervous system activity distinguishes among emotions. Science. 1983;221(4616):1208–1210. doi: 10.1126/science.6612338.

- Epley N, Gilovich T. The anchoring-and-adjustment heuristic. Psychological Science. 2006;17(4):311. doi: 10.1111/j.1467-9280.2006.01704.x.

- Hemmesch AR, Tickle-Degnen L, Zebrowitz LA. The influence of facial masking and sex on older adults’ impressions of individuals with Parkinson’s disease. Psychology and Aging. 2009;24(3):542. doi: 10.1037/a0016105.

- Livneh H, Antonak RF. Psychosocial adaptation to chronic illness and disability. Gaithersburg, MD: Aspen; 1997.

- Meeren HKM, van Heijnsbergen CCRJ, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102(45):16518–16523. doi: 10.1073/pnas.0507650102.

- Neely JG, Neufeld PS. Defining functional limitation, disability, and societal limitations in patients with facial paresis: initial pilot questionnaire. Otology & Neurotology. 1996;17(2):340–342.

- Pennebaker J, Booth R, Francis M. Linguistic Inquiry Word Count 2007 Operator’s Manual. Austin, Texas: LIWC.net; 2007.

- Pentland B, Pitcairn TK, Gray JM, Riddle WJR. The effects of reduced expression in Parkinson’s disease on impression formation by health professionals. Clinical Rehabilitation. 1987;1:307–313.

- Rosenthal R, Rosnow R. Essentials of behavioral research: Methods and data analysis. New York, NY: McGraw-Hill; 2008.

- Rosenthal R, Hall JA, Archer D, DiMatteo MR, Rogers PL. Advances in Psychological Assessment. Vol. 1977 San Francisco: Jossey-Bass; 1977. The PONS Test: Measuring sensitivity to nonverbal cues.

- Takahashi K, Tickle-Degnen L, Coster WJ, Latham NK. Expressive behavior in Parkinson’s disease as a function of interview context. The American Journal of Occupational Therapy. 2010;64(3):484–495. doi: 10.5014/ajot.2010.09078.

- Tickle-Degnen L. Nonverbal behavior and its functions in the ecosystem of rapport. In: Manusov V, Patterson M, editors. The SAGE Handbook of Nonverbal Communication. Thousand Oaks, CA: Sage; 2006.

- Tickle-Degnen L, Lyons KD. Practitioners’ impressions of patients with Parkinson’s disease: The social ecology of the expressive mask. Social Science & Medicine. 2004;58(3):603–614. doi: 10.1016/s0277-9536(03)00213-2.

- Tickle-Degnen L, Zebrowitz LA, Ma H. Culture, gender and health care stigma: Practitioners’ response to facial masking experienced by people with Parkinson’s disease. Social Science & Medicine. 2011;73(1):95–102. doi: 10.1016/j.socscimed.2011.05.008.

- van Bezooijen R, Boves L. The effects of low-pass filtering and random splicing on the perception of speech. Journal of Psycholinguistic Research. 1986;15(5):403–417. doi: 10.1007/BF01067722.

- Van den Stock J, Righart R, de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7(3):487–494. doi: 10.1037/1528-3542.7.3.487.

- Wallbott HG, Scherer KR. Cues and channels in emotion recognition. Journal of Personality and Social Psychology. 1986;51(4):690. doi: 10.1037/0022-3514.51.4.690.