Abstract

Periodic visual stimulation and analysis of the resulting steady-state visual evoked potentials were first introduced over 80 years ago as a means to study visual sensation and perception. From the first single-channel recording of responses to modulated light to the present use of sophisticated digital displays composed of complex visual stimuli and high-density recording arrays, steady-state methods have been applied in a broad range of scientific and applied settings. The purpose of this article is to describe the fundamental stimulation paradigms for steady-state visual evoked potentials and to illustrate these principles through research findings across a range of applications in vision science.

Keywords: visual evoked potentials, steady-state, spectrum analysis, vision, attention, perceptual organization

Introduction

Evoked potentials, consisting of stereotypic changes of electrical activity evoked by sensory stimuli and measured at the scalp, were first recorded in the middle of the last century (Adrian, 1944; Adrian & Matthews, 1934b; Dawson, 1954; Walter, Dovey, & Shipton, 1946). Since then, they have become an important tool for understanding the relationships between physical stimuli, brain activity, and human cognition (Handy, 2005; Luck & Kappenman, 2012; Regan, 1989). The goal of this article is to describe, at both the conceptual and technical levels, the core principles underlying a particular type of visual evoked potential—the steady-state visual evoked potential (SSVEP)—and its application to human sensory and cognitive processing.

Evoked potentials can be generated not only as a result of physical stimulation by a sensory stimulus (exogenously generated evoked potentials) but also by internal cognitive or motor processes (endogenously generated evoked potentials). These two types of responses comprise event-related potentials (ERPs), which are “the general class of potentials that display stable time relationships to a definable reference event” (Vaughan, 1969, p. 46). In their most common form, ERPs are recorded in response to an isolated, discrete stimulus event. In order to achieve this isolation, stimuli in an ERP experiment are typically separated from each other by a long and/or variable interstimulus interval, allowing for the estimation of a stimulus-independent baseline reference. In contrast to these transient ERPs, exogenous ERPs can also be generated in response to a train of stimuli presented at a fixed rate. Because the responses to such periodic stimuli can be very stable in amplitude and phase over time, those responses have been referred to as the steady-state visually evoked potential (Regan, 1966).

Steady-state evoked potentials in response to visual stimuli were first reported by Adrian and Matthews (1934a) in a remarkable article that also demonstrated suppression of the alpha rhythm by attention. As was typical of the time, some details are left to the imagination, but the following quote from the article leaves little doubt: “At a signal the eyes are opened and the shutter lifted to turn on the flickering light. The result is a series of potential waves having the same frequency as that of the flicker” (p. 378). Interest crescendoed in the 1960s among researchers who studied the processing of luminance information (Kamp, Sem Jacobsen, Storm Van Leeuwen, & van der Tweel, 1960; Regan, 1964, 1966; Spekreijse, 1967; van der Tweel & Lunel, 1965; van der Tweel & Spekreijse, 1969). The signal processing methods used at the time were rudimentary (Regan, 1989) and have been steadily improved over time (Nelson, Seiple, Kupersmith, & Carr, 1984; Norcia, Clarke, & Tyler, 1985; Tang & Norcia, 1995; Tyler, Apkarian, Levi, & Nakayama, 1979). More importantly for the purpose of this article, the technique has been extended to stimuli of increasing complexity, from luminance flicker to pictures of faces, and therefore this method has become more broadly applicable in visual science research.

In this article, we describe the key features of the SSVEP and its generalization from single stimuli to multiple simultaneous stimuli. In the process, we cover many different applications of the SSVEP for understanding visual perception and attention. At each stage, we point to prominent results that have been obtained with the method. We end with a discussion of work that has used the method in conjunction with computational modeling to understand nonlinear processing in the visual cortex.

Responses to single periodic visual inputs

When a single stimulus attribute is modulated periodically as a function of time, the evoked response generated by that stimulus has a periodic time course. While SSVEPs can be recorded at a wide range of frequencies, in most studies the stimulus frequency (i.e., presentation rate) tends to be above 8–10 Hz. At these high frequencies, the interval between stimuli is substantially shorter than the duration of the response that follows an individual stimulus presented in isolation, so that responses to individual stimuli overlap. If the rate is above 10 Hz, the SSVEP is nearly sinusoidal (i.e., an externally driven oscillation), and some researchers consider this to be the prototypical case of an SSVEP response. Below this stimulation rate, responses to individual stimuli overlap but some features of the responses to each event remain preserved, such as responses at frequencies that are multiples of the stimulus frequency (Heinrich, 2010). Here we consider that what is critical for the definition of a response as an SSVEP is not the temporal frequency of the stimulus but rather the fact that the stimulus and the response are each periodic. SSVEPs have been recorded at very low frequencies (Eizenman et al., 1999; Norcia, Candy, Pettet, Vildavski, & Tyler, 2002); we will review several examples in what follows.

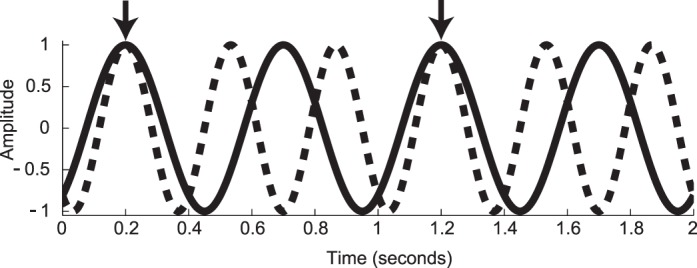

Because the SSVEP response is periodic, it is confined to a specific set of frequencies, and it is thus natural to analyze it in the frequency domain instead of the time domain. The stimulus frequency determines the response frequency content: The response spectrum has narrowband peaks at frequencies that are directly related to the stimulus frequency. To distinguish stimulus frequencies from response frequencies, we will use the following notation: A capital letter F will be used to refer to a stimulus frequency, and a lowercase italic letter f will be used to refer to a response frequency. This distinction in notation will become important when we discuss stimuli that contain more than one frequency (e.g., F1 and F2) and responses occurring at multiple harmonics of a given stimulus frequency (e.g., 1f, 2f, and 3f).

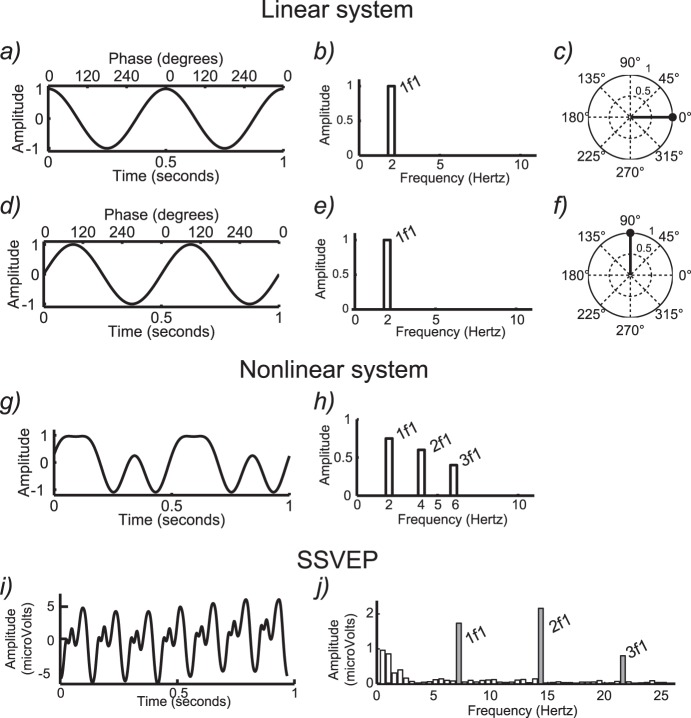

The relationship between periodic signals in the frequency domain and their corresponding representation in the time domain is shown in Figure 1, with the first column showing the signal waveform in the time domain and the second column showing the spectrum, which is the corresponding representation of the signal in the frequency domain. The example in Figure 1a consists of the simplest case—a periodic signal consisting of a single sine wave. The sine wave shown has a frequency F of 2 Hz. In the time domain, there are two peaks and troughs over a period of 1 s. In the frequency domain (Figure 1b), the response consists of a single line (i.e., spectral component) at 2 Hz (1f).

Figure 1.

Conceptual illustration of steady-state responses in time and frequency domains. (a) Simulated purely sinusoidal response from a linear system in the time domain. (b) The corresponding response spectrum for the single-sine-wave response in (a). (c) Vector representation of amplitude and phase of the signal in (a). (d) Single sinusoidal response with a quarter-cycle delay relative to the response in (a). (e) The corresponding amplitude spectrum is the same as in (b) because the signal amplitude in (d) is the same as in (a). (f) The vector plot shows a response with the same amplitude as in (a), but with a phase shift of 90°. (g) Simulated multicomponent response of a nonlinear system in the time domain. (h) The more highly structured nonlinear response in the frequency domain contains multiple harmonics (1f, 2f, 3f). (i) Time-domain SSVEP with a stimulus frequency of 7.2 Hz. (j) The SSVEP response spectrum contains multiple harmonics (1f, 2f, 3f).

An important concept in spectrum analysis is spectral resolution: the width of the bins on the frequency axis of the spectrum. The bin width is inversely proportional to the duration of the electroencephalogram (EEG) segment that is transformed to the frequency domain, so longer segments give finer resolution. For example, the frequency-domain representation of a 20-s periodic response has a frequency resolution of 1/(20 s), i.e., 0.05 Hz, and this resolution is independent of the stimulation frequency (see Bach & Meigen, 1999, for details on SSVEP spectral analysis techniques). The spectrum analysis requires that at least one full period of the response of interest be present. Spectral resolution is important in determining the signal-to-noise ratio (SNR) of the measurement and in being able to separately analyze responses that have multiple frequency components (see Multiple periodic visual inputs).
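The relation between segment duration and bin width can be checked numerically. The sketch below is illustrative only: the 500-Hz sampling rate and the helper name `amplitude_spectrum` are assumptions, not part of any published pipeline. It transforms a 20-s, 2-Hz sinusoid (as in Figure 1a and b) and reports the bin width and the location of the single spectral line.

```python
import numpy as np

def amplitude_spectrum(x, fs):
    """Return (freqs, amplitudes) for a real signal x sampled at fs Hz.

    Amplitudes are scaled so that a sinusoid of amplitude A whose frequency
    falls exactly on a bin appears as a single line of height A.
    """
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    amps = 2.0 * np.abs(np.fft.rfft(x)) / n
    return freqs, amps

fs = 500.0                      # sampling rate in Hz (assumed)
duration = 20.0                 # 20-s EEG segment, as in the text
t = np.arange(int(fs * duration)) / fs
x = np.cos(2 * np.pi * 2.0 * t)  # 2-Hz sinusoidal "response"

freqs, amps = amplitude_spectrum(x, fs)
resolution = freqs[1] - freqs[0]
print(f"bin width = {resolution:.3f} Hz")           # 1 / (20 s) = 0.05 Hz
print(f"peak at {freqs[np.argmax(amps)]:.2f} Hz")   # single line at 2 Hz

# A 2-s segment of the same signal gives bins ten times wider.
freqs_short, _ = amplitude_spectrum(x[: int(fs * 2.0)], fs)
print(f"2-s bin width = {freqs_short[1]:.2f} Hz")
```

The bin width depends only on the number of samples and the sampling rate (that is, on the segment duration), not on the 2-Hz frequency of the signal.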

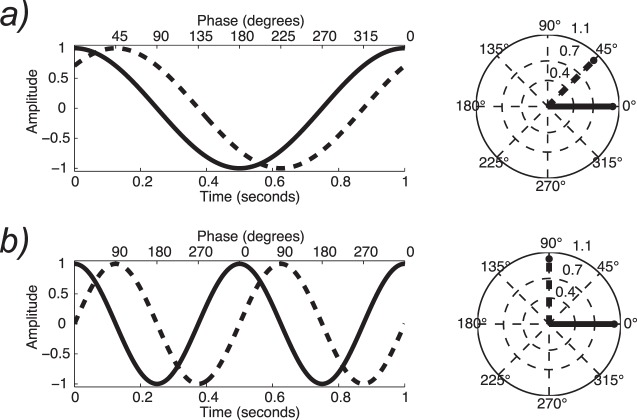

In addition to response amplitude, SSVEPs have a second parameter: response phase. The phase value is related to processing delays in the visual system and is a composite of temporal integration times in the retina and cortex and temporal propagation delays between retina and cortex and between areas in cortex. Phase is a circular variable running over 360° or 2π radians. The response amplitude and phase can be represented as a vector in a polar coordinate system (see Figure 1c and f). The length of the vector codes the response amplitude, and the polar angle codes the response phase. In Figure 1, the origin for the phase parameter is at 3 o'clock, where the phase is 0. The waveform in Figure 1a has a phase of 0, consistent with it being a cosine wave with its peak at time 0. The next row (Figure 1d through f) shows another simulated response with the same amplitude but a different temporal delay. Here the delay corresponds to one quarter of a cycle (compare Figure 1a and d). The amplitude and frequency of the response are the same, and thus the amplitude spectrum is identical (Figure 1b and e). In the vector representation, the phase has now shifted by 90° (Figure 1f), which directly corresponds to one quarter of the period of the sine wave in the time-domain plot. The relationship between phase and temporal delay is discussed in detail in Appendix 1.
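To make the amplitude/phase vector concrete, the sketch below projects a signal onto a complex exponential at the response frequency (a one-bin discrete Fourier transform). The helper name `fourier_component` and the sign convention (a delay is reported as a positive phase lag) are assumptions for illustration; analysis packages differ in how they orient the phase axis. A quarter-cycle delay of a 2-Hz response (125 ms) leaves the amplitude unchanged and shifts the phase by 90°, in line with phase = 360° × f × delay.

```python
import numpy as np

def fourier_component(x, fs, freq):
    """Amplitude and phase lag (degrees) of x at a single frequency.

    The segment should contain an integer number of cycles of `freq`.
    Phase lag is positive for delayed responses (assumed convention).
    """
    t = np.arange(len(x)) / fs
    c = 2.0 / len(x) * np.sum(x * np.exp(-2j * np.pi * freq * t))
    return np.abs(c), -np.degrees(np.angle(c))

fs, f = 500.0, 2.0
t = np.arange(int(fs * 1.0)) / fs              # 1 s = two full cycles
delay = 1.0 / (4 * f)                           # quarter cycle = 125 ms
ref = np.cos(2 * np.pi * f * t)                 # like Figure 1a
delayed = np.cos(2 * np.pi * f * (t - delay))   # like Figure 1d

a0, p0 = fourier_component(ref, fs, f)
a1, p1 = fourier_component(delayed, fs, f)
print(round(a0, 3), round(a1, 3))   # identical amplitudes
print(round(p1 - p0, 1))            # phase shift of the delayed response
print(round(360 * f * delay, 1))    # the same value from phase = 360 * f * delay
```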

SSVEP responses can contain activity not only at the stimulus frequency F but also at its harmonics. This occurs either because the stimulus contains multiple temporal frequencies (as when a square-wave temporal modulation profile is used) or because the system is nonlinear, or both. A harmonically related response component is one that occurs at an exact integer multiple of the stimulus frequency (2f, 3f, and so on), where the use of a lowercase f indicates that what is being referred to is a response frequency rather than a stimulus frequency F.

The case of multiple response frequencies is illustrated with synthetic data in Figure 1g, where we again show a periodic signal that repeats exactly two times per second, as did the sine-wave signal in Figure 1a. This signal, however, contains additional frequency components that are readily apparent in the spectrum shown in Figure 1h. Here we see additional response components at 2 (2f) and 3 times (3f) the input frequency F. These higher harmonic components distort the shape of the time-domain waveform away from that of a single sine-wave profile.

If the visual stimulus is a perfect sine wave, it does not contain higher harmonics; if the visual response is linear, the response to this stimulus will be confined to the frequency bin corresponding to the stimulus frequency. A linear system can alter only the amplitude and/or phase of the response relative to the stimulus. In contrast, if the system is nonlinear, this will manifest in the presence of higher harmonic responses (for more details on nonlinearity in the SSVEP, see Multi-input interactions as an objective measurement of system nonlinearities and neural convergence). The nonlinear nature of the visual response is illustrated in Figure 1i, which shows data from an actual SSVEP recording where the stimulus comprised a sinusoidal modulation of contrast at a frequency F of 7.2 Hz. Here again, the time waveform is periodic but not sinusoidal, and the response spectrum thus contains narrow lines at exact integer multiples of the input frequency (Figure 1j). The presence of frequencies in the response (the output) that were not present in the stimulus (the input) indicates that the response of the visual system is due to the activity of nonlinear neural mechanisms.
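The distinction between linear and nonlinear systems can be simulated directly. In the sketch below, the "linear system" only scales and delays a 2-Hz sinusoidal input, while the "nonlinear system" is a hypothetical static polynomial chosen purely for illustration (it is not a model of visual cortex). Since cos² contributes a 2f term and cos³ a 3f term, the nonlinear output acquires harmonics that the input lacked, whereas the linear output stays confined to 1f.

```python
import numpy as np

fs, F, duration = 500.0, 2.0, 10.0
t = np.arange(int(fs * duration)) / fs
stimulus = np.cos(2 * np.pi * F * t)    # pure sinusoidal modulation

# A linear system can only scale and delay its input.
linear_out = 0.8 * np.cos(2 * np.pi * F * t - 0.6)
# Hypothetical static nonlinearity (illustrative polynomial).
nonlinear_out = stimulus + 0.5 * stimulus**2 + 0.3 * stimulus**3

def harmonic_amplitudes(x, fs, F, n_harmonics=4):
    """Amplitudes at 1f..nf, read from the FFT bins at exact multiples of F."""
    amps = 2.0 * np.abs(np.fft.rfft(x)) / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return [amps[np.argmin(np.abs(freqs - k * F))] for k in range(1, n_harmonics + 1)]

print(np.round(harmonic_amplitudes(linear_out, fs, F), 3))     # only 1f
print(np.round(harmonic_amplitudes(nonlinear_out, fs, F), 3))  # 1f, 2f, and 3f
```

Expanding the polynomial gives 0.25 + 1.225 cos θ + 0.25 cos 2θ + 0.075 cos 3θ, which is what the second line reports at 1f, 2f, and 3f.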

In a real SSVEP recording, the signal of interest is inevitably contaminated by measurement noise. This measurement noise consists predominantly of additive EEG noise (Victor & Mast, 1991). The experimental noise is present over all frequencies in the spectrum (white bars in Figure 1j), with more noise in low frequencies and in specific broadband frequency ranges such as the alpha band (8–12 Hz; see, e.g., Klimesch, 2012). By contrast, the SSVEP signal that one seeks to isolate experimentally is confined to a set of narrow frequency bins that are directly related to the stimulus frequency. It has been shown that, when analyzed at high frequency resolution, the SSVEP itself is very narrowband (Regan & Regan, 1989). The SNR of the SSVEP can therefore be very high, because only a small fraction of the noise (the noise that is present in the same bins as the response) is relevant (Regan, 1989). Appendix 2 provides technical details on statistical analysis procedures appropriate for determining when an SSVEP is present and distinguishable from the background noise. The appendices also discuss procedures for calculating error statistics on the SSVEP parameters.
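One simple way to express how a narrowband response stands out from broadband noise is to divide the amplitude in the signal bin by the mean amplitude of neighboring bins. This is a common convention in the SSVEP literature but not necessarily the procedure described in Appendix 2; the helper name `bin_snr`, the number of neighbors, and the simulated noise level are all assumptions. The sketch buries a 7.2-Hz response of amplitude 1 in Gaussian noise with a standard deviation of 2 over a 20-s record.

```python
import numpy as np

def bin_snr(x, fs, freq, n_neighbors=10, skip=1):
    """Amplitude at `freq` divided by the mean amplitude of nearby bins.

    Uses `n_neighbors` bins on each side, skipping `skip` bins adjacent to
    the signal bin. Both parameters are illustrative choices.
    """
    amps = np.abs(np.fft.rfft(x)) / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - freq)))
    below = amps[k - skip - n_neighbors : k - skip]
    above = amps[k + skip + 1 : k + skip + 1 + n_neighbors]
    return amps[k] / np.mean(np.concatenate([below, above]))

rng = np.random.default_rng(0)
fs, F, duration = 500.0, 7.2, 20.0
t = np.arange(int(fs * duration)) / fs
# Simulated SSVEP (amplitude 1) in broadband noise (SD 2).
eeg = np.cos(2 * np.pi * F * t) + rng.normal(0.0, 2.0, t.size)

print(round(bin_snr(eeg, fs, F), 1))         # signal bin stands well above noise
print(round(bin_snr(eeg, fs, F + 2.0), 1))   # noise-only bin, typically near 1
```

Although the noise is larger than the signal in the time domain, only the noise falling in the 0.05-Hz-wide signal bin competes with the response, so the signal bin stands out clearly.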

One of the key questions in SSVEP recording is the choice of the stimulus frequency. In a seminal study, Regan (1966) reported a maximal response at about 10 Hz for luminance flicker. Subsequent studies have reported a similar (Fawcett, Barnes, Hillebrand, & Singh, 2004; Regan, 1989; Srinivasan, Bibi, & Nunez, 2006) or slightly higher (Herrmann, 2001) frequency range. Typically, studies such as these have used low-level visual stimuli and recordings from medial occipital sites. However, the stimulation frequency that gives rise to the largest SSVEP response may depend on the kind of stimulus used and the recording site (Srinivasan et al., 2006). Under the hypothesis that the stimulation rate generating the largest SSVEP is inversely related to the time needed to fully process the stimulus, lower stimulation rates may be necessary to record SSVEPs generated by higher level visual processes, for instance the discrimination of complex stimuli such as faces (i.e., about 6 Hz; Alonso-Prieto, Belle, Liu-Shuang, Norcia, & Rossion, 2013). Relatively low frequencies can also be advantageous because, as we will see later, the phase of the response becomes interpretable (Appelbaum & Norcia, 2009; Cottereau, McKee, Ales, & Norcia, 2011).

In common practice, stimulus frequencies tend to be in the range of 3–20 Hz, but this is not a requirement for recording an SSVEP: Several studies have recorded extremely narrowband SSVEPs at very low frequencies (Alonso-Prieto et al., 2013; Norcia et al., 2002; see also Regan & Regan, 1988), while SSVEP components at fundamental frequencies up to 100 Hz have been reported (Herrmann, 2001). As noted already—and this is an important point—what is critical for the definition of a response as an SSVEP is not the temporal frequency of the stimulus but rather the fact that the stimulus and the response are each periodic.

The sweep VEP

One of the leading applications of the SSVEP has been the sweep VEP. In this paradigm, the SSVEP is measured in response to a stimulus that is parametrically varied (swept) over a range of values, rather than being presented at a fixed, unchanging value (Regan, 1973). The sweep VEP was first used for objective refraction: SSVEP amplitude was measured as the power of a lens was continuously varied (Regan, 1973). The best correcting lens power was taken as the one that led to the largest SSVEP. The sweep VEP has since been mainly used to measure spatial acuity (Nelson, Kupersmith, Seiple, Weiss, & Carr, 1984; Norcia & Tyler, 1985; Regan, 1977; Tyler et al., 1979) and contrast sensitivity (Allen, Norcia, & Tyler, 1986; Nelson, Kupersmith, et al., 1984; Norcia, Tyler, & Hamer, 1990; Regan, 1975). Spatial acuity is measured by sweeping the spatial frequency (e.g., pattern size) of a high-contrast pattern over a wide range. Similarly, contrast sensitivity can be measured by varying the contrast of a fixed-spatial-frequency pattern over a wide range of contrast values.

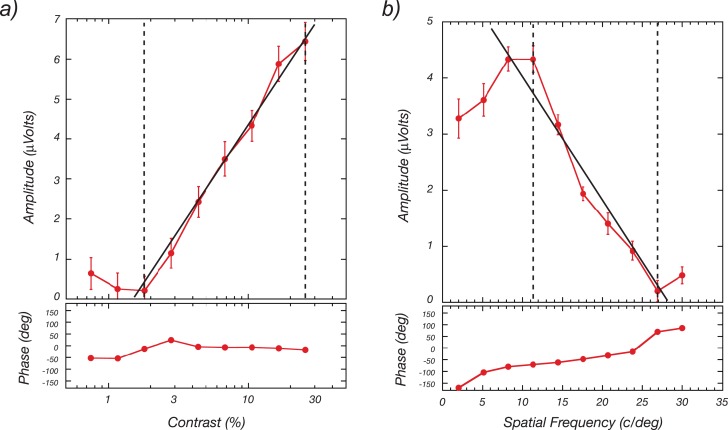

Measurement of contrast response and spatial frequency tuning functions is illustrated in Figure 2. On the left is a contrast response function (solid red curve) that was measured by increasing the contrast of a binary noise pattern from 0.8% to 26% while the pattern was exchanged with a blank field at F = 5.1 Hz. The sweeps each lasted 10 s, and the data are from the average of 10 of these 10-s trials. The response at 5.1 Hz increases systematically as contrast increases. As Campbell and colleagues (Campbell & Kulikowski, 1972; Campbell & Maffei, 1970) first noted, the SSVEP is a linear function of log stimulus contrast for a substantial range of suprathreshold contrasts, starting near psychophysical threshold. This behavior can be seen in the data of Figure 2a. Given this, a sensory threshold can be estimated from a linear extrapolation of the contrast response function to zero amplitude. The assumption here is that the evoked response will continue to decrease in the same fashion until it disappears entirely. The point at which this occurs is obscured by the background EEG, but by extrapolating the regression line through the ambient noise level to zero amplitude, one can estimate it using a constant criterion (zero response). If one has access to several well-measured points on the response function, this estimation technique shows little bias (Norcia, Tyler, Hamer, & Wesemann, 1989).
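The extrapolation step amounts to fitting a straight line and solving for its zero crossing. The sketch below assumes the analyst has already selected the points lying on the linear, suprathreshold part of the response function; it does not implement any published point-selection criterion, and the helper name `extrapolated_threshold` and all data values are hypothetical. The `log_axis` flag lets the same fit serve contrast thresholds (log contrast axis) and acuity estimates (linear spatial-frequency axis, as in Figure 2b).

```python
import numpy as np

def extrapolated_threshold(stim_values, amplitudes, log_axis=True):
    """Estimate a threshold by extrapolating a straight-line fit to zero amplitude.

    stim_values: stimulus values (e.g., % contrast) of points assumed to lie
        on the linear part of the response function.
    log_axis: fit against log10(stim_values) if True (contrast), or against
        linear values if False (e.g., spatial frequency for acuity).
    """
    x = np.log10(stim_values) if log_axis else np.asarray(stim_values, dtype=float)
    slope, intercept = np.polyfit(x, amplitudes, 1)
    x0 = -intercept / slope            # where the fitted line reaches zero
    return 10.0 ** x0 if log_axis else x0

# Hypothetical suprathreshold points: amplitude linear in log contrast.
contrast = np.array([2.0, 4.0, 8.0, 16.0, 26.0])   # % contrast
amp_uv = 1.5 * np.log10(contrast / 1.2)            # constructed to vanish at 1.2%
print(round(extrapolated_threshold(contrast, amp_uv), 2))

# Hypothetical acuity data: amplitude falls linearly with spatial frequency.
sf = np.array([6.0, 10.0, 14.0, 18.0])              # c/deg
amp_sf = 0.25 * (24.0 - sf)                          # constructed to vanish at 24 c/deg
print(round(extrapolated_threshold(sf, amp_sf, log_axis=False), 1))
```

With noise-free synthetic data the fit recovers the constructed thresholds exactly; with real data, the estimate depends on which points are judged to lie on the linear segment.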

Figure 2.

Contrast and spatial-frequency responses measured with the swept-parameter technique. (a) VEP amplitude and phase as a function of log contrast measured as the average of ten 10-s sweeps. (b) VEP amplitude and phase as a function of linear spatial frequency measured as the average of ten 10-s sweeps. See text for details.

The right panel of Figure 2 shows a spatial frequency tuning function measured over 2 to 30 c/°. Here the steps are linear, based on the fact that contrast sensitivity falls off linearly when plotted as log sensitivity versus linear spatial frequency (Tyler et al., 1979). Again, a threshold, which in this case reflects the participant's grating acuity, can be estimated by extrapolating the response function to zero amplitude. Sweep VEPs have proven to be useful for measuring acuity and contrast thresholds in a wide range of research and clinical contexts (Almoqbel, Leat, & Irving, 2008).

Stimulus symmetry leads to symmetrical SSVEP responses

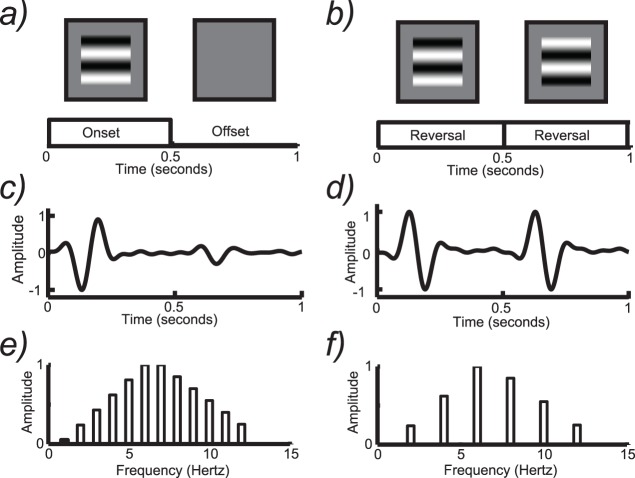

In two of the actual SSVEP recordings discussed already (Figures 1i and 2a), the stimulus modulated in a pattern onset/offset mode, in which a spatially structured field (a random checkerboard) was alternated with a spatially uniform field (a gray field of the same mean luminance). It is intuitive that the visual system should have a large response after the transition from the uniform field to the patterned field but a small(er) one at its offset. This is illustrated schematically in Figure 3a and c. The pattern of large/small responses repeats at the stimulus repetition rate (indicated by the brackets), and therefore a response is present at the fundamental frequency (f = 1/T) and possibly higher harmonics (2f, 3f, etc.; see Figure 3e). The direct connection between stimulus frequency and response frequency, plus a simple asymmetry consideration (onset is not equivalent to offset), can be generalized to any periodic stimulus containing the onset and offset of patterns, ranging from simple ones such as checkerboards (Spekreijse, van der Tweel, & Zuidema, 1973) to more complex ones such as faces (Ales, Farzin, Rossion, & Norcia, 2012; Rossion & Boremanse, 2011).

Figure 3.

(a) Schematic illustration of the pattern onset/offset stimulation mode. Here a patterned field is alternated with a uniform field of the same mean luminance. (b) Schematic illustration of the pattern-reversal stimulation mode. Here the same pattern is presented in both phases, but with a 180° shift of spatial phase that causes bright bars to be exchanged for dark ones and vice versa. (c) Schematic illustration of one cycle of the response to pattern onset/offset consisting of a large response at pattern onset and a smaller response at offset. (d) Schematic illustration of one cycle of the response to pattern reversal. Here each reversal event in the display evokes an equal response. Note that the period of the response is now one half of the full stimulus cycle in (b). (e) The response spectrum of the pattern onset/offset response contains both odd (1f, 3f, etc.) and even (2f, 4f, etc.) harmonics of the stimulus frequency. (f) The response spectrum of the pattern-reversal response contains only even harmonics (2f, 4f, etc.) of the stimulus frequency.

Another common presentation mode for SSVEPs is pattern reversal (see Figure 3b). In this presentation mode, a pattern (e.g., a checkerboard or grating) alternates between states in which the luminance of bright elements shifts to an equivalent dark value and vice versa. In this way the mean luminance of the pattern is constant and only the contrast of the pattern changes at the stimulation rate. Pattern reversal thus has translational symmetry—one spatial phase of the pattern reversal is simply a (spatial) translation of the other. Pattern reversal stimulation is often referred to as counterphase modulation to reflect this property. The two spatial alternations comprising the pattern reversal stimulus evoke equivalent neural population responses, i.e., there are just as many of the same kinds of neurons that respond to one phase of the stimulus as the other. Because the two phases of the stimulus activate the same number and type of neurons, the response to each reversal is the same, and this leads to an EEG spectrum that contains only even harmonics (2f, 4f, 6f, etc.; Cobb, Morton, & Ettlinger, 1967; Millodot & Riggs, 1970). Pattern reversal stimuli are sometimes referred to by the number of reversals per second they contain (e.g., eight reversals/s for 4-Hz stimulation). There are two pattern reversals per cycle of the stimulus, and the response is largest at the pattern reversal frequency when high reversal rates (∼6 Hz and above) are used. For consistency, it is useful to refer to the frequency of a pattern reversal stimulus as the frequency at which the stimulus returns to its original state (e.g., 1 Hz in the example of Figure 3b) and refer to the response harmonics (e.g., 2f = 2 Hz) as being multiples of this rate rather than the pattern alternation rate (see spectrum in Figure 3f).

The concept of translational symmetry in the stimulus leading to translational symmetry in the response can be generalized to more complex patterns, as will be described later in Generalizations of the pattern onset–offset VEP. The link between symmetry/asymmetry in stimuli and responses and odd and even harmonics follows from the symmetry properties of the Fourier transform: a response that repeats identically every half cycle contains only even harmonics, so any difference between the two half cycles (an asymmetry) is carried by the odd harmonics.
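The sketch below simulates the two stimulation modes of Figure 3. Each stimulus cycle contains two events, half a cycle apart, and each event evokes the same transient, scaled by an event-specific amplitude. The transient shape (a damped 8-Hz oscillation), the amplitudes, and the helper names are hypothetical choices made only for illustration. Unequal amplitudes (onset/offset) produce both odd and even harmonics; equal amplitudes (reversal) leave only even harmonics.

```python
import numpy as np

def periodic_response(a_first, a_second, F=1.0, fs=1000.0, n_cycles=10):
    """Periodic response to two events per stimulus cycle.

    The first event occurs at the start of each cycle and the second half a
    cycle later; each evokes the same hypothetical transient, scaled by its
    amplitude. Circular convolution keeps the response exactly periodic.
    """
    period = int(fs / F)
    t_kernel = np.arange(int(0.4 * fs)) / fs
    kernel = np.exp(-t_kernel / 0.08) * np.sin(2 * np.pi * 8.0 * t_kernel)
    events = np.zeros(period * n_cycles)
    events[0::period] = a_first
    events[period // 2 :: period] = a_second
    padded = np.zeros_like(events)
    padded[: kernel.size] = kernel
    return np.real(np.fft.ifft(np.fft.fft(events) * np.fft.fft(padded)))

def harmonics(x, n_cycles=10, n=4):
    """Amplitudes at 1f..nf (bin k * n_cycles holds the k-th harmonic)."""
    amps = 2.0 * np.abs(np.fft.rfft(x)) / len(x)
    return amps[[k * n_cycles for k in range(1, n + 1)]]

onset_offset = harmonics(periodic_response(1.0, 0.4))  # large onset, small offset
reversal = harmonics(periodic_response(1.0, 1.0))      # equal response to each reversal
print(np.round(onset_offset, 4))   # odd and even harmonics
print(np.round(reversal, 4))       # odd harmonics vanish
```

In the reversal case the event train repeats every half cycle, so its spectrum (and therefore the response spectrum) has no energy at odd multiples of F, whatever the transient shape.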

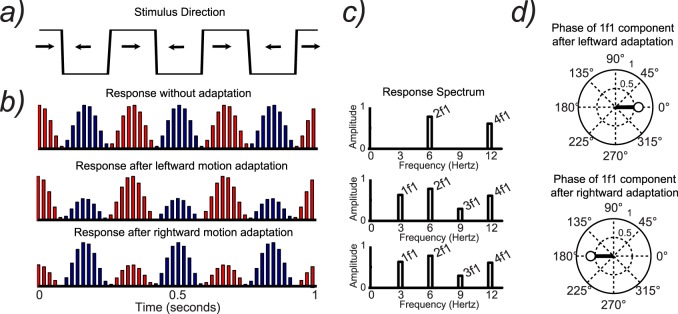

Breaking symmetry by adaptation: Uncovering tuned populations

The normal relationship between symmetric stimuli and symmetric population responses can be perturbed experimentally; this is useful for uncovering information about the tuning of the neurons in the underlying population. A concrete example comes from studies of the motion-processing system. At early stages of the visual pathway, starting especially in V1, single cells become selective for direction of motion (Hubel & Wiesel, 1965, 1968), and each direction of motion is represented by roughly equivalent numbers of direction-tuned cells. Thus when a stimulus changes direction, the population response after each direction change is approximately the same. This is illustrated schematically in Figure 4. Figure 4a depicts a stimulus that moves leftward for 167 ms followed by rightward motion for 167 ms (for a total stimulus-defined repetition period of 333 ms, or F = 3 Hz). This stimulus evokes identical responses for the leftward and the rightward motion (Figure 4b, first row). Because of these identical responses, the repetition rate of the measured response is not 3 but 6 Hz, or a 167-ms period (Figure 4c). This frequency doubling is generated because even though independent sets of neurons tuned for each direction of motion are present in the cortex, their summed population response measured at the scalp is not different for left and right directions of motion. Information about the underlying neural tuning (i.e., that there are separate populations of neurons for leftward and rightward motion) has thus been lost.

Figure 4.

(a) Schematic illustration of a stimulus that changes direction at 3 Hz. (b) Schematic illustration of hypothetical neural responses tuned to rightward motion (red) and leftward motion (blue). Shown before and after adaptation to different directions of motion. (c) Response spectrum generated from the neural responses in (b). (d) Phase of the 1f1 component after adaptation to different directions of motion.

It is possible to uncover the presence of underlying tuned populations by using selective adaptation (Ales & Norcia, 2009; Hoffmann, Unsold, & Bach, 2001; Tyler & Kaitz, 1977). In the unadapted state, as just noted, both populations respond equally well to each direction and the summed population response at the scalp results in a frequency doubling of the response. However, prolonged exposure to one direction of motion reduces the responsiveness of direction-selective cells tuned to the exposed direction of motion (Priebe, Lampl, & Ferster, 2010), a process known as direction-specific adaptation.

After adaptation to leftward motion, the responses of the neurons tuned to leftward motion are reduced, resulting in an imbalance in the overall population response to the periodic stimulus. In Figure 4b, the unadapted response consists of six equal responses during three cycles of the input, so the response repeats at twice the input frequency, an even multiple. In contrast, when a population is adapted, the response for the adapted direction is reduced (Figure 4b). This imbalanced response creates responses at odd multiples of the input frequency (Figure 4c).

The production of odd harmonics after adaptation, while consistent with direction-specific adaptation, does not directly show a difference in response for different directions of adaptation, because the odd harmonic amplitude, which is a scalar quantity, cannot specify a direction. It is possible to use the other parameter of the SSVEP, its phase, to definitively identify direction-specific adaptation (Ales & Norcia, 2009). The imbalanced adapted response has a different temporal sequence, either strong/weak or weak/strong, depending on which of the adapting stimuli was presented (leftward vs. rightward in this example). The different temporal ordering results in a 180° phase difference in the odd harmonic components (i.e., a shift by one half of the period of the 1f component; Figure 4d). The presence of odd harmonics that are 180° phase-shifted after adaptation to opposite directions of motion is thus a strong diagnostic criterion, at the population level, of direction-selective cells. Adaptation can thus be used to reveal an underlying tuning property that is not apparent in the SSVEP recorded under unadapted conditions.
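The phase argument can be checked with a toy model of one 3-Hz stimulus cycle. Leftward motion begins at t = 0 and rightward motion half a cycle later; each direction change evokes the same hypothetical transient (a Hann-shaped bump), scaled by the responsiveness of the population tuned to that direction. The function name, bump shape, and the 50% adaptation factor are illustrative assumptions. Because the second transient is shifted by half a cycle, its 1f component has the opposite sign, so the 1f coefficient is proportional to the difference between the two response strengths.

```python
import numpy as np

def first_harmonic(a_left, a_right, F=3.0, fs=600.0):
    """Complex 1f component of one stimulus cycle with two direction changes.

    a_left, a_right: response strengths of the leftward- and rightward-tuned
    populations (1.0 = unadapted; values are hypothetical).
    """
    n = int(round(fs / F))                 # samples per stimulus cycle
    bump = np.zeros(n)
    bump[: n // 4] = np.hanning(n // 4)    # transient shorter than half a cycle
    cycle = a_left * bump + a_right * np.roll(bump, n // 2)
    t = np.arange(n) / fs
    return 2.0 / n * np.sum(cycle * np.exp(-2j * np.pi * F * t))

unadapted = first_harmonic(1.0, 1.0)
after_left = first_harmonic(0.5, 1.0)     # leftward-tuned cells adapted
after_right = first_harmonic(1.0, 0.5)    # rightward-tuned cells adapted

print(round(abs(unadapted), 6))           # no 1f response before adaptation
shift = np.degrees(np.angle(after_left / after_right))
print(round(abs(shift), 1))               # phase difference between the two adapters
```

The two adapted conditions yield 1f components of equal amplitude, so amplitude alone cannot tell them apart; only the phase, which differs by 180°, identifies the adapted direction.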

Generalizations of the pattern onset–offset VEP

If we adopt a more general definition of pattern, it is possible to extend the concepts of pattern reversal and pattern onset–offset VEPs to more complex stimuli. The first recorded pattern onset–offset VEPs modulated stimulus contrast: Pattern onset consisted of the replacement of a blank field with a high-contrast patterned field of the same mean luminance. Because the mean luminance was constant over time, the evoked response could be attributed specifically to mechanisms sensitive to spatial contrast (Spekreijse et al., 1973). The logic here is general and very useful in terms of controlling what aspect of visual processing is being measured by the SSVEP. The argument goes as follows: There is some lower level stimulus attribute (in this case luminance) that the experimenter wishes to control for in order to isolate responses to a higher level attribute, e.g., spatial contrast. In the original studies, because the blank field had the same mean luminance as the patterned field, any evoked response had to be the result of pattern- or contrast-specific mechanisms rather than luminance processes. This simple notion can be generalized to more complex stimuli by ensuring that the on and off phases of the stimulus are equivalent on some set of low-level features that the experimenter wishes to control for, but differ on the higher level “pattern” whose response is of interest.

An early example of a higher level pattern onset–offset response is the so-called texture-segmentation VEP (Bach & Meigen, 1992; Lamme, Van Dijk, & Spekreijse, 1992). In a texture-segmentation VEP, a figure or pattern is created by texture rather than luminance discontinuities; this has been useful for studying image segmentation properties underlying object perception. A review of the older literature is available (Bach & Meigen, 1998).

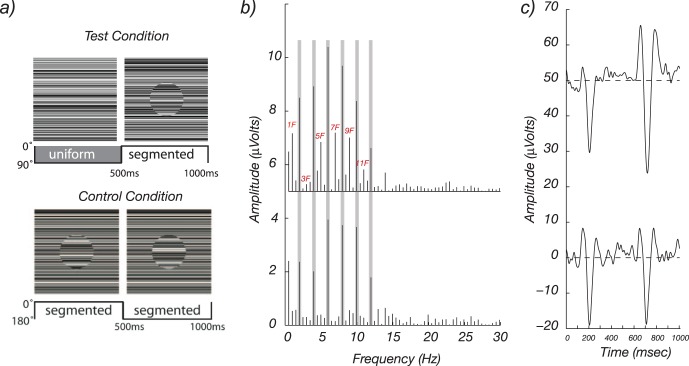

A variant of the texture-segmentation paradigm is shown in Figure 5. Here pattern onset consisted of the appearance of a texture-defined disk region from an otherwise uniform texture field (Appelbaum, Ales, & Norcia, 2012). The off state consisted of a 13° field covered with static, one-dimensional contrast noise. The on state was created by rotating the texture by 180° at 1 Hz within a central 3° disk. This modulation caused the central disk to either mismatch with the background, creating a perceptually segmented state (Figure 5a, top left panel), or match exactly, blending into a perceptually uniform one-dimensional texture (Figure 5a, top right panel).

Figure 5.

SSVEPs to higher level stimuli contain both figure-segmentation-related (odd harmonic) and nonfigure-related (even harmonic) responses. (a) Test condition: A spatially segmented display created by texture discontinuity cues was alternated with a spatially uniform field at 1 Hz. Control condition: The same local stimulus change, a 180° rotation of the texture within the disk region, does not change the segmentation state because the texture inside the disk never matches that of the background. (b) Top panel: The response spectrum from the test condition contains both odd and even harmonics. Bottom panel: The response spectrum from the control condition contains only even harmonics. (c) Top panel: The cycle average time course for the test condition shows larger responses to onset of the segmented image (at 500 ms) than to offset (0 ms). Bottom panel: Control-condition responses are equivalent after the image transients at 500 and 0 ms.

The responses to the segmented/uniform test condition are shown in the frequency domain in Figure 5b and in the time domain in Figure 5c. The frequency spectrum for the uniform-segmented display consists of a series of narrow spikes at 1-Hz intervals, i.e., at 1f, 2f, 3f, 4f, etc. The gray bands indicate the responses at even harmonics of the 1-Hz stimulus frequency. The corresponding time-domain waveform shows a larger response at pattern onset (which occurred at 500 ms in the plot) than at pattern offset (at 0 ms). This onset/offset asymmetry appears in the spectrum as odd harmonics: a response that is identical in the two half-cycles repeats twice per stimulus cycle and therefore contains only even harmonics.
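The link between onset/offset asymmetry and odd harmonics can be checked numerically. The sketch below is a toy model, not the analysis of Appelbaum et al. (2012): each 1-s cycle contains a damped 8-Hz transient after the offset (t = 0) and another after the onset (t = 500 ms), and the hypothetical helper `harmonic_amplitudes` reads the 1f, 2f, 3f, … amplitudes from the DFT of a single cycle. The transient shape and gains are arbitrary assumptions chosen only for illustration.

```python
import numpy as np

def toy_cycle(onset_gain, offset_gain, fs=1000, period_s=1.0):
    """One stimulus cycle with a transient at offset (t = 0) and at onset (t = T/2).

    Toy assumption: each transient is an 8-Hz oscillation decaying with a 50-ms
    time constant; the modulo wraps it so the cycle is exactly periodic.
    """
    t = np.arange(int(round(fs * period_s))) / fs

    def transient(t0, gain):
        dt = (t - t0) % period_s  # time elapsed since the event, within the cycle
        return gain * np.sin(2 * np.pi * 8.0 * dt) * np.exp(-dt / 0.05)

    return transient(0.0, offset_gain) + transient(period_s / 2, onset_gain)


def harmonic_amplitudes(cycle, n_harmonics=6):
    """Amplitudes at 1f..nf: DFT bin k of a one-cycle record is the kth harmonic."""
    spectrum = np.fft.rfft(cycle) * 2 / len(cycle)
    return np.abs(spectrum[1:n_harmonics + 1])


symmetric = harmonic_amplitudes(toy_cycle(onset_gain=1.0, offset_gain=1.0))
asymmetric = harmonic_amplitudes(toy_cycle(onset_gain=2.0, offset_gain=1.0))
print("equal transients, 1f/3f/5f:", symmetric[0::2].round(4))
print("onset > offset,  1f/3f/5f:", asymmetric[0::2].round(4))
```

With equal transients the two half-cycles are identical, so the 1f, 3f, and 5f amplitudes vanish to rounding error and only even harmonics remain; making the onset transient larger introduces odd harmonics.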

To show that image segmentation, rather than some other low-level cue, drives the odd harmonic responses, a control condition was used in which the local texture changes within the disk were the same as in the test condition. Critically, these image transients were not associated with changes in the segmentation state of the display. To produce equivalent local feature changes without a change in global organization, the texture inside the disk region was cut from a different random texture sample, so the disk region never matched the background after rotation by 180°. Comparing the top and bottom panels of Figure 5b shows that the even harmonic components are very similar in the two conditions, but that the uniform-segmented response contains multiple odd harmonic components that are absent from the segmented-segmented response. The odd harmonics are thus specific to the global segmentation state of the display. The even harmonics comprise responses due to local contrast changes within the central disk region, and these are equivalent in the two stimuli. The time-domain waveforms reflect these properties: The response to the segmented-segmented display consists of two nearly identical transient responses, consistent with the fact that the two image states are perceptually identical in this condition as well as being physically identical within the disk region. The figure-segmentation-specific activity can be measured directly from the odd harmonics of the test condition. In this example, the figure-segmentation-related activity can be isolated from a single stimulus condition, the test condition, rather than through the more time-consuming and error-prone process of subtracting the responses to test and control conditions.
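Because odd harmonics are exactly the components that change sign over half a cycle, the odd-harmonic (segmentation-related) waveform can be separated from a single test-condition cycle average without a control subtraction. A minimal sketch, assuming the cycle average is sampled with an even number of points; `split_odd_even` is a hypothetical helper name. Shifting a periodic signal by half its period multiplies harmonic k by (-1)^k, so half the difference between the cycle and its shifted copy keeps only odd harmonics, and half the sum keeps only even harmonics (plus the mean).

```python
import numpy as np

def split_odd_even(cycle):
    """Split a one-cycle average into its odd- and even-harmonic waveforms.

    A half-cycle shift multiplies harmonic k by (-1)**k, so the half-difference
    keeps only odd harmonics and the half-sum keeps only even harmonics (and DC).
    """
    cycle = np.asarray(cycle, dtype=float)
    if cycle.size % 2:
        raise ValueError("cycle must contain an even number of samples")
    shifted = np.roll(cycle, cycle.size // 2)  # same cycle, delayed by T/2
    odd = (cycle - shifted) / 2
    even = (cycle + shifted) / 2
    return odd, even


# Toy cycle average: 1f and 3f (asymmetric part) plus DC and 2f (symmetric part).
t = np.arange(1000) / 1000
cycle = (0.2 + np.cos(2 * np.pi * t) + 0.5 * np.sin(2 * np.pi * 2 * t)
         + 0.3 * np.cos(2 * np.pi * 3 * t))
odd, even = split_odd_even(cycle)
```

Applied to a test-condition cycle average such as the one in Figure 5c, `odd` would play the role of the segmentation-related response and `even` the local-contrast response, under the symmetry assumptions described in the text.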

Other examples of generalized pattern onset–offset responses come from the onset of twofold symmetry in random-dot patterns (Norcia et al., 2002), the onset of orientation structure in Glass patterns (Pei, Pettet, Vildavski, & Norcia, 2005), the onset of collinearity in arrays of Gabor patches (Norcia, Pei, et al., 2005; Norcia, Sampath, Hou, & Pettet, 2005), the onset of objects defined by binocular disparity (Cottereau et al., 2011; Cottereau, McKee, Ales, & Norcia, 2012; Cottereau, McKee, & Norcia, 2012), and the onset of phase correlations across orientation and scale in face or object images (Ales et al., 2012; Farzin, Hou, & Norcia, 2012). In each of these cases, it is possible to design a set of images in which the spatial structure of the local contrast elements is randomized (the off state of the pattern). These off images can be exchanged with a set of on images that have a comparable set of local elements but in which a higher level of structure has been imposed on the local elements. Through careful matching of the local contrast changes between on and off phases, responses to this level of structure are rendered symmetric for the two transitions, and thus these responses are projected onto the even harmonics of the stimulus frequency. By design, the higher order structure differs across stimulus phases, producing a difference between the two transitions (off to on and on to off), yielding odd harmonic responses.

SSVEP responses reflecting higher level visual processes

Historically, the primary applications of SSVEPs have been in studies of lower level visual processes, as just described, and attention (see Multiple temporal inputs for the study of visual attention). Some researchers have concluded that because an SSVEP response is often maximal over medial occipital electrode sites (typically Oz), it originates mainly from the primary visual cortex (Müller et al., 1997; Di Russo et al., 2007) and that “the” SSVEP is primarily a tool for studying sensory processes and low-level vision (Regan, 1989). However, SSVEPs vary according to frequency and the type of stimuli presented (Alonso-Prieto et al., 2013), and as we discuss later, the SSVEP approach can be used to study higher level visual processes (i.e., object, face, or visual-scene perception). We will distinguish two approaches in which the SSVEP has been used to study higher level processes, one indirect and the other direct. An indirect approach is one in which the influence of a higher level process is revealed through its modulatory effect on an SSVEP generated by a low-level process, and measured over low-level visual areas. Direct approaches frequency-tag the high-level process itself.

Indirect approaches

Silberstein et al. (1990) developed an indirect SSVEP approach to study high-level (visual) processes such as vigilance. This approach superimposes a rapid (13-Hz) sinusoidal contrast modulation (45%) on a static visual stimulus that engages the participant in a cognitive task. Using this approach, a frontal SSVEP amplitude increase associated with the hold period of a working-memory task has been identified (Ellis, Silberstein, & Nathan, 2006; Perlstein et al., 2003; Silberstein, Nunez, Pipingas, Harris, & Danieli, 2001). Similarly, Peterson et al. (2014) recently used the SSVEP approach to tag individual stimulus elements as they are encoded into working memory. Their finding that SSVEP amplitudes are larger for remembered items than for forgotten ones illustrates how neural resources allocated during memory encoding directly contribute to working-memory capacity limits.

In another study, a 13-Hz flicker was superimposed on static, dynamic, and scrambled images of faces, and the SSVEP response was shown to differ across these three conditions (Mayes, Pipingas, Silberstein, & Johnston, 2009). This approach is indirect because the visual stimulus of interest is always present and unmodulated, whereas the stimulus modulation that actually generates the SSVEP is of a low-level feature (45% contrast modulation). The measured alterations of this low-level response are presumably due to diversion of attention toward the high-level feature (Hindi Attar, Andersen, & Müller, 2010). In another indirect approach, complex visual stimuli have been presented at periodic rates of 10 Hz or more (Gruss, Wieser, Schweinberger, & Keil, 2012; Kaspar, Hassler, Martens, Trujillo-Barreto, & Gruber, 2010; Keil et al., 2003; McTeague, Shumen, Wieser, Lang, & Keil, 2011; Moratti, Keil, & Stolarova, 2004). In these studies, the exact same image (a visual scene, an isolated object, or a face) appears and disappears at a fixed rate in a given trial of a few seconds. The SSVEP is then compared across trials presenting different kinds of stimuli at the same rate (for instance, affective vs. nonaffective pictures). In this approach, the visual stimulus appears and disappears at a periodic rate from a background that is not equalized for low-level features (e.g., contrast). Thus the SSVEP response contains a mixture of low-level (e.g., populations of neurons responding to the change of contrast) and high-level (e.g., populations of shape-related neurons) visual responses. Moreover, in these studies the SSVEP is typically recorded over medial occipital sites (around electrode Oz), suggesting that it essentially reflects low-level visual processes. The modulation of the periodic responses at Oz, by affective content for instance, may be secondary to sustained, unmodulated feedback from higher level areas that code the high-level content, rather than reflecting direct time-locking to the high-level stimulus information.

Direct approaches

We consider an approach to higher level processing to be direct when the paradigm triggers the higher level process at the tagging frequency and when this higher level activity can be isolated from low-level visual processes either in the design or in the analysis. For instance, the sweep VEP described earlier has been extended to higher level vision by generalizing the pattern onset/offset VEP (Ales et al., 2012). In that study, a face-containing image was alternated at 3 Hz with an image whose phase spectrum had been randomized (Figure 6a). This process leaves the power spectrum the same between the two images, and thus mechanisms such as local filters that only measure power spectral content cannot distinguish the two stimulus states. Figure 6b shows a portion of the amplitude spectrum of the response to the alternation between intact and scrambled face images. The left panel shows the spectrum at Oz. Here the response is dominated by the second harmonic at 6 Hz. By contrast, the response over the right occipitotemporal cortex is dominated by the first harmonic (3 Hz). This is consistent with the face-selective responses observed over the right occipitotemporal cortex in standard ERP studies of the N170 component (Bentin, Allison, Puce, Perez, & McCarthy, 1996; for a review, see Rossion & Jacques, 2011).
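The key property of the scrambled images, an unchanged power spectrum, can be illustrated with a minimal phase-scrambling sketch. This is one common way to build partially phase-coherent images and is an assumption here, not necessarily the procedure of Ales et al. (2012): the Fourier amplitude of the original image is kept, and the phase of a white-noise image, scaled by (1 - coherence), is added to the original phase. The function name `phase_scramble` is hypothetical, and the sketch assumes odd image dimensions so that the only self-conjugate Fourier coefficient is the mean (DC) term, which keeps the result real valued.

```python
import numpy as np

def phase_scramble(image, coherence, rng):
    """Blend the Fourier phase of `image` with random phase, keeping its amplitude spectrum.

    coherence = 1.0 returns the original image; coherence = 0.0 adds a full
    random-phase offset. Assumes odd height and width (see lead-in).
    """
    image = np.asarray(image, dtype=float)
    if image.shape[0] % 2 == 0 or image.shape[1] % 2 == 0:
        raise ValueError("this sketch assumes odd image dimensions")
    spectrum = np.fft.fft2(image)
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    # The phase of a real noise image is antisymmetric (phi(-k) = -phi(k)), so
    # adding a scaled copy preserves Hermitian symmetry and a real-valued result.
    noise_phase = np.angle(np.fft.fft2(rng.standard_normal(image.shape)))
    noise_phase[0, 0] = 0.0  # keep the mean luminance untouched
    mixed = amplitude * np.exp(1j * (phase + (1.0 - coherence) * noise_phase))
    return np.fft.ifft2(mixed).real


rng = np.random.default_rng(0)
face = rng.random((65, 65))  # stand-in for a grayscale face image
scrambled = phase_scramble(face, coherence=0.0, rng=rng)
half = phase_scramble(face, coherence=0.5, rng=rng)
```

Both `scrambled` and `half` have the same Fourier amplitude spectrum as `face`, so a mechanism that only measures power spectral content could not tell them apart, which is the property the alternation design relies on.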

Figure 6.

Face-detection sweep VEP (adapted from Ales et al., 2012). (a) Six images are presented per second, with every other image being (partially) intact and the others scrambled. Over a 20-s trial, phase coherence increases in a series of 20 equal steps. The power spectrum is constant during the stimulation. Populations of neurons coding for faces independently of low-level information should respond exactly 3 times/s, the rate at which partially intact faces are presented. (b) Grand-averaged (N = 10) EEG spectrum elicited by the stimulation depicted in (a). This stimulation leads to a response dominated by 6 Hz (2f) at medial occipital sites (left panel). A 3-Hz (1f) response dominates the recording over right occipitotemporal sites, reflecting face perception (right panel). (c) SSVEP voltage versus stimulus coherence (0% to 100%) at 3 Hz (filled squares). The 3-Hz signal is compared to the activity in the neighboring frequency bins of the spectrum (e.g., 2.5 and 3.5 Hz), which serve as a noise baseline during the stimulus sequence that evolves from a fully phase-scrambled face to a clearly visible face stimulus. Face detection emerges in the EEG at about 35% of phase coherence, before the behavioral report of face detection (arrow). The topographical map is extracted at the level of the blue dotted line (about 35% of phase coherence), indicating the emergence of face detection over right occipitotemporal electrode sites.

The visibility of the face images was then varied by progressive undoing of the phase scrambling over a series of 20 equally spaced steps during a 20-s sweep sequence. At the beginning of the sequence, a scrambled image alternated with another scrambled image at 3 Hz, leading to a symmetrical response at 6 Hz only (second harmonic) on medial occipital sites. After a certain level of descrambling, the face is perceived and the spatial asymmetry of the stimulus alternation leads to a robust 3-Hz (first harmonic) response, which can be taken as an objective signature of face detection (see Figure 6c). The first harmonic (3 Hz) emerged abruptly between 30% and 35% phase coherence of the face and was most prominent on right occipitotemporal sites. Thresholds for face detection were estimated reliably in single participants from 15 trials, or on each of the 15 individual face trials. The SSVEP-derived thresholds correlated with (i.e., predicted) the concurrently measured perceptual face-detection thresholds. This first application of the sweep VEP approach to high-level vision provides a sensitive and objective method that could be used to measure and compare visual perception thresholds for various object shapes and levels of categorization in different human populations, including infants and individuals with developmental delay.
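A minimal sketch of how a sweep threshold might be read out of such data, under stated assumptions: at each of the 20 coherence steps, the response at the tag bin (3 Hz here) is divided by the mean of the two adjacent bins, as in the neighboring-bin noise estimate described in the Figure 6 caption, and the threshold is taken as the lowest coherence from which this signal-to-noise ratio stays above a criterion for all remaining steps. The criterion value and the stay-above rule are illustrative assumptions, not the published thresholding procedure, and `sweep_threshold` is a hypothetical name.

```python
import numpy as np

def sweep_threshold(coherence, step_spectra, signal_bin, criterion=2.0):
    """Lowest coherence from which SNR at `signal_bin` stays above `criterion`.

    step_spectra: array (n_steps, n_bins) of amplitude spectra, one per sweep step.
    Noise for each step is the mean of the two bins adjacent to the signal bin.
    Returns None if the SNR never stays above the criterion through the last step.
    """
    spectra = np.asarray(step_spectra, dtype=float)
    signal = spectra[:, signal_bin]
    noise = spectra[:, [signal_bin - 1, signal_bin + 1]].mean(axis=1)
    above = signal / noise > criterion
    for step in range(len(above)):
        if above[step:].all():
            return coherence[step]
    return None


coherence = np.linspace(0, 100, 20)  # 20 equal steps, % phase coherence
spectra = np.ones((20, 10))          # flat toy noise spectrum, 0.5-Hz bins
spectra[7:, 6] = 5.0                 # toy 3-Hz (bin 6) response from step 7 onward
print(sweep_threshold(coherence, spectra, signal_bin=6))
```

The stay-above rule makes a single spuriously large early bin insufficient to set the threshold; other criteria (for example, a statistical test per step) are equally plausible choices.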

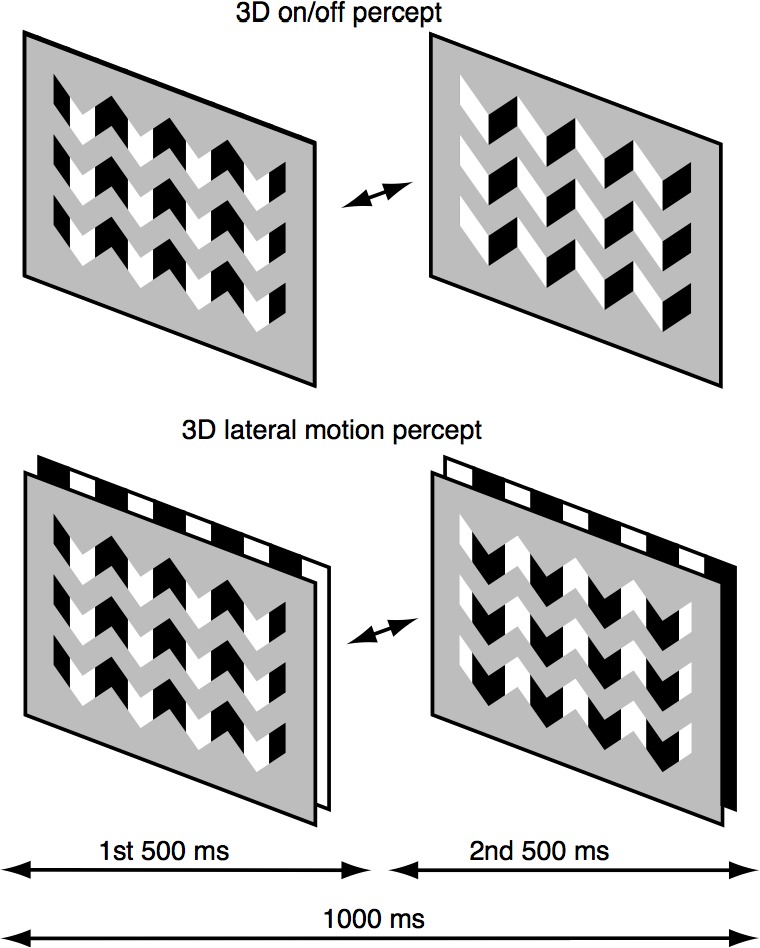

Another example of temporally modulating high-order image structure comes from a study of visual processing of 3-D pictorial cues that arise from shading relationships in images (Hou, Pettet, Vildavski, & Norcia, 2006). The researchers developed a stimulus paradigm in which the spatial relationships among shading cues could be made consistent either with a 3-D interpretation, in which the perceived surface varied in depth across the image (one display state), or with a flat plane (the other state). This stimulus thus comprised a generalized onset/offset stimulus in which the on state had a different perceived depth structure than the off state: The on state appeared 3-D and the off state appeared flat. This perceptual interpretation of the stimulus is illustrated in the top panels of Figure 7. Critically for the study, after viewing the display for a long period of time, a second percept became apparent, one in which the two states of the display had equivalent depth interpretations. Instead of alternating between flat and undulating in depth, the display appeared to consist of a symmetric left/right movement of a flat pattern seen through a set of cutout apertures (illustrated schematically in the bottom panels of Figure 7).

Figure 7.

Bistable display creating two different depth interpretations of the same image. The top image depicts the perceptual state during which the observer perceives a change in depth structure. In this state, the display alternates between a surface that appears to be undulating in depth and another surface that is flat. In the second perceptual state (bottom panel), the display is organized into two layers—an aperture through which a grating is seen moving left and right in a fixed depth plane located behind the aperture.

To characterize the SSVEP corresponding to each of the two perceptual interpretations, the observers were given a button to indicate which state was dominant. These perceptual labels were then used to form separate data sets that were spectrum analyzed. When the display was seen as alternating between 3-D and flat, the asymmetric perceptual interpretation of the on and off stimulus states, alternating at F = 1 Hz, led to strong odd harmonic responses (i.e., 1f [1 Hz] and 3f [3 Hz]). When the display was perceived as a flat plane moving left and right, the relative amplitudes of the odd and even harmonics shifted toward dominance by the even harmonics. The conclusion from this experiment is that the SSVEP reads out a perceptually relevant population response, including whether the two states of the stimulus generate responses in the same or different populations. Note also that the SSVEP to this higher level stimulus was recorded at a very low stimulus frequency (1 Hz).
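The percept-sorted comparison can be summarized with a single index. A minimal sketch, assuming each button-press label applies to a whole 1-s stimulus cycle: cycles are averaged separately for each label, and the fraction of harmonic power carried by odd harmonics (1f, 3f, 5f, …) is computed. The index and the function name `odd_fraction_by_percept` are illustrative assumptions, not the measure reported by Hou et al. (2006).

```python
import numpy as np

def odd_fraction_by_percept(cycles, labels, n_harmonics=6):
    """Fraction of harmonic power in odd harmonics, per perceptual label.

    cycles: array (n_cycles, n_samples), each row exactly one stimulus cycle,
    so DFT bin k of a row is the kth harmonic of the stimulus frequency.
    """
    cycles = np.asarray(cycles, dtype=float)
    labels = np.asarray(labels)
    fractions = {}
    for label in np.unique(labels):
        mean_cycle = cycles[labels == label].mean(axis=0)
        power = np.abs(np.fft.rfft(mean_cycle)[1:n_harmonics + 1]) ** 2
        fractions[str(label)] = power[0::2].sum() / power.sum()  # 1f, 3f, 5f, ...
    return fractions


# Toy data: "depth" cycles respond once per cycle (1f), "flat" cycles twice (2f).
t = np.arange(100) / 100
cycles = [np.cos(2 * np.pi * t)] * 3 + [np.cos(2 * np.pi * 2 * t)] * 3
labels = ["depth"] * 3 + ["flat"] * 3
print(odd_fraction_by_percept(cycles, labels))
```

An index near 1 indicates odd-harmonic dominance (different responses to the two states), and an index near 0 indicates even-harmonic dominance (equivalent responses to the two states).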

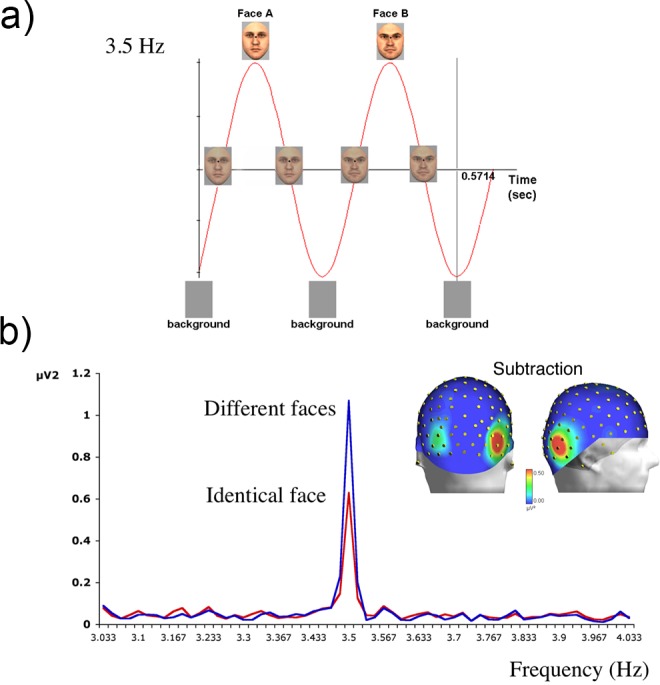

Higher level processes can also be investigated by periodic onset and offset of the visual stimulus against its background while changing the higher level content of the stimulus at every cycle. Rossion and Boremanse (2011) presented different face pictures to human observers for about 1 min at a fixed rate of 3.5 Hz (Figure 8a). High-SNR EEG responses were confined to 3.5 Hz and its harmonics (Figure 8b). Although this response contains a mixture of low- and high-level visual processes, contrasting it with the response obtained when the exact same face picture was repeated at the same rate isolated the higher level process of interest: individual face discrimination. A large repetition suppression effect (i.e., a reduction of the SSVEP when the exact same face is repeated) is illustrated in Figure 8b. The differential SSVEP response was obtained despite substantial changes of stimulus size at every stimulation cycle and was maximal over the right occipitotemporal cortex rather than over medial occipital sites (i.e., Oz). In a subsequent study, this differential SSVEP was reduced when the face stimuli were presented upside down or contrast reversed (Rossion, Prieto, Boremanse, Kuefner, & Van Belle, 2012), two manipulations known to reduce the efficiency of individual face discrimination (e.g., Freire, Lee, & Symons, 2000; Russell, Sinha, Biederman, & Nederhouser, 2006). The advantage of the SSVEP approach in this paradigm is that it provides a robust implicit measure of individual face discrimination in a few minutes (Rossion, 2014). Interestingly, to provide this high-level visual discrimination measure, not only must the high-level content of the stimulus change at every cycle, but the response must also be measured over high-level visual areas. The frequency also matters: The SSVEP response decreases significantly over the occipitotemporal cortex at rates above 8 Hz, and the differential response cannot be obtained at such high frequencies (Alonso-Prieto et al., 2013).

Figure 8.

(a) Sinusoidal contrast modulation (0%–100%) of different face identities at a periodic rate of 3.5 Hz (Rossion & Boremanse, 2011). A new face appears every stimulus cycle. (b) EEG power spectrum and topographical maps of the difference between different-faces and same-face conditions, as obtained following a single trial of 70 s of stimulation at 3.5 Hz (grand-averaged data over 12 participants; Rossion & Boremanse, 2011). Stimulation with mixed-identity faces leads to a larger response than does repetitive stimulation with the same identity.

This section has introduced several general principles that underlie the SSVEP paradigm. The first is that a temporally periodic stimulus leads to a narrowband response in the frequency domain. The second is that because the visual system is nonlinear, responses will often be present at multiple harmonics of the stimulus frequency. The third is that the symmetry of a stimulus is reflected in the particular set of harmonic responses observed in the recorded spectrum. Asymmetric stimuli are expected to generate responses with strong odd harmonic components, while symmetric stimuli, in most cases, will lead to responses that are dominated by even harmonics. These symmetry relationships are reflected in pattern onset/offset stimuli, which are asymmetric, and pattern reversal stimuli, which are symmetric. As a rule, asymmetric stimuli lead to asymmetric responses and symmetric stimuli lead to symmetric responses, with corresponding reflections in the relative strength of odd and even harmonics in the response spectrum. These normal relationships can be perturbed by adaptation and perceptual interpretation in ways that usefully relate to the underlying encoding mechanisms. Finally, the notion of pattern onset and offset can be generalized to higher order stimuli.
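The second and third principles can be illustrated with static nonlinearities applied to a sinusoidal drive. The sketch below is a toy, not a model of any specific visual mechanism: a linear stage passes only 1f; full-wave rectification, which responds identically to both polarities of a contrast reversal, yields only even harmonics; and half-wave rectification, which responds to one half-cycle only, yields 1f plus even harmonics. The 2-Hz drive frequency and 1-kHz sampling rate are arbitrary assumptions.

```python
import numpy as np

fs, f = 1000, 2.0                       # toy sampling rate and drive frequency (Hz)
t = np.arange(fs) / fs                  # 1-s record, so FFT bin index = frequency in Hz
drive = np.sin(2 * np.pi * f * t)

def harmonic_amps(x, n_harmonics=6):
    """Amplitudes at 1f..nf for the 1-s record above."""
    spectrum = np.abs(np.fft.rfft(x)) * 2 / len(x)
    return spectrum[[int(k * f) for k in range(1, n_harmonics + 1)]]

linear = harmonic_amps(drive)
full_wave = harmonic_amps(np.abs(drive))          # same response to both polarities
half_wave = harmonic_amps(np.maximum(drive, 0))   # responds to one polarity only
for name, amps in [("linear", linear), ("full-wave", full_wave), ("half-wave", half_wave)]:
    print(f"{name:10s}", amps.round(3))
```

Full-wave rectification doubles the fundamental period of the output, which is why its odd harmonics vanish; half-wave rectification is the sum of a linear term and half of the full-wave term, so it keeps 1f and adds even harmonics.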

Multiple periodic visual inputs

In the previous section we described studies that have used EEG to track the neural responses to a single periodic visual input. In this section, we will describe one of the most important virtues of the SSVEP approach: its ability to measure responses from different visual processes simultaneously through the use of multiple stimulation frequencies. The fundamental insight here is that because the stimulus input frequencies strictly determine the response frequencies, it is possible to recover responses to several simultaneously presented stimuli via spectrum analysis if each one has a distinct frequency. This method was introduced by Regan (Regan & Cartwright, 1970; Regan & Heron, 1969) in the context of visual field mapping (i.e., perimetry) and was dubbed frequency tagging by Tononi, Srinivasan, Russell, and Edelman (1998). By careful choice of stimulation frequencies, this form of multi-input stimulation allows an experimenter to assign tags to different stimuli and recover the respective responses in the frequency bins that correspond to each of the stimulation frequencies. Conceptually, this allows for the extraction of evoked responses from populations of cells that are selective to each of the unique input frequencies, even if they are spatially overlapping or embedded within the same stimulus element.
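The tagging logic can be sketched in a few lines. In this toy example (assumed values, not taken from any study), two stimuli are tagged at 7.5 and 12 Hz; the simulated EEG is the sum of their responses plus white noise, and each stimulus's response is read back from the FFT bin at its own tag. The 10-s record contains a whole number of cycles of both tags, so each falls exactly on a frequency bin; `tag_amplitudes` is a hypothetical helper.

```python
import numpy as np

def tag_amplitudes(signal, fs, tag_freqs):
    """Amplitude at the FFT bin nearest each tag frequency."""
    spectrum = np.abs(np.fft.rfft(signal)) * 2 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1 / fs)
    return {f: spectrum[np.argmin(np.abs(freqs - f))] for f in tag_freqs}


fs, duration = 500, 10.0                       # 10-s record -> 0.1-Hz bins
t = np.arange(int(fs * duration)) / fs
rng = np.random.default_rng(1)
eeg = (2.0 * np.sin(2 * np.pi * 7.5 * t)      # response to stimulus A (7.5-Hz tag)
       + 0.5 * np.sin(2 * np.pi * 12.0 * t)   # response to stimulus B (12-Hz tag)
       + rng.standard_normal(t.size))          # broadband background noise
amps = tag_amplitudes(eeg, fs, [7.5, 12.0, 10.0])  # 10 Hz: an untagged control bin
print({f: round(a, 2) for f, a in amps.items()})
```

Each tagged response is recovered at its own frequency even though both are mixed in one channel, while the untagged 10-Hz bin contains only noise.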

This section covers applications of multiple-input frequency tagging in studies using multiple frequency inputs embedded in the same time sequence, multiple stimuli presented simultaneously, or stimuli comprising more than one “part,” as well as important applications of the tagging approach to the study of attention. The section Multi-input interactions as an objective measurement of system nonlinearities and neural convergence covers a second and very fundamental advantage of the multi-input approach: the ability to model the dynamic properties of nonlinear processes by measuring the nonlinear interactions between multiple input frequencies.
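Why nonlinear interactions between inputs are diagnostic can be seen in a toy example (assumed values). If two tagged inputs are processed separately and simply summed, the spectrum contains only f1 and f2. If they also interact multiplicatively, used here as a stand-in for a nonlinear stage where the two inputs converge, the identity cos(a)cos(b) = [cos(a - b) + cos(a + b)]/2 adds components at f2 - f1 and f1 + f2. The multiplicative form is an assumption chosen for simplicity.

```python
import numpy as np

fs, duration = 1000, 2.0                  # 2-s record -> 0.5-Hz bins
t = np.arange(int(fs * duration)) / fs
f1, f2 = 3.0, 5.0                         # toy tag frequencies (Hz)
a, b = np.cos(2 * np.pi * f1 * t), np.cos(2 * np.pi * f2 * t)

def amplitude_at(x, freq):
    """Amplitude of x at `freq`, which must fall exactly on a bin of this record."""
    spectrum = np.abs(np.fft.rfft(x)) * 2 / len(x)
    return spectrum[int(round(freq * duration))]

separate = a + b                          # independent, linear processing
converging = a + b + 0.5 * a * b          # toy multiplicative interaction
for name, x in [("separate", separate), ("converging", converging)]:
    print(name, [round(amplitude_at(x, f), 3) for f in (f1, f2, f2 - f1, f1 + f2)])
```

Responses at the difference and sum frequencies appear only in the converging case, which is what makes intermodulation terms an objective signature of a shared nonlinear stage.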

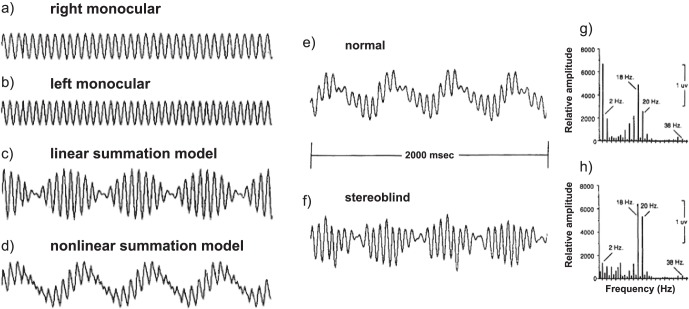

Multiple temporal inputs: Embedded frequency paradigms

Local/global processing in hierarchical stimuli

Many visual stimuli contain structure at multiple levels of complexity, and visual analysis of these patterns may reflect this feature hierarchy. A powerful way of separating responses driven by global structure from those driven by local structure is to dissociate the rates at which global and local structures are updated in the stimulus. The first example of this approach was the VEP generated by dynamic random-dot correlograms and stereograms (Julesz & Kropfl, 1982; Julesz, Kropfl, & Petrig, 1980). In these studies, the researchers wished to separate evoked responses to a higher level feature (binocular correlation or binocular disparity) from those to a lower level one (monocular image cues). To accomplish this goal, a binocularly matched set of dots was presented to each eye on a given display frame. Several frames later, a new set of matching dots was presented that portrayed the same global image. After several more updates of the monocular images, the global structure was changed (e.g., from uncorrelated to correlated or from crossed to uncrossed disparity). Purely monocular responses were generated at the rapid rate of monocular image updating. Because the successive monocular updates were temporally uncorrelated within an eye, there was no information coding the binocular state of the image at this frequency. By contrast, a purely binocular response was recorded at the frequency at which the binocular (global) status of the image was changed.

Subsequent researchers used this two-frequency tagging of local and global structure to measure orientation and direction selectivity in infants (Braddick, Atkinson, & Wattam-Bell, 1986; Wattam-Bell, 1991). For instance, Braddick et al. (1986) isolated an orientation-specific response by using two embedded frequency rates of stimulus change in a sequence. The phase of a grating changed at a fast rate of 25 Hz, generating a 25-Hz SSVEP (in the adult subjects who participated in that experiment), and the orientation of the grating changed every third stimulus, i.e., at a rate of 8.3 Hz. While the orientation response was present in adults and in infants a few weeks old, it was absent in newborns, showing that orientation-specific cortical activity is not present at birth but develops early in infancy (see Braddick, Birtles, Wattam-Bell, & Atkinson, 2005, for more recent work on motion- and orientation-specific cortical responses in infancy).

An advantage of this approach is that the processes of interest, e.g., contrast change and orientation selectivity, can be assessed simultaneously by reading out each one at its own update rate (i.e., its tag). Measuring these processes simultaneously both shortens the overall duration of the experiment and, importantly, avoids complications from attentional fluctuations or other state changes that arise when responses from separate processes are measured at different times and then compared (i.e., by subtraction).

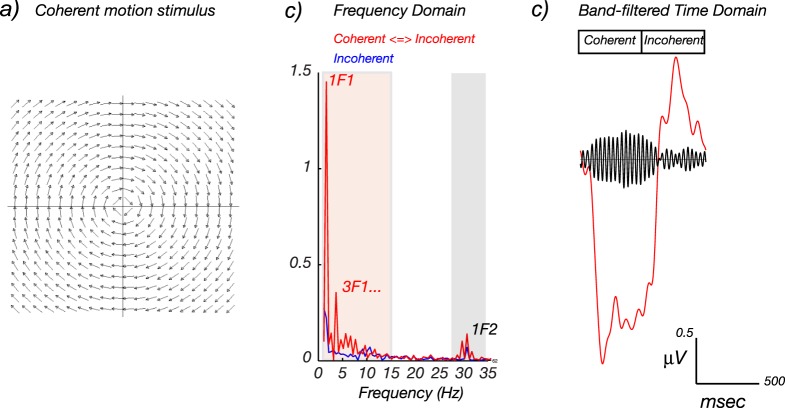

More recently, similar embedded-frequency designs have been used to study coherent-motion responses (Aspell, Tanskanen, & Hurlbert, 2005; Handel, Lutzenberger, Thier, & Haarmeier, 2007; Hou, Pettet, & Norcia, 2008; Lam et al., 2000; Nakamura et al., 2003; Niedeggen & Wist, 1999; Wattam-Bell et al., 2010), responses to global orientation structure in dynamic Glass and line patterns (Palomares, Ales, Wade, Cottereau, & Norcia, 2012; Wattam-Bell et al., 2010), texture segmentation (Ales, Appelbaum, Cottereau, & Norcia, 2013), and binocular disparity processing (Cottereau, Ales, & Norcia, 2014b; Cottereau et al., 2011; Cottereau, McKee, Ales, et al., 2012; Cottereau, McKee, & Norcia, 2012).

Figure 9 illustrates the embedded-frequency paradigm with a coherent-motion-processing example. In displays of this type, many small dots are presented on a given stimulus frame. The trajectory of the dots is subject to a rule that is applied to all dots. Because the rule is applied to all of the dots, the motion is coherent across the display. In this example, the coherently moving dots were constrained to move in trajectories along concentric arcs around fixation (see Figure 9a for a schematic illustration). In the first 500 ms of the 1-Hz display cycle, the dots all moved either clockwise or counterclockwise (e.g., CW, CCW, CW, CCW, …), jumping 17 arcmin in the prescribed direction every 33 ms (30 Hz). In the second 500 ms of the display cycle, the position of each dot was shifted by 17 arcmin in a random direction. Coherent motion thus appeared and disappeared at 1 Hz; this is the first frequency in the display (F1). Because the display also contained local contrast and motion transients at 30 Hz, there is a second frequency (F2) in the display. The spectrum of the response (Figure 9b) contains several narrowband peaks that are harmonics of the 1-Hz global motion-update rate. The first harmonic of the global update frequency is labeled 1f1 and is by far the largest evoked response component in the data. A higher harmonic response associated with the global update frequency F1 occurs at 3f1. The blue curve shows data for a control condition in which the dots were moving randomly in both halves of the 1-s stimulus cycle. Because of this, no coherent motion was seen, but locally random motion was visible. In this case, there are no peaks at the harmonics of F1, but there is a spike in the spectrum at the dot-update frequency (1f2, or 30 Hz).

Figure 9.

Hierarchical SSVEPs to a coherent-motion stimulus. (a) Schematic illustration of the coherent-motion onset/offset VEP stimulus in the coherent-motion phase. The position of a large number of bright dots is shifted, either in a consistent fashion for each dot (coherent global motion) or in a random fashion (incoherent local motion), at a rapid rate (F2 = 30 Hz). The display also alternates between coherent and incoherent states, but at a much lower frequency (F1 = 1 Hz). (b) In the frequency domain, a response is visible at the rapid 30-Hz update rate of the individual dots (f2) and at 1 Hz and its harmonics (1f1, 2f1, 3f1, …), which is the rate at which the global motion structure changes. Responses are also visible at 1f1 ± 1f2 (see Multi-input interactions as an objective measurement of system nonlinearities and neural convergence). (c) The cycle average of the coherent-motion onset/offset response in the time domain shows a mixture of long- and short-period fluctuations. The red curve is synthesized from the signals at nf1, where n ranges up to 15; see red shading in (b). The black curve is synthesized from responses over a band of frequencies centered on 1f2, as indicated by the gray shading in (b). Figure derived from Hou et al. (2008).

Figure 9c shows time-domain reconstructions of the coherent-motion response. The red time course was created by inverse-Fourier transforming the response components between 1 and 15 Hz of data from the coherent-motion onset-offset VEP (red-shaded region of Figure 9b) and setting all other response frequencies to zero. The time course of the response resembles a 1-Hz sine wave, consistent with the dominance of the 1f1 component in the spectrum. The black curve was reconstructed from frequencies around the local motion-update rate of the stimulus (gray-shaded region of Figure 9b). A rapidly oscillating response (30 Hz) can be seen that is maximal during the period of coherent motion (the first 500 ms of the display).
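The band-limited reconstruction described above amounts to zeroing the Fourier components outside a band and inverting the transform. A minimal sketch on a toy 1-s cycle (assumed components, not the Hou et al., 2008, data): a slow "global" waveform built from 1- and 3-Hz terms, plus a 30-Hz "local" oscillation whose smooth envelope peaks during the first half-cycle. `band_reconstruct` is a hypothetical helper name.

```python
import numpy as np

def band_reconstruct(x, fs, f_lo, f_hi):
    """Inverse FFT of x after zeroing every component outside [f_lo, f_hi] Hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.arange(spectrum.size) * fs / len(x)   # exact bin frequencies in Hz
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    return np.fft.irfft(spectrum * keep, n=len(x))


fs = 600
t = np.arange(fs) / fs                               # one 1-s display cycle
global_part = np.sin(2 * np.pi * t) + 0.3 * np.sin(2 * np.pi * 3 * t)
envelope = 0.5 * (1 + np.sin(2 * np.pi * t))         # peaks at t = 250 ms
local_part = 0.2 * envelope * np.sin(2 * np.pi * 30 * t)
cycle = global_part + local_part

slow = band_reconstruct(cycle, fs, 1, 15)            # analogous to the red curve
fast = band_reconstruct(cycle, fs, 25, 35)           # analogous to the black curve
```

Because the envelope is a single 1-Hz sinusoid, the local term occupies only 29, 30, and 31 Hz, so the two bands separate the components exactly; a sharper on/off gate would spread sidebands further and blur the separation.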

In studies of this type, the frequencies of the local and global update rates can differ by a factor of 10 or more. Because of this wide separation between stimulation frequencies, and because of the relative sluggishness of global evoked responses, both the odd and even harmonics of the global update response rate are interpretable as being due to the modulation of global structure. The responses to the local structure are generated at a much higher frequency and do not overlap with the global responses that are generated at low frequencies. There is thus a clear separation of brain responses to these attributes of the stimulus.

In paradigms of this type, the frequency tags are associated with stimulus attributes that exist at conceptually different hierarchical levels (e.g., high-frequency tags are associated with local attributes and low-frequency tags with global attributes). The level of analysis done by the brain on the stimulus is thus tagged by frequency, and one can then ask how different brain regions process the different stimulus attributes (for an example of this approach, see Palomares et al., 2012).

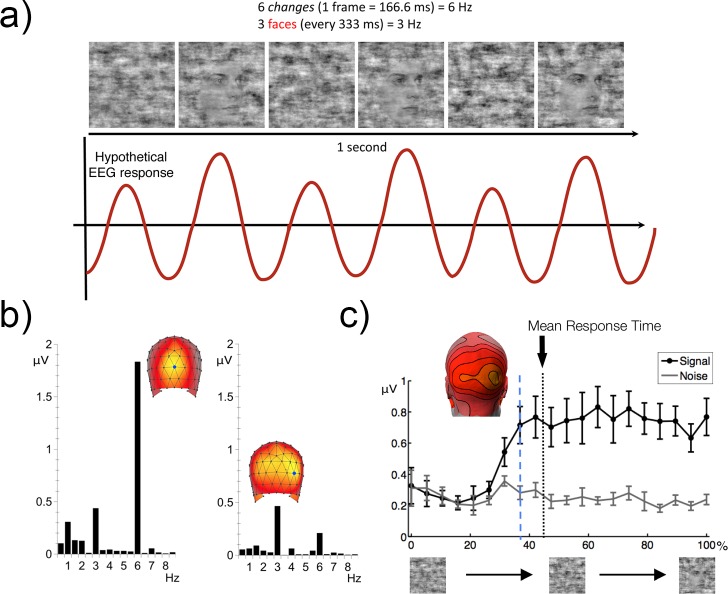

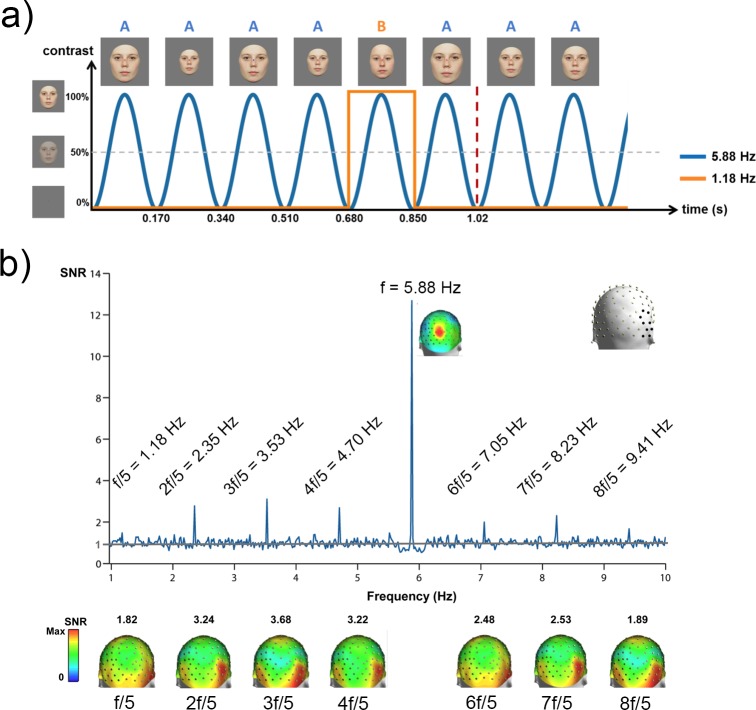

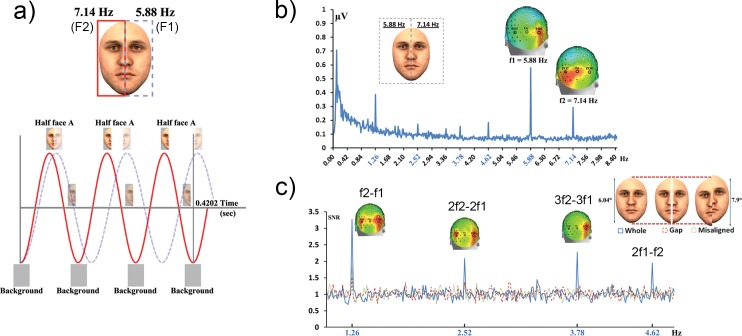

Temporally embedded frequency rates and periodic oddball paradigms

Embedding an infrequent stimulus of one type at random within a stream of frequent stimuli of another type has been used extensively to study feature discrimination in the auditory and visual domains (Näätänen, 1990; Pazo-Alvarez, Cadaveira, & Amenedo, 2003), as well as target-selection mechanisms (the P3 response; Sutton, Braren, Zubin, & John, 1965). In the mismatch negativity (MMN) paradigm, averaged responses to the frequent and infrequent stimuli are subtracted from one another, and the difference potential is taken to indicate that the two stimulus classes represented by the frequent and infrequent stimuli have been discriminated. A similar set of questions can be addressed with the SSVEP by embedding the rare oddball stimulus in a rapid periodic train of standards, with the oddball frequency being a submultiple of the faster rate of the standard (Heinrich, Mell, & Bach, 2009). This approach has been used to study face individuation, the ability to discriminate one individual from another. Liu-Shuang, Norcia, and Rossion (2014) presented their participants with 60-s sequences containing a base face (A) presented at 5.88 Hz. Different oddball faces (B, C, D, …) were introduced at fixed intervals (every fifth stimulus, i.e., at 5.88 Hz/5 = 1.18 Hz: AAAABAAAACAAAAD…; see Figure 10a). Significant responses were found in the EEG spectrum at 1.18 Hz and its harmonics (e.g., 2 × 5.88 Hz/5 = 2.35 Hz) over the right occipitotemporal cortex (Figure 10b). This high-level discrimination response was present in all participants after a few minutes of recording, for both color and grayscale faces, providing a robust neural measure of face discrimination in individual brains. Face inversion or contrast reversal did not affect the basic 5.88-Hz periodic response over medial occipital channels. However, these manipulations substantially reduced the 1.18-Hz oddball discrimination response over the right occipitotemporal region, indicating that this response reflects high-level processes that are partly face specific. The oddball response obtained with fast periodic visual stimulation has several advantages over a traditional visual MMN paradigm with faces (e.g., Kimura, Kondo, Ohira, & Schröger, 2012): (a) it is identified objectively at the frequency of the oddball and its harmonics, (b) it can be measured in only a few minutes thanks to the high SNR of the approach, and (c) no subtraction between two conditions (targets and standards) is needed to isolate the discrimination response. This approach has recently been extended to the categorization of natural face images (Rossion et al., 2015) and to word/nonword discrimination (Lochy, Van Belle, & Rossion, 2014). It should prove useful in future studies that seek to identify visual discrimination responses in patient populations and infants.
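Oddball responses such as the 1.18-Hz component are usually expressed relative to the local noise level. A minimal sketch of one common way to do this, with assumed parameters: the SNR at each frequency bin is its amplitude divided by the mean amplitude of surrounding bins, excluding the bins immediately adjacent. The neighbor count (10 on each side) and the one-bin gap are assumptions, not necessarily the values used by Liu-Shuang et al. (2014). The toy signal uses a 125-s record so that both 5.88 Hz and 5.88/5 Hz fall exactly on frequency bins; `snr_spectrum` is a hypothetical name.

```python
import numpy as np

def snr_spectrum(amplitude, n_neighbors=10, n_skip=1):
    """Amplitude at each bin divided by the mean of surrounding bins.

    Uses n_neighbors bins on each side, skipping the n_skip bins closest to the
    bin of interest; edge bins without enough neighbors are set to NaN.
    """
    amplitude = np.asarray(amplitude, dtype=float)
    snr = np.full(amplitude.shape, np.nan)
    reach = n_skip + n_neighbors
    for k in range(reach, amplitude.size - reach):
        lower = amplitude[k - reach:k - n_skip]
        upper = amplitude[k + n_skip + 1:k + reach + 1]
        snr[k] = amplitude[k] / np.concatenate([lower, upper]).mean()
    return snr


fs, duration = 250, 125.0                     # 125 s: 735 base and 147 oddball cycles
base_hz, oddball_hz = 5.88, 5.88 / 5
t = np.arange(int(fs * duration)) / fs
rng = np.random.default_rng(2)
eeg = (np.sin(2 * np.pi * base_hz * t)              # toy base-rate response
       + 0.3 * np.sin(2 * np.pi * oddball_hz * t)   # toy discrimination response
       + rng.standard_normal(t.size))
amplitude = np.abs(np.fft.rfft(eeg)) * 2 / t.size
snr = snr_spectrum(amplitude)
oddball_bin = int(round(oddball_hz * duration))     # bin index = frequency x duration
print(round(snr[oddball_bin], 1))
```

An SNR near 1 indicates noise-level activity; for instance, the SNR of 1.49 reported for the 1.18-Hz response in Figure 10 means an amplitude 49% above the mean of the surrounding bins.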

Figure 10.

The periodic oddball paradigm (Heinrich et al., 2009) extended to face stimuli by Liu-Shuang et al. (2014). (a) Faces are presented by sinusoidal contrast modulation at a rate of 5.88 Hz. The base faces show a single individual; at fixed intervals (every fifth face, 5.88 Hz/5 = 1.18 Hz), a face of a different identity, drawn from a set of oddball faces, is presented instead. (b) SNR spectrum of the right occipitotemporal ROI for faces (grand average, four 60-s sequences for each of 12 participants). The channels composing this ROI are indicated with black dots on the 3-D head in the upper right. On the SNR spectrum, only significant oddball responses are labeled. Note that although the 1.18-Hz response appears small, it has an SNR of 1.49, corresponding to a response 49% above the noise level. Below the spectrum, 3-D topographies of each harmonic response are displayed. The largest oddball response is observed over the occipitotemporal regions, with a clear right-hemisphere lateralization.

Multiple temporal inputs and the tagging of spatial locations and perceptual organizations

Vision is inherently a spatial sense, and therefore many of the questions at the core of visual neuroscience address the representation and manipulation of visual space in the nervous system. The multi-input SSVEP approach is particularly well suited for addressing these questions and was, in fact, first applied to questions of spatial-location processing (Regan & Cartwright, 1970; Regan & Heron, 1969). The fundamental insight here is that because the response frequencies are strictly determined by the stimulus input frequencies, it is possible to recover responses to several simultaneously presented stimuli via spectrum analysis if each one has a distinct frequency. In the following sections, we discuss research that has used the multi-input approach to study neural mechanisms that underlie spatial vision, scene perception, and perceptual organization.
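To illustrate the principle, the sketch below simulates a single recording channel containing responses to two simultaneously presented stimuli, each tagged at its own frequency, and reads each response back out of the amplitude spectrum. The tag frequencies (7.5 and 12 Hz), amplitudes, sampling rate, noise level, and the function name `tag_amplitudes` are hypothetical. The 4-s record gives a frequency resolution of 1/4 s = 0.25 Hz, so both tags fall exactly on FFT bins and spectral leakage is avoided.

```python
import numpy as np

def tag_amplitudes(signal, fs, tag_hz):
    """Read the single-sided amplitude spectrum at each tag frequency."""
    n = len(signal)
    spectrum = np.fft.rfft(signal) / n
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    amps = {}
    for f in tag_hz:
        k = int(np.argmin(np.abs(freqs - f)))   # nearest FFT bin
        amps[f] = 2.0 * np.abs(spectrum[k])      # rescale to sinusoid amplitude
    return amps

# Simulated channel: two stimuli tagged at 7.5 Hz and 12 Hz plus white noise
fs, dur = 500.0, 4.0
t = np.arange(int(fs * dur)) / fs
rng = np.random.default_rng(0)
eeg = (2.0 * np.sin(2 * np.pi * 7.5 * t)
       + 1.0 * np.sin(2 * np.pi * 12.0 * t)
       + 0.5 * rng.standard_normal(t.size))

amps = tag_amplitudes(eeg, fs, [7.5, 12.0])
print(amps)
```

Each stimulus's response is recovered from its own bin even though both were present in the same channel at the same time; real SSVEPs would also contain harmonics of each tag, which this toy signal omits.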

Locations/perimetry

Visual function can vary over the visual field as a result of normal aging or of medical conditions such as glaucoma, stroke, or brain tumors. Visual field testing is therefore widely used by clinicians and researchers to diagnose and study the spatial characteristics of human vision, and the multi-input SSVEP approach has long served as an important tool in this pursuit. The first use of multiple frequency-tagged inputs was to record responses generated by the left and right visual hemifields simultaneously, rather than sequentially as in traditional clinical perimetry (Regan & Cartwright, 1970; Regan & Heron, 1969). Modern versions have included up to 17 regions (Abdullah et al., 2012), and the approach has dramatically reduced the time needed for visual field assessment. Its primary limitation is that as the number of tagged locations goes up, either the range of temporal frequencies in the display must increase or the frequency spacing between tags must decrease. The disadvantage of increasing the range of frequencies is that the sensitivity of different parts of the visual field may itself depend on temporal frequency, so that temporal-frequency differences are confounded with spatial position. The disadvantage of decreasing the spacing to fit more stimuli into a given range of temporal frequencies is that the recording time must increase commensurately, because the frequency resolution of the spectrum is the reciprocal of the record length. In addition to its use in perimetry, location tagging has been a major focus of brain–computer interface research (Vialatte, Maurice, Dauwels, & Cichocki, 2010; Zhu, Bieger, Garcia Molina, & Aarts, 2010). Tagging of locations for studying spatial attention is described in detail in the section Multiple temporal inputs for the study of visual attention.
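The trade-off between the number of tags, the available frequency range, and recording time follows from the fact that the FFT of a T-second record has bins 1/T Hz apart. The hypothetical helper below computes the shortest record that places n evenly spaced tags in a band [f_lo, f_hi] with a chosen number of bins between neighboring tags. The 7 to 15 Hz band and the 10-bin separation are illustrative assumptions, and the sketch deliberately ignores harmonics and intermodulation terms, which in practice constrain tag selection further.

```python
def min_record_seconds(n_tags, f_lo, f_hi, bins_between=1):
    """Shortest record length T (s) for n_tags evenly spaced tags in [f_lo, f_hi]
    when adjacent tags must be bins_between FFT bins (each 1/T Hz wide) apart."""
    if n_tags < 2 or f_hi <= f_lo:
        raise ValueError("need at least two tags and f_hi > f_lo")
    spacing_hz = (f_hi - f_lo) / (n_tags - 1)   # distance between adjacent tags
    return bins_between / spacing_hz            # from spacing_hz = bins_between / T

# Halving the tag spacing in a fixed (hypothetical) 7-15 Hz band doubles the record length
for n in (9, 17):
    print(n, "tags:", min_record_seconds(n, 7.0, 15.0, bins_between=10), "s")
```

Going from 9 to 17 tags in the same band halves the spacing from 1.0 Hz to 0.5 Hz, and the required record length doubles from 10 s to 20 s.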

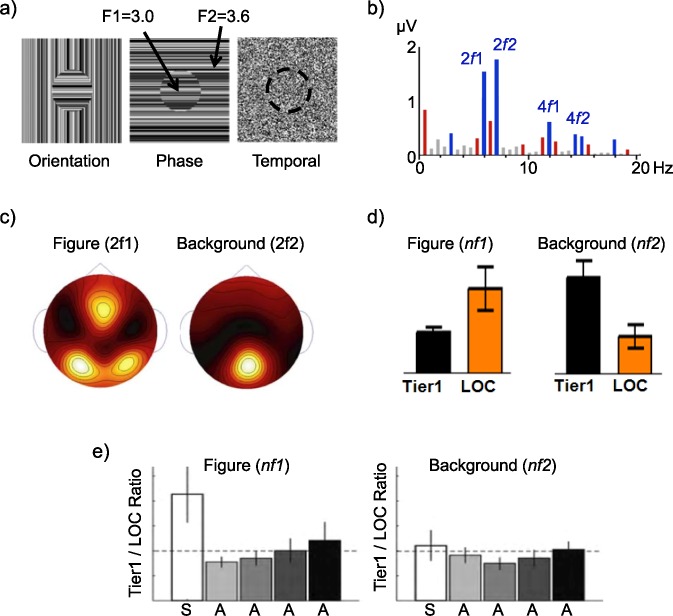

Figure–ground segregation networks

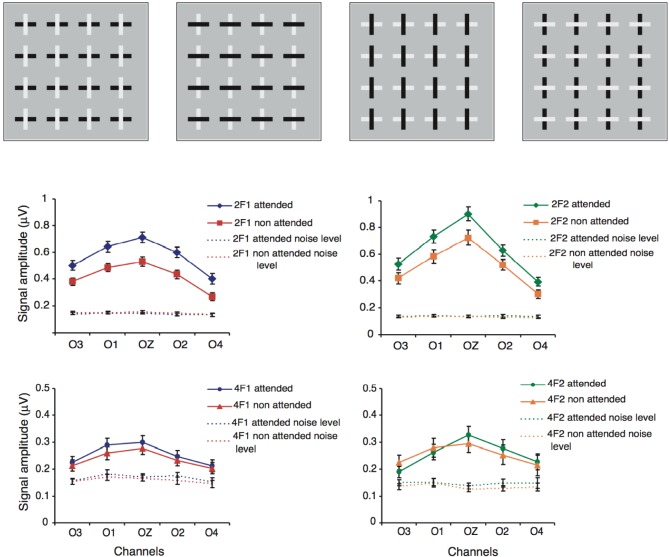

Visual-scene perception relies on the segregation of objects from their supporting backgrounds, so-called figure–ground segmentation. The multi-input SSVEP approach lends itself well to the study of scene segmentation because different regions of the scene can be tagged with different temporal frequencies. In such designs, the response spectrum can be evaluated at harmonics of each region's frequency tag to isolate the cortical activity specific to figure versus background processing. Researchers have used this approach to achieve precise experimental control over visual space and to test the spatial dependency of the cortical networks underlying figure–ground segmentation.

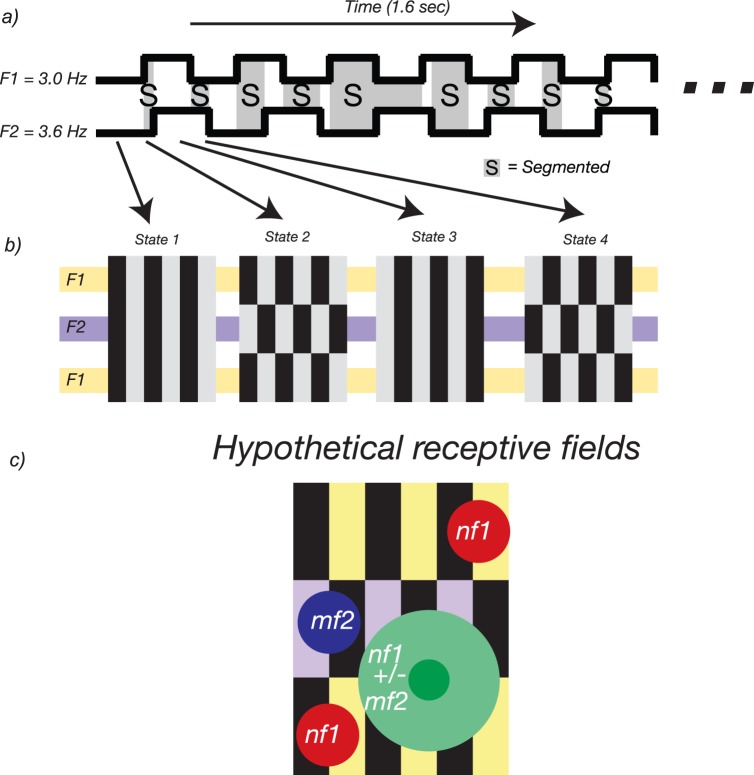

Using this two-input approach, Appelbaum and colleagues carried out a series of studies identifying and characterizing distinct cortical networks responsible for processing figure and background regions. In the first of these studies (Appelbaum, Wade, Vildavski, Pettet, & Norcia, 2006), texture cues were modulated such that a central circular region alternated at 3.0 Hz while the surrounding texture alternated at 3.6 Hz (Figure 11a). Using orientation-defined, phase-defined, and temporally defined white-noise texture cues, the researchers found that the evoked responses attributed to the background (Figure 11b) produced a single medial occipital response, whereas the figure regions consistently produced bilateral occipital responses (Figure 11c).
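Because every response frequency in such a two-input design is a combination n·f1 + m·f2 of the tag frequencies, each spectral component can be assigned to the figure (m = 0), the background (n = 0), or nonlinear interactions between them (intermodulation, both nonzero). The hypothetical function below labels components for the 3.0/3.6-Hz design; it uses exact fractions so that coincidences are detected reliably, and the maximum order and 20-Hz limit are illustrative assumptions. Up to fourth order every component has a unique label, but because 3.6/3.0 = 6/5, higher orders produce collisions: 6f1 = 5f2 = 18 Hz, for example, cannot be attributed to either region alone.

```python
from fractions import Fraction

def label_components(f1, f2, max_order=6, max_hz=20):
    """Label each positive frequency n*f1 + m*f2 (|n|, |m| <= max_order, up to max_hz).

    Labels: 'figure' (m == 0), 'background' (n == 0), 'IM' (both nonzero).
    A frequency reachable in more than one way collects several labels."""
    f1, f2 = Fraction(str(f1)), Fraction(str(f2))   # exact values, e.g. 3.6 -> 18/5
    labels = {}
    for n in range(-max_order, max_order + 1):
        for m in range(-max_order, max_order + 1):
            f = n * f1 + m * f2
            if f <= 0 or f > max_hz:
                continue
            kind = "figure" if m == 0 else "background" if n == 0 else "IM"
            labels.setdefault(f, set()).add(kind)
    return labels

low = label_components(3.0, 3.6, max_order=4)
print(sorted(float(f) for f in low))                    # components up to fourth order
print(label_components(3.0, 3.6, max_order=6)[Fraction(18)])
```

In practice high-order intermodulation terms tend to be small, so a low-order harmonic that coincides with one is usually still read as a harmonic; the exact-collision check is most useful when choosing tag pairs.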

Figure 11.

Figure–ground segmentation studies of Appelbaum and colleagues (Appelbaum et al., 2010; Appelbaum et al., 2006, 2008). (a) Stimuli in these studies were two-frequency textures in which a central circular figure alternated at 3.0 Hz (f1) and the surround alternated at 3.6 Hz (f2). Three different texture cues were used: orientation modulation of one-dimensional textures, phase modulation of one-dimensional textures, and white noise. (b) Example EEG response spectra, with significant responses colored (blue, harmonics of the figure and background frequencies; red, intermodulation (IM) terms). (c) Topographic distributions of the second-harmonic responses show distinct patterns. (d) Source-localized ROI profiles show a double dissociation: The figure response (summed over harmonics) is larger in the LOC, and the background response is larger in first-tier retinotopic areas V1–V3. This pattern is present for all three cues, for figure sizes of 2° and 5°, and for figures presented at different retinal eccentricities. (e) Figure selectivity, expressed as the ratio of retinotopic to LOC response, is present only for the surrounded stimulus (S), which shows a figure surrounded by a background, and not for the ambiguous (A) stimuli, which lack this Gestalt arrangement.

To define which cortical areas were involved in these two networks, a source-imaging approach based on functional MRI (fMRI) was used to show that activity related to the figure region, but not the background region, was preferentially routed between first-tier visual areas (V1–V3) and the lateral occipital cortex (LOC), a visual area known from fMRI studies to show strong object selectivity (Malach et al., 1995). Separate fMRI mapping sessions were used to define the visual areas, and a minimum-norm, distributed-source model was used to estimate each participant's SSVEP in these regions of interest (ROIs; for a summary of the technique, see Cottereau, Ales, & Norcia, 2014a). A separate network, extending from the first tier through more dorsal areas, responded preferentially to the background region (Figure 11d).
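The core of a minimum-norm estimate fits in one line of linear algebra: given a lead-field matrix L (sensors × sources) and sensor data V, the regularized minimum-norm estimate is L^T (L L^T + λI)^(-1) V, the source pattern with the smallest overall power that approximately reproduces the data. The sketch below is a generic NumPy version with a random, hypothetical lead field and hypothetical function names; the pipeline summarized by Cottereau, Ales, and Norcia (2014a) adds anatomical and fMRI-defined constraints and its own choice of regularization, none of which is reproduced here. Averaging the estimate over an ROI's source indices then yields one response per area.

```python
import numpy as np

def minimum_norm(leadfield, data, lam):
    """Regularized minimum-norm estimate: L^T (L L^T + lam * I)^-1 V."""
    n_sensors = leadfield.shape[0]
    gram = leadfield @ leadfield.T + lam * np.eye(n_sensors)
    return leadfield.T @ np.linalg.solve(gram, data)

def roi_response(sources, roi_indices):
    """Mean estimated activity over the sources belonging to one ROI."""
    return sources[roi_indices].mean(axis=0)

rng = np.random.default_rng(0)
L = rng.standard_normal((32, 200))   # hypothetical: 32 sensors, 200 cortical sources
j_true = np.zeros(200)
j_true[:10] = 1.0                    # hypothetical active ROI: sources 0-9
v = L @ j_true                       # noiseless sensor data
j_hat = minimum_norm(L, v, lam=1e-8)
print(roi_response(j_hat, np.arange(10)), roi_response(j_hat, np.arange(10, 200)))
```

Because there are far fewer sensors than sources, the estimate is a smoothed, lower-amplitude version of the true pattern; ROI averaging, as in the studies above, is one way to summarize it by area.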

In a subsequent study, Appelbaum, Ales, Cottereau, and Norcia (2010) extended the analysis of the stimulus preferences of the LOC by including several ambiguous figure–ground arrangements to determine whether the Gestalt property of surroundedness (i.e., a smaller figure on a larger background) was necessary to selectively activate the LOC. To this end, they compared the magnitude of frequency-tagged activity in the object-selective LOC across spatial arrangements that either portrayed surrounded figure–ground arrangements (S) or contained two spatially symmetric, temporally modulating regions whose figure–ground arrangement was ambiguous (A). They found that replacing the classic surrounded figure–ground organization with a symmetric one greatly reduced the specificity of the LOC response to the different image regions (i.e., produced a Tier1/LOC ratio near 1; Figure 11e). They concluded that the surrounded Gestalt organization exerts a powerful controlling effect on the routing of information about image regions through first-tier areas and object-selective cortex. Collectively, these studies illustrate how the multi-input SSVEP can be used to investigate the mechanisms supporting the early stages of visual-scene processing. As discussed in subsequent sections, these multi-input figure–ground designs can also be used to study spatial interactions (Appelbaum et al., 2008) and spatial attention (Appelbaum & Norcia, 2009).

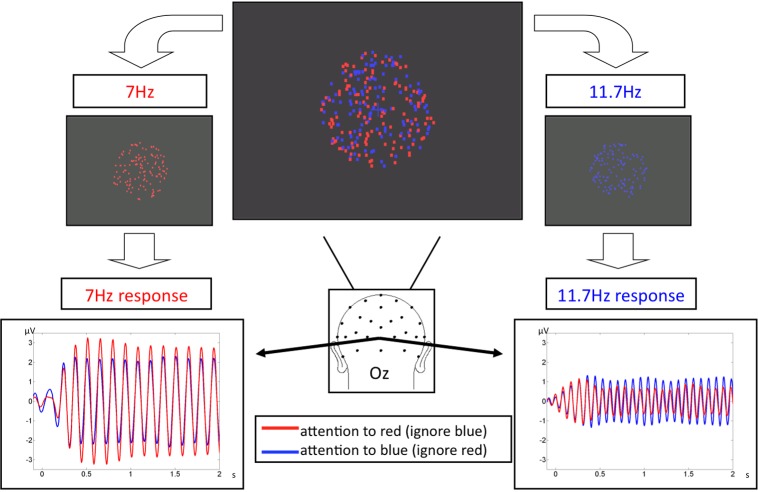

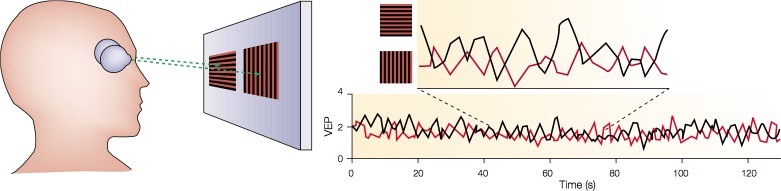

Perceptual bistability and perceptual organization

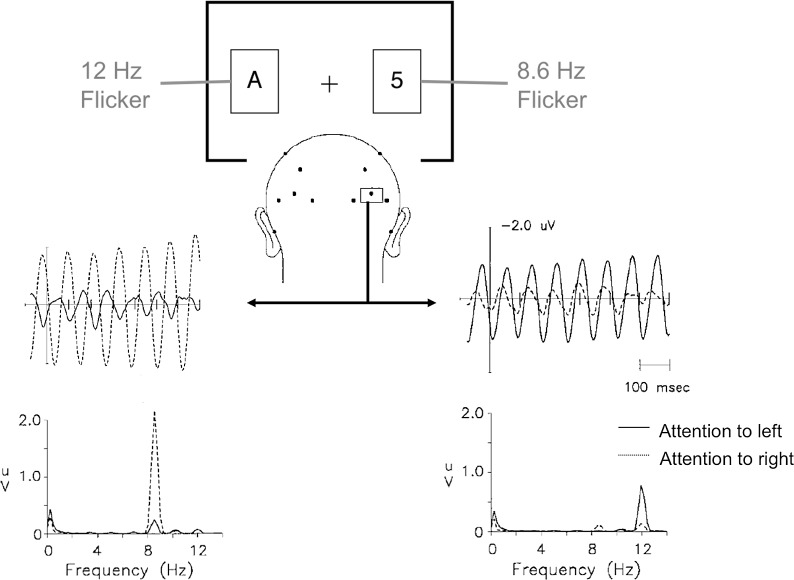

A particularly powerful means of studying the relationship between perception and neural activity uses a physical stimulus that has more than one perceptual interpretation. A hallmark of such stimuli is perceptual bistability: The perceptual interpretations alternate stochastically over time. Binocular rivalry, the fluctuation in perception that occurs when different images are presented to the two eyes, is probably the best-studied bistable percept (for a review, see Blake & Logothetis, 2002). SSVEPs provide a powerful way to track, in real time, alternations in perceptual dominance between the eyes. As early as 1964, Lansing reported an intermittent suppression of the SSVEP under conditions promoting rivalry: The amplitude at the flicker frequency of a grating shown to one eye was sometimes suppressed while a constant grating was presented to the other eye, and this intermittent suppression coincided well with the subject's perceived suppression of the flickering grating by the constant pattern. Building on this approach, Brown and Norcia (1997) developed a gold-standard technique to detect perceptual fluctuations in binocular rivalry. In that study, observers dichoptically viewed two differently oriented gratings that oscillated in counterphase at distinct frequencies (f1 = 5.5 Hz for the grating viewed by the left eye and f2 = 6.6 Hz for the grating viewed by the right eye; see Figure 12).

Figure 12.

Frequency tagging used to measure rivalry in real time. The participant viewed a vertical grating oscillating at 5 Hz in one eye and a horizontal grating oscillating at 6 Hz in the other eye. Because the two stimuli could not be binocularly fused, they alternated in perceptual dominance. The curves on the right plot the time course of the evoked responses at the separate eye-tagging frequencies. The two time courses alternate in counterphase, in sync with the perceptual alternations. From Blake and Logothetis (2002).

This stimulus produced strong SSVEPs over occipital electrodes at twice the two input frequencies (2f1 and 2f2). Fourier analysis revealed that the amplitudes at these frequencies were negatively correlated between the two eyes (i.e., when the response associated with one grating was large, the response associated with the other grating was invariably small). Moreover, these modulations were tightly phase-locked to the observers' perceptual reports of dominance and suppression (i.e., the grating whose tag frequency had the larger amplitude was the one being perceived). The approach therefore allows the perceived image to be determined without a behavioral report and can thus be used to measure rivalry in populations that cannot readily provide one, such as infants and animals. The Brown and Norcia (1997) study was followed by several studies investigating the neural correlates of perceptual dominance (Cosmelli et al., 2004; Srinivasan, Russell, Edelman, & Tononi, 1999; Sutoyo & Srinivasan, 2009; Tononi et al., 1998), the influence of emotional stimuli on rivalry (Alpers, Ruhleder, Walz, Mühlberger, & Pauli, 2005), and the relationship between attention and binocular rivalry (Zhang, Jamison, Engel, He, & He, 2011).
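The real-time readout described above can be sketched as a sliding-window analysis: the amplitude at each eye's response frequency (2f1 = 11 Hz and 2f2 = 13.2 Hz for the tags given above) is estimated in successive windows, and the eye whose frequency has the larger amplitude is taken as dominant. The simulated signal, with dominance switching every 2 s, its amplitudes, the noise level, the 1-s window, and the function name are all hypothetical. Amplitudes are estimated by complex demodulation (projecting each window onto a complex exponential at the target frequency), one of several reasonable choices.

```python
import numpy as np

def windowed_amplitude(x, fs, freq, win_s, step_s):
    """Amplitude of the `freq` component in successive windows (complex demodulation)."""
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    t = np.arange(win) / fs
    kernel = np.exp(-2j * np.pi * freq * t)
    starts = range(0, len(x) - win + 1, step)
    return np.array([2 * np.abs(np.mean(x[s:s + win] * kernel)) for s in starts])

fs = 500.0
f1, f2 = 5.5, 6.6                     # eye tags from the text
r1, r2 = 2 * f1, 2 * f2               # counterphase reversal -> responses at 2f1, 2f2
t = np.arange(int(8 * fs)) / fs
left_dominant = (t // 2.0) % 2 == 0   # hypothetical: dominance switches every 2 s
a1 = np.where(left_dominant, 2.0, 0.5)
a2 = np.where(left_dominant, 0.5, 2.0)
rng = np.random.default_rng(1)
eeg = (a1 * np.sin(2 * np.pi * r1 * t) + a2 * np.sin(2 * np.pi * r2 * t)
       + 0.3 * rng.standard_normal(t.size))

amp1 = windowed_amplitude(eeg, fs, r1, win_s=1.0, step_s=1.0)
amp2 = windowed_amplitude(eeg, fs, r2, win_s=1.0, step_s=1.0)
decoded = np.where(amp1 > amp2, "left", "right")
print(decoded, np.corrcoef(amp1, amp2)[0, 1])
```

The two amplitude time courses are strongly negatively correlated, as in the rivalry recordings, and the decoded sequence follows the simulated dominance periods. With real data, the window length trades temporal resolution against SNR and against leakage between the two closely spaced response frequencies.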

Beyond binocular rivalry, other types of multistable stimuli have been used in SSVEP designs to relate neural activity to perception. Most notably, Parkkonen, Andersson, Hämäläinen, and Hari (2008) used a modified Rubin face–vase illusion in which the face and vase regions were tagged with dynamic white noise at two different frequencies. Using this design and magnetoencephalographic (MEG) recording, they demonstrated that activity in early visual cortex covaried with the perceptual states reported by the observers, indicating that early visual areas are among the cortical loci the visual system uses to achieve object-level representations.

Multiple temporal inputs for the study of visual attention

Attention is an essential neurobiological function that allows an organism to select the most important stimuli in the environment for enhanced neural processing. Because at any moment there is more information in the environment than can be processed, efficient attentional control is regarded as a critical cognitive faculty for filtering out irrelevant distractions and focusing on the stimuli most likely to lead to successful behavior. Attention is not, however, a unitary construct or mechanism, but rather a set of interrelated processing schemas that are applied to many perceptual and cognitive operations (Chun, Golomb, & Turk-Browne, 2011). A major goal of vision science has been to identify the core behavioral processes and neural mechanisms that constitute the diverse taxonomy of internal (endogenous) and external (exogenous) attention. Over the last two decades, SSVEP designs have provided substantial insight into these processes and mechanisms.

The SSVEP is particularly well suited to attention research, as it provides a high-SNR measure of neural activity that can be unambiguously associated with specific external stimuli, even when multiple stimuli are present at the same time. Importantly, it allows responses to stimuli outside the focus of attention to be monitored, something that is difficult to do with behavioral methods. Moreover, the SSVEP can be flexibly deployed in a number of configurations, including the tagging of both spatially distinct and spatially overlapping stimuli. In light of these attributes, the SSVEP approach has arguably found its greatest utility in studies of the cognitive and neural mechanisms underlying volitional attention in humans.