Abstract

Gestures represent an integral aspect of interpersonal communication, and they are closely linked with language and thought. Brain regions for language processing overlap with those for gesture processing. Two types of gesticulation, beat gestures and metaphoric gestures, are particularly important for understanding the taxonomy of co‐speech gestures. Here, we investigated gesture production during taped interviews with respect to regional brain volume. First, we were interested in whether beat gesture production is associated with the same regions as metaphoric gesture production. Second, we investigated whether cortical regions associated with metaphoric gesture processing are linked to gesture production based on correlations with brain volumes. We found that beat gestures are uniquely related to regional volume in cerebellar regions previously implicated in discrete motor timing. We suggest that these gestures may be an artifact of the timing processes of the cerebellum that are important for the timing of vocalizations. In addition, our findings indicate that brain volumes in regions of the left hemisphere previously implicated in metaphoric gesture processing are positively correlated with metaphoric gesture production. Together, this novel work extends our understanding of left hemisphere regions associated with gesture to indicate their importance in gesture production, and also suggests that beat gestures may be distinct from other gesture types. This provides important insight into the taxonomy of co‐speech gestures, and also further insight into the general role of the cerebellum in language. Hum Brain Mapp 36:4016–4030, 2015. © 2015 Wiley Periodicals, Inc.

Keywords: cerebellum, beat, neuroimaging, gesture, timing, superior temporal gyrus, language

INTRODUCTION

Interpersonal communication is a foundational component of our day‐to‐day lives. In addition to verbal communication through speech, gesture also plays a key role in these interactions. Motoric gestures can provide additional meaning and emphasis during social interactions, and speech and gestures may interact to help convey meaning and semantic information [Holle et al., 2008; Hubbard et al., 2009]. The close relationship between gesture and language is highlighted by shared neural substrates for speech and gesture processing as demonstrated using patient samples and functional neuroimaging [Green et al., 2009; Holle et al., 2008; Hubbard et al., 2009; Willems and Hagoort, 2007; Xu et al., 2009]. Indeed, one landmark study observed that people who are blind from birth use gesture when they speak in the same manner that sighted people do [Iverson and Goldin‐Meadow, 1998], and gesture is also impacted in callosotomy patients [Lausberg et al., 2003]. This is further supported by evidence from patients with aphasia who show deficits in gesture discrimination in addition to language deficits [Nelissen et al., 2010]. However, although there are deficits in gesture discrimination in aphasia, these patients are also reliant on gesture to aid in word retrieval, supporting the link between gesture and language [Cocks et al., 2009, 2011; Lanyon and Rose, 2009]. Further, patients with Broca's aphasia show a slow and halting presentation of meaningful gestures while Wernicke's aphasia patients exhibit rapid but vague and meaningless gestures [McNeill and Pedelty, 1995]. Finally, in interesting investigations of children with language delay, gesture use was related to the eventual development of normal language skills [Thal and Tobias, 1992; Thal et al., 1991]. Indeed, it has been suggested that together language and gesture make up a more broad and integrated system of communication [McNeill, 1992, 2005; McNeill and Pedelty, 1995]. 
Thus, understanding gesture is important for our understanding of language and communication more generally. This may provide important information about the emergence of language; however, it may be especially important to consider gesture subtypes.

Classifying gestures by subtype serves several functions. First, it provides a more detailed picture of gesture performance. Second, gestures likely serve a range of interpersonal and communicative functions which may differ by gesture type [Kendon, 1994; McNeill, 2005; Streeck, 1993]. Third, because gesture behavior is intrinsically tied to language [McNeill, 1985], partitioning of gestures allows for the measurement of different language‐related cognitive processes (e.g., abstract thinking, working memory). Finally, similar to spoken language, different gesture types are likely controlled by different neural networks and thus may offer a window into the neuropsychological mechanisms associated with gesture function and dysfunction [Bates and Dick, 2002; Jeannerod, 1994; Straube et al., 2011, 2014]. Direct investigation of gesture subtypes with respect to neural substrates may provide insight into the taxonomy of gesture, and perhaps their phylogeny as well. Investigating the neural substrates underlying the production of gesture subtypes will allow us to better understand the similarities and differences across these gestures. While they are all important for conveying and emphasizing linguistic content, an understanding of the distinct and overlapping neural substrates of different gestures may provide insight into the specific aspects of language they are most associated with and their associated cognitive processes.

The categorization of gesticulation often depends on motor coordination itself as well as the content or context of its co‐occurring speech. In contrast to other gesture subtypes, beat gestures lack abstract semantic content [Andric and Small, 2012]. Instead, beats place emphasis on the particular word or phrase they accompany with a baton movement that is often metronomic and is characterized by two movement phases (e.g., up/down, left/right) [Leonard and Cummins, 2011]. While they are temporally linked to spoken language and provide important emphasis on semantic information, beat gestures lack abstract components. These gestures can be thought of as a “rhythmical pulse” [Leonard and Cummins, 2011]. Indeed, the timing of beats can influence their identification and interpretation [Leonard and Cummins, 2011]. To our knowledge, the neural correlates of beat gestures have not been extensively investigated, with the exception of work by Hubbard et al. [2009]. Conversely, metaphoric gestures occur when an individual creates a physical representation of an abstract idea or concept, and these gestures provide additional semantic meaning that complements the ongoing speech [Andric and Small, 2012]. For example, a subject may delineate a space in front of them in reference to time, or may clench their fist in reference to pain. During the processing of metaphoric gestures, the brain recruits regions of the left hemisphere in the temporal lobe, the inferior frontal gyrus, and premotor cortex, along with some right hemisphere activations [Kircher et al., 2009; Straube et al., 2011]. These regions are similar to those involved in language processing [Green et al., 2009; Holle et al., 2008; Hubbard et al., 2009; Ozyürek et al., 2007; Willems and Hagoort, 2007; Xu et al., 2009].

As noted, the current literature has focused more broadly on metaphoric and other gesture subtypes as opposed to beat gestures [Leonard and Cummins, 2011]. On the surface level, nonsemantic emphatic beat gestures seem quite different from metaphoric gestures. Although they are associated with language and speech, their temporal and emphatic components are quite unique. We, therefore, might expect to see additional brain areas associated with beat gestures, but also given their close ties to speech, some overlap as well. In one of the few investigations of beat gestures, Hubbard et al. [2009] found that relative to nonsense gestures or no gestures, when beat gestures were perceived together with speech, there was stronger activity in the left superior temporal gyrus and sulcus. This supports the notion that language and gesture processing regions may also be involved with beat gesture perception and production.

However, given the temporal components of beat gestures, one might expect that the neural substrates of this gesture type would include those that have been previously implicated in temporal processing. One interesting possible candidate region is the cerebellum. The cerebellum has been implicated in timing, particularly with respect to the timing of movements [Ivry and Keele, 1989; Ivry and Spencer, 2004; Ivry et al., 2002; Spencer et al., 2003, 2007]. Furthermore, the timing of discrete events, such as distinct finger tapping, is especially linked to the cerebellum [Spencer et al., 2003, 2007]. The discrete nature of beat gestures, as well as their precise timing with respect to spoken language [Leonard and Cummins, 2011], suggest potential cerebellar contributions to their production. Furthermore, it is of note that the cerebellum has been implicated in language and language processing more generally [Ackermann, 2008; Ackermann et al., 2007; De Smet et al., 2013; Leiner et al., 1991; Lesage et al., 2012; Mariën et al., 2014; Murdoch, 2010], particularly with respect to the timing of the motor aspects of speech [Ackermann et al., 2007]. Investigating the cerebellum, as well as cortical areas that have been implicated in the processing of other co‐speech gesture subtypes might provide interesting insight into beat gestures. Furthermore, the cerebellum may be involved with metaphoric gestures as well, given its contributions to language more generally and the fact that metaphoric gestures are also timed to co‐occur with speech.

The goals of this study were twofold. First, we aimed to better understand the neural correlates of beat gestures, in comparison to metaphoric gestures. Beat gestures are relatively understudied, but are an interesting and important component of interpersonal communication. Understanding the relationships between this gesture type and the brain will help clarify the taxonomy of these gestures, and may provide insight into their role in language. There is theoretical evidence to indicate that beat gestures represent a distinct gesture type predicated on the idea that beat gestures are emphatic gestures, as opposed to other gesture types which support the semantic content of spoken language and may provide feedback to language systems to aid in thought and speech. Investigating the unique and overlapping neural substrates associated with these gestures stands to provide more direct evidence for this gesture taxonomy, and the uniqueness of beat gestures. Second, we were interested in investigating gesture production in a more natural setting (a taped interview), as opposed to the processing and perception of gestures, which has typically been investigated in the neuroimaging environment [e.g., Straube et al., 2011; Xu et al., 2009]. The focus on gesture processing is likely due to the confounds of movement and speech in the neuroimaging environment. Lindenberg et al. [2012] asked individuals to imagine producing a symbolic gesture while undergoing an fMRI scan, and while the associated brain activity differed from that seen during gesture processing, this study was limited as it did not involve the spontaneous production of communicative gestures during natural speech. Investigating spontaneous gesture production therefore requires recording speech in natural contexts outside of the brain scanner and relating it to brain morphology. Similarly, asking individuals to produce gestures in the scanner environment would not only risk motion artifacts, but would also remove the natural speech components that are coupled with gesturing. The context of speech is key for the production of naturalistic gestures. To our knowledge, there has only been one study to investigate gesture production and brain volume [Wartenburger et al., 2010]. This investigation implicated cortical thickness in the left temporal lobe and the inferior frontal gyrus; however, gestures with semantic content (representation gestures) were looked at broadly, and beat gestures were not included in these analyses [Wartenburger et al., 2010]. Thus, our work provides a novel investigation of beat and metaphoric gesture production in a naturalistic setting, with respect to brain morphology.

Here, using natural speech from recorded interviews, we investigated beat and metaphoric gesture production in relation to brain volume in both the cortex and cerebellum in a sample of healthy young adults. Targeted regions of interest in the cortex were selected based on prior work on metaphoric gesture processing [Kircher et al., 2009; Straube et al., 2011], and additional work investigating communicative gesture and gesture processing more generally [Frey, 2008; Xu et al., 2009]. Thus, our cortical regions included the left rostral middle frontal gyrus (MFG), left superior temporal gyrus (STG), left inferior frontal gyrus pars opercularis (IFGoperc), and left inferior parietal lobule (IPL). In the cerebellum, we focused on regions that have been implicated in discrete timing, which include the anterior cerebellum and superior vermis [Penhune et al., 1998; Spencer et al., 2007]. This resulted in two regions: the anterior cerebellum, consisting of bilateral lobules I–VI (I–IV are part of the vermis), and vermis lobule VI. We hypothesized that the relative number of beat gestures produced during a discrete time period would be positively associated with volume in both of our cerebellar regions of interest. In addition, because of the coupling of beat gestures with speech and language more generally, we also expected to see positive correlations with left hemisphere cortical volumes previously associated with gesture processing. In particular, we predicted a positive correlation with the STG, given the findings of Hubbard et al. [2009] implicating this region in beat gesture processing. With respect to metaphoric gestures, we hypothesized that they would be positively associated with brain volume in the cortical regions, given the findings of Wartenburger et al. [2010] and the existing functional imaging evidence [Kircher et al., 2009; Nelissen et al., 2010; Straube et al., 2011]. Wartenburger et al. [2010] demonstrated that individuals who produced representational gestures had greater cortical thickness. As such, we hypothesized that greater regional volume would be associated with the production of more gestures.

METHODS

Participants

Forty healthy subjects between the ages of 15 and 21 (mean ± standard deviation; 18.6 ± 1.7 years, 21 female) were recruited from the community using flyers, internet advertising, and email postings as part of a larger clinical investigation. Because this was part of a larger clinical investigation, an additional six young controls (12 and 13 years old) were also recruited for the purposes of age‐matching with the clinical group (for a total of 46 recruited participants). These six participants were not included in our analyses here due to the potential confounds associated with brain and cognitive development. Videotaped interviews were unavailable for gesture coding in three participants, and brain data were unavailable for an additional five participants due to contraindications to the scanner environment (e.g., braces, new piercings; n = 2) or failures in brain normalization or preprocessing (n = 3). Thus, our final sample included 32 participants (18.6 ± 1.7 years of age, 15 female). All study procedures were approved by the University of Colorado Institutional Review Board. Informed consent was obtained from all participants prior to study entry. If subjects were <18 years old, written consent was also obtained from parents. Exclusion criteria were any history of head injury, the presence of a neurological disorder, and any contraindication to the magnetic resonance imaging procedure. Additionally, having a first‐degree relative with a psychotic disorder or meeting criteria for an Axis I psychiatric disorder, as determined by the structured clinical interview for DSM‐IV (SCID), were grounds for exclusion. Of note, volumetric investigations of the cerebellum in a subset of these participants were undertaken as part of a control group in a clinical investigation with respect to motor learning [Dean et al., 2013], and a lifespan developmental investigation focusing on cognition [Bernard et al., 2015].
These participants also served as controls in a comparison of gesture‐language mismatch between healthy individuals and a clinical group [Millman et al., 2014].

This study took place within a larger investigation of motor behavior and risk for psychosis. Thus, in addition to the SCID, participants were also administered the structured interview for prodromal syndromes (SIPS), an instrument used to rate attenuated psychotic symptoms in the present age range. This measure further confirmed that the included individuals did not suffer from any psychopathology. Most importantly for our investigation here, gesture coding was completed during the SIPS interview. Interviews took place in a quiet laboratory setting equipped with video technology.

Gesture Coding

The coding scheme utilized was adapted from the Handbook of Methods in Nonverbal Behavior Research [Scherer and Ekman, 1982], and was used in two other studies by our group [Millman et al., 2014; Mittal et al., 2006]. Additional criteria for the coding of gesture subtypes were based off of McNeill [1992]. The gesture subtypes coded in this study were chosen because they represent unique categories of nonverbal communication [Kendon, 1994; McNeill, 2005; Streeck, 1993] and have been used in prior research [e.g., Leonard and Cummins, 2011; Straube et al., 2011]. Of note, metaphoric gestures were coded so as to exclude iconic gestures, consistent with McNeill's taxonomy [McNeill, 1992]. For all gesture coding, we used high definition video recordings of the interviews, which allowed coders to pause and slow down the video as needed. To document incidences of metaphoric gestures, raters first noted whether an abstract concept was present in the participant's speech. If present, raters then noted whether the speech was accompanied by a gesture. Raters documented the occurrence of a metaphoric gesture if a coherent relationship between the gesture and the corresponding lexical content was observed (e.g., a conduit made with the hands in reference to feelings). As noted above, beat gestures resemble a baton movement involving consecutive strokes of the hand(s) in at least two directions. Raters counted each such stroke as a single beat and, when necessary, used slow‐motion video to ensure an accurate tally of beat gestures.

For each subject, study staff rated the first 15 min of either the SIPS (n = 30) or SCID (n = 2) portion of the recorded interviews. Although our prior work comparing controls to clinical populations focused on coding the SIPS only [Millman et al., 2014], because the control individuals investigated here at times gave very simple one‐word answers to the SIPS questions (typically “no”), it was not ideal for eliciting gestures in several participants. Thus, in two individuals, we coded the SCID, as the first questions tap into depression and anxiety, which have higher base rates across the population. Raters began coding when the first question in the psychotic symptoms section of the SIPS was asked (the material includes questions concerning a range of topics such as unusual thoughts and perceptual experiences), or when the first question of the SCID was asked. For each study participant, the frequency of metaphoric and beat gestures produced during a 15‐min coding period was noted. Additionally, the total number of gestures for each subject was recorded, as was the total speech time. Raters were kept blind to the hypotheses of the current study. The average number and range of beat and metaphoric gestures, the total gestures, and total speech time are presented in Table 1.

Table 1.

The mean number and range of beat and metaphoric gestures, total number of gestures, and total speech time across the 32 participants included in our analyses

| | Beat gestures | Metaphoric gestures | Total gestures | Speech time (s) |
|---|---|---|---|---|
| All participants | 30.19 ± 33.94 (0–155) | 6.72 ± 6.02 (0–27) | 41.28 ± 40.62 (1–185) | 292.34 ± 134.15 (103–618) |
| SIPS (n = 30) | 29.50 ± 34.98 (0–155) | 6.60 ± 6.19 (0–27) | 40.47 ± 41.86 (1–185) | 294.90 ± 137.53 (103–618) |
| SCID (n = 2) | 40.5 ± 0.71 (40–41) | 8.5 ± 2.12 (7–10) | 53.5 ± 0.71 (53–54) | 254.00 ± 79.19 (198–310) |

Breakdowns for those coded with the SIPS and SCID are also provided. The mean and standard deviations are presented, with the range presented in parentheses.

As with our prior gesture studies, three independent raters underwent 3 months of training in which video recordings of structured interviews were rated for gesture behavior. Data collection began when Cronbach's alpha exceeded 0.80 for both gesture types. Raters then participated in regular reliability checks throughout the coding period. If alphas for any category of gesture fell below 0.80, raters ceased data collection and resumed training until the reliability again exceeded this value.
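The reliability criterion described above can be illustrated with a short sketch. This is a minimal Python example, assuming that each rater is treated as an "item" and each coded video as a "case"; the function name, the data layout, and the example counts are hypothetical and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of rater score lists.

    ratings: one list per rater, aligned so that position i in every
    list refers to the same video (hypothetical layout).
    """
    k = len(ratings)                              # number of raters
    rater_var = sum(variance(r) for r in ratings)  # sum of per-rater variances
    totals = [sum(scores) for scores in zip(*ratings)]  # summed score per video
    return k / (k - 1) * (1 - rater_var / variance(totals))


# Hypothetical beat gesture counts from three raters on four training videos.
example = [
    [10, 20, 30, 40],
    [12, 18, 33, 41],
    [9, 22, 28, 39],
]
alpha = cronbach_alpha(example)
ready_for_data_collection = alpha > 0.80  # threshold stated in the text
```

Under this layout, identical ratings from all raters yield an alpha of 1, and values fall as raters diverge.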

Structural Brain Imaging and Processing

A structural magnetic resonance imaging (MRI) scan for each participant was acquired using a 3‐Tesla TIM Trio Siemens MRI scanner with 12 channel parallel imaging. This scan was acquired as part of a larger imaging session that included additional structural and functional measures (diffusion tensor imaging and resting‐state BOLD), beyond the scope of our analysis here. Structural images were acquired using a T1‐weighted three‐dimensional magnetization prepared rapid gradient multiecho sequence (MPRAGE; sagittal plane; repetition time (TR) = 2,530 ms; echo times (TE) = 1.64 ms, 3.5 ms, 5.36 ms, 7.22 ms, 9.08 ms; GRAPPA parallel imaging factor of 2; 1 mm³ isotropic voxels, 192 interleaved slices; FOV = 256 mm; flip angle = 7°; time = 6:03 min). A turbo spin echo proton density (PD)/T2‐weighted acquisition (TSE; axial oblique aligned with anterior commissure‐posterior commissure line (AC‐PC line); TR = 3,720 ms; TE = 89 ms; GRAPPA parallel imaging factor 2; 0.9 × 0.9 mm voxels; FOV = 240 mm; flip angle = 120°; 77 interleaved 1.5 mm slices; time = 5:14 min) was acquired to check for incidental pathology. The total scan session lasted for approximately 45 min.

Lobular volumes for lobules I–IV, V, and VI, as well as vermis lobule VI were calculated using the lobular regions defined by the spatially unbiased infra‐tentorial template (SUIT) [Diedrichsen, 2006; Diedrichsen et al., 2009], using methods previously devised to investigate regional cerebellar volume [Bernard and Seidler, 2013b]. Lobules I–IV, V, and VI have been shown to cluster together based on their volumes as an anterior cerebellar region [Bernard and Seidler, 2013b], and as such these lobules were used here to calculate the volume for our anterior cerebellum region of interest. Furthermore, these regions are strongly implicated in discrete timing [Spencer et al., 2007]. Volumes were combined across both the left and right hemispheres to determine the volume of the anterior cerebellum. For all cerebellar structural analyses, the MPRAGE images were used. First, we created masks of each lobule and a mask for the whole cerebellum using the probabilistic SUIT atlas. Second, the cerebellum was extracted from the MPRAGE and separated from the rest of the brain using the SUIT toolbox (version 2.5.2) [Diedrichsen, 2006; Diedrichsen et al., 2009] implemented in SPM8 (Wellcome Department of Cognitive Neurology, London, UK; http://www.fil.ion.ucl.ac.uk). The extracted anatomical image was masked with the thresholded classification map that was produced during the isolation procedure. This resulted in a high‐resolution image of the cerebellum, excluding all surrounding cortical matter. Third, we normalized the SUIT cerebellum template to each individual's cerebellar anatomical image in native space using Advanced Normalization Tools (ANTS; Penn Image Computing & Science Lab, http://www.picsl.upenn.edu/ANTS/) [Avants et al., 2008]. We chose ANTS because its nonlinear warping algorithm has been shown to perform better than algorithms such as those used in SPM [Klein et al., 2009]. After applying the transformation to the SUIT cerebellum, the resulting warp vectors were applied to the individual lobular masks. The result was a mask of each lobule normalized to individual subject space.

Finally, these masks were loaded into MRICron (http://www.mccauslandcenter.sc.edu/mricro/mricron/index.html) and converted to volumes of interest. We overlaid the volumes of interest onto each individual subject's structural scan to inspect them. This ensured accurate registration. We then used MRICron to calculate the descriptive statistics for each lobule, providing us with the gray matter volume of each lobule in cubic centimeters. Notably, the methods used here are comparable to several recent automatic methods [Park et al., 2014; Weier et al., 2014], and the volumes derived from this method are consistent with those found using hand tracing and the automated approach of Weier et al. [2014].
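As a rough illustration of the final volume calculation, a lobular volume can be obtained by counting the voxels inside a mask and multiplying by the voxel volume. The sketch below is a minimal Python example under stated assumptions: the mask is a hypothetical flat sequence of 0/1 values, the default voxel volume of 1 mm³ is taken from the MPRAGE acquisition parameters, and the function name is illustrative. It is not the MRICron implementation.

```python
def mask_volume_cm3(mask_voxels, voxel_volume_mm3=1.0):
    """Volume of a binary mask in cubic centimeters.

    mask_voxels: iterable of voxel values, nonzero meaning "inside the
    lobule" (hypothetical flat layout, assumed for illustration).
    voxel_volume_mm3: volume of one voxel in cubic millimeters.
    """
    n_inside = sum(1 for value in mask_voxels if value > 0)
    return n_inside * voxel_volume_mm3 / 1000.0  # 1 cm^3 = 1000 mm^3


# Hypothetical mask with 2,500 voxels inside the region and 500 outside.
toy_mask = [1] * 2500 + [0] * 500
toy_volume = mask_volume_cm3(toy_mask)
```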

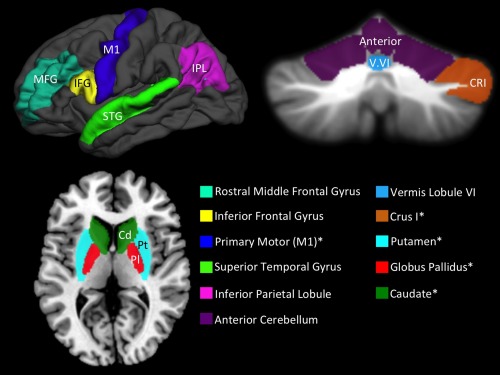

Regional cortical volumes (left STG, IPL, MFG, and IFGoperc) were automatically delineated on the MPRAGE using the Freesurfer suite of automated tools [Fischl et al., 2002]. The processing stream involved motion correction, removal of non‐brain tissue using a hybrid watershed/surface deformation procedure [Ségonne et al., 2004], automated Talairach transformation, segmentation of the subcortical white matter and deep gray matter volumetric structures [Fischl et al., 2001, 2004], intensity normalization [Sled et al., 1998], tessellation of the gray matter/white matter boundary, automated topology correction [Fischl et al., 2001; Ségonne et al., 2007], and surface deformation following intensity gradients. This process optimally places the gray/white and gray/cerebrospinal fluid borders at the location where the greatest shift in intensity defines the transition to the other tissue class [Dale and Sereno, 1993; Dale et al., 1999; Fischl and Dale, 2000]. Freesurfer returned separate volumes for all of our cortical regions of interest. The cortical and cerebellar regions of interest are presented in Figure 1. All Freesurfer segmentations were quality checked by three trained individuals to ensure accurate segmentation and labeling of cortical and subcortical regions.

Figure 1.

Cortical (upper left; left hemisphere) regions of interest as measured and delineated using Freesurfer, and cerebellar regions of interest (upper right; coronal view). Cerebellar volume was determined using semiautomated methods developed for investigating lobular cerebellar volume [Bernard and Seidler, 2013b]. Post hoc regions of interest in the basal ganglia (bottom left; axial view) were also delineated with Freesurfer. Asterisks indicate areas that were included as control regions in our post hoc analyses. Anterior, anterior cerebellum (lobules I–VI); Cd, caudate; CRI, Crus I; IFG, inferior frontal gyrus pars opercularis; IPL, inferior parietal lobule; M1, primary motor cortex; MFG, rostral middle frontal gyrus; Pl, globus pallidus (pallidum); Pt, putamen; STG, superior temporal gyrus; V.VI, vermis lobule VI.

To normalize the volumes of the cerebellar and cortical regions of interest from Freesurfer, we calculated the estimated total intracranial volume (TIV) for each participant. First, we segmented gray matter, white matter, and cerebrospinal fluid using the segment function in SPM8. The VBM8 (voxel based morphometry) toolbox (http://dbm.neuro.uni-jena.de/vbm/download/) was used to get the total volume for each of these tissue types, in each individual subject, in native space. While we used raw anatomical images in our analyses, the segmentation algorithm includes corrections for image intensity inhomogeneities. We calculated the sum of the gray matter, white matter, and cerebrospinal fluid volume for each individual to produce the estimated TIV. Recently, Ridgway et al. [2011] demonstrated that the SPM8 segmentation method implemented here does not differ in median TIV when compared with that calculated using manual methods, and the values were highly correlated with one another. Furthermore, although Freesurfer provides an estimated TIV value as well, the analyses of Ridgway et al. [2011] found that the SPM segmentation method had a median value closer to that of the manual methods than did Freesurfer. While these values did not differ significantly, we have chosen to use the SPM estimation for all brain volumes in these analyses to ensure consistency in our methods. Normalized volumes were calculated by dividing the regional volume by the estimated TIV. All regional volumes are presented as a percentage of the estimated TIV.
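The normalization described above amounts to two arithmetic steps, sketched here in Python. The function names and the example tissue volumes are hypothetical and chosen only to make the arithmetic easy to follow; they are not values from this sample.

```python
def estimated_tiv(gray_matter, white_matter, csf):
    """Estimated total intracranial volume as the sum of the three tissue volumes."""
    return gray_matter + white_matter + csf


def percent_of_tiv(regional_volume, tiv):
    """Regional volume expressed as a percentage of the estimated TIV."""
    return 100.0 * regional_volume / tiv


# Hypothetical volumes in cubic centimeters for a single participant.
tiv = estimated_tiv(gray_matter=700.0, white_matter=500.0, csf=300.0)
region_pct = percent_of_tiv(regional_volume=15.0, tiv=tiv)
```

All inputs must share the same unit, since the percentage is only meaningful when the regional volume and the TIV are measured on the same scale.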

Statistical Analysis

All statistical analyses were performed using IBM SPSS 22 (IBM Corporation, Armonk, NY, 2012). To investigate the relationships between gesture type and regional brain volume, we used partial correlations between each brain region and gesture type. These partial correlations controlled for age, the total number of gestures that each participant completed during the 15 min of the interview that were coded (to control for variability in communicative style and content during the structured interview), and the total speech time (to control for variability in speech rate and the amount of speech that was associated with these gestures). In cases where there were significant findings, to investigate whether or not relationships with brain volume differed by gesture type, the correlations were statistically compared using a Fisher's r‐to‐z transformation implemented in VassarStats. A z‐test was then computed to investigate the differences in the two correlations.
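The two statistical steps above, a partial correlation followed by a comparison of two correlations, can be sketched in Python as follows. This is a minimal illustration rather than the SPSS or VassarStats implementation: the function names are hypothetical, the partial correlation uses the standard recursive formula, and the comparison uses the Fisher r‐to‐z test for independent correlations, in which the sample sizes n1 and n2 must be supplied by the user.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Correlation of x and y controlling for a list of covariate sequences.

    Uses the recursive formula that removes one covariate at a time.
    """
    if not covariates:
        return pearson(x, y)
    *rest, z = covariates
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def compare_correlations(r1, n1, r2, n2):
    """Fisher r-to-z comparison of two correlations; returns (z, two-tailed p)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)        # Fisher transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))    # standard error of the difference
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))           # two-tailed normal p value
    return z, p


# Illustrative call: the n of 23 per correlation is an assumption,
# since only degrees of freedom are reported in the text.
z_value, p_value = compare_correlations(0.471, 23, -0.320, 23)
```

With these assumed sample sizes, the illustrative call gives z of approximately 2.67. In `partial_corr`, age, total gestures, and total speech time would be passed as the three covariate sequences.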

To explore possible confounding explanations for our findings, we completed several post hoc analyses using additional brain regions. We investigated relationships with the caudate, putamen, pallidum, primary motor cortex, and right Crus I of the cerebellum. Again we used partial correlations. However, given the post hoc nature of these analyses, we also used a Bonferroni correction to account for each of these additional brain regions, such that findings were significant if P < 0.01.
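The corrected threshold follows from dividing a family‐wise alpha by the number of post hoc regions. The Python sketch below assumes a family‐wise alpha of 0.05, which is not stated explicitly in the text but is consistent with five regions and a threshold of 0.01; the function names are hypothetical.

```python
def bonferroni_alpha(family_alpha, n_tests):
    """Per-test significance threshold under a Bonferroni correction."""
    return family_alpha / n_tests


def is_significant(p, alpha):
    """A finding counts as significant only if p is strictly below alpha."""
    return p < alpha


post_hoc_regions = [
    "caudate",
    "putamen",
    "pallidum",
    "primary motor cortex",
    "right Crus I",
]
# Family-wise alpha of 0.05 is an assumption (see lead-in).
corrected_alpha = bonferroni_alpha(0.05, len(post_hoc_regions))
```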

Finally, we completed additional post hoc analyses on deictic (pointing gestures) and iconic (gestures mirroring the semantic content more literally than metaphoric gestures; for example, a person talking about climbing and simultaneously making a climbing motion with hands) gestures [McNeill, 1992]. During the coding process, these gesture types were coded as well, although they were not the primary focus of our analyses. The coding scheme and guidelines paralleled those used for metaphoric and beat gestures. Given the additional gesture types and the post hoc nature of this analysis, we again used a Bonferroni correction, such that findings were significant if P < 0.01.

RESULTS

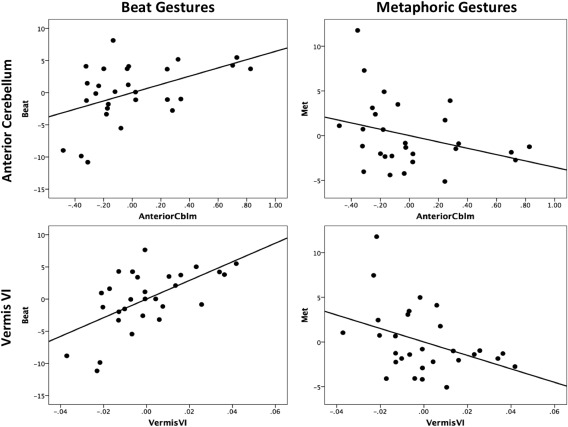

Controlling for age and the total number of gestures completed, anterior cerebellar volume was significantly correlated with beat gestures (r (23) = 0.471, P = 0.018; Fig. 2). Individuals with larger volume in this region produced more beat gestures. Metaphoric gestures showed a nonsignificant relationship with the anterior cerebellum in the opposite direction (r (23) = −0.320, P = 0.12). Importantly, we found that there was a significant difference between the two correlations (z = 2.67, P < 0.01). A similar pattern was seen when we investigated vermis lobule VI. As in the anterior cerebellum, larger volume in vermis lobule VI was associated with more beat gestures produced during the coding period (r (24) = 0.604, P = 0.001) when controlling for age and total number of gestures, and a strong trend in the opposite direction was seen for metaphoric gestures (r (24) = −0.387, P = 0.05). Again, the correlations with the two gesture types in this cerebellar region were significantly different (z = 3.59, P < 0.001).

Figure 2.

Partial correlations between the total number of beat (left) and metaphoric (right) gestures (vertical axes) and regional volume (as a % of TIV) in the cerebellum. Residuals, controlling for age, the total number of gestures completed, and total speech time are plotted. Both correlations with beat gestures are statistically significant. There is a significant negative relationship between the number of metaphoric gestures and vermis VI, controlling for age, the total gestures produced during the coding period, and total speech time. Negative values indicate smaller volume or fewer gestures. The negative values are present due to the fact that these are the residuals from our partial correlations after controlling for the total number of gestures, speech time, and age.

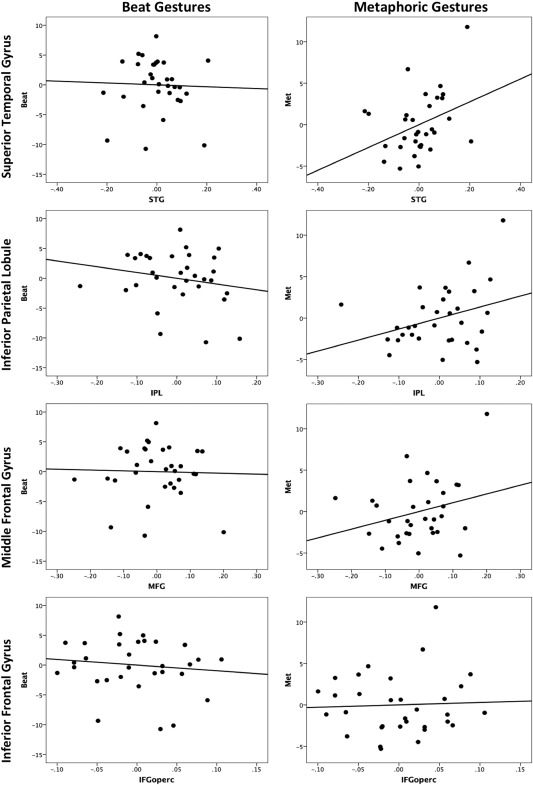

Differing relationships between regional brain volume and beat and metaphoric gestures were also seen when we investigated our cortical regions of interest. Scatterplots illustrating the partial correlations between gesture and cortical brain regions are presented in Figure 3. While there was no relationship between beat gestures and volume in the STG (r (27) = −0.033, P > 0.8), there was a marginally significant relationship, opposite in sign, for metaphoric gestures (r (27) = 0.358, P = 0.056). Thus, greater STG volume is associated with production of more metaphoric gestures. The difference between these two correlations, however, was only trend level (z = −1.41, P = 0.1).

Figure 3.

Partial correlations between the total number of beat (left) and metaphoric (right) gestures (vertical axes) and regional volume (as a % of TIV) in the cortex. Residuals, controlling for age, the total number of gestures completed, and total speech time are plotted. Controlling for age and total number of gestures completed, there is a marginally significant correlation between metaphoric gestures and STG volume, and the relationships with both the IPL and MFG are trend‐level. Beat gestures were not associated with volume in any of the cortical regions of interest. Negative values indicate smaller volume or fewer gestures. The negative values are present due to the fact that these are the residuals from our partial correlations after controlling for the total number of gestures, speech time, and age.

In the IPL, again, there was a nonsignificant although negative relationship between volume and beat gestures (r (27) = −0.195, P = 0.3), and a positive trend with metaphoric gestures (r (27) = 0.327, P = 0.08). This pattern matches that in the STG, and there was a marginally significant difference between the two correlations (z = −2.04, P = 0.06), although the metaphoric finding is in itself only a trend. The rostral MFG also showed this pattern with a trend‐level positive relationship with metaphoric gestures, and no relationship with beat gestures (beat: r (27) = −0.030, P > 0.8; metaphoric: r (27) = 0.276, P = 0.1), although these correlations did not differ significantly (z = −1.09, P = 0.27). Finally, in IFGoperc, there were no significant relationships with either beat (r (27) = −0.117, P > 0.5) or metaphoric (r (27) = 0.04, P > 0.8) gestures.

Post Hoc Analyses

The results described above provide evidence for a dissociation between the neural substrates of beat and metaphoric gesture production. That is, beat gestures are positively associated with cerebellar volumes, and metaphoric gestures are positively associated with cortical regions previously implicated in language and gesture processing (the STG, and to some extent the IPL and rostral MFG as well). However, we pursued post hoc analyses because beat gestures may also be associated with motor cortical regions that would be important for their production, or with the basal ganglia, which have been implicated in beat processing, perception, and timing [Grahn, 2009; Grahn and Brett, 2007; Grahn and Rowe, 2009; Harrington et al., 1998; Ivry and Spencer, 2004; Nenadic et al., 2003]. Such associations are plausible given that these gestures are carefully timed with respect to spoken language [Leonard and Cummins, 2011]. Furthermore, the lateral cerebellum, particularly in the region of right Crus I, has been implicated in language [Koziol et al., 2013; Mariën et al., 2014; Stoodley and Schmahmann, 2009a; Stoodley et al., 2012], and as such it may also be positively associated with metaphoric gestures. Thus, we completed post hoc partial correlation analyses of the left primary motor cortex, caudate, putamen, globus pallidus, and right Crus I (corrected using estimated TIV; Fig. 1). Cortical and basal ganglia volumes were from Freesurfer, as described above, and the volume of right Crus I was calculated in a manner identical to the other cerebellar regions of interest. Again, we controlled for age and the total number of gestures made during the coding period.

None of the additional cortical or basal ganglia regions were associated with beat gestures (for all correlations: r (27) > −0.225, all Ps > 0.2), or with metaphoric gestures (for all correlations: r (27) < 0.235, all Ps > 0.2). Thus, the relationship between regional cerebellar volumes and beat gestures appears to be specific, and does not extend to the cortical and subcortical regions that are implicated in beat/timing and motor processing. Right cerebellar Crus I was not associated with metaphoric gestures (r (24) = −0.214, P = 0.3). There was a positive association with beat gestures, but it was not significant after Bonferroni correction (r (24) = 0.431, P = 0.032). Although not statistically significant, this trend suggests that the role of the cerebellum with respect to language‐related gestures may be limited to beat gestures.

Finally, to further probe the gesture taxonomy with respect to associations with brain volume, we investigated partial correlations between deictic (average of 2.72 ± 2.85) and iconic (average of 1.65 ± 1.86) gestures and our primary brain regions of interest (anterior cerebellum, Vermis VI, STG, IPL, MFG, IFG). Paralleling our primary analyses above, we controlled for age, total number of gestures produced, and total speech time. Iconic gestures were negatively associated with the STG, but this was not significant after correction for multiple comparisons (r (23) = −0.43, P = 0.03), and there were no significant associations with any of our other primary brain regions of interest (for all correlations, r (23) > −0.29, P > 0.15). There were trend‐level negative relationships between deictic gestures and Vermis VI (r (23) = −0.35, P = 0.08) and MFG (r (23) = −0.34, P = 0.09), although again these were not significant after correction for multiple comparisons. There were no significant relationships with our other regions of interest (r (23) > −0.24, P > 0.24). Together, this further supports the unique nature of beat gestures, although more work is needed to better understand the neural underpinnings of iconic and deictic gestures, as they are not strongly associated with any of the brain regions investigated here.

DISCUSSION

Here, we investigated both beat and metaphoric gestures with respect to regional brain volumes, in a hypothesis‐driven manner. This novel investigation of gesture production combines naturalistic speech from interviews with structural neuroimaging, taking advantage of novel analysis methods that allow for the investigation of cerebellar subregions [Bernard and Seidler, 2013b]. This study provides important new results about the neural underpinnings of gesture production, which is relatively understudied due to the challenges of brain imaging during co‐speech gesture production. Our results demonstrate that beat and metaphoric gestures are differentially associated with the cerebellum and cortical brain regions, respectively. In particular, beat gestures were correlated with volume in cerebellar regions that are associated with motor functioning and motor networks [Bernard and Seidler, 2013a; Bernard et al., 2012; Stoodley and Schmahmann, 2009a], as well as beat and timing [Penhune et al., 1998; Spencer et al., 2007]. Somewhat surprisingly, and contrary to our initial hypothesis, there were no associations between beat gestures and cortical regions, particularly the STG, previously implicated in language and gesture processing [Frey, 2008; Hubbard et al., 2009; Kircher et al., 2009; Straube et al., 2011; Xu et al., 2009]. However, as this is the first investigation to examine the neural substrates of the production of this gesture subtype, this information is important in its own right. Conversely, metaphoric gestures were correlated with cortical brain regions that have previously been linked to gesture production and understanding [Kircher et al., 2009; Lindenberg et al., 2012; Straube et al., 2011; Wartenburger et al., 2010], as well as language more generally [Hubbard et al., 2009; Xu et al., 2009].
This finding suggests that beat gestures are a unique subtype of gesture mediated by the cerebellum, whereas metaphoric gestures are related to cortical regions more classically associated with language and gesture processing.

This is particularly interesting given the cerebellum's role in motor‐speech planning and the timing of vocalizations [Ackermann et al., 2007], and the apparent function of beat gestures, which appear to keep time with the rhythm of speech to emphasize certain words or phrases. This finding is important not only for our understanding of the taxonomy of gesture and gesture subtypes, but also for our understanding of the role of the cerebellum in language. Our results provide important evidence in support of the theoretical notion that beat gestures are a unique gesture subtype, related to timing as opposed to the semantic content of language. Unlike metaphoric gestures, which are tied to cortical language regions and the semantic content of speech, beat gestures do not share any of these language substrates and are uniquely tied to the cerebellum.

Beat gestures are distinct from metaphoric gestures not only due to their lack of semantic context, but also with respect to their neural correlates. The production of beat gestures seems to be uniquely related to cerebellar volume, particularly in regions that have been previously implicated in the timing of discrete movements [Penhune et al., 1998; Spencer et al., 2007]. This is not surprising, given that beat gestures themselves are indeed discrete movements. Our post hoc analyses revealed that the basal ganglia, which have also been implicated in timing [Grahn, 2009; Grahn and Brett, 2007], are not associated with the production of beat gestures, nor is the primary motor cortex. Coupled with the null findings in our hypothesized language and gesture related areas, particularly the STG, this supports the idea that beat gestures are a unique gesture type, reliant specifically on the cerebellum. The precise timing of beat gestures with respect to the spoken language they are meant to emphasize [Leonard and Cummins, 2011], coupled with their relationship with cerebellar regions associated with timing, suggests that beat gestures may be an artifact of an internal speech‐related timing mechanism.

Indeed, it has been suggested that the cerebellum is involved in the sequencing and production of speech vocalizations [Ackermann, 2008; Ackermann et al., 2007], particularly with respect to the timing of these vocalizations. It may be that beat gestures are an artifact of this language timing process, and provide further emphasis on key words or syllables. While there have been mixed results in the more recent literature regarding the role of the cerebellum in speech production and sequencing, it is known that the cerebellum plays a role in speech and language broadly, but more specifically in speech motor control [Manto et al., 2012; Mariën and Beaton, 2014; Mariën et al., 2014], and indeed it has been included as an important region in models of speech production [Hickok, 2012]. Thus, these beat gestures might be a more overt indicator of the precise timing involved in speech production, but are not related to the semantic content of speech further supporting their distinction as a unique gesture subtype. This is also consistent with the role of the cerebellum in motor timing more generally [Penhune et al., 1998; Spencer et al., 2007]. The trend‐level correlation between beat gestures and Crus I revealed in our post hoc analyses seems to support this to some degree. If the cerebellum is important for the timing of speech and vocalizations [Ackermann, 2008; Ackermann et al., 2007], and speech production more generally, we would expect to see relationships in areas of the cerebellum that have been implicated in language and language processing, as is the case with Crus I. Further investigation of the cerebellum and beat gestures is clearly warranted.

More generally, our findings also provide interesting new insight into the role of the cerebellum in language, particularly with respect to co‐speech gestures. The cerebellum has been implicated in language with respect to the timing of speech as discussed above, but also with respect to language processing and verbal working memory [Ackermann, 2008; Ackermann et al., 2007; Chen and Desmond, 2005; Koziol et al., 2013; Mariën et al., 2014; Stoodley and Schmahmann, 2009b]. However, the specific role of the cerebellum in language is not entirely clear, and remains open to speculation [Mariën et al., 2014]. Our findings indicate that although the cerebellum seems to be involved in motor speech planning and the timing of vocalizations [Ackermann, 2008; Ackermann et al., 2007], the structure does not seem to be involved in the production of co‐speech gestures with abstract semantic content. Where associations between metaphoric gestures and the cerebellum were present, they were in an unexpected direction, and future work would benefit from investigating metaphoric gesture and the cerebellum further, particularly with respect to Vermis VI. This region showed a counterintuitive negative relationship with metaphoric gestures, which is difficult to interpret. The relationships between gesture and the cerebellum are largely related to beat gestures, and we suggest that this may be an artifact of the role of the cerebellum in the timing of speech vocalization.

Past work investigating gesture has largely been focused on the processing of gesture. That is, participants are watching individuals speak while performing gestures, or perform gestures without speech. These studies have revealed that gesture processing is supported by a network of left hemisphere regions (with some contributions from the right hemisphere), including the IFG, temporal lobe regions, MFG, and IPL [Hubbard et al., 2009; Kircher et al., 2009; Straube et al., 2011; Xu et al., 2009]. Work investigating gesture production with respect to the brain, particularly in a more naturalistic setting, has been much more limited [Wartenburger et al., 2010]. In part, this is due to the challenges of having an individual speak and gesticulate in an MRI scanner, given the resulting movement confounds. As such, this type of analysis is largely limited to correlations between gesture production and brain morphology, as done here and by Wartenburger et al. [2010]. While participants could produce gestures when signaled in the scanner, this takes away important context related to speech, and also limits the naturalistic aspects of gesture and language more generally.

Our findings here extend our understanding of metaphoric gestures to indicate that production of metaphoric gestures is associated with brain volumes in regions that have been linked to gesture processing, particularly the STG, but also to some extent the IPL and MFG. However, it is crucial to note that the IPL and MFG associations were only trends, and the relationship with the STG was marginally significant (P = 0.056). Greater volume in these regions was associated with more gesture production. Gesture discrimination is positively associated with gray matter in the STG [Nelissen et al., 2010], and STG volume also seems to be associated with the production of metaphoric gestures. Thus, this network of brain regions that is important for gesture processing also seems to be linked to gesture production, at least to a degree. Future work taking advantage of naturalistic speech outside of a clinical setting will provide important insights into the associations between gray matter volume and metaphoric gesture production.

With that said, it is of note that we did not find any associations between metaphoric gestures and IFGoperc. Prior work investigating metaphoric gestures has implicated this region during metaphoric gesture processing [Kircher et al., 2009; Straube et al., 2011]. However, this region may be more important for the integration of speech and gesture information [Willems and Hagoort, 2007; Willems et al., 2009]. Furthermore, Straube et al. [2011] noted that activation in the IFG was more common in their conditions where the gesture and speech were mismatched. The authors speculate that this may be due to conflict monitoring and conflict resolution of the gesture‐speech mismatch [Straube et al., 2011]. As such, despite the semantic content of metaphoric gestures, this region may not be involved in their production, and is perhaps more important for gesture processing within the context of interpersonal communication.

Overall, our findings provide important new insights into relationships between both the cerebellum and regions of the left cortical hemisphere and gesture production. However, there are several limitations to consider. First, our population includes a wide age range, from 15 to 21 years of age. Although we controlled for the effects of age in all of our analyses, it is important to note that this time frame is also associated with further cortical and cerebellar development [Bernard et al., 2015; Gogtay et al., 2004; Hedman et al., 2013]. Furthermore, gesture production may differ further in older populations. Second, our hypotheses regarding greater volume and gesture production were determined based on the little existing work in this domain [Wartenburger et al., 2010]. While we suggest that greater regional volume allows for more use of co‐speech gestures, the directionality of this suggestion could certainly be reversed. It may also be that in individuals that gesticulate more, brain volume increases due to use, consistent with the notion of use‐dependent plasticity [Hänggi et al., 2010; Imfeld et al., 2009]. Testing this notion directly in our dataset is impossible, but it is important to consider both possible interpretations. Relatedly, the production of gesture, particularly metaphoric gesture, is tied to the semantic content of speech. Although we looked at the relative use of metaphoric and beat gestures, the interview format may not be ideal for producing the semantic content of speech needed to elicit these gestures. Indeed, as seen in Table 1, metaphoric gestures were not produced as frequently as beat gestures. Different interview contexts may be needed to produce more of this speech to better investigate metaphoric gestures. However, this also supports the notion that beat gestures are a distinct gesture subtype. 
Given the lack of semantic content, and our proposal that beat gestures are an artifact of cerebellar contributions to speech timing, having more beat gestures regardless of speech type and context would be expected. Thus, while metaphoric gestures are likely dependent on speech context and the use of metaphoric language, beat gestures would be present regardless of context. Again, future analyses in different interview contexts are needed to test this notion directly. Similarly, our analyses depended on a taped interview, as opposed to a controlled task where all participants completed the same gesture, or set of gestures. As such, additional variability and noise may have been introduced into our dataset. However, we believe the more naturalistic interview is a useful approach given the context of gesture and speech in everyday life, although further work using a more controlled paradigm is necessary for replication and a better understanding of gesture production. Finally, our analyses were restricted to gray matter volume in healthy individuals, and were limited to only gesture production. However, both we and others have recently demonstrated that gestures differ in important clinical populations (e.g., psychosis and psychosis risk) [Millman et al., 2014; Straube et al., 2013, 2014], although this work has not included beat gestures. Investigating beat gestures in patient populations with respect to brain volumes in the future may be informative for our understanding of both the disease processes and co‐speech gestures, particularly in psychosis populations given their known cerebellar motor deficits [Bernard and Mittal, 2014, 2015; Bernard et al., 2014; Dean et al., 2013].
In addition, the inclusion of both white matter and resting state connectivity analyses is also likely to yield important new findings in future work, as both structural and functional connectivity between the IFG and temporal lobe regions has been implicated in gesture processing [Nelissen et al., 2010; Straube et al., 2014]. Considering these cortical and cerebellar regions as networks stands to provide important new insight into our understanding of gesture production.

CONCLUSIONS

Here, we provide evidence to indicate that beat gestures may be an especially unique gesture type. Not only do they lack semantic content and instead provide emphasis during communication, but they are uniquely associated with volume in regions of the cerebellum associated with the timing of discrete movements. Follow‐up control analyses of an additional cerebellar region, as well as the basal ganglia and primary motor cortex, provide further support for this idea. While there was a trend to implicate additional cerebellar regions in beat gesture production, both the cortical and subcortical regions associated with timing and motor production were not associated with these gestures (nor were they associated with metaphoric gestures). Somewhat surprisingly given their links to speech, there were no relationships in cortical regions previously associated with gesture processing and shown here to be linked to metaphoric gesture production. We suggest that beat gestures may be an artifact and overt representation of the role of the cerebellum in the timing of speech vocalizations. Furthermore, we found that left hemisphere regions that have previously been implicated in gesture processing are also related to metaphoric gesture production during naturalistic communication. Finally, these results indicate that although the cerebellum has been implicated in language (processing, verbal working memory, sequencing, and timing of vocalizations), the anterior and right lateral regions of the structure are not associated with metaphoric gesture. That is, these regions are not involved in the production of gestures with semantic content. Not only do these findings extend our knowledge of the left hemisphere language and gesture regions to indicate involvement in gesture production, but they provide important information regarding the taxonomy of co‐speech gestures.
Beat gestures are distinct from metaphoric gestures not only due to their lack of semantic context, but also with respect to their neural correlates.

ACKNOWLEDGMENT

The authors wish to thank Tina Gupta and Johana Mejias for their time and help with the gesture coding process.

REFERENCES

- Ackermann H (2008): Cerebellar contributions to speech production and speech perception: Psycholinguistic and neurobiological perspectives. Trends Neurosci 31:265–272.

- Ackermann H, Mathiak K, Riecker A (2007): The contribution of the cerebellum to speech production and speech perception: Clinical and functional imaging data. Cerebellum 6:202–213.

- Andric M, Small SL (2012): Gesture's neural language. Front Psychol 3:99.

- Avants BB, Epstein CL, Grossman M, Gee JC (2008): Symmetric diffeomorphic image registration with cross‐correlation: Evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal 12:26–41.

- Bates E, Dick F (2002): Language, gesture, and the developing brain. Dev Psychobiol 40:293–310.

- Bernard JA, Mittal VA (2014): Cerebellar‐motor dysfunction in schizophrenia and psychosis‐risk: The importance of regional cerebellar analysis approaches. Front Psychiatry Schizophr 5:1–14.

- Bernard JA, Seidler RD (2013a): Cerebellar contributions to visuomotor adaptation and motor sequence learning: An ALE meta‐analysis. Front Hum Neurosci 7:27.

- Bernard JA, Seidler RD (2013b): Relationships between regional cerebellar volume and sensorimotor and cognitive function in young and older adults. Cerebellum 12:721–737.

- Bernard JA, Seidler RD, Hassevoort KM, Benson BL, Welsh RC, Wiggins JL, Jaeggi SM, Buschkuehl M, Monk CS, Jonides J, Peltier SJ (2012): Resting state cortico‐cerebellar functional connectivity networks: A comparison of anatomical and self‐organizing map approaches. Front Neuroanat 6:31.

- Bernard JA, Dean DJ, Kent JS, Orr JM, Pelletier‐Baldelli A, Lunsford‐Avery J, Gupta T, Mittal VA (2014): Cerebellar networks in individuals at ultra high‐risk of psychosis: Impact on postural sway and symptom severity. Hum Brain Mapp 35:4064–4078.

- Bernard JA, Leopold DR, Calhoun VD, Mittal VA (2015): Regional cerebellar volume and cognitive function from adolescence to late middle age. Hum Brain Mapp 36:1102–1120.

- Bernard JA, Mittal VA (2015): Dysfunctional activation of the cerebellum in schizophrenia: An ALE meta‐analysis. Clin Psychol Sci 3:545–566.

- Chen SHA, Desmond JE (2005): Cerebrocerebellar networks during articulatory rehearsal and verbal working memory tasks. Neuroimage 24:332–338.

- Cocks N, Sautin L, Kita S, Morgan G, Zlotowitz S (2009): Gesture and speech integration: An exploratory study of a man with aphasia. Int J Lang Commun Disord 44:795–804.

- Cocks N, Dipper L, Middleton R, Morgan G (2011): What can iconic gestures tell us about the language system? A case of conduction aphasia. Int J Lang Commun Disord 46:423–436.

- Dale AM, Sereno MI (1993): Improved localization of cortical activity by combining EEG and MEG with MRI cortical surface reconstruction: A linear approach. J Cogn Neurosci 5:162–176.

- Dale AM, Fischl B, Sereno MI (1999): Cortical surface‐based analysis. I. Segmentation and surface reconstruction. Neuroimage 9:179–194.

- Dean DJ, Bernard JA, Orr JM, Pelletier‐Baldelli A, Gupta T, Carol EE, Mittal VA (2013): Cerebellar morphology and procedural learning impairment in neuroleptic‐naive youth at ultrahigh risk of psychosis. Clin Psychol Sci 2:152–164.

- De Smet HJ, Paquier P, Verhoeven J, Mariën P (2013): The cerebellum: Its role in language and related cognitive and affective functions. Brain Lang 127:334–342.

- Diedrichsen J (2006): A spatially unbiased atlas template of the human cerebellum. Neuroimage 33:127–138.

- Diedrichsen J, Balsters JH, Flavell J, Cussans E, Ramnani N (2009): A probabilistic MR atlas of the human cerebellum. Neuroimage 46:39–46.

- Fischl B, Dale AM (2000): Measuring the thickness of the human cerebral cortex from magnetic resonance images. Proc Natl Acad Sci USA 97:11050–11055.

- Fischl B, Liu A, Dale AM (2001): Automated manifold surgery: Constructing geometrically accurate and topologically correct models of the human cerebral cortex. IEEE Trans Med Imaging 20:70–80.

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, van der Kouwe A, Killiany R, Kennedy D, Klaveness S, Montillo A, Makris N, Rosen B, Dale AM (2002): Whole brain segmentation: Automated labeling of neuroanatomical structures in the human brain. Neuron 33:341–355.

- Fischl B, Salat DH, van der Kouwe AJW, Makris N, Ségonne F, Quinn BT, Dale AM (2004): Sequence‐independent segmentation of magnetic resonance images. Neuroimage 23 Suppl 1:S69–84.

- Frey SH (2008): Tool use, communicative gesture and cerebral asymmetries in the modern human brain. Philos Trans R Soc Lond B Biol Sci 363:1951–1957.

- Gogtay N, Giedd JN, Lusk L, Hayashi KM, Greenstein D, Vaituzis AC, Nugent TF, Herman DH, Clasen LS, Toga AW, Rapoport JL, Thompson PM (2004): Dynamic mapping of human cortical development during childhood through early adulthood. Proc Natl Acad Sci USA 101:8174–8179.

- Grahn JA (2009): The role of the basal ganglia in beat perception: Neuroimaging and neuropsychological investigations. Ann NY Acad Sci 1169:35–45.

- Grahn JA, Brett M (2007): Rhythm and beat perception in motor areas of the brain. J Cogn Neurosci 19:893–906.

- Grahn JA, Rowe JB (2009): Feeling the beat: Premotor and striatal interactions in musicians and nonmusicians during beat perception. J Neurosci 29:7540–7548.

- Green A, Straube B, Weis S, Jansen A, Willmes K, Konrad K, Kircher T (2009): Neural integration of iconic and unrelated coverbal gestures: A functional MRI study. Hum Brain Mapp 30:3309–3324.

- Hänggi J, Koeneke S, Bezzola L, Jäncke L (2010): Structural neuroplasticity in the sensorimotor network of professional female ballet dancers. Hum Brain Mapp 31:1196–1206.

- Harrington DL, Haaland KY, Hermanowicz N (1998): Temporal processing in the basal ganglia. Neuropsychology 12:3–12.

- Hedman AM, van Haren NEM, van Baal CGM, Kahn RS, Hulshoff Pol HE (2013): IQ change over time in schizophrenia and healthy individuals: A meta‐analysis. Schizophr Res 146:201–208.

- Hickok G (2012): Computational neuroanatomy of speech production. Nat Rev Neurosci 13:135–145.

- Holle H, Gunter TC, Rüschemeyer S‐A, Hennenlotter A, Iacoboni M (2008): Neural correlates of the processing of co‐speech gestures. Neuroimage 39:2010–2024.

- Hubbard AL, Wilson SM, Callan DE, Dapretto M (2009): Giving speech a hand: Gesture modulates activity in auditory cortex during speech perception. Hum Brain Mapp 30:1028–1037.

- Imfeld A, Oechslin MS, Meyer M, Loenneker T, Jäncke L (2009): White matter plasticity in the corticospinal tract of musicians: A diffusion tensor imaging study. Neuroimage 46:600–607.

- Iverson JM, Goldin‐Meadow S (1998): Why people gesture when they speak. Nature 396:228.

- Ivry RB, Keele SW (1989): Timing functions of the cerebellum. J Cogn Neurosci 1:136–152.

- Ivry RB, Spencer RMC (2004): The neural representation of time. Curr Opin Neurobiol 14:225–232.

- Ivry RB, Spencer RMC, Zelaznik HN, Diedrichsen J (2002): The cerebellum and event timing. Ann NY Acad Sci 978:302–317.

- Jeannerod M (1994): The representing brain: Neural correlates of motor intention and imagery. Behav Brain Sci 17:187–201.

- Kendon A (1994): Do gestures communicate? A review. Res Lang Soc Interact 27:175–200.

- Kircher T, Straube B, Leube D, Weis S, Sachs O, Willmes K, Konrad K, Green A (2009): Neural interaction of speech and gesture: Differential activations of metaphoric co‐verbal gestures. Neuropsychologia 47:169–179.

- Klein A, Andersson J, Ardekani BA, Ashburner J, Avants B, Chiang M‐C, Christensen GE, Collins DL, Gee J, Hellier P, Song JH, Jenkinson M, Lepage C, Rueckert D, Thompson P, Vercauteren T, Woods RP, Mann JJ, Parsey RV (2009): Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. Neuroimage 46:786–802.

- Koziol LF, Budding D, Andreasen N, D'Arrigo S, Bulgheroni S, Imamizu H, Ito M, Manto M, Marvel C, Parker K, Pezzulo G, Ramnani N, Riva D, Schmahmann J, Vandervert L, Yamazaki T (2013): Consensus paper: The cerebellum's role in movement and cognition. Cerebellum 13:151–177.

- Lanyon L, Rose ML (2009): Do the hands have it? The facilitation effects of arm and hand gesture on word retrieval in aphasia. Aphasiology 23:809–822.

- Lausberg H, Cruz RF, Kita S, Zaidel E, Ptito A (2003): Pantomime to visual presentation of objects: Left hand dyspraxia in patients with complete callosotomy. Brain 126:343–360.

- Leiner HC, Leiner AL, Dow RS (1991): The human cerebro‐cerebellar system: Its computing, cognitive, and language skills. Behav Brain Res 44:113–128.

- Leonard T, Cummins F (2011): The temporal relation between beat gestures and speech. Lang Cogn Process 26:1457–1471.

- Lesage E, Morgan BE, Olson AC, Meyer AS, Miall RC (2012): Cerebellar rTMS disrupts predictive language processing. Curr Biol 22:R794–R795.

- Lindenberg R, Uhlig M, Scherfeld D, Schlaug G, Seitz RJ (2012): Communication with emblematic gestures: Shared and distinct neural correlates of expression and reception. Hum Brain Mapp 33:812–823.

- Manto M, Bower JM, Conforto AB, Delgado‐García JM, da Guarda SNF, Gerwig M, Habas C, Hagura N, Ivry RB, Mariën P, Molinari M, Naito E, Nowak DA, Oulad Ben Taib N, Pelisson D, Tesche CD, Tilikete C, Timmann D (2012): Consensus paper: Roles of the cerebellum in motor control—The diversity of ideas on cerebellar involvement in movement. Cerebellum 11:457–487.

- Mariën P, Beaton A (2014): The enigmatic linguistic cerebellum: Clinical relevance and unanswered questions on nonmotor speech and language deficits in cerebellar disorders. Cerebellum Ataxias 1:12.

- Mariën P, Ackermann H, Adamaszek M, Barwood CHS, Beaton A, Desmond J, De Witte E, Fawcett AJ, Hertrich I, Küper M, Leggio M, Marvel C, Molinari M, Murdoch BE, Nicolson RI, Schmahmann JD, Stoodley CJ, Thürling M, Timmann D, Wouters E, Ziegler W (2014): Consensus paper: Language and the cerebellum: An ongoing enigma. Cerebellum 13:386–410.

- McNeill D (1985): So you think gestures are nonverbal? Psychol Rev 92:350–371.

- McNeill D (1992): Hand and Mind. Chicago: The University of Chicago Press.

- McNeill D (2005): Gesture and Thought. Chicago: The University of Chicago Press.

- McNeill D, Pedelty LL (1995): Right brain and gesture. In: Emmorey K, Reilly JS, editors. Language, Gesture, and Space. Hillsdale, NJ: Erlbaum; pp 63–85.

- Millman ZB, Goss J, Schiffman J, Mejias J, Gupta T, Mittal VA (2014): Mismatch and lexical retrieval gestures are associated with visual information processing, verbal production, and symptomatology in youth at high risk for psychosis. Schizophr Res 158:64–68.

- Mittal VA, Tessner KD, McMillan AL, Delawalla Z, Trotman HD, Walker EF (2006): Gesture behavior in unmedicated schizotypal adolescents. J Abnorm Psychol 115:351–358.

- Murdoch BE (2010): The cerebellum and language: Historical perspective and review. Cortex 46:858–868.

- Nelissen N, Pazzaglia M, Vandenbulcke M, Sunaert S, Fannes K, Dupont P, Aglioti SM, Vandenberghe R (2010): Gesture discrimination in primary progressive aphasia: The intersection between gesture and language processing pathways. J Neurosci 30:6334–6341.

- Nenadic I, Gaser C, Volz H‐P, Rammsayer T, Häger F, Sauer H (2003): Processing of temporal information and the basal ganglia: New evidence from fMRI. Exp Brain Res 148:238–246.

- Özyürek A, Willems RM, Kita S, Hagoort P (2007): On‐line integration of semantic information from speech and gesture: Insights from event‐related brain potentials. J Cogn Neurosci 19:605–616.

- Park MTM, Pipitone J, Baer L, Winterburn JL, Shah Y, Chavez S, Schira MM, Lobaugh NJ, Lerch JP, Voineskos AN, Mallar Chakravarty M (2014): Derivation of high‐resolution MRI atlases of the human cerebellum at 3T and segmentation using multiple automatically generated templates. Neuroimage 95:217–231.

- Penhune VB, Zatorre RJ, Evans AC (1998): Cerebellar contributions to motor timing: A PET study of auditory and visual rhythm reproduction. J Cogn Neurosci 10:752–765.

- Ridgway G, Barnes J, Pepple T, Fox N (2011): Estimation of total intracranial volume: A comparison of methods. Alzheimer's Dement J Alzheimer's Assoc 7:S62–S63.

- Scherer KR, Ekman P (1982): Handbook of methods in nonverbal behavior research. Cambridge: Cambridge University Press.

- Ségonne F, Dale AM, Busa E, Glessner M, Salat D, Hahn HK, Fischl B (2004): A hybrid approach to the skull stripping problem in MRI. Neuroimage 22:1060–1075.

- Ségonne F, Pacheco J, Fischl B (2007): Geometrically accurate topology‐correction of cortical surfaces using nonseparating loops. IEEE Trans Med Imaging 26:518–529.

- Sled JG, Zijdenbos AP, Evans AC (1998): A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Trans Med Imaging 17:87–97.

- Spencer RMC, Zelaznik HN, Diedrichsen J, Ivry RB (2003): Disrupted timing of discontinuous but not continuous movements by cerebellar lesions. Science 300:1437–1439.

- Spencer RMC, Verstynen T, Brett M, Ivry R (2007): Cerebellar activation during discrete and not continuous timed movements: An fMRI study. Neuroimage 36:378–387.

- Stoodley CJ, Schmahmann JD (2009a): Functional topography in the human cerebellum: A meta‐analysis of neuroimaging studies. Neuroimage 44:489–501.

- Stoodley CJ, Schmahmann JD (2009b): The cerebellum and language: Evidence from patients with cerebellar degeneration. Brain Lang 110:149–153.

- Stoodley CJ, Valera EM, Schmahmann JD (2012): Functional topography of the cerebellum for motor and cognitive tasks: An fMRI study. Neuroimage 59:1560–1570.

- Straube B, Green A, Bromberger B, Kircher T (2011): The differentiation of iconic and metaphoric gestures: Common and unique integration processes. Hum Brain Mapp 32:520–533.

- Straube B, Green A, Sass K, Kircher T (2014): Superior temporal sulcus disconnectivity during processing of metaphoric gestures in schizophrenia. Schizophr Bull 40:936–944.

- Straube B, Green A, Sass K, Kirner‐Veselinovic A, Kircher T (2013): Neural integration of speech and gesture in schizophrenia: Evidence for differential processing of metaphoric gestures. Hum Brain Mapp 34:1696–1712.

- Streeck J (1993): Gesture as communication I: Its coordination with gaze and speech. Commun Monogr 60:275–299.

- Thal DJ, Tobias S (1992): Communicative gestures in children with delayed onset of oral expressive vocabulary. J Speech Hear Res 35:1281–1289.

- Thal DJ, Tobias S, Morrison D (1991): Language and gesture in late talkers: A 1‐year follow‐up. J Speech Hear Res 34:604–612.

- Wartenburger I, Kühn E, Sassenberg U, Foth M, Franz EA, van der Meer E (2010): On the relationship between fluid intelligence, gesture production, and brain structure. Intelligence 38:193–201.

- Weier K, Fonov V, Lavoie K, Doyon J, Collins DL (2014): Rapid automatic segmentation of the human cerebellum and its lobules (RASCAL): Implementation and application of the patch‐based label‐fusion technique with a template library to segment the human cerebellum. Hum Brain Mapp 35:5026–5039.

- Willems RM, Hagoort P (2007): Neural evidence for the interplay between language, gesture, and action: A review. Brain Lang 101:278–289.

- Willems RM, Özyürek A, Hagoort P (2009): Differential roles for left inferior frontal and superior temporal cortex in multimodal integration of action and language. Neuroimage 47:1992–2004.

- Xu J, Gannon PJ, Emmorey K, Smith JF, Braun AR (2009): Symbolic gestures and spoken language are processed by a common neural system. Proc Natl Acad Sci USA 106:20664–20669.