Abstract

The process for using statistical inference to establish personalized treatment strategies requires specific data-analysis techniques that optimize the combination of competing therapies with candidate genetic features and characteristics of the patient and disease. A wide variety of methods have been developed. However, the usefulness of these recent advances has not yet been fully recognized by the oncology community, and the scope of their applications has not been summarized. In this paper, we provide an overview of statistical methods for establishing optimal treatment rules for personalized medicine and discuss specific examples in various medical contexts, with an emphasis on oncology. We also point the reader to statistical software for implementing the methods when available.

1. Introduction

Cancer is a set of diseases characterized by cellular alterations whose complexity is defined at multiple levels of cellular organization [1, 2]. Personalized medicine attempts to combine a patient's genomic and clinical characteristics to devise a treatment strategy that exploits current understanding of the biological mechanisms of the disease [3, 4]. Recently, the field has witnessed the successful development of several molecularly targeted medicines, such as trastuzumab, a drug developed to treat breast cancer patients with HER2 amplification and overexpression [5, 6]. However, successes have been limited. Only 13% of cancer drugs that entered phase I from 1993 to 2004 attained final market approval by the US Food and Drug Administration (FDA) [7]. Moreover, from 2003 to 2011, 71.7% of new agents failed in phase II, and only 10.5% were approved by the FDA [8]. The low success rate can be partially explained by inadequate drug development strategies [3] and an overreliance on univariate statistical models that fail to account for the joint effects of multiple candidate genes and environmental exposures [9]. For example, in colorectal cancer there have been numerous attempts to develop treatments that target a single mutation, yet only one, an EGFR-targeted therapy for metastatic disease, is currently used in clinical practice [10].

In oncology, biomarkers are typically classified as either predictive or prognostic. Prognostic biomarkers are correlates of the extent of disease or of the extent to which the disease is curable. Therefore, prognostic biomarkers affect the likelihood of a therapeutic response regardless of the type of treatment. By contrast, predictive biomarkers identify patients who are likely or unlikely to benefit from a particular class of therapies [3]. Thus, predictive biomarkers are used to guide treatment selection for individualized therapy based on the specific attributes of a patient's disease. For example, the BRAF V600 mutation is a widely known predictive biomarker used to guide the selection of vemurafenib for the treatment of metastatic melanoma [11]. Biomarkers need not derive from single genes, as in the examples above; they may also arise from a combination of a small set of genes or from molecular subtypes obtained from global gene expression profiles [6]. Recently, studies have shown that the Oncotype DX recurrence score, which is based on 21 genes, can predict a woman's therapeutic response to adjuvant chemotherapy for estrogen receptor-positive tumors [12, 13]. Interestingly, Oncotype DX was originally developed as a prognostic biomarker; indeed, prognostic gene expression signatures are fairly common in breast cancer [12, 14]. Note that in [3] Oncotype DX was treated as a single biomarker and referred to as a gene expression-based predictive classifier.

Statistically, predictive associations are identified using models with an interaction between a candidate biomarker and the targeted therapy [15], whereas prognostic biomarkers are identified as significant main effects [16]. Thus, analysis strategies for identifying prognostic markers are often unsuitable for personalized medicine [17, 18]. Indeed, the discovery of predictive biomarkers requires specific data-analysis techniques that optimize the combination of competing therapies with candidate genetic features and characteristics of the patient and disease. Recently, many statistical approaches have been developed, providing researchers with new tools for identifying potential biomarkers. However, the usefulness of these recent advances has not been fully recognized by the oncology community, and the scope of their applications has not been summarized.

In this paper, we provide an overview of statistical methods for establishing optimal treatment rules for personalized medicine and discuss specific examples in various medical contexts with oncology as an emphasis. We also point the reader to statistical software when available. The various approaches enable investigators to ascertain the extent to which one should expect a new untreated patient to respond to each candidate therapy and thereby select the treatment that maximizes the expected therapeutic response for the specific patient [3, 19]. Section 2 discusses the limitations of conventional approaches based on post hoc stratified analysis. Section 3 offers an overview of the process for the development of personalized regimes. Section 4 discusses the selection of an appropriate statistical method for different types of clinical outcomes and data sources. Section 5 presents technical details for deriving optimal treatment selection rules. In Section 6, we discuss approaches for evaluating model performance and assessing the extent to which treatment selection using the derived optimal rule is likely to benefit future patients.

2. Limitations of Subgroup Analysis

Cancer is an inherently heterogeneous disease. Yet efforts to personalize therapy often rely on analysis strategies that fail to account for the heterogeneity intrinsic to the patient and disease and are therefore too reductive for personalizing treatment in many areas of oncology [20–23]. Subgroup analysis is often used to evaluate treatment effects among subsets of patients defined by one or a few baseline characteristics [23–26]. For example, Thatcher et al. [21] conducted a series of preplanned subgroup analyses in patients with refractory advanced non-small-cell lung cancer treated with gefitinib plus best supportive care versus placebo. Heterogeneous treatment effects were found in subgroups defined by smoking status: significantly prolonged survival was observed for nonsmokers, while no treatment benefit was found for smokers.

Though very useful when well planned and properly conducted, reliance on subgroup analysis for developing personalized treatment has been criticized [24, 25]. A subgroup defined by a few factors is inadequate for characterizing individualized treatment regimes that depend on a multivariate synthesis of patient characteristics. Moreover, post hoc analysis of multiple subgroups entails many simultaneous statistical inferences (multiple testing), so errors such as incorrectly rejecting a true null hypothesis are likely to occur. The resulting inflation of the risk of a false positive finding can be dramatic [23]. Consider, for example, a recent study that concluded, on the basis of a post hoc subgroup analysis, that chemotherapy followed by tamoxifen offers substantial clinical benefit for postmenopausal women with ER-negative, lymph node-negative breast cancer [27]. Subsequent studies failed to reproduce this result, concluding instead that the regime's clinical effects were largely independent of ER status [28] but may depend on other factors, including age.
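To make the multiplicity concern concrete, the sketch below computes the probability of at least one false-positive subgroup finding, $1 - (1 - \alpha)^k$, for $k$ subgroup tests each run at level $\alpha$. It assumes the tests are independent and that every null hypothesis is true, a simplification (real subgroup tests are usually correlated), and shows the Bonferroni level $\alpha/k$ as one standard remedy. The function names are illustrative.

```python
def familywise_error_rate(alpha: float, k: int) -> float:
    """P(at least one false positive) for k independent tests at level alpha,
    assuming every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** k


def bonferroni_level(alpha: float, k: int) -> float:
    """Per-test level that keeps the family-wise error rate at or below alpha."""
    return alpha / k


if __name__ == "__main__":
    for k in (1, 5, 10, 20):
        fwer = familywise_error_rate(0.05, k)
        adjusted = familywise_error_rate(bonferroni_level(0.05, k), k)
        print(f"{k:>2} subgroups: unadjusted FWER {fwer:.3f}, Bonferroni FWER {adjusted:.3f}")
```

With $\alpha = 0.05$ and 10 subgroups, the chance of at least one spurious finding is about 0.40, whereas the Bonferroni-adjusted tests keep it just below 0.05.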

3. Personalized Medicine from a Statistical Perspective

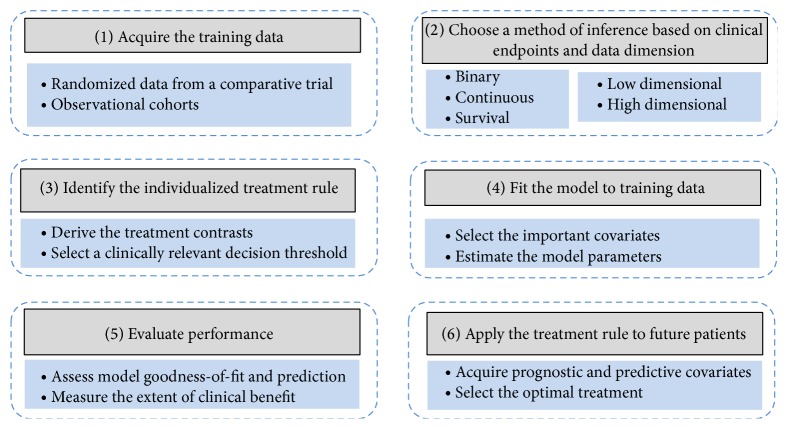

From a statistical perspective, personalized medicine is a process involving the six fundamental steps shown in Figure 1 [20, 29, 30]. As with any statistical inference, one must first select an appropriate method of inference based on the available source of training data and the clinical endpoints (i.e., steps (1) and (2)). Step (3), deriving the individualized treatment rule (ITR) for the chosen method of inference, is the fundamental component of personalized treatment selection. An ITR is a decision rule that identifies the optimal treatment given patient and disease characteristics [31, 32]. Section 5 is dedicated to establishing ITRs for various statistical models and for the types of clinical endpoints commonly used to evaluate treatment effectiveness in oncology.

Figure 1.

The process for using statistical inference to establish personalized treatment rules.

Individualized treatment rules are functions of model parameters (usually treatment contrasts reflecting differences in treatment effects), which must be estimated from the assumed statistical model and the training data. Statistical estimation takes place in step (4). Because the topic is quite general and other authors have provided several effective expositions on model building strategies in this context [29, 33], it is not covered in detail here. After the optimal treatment rule is estimated in step (4), the performance and reliability of the estimated ITR must be evaluated before it can be used to guide treatment selection [34]. How one assesses the performance of the derived ITR depends on the relevant clinical utility (e.g., increased response rate or prolonged survival). Evaluation of model goodness-of-fit and of summary statistics measuring the extent to which future patients would benefit from application of the ITR is conducted in step (5) and is discussed in Section 6. In the final step (6), the ITR is applied to guide treatment selection for a future patient based on his or her baseline clinical and genetic characteristics.

4. Selecting an Appropriate Method of Inference

The quality of a treatment rule depends on the aptness of the study design used to acquire the training data, the clinical relevance of the primary endpoints, the statistical analysis plan for model selection and inference, and the quality of the data. Randomized clinical trials (RCTs) remain the gold standard design for treatment comparison, since randomization mitigates bias arising from treatment selection. Methods for deriving ITRs from RCT data are described in Section 5.1. Data from well-conducted observational studies provide useful information as well, provided that the available covariates can be used to account for potential confounding due to selection bias. Predominantly, methods based on propensity scores are used to adjust for confounding [35, 36]. Approaches for establishing ITRs from observational studies are discussed in Section 5.2.

The predominant statistical challenge in identifying predictive biomarkers is the high-dimensional nature of molecularly derived candidate features. Classical regression models cannot be applied directly since the number of covariates (e.g., genes) is much larger than the number of samples. Many approaches have been proposed to analyze high-dimensional data for prognostic biomarkers; Section 5.3 discusses several that, with appropriate modification, can be applied to detect predictive biomarkers.

In oncology, several endpoints are used to compare clinical effectiveness. However, the primary therapeutic goal is to extend survival or to delay recurrence or progression. Thus, time-to-event endpoints are often considered the most representative of clinical effectiveness [37]. The approaches mentioned above were developed for ordinal or continuous outcomes and are thus not directly applicable to survival analysis. Methods for establishing ITRs from time-to-event endpoints often use Cox regression or accelerated failure time models [38, 39]. The latter approach is particularly appealing in this context because it models (log) survival time directly, so the clinical benefit of a treatment can be expressed as prolonged survival time [40, 41]. In Section 5.4, we discuss both models.

The performance of ITRs for personalized medicine depends heavily on the extent to which the model assumptions are satisfied and the posited model is correctly specified. Specifically, performance may suffer from misspecification of main effects or interactions, misspecification of the random error distribution, violation of linearity assumptions, sensitivity to outliers, and other potential sources of inadequacy [42]. Several advanced methods have been developed to overcome these issues [43], including semiparametric approaches that avoid prespecifying the functional form of the relationship between biomarkers and expected clinical response [32, 40]. In addition, optimal treatment rules can be defined without regression models by using classification approaches in which patients are assigned to the treatment that provides the highest expected clinical benefit. Appropriate class labels can be defined by the sign of the estimated treatment difference (e.g., >0 versus ≤0), enabling the use of machine learning and data mining techniques [42, 44, 45]. These methods are discussed in Section 5.5.

5. Methods for Identifying Individualized Treatment Rules

This section provides details of analytical approaches for identifying ITRs from a clinical data source. The nature of treatment benefit is determined by the clinical endpoint. While extending overall survival is the ultimate therapeutic goal, the reduction in tumor size as assessed by the RECIST criteria (http://www.recist.com/) is often used as a categorical surrogate for long-term response. Alternatively, oncology trials often compare the extent to which treatments delay locoregional recurrence or disease progression. Therefore, time-to-event and binary (e.g., absence versus presence of partial or complete response) endpoints are the most commonly used in oncologic drug development [37, 46].

Let $Y$ denote the observed outcome, such as survival duration or response to treatment, and let $A \in \{0, 1\}$ denote the treatment assignment, with 0 indicating the standard treatment and 1 the new therapy. Denote the observable data for a previously treated patient by $(Y, A, X)$, where $X = (X_1, X_2, \ldots, X_p)^T$ is the vector of values for the $p$ biomarkers under study. The optimal ITR is defined through potential outcomes, which are related to the observed response by

$$Y = A\,Y(1) + (1 - A)\,Y(0), \tag{1}$$

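where $Y(1)$ and $Y(0)$ denote the potential outcomes that would be observed if the subject were assigned to the new therapy or the standard treatment, respectively [32, 43]. Let $E(Y \mid A, X) = \mu(A, X)$ denote the expected value of $Y$ given $A$ and $X$. Under randomization (or, more generally, no unmeasured confounding), $E\{Y(a) \mid X\} = \mu(a, X)$ for $a = 0, 1$, so, assuming larger values of $Y$ are better, the optimal treatment rule follows as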

$$g^{\mathrm{opt}}(X) = I\{\mu(1, X) - \mu(0, X) > 0\}, \tag{2}$$

where $I(\cdot)$ is the indicator function. For instance, if $\mu(1, X) - \mu(0, X) > 0$ exactly when age $> 50$, the optimal rule assigns patients older than 50 to the new treatment. In practice, $E(Y \mid A, X)$ is modeled as a function $\mu(A, X; \beta)$ of unknown parameters $\beta$. The model must be fitted to the training data to obtain estimates of $\beta$, which we denote by $\hat\beta$. Hence, for a patient with observed biomarkers $X = x$, the estimated optimal treatment rule is

$$\hat g^{\mathrm{opt}}(x) = I\{\mu(1, x; \hat\beta) - \mu(0, x; \hat\beta) > 0\}. \tag{3}$$

The above equation corresponds to steps (3) and (4) in Figure 1: the parameter estimates from a fitted model are used to construct the personalized treatment rule. The remainder of this section explains how to identify ITRs for the various data types.
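A minimal sketch of the plug-in rule in Eq. (3), assuming a fitted mean model is available as a Python function `mu_hat(a, x)`. The model `mu_hat_example`, in which the new therapy helps only patients older than 50, is hypothetical and mirrors the age example above; any fitted regression could be substituted.

```python
from typing import Callable, Sequence

# A fitted mean model: mu_hat(a, x) returns the predicted outcome under treatment a.
MeanModel = Callable[[int, Sequence[float]], float]


def estimated_itr(mu_hat: MeanModel, x: Sequence[float]) -> int:
    """Plug-in rule of Eq. (3): return 1 (new therapy) when the estimated
    contrast mu_hat(1, x) - mu_hat(0, x) is positive, else 0 (standard)."""
    return int(mu_hat(1, x) - mu_hat(0, x) > 0)


def mu_hat_example(a: int, x: Sequence[float]) -> float:
    """Hypothetical fitted model with x = (age,); the benefit of a = 1 grows after age 50."""
    age = x[0]
    return 10.0 + 0.1 * age + a * 0.2 * (age - 50.0)


if __name__ == "__main__":
    for age in (40.0, 50.0, 65.0):
        choice = "new" if estimated_itr(mu_hat_example, (age,)) else "standard"
        print(f"age {age:.0f}: recommend {choice} treatment")
```

Because the rule uses a strict inequality, a patient whose estimated contrast is exactly zero (here, age 50) stays on the standard treatment.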

We classify the statistical methods presented in this section into five categories: methods based on multivariate and generalized linear regression for analysis of data acquired from RCT (Section 5.1) and observational studies (Section 5.2); methods based on penalized regression techniques for high-dimensional data (Section 5.3); methods for survival data (Section 5.4); and advanced methods based on robust estimation and machine learning techniques (Section 5.5).

5.1. Multiple Regression for Randomized Clinical Trial Data

Classical generalized linear models (GLMs) can be used to develop ITRs from training data derived from a randomized clinical study. The regression framework assumes that the mean of the outcome $Y$, on the scale of a link function, is a linear function of prognostic covariates, $X_1$; putative predictive biomarkers, $X_2$; the treatment indicator, $A$; and the treatment-by-biomarker interaction, $AX_2$. With the identity link,

$$\mu(A, X) = E(Y \mid A, X) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 A + \beta_4 A X_2. \tag{4}$$

Let $\Delta(X) = E(Y \mid A = 1, X) - E(Y \mid A = 0, X) = \mu(1, X) - \mu(0, X)$ denote the treatment contrast; under model (4), $\Delta(X) = \beta_3 + \beta_4 X_2$. The optimal treatment rule assigns a patient to the new treatment if $\Delta(X) > 0$. For binary endpoints, the logistic regression model for $\mu(A, X) = P(Y = 1 \mid A, X)$ is defined such that

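$$\operatorname{logit}\{\mu(A, X)\} = \log\frac{\mu(A, X)}{1 - \mu(A, X)} = \omega(A, X) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 A + \beta_4 A X_2. \tag{5}$$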

The treatment contrast $\Delta(X)$ can be calculated using $E(Y \mid A = a, X) = P(Y = 1 \mid A = a, X) = e^{\omega(a, X)}/\{1 + e^{\omega(a, X)}\}$ for $a = 0, 1$. Again, an optimal ITR assigns a patient to the new treatment if $\Delta(X) > 0$. Because the inverse logit function is strictly increasing, $\Delta(X) > 0$ if and only if $\omega(1, X) - \omega(0, X) = \beta_3 + \beta_4 X_2 > 0$, so the optimal treatment rule can equivalently be written as $g^{\mathrm{opt}}(X) = I\{(\beta_3 + \beta_4 X_2) > 0\}$ without calculating $\Delta(X)$ [43, 45].

Often one may want to impose a clinically meaningful minimum threshold, $\Delta(X) > \delta$, on the magnitude of treatment benefit before assigning patients to a novel therapy [45, 47]. For example, it may be desirable to require at least a 0.1 increase in response rate before assigning a therapy whose long-term safety profile has yet to be established. A threshold can be used with all of the methods described here. Note that with $\delta > 0$ and a logistic model, the rule must be based on $\Delta(X)$ itself, since a fixed difference in response probability does not correspond to a fixed value of $\beta_3 + \beta_4 X_2$. Without loss of generality, we assume $\delta = 0$ unless otherwise specified. In addition, the approaches for constructing an ITR described above apply directly to linear regression models for continuous outcomes.
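The sketch below illustrates the logistic-model rule for hypothetical coefficients $(\beta_0, \ldots, \beta_4)$ of Eq. (5). It computes $\Delta(X)$ on the probability scale, checks that with $\delta = 0$ the decision agrees with the sign of $\beta_3 + \beta_4 X_2$, and shows how a margin such as $\delta = 0.1$ changes the recommendation. The coefficient values are invented for illustration.

```python
import math
from typing import Sequence


def inv_logit(w: float) -> float:
    return 1.0 / (1.0 + math.exp(-w))


def omega(beta: Sequence[float], a: int, x1: float, x2: float) -> float:
    """Linear predictor of Eq. (5): b0 + b1*X1 + b2*X2 + b3*A + b4*A*X2."""
    b0, b1, b2, b3, b4 = beta
    return b0 + b1 * x1 + b2 * x2 + b3 * a + b4 * a * x2


def response_contrast(beta: Sequence[float], x1: float, x2: float) -> float:
    """Delta(X) = P(Y = 1 | A = 1, X) - P(Y = 1 | A = 0, X)."""
    return inv_logit(omega(beta, 1, x1, x2)) - inv_logit(omega(beta, 0, x1, x2))


def itr(beta: Sequence[float], x1: float, x2: float, delta: float = 0.0) -> int:
    """Assign the new therapy when the gain in response probability exceeds delta."""
    return int(response_contrast(beta, x1, x2) > delta)


def itr_shortcut(beta: Sequence[float], x2: float) -> int:
    """Equivalent rule when delta = 0: I{b3 + b4*X2 > 0}."""
    return int(beta[3] + beta[4] * x2 > 0)


if __name__ == "__main__":
    beta = (-1.0, 0.5, 0.3, -0.4, 0.8)  # hypothetical fitted coefficients
    print(round(response_contrast(beta, 0.0, 0.75), 3))
    print(itr(beta, 0.0, 0.75), itr(beta, 0.0, 0.75, delta=0.1))
```

For $X_1 = 0$ and $X_2 = 0.75$ the estimated gain in response probability is about 0.045, so this patient is assigned the new therapy when $\delta = 0$ but not when $\delta = 0.1$.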

This strategy was used to develop an ITR for the treatment of depression [19] using data from an RCT of 154 patients. In this case, the continuous outcome was the posttreatment score on the Hamilton Rating Scale for Depression. The authors constructed a personalized advantage index from the estimated treatment contrasts $\Delta(X)$, derived from five predictive biomarkers. A clinically significant threshold, $\delta = 3$, was selected based on the National Institute for Health and Care Excellence criterion. The authors estimated that 60% of patients in the sample would obtain a clinically meaningful advantage if their therapy decision followed the proposed treatment rule. The approaches discussed in this section can be implemented with standard statistical software, such as R (http://www.r-project.org/), using the functions lm and glm [48].
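To illustrate Section 5.1 end to end, the following sketch simulates a hypothetical 1:1 randomized trial, fits model (4) by ordinary least squares, and applies the rule $\hat\Delta(X) = \hat\beta_3 + \hat\beta_4 X_2 > \delta$. The normal equations are solved in pure Python only to keep the example self-contained; in practice one would use R's lm, as noted above, or an equivalent routine. The simulated coefficients and the margin $\delta = 0.5$ are assumptions, and the reported share is only loosely analogous to the proportion-with-advantage summary of [19], not a reproduction of that analysis.

```python
import random
from typing import List


def solve_linear_system(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve m b = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    b = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * b[c] for c in range(r + 1, n))
        b[r] = (aug[r][n] - tail) / aug[r][r]
    return b


def fit_ols(rows: List[List[float]], y: List[float]) -> List[float]:
    """Ordinary least squares via the normal equations (Z'Z) b = Z'y."""
    p = len(rows[0])
    ztz = [[sum(z[i] * z[j] for z in rows) for j in range(p)] for i in range(p)]
    zty = [sum(z[i] * yi for z, yi in zip(rows, y)) for i in range(p)]
    return solve_linear_system(ztz, zty)


def design_row(a: int, x1: float, x2: float) -> List[float]:
    # Columns of Eq. (4): intercept, X1, X2, A, A*X2.
    return [1.0, x1, x2, float(a), a * x2]


def simulate(n: int, seed: int = 1):
    """Hypothetical 1:1 randomized trial with true contrast 0.5 + 1.0 * X2."""
    rng = random.Random(seed)
    rows, y = [], []
    for _ in range(n):
        x1, x2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        a = rng.randint(0, 1)
        mean = 1.0 + 0.8 * x1 - 0.3 * x2 + a * (0.5 + 1.0 * x2)
        rows.append(design_row(a, x1, x2))
        y.append(mean + rng.gauss(0.0, 1.0))
    return rows, y


if __name__ == "__main__":
    rows, y = simulate(2000)
    beta_hat = fit_ols(rows, y)
    contrasts = [beta_hat[3] + beta_hat[4] * r[2] for r in rows]  # Delta_hat(X) per patient
    delta = 0.5  # hypothetical clinically meaningful margin
    share = sum(c > delta for c in contrasts) / len(contrasts)
    print([round(b, 2) for b in beta_hat], f"share with Delta > {delta}: {share:.2f}")
```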

5.2. Methods for Observational Data

Randomization attenuates bias arising from treatment selection, thereby providing the highest quality data for comparing competing interventions. However, RCTs are often infeasible due to ethical or financial constraints, necessitating an observational study. In such studies, treatment selection is often based on a patient's prognosis. Without randomization, the study design does not ensure that patients on competing arms exhibit similar clinical and prognostic characteristics, which induces bias.

However, provided that the available covariates capture the sources of bias, a well-conducted observational study can also provide useful information for constructing ITRs. For example, the two-gene ratio index (HOXB13:IL17BR) was first identified as an independent prognostic biomarker for ER+ node-negative patients using retrospective data from 60 patients [49]. These findings were confirmed on an independent data set comprising 852 tumors from a tumor bank at the Breast Center of Baylor College of Medicine [50]. Interestingly, one recent independent study reported that the two-gene ratio index (HOXB13:IL17BR) predicts the benefit of treatment with letrozole [51].

Methods based on propensity scores are commonly used to attenuate selection bias [35]. In essence, these approaches use the available covariates to attempt to diminish the effects of imbalances among variables that are not of interest for treatment comparison. Moreover, they have been shown to be robust in the presence of multiple confounders and rare events [52]. Generally, after adjusting for bias using propensity scores, the same principles for deriving ITRs from RCTs may be applied to the observational cohort.

The propensity score is the probability of receiving the new treatment given the available covariates $X$ [35]. In our notation, the propensity score is $\pi(X, \xi) = P(A = 1 \mid X, \xi)$, which can be modeled using logistic regression:

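$$\operatorname{logit}\{\pi(X, \xi)\} = \log\frac{\pi(X, \xi)}{1 - \pi(X, \xi)} = \xi_0 + \xi_1 X_1 + \cdots + \xi_p X_p, \tag{6}$$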

where $p$ is the number of independent variables used to construct the propensity score and $\xi_j$ is the $j$th regression coefficient, which characterizes the $j$th covariate's partial effect. After fitting the model to obtain estimates $\hat\xi$ of the regression coefficients, the estimated probability of receiving the new treatment, $\pi(X_i, \hat\xi)$, can be obtained for each patient by inverting the logit function. When the propensity model is adequate, patients with similar propensity scores have, in expectation, similar distributions of the measured covariates, so that conditional on the propensity score the measured independent variables are reasonably “balanced” between treatment cohorts. In practice, one often includes as many baseline covariates in the propensity score model as the sample size permits.
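A minimal sketch of fitting the propensity model (6) with a single covariate ($p = 1$) by Newton-Raphson and inverting the logit to obtain $\pi(X_i, \hat\xi)$ for each patient. The simulated cohort and the true coefficients $(-0.5, 1.2)$ are hypothetical; in practice one would use a standard logistic regression routine, such as R's glm with a binomial family, and include more covariates.

```python
import math
import random
from typing import List, Sequence, Tuple


def inv_logit(w: float) -> float:
    return 1.0 / (1.0 + math.exp(-w))


def simulate_observational(n: int = 3000, seed: int = 7) -> Tuple[List[float], List[int]]:
    """Hypothetical cohort: treatment is more likely for larger X,
    with true logit P(A = 1 | X) = -0.5 + 1.2 * X."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    a = [int(rng.random() < inv_logit(-0.5 + 1.2 * xv)) for xv in x]
    return x, a


def fit_propensity(x: Sequence[float], a: Sequence[int], iterations: int = 25) -> Tuple[float, float]:
    """Newton-Raphson for logit P(A = 1 | X) = xi0 + xi1 * X (Eq. 6 with p = 1)."""
    xi0, xi1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xv, av in zip(x, a):
            p = inv_logit(xi0 + xi1 * xv)
            w = p * (1.0 - p)          # Fisher information weight
            g0 += av - p               # score for xi0
            g1 += (av - p) * xv        # score for xi1
            h00 += w
            h01 += w * xv
            h11 += w * xv * xv
        det = h00 * h11 - h01 * h01
        # Newton step: xi <- xi + I^{-1} g, with I the 2 x 2 information matrix
        xi0 += (h11 * g0 - h01 * g1) / det
        xi1 += (h00 * g1 - h01 * g0) / det
    return xi0, xi1


if __name__ == "__main__":
    x, a = simulate_observational()
    xi0, xi1 = fit_propensity(x, a)
    scores = [inv_logit(xi0 + xi1 * xv) for xv in x]  # pi(X_i, xi_hat)
    print(f"xi_hat = ({xi0:.2f}, {xi1:.2f}); first scores: {[round(s, 2) for s in scores[:3]]}")
```

At convergence, the estimated scores sum to the number of treated patients, a property of logistic regression with an intercept that offers a quick sanity check.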

Methods that use propensity scores fall into four categories: matching, stratification, regression adjustment, and inverse probability weighted estimation [36, 53]. Matching and stratification aim to mimic RCTs by using propensity scores to define a new data set in which outcomes are directly comparable between treatment cohorts [53]. These two approaches are well suited to conventional subgroup analysis, but their application to personalized medicine has been limited. Regression adjustment (or simply adjustment) can be used to reduce bias due to residual differences in observed baseline covariates between treatment groups. This method includes the propensity score as an independent variable in a regression model and therefore can be used in conjunction with all regression-based methods [36]. Methods involving inverse probability weighted estimators are discussed in Section 5.5.1 [43].

Of course, propensity score methods can only attenuate the effects of confounding variables that have been measured in the study. Causal inference in general is not robust to unmeasured confounders that influenced treatment assignment [35, 54, 55]. For the development of ITRs, predictive and important prognostic covariates can be included in the regression model for the clinical outcome $Y$ along with the propensity score, while other covariates may be used only in the model for estimating the propensity score. Hence, propensity score methods offer the researcher a useful tool for controlling potential confounding due to selection bias while keeping the number of prognostic and predictive covariates manageable.

5.3. Methods for High-Dimensional Biomarkers

The methods presented in the previous sections are appropriate for identifying an ITR from a small (low-dimensional) set of biomarkers. However, recent advances in molecular biology have enabled researchers in oncology to acquire vast amounts of genetic and genomic data on individual patients. Often the number of acquired genomic covariates exceeds the sample size, and proper analysis of such high-dimensional data poses many analytical challenges. Several methods have been proposed specifically for the analysis of high-dimensional covariates [56], although most are suited only to the analysis of prognostic biomarkers. In what follows, we introduce variable selection methods developed to detect predictive biomarkers from high-dimensional sources and describe how to construct optimal ITRs from the final set of biomarkers.

An appropriate regression model can be defined generally as $E(Y \mid A, X) = h_0(X) + A\,\tilde X^T\beta$, where $h_0(X)$ is an unspecified baseline mean function, $\beta = (\beta_0, \beta_1, \ldots, \beta_q)^T$ is a column vector of regression coefficients, and $\tilde X = (1, X_1, \ldots, X_q)^T$ is the corresponding design vector. The subscript $q$ denotes the total number of biomarkers, which may be larger than the sample size $n$. An ITR derives from the interaction term $A\,\tilde X^T\beta$, not from the baseline effect of the high-dimensional covariates $h_0(X)$ [32]. Technically, $\tilde X^T\beta$ cannot be uniquely estimated by traditional maximum likelihood-based methods when $q > n$ [57]. In practice, however, many of the available biomarkers may not influence the optimal ITR [31]. Thus, identifying an ITR from a high-dimensional source requires first identifying a sparse subset of predictive biomarkers that can be used to construct the ITR.

Parameters for the specified model can be estimated using the following loss function:

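$$L_n(\gamma, \beta) = \frac{1}{n}\sum_{i=1}^{n}\Bigl[Y_i - \phi(X_i; \gamma) - \{A_i - \pi(X_i)\}\,\tilde X_i^T\beta\Bigr]^2, \tag{7}$$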

where $\tilde X_i = (1, X_{i1}, \ldots, X_{iq})^T$ and $\phi(X; \gamma)$ is a working model for the “baseline” relationship between $X$ and $Y$ (e.g., an intercept or an additive model). Here $\pi(X_i) = P(A_i = 1 \mid X_i)$ denotes either the propensity score (for observational data) or the randomization probability for RCT data (e.g., 0.5 under 1:1 randomization). If $\pi(X)$ is known, this loss yields consistent estimates of the interaction effects $\beta$ even if the main effects are misspecified, which provides a form of robustness [32].

Penalized estimation selects the relevant predictive markers as those with nonzero estimated coefficients for the treatment–biomarker interaction terms, obtained from

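$$(\hat\gamma, \hat\beta) = \arg\min_{\gamma, \beta}\Bigl\{L_n(\gamma, \beta) + \lambda_n \sum_{j=1}^{q} J_j\,\lvert\beta_j\rvert\Bigr\}, \tag{8}$$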

where $\lambda_n$ is a tuning parameter, often selected via cross-validation, and $J_j$ is the weight of the shrinkage penalty on $\beta_j$. Different choices of $J_j$ lead to different estimators. For example, lasso penalized regression corresponds to $J_j = 1$ [58] and the adaptive lasso to $J_j = 1/\lvert\tilde\beta_j\rvert$, where $\tilde\beta_j$ is an initial estimate of $\beta_j$ [59]. With little modification, (8) can be solved using the LARS algorithm implemented in the R package lars [32, 60, 61]. As before, a treatment rule can be defined from the parameter estimates as $I\{\hat\beta_0 + \hat\beta_1 X_1 + \hat\beta_2 X_2 + \cdots + \hat\beta_q X_q > 0\}$. Because some coefficients in this generic form may be estimated as zero (e.g., $\hat\beta_j = 0$), the ITR can equivalently be constructed from the nonzero estimated coefficients and their corresponding covariates.
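The sketch below illustrates Eqs. (7) and (8) on simulated data under several assumptions: a hypothetical 1:1 trial with known $\pi(X) = 0.5$, a working model $\phi(X; \gamma) = \gamma_0$ that deliberately ignores a nonlinear baseline, lasso weights $J_j = 1$, and a fixed $\lambda_n = 0.07$ rather than one chosen by cross-validation. It minimizes the loss by cyclic coordinate descent, a swapped-in algorithm rather than the LARS procedure cited above, and scales the squared-error term by 1/2, which only rescales $\lambda_n$. Neither $\gamma_0$ nor the contrast intercept $\beta_0$ is penalized.

```python
import random
from typing import List, Sequence, Set


def soft_threshold(z: float, lam: float) -> float:
    """sign(z) * max(|z| - lam, 0)."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_cd(rows: List[List[float]], y: Sequence[float], lam: float,
             penalized: Set[int], sweeps: int = 100) -> List[float]:
    """Cyclic coordinate descent for
    (1/2n) * ||y - Z b||^2 + lam * sum_{j in penalized} |b_j|."""
    n, p = len(y), len(rows[0])
    cols = [[row[j] for row in rows] for j in range(p)]
    scale = [sum(v * v for v in col) / n for col in cols]
    b = [0.0] * p
    resid = list(y)  # y - Z b, starting from b = 0
    for _ in range(sweeps):
        for j in range(p):
            col = cols[j]
            rho = sum(c * r for c, r in zip(col, resid)) / n + scale[j] * b[j]
            new = (soft_threshold(rho, lam) if j in penalized else rho) / scale[j]
            step = new - b[j]
            if step != 0.0:
                resid = [r - step * c for r, c in zip(resid, col)]
                b[j] = new
    return b


def simulate_trial(n: int = 1000, q: int = 8, seed: int = 3):
    """Hypothetical 1:1 RCT. Only X_1 is predictive (true contrast 0.5 + X_1);
    X_2 and X_3 enter a nonlinear baseline that phi(X; gamma) = gamma_0 ignores."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in range(q)]
        a = rng.randint(0, 1)
        baseline = 1.0 + 0.5 * x[1] ** 2 + 0.5 * x[2]
        y = baseline + a * (0.5 + x[0]) + rng.gauss(0.0, 1.0)
        data.append((x, a, y))
    return data


def working_rows(data, pi: float = 0.5) -> List[List[float]]:
    """Columns for Eq. (7): gamma_0, then (A - pi) * (1, X_1, ..., X_q)."""
    return [[1.0] + [(a - pi) * v for v in [1.0] + x] for x, a, _ in data]


def fit_itr(data, lam: float = 0.07) -> List[float]:
    """Return beta_hat = (beta_0, beta_1, ..., beta_q); gamma_0 and beta_0 unpenalized."""
    rows = working_rows(data)
    y = [yi for _, _, yi in data]
    coef = lasso_cd(rows, y, lam, penalized=set(range(2, len(rows[0]))))
    return coef[1:]


def recommend(beta: Sequence[float], x: Sequence[float]) -> int:
    """Estimated ITR: I{beta_0 + beta_1 X_1 + ... + beta_q X_q > 0}."""
    return int(beta[0] + sum(bj * xj for bj, xj in zip(beta[1:], x)) > 0)


if __name__ == "__main__":
    data = simulate_trial()
    beta = fit_itr(data)
    print("nonzero biomarker coefficients:",
          {j + 1: round(bj, 2) for j, bj in enumerate(beta[1:]) if bj != 0.0})
```

Only $X_1$ should enter the estimated rule with a sizable coefficient. The lasso shrinks $\hat\beta_1$ toward zero, so in practice the selected markers are often refitted without the penalty before the rule is applied.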

Alternative penalized regression approaches include SCAD [62] and the elastic net [63]. These penalized approaches produce sparse solutions (i.e., they identify a small subset of predictive biomarkers); however, the adaptive lasso is less effective when $p > n$, because the usual initial estimates (e.g., ordinary least squares) are not available in that setting. Methods that produce nonsparse models, such as ridge regression [57], are less desirable since ITRs based on many biomarkers are often unstable and less useful in practice [31]. Several R packages implement penalized regression, such as parcor for ridge, lasso, and adaptive lasso, and ncvreg for SCAD [64, 65].

Lu et al. [32] used a penalized regression approach to analyze data from the AIDS Clinical Trials Group Protocol 175 (ACTG175) [66]. In this protocol, more than 2,000 patients were equally randomized to one of four treatments: zidovudine (ZDV) monotherapy, ZDV + didanosine (ddI), ZDV + zalcitabine, and ddI monotherapy. CD4 count at 15–25 weeks postbaseline was the primary outcome, and 12 baseline covariates were included in the analysis. The resulting treatment rule favored the combination regimens over ZDV monotherapy. Moreover, the rule determined that ZDV + ddI should be preferred to ddI when $I(71.59 + 1.07 \times \text{age} - 0.18 \times \text{CD4}_0 - 33.57 \times \text{homo} > 0) = 1$, where $\text{CD4}_0$ is the baseline CD4 count and homo indicates homosexual activity. Based on this rule, 878 patients would have benefited from treatment with ZDV + ddI.
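The published rule quoted above can be applied directly to a new patient. The sketch below assumes age in years, baseline CD4 count in cells/mm³, and homo coded 1/0; these units and codings are our assumptions, not stated in the text, and the function name is illustrative.

```python
def prefers_zdv_ddi(age: float, cd4_baseline: float, homo: int) -> bool:
    """Rule reported by Lu et al. [32], as quoted in the text: ZDV + ddI is
    preferred to ddI monotherapy when the linear score below is positive.
    Assumed codings: age in years, baseline CD4 in cells/mm^3, homo in {0, 1}."""
    score = 71.59 + 1.07 * age - 0.18 * cd4_baseline - 33.57 * homo
    return score > 0


if __name__ == "__main__":
    print(prefers_zdv_ddi(40, 300, 0))  # score is about 60.39
    print(prefers_zdv_ddi(30, 400, 1))  # score is about -1.88
```

For example, a 40-year-old patient with a baseline CD4 count of 300 and homo = 0 would be recommended ZDV + ddI, whereas a 30-year-old with a count of 400 and homo = 1 would be recommended ddI.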

5.4. Survival Analysis

So far, we have discussed methods for continuous or binary outcomes, yet investigators often want to discern the extent to which a therapeutic intervention alters the time until an event occurs. This type of statistical inference is referred to broadly as survival analysis. One challenge is that outcomes may be only partially observed at the time of analysis because of censoring or incomplete follow-up. Survival analysis has been widely applied in cancer studies, often in association studies aimed at identifying prognostic biomarkers [56, 67]. Here we discuss two widely used models for deriving ITRs from time-to-event data: Cox regression and accelerated failure time models.

The Cox regression model is

$$\lambda(t \mid A, X) = \lambda_0(t)\exp(\beta_1 X_1 + \beta_2 X_2 + \beta_3 A + \beta_4 A X_2), \tag{9}$$

where $\lambda(t \mid A, X)$ is the hazard at time $t$, $\lambda_0(t)$ is an arbitrary baseline hazard function, and $X_1$ and $X_2$ represent prognostic and predictive biomarkers, respectively. Each $\exp(\beta)$ is the multiplicative effect on the hazard associated with a unit increase in the corresponding covariate (i.e., each $\beta$ is a log hazard ratio). Because the covariates act multiplicatively on a common baseline hazard, the hazard ratio between any two patients is constant over time; hence Cox models are referred to as proportional hazards (PH) models.

Several authors have provided model building strategies [29] and approaches for treatment selection [20, 30, 68]. Following the strategy outlined above, a naive approach uses the hazard ratio (new treatment versus standard) as the treatment contrast, $\Delta(X) = \exp(\beta_3 + \beta_4 X_2)$. Since a hazard ratio below 1 favors the new treatment, the ITR is $I\{(\beta_3 + \beta_4 X_2) < 0\}$. This approach has obvious limitations. First, violations of the PH assumption can yield substantially misleading results [69]. Moreover, even when the PH assumption holds, the Cox model relates the covariates (including treatment) to the hazard rather than directly to survival time, so the hazard ratio does not measure the extent to which the treatment is clinically valuable, for example in terms of survival time gained [38, 70].

Accelerated failure time (AFT) models provide an alternative semiparametric approach that models (log) survival time directly. Here we introduce their application to high-dimensional data. Let $T$ and $C$ denote the survival and censoring times, and denote the observed data by $(\tilde T_i, \delta_i, A_i, X_i)$, $i = 1, \ldots, n$, where $\tilde T = \min(T, C)$ and $\delta = I(T < C)$. Define the log survival time as $Y = \log(T)$; a semiparametric regression model is then $E(Y \mid A, X) = h_0(X) + A\,\tilde X^T\beta$, where $h_0(X)$ is the unspecified baseline mean function and $\tilde X = (1, X_1, \ldots, X_q)^T$. As in the previous section, the treatment rule is $I\{(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_q X_q) > 0\}$. Under the assumption of independent censoring, the AFT model parameters can be estimated by minimizing the following inverse-probability-of-censoring-weighted loss function:

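$$L_n(\gamma, \beta) = \frac{1}{n}\sum_{i=1}^{n}\frac{\delta_i}{\hat G(\tilde T_i)}\Bigl[\log \tilde T_i - \phi(X_i; \gamma) - \{A_i - \pi(X_i)\}\,\tilde X_i^T\beta\Bigr]^2, \tag{10}$$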

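where $\tilde T_i = \min(T_i, C_i)$ is the observed time, $\tilde X_i = (1, X_{i1}, \ldots, X_{iq})^T$, $\pi(X_i) = P(A_i = 1 \mid X_i)$ is the propensity score or randomization probability, $\hat G(\cdot)$ is the Kaplan-Meier estimator of the survival function of the censoring time, and $\phi(X; \gamma)$ is an arbitrary working function for the baseline effect.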
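The weights $\delta_i/\hat G(\tilde T_i)$ in Eq. (10) can be computed from a Kaplan-Meier estimate of the censoring distribution, obtained by treating censored observations as the events. The sketch below evaluates $\hat G$ just before each observed time (its left limit), a common convention when event and censoring times tie; ties are otherwise handled only crudely, and the toy data are hypothetical. The resulting weights could then be supplied as observation weights to a weighted version of the penalized least squares fit of Section 5.3.

```python
from typing import List, Sequence, Tuple


def km_censoring(times: Sequence[float], events: Sequence[int]) -> List[Tuple[float, float]]:
    """Kaplan-Meier steps (t, G_hat(t)) for the censoring survival function
    G(t) = P(C > t); censored cases (event == 0) are the 'failures' of C."""
    steps, g = [], 1.0
    for t in sorted(set(times)):
        at_risk = sum(1 for ti in times if ti >= t)
        censored = sum(1 for ti, di in zip(times, events) if ti == t and di == 0)
        if censored:
            g *= 1.0 - censored / at_risk
            steps.append((t, g))
    return steps


def g_left(steps: List[Tuple[float, float]], t: float) -> float:
    """G_hat(t-): value of the step function just before time t."""
    value = 1.0
    for s, g in steps:
        if s >= t:
            break
        value = g
    return value


def ipcw_weights(times: Sequence[float], events: Sequence[int]) -> List[float]:
    """delta_i / G_hat(T_i-): zero for censored cases, up-weighted observed events."""
    steps = km_censoring(times, events)
    return [1.0 / g_left(steps, t) if d else 0.0 for t, d in zip(times, events)]


if __name__ == "__main__":
    times = [2.0, 3.0, 5.0, 7.0, 11.0]  # hypothetical observed times T_i
    events = [1, 0, 1, 0, 1]            # delta_i: 1 = event observed, 0 = censored
    print(ipcw_weights(times, events))
```

For the toy data, the two censored patients receive weight 0 and the remaining weights are 1, 4/3, and 8/3; events observed later, after more censoring, are up-weighted, and here the weights sum to the sample size, 5.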

This method can be extended to accommodate more than two treatments simultaneously by specifying appropriate treatment indicators. For instance, with two new drugs compared with standard care, the mean function can be modeled as $h_0(X) + A_1\tilde X^T\beta^{(1)} + A_2\tilde X^T\beta^{(2)}$, where $A_k = 1$ if the patient receives drug $k$ and 0 otherwise. The ITR then assigns the winning treatment: standard care if both $\tilde X^T\beta^{(1)}$ and $\tilde X^T\beta^{(2)}$ are negative, and otherwise the drug with the larger contrast. This approach was proposed in [40] as an extension of [32] to the survival setting; hence, it shares the same robustness property and can be applied to observational data. For implementation, the same procedure can be followed to obtain estimates, with one additional step of calculating the weights $\delta_i/\hat G(\tilde T_i)$. Several R packages provide Kaplan-Meier estimates and Cox regression models; they are listed at http://cran.r-project.org/web/views/Survival.html. More details on statistical methods for survival analysis can be found in [71]. Methods for measuring the clinical effectiveness of an ITR, which can be used, for example, to compare treatment rules constructed from Cox and AFT models, are discussed in Section 6.
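A minimal sketch of the multi-arm rule: the reference arm (standard care) has contrast 0 by construction, and each new drug $k$ has estimated contrast $\tilde X^T\hat\beta^{(k)}$. The arm names and coefficient values below are hypothetical.

```python
from typing import Dict, Sequence


def multi_arm_itr(x: Sequence[float], betas: Dict[str, Sequence[float]],
                  reference: str = "standard care") -> str:
    """Return the arm with the largest estimated contrast x_tilde' beta^(k);
    the reference arm has contrast 0 and is kept unless some drug beats it."""
    x_tilde = [1.0, *x]
    best_arm, best_value = reference, 0.0
    for arm, beta in betas.items():
        if len(beta) != len(x_tilde):
            raise ValueError(f"{arm}: expected {len(x_tilde)} coefficients")
        value = sum(b * v for b, v in zip(beta, x_tilde))
        if value > best_value:
            best_arm, best_value = arm, value
    return best_arm


if __name__ == "__main__":
    betas = {"drug 1": (0.2, 0.5), "drug 2": (-0.1, 1.0)}  # hypothetical (beta_0, beta_1)
    for x1 in (-1.0, 0.0, 1.0):
        print(x1, multi_arm_itr((x1,), betas))
```

With these coefficients, a patient with $X_1 = 1$ is assigned drug 2, one with $X_1 = 0$ drug 1, and one with $X_1 = -1$ remains on standard care because both contrasts are negative.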

Here we present an example in which an AFT model was used to construct an ITR for the treatment of HIV [40]. The example derives from the AIDS Clinical Trials Group Protocol 175 discussed in Section 5.3 [32, 66]. In this case, the primary outcome was the time (in days) to the first ≥50% decline in CD4 count, an AIDS-defining event, or death. A total of 12 covariates and four treatments (ZDV, ZDV + ddI, ZDV + zalcitabine, and ddI) were included, and the four treatments were evaluated simultaneously. Patients receiving the standard care of ZDV monotherapy served as the reference group. Hence, three treatment indicators ($I_{\text{ZDV+ddI}}$, $I_{\text{ZDV+zalcitabine}}$, and $I_{\text{ddI}}$) were combined with various putative predictive covariates and compared with ZDV monotherapy. For example, gender was detected as a predictive covariate only for ddI monotherapy. The investigators assumed $\phi(X; \gamma) = \gamma_0$. The treatment rule recommended ZDV monotherapy for 1 patient, while 729, 1216, and 193 patients were recommended for ZDV + ddI, ZDV + zalcitabine, and ddI, respectively.

5.5. Advanced Methods

5.5.1. Robust Inference

The performance of the ITRs presented so far depends heavily on whether the statistical models are correctly specified. Recently, much attention has focused on the development of more advanced methods and modeling strategies that are robust to various aspects of potential misspecification. We have already presented a few robust models that avoid specifying functional parametric relationships for main effects [32, 40]. Here, we introduce two more advanced methods, widely used for ITRs, that are robust to the types of misspecification commonly encountered in practice [42, 43].

Recall that the ITR for a linear model $E(Y \mid A = a, X) = \mu(A = a, X; \beta)$, $a = 0, 1$, with two predictive markers is $g(X, \beta) = I\{(\beta_4 + \beta_5 X_2 + \beta_6 X_3) > 0\}$. The treatment rule $g(X, \beta)$ may use only a subset of the high-dimensional covariates (e.g., $\{X_2, X_3\}$), but it always depends on the correct specification of $E(Y \mid A = a, X)$. Defining a scaled version of $\beta$ as $\eta(\beta)$, the corresponding ITR is $g(\eta, X) = g(X, \beta) = I(X_3 > \eta_0 + \eta_1 X_2)$, where $\eta_0 = -\beta_4/\beta_6$ and $\eta_1 = -\beta_5/\beta_6$ (assuming $\beta_6 > 0$; if $\beta_6 < 0$, the inequality is reversed). If the model for $\mu(A, X; \beta)$ is indeed correctly specified, $g(X, \beta)$ and $g(\eta, X)$ lead to the same optimal ITR. Hence, the treatment rule parameterized by $\eta$ can be derived from a regression model, or it may be based on key clinical considerations, which enables evaluation of $g(\eta, X)$ directly without reference to the regression model for $\mu(A, X; \beta)$.
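The rescaling can be checked numerically. This sketch, with hypothetical helper names `rule_beta` and `rule_eta`, draws random coefficients with $\beta_6 > 0$ and random marker values and counts how often the two parameterizations disagree when $\eta_0 = -\beta_4/\beta_6$ and $\eta_1 = -\beta_5/\beta_6$.

```python
import random


def rule_beta(b4, b5, b6, x2, x3):
    """g(X, beta) = I(beta4 + beta5*X2 + beta6*X3 > 0)."""
    return int(b4 + b5 * x2 + b6 * x3 > 0)


def rule_eta(b4, b5, b6, x2, x3):
    """g(eta, X) = I(X3 > eta0 + eta1*X2); valid only when beta6 > 0."""
    if b6 <= 0:
        raise ValueError("dividing by beta6 reverses the inequality unless beta6 > 0")
    eta0, eta1 = -b4 / b6, -b5 / b6
    return int(x3 > eta0 + eta1 * x2)


rng = random.Random(0)
mismatches = 0
for _ in range(10_000):
    b4, b5, b6 = rng.uniform(-2, 2), rng.uniform(-2, 2), rng.uniform(0.1, 2)
    x2, x3 = rng.gauss(0, 1), rng.gauss(0, 1)
    mismatches += rule_beta(b4, b5, b6, x2, x3) != rule_eta(b4, b5, b6, x2, x3)
print("mismatches:", mismatches)
```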

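Let $C_\eta = A\,g(\eta, X) + (1 - A)\{1 - g(\eta, X)\}$, where $C_\eta = 1$ indicates that the patient was assigned to the intervention recommended by the personalized treatment rule $g(\eta, X)$. Let $\hat{\pi}(X)$ denote the randomization ratio or the estimated propensity score $P(A = 1 \mid X)$ (as in the previous section), and let $m(X; \eta, \hat\beta) = \mu(1, X; \hat\beta)\,g(\eta, X) + \mu(0, X; \hat\beta)\{1 - g(\eta, X)\}$ denote the predicted outcome under the treatment rule, estimated from the model $E(Y \mid A = a, X) = \mu(A, X; \beta)$. For example, if $g(\eta, X) = 1$, then $m(X; \eta, \hat\beta) = \mu(1, X; \hat\beta)$. Two estimators of the expected response to treatment, the inverse probability weighted estimator (IPWE) and the doubly robust augmented IPWE (AIPWE), are given as follows:

$$\mathrm{IPWE}(\eta) = \frac{1}{n}\sum_{i=1}^{n} \frac{C_{\eta,i}\,Y_i}{\pi_c(X_i; \eta)}, \qquad \mathrm{AIPWE}(\eta) = \frac{1}{n}\sum_{i=1}^{n} \left\{ \frac{C_{\eta,i}\,Y_i}{\pi_c(X_i; \eta)} - \frac{C_{\eta,i} - \pi_c(X_i; \eta)}{\pi_c(X_i; \eta)}\, m(X_i; \eta, \hat\beta) \right\}, \tag{11}$$

where $\pi_c(X; \eta) = \hat\pi(X)\,g(\eta, X) + \{1 - \hat\pi(X)\}\{1 - g(\eta, X)\}$ is the probability of receiving the recommended treatment. The optimal treatment rule follows as $g(\hat\eta_{\text{opt}}, X)$, where $\hat\eta_{\text{opt}}$ maximizes $\mathrm{IPWE}(\eta)$ or $\mathrm{AIPWE}(\eta)$ computed from the above models; a constraint, such as $\|\eta\| = 1$, is imposed to obtain a unique solution [43]. If the propensity score is correctly specified, the IPWE yields robust (consistent) estimates; the AIPWE is doubly robust because it produces consistent estimates when either the propensity score or the model $E(Y \mid A = a, X)$ is misspecified, but not both [42, 43]. The companion R code is publicly available at http://onlinelibrary.wiley.com/doi/10.1111/biom.12191/suppinfo.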
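A minimal Python sketch of both estimators follows, under simplifying assumptions: a single covariate $x$, the linear rule $g(\eta, x) = I(\eta_0 + \eta_1 x > 0)$, a known randomization ratio of 0.5, and an outcome model `mu` that is simply set to the true mean function of the simulated data, standing in for a correctly specified fitted regression. The optimal $\eta$ is found by a grid search over unit vectors, which enforces $\|\eta\| = 1$; the grid search is an illustrative choice, not the optimization routine used in [43]. The data are simulated (hypothetically) so that the optimal rule is "treat if x > 0.2".

```python
import math
import random


def rule(eta, x):
    """Linear treatment rule g(eta, x) = I(eta0 + eta1 * x > 0)."""
    return 1 if eta[0] + eta[1] * x > 0 else 0


def ipwe(eta, data, pi):
    """Inverse probability weighted estimate of the mean outcome under g(eta, .)."""
    total = 0.0
    for x, a, y in data:
        g = rule(eta, x)
        c = a * g + (1 - a) * (1 - g)            # C_eta: treatment received = recommended
        pc = pi(x) * g + (1 - pi(x)) * (1 - g)   # pi_c: P(C_eta = 1 | X)
        total += c * y / pc
    return total / len(data)


def aipwe(eta, data, pi, mu):
    """Augmented IPWE; mu(a, x) is an outcome regression for E(Y | A = a, X = x)."""
    total = 0.0
    for x, a, y in data:
        g = rule(eta, x)
        c = a * g + (1 - a) * (1 - g)
        pc = pi(x) * g + (1 - pi(x)) * (1 - g)
        m = mu(1, x) * g + mu(0, x) * (1 - g)    # predicted outcome under the rule
        total += c * y / pc - (c - pc) / pc * m
    return total / len(data)


def best_eta(value_fn, n_grid=360):
    """Grid search over unit vectors eta = (cos t, sin t), i.e. ||eta|| = 1."""
    best, best_val = None, -math.inf
    for k in range(n_grid):
        t = 2 * math.pi * k / n_grid
        eta = (math.cos(t), math.sin(t))
        val = value_fn(eta)
        if val > best_val:
            best, best_val = eta, val
    return best, best_val


# Simulated two-arm RCT (hypothetical): the optimal rule is "treat if x > 0.2"
rng = random.Random(1)
data = []
for _ in range(4000):
    x = rng.uniform(-1, 1)
    a = 1 if rng.random() < 0.5 else 0
    y = 1 + x + 2 * a * (x - 0.2) + rng.gauss(0, 0.5)
    data.append((x, a, y))

pi = lambda x: 0.5                            # known randomization ratio
mu = lambda a, x: 1 + x + 2 * a * (x - 0.2)   # stands in for a correctly specified fit

eta_hat, value = best_eta(lambda e: aipwe(e, data, pi, mu))
print("AIPWE threshold:", -eta_hat[0] / eta_hat[1], "value:", value)
print("IPWE value at eta_hat:", ipwe(eta_hat, data, pi))
```

Replacing `mu` with a deliberately wrong model while keeping the correct `pi` is one way to explore the double-robustness property, at the cost of noisier estimates.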

5.5.2. Data Mining and Machine Learning

The methods presented in Section 5.5.1 are robust against misspecification of regression models. Yet they often require prespecification of the parametric form of the treatment rule (e.g., $I(X_3 > \eta_0 + \eta_1 X_2)$), which can be challenging in practice [44]. Well-established classification methods and other popular machine learning techniques can instead be customized to define treatment selection rules [44, 72, 73]; these methods avoid prespecifying the parametric form of the ITR. An ITR can be defined following a two-step approach: in the first step, treatment contrasts are estimated from a posited model, and in the second step, classification techniques are applied to determine the personalized treatment rule. For example, when only two treatments are considered, a new variable $Z$ can be defined based on the treatment contrast; that is, $Z = 1$ if $\Delta(X) = \mu(A = 1, X) - \mu(A = 0, X) > 0$ and $Z = 0$ otherwise. The absolute value of the treatment contrast, $W_i = |\Delta(X_i)|$, can be used as a weight in conjunction with a classification technique to define an appropriate ITR [44].

Unlike in standard classification problems, wherein the class labels are observed for the training data, the binary "response" variable $Z$, which serves as the class label, is not available in practice. Specifically, patients in the class $Z = 1$ have $\mu(A = 1, X) > \mu(A = 0, X)$ and should therefore be treated with the new therapy; however, these quantities must be estimated, since each patient is typically assigned to only one of the available treatments. This imparts flexibility for estimating optimal treatment regimes, since any of the previously discussed regression models, and even ensemble prediction methods such as random forest [74], can be used to construct the class labels and weights [44]. An ITR can then be estimated from the dataset $\{(\hat Z_i, X_i, \hat W_i)\}_{i=1}^{n}$ using any classification approach, where $\hat W_i$ are subject-specific misclassification weights [44, 45]. This includes popular classification methods such as adaptive boosting [75], support vector machines (SVM) [76], and classification and regression trees (CART) [77]. At least one study has suggested that SVM outperforms other classification methods in this context, and that random forest and boosting perform better than CART [78]. However, the performance of these classification algorithms is data dependent, and definitive conclusions about their comparative effectiveness have yet to be reached [78]. Note also that these classification methods can be applied to high-dimensional data [45, 72].
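The two-step approach can be sketched in Python as follows. Step 1 builds $\hat Z_i$ and $\hat W_i$ from a hypothetical fitted mean model `mu`; step 2 fits a depth-one classification tree (a single split on one covariate) that minimizes the weighted misclassification error $\sum_i \hat W_i I\{\hat Z_i \neq d(X_i)\}$. The hand-written stump stands in for a full CART fit such as rpart; the function names and the toy covariate grid are illustrative assumptions.

```python
import math


def contrast_labels(xs, mu):
    """Step 1: Z_i = I(Delta_i > 0) and W_i = |Delta_i| from a fitted mean model mu(a, x)."""
    deltas = [mu(1, x) - mu(0, x) for x in xs]
    return [1 if d > 0 else 0 for d in deltas], [abs(d) for d in deltas]


def weighted_stump(xs, zs, ws):
    """Step 2: depth-1 tree minimizing sum_i W_i * I(Z_i != d(X_i))."""
    pts = sorted(zip(xs, zs, ws))
    best = (math.inf, None, None)  # (weighted error, threshold, label predicted above threshold)
    for i in range(len(pts) - 1):
        thr = (pts[i][0] + pts[i + 1][0]) / 2
        for upper in (0, 1):
            err = sum(w for x, z, w in pts if z != (upper if x > thr else 1 - upper))
            if err < best[0]:
                best = (err, thr, upper)
    return best


xs = [i / 100 for i in range(-100, 101)]
mu = lambda a, x: 2.0 + 0.5 * x + a * (x - 0.3)   # hypothetical fitted model
zs, ws = contrast_labels(xs, mu)
err, thr, upper = weighted_stump(xs, zs, ws)
print(f"treat if x > {thr:.3f}" if upper == 1 else f"treat if x <= {thr:.3f}")
```

Because patients with a contrast near zero receive almost no weight, misclassifying them costs little, which is the intended behavior of the weighting.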

One special case of this framework is the "virtual twins" approach [45]. Specifically, in the first step a random forest [74] is used to estimate the treatment contrasts; in the second step, CART is used to classify subjects into the optimal treatment regime. The approach can be implemented in R using the packages randomForest [79] and rpart [80]. More recently, Kang et al. [42] proposed a modified version of the adaptive boosting technique of Friedman et al. [75]. The algorithm iteratively fits a simple logistic regression model ("working model") to estimate $P(Y = 1 \mid A, X)$ and, at each stage, assigns higher weights to subjects whose estimated treatment contrast is near zero. After a prespecified stopping criterion is met, the treatment contrasts from the models fitted at all iterations are averaged for each patient. A subject is assigned to the new therapy if this averaged contrast indicates a lower estimated risk of the adverse outcome ($Y = 1$) under the new therapy than under the standard one. The R code for the aforementioned boosting methods is publicly available at http://onlinelibrary.wiley.com/doi/10.1111/biom.12191/suppinfo.

Lastly, we present a breast cancer example in which several biomarkers were combined to construct an optimal ITR. The data were collected in the Southwest Oncology Group (SWOG) S8814 trial [13] and analyzed with the machine learning approach of Kang et al. [42]. Three hundred and sixty-seven node-positive, ER-positive breast cancer patients were selected from this randomized trial: 219 received tamoxifen plus adjuvant chemotherapy, and 148 received tamoxifen alone. The outcome variable was breast cancer recurrence at 5 years. The authors selected three genes that had shown treatment-biomarker interactions in a multivariate linear logistic regression model [42]. Data were analyzed with logistic models, IPWE, AIPWE, logistic boosting, a single classification tree with treatment-biomarker interactions, and the proposed boosting approach with a classification tree as the working model. The methods identified different patient cohorts that could benefit from tamoxifen alone, consisting of 184, 183, 128, 86, 263, and 217 patients, respectively (see Table 5 in [42]). In this analysis, the clinical benefits provided by these 6 treatment rules were not statistically different. Hence, investigators need to evaluate and compare ITRs in terms of the extent of their expected clinical impact. This is considered in the next section.

6. Performance Evaluation for Individualized Treatment Rules

So far, we have discussed various methodologies for constructing ITRs; their performance needs to be assessed before these rules can be implemented in clinical practice. Several aspects of the performance of a constructed ITR need to be considered. The first is how well the ITR fits the data, and the second is how well the ITR performs compared with existing treatment allocation rules. The former is related to the concept of goodness-of-fit or predictive performance [34]. Because the true optimal treatment groups are unobserved, model fit may be evaluated by measuring the agreement between observed and predicted treatment contrasts [34, 47]; more details can be found in a recent paper by Janes et al. [47]. The performance of ITRs can be compared via a global summary measure, for example, prolonged survival time or reduced disease rate [40, 42]. Summary measures are also useful for evaluating the extent to which an ITR may benefit patients when applied in practice. Moreover, it is essential that the performance of an ITR be compared with business-as-usual procedures, such as a naive rule that randomly allocates patients to treatment [81]. Summary measures are discussed in Section 6.1. The effectiveness of an ITR should extend beyond the training data set used to construct it; cross-validation and bootstrapping techniques are often employed to assess the impact of ITRs on future patients [81] and are discussed in Section 6.2.

6.1. Summary Measures

ITRs may be derived from different methodologies, and comparisons should be conducted with respect to appropriate clinical summaries. A few summary measures for different types of outcomes have been proposed [19, 40, 42]; these measures quantify the direct clinical improvement obtained by applying an ITR in comparison with default methods for treatment allocation.

Binary Outcomes. Clinical effectiveness for a binary clinical response is represented by the difference in disease (or treatment failure) rates induced by the ITR versus a default strategy that allocates all patients to a standard treatment [42, 47, 82]. Let $g_{\text{opt}}(X) = I\{\mu(A = 1, X) - \mu(A = 0, X) < 0\}$ be an optimal ITR, where $\mu(A = a, X)$ is the probability of disease under treatment $a$. This difference is formally defined as

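$$\Theta_B\{g_{\text{opt}}(X)\} = \left[P\{Y = 1 \mid A = 0, g_{\text{opt}}(X) = 1\} - P\{Y = 1 \mid A = 1, g_{\text{opt}}(X) = 1\}\right] P\{g_{\text{opt}}(X) = 1\}. \tag{12}$$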

Note that $\mu(A, X)$ needs to be estimated to construct the ITR; the parameters $\beta$ are omitted for simplicity. Larger values of $\Theta_B\{g_{\text{opt}}(X)\}$ indicate greater clinical value for the biomarker-driven ITR. Among the patients for whom the ITR recommends the new treatment ($A = 1$), some will have been randomly assigned to receive it, while the remaining "unlucky" patients will have received the standard treatment [19]. The summary measure $\Theta_B\{g_{\text{opt}}(X)\}$ characterizes a weighted difference in the disease rates between the standard and the new treatments in the subpopulation for which the constructed optimal ITR recommends the new treatment, $g_{\text{opt}}(X) = 1$. The weight is the proportion of patients identified by the optimal ITR for the new treatment and can be estimated empirically from the corresponding counts; for example, $P\{g_{\text{opt}}(X) = 1\}$ can be estimated by the number of patients recommended for the new treatment divided by the total sample size. A similar summary statistic can be derived for an alternative strategy that allocates all patients to the new treatment. The summary could be applied to the aforementioned breast cancer example [42], for instance, with the aim of finding a subgroup of patients who are likely to benefit from adjuvant chemotherapy, while those unlikely to benefit would be assigned tamoxifen alone to avoid the unnecessary toxicity and inconvenience of chemotherapy.
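An empirical version of (12) for a randomized trial might be computed as in the Python sketch below, assuming $Y = 1$ denotes disease, $A = 1$ the new treatment, and that both arms contain at least one patient among those the ITR recommends for the new treatment. The function name `theta_b` and the toy data are hypothetical.

```python
def theta_b(y, a, g):
    """Empirical Theta_B for a randomized trial.

    y: 1 = disease (treatment failure), 0 = no disease
    a: 1 = new treatment, 0 = standard treatment
    g: ITR recommendation, 1 = new treatment
    """
    n = len(y)
    rec_new = [i for i in range(n) if g[i] == 1]
    if not rec_new:
        return 0.0  # ITR never recommends the new treatment: same as the default
    std = [y[i] for i in rec_new if a[i] == 0]
    new = [y[i] for i in rec_new if a[i] == 1]
    rate_std = sum(std) / len(std)   # disease rate on standard treatment among g = 1
    rate_new = sum(new) / len(new)   # disease rate on new treatment among g = 1
    return (rate_std - rate_new) * len(rec_new) / n


# Toy data (hypothetical): 8 patients, the ITR recommends the new treatment to 4
y = [1, 1, 0, 0, 0, 1, 1, 0]
a = [0, 0, 1, 1, 0, 1, 0, 1]
g = [1, 1, 1, 1, 0, 0, 0, 0]
print(theta_b(y, a, g))
```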

Continuous Variables. Another strategy, for continuous data, is based on the outcomes observed for "lucky" subjects, those who happened to receive the therapy that the ITR would have recommended [81]. One business-as-usual allocation procedure randomizes patients to the new treatment or standard care with equal probability (0.5). A natural summary statistic then compares the mean outcome under the ITR with that under random assignment, for instance, the mean decrease in the Hamilton Rating Scale for Depression discussed in Section 5.1 [19]. Define the summary measure as $\Theta_C\{g_{\text{opt}}(X)\} = \mu\{g_{\text{opt}}(X), X\} - \mu\{g_{\text{rand}}(X), X\}$, where $g_{\text{rand}}(X)$ represents the random allocation procedure. The quantity $\mu\{g_{\text{opt}}(X), X\}$ represents the mean outcome under the constructed ITR and can be estimated empirically from the "lucky" subjects, and $\mu\{g_{\text{rand}}(X), X\}$ can be estimated empirically from the sample means.

Alternatively, an ITR may be compared with an "optimal" drug that has shown a universal benefit (i.e., is better on average) in a controlled trial. The clinical benefit of such a drug can be defined as $\mu\{g_{\text{best}}(X), X\} = \max\{\mu(A = 0, X), \mu(A = 1, X)\}$, where $\mu(A = 0, X)$ and $\mu(A = 1, X)$ can be estimated empirically from the sample means of the standard and new treatments, respectively. The alternative summary measure is then defined as $\Theta_C^{\text{alt}}\{g_{\text{opt}}(X)\} = \mu\{g_{\text{opt}}(X), X\} - \mu\{g_{\text{best}}(X), X\}$.
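Both continuous-outcome summaries can be estimated empirically as in the following Python sketch. It assumes 1:1 randomization, so that the mean under random allocation is the average of the two arm means; it estimates the mean under the ITR from the "lucky" subjects; and, following the max in $g_{\text{best}}$, it treats larger outcome values as better. The names `mean` and `theta_c` and the toy data are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def theta_c(y, a, g):
    """Return (Theta_C, Theta_C^alt) for a 1:1 randomized two-arm trial.

    y: continuous outcome; a: received arm (0 = standard, 1 = new);
    g: arm recommended by the ITR.
    """
    lucky = [yi for yi, ai, gi in zip(y, a, g) if ai == gi]
    mean_itr = mean(lucky)                            # mu{g_opt(X), X} from lucky subjects
    arm0 = mean([yi for yi, ai in zip(y, a) if ai == 0])
    arm1 = mean([yi for yi, ai in zip(y, a) if ai == 1])
    mean_rand = 0.5 * (arm0 + arm1)                   # equal-probability random allocation
    mean_best = max(arm0, arm1)                       # single "best on average" drug
    return mean_itr - mean_rand, mean_itr - mean_best


y = [5, 3, 4, 8, 2, 6]
a = [0, 1, 0, 1, 0, 1]
g = [0, 0, 1, 1, 0, 1]
print(theta_c(y, a, g))
```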

Survival Data. For survival data, a clinically relevant measure is mean overall (or progression-free) survival time. As survival time is continuous, the strategy described above for continuous outcomes can be employed here. However, because the mean survival time may not be well estimated from the observed data when a high percentage of observations are censored [40], the restricted mean survival time has been proposed as an alternative; it is defined as the population-average event-free duration up to a restriction time $t^*$ [41, 83]. Often $t^*$ is chosen to cover the trial's follow-up period. Mathematically, it is calculated by integrating the survival function $S(t)$ under the ITR over $(0, t^*)$, that is, $\mu\{g_{\text{opt}}(X), X, t^*\} = \int_0^{t^*} S(t)\,dt$, and it is often estimated by the area under the Kaplan-Meier curve up to $t^*$ [84]. Thus, an ITR's potential to prolong survival can be calculated as $\Theta_S\{g_{\text{opt}}(X), t^*\} = \mu\{g_{\text{opt}}(X), X, t^*\} - \mu\{g_{\text{rand}}(X), X, t^*\}$.
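The restricted mean survival time can be computed as the area under a Kaplan-Meier step function. The Python sketch below implements the Kaplan-Meier estimator directly and, by analogy with the continuous case, estimates the mean under the ITR from the "lucky" subjects and the mean under random allocation from the whole 1:1 randomized sample. These estimation choices and the names `kaplan_meier`, `rmst`, and `theta_s` are illustrative assumptions, not the exact procedure of [41, 83].

```python
def kaplan_meier(times, events):
    """Return [(t, S(t))] at each distinct event time (events: 1 = event, 0 = censored)."""
    pairs = sorted(zip(times, events))
    n_at_risk, surv, curve, i = len(pairs), 1.0, [], 0
    while i < len(pairs):
        t, d, c = pairs[i][0], 0, 0
        while i < len(pairs) and pairs[i][0] == t:   # group ties at time t
            if pairs[i][1]:
                d += 1
            else:
                c += 1
            i += 1
        if d > 0:
            surv *= 1 - d / n_at_risk
            curve.append((t, surv))
        n_at_risk -= d + c
    return curve


def rmst(times, events, t_star):
    """Area under the Kaplan-Meier step function on [0, t_star]."""
    area, prev_t, prev_s = 0.0, 0.0, 1.0
    for t, s in kaplan_meier(times, events):
        if t >= t_star:
            break
        area += prev_s * (t - prev_t)
        prev_t, prev_s = t, s
    return area + prev_s * (t_star - prev_t)


def theta_s(times, events, arms, recs, t_star):
    """RMST among lucky subjects minus RMST of the whole 1:1 randomized sample."""
    lucky = [i for i in range(len(times)) if arms[i] == recs[i]]
    rmst_itr = rmst([times[i] for i in lucky], [events[i] for i in lucky], t_star)
    return rmst_itr - rmst(times, events, t_star)


print(rmst([1, 2, 3, 4], [1, 0, 1, 1], t_star=4))
print(theta_s([1, 2, 3, 4, 5, 6], [1] * 6, [0, 1, 0, 1, 0, 1], [1] * 6, t_star=6))
```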

6.2. Assessing Model Performance

The summaries discussed so far evaluate an optimal ITR for a given model and estimation procedure. Because these quantities are estimated conditionally on the observed covariates, they do not quantify the extent of marginal uncertainty for future patients. Hence an ITR needs to be internally validated if external data are not available [34]. Cross-validation (CV) and bootstrap resampling techniques are commonly used for this purpose [19, 42, 45, 81], and both approaches are well described elsewhere [33, 85, 86].

We here briefly introduce a process proposed by Kapelner et al. [81] in the setting of personalized medicine. Tenfold CV is commonly used in practice, in which the data are randomly partitioned into 10 roughly equal-sized, mutually exclusive subsamples (folds). All methods under consideration are applied to 9/10 of the data, with the remaining 1/10 held out as an independent test set; the process is repeated 10 times so that each subsample serves once as the test set. Using the assignments recommended by the optimal ITRs, the summary measures can be calculated from the results of each test fold [45]. The CV process gives the estimated summary measures, and their variability can be evaluated using bootstrap procedures. Specifically, one draws a sample with replacement from the entire data set and calculates the summary measure from 10-fold CV. This process is repeated B times, where B is chosen to give adequate resolution of the resulting confidence intervals [81]. Treating the B resulting summary measures as a random sample, their mean and variance can be calculated empirically. Note that the summary measures compare two treatment rules: the optimal ITR and a naive rule (e.g., randomization).
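The resampling scheme above can be sketched in Python as follows. The ITR-fitting function (`fit_subgroup_rule`, which treats each sign of a single covariate with whichever arm has the higher training mean) and the summary (`lucky_minus_overall`, the lucky-subject mean minus the overall mean, assuming 1:1 randomization) are hypothetical placeholders for whichever method and measure are being evaluated; the percentile interval is one simple choice among several bootstrap intervals, and the data are simulated.

```python
import random
import statistics


def kfold_indices(n, k, rng):
    """Randomly partition indices 0..n-1 into k roughly equal, disjoint folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[f::k] for f in range(k)]


def cv_summary(data, fit_rule, summary, k, rng):
    """k-fold CV: fit the ITR on k-1 folds, evaluate the summary on the held-out fold."""
    vals = []
    for test_idx in kfold_indices(len(data), k, rng):
        held = set(test_idx)
        train = [row for i, row in enumerate(data) if i not in held]
        test = [data[i] for i in test_idx]
        vals.append(summary(fit_rule(train), test))
    return statistics.mean(vals)


def bootstrap_cv(data, fit_rule, summary, B=200, k=10, seed=0):
    """Repeat k-fold CV on B bootstrap resamples; return mean, SD, percentile 95% interval."""
    rng = random.Random(seed)
    reps = []
    for _ in range(B):
        boot = [rng.choice(data) for _ in data]   # resample with replacement
        reps.append(cv_summary(boot, fit_rule, summary, k, rng))
    reps.sort()
    return statistics.mean(reps), statistics.stdev(reps), (reps[int(0.025 * B)], reps[int(0.975 * B) - 1])


def fit_subgroup_rule(train):
    """Hypothetical ITR: within x > 0 and x <= 0, pick the arm with the higher mean."""
    rec = {}
    for side in (False, True):
        means = [statistics.mean(y for x, a, y in train if (x > 0) == side and a == arm)
                 for arm in (0, 1)]
        rec[side] = 1 if means[1] > means[0] else 0
    return lambda x: rec[x > 0]


def lucky_minus_overall(rule, test):
    """Theta_C-style summary: lucky-subject mean minus overall mean (1:1 randomization)."""
    lucky = [y for x, a, y in test if a == rule(x)]
    return statistics.mean(lucky) - statistics.mean(y for _, _, y in test)


gen = random.Random(42)
data = []
for _ in range(400):
    x = gen.uniform(-1, 1)
    a = gen.randint(0, 1)
    y = x + a * (1 if x > 0 else -1) + gen.gauss(0, 1)
    data.append((x, a, y))

est, sd, ci = bootstrap_cv(data, fit_subgroup_rule, lucky_minus_overall, B=100)
print(f"summary: {est:.3f} (SD {sd:.3f}), 95% interval {ci}")
```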

The above procedure can be applied to all the methods we have discussed so far. The R package TreatmentSelection (http://labs.fhcrc.org/janes/index.html) can be used to evaluate and compare biomarkers for treatment selection with binary outcomes [47]. More recently, an inferential procedure was proposed for continuous outcomes and implemented in the publicly available R package "Personalized Treatment Evaluator" [81, 87]. Both packages consider data from two-arm RCTs of comparative treatments. In general, these implementations are applicable to regression-model-based methods but are not suitable for approaches based on classification techniques or penalized regression.

Next we present two examples. Recall from Section 5.5 that Kang et al. [42] reported the estimated clinical benefit of an ITR for breast cancer compared with the default strategy of assigning all patients to adjuvant chemotherapy. The proposed approach (based on boosting and classification trees) achieved the highest value of the summary measure, 0.081, with 95% confidence interval (CI) (0.000, 0.159) [42]. In the second example, introduced in Section 5.1 [19], the authors calculated the mean score on the Hamilton Rating Scale for Depression for two groups of subjects, defined according to whether patients had been randomly assigned to the "optimal" or the "nonoptimal" therapy as defined by the ITR. The reported difference between the two groups was −1.78 (P = 0.09), which falls short of the clinically significant difference of 3 [19]. The same data were analyzed by Kapelner et al. [81]. Following the procedure described above, the authors reported estimated values (95% CI) of $\Theta_C\{g_{\text{opt}}(X)\}$ and $\Theta_C^{\text{alt}}\{g_{\text{opt}}(X)\}$ of −0.842 (−2.657, −0.441) and −0.765 (−2.362, 0.134), respectively. Although these results fall short of clinical significance, they account for out-of-sample uncertainty through cross-validation and bootstrapping and can therefore be considered more reliable estimates of the ITR's performance in future patients.

7. Discussion

As our understanding of tumor heterogeneity evolves, personalized medicine will become standard medical practice in oncology. Therefore, it is essential that the oncology community use appropriate analytical methods for identifying personalized treatment rules and evaluating their performance. This paper provided an exposition of the process for using statistical inference to establish optimal individualized treatment rules from data acquired in clinical studies. The quality of an ITR depends on the quality of the design used to acquire the data. Moreover, an ITR must be properly validated before it is integrated into clinical practice. Personalized medicine in some areas of oncology may be limited by the fact that biomarkers arising from a small panel of genes may never adequately characterize the extent of tumor heterogeneity inherent to the disease. Consequently, the available statistical methodology needs to evolve to optimally exploit global gene signatures for personalized medicine.

The bulk of our review focused on statistical approaches for treatment selection at a single time point. The reader should note that another important area of research considers optimal dynamic treatment regimes (DTRs) [88, 89], wherein treatment decisions are considered sequentially over the course of multiple periods of intervention using each patient's prior treatment history. Zhao and Zeng provide a summary of recent developments in this area [90].

Acknowledgments

Junsheng Ma was fully funded by the University of Texas MD Anderson Cancer Center internal funds. Brian P. Hobbs and Francesco C. Stingo were partially supported by the Cancer Center Support Grant (CCSG) (P30 CA016672).

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Reimand J., Wagih O., Bader G. D. The mutational landscape of phosphorylation signaling in cancer. Scientific Reports. 2013;3, article 2651. doi: 10.1038/srep02651.

- 2. Hanahan D., Weinberg R. A. Hallmarks of cancer: the next generation. Cell. 2011;144(5):646–674. doi: 10.1016/j.cell.2011.02.013.

- 3. Simon R. Clinical trial designs for evaluating the medical utility of prognostic and predictive biomarkers in oncology. Personalized Medicine. 2010;7(1):33–47. doi: 10.2217/pme.09.49.

- 4. Bedard P. L., Hansen A. R., Ratain M. J., Siu L. L. Tumour heterogeneity in the clinic. Nature. 2013;501(7467):355–364. doi: 10.1038/nature12627.

- 5. Pegram M. D., Pauletti G., Slamon D. J. HER-2/neu as a predictive marker of response to breast cancer therapy. Breast Cancer Research and Treatment. 1998;52(1–3):65–77. doi: 10.1023/a:1006111117877.

- 6. Kelloff G. J., Sigman C. C. Cancer biomarkers: selecting the right drug for the right patient. Nature Reviews Drug Discovery. 2012;11(3):201–214. doi: 10.1038/nrd3651.

- 7. DiMasi J. A., Reichert J. M., Feldman L., Malins A. Clinical approval success rates for investigational cancer drugs. Clinical Pharmacology and Therapeutics. 2013;94(3):329–335. doi: 10.1038/clpt.2013.117.

- 8. Hay M., Thomas D. W., Craighead J. L., Economides C., Rosenthal J. Clinical development success rates for investigational drugs. Nature Biotechnology. 2014;32(1):40–51. doi: 10.1038/nbt.2786.

- 9. Knox S. S. From ‘omics’ to complex disease: a systems biology approach to gene-environment interactions in cancer. Cancer Cell International. 2010;10, article 11. doi: 10.1186/1475-2867-10-11.

- 10. Deschoolmeester V., Baay M., Specenier P., Lardon F., Vermorken J. B. A review of the most promising biomarkers in colorectal cancer: one step closer to targeted therapy. The Oncologist. 2010;15(7):699–731. doi: 10.1634/theoncologist.2010-0025.

- 11. Sosman J. A., Kim K. B., Schuchter L., et al. Survival in BRAF V600–mutant advanced melanoma treated with vemurafenib. The New England Journal of Medicine. 2012;366(8):707–714. doi: 10.1056/nejmoa1112302.

- 12. Paik S., Shak S., Tang G., et al. A multigene assay to predict recurrence of tamoxifen-treated, node-negative breast cancer. The New England Journal of Medicine. 2004;351(27):2817–2826. doi: 10.1056/nejmoa041588.

- 13. Albain K. S., Barlow W. E., Shak S., et al. Prognostic and predictive value of the 21-gene recurrence score assay in postmenopausal women with node-positive, oestrogen-receptor-positive breast cancer on chemotherapy: a retrospective analysis of a randomised trial. The Lancet Oncology. 2010;11(1):55–65. doi: 10.1016/S1470-2045(09)70314-6.

- 14. Lang J. E., Wecsler J. S., Press M. F., Tripathy D. Molecular markers for breast cancer diagnosis, prognosis and targeted therapy. Journal of Surgical Oncology. 2015;111(1):81–90. doi: 10.1002/jso.23732.

- 15. Werft W., Benner A., Kopp-Schneider A. On the identification of predictive biomarkers: detecting treatment-by-gene interaction in high-dimensional data. Computational Statistics and Data Analysis. 2012;56(5):1275–1286. doi: 10.1016/j.csda.2010.11.019.

- 16. Jenkins M., Flynn A., Smart T., et al. A statistician's perspective on biomarkers in drug development. Pharmaceutical Statistics. 2011;10(6):494–507. doi: 10.1002/pst.532.

- 17. Vickers A. J., Kattan M. W., Sargent D. J. Method for evaluating prediction models that apply the results of randomized trials to individual patients. Trials. 2007;8(1), article 14. doi: 10.1186/1745-6215-8-14.

- 18. Janes H., Pepe M. S., Bossuyt P. M., Barlow W. E. Measuring the performance of markers for guiding treatment decisions. Annals of Internal Medicine. 2011;154(4):253–259. doi: 10.7326/0003-4819-154-4-201102150-00006.

- 19. DeRubeis R. J., Cohen Z. D., Forand N. R., Fournier J. C., Gelfand L. A., Lorenzo-Luaces L. The personalized advantage index: translating research on prediction into individualized treatment recommendations. A demonstration. PLoS ONE. 2014;9(1):e83875. doi: 10.1371/journal.pone.0083875.

- 20. Byar D. P., Corle D. K. Selecting optimal treatment in clinical trials using covariate information. Journal of Chronic Diseases. 1977;30(7):445–459. doi: 10.1016/0021-9681(77)90037-6.

- 21. Thatcher N., Chang A., Parikh P., et al. Gefitinib plus best supportive care in previously treated patients with refractory advanced non-small-cell lung cancer: results from a randomised, placebo-controlled, multicentre study (Iressa Survival Evaluation in Lung Cancer). The Lancet. 2005;366(9496):1527–1537. doi: 10.1016/s0140-6736(05)67625-8.

- 22. Vickers A. J. Prediction models in cancer care. CA: A Cancer Journal for Clinicians. 2011;61(5):315–326. doi: 10.3322/caac.20118.

- 23. Simon R. M. Subgroup analysis. In: Wiley Encyclopedia of Clinical Trials. Hoboken, NJ, USA: John Wiley & Sons; 2007.

- 24. Pocock S. J., Assmann S. E., Enos L. E., Kasten L. E. Subgroup analysis, covariate adjustment and baseline comparisons in clinical trial reporting: current practice and problems. Statistics in Medicine. 2002;21(19):2917–2930. doi: 10.1002/sim.1296.

- 25. Rothwell P. M., Mehta Z., Howard S. C., Gutnikov S. A., Warlow C. P. From subgroups to individuals: general principles and the example of carotid endarterectomy. The Lancet. 2005;365(9455):256–265. doi: 10.1016/s0140-6736(05)17746-0.

- 26. Wang R., Lagakos S. W., Ware J. H., Hunter D. J., Drazen J. M. Statistics in medicine: reporting of subgroup analyses in clinical trials. The New England Journal of Medicine. 2007;357(21):2189–2194. doi: 10.1056/nejmsr077003.

- 27. International Breast Cancer Study Group. Endocrine responsiveness and tailoring adjuvant therapy for postmenopausal lymph node-negative breast cancer: a randomized trial. Journal of the National Cancer Institute. 2002;94(14):1054–1065. doi: 10.1093/jnci/94.14.1054.

- 28. Early Breast Cancer Trialists' Collaborative Group (EBCTCG). Effects of chemotherapy and hormonal therapy for early breast cancer on recurrence and 15-year survival: an overview of the randomised trials. The Lancet. 2005;365(9472):1687–1717. doi: 10.1016/s0140-6736(05)66544-0.

- 29. Harrell F. E., Lee K. L., Mark D. B. Tutorial in biostatistics: multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Statistics in Medicine. 1996;15(4):361–387. doi: 10.1002/(SICI)1097-0258(19960229)15:4<361::AID-SIM168>3.0.CO;2-4.

- 30. Gill S., Loprinzi C. L., Sargent D. J., et al. Pooled analysis of fluorouracil-based adjuvant therapy for stage II and III colon cancer: who benefits and by how much? Journal of Clinical Oncology. 2004;22(10):1797–1806. doi: 10.1200/jco.2004.09.059.

- 31. Qian M., Murphy S. A. Performance guarantees for individualized treatment rules. The Annals of Statistics. 2011;39(2):1180–1210. doi: 10.1214/10-AOS864.

- 32. Lu W., Zhang H. H., Zeng D. Variable selection for optimal treatment decision. Statistical Methods in Medical Research. 2013;22(5):493–504. doi: 10.1177/0962280211428383.

- 33. Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI '95); 1995; pp. 1137–1145.

- 34. Steyerberg E. W., Vickers A. J., Cook N. R., et al. Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology. 2010;21(1):128–138. doi: 10.1097/ede.0b013e3181c30fb2.

- 35. Rosenbaum P. R., Rubin D. B. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70(1):41–55. doi: 10.1093/biomet/70.1.41.

- 36. d'Agostino R. B., Jr. Tutorial in biostatistics: propensity score methods for bias reduction in the comparison of a treatment to a non-randomized control group. Statistics in Medicine. 1998;17(19):2265–2281. doi: 10.1002/(sici)1097-0258(19981015)17:19<2265::aid-sim918>3.0.co;2-b.

- 37. Pazdur R. Endpoints for assessing drug activity in clinical trials. The Oncologist. 2008;13(supplement 2):19–21. doi: 10.1634/theoncologist.13-s2-19.

- 38. Spruance S. L., Reid J. E., Grace M., Samore M. Hazard ratio in clinical trials. Antimicrobial Agents and Chemotherapy. 2004;48(8):2787–2792. doi: 10.1128/AAC.48.8.2787-2792.2004.

- 39. Kalbfleisch J. D., Prentice R. L. The Statistical Analysis of Failure Time Data. Vol. 360. John Wiley & Sons; 2011.

- 40. Geng Y. Flexible Statistical Learning Methods for Survival Data: Risk Prediction and Optimal Treatment Decision. North Carolina State University; 2013.

- 41. Li J., Zhao L., Tian L., et al. A Predictive Enrichment Procedure to Identify Potential Responders to a New Therapy for Randomized, Comparative, Controlled Clinical Studies. Harvard University; 2014. (Harvard University Biostatistics Working Paper Series, Working Paper 169).

- 42. Kang C., Janes H., Huang Y. Combining biomarkers to optimize patient treatment recommendations. Biometrics. 2014;70(3):695–720. doi: 10.1111/biom.12192.

- 43. Zhang B., Tsiatis A. A., Laber E. B., Davidian M. A robust method for estimating optimal treatment regimes. Biometrics. 2012;68(4):1010–1018. doi: 10.1111/j.1541-0420.2012.01763.x.

- 44. Zhang B., Tsiatis A. A., Davidian M., Zhang M., Laber E. Estimating optimal treatment regimes from a classification perspective. Stat. 2012;1(1):103–114. doi: 10.1002/sta.411.

- 45. Foster J. C., Taylor J. M. G., Ruberg S. J. Subgroup identification from randomized clinical trial data. Statistics in Medicine. 2011;30(24):2867–2880. doi: 10.1002/sim.4322.

- 46. US Food and Drug Administration. Guidance for Industry: Clinical Trial Endpoints for the Approval of Cancer Drugs and Biologics. Washington, DC, USA: US Food and Drug Administration; 2007.

- 47. Janes H., Brown M. D., Pepe M., Huang Y. Statistical methods for evaluating and comparing biomarkers for patient treatment selection. UW Biostatistics Working Paper Series, Working Paper 389; 2013.

- 48. R Development Core Team. R: a language and environment for statistical computing. 2008. http://www.R-project.org/

- 49. Ma X.-J., Wang Z., Ryan P. D., et al. A two-gene expression ratio predicts clinical outcome in breast cancer patients treated with tamoxifen. Cancer Cell. 2004;5(6):607–616. doi: 10.1016/j.ccr.2004.05.015.

- 50. Ma X.-J., Hilsenbeck S. G., Wang W., et al. The HOXB13:IL17BR expression index is a prognostic factor in early-stage breast cancer. Journal of Clinical Oncology. 2006;24(28):4611–4619. doi: 10.1200/JCO.2006.06.6944.

- 51.Sgroi D. C., Carney E., Zarrella E., et al. Prediction of late disease recurrence and extended adjuvant letrozole benefit by the HOXB13/IL17BR biomarker. Journal of the National Cancer Institute. 2013;105(14):1036–1042. doi: 10.1093/jnci/djt146. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Cepeda M. S., Boston R., Farrar J. T., Strom B. L. Comparison of logistic regression versus propensity score when the number of events is low and there are multiple confounders. American Journal of Epidemiology. 2003;158(3):280–287. doi: 10.1093/aje/kwg115.

- 53.Austin P. C. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate Behavioral Research. 2011;46(3):399–424. doi: 10.1080/00273171.2011.568786.

- 54.Heinze G., Jüni P. An overview of the objectives of and the approaches to propensity score analyses. European Heart Journal. 2011;32(14):1704–1708. doi: 10.1093/eurheartj/ehr031.

- 55.Braitman L. E., Rosenbaum P. R. Rare outcomes, common treatments: analytic strategies using propensity scores. Annals of Internal Medicine. 2002;137(8):693–695. doi: 10.7326/0003-4819-137-8-200210150-00015.

- 56.Witten D. M., Tibshirani R. Survival analysis with high-dimensional covariates. Statistical Methods in Medical Research. 2010;19(1):29–51. doi: 10.1177/0962280209105024.

- 57.Hoerl A. E., Kennard R. W. Ridge regression: biased estimation for nonorthogonal problems. Technometrics. 2000;42(1):80–86. doi: 10.1080/00401706.2000.10485983.

- 58.Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B. Methodological. 1996;58(1):267–288.

- 59.Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101(476):1418–1429. doi: 10.1198/016214506000000735.

- 60.Efron B., Hastie T., Johnstone I., Tibshirani R. Least angle regression. The Annals of Statistics. 2004;32(2):407–499. doi: 10.1214/009053604000000067.

- 61.Hastie T., Efron B. lars: Least angle regression, lasso and forward stagewise. R package version 1.2, 2013, http://cran.r-project.org/web/packages/lars/index.html.

- 62.Fan J., Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96(456):1348–1360. doi: 10.1198/016214501753382273.

- 63.Zou H., Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society. Series B. Statistical Methodology. 2005;67(2):301–320. doi: 10.1111/j.1467-9868.2005.00503.x.

- 64.Krämer N., Schäfer J., Boulesteix A.-L. Regularized estimation of large-scale gene association networks using graphical Gaussian models. BMC Bioinformatics. 2009;10(1, article 384). doi: 10.1186/1471-2105-10-384.

- 65.Breheny P., Huang J. Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. The Annals of Applied Statistics. 2011;5(1):232–253. doi: 10.1214/10-AOAS388.

- 66.Hammer S. M., Katzenstein D. A., Hughes M. D., et al. A trial comparing nucleoside monotherapy with combination therapy in HIV-infected adults with CD4 cell counts from 200 to 500 per cubic millimeter. The New England Journal of Medicine. 1996;335(15):1081–1090. doi: 10.1056/nejm199610103351501.

- 67.Bøvelstad H. M., Nygård S., Størvold H. L., et al. Predicting survival from microarray data—a comparative study. Bioinformatics. 2007;23(16):2080–2087. doi: 10.1093/bioinformatics/btm305.

- 68.Kehl V., Ulm K. Responder identification in clinical trials with censored data. Computational Statistics and Data Analysis. 2006;50(5):1338–1355. doi: 10.1016/j.csda.2004.11.015.

- 69.Royston P., Parmar M. K. The use of restricted mean survival time to estimate the treatment effect in randomized clinical trials when the proportional hazards assumption is in doubt. Statistics in Medicine. 2011;30(19):2409–2421. doi: 10.1002/sim.4274.

- 70.Royston P., Parmar M. K. B. Restricted mean survival time: an alternative to the hazard ratio for the design and analysis of randomized trials with a time-to-event outcome. BMC Medical Research Methodology. 2013;13(1, article 152). doi: 10.1186/1471-2288-13-152.

- 71.Lee E. T., Wang J. W. Statistical Methods for Survival Data Analysis. Hoboken, NJ, USA: John Wiley & Sons; 2013.

- 72.Zhao Y., Zeng D., Rush A. J., Kosorok M. R. Estimating individualized treatment rules using outcome weighted learning. Journal of the American Statistical Association. 2012;107(499):1106–1118. doi: 10.1080/01621459.2012.695674.

- 73.Rubin D. B., van der Laan M. J. Statistical issues and limitations in personalized medicine research with clinical trials. The International Journal of Biostatistics. 2012;8(1):1–20. doi: 10.1515/1557-4679.1423.

- 74.Breiman L. Random forests. Machine Learning. 2001;45(1):5–32. doi: 10.1023/A:1010933404324.

- 75.Friedman J., Hastie T., Tibshirani R. Additive logistic regression: a statistical view of boosting. The Annals of Statistics. 2000;28(2):337–407. doi: 10.1214/aos/1016218223.

- 76.Cortes C., Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1007/BF00994018.

- 77.Breiman L., Friedman J., Stone C. J., Olshen R. A. Classification and Regression Trees. New York, NY, USA: CRC Press; 1984.

- 78.Dudoit S., Fridlyand J., Speed T. P. Comparison of discrimination methods for the classification of tumors using gene expression data. Journal of the American Statistical Association. 2002;97(457):77–87. doi: 10.1198/016214502753479248.

- 79.Liaw A., Wiener M. Classification and regression by randomForest. R News. 2002;2(3):18–22. http://CRAN.R-project.org/doc/Rnews/

- 80.Therneau T., Atkinson B., Ripley B. rpart: Recursive Partitioning and Regression Trees. R package version 4.1-3, http://cran.r-project.org/web/packages/rpart/index.html.

- 81.Kapelner A., Bleich J., Cohen Z. D., DeRubeis R. J., Berk R. Inference for treatment regime models in personalized medicine. http://arxiv.org/abs/1404.7844.

- 82.Song X., Pepe M. S. Evaluating markers for selecting a patient's treatment. Biometrics. 2004;60(4):874–883. doi: 10.1111/j.0006-341x.2004.00242.x.

- 83.Karrison T. Restricted mean life with adjustment for covariates. Journal of the American Statistical Association. 1987;82(400):1169–1176. doi: 10.1080/01621459.1987.10478555.

- 84.Barker C. The mean, median, and confidence intervals of the Kaplan-Meier survival estimate—computations and applications. The American Statistician. 2009;63(1):78–80. doi: 10.1198/tast.2009.0015.

- 85.Efron B., Tibshirani R. J. An Introduction to the Bootstrap. Vol. 57. CRC Press; 1994.

- 86.Arlot S., Celisse A. A survey of cross-validation procedures for model selection. Statistics Surveys. 2010;4:40–79. doi: 10.1214/09-SS054.

- 87.Kapelner A., Bleich J. PTE: Personalized Treatment Evaluator. 2014, R package version 1.0, http://CRAN.R-project.org/package=PTE.

- 88.Murphy S. A. Optimal dynamic treatment regimes. Journal of the Royal Statistical Society. Series B. Statistical Methodology. 2003;65(2):331–355. doi: 10.1111/1467-9868.00389.

- 89.Robins J. M. Optimal structural nested models for optimal sequential decisions. In: Proceedings of the Second Seattle Symposium in Biostatistics. Lecture Notes in Statistics, Vol. 179. Berlin, Germany: Springer; 2004. pp. 189–326.

- 90.Zhao Y., Zeng D. Recent development on statistical methods for personalized medicine discovery. Frontiers of Medicine in China. 2013;7(1):102–110. doi: 10.1007/s11684-013-0245-7.