Abstract

Objective. To increase the percentage of students who present or publish their work at the state, national, or international level.

Design. A multi-faceted intervention to increase student scholarly output was developed that included: (1) a 120-minute lecture on publishing quality improvement or independent study research findings; (2) abstract workshops; (3) poster workshops; and (4) a reminder at an advanced pharmacy practice experience (APPE) meeting encouraging students to publish or present posters. The effect of the intervention was measured as the percentage of students who presented or published, overall student productivity, and the number of student projects presented or published.

Assessment. The percentage of students who presented posters or published manuscripts increased significantly after the intervention (from 64% to 81%, p=0.01). Total student productivity increased from 84 to 147 posters, publications, and presentations. The number of projects presented or published increased from 50 to 77 in one year.

Conclusion. This high-impact, low-cost intervention increased scholarly output and may help students stand out in a competitive job market.

Keywords: research, quality improvement, student outcomes, national presentations, national posters

INTRODUCTION

Nationally, colleges and schools of pharmacy recognize the importance of incorporating student research into curricula.1 Although this takes a variety of forms and is still not required at most pharmacy schools, many institutions are trying to expose students to research.2 The 2011-2012 Argus Commission, a body composed of the five most recent past presidents of the American Association of Colleges of Pharmacy (AACP), was charged with answering a series of questions, including how to instill an attitude of inquisitiveness and scholarly thinking in pharmacists and other health care professionals and how to nurture emerging scientists among students and young faculty members. In its report, the Argus Commission suggested requiring students to engage in research projects and to prepare subsequent papers and/or presentations as a way of sharing in the “culture of scholarship and the excitement of discovery.”3

With support from AACP leadership, the Accreditation Council for Pharmacy Education (ACPE) incorporated statements about research into its standards. Specifically, both the 2007 and 2016 ACPE Standards state that a school’s commitment to research and scholarship must be incorporated into its mission and goals.4,5 For Standard 10j (Guidance for Curriculum Design, Delivery, and Oversight: Encouraging Scholarship), the guidance document for the 2016 Standards suggests that “elective courses that engage students in the research/scholarship programs of faculty are encouraged.”6 Conducting and presenting research projects is one way for students to achieve the 2013 Center for the Advancement of Pharmacy Education (CAPE) Outcomes, because each project teaches students to be learners, problem solvers, educators, communicators, leaders, innovators, entrepreneurs, and professionals, all of which are desired outcomes in the CAPE guidelines.7

The University of Arizona (UA) College of Pharmacy curriculum offers a unique combination of courses and projects that expose students to research throughout the doctor of pharmacy (PharmD) program. Required courses include: (1) a 3-course series consisting of biostatistics (2 credit hours), research methods (2 credit hours), and drug literature evaluation (2 credit hours); (2) a 2-course sequence on quality improvement and medication error reduction, with a didactic component (2 credit hours) and an experiential component involving a project and a local poster presentation (1 credit hour); and (3) a senior research requirement consisting of a proposal-writing class (2 credit hours) and a project with a local poster presentation (4 credit hours across 2 semesters). Students may also choose to complete independent study research projects. This sequence positions students to publish and present their research and quality improvement projects while still enrolled in the PharmD program. Quality improvement projects are completed by the end of the second professional year, and many students finish their senior or independent study research projects before beginning APPEs. As a result, UA students graduate having completed two research projects, two local poster presentations, and one research paper.

There is a paucity of published studies on the outcomes of pharmacy student research and quality improvement projects; the few that exist focus on student and faculty perceptions of the projects and on comparisons of research project types across schools. Kim et al surveyed students who completed a required senior research project. Of the 229 students who responded, 88.2% agreed or strongly agreed that the project was a valuable learning experience, and 73.2% agreed or strongly agreed that the project made them more qualified or marketable for the postgraduate opportunities they were exploring.8 Nykamp et al surveyed student authors to examine what motivated them to engage in scholarly activities and reported that, among respondents, the factors that most frequently influenced publication were personal fulfillment and making a contribution to the literature.9 In a study of preceptor attitudes toward student research projects, Kao et al found that while most preceptors (N=115) agreed that the projects provided a valuable learning experience (87.5%), results were shared for a much smaller percentage of projects (23.7% were developed into poster presentations, 4% into platform presentations, and 5.3% into peer-reviewed publications). Using a retrospective survey to estimate the time spent on research projects, the authors found that although 37,655 hours were spent on 153 projects, only 5 publications resulted.10 Together, these studies demonstrate that students and advisors find research projects to be valuable learning tools and that students can be intrinsically motivated to pursue publication.

To our knowledge, no published study has reported on an intervention intended to increase the scholarly output of pharmacy student research and quality improvement projects. Thus, the purpose of this study was to measure the outcome of an intervention designed to increase student publications and student presentations at state, national, and international meetings.

DESIGN

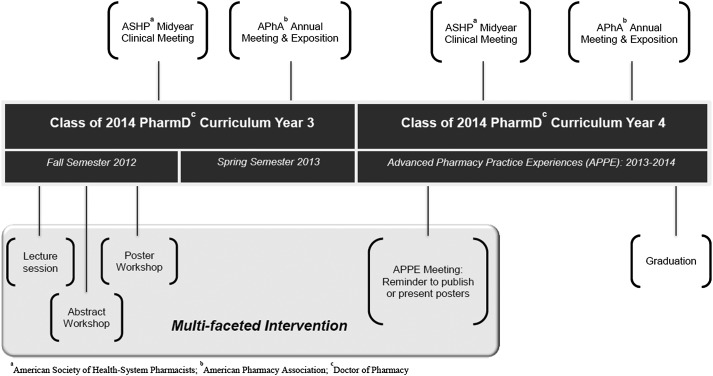

Although students at the UA College of Pharmacy are exposed to a research-focused curriculum, they receive no specific instruction on the process of submitting abstracts or manuscripts during the 4-year professional PharmD program. The multi-faceted intervention was created to address this educational gap. The class of 2013 served as the baseline, and the intervention began with the class of 2014. The in-class session took place in the fall semester of the third year, by which time the quality improvement projects were finished and all students had completed a poster for the school poster session. Many students had also completed independent study research projects by this time. The timing of the session also benefited students who were beginning to think about attending the American Society of Health-System Pharmacists (ASHP) Residency Showcase at the end of the semester. Figure 1 shows the timing of the intervention within the curriculum.

Figure 1.

Timeline of the Multi-faceted Intervention within the PharmD Curriculum.

The intervention included a 120-minute lecture-style class on publishing quality improvement or independent study research findings, abstract workshops, poster workshops, and a reminder at an APPE class meeting to publish or present posters. Students were encouraged to bring a laptop to the in-class portion of the intervention and to sit with their quality improvement or independent study teams. The course coordinator for the quality improvement course lectured on the importance of publishing and then guided students step by step through the abstract submission process for the American Pharmacists Association (APhA) Annual Meeting and Exposition and the ASHP Midyear Clinical Meeting. Following the lecture, the course coordinator hosted abstract and poster workshops to provide students with individualized feedback and submission support. Students encountered the final facet of the intervention in the summer of their fourth year: during an APPE meeting, they were reminded of the importance of publishing or presenting posters based on their senior, quality improvement, or independent study projects and were given abstract submission deadlines. They were also invited to participate in scheduled abstract and poster workshops.

The intervention can be described within the framework of Fink’s Taxonomy of Significant Learning, which provides a nonhierarchical, interrelational, and interactive taxonomy for developing learning objectives, learning activities, and assessments.11 This intervention used four of the taxonomy’s major categories: application, integration, human dimension, and caring. During the in-class portion of the intervention, students applied and integrated knowledge from the previous year. The human dimension was incorporated as students worked in teams to navigate the submission process. Students were given an opportunity to demonstrate caring as they worked to understand the implications of their research and the importance of sharing meaningful results to improve the profession.

For the purposes of evaluation, “presentation” was defined as a poster or podium presentation at a venue outside the college, beyond the local level; presentations at state, national, and international meetings were included. Print or online publications were included if they had at least a statewide audience; college publications and organization newsletters were excluded. In practice, therefore, a “publication” was a student manuscript published in a peer-reviewed or editorially reviewed journal.

The three measures of student presentation and publication were: (1) participation percentage per class (ie, the percentage of students in each class who presented or published); (2) overall student productivity; and (3) the total number of projects presented or published. To calculate participation percentages, each student presenter or author was counted only once: a student involved in several projects was still counted once, so the percentage reflects the proportion of each class that was active. The total numbers of student quality improvement and research projects for the classes of 2013 and 2014 were obtained from the course coordinators; unfortunately, the total number of independent study projects could not be obtained. To determine overall student productivity, all student presentations and publications were counted, so a student who presented or published multiple times was counted multiple times. The total number of projects presented or published was determined by counting each individual presentation and publication (encore presentations were excluded).
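The difference between the three measures is easiest to see on a small example. The Python sketch below is one possible reading of the definitions above, not the authors' actual tooling: the `Activity` record, its fields, and the toy data are hypothetical, and counting encore presentations toward overall productivity is an assumption (the text excludes encores only from the third measure).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """One presentation or publication (hypothetical record layout)."""
    title: str
    students: frozenset  # student presenters/authors on this activity
    encore: bool = False  # True if it repeats an earlier presentation


def participation_pct(activities, class_size):
    """Measure 1: each student counted once, however many activities."""
    students = set()
    for activity in activities:
        students |= activity.students
    return 100 * len(students) / class_size


def overall_productivity(activities):
    """Measure 2: every student appearance on every activity is counted
    (assumption: encore presentations are included here)."""
    return sum(len(activity.students) for activity in activities)


def projects_disseminated(activities):
    """Measure 3: each presentation/publication counted, encores excluded."""
    return sum(1 for activity in activities if not activity.encore)


# Hypothetical toy data for a class of 10 students
acts = [
    Activity("QI poster", frozenset({"s1", "s2", "s3"})),
    Activity("QI poster, encore", frozenset({"s1"}), encore=True),
    Activity("Senior project manuscript", frozenset({"s2", "s4"})),
]
print(participation_pct(acts, class_size=10))  # 40.0
print(overall_productivity(acts))              # 6
print(projects_disseminated(acts))             # 2
```

Here student s1 appears on two activities, so s1 adds twice to productivity but only once to participation, and the encore adds to productivity but not to the project count.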

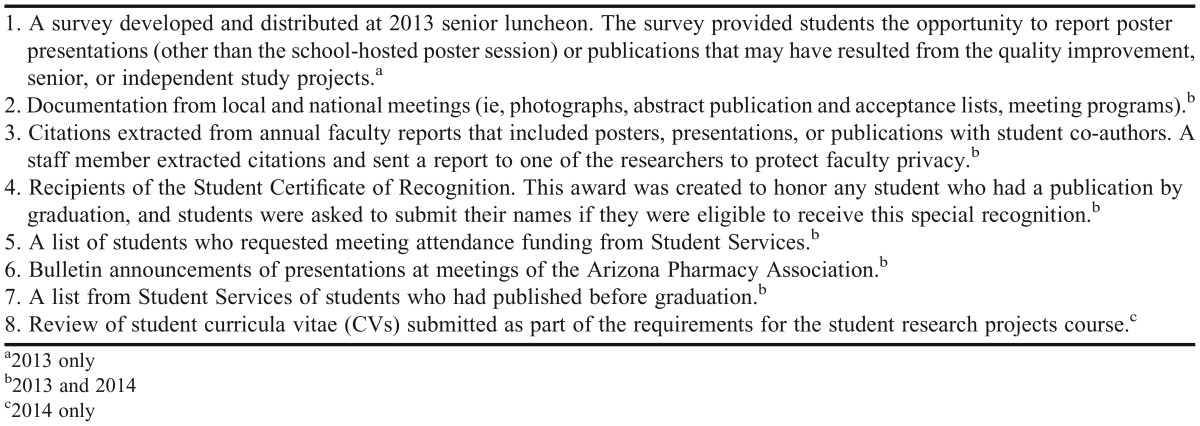

Multiple methods were used each year to collect data, including student surveys, meeting documentation, citations from faculty reports, student services reports, lists of student recognition award recipients, bulletin announcements, and student CVs. Results from all sources were compiled to obtain the most complete picture of student presentations and publications. Data collection methods are described in detail in Table 1.

Table 1.

Data Collection Methods for Tracking Student Productivity

Chi-square tests were used to determine whether the proportion of students who presented posters or published manuscripts changed.12 An alpha of 0.05 was selected a priori. Frequencies and percentages were used to summarize descriptive data (ie, overall student productivity and the number of unique posters or presentations). This program assessment was approved by the University of Arizona Human Subjects Protection Program.

EVALUATION AND ASSESSMENT

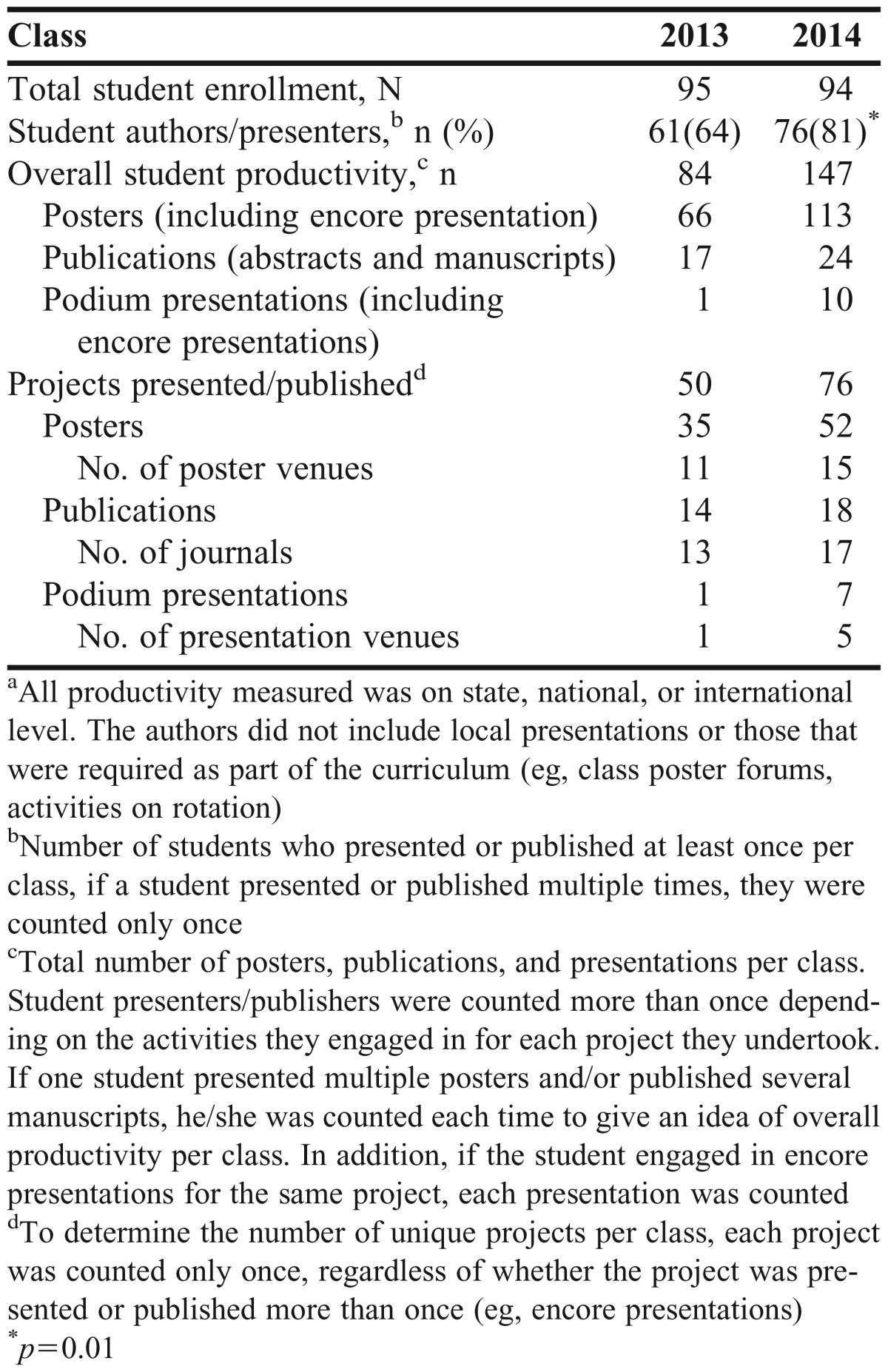

According to class statistics, the 2013 (baseline) and 2014 (intervention) classes were similar. There were 95 students in the class of 2013 and 94 in the class of 2014. The average age (range) was 25 (18-57) years for the class of 2013 and 23 (19-44) years for the class of 2014. Gender was also similar between the classes: 59% of the 2013 class and 63% of the 2014 class were female. Table 2 details the productivity of each class. Sixty-four percent of the 2013 class presented posters or published manuscripts at the state, national, or international level, compared with 81% of the class of 2014 (p=0.01). Overall student productivity rose from 84 scholarly activities (posters, publications, and presentations) in the 2013 class to 147 in the 2014 class. Total projects presented or published at the state, national, or international level rose from 50 in 2013 to 77 in 2014. Although data on study designs were not available, project advisors’ practice areas provided insight into the types of research completed. In 2013, projects were completed with advisors in clinical practice (50%), outcomes research (30%), external clinical practice (11%), basic sciences (7%), and industry (2%). Advisor practice areas were similar in 2014 but also included community practice: clinical practice (47%), external clinical practice (27%), community practice (12%), outcomes research (10%), basic sciences (4%), and industry (1%). This breakdown illustrates the potential breadth of the projects completed in 2013 and 2014 and shows that the mix of advisors working with student groups varies from year to year; advisors may also face differing external pressures within their own careers to present or publish.
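The reported p value can be checked roughly by hand. The sketch below runs a Pearson chi-square test without continuity correction on a 2 x 2 table; the cell counts (61 of 95 and 76 of 94) are back-calculated from the rounded percentages above and are an assumption, since the exact counts appear in Table 2. For one degree of freedom the chi-square statistic is the square of a standard normal variable, so its upper-tail probability is `math.erfc(sqrt(chi2 / 2))`.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns (chi2, p) with 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, chi2 = Z**2 for standard normal Z,
    # so P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: class of 2013, class of 2014; columns: presented/published, did not.
# Counts are ASSUMED, back-calculated from 64% of 95 and 81% of 94.
presented_2013, total_2013 = 61, 95
presented_2014, total_2014 = 76, 94
chi2, p = chi_square_2x2(presented_2013, total_2013 - presented_2013,
                         presented_2014, total_2014 - presented_2014)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")  # chi2 = 6.56, p = 0.010
```

With these assumed counts the uncorrected statistic is about 6.56 (p of about 0.01), consistent with the reported value; applying Yates' continuity correction to the same counts would give a somewhat larger p value.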

Table 2.

Student Productivity per Class

DISCUSSION

Although the college curriculum focuses on research methods and quality improvement, many students were not transitioning their results into publications or presentations outside the college. All students are required to complete both a senior project and a quality improvement project, and both are presented at the local level before graduation. Thus, by graduation each student has already completed a project report and presented two project posters locally. This intervention was designed to encourage students to present work they had already completed (ie, posters) at the state, national, and international levels. The authors told students that doing so might help set them apart in the job and residency marketplaces. The authors recognized that many research projects might not be appropriate for national dissemination but did not want students to be intimidated by the process. Moreover, even if a particular project was suited only to a local audience, the student authors would still gain exposure to and knowledge of the submission process and be better prepared to submit future residency projects at the national level. Another goal was to provide the structure and support needed to carry quality improvement and research projects through to presentation or publication for an entire cohort, rather than having each advisor spend additional time and resources teaching the process to individual student researchers.

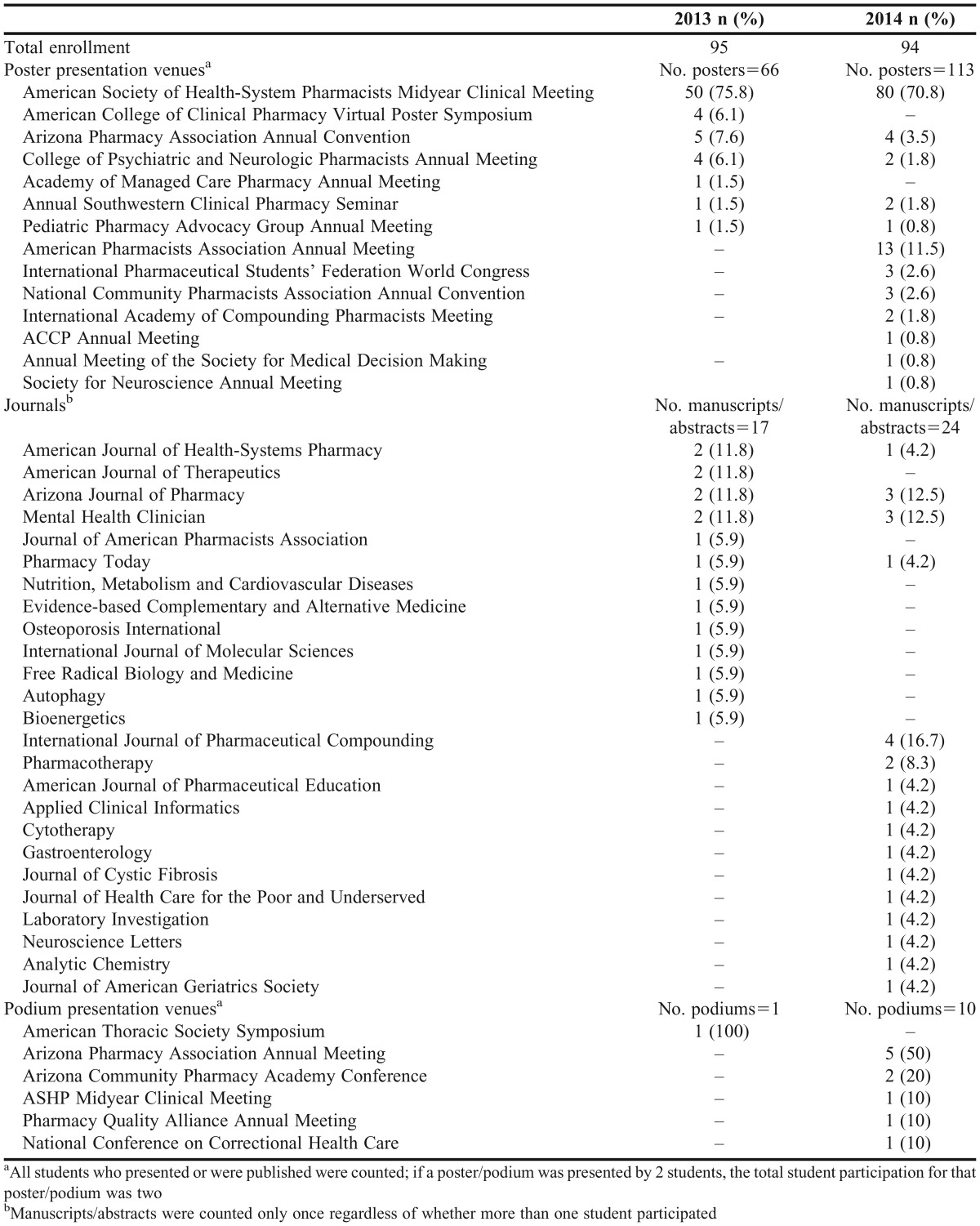

The intervention was effective: the percentage of students who presented or published increased significantly, from 64% to 81% in one year. The venues where students disseminated their work also became more diverse. Poster presentation venues represented a wide range of specialties and disciplines, some with peer-reviewed abstract acceptance and some with editorially reviewed acceptance of student projects. Although not all student poster presentations represented the same level of rigor or competition, the number of presentations at peer-reviewed venues increased (Table 3), reflecting both the breadth of student projects and the value of sharing project outcomes.

Table 3.

Student Presentation and Publication Venues and Journals per Class

The success of the intervention suggests that publication rates for faculty advisors, residents, and pharmacy students may also increase at other schools that use similar interventions. Kehrer and Svensson reported two major barriers to scholarship among pharmacy faculty members: (1) time and (2) research expertise and confidence.13 Although our intervention was built on existing research classes, it provided structure and support for students that helped faculty members and preceptors disseminate existing research projects that might otherwise not have been published or presented. Additionally, graduates of this program will have gained the experience needed to think critically, solve problems, and execute future research projects. Murphy and Valenzuela surveyed graduates of the UA College of Pharmacy and reported in 2002, when only a portion of the current required research curriculum was in place, that 46.2% of respondents (n=30) had conducted one or more research projects after graduation; notably, 34.4% (n=23) had published or presented their PharmD project findings.14 Our intervention may also address barriers identified by Irwin et al in their study of pharmacy residents and their research projects, in which almost half of the residents surveyed (43%) identified mentorship and structural issues as barriers to their success.15 Our intervention provided additional mentorship to students while requiring only a limited additional commitment from faculty members after the project was completed. The structure of the intervention is also flexible enough to be tailored to a smaller group (eg, residents or an elective course).

The presentation and publication rate for student projects reported in this study is higher than rates found in some of the literature. For example, Kao et al surveyed project preceptors and reported that, of 224 projects, 53 (23.7%) were presented as posters, 9 (4%) were presented as podium presentations, and 12 (5.3%) were published.10 Johnson et al evaluated an elective student research program involving several laboratory disciplines.16 Based on a survey sent to 25 students (22 responses), the authors reported that 6 students (27%) had published and 6 (27%) had given national presentations, although it is not clear whether the 6 publications and 6 presentations represented different projects. Assemi et al found that of the 111 senior research projects reviewed, 56 (50.4%) were “disseminated externally.”17 Several factors may contribute to these differences in rates. First, the goal of our intervention was to expose students to aspects of scholarship and increase their publication rates; as such, our data focus on student outcomes, whereas other rates in the literature are reported as percentages of projects. Although we knew the total number of quality improvement and senior projects completed by each class, we did not know the number of independent research projects; therefore, an accurate denominator for reporting a percentage of projects was not available, which made direct comparison difficult. Second, UA students were required to be involved in at least two different projects, a quality improvement project and a research project, so they had more opportunities for publication and presentation than students at some other institutions may have had. Third, the incentives offered to students may have differed. UA students who presented at a conference were given a small travel stipend, which may have encouraged submission and presentation; however, this stipend was not part of the intervention and had been established before the study. Another factor that may explain the higher rates at UA is that some faculty members required students to present. Finally, the relatively low rates reported for other student research programs may reflect the methods used to collect data; for example, relying on a single method to identify student presentations and publications is likely to substantially underestimate the actual number.

The presentation and publication rates reported here are also higher than many of those reported for medical student research. Beier and Chen’s review of reports on medical student research found that publication rates in peer-reviewed journals varied from 8% to 85% and that rates of presentation at regional or national meetings ranged from 10% to 41%.18 Data on student publications and presentations were most commonly collected through student self-reports or reviews of school records, although Beier and Chen also reported the use of Medline searches to identify student publications.18 As with reports of pharmacy student research, studies involving medical students relied primarily on student self-reports, which could have resulted in underreporting.

The multi-faceted intervention developed in this study was low cost. The principal investment was the time required to develop and implement the lecture-style class, organize the “abstract workshop,” and review student presentations and publications. Reviewing submissions sometimes involved heavy editing, but it improved their quality. To ease the workload, the lecture-style class was later shortened to 60 minutes. The poster and abstract review workshops also changed over time: initially they involved one faculty member, but they eventually included assistance from graduate students in the Pharmaceutical Economics, Policy, and Outcomes PhD program. This assistance reduced the burden on the faculty member and provided opportunities for teaching and mentorship between graduate students and PharmD students. Other resources that kept costs low included administrative support services, such as access to a college-owned poster printer and technical support for students who printed on campus. However, like the small travel stipend, these administrative services were in place before the intervention was developed and implemented.

This intervention would not have been possible without the underlying support and structure of the quality improvement and student research project programs and the faculty advisors who guided students throughout their projects. By the time faculty members and students finish a project, they have expended considerable resources. However, unless a state, national, or international poster presentation or peer-reviewed publication results, the time spent and skills gained may not be recognized on annual reports or curricula vitae. Thus, ensuring that a presentation or publication results increases the return on that investment.

An incidental finding of this evaluation was that information on student presentations and publications was difficult to collect. Initially, the primary method of data collection was student self-report (ie, a survey); however, it quickly became evident that students were not accurately reporting their accomplishments. In fact, student self-reports accounted for only 64% (n=54) of the 84 total activities in 2013. Schools wishing to collect data on student productivity can find a complete list of the data collection methods used in this study in Table 1. Data collection evolved over the course of this project, and in both years the senior research project and quality improvement project coordinators noted that self-reported data (from the student survey and student CVs) were incomplete. In both years, further investigation was required to ensure accurate and complete reporting. There were likely several reasons for underreporting in student self-reports. Because neither presentation outside the college nor publication was required in the quality improvement or research project classes, students may not have recognized the importance of reporting these data, and recall bias may also have been a factor. All state and national presentations and publications occurred outside class time, and the course coordinators did not oversee the process as part of their courses. Timing was also an issue, particularly for publication: project findings may not have been published until well after the course was completed, or even after the student graduated. In those cases, the only available method of collecting the data was searching annual reports to determine whether the faculty advisor had listed the publication.

Accurate data collection is important because presentations and publications are the primary criteria by which the success of student research programs is judged; however, the best method for collecting these data is still under discussion. As mentioned above, student self-reporting resulted in substantial underreporting. Kao et al addressed this problem by surveying faculty preceptors rather than students and obtained a response rate of 92%.10 Assemi et al also used preceptor reporting to determine project dissemination and reported a similar response rate of 90.2%.17 Although these response rates are excellent, this data collection method places the burden on faculty members who have already spent considerable time on student projects, and it can also result in underreporting if not all faculty members respond. UA data are now collected from a number of sources and cross-referenced in an attempt to achieve completeness and accuracy (see Table 1).
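Cross-referencing several sources amounts to merging records on a matching key and tracking which sources reported each activity; the share captured by any single source then follows directly (for example, self-reports captured 54 of 84 activities, about 64%, in 2013). The Python sketch below assumes each source can be reduced to (title, venue, year) records that are matched after simple text normalization; the source names, titles, and matching rule are hypothetical, and real records would likely still need manual review of near-matches.

```python
import re
from collections import defaultdict


def _clean(text):
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def activity_key(title, venue, year):
    """Hypothetical matching key for one presentation or publication."""
    return (_clean(title), _clean(venue), int(year))


def merge_sources(sources):
    """Map each unique activity key to the set of sources that reported it."""
    merged = defaultdict(set)
    for source_name, records in sources.items():
        for title, venue, year in records:
            merged[activity_key(title, venue, year)].add(source_name)
    return merged


def coverage_pct(merged, source_name):
    """Percentage of all unique activities that one source captured."""
    hits = sum(1 for reporters in merged.values() if source_name in reporters)
    return 100 * hits / len(merged)


# Hypothetical records from three sources (titles and venues are invented)
sources = {
    "student_survey": [("Reducing Dosing Errors", "APhA Annual Meeting", 2013)],
    "faculty_reports": [
        ("Reducing dosing errors.", "APhA annual meeting", 2013),
        ("Adherence Outcomes", "ASHP Midyear Clinical Meeting", 2013),
    ],
    "meeting_programs": [
        ("Adherence outcomes", "ASHP Midyear Clinical Meeting", 2013),
    ],
}
merged = merge_sources(sources)
print(len(merged))                             # 2
print(coverage_pct(merged, "student_survey"))  # 50.0
```

Keeping the set of reporting sources for each activity, rather than just a deduplicated list, is what makes it possible to quantify how much any one method, such as self-report, misses.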

Since this study, new methods have been identified to improve data collection. Students are now provided a CV template that includes specific sections on research and on publications and presentations. In addition, the senior research project course coordinator includes instruction on how to report research on a CV, because the data collection process revealed that students did not know which presentations should be reported. Course coordinators may also want to create an online presentation on preparing manuscripts for publication. To give students additional support in drafting and submitting manuscripts before graduation, the research project timeline may be revised to begin earlier in the curriculum. Finally, a more concerted effort could be made to ask advisors to encourage publication and presentation.

This study was a pre/post program evaluation, not a controlled study, and therefore has a number of limitations and potential confounding factors. First, as the number of pharmacy students in the United States has grown, competition for residencies and jobs has increased; students may therefore have sought opportunities to present or publish regardless of the intervention. Second, project advisors may have offered varying levels of encouragement to publish or present, which would have been difficult to measure. Assemi et al also noted that as preceptors gain experience mentoring senior research projects, they might have a stronger influence on publication and presentation.17 Future studies are needed to measure advisors’ attitudes toward the intervention and toward student publication and presentation. Third, the number of students who worked on independent study projects or other projects not associated with classes was unknown, which made it impossible to calculate accurate denominators for some metrics. Finally, the slight difference in data collection methods between 2013 and 2014 (self-report on a questionnaire in 2013 vs self-report on CVs in 2014) is a limitation. However, although data collection methods differed slightly, the researchers made a concerted effort each year to reach out personally to students to ensure that any data missing from self-reports were recorded.

CONCLUSION

This evaluation suggests that a low-cost, multi-faceted intervention consisting of a lecture and workshops can substantially increase the number of publications and presentations resulting from quality improvement and student research projects.

REFERENCES

- 1. Lee MW, Clay PG, Kennedy WK, et al. The Essential Research Curriculum for Doctor of Pharmacy Degree Programs. Pharmacotherapy. 2010;30(9):344e–349e. doi: 10.1592/phco.30.9.966.

- 2. Murphy JE, Slack MK, Boesen KP, Kirkling DM. Research-related Coursework and Research Experiences in Doctor of Pharmacy Programs. Am J Pharm Educ. 2007;71(6):113. doi: 10.5688/aj7106113.

- 3. Speedie MK, Baldwin JN, Carter RA, Raehl CL, Yanchick VA, Maine LL. Cultivating ‘Habits of Mind’ in the Scholarly Pharmacy Clinician: Report of the 2011-12 Argus Commission. Am J Pharm Educ. 2012;76(6):S3. doi: 10.5688/ajpe766S3.

- 4. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. 2006. https://www.acpe-accredit.org/standards/. Accessed February 23, 2015.

- 5. Accreditation Council for Pharmacy Education. Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree: Standards 2016. 2015. https://www.acpe-accredit.org/pdf/Standards2016FINAL.pdf. Accessed February 17, 2015.

- 6. Accreditation Council for Pharmacy Education. Guidance for the Accreditation Standards and Key Elements for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree: Guidance for Standards 2016. 2015. https://www.acpe-accredit.org/pdf/GuidanceforStandards2016FINAL.pdf. Accessed February 17, 2015.

- 7. Medina MS, Plaza CM, Stowe CD, et al. Center for the Advancement of Pharmacy Education (CAPE) educational outcomes 2013. Am J Pharm Educ. 2013;77(8):162. doi: 10.5688/ajpe778162. http://www.aacp.org/documents/CAPEoutcomes071213.pdf. Accessed February 23, 2015.

- 8. Kim SE, Whittington JI, Nguyen LM, Ambrose PJ, Corelli RL. Pharmacy Students’ Perceptions of a Required Senior Research Project. Am J Pharm Educ. 2010;74(10):190. doi: 10.5688/aj7410190.

- 9. Nykamp D, Murphy JE, Marshall LL, Bell A. Pharmacy Students’ Participation in a Research Experience Culminating in Journal Publication. Am J Pharm Educ. 2010;74(3):47. doi: 10.5688/aj740347.

- 10. Kao DJ, Suchanek Hudman K, Corelli RL. Evaluation of a Required Senior Research Project in a Doctor of Pharmacy Curriculum. Am J Pharm Educ. 2011;75(1):5. doi: 10.5688/ajpe7515.

- 11. Fink LD. Creating Significant Learning Experiences: An Integrated Approach to Designing College Courses. San Francisco, CA: Jossey-Bass; 2003.

- 12. Preacher KJ. Calculation for the chi-square test: An interactive calculation tool for chi-square tests of goodness of fit and independence [Computer software]. April 2001. Available from http://quantpsy.org.

- 13. Kehrer JP, Svensson CK. Advancing Pharmacist Scholarship and Research Within Academic Pharmacy. Am J Pharm Educ. 2012;76(10):187. doi: 10.5688/ajpe7610187.

- 14. Murphy JE, Valenzuela R. Attitudes of Doctor of Pharmacy Graduates of One U.S. College Toward Required Evaluative Projects and Research-related Coursework. Pharm Educ. 2002;2(2):69–73.

- 15. Irwin AN, Olson KL, Joline BR, Witt DM, Patel RJ. Challenges to publishing pharmacy resident research projects from the perspectives of residency program directors and residents. Pharm Pract. 2013;11(3):166–172. doi: 10.4321/s1886-36552013000300007.

- 16. Johnson JA, Moore MJ, Shin J, Frye RF. A summer research training program to foster PharmD students’ interest in research. Am J Pharm Educ. 2008;72(2):23. doi: 10.5688/aj720223.

- 17. Assemi M, Ibarra F, Mallios R, Corelli RL. Scholarly contributions of required senior research projects in a doctor of pharmacy curriculum. Am J Pharm Educ. 2015;79(2):23. doi: 10.5688/ajpe79223.

- 18. Beier SB, Chen HC. How to measure success: the impact of scholarly concentrations on students—A literature review. Acad Med. 2010;85:438–452. doi: 10.1097/ACM.0b013e3181cccbd4.