Abstract

Objective. To examine the relationship between admissions, objective structured clinical examination (OSCE), and advanced pharmacy practice experience (APPE) scores.

Methods. Admissions, OSCE, and APPE scores were collected for students who graduated from the doctor of pharmacy (PharmD) program in spring of 2012 and spring of 2013 (n=289). Pearson correlation was used to examine relationships between variables, and independent t test was used to compare mean scores between groups.

Results. All relationships among admissions data (undergraduate grade point average, composite PCAT scores, and interview scores) and OSCE and APPE scores were weak, with the strongest association found between the final OSCE and ambulatory care APPEs. Students with low scores on the final OSCE performed lower than others on the acute care, ambulatory care, and community APPEs.

Conclusion. This study highlights the complexities of assessing student development of noncognitive professional skills over the course of a curriculum.

Keywords: admissions, objective structured clinical examination, advanced pharmacy practice experiences, assessment, student development

INTRODUCTION

Understanding and evaluating student development throughout a health professions curriculum is a complex undertaking.1 Growing demands for transparency, evidence-based quality improvement, and data-informed decision making from accreditors, stakeholders, and other constituents highlight the need to rethink approaches to assessing student development and aligning assessment strategies to demonstrate achievement of desired programmatic outcomes.2 As curricular reform efforts proceed at an increasing number of pharmacy colleges and schools, attention may need to be given to the design and implementation of evidence-based assessment strategies that span admission to graduation and beyond.3-5 This approach is particularly important as the role of pharmacy expands within the rapidly changing health care system, pointing to the need to develop and foster critical interpersonal and interprofessional skills in student pharmacists.6

While some pharmacy education studies have focused on the longitudinal assessment of cognitive measures of student learning,7 challenges associated with measuring and predicting noncognitive or professional skill development (eg, adaptability, collaboration, and moral reasoning) over time remain. During the admissions process, pharmacy schools generally seek to admit applicants who are academically prepared for the curriculum and possess personal characteristics identified as valuable for practicing pharmacists. However, a growing body of literature demonstrates the challenges associated with predicting an applicant’s future performance.8-10 Previous studies suggest that prepharmacy academic indicators, such as undergraduate grade point average (uGPA) and Pharmacy College Admission Test (PCAT) scores, can be predictive of performance in didactic coursework. However, the strength of these relationships tends to decrease with each subsequent year of the program.11,12 When examining the predictive validity of uGPA and standardized admissions tests in medicine, researchers have noted mixed findings and subsequently highlighted the importance of strengthening admissions models to better select students who will be successful in a clinical setting.13,14 Further, the relationship between admissions interviews designed to assess relevant skills (such as empathy, teamwork, and communication) and clinical performance in pharmacy remains unclear.

Future clinical performance may be better predicted by OSCE scores.15,16 These examinations are standardized patient interactions designed to assess student competence in the clinical knowledge and skills necessary for success in advanced practice experiences and future practice. In medical education, for example, Peskun et al reported correlations between clerkship performance and second-year OSCE scores.16 Similarly, Meszaros et al reported a significant relationship between APPE performance and a 3-tiered evaluation that included OSCEs.15

The development of professional knowledge and skills that include both content or cognitive knowledge and noncognitive or professional attributes is critical for the profession, particularly as the role of pharmacists continues to expand. To date, little research in pharmacy education examines metrics designed to assess noncognitive or professional attributes, in addition to content knowledge. Specifically, the relationship between admissions, OSCEs, and clinical performance remains unclear. The purpose of this study was to examine the association between admissions scores, OSCE performance, and APPE grades. This study extends the literature as one of the first to examine admissions parameters and OSCEs as indicators of student pharmacists’ APPE performance.

METHODS

Admissions data, OSCE scores, and final evaluation scores from all APPEs were collected from students who graduated from the PharmD program at the UNC Eshelman School of Pharmacy in spring 2012 and spring 2013. Students who did not graduate 4 years from the time of enrollment were excluded from the study. For the 2012 and 2013 classes, the 4-year professional program required 6 semesters of coursework in the classroom and 11 months of pharmacy practice experiences consisting of 2 introductory pharmacy practice experiences and 9 APPEs.

Collected admissions data included uGPA, PCAT composite scores, and composite interview scores. As a part of the application process, the classes of 2012 and 2013 were interviewed by 2 faculty interviewers using a structured interview method. The interview form consisted of 7 questions, each designed to assess a distinct attribute, such as integrity, communication skills, and understanding of the profession. Although the 2 faculty members interviewed a candidate together, they were required to independently score the candidate on a scale of 1 (lowest) to 4 (highest) for each question. For this study, interview scores were converted to a percentage based on the number of points awarded by the 2 interviewers divided by total possible points.
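As a rough illustration of the percentage conversion described above, the following Python sketch computes a composite interview score. The function name and the sample ratings are hypothetical; only the 7 questions, the 2 independent interviewers, and the 1-to-4 scale come from the text.

```python
def interview_percentage(scores_a, scores_b, max_per_question=4):
    """Convert two interviewers' question-level ratings to a percentage.

    scores_a, scores_b: one rating per question from each interviewer.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("Both interviewers must rate the same questions")
    for s in list(scores_a) + list(scores_b):
        if not 1 <= s <= max_per_question:
            raise ValueError(f"Rating {s} is outside 1..{max_per_question}")
    # Points awarded by both interviewers divided by total possible points.
    awarded = sum(scores_a) + sum(scores_b)
    possible = max_per_question * (len(scores_a) + len(scores_b))
    return 100.0 * awarded / possible


# Hypothetical ratings for one candidate on the 7 interview questions.
rater_1 = [3, 4, 2, 3, 3, 4, 3]
rater_2 = [3, 3, 2, 4, 3, 3, 3]
pct = interview_percentage(rater_1, rater_2)
print(f"{pct:.1f}%")
```

Because the lowest rating on each question is 1 rather than 0, a percentage computed this way can range only from 25 to 100.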

Scores from OSCEs included a single score for each OSCE completed during 3 consecutive semesters: fall of the second year (P2); spring of P2; and fall of the third year (P3). The OSCEs were administered as end-of-semester examinations in the Pharmaceutical Care Lab course sequence. Student OSCE performance was assessed by standardized patients using 2 instruments: (1) a case-specific clinical checklist designed to assess pharmacy topic competency; and (2) a relationship and communication (R+C) instrument designed to assess empathic communication. Clinical checklists consisted of 8 to 12 items that were each scored on a scale of 0 to 2, with 0 indicating “did not attempt,” 1 indicating “incorrect,” and 2 indicating “correct.” The R+C instrument was adapted from the Accreditation Council for Graduate Medical Education competencies for interpersonal and communication skills and professionalism and consisted of 8 items scored on a 5-point Likert scale (1=poor and 5=excellent).17 Standardized patients were recruited and trained by the Schools of Medicine and Pharmacy from a pool used throughout the year in OSCEs for multiple health professions programs. Final OSCE scores were converted to a percentage based on the number of points awarded by the standardized patients divided by total possible points.

In the fourth year (P4) of the curriculum, students were assigned to a region of the state where they completed the majority of their APPEs. Regional directors assigned students to 9 APPEs based on student requests and preceptor availabilities. Every student was assigned to the required APPEs of acute care, ambulatory care, community pharmacy, and hospital pharmacy in any order. Each APPE lasted one month, during which preceptors provided a midpoint evaluation and a final evaluation. All evaluations were completed using an online evaluation form via RxPreceptor (RxInsider, West Warwick, RI), which provides a final numeric score representing total points awarded by the preceptor out of a possible 100 points. This numeric score was then converted to a “pass, fail, honors” designation.

For this study, APPE final evaluation scores were collected and categorized according to the 4 basic types of APPEs: acute care, clinical specialty, ambulatory, and community pharmacy. The final evaluation score received for the first occurrence of each of the 4 types of APPEs was used for each student. In addition, the first clinical APPE grade, regardless of APPE type, was analyzed as this was believed to provide a chronologically consistent measure across all students. The first APPE score was for the first APPE of 9 and, for some of the students, was also one of the 4 required APPEs. In those cases, the score for the APPE was entered into both categories for analysis. This study was deemed exempt from full review by the Institutional Review Board of the University of North Carolina at Chapel Hill.

All data analyses were conducted using SPSS v22 (IBM, Armonk, NY). Pearson correlation (rp) was used to examine the relationship between variables of interest. Correlations between -0.20 and 0.20 were considered negligible, and correlations between 0.21 and 0.29 or between -0.21 and -0.29 were considered weak. This study examined correlations between: (a) the 3 admission variables and overall OSCE scores, followed by additional examination of correlations between admission variables and OSCE subscores (ie, communication, counseling, history taking); (b) OSCE scores and APPE scores; and (c) admissions variables and APPE scores. Independent t tests were used to compare mean scores between groups. Continuous data are presented as mean (standard deviation, SD). Significance was established at α=0.05 for all analyses.
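The correlation step and the strength cutoffs stated above can be sketched in a few lines of Python. This is a minimal illustration, not the SPSS procedure used in the study: the function names and the paired scores are hypothetical, and the text gives no label for coefficients of 0.30 or greater in magnitude, so the sketch leaves that range unlabeled.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation for two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("Need two sequences of equal length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("Correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def strength_label(r):
    """Label a correlation using the cutoffs stated in the Methods."""
    magnitude = round(abs(r), 2)  # cutoffs are stated to two decimals
    if magnitude <= 0.20:
        return "negligible"
    if magnitude <= 0.29:
        return "weak"
    return "above the weak range (no label given in the text)"


# Hypothetical paired scores for five students (not study data).
osce = [70.0, 75.0, 80.0, 85.0, 90.0]
appe = [90.0, 92.0, 91.0, 95.0, 94.0]
r = pearson_r(osce, appe)
print(f"r = {r:.2f} ({strength_label(r)})")

# Two example coefficient values labeled with the same cutoffs.
for value in (0.25, 0.19):
    print(value, strength_label(value))
```

Rounding to two decimals before comparing is an assumption made here so that a value such as 0.204 falls into the negligible band rather than into the unstated gap between 0.20 and 0.21.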

RESULTS

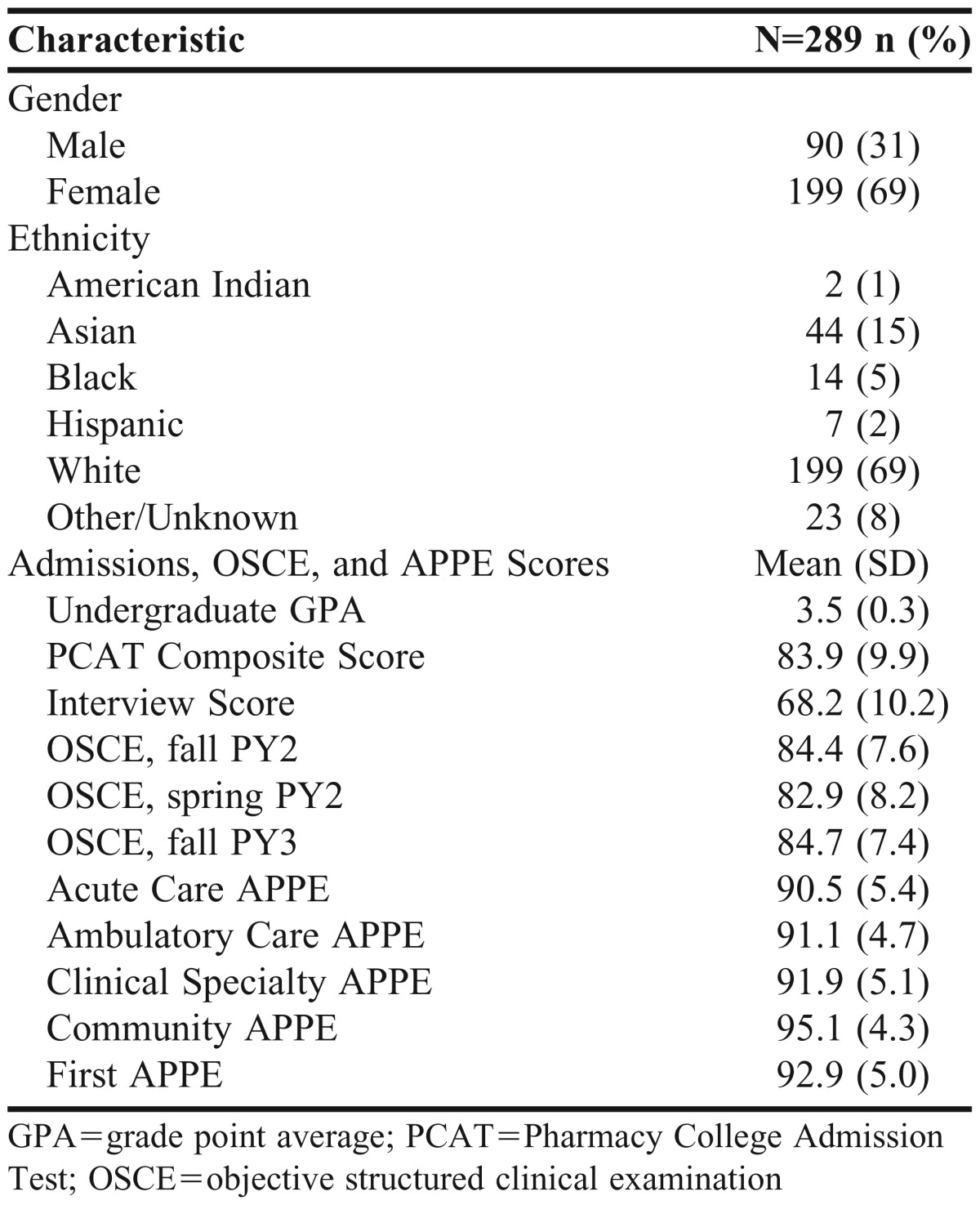

Two hundred eighty-nine students were included in the study. Ninety (31%) students were male and 199 (69%) were white. The mean uGPA for this sample was 3.5 (0.3), mean PCAT composite score was 83.9 (9.9), and mean interview score was 68.2 (10.2). Table 1 details the demographic characteristics and average scores for the study sample.

Table 1.

Study Sample Demographic Characteristics and Average Scores

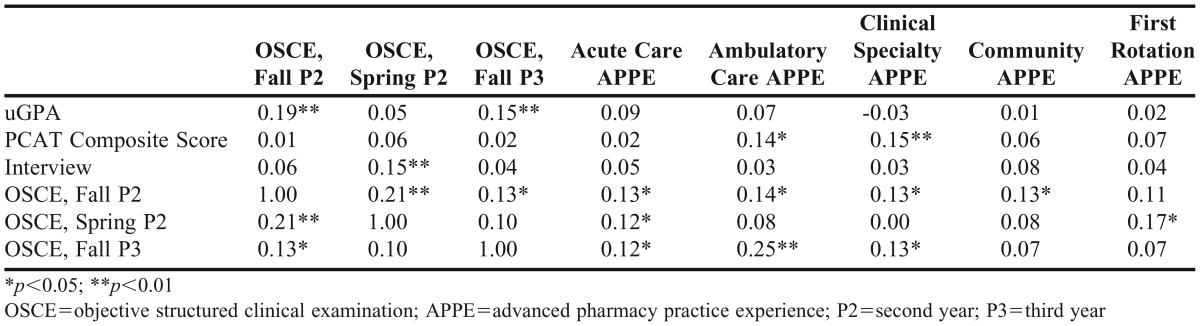

Table 2 details the correlations between APPE, admission, and OSCE scores. When examining the relationship between admission variables and OSCE performance, significant correlations were found between uGPA and fall P2 OSCE (rp=0.19), uGPA and fall P3 OSCE (rp=0.15), and interview score and spring P2 OSCE (rp=0.15). Additional analyses of associations between admission variables and OSCE subscores found negligible and nonsignificant correlations (rp<0.2). When examining the relationship between admission variables and APPE performance, PCAT composite score was the only variable demonstrating a significant relationship with APPEs (rp=0.14 for the ambulatory care APPE and rp=0.15 for the clinical specialty APPE). Each OSCE was significantly correlated with at least one APPE type, with the strongest correlation found between fall P3 OSCE and ambulatory care APPE scores (rp=0.25).

Table 2.

Correlations among Admission Variables, OSCE Scores, and APPE Performance (N=289)

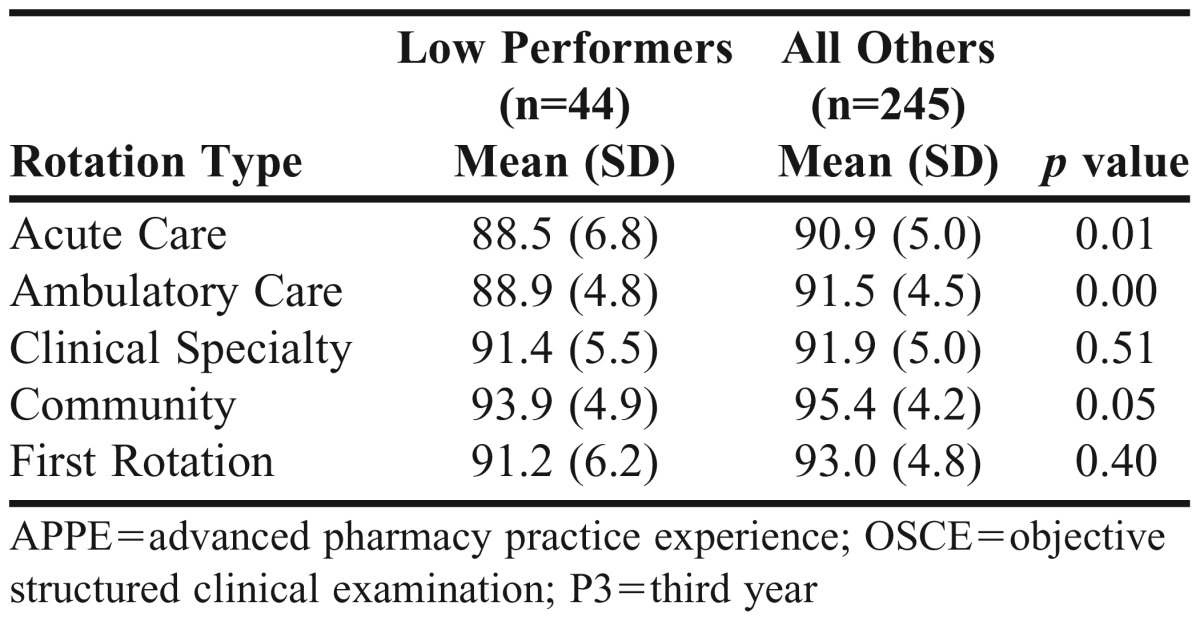

To better understand the relationship between OSCE and APPE performance, the mean APPE performance of students with low OSCE scores on the OSCE closest to APPEs (fall P3 OSCE) was compared with the mean APPE performance of the remaining students. A student was categorized as low performing if the fall P3 final OSCE score was more than one SD below the mean (n=44). As seen in Table 3, mean APPE scores for low OSCE performers were lower than those for the rest of the cohort. Significant differences between low OSCE performers and the rest of the study sample were seen for the acute care (p=0.006), ambulatory care (p=0.001), and community (p=0.046) APPEs.
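The grouping rule and mean comparison described above can be sketched as follows. This is an illustrative Python sketch under stated assumptions: the data are hypothetical, the sample SD is used for the cutoff, and the pooled-variance (equal variances assumed) form of the independent t test is shown because the text does not say which variant was reported. The sketch stops at the t statistic and degrees of freedom; a p value would additionally require the t distribution, for example via `scipy.stats.ttest_ind`.

```python
import math
import statistics


def split_low_performers(osce, appe):
    """Split APPE scores by whether the paired OSCE score is more than
    one SD below the OSCE mean (sample SD assumed)."""
    cutoff = statistics.mean(osce) - statistics.stdev(osce)
    low = [a for o, a in zip(osce, appe) if o < cutoff]
    rest = [a for o, a in zip(osce, appe) if o >= cutoff]
    return low, rest


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic (equal variances assumed)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = (
        (n_a - 1) * statistics.variance(group_a)
        + (n_b - 1) * statistics.variance(group_b)
    ) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / se
    return t, df


# Hypothetical fall P3 OSCE percentages and paired APPE scores (not study data).
osce = [60, 62, 75, 78, 80, 82, 85, 88, 90, 92]
appe = [88, 90, 93, 94, 92, 95, 96, 94, 97, 95]

low, rest = split_low_performers(osce, appe)
t, df = pooled_t(low, rest)
print(f"low n={len(low)}, rest n={len(rest)}, t={t:.2f}, df={df}")
```

A negative t here indicates a lower mean APPE score in the low-OSCE group, which is the direction of the comparison reported in Table 3.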

Table 3.

Comparison of APPE Scores for Low Fall P3 OSCE Performers and All Others*

DISCUSSION

Understanding the development of cognitive and noncognitive skills across the course of a pharmacy curriculum is critical to preparing student pharmacists for success in the workplace. Curricular reform and transformation efforts provide opportunities to design and implement valid, reliable, and effective approaches to assessing student development longitudinally.18,19 By evaluating current practices, evidence can be generated to inform decision making and approaches to assessing student development as curricula undergo change.

This study provided insight into key aspects of student learning assessment that can inform thinking and shape future practices. Namely, the study found that identifying students with low OSCE scores immediately prior to APPEs could help identify specific types of APPEs that may challenge those students. In addition, while some significant associations were found, all correlation analyses revealed weak to negligible relationships between admissions interviews, OSCE scores, and APPE performance, suggesting the need to rethink the alignment of these assessments. Although this study was limited to a single institution, it demonstrated a process that others can follow to examine the strengths and weaknesses of their assessment strategies, including admissions, OSCEs, APPEs, and benchmarking.

By strengthening assessment strategies and leveraging data with transformational potential, pharmacy educators can discover needs, improve student development, customize educational programs, and innovate new educational models. Coupled with demands for more reliable and valid assessments from stakeholders, the literature reflects an emergent interest in issues associated with the identification and assessment of noncognitive skills considered necessary for success in contemporary health care environments.1,2,6,20 The 2010-2011 Academic Affairs Standing Committee of the American Association of Colleges of Pharmacy (AACP) recently identified self-efficacy, self-assessment, entrepreneurship, leadership, and advocacy as traits essential for graduates to function in the emerging “learning health-care system.”20 As described in recommendation 7 by the 2011-2012 AACP Argus Commission, “Colleges and schools of pharmacy should identify the most effective validated assessments of inquisitiveness, critical thinking, and professionalism to include as part of their assessment plans, for use as both admissions assessments and as measurement of curricular outcomes.”6

The current study complements a growing body of research that highlights the complexities of effectively assessing the development of noncognitive skills. Namely, the findings support research that specifically demonstrates the limited predictive utility of admissions scores and OSCE grades for clinical performance.13,14,21,22 For example, admissions research suggests that standard or structured interviews are poor predictors of future clinical performance.12,13 A number of psychological tests, including tests of empathy and emotional intelligence, have also been studied in health professions admissions and shown to be unreliable in predicting future clinical competencies.7,23 Similarly, tools designed to assess clinically relevant competencies, such as the Health Professions Admission Test and Health Sciences Reasoning Test, tend to be weakly correlated with OSCEs and/or clinical practice experience performance.8,24,25

During the admissions process, schools should consider more reliable and effective practices for assessing noncognitive and professional skills considered vital to success as a practitioner.22,26-28 Although several studies have reported correlations between grades in the didactic curriculum of pharmacy schools with uGPA and PCAT scores,11,29,30 strategies for predicting a student’s potential to be successful in clinical settings warrant further attention and development. The multiple mini-interview (MMI), for example, is a recently developed approach to the admissions interview that overcomes limitations associated with the standard or structured interview.31 Research suggests that the MMI is the best predictor of OSCE and clerkship performance.27 For these reasons, in the fall of 2013, the MMI was implemented in the UNC Eshelman School of Pharmacy admissions process.32

In addition to improving admissions strategies, aligning admissions with OSCE and APPE assessments could provide a more accurate representation of student pharmacist development throughout a curriculum. At the time of this study, OSCEs at the school were aligned with content learned in the didactic curriculum and specifically designed to assess empathy, communication, and relationship building; however, efforts had not been made to specifically align OSCE assessments with admissions or APPEs. Although OSCEs measure different constructs than examinations,33,34 aligning these measures from admission to graduation could provide insight to students and faculty members concerning persistent student needs. As noted by the National Institute for Learning Outcomes Assessment, assessment fosters wider improvement when representatives from across the educational community are engaged in its design and implementation, with faculty members playing an important role.35

Issues associated with data quality can further limit the ability to identify meaningful relationships between assessments across time. Context specificity, rater bias, and instrument differences, among other things, can challenge the reliability and usefulness of assessment outcomes. One reason for weak correlations between clinical performance and admission data or OSCE scores, for example, could be the lack of meaningful differentiation among scores. As seen in this study, average APPE scores were relatively high with low variance. Even students who were low performers on the fall P3 OSCE performed well on all APPE types, with no more than a 2-point separation between the 2 groups on average. Reasons for this may include lack of precision of the APPE evaluation instrument or rater leniency associated with preceptor grading. Since preceptors often cite improving evaluation skills as an area that they would like to develop further,36 a preceptor development program may reduce rater bias while instilling confidence and courage to better assess students. Further, aligning instruments across the curriculum and conducting more rigorous psychometric analyses on those instruments may also help improve data quality.

Despite these challenges to measurement of noncognitive and professional skill development, a significant difference was found between low OSCE performers and the remaining students on acute care, ambulatory care, and community APPEs. This finding has implications for the pharmacy academy, as it indicates OSCEs may help differentiate student preparedness for specific types of pharmacy practice experiences. Ambulatory care APPEs, for example, often have greater emphasis on skills such as patient counseling than do acute care APPEs, yet more therapeutic decision-making than do community APPEs, which may make ambulatory care APPEs more predictable by the skills tested in the respective OSCE. Perhaps students who struggle on other APPEs, such as acute care, would be better identified by an OSCE that is specifically designed to test the skills and knowledge required for success on those types of practice experiences.

This study provides insight into the predictive validity of admission and OSCE scores. However, there are several limitations. First, the single institution sample limits generalizability of results. Admission, OSCE, and APPE outcomes are all likely to vary as a function of the organization, the instruments, and the raters. In addition, the framework for this study looks beyond the value of assessment at a single point in time. Although the predictive validity of the assessments examined in this study may be limited, these data can still demonstrate student development at various points in the curriculum. This type of feedback helps students identify areas of strength and weakness as they work to fulfill the requirements of a curriculum. Weak associations between admission, OSCE, and APPE scores do not mean the data failed to provide useful feedback to faculty members, students, and staff over the course of the curriculum.

Despite these limitations, the study provided timely results for informing the school’s curriculum transformation efforts and, specifically, rethinking its approach to designing and implementing strategies for assessing student development of core competencies over time.17 To inform these strategies, future studies could focus on: (1) evaluating the school’s new admissions model and examining the psychometric properties of the MMI; (2) understanding how to facilitate the design and implementation of a longitudinal plan for assessing student learning that effectively captures the development of key noncognitive skills from admission to graduation; (3) more rigorously assessing APPE performance and identifying best practices for preceptor development of evaluation skills; and (4) ongoing evaluation of the utility of assessment practices.

CONCLUSION

Rethinking assessment with a systems approach and engaging faculty members in the collection, analysis, and dissemination of reliable and valid data is important to improving student learning and the training of future health professions leaders. In general, results from this study found an absence of moderate to strong associations between admission variables, OSCE scores, and APPE grades. However, students who struggled on the final OSCE scored lower on specific types of APPEs. This study highlights the complexities of assessing student development of noncognitive or professional skills over the course of a curriculum. Subsequent studies should focus on improving strategies for noncognitive assessment and aligning these strategies longitudinally.

ACKNOWLEDGMENTS

At the UNC Eshelman School of Pharmacy, OSCEs are administered under contract with the UNC School of Medicine Clinical Skills and Patient Simulation Center. The authors would like to thank Jackson Szeto for his help with data collection.

REFERENCES

- 1. Epstein RM. Assessment in medical education. N Engl J Med. 2007;356:387–96. doi: 10.1056/NEJMra054784.

- 2. Accreditation Council for Pharmacy Education. Accreditation standards and key elements for the professional program in pharmacy leading to the Doctor of Pharmacy degree: Draft standards 2016. https://www.acpe-accredit.org/standards. Accessed July 1, 2014.

- 3. American Association of Colleges of Pharmacy. Curricular change summit supplement. http://www.ajpe.org/page/curriculuar-change-summit. Accessed June 11, 2014.

- 4. Blouin RA, Joyner PU, Pollack GM. Preparing for a renaissance in pharmacy education: the need, opportunity, and capacity for change. Am J Pharm Educ. 2008;72(2):Article 42. doi: 10.5688/aj720242.

- 5. Hubball H, Burt H. Learning outcomes and program-level evaluation in a four-year undergraduate pharmacy curriculum. Am J Pharm Educ. 2007;71(5):Article 90. doi: 10.5688/aj710590.

- 6. Speedie MK, Baldwin JN, Carter RA, Raehl CL, Yanchick VA, Maine LL. Cultivating “habits of mind” in the scholarly pharmacy clinician: report of the 2011–12 Argus Commission. Am J Pharm Educ. 2012;76(6):S3. doi: 10.5688/ajpe766S3.

- 7. Lahoz MR, Belliveau P, Gardner A, Morin A. An electronic NAPLEX review program for longitudinal assessment of pharmacy students' knowledge. Am J Pharm Educ. 2010;74(7):Article 128. doi: 10.5688/aj7407128.

- 8. Cox WC, McLaughlin JE. Association of Health Sciences Reasoning Test scores with academic and experiential performance. Am J Pharm Educ. 2014;78(4):Article 73. doi: 10.5688/ajpe78473.

- 9. Lobb WB, Wilkin NE, McCaffrey DJ, III, Wilson MC, Bentley JP. The predictive utility of nontraditional test scores for first-year pharmacy student academic performance. Am J Pharm Educ. 2006;70(6):Article 128. doi: 10.5688/aj7006128.

- 10. Mar E, Barnett MJ, Tang TTL, Sasaki-Hill D, Kuperberg JR, Knapp K. Impact of previous pharmacy work experience on pharmacy school academic performance. Am J Pharm Educ. 2010;74(3):Article 42. doi: 10.5688/aj740342.

- 11. Kuncel NR, Credé M, Thomas LL, Klieger DM, Seiler SN, Woo SE. A meta-analysis of the validity of the Pharmacy College Admission Test (PCAT) and grade predictors of pharmacy student performance. Am J Pharm Educ. 2005;69(3):Article 51.

- 12. Meagher DG, Lin A, Stellato CP. A predictive validity study of the Pharmacy College Admission Test. Am J Pharm Educ. 2006;70(3):Article 53. doi: 10.5688/aj700353.

- 13. Basco WT, Jr, Gilbert GE, Chessman AW, Blue AV. The ability of a medical school admission process to predict clinical performance and patient's satisfaction. Acad Med. 2000;75(7):743–747. doi: 10.1097/00001888-200007000-00021.

- 14. Basco WT, Jr, Lancaster CJ, Gilbert GE, Carey ME, Blue AV. Medical school application interview score has limited predictive validity for performance on a fourth year clinical practice examination. Adv Health Sci Educ Theory Pract. 2008;13(2):151–162. doi: 10.1007/s10459-006-9031-5.

- 15. Mészáros K, Barnett MJ, McDonald K, et al. Progress examination for assessing students' readiness for advanced pharmacy practice experiences. Am J Pharm Educ. 2009;73(6):Article 109. doi: 10.5688/aj7306109.

- 16. Peskun C, Detsky A, Shandling M. Effectiveness of medical school admissions criteria in predicting residency ranking four years later. Med Educ. 2007;41(1):57–64. doi: 10.1111/j.1365-2929.2006.02647.x.

- 17. Accreditation Council for Graduate Medical Education. Common program requirements IV.A.5d and IV.A.5e. Available at https://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/CPRs2013.pdf. Accessed July 19, 2014.

- 18. McLaughlin JE, Dean MJ, Mumper RJ, Blouin RA, Roth MT. A Roadmap for educational research in pharmacy. Am J Pharm Educ. 2013;77(10):Article 218. doi: 10.5688/ajpe7710218.

- 19. Roth MT, Mumper RJ, Singleton SF, et al. A renaissance in pharmacy education at the University of North Carolina at Chapel Hill. NC Med J. 2014;75(1):48–52. doi: 10.18043/ncm.75.1.48.

- 20. Mason HL, Assemi M, Brown B, et al. Report of the 2010-2011 Academic Affairs Standing Committee. Am J Pharm Educ. 2011;75(10):Article S12. doi: 10.5688/ajpe7510S12.

- 21. Dahlin M, Söderberg S, Holm U, Nilsson I, Farnebo LO. Comparison of communication skills between medical students admitted after interviews or on academic merits. BMC Med Educ. 2012;12(1):46. doi: 10.1186/1472-6920-12-46.

- 22. Groves MA, Gordon J, Ryan G. Entry tests for graduate medical programs: is it time to re-think? Med J Australia. 2007;186(3):120. doi: 10.5694/j.1326-5377.2007.tb01228.x.

- 23. Humphrey-Murto S, Leddy JJ, Wood TJ, Puddester D, Moineau G. Does emotional intelligence at medical school admission predict future academic performance? Acad Med. 2014;89(4):638–643. doi: 10.1097/ACM.0000000000000165.

- 24. Hemmerdinger JM, Stoddart SDR, Lilford RJ. A systematic review of tests of empathy in medicine. BMC Med Educ. 2007;7(1):24. doi: 10.1186/1472-6920-7-24.

- 25. Kelly ME, Regan D, Dunne Z, Henn P, Newell J, O’Flynn S. To what extent does the Health Professions Admission Test-Ireland predict performance in early undergraduate tests of communication and clinical skills? – an observational cohort study. BMC Med Educ. 2013;13(1):68. doi: 10.1186/1472-6920-13-68.

- 26. Eva KW, Reiter HI, Rosenfeld J, Norman GR. The ability of the multiple mini-interview to predict preclerkship performance in medical school. Acad Med. 2004;79(10):S40–S42. doi: 10.1097/00001888-200410001-00012.

- 27. Reiter HI, Eva KW, Rosenfeld J, Norman GR. Multiple mini-interviews predict clerkship and licensing examination performance. Med Educ. 2007;41(4):378–384. doi: 10.1111/j.1365-2929.2007.02709.x.

- 28. Sedlacek WE. Why we should use noncognitive variables with graduate and professional students. The Advisor: The Journal of the National Association of Advisors for the Health Professions. 2004;24(2):32–39.

- 29. Allen DD, Bond CA. Prepharmacy predictors of success in pharmacy school: grade point averages, pharmacy college admissions test, communication abilities, and critical thinking skills. Pharmacotherapy. 2001;21(7):842–849. doi: 10.1592/phco.21.9.842.34566.

- 30. Cunny KA, Perri M. Historical perspective on undergraduate pharmacy student admissions: the PCAT. Am J Pharm Educ. 1990;54(1):1–6.

- 31. Eva KW, Rosenfeld J, Reiter HI, Norman GR. An admissions OSCE: the multiple mini-interview. Med Educ. 2004;38(3):314–326. doi: 10.1046/j.1365-2923.2004.01776.x.

- 32. Cox WC, McLaughlin JE, Singer D, Lewis M, Dinkins MM. Development and assessment of the multiple mini-interview in a school of pharmacy admissions model. Am J Pharm Educ. 2015;79(4):Article 53. doi: 10.5688/ajpe79453.

- 33. Kirton SB, Kravitz L. Objective structured clinical examinations (OSCEs) compared with traditional assessment methods. Am J Pharm Educ. 2011;75(6):Article 111. doi: 10.5688/ajpe756111.

- 34. Salinitri FD, O'Connell MB, Garwood CL, Lehr VT, Abdallah K. An objective structured clinical examination to assess problem-based learning. Am J Pharm Educ. 2012;76(3):Article 44. doi: 10.5688/ajpe76344.

- 35. Hutchings P, Astin AW, Banta TW, et al. Principles of good practice for assessing student learning. Assessment Update. 1993;5(1):6–7.

- 36. Assemi M, Corelli RL, Ambrose PJ. Development needs of volunteer pharmacy practice preceptors. Am J Pharm Educ. 2011;75(1):Article 10. doi: 10.5688/ajpe75110.