Summary

Objectives

Previous research has shown that medication alerting systems face usability issues. There has been no previous attempt to systematically explore the consequences of usability flaws in such systems on users (i.e. usage problems) and work systems (i.e. negative outcomes). This paper aims at exploring and synthesizing the consequences of usability flaws in terms of usage problems and negative outcomes on the work system.

Methods

A secondary analysis of 26 papers included in a prior systematic review of the usability flaws in medication alerting was performed. Usage problems and negative outcomes were extracted and sorted. Links between usability flaws, usage problems, and negative outcomes were also analyzed.

Results

Poor usability generates a large variety of consequences. It impacts the user from a cognitive, behavioral, emotional, and attitudinal perspective. Ultimately, usability flaws have negative consequences on the workflow, the effectiveness of the technology, the medication management process, and, more importantly, patient safety. Only a few complete pathways leading from usability flaws to negative outcomes were identified.

Conclusion

Usability flaws in medication alerting systems impede users, and ultimately their work system, and negatively impact patient safety. Therefore, the usability dimension may act as a hidden variable that could explain, at least partly, the (absence of) intended outcomes of new technology.

Keywords: Human engineering; decision support system, clinical; review; usability; usage; patient safety; alerting functions

1 Introduction

Health Information Technology (HIT) is a promising tool to improve the efficiency, the effectiveness, and the safety of healthcare [1]. Nonetheless, around 40% of HIT tool implementations fail or are rejected by users [2]. Identified factors of failure include the new system not meeting users’ needs and poor interface specifications [2, 3]. These problems refer to usability issues.

Usability is the “extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specific context of use” [4]. Usability goes beyond the features of the graphical user interface (e.g. legibility of the texts, layout, and prompting of information), and includes issues related to how the system responds to users’ actions, the organization and accuracy of the knowledge embedded in the system, and the availability of the features required to support users’ (cognitive) tasks.

Usability is considered a critical component of effective and safe use of HIT [5]. For instance, the analysis of incident reports by Magrabi and colleagues shows that 45% of incidents affecting patient safety originate from problems of usability [6]. The analysis of an incident report database of a large tertiary hospital by Samaranayake found that 17% of reported incidents were related to the use of technology and that many of them stemmed from poor usability [7]. Usability flaws (also known as usability problems, infractions, or defects) refer to “aspect[s] of the system and / or a demand on the user which makes it unpleasant, inefficient, onerous, perturbing, or impossible for the user to achieve their [sic] goals in typical usage situations” [8].

Usability is an intrinsic characteristic of technology. Due to the integration of technology in the work system, technology further interacts with work system components, and therefore usability also impacts those interactions. A work system “represents the various elements of work that a health care provider uses, encounters, and experiences to perform his or her job. (...) The work system is comprised of five elements: the person performing different tasks with various tools and technologies in a physical environment under certain organizational conditions” [9]. The interactions between these elements impact quality of work life, performance, safety, and health [9].

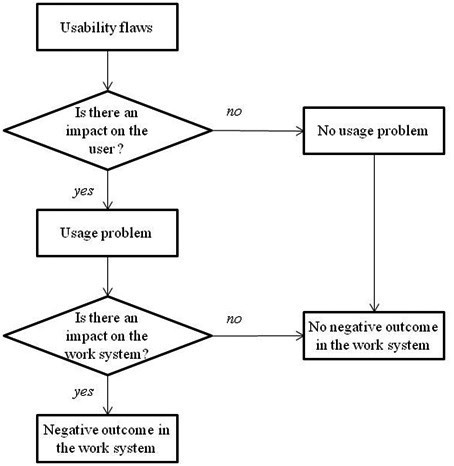

Usability flaws impact primarily the user and the tasks to be performed. These conscious or unconscious issues are referred to as “usage problems”. Other components of the work system are subsequently impacted through the user. These issues are referred to as “negative outcomes”. Figure 1 illustrates how the violation of usability design principles results in usability flaws that then have consequences on the work system through the user.

Fig. 1.

Schematic representation of the propagation of usability flaws through the user up to the work system.

The chain of consequences of usability flaws is not linear and depends on several factors. Some factors are independent of technology (e.g. users’ training, experience, and expertise, clinical skills, workload, fit of resilience level, and the needs of a situation), and may either favor or mitigate usability flaws. These technology-independent factors modulate the consequences of usability flaws at the level of both usage and outcomes. For instance, if clinicians are not trained in the use of technology and are overwhelmed, it is unlikely that they will be able to handle and overcome usability flaws; this results in the risk of usage problems and negative outcomes. On the contrary, if clinicians are trained in the use of technology, and if they have a regular workload, they may be able to counteract the consequences; this results in fewer negative consequences of usability flaws.

Scientific literature reports numerous case studies where the introduction of HIT in a work system has negative consequences on clinicians, and on different components of the work system, including patient safety [10-15]. However, to the authors’ knowledge there has been no attempt at systematically exploring the consequences of HIT usability flaws in terms of usage problems and outcomes and at providing a comprehensive synthesis of these consequences.

2 Study Context

The present study focuses on medication alerting systems. The promising impact of such systems, e.g. the increase of hospital drug management safety, is not always observed [16, 17]. Their usability is often highlighted as a key factor impeding acceptance and implementation [18].

In a previous study [19], we performed a systematic review that aimed at identifying and categorizing usability flaws of medication alerting systems reported in published studies. This systematic review included 26 papers that met the following criteria:

Original evaluation studies of medication alerting functions supporting e-prescribing

Objective descriptions of usability flaws

Papers evaluating the perceived usability were excluded. Usability flaws were identified and classified in categories based on an inductive content analysis. Details are described in [19]. Table 1 describes the categories.

Table 1.

Summary of the general and specific types of usability flaws reported in the literature on medication alerting functions and their description. Names of the categories were adapted from [20] for general flaws and from [19] for specific flaws.

| Types of usability flaws | Categories | Descriptions |
|---|---|---|
| General | Guidance issues | Prompting issues due to e.g. unclear text, deficiency in information highlight. No visual distinction of different types and severity of alerts. Legibility issues. No feedback to inform the user that (s)he has just missed an alert. No grouping of same severity alerts. |
| | Workload issues | Too many actions for entering and obtaining information. Too much information in an alert and several alerts in the same window. Lack of concision. |
| | Explicit control issues | System’s actions do not correspond to the action requested by the user. There is no way to undo an action. |
| | Adaptability issues | The alerting system does not support all user types. |
| | Error management issues | Problem messages are unclear. |
| | Consistency issues | Inconsistency of the behavior of the system for similar tasks and according to the type of data analyzed. |
| | Significance of codes issues | Non-intuitive wording and icons. |
| Specific | Low alerts’ signal-to-noise ratio | Alerts may be irrelevant (regarding expertise/ward habits, existing good practices, pharmaceutical knowledge, data considered, patient case, actions engaged, clinician’s interest for at risk situations, care logic) or redundant (appear very frequently/several times during decision making, clinically relevant solutions from the clinicians are not accepted, there is no feature to turn off a specific alert). |
| | Alerts’ content issues | Missing information (about actions that could be taken, patient’s data, the problem detected, its evidence and its severity, and information to interpret data within the alert) or erroneous proposed action according to the clinical context. |
| | System not transparent enough for the user | About the way the system works (no information on alert severity scale, on the up-to-dateness of alerts’ rules), about the data it uses (every available data is not used to trigger the alert or incomplete mapping). |
| | Alert’s appearance issues (timing and mode) | Alert appears before the decision process has started, at the incorrect moment, or after the decision is made; alerts are not sufficiently intrusive or too intrusive; data processing is too slow. |
| | Tasks and control distribution issues | Alerts are not displayed to the right clinician or only to one clinician; users can enter comments on alerts that are displayed to no one; alerts are not transferable from one clinician to another. |
| | Alerts’ features issues | Missing features (to reconsider an alert later; from the alert, there is no access to additional information nor is there an action tool to solve the problem); existing features do not suit users’ needs. |

Following the identification of usability flaws in medication alerting systems [19], this paper presents a secondary analysis of the 26 papers that aims at identifying and categorizing a different set of data: usage problems and negative outcomes reported by authors secondary to the identified usability flaws. Two questions guided this analysis:

What types of usage problems and negative outcomes stemming from identified usability flaws are reported in usability studies of medication alerting functions?

What are the cause-consequence links reported between usability flaws, usage problems, and negative outcomes in medication alerting functions?

3 Method

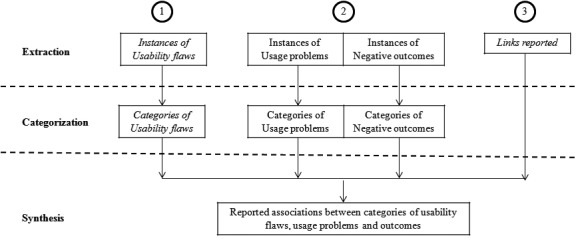

The overall methodology is synthesized in Figure 2.

Fig. 2.

Graphical representation of the study plan. First, usability flaws were identified and categorized in the aforementioned systematic review [19]. Second, usage problems and negative outcomes were identified and categorized. Third, the links reported between these elements were extracted and used to synthesize the associations between categories of flaws, usage problems, and negative outcomes.

3.1 Extraction of Usage Problems, Outcomes, and Links

Usage problems and outcomes were collected from the 26 papers included in the aforementioned systematic review through an independent analysis by two experts (MCBZ and RM). All usage problems and outcomes reported by the authors of the 26 papers secondary to any usability flaw were extracted. The usage problems and outcomes were identified through meaningful semantic units, i.e. sets of words representing a single idea that is sufficiently self-explanatory to be analyzed. In order to obtain reliable data, only objective descriptions of usage problems and outcomes were extracted; hypotheses drawn by the authors of the studies were not included. Specifically:

For usage problems, we targeted descriptions of how usability flaws impacted the experience of the user interacting with the alerting function, including the user’s cognitive processes, behaviors and feelings.

For negative outcomes, we targeted descriptions of the negative consequences of the usability flaws mediated through usage problems.

Three types of links reported by the authors were extracted: (a) links between usability flaws and usage problems, (b) links between usability flaws and negative outcomes, and (c) links between usability flaws, usage problems, and negative outcomes.

3.2 Analysis of Usage Problems, Outcomes, and Links

3.2.1 Question 1: Types of Usage Problems and Negative Outcomes

Usage problems were inductively categorized through an open card sorting [21], a Human Factors method in which topics related to a theme are written down on a set of cards (one topic per card). Open card sorting requires participants to organize the cards/topics into categories that make sense to them. Next, they have to name each category in a way that they feel describes the content. In the present study, the exact wording of each extracted usage problem was recorded on a paper card. Both experts independently sorted these cards into logical categories (cf. Figure 3, left) and named each category. A reconciliation meeting was organized to find an agreement on the number of categories and the respective names. During this meeting, internal consistency of categories and subcategories was improved by developing new categories and subcategories.

Fig. 3.

Pictures of cards sorted during the classification of usage problems (left, open card sorting) and negative outcomes (right, closed card sorting).

The outcomes were categorized using closed card sorting [21]. This method is similar to the open card sorting method except that participants have to sort cards/topics into pre-defined categories. Both experts defined four categories of negative outcomes after reading all of the extracted negative outcomes. Then, the experts together sorted the whole set of paper cards on which the exact wording of extracted negative outcomes was recorded (cf. Figure 3, right). During the sorting process, the experts discussed their choices with each other.

3.2.2 Question 2: Links between Usability Flaws, Usage Problems, and Negative Outcomes

The identified links between the categories of usability flaws, usage problems, and negative outcomes were then summarized. All complete links between usability flaws, usage problems, and negative outcomes were analyzed along with links between usability flaws and usage problems only. Both experts independently drew inferences on usage problems based on their experience with usability in cases where links between a usability flaw and an outcome did not explicitly mention the mediating usage problem. Once the links had been determined, both experts assessed the plausibility of the links. When the experts could not understand a link due to missing information, this link was excluded. The remaining links were summarized using double-entry tables that put in relation types of flaws, usage problems, and negative outcomes.
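The double-entry summarization described above can be illustrated with a minimal sketch. The link records below are hypothetical examples invented for illustration (they are not data from the reviewed papers), and the function names `double_entry_table` and `complete_chains` are assumptions, not part of the study's actual procedure, which was carried out manually by the two experts.

```python
from collections import Counter

# Hypothetical extracted links: (flaw category, usage problem category, outcome).
# An outcome of None means the source paper reported no related negative outcome.
links = [
    ("Guidance issues", "Cognitive", "Patient safety"),
    ("Guidance issues", "Cognitive", None),
    ("Workload issues", "Behavioral", "Workflow"),
    ("Low signal-to-noise ratio", "Attitudinal", None),
    ("Workload issues", "Behavioral", "Patient safety"),
]


def double_entry_table(records):
    """Count reported links for each (flaw, usage problem) pair."""
    return Counter((flaw, problem) for flaw, problem, _ in records)


def complete_chains(records):
    """Map each (flaw, usage problem) pair to the set of outcomes linked to it."""
    chains = {}
    for flaw, problem, outcome in records:
        if outcome is not None:
            chains.setdefault((flaw, problem), set()).add(outcome)
    return chains


table = double_entry_table(links)
chains = complete_chains(links)

for (flaw, problem), count in sorted(table.items()):
    outcomes = ", ".join(sorted(chains.get((flaw, problem), []))) or "none reported"
    print(f"{flaw} x {problem}: {count} link(s); outcomes: {outcomes}")
```

In this sketch, a filled cell in the flaw-by-problem table corresponds to a pair with a non-zero count, and the outcome sets correspond to the cell contents of a table crossing flaws, usage problems, and negative outcomes.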

This analysis made it possible to represent the diversity of negative consequences from usability flaws on the users and the work system. However, it mutes the clinicians’ actual experience. A narration based on the analysis of the links was written in order to help understand what users were actually experiencing while using poorly designed alerting systems, and what the consequences on the work system were.

4 Results

Of the 26 analyzed papers, 21 reported at least one instance of usage problems [10, 22-41] and 15 reported at least one instance of a negative outcome [10, 22-33, 37, 42]. The study authors associated the 168 usability flaws extracted during the previous systematic review [19] with 111 usage problems and 20 negative outcomes.

4.1 Question 1: Types of Usage Problems and Negative Outcomes

4.1.1 Usage Problems

The complete list of usage problems is provided in the online appendix. The open card sorting resulted in 15 or 23 categories (depending on the reviewer); however, the same themes were highlighted in both categorizations. After a reconciliation meeting, the classification scheme was finalized into four main categories (behavioral, cognitive, emotional, and attitudinal issues) divided into 25 subcategories, as described in Table 2.

Table 2.

Categories of usage problems identified, illustrative instances and references to the papers they are retrieved from. Users’ comments are in italic font.

| Usage problems | Illustrative instances from the 111 items | References |
|---|---|---|
| Behavioral issues | | |
| Increased workload due to the alerting function | “If I have to consider every DDI, then I am busy with it, all day, and that is not my job.” [32] | [22; 24; 27; 29; 31; 32; 37; 38; 40; 41] |
| Users do not use the system at all | “Two subjects did not use the decision support feature” [25] | [25; 28; 29] |
| Users voluntarily ignore the alerts | “Five nurses and two providers were observed to skip all or some of the reminders” [37] | [10; 22; 26; 28; 29; 32; 34-37; 40; 41] |
| Users use the system ineffectively | “The physician reported that specific features of the system (…) were hindering the use” [41] | [24; 29; 37; 41] |
| Users use workarounds | “Provider arbitrarily selected a date to satisfy the reminder” [37] | [25; 37; 38] |
| Users blindly follow the advice even if they do not understand it | “MD clicks through [the alert]” [accepts the advice without understanding the alert] [26] | [26] |
| Users are lost/stuck: they do not know how to go on | “Physicians were lost” [27] | [27; 37] |
| Cognitive issues | | |
| Information involuntarily missed: they cannot access or find it | “Not having noticed the DDI alert that appeared as a second DDI alert” [32] | [10; 24; 29; 30; 32; 35] |
| Increased memory load while using the alerting system: users must rely on their memory | “Some prescribers relied solely on their memory of the patient profile” [29] | [29] |
| Users experience difficulties in understanding the alert | “Had difficulty identifying the patient’s risk factors for the interaction” [30] | [26; 27; 29; 30; 33; 41] |
| Users experience difficulties in identifying alert’s components (including icons, features or specific data) | “They misidentified the alert as a general guideline reminder and did not notice the dose calculations embedded in text.” [25] | [25; 36; 37] |
| Users misinterpret alerts’ components (including icons, features or specific data) | “A user thought that the appearance of the ‘stamp’ window implied that the patient had a chronic pain problem or diagnosis” [36] | [36; 39] |
| Users misinterpret alerts’ content | “Misinterpretation was rife, as shown by the high numbers of wrong or inapplicable rules and reasoning.” [32] | [23; 32] |
| Users are interrupted by alerts while making their decision or interviewing the patient | “There were several cases where inadequate alert design (…) disrupted their workflow.” [22] | [22; 24; 29; 34] |
| Emotional issues | | |
| Annoyance/irritation | “Repetitive alerts are both annoying and unnecessary.” [29] | [24; 26; 28; 29; 32; 41] |
| Frustration | “Physicians became frustrated” [27] | [22; 24; 27; 28] |
| Ugly experience | “Reading them is ugly” [22] | [22] |
| Stress, pressure | “Place prescribers under pressure” [29] | [29] |
| Cynicism | “This lack of information led to prescriber cynicism.” [22] | [22] |
| Attitudinal issues | | |
| Users question the behavior of the system: how the system is working, how it responds to users’ actions | “Did it accept my changes?” [26] | [24-26; 29] |
| Users question the triggering and sorting model of alerts | “I am not confident it’s checking all the interactions that I want it to check.” [29] | [22; 26; 29; 38] |
| Users question the usefulness of the alerting system | “The alerts were most likely to be helpful if they [were] presented when the users were entering orders or were otherwise at the point of making a decision about the issue in question or closely related issues.” [24] | [24; 33; 36] |
| Users question the validity of alerts | “That’s not true to my knowledge. The patient doesn’t like to take it; I doubt he’s taking it [from a non-VA source]. I will talk to the patient about it.” [31] | [22; 29; 31; 33; 34] |
| Users experience alert fatigue/desensitization | “Some doctors recognized that they had become desensitized to the alerts.” [40] | [22; 29; 31-34; 37; 40] |
| Users have negative feelings towards the system | “Justification requirement often viewed as time burden” [29] | [24; 29; 35; 36] |

4.1.2 Negative Outcomes

The complete list of negative outcomes is provided in the online appendix. Four categories were defined:

“Workflow issues” include instances related to the increase of communication among clinicians or between clinicians and patients, along with one instance of shift in alert responsibility from physicians to pharmacists.

“Technology effectiveness issues” include instances such as the non-achievement of the expected gain in the speed of work processes.

“Medication management process issues” include instances of slowing down clinicians’ work.

“Patient safety issues” include instances of errors in ordering. However, no lethal consequences were reported, even when a physician involuntarily ordered a double dose of aspirin [26].

No disagreement was observed between the two experts during the closed card sorting. A synthesis of data categorization is provided in Table 3.

Table 3.

Categories of negative outcomes identified, their description, illustrative instances, and references of the papers they are retrieved from.

| Issue | Description | Illustrative instances from the 20 items | References |
|---|---|---|---|
| Workflow issues | Increased communication between clinicians and patients. The responsibility of the alert may also be shifted. | “House staff claimed post hoc alerts unintentionally encourage house staff to rely on pharmacists for drug allergy checks, implicitly shifting responsibility to pharmacists.” [10] “Pharmacists call house staff to clarify questionable orders” [10] | [10; 22; 26; 29; 31] |
| Technology effectiveness issues | The expected usefulness of the technology to manage the care is not noticed. | “Consequently they did not derive all the speed and accuracy benefit and did not reduce their cognitive effort the feature was in part designed to.” [25] | [23-25; 29; 33; 37] |
| Medication management process issues | The efficiency of the medication management process is bothered by the use of the alerting system. | Problems experienced with the alerting system “slowed down [users’] work” [28] | [22; 27; 28] |
| Patient safety issues | The use of the alerting system produces the conditions for decreasing the quality of care and even endangering the patient. | “MD goes back to the medication list. Aspirin is now listed both under VA list and non-VA medication list” [double order of aspirin] [26] | [26; 30; 32; 42] |

4.2 Question 2: Links between Usability Flaws, Usage Problems, and Negative Outcomes

4.2.1 Descriptive Results

Forty-seven complete cause-consequence links between usability flaws, usage problems, and negative outcomes were reported. In addition, 129 links between usability flaws and usage problems with no mention of related outcomes were reported. There were 6 links between usability flaws and outcomes for which the resulting usage problems had to be inferred, leading to a total of 53 complete chains of links between flaws, usage problems, and negative outcomes.

Usability flaws linked to usage problems

A total of 182 links between usability flaws and usage problems were synthesized, including 129 links between flaws and problems and the 53 associations between flaws and problems described in the 53 complete chains of links.
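As a quick consistency check, the totals reported above follow from simple addition of the counts stated in the text (no additional data are assumed):

$$
\begin{aligned}
\text{complete chains} &= 47\ \text{(fully reported)} + 6\ \text{(usage problem inferred)} = 53,\\
\text{flaw-to-problem links} &= 129\ \text{(no outcome mentioned)} + 53\ \text{(from complete chains)} = 182.
\end{aligned}
$$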

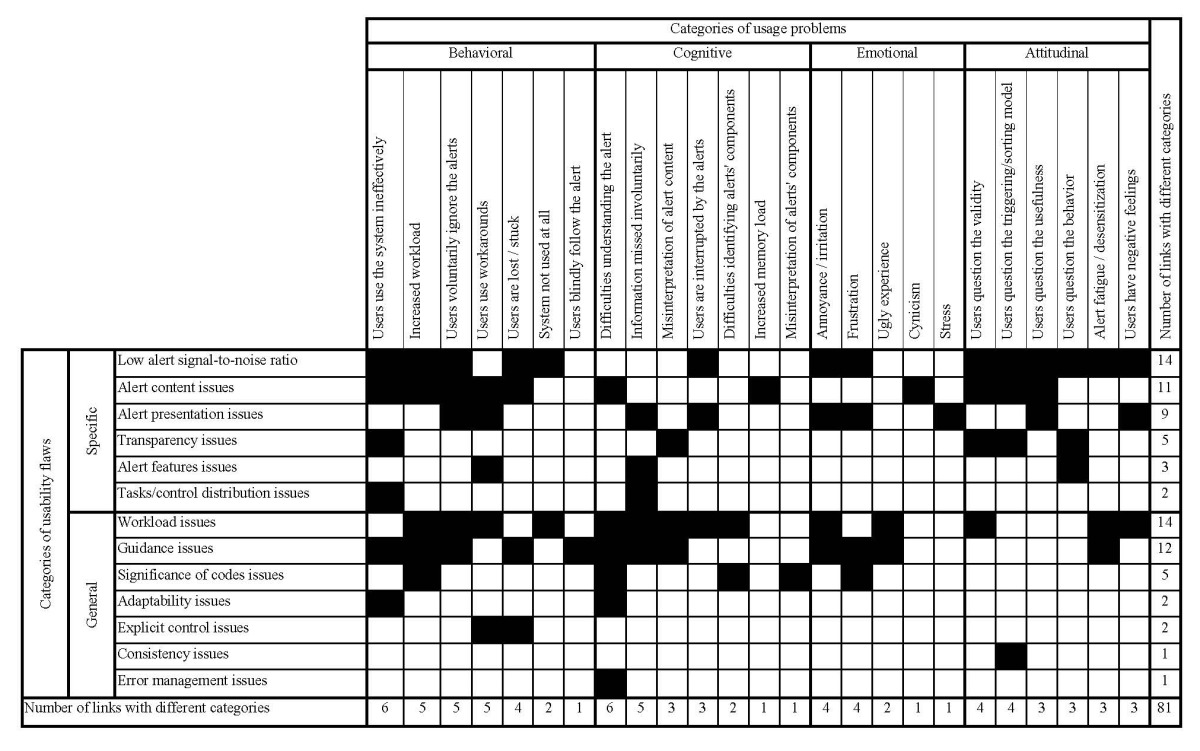

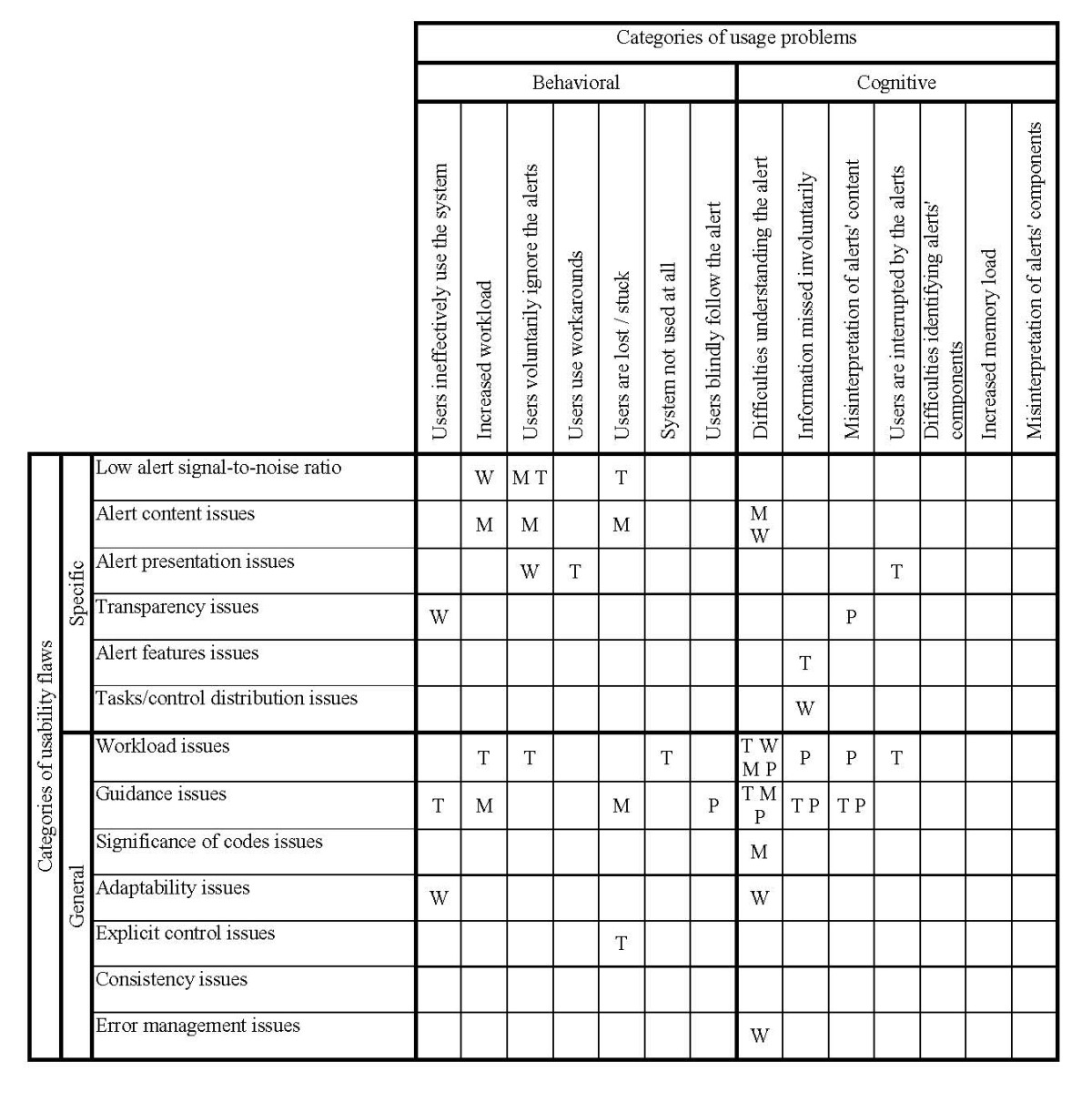

As shown in Table 4, all categories of usability flaws caused usage problems. A total of 81 different associations between categories of usability flaws and categories of usage problems were identified.

Table 4.

Associations between usability flaws and usage problems. Black boxes indicate that the link between the related flaw and problem is reported in the literature review.

Most types of flaws were not specific to one type of usage problem, with up to 14 types of usage problems linked to one category of usability flaw. Only two types of flaws, “consistency issues” and “error management issues”, led to one type of usage problem.

Overall, the type of usability flaws (i.e. general vs. specific) did not appear to lead to the same type of usage problems:

Specific types of usability flaws were more often linked to attitudinal usage problems than general usability flaws were (15 links between categories vs. 5).

General types of flaws were more often linked to cognitive issues than specific ones were (13 vs. 8).

Both types of flaws were related more or less equally to behavioral and emotional issues (13 for general flaws vs. 15 for specific flaws for behavioral issues, and 6 vs. 6 for emotional issues).
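As a consistency check, and assuming the four families of usage problems partition the associations shown in Table 4, the per-family counts above (general + specific in each pair) add up to the 81 associations between categories reported earlier:

$$
\underbrace{(5+15)}_{\text{attitudinal}} + \underbrace{(13+8)}_{\text{cognitive}} + \underbrace{(13+15)}_{\text{behavioral}} + \underbrace{(6+6)}_{\text{emotional}} = 20 + 21 + 28 + 12 = 81.
$$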

Complete chains of links between flaws, usage problems, and negative outcomes

The 53 complete chains between categories of usability flaws, usage problems, and negative outcomes represented 42 different types of associations. These are summarized in Table 5.

Table 5.

Synthesis of the 53 complete links between usability flaws (row), usage problems (column), and negative outcomes (cell) categories. Emotional and attitudinal usage problems are not represented because these are never related directly to negative outcomes. Negative outcomes are represented by the following letters: W, workflow issues; T, technology effectiveness issues; M, medication management process issues; and P, patient safety issues.

All categories of usability flaws apart from “consistency issues” caused negative outcomes through usage problems. Emotional and attitudinal usage problems were never directly related to negative outcomes.

Six types of usability flaws were associated with only one type of negative outcome. “Significance of codes issues” and “alert content issues” were only associated with “medication management process issues”. “Error management issues”, “adaptability issues”, and “tasks and control distribution issues” were only associated with “workflow issues”. “Explicit control issues” was only associated with “technology effectiveness issues”. Conversely, “workload issues” was linked to all four types of outcomes. “Guidance issues” and “low signal-to-noise ratio” were associated with three types of negative outcomes.

Patient safety issues were mainly identified in workload and guidance issues but also appeared in transparency issues. The main usage problems associated with patient safety issues were those dealing with the understanding of the alert (misinterpretation, missed information, alert not understood but blindly followed). Medication management process issues were also caused by guidance and alert content issues.

4.2.2 A Journey through the Use of a Poorly Designed Medication Alerting System

The synthetic analysis enabled a clear representation of the usage problems and negative outcomes arising from poorly designed alerting systems. However, this approach obscures the actual impact of flaws on clinicians and their work system. A narration based on these results was proposed to help assess what users actually experience while using alerting systems and what the resulting consequences on their work system are.

During the actual interaction of the user with an alerting system, all types of usability flaws, usage problems, and outcomes are tightly intertwined. To describe flaws, problems, and outcomes in an integrative manner, the narration presents (i) interaction issues and (ii) their consequences in terms of feelings, rather than following the aforementioned categorizations.

Interacting with the alert: Not an easy task

Missed alerts

The first step in the clinicians’ interaction with alerting systems is to correctly read the message conveyed by the alert. Various problems in the presentation of the alert may prevent users from receiving the information when they need it. These problems include the timing, the alert mode (i.e. intrusive or non-intrusive alert), and the requirement to use the scrolling bar to see the information. Additionally, clinicians may miss the alert entirely if it is not sufficiently noticeable [24]. Another reason for missing the alert is the lack of integration into the workflow and the delayed availability of the alert to the clinician: some clinicians received the alert after the patient had left the examination room [35]. When the alert appeared too late, clinicians had to perform the operation the alerting system was supposed to do. For instance, “six subjects computed, estimated, or used a heuristic to get the dose amount at some point before the system-calculated dose presentation” [25].

Clinicians also missed the alert by accident, overriding it unintentionally because it appeared in place of another alert that had just been dismissed [32]: not noticing this change, they dismissed the second alert, too, thinking the system had not taken into account their prior dismissal. In addition, some alerting systems did not provide the opportunity to display the alert a second time: therefore, clinicians did not have a second chance to read it [32] and they may have ultimately “[forgotten] what alert(s) appeared” [29].

Even when the alert was seen, display issues also caused clinicians to miss the information they needed. For instance, clinicians “misidentified the alert as a general guideline reminder and did not notice the dose calculations embedded in text” [25]. In a simulation study, half of the participants missed the information about the duration of the patient’s therapy given in the alert, although this information was important for the resulting clinical decision [30]. In the same study, clinicians made incorrect clinical decisions because they missed the patient’s risk factors that were hidden in a tab.

Finally, the required clinical decision-making information might simply not have been displayed by the alerting system. This lack of information increased the clinicians’ memory load, requiring them to rely “solely on their memory of the patient profile” [29] or to make “assumptions about patient history” [29].

Misunderstanding alerts

Clinicians have to understand and successfully interpret the alerts displayed. At this step, several kinds of usability issues led to misunderstandings. First, the alerts’ language “which [did] not adequately support all prescriber types (…) [was] difficult for prescriber to interpret” [26; 29]. As one clinician stated: “It’s hard to see what [the alert] is trying to tell you” [26]. Alerts were “not understandable by physicians” [27], “difficult to interpret in content and purpose” [28], “precluding [the users] to understand the problem that generated the alert or how to solve [it]” [27].

These difficulties in understanding alert messages prevented clinicians from using the alerting functions in an optimal way and often prompted clinicians to ask for help: “‘Physicians often come and ask about an alert triggered by the combination of amiodarone and simvastatin,’ [said] a pharmacist, ‘the doctors don’t know what the order check really means’” [22]. It was at times necessary for nurses and physicians to have “real time, face-to-face communication with clinical pharmacists” [29]. Conversely, sometimes “pharmacists call[ed] house staff to clarify questionable orders” because they had been alerted by the pharmacy information system about clinical issues that physicians and nurses were unaware of due to their own information system illiteracy [10]. Consequently, these issues “slow[ed] down their work” [28] by increasing their need for communication.

The difficulties in understanding the alerts led to misinterpretation and incorrect decisions. Numerous issues were observed “as shown by the high number [of alerts handled incorrectly]” [32]. For instance, directives in the alert explaining that a comment had to be entered (e.g. reason for not adhering to the suggestion), were sometimes difficult to interpret; clinicians therefore did not understand the request, leading to a “relatively high proportion of content-free comments” [23]. More severe instances showed that patient safety could have been endangered. Indeed, misinterpretation issues caused “respondents [to make] a wrong selection [of drugs], because they trusted the alerting system (and followed the incorrect dose recommendation for an unfamiliar drug)” [32]. In another instance, the misinterpretation of the alert’s actual meaning caused confusion: “Physician (MD) orders [VA] aspirin - 162 mg. An order check [alerts] appears. Says duplicate drug order. Non-VA ASPIRIN. [Alert] mentions 325 mg... MD is looking at it also and [appears] confused. MD to Observer (Obs): ‘What’s it going to do? Is it going to switch the patient to 325mg?’” [26]. The clinician was not sure of the meaning of the alert: the actual meaning of the alert was to inform her/him about a duplicate order of aspirin, but (s)he interpreted it as information about an automatic change of dosage and so (s)he “click[ed] [it] through” [26], mistakenly validating two orders of aspirin (“aspirin is now listed both under VA list and non-VA list” [26]). Another study reported on “various examples of complex registrations that [led] to medication errors” in a Danish hospital. These errors were due to clinicians not understanding how alerts were triggered. Therefore, clinicians had to infer the cause of the alerts, potentially leading to incorrect clinical decisions [42].

The difficulties in understanding alert messages were not only related to their content. Icons and labels also prevented clinicians from handling the alerts efficiently: “three providers misinterpret[ed] this question mark” [37], and “several users (…) did not realize [that the arrows under the clinical recommendations] provided additional more detailed information about the basic recommendation when clicked on” [36]. In another instance, “the appearance of the ‘stamp’ window implied that the patient had a chronic pain problem or diagnosis. In actuality, the ‘stamp’ indicated that the patient had a scheduled appointment (…) and that ATHENA-OT had recommendations available” [36]. Similarly, “two participants misinterpreted the meaning of ‘when’ to represent the last time the current patient received the intervention instead of the frequency the intervention is due for all patients” [39].

Increased workload and bothered interactions

Clinicians have to act on the alerting system, either to handle or to respond to the alert. Several usability issues were observed that negatively impacted this step of the interaction and increased clinician workload. First, the incorrect setting of alerts disrupted the clinicians’ workflow [22] and their “thought process” [24], making the decision-making process more difficult and forcing clinicians to use greater levels of concentration to keep their thoughts on track. These recurring interruptions ultimately hindered alert effectiveness [29].

At a behavioral level, the overall poor quality of alert messages, their repetition, and their length compelled clinicians to waste “time searching information in the [Electronic Health Record]” [29], scrolling down [32], and reading the messages [40]. Clinicians also “resort[ed] to trial-and-error behavior exemplified by the extra mouse clicks and keystrokes they needed for locating and executing the right action in response to the message” [27]. Tasks related to documenting alerts (especially for clinical reminders) also contributed to the increase of clinicians’ workload. Clinicians experienced “double documentation” burdens as, besides documenting the alert, they also generally kept track of this information outside the alerting system [37]. The high demand on documentation led them to satisfy alerts once “the patient had left the room”, “after the clinic closed”, and even to delegate this task to “case managers” [38]. The absence of features to share alert messages also compelled clinicians to utilize “paper-based workarounds” (copy the information of interest on a paper) to share it with other clinicians [37].

Usability issues related to the system features also hindered clinician interactions with the alerting system. Clinicians were unable to continue their workflow due to the inability of the alerting system to efficiently support their cognitive activities and their clinical tasks. For instance, there may be no appropriate option to satisfy the alert [37]. To overcome this dead-end, clinicians developed workaround behaviors: one clinician was observed to “arbitrarily select a date to satisfy the reminder” because none of the options within the dialogue box matched her/his intentions; another clinician “had to leave the reminder unsatisfied” [37]. The interface impeded the “prescribers’ ability to act on alerts” [29]. For instance, clinicians did “not always seem to understand how to use and manage the alerts effectively”, leading to “unnecessary repetitions of alerts” [24]. Similarly, when clinicians were unable to satisfy an alert because of the response choices provided by the system, “the [clinical reminder] (…) continue[d] to appear” [37]. When clinicians wanted to cancel a clinical reminder without losing the data entered previously, they “select[ed] each [reminder] individually from the list rather than using the ‘Next’ button to navigate through a sequence of [reminders]” [37]. The usability problem at the root of this behavior “introduce[d] the possibility of losing data previously inputted” [37].

In summary, the improvement of the medication management process that clinicians expected from alerting systems was not observed because these systems actually impaired the ordering efficiency by increasing their workload [22, 27]. Clinicians “did not derive all the speed and accuracy benefit and did not reduce their cognitive effort the [alerting system] was in part designed to” [25].

Emotional and long-term consequences

Clinicians’ direct emotional reactions

The previous section reported on interaction aspects that were impacted by the poor usability of alerting systems and that, in turn, impacted the work system. However, the clinicians’ interaction was not the only aspect impacted from daily exposure to poorly designed alerting systems. Clinicians were also emotionally impacted. Clinicians found it unpleasant to read alerts with display issues [22]. The delayed display of the alerts “place[d] the prescribers under pressure” [29] because they were supposed to make an informed decision quickly. Unsurprisingly “the lack of information [in the alert] led to prescriber cynicism” [22]. In various instances, clinicians became frustrated [22], overwhelmed [29], and were irritated with the repetitive appearance of the same alert: “the same alert appears a 3rd time when [nurse practitioner] goes to sign the order. [Nurse practitioner] gestures to the screen, ‘See – three times!’” [29]. The high number of alerts was reported by clinicians to “drive you mad” [32]. In addition, alerts that appeared repeatedly in spite of attempts by clinicians to cancel them (e.g. by modifying the order) “might freak someone out” [26].

Over time, the repetitive daily use, several times a day, of alerting systems with usability flaws also impacted clinician attitudes towards the system. It was demonstrated that there were “numerous complaints about getting too many alerts or alerts at an inappropriate time” [35]. Clinicians even “complain[ed] vociferously” [24] that “it [was] hard to use the tool” [36]. These complaints are completely understandable, but unfortunately the impact on the user is deeper than complaints initially indicate.

Alert fatigue and skipping alerts

Indeed, the low signal-to-noise ratio of alerts compelled clinicians to continually dismiss numerous alerts. This low signal-to-noise ratio created alert fatigue and desensitized clinicians, i.e. they lost interest in alerts.

The authors noticed numerous “remarks [of clinicians] suggesting alert fatigue” [32]. Clinicians themselves recognized that “they had become desensitized to the alerts” [40]. One of the clinicians explained that there were “too many things popping at [him]” [34]. The alert fatigue impacted everyone since “even prescribers with a very positive view of the alert system showed signs of desensitization” [29]. In turn, alert fatigue caused users to voluntarily ignore alerts, resulting in the clinicians overriding alerts [29]. There were numerous descriptions of how clinicians “rapidly [overrode] these alert types once they recognized that they had seen the alert before” [28]. Some noted: “it’s gotten to the point that [they] didn’t hardly look at significant (interactions) anymore” [26]. They were “often inclined to rapidly click [the alerts] away (…) [to] simply skip them” [32]. If there was more than “one [alert in the popup window], [they] didn’t read through them all” [22]. They “click[ed] off by rote and [risked] not see[ing] something that [was] different” [26]; they developed “a sort of mechanism” to dismiss alerts [40]; one clinician explained that she had “memorized the location of the override button” for these situations [28]. A clinician summarized this point as follows: “Once you realize that most of the information is useless or superfluous or not relevant, you stop looking at it” [34]. Instead of relying on the alerts, clinicians relied on their “own clinical judgment” [29]. The unnecessary redundancy of alerts, which led to increased alert fatigue and ignoring of alerts, ultimately “imped[ed] the medication ordering process” [22] and led “to low response levels to the alerts” [33]. Moreover, alerts that were ignored because of their misplaced timing “encourage[d] house staff to rely on pharmacists for drug allergy checks, implicitly shifting responsibility to pharmacists” [10].

Loss of confidence

The desensitization process was linked to a loss of confidence in the alerting function. One clinician explained: “I see it does say ‘active’ though. Technically, the [old] medication [order] isn’t ‘active’ because I just changed them to discontinued” [26]. Logically, (s)he wondered: “Did it accept my changes?” [26]. Clinicians had doubts about the way the alerting system was working. In other cases, clinicians were “unsure if the pharmacists review” the override justifications they entered in the system [29]. Even for the management of alerts, they were “uncertain how long the reminders would be turned off” [38]. Clinicians expressed the same kind of doubts regarding the triggering of alerts by the system: they were not sure “that the system based its recommendation on the same assumptions that [they] would have made” [25]. Clinicians were also “not sure why [the alert] didn’t come up this time” [26]. They even were not sure if the “order check system automatically check[s] when [they] order medications” [26].

Moreover, the clinical validity of alerts was also questioned because “it was unclear if the warnings were ‘evidence-based’” [22] and because the system provided clinicians with information that they thought was incorrect or outdated. Clinicians doubted that “the system has up-to-date information” [33]. In another example, “upon seeing [a duplicate order alert about two orders of iron], the physician stated, ‘that’s not true to my knowledge. The patient doesn’t like to take it; I doubt he’s taking it’” [31]. The physician was compelled to ask the patient whether he was obtaining iron from an outside source or not [31]: using the alerting system changed his communication. Ultimately, clinicians seriously questioned the usefulness of the alerting system, considering it unhelpful [24]. Clinicians said of the alerting system that “it’s just crying wolf” [34]. Their “perceptions of the credibility and trustworthiness of the alert system” [29] were negatively impacted by the poor usability of the system. In summary, poorly designed alerting functions in terms of usability encouraged clinicians not to use the alerting system [38].

The instances of usage problems and negative outcomes take on their full meaning in this narration. Altogether, these actual consequences of usability flaws paint a negative picture of alerting systems that are deficient in terms of usability. The existence of usability flaws in an alerting system used daily negatively impacts the users, their cognitive activities, their behaviors, and their feelings. Ultimately, these usability flaws hinder other components of the work system, potentially endangering the patient.

5 Discussion

The present study aimed at answering two questions: “What types of usage problems and negative outcomes coming from usability flaws are reported in medication alerting functions?” and “What are the cause-consequence links reported between usability flaws, usage problems, and negative outcomes in medication alerting functions?”

Results show that the consequences of usability flaws were various and clearly identified in the literature. Additionally, the consequences of usability flaws concerned both the user and the different components of the work system. A total of 111 usage problems, along with 20 negative outcomes, were identified in the 26 papers. Usage problems were categorized into four main categories, “cognitive”, “behavioral”, “emotional” and “attitudinal”, subdivided into 25 subcategories. For negative outcomes, four categories were used: “workflow issues”, “technology effectiveness issues”, “medication management process issues”, and “patient safety issues”.

As for the cause-consequence links, 129 links between usability flaws and usage problems were reported along with 53 links between usability flaws, usage problems, and negative outcomes. Some trends did arise that highlighted the important role of “workload issues”, “guidance issues”, and “low signal-to-noise ratio issues” along with the role of understanding information; however, there were only a few complete pathways leading from usability flaws to negative outcomes. The clearest pattern was the absence of any direct link from attitudinal and emotional problems to outcomes. This result can be explained by the fact that feelings cannot directly impact the work system: they need to be mediated through a decision and/or a behavior. No other definitive, clear association appeared between categories of usability flaws, categories of usage problems, and categories of negative outcomes.
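The link analysis described above can be pictured as a simple tally over extracted records. The following is only a minimal sketch under stated assumptions: the `Link` record, the helper names, and the five sample records are hypothetical illustrations, not data or tooling from the reviewed studies. Each record pairs a usability flaw category with a usage problem category and, when the source paper reports one, a negative outcome category.

```python
from collections import Counter
from typing import NamedTuple, Optional


class Link(NamedTuple):
    """One cause-consequence link extracted from a paper (hypothetical schema)."""
    flaw: str                 # category of usability flaw
    usage_problem: str        # cognitive, behavioral, emotional, or attitudinal
    outcome: Optional[str]    # None when no negative outcome is reported


# Hypothetical sample records, for illustration only (not the review's data).
links = [
    Link("low signal-to-noise ratio", "attitudinal", None),
    Link("low signal-to-noise ratio", "behavioral", "technology effectiveness issues"),
    Link("guidance issues", "cognitive", "patient safety issues"),
    Link("workload issues", "behavioral", "workflow issues"),
    Link("workload issues", "emotional", None),
]


def tally(records):
    """Count flaw-to-problem links and complete flaw-to-outcome pathways."""
    flaw_to_problem = Counter((r.flaw, r.usage_problem) for r in records)
    complete = Counter(
        (r.flaw, r.usage_problem, r.outcome)
        for r in records
        if r.outcome is not None
    )
    return flaw_to_problem, complete


def problems_reaching_outcomes(records):
    """Usage problem categories that appear in at least one complete pathway."""
    return {r.usage_problem for r in records if r.outcome is not None}


flaw_to_problem, complete = tally(links)
print(sum(flaw_to_problem.values()), "flaw-to-problem links")
print(sum(complete.values()), "complete pathways")
print(sorted(problems_reaching_outcomes(links)))
```

In this toy sample, as in the pattern reported above, the emotional and attitudinal categories never appear in a complete pathway, because a record only reaches an outcome through a decision or a behavior.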

The instances collected, once put in relation, illustrated the difficulties and even the pain clinicians may experience while using a poorly designed alerting system. This “journey” collects the worst occurrences from all observed usability flaws and their consequences. Such a list of concrete illustrations of the consequences of usability flaws can be used to make designers and/or project managers more aware of the importance of considering usability during the design process of HIT tools.

Publication and selective reporting biases in the analyzed papers may have impacted the comprehensiveness and representativeness of the reported usage problems and negative outcomes. In addition, the papers analyzed in this review were selected because they present usability flaws, not because they report usage problems and negative outcomes. This inclusion criterion may explain the small number (n = 20) of negative outcomes retrieved from the analyzed papers and ultimately the few pathways observed. Therefore, the presented results must be handled carefully. They do not exhaustively represent the usage problems and negative outcomes that are related to medication alerting systems. Rather, the results present the usage problems and negative outcomes that are caused by usability flaws according to the authors of the studies analyzed.

Of course, this review should be regularly updated with additional insights from new publications. To improve the collection of usability data, researchers should follow reporting guidelines [43] and take advantage of the online appendices to publish complete sets of usability results. Other sources of usability data should also be explored, such as incident report systems that include appropriate descriptions of usability flaws, usage problems, and negative outcomes [6, 7, 44].

Several studies have shown that implementing alerting functions actually contributes to the improvement of medication management safety [45] by improving, for instance, medication dosing [46], antibiotic use [47], and clinical practice [48, 49]. When associated with Computerized Physician Order Entry (CPOE), they enhance healthcare quality and safety [50]. However, these benefits are not always observed [16, 17]. Considering all reported usability issues, one may reasonably think that poor usability of those technologies is partly responsible for either reducing their impact or preventing this impact. Several studies have recently shown that improving the usability of HIT (e.g. CPOE or alerting systems), by applying usability design principles or by following a user-centered design process, improves the efficiency of technology [51, 52], reduces user workload [52], and increases user satisfaction [52, 53]. Nonetheless, usability is not the only technology characteristic that may negatively impact the user experience and the outcomes. For instance, Magrabi and colleagues noticed that technical issues such as configuration issues, access or availability issues, or data capture issues may also ultimately endanger patient safety [44]. Therefore, other characteristics of HIT besides its usability should be considered to address the problems experienced by clinicians and prevent negative outcomes. Even so, improving the usability of alerting systems may also decrease usage problems and negative outcomes in the work system. The appendices published in [19] and with this paper aim precisely at that.

6 Conclusion

This paper aimed at exploring and synthesizing the consequences of usability flaws in terms of usage problems and negative outcomes. We performed a secondary analysis of 26 papers that were included in a prior systematic review of the usability flaws reported in medication-related alerting functions. Results showed that poor usability impacts the user and the work system in various ways. Only a few pathways along which usability flaws propagate through the user to the work system could be drawn, along with some noticeable tendencies. The results highlight the large variety of difficulties, and the consequences thereof, that users may experience when using a poorly designed alerting system. Improving the usability of alerting systems, along with addressing their technical issues, may contribute to achieving the expected positive clinical impact of this promising technology.

Supplementary Material

Acknowledgements

The authors would like to thank the members of the IMIA working group on Human Factors Engineering for Healthcare Informatics for their support and advice in performing this research. The authors would also like to thank Emmanuel Castets for the design of the figures. Finally, the authors would like to thank the reviewers for their helpful comments.

References

- 1. Kohn L, Corrigan J, Donaldson M, editors. To err is human: building a safer health system. Washington, D.C.: National Academy Press; 2000.

- 2. Kaplan B, Harris-Salamone KD. Health IT success and failure: recommendations from literature and an AMIA workshop. J Am Med Inform Assoc 2009 May;16(3):291-9.

- 3. McManus J, Wood-Harper T. Understanding the sources of information systems project failure. Manag Serv 2007;38-43.

- 4. International Standardization Organization. Ergonomic requirements for office work with visual display terminals (VDTs) -- Part 11: Guidance on usability (Rep N° 9241-11). Geneva: International Standardization Organization; 1998.

- 5. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc 2013 June;20(e1):e2-e8.

- 6. Magrabi F, Ong MS, Runciman W, Coiera E. An analysis of computer-related patient safety incidents to inform the development of a classification. J Am Med Inform Assoc 2010 November;17(6):663-70.

- 7. Samaranayake NR, Cheung ST, Chui WC, Cheung BM. Technology-related medication errors in a tertiary hospital: a 5-year analysis of reported medication incidents. Int J Med Inform 2012 December;81(12):828-33.

- 8. Lavery D, Cockton G. Representing Predicted and Actual Usability Problems. Johnson H, Johnson P, O’Neill E, editors. Proceedings of International Workshop on Representations in Interactive Software Development. Queen Mary and Westfield College, University of London; 1997. p. 97-108.

- 9. Carayon P, Alvarado CJ, Schoofs HA. Work system design in health care. Carayon P, editor. Handbook of human factors and ergonomics in health care and patient safety. Lawrence Erlbaum Associates, New Jersey; 2007. p. 61-78.

- 10. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005 March 9;293(10):1197-203.

- 11. Kushniruk A, Beuscart-Zephir MC, Grzes A, Borycki E, Watbled L, Kannry J. Increasing the safety of healthcare information systems through improved procurement: toward a framework for selection of safe healthcare systems. Healthc Q 2010 September;13 Spec No:53-8.

- 12. Weiner JP, Kfuri T, Chan K, Fowles JB. “e-Iatrogenesis”: the most critical unintended consequence of CPOE and other HIT. J Am Med Inform Assoc 2007 May;14(3):387-8.

- 13. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006 September;13(5):547-56.

- 14. Kushniruk A, Triola M, Stein B, Borycki E, Kannry J. The relationship of usability to medical error: an evaluation of errors associated with usability problems in the use of a handheld application for prescribing medications. Stud Health Technol Inform 2004;107(Pt 2):1073-6.

- 15. Nanji KC, Rothschild JM, Boehne JJ, Keohane CA, Ash JS, Poon EG. Unrealized potential and residual consequences of electronic prescribing on pharmacy workflow in the outpatient pharmacy. J Am Med Inform Assoc 2014 May;21(3):481-6.

- 16. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA 1998 October 21;280(15):1339-46.

- 17. Ranji SR, Rennke S, Wachter RM. Computerised provider order entry combined with clinical decision support systems to improve medication safety: a narrative review. BMJ Qual Saf 2014 April 12.

- 18. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004 March;11(2):104-12.

- 19. Marcilly R, Ammenwerth E, Vasseur F, Roehrer E, Beuscart-Zephir MC. Usability flaws of medication-related alerting systems: a systematic review. J Biomed Inform 2015 June;55:260-71.

- 20. Scapin DL, Bastien JMC. Ergonomic criteria for evaluating the ergonomic quality of interactive systems. Behaviour and Information Technology 1997;6(4-5):220-31.

- 21. Sebillotte S. Action representation for home automation. North-Holland: Elsevier, Science Publishers, B.V.; 1990. p. 985-90.

- 22. Russ AL, Zillich AJ, McManus MS, Doebbeling BN, Saleem JJ. A human factors investigation of medication alerts: barriers to prescriber decision-making and clinical workflow. AMIA Annu Symp Proc 2009;548-52.

- 23. Chused AE, Kuperman GJ, Stetson PD. Alert override reasons: a failure to communicate. AMIA Annu Symp Proc 2008;111-5.

- 24. Krall MA, Sittig DF. Clinician’s assessments of outpatient electronic medical record alert and reminder usability and usefulness requirements. Proc AMIA Symp 2002;400-4.

- 25. Horsky J, Kaufman DR, Patel VL. Computer-based drug ordering: evaluation of interaction with a decision-support system. Stud Health Technol Inform 2004;107(Pt 2):1063-7.

- 26. Russ AL, Saleem JJ, McManus MS, Zillich AJ, Doebbeling BN. Computerized medication alerts and prescriber mental models: observing routine patient care. Proceedings of the Human Factors and Ergonomics Society Annual Meeting; 2009. p. 655-9.

- 27. Khajouei R, Peek N, Wierenga PC, Kersten MJ, Jaspers MW. Effect of predefined order sets and usability problems on efficiency of computerized medication ordering. Int J Med Inform 2010 October;79(10):690-8.

- 28. Feldstein A, Simon SR, Schneider J, Krall M, Laferriere D, Smith DH, et al. How to design computerized alerts to safe prescribing practices. Jt Comm J Qual Saf 2004 November;30(11):602-13.

- 29. Russ AL, Zillich AJ, McManus MS, Doebbeling BN, Saleem JJ. Prescribers’ interactions with medication alerts at the point of prescribing: A multi-method, in situ investigation of the human-computer interaction. Int J Med Inform 2012 April;81(4):232-43.

- 30. Duke JD, Bolchini D. A successful model and visual design for creating context-aware drug-drug interaction alerts. AMIA Annu Symp Proc 2011;339-48.

- 31. Russ AL, Saleem JJ, McManus MS, Frankel RM, Zillich AJ. The Workflow of Computerized Medication Ordering in Primary Care is Not Prescriptive; 2010. p. 840-4.

- 32. van der Sijs H, van GT, Vulto A, Berg M, Aarts J. Understanding handling of drug safety alerts: a simulation study. Int J Med Inform 2010 May;79(5):361-9.

- 33. Wipfli R, Betrancourt M, Guardia A, Lovis C. A qualitative analysis of prescription activity and alert usage in a computerized physician order entry system. Stud Health Technol Inform 2011;169:940-4.

- 34. Weingart SN, Massagli M, Cyrulik A, Isaac T, Morway L, Sands DZ, et al. Assessing the value of electronic prescribing in ambulatory care: a focus group study. Int J Med Inform 2009 September;78(9):571-8.

- 35. Ash JS, Sittig DF, Dykstra RH, Guappone K, Carpenter JD, Seshadri V. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007 June;76 Suppl 1:S21-S27.

- 36. Trafton J, Martins S, Michel M, Lewis E, Wang D, Combs A, et al. Evaluation of the acceptability and usability of a decision support system to encourage safe and effective use of opioid therapy for chronic, non cancer pain by primary care providers. Pain Med 2010 April;11(4):575-85.

- 37. Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SM. Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc 2005 July;12(4):438-47.

- 38. Patterson ES, Nguyen AD, Halloran JP, Asch SM. Human factors barriers to the effective use of ten HIV clinical reminders. J Am Med Inform Assoc 2004 January;11(1):50-9.

- 39. Saleem JJ, Patterson ES, Militello L, Anders S, Falciglia M, Wissman JA, et al. Impact of clinical reminder redesign on learnability, efficiency, usability, and workload for ambulatory clinic nurses. J Am Med Inform Assoc 2007 September;14(5):632-40.

- 40. Baysari MT, Westbrook JI, Richardson KL, Day RO. The influence of computerized decision support on prescribing during ward-rounds: are the decision-makers targeted? J Am Med Inform Assoc 2011 November;18(6):754-9.

- 41. Kortteisto T, Komulainen J, Makela M, Kunnamo I, Kaila M. Clinical decision support must be useful, functional is not enough: a qualitative study of computer-based clinical decision support in primary care. BMC Health Serv Res 2012;12:349-57.

- 42. Hartmann Hamilton AR, Anhoj J, Hellebek A, Egebart J, Bjorn B, Lilja B. Computerised Physician Order Entry (CPOE). Stud Health Technol Inform 2009;148:159-62.

- 43. Peute LW, Driest KF, Marcilly R, Bras Da Costa S, Beuscart-Zephir MC, Jaspers MW. A Framework for reporting on Human Factor/Usability studies of Health Information Technologies. Stud Health Technol Inform 2013;194:54-60.

- 44. Magrabi F, Ong MS, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc 2012 January;19(1):45-53.

- 45. Jaspers MW, Smeulers M, Vermeulen H, Peute LW. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc 2011 May 1;18(3):327-34.

- 46. Oppenheim MI, Vidal C, Velasco FT, Boyer AG, Cooper MR, Hayes JG, et al. Impact of a computerized alert during physician order entry on medication dosing in patients with renal impairment. Proc AMIA Symp 2002;577-81.

- 47. Thursky K. Use of computerized decision support systems to improve antibiotic prescribing. Expert Rev Anti Infect Ther 2006 June;4(3):491-507.

- 48. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005 April 2;330:765-73.

- 49. Schedlbauer A, Prasad V, Mulvaney C, Phansalkar S, Stanton W, Bates DW, et al. What evidence supports the use of computerized alerts and prompts to improve clinicians’ prescribing behavior? J Am Med Inform Assoc 2009 July;16(4):531-8.

- 50. Ammenwerth E, Schnell-Inderst P, Machan C, Siebert U. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc 2008 September;15(5):585-600.

- 51. Chan J, Shojania KG, Easty AC, Etchells EE. Does user-centred design affect the efficiency, usability and safety of CPOE order sets? J Am Med Inform Assoc 2011 May 1;18(3):276-81.

- 52. Russ AL, Zillich AJ, Melton BL, Russell SA, Chen S, Spina JR, et al. Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. J Am Med Inform Assoc 2014 March 25.

- 53. Tsopra R, Jais JP, Venot A, Duclos C. Comparison of two kinds of interface, based on guided navigation or usability principles, for improving the adoption of computerized decision support systems: application to the prescription of antibiotics. J Am Med Inform Assoc 2014 February;21(e1):e107-e116.
